Cluster networking

This chapter describes the cluster networking considerations and which network topology is required to meet the minimum requirements in terms of throughput and latency to and from an IBM Elastic Storage Server (ESS).

This chapter includes the following topics: a cluster networking overview (2.1) and a network topology summary (2.2).

2.1 Cluster networking overview

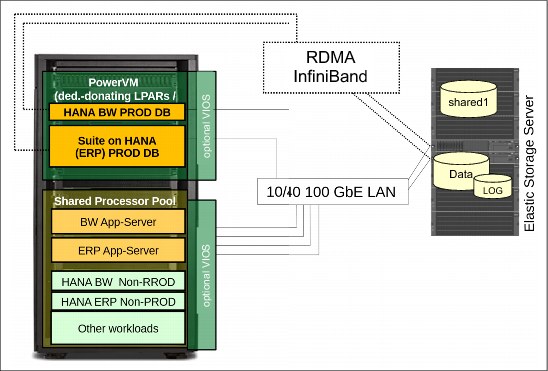

IBM Spectrum Scale supports various network scenarios. A typical high-level architecture overview is shown in Figure 2-1.

Figure 2-1 Cluster networking overview

Because POWER8 and ESS storage servers can easily use 10 GbE network capabilities, the infrastructure can be enhanced by adding further Ethernet networks or InfiniBand connectivity. An SAP HANA setup is shown in Figure 1-1 on page 2.

Although the minimum configuration requires at least one TCP-based network, you can add an InfiniBand network to the environment and connect all or a subset of nodes directly through InfiniBand host channel adapters (HCAs).

For planning purposes, note that if you do not configure Remote Direct Memory Access (RDMA) over InfiniBand, IBM Spectrum Scale relies on Ethernet only. All data is then transferred through TCP/IP socket-based communication between the HANA node (client) and the storage server. To increase the bandwidth of your network connectivity, you might consider bonding multiple devices. However, the theoretically possible bandwidth that a node can reach is still limited by the number of sockets that a client opens to the storage servers.

In IBM Spectrum Scale, a Network Shared Disk (NSD) client has one open socket for daemon communication to each corresponding NSD server, which provides access to the block storage. Therefore, the number of open network sockets scales with the number of NSD servers.

For an IBM Spectrum Scale environment, it does not make sense to configure more than two network ports into a bonding device on the client side when your IBM Spectrum Scale cluster runs with one ESS building block that consists of a pair of NSD servers. With only two sockets open, the Linux bonding layer cannot spread the traffic across more than two ports.
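The relationship between NSD servers, client sockets, and usable bond ports can be sketched as a small calculation. The following Python is only an illustration and is not part of IBM Spectrum Scale: the function names are hypothetical, and the sketch assumes the best case in which the bonding layer places each socket on a different port.

```python
def client_sockets(building_blocks: int, nsd_servers_per_block: int = 2) -> int:
    """Sockets a client opens: one per NSD server, as described in the text."""
    return building_blocks * nsd_servers_per_block


def usable_bond_ports(bond_ports: int, building_blocks: int) -> int:
    """Bonded ports that can actually carry traffic.

    Assumption: each socket is hashed onto its own port (best case),
    so the useful port count is capped by the number of sockets.
    """
    return min(bond_ports, client_sockets(building_blocks))


if __name__ == "__main__":
    # One ESS building block = one pair of NSD servers = two sockets.
    for ports in (1, 2, 4):
        usable = usable_bond_ports(ports, building_blocks=1)
        print(f"{ports} bonded port(s) -> {usable} usable")
```

Under these assumptions, a four-port bond on a client of a single building block still yields only two usable ports, which is why additional ports add no throughput.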

2.2 Network topology summary

In a 10 GbE network topology with a single ESS building block, the maximum theoretical bandwidth per client cannot exceed the bandwidth of two sockets, which provides a throughput of approximately 2 GBps. In comparison, a 40 GbE network can scale up to approximately 8 GBps per client.
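These per-client figures follow from simple arithmetic. The following Python is a minimal sketch, assuming that roughly 80% of the raw line rate is available as payload throughput. That efficiency factor is an illustrative assumption chosen because it reproduces the values quoted here; it is not taken from IBM documentation. The function name and parameters are likewise hypothetical.

```python
def max_client_throughput_gbytes(link_gbit: float,
                                 sockets: int = 2,
                                 efficiency: float = 0.8) -> float:
    """Estimate the per-client throughput ceiling in GBps.

    link_gbit  -- line rate of one port in Gbit/s (10 for 10 GbE)
    sockets    -- open sockets, one per NSD server (2 for one building block)
    efficiency -- assumed usable fraction of the line rate (hypothetical)
    """
    raw_gbytes_per_port = link_gbit / 8.0  # 8 bits per byte
    return raw_gbytes_per_port * sockets * efficiency


if __name__ == "__main__":
    for link in (10, 40):
        estimate = max_client_throughput_gbytes(link)
        print(f"{link} GbE: about {estimate:.0f} GBps per client")
```

With the default of two sockets, the sketch yields about 2 GBps for 10 GbE and about 8 GBps for 40 GbE, in line with the figures given for a single building block.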

For all GL4 and GL6 models, consider RDMA over InfiniBand or a 40 GbE or 100 GbE topology. Otherwise, the performance benefits of an ESS building block are limited by the connectivity between the NSD servers and the clients. However, the minimum SAP requirements for operating an ESS solution for SAP HANA can still be met with a standard 10 GbE network infrastructure.

For a sample configuration of a bond device on SUSE Linux Enterprise Server, see Appendix A, “Sample configuration for bonding on SUSE Linux Enterprise Server” on page 33.
