7

Data Migration

Data migration is essentially the process of taking data from one or more systems, performing operations upon it (such as cleansing, transformation, and enrichment), and then subsequently loading that data into one or more systems.

Typically, when implementing Salesforce, data migration is treated as a discrete workstream with questions to answer. How do you get good-quality data into the Salesforce Platform? How do you ensure loading large amounts of data is smooth, reliable, repeatable, and timely? How do you effectively export data from the Salesforce Platform? What’s the right set of steps to follow when importing or exporting data to or from the Salesforce Platform? Where do you begin?

In this chapter, we will learn the methods available to import data into the Salesforce Platform, including tuning and optimization techniques. Then, we’ll explore some of the concepts and processes that need to be considered and understood when undertaking any large data movement in or out of the Salesforce Platform. We’ll also learn how to effectively export data from the Salesforce Platform.

Data migrations are needed due to several reasons, such as when a new system (such as Salesforce) has been implemented to serve as a single customer view or a company may have acquired another and is merging data into another system. Another example is when data is regularly purchased or acquired, and that data needs to be migrated into a company CRM. Therefore, data migrations are an important, ongoing process.

Data migrations are seen by some as big, scary, and best left to someone else, but once the concepts are understood, migrating data in or out of the Salesforce Platform needn’t be feared. Data migration accounts for 15% of the exam outline. By understanding the material in this chapter, you will be equipped to understand the process, concepts, and intricacies surrounding data movement and the Salesforce Platform.

In this chapter, we will cover the following topics:

- Understanding data movement and its considerations

- Loading high-quality data

- Migrating Large Data Volumes to Salesforce

- Exporting data from Salesforce

Understanding data movement and its considerations

In this section, we’ll cover the various stages involved in data migration, and why it is important to invest time in the definition phase of data migration. When considering moving data from one system or another, it is important to ask yourself the following questions:

- Is it appropriate to move the data?

- What will I gain by moving the data?

- Is the data of sufficient quality?

- How would the data be maintained in the long term?

- What governance or other processes should be put in place?

By tackling the questions, we’ve essentially started our data migration process. While one system in the IT enterprise may store a certain part of the data, it may not always make sense to move the data if it is being moved into a target system for the sake of it, just because that system is a shiny new piece of the IT enterprise. For example, I worked with a client in the past who invested in Salesforce to provide better marketing and customer service and, as part of the implementation, immediately believed that all of the data from their repair management system needed to be migrated into Salesforce. This client produced physical devices for measuring the weights of fresh produce, such as fruits and vegetables. When these devices developed issues, their inspection and repair processes would be carried out using a cloud-based system that only support staff and repair engineers had access to. When Salesforce was introduced as the new system of record for customer data in the IT enterprise, time was taken to assess the systems Salesforce was to interact with to see what data needed to be surfaced for marketing, sales, and support agents to interact with what was being termed the connected customer. Essentially, we’re talking about Salesforce pulling together data from several data sources (sound familiar? We covered the concepts behind this scenario in Chapter 4, Salesforce Data Management).

When speaking with the client, they wanted to redefine their service process, so we decided that, with Salesforce being the new customer master, we would implement a case-based workflow. For the existing repair data, we decided not to import data from a system that was going to be put into a read-only state before eventually being decommissioned in several years. To meet this requirement, we decided to use external objects to surface the repair data but leave it in the existing system. However, what we actually decided to do was pull in the information relating to the service entitlement and contract for support, which will be driven from Salesforce going forward. Essentially, the client was looking to provide better service through Salesforce by migrating only the needed data. By allowing existing records to be surfaced in Salesforce, only the required data was moved across.

The point of the questioning exercise at the start of the process of migrating data led us to the design I’ve explained here in terms of what data will be moved.

The next stage in questioning the data migration process is to understand if the data is of sufficient quality. When talking to clients, some are quite open about there being lots of duplicates or incomplete fields in their primary systems. Others may not have an honest answer as to how good their data quality is. Luckily, we can work with clients to define the metrics that will underline what quality data means to them. For example, what fields are considered necessary? When profiling data, we can quickly determine what fields, as an overall percentage of the total record count, have no value in them or only a very low percentage have been populated. We should be questioning whether these fields are required to build a picture of what our quality dataset looks like. We should also look at the completeness of data, duplicate counts, and so on. We’ll look at this in more detail in the next section. For my client, the data wasn’t too bad before it was migrated. Field completeness was good, but about 5% of the migrated records were duplicates. Therefore, the client had a decision to make – fix the duplicates before or after migrating the data. They decided to fix them afterward, once the migration was complete.

Before we’re done with our considerations for migrating data, we need to think about data maintenance in the long term. Do any controls or procedures need to be put in place during or after migration? What happens to source systems in terms of access and modification rights to data? Does a process need to be put in place to process or enrich data at regular intervals? Is this a manual or automated exercise? What metrics make that process successful? This falls slightly into the governance aspects of data migration since, often, the processes surrounding maintaining data will form part of a wider governance structure. For my client, they decided that the Salesforce matching and de-duplicating functionality would serve as the main entrance to avoid duplicate Account and Contact records being entered into the system. They would monitor the process using Salesforce’s reporting functionality and decide to procure a suitable tool, should data quality issues occur in the future.

Now, let’s learn how to define a process for migrating data. In the next section, we will cover the steps we’ll take throughout the rest of the data migration process.

Defining a process for migrating data

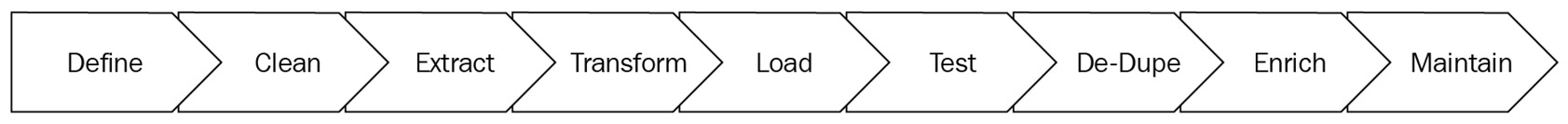

Once the questioning as to the what and why of data migration has been done, a more formalized process can be considered. The following sequence of steps is typical in data migration from one or more systems to another, and some steps can be omitted or moved, depending on certain requirements – for example, deduplicating data before or after migration.

I’ve used the following sequence of steps in several data migration workstreams as part of a Salesforce implementation:

Figure 7.1 – A data migration process

Next, let’s explore each of these stages to understand what’s typically involved.

Define

We begin by defining the target data model and Customer Relationship Management (CRM) data hierarchy. This is very likely to be done as a result of capturing and validating requirements concerning how best to support target business processes. With the target data model defined, we can turn our attention to defining where the masters of data are in the IT enterprise. As alluded to previously in this chapter, profiling data is useful for determining the quality and completeness of data held in the source systems from which data is being migrated.

Next, it is necessary to understand the as-is data models from the source systems so that the data structures can be understood when we get to the Extract phase of the process. Imagine migrating from another CRM system such as Dynamics 365. Even though Dynamics 365 has the concept of Accounts, Contacts, and Opportunities, it is built upon SQL Server, so it follows a more traditional SQL database for storing data. When inspecting the as-is data model, the SQL Server database schema for the instance needs to be understood. Our data dictionary should be started in the Define phase. As we learn more about the data sources and target data structure, the data dictionary that defines the types and formats of fields (including any rules that need to be applied) can be produced.

With our source systems, data structures, selected entities, and fields identified, we can start mapping the data. We do this by defining how the source entities and fields will be mapped to the target data model, including any transformations that may need to be applied. Next, the strategy for cleaning, de-duplicating, and enriching data (if required) can be defined, including where in the process those steps should take place (pre- or post-extraction, for example).

Clean

Data cleaning can be defined simply as the act of performing data cleansing exercises, either as a manual process or by using automation and software tools. The definition of what cleansing means in terms of the data will be different for each IT enterprise; for example, ensuring that no contact name contains invalid characters. The activities that are typically involved in all cleansing activities include defining any tools to be used to assist in the process, as well as any matching rules and scoring thresholds.

Extract

Extracting data involves taking the defined source data from source systems. Therefore, to extract data effectively, it is necessary to have the source systems, as-is data models, and any other relevant information well defined and understood. It may be desirable to combine all extracted data into a staging area if any cleansing, de-duplication, or enrichment activities are to be done once all the data has been extracted.

Transform

Once the data has been extracted, or after the extraction phase of a fully automated Extract, Transform, Load (ETL) process, it is usually necessary to transform the data before it can be loaded into the target system. For this to be successful, it is necessary to know about any entity mappings, field mappings, and other rules, conditions, or logic to be applied at transformation time.

Load

We’re now at the stage of the process where we can load data into the target system. With all of that definition and careful attention paid to preparing the data that’s being loaded, we can choose a tool to use for the loading activity, such as the humble Salesforce Data Loader. You might be surprised at just how much can be automated when scripting Data Loader to run on the command line or as part of a wider automated process. We also need to consider acceptable load performance. We may have millions of records to load, so doing that in an acceptable time frame may differ, depending on the parameters of the data migration exercise, client industry, and so on. For example, I oversaw the data migration process for a bank, which naturally could only take place (and needed to be completed) when the bank wasn’t open for business. Luckily, we tested the process several times, so that was a relatively straightforward Friday evening in terms of getting the data loaded promptly.

Test

There are generally two types of testing applicable to data migrations:

- Testing to specification

- Testing for intent

Simply put, testing to specification involves ensuring the right amount of data is loaded. For example, the extract gave us 1,000 company records, so we loaded 1,000 Account records into Salesforce. If the load count was 990, then 1% of the data hadn’t loaded in and we needed to investigate why. This should ideally be identified and rectified as part of testing, but I appreciate that on the day of the production data migration, this may not always be the case.

Testing for intent can be thought of as User Acceptance Testing (UAT) for data. Does the data loaded stand up to real-world use? Will our users be able to carry out their duties as desired with the data loaded? Do the data relationships correlate correctly?

Something else to consider in the testing part of the process is the reconciliation strategy for data. How can we ensure that the sets of data from the various source systems and the target system are in agreement, accounting for the transformations we’ve applied? With specification, intent, and reconciliation covered, we should be in a position to say that we’ve loaded the correct amount of records that work as intended for the users and are correct in their desired end state, as dictated by the transform logic.

De-duplicate

As mentioned previously, the de-duplication step of a data migration process may take place earlier in the process but can take place after migration if it is deemed more suitable. When de-duplicating data, a suitable tool can be used to facilitate the process. This is typically given a set of rules that determine what criteria are used to match data. This is, in turn, used to generate a score of the likelihood of data being a duplicate and therefore should be considered for removal. For example, it isn’t entirely unreasonable to build a rule that matches Contact records that have the same email address and postcode. It’s likely these are the same person, so they can be flagged for intervention (in this case, marked for deletion). If you’re migrating data to a new data master, it may be worth considering the intended behavior concerning propagating data changes and sending duplicate records to downstream systems.

Enrich

Once the data has been loaded and is in a clean state, it may be desirable to enrich the data by incorporating a one-time or ongoing process. For example, Salesforce has a plethora of AppExchange packages available to it that can provide enriched company address and employee information, credit scoring information, and so on. If there is a desire to enrich data, it generally makes sense to do this on a cleansed, de-duplicated dataset.

Maintain

So far, we’ve defined a process, carefully mapped our data, and built and executed that process by extracting, transforming, and loading data. We’ve cleansed, de-duplicated, and enriched the data along the way. But before we’re done with this process, we need to cover the last step (and some may argue the hardest one): we need to consider how we’re going to maintain the data we’ve spent so long and so much effort on getting into our target system in the right way. Maintenance naturally takes a different form, depending on the characteristics of the business that owns the target system. Salesforce provides tools (even down to basic reporting) that can help ensure the data stays in as good a shape as possible. This includes how data is added over time as users adopt and use the system.

With our data migration process and steps now understood, let’s dive a bit deeper into loading high-quality data.

Loading high-quality data

When users interact with data, the data must be of good quality for it to be effective. For example, salespeople may go to look at a Contact record in Salesforce to make a call to try and up-sell or cross-sell to that customer. If users are presented with multiple Contact records for the same person (duplicates), and those records are at different levels of completeness, they may end up very annoyed. Due to this, those users may not have all of the information for a Contact or, worse, are still jumping between records to deduce the correct phone number to call or email address to use.

As mentioned previously, practical steps can be taken as part of a data migration process. When preparing data before loading it, or once the data has been loaded into the target system, cleansing, de-duplication, and (optionally) enrichment must take place. When thinking about the quantity of data as well as its quality, it may make sense for some enterprises to perform the cleansing, de-duplication, and enrichment activities before loading it. This way, the smallest, most complete, and duplicate-free dataset will be loaded.

Turning our attention to what can be done in Salesforce to facilitate loading high-quality data, we have several tools at our disposal. Validation rules can be used to enforce the correct format of field values. This can ensure that records are at a baseline level of completeness. This includes ensuring certain fields are filled in (such as not being able to set an Opportunity to a particular stage unless certain fields relating to the sale have been completed). We also have matching and duplication rules, which are used to flag up and assist users in preventing the creation of duplicate records. Salesforce reports can be produced off the back of the matching rules in the system to highlight those records that require attention. Lastly, Flow can be used to perform operations in an automated fashion when creating and updating data records. When processing records en masse, automation should generally be turned off. When working with one record at a time from day to day, automation such as Flow can be used to drive up the completeness of data on records or related records.

Now that we’ve covered various aspects related to data quality, let’s look at migrating Large Data Volumes (LDVs) to Salesforce.

Migrating Large Data Volumes to Salesforce

When loading LDVs (hundreds of thousands or millions of records) into Salesforce, there are various tools and considerations to keep in mind. Firstly, the Application Programming Interface (API) used to load the data into Salesforce can make a huge difference. The Bulk API can be used to load lots of data in parallel (by setting it to parallel mode). This can cause record-locking issues, whereby records cannot be loaded as their parent is locked. However, typically, this results in a faster load time compared to loading data in one batch at a time (known as serial mode). A way to reduce the likelihood of getting record-locking issues is to order the records to be loaded by the parent record IDs. Thinking purely in terms of performance, the Bulk API in parallel mode is the fastest way to load millions of records into Salesforce in the shortest amount of time. An external ID field can be used to avoid duplicates while importing records, as the external system ID is used to identify records. This can be particularly useful when performing, for example, scripted data loader tasks as the external ID can be used as the key for associating parent and child records.

Next, think about the operation that will be used to create and update data. Salesforce provides insert, update, and upsert operations. Insert is used for data creation, update is used for updating existing records, and upsert is used for updating existing records, or creating a new record if no record can be found to update. As you can imagine, an upsert operation is costlier to perform in terms of Salesforce resources as the calculation is done on the fly for every record when determining the operation to perform. Therefore, the best practice is to use the insert and update operations when migrating LDVs to the Salesforce Platform as they are faster than upsert.

Lastly, it is important to keep in mind that data storage on Salesforce is finite and generally linked to the number of user licenses purchased. If you keep loading millions upon millions of records into Salesforce, eventually, your data storage will run out. However, the limits are quite generous when a Salesforce environment is provisioned. Typically, data storage is set at 10 GB (for Salesforce orgs provisioned from late March 2019) plus either 20 MB or 120 MB per licensed user, depending on the Salesforce edition purchased (so a 20-user org on Salesforce Professional Edition will have 10.4 GB of data storage).

Important note

More details on data allocation and storage limits can be found at https://help.salesforce.com/s/articleView?id=sf.overview_storage.htm&type=5.

This leads us nicely to the next section, where we’ll look at exporting data from Salesforce.

Exporting data from Salesforce

As much as we may want to maximize the investment our clients make in Salesforce by moving data into it, we should consider the requirements surrounding exporting data from Salesforce. This may involve exporting certain data (for example, to a data warehouse platform), which typically involves an automated process being performed at a scheduled interval (such as every night). As expected, several techniques can be used to accomplish this task.

Data Export

Salesforce offers two methods for exporting data out of the box. The first is the Data Export service, which is accessible from within the Salesforce setup. Data Export can be used to manually export data weekly or monthly (depending on the edition of Salesforce you have), as well as to schedule regular data exports weekly (every 7 days) or monthly (every 29 days).

The second method for exporting data is the humble Data Loader application from Salesforce. This can be used to extract object data and can use queries to filter the data if desired, such as for filtering all Accounts by the last modified date of the current day. Data Loader can be scripted for export operations as well since it supports a command-line interface that’s only available on the Windows operating system.

Bulk API

Salesforce’s Bulk API can be used just as effectively for exporting data as it is for importing data when working with millions of records. For example, a Bulk API Query can be used to efficiently query large amounts of data (up to 15 GB), split into (up to 15) 1 GB batches. The query must return within 2 minutes (otherwise, a QUERY_TIMEOUT error is returned). If the results are within the 1 GB batch size limit, and the batch was processed within a 10-minute time limit, then the batch is processed, and results are returned. Salesforce will try 15 batch attempts (hence the 15 GB limit), so if this is breached, the batch is considered unsuccessful and a Retried more than 15 times error is returned.

PK chunking

Added to the Bulk API back in 2015, Primary Key (PK) chunking is used to increase the performance of large data downloads from the Salesforce Platform. All custom objects and most standard objects are supported by PK chunking (a complete list is available at https://developer.salesforce.com/docs/atlas.en-us.234.0.api_asynch.meta/api_asynch/async_api_headers_enable_pk_chunking.htm).

The way PK chunking works is that when enabled in a Bulk API request, along with a chunk size (say 250,000), Salesforce gets the minimum and maximum IDs for the dataset to be returned, and then creates a set of SOQL statements that each contains a WHERE clause to retrieve records for the given chunk size. Salesforce combines all the results and returns a complete dataset. If we take an example whereby we select all email addresses for every contact in Salesforce, we’d write a SELECT SOQL statement, as follows:

SELECT Email FROM ContactNow, suppose we have 1 million contacts in Salesforce and we set a chunk size of 100,000. Here, Salesforce would generate 10 SOQL statements, similar to what’s shown in the following code block (where the <ID of record x> placeholders would be replaced with the actual record IDs):

SELECT Email FROM Contact WHERE Id >= <ID of record 1> AND Id < <ID of record 100,000>SELECT Email FROM Contact WHERE Id >= <ID of record 100,000> AND Id < <ID of record 200,000>SELECT Email FROM Contact WHERE Id >= <ID of record 200,000> AND Id < <ID of record 300,000>SELECT Email FROM Contact WHERE Id >= <ID of record 300,000> AND Id < <ID of record 400,000>SELECT Email FROM Contact WHERE Id >= <ID of record 400,000> AND Id < <ID of record 500,000>SELECT Email FROM Contact WHERE Id >= <ID of record 500,000> AND Id < <ID of record 600,000>SELECT Email FROM Contact WHERE Id >= <ID of record 600,000> AND Id < <ID of record 700,000>SELECT Email FROM Contact WHERE Id >= <ID of record 700,000> AND Id < <ID of record 800,000>SELECT Email FROM Contact WHERE Id >= <ID of record 800,000> AND Id < <ID of record 900,000>SELECT Email FROM Contact WHERE Id >= <ID of record 900,000> AND Id < <ID of record 1,000,000>The reason PK chunking is faster than a straight export is that, by dividing the query into several subqueries, the Salesforce Platform Query Optimizer processes the queries more efficiently. This is because queries will be below the selectivity threshold.

Now, let’s summarize what we’ve covered in this chapter.

Summary

In this chapter, we learned how to migrate data, covering each step in detail to ensure you have the requisite knowledge to answer questions on these topics in the exam. Next, we looked at the Salesforce perspective on loading high-quality data, including considerations for LDVs being migrated to the Salesforce Platform. Lastly, we looked at exporting data from Salesforce while covering the various techniques available for us to do so, including Data Loader, Data Export, and the Bulk API, including PK chunking.

This effectively wraps up the theoretical side of the exam content. In the next chapter, we will cover the design aspects of the exam, and we will begin with Accounts and Contacts.

Practice questions

Test your knowledge of the topics covered in this chapter by answering the following questions:

- What does LDV stand for?

- What does the PK in the term PK chunking stand for?

- What is the name of the in-browser tool used for manual or scheduled weekly or monthly data exports?

- What method is used to speed up the performance of large SELECT SOQL queries when exporting large amounts of data from Salesforce?

- What data API facilitates the asynchronous processing of data in parallel batches?

- Ordering records to be imported by their parent record ID reduces the likelihood of what happening?

- True or false: upsert is considered good for performance when loading large amounts of data.

- PK chunking is a feature of which Salesforce API?

- What’s the first step in any data migration process?

- Why is PK chunking considered faster than a straight Export All operation?

Answers

The following are the answers to the previous questions. How many of them were you able to answer correctly? Check your answers to the previous questions here:

- Large Data Volume

- Primary key

- Data Export

- PK Chunking

- Bulk API

- Parent record locking

- False. Use insert and then update as separate operations.

- The Bulk API

- Define

- Because by breaking the query down into sub-queries, the Salesforce Query Optimizer threshold isn’t reached and therefore the performance of each query is optimal.

Further reading

See the following resources. For more information on what was covered in this chapter:

- Data Export options from Salesforce: https://trailhead.salesforce.com/content/learn/modules/lex_implementation_data_management/lex_implementation_data_export

- PK chunking in Salesforce: https://developer.salesforce.com/docs/atlas.en-us.234.0.api_asynch.meta/api_asynch/async_api_headers_enable_pk_chunking.htm

- Data allocation and storage limits: https://help.salesforce.com/s/articleView?id=sf.overview_storage.htm&type=5

- Bulk API Query: https://developer.salesforce.com/docs/atlas.en-us.api_asynch.meta/api_asynch/asynch_api_bulk_query_intro.htm