15

IT Policy Compliance and Compliance Technologies

MAINTAINING COMPLIANCE with laws and regulations in a complex IT environment is difficult. The vast array of regulations a company must comply with is constantly increasing and changing. Consider, too, that it’s not unusual for different regulating agencies to issue conflicting rules. This means sometimes you have to manage to the intent of regulations as much as to the letter. At the center of most regulations’ intent is data protection. Stop the flow of data, and just as quickly you will disrupt the delivery of products and services. If the loss of data lasts long enough, the viability of the organization itself comes into question.

Laws that require notifying consumers of data breaches are a good example of conflicting regulatory rules. Each state has its own set of laws and regulations that indicate who is covered by the law and what event triggers the notification. For example, the State of Alaska (under Alaska Statute Title 45.48.010) requires notification of a data breach for any organization with 10 or more employees that maintains unencrypted personal information about Alaska residents. However, there’s a provision in the law waiving the notification requirement if there’s a reasonable determination that no harm will come to the consumer. In contrast, the State of Illinois (815 Ill. Comp. Stat. Ann. 530/1–/30) requires notification of data breaches for any entity that collects data on Illinois residents. Notification is triggered upon discovery of the breach. No exemption is cited.

The exact language of the laws is not important for this discussion. The point is that two breach notification laws that look at the same event and the same data require potentially two different actions. In the case of Alaska, no notification may be needed. In the case of Illinois, the size of the company makes no difference. Your initial reaction might be, “Why the concern? Two states and two laws.” But the Internet has made businesses borderless. Just consider the confusion over reporting if an Alaskan company that ships product from a warehouse in Illinois had a data breach. Which law applies? And what if the order that contained the personal information was received and stored in a third state? A company now must navigate a maze of jurisdictions and conflicting laws.

That’s how vital data is for many organizations today. Now consider the regulatory view of how to process, manage, and store data properly. To address this challenge across a changing landscape of regulation requires a solid set of information security policies and the right tools. A lot of time and energy goes into ensuring that security policies cover what is important to an organization. These policies help an organization comply with laws, standards, and industry best practices. Implemented properly, they reduce outages and increase the organization’s capability to achieve its mission. The alternative could be fines, lawsuits, and business disruptions.

A comprehensive security policy is a collection of individual policies covering different aspects of the organization’s view of risk. For management, security policies are valuable for managing risk and staying compliant. The policies reflect clear mandates from many regulators on how risk should be controlled. IT security policies can help management identify risk, assign priority, and show, over time, how risk is controlled. That makes them an extremely valuable tool, and an example of how policies build value by solving a real problem for management. For example, a company wanting to offer services to the government may have to prove it’s compliant with the Federal Information Security Management Act (FISMA). In that case, implementing National Institute of Standards and Technology (NIST) standards clearly demonstrates to regulators how risks are controlled in accordance with FISMA requirements. As policies are perceived as solving real business problems, management and users are more likely to embrace the policies. This is an important step toward building a risk-aware culture.

Many tools are available to ease the effort to become and stay compliant. These tools can inventory systems, check configuration against policies, track regulations and changes, and much more. Combining policy management with the right set of tools creates a powerful ability to help ensure compliance. The most important way to stay compliant is to be aware of your environment, manage to a solid set of policies, and use tools that are effective in keeping up with changes. If you are able to achieve this, then you show regulators that you not only have good policies, but are also able to use them effectively to manage changing risks.

This chapter covers the following topics and concepts:

• What a baseline definition for information systems security is

• How to track, monitor, and report IT security baseline definitions and policy compliance

• What automating IT security policy compliance involves

• What some compliance technologies and solutions are

• What best practices for IT security policy compliance monitoring are

• What some case studies and examples of successful IT security policy compliance monitoring are

Chapter 15 Goals

When you complete this chapter, you will be able to:

• Explain a baseline definition for information systems security

• Define the vulnerability window and information security gap

• Describe the purpose of a gold master copy

• Discuss the importance of tracking, monitoring, and reporting IT security baseline definitions and policy compliance

• Compare and contrast automated and manual systems used to track, monitor, and report security baselines and policy compliance

• Describe the requirements to automate policy distribution

• Describe configuration management and change control management

• Discuss the importance of collaboration and policy compliance across business areas

• Define and contrast Security Content Automation Protocol (SCAP), Simple Network Management Protocol (SNMP), and Web-Based Enterprise Management (WBEM)

• Describe digital signing

• Explain how penetration testing can help ensure compliance

• List best practices for IT security policy compliance monitoring

FYI

A security baseline defines a set of basic configurations to achieve specific security objectives. These security objectives are typically represented by security policies and a well-defined security framework. The security baseline reflects how you plan to protect resources that support the business.

Creating a Baseline Definition for Information Systems Security

Taking your policies and building security baselines is a good way to ensure compliance. For example, suppose you have about 200 servers and an Active Directory (AD) server that enforces password rules. Configuring the servers to use AD to authenticate ensures that their passwords meet standard requirements. Additionally, if the password rules within the policy are compliant with NIST standards, then AD might be an effective tool to enforce that aspect of regulatory compliance. So a baseline is a good starting point for enforcing compliance.

Within IT, a baseline provides a standard focused on a specific technology used within an organization. When applied to security policies, the baseline represents the minimum security settings that must be applied.

For example, imagine that an organization has determined every system needs to be hardened. The security policy defines specifically what to do to harden the systems. For example, the security policy could provide the following information:

• Protocols—Only specific protocols listed within the security policy are allowed. Other protocols must be removed.

• Services—Only specific services listed within the security policy are allowed. All other services are disabled.

• Accounts—The administrator account must be renamed. The actual name of the new administrator may be listed in the security policy, or this information could be treated as a company secret.

The security policy would have much more information but these few items give you an idea of what is included. The point is that baselines can be complex, referencing multiple policies. A server baseline, for example, may be configured to enforce both passwords and monitoring standards.

Baselines have many uses in IT. Anomaly-based intrusion detection systems (IDSs) use baselines to determine changes in network behavior. Server monitoring tools also use baselines to detect changes in system performance. This chapter focuses on the use of baselines as part of enforcing compliance with security policies and detecting security events.

An anomaly-based IDS detects changes in the network’s behavior. You start by measuring normal activity on the network, which becomes your baseline. The IDS then monitors activity and compares it against the baseline. As long as the comparisons are similar, activity is normal. When the network activity changes so that it is outside a predefined threshold, activity is abnormal.
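The comparison an anomaly-based IDS makes can be sketched in a few lines of Python. This is only an illustration and not how any particular IDS product works. The function names, the packets-per-minute samples, and the rule of three standard deviations as the predefined threshold are all assumptions chosen for the example.

```python
from statistics import mean, stdev

def build_baseline(samples):
    """Summarize normal activity as a mean and a standard deviation."""
    return mean(samples), stdev(samples)

def is_abnormal(observation, baseline, threshold=3.0):
    """Flag activity that falls outside the predefined threshold.

    The threshold is the number of standard deviations from the baseline
    mean, which is an assumed rule for this sketch.
    """
    average, spread = baseline
    return abs(observation - average) > threshold * spread

# Hypothetical packets-per-minute measurements taken during normal operation.
normal_traffic = [980, 1010, 1005, 995, 1020, 990, 1000]
baseline = build_baseline(normal_traffic)

print(is_abnormal(1015, baseline))  # close to the baseline
print(is_abnormal(5000, baseline))  # far outside the baseline
```

A real IDS tracks many more measurements than a single traffic rate, but the principle is the same: without the stored baseline there is nothing to compare against.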

Abnormal activity doesn’t always mean a security event. An alert could be what’s referred to as a “false positive.” That means the alert was triggered by activity that appears unusual but after closer examination can be explained. Consider an online retailer’s spike in activity during the holiday season, for instance, or the introduction of a new application on the network, generating new transactions. Much of this abnormal activity can be anticipated or detected. The IDS can be configured to ignore it.

However, the point of an IDS device is to detect security threats. For example, if a worm infected a network, it would increase network activity. The IDS recognizes the change as an anomaly and sends an alert. The anomaly-based IDS can’t work without first creating the baseline. You can also think of an anomaly-based IDS as a behavior-based IDS because it is monitoring the network behavior.

Administrators commonly measure server performance by measuring four core resources. These resources are the processor, the memory, the disk, and the network interface. When these are first measured and recorded, they provide a performance baseline. Sometime later, the administrator measures the resources again. As long as the measurements are similar, the server is still performing as expected. However, if there are significant differences that are not explainable, the change indicates a potential problem. For example, a denial of service (DoS) attack on the server may cause the processor and memory resource usage to increase.

A security baseline is also a starting point. For example, a security baseline definition for the Windows operating system identifies a secure configuration for the operating system in an organization. As long as all Windows systems use the same security baseline, they all start in a known secure state. Later, you can compare the security baseline against the current configuration of any system. If it’s different, something has changed. The change indicates the system no longer has the same security settings. If a security policy mandated the original security settings, the comparison shows the system is not compliant.
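Comparing a system against its security baseline amounts to checking each baseline setting against the current value. The following Python sketch shows the idea under simple assumptions: settings are modeled as dictionary entries, and the setting names and values are hypothetical rather than taken from any real operating system.

```python
def find_drift(baseline, current):
    """Return every baseline setting whose current value is different."""
    drift = {}
    for setting, expected in baseline.items():
        actual = current.get(setting)  # None if the setting is missing
        if actual != expected:
            drift[setting] = {"expected": expected, "actual": actual}
    return drift

# Hypothetical settings mandated by the security policy.
security_baseline = {
    "telnet_service": "disabled",
    "admin_account_renamed": True,
    "min_password_length": 8,
}

# Hypothetical settings read from a deployed system.
current_config = {
    "telnet_service": "enabled",
    "admin_account_renamed": True,
    "min_password_length": 8,
}

drift = find_drift(security_baseline, current_config)
compliant = not drift  # any difference means the system is out of compliance
print(drift)
print("Compliant:", compliant)
```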

Policy-Defining Overall IT Infrastructure Security Definition

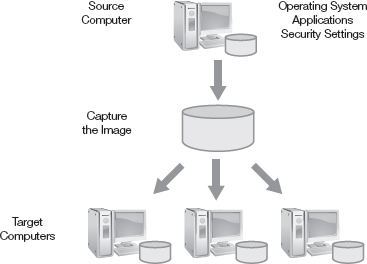

Many organizations use imaging techniques to provide baselines. An image can include the full operating system, applications, and system settings. This includes all the desired security and configuration settings required for the system.

Figure 15-1 shows an example of how imaging is accomplished. You start with a clean system referred to as a source computer. You install the operating system and any desired applications on the source computer. Next, you configure specific settings needed by users. Then you configure the security settings to comply with the security policy. For example, you could remove unneeded protocols, disable unused services, and rename the administrator account. You can lock this system down with as much security as you need or desire.

Next, you capture an image of the system. You can think of this as being like taking a snapshot. Imaging captures the entire software contents of the system at that moment. Symantec Ghost is a popular imaging program used to capture images of any operating system.

Once you’ve captured the image, you can deploy it to other systems. The original image is often referred to as the gold master. Each system that receives the image will have the same operating system and applications. It will also have the same security and configuration settings.

This baseline improves security for systems. It also reduces the total cost of ownership. Imagine that the security policy required changing 50 different security settings. Without the baseline, these settings would have to be configured separately across potentially hundreds or thousands of servers. The time involved to configure them separately may be substantial. Additionally, there’s no guarantee that all the settings would be configured exactly the same on each system when done manually.

If all the systems are configured the same, help desk personnel can troubleshoot them more quickly. This improves availability. Imagine if hundreds (or thousands) of different systems were configured in different ways. Help desk personnel would first need to determine the normal configuration when troubleshooting problems. Once they determined normal operation, they would then determine what’s abnormal in order to fix it. Each time they worked on a new system, they’d repeat the process. Worse yet, the same servers configured radically differently mean lack of consistency and lack of compliance with security standards.

However, if all the systems are the same, help desk personnel need to learn only one system. This knowledge transfers to all the other systems. Troubleshooting and downtime are reduced. Availability is increased. Compliance with security policies is consistently enforced.

Vulnerability Window and Information Security Gap Definition

The vulnerability window is the gap between when a new vulnerability is discovered and when software developers start writing a patch. Attacks during this time are referred to as zero-day attacks. You don’t know when the vulnerability window opens, since you don’t know when an attacker will find the vulnerability. However, most vendors will start writing patches as soon as they learn about the vulnerability. For example, Microsoft announced on April 26, 2014, a zero-day vulnerability affecting all versions of Internet Explorer. By May 1 a patch was released.

Similarly, there’s a delay between the time a patch is released and the time an organization patches its systems. Even if you start with a baseline, there is no way that it will always be up to date or will meet the needs of all your systems. The difference between the baseline and the actual security needs represents a security gap. For example, you may create an image on June 1. One month later, you may deploy the image to a new system. Most of the configuration and security settings will be the same. However, there may have been some changes or updates during the past month. Until those changes are applied, the gap gives attackers a vulnerability window that must be closed.
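The two delays described here can be measured with simple date arithmetic. The sketch below uses the Internet Explorer dates given in the text. The date on which the organization applies the patch is a hypothetical value added for illustration, as are the variable names.

```python
from datetime import date

def days_between(start, end):
    """Number of days from start to end."""
    return (end - start).days

vulnerability_announced = date(2014, 4, 26)  # from the example in the text
patch_released = date(2014, 5, 1)            # from the example in the text
patch_applied = date(2014, 5, 15)            # hypothetical deployment date

vendor_delay = days_between(vulnerability_announced, patch_released)
deployment_delay = days_between(patch_released, patch_applied)
total_exposure = vendor_delay + deployment_delay

print("Days until the vendor released a patch:", vendor_delay)
print("Days until the organization applied it:", deployment_delay)
print("Total days exposed after the announcement:", total_exposure)
```

The organization controls only the second number, which is why patch management gets so much attention.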

If your organization uses change management procedures, you can easily identify any changes that should be applied to the system. If you have automated methods to get updates to systems, you can use those to update it. If not, the system will need to be updated manually.

Patch management is an important security practice. Software vulnerabilities are routinely discovered in operating systems and applications. Vendors release patches or updates to plug the vulnerability holes. However, if the patches aren’t applied, the system remains vulnerable. Keeping systems patched helps an organization avoid significant attacks and outages.

Within a Microsoft domain, Group Policy deploys many settings. Group Policy allows an administrator to configure a setting once and it will automatically apply to multiple systems or users. If Group Policy is used to change settings, the changes will automatically apply to the computer when it authenticates on the domain. Additional steps are not required.

Tracking, Monitoring, and Reporting IT Security Baseline Definition and Policy Compliance

A baseline is a good place to start. It ensures that the systems are in compliance with security requirements when they are deployed. However, it’s still important to verify that the systems stay in compliance. An obvious question is how the systems may have been changed so they aren’t in compliance. Administrators or technicians may change a setting to resolve a problem. For example, an application may not work unless security is relaxed. These changes may weaken security so that the application works. Malicious software (malware) such as a virus may also change a security setting.

It doesn’t matter how or why the setting was changed. The important point is that if it was an unauthorized change, you want to know about it. You can verify compliance using one or more of several different methods. These methods simply check the settings on the systems to verify they haven’t been changed. Several common methods include:

• Automated systems

• Random audits and departmental compliance

• Overall organizational report card for policy compliance

Automated Systems

Automated systems can regularly query systems to verify compliance. For example, the security policy may dictate that specific protocols be removed or specific services be disabled. For instance, the policy may require password-protected screen savers. An automated system can query the systems to determine if these settings are enabled and match the gold master.

Many automated tools include scheduling abilities. You can schedule the tool to run on a regular basis. Advanced tools can also reconfigure systems that aren’t in compliance. All you have to do is review the resulting report to verify systems are in compliance. For example, assume your company has 100 computers. You could schedule the tool to run every Saturday night. It would query each of the systems to determine their configuration and verify compliance. When the scans are complete, the tool would provide a report showing all of the systems that are out of compliance including the specific issues. If your organization is very large, you could configure the scans to run on different computers every night.
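Spreading scans across different nights is a matter of dividing the inventory into batches. The following Python sketch shows one possible approach, assigning hosts to nights in round-robin order. The host names and the seven-night cycle are assumptions for the example, and a commercial tool would handle this through its own scheduler.

```python
def nightly_batches(hosts, nights=7):
    """Assign hosts to nights in round-robin order, one batch per night."""
    batches = [[] for _ in range(nights)]
    for index, host in enumerate(hosts):
        batches[index % nights].append(host)
    return batches

# Hypothetical inventory of 100 computers.
hosts = [f"pc-{number:03d}" for number in range(1, 101)]
schedule = nightly_batches(hosts, nights=7)

for night, batch in enumerate(schedule, start=1):
    print(f"Night {night}: {len(batch)} systems, starting with {batch[0]}")
```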

Microsoft provides several automated tools you can use to manage Microsoft products. Although Microsoft isn’t the only tool developer to choose from, it does have a large installed base of computers in organizations. It’s worthwhile knowing which tools are available. These include:

• Microsoft Baseline Security Analyzer (MBSA)—MBSA is a free download that can query systems for common vulnerabilities. It starts by downloading an up-to-date Extensible Markup Language (XML) file. This file includes information on known vulnerabilities and released patches. MBSA can scan one or more systems in a network for these vulnerabilities. It keeps a history of reports showing all previous scans.

• Systems Management Server (SMS)—This is an older server product but is still used in many organizations. It combines and improves the capabilities of several other products. It can query systems for vulnerabilities using the same methods used by MBSA. It can deploy updates. It adds capabilities to these tasks, such as the ability to schedule the deployment of updates and the ability to push out software applications.

• System Center Configuration Manager (SCCM)—SCCM is an upgrade to SMS. It can do everything that SMS can do and provides several enhancements. SCCM can deploy entire operating system images to clients.

In addition to the Microsoft products, there is a wide assortment of other automated tools. These can run on other operating systems and scan both Microsoft and other operating systems such as UNIX and UNIX derivatives. Many scanner types and versions are available. The following are several that are on the market today:

• Nessus—Nessus is considered by many to be the best UNIX vulnerability scanner. Nessus was being used by more than 75,000 organizations worldwide before it switched from a free product to one you had to purchase in 2008.

• Nmap—A network scanner that can identify hosts on a network and determine services running on the hosts. It uses a ping scanner to identify active hosts. It uses a port scanner to identify open ports, and likely protocols running on these ports. It is also able to determine the operating system.

• eEye Digital Security Retina—Retina is a suite of vulnerability management tools. It can assess multiple operating systems such as Microsoft, Linux, and other UNIX distributions. It also includes patch deployment and verification capabilities.

• SAINT (Security Administrators Integrated Network Tool)—SAINT provides vulnerability assessments by scanning systems for known vulnerabilities. It can perform penetration testing which attempts to exploit vulnerabilities. SAINT runs on a UNIX/Linux platform but it can test any system that has an Internet Protocol (IP) address. It also provides reports indicating how to resolve the detected problems.

• Symantec Altiris—Altiris includes a full suite of products. It can manage and monitor multiple operating systems. This includes monitoring systems for security issues and deployed patches.

FYI

While vulnerability scanners continue to be important tools, they do have their limitations. First, they are only as good as their testing scripts and approach. No scanner can find all vulnerabilities. Second, some scanners need elevated access to the system’s configuration file in order to get the best results. This makes the scanner itself a potential point of vulnerability that could become compromised by malware.

It’s also possible to use logon scripts to check for a few key settings. For example, a script can check to see if anti-malware software is installed and up to date, or if the system has current patches. The script runs each time a user logs on.

Some organizations quarantine systems that are out of compliance. In other words, if a scan or a script shows the system is not in compliance, a script modifies settings to restrict the computer’s access on the network. The user must contact an administrator to return the system to normal.
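The logic of a logon check followed by quarantine can be sketched as follows. This Python example is illustrative only. A real logon script would query the operating system for this information, whereas here the system status is supplied as a hypothetical dictionary, and the seven-day limit on signature age is an assumed policy value.

```python
from datetime import date, timedelta

MAX_SIGNATURE_AGE = timedelta(days=7)  # assumed policy value

def check_system(status, today):
    """Return a list of compliance problems found at logon."""
    problems = []
    if not status["antimalware_installed"]:
        problems.append("anti-malware software is not installed")
    elif today - status["signatures_updated"] > MAX_SIGNATURE_AGE:
        problems.append("anti-malware signatures are out of date")
    if status["missing_patches"]:
        problems.append("missing patches: " + ", ".join(status["missing_patches"]))
    return problems

def network_access(problems):
    """Restrict network access when any problem is found."""
    return "quarantined" if problems else "normal"

# Hypothetical status gathered when the user logs on.
status = {
    "antimalware_installed": True,
    "signatures_updated": date(2024, 1, 1),
    "missing_patches": [],
}
problems = check_system(status, today=date(2024, 1, 20))
access = network_access(problems)
print(access, problems)
```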

SCAP Compliance Tools

The Security Content Automation Protocol (SCAP) is presented later in this chapter. SCAP is one of the programs of the National Institute of Standards and Technology (NIST). SCAP defines protocols and standards used to create a wide variety of automated scanners and compliance tools.

NIST accredits independent laboratories. These independent laboratories validate SCAP-compliant tools for the following automated capabilities:

• Authenticated configuration scanner—The scanner uses a privileged account to authenticate on the target system. It then scans the system to determine compliance with a defined set of configuration requirements.

• Authenticated vulnerability and patch scanner—The scanner uses a privileged account to authenticate on the target system. It then scans the system for known vulnerabilities. It can also determine if the system is patched, based on a defined patch policy.

• Unauthenticated vulnerability scanner—The scanner doesn’t use a system account to scan the system. This is similar to how an attacker may scan the system. It scans the system over the network to determine the presence of known vulnerabilities.

• Patch remediation—This tool installs patches on target systems. These tools include a scan or auditing component. The patch scanner determines a patch is missing and the patch remediation tool applies the missing patch.

• Misconfiguration remediation—This tool reconfigures systems to bring them into compliance. It starts by scanning the system to determine if a defined set of configuration settings are accurate. It then reconfigures any settings that are out of compliance.
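The scan-then-fix pattern used by remediation tools can be illustrated with a short Python sketch. It is a simplified model, not a SCAP-validated implementation: the required settings and the system state are hypothetical dictionaries, and `remediate` is a name invented for this example.

```python
def remediate(required, system):
    """Scan a system against required settings and correct any that differ.

    Returns a list of (setting, old_value, new_value) tuples so the
    changes can be reported.
    """
    changes = []
    for setting, required_value in required.items():
        old_value = system.get(setting)
        if old_value != required_value:
            system[setting] = required_value
            changes.append((setting, old_value, required_value))
    return changes

# Hypothetical required configuration and current system state.
required = {"screen_saver_password": True, "ftp_service": "disabled"}
system = {"screen_saver_password": False, "ftp_service": "disabled"}

changes = remediate(required, system)
print(changes)                      # what was reconfigured
print(remediate(required, system))  # a second pass finds nothing to fix
```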

You can read more about the SCAP validation program on NIST’s site here: http://scap.nist.gov/validation/index.html.

Random Audits and Departmental Compliance

You can also perform random audits to determine compliance. This is often useful when IT tasks have been delegated to different elements in the organization. For example, a large organization could have a decentralized IT model. A central IT department manages some core services such as network access and e-mail, and individual departments manage their own IT services. The organization still has an overall security policy. However, the individual departments are responsible for implementing it. In this case, the central IT department could randomly audit the departments to ensure compliance. Some larger organizations employ specialized security teams. These teams have a wide variety of responsibilities in the organization such as incident response and boundary protection. They could also regularly scan systems in the network and randomly target specific department resources.

It’s important to realize the goal of these scans. It isn’t to point fingers at individual departments for noncompliance. Instead, it’s to help the organization raise its overall security posture. Of course, when departments realize their systems could be scanned at any time, this provides increased motivation to ensure the systems are in compliance.

Overall Organizational Report Card for Policy Compliance

Many organizations use a report card format to evaluate policy compliance. These report cards can be generated from multiple sources such as a quality assurance program. Organizations can create their own grading criteria. However, just as in school, a grade of A is excellent while a grade of F is failing. The included criteria depend on the organization’s requirements. For example, the following elements can be included in the calculation of the grade:

• Patch compliance—This compares the number of patches that should be applied versus the number of patches that are applied. A time period should be considered. Patches that close major vulnerabilities might be considered critical and must be applied sooner. For example, the organization could state a goal of having 100 percent of critical patches deployed within seven days of their release. The only exception would be if testing of any patch verified that the patch caused problems.

FYI

One approach to determining whether a patch is critical is to determine its risk score. You can look at the likelihood and impact to the organization if an attack were to happen without the patch having been applied. This approach applies numerical values to likelihood and impact (say from 0 to 9), with the higher number indicating an attack is very likely and, if successful, would have significant impact to the organization. With these values assigned, risk can be scored as Risk = Likelihood × Impact. The higher the risk score, the more critical the patch. This system is referred to as the OWASP Risk Rating Methodology. More information is available at www.owasp.org.

• Security settings—The baseline sets multiple security settings. These should all stay in place. However, if an audit or scan shows that the settings are different, it represents a conflict. Each security setting that is not in compliance is assigned a value. For example, every setting that is different from the baseline could represent a score of 5 percent. If scans detect three differences, the score could be 85 percent (100 percent minus 15 percent).

• Number of unauthorized changes—Most organizations have formal change control processes. When these are not followed, or they do not exist, changes frequently cause problems. These problems may be minor problems affecting a single system or major problems affecting multiple systems or the entire network. Every unauthorized change would represent something less than 100 percent. For example, 15 unauthorized changes within a month could represent a score of 85 percent, or a grade of B in this category.

Once you identify the rules or standards, you could use a spreadsheet to calculate the grades. An administrator could pull the numbers from the scans and enter them into the spreadsheet monthly. You could use individual grade reports for each department that manages IT resources, and combine them into a single grade report for the entire organization.
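The same calculations a spreadsheet would perform can be sketched in Python. The penalty values below follow the examples in the text (5 percent per differing setting, and 15 unauthorized changes producing 85 percent). The letter-grade cutoffs and the function names are assumptions, because each organization defines its own grading criteria.

```python
def patch_compliance(applied, required):
    """Percentage of required patches that have actually been applied."""
    return 100.0 if required == 0 else 100.0 * applied / required

def settings_score(differences, penalty=5):
    """Subtract a penalty for every setting that differs from the baseline."""
    return max(0, 100 - penalty * differences)

def changes_score(unauthorized_changes, penalty=1):
    """Subtract a penalty for every unauthorized change in the period."""
    return max(0, 100 - penalty * unauthorized_changes)

def risk_score(likelihood, impact):
    """Risk = Likelihood x Impact, with each factor rated from 0 to 9."""
    return likelihood * impact

def letter_grade(score):
    """Convert a percentage into a letter grade (assumed cutoffs)."""
    for cutoff, grade in ((90, "A"), (80, "B"), (70, "C"), (60, "D")):
        if score >= cutoff:
            return grade
    return "F"

# Hypothetical monthly numbers for one department.
scores = {
    "Patch compliance": patch_compliance(applied=45, required=50),
    "Security settings": settings_score(differences=3),
    "Unauthorized changes": changes_score(unauthorized_changes=15),
}
for category, score in scores.items():
    print(f"{category}: {score:.0f} percent, grade {letter_grade(score)}")
```

Averaging the category scores, or weighting them, would give a single grade per department. Which method to use is a policy decision rather than a technical one.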

Automating IT Security Policy Compliance

One thing that computers are good at is repetition. They can do the same task repeatedly and always give the same result. Conversely, people aren’t so good at repetitious tasks. Ask an administrator to change the same setting on 100 different computers and it’s very possible that one or more of the computers will not be configured correctly. Additionally, automated tasks take less labor and ultimately cost less money. For example, a task that takes 15 minutes per computer will take 25 labor hours to complete on 100 computers (15 × 100 / 60). If an administrator took 2 hours to write and schedule a script to do the same work, the result is a savings of 23 labor hours. Depending on how much your administrators are paid, the savings can be significant.
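The labor arithmetic can be checked with a few lines of Python, using the figures from the example (15 minutes per system, 100 systems, and 2 hours to write and schedule a script). The function name is chosen only for illustration.

```python
def manual_labor_hours(minutes_per_system, systems):
    """Total hours needed to perform a task by hand on every system."""
    return minutes_per_system * systems / 60

manual_hours = manual_labor_hours(minutes_per_system=15, systems=100)
scripting_hours = 2
savings = manual_hours - scripting_hours

print("Manual effort:", manual_hours, "hours")
print("Savings from automating:", savings, "hours")
```

The scripting cost stays fixed while the manual cost grows with the number of systems, so the savings increase as the environment gets larger.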

Many security tasks can also be accomplished with dedicated tools. For example, the “Automated Systems” section earlier in this chapter listed several different tools with a short description of some of their capabilities. However, if you plan to automate any tasks related to IT security policy compliance, you should address some basic concerns. These include:

• Automated policy distribution

• Configuration and change management

technical TIP

Open source configuration management software has become widely available and accepted in many organizations. Many of the open source solutions support newer technologies such as integrating with cloud providers. For example, a product named “Chef” offers configuration management for network nodes either on premises or in the cloud.

Automated Policy Distribution

Earlier in this chapter, imaging was described as a method to start all computers with a known baseline. This is certainly an effective way to create baselines. However, after the deployment of the baseline, how do you ensure that systems stay up to date? For example, you could deploy an image to a system on July 1. On July 15, the organization approves a change. You can modify the image for new systems, but how do you implement the change on the system that received the image on July 1, as well as on the other existing systems? Additionally, if you didn’t start with an image as a baseline, how do you apply these security settings to all the systems on the network?

The automated methods you use are dependent on the operating systems in the organization. If the organization uses Microsoft products, you have several technologies you can use. Some such as SMS and SCCM were mentioned previously. Another tool is Group Policy.

Group Policy is available in Microsoft domains. It can increase security for certain users or departments in your organization. You could first apply a baseline to all the systems with an image. You would then use Group Policy to close any security gaps, or increase security settings for some users or computers. This method can also ensure regulatory and standards compliance such as with those controls required to protect credit cards under the Payment Card Industry Data Security Standard (PCI DSS).

Consider Figure 15-2 as an example. The organization name is ABC Corp and the domain name is ABCCorp.int. The Secure Password Policy is linked to the domain level to require secure passwords for all users in the domain. The organization could define a password policy requiring all users to have strong passwords of at least six characters. If the organization later decides it wants to change this to a more secure eight-character policy, an administrator could change the Secure Password Policy once and then it would apply to all users in the domain. It doesn’t matter if there are 50 or 50,000 users—a single change applies to all equally.

All the PCI DSS server objects are placed in the PCI Servers organizational unit (OU). A policy named PCI DSS Lock Down Policy is linked to this OU. It configures specific settings to ensure these servers are in compliance with PCI DSS requirements.

Human resources (HR) personnel handle health data covered by the Health Insurance Portability and Accountability Act (HIPAA). These users and computers can be placed in the HR OU. The HIPAA Compliance Policy includes Group Policy settings to enforce HIPAA requirements. For example, it could include a script that displays a logon screen reminding users of HIPAA compliance requirements and penalties.

The figure also shows a research and development OU, named RnD OU, for that area. Users and computers in the research and development department would be placed in the RnD OU. The RnD policy could include extra security settings to protect data created by the RnD department.
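The layout in Figure 15-2 can be pictured as a lookup from containers to the policies linked to them. The following Python sketch is illustrative only. The dictionary, the function name, and the short OU labels are assumptions modeled on the figure, and real Group Policy processing applies more rules (such as precedence and blocking) than are shown here.

```python
# Hypothetical model of the policy links described for Figure 15-2.
# Keys are containers; values are the policies linked to them.
POLICY_LINKS = {
    "ABCCorp.int": ["Secure Password Policy"],
    "PCI Servers": ["PCI DSS Lock Down Policy"],
    "HR": ["HIPAA Compliance Policy"],
    "RnD": ["RnD Policy"],
}

DOMAIN = "ABCCorp.int"


def policies_for(ou):
    """Return domain-level policies followed by any policies linked to the OU."""
    return POLICY_LINKS[DOMAIN] + POLICY_LINKS.get(ou, [])


print(policies_for("HR"))
print(policies_for("PCI Servers"))
```

Every OU receives the domain-level password policy, and only the targeted OUs receive the extra settings.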

Group Policy not only configures the changes when systems power on, but also provides automatic auditing and updating. Systems query the domain every 90 to 120 minutes by default. Any Group Policy changes are applied to affected systems within two hours. Additionally, systems will retrieve and apply security settings from Group Policy every 16 hours even if there are no changes.
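The 90-to-120-minute window is easier to see as arithmetic. This is a rough sketch that assumes the window comes from a 90-minute base interval plus a random offset of up to 30 minutes. The function name and constants are illustrative and are not part of any Microsoft API.

```python
import random

BASE_MINUTES = 90        # base refresh interval described above
MAX_OFFSET_MINUTES = 30  # assumed random offset, giving the 90-to-120-minute window


def next_refresh_delay(rng=random):
    """Return minutes until the next background policy refresh (90 to 120)."""
    return BASE_MINUTES + rng.uniform(0, MAX_OFFSET_MINUTES)


delay = next_refresh_delay()
print(f"Next refresh in about {delay:.0f} minutes")
```

Spreading the refresh times this way keeps thousands of systems from querying the domain at the same moment.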

Training Administrators and Users

These tools and technologies aren’t always easy to understand. They include a rich set of capabilities that require a deep understanding before they can be used effectively. It takes time and money to train administrators and users how to get the most out of these tools.

Almost all the companies that sell these tools also sell training. You can send administrators to train at a vendor location. If you have enough employees who require training, you can conduct it on-site.

The important point to remember is that even the most “valuable” tool is of no value if it’s not used. Training should be considered when evaluating tools. Determine if training is available and its cost.

Organizational Acceptance

Organizational acceptance is also an important consideration. Resistance to change can be a powerful block in many organizations. If the method you’re using represents a significant change, it may be difficult for some personnel to accept. As an example, using a baseline of security settings can cause problems. Applications that worked previously may no longer work. Web sites that were accessible may no longer be. There are usually work-around steps that will resolve the problem, but it will take additional time and effort.

To illustrate, assume that a baseline is being tested for deployment. Administrators in one department determine that the baseline settings prevent an application from working. There are three methods for resolving the problem. First, the administrators could weaken security by not using the baseline settings. However, weakening security is not a good option. Second, the department may decide it can no longer use the application. If the application is critical to its mission, this may not be a good option. Third, a work-around method can be identified that doesn’t bypass the original baseline settings, but also allows the application to work. The third option will require digging in to resolve the problem. If the administrators are already overburdened, or don’t have the knowledge or ability to resolve the problem, they may push back. This requires a climate of collaboration to address the problem.

Testing for Effectiveness

FYI

Vulnerability scanning is a common tool and part of normal security operations. Ideally, it quickly and routinely identifies vulnerabilities to be fixed. Penetration testing takes that capability to the next level. A penetration test is designed to actually exploit weaknesses in the system architecture or computing environment. Typically, a penetration test involves a team pretending to be hackers, using vulnerability scanners, social engineering, and other techniques to try to hack the network or system.

Not all automated tools work the same. Before investing too much time and energy into any single tool, it’s worth testing the candidate tools to determine their effectiveness. Some of the common things to look for in a tool include:

• Accurate identification—Can the tool accurately identify systems? Does it know the difference between a Microsoft server running Internet Information Services (IIS) and a Linux system running Apache, for example?

• Assessment capabilities—Does it scan for common known vulnerabilities? For example, can it detect weak or blank passwords for accounts?

• Discovery—Can the system accurately discover systems on the network? Can it discover both wired and wireless systems?

• False positives—Does it report problems that aren’t there? For example, does it report that a patch has not been applied when it has?

• False negatives—Does it miss problems that exist? In other words, if a system is missing a patch, can the tool detect it?

• Resolution capabilities—Can the tool resolve the problem, or at least identify how it can be resolved? Some tools can automatically correct the vulnerability. Other tools provide directions or links to point you in the right direction.

• Performance—The speed of the scans is important, especially in large organizations. How long does the scan of a single system take? How long will it take to scan your entire network?
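One way to measure false positives and false negatives is to run the tool against systems whose patch state you have already verified by hand. The sketch below assumes both lists are available as sets of (host, patch) pairs. The host names and patch identifiers are invented for illustration.

```python
def compare_scan(actual_missing, reported_missing):
    """Compare a tool's findings with a manually verified list of missing patches.

    Both arguments are sets of (host, patch) pairs.
    """
    false_positives = reported_missing - actual_missing  # reported, but the patch is applied
    false_negatives = actual_missing - reported_missing  # truly missing, but not reported
    return false_positives, false_negatives


# Hypothetical test data: hosts and patch IDs are made up for illustration.
actual = {("srv1", "PATCH-001"), ("srv2", "PATCH-002")}
reported = {("srv1", "PATCH-001"), ("srv3", "PATCH-003")}

fp, fn = compare_scan(actual, reported)
print("False positives:", fp)
print("False negatives:", fn)
```

A tool that produces many entries in either set on a small, known test group is likely to do worse across the whole network.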

Audit Trails

If a tool makes any changes on the network, it’s important that these changes are recorded. Changes are recorded in change management logs that create an audit trail. The tool making the change can record changes it makes on any systems. However, it’s common for logs to be maintained for individual systems being changed, separate from the change management log.

Logs can be maintained on the system, or off-system. The value in having off-system logs is that if the system is attacked or fails, the logs are still available. Additionally, some legal and regulatory requirements dictate that logs be maintained off-system.

Audit trails are especially useful for identifying unauthorized changes. Auditing logs the details of different events. This includes who, what, where, when, and how. If a user made a change, for example, the audit log would record who the user was, what the user changed, where the change was made, when it occurred, and how it was done.

Imagine a security baseline is deployed in the organization. You discover that one system is regularly being reconfigured. The security tool fixes it, but the next scan shows it’s been changed again. You may want to know who is making this change. If auditing is enabled, it will record the details. You only need to view the audit trail to determine what is going on.
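The scenario above amounts to a simple query over audit records. The following sketch assumes each record is a dictionary with who, what, where, when, and how fields. The account names, system name, and record layout are hypothetical and do not reflect any particular product's log format.

```python
from collections import Counter

# Hypothetical audit records: who, what, where, when, and how.
audit_log = [
    {"who": "jsmith", "what": "firewall disabled", "where": "PC-42",
     "when": "2024-03-01T09:15", "how": "control panel"},
    {"who": "svc_scan", "what": "firewall enabled", "where": "PC-42",
     "when": "2024-03-01T12:00", "how": "security tool"},
    {"who": "jsmith", "what": "firewall disabled", "where": "PC-42",
     "when": "2024-03-02T09:10", "how": "control panel"},
]


def repeat_changers(log, system, change):
    """Count how often each account made the given change on the given system."""
    return Counter(
        entry["who"]
        for entry in log
        if entry["where"] == system and entry["what"] == change
    )


print(repeat_changers(audit_log, "PC-42", "firewall disabled"))
```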

Configuration Management and Change Control Management

The Information Technology Infrastructure Library (ITIL) includes five books that represent the ITIL life cycle, as shown in Figure 15-3. A central part of ITIL is Service Transition. This relates to the transition of services into production. It includes configuration management and change management.

ITIL isn’t an all-or-nothing approach. Organizations often adopt portions of ITIL practices without adopting others. Configuration management and change management are two elements within the Service Transition stage that many organizations do adopt.

FIGURE 15-3

The ITIL life cycle.

Configuration management (CM) establishes and maintains configuration information on systems throughout their life cycle. This includes the initial configuration established in the baseline. It also includes recording changes. The initial public draft of NIST SP 800-128 defines CM as a collection of activities. These activities focus on establishing the integrity of the systems by controlling the processes that affect their configurations. It starts with the baseline configuration. CM then controls the process of changing and monitoring configuration throughout the system’s lifetime.

Change management is a formal process that controls changes to systems. One of the common problems with changes in many organizations is that they cause outages. When an unauthorized change is completed on one system, it can negatively affect another system. These changes aren’t done to cause problems. Instead, well-meaning technicians make a change to solve a smaller problem and unintentionally create bigger problems. Successful change management ensures changes have minimal impact on operations. In other words, change management ensures that a change to one system does not take down another system.

Change management is also important to use with basic security activities such as patching systems. Consider for a moment what the worst possible result of a patch might be. Although patches are intended to solve problems, they occasionally cause problems. The worst-case scenario is when a patch breaks a system. After the patch is applied, the system no longer boots into the operating system.

If a patch broke your home computer, it would be inconvenient. However, if a patch broke 500 systems in an organization, it could be catastrophic. Patches need to be tested and approved before they are applied. Many organizations use a change management process before patching systems.

Configuration Management Database

A configuration management database (CMDB) holds the configuration information for systems throughout a system’s life cycle. The goal is to identify the accurate configuration of any system at any moment.

The CMDB holds all the configuration settings, not just the security settings. However, this still applies to security. The security triad includes confidentiality, integrity, and availability. Many of the configuration settings ensure the system operates correctly. A wrongly configured system may fail, resulting in loss of availability.
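The goal of knowing a system's configuration at any moment can be sketched as replaying recorded changes up to a chosen date. This is a minimal illustration, assuming the CMDB stores a date-ordered change history per system. The setting names and dates are hypothetical.

```python
from bisect import bisect_right

# Hypothetical change history for one system: (ISO date, setting, value), sorted by date.
history = [
    ("2024-07-01", "min_password_length", 6),   # baseline image
    ("2024-07-15", "min_password_length", 8),   # approved change
    ("2024-08-01", "screen_lock_minutes", 10),
]


def config_as_of(records, date):
    """Rebuild the settings in effect on the given ISO date by replaying changes."""
    dates = [r[0] for r in records]
    upto = bisect_right(dates, date)  # index just past the last change on or before date
    config = {}
    for _, setting, value in records[:upto]:
        config[setting] = value  # later changes overwrite earlier ones
    return config


print(config_as_of(history, "2024-07-10"))
print(config_as_of(history, "2024-08-15"))
```

Because ISO dates sort correctly as text, plain string comparison is enough for this sketch.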

Tracking, Monitoring, and Reporting Configuration Changes

Many organizations use a formal process for change requests. It’s not just a technician asking a supervisor if he or she can make a change. For example, a Web application could be used to submit changes. Administrators or technicians submit the request via an internal Web page. The Web page would collect all the details of the change and record them in the change control work order database. Details may include the system, the actual change, justification, and the submitter.

Key players in the organization review the change requests and provide input using the same intranet Web application. These key players may include senior IT experts, security experts, management personnel, and disaster recovery experts. Each will examine the change as it relates to his or her area of expertise and determine if it will result in a negative impact. If all the experts agree to the change, it’s approved.
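The review process described above can be sketched as a small record type. The class, its field names, and the list of reviewer roles are assumptions made for illustration. They do not describe the schema of any particular work order system.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeRequest:
    """A hypothetical work order record; field names are illustrative."""
    system: str
    change: str
    justification: str
    submitter: str
    reviews: dict = field(default_factory=dict)  # reviewer role -> True/False

    def record_review(self, role, approves):
        self.reviews[role] = approves

    def is_approved(self, required_roles):
        """Approved only when every required reviewer has responded and agreed."""
        return all(self.reviews.get(role) is True for role in required_roles)


REQUIRED = ["IT", "security", "management", "disaster recovery"]

request = ChangeRequest("print-srv-01", "change IP address", "resolve conflict", "tech1")
for role in REQUIRED[:3]:
    request.record_review(role, True)
print(request.is_approved(REQUIRED))   # one review is still outstanding

request.record_review("disaster recovery", True)
print(request.is_approved(REQUIRED))
```

Treating a missing review the same as a rejection keeps a change from slipping through simply because someone never saw it.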

Collaboration and Policy Compliance Across Business Areas

It’s always important for different elements of a business to get along. One element of the business should not make changes without any thought to how it may affect other areas. Collaboration is also important within IT and security policy compliance.

Some security policies must apply to all business areas equally. However, other policies can be targeted to specific departments. If you look back to Figure 15-2, this shows an excellent example of how different requirements are addressed within a Microsoft domain. Group Policy applies some settings to all business areas equally. Additionally, it can configure different settings for different departments or business areas if needed.

In Figure 15-2, different policies were required for PCI DSS servers, HR personnel, and research and development personnel. Each of these business areas has its own settings that don’t interfere with the other units.

No matter how hard you try to communicate through the approval process, inevitably some miscommunication will occur. Someone will be caught off guard by a change, or two changes will conflict. You might find multiple changes need to be backed out, but the resources are not available as expected to perform the recovery. Or the miscommunication will occur because the full scope of the change was not fully understood.

There’s no magic formula to solving all these problems. A good rule of thumb is to overcommunicate and build change into a predictable schedule and set of resources. For example, maybe routine (non-critical) patches are always applied the first Saturday of every month. Create a SharePoint site to house the descriptions of the changes. Commit to having descriptions of the changes available the week prior. Send reminder e-mails both to approvers and stakeholders. In short, be transparent and make a best effort to communicate accurately and often. This provides every opportunity for stakeholders to be engaged and offer comment.
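A predictable schedule like the one in the example is easy to compute ahead of time. This sketch finds the first Saturday of a month and the date one week earlier, when the change descriptions would be due. The function names are hypothetical.

```python
from datetime import date, timedelta

SATURDAY = 5  # date.weekday(): Monday is 0, Saturday is 5


def first_saturday(year, month):
    """Return the date of the first Saturday in the given month."""
    first = date(year, month, 1)
    return first + timedelta(days=(SATURDAY - first.weekday()) % 7)


def announcement_deadline(patch_day):
    """Descriptions are due one week before the patch window."""
    return patch_day - timedelta(days=7)


patch_day = first_saturday(2024, 7)
print(patch_day, announcement_deadline(patch_day))
```

Publishing these dates for the whole year lets approvers and stakeholders plan around them.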

Version Control for Policy Implementation Guidelines and Compliance

Another consideration related to automating IT security policy compliance is version control. First, it’s important to use version control for the security policy itself. In other words, if the security policy is changed, the document should record the change. A reader should be able to determine if changes have occurred since the policy was originally released, with an idea of what those changes were.

Version control requirements for a document can be as simple as including a version control page. The page identifies all the changes made to the original in a table format. It would often include the date and other details of the change. It may also include who made the change.

It’s also important to record the actual changes to systems. However, these changes are also recorded in the change control work order database and the CMDB discussed in the previous section.

Compliance Technologies and Solutions

Organizations use both emerging and existing technologies to ensure compliance. One particular challenge is how to update and track regulatory changes and new rules, including how to use them to coordinate policy management and compliance training.

This section presents some of the notable technologies. They are:

• COSO Internal Controls Framework

• Security Content Automation Protocol (SCAP)

• Simple Network Management Protocol (SNMP)

• Web-Based Enterprise Management (WBEM)

• Digital signatures

COSO Internal Controls Framework

The COSO Internal Control—Integrated Framework was developed by the “Committee of Sponsoring Organizations of the Treadway Commission.” That led to the term COSO. The organization was formed in 1985 and published the original framework in 1992, with the main idea of creating a framework of controls to ensure a company’s financial reports were accurate and free from fraud. The COSO framework has evolved over the years with the latest version published in May 2013. Since 1992, both technology in general and the Internet in particular have evolved. Not surprisingly, technology and information security have become major parts of the COSO controls framework.

In fact, COSO, like COBIT, is often used by auditors, compliance professionals, and risk professionals. COSO is widely used and recognized as a major US standard that has been adopted worldwide. Because COSO controls apply both to business functions (such as financial accounting) and to technology (such as information security), they make a powerful framework. The framework can describe how controls should be built, in both business and technology terms. This has enormous benefits for the security team. The security team can build controls in a way that the business side is more likely to understand. That makes it a little easier to talk the language of the business side. That, in turn, leads to greater business support for adopting the security controls.

The COSO control framework works well with other frameworks, such as COBIT. In fact, COBIT 5 leverages both COSO and ISO principles and extends the work into many information security areas not handled by COSO. So rather than competing, these frameworks actually complement each other.

COSO outlines how controls should be built and managed in order to ensure compliance with many major regulations today. For example, the governance body for the Sarbanes-Oxley (SOX) Act of 2002 recommends the COSO internal framework as a means of compliance with SOX. In other words, implementing the COSO control framework is a recognized way to work toward and demonstrate SOX compliance, although the framework alone does not guarantee it.

Consequently, COSO is a powerful framework to ensure that risks are well managed and the right controls built to keep systems compliant with many laws and regulations.

SCAP

The Security Content Automation Protocol (SCAP), pronounced “S-cap,” is a technology used to measure systems and networks. It’s actually a suite of six specifications. Together these specifications standardize how security software products identify and report security issues. SCAP is a trademark of NIST.

NIST created SCAP as part of its responsibilities under the Federal Information Security Management Act (FISMA). The goal is to establish standards, guidelines, and minimum requirements for tools used to scan systems. Although SCAP is designed for the creation of tools to be used by the U.S. government, private entities can use the same tools.

The six specifications are:

• eXtensible Configuration Checklist Description Format (XCCDF)—This is a language used for writing security checklists and benchmarks. It can also report results of any checklist evaluations.

• Open Vulnerability and Assessment Language (OVAL)—This is a language used to represent system configuration information. It can assess the state of systems and report the assessment results.

• Common Platform Enumeration (CPE)—This provides specific names for hardware, operating systems, and applications. CPE provides a standard system-naming convention for consistent use among different products.

• Common Configuration Enumeration (CCE)—This provides specific names for security software configurations. CCE is a dictionary of names for these settings. It provides a standard naming convention used by different SCAP products.

• Common Vulnerabilities and Exposures (CVE)—This provides specific names for security-related software flaws. CVE is a dictionary of publicly known software flaws. The MITRE Corporation manages the CVE.

• Common Vulnerability Scoring System (CVSS)—This provides an open specification to measure the relative severity of software flaw vulnerabilities. It provides formulas using standard measurements. The resulting score is from zero to 10 with 10 being the most severe.

FYI

The MITRE Corporation is a private company that performs a lot of work for U.S. government agencies. For example, MITRE maintains the CVE for the National Cyber Security Division of the U.S. Department of Homeland Security. Many of the original employees came from MIT and they work on research and engineering (RE). However, MITRE is not an acronym. Additionally, MITRE is not part of Massachusetts Institute of Technology (MIT).
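To show how a zero-to-10 severity score is typically used, the sketch below sorts findings by score and labels each one. It assumes the qualitative rating bands published for CVSS version 3 (None, Low, Medium, High, Critical), which are newer than the SCAP version discussed here. The finding names are placeholders, not real CVE entries.

```python
def cvss_severity(score):
    """Map a CVSS base score (0.0 to 10.0) to a CVSS v3 qualitative rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


# Hypothetical findings keyed by placeholder identifiers, not real CVE entries.
findings = {"FINDING-A": 9.8, "FINDING-B": 5.3, "FINDING-C": 2.1}
for name, score in sorted(findings.items(), key=lambda item: item[1], reverse=True):
    print(name, score, cvss_severity(score))
```

Because every SCAP-compliant tool reports on the same scale, scores from different products can be sorted and compared in one list.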

SCAP isn’t a tool itself. Instead, it’s the protocol used to build the tools. Compare this to HTTP, the protocol that carries Web traffic over the Internet. HTTP can’t display anything itself. Instead, Web browsers such as Internet Explorer are the tools that use HTTP to send and receive traffic and then display pages written in Hypertext Markup Language (HTML) or Extensible Markup Language (XML). Similarly, SCAP-compliant tools use the underlying specifications of SCAP to scan systems and report the results. These tools serve a wide variety of purposes. They can audit and assess systems for compliance with specific requirements. They can scan systems for vulnerabilities. They can detect systems that don’t have proper patches or are misconfigured.

Some of the tools currently available are:

• BigFix Security Configuration and Vulnerability Management Suite (SCVM) by BigFix

• Retina by eEye Digital Security

• HP SCAP Scanner by HP

• SAINT vulnerability scanner by SAINT

• Control Compliance Suite—Federal Toolkit by Symantec

• Tripwire Enterprise by Tripwire

If you want to read more about SCAP, read NIST SP 800-126. This is the technical specification for SCAP version 1.0. At this writing, NIST SP 800-126 rev 1 is in draft. It is the technical specification for SCAP version 1.1. You can access NIST SP 800-126 and other NIST 800-series special publications at http://csrc.nist.gov/publications/PubsSPs.html.

SNMP

The Simple Network Management Protocol (SNMP) is used to manage and query network devices. SNMP commonly manages routers, switches, and other intelligent devices on the network with IP addresses. SNMP is a part of the TCP/IP suite of protocols, so it’s a bit of a stretch to call it an emerging technology. However, SNMP has improved over the years. The first version of SNMP was SNMP v1. It had a significant vulnerability. Devices used community strings for authentication. The default community string was “public” and SNMP sent it over the network in clear text. Attackers using a sniffer such as Wireshark could capture the community string even if it was changed from the default. They could then use it to reconfigure devices.

SNMP was improved with versions 2 and 3. Version 3 provides three primary improvements:

• Confidentiality—Packets are encrypted. Attackers can still capture the packets with a sniffer. However, they are in a ciphered form, which prevents attackers from reading them.

• Integrity—A message authentication code (MAC) (not to be confused with Media Access Control) is used to ensure that data has not been modified. The MAC uses an abbreviated hash. The hash is calculated at the source and included in the packet. The hash is recalculated at the destination. As long as the data has not changed, the hash will always provide the same result. If the hash is the same, the message has not lost integrity.

• Authentication—This provides verification that the SNMP messages are from a known source. It prevents attackers from reconfiguring the devices without being able to prove who they are.
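The integrity check described above can be illustrated with Python’s standard hmac module. This is a sketch of the general technique, not of SNMP packet handling. It assumes a shared key and keeps only the first 12 bytes (96 bits) of an HMAC-SHA1 value as the abbreviated hash. The key and payload are invented for the example.

```python
import hashlib
import hmac

TRUNCATED_BYTES = 12  # keep 96 bits of the hash, an "abbreviated" value


def make_mac(key, message):
    """Compute a keyed hash of the message and keep only the first 12 bytes."""
    return hmac.new(key, message, hashlib.sha1).digest()[:TRUNCATED_BYTES]


def verify_mac(key, message, received_mac):
    """Recalculate the MAC at the destination and compare in constant time."""
    return hmac.compare_digest(make_mac(key, message), received_mac)


key = b"shared-secret"             # hypothetical key known to both ends
packet = b"set sysContact admin"   # hypothetical payload, not real SNMP encoding
mac = make_mac(key, packet)

print(verify_mac(key, packet, mac))
print(verify_mac(key, b"set sysContact attacker", mac))
```

Because the hash depends on a key, an attacker who alters the message cannot simply recompute a matching value.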

WBEM

Web-Based Enterprise Management (WBEM) is a set of management and Internet standard technologies. They standardize the language used to exchange data among different platforms for management of systems and applications. Just as SCAP provides the standards used to create tools, WBEM also provides standards used in different management tools. The tools can be graphical user interface (GUI)-based tools. Some tools are command line tools that don’t use a GUI.

WBEM is based on different standards from the Internet and from the Distributed Management Task Force (DMTF), Inc. DMTF is a not-for-profit association. Members promote enterprise and systems management and interoperability. These standards include:

• CIM-XML—The Common Information Model (CIM) over XML protocol. This protocol allows XML-formatted data to be transmitted over HTTP. CIM defines IT resources as related objects in a rich object-oriented model. Just about any hardware or software element can be referenced with the CIM. Applications use the CIM to query and configure systems.

• WS-Management—The Web Services for Management protocol. This protocol provides a common way for systems to exchange information. Web services are commonly used for a wide assortment of purposes on the Internet. For example, Web services are used to retrieve weather data or shipping data on the Internet. Clients send Web service queries and receive Web service responses. The WS-Management protocol specifies how these queries and responses retrieve data from devices. It can also be used to send commands to devices.

• CIM Query Language (CQL)—This language is based on the Structured Query Language (SQL) used for databases, and the W3C XML Query language. The CQL defines the specific syntax rules used to query systems with CIM-XML and WS-Management.

Digital Signing

A digital signature is simply a string of data that is associated with a file. Digital signing technologies provide added security for files. A file signed with a digital signature provides authentication and integrity assurances. It also provides nonrepudiation. In other words, it provides assurances that a specific sender sent the file. It also provides assurances that the file has not been modified.

A public key infrastructure (PKI) is needed to support digital signatures. A PKI includes certificate authorities (CAs) that issue certificates. The certificate includes a public key matched to a private key. Anything encrypted with the private key can be decrypted with the public key. Additionally, anything encrypted with the public key can be decrypted with the private key.

Digital signatures provide added security for many different types of policy compliance files. For example, consider patches and other update files. You would download these files and use them to patch vulnerabilities. If an attacker somehow modified the patch, instead of plugging a vulnerability, you would be installing malware. Similarly, many definition updates for security tools are digitally signed.

If a file is digitally signed, you know it has not been modified. The following steps show one way that a digital signature is used for a company named Acme Security. The company first obtains a certificate from a CA with the following steps:

1. Acme Security creates a public and private key pair.

2. Acme Security sends the public key from its key pair to the CA with the company’s certificate request. It keeps the private key private and protected.

3. The CA verifies Acme Security is a valid company and is who it says it is. The CA then creates a digital certificate for Acme Security. The certificate includes the public key provided by Acme Security.

At this point, the company is able to digitally sign files. Consider Figure 15-4 as you follow the steps for creating and using a digital signature.

4. Acme Security creates the file.

5. Acme Security hashes the file. The hash is a number normally expressed in hexadecimal. The hash in this example is 123456.

6. Acme Security encrypts the hash with its private key. The result is “MFoGCSsGAQ”. Remember, something encrypted with a private key can only be decrypted with the matching public key. Said another way, if you can decrypt data with a public key, you know it was encrypted with the matching private key.

7. Acme Security packages the file and the digital signature together. It sends them to the receiving client. The digital certificate could be sent at the same time or separately.

8. The receiving client uses the certificate to verify that Acme Security sent the file. Additionally, the client checks with the CA to verify the certificate is valid and hasn’t been revoked.

9. The client decrypts the encrypted hash (MFoGCSsGAQ) with the public key from the certificate. The decrypted hash is 123456.

10. The received file is hashed giving a hash of 123456. This hash is compared to the decrypted hash. If they are both the same, the file is the same. In other words, it has not been modified. Additionally, it provides verification that the digital signature was provided by Acme Security because only the matching public key can decrypt data encrypted with the private key.
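Steps 5 through 10 can be traced with a toy example. The sketch below uses textbook RSA arithmetic with a deliberately small key built from two known Mersenne primes. It omits padding, certificates, and revocation checks, so it is an assumption-laden illustration and is not safe for real use. It requires Python 3.8 or later for the modular inverse.

```python
import hashlib

# Toy RSA key built from two known Mersenne primes. Far too small for real use.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q
E = 65537                              # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))      # private exponent (Python 3.8+)


def file_hash(data):
    """Step 5: hash the file and express the hash as a number below N."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % N


def sign(data):
    """Step 6: transform the hash with the private key."""
    return pow(file_hash(data), D, N)


def verify(data, signature):
    """Steps 9 and 10: recover the hash with the public key and compare."""
    return pow(signature, E, N) == file_hash(data)


patch = b"contents of the update file"
signature = sign(patch)
print(verify(patch, signature))
print(verify(patch + b" plus malware", signature))
```

Only the holder of the private exponent can produce a signature that the public exponent turns back into the file’s hash, which is what ties the file to its signer.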

Digital signatures aren’t a new technology. However, their use with security tools and downloads has significantly increased over the years. A digital signature provides you with an additional tool to verify authentication and integrity for downloaded files.

Best Practices for IT Security Policy Compliance Monitoring

When implementing a plan for IT security policy compliance monitoring, you can use several different best practices. The following list shows some of these:

• Start with a security policy—The security policy acts as the road map. This is a written document, not just some ideas in someone’s head. Without the written document, the actual security policy becomes a moving target and monitoring for compliance is challenging if not impossible.

• Create a baseline based on the security policy—Use images whenever possible to deploy new operating systems. These images will ensure that systems start in a secure state. They also accelerate deployments, save money, and increase availability.

• Track and update regulatory and compliance rule changes—Formally track regulatory and rule changes as a routine. Map changes to these rules to policies and configuration controls. Then update security policies and technology as needed.

• Audit systems regularly—After the baseline is deployed, regularly check the systems. Ensure that the security settings that have been configured stay configured. Well-meaning administrators can change settings. Malware can also modify settings. However, auditing will catch the modifications.

• Automate checks as much as possible—Use tools and scripts to check systems. Some tools are free. Other tools cost some money but all are better than doing everything manually. They reduce the amount of time necessary and increase the accuracy.

• Manage changes—Ensure that a change management process is used. This allows experts to review the changes before they are implemented and reduces the possibility that the change will cause problems. It also provides built-in documentation for changes.
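The audit and automation practices in this list often come down to comparing a system’s current settings with the baseline. The following sketch assumes both are available as simple dictionaries. The setting names and values are hypothetical.

```python
# Hypothetical baseline derived from the written security policy.
BASELINE = {
    "min_password_length": 8,
    "firewall_enabled": True,
    "guest_account_enabled": False,
}


def find_drift(baseline, current):
    """Return settings whose current value differs from the baseline.

    Each result maps a setting name to (expected, actual). A setting missing
    from the current configuration is reported with an actual value of None.
    """
    return {
        name: (expected, current.get(name))
        for name, expected in baseline.items()
        if current.get(name) != expected
    }


# Hypothetical settings collected from one system during an audit.
system_settings = {
    "min_password_length": 8,
    "firewall_enabled": False,
    "guest_account_enabled": False,
}

print(find_drift(BASELINE, system_settings))
```

Running a check like this on a schedule catches changes made by well-meaning administrators and by malware alike.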

Case Studies and Examples of Successful IT Security Policy Compliance Monitoring

The following sections show different case studies and examples related to IT security policies and compliance monitoring. Private sector, public sector, and critical infrastructure case studies and examples are included.

Private Sector Case Study

In this example a large sales organization with a dedicated IT staff suffered a major outage due to a minor change to a printer. The lack of compliance with change management policies was a contributing cause for the outage.

The organization has a subnet hosting multiple servers and a printer. Routers connect this subnet to other subnets on the network. All systems were working until a new server was added to the network with the same IP address as the printer. This IP address conflict prevented the printer from printing, and prevented the new server from communicating on the network. This problem is like having two identical street addresses in the same ZIP code. At best, each address will get some mail, but not all of it. Similarly, on a network, each IP address will receive some traffic, but not all traffic that is expected. At best, you will see inconsistent performance.

The problem with the new server wasn’t discovered right away. However, a technician began troubleshooting a problem with the printer on the same subnet. While troubleshooting, the technician suspected a problem with the IP address. The technician changed the IP address for the printer to 10.1.1.1, as shown in Figure 15-5. This change didn’t solve the problem, but the technician forgot to change the IP address back to normal. As you can see in the figure, the change caused a conflict between the printer and the default gateway. The printer and the near side of the router both had the same IP address and neither was working.

The default gateway is the path for all server communications out of the subnet. Because there was a conflict with the default gateway, none of the servers on the subnet were able to get traffic out of the subnet. They were all operating, but clients outside of the subnet couldn’t use them. With several servers no longer working, the problem was quickly escalated. Senior administrators were called in and discovered the problems. They corrected the IP address on the printer and provided some on-the-job training to the original technician.

Notice how this problem started with a small error. The new server was added using an IP address that was already used by the printer. After the printer IP address change, all the servers on the subnet lost network access. Change management and configuration management processes would have ensured this new server was added using a configuration that didn’t interfere with other systems. Additionally, change management would have prevented the change to the printer’s IP address.
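A configuration record of assigned addresses would have exposed both conflicts in this case. The sketch below checks a hypothetical inventory for duplicate IP addresses. Only the address 10.1.1.1 comes from the case study, and the device names and other addresses are invented.

```python
import ipaddress
from collections import defaultdict


def find_ip_conflicts(inventory):
    """Return addresses assigned to more than one device.

    inventory is a list of (device name, IP address string) pairs.
    """
    owners = defaultdict(list)
    for device, address in inventory:
        owners[ipaddress.ip_address(address)].append(device)
    return {str(ip): names for ip, names in owners.items() if len(names) > 1}


# Hypothetical inventory; only 10.1.1.1 comes from the case study.
inventory = [
    ("default-gateway", "10.1.1.1"),
    ("server-a", "10.1.1.10"),
    ("printer", "10.1.1.1"),  # the technician's unrecorded change
]

print(find_ip_conflicts(inventory))
```

Running a check like this as part of a change request would flag the conflict before the change reaches the network.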

As it turns out, this was the turning point for this company. It was one of a long string of problems caused by unauthorized changes. Management finally decided to implement a formal change management process and hired an outside consultant to help them.

Public Sector Case Study

One of the problems the U.S. government has is ensuring that basic errors are not repeated by different agencies. Security experts know that certain settings should be required. They know how systems are hacked. They know how to protect the systems. However, communicating this information so that every system in the government is protected isn’t so easy.

Indeed, many attacks on government systems have been the result of common errors. If these systems had been configured correctly, many attacks wouldn’t have succeeded. Security experts knew they needed a solution. The Federal Desktop Core Configuration (FDCC) was the solution.

Experts from the Defense Information Systems Agency (DISA), the National Security Agency (NSA), and NIST contributed to the FDCC. It includes a wide assortment of security settings. These settings reduce the systems’ vulnerabilities to attack. The FDCC is not perfect. However, it is a solid baseline. The baseline was loaded onto target computers and images were captured from these systems. For agencies that didn’t want to redo their machines by casting the image onto their systems, extensive checklists were available. The agency could manually follow the checklist to apply the changes to the systems.

In 2007, the U.S. Office of Management and Budget (OMB) mandated the use of the FDCC for all systems used within all federal agencies. The compliance deadline was February 2008.

There was some pushback against the mandate. However, the OMB stressed that compliance was required. Organizations were mandated to report their compliance. If they didn’t reach full compliance, they were required to report their progress. NIST and the OMB work with individual agencies to identify solutions for applications or systems that don’t work with the FDCC. For example, if an agency can’t comply, the security experts at NIST may be able to identify what needs to be done. This is a much better solution than an agency simply accepting that security needs to be weakened. Even worse, before the mandate many agencies weren’t aware that their security was weakened.

Several SCAP tools include an FDCC scanner. These scanners audit systems in the agency’s network and report compliance. These reports can be used to verify to the OMB that the agency is in compliance. You can view a list of SCAP tools that include an FDCC scanner here: http://nvd.nist.gov/scapproducts.cfm.
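The core idea behind such a scanner, comparing each system's actual settings against the required baseline and reporting the deviations, can be sketched as follows. This is a simplified illustration only: it does not use SCAP content or any real scanner's API, and the setting names and values are hypothetical stand-ins rather than actual FDCC settings.

```python
def audit_against_baseline(baseline, actual):
    """Return a list of (setting, expected, found) tuples for every deviation.

    A setting that is missing from the system is reported with found=None.
    """
    findings = []
    for setting, expected in sorted(baseline.items()):
        found = actual.get(setting)
        if found != expected:
            findings.append((setting, expected, found))
    return findings


def compliance_percent(baseline, actual):
    """Return the percentage of baseline settings the system satisfies."""
    if not baseline:
        return 100.0
    failed = len(audit_against_baseline(baseline, actual))
    return 100.0 * (len(baseline) - failed) / len(baseline)


# Hypothetical baseline and scan result; not actual FDCC values.
baseline = {
    "min_password_length": 12,
    "firewall_enabled": True,
    "guest_account_enabled": False,
    "screen_lock_minutes": 15,
}
scanned_system = {
    "min_password_length": 8,
    "firewall_enabled": True,
    "guest_account_enabled": False,
}

if __name__ == "__main__":
    for setting, expected, found in audit_against_baseline(baseline, scanned_system):
        print(f"{setting}: expected {expected!r}, found {found!r}")
    print(f"compliance: {compliance_percent(baseline, scanned_system):.1f}%")
```

A report in this shape supports both uses described above: the percentage shows progress toward full compliance, and the individual findings identify what still needs to be corrected.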

At this stage, the FDCC is deployed in all federal agencies. Even though the FDCC presented some technical challenges for some organizations, it significantly raised the security posture of all systems in the U.S. government. No one will say that it has eliminated all the security issues. Far from it. However, it has helped computers throughout the entire U.S. government raise the security baseline.

Nonprofit Sector Case Study

In June 2013 it was reported that Stanford University’s Lucile Packard Children’s Hospital had had a data breach. An employee reported on May 8, 2013, that an unencrypted laptop containing medical information on pediatric patients had been stolen. The laptop contained personal information on 13,000 patients, including their names, ages, medical record numbers, and surgical procedures, as well as the names of physicians involved in the procedures, and telephone numbers. The computer involved was no longer in use; it was, in fact, nonfunctioning. But it had not been properly disposed of when it was taken out of service. This was the fifth major data breach for the hospital within a space of four years. In January 2013, to give another example, the hospital reported that a laptop containing medical information of 57,000 patients had been stolen from a physician’s car.

These events show a lack of compliance with HIPAA regulations. Encrypting laptops with sensitive information is a de facto standard in the healthcare industry. It’s also a requirement for many regulations, including those of HIPAA.

So how could this happen when the regulations and industry best practices are clear? That there were five major breaches within such a brief period suggests a systemic failure of the university to define its security policies, train its staff, and then hold them accountable with strict enforcement.

The Stanford episodes highlight three areas worth thinking about:

• Policy enforcement

• Awareness training

• Equipment disposal

With HIPAA standards and industry best practices so clear, you might assume that policy enforcement was lacking. There should have been a complete inventory of laptops and a crosscheck to ensure they were all encrypted.

Enforcement needs to be aggressive. Some organizations are very strict about enforcing these policies. For example, all laptops are required to be encrypted before they are issued. Scans are performed regularly to ensure laptops remain encrypted. When a device is found to be unencrypted (as in the case of an older or “legacy” device) the user is given a short window—a matter of days—to have it encrypted. A user who fails to comply loses access, and leadership is notified to take management action. Having a consequence for failing to follow security policy is an important enforcement tool.
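The enforcement rule described here (scan, allow a short grace window, then revoke access and escalate) can be expressed as a small decision function. The sketch below is illustrative only: the five-day grace period, the asset tags, the record layout, and the action names are all assumptions, and a real scan would pull encryption status from an endpoint management tool.

```python
from datetime import date, timedelta

# Hypothetical grace window; the text says only "a matter of days".
GRACE_PERIOD = timedelta(days=5)


def enforcement_action(encrypted, first_flagged, today):
    """Decide what to do with one laptop based on its encryption status."""
    if encrypted:
        return "compliant"
    if today - first_flagged <= GRACE_PERIOD:
        # Still inside the window: remind the user to encrypt the device.
        return "notify_user"
    # Window expired: the user loses access and leadership is informed.
    return "revoke_access_and_notify_leadership"


def scan_inventory(laptops, today):
    """Return {asset_tag: action} for every laptop in the inventory."""
    return {
        laptop["asset_tag"]: enforcement_action(
            laptop["encrypted"], laptop["first_flagged"], today
        )
        for laptop in laptops
    }


# Hypothetical inventory records.
laptops = [
    {"asset_tag": "LT-001", "encrypted": True, "first_flagged": None},
    {"asset_tag": "LT-002", "encrypted": False, "first_flagged": date(2013, 5, 1)},
    {"asset_tag": "LT-003", "encrypted": False, "first_flagged": date(2013, 4, 1)},
]

if __name__ == "__main__":
    for tag, action in scan_inventory(laptops, today=date(2013, 5, 4)).items():
        print(tag, action)
```

The check only works if the inventory is complete. A laptop that never appears in the inventory, such as a retired device that was never returned, is never scanned.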

University officials cite new and improved “HIPAA training” being put in place. This again might suggest that policies were in place but were not followed. Congress enacted HIPAA in 1996. The law’s requirements are well defined and known. The need to put new HIPAA training in place suggests a lack of employee awareness. If this is one of the causes, it illustrates that having a good policy is not enough. Compliance with the policy depends on a skilled workforce that embraces its implementation as part of day-to-day duties.

Equipment disposal seems the most puzzling part of the story. Why a broken device was still in the possession of the end user is confusing. As soon as a device is disabled, it should be immediately turned over to IT personnel and the information contained within properly disposed of. In this case, the delay in turning over the old device seems inappropriate. The employee had been issued a new device and the old laptop was considered “nonfunctional.” Many organizations in such a case would insist on receiving the damaged device before issuing a new one. This is a good policy.

It is also interesting that university officials emphasized that the “nonfunctioning” laptop had “a seriously damaged screen.” The screen has nothing to do with the data contained within the laptop. Offering screen damage as a reason to be less concerned about the theft of the device raises questions about officials’ understanding of the threat. The hard drive is what matters. A laptop screen can be replaced or, even more easily, bypassed with an external monitor. Or the hard drive can be quickly moved to another machine.

This chapter covered some of the technologies used to ensure IT policy compliance. Imaging technologies can deploy identical baseline images for new systems. The chapter discussed the importance of a gold master image. However, the baseline is up to date only for a short period of time. As patches are released or other changes are approved, the baseline becomes out of date. The difference between the baseline and the required changes represents a vulnerability or a security gap. This gap must be closed to ensure systems stay secure.
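One simple way to picture this gap is as a set difference: the patches currently required, minus the patches already built into the gold master image. The sketch below assumes patches can be tracked by identifier; the identifiers shown are made up for illustration.

```python
def security_gap(required_patches, image_patches):
    """Return the required patches that the baseline image does not contain."""
    return sorted(set(required_patches) - set(image_patches))


# Hypothetical patch identifiers.
gold_master_patches = ["PATCH-101", "PATCH-102", "PATCH-103"]
currently_required = ["PATCH-101", "PATCH-102", "PATCH-103", "PATCH-104", "PATCH-105"]

if __name__ == "__main__":
    # Anything listed here must be applied after imaging, or the image rebuilt.
    print(security_gap(currently_required, gold_master_patches))
```

An empty result means the image is current. The list grows each time a new patch is approved, until the image is rebuilt or the patches are deployed separately.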

Many automated tools are available to IT administrators today. These tools can examine systems to ensure the baseline security settings have not changed. They can also scan systems for vulnerabilities, such as missing patches. Many tools can both scan for issues and deploy changes to correct them. NIST published standards for SCAP in SP 800-126. These standards have led to a wealth of tools that increase network security. You read how penetration testing is an important test of the effectiveness of controls. You also read case studies illustrating how a lack of compliance can have a significant impact on an organization.

KEY CONCEPTS AND TERMS

Configuration management (CM)

Federal Desktop Core Configuration (FDCC)

Gold master

Group Policy

Imaging

Penetration test

Security baseline

Security Content Automation Protocol (SCAP)

Simple Network Management Protocol (SNMP)

Web-Based Enterprise Management (WBEM)

1. A ________ is a starting point or standard. Within IT, it provides a standard focused on a specific technology used within an organization.

2. An operating system and different applications are installed on a system. The system is then locked down with various settings. You want the same operating system, applications, and settings deployed to 50 other computers. What’s the easiest way?

A. Scripting

B. Imaging

C. Manually

D. Spread the work among different departments

3. After a set of security settings has been applied to a system, there is no need to recheck these settings on the system.

A. True

B. False

4. The time between when a new vulnerability is discovered and when software developers start writing a patch is known as a ________.

5. Your organization wants to automate the distribution of security policy settings. What should be considered?

A. Training of administrators

B. Organizational acceptance

C. Testing for effectiveness

D. All of the above

6. Several tools are available to automate the deployment of security policy settings. Some tools can deploy baseline settings. Other tools can deploy changes in security policy settings.

A. True

B. False

7. An organization uses a decentralized IT model with a central IT department for core services and security. The organization wants to ensure that each department is complying with primary security requirements. What can be used to verify compliance?

A. Group Policy

B. Centralized change management policies

C. Centralized configuration management policies

D. Random audits

8. Change requests are tracked in a control work order database. Approved changes are also recorded in a CMDB.

A. True

B. False

9. An organization wants to maintain a database of system settings. The database should include the original system settings and any changes. What should be implemented within the organization?

A. Change management

B. Configuration management

C. Full ITIL life cycle support

D. Security Content Automation Protocol

10. An organization wants to reduce the possibility of outages when changes are implemented on the network. What should the organization use?

A. Change management

B. Configuration management

C. Configuration management database

D. Simple Network Management Protocol

11. A security baseline image of a secure configuration that is then replicated during the deployment process is sometimes called a ________.

A. Master copy

B. Zero-day image

C. Gold master

D. Platinum image

12. Microsoft created the Web-Based Enterprise Management (WBEM) technologies for Microsoft products.

A. True

B. False

13. A common method of scoring risk is reflected in the following formula: Risk = ________ × ________.

14. What is a valid approach for validating compliance with a security baseline?

A. Vulnerability scanner

B. Penetration test

C. A and B

15. It is important to protect your gold master because an infected copy could quickly result in widespread infection with malware.

A. True

B. False

16. A ________ can be used with a downloaded file. It offers verification that the file was provided by a specific entity. It also verifies the file has not been modified.

17. If an organization implements the COSO internal control framework, then it cannot implement another controls framework like COBIT.

A. True

B. False