2.1

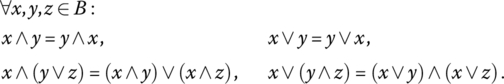

Graded Logic as a Generalization of Classical Boolean Logic

This chapter introduces graded logic as a system of realistic models of observable properties of human aggregation reasoning. After an introduction to aggregation as the fundamental activity in evaluation logic, we discuss the relationships between graded evaluation logic and fuzzy logic. Then, we present a survey of classical bivalent Boolean logic and introduce evaluation logic as a weighted compensative generalization of the classical Boolean logic. At the end of this chapter, we present a brief history of graded logic.

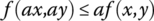

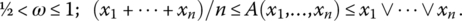

Three of the most frequent words in this book are means, logic, and aggregators. If we have n real numbers $x_1, \dots, x_n$, then according to common sense the mean value of these numbers is a value $M(x_1, \dots, x_n)$ that is located somewhere between the smallest and the largest of the numbers:

$$\min(x_1, \dots, x_n) \le M(x_1, \dots, x_n) \le \max(x_1, \dots, x_n). \tag{2.1.1}$$

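This property of function M is called internality. In our case, x1, …, xn are degrees of truth, and they belong to the unit interval $I = [0, 1]$. For degrees of truth, the minimum and the maximum are the conjunction and the disjunction: $\min(x_1, \dots, x_n) = x_1 \wedge \dots \wedge x_n$ and $\max(x_1, \dots, x_n) = x_1 \vee \dots \vee x_n$. We can therefore rewrite relation (2.1.1) as follows:

$$x_1 \wedge \dots \wedge x_n \le M(x_1, \dots, x_n) \le x_1 \vee \dots \vee x_n. \tag{2.1.2}$$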

Therefore, means are functions between conjunction and disjunction, and relation (2.1.2) obviously suggests that means can be interpreted as logic functions (and indeed, they are logic functions, assuming that logic means modeling observable properties of human reasoning). In particular, relation (2.1.2) indicates that the mean M (as a logic function) can be linearly interpolated between AND and OR as follows:

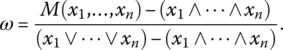

The parameter $\omega \in [0, 1]$ defines the location of M in the space between conjunction and disjunction. More precisely, ω denotes the proximity of M to disjunction, or the similarity between M and disjunction, and it can be called the disjunction degree, similarity to OR, or orness. According to (2.1.3), the disjunction degree of mean M is

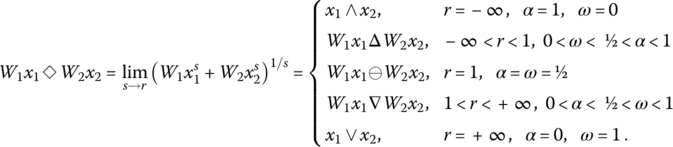
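Relation (2.1.4) can be evaluated directly at a given argument vector. The Python sketch below (function names are illustrative) computes this pointwise ω for a few means; for most means the value depends on the chosen point, so it should be read as a local indicator rather than a single global orness value.

```python
import math

def orness_at(mean, xs):
    """Pointwise disjunction degree from (2.1.3)-(2.1.4):
    omega = (M - min) / (max - min); undefined when all inputs are equal."""
    lo, hi = min(xs), max(xs)
    if hi == lo:
        raise ValueError("omega is undefined when all inputs are equal")
    return (mean(xs) - lo) / (hi - lo)

def arithmetic_mean(xs):
    return sum(xs) / len(xs)

def geometric_mean(xs):
    return math.prod(xs) ** (1.0 / len(xs))

xs = [0.25, 0.75]
print(orness_at(min, xs))              # 0.0 (pure AND)
print(orness_at(max, xs))              # 1.0 (pure OR)
print(orness_at(arithmetic_mean, xs))  # 0.5
print(orness_at(geometric_mean, xs))   # about 0.366, closer to AND
```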

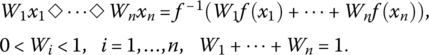

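Relations (2.1.3) and (2.1.4) indicate that each mean can be interpreted as a mix of disjunctive and conjunctive properties. From that standpoint, we are particularly interested in parameterized means. Such means have an adjustable parameter r(ω) that can be used to adjust the logic properties of the mean and provide a continuous transition from AND to OR:

$$x_1 \wedge \dots \wedge x_n = M(x_1, \dots, x_n; r(0)) \le M(x_1, \dots, x_n; r(\omega)) \le M(x_1, \dots, x_n; r(1)) = x_1 \vee \dots \vee x_n, \quad 0 \le \omega \le 1. \tag{2.1.5}$$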

The function M(x1, …, xn; r(ω)) can be interpreted as a logic function: it has an adjustable degree of similarity to disjunction (or to conjunction) and represents a fundamental component for building a graded logic. We call this function the graded (or generalized [DUJ07a]) conjunction/disjunction (in both cases, the abbreviation is GCD).
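One familiar family that provides such a continuous transition is the weighted power mean, $M(x; r) = (\sum_i w_i x_i^r)^{1/r}$, with the geometric mean as the $r \to 0$ limit. The sketch below assumes this family purely as an illustration; it does not show the mapping $r(\omega)$, and it is not necessarily the exact GCD form developed later in the book.

```python
import math

def power_mean(xs, r, weights=None):
    """Weighted power mean M(x1, ..., xn; r) on [0, 1], assuming positive weights.

    r = -inf gives min (AND), r = 0 the geometric mean, r = 1 the arithmetic
    mean, and r = +inf gives max (OR). Weights default to 1/n each.
    """
    n = len(xs)
    ws = weights if weights is not None else [1.0 / n] * n
    if r == math.inf:
        return max(xs)
    if r == -math.inf:
        return min(xs)
    if r <= 0 and any(x == 0.0 for x in xs):
        return 0.0  # limit value: a zero input forces the result to 0 when r <= 0
    if r == 0:
        return math.exp(sum(w * math.log(x) for x, w in zip(xs, ws)))
    return sum(w * x ** r for x, w in zip(xs, ws)) ** (1.0 / r)

xs = [0.2, 0.8]
for r in (-math.inf, -1, 0, 1, 2, math.inf):
    # The value moves monotonically from min(xs) to max(xs) as r increases.
    print(r, round(power_mean(xs, r), 4))
```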

The above short story and relations (2.1.1) to (2.1.5) describe exactly my initial reasoning in 1972–1973, when I started developing a logic based on functions that provide a continuous transition from AND to OR. My goals were to generalize the classical Boolean logic, to model observable properties of human reasoning, and to apply that methodology in the area of evaluation. These are also the main goals of this book.

2.1.1 Aggregators and Their Classification

Our first step is to introduce logic aggregators, i.e., functions that aggregate two or more degrees of truth and return a degree of truth in a way similar to observable patterns of human reasoning. The meaning and role of inputs and outputs of logic aggregators can be used as the necessary restrictive conditions that filter those functions and properties that have potential to serve in mathematical models of human evaluation reasoning. Not surprisingly, a general goal of mathematical definitions is the ultimate generality. In the area of aggregators, the mathematical generality means that highly applicable logic aggregators are mixed with lots of unnecessary mathematical ballast. Consequently, it is useful to first briefly investigate the families of functions that are closely related to logic aggregators. Such families are means, general aggregation functions, and triangular norms.

2.1.1.1 Means

Means are fundamental logic functions that we use in graded logic. Let us again note that our logic is continuous: all variables belong to the unit interval $I = [0, 1]$, and all logic phenomena and their models occur inside the unit hypercube $I^n = [0, 1]^n$. So, before we start using means as graded logic functions, we need a definition of mean.

Mathematics provides various definitions of means. According to Oscar Chisini [CHI29], the mean M(x1, …, xn) (known as the Chisini mean) generates a mean value x of the n components of the vector (x1, …, xn) if the following holds:

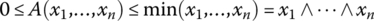

Both Chisini and Gini [GIN58] considered this definition too general because it does not imply internality (2.1.1). For purposes of applicability in logic, internality (2.1.1) is the fundamental assumption; consequently, we consider (2.1.1) the most important component of any definition of means, and such means satisfy (2.1.6). By inserting $x_1 = \dots = x_n = x$ in (2.1.1) we have $x \le M(x, \dots, x) \le x$, and we directly get idempotency:

$$M(x, \dots, x) = x, \quad x \in I.$$

All means are internal and idempotent. Similarly, all nondecreasing idempotent aggregation functions $A: I^n \to I$ are internal, i.e., they are means [GRA09].

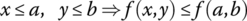

Another fundamental property of means is monotonicity: Means should be nondecreasing in each variable. More precisely, Mitrinović, Vasić, and Bullen [MIT77, BUL03] specify that a function f(x, y), in order to be considered a mean, should have the following fundamental properties:

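- Continuity: $f(x, y)$ is a continuous function.
- Internality: $\min(x, y) \le f(x, y) \le \max(x, y)$.
- Monotonicity: $x_1 \le x_2 \Rightarrow f(x_1, y) \le f(x_2, y)$ and $y_1 \le y_2 \Rightarrow f(x, y_1) \le f(x, y_2)$.
- Idempotency (reflexivity): $f(x, x) = x$.
- Symmetry: $f(x, y) = f(y, x)$.
- Homogeneity: $f(\lambda x, \lambda y) = \lambda f(x, y)$, $\lambda > 0$.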
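The grid-based sketch below (a hypothetical helper, not taken from the cited sources) checks the listed properties numerically for a candidate two-variable function. A finite grid can refute a property but cannot prove it, and continuity is not checked at all.

```python
import itertools

def check_mean_properties(f, steps=10, tol=1e-12):
    """Test f(x, y) on a uniform grid over [0, 1]^2 for internality,
    monotonicity, idempotency, symmetry, and homogeneity."""
    grid = [i / steps for i in range(steps + 1)]
    pairs = list(itertools.product(grid, grid))
    return {
        "internality": all(min(x, y) - tol <= f(x, y) <= max(x, y) + tol for x, y in pairs),
        "monotonicity": all(
            f(grid[i], y) <= f(grid[i + 1], y) + tol and f(y, grid[i]) <= f(y, grid[i + 1]) + tol
            for i in range(steps) for y in grid
        ),
        "idempotency": all(abs(f(x, x) - x) <= tol for x in grid),
        "symmetry": all(abs(f(x, y) - f(y, x)) <= tol for x, y in pairs),
        "homogeneity": all(
            abs(f(lam * x, lam * y) - lam * f(x, y)) <= tol for x, y in pairs for lam in (0.25, 0.5)
        ),
    }

print(check_mean_properties(lambda x, y: (x + y) / 2))        # all True
print(check_mean_properties(lambda x, y: 0.7 * x + 0.3 * y))  # symmetry False (weighted mean)
print(check_mean_properties(lambda x, y: x * y))              # not internal, not idempotent
```

The weighted example illustrates why symmetry is dropped in the general case: the function remains a mean even though its arguments are not interchangeable.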

The above properties reflect the special case where all variables have the same weight. In the general case, symmetry is excluded: each argument may have a different degree of importance, so commutativity is not desirable. More mathematical details about definitions and properties of means can be found in [CHI29, DEF31, GIN58, KOL30, NAG30, ACZ48, MIT77, MIT89, BUL88, BUL03, BEL16].

2.1.1.2 General Aggregation Functions

In addition to means, decision models also use the concept of aggregation functions on [0, 1] or general aggregators [FOD94, CAL02, GRA09, BEL07, MES15, BEL16]. We call these aggregators “general” to differentiate them from the subclass of logic aggregators that will be defined and used later.

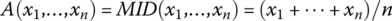

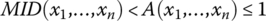

According to (2.1.7), a general aggregator is defined less restrictively than a mean. Primarily, such aggregators need not satisfy internality and idempotency. For example, $A(x_1, x_2) = x_1 x_2$ is an aggregator, but it is not idempotent and not a mean, while $A(x_1, x_2) = (x_1 + x_2)/2$ is both an aggregator and a mean. So, all means that we use are aggregators, but not all aggregators are means.

Graded logic decision models predominantly use idempotent aggregators (means) but sometimes may also use nonidempotent aggregators. As we already mentioned, all internal functions are idempotent, and all idempotent nondecreasing functions are internal. All means that we use in GL support internality and idempotency.

Aggregators have a diagonal section function $\delta_A(x) = A(x, \dots, x)$, $x \in I$, defined as the value that an aggregator generates along the main diagonal of the unit hypercube. Obviously, all idempotent aggregators have the diagonal section function $\delta_A(x) = x$. For example, the idempotent aggregator $A(x_1, x_2) = (x_1 + x_2)/2$ has the diagonal section function $\delta_A(x) = x$. The nonidempotent t‐norm aggregator $T_P(x_1, x_2) = x_1 x_2$ has the diagonal section function $\delta_T(x) = x^2$, and its De Morgan dual, the t‐conorm $S_P(x_1, x_2) = 1 - (1 - x_1)(1 - x_2) = x_1 + x_2 - x_1 x_2$, has the diagonal section function $\delta_S(x) = 1 - (1 - x)^2$. Since $\delta_S(x) = 1 - \delta_T(1 - x)$ and $\delta_T(x) \le x$, in this particular case we also have $\delta_S(x) \ge x$.
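A small sketch of the diagonal section function, assuming the arithmetic mean, the product t‐norm $x_1 x_2$, and its De Morgan dual $x_1 + x_2 - x_1 x_2$ as example aggregators (the helper name is illustrative):

```python
def diagonal_section(agg, n=2):
    """Return delta(x) = agg(x, ..., x), the values of agg along the main diagonal."""
    return lambda x: agg(*([x] * n))

arithmetic = lambda x, y: (x + y) / 2    # idempotent
t_product = lambda x, y: x * y           # t-norm, nonidempotent
s_product = lambda x, y: x + y - x * y   # its De Morgan dual t-conorm

d_am, d_t, d_s = (diagonal_section(a) for a in (arithmetic, t_product, s_product))
for x in (0.0, 0.3, 0.5, 1.0):
    # delta_T(x) = x^2 <= x <= delta_S(x) = 1 - delta_T(1 - x) = 2x - x^2
    print(x, d_am(x), d_t(x), d_s(x), 1 - d_t(1 - x))
```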

Mathematical literature [FOD94, BEL07, GRA09, MES15, BEL16] uses the following classification of aggregators:

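- Conjunctive aggregators: $A(x_1, \dots, x_n) \le \min(x_1, \dots, x_n)$.
- Disjunctive aggregators: $A(x_1, \dots, x_n) \ge \max(x_1, \dots, x_n)$.
- Averaging aggregators: $\min(x_1, \dots, x_n) \le A(x_1, \dots, x_n) \le \max(x_1, \dots, x_n)$.
- Mixed aggregators: aggregators that do not belong to any of the three groups above (conjunctive, disjunctive, averaging).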
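This classification can be tested numerically. The following sketch (a hypothetical helper) samples a grid of $[0, 1]^n$; `min(1, 2xy)` is an illustrative mixed aggregator chosen only for this example.

```python
import itertools

def classify_general(agg, n=2, steps=10, tol=1e-12):
    """Classify agg on a grid of [0, 1]^n as conjunctive, disjunctive,
    averaging, or mixed. Groups are tested in this order, so min and max,
    which lie on group boundaries, are reported as conjunctive and disjunctive."""
    grid = [i / steps for i in range(steps + 1)]
    rows = [(agg(*p), min(p), max(p)) for p in itertools.product(grid, repeat=n)]
    if all(v <= lo + tol for v, lo, _ in rows):
        return "conjunctive"
    if all(v >= hi - tol for v, _, hi in rows):
        return "disjunctive"
    if all(lo - tol <= v <= hi + tol for v, lo, hi in rows):
        return "averaging"
    return "mixed"

print(classify_general(lambda x, y: x * y))                # conjunctive
print(classify_general(lambda x, y: x + y - x * y))        # disjunctive
print(classify_general(lambda x, y: (x + y) / 2))          # averaging
print(classify_general(lambda x, y: min(1.0, 2 * x * y)))  # mixed
```

Note that the arithmetic mean, the centroid of logic aggregation, lands in a single undifferentiated "averaging" bin here, which is the limitation discussed next.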

Unfortunately, this classification is not oriented toward the logic interpretation of aggregators. Primarily, the variables x1, …, xn are not assumed to be degrees of truth of corresponding statements, and aggregators are not assumed to be functions of propositional calculus. In addition, the averaging aggregation is not interpreted as a continuum of conjunctive or disjunctive logic operations, but mostly as a statistical computation of mean values. Simultaneity (conjunctive aggregation) is recognized only in the lowest region of the unit hypercube, and substitutability (disjunctive aggregation) is recognized only in the highest region of the unit hypercube. This is not consistent with the propositional logic interpretation of aggregation functions $A: I^n \to I$. Therefore, we will not use the above definition and classification of general aggregators. We need a more restrictive definition of logic aggregators, as well as a different classification, outlined below and discussed in detail in subsequent sections.

2.1.1.3 Logic Aggregators

Our classification of logic aggregators will be based on the fact that basic logic aggregators are models of simultaneity or models of substitutability. In addition, the centroid of all logic aggregators is the logic neutrality, modeled as the arithmetic mean. Various degrees of predominant simultaneity can be modeled using aggregation functions that are located below neutrality. Various degrees of predominant substitutability can be modeled using aggregation functions that are located above neutrality. Therefore, assuming nonidentical arguments, we will use the following basic classification of logic aggregators:

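- Neutral logic aggregator: $A(x_1, \dots, x_n) = (x_1 + \dots + x_n)/n$.
- Conjunctive aggregators: $A(x_1, \dots, x_n) < (x_1 + \dots + x_n)/n$.
- Disjunctive aggregators: $A(x_1, \dots, x_n) > (x_1 + \dots + x_n)/n$.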

More specifically, conjunctive and disjunctive aggregators are regular if they are means. On the other hand, all nonidempotent conjunctive logic aggregators that satisfy $A(x_1, \dots, x_n) \le \min(x_1, \dots, x_n)$ will be denoted as hyperconjunctive. Similarly, all nonidempotent disjunctive aggregators that satisfy $A(x_1, \dots, x_n) \ge \max(x_1, \dots, x_n)$ will be denoted as hyperdisjunctive. A full justification of this classification, the definition of logic aggregators, and more details can be found in Sections 2.1.2 and 2.1.3.
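A pointwise sketch of this logic classification, assuming the unweighted arithmetic mean as the neutral reference and a grid test of idempotency to separate regular from hyper aggregators. Helper names are hypothetical, and a complete classification would have to hold over the whole domain, not at a single argument vector.

```python
def is_idempotent(agg, n, steps=20, tol=1e-12):
    """Grid check of agg(x, ..., x) == x."""
    return all(abs(agg(*([i / steps] * n)) - i / steps) <= tol for i in range(steps + 1))

def classify_logic(agg, xs, tol=1e-12):
    """Classify agg at the nonidentical argument vector xs relative to the arithmetic mean."""
    if max(xs) == min(xs):
        raise ValueError("the classification assumes nonidentical arguments")
    value = agg(*xs)
    neutral = sum(xs) / len(xs)
    if abs(value - neutral) <= tol:
        return "neutral"
    idempotent = is_idempotent(agg, len(xs))
    if value < neutral:
        if not idempotent and value <= min(xs) + tol:
            return "hyperconjunctive"
        return "conjunctive"
    if not idempotent and value >= max(xs) - tol:
        return "hyperdisjunctive"
    return "disjunctive"

xs = (0.25, 0.75)
examples = {
    "arithmetic mean": lambda x, y: (x + y) / 2,
    "geometric mean": lambda x, y: (x * y) ** 0.5,
    "min": min,
    "product": lambda x, y: x * y,
    "max": max,
    "probabilistic sum": lambda x, y: x + y - x * y,
}
for name, f in examples.items():
    print(name, classify_logic(f, xs))
```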

2.1.1.4 Triangular Norms and Conorms

The areas of hyperconjunctive and hyperdisjunctive aggregators offer models of very high degrees of simultaneity and substitutability. These areas overlap with the areas of triangular norms (t‐norms) and triangular conorms (t‐conorms).

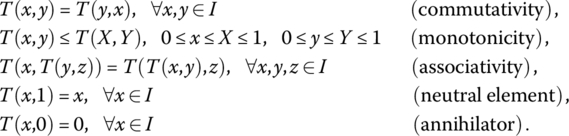

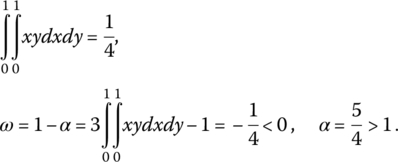

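According to [FOD94], a t‐norm is a function $T: I^2 \to I$ that satisfies the following conditions:

- Commutativity: $T(x, y) = T(y, x)$.
- Associativity: $T(x, T(y, z)) = T(T(x, y), z)$.
- Monotonicity: $T(x, y) \le T(u, v)$ whenever $x \le u$ and $y \le v$.
- Boundary condition: $T(x, 1) = x$.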

These properties indicate the conjunctive behavior of t‐norms [MES15], i.e., the possibility of using some t‐norms for modeling simultaneity. Particularly important in that direction are Archimedean t‐norms; for continuous t‐norms, the Archimedean property is equivalent to $T(x, x) < x$ for all $0 < x < 1$. In other words, the diagonal section function of Archimedean t‐norms satisfies $\delta_T(x) < x$, $0 < x < 1$, showing that the surface of such a t‐norm is located below the pure conjunction function: $T(x, y) \le \min(x, y)$, with strict inequality along the diagonal. For example, $T_P(x, x) = x^2 < x$ for $0 < x < 1$. Therefore, Archimedean t‐norms can be used as models of hyperconjunctive aggregators. The associativity of t‐norms permits their use in cases of more than two variables.

T‐conorms are defined using duality: $S(x, y) = 1 - T(1 - x, 1 - y)$. That yields the following properties of t‐conorms, symmetric to the properties of t‐norms:

- Commutativity: $S(x, y) = S(y, x)$.
- Associativity: $S(x, S(y, z)) = S(S(x, y), z)$.
- Monotonicity: $S(x, y) \le S(u, v)$ whenever $x \le u$ and $y \le v$.
- Boundary condition: $S(x, 0) = x$.

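Among a variety of t‐norms and t‐conorms, the most frequently used in the literature are min/max (M), product (P), Łukasiewicz (L), and drastic (D), defined as follows:

- Minimum/maximum: $T_M(x, y) = \min(x, y)$, $S_M(x, y) = \max(x, y)$.
- Product: $T_P(x, y) = xy$, $S_P(x, y) = x + y - xy$.
- Łukasiewicz: $T_L(x, y) = \max(x + y - 1, 0)$, $S_L(x, y) = \min(x + y, 1)$.
- Drastic: $T_D(x, y) = \min(x, y)$ if $\max(x, y) = 1$, and $T_D(x, y) = 0$ otherwise; $S_D(x, y) = \max(x, y)$ if $\min(x, y) = 0$, and $S_D(x, y) = 1$ otherwise.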

Among these aggregators, M and P are sometimes used in logic aggregation for modeling very high levels of simultaneity and substitutability. The others have rather low applicability, as discussed in [DUJ07c]. The primary reasons are incompatibilities with observable properties of human reasoning: the absence of idempotency (except for M), the absence of weights, discontinuities (D) and a discontinuous first derivative (L), insensitivity to improvements (M, L, D), and insufficient support for modeling simultaneity or substitutability (L). For example, $T_L(x, y) = 0$ whenever $x + y \le 1$ (e.g., $T_L(0.5, 0.5) = 0$), and there is absolutely no reward for an average satisfaction of inputs up to 50%. This model might be called the “excess 50%” norm, because its main concern is to eliminate candidates whose average score does not exceed 50%. After that, $T_L(x, y)$ behaves similarly to the arithmetic mean, providing simple additive compensatory features. For example, if $x + y > 1$, then $T_L(x, y) = x + y - 1 = 2\,\frac{x + y}{2} - 1$, and in this case a decrement Δx is insufficiently penalized because it can be compensated by an increment of the same size: $T_L(x - \Delta x, y + \Delta x) = T_L(x, y)$. So, $T_L(x, y)$ cannot properly penalize the lack of simultaneity, which is a fundamental issue in all models of simultaneity.
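The two weaknesses of $T_L$ described above can be reproduced in a few lines of Python; the input values are arbitrary illustrations, and the product t‐norm is included only for contrast.

```python
def t_luk(x, y):
    """Lukasiewicz t-norm T_L(x, y) = max(x + y - 1, 0)."""
    return max(x + y - 1.0, 0.0)

def t_prod(x, y):
    """Product t-norm T_P(x, y) = x * y, used here for comparison."""
    return x * y

# 1) No reward up to an average satisfaction of 50%: T_L = 0 whenever x + y <= 1.
print([t_luk(x, 1.0 - x) for x in (0.0, 0.25, 0.5)])  # [0.0, 0.0, 0.0]

# 2) Above that region T_L(x, y) = 2 * (x + y) / 2 - 1, so shifting dx from one
#    input to the other leaves the result unchanged (no penalty for imbalance).
x, y, dx = 0.8, 0.7, 0.2
print(t_luk(x, y), t_luk(x - dx, y + dx))    # equal up to floating-point rounding
print(t_prod(x, y), t_prod(x - dx, y + dx))  # the product does react to the shift
```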

Such properties are not useful in graded evaluation logic and were among the reasons for defining logic aggregators in Section 2.1.3 in a way that excludes $T_L(x, y)$ and $T_D(x, y)$ from the status of logic aggregators. The goal is to reduce the mathematical ballast generated by the too permissive (insufficiently restrictive) definition of general aggregators (Definition 2.1.0). The main restrictive concept of our approach is that logic aggregators must be applicable as models of human reasoning, and we try to focus only on the mathematical infrastructure that supports that fundamental goal.

Duals of Archimedean t‐norms (Archimedean t‐conorms) can be used as models of hyperdisjunctive aggregators. For example, $T_P(x, y) = xy$ is a t‐norm and a hyperconjunctive aggregator, and $S_P(x, y) = x + y - xy$ is a t‐conorm and a hyperdisjunctive aggregator. On the other hand, ![]() can be used as a hyperconjunctive aggregator, but it is not a t‐norm. Its dual, ![](), can be used as a hyperdisjunctive aggregator, but it is not a t‐conorm.
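The four t‐norms named above (M, P, L, D) and their De Morgan duals can be checked against the defining conditions with a short Python sketch. It assumes a 0.1-step grid and a small floating-point tolerance, so it tests rather than proves the properties.

```python
import itertools

def t_min(x, y):
    return min(x, y)

def t_prod(x, y):
    return x * y

def t_luk(x, y):
    return max(x + y - 1.0, 0.0)

def t_drastic(x, y):
    if x == 1.0:
        return y
    if y == 1.0:
        return x
    return 0.0

def dual(t):
    """De Morgan dual t-conorm S(x, y) = 1 - T(1 - x, 1 - y)."""
    return lambda x, y: 1.0 - t(1.0 - x, 1.0 - y)

T = {"M": t_min, "P": t_prod, "L": t_luk, "D": t_drastic}
S = {name: dual(t) for name, t in T.items()}

grid = [i / 10 for i in range(11)]
tol = 1e-12
for name in T:
    t, s = T[name], S[name]
    # Boundary conditions: 1 is neutral for T, 0 is neutral for S.
    assert all(abs(t(x, 1.0) - x) < tol and abs(s(x, 0.0) - x) < tol for x in grid)
    # Every t-norm lies below min; every t-conorm lies above max.
    assert all(t(x, y) <= min(x, y) + tol and s(x, y) >= max(x, y) - tol
               for x, y in itertools.product(grid, grid))

# The dual of T_L is the Lukasiewicz t-conorm min(x + y, 1).
assert all(abs(S["L"](x, y) - min(x + y, 1.0)) < tol for x, y in itertools.product(grid, grid))

# Hyperconjunctive / hyperdisjunctive behavior on the diagonal for the product pair.
print(T["P"](0.5, 0.5), S["P"](0.5, 0.5))  # 0.25 0.75
```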

Some of the presented general aggregators are consistent and some are inconsistent with observable properties of human evaluation reasoning. Keeping in mind these basic types of aggregators and their properties, we can now focus on studying aggregation functions that are provably suitable for building models of evaluation reasoning.

2.1.2 How Do Human Beings Aggregate Subjective Categories?

The only way to answer this crucial question is to observe characteristic patterns of human aggregative reasoning and to identify necessary properties of aggregation. If we can identify a single case of an indisputably valid reasoning pattern, such a case is a sufficient proof of the existence of that reasoning pattern, as well as a proof that the mathematical models of logic reasoning must include and correctly quantitatively describe the properties of such reasoning.

Initial attempts to investigate human aggregation of subjective categories using empirical analysis based on experiments with human subjects can be found in [THO79, ZIM80, ZIM87, KOV92, ZIM96]. These valuable efforts were restricted to the study of the nonidempotent gamma aggregator $A_\gamma(x_1, \dots, x_n) = \left(\prod_{i=1}^{n} x_i\right)^{1-\gamma} \left(1 - \prod_{i=1}^{n} (1 - x_i)\right)^{\gamma}$, $0 \le \gamma \le 1$, and unfortunately remained isolated.
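A direct transcription of the gamma aggregator in its unweighted form, $A_\gamma = \left(\prod x_i\right)^{1-\gamma}\left(1 - \prod (1 - x_i)\right)^{\gamma}$, $0 \le \gamma \le 1$. The function name and test values are illustrative; the sketch shows that γ interpolates between the product and the probabilistic sum without ever becoming idempotent.

```python
import math

def gamma_aggregator(xs, gamma):
    """Unweighted gamma aggregator:
    (prod x_i)^(1 - gamma) * (1 - prod(1 - x_i))^gamma, 0 <= gamma <= 1."""
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must be in [0, 1]")
    conjunctive_part = math.prod(xs)                          # product (AND-like)
    disjunctive_part = 1.0 - math.prod(1.0 - x for x in xs)  # probabilistic sum (OR-like)
    return conjunctive_part ** (1.0 - gamma) * disjunctive_part ** gamma

for g in (0.0, 0.5, 1.0):
    # For equal inputs of 0.5 none of these results equals 0.5: not idempotent.
    print(g, gamma_aggregator([0.5, 0.5], g))
```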

In this section, we identify basic characteristic patterns of aggregation of subjective categories. For each pattern, we first define the characteristic property and then provide the proof of existence. Our analysis is focused on aggregation in the context of evaluation reasoning. We assume that a decision maker has input percepts of the suitability degrees $x_1, \dots, x_n \in I$ of a group of $n$ components of an evaluated object. The decision maker then aggregates the input suitability degrees to create a composite percept of the output (fused) suitability of the analyzed group of n components of the evaluated object, $A(x_1, \dots, x_n) \in I$. In most practical cases the evaluated objects are artifacts (products made by humans), but the evaluation can also include other forms of decision alternatives.

There are two clearly visible types of aggregation of subjective categories: idempotent and nonidempotent. Idempotent aggregation is based on the assumption that any object is as suitable as its components in all cases where all components have the same suitability.

Nonidempotent aggregation is based on the assumption that if all components of an evaluated object have the same value, then the overall value of the object can be less than or greater than the value of the components [ZIM96, DUJ07c]. One such aggregator (originating in probability theory) is $A(x, y) = xy$. So, if the values of two components x and y are 50%, then the value of the whole object is 25%. Proponents of nonidempotent aggregation rarely relate aggregation to human reasoning and decision making. An exception is the nonidempotent gamma aggregator of Zimmermann and Zysno [ZIM80], which was empirically validated.

In the context of evaluation, human beings aggregate subjective categories using observable reasoning patterns. This process is based on the following fundamental reasoning patterns that are further investigated in Section 2.1.8, as well as in Chapter 2.2.

Pattern 1. Idempotent Aggregation

This aggregation pattern is based on the assumption that the output (aggregated) suitability degree must be between the lowest and the highest input suitability degrees. This property is called internality. Consequently, if all input suitability degrees are equal, the output suitability must have the same value (typical idempotent operations include means, conjunction, disjunction, set union, and set intersection).

Proof of Existence

In all schools, the grade point average (GPA) reflects the overall satisfaction of educational requirements, and it is universally accepted as a valid percept of academic performance of students. Assuming a set of different individual grades, the GPA score is always higher than the lowest individual grade and lower than the highest individual grade. This reasoning is equally accepted by students, teachers, and schools as the most reasonable grade aggregation pattern.

Pattern 2. Nonidempotent Aggregation

This aggregation pattern is based on the assumption that the aggregated suitability degree can be lower than the lowest or higher than the highest input suitability degree ($A(x_1, \dots, x_n) < \min(x_1, \dots, x_n)$ or $A(x_1, \dots, x_n) > \max(x_1, \dots, x_n)$). This property is called externality.

Proof of Existence

In situations where suitability can be interpreted as probability (or likelihood), it is possible to find cases where input suitability degrees reflect independent events and the overall suitability is a product of input suitability degrees. For example, biathlon athletes combine cross‐country skiing and rifle shooting. These two rather different skills can be considered almost completely independent. Consequently, if the performance of an athlete in cross‐country skiing is x1 and in rifle shooting is x2, then the overall suitability of such an athlete for biathlon competitions might be $x_1 x_2$. This model becomes obvious if x1 and x2 are interpreted as two independent probabilities: x1 is the probability of winning in skiing and x2 is the probability of winning in rifle shooting.

In a reversed case where x1 denotes a patient’s motor impairment (disability) and x2 denotes the patient’s visual impairment, it is not difficult to find unfortunate situations where the overall disability satisfies the condition $A(x_1, x_2) > \max(x_1, x_2)$.

In the area of evaluation, idempotent aggregation is significantly more frequent and more important than nonidempotent aggregation. The reason for the high importance of idempotent aggregation is that objects of evaluation are most frequently human products (both physical and conceptual), human properties (like academic or professional performance), etc., where evaluation reasoning is similar to that in the case of GPA: the overall suitability of an evaluated complex object cannot be higher than the suitability of its best component or less than the suitability of its worst component.

The industrial evaluation projects are predominantly focused on complex industrial products. Contrary to the assumption of fully independent components, all industrial products have components characterized by positively correlated suitability degrees. Indeed, all engineers develop products that have a well-balanced quality of components (positively correlated with the price of the product). For example, if a typical car is driven 15,000 miles per year and typically lasts up to 200,000 miles, then it can be used for approximately 13 years (200,000/15,000 ≈ 13.3). It would be meaningless to install in such a car a windshield wiper mechanism that is designed to last 40 years. The design logic of engineering products is based on a selected price range that is closely related to the expected overall quality degree. Consequently, the designers try to make all components as close as possible to the selected overall quality level. For example, an expensive car regularly has an expensive radio; a computer with a very fast processor usually has a very large main memory and large disk storage. Similarly, student grades on the midterm exam are highly correlated with the grades on the final exam because both of them depend on the student’s ability and effort. Obviously, the evaluated components are not independent, and usually they have highly correlated suitability degrees. In such cases, the result of evaluation is an overall suitability degree, acting as a kind of centroid of the suitability degrees of all relevant components and located inside the [min, max] range. Such evaluation models must be based on idempotent aggregation. Therefore, we next focus on evaluation reasoning patterns that are necessary and sufficient for idempotent aggregation.

Pattern 3. Noncommutativity

In human reasoning, each truth has its degree of importance. Commutative (symmetric) aggregators are either special cases, or unacceptable oversimplifications.

Proof of Existence

In the GPA aggregation example, the grade G4 of a course that has 4 hours of lectures per week cannot be equally important as the grade G2 of a course that has 2 hours of lectures per week (G4 should be twice as important as G2). For most car buyers, the car safety features are much more important than the optional heating of the front seats. For most homebuyers, the quality of a laundry room is not as important as the quality of a living room.
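In its simplest quantitative form, the GPA case of Pattern 3 is a weighted arithmetic mean. The sketch below assumes lecture hours as weights and uses hypothetical grades on a 4-point scale; swapping the two grades changes the result, which is exactly the noncommutativity described above.

```python
def weighted_gpa(grades, hours):
    """Weighted arithmetic mean of grades, with lecture hours as weights."""
    total = sum(hours)
    return sum(g * h for g, h in zip(grades, hours)) / total

hours = [4, 2]                           # 4-hour course (G4), 2-hour course (G2)
print(weighted_gpa([3.0, 4.0], hours))   # 20/6, about 3.333
print(weighted_gpa([4.0, 3.0], hours))   # 22/6, about 3.667: the order of arguments matters
```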

Pattern 4. Adjustable Simultaneity (Partial Conjunction)

This is the most frequent aggregation pattern. It reflects the condition for simultaneous satisfaction of two or more requirements. The degree of simultaneity (or the percept of importance of simultaneity) can vary in a range from low to high and must be adjustable. In some cases, a moderate simultaneity is satisfactory, and in other cases only the high simultaneity is acceptable. The degree of simultaneity is called andness.

Proof of Existence

A homebuyer simultaneously requires a good quality of home, and a good quality of home location, and an affordable price of home. A biathlon athlete must simultaneously be a good skier and a good rifle shooter.

Pattern 5. Adjustable Substitutability (Partial Disjunction)

This aggregation pattern reflects the condition where the satisfaction of any of two or more requirements significantly satisfies an evaluation criterion. The degree of substitutability (or the percept of importance of substitutability) can vary in a range from low to high and must be adjustable. In some cases a low or moderate substitutability can be satisfactory, and in other cases decision makers may require a high substitutability. The degree of substitutability is called orness.

Proof of Existence

A patient has a medical condition that combines motor impairments (decreased ability to move) and sensory symptoms (pain). The patient’s disability degree is the consequence of either sufficient motor impairments or sufficiently developed sensory problems, yielding disjunctive aggregation.

Pattern 6. The Use of Annihilators

Human evaluation reasoning is frequently based on aggregators that support annihilators. An annihilator is an extreme value of suitability (either 0 or 1) that is sufficient to decide the result of aggregation regardless of the values of other inputs [BEL07a]. In the case of necessary conditions, the annihilator is 0: if any of the mandatory requirements is not satisfied, the evaluated object is rejected (in other words, $A(x_1, \dots, x_{i-1}, 0, x_{i+1}, \dots, x_n) = 0$ for any $i$). In the dual case of sufficient conditions, the annihilator is 1: if any of the sufficient requirements is fully satisfied, the evaluation criterion is fully satisfied (in other words, $A(x_1, \dots, x_{i-1}, 1, x_{i+1}, \dots, x_n) = 1$ for any $i$). Aggregators that support annihilators 0 and 1 are called hard, and aggregators that do not support annihilators are called soft.

Proof of Existence

In many schools, a failure grade in a single course is sufficient to annihilate the effect of all other course grades and produce the overall failure, forcing the student to repeat the whole academic year. This annihilator has a dual interpretation:

- The overall failure is the failure in class #1 or the failure in class #2 or the failure in any other class (the annihilator is 1).

- The overall passing grade assumes the passing grade in class #1 and the passing grade in class #2, and the passing grades in all other classes (the annihilator is 0).
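Whether an aggregator supports an annihilator can be probed on a grid. The helper below is a hypothetical sketch; like any grid check, it can refute the property but not prove it.

```python
import itertools

def has_annihilator(agg, a, n=2, steps=10, tol=1e-12):
    """Check on a grid whether the value a (0 or 1) placed in any argument
    position forces agg to return a, regardless of the other inputs."""
    grid = [i / steps for i in range(steps + 1)]
    for pos in range(n):
        for rest in itertools.product(grid, repeat=n - 1):
            args = list(rest)
            args.insert(pos, a)
            if abs(agg(*args) - a) > tol:
                return False
    return True

candidates = {
    "min": lambda x, y: min(x, y),
    "product": lambda x, y: x * y,
    "arithmetic mean": lambda x, y: (x + y) / 2,
    "max": lambda x, y: max(x, y),
}
for name, f in candidates.items():
    zero, one = has_annihilator(f, 0.0), has_annihilator(f, 1.0)
    print(f"{name}: annihilator 0 = {zero}, annihilator 1 = {one}")
```

In this sample, min and product are hard with respect to 0, max is hard with respect to 1, and the arithmetic mean is soft.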

Pattern 7. Partial and Full Hard Simultaneity (Hard Partial Conjunction)

This pattern is encountered in situations where it is highly desirable to simultaneously satisfy two or more inputs, and the satisfaction of all inputs is mandatory. The percept of aggregated satisfaction of hard simultaneous requirements is automatically zero if any of the inputs has zero satisfaction; it is nonzero only if all inputs are at least partially satisfied. In the extreme case of full hard simultaneity, the aggregated suitability is equal to the lowest input suitability, and the lowest suitability cannot be compensated by increasing the suitability of other inputs. In the more frequent case of partial hard simultaneity, nonzero low inputs can be partially compensated by higher values of other inputs.

Proof of Existence

In the process of selecting a home location, a senior citizen who cannot drive a car needs to be in the proximity of public transportation and food stores. If any of these mandatory requirements is not satisfied, the aggregated percept of satisfaction with a home location is zero, and the corresponding home location is rejected. Home locations are acceptable if and only if all inputs are positive (partially satisfied). In an extreme case of full hard simultaneity, the stakeholder does not want to accept any low input (neither remote food stores nor remote public transport). In such cases, the lowest input cannot be compensated by other higher inputs, and the aggregated percept of suitability is affected solely by the lowest of input suitability degrees. Much more frequently, however, the percept of aggregated suitability depends on all mandatory inputs and not only the lowest input. In other words, an adjustable partial compensation of the lowest (but always positive) suitability degree is usually possible.

Pattern 8. Soft Simultaneity (Soft Partial Conjunction)

This pattern is encountered in situations where it is desirable to simultaneously satisfy two or more inputs, but none of the inputs is mandatory. The percept of aggregated satisfaction of soft simultaneous requirements is not automatically zero if one of the inputs has zero satisfaction; it is nonzero as long as at least one of the inputs is partially satisfied.

Proof of Existence

In the process of selecting a home location, a senior citizen would like to live close to a park, restaurants, and a public library. The percept of satisfaction with a given location depends on simultaneous proximity to the park, restaurants, and library, but the stakeholder is usually ready to accept situations where some (but not all) of the desired amenities are missing.
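To contrast hard simultaneity (Pattern 7) with soft simultaneity (Pattern 8), here is a minimal Python sketch. It assumes the weighted power mean as the partial conjunction (one common choice, not necessarily the aggregator developed later in the book), with exponents r = -1 (hard) and r = 0.5 (soft) picked purely for illustration. For r ≤ 0 a zero input annihilates the result; for 0 < r < 1 it only lowers it.

```python
import math

def power_mean(xs, ws, r):
    """Weighted power mean of degrees of truth xs in [0, 1]; weights ws are positive and sum to 1."""
    if r <= 0 and any(x == 0.0 for x in xs):
        return 0.0  # limit value: for r <= 0 a zero input annihilates the mean
    if r == 0:
        # r = 0 is the weighted geometric mean
        return math.exp(sum(w * math.log(x) for x, w in zip(xs, ws)))
    return sum(w * x ** r for x, w in zip(xs, ws)) ** (1.0 / r)

weights = [0.5, 0.5]
inputs = [0.0, 0.9]  # one requirement completely unsatisfied, the other well satisfied

hard = power_mean(inputs, weights, r=-1.0)  # hard partial conjunction (harmonic mean)
soft = power_mean(inputs, weights, r=0.5)   # soft partial conjunction
print(hard, soft)  # hard is 0.0; soft is approximately 0.225
```

With the same inputs, only the exponent decides whether the unsatisfied input is an annihilator (hard) or merely a penalty (soft).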

Pattern 9. Partial and Full Hard Substitutability (Hard Partial Disjunction)

This pattern is encountered in situations where it is desirable to satisfy two or more inputs that can partially or completely replace each other. Each input alone is sufficient to completely satisfy all requirements. The percept of aggregated satisfaction of hard substitutability requirements is automatically complete if any of the inputs is completely satisfied. In the case of incomplete satisfaction of input requirements, each input can partially compensate for the lack of other inputs. The percept of aggregated suitability is nonzero as long as at least one of the inputs is partially satisfied. In the extreme case of full hard substitutability, the aggregated suitability is equal to the highest input suitability, and lower suitability degrees do not affect it. In the more frequent case of partial hard substitutability and incomplete satisfaction of requirements, low inputs can decrease the aggregated percept of suitability.

Proof of Existence

A homebuyer in an area with very poor (or nonexistent) street parking needs a parking solution, and the options are: a private garage or a shared garage or a reserved space in an outdoor parking lot. If any of these three options is completely satisfied, the homebuyer is fully satisfied. If the satisfaction with these options is incomplete (e.g., the homebuyer needs space for two cars but the available private garage has space for only one car), then the aggregated suitability of parking depends on all available options, or in an infrequent extreme case, it can depend only on the best option.

Pattern 10. Soft Substitutability (Soft Partial Disjunction)

This pattern is encountered in situations where it is desirable to satisfy two or more inputs that can partially replace each other, but none of them alone is sufficient to completely satisfy all requirements. The percept of aggregated satisfaction of soft substitutability requirements is not complete unless all inputs are completely satisfied. However, each input can partially compensate for the lack of other inputs. The percept of aggregated suitability is nonzero as long as at least one of the inputs is partially satisfied.

Proof of Existence

A homebuyer with a very limited budget has a list of amenities (sports stadiums, restaurants, parks, etc.) that are desirable in the vicinity of the home. None of the amenities alone is sufficient to completely satisfy the homebuyer, but a homebuyer who has few choices is forced to be partially satisfied with any subset of them.
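The disjunctive counterparts (Patterns 9 and 10) can be sketched the same way. Under the same assumption of weighted power means with illustrative exponents, a soft partial disjunction is a power mean with r > 1, while a hard partial disjunction is obtained here as the De Morgan dual 1 − M_r(1 − x1, …, 1 − xn) of a hard partial conjunction with r < 0. The function names are hypothetical.

```python
import math

def power_mean(xs, ws, r):
    """Weighted power mean of values in [0, 1]; weights are positive and sum to 1."""
    if r <= 0 and any(x == 0.0 for x in xs):
        return 0.0  # a zero input annihilates the mean for r <= 0
    if r == 0:
        return math.exp(sum(w * math.log(x) for x, w in zip(xs, ws)))
    return sum(w * x ** r for x, w in zip(xs, ws)) ** (1.0 / r)

def hard_partial_disjunction(xs, ws, r=-1.0):
    # De Morgan dual of a hard partial conjunction: any input equal to 1 gives 1.
    return 1.0 - power_mean([1.0 - x for x in xs], ws, r)

def soft_partial_disjunction(xs, ws, r=2.0):
    # Power mean with r > 1: above the arithmetic mean, complete only if all inputs are 1.
    return power_mean(xs, ws, r)

w = [0.5, 0.5]
print(hard_partial_disjunction([1.0, 0.2], w))  # one fully satisfying option: 1.0
print(soft_partial_disjunction([1.0, 0.2], w))  # one amenity alone is not enough: about 0.72
```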

Patterns 6 to 10 are denoted in Fig. 2.1.1 as logically symmetric. In this context, symmetry does not mean commutativity; it means that all inputs support (or do not support) annihilators in the same way. So, either all inputs are hard or all inputs are soft.

Figure 2.1.1 Types of aggregation and the classification of logic aggregators (bold frames).

Pattern 11. Asymmetric Simultaneity (Conjunctive Partial Absorption)

This pattern is encountered in situations where it is desirable to simultaneously satisfy a mandatory input requirement and an optional input requirement. This form of aggregation is conjunctive but asymmetric. The mandatory input supports the annihilator 0: if it is not satisfied, the percept of aggregated suitability is zero regardless of the level of satisfaction of the optional input. The optional input does not support the annihilator 0. If the mandatory requirement is partially satisfied, a zero optional input decreases the output suitability below the level of the mandatory input but does not yield a zero result, which shows the asymmetry of this form of simultaneity. Similarly, if the optional input is perfectly satisfied, it increases, to some extent, the output suitability above the level of the mandatory input.

Proof of Existence

A homebuyer who needs parking in a moderately populated urban area would like to have a private garage in the home and good street parking in front of the home. However, the private parking garage is mandatory, while good street parking for the homeowner and occasional visitors is optional, i.e., it is desirable but not mandatory. The home without a private garage will be rejected regardless of the availability of good street parking, while a nice home with a private garage but poor street parking will be acceptable.

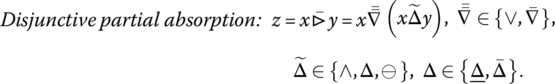
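One way to obtain this behavior, sketched below under stated assumptions, is to combine a hard conjunctive aggregator of the mandatory input x with a soft aggregate of x and the optional input y. Here the hard part is a weighted harmonic mean and the soft part a weighted arithmetic mean; this structure and the weights 0.5 are illustrative choices, not the book's parameterization.

```python
def conjunctive_partial_absorption(x, y, w_inner=0.5, w_outer=0.5):
    """Mandatory input x, optional input y, both degrees of truth in [0, 1].

    Hypothetical structure: weighted harmonic mean (hard, annihilator 0) of x
    and the weighted arithmetic mean of x and y (soft). Weights must be in (0, 1).
    """
    if x == 0.0:
        return 0.0  # mandatory requirement not satisfied: reject
    inner = w_inner * x + (1.0 - w_inner) * y  # positive whenever x > 0
    return 1.0 / (w_outer / x + (1.0 - w_outer) / inner)

print(conjunctive_partial_absorption(0.0, 1.0))  # no private garage: 0.0 regardless of street parking
print(conjunctive_partial_absorption(0.6, 0.0))  # penalty: below 0.6 but positive
print(conjunctive_partial_absorption(0.6, 1.0))  # reward: above 0.6
```

With x = 0.6, a missing optional input lowers the result to about 0.4, while a perfect optional input raises it to about 0.69, so the mandatory input fixes the level and the optional input adjusts it.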

Pattern 12. Asymmetric Substitutability (Disjunctive Partial Absorption)

This pattern is encountered in situations where it is desirable to satisfy a sufficient input requirement and/or an alternative optional input requirement. This form of aggregation is disjunctive but asymmetric. The sufficient input supports the annihilator 1: if it is fully satisfied, the percept of aggregated suitability is full satisfaction regardless of the level of satisfaction of the optional input. The optional input does not support the annihilator 1. If the sufficient requirement is not satisfied at all, then a positive degree of satisfaction of the optional input can partially compensate for the lack of the sufficient input, but it cannot yield full satisfaction. That shows the asymmetry of this form of substitutability. Similarly to the case of asymmetric simultaneity, if the sufficient requirement is partially satisfied, a zero optional input decreases the output suitability below the level of the sufficient input but does not yield a zero result. On the other hand, if the optional input is perfectly satisfied, it increases, to some extent, the output suitability above the level of the sufficient input.

Proof of Existence

A homebuyer who needs parking in a highly populated urban area would like to have a private garage for two cars in the home or good street parking in front of the home. A high‐quality two‐car private garage is sufficient to completely satisfy the parking requirements, and in such a case street parking becomes irrelevant. In the absence of a private garage, good street parking is acceptable as a nonideal (partial) solution of the parking problem. In addition, if a single‐car (i.e., medium suitability) private garage is available, then good street parking can increment (improve) the overall percept of parking suitability, and the corresponding parking suitability score will be higher than the private garage score. Similarly, in the case of a single‐car private garage, poor street parking can decrement the overall percept of parking suitability and lower the parking suitability score below the medium suitability of the single‐car private garage. These suitability increments and decrements are called reward and penalty. They can be used to adjust the desired properties of all partial absorption aggregators.

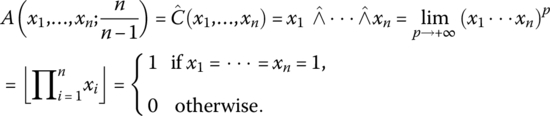
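A minimal sketch of disjunctive partial absorption, assuming it is built as the De Morgan dual 1 − CPA(1 − x, 1 − y) of a conjunctive partial absorption, where the conjunctive form is itself assumed to be a weighted harmonic mean of the first input and the arithmetic mean of both inputs (illustrative weights 0.5). The numbers reproduce the garage and street-parking story qualitatively; the names are hypothetical.

```python
def cpa(x, y, w_inner=0.5, w_outer=0.5):
    # Hypothetical conjunctive partial absorption: x mandatory, y optional.
    if x == 0.0:
        return 0.0
    inner = w_inner * x + (1.0 - w_inner) * y
    return 1.0 / (w_outer / x + (1.0 - w_outer) / inner)

def disjunctive_partial_absorption(x, y):
    # x is the sufficient input (annihilator 1), y the optional input.
    return 1.0 - cpa(1.0 - x, 1.0 - y)

print(disjunctive_partial_absorption(1.0, 0.0))  # two-car garage: 1.0, street parking irrelevant
print(disjunctive_partial_absorption(0.0, 1.0))  # no garage, good street parking: partial, below 1
print(disjunctive_partial_absorption(0.5, 1.0))  # single-car garage + good street parking: reward
print(disjunctive_partial_absorption(0.5, 0.0))  # single-car garage + poor street parking: penalty
```

For the single‐car garage (x = 0.5), good street parking raises the result to about 0.67 and poor street parking lowers it to about 0.4, which corresponds to the reward and penalty described above.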

Pattern 13. Neutrality (The Centroid of Logic Aggregators)

In its extreme version, simultaneity means that an aggregation criterion is completely satisfied only if all input components are simultaneously completely satisfied. In the extreme case of substitutability, any single input can completely satisfy an aggregation criterion and compensate for the absence of all other inputs. Not surprisingly, human reasoning uses extreme versions of simultaneity and substitutability only in extreme situations. In normal situations, simultaneity and substitutability are used as complementary components in the process of aggregation. Humans can continuously adjust degrees of simultaneity and substitutability, making a smooth transition from extreme simultaneity to extreme substitutability. Along that path, there is a central point where conjunctive and disjunctive properties are perfectly balanced. This point can be denoted as neutrality, and it represents a kind of centroid of basic logic aggregators. In the case of idempotent aggregators, the middle point between conjunction and disjunction is the arithmetic mean, as indicated in the simplest case of two inputs: $(x \wedge y + x \vee y)/2 = (x + y)/2$. It is most likely that neutrality is an initial default aggregator in human reasoning, which is subsequently adjusted (refined) by moving in the conjunctive or disjunctive direction and by using different weights of inputs. This is the reason why neutrality can be considered the centroid of all logic aggregators.

Proof of Existence

The logic behind using the arithmetic mean as a school grade aggregator in computing the GPA score is the simultaneous presence and perfect balance of two completely contradictory requirements: (1) excellent students must simultaneously have all excellent course grades (conjunctive property), and (2) any subset of good grades can partially compensate for any subset of bad grades (disjunctive property). Logic neutrality reflects the human ability to seamlessly combine and balance the effects of such diverse requirements.

Various patterns of human aggregation of subjective categories are summarized and classified in Fig. 2.1.1. There are nine idempotent and two nonidempotent aggregation patterns that are easily observable in human evaluation reasoning. These types of aggregation combined with negation are necessary and sufficient to model graded logic used in human reasoning, including evaluation and other applications of soft propositional logic. A detailed analysis supporting the concept of sufficiency is provided in subsequent sections.

In all presented reasoning patterns, exemplifying the characteristic property of reasoning is equivalent to proving that the property is necessary. Consequently, in the special case of idempotent logic aggregators, fundamental properties of aggregation can be specified as follows:

- There are nine fundamental necessary and sufficient idempotent logic aggregation patterns used in evaluation logic: soft and (full or partial) hard symmetric conjunctive and disjunctive patterns, neutrality, and asymmetric conjunctive and disjunctive patterns.

- If available aggregators do not explicitly support all the fundamental patterns of human aggregation of subjective categories, they cannot be used in logic aggregation models. Of course, there are many aggregators that can model only a subset of fundamental aggregation patterns, and such aggregators can be used if the aggregation problem does not need all aggregation patterns.

In the case of nonidempotent aggregators, we noticed their low applicability in the area of modeling human evaluation reasoning, but nevertheless, they are present in human reasoning. In the human mind, there is no switch for discrete transition from idempotent to nonidempotent aggregators. The transition from idempotent to nonidempotent aggregators and vice versa must be seamless, and logic aggregators must be developed to enable that form of transition. That is going to be one of our goals in the next chapters.

Let us again emphasize that in this book we are interested in logic aggregation (i.e., the aggregation of degrees of truth) and not in aggregation in the general mathematical sense. Many aggregators that satisfy the extremely permissive aggregation function conditions (Definition 2.1.0) have properties that are obviously incompatible with properties of human reasoning (poor support for modeling simultaneity and substitutability, discontinuities of first derivatives, etc.). Therefore, the general study of aggregators is a much wider area than the study of logic aggregators. Logic aggregators satisfy the conditions (2.1.7), but they must also satisfy a number of other restrictive conditions that reduce the number of possible aggregation functions and strictly specify their fundamental properties.

The basic logic reasoning patterns presented in this section are the point of departure for any study of evaluation logic reasoning. There are two “aggregation roads” diverging from this point of departure. One is to ignore the observable reasoning patterns and to build aggregation models as general (formal) mathematical structures; there are many travelers we can encounter along the aggregation theory road. The second option is to take “the road less traveled by” and to try to strictly follow, model, and further develop the observable reasoning patterns. If we want to reach the territory of professional evaluation applications, we must take the second road.

2.1.3 Definition and Classification of Logic Aggregators

Mathematical literature devoted to aggregators [GRA09, BEL07, BEL16] defines an aggregator A(x1, …, xn) as a function that is monotonically nondecreasing in all arguments and satisfies two boundary conditions, as shown in Definition 2.1.0. It is rather easy to note that Definition 2.1.0 is extremely permissive, yielding various families of functions that have properties not encountered in logic models and not observable in human reasoning. Unfortunately, such functions are still called conjunctive or disjunctive, and these terms imply proximity to logic and applicability in models of human reasoning. Therefore, it is useful to define the concept of logic aggregator in a more restrictive way, to exclude properties that are inconsistent with observable properties of human aggregative reasoning.

If aggregators are applied in evaluation logic, then we assume that input variables x1, …, xn have semantic identity as degrees of truth of value statements, and aggregators are functions of propositional calculus (also called sentential logic, sentential calculus, or propositional logic). Thus, undesirable properties of logic aggregators include the following:

- Discontinuities: natura non facit saltum (nature makes no leap) and logic aggregators should be continuous functions.

- If all arguments are to some extent true (i.e., positive), their aggregate cannot be false (0). To create aggregated falsity, at least one input argument must be false.

- If none of the arguments is completely true, their aggregate cannot be completely true. To create a complete (perfect) aggregated truth, at least one input argument must be completely true.

- Discontinuities and/or oscillations of first derivatives are questionable properties, incompatible with human reasoning. They occur in special cases (e.g., as a consequence of using min and max functions), but not as a regular modus operandi in logic aggregation.

Taking into account these observations, we are going to use the following definition of logic aggregators.

Therefore, we restricted the general Definition 2.1.0 by requiring the continuity of logic aggregation functions and two additional logic conditions. For example, according to this definition, neither the Łukasiewicz t‐norm nor the drastic t‐norm is a logic aggregator: the Łukasiewicz t‐norm $\max(0, x + y - 1)$ yields 0 for positive inputs whenever $x + y \le 1$, and the drastic t‐norm is discontinuous. Of course, in propositional logic we regularly use logic functions that are not classified as logic aggregators. In most cases, such functions use the standard negation ($\bar{x} = 1 - x$), which can destroy nondecreasing monotonicity and result in logic functions that are not aggregators. On the other hand, it is easy to note that from Definition 2.1.1 it follows that compound functions created using superposition of logic aggregators are again logic aggregators. In this book, we are interested only in logic aggregators and in functions created using logic aggregators and standard negation. Therefore, wherever we use the term aggregator we assume logic aggregators based on Definition 2.1.1 and not general aggregators based on Definition 2.1.0.
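The two logic conditions that separate logic aggregators from general aggregators can be probed numerically. The sketch below (hypothetical helper names) evaluates a two‐input function on a grid and reports which condition fails. A grid can only exhibit counterexamples, so passing it is not a proof, and continuity is not tested at all.

```python
import itertools

def logic_condition_violations(agg, steps=20):
    """Grid check, for two inputs, of: (a) positive inputs must not give 0,
    (b) incomplete inputs must not give 1. Returns the violated conditions."""
    grid = [i / steps for i in range(steps + 1)]
    found = set()
    for x, y in itertools.product(grid, repeat=2):
        z = agg(x, y)
        if x > 0 and y > 0 and z == 0:
            found.add("positive inputs gave 0")
        if x < 1 and y < 1 and z == 1:
            found.add("incomplete inputs gave 1")
    return sorted(found)

def lukasiewicz(x, y):
    return max(0.0, x + y - 1.0)

def drastic(x, y):
    return min(x, y) if max(x, y) == 1.0 else 0.0

def product(x, y):
    return x * y

for f in (lukasiewicz, drastic, product, min):
    print(f.__name__, logic_condition_violations(f))
# lukasiewicz and drastic fail condition (a); product and min pass the grid check
```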

The central position in propositional logic is reserved for logic aggregators that are models of simultaneity (conjunction), substitutability (disjunction), and negation. The most frequent logic models are based on combining various forms of conjunction, disjunction, and negation to create logic functions of higher complexity. Methods for building such models belong to propositional calculus. Consequently, our first step is to investigate models of simultaneity and substitutability.

According to [DUJ74a] and the analysis presented in Section 2.1.1, the area of partial conjunction is located between the arithmetic mean and the pure conjunction ![]() , and the area of partial disjunction is located between the arithmetic mean and the pure disjunction

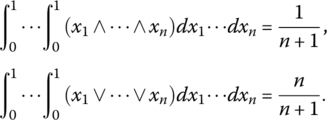

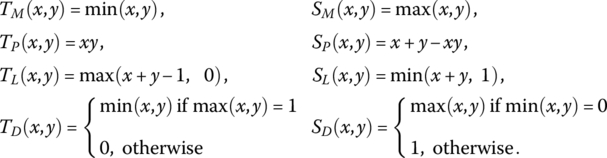

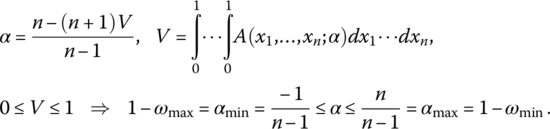

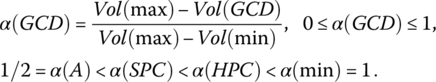

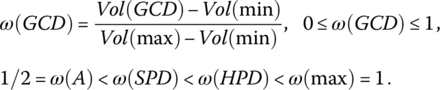

, and the area of partial disjunction is located between the arithmetic mean and the pure disjunction ![]() . Logic aggregators are characterized by their location in the space between the pure conjunction and the pure disjunction. The location of an aggregator can be quantified as the volume under the aggregator surface inside the unit hypercube. The intensity of partial conjunction is measured using the conjunction degree (or andness) α defined as follows1 [DUJ73a, DUJ74a]:

. Logic aggregators are characterized by their location in the space between the pure conjunction and the pure disjunction. The location of an aggregator can be quantified as the volume under the aggregator surface inside the unit hypercube. The intensity of partial conjunction is measured using the conjunction degree (or andness) α defined as follows1 [DUJ73a, DUJ74a]:

The intensity of partial disjunction is measured using the disjunction degree (or orness) ω defined as the complement of α:

$$\omega = 1 - \alpha \qquad (2.1.9)$$

Obviously, for idempotent aggregators, $0 \le \alpha \le 1$ and $0 \le \omega \le 1$. In the case of pure conjunction, we have $\alpha = 1, \omega = 0$, and in the case of pure disjunction we have $\alpha = 0, \omega = 1$. In the case of the arithmetic mean $A(x_1, \ldots, x_n) = (x_1 + \cdots + x_n)/n$ the andness and orness are equal:

$$\alpha = \omega = \frac{1}{2}$$

Therefore, the arithmetic mean is the central point (a centroid of logic aggregators) between the pure conjunction and the pure disjunction. Conjunctive aggregators are less than the arithmetic mean and are therefore located in the area between the arithmetic mean and the pure conjunction, where $1/2 < \alpha \le 1$. Similarly, the disjunctive aggregators are greater than the arithmetic mean and are therefore located in the area between the arithmetic mean and the pure disjunction, where $1/2 < \omega \le 1$.
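The andness and orness of any aggregator can be estimated numerically. This is a minimal Monte Carlo sketch, assuming the global andness definition α = (n − (n+1)Ā)/(n − 1), where Ā is the mean value (the volume under the surface) of the aggregator over [0, 1]^n; the sample size and seed are arbitrary.

```python
import math
import random

def andness(agg, n, samples=100_000, seed=1):
    """Monte Carlo estimate of global andness; orness is 1 - andness."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        total += agg([rng.random() for _ in range(n)])
    mean_value = total / samples  # estimate of the volume under the aggregator surface
    return (n - (n + 1) * mean_value) / (n - 1)

def arithmetic(xs):
    return sum(xs) / len(xs)

def geometric(xs):
    return math.prod(xs) ** (1.0 / len(xs))

for name, f in [("min", min), ("arithmetic", arithmetic), ("geometric", geometric), ("max", max)]:
    a = andness(f, n=2)
    print(f"{name:10s} andness={a:.3f} orness={1 - a:.3f}")
```

For n = 2 the exact andness of the geometric mean is 2/3 (its mean value is (2/3)² = 4/9), so the estimate should land in the conjunctive region, between the arithmetic mean (1/2) and min (1).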

Let us now investigate the basic means. The geometric mean $g = \sqrt{xy}$ is obviously a conjunctive logic function: it does not exceed the arithmetic mean² $a = (x + y)/2$, and for $x \in \{0, 1\}$ and $y \in \{0, 1\}$ it gives the same mapping as the pure conjunction: $\sqrt{xy} = x \wedge y$. A similar conjunctive logic function is the harmonic mean $h = 2xy/(x + y)$. Since $g^2 = xy$ and $ah = xy$, it follows $g = \sqrt{ah}$; together with $g \le a$ this gives $h \le g \le a$ (the geometric mean g is located between a and h). In other words, the harmonic mean is more conjunctive than the geometric mean. However, the quadratic mean $q = \sqrt{(x^2 + y^2)/2}$ is disjunctive. From $(x - y)^2 \ge 0$ it follows that $x^2 + y^2 \ge 2xy$. Since $q^2 - a^2 = (x^2 + y^2 - 2xy)/4$, it follows $q^2 \ge a^2$ and $q \ge a$. Consequently, we have proved for two variables the inequality of basic conjunctive and disjunctive aggregators:

$$x \wedge y \le h \le g \le a \le q \le x \vee y$$

In this expression, for positive x and y, the equalities hold only if $x = y$. Using a more complex proving technique [BUL03], it is possible to show that the same inequality also holds for n variables, and in the general case of different normalized weights:

$$\min_i x_i \le \left(\sum_{i=1}^{n} \frac{W_i}{x_i}\right)^{-1} \le \prod_{i=1}^{n} x_i^{W_i} \le \sum_{i=1}^{n} W_i x_i \le \left(\sum_{i=1}^{n} W_i x_i^2\right)^{1/2} \le \max_i x_i, \qquad W_i > 0,\ \sum_{i=1}^{n} W_i = 1$$
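The weighted inequality chain can be spot‐checked numerically. The sketch below draws random positive inputs and random normalized weights and verifies min ≤ h ≤ g ≤ a ≤ q ≤ max. Random testing illustrates the result but does not prove it, and the small tolerance only guards against floating‐point rounding.

```python
import math
import random

def weighted_means(xs, ws):
    """Weighted harmonic, geometric, arithmetic, and quadratic means (xs > 0, sum(ws) == 1)."""
    h = 1.0 / sum(w / x for x, w in zip(xs, ws))
    g = math.exp(sum(w * math.log(x) for x, w in zip(xs, ws)))
    a = sum(w * x for x, w in zip(xs, ws))
    q = math.sqrt(sum(w * x * x for x, w in zip(xs, ws)))
    return h, g, a, q

def chain_holds(xs, ws, eps=1e-12):
    chain = [min(xs), *weighted_means(xs, ws), max(xs)]
    return all(lo <= hi + eps for lo, hi in zip(chain, chain[1:]))

rng = random.Random(42)
ok = True
for _ in range(10_000):
    n = rng.randint(2, 8)
    xs = [rng.uniform(0.01, 1.0) for _ in range(n)]
    raw = [rng.uniform(0.01, 1.0) for _ in range(n)]
    ws = [r / sum(raw) for r in raw]  # normalized weights
    ok = ok and chain_holds(xs, ws)
print("inequality chain held in all trials:", ok)
```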

This interpretation of conjunctive and disjunctive aggregation is different from the interpretation used in the mathematical literature about general aggregators [BEL07, GRA09]. Indeed, in propositional logic that is consistent with classical Boolean logic, the extreme logic aggregators are the pure conjunction (the min function) and the pure disjunction (the max function).

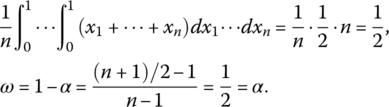

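However, aggregation can sometimes go beyond the pure conjunction and the pure disjunction. For example, in the case of the nonidempotent t‐norm aggregator $A(x_1, \ldots, x_n) = x_1 x_2 \cdots x_n$, whose mean value over the unit hypercube is $\bar{A} = 2^{-n}$, from (2.1.8) and (2.1.9) we have

$$\alpha = \frac{n - (n+1)2^{-n}}{n-1} > 1, \qquad \omega = \frac{(n+1)2^{-n} - 1}{n-1} < 0.$$

Similarly, for the t‐conorm $A(x_1, \ldots, x_n) = 1 - (1 - x_1)(1 - x_2)\cdots(1 - x_n)$, whose mean value is $\bar{A} = 1 - 2^{-n}$, we have

$$\alpha = \frac{(n+1)2^{-n} - 1}{n-1} < 0, \qquad \omega = \frac{n - (n+1)2^{-n}}{n-1} > 1.$$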
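Because the mean values of the product t‐norm and its dual t‐conorm are 2^(−n) and 1 − 2^(−n), their andness and orness can be computed exactly with rational arithmetic. A minimal sketch (the helper name is hypothetical), assuming the global andness formula α = (n − (n+1)Ā)/(n − 1):

```python
from fractions import Fraction

def andness_from_mean(mean_value, n):
    """Global andness alpha = (n - (n + 1) * mean_value) / (n - 1); orness = 1 - alpha."""
    return (n - (n + 1) * mean_value) / (n - 1)

for n in range(2, 6):
    t_norm = andness_from_mean(Fraction(1, 2 ** n), n)        # x1 * x2 * ... * xn
    t_conorm = andness_from_mean(1 - Fraction(1, 2 ** n), n)  # 1 - (1-x1)...(1-xn)
    print(f"n={n}:", "t-norm alpha =", t_norm, "omega =", 1 - t_norm,
          "| t-conorm alpha =", t_conorm, "omega =", 1 - t_conorm)
```

For n = 2 this gives α = 5/4 for the product t‐norm and ω = 5/4 for the t‐conorm, both outside the idempotent range [0, 1].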

Similar to drastic t‐norm/conorm, GL models of simultaneity and substitutability inside the unit hypercube [0, 1]n have limit cases called the drastic conjunction and the drastic disjunction. The drastic conjunction is the most conjunctive GL function: ![]() with exception

with exception ![]() . The drastic disjunction is the most disjunctive GL function:

. The drastic disjunction is the most disjunctive GL function: ![]() with exception

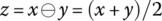

with exception ![]() . These limit cases, which are graded logic functions, but not aggregators according to Definition 2.1.1, are discussed in Section 2.1.7.6. So, from formulas (2.1.8) and (2.1.9) we get the minimum and maximum possible values of andness and orness:

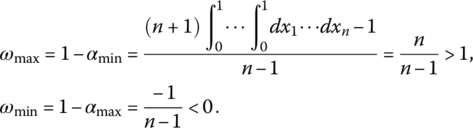

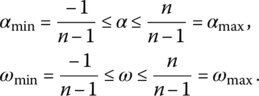

. These limit cases, which are graded logic functions, but not aggregators according to Definition 2.1.1, are discussed in Section 2.1.7.6. So, from formulas (2.1.8) and (2.1.9) we get the minimum and maximum possible values of andness and orness:

Therefore, in a general case of n variables, the ranges of global andness and orness of graded conjunctive and disjunctive logic functions are

$$-\frac{1}{n-1} \le \alpha \le \frac{n}{n-1}, \qquad -\frac{1}{n-1} \le \omega \le \frac{n}{n-1}.$$

The values αmin, αmax, ωmin, ωmax correspond to the drastic conjunction and the drastic disjunction, which by Definition 2.1.1 are not logic aggregators. Thus, the range of andness/orness of logic aggregators is the open interval $(-1/(n-1),\ n/(n-1))$, and the range of andness/orness of GL functions is the closed interval $[-1/(n-1),\ n/(n-1)]$.

For the minimum number of variables $n = 2$ we have the largest range of andness and orness of GL functions:

$$-1 \le \alpha \le 2, \qquad -1 \le \omega \le 2.$$

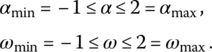

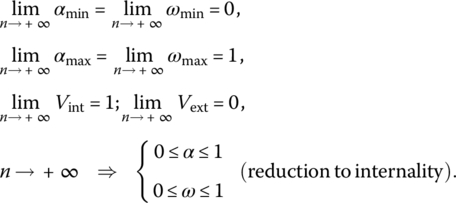

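However, the range of andness and orness decreases as the number of variables increases. According to [DUJ73a], we have:

$$\lim_{n \to \infty} \alpha_{min} = \lim_{n \to \infty} \omega_{min} = 0, \qquad \lim_{n \to \infty} \alpha_{max} = \lim_{n \to \infty} \omega_{max} = 1.$$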

Let $V_{int}$ denote the volume of the region inside the unit hypercube where internality and idempotency hold. Similarly, let $V_{ext}$ denote the volume of the region where externality and nonidempotency hold. According to (2.1.10), these regions can be compared as follows:

$$V_{int} = \frac{n}{n+1} - \frac{1}{n+1} = \frac{n-1}{n+1}, \qquad V_{ext} = 1 - V_{int} = \frac{2}{n+1}.$$

If the number of variables increases, then the region of internality increases, and the region of externality decreases. For example, for $n = 2$, $V_{int} = 1/3$ and $V_{ext} = 2/3$. Furthermore,

$$\lim_{n \to \infty} V_{int} = 1, \qquad \lim_{n \to \infty} V_{ext} = 0.$$
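The ranges and volumes above follow from two classical facts: for independent uniform inputs the mean values of min and max are 1/(n+1) and n/(n+1), and the drastic conjunction and disjunction have mean values 0 and 1. A short exact computation (hypothetical helper names), assuming the andness formula α = (n − (n+1)Ā)/(n − 1), shows the andness range shrinking toward [0, 1] and V_int growing toward 1:

```python
from fractions import Fraction

def andness_from_mean(mean_value, n):
    return (n - (n + 1) * Fraction(mean_value)) / (n - 1)

def gl_andness_range(n):
    """(alpha_min, alpha_max): drastic disjunction (mean 1) and drastic conjunction (mean 0)."""
    return andness_from_mean(1, n), andness_from_mean(0, n)

def internal_external_volumes(n):
    """V_int lies between min (mean 1/(n+1)) and max (mean n/(n+1)); V_ext is the rest."""
    v_int = Fraction(n, n + 1) - Fraction(1, n + 1)
    return v_int, 1 - v_int

for n in (2, 3, 5, 10, 100):
    lo, hi = gl_andness_range(n)
    v_int, v_ext = internal_external_volumes(n)
    print("n =", n, "alpha range:", lo, "to", hi, "V_int =", v_int, "V_ext =", v_ext)
```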

Taking into account these properties, the graded logic aggregators should be classified as follows:

- Neutral aggregator:

  - The arithmetic mean: $\alpha = \omega = 1/2$

- Conjunctive aggregators: $1/2 < \alpha < n/(n-1)$

  - Regular conjunctive aggregators: $1/2 < \alpha \le 1$

  - Hyperconjunctive aggregators: $1 < \alpha < n/(n-1)$

- Disjunctive aggregators: $1/2 < \omega < n/(n-1)$

  - Regular disjunctive aggregators: $1/2 < \omega \le 1$

  - Hyperdisjunctive aggregators: $1 < \omega < n/(n-1)$
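The classification can be expressed as a small decision function. This is a sketch assuming the thresholds α = 1/2 (neutrality) and α = 1 (the border between regular and hyperconjunctive aggregators), with the disjunctive cases handled symmetrically through ω = 1 − α, and with the drastic limits −1/(n−1) and n/(n−1) excluded as in Definition 2.1.1. The function name is hypothetical.

```python
import math

def classify_aggregator(alpha, n):
    """Classify an n-input logic aggregator by its global andness alpha (orness = 1 - alpha)."""
    omega = 1.0 - alpha
    lower, upper = -1.0 / (n - 1), n / (n - 1)
    if not lower < alpha < upper:
        return "not a logic aggregator (drastic limit or outside the range)"
    if math.isclose(alpha, 0.5):
        return "neutral (arithmetic mean)"
    if alpha > 0.5:
        return "regular conjunctive" if alpha <= 1.0 else "hyperconjunctive"
    return "regular disjunctive" if omega <= 1.0 else "hyperdisjunctive"

# Andness values for n = 2: min, geometric mean, product t-norm, max, probabilistic sum
for alpha in (1.0, 2 / 3, 1.25, 0.0, -0.25):
    print(alpha, classify_aggregator(alpha, n=2))
```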

We assume that regular conjunctive and regular disjunctive aggregators are implemented as idempotent means. Hyperconjunctive and hyperdisjunctive aggregators are not idempotent. They can be implemented as t‐norms and t‐conorms, or as various other hyperconjunctive and hyperdisjunctive functions. Conjunctive models of simultaneity and disjunctive models of substitutability cover the maximum possible range of andness/orness $-1/(n-1) < \alpha, \omega < n/(n-1)$ shown in Fig. 2.1.2. The centroid of all logic aggregation models is the arithmetic mean, which is the model of conjunctive/disjunctive balance and neutrality.

Figure 2.1.2 Andness/orness‐based classification of logic aggregation functions used in the soft computing propositional logic.

In the area of evaluation logic, the idempotent regular conjunctive and regular disjunctive aggregators are much more frequent than the nonidempotent hyperconjunctive and hyperdisjunctive aggregators. For simplicity, in cases where only regular idempotent aggregators are used, we can omit the attribute regular, assuming that by default all conjunctive and disjunctive aggregators are regular.

2.1.4 Logic Bisection, Trisection, and Quadrisection of the Unit Hypercube

Let us now investigate the location of aggregators inside the unit hypercube $[0, 1]^n$. Logic aggregators are distributed in two main regions of the unit hypercube: conjunctive and disjunctive. The border between the conjunctive area and the disjunctive area is the neutrality plane (i.e., the arithmetic mean). The bisection of the unit hypercube for $n = 2$ is shown in Fig. 2.1.3. All conjunctive aggregators, used as models of simultaneity, are located under the neutrality plane. All disjunctive aggregators, serving as models of substitutability, are located in the region above the neutrality plane. Not surprisingly, for any $n \ge 2$, the volume of the conjunctive region ($V_c$) and the volume of the disjunctive region ($V_d$) are the same: $V_c = V_d = 1/2$.

Figure 2.1.3 Bisection of unit cube using the neutrality plane $z = (x + y)/2$.

Two important classic aggregators are the pure (or full) conjunction (C) and the pure (or full) disjunction (D), shown in Fig. 2.1.4. They provide a trisection of the unit hypercube: the resulting regions are idempotent logic aggregators (ILA), hyperconjunctive aggregators (CC), and hyperdisjunctive aggregators (DD). For example, nonidempotent t‐norms are located in the CC area and nonidempotent t‐conorms are located in the DD area. According to formulas (2.1.10) and (2.1.11), in the case $n = 2$, the resulting three regions have the same size $V_{CC} = V_{ILA} = V_{DD} = 1/3$. However, already in the case of three variables, the situation changes: $V_{CC} = V_{DD} = 1/4$ and $V_{ILA} = 1/2$. In other words, as the number of variables n grows, the region of ILA grows, and the regions of CC and DD shrink.

Figure 2.1.4 Trisection of the unit hypercube based on conjunction and disjunction.

If a logic aggregator A(x1, …, xn) has andness greater than 1 (or orness greater than 1), it must be nonidempotent. An informal proof of this statement for $n = 2$ follows directly from Definition 2.1.1 and the geometric properties of conjunction and disjunction shown in Fig. 2.1.4. If the andness is greater than 1, then the volume under the surface of aggregator A(x, y) must be less than the volume under the pure conjunction $x \wedge y = \min(x, y)$, which is 1/3. Obviously, there are only two ways to achieve such a property: either (1) the diagonal section function of such an aggregator must be below the idempotency line (i.e., $A(x, x) < x$ for some $x \in (0, 1)$), or (2) we keep the idempotency line $A(x, x) = x$ and bend the surface of A(x, y) so that it is below the planar wings of the pure conjunction. So, if $y = x$ then $A(x, y) = x$, but if we want to increase the andness of A(x, y), then for $y > x$ we must have $A(x, y) < x$. In other words, the aggregator A(x, y) must be decreasing in y, and it would no longer be nondecreasing. Since the cost of higher andness is the loss of nondecreasing monotonicity (and the loss of the status of aggregator), option (2) must be rejected, and the only way to increase the andness is to use option (1), i.e., to sacrifice idempotency and obtain nonidempotent monotonic aggregators of higher andness such as $A(x, y) = xy$. Using duality, a similar reasoning can be applied in the case of orness greater than 1, and in all cases where $n > 2$. Therefore, the full conjunction min(x1, …, xn) is the most conjunctive idempotent aggregator, and the full disjunction max(x1, …, xn) is the most disjunctive idempotent aggregator. All ILA are means, and all means can be used (more or less successfully) as ILA.

The most important logic decomposition of the unit hypercube is the quadrisection shown in Fig. 2.1.5. The four regions of the unit hypercube are denoted as follows:

- DD: nonidempotent hyperdisjunctive logic aggregators

- DI: regular disjunctive idempotent logic aggregators

- CI: regular conjunctive idempotent logic aggregators

- CC: nonidempotent hyperconjunctive logic aggregators

Figure 2.1.5 Quadrisection of the unit hypercube and four regions of logic aggregators.

The volumes of these regions are:

$$V_{CC} = V_{DD} = \frac{1}{n+1}, \qquad V_{CI} = V_{DI} = \frac{1}{2} - \frac{1}{n+1} = \frac{n-1}{2(n+1)}.$$

In the case of three variables, $V_{CC} = V_{CI} = V_{DI} = V_{DD} = 1/4$. For $n > 3$, the regions DI and CI continue to grow and the regions of hyperconjunctive and hyperdisjunctive aggregators shrink, as shown in the table in Fig. 2.1.5. These facts have practical consequences in evaluation. All hyperconjunctive and all hyperdisjunctive aggregators belong to the relatively small regions with volume $1/(n+1)$. Consequently, as n increases, these aggregators must be rather similar. For example, if ![]() then ![]() and for ![]() the values of $\prod x_i$ quickly come close to 0. This reduces the diversity of hyperconjunctive and hyperdisjunctive aggregators for all argument values except those close to 1.
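The volume formulas can be sanity-checked by sampling. The following sketch rests on several assumptions not stated explicitly in this section. The regions live in the (n+1)-dimensional unit cube of points (x1, …, xn, y), where y plays the role of the aggregator output. CC lies below min, DD lies above max, and CI and DI are separated by the arithmetic mean. The surface (min + max)/2, i.e., orness 1/2 in the sense of (2.1.3), also has mean value 1/2 and therefore yields the same volumes. The closed forms follow from the mean values of min, max, and the arithmetic mean of n independent uniform inputs: 1/(n+1), n/(n+1), and 1/2. The function names are hypothetical.

```python
import random


def exact_volumes(n: int) -> dict[str, float]:
    """Closed-form volumes, assuming CI and DI are split by the arithmetic mean."""
    cc = 1 / (n + 1)          # 0 <= y <= min
    ci = 0.5 - cc             # min <= y <= mean
    di = n / (n + 1) - 0.5    # mean <= y <= max
    dd = 1 - n / (n + 1)      # max <= y <= 1
    return {"CC": cc, "CI": ci, "DI": di, "DD": dd}


def monte_carlo_volumes(n: int, samples: int = 100_000, seed: int = 1) -> dict[str, float]:
    """Estimate the same volumes by sampling points (x1, ..., xn, y) in [0,1]^(n+1)."""
    rng = random.Random(seed)
    counts = {"CC": 0, "CI": 0, "DI": 0, "DD": 0}
    for _ in range(samples):
        x = [rng.random() for _ in range(n)]
        y = rng.random()
        lo, hi, mean = min(x), max(x), sum(x) / n
        if y <= lo:
            counts["CC"] += 1
        elif y <= mean:
            counts["CI"] += 1
        elif y <= hi:
            counts["DI"] += 1
        else:
            counts["DD"] += 1
    return {k: c / samples for k, c in counts.items()}


# Compare closed-form and sampled volumes; for n = 3 all four regions equal 1/4.
for n in (2, 3, 5, 10):
    exact = exact_volumes(n)
    est = monte_carlo_volumes(n, samples=20_000)
    print(n, {k: (round(exact[k], 3), round(est[k], 3)) for k in exact})
```

Increasing n makes the CC and DD entries shrink like 1/(n+1), while CI and DI approach 1/2 each.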

Properties of aggregators located in the four characteristic regions of the unit hypercube are investigated further in Sections 2.1.7 and 2.1.8.

2.1.5 Propositions, Value Statements, Graded Logic, and Fuzzy Logic

The meaning of logic is continually expanding to include more and more human mental activities. A classic definition, such as the one in Webster’s Encyclopedic Unabridged Dictionary, states that logic is the science that investigates the principles governing correct or reliable inference. All classic definitions of logic center on valid human reasoning and its use.

We are interested in evaluation reasoning as a human mental activity, and in logic as a discipline focused on mathematical models of observable human reasoning. Of course, many authors go far beyond this basic approach. One direction treats logic as an area for building abstract mathematical formalisms. Another treats logic as an engineering discipline concerned with hardware and software solutions that use basic logic components or deal with imprecision, uncertainty, and fuzziness.

Human communication is based on natural languages and consists of linguistic sentences. Some sentences are truth bearers, i.e., they can be true or false (or partially true and partially false). In logic, such sentences are called propositions or statements. Besides propositions, human communication also includes sentences that are not truth bearers, such as questions, requests, and commands. Many sentences, whether or not they are truth bearers, may include fuzzy expressions. For our purposes, linguistic sentences and the related logics can be classified as shown in Fig. 2.1.6.

Figure 2.1.6 Simplified classification of various types of propositions and logics

In propositional logic, we study methods for the correct use of propositions. Propositions can be crisp or graded, depending on the type of truth value they bear. Declarative sentences that express assertions that are either completely true (coded as 1) or completely false (coded as 0) are called crisp propositions or crisp statements. For example, the statement “a square has four sides” is a crisp proposition that is (completely) true. Classical logic, the propositional calculus from Aristotle to George Boole, deals with crisp propositions only and is used to decide whether a statement is true or false.

The statement “Concorde is an ideal passenger jet airliner” is not crisp, because it is neither completely true nor completely false. Of course, those who know history will agree that it is truer than the statement “Tu‐144 is an ideal passenger jet airliner.” Such statements assert the value of an evaluated object, and their degree of truth lies between false and true. They are called value statements.

Truth comes in degrees. Graded propositions use a degree of truth that is continuously adjustable from false to true and coded in the interval [0,1]. Such a degree of truth can also be interpreted as the degree of membership in a fuzzy set. Full membership corresponds to the degree of truth 1, and no membership corresponds to the degree of truth 0. If a logic uses graded truth and processes it with graded aggregators, we call it a graded logic (GL). It is therefore necessary to discuss the relationships between GL and fuzzy logic, which requires a definition of fuzzy logic. The originator of the concept of fuzzy logic is Lotfi Zadeh, and it is natural to accept his explanation of this concept. On 1/25/2013, after receiving the BBVA Award, Zadeh addressed the BISC community with a message entitled “What is fuzzy logic?” The message contains the following short but very precise definitions of fuzzy logic concepts, as seen by their originator:

The BBVA Award has rekindled discussions and debates regarding what fuzzy logic is and what it has to offer. The discussions and debates brought to the surface many misconceptions and misunderstandings. A major source of misunderstanding is rooted in the fact that fuzzy logic has two different meanings – fuzzy logic in a narrow sense, and fuzzy logic in a wide sense. Informally, narrow‐sense fuzzy logic is a logical system which is a generalization of multivalued logic. An important example of narrow‐sense fuzzy logic is fuzzy modal logic. In multivalued logic, truth is a matter of degree. A very important distinguishing feature of fuzzy logic is that in fuzzy logic everything is, or is allowed to be, a matter of degree. Furthermore, the degrees are allowed to be fuzzy. Wide‐sense fuzzy logic, call it FL, is much more than a logical system. Informally, FL is a precise system of reasoning and computation in which the objects of reasoning and computation are classes with unsharp (fuzzy) boundaries. The centerpiece of fuzzy logic is the concept of a fuzzy set. More generally, FL may be a system of such systems. Today, the term fuzzy logic, FL, is used preponderantly in its wide sense. This is the sense in which the term fuzzy logic is used in the sequel. It is important to note that when we talk about the impact of fuzzy logic, we are talking about the impact of FL. Intellectually, narrow‐sense fuzzy logic is an important part of FL, but volume‐wise it is a very small part. In fact, most applications of fuzzy logic involve no logic in its traditional sense.

According to Zadeh’s dual (narrow/wide) classification, wide‐sense fuzzy logic includes all intellectual descendants of the concept of fuzzy set.3 Narrow‐sense fuzzy logic includes logic systems where truth is a matter of degree. Fig. 2.1.6 adopts Zadeh’s classification. According to this classification, GL is a generalization of classical bivalent and multivalued logic. It is also a fundamental component and refinement of narrow‐sense fuzzy logic.

The link between classical Boolean logic and GL in Fig. 2.1.6 shows that GL is a successor, and a seamless generalization, of classical bivalent Boolean logic. All main properties of GL can be derived within the framework of classical logic, without explicitly using the concept of fuzzy set. On the other hand, the partial truth of a value statement can also be interpreted as a degree of membership of the evaluated object in a fuzzy set of maximum‐value objects. Consequently, we can link narrow‐sense fuzzy logic (FL/N) and GL. Relationships between GL and FL are discussed in several sections of this book, particularly in Chapter 2.2.

Fuzzy logic has specific areas that deal with various forms of imprecision and uncertainty. These include type‐1 fuzzy logic [WIK11b, ZAD89, ZAD94, KLI95], interval type‐2 fuzzy logic [WU11, MEN01, CAS08], and others.

In the area of evaluation, we are primarily interested in value statements, i.e., propositions that affirm or deny value based on the decision maker’s goals and requirements. Typical examples of value statements are “the area of home H completely satisfies all our needs,” “the location of airport A is perfectly suitable for city C,” and “student S deserves the highest grade.” All these statements can be partially true and partially false. The degree of truth of a value statement is a human percept, interpreted as the degree of satisfaction of the stakeholder’s requirements. In general, we define GL as follows.

According to this definition, GL is used for processing degrees of truth. The degree of truth of a value statement can be interpreted as the degree of suitability or the degree of preference. To have a compact notation we usually call these degrees simply “suitability” or “preference.” Of course, the degrees of truth are human percepts and GL can also be interpreted as a mathematical infrastructure for perceptual computing. So, the term suitability means the human percept of suitability. One of our main objectives is to compute the overall suitability of a complex object as a logic function of the suitability degrees of its components (attributes).

The most distinctive, and most frequently used, GL properties are the internality and idempotency of the models of graded simultaneity (partial conjunction) and substitutability (partial disjunction). In addition, GL uses interpolative aggregators [DUJ05c, DUJ14] to connect idempotent and nonidempotent logic aggregators seamlessly [DUJ16a]. In this way it covers all regions of the unit hypercube (see Section 2.1.7 and Chapter 2.4).

Internality holds in all cases where the overall suitability of a complex object can be neither greater than the suitability of its best component nor less than the suitability of its worst component. Internality makes it possible to interpret means as logic functions located between the pure conjunction (the minimum function) and the pure disjunction (the maximum function). Based on this interpretation, the first form of graded (or generalized) conjunction/disjunction (GCD) was proposed in [DUJ73b]. GCD is a general logic function that provides a continuous transition from conjunction to disjunction through the selection of a desired conjunction degree (andness) or disjunction degree (orness). By making both conjunction and disjunction a matter of degree, and by using weights as degrees of importance of inputs, we made an explicit move toward a graded logic, where everything is a matter of degree.
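The GCD idea can be sketched with a weighted power mean. This is a common way to realize such an aggregator, but it is assumed here rather than taken from this section. The exponent r acts as the andness/orness control: r = -inf gives min, r = +inf gives max, and intermediate values give internal, idempotent means in between. The helper names `wpm` and `global_orness` are hypothetical. Orness is estimated with the mean-value form (E[M] - E[min]) / (E[max] - E[min]) over uniformly distributed inputs, which is one possible global counterpart of the local definition based on (2.1.3).

```python
import math
import random
from typing import Sequence


def wpm(x: Sequence[float], w: Sequence[float], r: float) -> float:
    """Weighted power mean (sum_i w_i * x_i**r) ** (1/r) of degrees of truth x_i in [0, 1].

    Assumes positive weights w_i that sum to 1. r = -inf gives min (full conjunction),
    r = 0 the weighted geometric mean, r = 1 the weighted arithmetic mean,
    and r = +inf gives max (full disjunction).
    """
    if math.isinf(r):
        return min(x) if r < 0 else max(x)
    if r <= 0 and any(xi == 0 for xi in x):
        # x_i**r is infinite (r < 0) or log(0) is undefined (r = 0): the mean collapses to 0
        return 0.0
    if r == 0:
        return math.exp(sum(wi * math.log(xi) for xi, wi in zip(x, w)))
    return sum(wi * xi ** r for xi, wi in zip(x, w)) ** (1 / r)


def global_orness(r: float, n: int = 2, samples: int = 50_000, seed: int = 7) -> float:
    """Monte Carlo estimate of (E[M] - E[min]) / (E[max] - E[min]) for equal weights,
    with inputs uniform in [0, 1]^n; E[min] = 1/(n+1) and E[max] = n/(n+1) are exact."""
    rng = random.Random(seed)
    w = [1 / n] * n
    total = sum(wpm([rng.random() for _ in range(n)], w, r) for _ in range(samples))
    e_min, e_max = 1 / (n + 1), n / (n + 1)
    return (total / samples - e_min) / (e_max - e_min)


# Raising r moves the aggregator from conjunction toward disjunction.
for r in (-math.inf, -1.0, 0.0, 1.0, 3.0, math.inf):
    print(f"r = {r:>5}: wpm = {wpm([0.9, 0.4], [0.5, 0.5], r):.3f}, "
          f"orness ~ {global_orness(r):.2f}")
```

Because every value of r yields a mean, the result always stays between the worst and best input (internality). The weights shift the result toward the more important inputs without leaving that interval.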

The relationships between classical bivalent Boolean logic (BL), graded logic (GL), fuzzy logic in the narrow sense (FL/N), and fuzzy logic in the wide sense (FL/W) form a chain of subsets: $\mathrm{BL} \subset \mathrm{GL} \subset \mathrm{FL/N} \subset \mathrm{FL/W}$. BL is primarily a crisp bivalent propositional calculus. GL includes BL plus graded truth, graded idempotent conjunction/disjunction, weight‐based semantics, and (less frequently) nonidempotent hyperconjunction/hyperdisjunction (Section 2.1.7). GL also supports all nonidempotent basic logic functions, e.g., partial implication, partial equivalence, partial nand, partial nor, and partial exclusive or. All such functions are “partial” in the sense that they use adjustable degrees of similarity or proximity to their “crisp” equivalents in traditional bivalent logic. FL/N includes GL plus a variety of forms of nonidempotent conjunction/disjunction and other generalizations of multivalued logic. FL/W includes FL/N plus a wide variety of models of reasoning and computation based on the concept of fuzzy set.

Because GL lies between BL and FL/N, it can be interpreted as a descendant of both classical bivalent logic and fuzzy logic. These two interpretations are complementary. In the logic interpretation, all variables represent suitability, i.e., the degrees of truth of value statements that assert the highest values of evaluated objects or their components. In the fuzzy interpretation, the variables represent the degrees of membership in the corresponding fuzzy sets of highest‐value objects. With respect to bivalent logic, GL is a direct, natural, and seamless generalization of BL: at the vertices $\{0,1\}^n$ of the hypercube $[0,1]^n$ we have GL = BL. With respect to fuzzy logic, GL is a special case even under the narrow‐sense definition of fuzzy logic, because GL excludes various fuzzy concepts and techniques that are not related to logic. Since GL is primarily a propositional calculus, it is more convenient and more natural to interpret it as a weighted compensative generalization of classical bivalent Boolean logic. The alternative, treating GL as a relatively narrow subarea within a heterogeneous set of models of reasoning and computation derived from the concept of fuzzy set, is less natural. However, contacts between GL and the area of fuzzy sets are frequent and natural. In particular, GL provides the logic infrastructure wherever fuzzy models need to include human percepts and human logic reasoning.
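The statement that GL coincides with BL at the vertices of the hypercube can be checked directly for the basic functions. This minimal sketch uses the pure conjunction (min), the pure disjunction (max), and the negation 1 - x; the choice of 1 - x as the negation is an assumption here. The sketch verifies that these functions reproduce Boolean AND, OR, and NOT, and involution, on every point of {0, 1}^n. The function names are hypothetical.

```python
from itertools import product


def g_and(*xs: float) -> float:
    """Pure conjunction of degrees of truth: min."""
    return min(xs)


def g_or(*xs: float) -> float:
    """Pure disjunction of degrees of truth: max."""
    return max(xs)


def g_not(x: float) -> float:
    """Standard negation 1 - x."""
    return 1 - x


def matches_boolean_logic(n: int) -> bool:
    """True if min, max, and 1 - x reproduce Boolean AND, OR, and NOT on {0, 1}^n."""
    for v in product((0, 1), repeat=n):
        if g_and(*v) != int(all(v)) or g_or(*v) != int(any(v)):
            return False
        if any(g_not(x) != int(not x) or g_not(g_not(x)) != x for x in v):
            return False
    return True


print(all(matches_boolean_logic(n) for n in range(1, 6)))  # True
# Inside the hypercube the same functions yield intermediate degrees of truth.
print(g_and(0.75, 0.5), g_or(0.75, 0.5), g_not(0.75))  # 0.5 0.75 0.25
```

The vertices carry the classical truth tables. The interior of [0, 1]^n is where graded truth, and the graded aggregators between min and max, become meaningful.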

2.1.6 Classical Bivalent Boolean Logic

Omne enuntiatum aut verum aut falsum est.

(Every statement is either true or false.)

—Marcus Tullius Cicero, De Fato, 44 BC

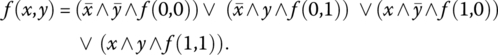

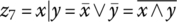

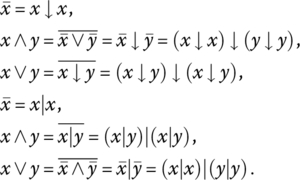

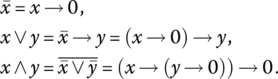

In this section we summarize a classical bivalent propositional calculus with crisp truth values formalized as a Boolean algebra. Let us assume that the only logic values are true (numerically coded as 1) and false (numerically coded as 0), and all logic variables, as well as andness and orness,4 belong to the set {0,1}. The basic logic functions are the pure conjunction (and function) $x \wedge y = \min(x, y)$, the pure disjunction (or function) $x \vee y = \max(x, y)$, and negation $\bar{x} = 1 - x$. Obviously, $\bar{\bar{x}} = x$ (involution), ![]() , ![]() , ![]() . Under these assumptions a Boolean function of n variables