My speed is my greatest asset. (Peter Bondra)

Software can possess a broad array of useful features. Certain applications, such as Microsoft Office, include features that can help a user accomplish a nearly infinite number of tasks, many of which a typical user might never discover. Other applications, like Notepad, may contain only the features necessary to accomplish a few simple tasks, which might leave certain users wanting more functionality. In either case, the goal of the software is the same: to provide functionality that helps users accomplish a particular set of tasks. If we also consider how quickly users are able to accomplish that set of tasks, then performance, as the Peter Bondra quote suggests, should also be considered an important feature of a software application.

As application developers, we spend considerable effort planning and building the key features of our applications. These features are cohesive, enhance the quality of our product, and implicitly improve overall functionality. Yet performance, one of the most important aspects of every feature in a software product, is often overlooked or considered late in product design and development. This can leave key features performing inadequately and lower the overall quality of the product. Performance is critical to the quality of any application but especially to Web applications. In contrast to desktop applications, Web applications depend on the transmission of data and application assets over a worldwide network. This presents architectural and quality challenges that Web application developers must mitigate during the design and construction of their applications.

Web application quality extends beyond the visible bugs that end users encounter when using the application. Network latency, payload size, and application architecture can all degrade the performance of online applications. Therefore, performance considerations should be part of every Web application design. Deferring these considerations until late in the development cycle can create significant code churn after performance bugs are discovered. Application developers must understand how their design choices can adversely affect performance, and they can mitigate the risk of releasing a poorly performing Web application by applying the best practices discussed in this chapter.

Throughout the remainder of this chapter, we will evaluate some common problems that can negatively affect the performance of Web-based software, and we will discuss several practices that can be applied to proactively address performance bottlenecks. Although this chapter will not focus on techniques that are unique to managed code development, it will discuss several ways to apply performance best practices to your application development life cycle in order to increase the overall quality of your Web-based application, as well as the satisfaction of your application’s users.

Web-based applications that rely on interactions between servers and a user’s Web browser inherently require certain design considerations to address the performance challenges present in the application execution environment. These factors are not specific to Web applications developed using ASP.NET; they also affect application developers who utilize Web development programming models like PHP or Java. They include the latency or quality of the connection between the client and server, the payload size of the data being transmitted, as well as poorly optimized application code, to name a few. Let’s explore each of these in greater depth.

To understand the impact of network latency and throughput on your Web application, we must first understand the general performance and throughput of the Internet in key regions around the world. This may prove to be an eye-opening experience for many Web application developers. The data in Table 4-1 illustrates how end users are affected by the network topology of the Internet. The data in this table was gathered during daily ping tests conducted from January through September 2008 and provides a breakdown of the average round-trip time (measured in milliseconds [ms]) and average packet loss for users in each specified region. Let us briefly review the definitions of each of these metrics before further evaluating the data in the table.

Table 4-1. Internet Network Statistics by Region

| Region | Average Round-Trip Time (ms) | Average Packet Loss (%) |
|---|---:|---:|
| Africa | 469 | 3.70 |
| Australia | 204 | 0.23 |
| Balkans | 202 | 0.74 |
| Central Asia | 597 | 1.24 |
| East Asia | 192 | 0.68 |
| Europe | 178 | 0.48 |
| Latin America | 270 | 1.15 |
| Middle East | 279 | 0.87 |
| North America | 59 | 0.09 |
| Russia | 243 | 2.48 |
| South Asia | 424 | 1.89 |
| South East Asia | 254 | 0.03 |

Average round-trip time. This refers to the average amount of time required for a 100-byte packet of data to complete a network round trip. The values in Table 4-1 are computed from the round-trip times measured in daily tests conducted from January through September 2008.

Average packet loss. This metric evaluates the reliability of a connection by measuring the percentage of packets lost during the network round trip of a 100-byte packet of data. Like the average round-trip time, the average packet loss is computed from the results of daily tests conducted from January through September 2008.

Note

This data is based on the results of tests conducted between Stanford University in Northern California and network end points in 27 countries worldwide. Data obtained from each test is subsequently averaged across all end points within a particular region. The complete data set can be obtained from http://www-iepm.slac.stanford.edu/. Data is also available from this site in a summarized, percentile-based format, which shows what users at the 25th, 50th, 75th, 90th, and 95th percentiles are likely to experience in terms of average round-trip time and packet loss. At Microsoft, teams generally assume that most of their users will experience connectivity quality at the 75th percentile or better.

There are a few key points to take away from the data presented in this table:

The general throughput of data on the Internet varies according to region. This means that even if your Web application is available 100 percent of the time and performing perfectly, an end user in Asia might be affected by suboptimal network conditions such as high latency or packet loss and not be able to access your application easily. Although this seems to be a situation beyond an application developer’s control, several mitigation strategies do exist and will be discussed later in this chapter. That said, it is definitely useful to understand the general network behavior across the Internet when you consider what an end user experiences when using your Web application.

We also notice that the average round-trip time for a piece of data to travel from a point within North America to a point within another region is quite high in certain cases. For example, a single Transmission Control Protocol (TCP) packet of data traveling on the Internet between North America and Central Asia has an average round-trip time of 597 ms. This means that each individual file requested by a Web application incurs at least one round trip, or roughly 597 ms of latency, during transfer between the server and the client. Thus, as the number of required requests increases, the performance of the application gets worse. Fortunately, the number of round trips between the client and the server is something every Web application developer can influence.
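To make the arithmetic concrete, the following Python sketch multiplies the number of requests by the average round-trip times from Table 4-1. It assumes the worst case of requests issued strictly one after another, each costing exactly one round trip, and it ignores connection setup, DNS, bandwidth, and server time; the function name `serial_latency_ms` is hypothetical, and the numbers are rough illustrations rather than measurements.

```python
# Average round-trip times (ms) taken from Table 4-1.
AVG_RTT_MS = {
    "North America": 59,
    "Europe": 178,
    "Central Asia": 597,
}


def serial_latency_ms(num_requests: int, rtt_ms: float) -> float:
    """Latency contributed by round trips alone, assuming each resource
    costs one full round trip and requests are made one after another.
    Connection setup, DNS, bandwidth, and server time are ignored."""
    if num_requests < 0:
        raise ValueError("num_requests must be non-negative")
    return num_requests * rtt_ms


for region, rtt in AVG_RTT_MS.items():
    for n in (1, 10, 30):
        print(f"{region:>14}: {n:2d} requests -> {serial_latency_ms(n, rtt):6.0f} ms")
```

Because the model is linear, ten resources fetched from Central Asia already cost almost six seconds in round trips alone, which is why reducing the number of requests pays off most for distant users.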

In conjunction with average round-trip time, packet loss also increases significantly for users outside North America. Both of these factors are related to general throughput on the network, so they usually go hand-in-hand. As packet loss increases, additional round trips are required between the browser and the server to retransmit the packets of data lost in transmission. Hence, higher packet loss means decreased performance of your Web application. Even though developers cannot control the amount of packet loss a user is likely to experience, they can apply certain tactics to help mitigate its effects, such as decreasing payload size, which will be discussed later in this chapter.
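One way to see why payload size matters under packet loss is to estimate how likely a transfer is to hit at least one loss. The sketch below assumes, as a simplification, that every packet is lost independently with the average probability from Table 4-1; real TCP loss recovery (retransmission timers, fast retransmit, bursty losses) is more complicated, and `prob_any_loss` is a hypothetical helper name.

```python
# Average packet loss (%) taken from Table 4-1.
AVG_LOSS_PCT = {"North America": 0.09, "Russia": 2.48, "Africa": 3.70}


def prob_any_loss(num_packets: int, loss_pct: float) -> float:
    """Probability that at least one of num_packets is lost, assuming
    each packet is lost independently with the same probability."""
    p = loss_pct / 100.0
    return 1.0 - (1.0 - p) ** num_packets


for region, loss in AVG_LOSS_PCT.items():
    for packets in (10, 100):
        print(f"{region:>13}: {packets:3d} packets -> {prob_any_loss(packets, loss):.1%}")
```

Under this model a 100-packet transfer from Africa almost certainly needs at least one retransmission (about 97.7 percent), while the same transfer within North America does so less than 10 percent of the time; sending fewer packets lowers the odds everywhere.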

The term "payload size" loosely refers to the size of data being transmitted over the network to render the requested page. This could include the dynamic ASPX page content as well as static files such as JavaScript files, images, or cascading style sheets (CSS). The number of HTTP requests required to retrieve the data is referred to as the "network round trips." Web application performance is most negatively affected by a combination of the payload size and the required round trips between the browser and the server. Let’s take a look at a few examples of how typical Web application designs might contribute to poorly performing Web applications.
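A first-order way to combine the two factors is to add the time spent waiting on round trips to the time spent pushing the payload through the connection. The sketch below assumes a hypothetical page with 20 round trips and a 500 KB payload over an assumed 2 Mbit/s connection; it ignores parallel connections, TCP slow start, packet loss, and rendering, so it is only a rough model, and `estimated_load_time_ms` is a hypothetical name.

```python
def estimated_load_time_ms(round_trips: int, rtt_ms: float,
                           payload_bytes: int, bandwidth_bps: float) -> float:
    """First-order estimate: round-trip latency plus payload transfer time.
    Ignores parallel connections, TCP slow start, loss, and rendering."""
    latency_ms = round_trips * rtt_ms
    transfer_ms = payload_bytes * 8 / bandwidth_bps * 1000
    return latency_ms + transfer_ms


# Hypothetical page: 20 round trips, 500 KB payload, 2 Mbit/s connection.
for region, rtt in {"North America": 59, "Central Asia": 597}.items():
    print(region, round(estimated_load_time_ms(20, rtt, 500_000, 2_000_000)), "ms")
```

With these assumed numbers, the page takes roughly 3.2 seconds in North America and 13.9 seconds in Central Asia. The transfer term is identical in both cases, so the difference comes entirely from round trips; shrinking the payload helps both users, while cutting round trips helps the distant user far more.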

Compressing static and dynamic files is not necessarily part of your Web server’s default configuration. Compression is strongly recommended for Web applications that use large amounts of bandwidth or when you want to use bandwidth more effectively. Many Web application developers might not be aware of this feature and could be unknowingly sending larger amounts of data to the client browser, thereby increasing the size of the payload. When enabled, compression can significantly reduce the size of the file being transmitted to the client browser. Compressing dynamic content such as .aspx files requires additional CPU time on every response. Therefore, if the CPU usage on your Web servers is already high, enabling Internet Information Services (IIS) dynamic compression is not recommended. However, enabling IIS static compression on file types such as JavaScript, CSS, or HTML files adds little CPU overhead, because each static file needs to be compressed only once, and is therefore highly recommended. Content Delivery Network (CDN) service providers generally include compression in their service offerings, so hosting static files with a CDN provides this benefit as well.
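To get a feel for how much compression can save on a text asset, the following sketch uses Python's standard `gzip` module, since gzip is a common HTTP content encoding. The stylesheet is a made-up, deliberately repetitive stand-in, so its compression ratio is more favorable than most real files; measure your own assets to see realistic numbers.

```python
import gzip

# Hypothetical, highly repetitive stand-in for a static CSS file.
css = ".button { color: #333; padding: 4px 8px; border: 1px solid #ccc; }\n" * 200
raw = css.encode("utf-8")
compressed = gzip.compress(raw)

print(f"original:   {len(raw):6d} bytes")
print(f"compressed: {len(compressed):6d} bytes")
print(f"ratio:      {len(compressed) / len(raw):.1%}")

# Decompressing restores the original bytes exactly, as a browser
# does when the response carries Content-Encoding: gzip.
assert gzip.decompress(compressed) == raw
```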

Most Web application developers naturally use references to individual images or iconography throughout their code. This is how most of us were taught to write our HTML. The reality is that each of these files, no matter how small we make them, results in a separate round trip between the browser and the server. Consider how bad this might be for image-rich Web pages where rendering a single page could generate dozens of round trips to the server!
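A quick way to see how many extra requests a page triggers is to count the resources it references. The sketch below uses Python's standard `html.parser` module to collect `<img src>`, `<script src>`, and `<link rel="stylesheet" href>` references from a small hypothetical page; it is an approximation, since it ignores resources referenced from CSS (such as background images) or loaded by script.

```python
from html.parser import HTMLParser


class ResourceCounter(HTMLParser):
    """Collects URLs that the browser fetches with separate requests:
    <img src>, <script src>, and <link rel="stylesheet" href>."""

    def __init__(self) -> None:
        super().__init__()
        self.resources: list[str] = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag in ("img", "script") and a.get("src"):
            self.resources.append(a["src"])
        elif tag == "link" and a.get("rel") == "stylesheet" and a.get("href"):
            self.resources.append(a["href"])


# Hypothetical page with four small icons referenced individually.
page = """
<html><head>
  <link rel="stylesheet" href="/css/site.css">
  <script src="/js/app.js"></script>
</head><body>
  <img src="/img/icon-home.png"><img src="/img/icon-mail.png">
  <img src="/img/icon-search.png"><img src="/img/icon-help.png">
</body></html>
"""

counter = ResourceCounter()
counter.feed(page)
images = [r for r in counter.resources if r.startswith("/img/")]
print(f"{len(counter.resources)} extra requests, {len(images)} of them icons")
```

Even this tiny page needs six requests beyond the HTML itself, and four of them exist only to fetch small icons.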

These are just two simple examples of how typical Web applications could be delivering unnecessarily large payloads as well as initiating numerous round trips. The challenge facing Web application developers is both to reduce the amount of data transmitted between the server and the client and to optimize their Web application’s architecture to minimize the number of network round trips. Fortunately, a number of tactics can help Web application developers accomplish this, all of which we’ll explore later in this chapter. For now, we will continue reviewing some of the more common performance problems facing Web application developers.

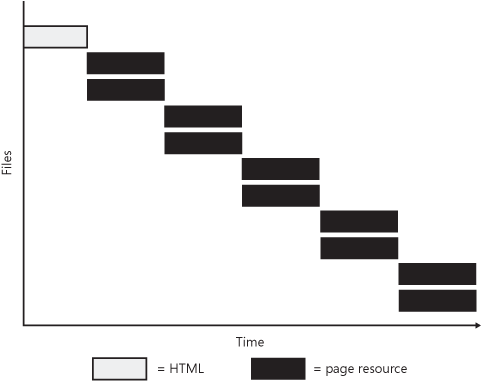

We’ve discussed how an individual HTTP request is made for each resource (such as JavaScript files, CSS, or images) within a Web page, which can negatively affect the rendering performance of the page. However, it may come as a surprise to learn that the HTTP/1.1 specification suggests that browsers should download only two resources at a time in parallel for a given hostname. This implies that, if all content necessary to render a page originates from the same hostname (e.g., http://www.live.com), the browser will retrieve only two resources at a time, using only two TCP connections between the client and the server. This behavior is illustrated in Figure 4-1. Although the limit is configurable in some browsers and is ignored by newer browsers such as Internet Explorer 8, it very likely affects users of your Web application.
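The effect of a per-hostname connection limit can be approximated with a small simulation. In the sketch below, each resource is assigned, in document order, to whichever connection becomes free first; the twelve resources of 200 ms each are hypothetical, real resources vary in size, and the higher limit of six is included only as a point of comparison. The function name `page_download_time_ms` is hypothetical.

```python
import heapq


def page_download_time_ms(durations_ms, max_connections=2):
    """Simulate downloading resources from one hostname when the browser
    opens at most max_connections at a time. Each resource, in order,
    starts on the connection that frees up first. Returns the time at
    which the last resource finishes."""
    if max_connections < 1:
        raise ValueError("max_connections must be at least 1")
    free_at = [0.0] * max_connections  # time at which each connection is free
    heapq.heapify(free_at)
    finish = 0.0
    for duration in durations_ms:
        start = heapq.heappop(free_at)
        end = start + duration
        heapq.heappush(free_at, end)
        finish = max(finish, end)
    return finish


# Twelve hypothetical resources, each assumed to take 200 ms to download.
resources = [200] * 12
for limit in (2, 6):
    print(f"{limit} connections: {page_download_time_ms(resources, limit):.0f} ms")
```

With two connections the resources download in six sequential "waves" (1,200 ms), whereas six connections need only two waves (400 ms). Because the limit applies per hostname, spreading resources across hostnames raises the effective number of connections, at the cost of additional DNS lookups.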

Note

Even though Internet Explorer allows the number of simultaneous download connections to be configured, typical users are unlikely to change it. For more information on how to change this setting in Internet Explorer, see the following Microsoft Knowledge Base article: http://support.microsoft.com/?kbid=282402.

Note

Several figures in this chapter are intended to illustrate how parallel downloading of resources theoretically works in a Web browser. In reality, resources are often of varying sizes and therefore download in a less structured way than illustrated here. To understand this behavior in greater detail, download HttpWatch (http://www.httpwatch.com) and run it against your Web application.

As you probably realize, the TCP connection limitation could have profoundly negative effects on performance for Web applications that require a good deal of content to be downloaded. It is critically important for Web application developers to consider this limitation and properly address this phenomenon in the design of their applications. We will evaluate mitigation strategies for this later in this chapter.

Performance challenges for Web application developers are not solely related to network topology or data transmission behavior between client browsers and Web servers. Connectivity and data transmission play a big role in the performance of Web applications, but so do application architecture and coding. Oftentimes Web application developers will choose a particular implementation within their application architecture or code without fully realizing the impact of the decision on the users of the application. Some examples of common implementations that have a negative impact on Web application performance include the overuse of URL redirects, excessive Domain Name System (DNS) lookups, excessive use of page resources, and poor organization of scripts within a Web page. Let us review each of these in greater detail.

URL redirects are typically used by developers to route a user from one URL to another. Common examples include the <meta http-equiv="refresh" content="0; url=http://contoso.com"> directive in HTML and the Response.Redirect("http://fabrikam.com") method in ASP.NET. While redirects are often necessary, each one delays the start of the page load until the redirect is complete. This could be an acceptable performance degradation in some instances, but, if overused, redirects can noticeably degrade the performance of your Web application’s pages.
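Each redirect costs at least one extra round trip, because the browser must receive the redirect response before it can request the next URL. The sketch below follows a chain of hypothetical redirect rules and multiplies the number of hops by the European average round-trip time from Table 4-1; any additional DNS lookups or new connections the redirects require would add more on top. The helper `redirect_chain` and the rules are hypothetical.

```python
def redirect_chain(start_url: str, redirects: dict[str, str],
                   max_hops: int = 10) -> list[str]:
    """Follow a mapping of URL -> redirect target and return every URL
    visited, ending with the page that actually loads."""
    chain = [start_url]
    while chain[-1] in redirects:
        if len(chain) > max_hops:
            raise RuntimeError("too many redirects")
        chain.append(redirects[chain[-1]])
    return chain


# Hypothetical redirect rules for illustration.
rules = {
    "http://contoso.com/": "http://www.contoso.com/",
    "http://www.contoso.com/": "http://www.contoso.com/home/",
}
chain = redirect_chain("http://contoso.com/", rules)
hops = len(chain) - 1
rtt_ms = 178  # Europe average round-trip time from Table 4-1
print(" -> ".join(chain))
print(f"{hops} redirects add at least {hops * rtt_ms} ms before the page starts loading")
```

Pointing links directly at the final URL removes these hops entirely.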

DNS lookups are generally the result of the Web browser being unable to locate the IP address for a given hostname in either its cache or the operating system’s cache. If the IP address of a particular hostname is not found in either cache, a lookup against an Internet DNS server will be performed. In the context of a Web page, the number of lookups required can be as high as the number of unique hostnames, such as those in http://www.contoso.com or http://images.contoso.com, found in any of the page’s JavaScript, CSS, or inline code required to render that page. Therefore, multiple DNS lookups could degrade the performance of your Web application’s pages by up to n times the number of milliseconds required to resolve an IP address through DNS, where n is the number of unique hostnames found in any of the page’s JavaScript, CSS, or inline code that require a DNS lookup. While there are exceptions to this rule that we will explore when discussing the use of multiple hostnames to increase parallel downloading, it is generally not advisable to include more than a few unique hostnames within your Web applications.
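To estimate the worst-case DNS cost of a page, you can count the distinct hostnames among its resource URLs. The sketch below uses `urllib.parse.urlparse` from the Python standard library on a hypothetical list of resources; the 50 ms per uncached lookup is an assumed figure for illustration, not a measurement, and relative URLs are skipped because they resolve to the page's own host.

```python
from urllib.parse import urlparse


def unique_hostnames(urls):
    """Return the distinct hostnames referenced by absolute resource URLs.
    Relative URLs resolve to the page's own host and are skipped."""
    hosts = set()
    for url in urls:
        host = urlparse(url).hostname
        if host:
            hosts.add(host)
    return hosts


# Hypothetical resources referenced by a single page.
resource_urls = [
    "http://www.contoso.com/default.aspx",
    "http://images.contoso.com/logo.png",
    "http://images.contoso.com/banner.png",
    "http://scripts.fabrikam.com/widget.js",
    "/css/site.css",
]
hosts = unique_hostnames(resource_urls)
lookup_ms = 50  # assumed cost of one uncached DNS lookup
print(sorted(hosts))
print(f"worst case: {len(hosts)} lookups x {lookup_ms} ms = {len(hosts) * lookup_ms} ms")
```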

Web application developers may not have given a lot of thought to how code organization affects performance of Web applications. In many cases, developers choose to separate JavaScript code from CSS for maintainability. While this practice generally makes sense for code organization, it actually hurts performance because it increases the number of HTTP requests required to retrieve the page. In other cases, the location of script and CSS within the structure of the HTML page can have a negative effect on gradual or progressive page rendering and download parallelization.
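A common way to keep separate source files for maintainability while avoiding extra requests is to combine files of the same type at build or deploy time. The sketch below concatenates several hypothetical JavaScript sources into one bundle; the file names and the helper `bundle_scripts` are assumptions, and production build tools also handle minification, ordering dependencies, and cache-busting, which this sketch does not.

```python
def bundle_scripts(sources: dict[str, str]) -> str:
    """Concatenate script files, in insertion order, into a single bundle.

    A comment marks where each file starts, and a lone semicolon after
    each file keeps a missing trailing semicolon in one file from
    running into the first statement of the next.
    """
    parts = []
    for name, code in sources.items():
        parts.append(f"/* {name} */\n{code.rstrip()}\n;")
    return "\n".join(parts) + "\n"


# Hypothetical script files that a page would otherwise request separately.
scripts = {
    "menu.js": "function openMenu() { document.body.classList.add('menu-open'); }",
    "validation.js": "var isEmail = function (s) { return s.indexOf('@') > 0; }",
    "tracking.js": "window.pageLoaded = Date.now()",
}
bundle = bundle_scripts(scripts)
print(f"requests before: {len(scripts)}, after: 1")
print(bundle)
```

The page then references a single script file instead of three, while developers continue to edit the smaller files individually.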

It is important for Web application developers to understand how these simple choices can affect their Web application’s performance, so they can take the appropriate mitigation steps when designing their applications. Let’s review an example of how to analyze Web page performance and begin discussing mitigation strategies for the common problems we have been discussing thus far.