Chapter 2. Traditional Transmission Media

Transmission media are the physical pathways that connect computers, other devices, and people on a network—the highways and byways that comprise the information superhighway. Each transmission medium requires specialized network hardware that has to be compatible with that medium. You have probably heard terms such as Layer 1, Layer 2, and so on. These refer to the OSI reference model, which defines network hardware and services in terms of the functions they perform. (The OSI reference model is discussed in detail in Chapter 5, “Data Communications Basics.”) Transmission media operate at Layer 1 of the OSI model: They encompass the physical entity and describe the types of highways on which voice and data can travel.

It would be convenient to construct a network of only one medium. But that is impractical for anything but an extremely small network. In general, networks use combinations of media types. There are three main categories of media types:

- Copper cable—Types of cable include unshielded twisted-pair (UTP), shielded twisted-pair (STP), and coaxial cable. Copper-based cables are inexpensive and easy to work with compared to fiber-optic cables, but as you’ll learn when we get into the specifics, a major disadvantage of cable is that it offers a rather limited spectrum that cannot handle the advanced applications of the future, such as teleimmersion and virtual reality.

- Wireless—Wireless media include radio frequencies, microwave, satellite, and infrared. Deployment of wireless media is faster and less costly than deployment of cable, particularly where there is little or no existing infrastructure (e.g., Africa, Asia-Pacific, Latin America, eastern and central Europe). Wireless is also useful where environmental circumstances make it impossible or cost-prohibitive to use cable (e.g., in the Amazon, in the Empty Quarter in Saudi Arabia, on oil rigs).

  There are a few disadvantages associated with wireless, however. Historically, wireless solutions support much lower data rates than do wired solutions, although with new developments in wireless broadband, that is becoming less of an issue (see Part IV, “Wireless Communications”). Wireless is also greatly affected by external impairments, such as the impact of adverse weather, so reliability can be difficult to guarantee. However, new developments in laser-based communications—such as virtual fiber—can improve this situation. (Virtual fiber is discussed in Chapter 15, “WMANs, WLANs, and WPANs.”) Of course, one of the biggest concerns with wireless is security: Data must be secured in order to ensure privacy.

- Fiber optics—Fiber offers enormous bandwidth, immunity to many types of interference and noise, and improved security. Therefore, fiber provides very clear communications and a relatively noise-free environment. The downside of fiber is that it is costly to purchase and deploy because it requires specialized equipment and techniques.

No one of the three categories of media types can be considered best. Each is useful in different situations, and most networks need to take advantage of a number of media types. When building a new network or upgrading an old one, the choice of media type should be based on the prevailing conditions.

The saying “Don’t put all your eggs in one basket” can be applied to networking. Having multiple pathways of fibers in and out of a building is not always enough. Diversity—terrestrial and nonterrestrial facilities combined—is important because in disastrous events such as earthquakes, floods, and fires, if one alternative is completely disabled, another medium might still work. As discussed in this chapter, you can assess various parameters to determine which media type is most appropriate for a given application.

This chapter focuses on the five traditional transmission media formats: twisted-pair copper used for analog voice telephony, coaxial cable, microwave and satellite in the context of traditional carrier and enterprise applications, and fiber optics. (Contemporary transmission solutions are discussed in subsequent chapters, including Chapter 11, “Optical Networking,” and Chapter 16, “Emerging Wireless Applications.”) Table 2.1 provides a quick comparison of some of the important characteristics of these five media types. Note that recent developments in broadband alternatives, including twisted-pair options such as DSL and wireless broadband, constitute a new categorization of media.

Table 2.1 Traditional Transmission Media Characteristics

The frequency spectrum in which a medium operates directly relates to the bit rate that can be obtained with that medium. You can see in Table 2.1 that traditional twisted-pair affords the lowest bandwidth (i.e., the difference between the highest and lowest frequencies supported), a maximum of 1MHz, whereas fiber optics affords the greatest bandwidth, some 75THz.

Another important characteristic is a medium’s susceptibility to noise and the subsequent error rate. Again, twisted-pair suffers from many impairments. Coax and fiber have fewer impairments than twisted-pair because of how the cable is constructed, and fiber suffers the least because it is not affected by electrical interference. The error rate of wireless depends on the prevailing conditions, especially weather and the presence of obstacles, such as foliage and buildings.

Yet another characteristic you need to evaluate is the distance required between repeaters. This is a major cost issue for those constructing and operating networks. In the case of twisted-pair deployed as an analog telephone channel, the distance between amplifiers is roughly 1.1 miles (1.8 km). When twisted-pair is used in digital mode, the repeater spacing drops to about 1,800 feet (550 m). With twisted-pair, a great many network elements must be installed and subsequently maintained over their lifetime, and they can be potential sources of trouble in the network. Coax offers about a 25% increase in the distance between repeaters over twisted-pair. With microwave and satellite, the distance between repeaters depends on the frequency bands in which you’re operating and the orbits in which the satellites travel. In the area of fiber, new innovations appear every three to four months, and, as discussed later in this chapter, some new developments promise distances as great as 4,000 miles (6,400 km) between repeaters or amplifiers in the network.
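The cost impact of repeater spacing can be illustrated with a little arithmetic. The following is a minimal sketch, not a planning tool: the 100 km route length is an arbitrary assumption chosen for illustration, the function name is hypothetical, and the spacings are the twisted-pair figures quoted above.

```python
import math

def repeaters_needed(route_km: float, spacing_km: float) -> int:
    """Count the intermediate repeaters (or amplifiers) needed so that
    no span on the route is longer than spacing_km."""
    if route_km <= 0 or spacing_km <= 0:
        raise ValueError("route length and spacing must be positive")
    spans = math.ceil(route_km / spacing_km)
    # A route made of N spans needs N - 1 devices between its endpoints.
    return max(spans - 1, 0)

# Spacings taken from the text; the route length is an assumed example.
SPACING_KM = {
    "twisted-pair (analog)": 1.8,
    "twisted-pair (digital)": 0.55,
}
ROUTE_KM = 100.0

for medium, spacing in SPACING_KM.items():
    count = repeaters_needed(ROUTE_KM, spacing)
    print(f"{medium}: {count} repeaters over {ROUTE_KM:g} km")
```

Under these assumptions, the digital twisted-pair route needs more than three times as many network elements as the analog one, each of which must be installed, powered, and maintained.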

Security is another important characteristic. There is no such thing as complete security, and no transmission medium in and of itself can provide security. But using encryption and authentication helps ensure security. (Chapter 9, “IP Services,” discusses security in more detail.) Also, different media types have different characteristics that enable rapid intrusion as well as characteristics that enable better detection of intrusion. For example, with fiber, an optical time domain reflectometer (OTDR) can be used to detect the position of splices that could be the result of unwanted intrusion. (Some techniques allow you to tap into a fiber cable without splices, but they are extremely costly and largely available only to government security agencies.)

Finally, you need to consider three types of costs associated with the media types: acquisition cost (e.g., the costs of the cable per foot [meter], of the transceiver and laser diode, and of the microwave tower), installation and maintenance costs (e.g., the costs of parts as a result of wear and tear and environmental conditions), and internal premises costs for enterprises (e.g., the costs of moves, adds, and changes, and of relocating workers as they change office spaces).

The following sections examine these five media types—twisted-pair, coaxial cable, microwave, satellite, and fiber optics—in detail.

Twisted-Pair

The historical foundation of the public switched telephone network (PSTN) lies in twisted-pair, and even today, most people who have access to networks access them through a local loop built on twisted-pair. Although twisted-pair has contributed a great deal to the evolution of communications, advanced applications on the horizon require larger amounts of bandwidth than twisted-pair can deliver, so the future of twisted-pair is diminishing. Figure 2.1 shows an example of four-pair UTP.

Figure 2.1 Twisted-pair

Characteristics of Twisted-Pair

The total usable frequency spectrum of telephony twisted-pair copper cable is about 1MHz (i.e., 1 million cycles per second). Newer standards for broadband DSL, also based on twisted-pair, use up to 2.2MHz of spectrum. Loosely translated into bits per second (bps)—a measurement of the amount of data being transported, or capacity of the channel—twisted-pair cable offers about 2Mbps to 3Mbps over 1MHz of spectrum. But there’s an inverse relationship between distance and the data rate that can be realized: The longer the distance, the greater the impact of errors and impairments, which diminish the data rate. To achieve higher data rates, two techniques are commonly used: The loop can be shortened, and advanced modulation schemes can be applied to encode more bits per cycle. A good example is Short Reach VDSL2 (discussed in Chapter 12, “Broadband Access Alternatives”), which runs over twisted copper pair and can support up to 100Mbps, but only over a maximum loop length of 330 feet (100 m). New developments continue to allow more efficient use of twisted-pair and enable the higher data rates needed for Internet access and Web surfing, but each of these solutions specifies a shorter distance over which the twisted-pair is used and relies on more sophisticated modulation and error control techniques.
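The loose translation from spectrum to bit rate can be written as a simple product of bandwidth and bits encoded per cycle. The sketch below is only an idealized illustration: the function names are hypothetical, and it ignores the noise, attenuation, and distance effects that limit real loops.

```python
def data_rate_bps(bandwidth_hz: float, bits_per_cycle: float) -> float:
    """Idealized data rate: spectrum width times bits encoded per cycle."""
    return bandwidth_hz * bits_per_cycle

def bits_per_cycle_needed(target_bps: float, bandwidth_hz: float) -> float:
    """Bits per cycle a modulation scheme must carry to hit target_bps."""
    return target_bps / bandwidth_hz

ONE_MHZ = 1e6

# 2 to 3 bits per cycle over 1MHz gives the 2Mbps to 3Mbps quoted in the text.
for bits in (2, 3):
    rate = data_rate_bps(ONE_MHZ, bits)
    print(f"{bits} bits/cycle over 1MHz -> {rate / 1e6:g}Mbps")

# Conversely, reaching 3Mbps in 1MHz requires 3 bits per cycle.
print(bits_per_cycle_needed(3e6, ONE_MHZ))
```

This is why the two techniques named above go together: a shorter loop suffers fewer impairments, which in turn lets a more aggressive modulation scheme carry more bits in each cycle.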

Another characteristic of twisted-pair is that it requires short distances between repeaters. Again, this means that more components need to be maintained and there are more points where trouble can arise, which leads to higher costs in terms of long-term operation.

Twisted-pair is also highly susceptible to interference and distortion, including electromagnetic interference (EMI), radio frequency interference (RFI), and the effects of moisture and corrosion. Therefore, the age and health of twisted-pair cable are important factors.

The greatest use of twisted-pair in the future is likely to be in enterprise premises, for desktop wiring. Eventually, enterprise premises will migrate to fiber and forms of wireless, but in the near future, they will continue to use twisted-pair internally.

Categories of Twisted-Pair

There are two types of twisted-pair: UTP and STP. In STP, a metallic shield around the wire pairs minimizes the impact of outside interference. Most implementations today use UTP.

Twisted-pair is divided into categories that specify the maximum data rate possible. In general, the cable category term refers to ANSI/TIA/EIA 568-A: Commercial Building Telecommunications Cabling Standards. The purpose of ANSI/TIA/EIA 568-A was to create a multiproduct, multivendor standard for connectivity. Other standards bodies—including the ISO/IEC, NEMA, and ICEA—are also working on specifying Category 6 and above cable.

The following are the cable types specified in ANSI/TIA/EIA 568-A:

- Category 1—Cat 1 cable was originally designed for voice telephony only, but thanks to some new techniques, long-range Ethernet and DSL, operating at 10Mbps and even faster, can be deployed over Cat 1.

- Category 2—Cat 2 cable can accommodate up to 4Mbps and is associated with token-ring LANs.

- Category 3—Cat 3 cable operates over a bandwidth of 16MHz on UTP and supports up to 10Mbps over a range of 330 feet (100 m). Key LAN applications include 10Mbps Ethernet and 4Mbps token-ring LANs.

- Category 4—Cat 4 cable operates over a bandwidth of 20MHz on UTP and can carry up to 16Mbps over a range of 330 feet (100 m). The key LAN application is 16Mbps token ring.

- Category 5—Cat 5 cable operates over a bandwidth of 100MHz on UTP and can handle up to 100Mbps over a range of 330 feet (100 m). Cat 5 cable is typically used for Ethernet networks running at 10Mbps or 100Mbps. Key LAN applications include 100BASE-TX, ATM, CDDI, and 1000BASE-T. Cat 5 is no longer supported, having been replaced by Cat 5e.

- Category 5e—Cat 5e (enhanced) operates over a bandwidth of 100MHz on UTP, with a range of 330 feet (100 m). The key LAN application is 1000BASE-T. The Cat 5e standard is largely the same as Category 5, except that it is made to somewhat more stringent standards. Category 5e is recommended for all new installations and was designed for transmission speeds of up to 1Gbps (Gigabit Ethernet). Although Cat 5e can support Gigabit Ethernet, it is not currently certified to do so.

- Category 6—Cat 6, specified under ANSI/TIA/EIA-568-B.2-1, operates over a bandwidth of up to 400MHz and supports up to 1Gbps over a range of 330 feet (100 m). It is a cable standard for Gigabit Ethernet and other network protocols that is backward compatible with the Cat 5/5e and Cat 3 cable standards. Cat 6 features more stringent specifications for crosstalk and system noise. Cat 6 is suitable for 10BASE-T/100BASE-TX and 1000BASE-T (Gigabit Ethernet) connections.

- Category 7—Cat 7 is specified in the frequency range of 1MHz to 600MHz. ISO/IEC11801:2002 Category 7/Class F is a cable standard for Ultra Fast Ethernet and other interconnect technologies that can be made backward compatible with traditional Cat 5 and Cat 6 Ethernet cable. Cat 7, which is based on four twisted copper pairs, features even more stringent specifications for crosstalk and system noise than Cat 6. To achieve this, shielding has been added for individual wire pairs and the cable as a whole.

The predominant cable categories in use today are Cat 3 (due to widespread deployment in support of 10Mbps Ethernet—although it is no longer being deployed) and Cat 5e. Cat 4 and Cat 5 are largely defunct.
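The category list above lends itself to a small lookup table. The following sketch records only the bandwidth and data-rate figures stated in this section (Cat 1 and Cat 7 are omitted because no maximum rate is given for them), and the helper name is hypothetical. It picks the lowest category whose stated rate meets a requirement over the 330-foot (100 m) range.

```python
from typing import NamedTuple, Optional

class CableCategory(NamedTuple):
    name: str
    bandwidth_mhz: Optional[float]  # None where the text gives no figure
    max_mbps: float

# Figures as stated in this section, listed from lowest to highest category.
CATEGORIES = [
    CableCategory("Cat 2", None, 4),
    CableCategory("Cat 3", 16, 10),
    CableCategory("Cat 4", 20, 16),
    CableCategory("Cat 5", 100, 100),
    CableCategory("Cat 5e", 100, 1000),
    CableCategory("Cat 6", 400, 1000),
]

def lowest_category_for(required_mbps: float) -> Optional[str]:
    """Return the first listed category whose stated rate is sufficient."""
    for category in CATEGORIES:
        if category.max_mbps >= required_mbps:
            return category.name
    return None  # nothing in this table is fast enough

for need in (10, 16, 100, 1000):
    print(f"{need}Mbps -> {lowest_category_for(need)}")
```

In practice the choice also depends on which categories are still being manufactured and installed, so a lookup like this is a starting point rather than a purchasing rule.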

Applications of Twisted-Pair

The primary applications of twisted-pair are in premises distribution systems, telephony, private branch exchanges (PBXs) between telephone sets and switching cabinets, LANs, and local loops, including both analog telephone lines and broadband DSL.

Analog and Digital Twisted-Pair

Twisted-pair is used in traditional analog subscriber lines, also known as the telephony channel or 4KHz channel. Digital twisted-pair takes the form of Integrated Services Digital Network (ISDN) and the new-generation family of DSL standards, collectively referred to as xDSL (see Chapter 12).

ISDN

Narrowband ISDN (N-ISDN) was introduced in 1983 as a network architecture and set of standards for an all-digital network. It was intended to provide end-to-end digital service using public telephone networks worldwide and to provide high-quality, error-free transmission. N-ISDN entails two different specifications:

- Basic Rate Interface (BRI)—Also referred to as Basic Rate Access (BRA), BRI includes two B-channels and one D-channel (often called 2B+D). The B-channels are the bearer channels, which, for example, carry voice, data, or fax transmissions. The D-channel is the delta channel, where signaling takes place. Because signaling occupies the channel only briefly, the D-channel can also be used, where allowed by the service provider, to carry low-speed packet-switched data. Each B-channel offers 64Kbps, and the D-channel provides 16Kbps. So, in total, 2B+D offers 144Kbps, delivered over a single twisted-pair with a maximum loop length of about 3.5 miles (5.5 km). BRI is used in residences, in small businesses that need only a couple of lines, and for centrex customers. (A centrex customer leases extensions from the local exchange rather than acquiring its own PBX for the customer premises. Thus, the local exchange pretends to be a private PBX that performs connections among the internal extensions and between the internal extensions and the outside network.)

- Primary Rate Interface (PRI)—Also referred to as Primary Rate Access (PRA), PRI is used for business systems. It terminates on an intelligent system (e.g., a PBX, a multiplexer, an automatic call distribution system such as those with menus). There are two different PRI standards, each deployed over two twisted-pairs: The North American and Japanese infrastructure uses 23B+D (T-1), and other countries use 30B+D (E-1). As with BRI, in PRI each of the B-channels is 64Kbps. With PRI, the D-channel is also 64Kbps. So, 23B+D provides 23 64Kbps B-channels for information and one 64Kbps D-channel for signaling and additional packet data. And 30B+D provides 30 64Kbps B-channels and one 64Kbps D-channel.
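The channel arithmetic for the two N-ISDN interfaces can be checked with a few lines of code. This is a minimal sketch with hypothetical names. It totals only the B-channels and D-channel described above and does not count any framing overhead added by the underlying T-1 or E-1 carrier.

```python
B_CHANNEL_KBPS = 64  # every bearer channel is 64Kbps

def isdn_rate_kbps(b_channels: int, d_channel_kbps: int) -> int:
    """Aggregate rate of an nB+D interface in Kbps."""
    return b_channels * B_CHANNEL_KBPS + d_channel_kbps

bri = isdn_rate_kbps(2, 16)      # 2B+D with a 16Kbps D-channel
pri_t1 = isdn_rate_kbps(23, 64)  # 23B+D with a 64Kbps D-channel
pri_e1 = isdn_rate_kbps(30, 64)  # 30B+D with a 64Kbps D-channel

print(f"BRI  2B+D : {bri}Kbps")
print(f"PRI 23B+D : {pri_t1}Kbps")
print(f"PRI 30B+D : {pri_e1}Kbps")
```

The BRI total matches the 144Kbps figure given above, and the two PRI totals come to 1,536Kbps and 1,984Kbps, respectively.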

Given today’s interest in Internet access and Web surfing, as well as the availability of other high-speed options, BRI is no longer the most appropriate specification. We all want quicker download times. Most people are willing to tolerate a 5-second download of a Web page, and just 1 second can make a difference in customer loyalty. As we experience more rapid information access, our brains become somewhat synchronized to that, and we want it faster and faster and faster. Therefore, N-ISDN has seen better days, and other broadband access solutions are gaining ground. (ISDN is discussed further in Chapter 7, “Wide Area Networking.”)

xDSL

The DSL family includes the following:

- High-Bit-Rate DSL (HDSL)

- Asymmetrical DSL (ADSL, ADSL2, ADSL2+)

- Symmetrical (or Single-Line) DSL (SDSL)

- Symmetric High-Bit-Rate DSL (SHDSL)

- Rate-Adaptive DSL (RADSL)

- Very-High-Bit-Rate DSL (VDSL, VDSL2)

Some of the members of the DSL family are symmetrical and some are asymmetrical, and each member has other unique characteristics.

As in many other areas of telecommunications, with xDSL there is not one perfect solution. One of the main considerations with xDSL is that not every form of xDSL is available in every location from all carriers. The solution also depends on the environment and the prevailing conditions. For example, the amount of bandwidth needed at the endpoint of a network—and therefore the appropriate DSL family member—is determined by the applications in use. If the goal is to surf the Web, you want to be able to download quickly in one direction, but you need only a small channel on the return path to handle mouse clicks. In this case, you can get by with an asymmetrical service. On the other hand, if you’re working from home, and you want to transfer images or other files, or if you want to engage in videoconferencing, you need substantial bandwidth in the upstream direction as well as the downstream direction; in this case, you need a symmetrical service.

The following sections briefly describe each of these DSL family members, and Chapter 12 covers xDSL in more detail.

HDSL

Carriers use HDSL to provision T-1 or E-1 capacities because HDSL deployment costs less than other alternatives when serving customers who are otherwise outside the permitted loop lengths. HDSL can be deployed over a distance of about 2.2 miles (3.6 km). HDSL is deployed over two twisted-pairs, and it affords equal bandwidth in both directions (i.e., it is symmetrical).

HDSL is deployed as two twisted-pairs, but some homes have only a single pair of wires running through the walls. Therefore, a single-pair form of HDSL called HDSL2 has been standardized for consumer/residential use. HDSL2 provides symmetrical capacities of up to 1.5Mbps or 2Mbps over a single twisted-pair.

ADSL

ADSL is an asymmetrical service deployed over one twisted-pair. With ADSL, the majority of bandwidth is devoted to the downstream direction, from the network to the user, with a small return path that is generally sufficient to enable telephony or simple commands. ADSL is limited to a distance of about 3.5 miles (5.5 km) from the exchange point. With ADSL, the greater the distance, the lower the data rate; the shorter the distance, the better the throughput. New developments allow the distance to be extended because remote terminals can be placed closer to the customer.

There are two main ADSL standards: ADSL and ADSL2. The vast majority of currently deployed ADSL follows the original ADSL standard, which supports up to 7Mbps downstream and up to 800Kbps upstream. This bandwidth is sufficient to provide good Web surfing, to carry a low grade of entertainment video, and to conduct upstream activities that don’t command a great deal of bandwidth. However, the original ADSL is not sufficient for things such as digital TV or interactive services. For these activities, ADSL2, which was ratified in 2002, is preferred. ADSL2 supports up to 8Mbps downstream and up to 1Mbps upstream. An additional enhancement, known as ADSL2+, can support up to 24Mbps downstream and up to 1Mbps upstream.

SDSL

SDSL is a symmetrical service that has a maximum loop length of 3.5 miles (5.5 km) and is deployed as a single twisted-pair. It is a good solution in businesses, residences, small offices, and home offices, and for remote access into corporate facilities. You can deploy variable capacities for SDSL, in multiples of 64Kbps, up to a maximum of 2Mbps in each direction.

SHDSL

SHDSL, the standardized version of SDSL, is a symmetric service that supports up to 5.6Mbps in both the downstream and upstream directions.

RADSL

RADSL has a maximum loop length of 3.5 miles (5.5 km) and is deployed as a single twisted-pair. It adapts the data rate dynamically, based on any changes occurring in the line conditions and on the loop length. With RADSL, the rates can vary widely, from 600Kbps to 7Mbps downstream and from 128Kbps to 1Mbps upstream. RADSL can be configured to be a symmetrical or an asymmetrical service.

VDSL

VDSL provides a maximum span of about 1 mile (1.5 km) over a single twisted-pair. Over this distance, you can get a rate of up to 13Mbps downstream. But if you shorten the distance to 1,000 feet (300 m), you can get up to 55Mbps downstream and up to 15Mbps upstream, which is enough capacity to facilitate delivery of several HDTV channels as well as Internet access and VoIP. With VDSL2 you can get up to 100Mbps both downstream and upstream, albeit over very short distances.
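The trade-off among loop length, downstream rate, and upstream rate can be sketched as a simple filter over the DSL family. The table below is an illustrative assumption built only from figures quoted in this section: it includes just those members for which a loop length and both peak rates are stated together, and it treats the "up to" rates as available across the whole loop, which is optimistic because rates fall as distance grows. The names are hypothetical.

```python
from typing import List, NamedTuple

class DslOption(NamedTuple):
    name: str
    max_loop_km: float
    down_mbps: float  # peak downstream rate quoted in the text
    up_mbps: float    # peak upstream rate quoted in the text

DSL_OPTIONS = [
    DslOption("ADSL", 5.5, 7.0, 0.8),
    DslOption("SDSL", 5.5, 2.0, 2.0),
    DslOption("RADSL", 5.5, 7.0, 1.0),
    DslOption("VDSL", 0.3, 55.0, 15.0),  # the short-loop VDSL figures
]

def candidates(loop_km: float, need_down_mbps: float,
               need_up_mbps: float) -> List[str]:
    """List the options whose loop limit and peak rates cover the need."""
    return [
        option.name
        for option in DSL_OPTIONS
        if loop_km <= option.max_loop_km
        and option.down_mbps >= need_down_mbps
        and option.up_mbps >= need_up_mbps
    ]

# Web surfing on a long loop: asymmetrical services qualify.
print(candidates(5.0, 5.0, 0.5))
# Working from home with 2Mbps needed in each direction.
print(candidates(5.0, 2.0, 2.0))
# Video delivery to a customer very close to the node.
print(candidates(0.25, 50.0, 10.0))
```

A real selection would also have to account for which services the local carrier actually offers and for the condition of the copper loop.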

Advantages and Disadvantages of Twisted-Pair

Twisted-pair has several key advantages:

- High availability—More than 1 billion telephone subscriber lines based on twisted-pair have been deployed, and because it’s already in the ground, the telcos will use it. Some say that the telcos are trapped in their copper cages; rather than build an infrastructure truly designed for tomorrow’s applications, they hang on to protecting their existing investment. It is a huge investment: More than US$250 billion in terms of book value is associated with the twisted-pair deployed worldwide. This can be construed as both an advantage and a disadvantage.

- Low cost of installation on premises—The cost of installing twisted-pair on premises is very low.

- Low cost for local moves, adds, and changes in places—An individual can simply pull out the twisted-pair terminating on a modular plug and replace it in another jack in the enterprise, without requiring the intervention of a technician. Of course, this assumes that the wiring is already in place; otherwise, there is the additional cost of a new installation.

Twisted-pair has the following disadvantages:

- Limited frequency spectrum—The total usable frequency spectrum of twisted-pair copper cable is about 1MHz.

- Limited data rates—The longer a signal has to travel over twisted-pair, the lower the data rate. At 330 feet (100 m), twisted-pair can carry 100Mbps, but at 3.5 miles (5.5 km), the data rate drops to 2Mbps or less.

- Short distances required between repeaters—More components need to be maintained, and those components are places where trouble can arise, which leads to higher long-term operational costs.

- High error rate—Twisted-pair is highly susceptible to signal interference such as EMI and RFI.

Although twisted-pair has been deployed widely and adapted to some new applications, better media are available to meet the demands of the broadband world.

Coaxial Cable

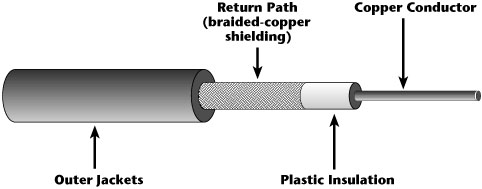

The second transmission medium to be introduced was coaxial cable (often called coax), which began being deployed in telephony networks around the mid-1920s. Figure 2.2 shows the components of coax. In the center of a coaxial cable is a copper wire that acts as the conductor, where the information travels. The copper wire in coax is thicker than that in twisted-pair, and it is also unaffected by surrounding wires that contribute to EMI, so it can provide a higher transmission rate than twisted-pair. The center conductor is surrounded by plastic insulation, which helps filter out extraneous interference. The insulation is covered by the return path, which is usually braided-copper shielding or aluminum foil–type covering. Outer jackets form a protective covering for coax; the number and type of outer jackets depend on the intended use of the cable (e.g., whether the cable is meant to be strung in the air or underground, whether rodent protection is required).

Characteristics of Coaxial Cable

Coax affords a great deal more frequency spectrum than does twisted-pair. Traditional coaxial cable television networks generally support 370MHz. Newer deployments, such as hybrid fiber coax (HFC) architectures, support 750MHz or 1,000MHz systems. (HFC is discussed in detail in Chapter 12.) Therefore, coax provides from 370 to 1,000 times more capacity than single twisted-pair. With this additional capacity, you can carve out individual channels, which makes coax a broadband facility. Multiplexing techniques can be applied to coax to derive multiple channels. Coax offers slightly better performance than twisted-pair because the metallic shielding protects the center conductor from outside interference; hence the error rate of coax is on the order of 10⁻⁹ (i.e., 1 bit in 1 billion received in error). Amplifiers need to be spaced every 1.5 miles (2.5 km), which is another improvement over twisted-pair, but it still means a substantial number of amplifiers must be deployed throughout the network.

Cable TV operators, like the telephony network providers, have been prevalent users of coax, but in the past decade, they have been reengineering their backbone networks so that they are fiber based, thereby eliminating many amplifiers and subsequently improving performance. Remember from Chapter 1, “Telecommunications Technology Fundamentals,” that amplifiers accumulate noise over a distance. In a large franchise area for a cable TV operator, toward the outer fringes, greater noise accumulates and a lower service level is provided there than to some of the users upstream. By reengineering their backbones to be fiber based, cable providers can also limit how many amplifiers have to be deployed, along with reaping other advantages, such as high bandwidth, immunity to electrical interference, and improved security.

One problem with coax has to do with the deployment architecture. Coaxial cable and HFC architectures are deployed in bus topologies. In a bus topology, the bandwidth is shared, which means congestion levels increase as more users in the neighborhood avail themselves of these features and services. A bus topology also presents security risks. It’s sort of like going back to the party line in telephony. You do not have your own dedicated twisted-pair that’s yours and only yours. Instead, several channels devoted to voice telephony are shared by everyone in the neighborhood, which makes encryption important. Also, there are some problems with noise in bus topologies. The points where the coax connects into set-top boxes or cable-ready TV sets tend to collect noise, so the cable tends to pick up extraneous noise from vacuum cleaners or hair dryers or passing motorcycles. Thus, if every household on the network is running a hair dryer at 6:30 AM, the upstream paths are subjected to this noise, resulting in some performance degradation. (Bus topologies are discussed in more detail in Chapter 6, “Local Area Networking.”)

Applications of Coaxial Cable

In the mid-1920s, coax was applied to telephony networks as interoffice trunks. Rather than having to add more copper cable bundles with 1,500 or 3,000 pairs of copper wires in them, it was possible to replace those big cables (which are very difficult to install cost-effectively) with a much smaller coaxial cable.

The next major use of coax in telecommunications occurred in the 1950s, when it was deployed as submarine cable to carry international traffic. It was then introduced into the data-processing realm in the mid- to late 1960s. Early computer architectures required coax as the media type from the terminal to the host. LANs were predominantly based on coax from 1980 to about 1987.

Coax has been used in cable TV and in the local loop, in the form of HFC architectures. HFC brings fiber as close as possible to the neighborhood; then at a neighborhood node, it terminates that fiber, and from that node it fans the coax out to the homes served by that particular node. (This is described in detail in Chapter 12.)

Advantages and Disadvantages of Coaxial Cable

The advantages of coax include the following:

- Broadband system—Coax has a sufficient frequency range to support multiple channels, which allows for much greater throughput.

- Greater channel capacity—Each of the multiple channels offers substantial capacity. The capacity depends on where you are in the world. In the North American system, each channel in the cable TV system is 6MHz wide, according to the National Television System Committee (NTSC) standard. In Europe, with the Phase Alternating Line (PAL) standard, the channels are 8MHz wide. Within one of these channels, you can provision high-speed Internet access—that’s how cable modems operate. But that one channel is now being shared by everyone using that coax from that neighborhood node, which can range from 200 to 2,000 homes.

- Greater bandwidth—Compared to twisted-pair, coax provides greater bandwidth systemwide, and it also offers greater bandwidth for each channel. Because it has greater bandwidth per channel, it supports a mixed range of services. Voice, data, and even video and multimedia can benefit from the enhanced capacity.

- Lower error rates—Because the inner conductor is in a Faraday shield, noise immunity is improved, and coax has lower error rates and therefore slightly better performance than twisted-pair. The error rate is generally 10⁻⁹ (i.e., 1 bit in 1 billion received in error).

- Greater spacing between amplifiers—Coax’s cable shielding reduces noise and crosstalk, which means amplifiers can be spaced farther apart than with twisted-pair.
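The channel arithmetic behind these advantages can be sketched briefly. The example below divides the 370MHz, 750MHz, and 1,000MHz system spectra mentioned earlier by the 6MHz and 8MHz channel widths. It is a rough upper bound that assumes the whole spectrum is carved into channels with no guard bands or reserved ranges. The per-home share uses a placeholder channel rate of 30Mbps, which is purely an illustrative assumption and not a figure from this chapter.

```python
def channel_count(system_mhz: int, channel_mhz: int) -> int:
    """Whole channels that fit in the system spectrum (upper bound)."""
    return system_mhz // channel_mhz

def per_home_share_mbps(channel_mbps: float, active_homes: int) -> float:
    """Even split of one shared channel among the homes using it."""
    if active_homes <= 0:
        raise ValueError("active_homes must be positive")
    return channel_mbps / active_homes

for system_mhz in (370, 750, 1000):
    ntsc = channel_count(system_mhz, 6)
    pal = channel_count(system_mhz, 8)
    print(f"{system_mhz}MHz system: {ntsc} x 6MHz or {pal} x 8MHz channels")

ASSUMED_CHANNEL_MBPS = 30.0  # hypothetical rate, for illustration only
for homes in (200, 2000):
    share = per_home_share_mbps(ASSUMED_CHANNEL_MBPS, homes)
    print(f"{homes} homes sharing one channel: {share:.3f}Mbps each")
```

The second loop shows why node size matters: a tenfold increase in the number of homes sharing the channel cuts each home's even share by a factor of ten.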

The main disadvantages of coax are as follows:

- Problems with the deployment architecture—The bus topology in which coax is deployed is susceptible to congestion, noise, and security risks.

- Bidirectional upgrade required—In countries that have a history of cable TV, the cable systems were designed for broadcasting, not for interactive communications. Before they can offer to the subscriber any form of two-way services, those networks have to be upgraded to bidirectional systems.

- Great noise—The return path has some noise problems, and the end equipment requires added intelligence to take care of error control.

- High installation costs—Installation costs in the local environment are high.

- Susceptible to damage from lightning strikes—Coax may be damaged by lightning strikes. People who live in an area with a lot of lightning strikes must be wary because if that lightning is conducted by a coax, it could very well fry the equipment at the end of it.

Microwave

Microwave was used during World War II in military applications, and when it was successful in that environment, it was introduced into commercial communications. Microwave was deployed in the PSTN as a replacement for coaxial cable in the late 1940s.

As mentioned earlier, twisted-pair and coax both face limitations because of the frequency spectrum and the manner in which they are deployed. But microwave promises to have a much brighter future than twisted-pair or coax. Many locations cannot be cost-effectively cabled by using wires (e.g., the Sahara, the Amazon, places where buildings are on mountaintops, villages separated by valleys), and this is where microwave can shine. In addition, the microwave spectrum is the workhorse of the wireless world: The vast majority of wireless broadband solutions operate in the microwave spectrum.

Note that the discussion of microwave in this chapter focuses on its general characteristics and its traditional application in carrier backbones and enterprise private networks. There are indeed many more systems and applications to discuss, and those are covered at length in Chapter 13, “Wireless Communications Basics,” and Chapter 16, “Emerging Wireless Applications.”

Characteristics of Microwave

Microwave is defined as falling in the 1GHz to 100GHz frequency band. But systems today do not operate across this full range of frequencies. In fact, current microwave systems largely operate up to the 50GHz range. At around 60GHz, we encounter the oxygen absorption band, where the signal is absorbed by oxygen in the atmosphere, and the higher frequencies are severely affected by fog. However, we are now producing systems called virtual fiber that operate in the 70GHz to 95GHz range at very short distances. Given the growing demand for wireless access to all forms of media, we can expect to see many developments in coming years that take advantage of the high-bandwidth properties of the higher frequency bands.

The amount of bandwidth that you can realize out of the very large microwave spectrum is often limited by regulations as much as by technology. Before you can deploy a microwave system outside your own private campus, you generally have to be licensed to operate it. In your own private territory, you can use unlicensed bands, but if you want to cross the public domain using licensed spectrum, you must first be granted approval by your spectrum management agency to operate within a given frequency allocation.

Some communities are very concerned about the potential health hazards of microwave and create legislation or council laws that prohibit placement of such systems. In addition, some communities are very sensitive to the unsightliness of towers and argue that the value of real estate will drop if they are constructed. Therefore, several companies specialize in building camouflaged towers. When you see a tall tree, a church steeple, a light post, or a chimney, it could be a wireless tower disguised to protect your aesthetic balance.

Microwave is generally allocated in chunks of 30MHz to 45MHz channels, so it makes available a substantial amount of bandwidth to end users and operators of telecommunications networks.

Microwave is subject to the uncertainties of the physical environment. Metals in the area, rain and other precipitation, fog, and a number of other factors can cause reflections and therefore degradations and echoes. The higher (in elevation) we move away from land-based systems, the better the performance because there is less intrusion from other land-based systems, such as television, radio, and police and military systems.

Repeater spacing with microwave varies depending on the frequency of transmission. Remember from Chapter 1 that lower frequencies can travel farther than higher frequencies before they attenuate. Higher frequencies lose power more rapidly. In microwave systems that operate in the 2GHz, 4GHz, and 6GHz bands, towers can be separated by 45 miles (72 km). In the higher-frequency allocations, such as 18GHz, 23GHz, and 45GHz, the spacing needs to be much shorter, in the range of 1 to 5 miles (1.6 to 8 km). This is an important issue in network design and, depending on the scope over which you want to deploy these facilities, it can have a significant impact on the investment required.
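The effect of repeater spacing on investment can be sketched with simple arithmetic. The example below counts the towers needed along a straight route, using the spacings quoted above; the 180-mile route length is a hypothetical figure, and real designs also depend on terrain, rain statistics, and equipment.

```python
import math


def towers_needed(route_miles: float, spacing_miles: float) -> int:
    """Towers on a straight route: one at each end plus one at every intermediate hop."""
    hops = math.ceil(route_miles / spacing_miles)
    return hops + 1


ROUTE_MILES = 180  # hypothetical route length, for illustration only

# Spacings quoted in the text: 45 miles at 2GHz to 6GHz, 1 to 5 miles in the higher bands.
for label, spacing in [
    ("2GHz, 4GHz, 6GHz (45-mile spacing)", 45),
    ("18GHz and above (5-mile spacing)", 5),
    ("18GHz and above (1-mile spacing)", 1),
]:
    print(f"{label}: {towers_needed(ROUTE_MILES, spacing)} towers")
```

Under these assumptions, the same route needs 5 towers in the lower bands but between 37 and 181 in the higher bands.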

Another important design criterion is that microwave requires line of sight and is a highly directional beam. Microwave requires a clear, unobstructed view, and it can’t move through any obstacles, even things you wouldn’t think would be obstacles, such as leaves on a tree. Technologies that depend on line of sight may work brilliantly in areas that have the appropriate terrain and climate, and they may not perform very well where there are many obstacles or where there is a lot of precipitation. Furthermore, line of sight is restricted by the curvature of the earth, which interrupts the line of sight at about 90 miles (144 km). However, new spectrum utilization techniques such as Orthogonal Frequency Division Multiplexing (OFDM) permit non-line-of-sight operation, greatly expanding the use of microwave. (Chapter 15 discusses OFDM in detail.)
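The curvature limit can be illustrated with basic geometry. The sketch below assumes a smooth, spherical earth with a mean radius of 6,371 km and ignores terrain, atmospheric refraction, and the clearance a real link needs around the beam, so the numbers are indicative only. The function names are illustrative.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean earth radius; a spherical earth is assumed


def horizon_km(antenna_height_m: float) -> float:
    """Geometric distance from an antenna to the horizon."""
    h_km = antenna_height_m / 1000.0
    return math.sqrt(2.0 * EARTH_RADIUS_KM * h_km + h_km ** 2)


def max_path_km(height_a_m: float, height_b_m: float) -> float:
    """Longest unobstructed path between two antennas over a smooth earth."""
    return horizon_km(height_a_m) + horizon_km(height_b_m)


def height_needed_m(path_km: float) -> float:
    """Height two equal antennas need so that their horizons just meet."""
    half_path = path_km / 2.0
    return (math.hypot(EARTH_RADIUS_KM, half_path) - EARTH_RADIUS_KM) * 1000.0


print(f"Two 100 m towers: {max_path_km(100, 100):.0f} km")
print(f"Height needed for a 144 km path: {height_needed_m(144):.0f} m")
```

Under these assumptions, a path of about 90 miles (144 km) requires antennas roughly 400 m above the intervening surface at each end, which is why such long hops are practical only between elevated sites.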

The impact of precipitation on microwave can be great. As you go up into the higher bands, the wavelengths get shorter and shorter. Pretty soon, they’re about the size of a raindrop, and the signal can be absorbed by raindrops and scattered in a million directions. Therefore, in wet atmospheric conditions, there is a great potential for problems with microwave. As a result, practicing network diversity (using both terrestrial and nonterrestrial alternatives) is critical.
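The relationship between frequency and the physical size of the wave follows from wavelength = speed of light / frequency. The sketch below prints the wavelength for several bands mentioned in this chapter. The raindrop size used for comparison (a few millimeters) is a rough assumption, not a figure from the text.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def wavelength_mm(frequency_ghz: float) -> float:
    """Free-space wavelength in millimeters for a frequency given in GHz."""
    return SPEED_OF_LIGHT_M_PER_S / (frequency_ghz * 1e9) * 1000.0


ROUGH_RAINDROP_MM = 5.0  # assumed upper size of a large raindrop, for comparison only

for freq_ghz in (2, 6, 18, 23, 45, 80):
    size = wavelength_mm(freq_ghz)
    note = "comparable to a raindrop" if size <= 2 * ROUGH_RAINDROP_MM else "much larger than a raindrop"
    print(f"{freq_ghz:>2} GHz: {size:6.1f} mm ({note})")
```

At 2GHz the wavelength is about 15 cm, whereas at 45GHz and above it shrinks to a few millimeters, which is the scale at which rain interacts strongly with the signal.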

Traditional Applications of Microwave

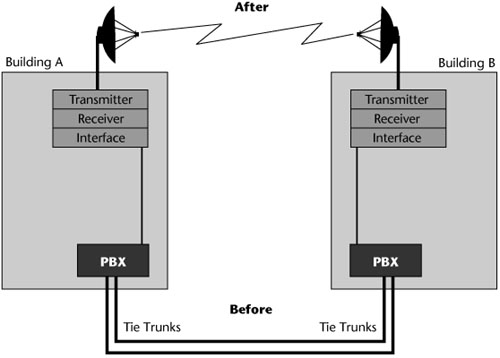

One application associated with microwave is to replace the use of leased lines in a private network. Figure 2.3 shows a simple voice environment that initially made use of dedicated leased lines, also known as tie trunks, to link two PBXs in two different buildings across town from one another. Because these tie trunks were billed on a monthly basis and were mileage sensitive, they were going to be a cost factor forever. Therefore, a digital microwave system was purchased to replace the tie trunks. This system provides capacity between the buildings and does away with the monthly cost of the leased lines. This setup is commonly used by multinode or multilocation customers (e.g., a health care facility with clinics and hospitals scattered throughout a state or territory, a university with multiple campuses, a retail location with multiple branches, a bank with multiple branches).

Figure 2.3 Connecting PBXs by using microwave

Another key application of microwave is bypassing, which can be interpreted in multiple ways. Initially, this technique was used to bypass the local telecommunications company. With the introduction of competition in the long-distance marketplace, end users in the United States initially had choices about who would be their primary long-distance carrier (i.e., interexchange carrier). But to get to that carrier to transport the long-distance portion of the call, we still needed to get special local access trunks that led through the local operator to the competing interexchange provider. That meant paying an additional monthly fee for these local access trunks. In an attempt to avoid those additional costs, businesses began to bypass the local telephone company by simply putting up a digital microwave system—a microwave tower with a shot directly to the interexchange carrier’s point of presence.

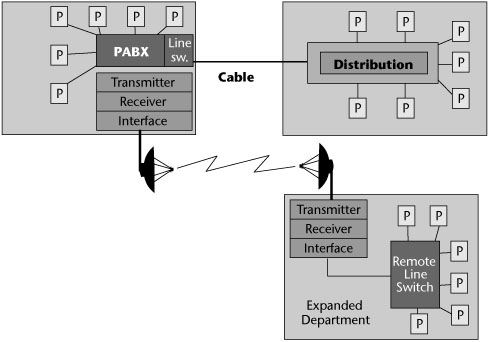

Bypassing can also be used to circumvent construction. Say that a pharmaceutical company’s large campus is bordered by a public thoroughfare, and across the street there’s a lovely park where the employees take their lunch and otherwise relax during the day. No one foresaw the fame and fortune the company would achieve with its latest migraine medicine, so it had not planned to build another facility to house the 300 people it now needed to add. Nobody ever provisioned conduit leading to that park across the street. Getting permission to break ground, laying conduit, pulling cable, repaving, and relandscaping would be cost- and time-prohibitive. To bypass that entire operation, microwave could be used between the main campus and the remote park (see Figure 2.4). This is essentially the same strategy that wireless local loop is pursuing. Rather than take the time and money to build a wireline facility, you can do it much more rapidly and much more cost-effectively on a wireless basis.

Figure 2.4 A bypassing construction that uses microwave

Whereas provisioning twisted-pair or coaxial cable costs roughly US$1,000 to US$1,500 per subscriber and requires a 12- to 18-month deployment time, wireless costs US$300 to US$800 per subscriber and requires 3 to 6 months of deployment time. Something could always delay the process (e.g., contractual problems with the building developer), but, generally, you can deploy a microwave system much more rapidly and at a much lower price point. Therefore, these systems are especially popular in parts of the world where there is not already a local loop infrastructure. In addition, there are several unlicensed bands in the microwave spectrum, which means anyone can use them without needing to apply for and receive a license from the spectrum management agency. This includes the popular 2.4GHz and 5GHz bands used for Wi-Fi, the popular wireless LAN (WLAN) technology used in thousands of “hot spots” around the globe. This makes it even easier for a service provider or an entrepreneur to quickly deploy a wireless network to serve constituents.
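The per-subscriber figures above translate into total deployment budgets as follows. This is a minimal sketch: the subscriber count is hypothetical, and the calculation ignores operating costs, licensing fees, and financing.

```python
def deployment_cost_range(subscribers: int, low_per_sub: int, high_per_sub: int) -> tuple[int, int]:
    """Return the (low, high) total deployment cost in US dollars."""
    return subscribers * low_per_sub, subscribers * high_per_sub


# Per-subscriber cost ranges quoted in the text, in US dollars.
WIRELINE_PER_SUB = (1_000, 1_500)
WIRELESS_PER_SUB = (300, 800)

SUBSCRIBERS = 10_000  # hypothetical service area, for illustration only

for label, (low, high) in [("Wireline", WIRELINE_PER_SUB), ("Wireless", WIRELESS_PER_SUB)]:
    total_low, total_high = deployment_cost_range(SUBSCRIBERS, low, high)
    print(f"{label}: US${total_low:,} to US${total_high:,}")
```

For the assumed 10,000 subscribers, the wireline build comes to US$10 million to US$15 million, compared with US$3 million to US$8 million for wireless.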

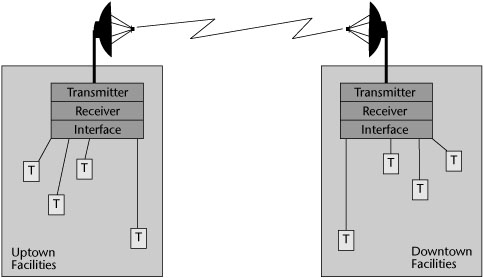

Another application for microwave is in the data realm. Say that in your company, the buildings that have telephone systems today are going to have LANs as well, and you want to unite the disparate LANs to create a virtual whole. You can use microwave technology as a bridge between two different LANs, to give the combined network the appearance of being one LAN (see Figure 2.5).

Figure 2.5 A LAN interconnect using microwave

The main factor that inhibits or potentially slows the growth of microwave is that only so many people can be operating on the same frequencies in the same area. Therefore, a big limitation of microwave is potential congestion in key metropolitan areas.

Microwave has a disaster-recovery application as well. Because microwave is relatively inexpensive and quick to deploy, it is a good candidate for use after a disaster damages wireline media, systems, or structures.

Advantages and Disadvantages of Microwave

The advantages of microwave are as follows:

- Cost savings—Using microwave is less expensive than using leased lines.

- Portability and reconfiguration flexibility—You can pick up a microwave transceiver and carry it to a new building. You can’t do that with cables.

- Substantial bandwidth—A substantial amount of microwave bandwidth is allocated, so high-speed data, video, and multimedia can be supported.

The main disadvantages of microwave are as follows:

- Line-of-sight requirement—You need to ensure that there are no obstacles between towers.

- Susceptibility to environmentally caused distortions—Because the environment (e.g., heavy rainstorms) can cause distortion, you need to have backups.

- Regulatory licensing requirement—The requirement for regulatory licensing means that you must have time and flexibility to deal with the spectrum agency.

- Potential environmental restrictions—Some communities do not allow microwave towers or require that they be camouflaged.

The New Era of Microwave: Wireless Broadband

As mentioned earlier, the initial application of microwave was focused on the replacement of twisted-pair and coaxial cables used in the PSTN. In fact, MCI first utilized microwave to provide voice and data service, introducing the first major competitive action against AT&T, which until 1984 had a monopoly on local and long-distance telephone service in the United States. The 1984 divestiture ruling formally sanctioned competition in the long-distance arena. Microwave offered great cost efficiencies because no cables had to be laid, allowing a new entrant to easily build a network of long-haul trunks to serve busy routes.

The role of microwave has been greatly expanded since the 1980s, with applications in just about every network domain. It is beyond the scope of this discussion of basic characteristics to go into detail on all the options available today, but detailed coverage is provided in Part IV. The following is a summary of the wireless systems that rely on microwave:

- Wireless wide area networks (WWANs)—In the context of WWANs, microwave is used to support 2G cellular PCS services (TDMA, GSM, CDMA), 2.5G enhanced data services (GPRS, HSCSD, EDGE), 3G high-speed data and multimedia services (W-CDMA, UMTS, CDMA2000, TD-SCDMA), 3.5G IP backbones (HSDPA, HSUPA, HSOPA), and 4G mobile broadband systems (using OFDM and MIMO technologies). WWANs are discussed further in Chapter 14, “Wireless WANs.”

- Wireless metropolitan area networks (WMANs)—When it comes to WMANs, the microwave spectrum is used in support of broadband fixed wireless access (BFWA) systems, IEEE 802.16 WiMax standards, the Korean WiBro specification, ETSI’s broadband radio access network (BRAN), HiperMAN and HiperAccess, Flash-OFDM, IEEE 802.20 Mobile-Fi, iBurst Personal Broadband System, IEEE 802.22 Wi-TV standards, and virtual fiber or millimeter wave technology. WMANs are discussed further in Chapter 15.

- Wireless local area networks (WLANs)—The ever-so-popular WLANs, including the IEEE 802.11 family of protocols and ETSI HiperLAN and HiperLAN2, operate in the microwave band, relying on the unlicensed bands of 2.4GHz and 5GHz. WLANs are discussed further in Chapter 15.

- Wireless personal area networks (WPANs)—WPAN standards make use of unlicensed portions of the microwave spectrum, including IEEE 802.15.1 Bluetooth, IEEE 802.15.3 WiMedia, Ultra-Wideband (UWB), IEEE 802.15.4 ZigBee, and some applications of RFID. WPANs are discussed further in Chapter 15.

As you can see, microwave is truly the workhorse of the wireless world. Part IV of this book therefore dedicates much attention to its variations.

Satellite

In May 1946, Project RAND released Preliminary Design of an Experimental World-Circling Spaceship, which stated, “A satellite vehicle with appropriate instrumentation can be expected to be one of the most potent scientific tools of the Twentieth Century. The achievement of a satellite craft would produce repercussions comparable to the explosion of the atomic bomb.” A year earlier, in 1945, Arthur C. Clarke (well known for his science fiction, particularly 2001: A Space Odyssey) had presented a paper to the scientific community in which he suggested that if we explored orbits in higher elevations above the earth, we might achieve an orbit at which a satellite would be able to serve as a communications broadcast tool. Until that point, early explorations of satellites had focused on what we today would call low-earth-orbit satellites, which means they were at relatively low altitudes over the earth and revolved around the earth much faster than the earth rotates on its own axis. Clarke theorized that if we sent a satellite into a higher orbit, it would encounter a geosynchronous orbit, meaning that the satellite would revolve around the earth at exactly the same rate at which the earth rotates on its axis; the orbiting device would appear to hang stationary over a given point on earth. Clarke’s hypotheses were supported, and thus began the development of the communications sector for the space industry.

The first artificial satellite was Sputnik 1, launched by the Soviet Union on October 4, 1957. In the United States, NASA launched the first experimental communications satellite in 1963. The first commercial communications satellite was launched two years later, so 1965 marked the beginning of the use of satellite communications to support public telephony as well as television, particularly international television. Since then, large numbers of satellites have been launched. At this point, there are more than 250 communications-based satellites in space, as well as hundreds of other specialized satellites used for meteorological purposes, defense, remote sensing, geological exploration, and so on, for a total of more than 700 satellites orbiting the earth. And it seems that many more satellites will be launched in the future.

There are still approximately 3 billion people on the planet who are not served by even basic communications services, and we can’t possibly deploy enough wireline facilities in a short enough time frame to equalize the situation worldwide. Therefore, satellites are very important in bringing infrastructure into areas of the world that have previously not enjoyed that luxury.

In descriptions of satellite services, three abbreviations relate to the applications that are supported:

- FSS—Fixed satellite services, the conventional fixed services, are offered in both the C-band and the Ku-band allocations.

- BSS—Broadcast satellite services include standard television and direct broadcast. These largely operate in the Ku-band, at 18GHz. Because the general application of television so far has been one way, 18GHz shows just the downlink frequency allocation. As we begin to move toward interactive TV, we’ll start to see the use of two different bands in BSS.

- MSS—Mobile satellite services accommodate mobility (i.e., mobile users). They make use of either Ka-band or L-band satellites.

A satellite’s footprint refers to the area of earth that the satellite’s beams cover. Much of the progress in satellite developments has been in new generations of antennas that can provide more spot beams that can deliver higher-quality service to targeted areas rather than simply one big beam, with which users at the fringes of the footprint begin to see a degradation in service.

Another thing that’s very important about and unique to satellites is the broadcast property. After you send data uplink to a satellite, it comes back downlink over the entire footprint. So a satellite can achieve point-to-multipoint communications very cost-effectively. This had a dramatic impact on the media business. Consider a big newspaper that has seven regional printing presses within the United States. Before satellites, the paper would have had to send a separate transmission to each of those printing locations so local ads could be inserted and such, but with a satellite, you beam it up once, and when it comes down, it rains over the entire footprint of the United States. If each of the printing presses has a satellite station properly focused on the satellite and knows what frequency to receive on, it will get the information instantaneously.

An interesting design parameter associated with satellites is that as the number of locations increases, the economic benefit of using satellites increases. With leased lines, the more locations and the greater the distances between them, the more expensive the network. But when using satellite technology, the more locations that are sharing the hub station and transponder, the less expensive the network becomes for all concerned. Thus, satellite technology presents a very attractive networking solution for many customers.

Remember that there are 700 or so satellites in space. On top of those 700 satellites, there are about 250,000 pieces of debris that have been cataloged by the space agencies. Furthermore, we are seeing an increase in comet and solar flare activity. A solar flare can decommission a satellite in one pulse, and a little speck of comet dust can put a baseball-sized crater into a solar panel. Because of these types of hazards, strategies must be put in place to protect existing satellites.

We often don’t recognize what great capabilities satellite can provide. But if we set our sights on more than just the planet earth and realize that there is a great frontier to explore, we realize that although fiber may be the best solution on the planet, when we want to advance in space, satellite communication is extremely important.

Frequency Allocations of Satellite

The frequency spectrum in which most satellites operate is the microwave frequency spectrum. (GPS, a very important application, runs at about 1.2GHz to 1.6GHz, at the low end of that spectrum.) Therefore, microwave and satellite signals are really the same thing. The difference is that with satellite, the repeaters for augmenting the signals are placed on platforms that reside in high orbit rather than on terrestrial towers. Of course, this means that the power levels associated with satellite communications are greater than those of terrestrial microwave networks. The actual power required depends on the orbit the satellite operates in (geosynchronous-orbit satellites require the most power, and low-earth-orbit satellites require the least), as well as the size of the dish on the ground. If the satellite has a lot of power, you don’t need as big a dish on the ground. This is particularly important for satellite TV, where the dish size should be 2 feet (0.6 m) or smaller.

A number of factors are involved in the bandwidth availability of satellite: what spectrum the regulatory agencies have allocated for use within the nation, the portion of the frequency spectrum in which you’re actually operating, and the number of transponders you have on the satellite. The transponder is the key communications component in satellite. It accepts the signal coming from the earth station and then shifts that signal to another frequency. When the signal is on the new frequency, it is amplified and rebroadcast downlink.

In satellite communications, the frequency allocations always specify two different bands: One is used for the uplink from earth station to satellite and one for the downlink from satellite to earth station. Many different bands are specified in the various satellite standards, but the most dominant frequency bands used for communications are C-band, Ku-band, Ka-band, and L-band.

C-Band

C-band transmits uplink around the 6GHz range and downlink around the 4GHz range. The advantage of C-band, as compared to other bands, is that because it operates in the lower frequency bands, it is fairly tolerant of adverse weather conditions. It has longer wavelengths, so it doesn’t suffer as much disturbance as do shorter wavelengths in the presence of precipitation, for instance.

The disadvantage of C-band is that its allocation of frequencies is also shared by terrestrial systems. So selecting sites can take time because you have to contend with what your neighbors have installed and are operating. Licensing can take time, as well.

Ku-Band

Ku-band was introduced in the early 1980s, and it revolutionized how we use satellite communications. First, it operates on the uplink at around 14GHz and on the downlink at around 11GHz. The key advantage of Ku-band is that this frequency band allocation is usually reserved specifically for satellite use, so there are no conflicts from terrestrial systems. Therefore, site selection and licensing can take place much more rapidly. Second, because it doesn’t interfere with terrestrial systems, it offers portability. Therefore, a Ku-band dish can be placed on top of a news van or inside a briefcase, and a news reporter can go to a story as it is breaking to broadcast it live and without conflict from surrounding systems.

The disadvantage of Ku-band is that it is a slightly higher frequency allocation than C-band, so it can experience distortions under bad climatic conditions (e.g., humidity, fog, rain).

Ka-Band

The new generation of satellite—the broadband satellites—operates in the Ka-band. The key advantage of Ka-band is that it offers a wide frequency band: about 30GHz uplink and about 20GHz downlink. The difference between 20GHz and 30GHz for Ka-band is much greater than the difference between 4GHz and 6GHz for C-band. This expanded bandwidth means that Ka-band satellites are better prepared than satellites operating at other bands to accommodate telemedicine, tele-education, telesurveillance, and networked interactive games.

A disadvantage of Ka-band is that it’s even higher in the frequency band than the other bands, so rain fade (i.e., degradation of signal because of rain) can be a more severe issue. Thus, more intelligent technologies have to be embedded at the terminal points to cost-effectively deal with error detection and correction.

L-Band

L-band operates in the 390MHz to 1,550MHz range, supporting various mobile and fixed applications. Because L-band operates in the lower frequencies, L-band systems are more tolerant of adverse weather conditions than are other systems. It is largely used to support very-small-aperture terminal (VSAT) networks and mobile communications, including handheld terminals (such as PDAs), vehicular devices, and maritime applications.

We’ve progressed very quickly in satellite history. The first earth station that accompanied the Early Bird satellite in 1965 had a massive facility. The dome on the building was 18 stories high, the antenna weighed 380 tons, and the entire building had liquid helium running through it to keep it cool. This was not something an end user could wheel into a parking lot or place on top of a building. But we have continued to shrink the sizes of earth stations, and today many businesses use VSATs, in which the dish diameter is 2 feet (0.6 m) or less. You can literally hang a VSAT outside your window and have a network up and running within several hours (assuming that you have your frequency bands allocated and licensed).

Satellite Network Segments

Satellite networks have three major segments:

- Space segment—The space segment is the actual design of the satellite and the orbit in which it operates. Most satellites have one of two designs: a barrel-shaped satellite, normally used to accommodate standard communications, or a satellite with a very wide wingspan, generally used for television. Satellites are launched into specific orbits to cover the parts of the earth for which coverage is desired.

- Control segment—The control segment defines the frequency spectrum over which satellites operate and the types of signaling techniques used between the ground station and the satellite to control those communications.

- Ground segment—The ground segment is the earth station—the antenna designs and the access techniques used to enable multiple conversations to share the links up to the satellite. The ground segment of satellites continues to change as new technologies are introduced.

Satellite Orbits

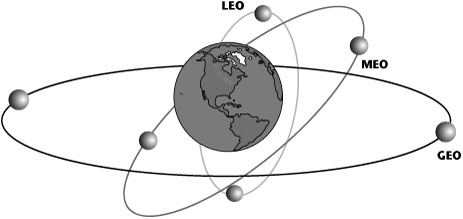

Another important factor that affects the use and application of satellites is the orbits in which they operate. As shown in Figure 2.6, there are three major orbits: geosynchronous orbit (GEO), middle earth orbit (MEO), and low earth orbit (LEO). The majority of communications satellites in use are GEOs.

Figure 2.6 Satellite orbits

GEO Satellites

A GEO satellite is launched to 22,300 miles (36,000 km) above the equator. A signal from such a satellite must travel quite a distance; as a result, there is a delay of 0.25 seconds in each direction, so from the time you say, “Hello, how are you?” to the time that you hear the person’s response, “Fine,” there is a 0.5-second delay, which results in somewhat of a stilted conversation. Many data applications, especially those that involve entertainment, such as games, cannot perform with a delay this great.
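The delay figures follow directly from the distance the signal must travel at the speed of light. The sketch below assumes the satellite is directly overhead and ignores processing time in the transponder and earth stations, so it slightly understates real-world delay.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458
GEO_ALTITUDE_KM = 36_000  # altitude quoted in the text


def one_way_delay_s(altitude_km: float) -> float:
    """Earth station up to the satellite and back down to another earth station."""
    return 2.0 * altitude_km / SPEED_OF_LIGHT_KM_PER_S


def round_trip_delay_s(altitude_km: float) -> float:
    """Delay between speaking and hearing the reply (two one-way trips)."""
    return 2.0 * one_way_delay_s(altitude_km)


print(f"One direction: {one_way_delay_s(GEO_ALTITUDE_KM):.2f} s")
print(f"Question and answer: {round_trip_delay_s(GEO_ALTITUDE_KM):.2f} s")
```

The result is about 0.24 seconds in each direction and about 0.48 seconds for a question and its answer, which matches the rounded figures of 0.25 and 0.5 seconds.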

GEO satellites have the benefit of providing the largest footprint of the satellite types. Just three GEO satellites can cover the entire world, but the delay factor in getting to that orbit inhibits its use with the continuously growing range of real-time applications that are very sensitive to delay.
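The claim that three GEO satellites can cover the world can be checked with a little geometry. The sketch below assumes a spherical earth and counts a location as covered whenever the satellite is above the horizon; practical systems need the satellite some degrees above the horizon, and the polar regions are not reached from an equatorial orbit, so this is an upper bound on coverage.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean earth radius; a spherical earth is assumed
GEO_ALTITUDE_KM = 36_000  # altitude quoted in the text


def coverage_half_angle_deg(altitude_km: float) -> float:
    """Earth-central angle from the point under the satellite to the edge of visibility."""
    return math.degrees(math.acos(EARTH_RADIUS_KM / (EARTH_RADIUS_KM + altitude_km)))


def visible_fraction_of_surface(altitude_km: float) -> float:
    """Fraction of the earth's surface within the satellite's line of sight."""
    return 0.5 * (1.0 - EARTH_RADIUS_KM / (EARTH_RADIUS_KM + altitude_km))


def satellites_to_ring_equator(altitude_km: float) -> int:
    """Fewest evenly spaced satellites whose footprints meet around the equator."""
    return math.ceil(360.0 / (2.0 * coverage_half_angle_deg(altitude_km)))


print(f"Half-angle of coverage: {coverage_half_angle_deg(GEO_ALTITUDE_KM):.1f} degrees")
print(f"Surface visible from one satellite: {visible_fraction_of_surface(GEO_ALTITUDE_KM):.0%}")
print(f"Satellites needed around the equator: {satellites_to_ring_equator(GEO_ALTITUDE_KM)}")
```

Each GEO satellite sees roughly 81 degrees of longitude on either side of its position, so two satellites leave a gap and three spaced 120 degrees apart close the ring.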

The fact that it is launched at such a high orbit also means that a GEO satellite requires the most power of all the satellite systems. And because the trend in satellites has been to deliver more data as well as interactive services (which are very delay sensitive) directly to the user, more satellites are now being launched at the lower orbits.

We are beginning to see data rates of up to 155Mbps with GEO systems, particularly in the Ka-band. That data rate is not commonly available today, but it is feasible with the new generation of broadband satellites. Going to higher data rates, however, necessitates larger antennas, more so for GEO systems than for satellites in other orbits. Parabolic satellite antennas 33 feet (10 m) in diameter can now be built, and it should soon be possible to extend them to 66 feet (20 m) or 100 feet (30 m).

The main applications of GEO systems are one-way broadcast, VSAT systems, and point-to-multipoint links. There are no delay factors to worry about with one-way broadcasts from GEO systems. As a result, international television is largely distributed over these satellite networks today.

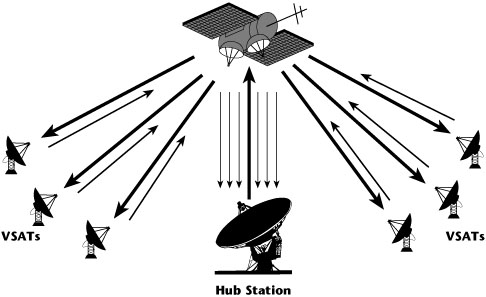

VSATs

Business enterprises use VSAT networks as a means of private networking, essentially setting up point-to-point links or connections between two locales. A VSAT station is so compact that it can be put outside a window in an office environment. VSATs are commonly deployed to reduce the costs associated with leased lines, and depending on how large the network is, they can reduce those costs by as much as 50%. Most users of VSATs are enterprises that have 100 or more nodes or locations (e.g., banks that have many branches, gas stations that have many locations, convenience stores). VSATs elegantly and economically help these types of enterprises do things such as transport information relevant to a sale made from a remote location to a central point, remotely process information, and process reservations and transactions. (See Figure 2.7.)

Figure 2.7 A VSAT system

Another use for VSATs is business video. Before about 1994, the only way to do point-to-multipoint video was by satellite. No terrestrial conference bridges would allow it. So, if you wanted to have a CEO provide a state-of-the-company address to all employees at different locations, the easiest way to get that footprint was by satellite.

Because you can set up a VSAT system very quickly, VSATs offer valuable help in the event of a major disaster, when land-based facilities are disabled.

VSATs are also useful in vehicle-tracking systems, to communicate with drivers, arrange for payload drop-offs and pickups, and handle standard messaging without disrupting drivers’ transportation schedules.

An emerging application for VSAT is broadband Internet access. Products such as Hughes DIRECWAY (www.hns.com) provide Internet downloads at up to 1Mbps. Similarly, intranets (i.e., site-to-site connections between company locations) can be based on VSATs.

The following are the key advantages of VSATs:

- Easy access to remote locations—Where it would be difficult to facilitate a wireline arrangement, such as on an oil rig, a VSAT can easily be set up.

- Rapid deployment—A VSAT system can be installed in two to four hours (as long as you have already secured the license to operate within an allocated spectrum).

- Scalable—A VSAT system can grow to facilitate more bandwidth with the addition of more interfaces.

- Platform agnostic—A VSAT can support a variety of data networking protocols.

- Insensitive to distance-related transmission costs—The transmission cost is the same whether your locations are 100 miles (150 km) apart or 3,000 miles (5,000 km) apart. As long as the locations are within the footprint of the satellite, the costs are distance insensitive. (This does not necessarily translate to distance-insensitive pricing.)

- Cost reductions via shared-hub facilities—The more you share the system, the more your costs are reduced.

- Flexible network configuration—You can be flexible with your network configurations. As with a microwave system, you can pick it up and move it.

Disadvantages of VSATs include the following:

- Transmission quality subject to weather conditions—Stormy weather can cause disruptions. Most large users of VSAT networks have some leased lines available to use as backup when disruptions occur.

- Potentially high startup costs for small installations—A small company may face a high startup cost if it does not have many nodes. (If VSAT is the only available recourse—if there is no way to communicate without it—then the installation cost is a moot point.)

MEO Satellites

MEO satellites orbit at an elevation of about 6,200 to 9,400 miles (10,000 to 15,000 km). MEO satellites are closer to the earth than GEO satellites, so they move across the sky much more rapidly—in about one to two hours. As a result, to get global coverage, you need more satellites (about five times more) than with GEO systems. But because the altitude is lower, the delay is also reduced, so instead of a 0.5-second delay, you see a 0.1-second delay.
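The relationship between altitude and delay can be checked with a short calculation. The following is a minimal sketch, not a link-budget tool: it assumes the satellite is directly overhead, that signals travel at the vacuum speed of light, and that processing and queuing delays are zero. The GEO altitude of 35,786 km is an assumed value supplied for comparison, and the function names are illustrative.

```python
# Free-space propagation delay through a satellite.
# Assumptions: satellite directly overhead, vacuum speed of light,
# no processing or queuing delay.

SPEED_OF_LIGHT_KM_S = 299_792.458


def hop_delay_s(altitude_km: float) -> float:
    """Delay for one hop: ground -> satellite -> ground, in seconds."""
    return 2 * altitude_km / SPEED_OF_LIGHT_KM_S


def round_trip_delay_s(altitude_km: float) -> float:
    """Delay for a request and its response: two hops, in seconds."""
    return 2 * hop_delay_s(altitude_km)


if __name__ == "__main__":
    orbits_km = {
        "GEO (35,786 km, assumed)": 35_786,
        "MEO (10,000 km)": 10_000,
        "MEO (15,000 km)": 15_000,
    }
    for name, altitude_km in orbits_km.items():
        print(
            f"{name}: hop {hop_delay_s(altitude_km):.3f} s, "
            f"round trip {round_trip_delay_s(altitude_km):.3f} s"
        )
```

Under these assumptions, the results fall in the same general range as the rounded figures quoted above. Real-world delays also depend on the slant path to the satellite and on equipment processing time, so treat the output as a lower bound.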

The main applications for MEOs are in regional networks, to support mobile voice and low-speed data, in the range of 9.6Kbps to 38Kbps. The companies that use MEOs tend to operate within a region rather than across the entire globe, so they want a larger footprint over, for example, the Asia-Pacific region, to support mobility of mainly voice communications.

The orbiting altitudes between about 1,000 miles and 6,200 miles (about 1,600 km and 10,000 km) are generally avoided because the radiation in the Van Allen belts damages satellite electronics. The Van Allen belts are regions of charged particles (originating largely from the solar wind and cosmic rays) that are trapped above the earth by the earth’s magnetic field.

LEO Satellites

LEOs are a lot like cellular networks, except that in the case of LEOs, the cells as well as the users are moving. Because LEOs are in such low orbits, they greatly reduce the transit times, so the delays are comparable to what you’d experience on the PSTN, and therefore delay-sensitive applications can survive. Their low orbits also mean that power consumption is much lower than with a higher-orbiting satellite, so you can direct their transmissions into a user’s handheld. Also, LEOs offer greatly increased network capacity overall on a global basis.

LEOs orbit at about 400 to 1,000 miles (640 to 1,600 km). LEOs can be used with smaller terminals than can the other satellites because they are much closer to the earth (40 times closer). But, again, because they are closer, you need many more LEOs than other satellites to get the same coverage (about 20 times more LEOs than GEOs and 5 times more LEOs than MEOs). A user must always be able to see at least one LEO satellite that is well clear of the horizon.

As mentioned earlier, the very first satellites rotated around the earth so fast that we couldn’t use them for communications. But LEO satellite platforms include switching, so as a LEO satellite begins to go out of view, a call is switched (that is, handed over) to the next satellite coming in. This works very much like the cellular system, where a caller is handed off to different frequencies during a conversation while transiting between cells. Of course, a benefit of being lower in the sky is that the delay with LEOs is reduced to only about 0.05 seconds, and this makes LEOs very appealing for interactive services.

The key applications for LEOs are support for mobile voice, low-speed data, and high-speed data. There are three categories of LEOs, and each category is optimized for certain applications:

- Little LEOs—Little LEOs offer 2.4Kbps to 300Kbps and operate in the 800MHz range. They are ideal for delivering messaging, paging, and vehicle location services.

- Big LEOs—Big LEOs offer 2.4Kbps to 9.6Kbps and operate in the 2GHz range. They have rather low data rates and are largely designed to provide voice services to areas that aren’t currently served by any form of terrestrial or cellular architecture.

- Broadband LEOs—Broadband LEOs offer 16Kbps to 155Mbps. They operate in the Ka-band, at 20GHz to 30GHz, and they support data and multimedia files at up to 155Mbps.

Applications of Satellite

The traditional applications for satellites have been to serve remote areas where terrestrial facilities were not available; to provide the capability for point-to-multipoint communications for cost-efficiencies; to provide disaster-recovery support; to provide remote monitoring and control; to facilitate two-way data messaging, vehicle tracking, mobile communications, and maritime and air navigation; to distribute TV, video, and multimedia; and to provide for defense communications.

Two recent applications of new consumer satellite services are automotive navigation and digital audio radio, such as the SIRIUS system, with which you can receive more than 100 radio stations throughout the footprint of the satellite. Not only do you receive the music or programming you want, but you can immediately determine who the artist is and what CD it is from, and you can even compile a customized version for yourself. By now everyone is at least aware of the benefits of automotive systems like GM’s OnStar, which not only provide navigational assistance but also support vital emergency services, with the potential to make the difference between life and death.

Satellite is being used in a number of other applications. Use of satellite in Internet backbones is another emerging application because of the huge growth in traffic levels. Terrestrial facilities can’t handle all this traffic alone, so we have to rely on some satellite backbones, especially to reach into places such as Africa, Antarctica, and Latin America. Additional emerging applications include Internet access, data caching, and multimedia. The farther you are from the point at which an application resides, the worse the experience you have. If we put applications on a satellite, everybody within the footprint is always only one hop away, thereby greatly reducing the latencies, or delays, encountered in ultimately drawing on the content. Again, what may make a difference here is whether it’s an interactive application and what orbit that satellite is in, but this is one application that merges the ISP and satellite industries. Other emerging applications of satellites include telemedicine, distance learning, remote imaging, and weather information.

Satellites have seen a number of innovations and are facing yet more in the future. The most significant change in the coming years is that satellites will increasingly deliver information directly to the consumer rather than to a commercial data hub. This might mean that aerospace corporations become major competitors of traditional telecom carriers.

Another key innovation is phased-array antennas. A phased-array antenna consists of multiple transmitting elements, arranged in a fixed geometric array. These small, flat antennas are steered electronically, and they provide great agility and fast tracking. Phased-array antennas, as well as other new designs in antennas, are discussed in Chapter 13.

Key challenges for satellites today are related to power and mobile services. Because the small antennas now used in portable transceivers intercept only a tiny fraction of the satellite signal, satellites must have a lot of power and sensitivity; a typical solar array on a GEO satellite increased in power from 2kW to more than 10kW in just several years. Another innovation involves moving further up the frequency spectrum, to make use of extremely high frequencies (EHF). The highest-frequency satellite systems today use wavelengths comparable to the size of a raindrop. Consequently, a raindrop can act as a lens, bending the waves and distorting the signals. But these ill effects can be mitigated by using error correction techniques, by applying more power when necessary, or by using more ground terminals so data can follow diverse paths. When wavelengths are smaller than a millimeter, there are yet more obstacles. Infrared and optical beams are easily absorbed in the atmosphere. So, in the near future, they may very well be restricted to use within buildings; however, there have been some new developments in virtual fiber, which is discussed in Chapter 15.
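The raindrop comparison follows from the basic relationship between frequency and wavelength (wavelength equals the speed of light divided by frequency). Here is a minimal sketch of that arithmetic. The sample frequencies are illustrative picks, the 30GHz to 300GHz limits for EHF follow the usual ITU band naming, and the function name is hypothetical.

```python
# Wavelength of a radio signal from its frequency: wavelength = c / f.
# The sample frequencies below are illustrative, not taken from a specific system.

SPEED_OF_LIGHT_M_S = 299_792_458.0


def wavelength_mm(frequency_ghz: float) -> float:
    """Return the free-space wavelength in millimeters."""
    frequency_hz = frequency_ghz * 1e9
    return SPEED_OF_LIGHT_M_S / frequency_hz * 1e3


if __name__ == "__main__":
    for frequency_ghz in (20, 30, 47, 300):
        print(f"{frequency_ghz}GHz -> {wavelength_mm(frequency_ghz):.2f} mm")
```

Across the EHF range, the wavelength shrinks from roughly 10 mm to roughly 1 mm, which is the scale of a raindrop and helps explain why rain becomes a larger problem as systems move up the spectrum.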

A slightly different orbit is being introduced: high-altitude, long-endurance (HALE) satellites, which the ITU also calls high-altitude platform stations (HAPS). HALEs are in extremely low orbit, actually circling a city or metro area. They are about 11 miles (17.8 km) overhead, and they operate at about 47GHz, although the ITU has also designated other bands of operation, including 3G bands. The main applications are Internet access, resource monitoring, and data networking in metropolitan areas. In essence, they are aircraft that hover over a city area, providing network capacity for metropolitan area networking. Some may be dirigibles, and others may have wings and fly like conventional aircraft.

The key advantage associated with HALEs is a very moderate launch cost compared to the cost associated with traditional satellites. By applying what we are learning to do with phased-array antennas (discussed in Chapter 13) to HALEs, we can provide a wide capacity: Some 3,500 beams could enable mobile two-way communications and video distribution in an area about 300 miles (about 500 km) across.
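To get a feel for what 3,500 beams means, the coverage figures above can be turned into a rough per-beam footprint. This is a back-of-the-envelope sketch only. It assumes a circular coverage area 500 km across, split evenly among the beams with no overlap or gaps, which a real antenna pattern would not achieve. The function names are illustrative.

```python
import math

# Rough per-beam footprint for a multibeam platform.
# Assumptions: circular coverage area, beams share it evenly, no overlap.


def area_per_beam_km2(coverage_diameter_km: float, beam_count: int) -> float:
    """Average ground area served by one beam, in square kilometers."""
    coverage_area_km2 = math.pi * (coverage_diameter_km / 2) ** 2
    return coverage_area_km2 / beam_count


def equivalent_beam_diameter_km(coverage_diameter_km: float, beam_count: int) -> float:
    """Diameter of a circle whose area equals the average per-beam area."""
    return coverage_diameter_km / math.sqrt(beam_count)


if __name__ == "__main__":
    print(f"Area per beam: {area_per_beam_km2(500, 3500):.1f} km^2")
    print(f"Equivalent beam diameter: {equivalent_beam_diameter_km(500, 3500):.2f} km")
```

Under these simplifying assumptions, each beam covers an area only a few miles across, which suggests how heavily frequencies could be reused within a single metropolitan region.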

Advantages and Disadvantages of Satellite

The advantages of satellite include the following:

- Access to remote areas

- Coverage of large geographical areas

- Insensitivity to topology

- Distance-insensitive costs

- High bandwidth

The disadvantages of satellite include the following:

- High initial cost

- Propagation delay with GEO systems

- Environmental interference problems

- Licensing requirements

- Regulatory constraints in some regions

- Danger posed by space debris, solar flare activity, and meteor showers

Fiber Optics

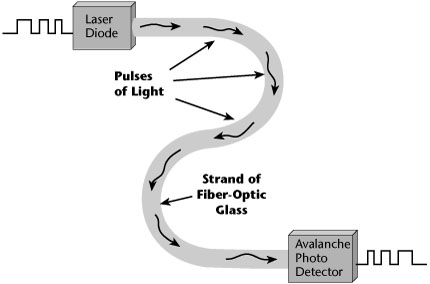

In the late 1950s and early 1960s, a number of people were working in the realm of fiber optics simultaneously. Charles Kao, who was a scientist with ITT, is often acknowledged as being one of the fathers of fiber optics. Kao theorized that if we could develop a procedure for manufacturing ultrapure, ultrathin filaments of glass, we could use them as a revolutionary new communications pipeline. Thus began the move toward researching and developing optical technology.

In 1970 came the developments that have allowed us to deploy large amounts of fiber. Corning Glassworks introduced the first development, broomsticking, a procedure for manufacturing ultrapure filaments of glass. Glass that has an inner core etched into it is melted at extremely high temperatures, and as the glass melts and drops down the tube, it begins to cool and form a strand. By the time it gets to the bottom of the tube, it is a fiber-optic thread. Being able to create the fiber cable itself solved half the equation. Because the fiber’s diameter is minuscule (measured in micrometers, or microns, abbreviated μ), the light source that pulses energy on this tiny fiber also has to be minuscule. In 1970, Bell Labs completed the equation by introducing the first laser diode small enough to fit through the eye of a needle.

Characteristics of Fiber Optics

Fiber optics operates in the optical portion of the spectrum (visible and near-infrared light), in the range from 10¹⁴Hz to 10¹⁵Hz. Wavelength is the distance between successive peaks of the wave being transmitted. Different fiber-optic materials are optimized for different wavelengths. The EIA/TIA standards currently support three wavelengths for fiber-optic transmission: 850, 1,300, and 1,550 nanometers (nm). Each of these bands is about 200 nm wide and offers about 25THz of capacity, which means there is a total of some 75THz of capacity on a fiber cable. The bandwidth of fiber is also determined by the number of wavelengths it can carry, as well as by the number of bits per second that each wavelength supports. (As discussed in Chapter 1, each year, wavelength division multiplexers are enabling us to derive twice as many wavelengths as the year before, and hence they enable us to exploit the underlying capacity of the fiber cables.)
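The wavelength and capacity figures above can be related with a simple conversion between wavelength and frequency. The following is a minimal sketch that assumes each band is centered on its nominal wavelength, is exactly 200 nm wide, and is measured in vacuum. The function names are illustrative.

```python
# Convert optical wavelengths to frequencies, and a wavelength window to
# its width in terahertz. Assumes vacuum wavelengths and a window centered
# on the nominal wavelength.

SPEED_OF_LIGHT_M_S = 299_792_458.0


def frequency_thz(wavelength_nm: float) -> float:
    """Optical frequency in THz for a wavelength given in nanometers."""
    return SPEED_OF_LIGHT_M_S / (wavelength_nm * 1e-9) / 1e12


def band_width_thz(center_nm: float, width_nm: float = 200.0) -> float:
    """Frequency span in THz of a window width_nm wide, centered on center_nm."""
    low_nm = center_nm - width_nm / 2
    high_nm = center_nm + width_nm / 2
    # Shorter wavelengths have higher frequencies, so subtract in this order.
    return frequency_thz(low_nm) - frequency_thz(high_nm)


if __name__ == "__main__":
    for center_nm in (850, 1300, 1550):
        print(
            f"{center_nm} nm: {frequency_thz(center_nm):.1f}THz center, "
            f"{band_width_thz(center_nm):.1f}THz across a 200 nm window"
        )
```

With these assumptions, a 200 nm window around 1,550 nm works out to roughly 25THz. The same window spans more terahertz at shorter wavelengths, so the per-band figure quoted above is best read as a rounded, conservative estimate.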

With fiber, today we can space repeaters about 500 miles (800 km) apart, but new developments continue to increase the distance or spacing. Trials have been successfully completed at distances of 2,500 miles (4,000 km) and 4,000 miles (6,400 km).

Components of Fiber Optics

The two factors that determine the performance characteristics of a given fiber implementation are the type of cable used and the type of light source used. The following sections look at the components of each.

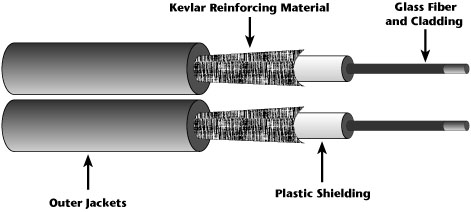

Fiber-Optic Cable

Fiber-optic cable is available in many sizes. It can have as few as a couple of pairs of fiber, or it can have bundles that contain upward of 400 or 500 fiber pairs. Figure 2.8 shows the basic components of fiber-optic cable. Each of the fibers is protected with cladding, which ensures that the light energy remains within the fiber rather than bouncing out into the exterior. The cladding is surrounded by plastic shielding, which, among other things, ensures that you can’t bend the fiber to the point at which it would break; the plastic shielding therefore limits how much stress you can put on a given fiber. That plastic shielding is then further reinforced with Kevlar reinforcing material, which is five times stronger than steel, to prevent other intrusions. Outer jackets cover the Kevlar reinforcing material, and the number and type of outer jackets depend on the environment where the cable is meant to be deployed (e.g., buried underground, used in the ocean, strung through the air).

Figure 2.8 Fiber-optic cable

There are two major categories of fiber: multimode and single mode (also known as monomode). Fiber size is a measure of the core diameter and cladding (outside) diameter. It is expressed in the format xx/zz, where xx is the core diameter and zz is the outside diameter of the cladding. For example, a 62.5/125-micron fiber has a core diameter of 62.5 microns and a cladding diameter of 125 microns. The core diameter of the fiber in multimode ranges from 50 microns to 62.5 microns, which is large relative to the wavelength of the light passing through it; as a result, multimode fiber suffers from modal dispersion (i.e., light follows many different paths, or modes, through the core, so parts of a pulse arrive at slightly different times and the pulse spreads out), and repeaters need to be spaced fairly close together (about 10 to 40 miles [16 to 64 km] apart). The diameter of multimode fiber also has a benefit: It makes the fiber more tolerant of errors related to fitting the fiber to transmitter or receiver attachments, so termination of multimode is rather easy.
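The xx/zz size format is easy to handle programmatically, for example in a cable inventory tool. The sketch below is one hypothetical way to parse it. The `FiberSize` class, the function names, and the validation rule are assumptions made for illustration, and the multimode check simply applies the 50-to-62.5-micron core range given above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FiberSize:
    """Core and cladding diameters of a fiber, in microns."""
    core_um: float
    cladding_um: float


def parse_fiber_size(designation: str) -> FiberSize:
    """Parse a designation such as '62.5/125' into core and cladding diameters."""
    core_text, cladding_text = designation.strip().split("/")
    core_um, cladding_um = float(core_text), float(cladding_text)
    if not 0 < core_um < cladding_um:
        raise ValueError(f"core must be positive and smaller than cladding: {designation!r}")
    return FiberSize(core_um, cladding_um)


def has_multimode_core(size: FiberSize) -> bool:
    """True if the core falls in the 50-to-62.5-micron multimode range."""
    return 50.0 <= size.core_um <= 62.5


if __name__ == "__main__":
    size = parse_fiber_size("62.5/125")
    print(size, "multimode core:", has_multimode_core(size))
```

A malformed designation (for instance, one without a slash) raises `ValueError`, which keeps bad entries from slipping silently into a record.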