Chapter 9. IP Services

This chapter discusses the evolution from circuit-switched to Internet Protocol (IP)-based packet-switched infrastructures to support the variety of IP services in use today, including IP telephony (IPT), IP television (IPTV), and virtual private networks (VPNs). IPT involves the transmission of voice, fax, and related services over IP-based packet-switched networks. IPTV systems deliver digital television service to a subscriber, using IP over a broadband connection. VPNs are a critical requirement for businesses because they distribute mission-critical and sensitive traffic to remote locations; they offer networking security and performance comparable with that available at the office.

The Evolution to IP Services

Service providers are in the midst of a gradual evolution from circuit-switched to IP-based packet-switched infrastructures. IP is attractive for two main reasons: cost savings and revenue. Carriers expect operational and infrastructure savings from deploying new IP-based services because they believe that implementing applications on IP networks will be much less expensive than running them on circuit-switched networks. In addition, every carrier is looking for new ways to enhance its service suites, which are rapidly becoming commodities.

The evolution to IP-based infrastructures means more shared networks. Because IP emphasizes logical over physical connections, IP makes it easier for multiple carriers to coexist on a single network. This encourages cooperative sharing of interconnected networks, structured as anything from sales of wholesale circuits to real-time capacity exchanges. It also means increased competition because there are reduced barriers to entry; new companies can enter the market without the huge fixed costs associated with traditional network models.

Most of the evolution so far has taken place in the transport and access parts of the network and in the development of IP infrastructure elements, such as gateways, softswitches, and the IP Multimedia Subsystem (which is discussed in Chapter 10, “Next-Generation Networks”). The market for next-generation IP application services is beginning to take shape, and IP-based application servers have been developed to deliver actual revenue-generating services for carriers.

The problem is that IP has been positioned as a magical potion that will cure everything, and the industry has suffered from too much hype and wishful thinking. Equipment and service providers have been guilty of promoting such thinking, claiming that their products can enable multiple new services without clearly identifying just what those new services will be. While there are indeed very good reasons to transition to IP, it is important to be realistic and pragmatic about them. The migration to IP is not without risk. In terms of the IP application services market, two key questions need to be answered: Will the quality of the real-time services over IP be good enough in the next few years to drive carriers and customers to switch? To what extent will enterprises take advantage of their existing IP infrastructure and data communications expertise to implement their own services, thus avoiding additional service provider charges?

Traditional Internet applications, such as e-mail, Telnet, FTP, and the World Wide Web, are referred to as elastic applications because they are tolerant of network delays and packet losses. Unlike those applications, advanced real-time applications are highly sensitive to timely data delivery. Quality of service (QoS) is the most important obstacle for the Internet and IP networks to overcome, especially in light of the growing use of voice and video. As QoS emerges within the Internet, the ability to differentiate services will result in differentiated pricing. QoS capabilities will allow revenue-generating service levels to be implemented and service-level agreements (SLAs) to be negotiated.

The ability to control and manage a network effectively will allow the creation of value-added services and packages. Providing value-added IP services implies increased attention to tools and equipment that help service providers succeed. These include software that gives service providers greater network control and visibility; intrusion prevention systems that support always-on stateful inspection (i.e., a form of stateful inspection that provides cumulative data against which subsequent communication attempts can be evaluated and acted on in real time) and deep packet analysis capabilities; software that can deliver flexible bandwidth; tools that offer granular analysis of bandwidth consumption by customers; and tools that enable creative solutions that reduce costs, such as the robots from CityNet Telecommunications (www.citynettelecom.com) that string fiber through sewers at a fraction of the cost of digging up city streets to lay the fiber.

Value-added IP services allow carriers greater differentiation from their competitors. Evolving next-generation IP services include IP virtual private networks (VPNs), IP telephony (IPT) and Voice over IP (VoIP), IP centrex and IP call centers, application hosting, mobility management/follow-me services, unified messaging, instant messaging (IM), presence management, Video over IP, IP television (IPTV), and different types of conferencing, including audioconferencing, videoconferencing, and Web/data conferencing. As carriers consolidate and wireless broadband networks are deployed, a major challenge will be developing viable business models to market, bill, provision, and provide excellent customer care for all these services.

Many incumbent carriers are choosing to initially implement IP-based services on an overlay network. Carriers that take this approach do not have to replace circuit-switched network elements, which usually have minimal ongoing operational expenses. In an overlay network scenario, the packet-switched network is isolated from the circuit-switched network, and the two are connected via a gateway.

IPT

IPT has been drawing a lot of attention in the past couple of years, and the terminology in this area is confusing: VoIP is the most common expression, even though there are in fact technical differences between IPT (the umbrella term), VoIP (IPT over private IP networks), and Internet telephony (voice over the Internet). The following sections cover the types of applications anticipated for IPT as well as the network elements required to make IPT work and to provide capabilities similar to those we are used to from the PSTN.

Although IPT has a very important place, it is not yet taking over the traditional circuit-switched approach to accommodating voice telephony. The truly exciting future of IPT, or VoIP, lies in advanced and interesting new applications, an environment where voice is but one of the information streams comprising a rich media application. While such applications are being developed, there are other compelling reasons to adopt IPT, including the reduction of toll and international calling charges, which makes a strong argument for many businesses and consumers. The network-specific cost for VoIP on dedicated networks is quite a bit lower than the cost of calls on circuit-switched networks, not to mention the benefit an average residential user, with family members and friends scattered around the globe, can gain by avoiding the normally sky-high charges associated with international calling. Many analysts expect that sales of VoIP equipment will grow rapidly in the coming months and years.

People use several IP-related terms interchangeably. However, according to the International Telecommunication Union (ITU; www.itu.int), there are distinctions between the following terms:

- IPT—The transmission of voice, fax, and related services over packet-switched IP-based networks. Internet telephony and VoIP are specific subsets of IPT.

- Internet telephony—Telephony in which the principal transmission network is the public Internet. Internet telephony is commonly referred to as Voice over the Net, Internet phone, and net telephony, with appropriate modifications to refer to fax as well, such as Internet fax.

- VoIP—IPT in which the principal transmission network or networks are private, managed IP-based networks.

This chapter generally uses the encompassing term IPT.

With leading telecommunications carriers and cable companies unveiling major VoIP initiatives, consumers and businesses will be hearing a great deal more about VoIP in the near future. Despite the long road ahead, consumer and business migration to VoIP is on the verge of moving out of the early adoption phase and into the mainstream, and the hope is that widespread (although not ubiquitous) VoIP use will finally take off by 2007. It appears that some 20% of businesses have already adopted VoIP technology. Interestingly, companies are citing benefits other than reduced costs as the reasons for switching to VoIP. The biggest reasons mentioned have to do with strategic investments, integration with existing IP VPNs, employee mobility, and increased productivity. Reduced costs alone are not likely to propel IPT or VoIP to the pedestal envisioned by its proponents.

The IPT Evolution

We know that IPT is here for several reasons. First, we can do it effectively, and greater processing power (following Moore’s Law) applied to signal processing along with lower costs makes VoIP attractive. Second, as discussed later in this chapter, standards for VoIP have made progress. Third, IP has become pervasive: It is easier now for both businesses and consumers to enter the IPT realm. Finally, the marketplace is now home to many commercial entries.

Moore’s Law refers to a prediction made in 1965 by Gordon Moore, cofounder of Intel. Moore said that the number of transistors occupying a square inch of integrated circuit material had doubled each year since the invention of the integrated circuit, and he predicted that this multiplication of circuitry would continue. For the most part, the prediction held true until the late 1970s, when the time span of a year increased to about 18 months. Today Moore’s Law is commonly used as an expression to state that the power of microprocessor technology doubles and its cost of production halves every 18 months.
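To make the popular 18-month version of the law concrete, the Python sketch below computes the growth factor implied by a fixed doubling period, along with the matching cost ratio if cost halves on the same schedule. The function names and the 18-month default are illustrative assumptions drawn from the popular statement above, not from Moore's own papers.

```python
def moore_growth_factor(months: float, doubling_period_months: float = 18.0) -> float:
    """Return how many times capability multiplies after `months`,
    assuming it doubles once every `doubling_period_months`."""
    if doubling_period_months <= 0:
        raise ValueError("doubling period must be positive")
    return 2.0 ** (months / doubling_period_months)


def relative_cost(months: float, doubling_period_months: float = 18.0) -> float:
    """Cost of the same capability relative to today, assuming cost
    halves on the same schedule on which capability doubles."""
    return 1.0 / moore_growth_factor(months, doubling_period_months)


if __name__ == "__main__":
    for years in (3, 5, 10):
        months = years * 12
        print(f"{years:>2} years: x{moore_growth_factor(months):7.1f} capability, "
              f"{relative_cost(months):.3f} of today's cost")
```

Three years is two doubling periods, so `moore_growth_factor(36)` returns 4.0 and `relative_cost(36)` returns 0.25.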

Many variations of phones, gateways, IP PBXs, and open-source PBXs are now available to both consumers and enterprises. Several key milestones have been reached. There has been a surge of Session Initiation Protocol (SIP) support and offerings for SIP-based enterprise communications, and SIP products are now widely available. Avaya (www.avaya.com), Lucent (www.lucent.com), and NEC (www.nec.com) have announced SIP enterprise product lines. Microsoft (www.microsoft.com), IBM (www.ibm.com), Novell (www.novell.com), and Sun Microsystems (www.sun.com) are all moving to SIP for their enterprise collaboration tools. Cisco (www.cisco.com) has announced the migration of its proprietary call manager technology to SIP. (SIP is discussed in detail later in this chapter.)

Most enterprises today are not using or considering IPT services, but many are using or considering migrating to Multiprotocol Label Switching (MPLS), in part because combining voice, video, and data can finally justify the cost of MPLS. Most organizations currently use predominantly ATM or Frame Relay, with some IP VPNs here and there. The cost savings of moving from Frame Relay or ATM to MPLS are less than 10%, but if voice and video are added, they can reach 25%, which is enough to justify the move to both MPLS and IPT.

However, many unresolved issues challenge widespread deployment of IPT. For example, the connection to emergency service access systems is problematic. Callers may not know that the IPT phone they are using is not connected to emergency services, creating potentially fatal problems. Surveillance is another public safety issue. Data encryption schemes are making it more difficult for law enforcement agencies to conduct surveillance because they may not be able to listen to IPT calls in the manner that has become standard in the circuit-switched world. If police officers have to decrypt an IPT call instead of listening in real time, for instance, they may have to wait three weeks after the call took place in order to use it as a basis for arrest.

IPT is being deployed today on a trial basis. The main application is currently LAN-to-LAN or cross-enterprise solutions, typically using matched gateways linked by private lines. The cost justifications are roughly split between toll bypass or WAN savings and cost reductions in moves, adds, and changes. Initially, therefore, the case for IPT rests largely on cost. Besides the benefit of toll bypass, IPT offers reduced staff costs; increased efficiencies from using the existing IP data networks to carry voice as well; less expensive moves, adds, and changes; and application benefits, such as the ability to combine the Web and computer telephony integration applications and to provide a universal desktop device.

Regulatory Issues Surrounding IPT

IPT services are not seeing large growth yet, but they are definitely affecting decisions about WAN architectures. Ultimately, whether and how quickly we move to IPT is not just a question of technical solutions; the regulatory environment may determine whether these services flourish in the long run.

In a December 31, 2003, interview with the San Jose Mercury News, then-Chairman of the U.S. Federal Communications Commission (FCC; www.fcc.gov) Michael Powell said, “To be a phone company you don’t have to weave tightly the voice service into the infrastructure. You can ride it on top of the infrastructure. So if you’re a Vonage, you own no infrastructure, you own no trucks, you roll to no one’s house. They turn voice into an application and shoot it across one of these platforms and suddenly you’re in business. And that’s why if you’re the music industry you’re scared. And if you’re the television studio, movie industry, you’re scared. And if you’re an incumbent infrastructure carrier, you’d better be scared because this application separation is the most important paradigm shift in the history of communications and will change things forever. I have no problem if a big and venerable company no longer exists tomorrow, as long as that value is transferred somewhere else in the economy.” These are interesting words to contemplate as we try to predict what the regulatory approach toward IP communications will be, and the approach will no doubt vary around the world.

The area of international regulation has seen increasing activity, mostly from the United Nations (UN) and the ITU (which has regulated spectrum and telephony for decades and was placed under the auspices of the UN). For some time, the ITU has been considering what role it should take in relation to the Internet, a medium that grew up completely independent of the ITU and in which most activists see no positive role for the ITU. Another UN/ITU activity, the World Summit on the Information Society, has established a working group on Internet governance to look into these issues. A new set of European Union (EU) directives will significantly affect the licensing requirements of IPT in Europe. The European Commission (EC) is the driving force for determining whether IPT should be treated and licensed as conventional telephony. Member states are allowed some discretion in implementing the EC’s directives, and the EC does not issue telephony licenses; that is left to the member states. However, many issues still require resolution, including wiretapping, interconnection and regulatory fees, universal service contributions, and access to telephone numbering and directory systems.

European legislators and regulators have not finished the debate on how to treat IPT and are seeking suggestions from the industry on new developments and technical solutions. Regarding wiretapping, for instance, Europeans are closely observing new developments in the United States. Notably, the FCC by default treats VoIP as an information service, and VoIP currently does not contribute to the Universal Service Fund.

The next generation of IPT will offer strategic value to the enterprise by combining presence-aware communications applications with extended converged networks. This is possible thanks to two technological advances: first, the ability of extended converged IP networks to unify the voice, data, wireline, and wireless domains, supporting applications that unify the end user’s communications experience, and second, the continued growth of processing power, bandwidth, and storage. As bandwidth and storage become less expensive and more abundant, there will be more and better choices for software services and content.

The Next Generation of IPT

The IPT evolution has so far been through three phases:

- Point-to-point communications over IP networks—These communications are largely based on the ITU H.323 standards, which reflect a philosophy of placing intelligence everywhere.

- Master/slave architectures—These architectures rely on standards such as Megaco and Media Gateway Control Protocol (MGCP). This approach is an effective way to implement rich feature sets in dumb endpoints.

- The integration of communications media with the Internet—This strategy involves intelligent endpoints and a dumb network. The relevant standards include SIP and SIP for Instant Messaging and Presence Leveraging Extensions (SIMPLE).

Next-generation IPT is distinguished above all by two kinds of applications: (1) synchronous communications that streamline and enrich business processes and (2) presence awareness that allows users to see who is available for collaboration and how to reach them. Key IPT productivity benefits for the field force include increased efficiencies among service employees, shortened reaction times, improved service quality, and an increase in the number of customer contacts. For the sales force, the benefits of IPT include a reduction in the number of client appointments needed, lower service costs, shortened proposal and negotiation processes, and improved transparency of the sales processes. For the supply chain, the key productivity benefits include reduction of inventory levels, improved data quality, fewer disruptions in downstream processes due to logistical errors, and lower total logistical costs.

The IPT Network

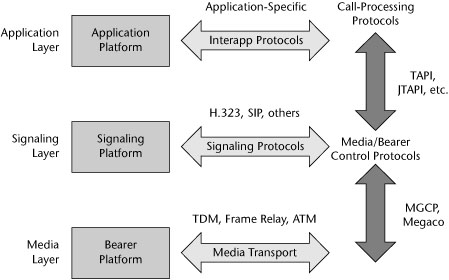

As shown in Figure 9.1, the IPT network taxonomy has three major layers:

- Media layer—In this layer, on the bearer platform, media is processed, including the media transport, QoS, and items such as tones and announcements. Two bearer platforms communicate with one another over media transport, and this is where TDM, Frame Relay, ATM, and MPLS apply.

- Signaling layer—Signal processing, signaling conversion, resource management, and bearer control occur on this layer, on the signaling platform. Signaling platforms talk with one another by using a signaling technique. This is where H.323, SIP, and other call control protocols apply.

- Application layer—This layer, referred to as the application platform, is home to call intelligence, and it is where service creation and execution as well as provisioning management occur. Application platforms talk to one another with application-specific interapplication protocols.

Figure 9.1 The IPT network taxonomy

Between the bearer and signaling platforms are media/bearer control protocols. This is the realm of MGCP and Megaco. Between the signaling and application layers are call-processing protocols such as Telephony Application Programming Interface (TAPI) and Java TAPI (JTAPI).
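The taxonomy can be summarized as a small lookup table. The Python sketch below encodes only the protocol assignments named in the description of Figure 9.1; the dictionary layout and the `protocols_for` helper are hypothetical conveniences, and a real network would involve more protocols than these.

```python
from typing import Dict, FrozenSet, List

# Protocols used between peer platforms within the same layer.
PEER_PROTOCOLS: Dict[str, List[str]] = {
    "media": ["TDM", "Frame Relay", "ATM", "MPLS"],  # media transport between bearer platforms
    "signaling": ["H.323", "SIP"],                    # call control between signaling platforms
    "application": ["application-specific"],          # interapplication protocols vary by service
}

# Protocols that cross the boundary between two adjacent layers.
INTERLAYER_PROTOCOLS: Dict[FrozenSet[str], List[str]] = {
    frozenset({"media", "signaling"}): ["MGCP", "Megaco"],       # media/bearer control
    frozenset({"signaling", "application"}): ["TAPI", "JTAPI"],  # call processing
}


def protocols_for(layer_a: str, layer_b: str) -> List[str]:
    """Protocols linking two layers (or two peers in one layer); [] if none are listed."""
    if layer_a == layer_b:
        return PEER_PROTOCOLS.get(layer_a, [])
    return INTERLAYER_PROTOCOLS.get(frozenset({layer_a, layer_b}), [])


if __name__ == "__main__":
    print(protocols_for("signaling", "media"))        # bearer control protocols
    print(protocols_for("application", "signaling"))  # call-processing protocols
    print(protocols_for("media", "application"))      # not adjacent, so none listed
```

Using `frozenset` keys makes the lookup order-insensitive, so asking about the signaling/media boundary from either side returns the same protocols.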

In terms of the transport infrastructure, a number of packet network alternatives can be used. The full range of packet-switching techniques, including IP, Frame Relay, ATM, MPLS, and GMPLS, as well as Ethernet, Voice over Wireless LAN (VoWLAN), Voice over DSL (VoDSL), and Voice over Cable, can act as the transport infrastructure.

Until recently, the IPT business model was much like that of the traditional circuit-switched world, with each vendor supplying its own platform, handset, gateway, call-processing, and end-user feature sets. For the early IPT players, convergence meant putting voice onto the data network and selling telephony to the IT buyer. To this day, one of the greatest challenges is for traditional voice vendors to learn to sell what the data people buy.

Many in the industry view long-distance service as the first major market disruption in the transition from TDM to IP and handsets as the second. Initially, IPT had the most disruptive effect on carriers’ transmission networks. This promoted a competitive triangle among the handset, the platform, and the network. Industry analysts predict that the next few years will see a battle among these three industries as each attempts to commoditize the other two.

The IPT Network Architecture

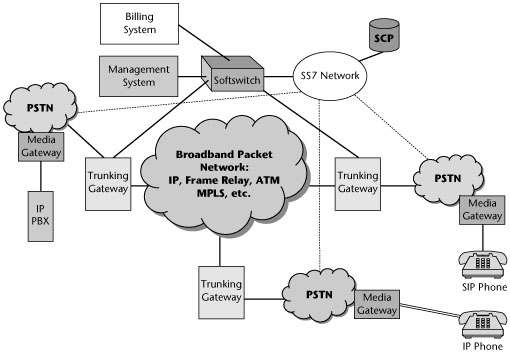

The first requirement for an IPT network is an IP local exchange or media gateway (also referred to as a VoIP gateway). Gateways are carrier-class products that reside in the service provider network. They provide PBX-like telephony service to multiple business and telecommuting customers as well as basic telephony services for consumers. Another requirement is the softswitch (also called a call server or call agent) for call-processing functions and administrative software. End-user services are delivered by IP phones and other devices. Finally, IP-based voice enterprise systems include IP PBXs, open-source PBXs, and service provider solutions. Figure 9.2 shows an example of the IPT network architecture, and the following sections describe the components.

Figure 9.2 IPT network architecture

Media Gateways

Gateways provide seamless interoperability between circuit-switching and packet-switching network domains. They interconnect with the Signaling System 7 (SS7) network and handle IP services. They support a variety of telephony signaling protocols, such as H.323, Megaco (H.248) and MGCP, and SIP. They support Class 4 (i.e., toll) switches and Class 5 (i.e., local exchange) switches. They operate in the classic public network environment, where call control is separate from media flow. They carry a variety of traffic, including data, voice, fax, and multimedia, over a data backbone.

Softswitches

In enhanced applications such as network conferencing, network interactive voice response, fax serving, network voicemail, and directory services, a softswitch controls the voice or data traffic path by signaling between the media gateways that transport the traffic. The gateway provides the connection between an IP or another packet-based network (such as ATM or MPLS) and the traditional circuit-switched network, acting as a multiprotocol cross-connect. The softswitch ensures that a call’s or connection’s underlying signaling information gets communicated between gateways. The signaling information may include automatic number identifiers, billing data, or other call triggers.
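In the master/slave model, the softswitch (call agent) tells each media gateway what to do. As one illustration, the Python sketch below formats an MGCP CreateConnection (CRCX) command of the kind defined in RFC 3435. The endpoint name, call ID, and `build_crcx` helper are hypothetical, and the function builds only the command line plus three common parameter lines (C: call ID, L: local connection options, M: connection mode); a real call agent would also handle the gateway's response, session descriptions, and retransmission.

```python
VALID_MODES = {"sendonly", "recvonly", "sendrecv", "inactive", "confrnce"}


def build_crcx(transaction_id: int, endpoint: str, call_id: str,
               codec: str = "PCMU", packet_ms: int = 20,
               mode: str = "sendrecv") -> str:
    """Format a minimal MGCP CreateConnection (CRCX) command as text."""
    # RFC 3435 limits transaction identifiers to 1..999,999,999.
    if not 1 <= transaction_id <= 999_999_999:
        raise ValueError("transaction id out of range")
    if mode not in VALID_MODES:
        raise ValueError(f"unsupported connection mode: {mode}")
    lines = [
        f"CRCX {transaction_id} {endpoint} MGCP 1.0",  # verb, transaction, endpoint, version
        f"C: {call_id}",                               # call identifier
        f"L: p:{packet_ms}, a:{codec}",                # packetization period (ms) and codec
        f"M: {mode}",                                  # connection mode
    ]
    return "\r\n".join(lines) + "\r\n"


if __name__ == "__main__":
    # Hypothetical endpoint on a residential gateway.
    print(build_crcx(1204, "aaln/1@rgw.example.net", "A3C47F21456789F0"))
```

A softswitch setting up a call between two gateways would typically send one such command to each gateway and then relay the resulting connection descriptions between them; that exchange is omitted here.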

Softswitches must communicate with packet switches, VoIP gateways, media gateways, and SS7 networks by using standardized protocols. A number of different technical specifications, protocols, and standards are involved in delivering these services and the desired end functions. As discussed in more detail later in this chapter, they include H.323, Megaco and MGCP, SIP, Real-Time Transport Protocol (RTP), Real-Time Transport Control Protocol (RTCP), RTCP Reporting Extension (RTCP XR), and Secure Real-Time Transport Protocol (SRTP).
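Of the protocols listed, RTP is the one that actually carries the voice samples. As a sketch of what it adds to each packet, the Python code below packs and unpacks the 12-byte fixed RTP header defined in RFC 3550 (version, padding, extension, CSRC count, marker, payload type, sequence number, timestamp, and SSRC). It assumes no padding, header extension, or contributing sources; payload type 0 is the static RFC 3551 assignment for G.711 µ-Law audio, and the function names are hypothetical.

```python
import struct

RTP_VERSION = 2
PT_PCMU = 0  # static payload type for G.711 mu-law audio (RFC 3551)


def pack_rtp_header(seq: int, timestamp: int, ssrc: int,
                    payload_type: int = PT_PCMU, marker: bool = False) -> bytes:
    """Pack the 12-byte fixed RTP header (no padding, extension, or CSRCs)."""
    byte0 = RTP_VERSION << 6                            # V=2, P=0, X=0, CC=0
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)  # marker bit + 7-bit payload type
    return struct.pack("!BBHII", byte0, byte1,
                       seq & 0xFFFF, timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)


def unpack_rtp_header(data: bytes) -> dict:
    """Decode the fixed header fields from the first 12 bytes of a packet."""
    byte0, byte1, seq, ts, ssrc = struct.unpack("!BBHII", data[:12])
    return {"version": byte0 >> 6, "marker": bool(byte1 >> 7),
            "payload_type": byte1 & 0x7F, "seq": seq,
            "timestamp": ts, "ssrc": ssrc}


if __name__ == "__main__":
    # 20 ms of 8 kHz audio is 160 samples, so the timestamp advances by 160 per packet.
    for i in range(3):
        hdr = pack_rtp_header(seq=i, timestamp=i * 160, ssrc=0x1234ABCD)
        print(hdr.hex(), unpack_rtp_header(hdr))
```

The sequence number lets the receiver detect loss and reordering, while the timestamp, counted in sample periods, lets it play the samples back at the right time despite network jitter.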

IP Devices

A number of IP devices are available:

- Legacy support—Simple terminal adapters are available for standard telephones.

- IP hard phones—These are IP phones that look and work just like a traditional multiline business display phone. They plug in to an Ethernet RJ-45 jack. Typically, they’re proprietary and part of a vendor’s platform. Their original prices were similar to PBX phones, around US$300 and up, but are now down to less than US$100. Emerging IP phone-on-a-chip technologies promise dramatically lower prices in the near future.

- IP soft phones—IP soft phones are software that runs on a user’s PC and graphically resembles a phone. The key advantage of this approach is its low price. IP soft phones are gaining acceptance because applications such as presence management and IM are much better on a PC than on other devices. The main disadvantage is that an IP soft phone relies on the PC sound card, which can create volume-level problems when the user switches between the soft phone and other applications that use the sound card. However, products such as the Clarisys USB handset (www.clarisys.com) overcome these problems. This device replaces the headset, rings audibly, includes a speakerphone, and, as a USB device, avoids the PC sound card.

- IP Web phones—These devices combine a complete analog phone device and a diskless processor, providing an e-mail browser and a variety of organizer applications such as a call list and directory manager, a notepad, and a calendar.

- SIP phones—SIP has the potential to realize the ultimate IPT dream: low-cost, feature-rich handsets from third-party phone vendors. SIP for VoIP phones is mature and solid, with at least six models passing the test. Major platform vendors continue to rely on proprietary protocols for both their platforms and their handset features. This suggests that despite talk of support for SIP, complete openness and interoperability have yet to be achieved. However, vendors can test the interoperability of their SIP implementations with other vendors three times per year at the SIP Forum SIP IT events (www.sipforum.org).

- Mobile IP devices—This category includes 3G handsets, Wi-Fi SIP phones, PDAs equipped to handle VoIP, and game platforms.

In addition, the emerging generation of intelligent wearables, automobiles, smart appliances, smart rooms, and ultimately implants will all add to the expanding universe of IP devices.

IP-Based Voice Enterprise Systems

There are three main approaches to IP-based voice enterprise systems: IP PBXs, open-source PBXs, and service provider solutions, which include IP centrex, hosted PBX, and virtual PBX services. IP PBX and IP centrex offer network management advantages over legacy PBX solutions.

IP PBXs What can an IP PBX environment do that a traditional PBX can’t? IP PBXs boast dozens of new applications, ushering in a new era of human communications, productivity, and mobility. These enhanced and advanced applications are a very hot area, representing the potential for incredible revenue. Significant progress has been made in several key applications areas: unified messaging, which is defined today as the merger of voicemail, e-mail, and fax; conferencing applications; collaboration applications; presence awareness; multimedia packages; mobility features; and extensible SIP support.

Among the most notable trends, video is coming to the desktop. Point-to-point or multiparty video is being offered by the majority of leading IP PBX vendors, and the cost per desktop is now generally under US$200. Document collaboration now allows document sharing to be more than a one-way display of PowerPoint slides: impressive new capabilities from leading vendors include real-time coediting and collaborative work on the same document. In addition, exciting new mobility capabilities are being developed, ranging from a simple SMS gateway that lets local IM users send short messages directly to coworkers’ mobile phones and pagers to sophisticated call control features with which remote and traveling workers can easily place calls and set up complex conferences.

Not every product will contain each and every one of the key collaboration and conferencing features, but the following is a comprehensive list of the types of features that are available: soft phone support; the use of presence, be it dynamic or static; mobility features; conferencing, either scheduled or ad hoc; search capabilities; contacts and database access and integration; video (either point-to-point or multipoint); call control of external devices as well as call control filters that allow you to find me and follow me; recent call history; the ability to sort or quick-dial; IM, with chat or multiparty IM; document viewing and presentation; document collaboration and coediting; and whiteboarding and Web cobrowsing. As this list shows, a significant number of productivity-enhancing features are becoming available and adding a new dimension of value to IP PBX functionality.

The key unified messaging features include redundant voicemail servers; text-to-speech readout of e-mail (in multiple languages); automatic speech recognition; an inbox that shows the caller by automatic number identification and/or name; voicemail and e-mail that indicate the message duration; the ability to reply to and forward voicemail and e-mail; the ability to add other attachments; the ability to send voicemail and e-mail via distribution lists; telephone user interface–based retrieval of voicemail or e-mail; callout of system voicemail delivery; voicemail notification options; scheduled delivery of voicemails; the ability to dial back from the inbox interfaces; and mobility features.

Early marketing efforts for IPT systems focused on a variety of cost-saving and productivity benefits, with little emphasis on the advantages of a more flexible system design. However, it is becoming evident that these two benefits are best realized by taking advantage of a third aspect of IP PBXs: their inherent ability to provide a more modular and survivable configuration compared with traditional circuit-switched TDM PBXs. Customers with multiple premises can configure an IP PBX to operate seamlessly across two or more locations at a lower cost and with greater performance capabilities than a network of standalone systems would offer.

One of the major factors in the accelerated migration to the new IP-based platforms is their ability to replace multiple standalone systems, such as key telephone systems, hybrids, or standard PBXs, with a single IP PBX system that uses survivable remote equipment to create a dispersed architecture. The ability to leverage an existing data network infrastructure for both call control signaling and voice communications transmission is a relatively recent enhancement to IPT. However, because IPT architectures are based on LAN/WAN connections, the enterprise must ensure that backup systems are in place in the event of a loss of LAN/WAN connectivity.

Several IPT design options are available to customers who favor a single-system approach to serve multiple premises. These options range from the simplicity of remote, standalone IP telephones to standalone servers coupled with fully provisioned distributed port carrier cabinets that function as media gateways between traditional station trunk connections and the LAN/WAN. The money customers save by using a distributed or dispersed IPT system for multiple-premises communications requirements can be substantial, and a single-system design can also provide significantly enhanced performance and operating benefits. Using an IP PBX system design that supports multiple locations allows advanced features such as station user roaming and automatic call distribution to be accessed and implemented by system subscribers, regardless of their physical location. This contributes to the enhanced performance potential of the single distributed system solution with local survivability options.

Open-Source PBXs The theory behind open source is that too many cooks actually improve the broth. Widespread peer review finds and fixes bugs faster than the efforts of a smaller, albeit dedicated, project team. The result is often more robust software, lower support costs, and more rapid innovation. Of course, this challenges the established IP PBX pricing model while facilitating standards-based cooperation. However, customer concerns about product schedules and quality detract from trust in the open-source model.

Service Provider VoIP Solutions The three major approaches to service provider VoIP solutions are IP centrex, hosted PBX, and virtual PBX services, such as provider-managed call servers, application servers, and media gateways.

The main drivers of service provider VoIP solutions are reduced enterprise administration and lower capital expenditures. Voice quality is often comparable to that of the PSTN. But the greatest benefit is the cost-efficient bundling of voice, Internet access, and enhanced features, including an auto-attendant, a Web-based dashboard for each user and administrator, integrated messaging (e.g., voicemail as e-mail file attachments), redirection to other phones, and simultaneous ringing of up to 10 phones.

Standards for IP Voice

Before we talk about the standards for IP voice, we need to discuss the characteristics of voice traffic. First of all, voice traffic is an isochronous traffic flow, meaning it involves real-time communications that are delay sensitive as well as loss sensitive. Voice has a low bandwidth requirement, but as a tradeoff, it requires very high QoS.

Digital Voice Technologies

A number of digital voice technologies are enabling IP voice as we know it today. The primary component is the codec (coder-decoder), which is used to digitize the voice stream. Two broad categories of codecs are used:

- Waveform coders—Waveform coders directly encode speech in an efficient way by exploiting temporal and/or spectral characteristics. There are several categories of waveform coders. The first is time domain, or predictive, coders, and these include the well-known standards G.711, known as Pulse Code Modulation (PCM), which encodes voice at 64Kbps using either µ-Law companding (in North America and Japan) or A-Law companding (in Europe and most other countries), and G.726, known as Adaptive Differential PCM (ADPCM), which encodes voice at reduced rates of 40Kbps, 32Kbps, 24Kbps, or 16Kbps. Two other types of waveform coders are subband coders, such as G.722, and transform coders, which encode a frequency-domain transform of the signal rather than its time-domain samples.

- Source coders, or vocoders—Vocoders estimate and efficiently encode a parametric representation of speech. The standards under this category include G.728, which is also known as Low-Delay Code-Excited Linear Prediction (Low-Delay CELP) and supports digital voice at 16Kbps; G.729, known as Conjugate Structure Algebraic CELP (CS-ACELP), which reduces voice down to 8Kbps; and G.723.1, whose Multipulse Maximum Likelihood Quantization (MP-MLQ) mode carries voice at 6.3Kbps (a second, ACELP-based mode runs at 5.3Kbps).
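To make the codec rates above concrete, the following Python sketch estimates per-call bandwidth once each voice frame is wrapped in IP, UDP, and RTP headers. It assumes 20-millisecond packetization, uncompressed IPv4 headers, and no layer-2 framing overhead; the function name voip_bandwidth is illustrative, not part of any standard.

```python
# Per-call IP bandwidth estimate for a constant-bit-rate voice codec.
# Assumptions: IPv4 (20 bytes) + UDP (8) + RTP (12) headers, no header
# compression, no layer-2 framing overhead, and a ptime that divides 1000 ms.
HEADER_BYTES = 20 + 8 + 12

def voip_bandwidth(codec_bps, ptime_ms=20, header_bytes=HEADER_BYTES):
    """Return (payload bytes per packet, packets per second, IP-level bit/s)."""
    payload_bytes = codec_bps * ptime_ms // 8000   # bits per packet divided by 8
    packets_per_sec = 1000 // ptime_ms
    ip_bps = (payload_bytes + header_bytes) * 8 * packets_per_sec
    return payload_bytes, packets_per_sec, ip_bps

for name, bps in [("G.711", 64_000), ("G.728", 16_000), ("G.729", 8_000)]:
    payload, pps, ip_bps = voip_bandwidth(bps)
    print(f"{name}: {payload} B payload, {pps} pkt/s, {ip_bps / 1000:.1f} kbit/s on the wire")
```

Under these assumptions, G.711 needs about 80Kbps on the wire and G.729 about 24Kbps. For G.729 the 40 bytes of headers are twice the size of the 20-byte voice payload, which is why header compression matters on slow links.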

Another element of digital voice technologies is the echo canceller, which is used to eliminate echoes. The voice echo path behaves like an electrical circuit: If a break, or cancellation, is made anywhere in the circuit, the echo is eliminated. The easiest place to make the break is with a canceller looking into the local analog/digital telephony network. The echo canceller at the local device cancels the local echo from the hybrid reflection, which the far-end talker would otherwise hear, and the echo canceller at the other end of the call eliminates the echoes you would otherwise hear.
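The text does not say how a canceller builds its estimate of the echo. A common approach is an adaptive FIR filter that learns the echo path and subtracts its prediction; the sketch below uses the normalized least-mean-squares (NLMS) update on a simulated hybrid. The class name, tap count, step size, and echo-path values are assumptions chosen for illustration, and real cancellers add double-talk detection and nonlinear processing that are omitted here.

```python
import random

class NlmsEchoCanceller:
    """Adaptive FIR echo canceller using the normalized LMS (NLMS) update.

    A minimal sketch: no double-talk detector and no nonlinear processor,
    both of which practical cancellers need.
    """

    def __init__(self, taps=16, mu=0.5, eps=1e-6):
        self.w = [0.0] * taps   # running estimate of the echo path impulse response
        self.x = [0.0] * taps   # most recent far-end samples, newest first
        self.mu = mu            # step size (0 < mu < 2 for stability)
        self.eps = eps          # guards against division by zero during silence

    def process(self, far_end, mic):
        """Feed one far-end sample and one microphone sample; return the echo-reduced output."""
        self.x = [far_end] + self.x[:-1]
        echo_estimate = sum(wi * xi for wi, xi in zip(self.w, self.x))
        error = mic - echo_estimate          # what remains after subtracting the predicted echo
        norm = self.eps + sum(xi * xi for xi in self.x)
        step = self.mu * error / norm
        self.w = [wi + step * xi for wi, xi in zip(self.w, self.x)]
        return error

# Simulated hybrid: reflects a delayed, attenuated copy of the far-end signal.
echo_path = [0.0, 0.0, 0.5, 0.0, -0.2, 0.1]
rng = random.Random(7)
far = [rng.uniform(-1.0, 1.0) for _ in range(4000)]
mic = [sum(h * far[n - k] for k, h in enumerate(echo_path) if n - k >= 0)
       for n in range(len(far))]

ec = NlmsEchoCanceller()
residual = [ec.process(f, m) for f, m in zip(far, mic)]
```

Because the microphone signal here contains only echo, the residual shrinks toward zero as the filter converges; with real near-end speech present, the residual would be the talker's voice.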

The last part of digital voice technologies is noise generation. As it turns out, silence is not always golden. When speech stops, we generate comfort noise to assure people that the “circuit” is still live and that the call hasn’t dropped. The simple techniques involve playing white or pink noise or replaying the last received packet over and over. More sophisticated techniques have the transmitter measure the local noise environment and send a special comfort noise packet as the last packet before silence; the receiver then generates noise based on that comfort noise packet. One of the major issues in carrying voice in a packet form, be it IP, Frame Relay, ATM, or MPLS, is delay, which is discussed in the following section.
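The more sophisticated approach can be sketched as a transmitter that measures background noise and sends a single level value, and a receiver that synthesizes white noise at that level. The level is expressed in -dBov, loosely following the noise-level byte of the RTP comfort noise payload (RFC 3389). The 16-bit full-scale reference and the function names are assumptions, and real implementations may also convey the noise's spectral shape.

```python
import math
import random

FULL_SCALE = 32768.0  # assumed 0 dBov reference: 16-bit linear PCM full scale

def noise_level_byte(samples):
    """Transmitter side: measure background noise and express it as -dBov (0..127)."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    if rms == 0:
        return 127                            # quietest representable level
    dbov = 20 * math.log10(rms / FULL_SCALE)  # negative for signals below full scale
    return max(0, min(127, round(-dbov)))

def generate_comfort_noise(level_byte, n, rng=random):
    """Receiver side: synthesize n samples of white noise at the signaled level."""
    rms = FULL_SCALE * 10 ** (-level_byte / 20)
    return [rng.gauss(0.0, rms) for _ in range(n)]
```

For example, background noise with an RMS value of about 100 is signaled as roughly 50 (-50 dBov), and the receiver then plays Gaussian white noise of about the same RMS.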

Delay, Jitter Buffers, and Error Concealment

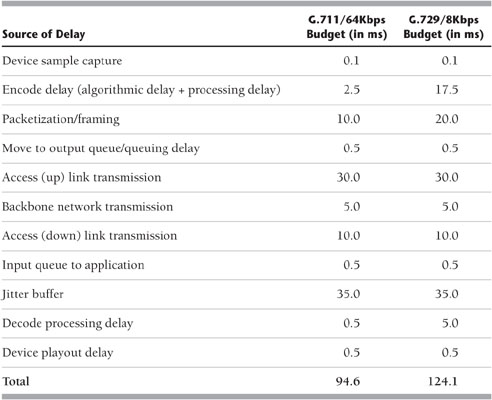

Table 9.1 shows some of the myriad sources of delay associated with VoIP; the two rightmost columns give the figures for two different codec standards. As you can see, delay is introduced by the device sample capture, followed by encoding, packetizing and framing, queuing, the access uplink and downlink, transmission over the backbone, and the jitter associated with transmission, decoding, and, ultimately, playout. The resulting budget is fairly high: 94.6 milliseconds with G.711 at 64Kbps and 124.1 milliseconds with G.729 at 8Kbps. That’s very large, especially when you consider the recommended standards for maximum delay. The ITU-T, for purposes of voice, recommends a maximum delay of 100 milliseconds end to end, with a preference, of course, for much less; the G.711 budget comes close to that limit, and the G.729 budget exceeds it.

Table 9.1 Standards for IP Voice Delay

Delay is very important in real-time communications. For instance, for video, the ITU-T recommendation is 80 milliseconds maximum end-to-end delay. And for interactive applications such as teleimmersion or videogames, the tolerance for delay is only 30 to 50 milliseconds maximum end to end. Adequately addressing QoS for voice therefore means managing both end-to-end delay and jitter.

As mentioned earlier in this chapter, speech is inherently isochronous. It occurs in real time, requires a fixed amount of bandwidth, and is not very tolerant of delays or losses. Transmitters emit packets at fixed intervals, but packet networks have queues, so receivers see interarrival jitter. What do we do about this? The solution is jitter buffers, which come in two varieties: fixed and adaptive. A fixed jitter buffer sets the playout point some fixed number of packets in the future, in the hope that no packets arrive later than the playout point. A packet that arrives after its playout point is as good as lost, so there is a delicate tradeoff between delay-induced impairment and loss-induced impairment. Fixed jitter buffers are useful when delay doesn’t matter, as with Internet radio, or with nonstop sources, such as fax. With adaptive jitter buffers, the ideal is to converge to minimal delay with zero loss. Moving the playout point requires breathing room, so adjustments are made at talk-spurt boundaries or during silence. Adaptive jitter buffers can also do fancy audio processing, such as speeding up or slowing down the playout rate. They rely on algorithms that keep the playout point close to the measured jitter, and they back off (increase the playout delay) when loss occurs.
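The adaptive approach can be illustrated with a classic estimator from the adaptive-playout literature: keep exponentially weighted averages of each packet's delay and of its deviation, and set the playout delay to the average plus a safety margin of several deviations, recomputing it only at talk-spurt boundaries. The class name and the defaults (alpha = 0.998, beta = 4) are assumptions for this sketch; times are in milliseconds.

```python
class AdaptivePlayout:
    """Adaptive jitter buffer playout estimator (EWMA of delay and delay variation)."""

    def __init__(self, alpha=0.998, beta=4.0):
        self.alpha = alpha         # smoothing factor: closer to 1 reacts more slowly
        self.beta = beta           # safety margin, in units of the variation estimate
        self.d = None              # smoothed one-way delay estimate (ms)
        self.v = 0.0               # smoothed delay variation (ms)
        self.playout_delay = None  # playout offset currently in force (ms)

    def observe(self, send_ts, recv_ts):
        """Update the estimates with one arriving packet.

        A fixed offset between sender and receiver clocks is folded into the
        delay estimate, so the two clocks need not be synchronized.
        """
        n = recv_ts - send_ts
        if self.d is None:
            self.d = n
        else:
            self.d = self.alpha * self.d + (1 - self.alpha) * n
            self.v = self.alpha * self.v + (1 - self.alpha) * abs(self.d - n)

    def start_talkspurt(self):
        """Move the playout point only at a talk-spurt boundary (during silence)."""
        self.playout_delay = self.d + self.beta * self.v
        return self.playout_delay

    def is_late(self, send_ts, recv_ts):
        """A packet arriving after its playout point is treated as lost."""
        return recv_ts > send_ts + self.playout_delay
```

With a steady 50-millisecond delay the playout delay settles at 50 milliseconds; when delay alternates between 40 and 60 milliseconds, the variation term grows and the playout point moves well beyond 50 milliseconds to avoid late packets.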

Another issue we have to deal with is error concealment. The techniques for this depend on the codec used. With waveform coders, the techniques are to replay the previous packet, to interpolate, and to predict. With CELP coders, the codec’s coefficients are used to predict the gain, pitch, and so on of the missing packet. There are also some advanced adaptation techniques, such as forward error correction (FEC), in which an extra packet carrying FEC data is sent for every group of some number of media packets. As another example, with layered coders, you send higher- or lower-fidelity or different encodings on different streams, and receivers subscribe to the appropriate layers. This is especially valuable for multicast.
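The simplest packet-level FEC scheme sends one parity packet that is the bytewise XOR of a group of media packets, so any single lost packet in the group can be rebuilt from the others; RTP's generic FEC payload format (RFC 5109) is built on this idea. This sketch assumes equal-length payloads, as a constant-bit-rate codec produces, and its function names are hypothetical.

```python
def xor_bytes(a, b):
    """Bytewise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))

def make_parity(packets):
    """Build one parity packet protecting a group of equal-length payloads."""
    size = len(packets[0])
    if any(len(p) != size for p in packets):
        raise ValueError("this sketch assumes equal-length payloads")
    parity = bytes(size)                 # all zeros
    for p in packets:
        parity = xor_bytes(parity, p)
    return parity

def recover(received, parity):
    """Rebuild a single missing payload (marked None) from the survivors and the parity."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) != 1:
        raise ValueError("XOR parity can repair exactly one loss per group")
    rebuilt = parity
    for p in received:
        if p is not None:
            rebuilt = xor_bytes(rebuilt, p)
    repaired = list(received)
    repaired[missing[0]] = rebuilt
    return repaired
```

The cost is one extra packet per group plus a wait for the parity packet before repair, which adds delay; larger groups cost less bandwidth but can repair a smaller share of the losses.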

Media Transport Requirements

Media transport requirements include identification of the payload, the type of codec used, the framing used, timing reconstruction, when to play out each packet within a stream, and how to synchronize multiple streams. Note that an external reference clock is needed to correlate time stamps of different RTP streams. Another requirement is sequencing—that is, how to play out in the correct order and how to detect losses.
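Sequencing relies on the 16-bit RTP sequence number, which wraps around after 65,535, so losses must be counted modulo 2^16. The sketch below extends sequence numbers across the wrap and counts expected versus received packets, in the spirit of the receiver logic in the RFC 3550 appendix; it omits that appendix's probation and resynchronization handling, and the names are illustrative.

```python
def seq_delta(prev, cur):
    """Signed distance from prev to cur in 16-bit RTP sequence-number space."""
    d = (cur - prev) & 0xFFFF
    return d - 0x10000 if d >= 0x8000 else d

class SequenceTracker:
    """Counts expected vs. received RTP packets across sequence-number wraparound."""

    def __init__(self):
        self.base = None     # extended sequence number of the first packet
        self.max_ext = None  # highest extended sequence number seen so far
        self.received = 0

    def update(self, seq):
        if self.max_ext is None:
            self.base = self.max_ext = seq
        else:
            ext = self.max_ext + seq_delta(self.max_ext & 0xFFFF, seq)
            if ext > self.max_ext:
                self.max_ext = ext  # in order, possibly after a gap or a wrap
            # otherwise the packet is late or reordered; it still counts as received
        self.received += 1

    def lost(self):
        """Packets expected minus packets received (duplicates can make this negative)."""
        if self.max_ext is None:
            return 0
        return (self.max_ext - self.base + 1) - self.received
```

For example, receiving sequence numbers 65533, 65534, 65535, 1, 2 yields one lost packet (number 0), while a reordered run such as 10, 12, 11, 13 yields none.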

The media transport functions include moving bits, which is accomplished with RTP and SRTP. Feedback and statistics need to be provided as well, and this is accomplished using RTCP as well as RTCP XR (RTCP Reporting Extensions, RFC 3611), which was developed by the IETF’s AVT (Audio/Video Transport) working group.

These are the main transport protocols:

- RTP—RTP, specified in RFC 3550, is a transport protocol for real-time applications. It carries the media for all the major VoIP signaling protocols, and it typically runs over User Datagram Protocol (UDP). RTP provides media transport functions as well as additional features: It is multicast friendly and easy to encrypt, it provides QoS feedback with RTCP, and it requires minimal session control.

- RTCP—RTCP is based on the periodic transmission of control packets to all participants in the session, using the same distribution mechanism as the data packets. RTCP provides feedback on the quality of the data distribution. This is an integral part of RTP’s role as a transport protocol and is related to the flow and congestion control functions of other transport protocols.

- SRTP—There is considerable interest in the use of SRTP, a relatively new protocol, to prevent eavesdropping on VoIP calls. SRTP permits the voice packet payload to be encrypted. It also allows other protocol messages, such as RTCP messages, to be protected. Encryption protects privacy. However, diagnostic troubleshooting tools cannot analyze encrypted voice payload. That’s where RTCP Reporting Extension (RTCP XR) comes in. It allows information hidden by SRTP to be extracted directly from the digital signal processing software, IP phones, and gateways and reported directly in the RTCP XR message. Of course, this assumes that the RTCP XR messages themselves are not encrypted.

  The advantages of SRTP are that it provides both privacy via encryption and authentication via message integrity checking. It adds very little bandwidth overhead, and it uses modern, strong cryptographic suites, such as Advanced Encryption Standard (AES) counter mode for encryption and HMAC-SHA1 (Keyed-Hashing for Message Authentication) for message integrity.
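To show what RTP adds to each packet, the following sketch packs and parses the 12-byte fixed RTP header defined in RFC 3550 (version, padding, extension, CSRC count, marker, payload type, sequence number, timestamp, and SSRC). It ignores CSRC lists and header extensions, and the function names are illustrative. Payload type 0 is the static assignment for G.711 µ-law (PCMU).

```python
import struct

RTP_FIXED_HEADER = struct.Struct("!BBHII")  # network byte order, 12 bytes

def pack_rtp_header(payload_type, seq, timestamp, ssrc, marker=False):
    """Build a fixed RTP header: V=2, no padding, no extension, no CSRCs."""
    byte0 = 2 << 6                                    # version 2 in the top two bits
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)
    return RTP_FIXED_HEADER.pack(byte0, byte1, seq & 0xFFFF,
                                 timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)

def parse_rtp_header(data):
    """Decode the fixed part of an RTP header into a dict."""
    if len(data) < RTP_FIXED_HEADER.size:
        raise ValueError("RTP header is at least 12 bytes")
    byte0, byte1, seq, ts, ssrc = RTP_FIXED_HEADER.unpack_from(data)
    csrc_count = byte0 & 0x0F
    return {
        "version": byte0 >> 6,
        "padding": bool(byte0 & 0x20),
        "extension": bool(byte0 & 0x10),
        "csrc_count": csrc_count,
        "marker": bool(byte1 & 0x80),
        "payload_type": byte1 & 0x7F,
        "seq": seq,
        "timestamp": ts,
        "ssrc": ssrc,
        "header_len": 12 + 4 * csrc_count,            # where the payload would begin
    }
```

For G.711 with 20-millisecond packets, the timestamp advances by 160 per packet (160 samples at 8,000 samples per second) while the sequence number advances by 1.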

IPT QoS

QoS is managed unfairness because some users purposely get better service than others. As discussed further in Chapter 10, QoS is needed to address the stringent delay requirements of voice traffic.

Several techniques, including the following, can be used to improve various aspects of a network’s QoS:

- Random Early Detection (RED)—RED is a queue management and congestion avoidance algorithm. RED monitors the average queue size and drops packets based on statistical probabilities. If the buffer is almost empty, all incoming packets are accepted. As the queue grows, the probability of dropping an incoming packet grows, too. On a shared voice/data queue, RED does not prevent large delay and jitter for voice. RED is primarily effective for avoiding congestion, and it works best for congestion-responsive flows such as TCP. But voice is constant bit rate, is therefore not usually congestion responsive, and uses UDP. So although RED is a good mechanism for many network applications, it is not the most effective for IP voice.

- Weighted Fair Queuing (WFQ)—With WFQ, each flow gets a share of the server link bandwidth in proportion to its weight. The per-packet delay guarantee of a WFQ scheduler depends on the accuracy of the WFQ implementation.

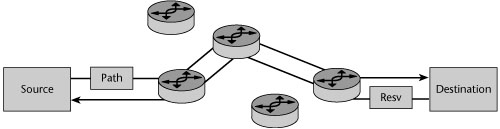

- IntServ RSVP architecture—As discussed in Chapter 10, RSVP is the primary specification for handling multimedia traffic over IP subnets (see Figure 9.3). RSVP messages are carried directly over IP, with UDP encapsulation available as an option. RSVP enhances connectionless best-effort service by providing QoS requests and guarantees. There are two main classes of service: RFC 2211 specifies the controlled-load service, which approximates best-effort service on a lightly loaded network, and RFC 2212 specifies the guaranteed class of service (CoS), offering bandwidth and delay guarantees. RSVP relies on a router-to-router signaling scheme, which allows IP applications to request priority, delay, and bandwidth guarantees. The reservation is established link by link and is denied if a router cannot accept the request. Signaling is distinct from routing. Transparent operation across non-RSVP routers is possible. RSVP supports shared and distinct reservations, applies to unicast and multicast applications, and is simplex and receiver oriented. RSVP is well suited for real-time applications and delay-sensitive traffic. However, it does not scale well in large networks, so it is not used much in WANs.

Figure 9.3 VoIP QoS: IntServ RSVP

- IP precedence—IP precedence is the poor man’s approach to QoS. The IP precedence or DiffServ Code Point (DSCP; discussed in detail in Chapter 8, “The Internet and IP Infrastructures”) is set higher on voice packets. This puts them in a different queue, isolating them from best-effort traffic. The marking can be done by the endpoint, by a proxy, or by routers using heuristics. IP precedence scales better than RSVP, and it keeps QoS control local. It pushes the work to the network edges and can provide QoS by either customer or network. However, it has no admission control, so too much high-precedence traffic can still swamp the network.

- DiffServ—DiffServ employs a small, well-defined set of building blocks from which a variety of services can be built. Although DiffServ evolved from the IntServ architecture, DiffServ is a prioritization model with preferential allocation of resources based on traffic classification. DiffServ uses the DSCP to carry information about IP packet service requirements. It classifies traffic by marking IP headers at ingress to the network with flags corresponding to a small number of per-hop behaviors. In other words, queues get different treatments in terms of priority, share of bandwidth, and probability of discard. The DiffServ architecture assumes a relatively small number of feasible queue-scheduling algorithms for high link speeds. It also assumes a large number of individual flows, with many different rules, often being policy driven. The nodes at the network boundaries do the hard work: They group packets explicitly by the per-hop behavior they are to receive (the queue service, the classification, and the conditioning and policing of those packets). The nodes in the middle of a cloud only have to deal with traffic aggregates. QoS is managed by local rules within the cloud, and QoS information exchange is limited to boundaries. It is bilateral, not multilateral, and it is not necessarily symmetric. Inter–service provider DiffServ and end-to-end Internet QoS need further standardization and commercial arrangements.

- Compressed Real-Time Transport Protocol (CRTP)—CRTP compresses the combined IP, UDP, and RTP headers. Normally, the IP header is 20 bytes, the UDP header is another 8 bytes, and the RTP header is 12 bytes, resulting in a 40-byte header. CRTP can reduce this to 2 to 4 bytes, which is especially useful on slow links.

- Multi-Class Multi-Link (MCML) PPP—The idea behind MCML PPP is that the latency of waiting behind a single large packet is unacceptable on slow links. A 1,500-byte maximum transmission unit (MTU) takes about 215 milliseconds to transmit on a 56Kbps link, which is significantly more than the recommended delay. MCML PPP allows multiple fragment streams on a multilink PPP session. It sets the fragment size to match the delay budget of the link (e.g., a 128-byte fragment takes about 18 milliseconds). Small voice packets are interleaved between the fragments of big packets by applying WFQ at the fragment level.
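The MCML PPP figures are simple serialization arithmetic: Transmission time equals frame size in bits divided by link rate. The sketch below reproduces them for a 56Kbps link and also shows the effect of CRTP on a G.729 packet, assuming a 20-byte payload (20-millisecond packetization) and a 4-byte compressed header; the function names are hypothetical.

```python
def serialization_ms(size_bytes, link_bps):
    """Time in milliseconds to clock a frame of size_bytes onto a link of link_bps."""
    return size_bytes * 8 * 1000 / link_bps

def max_fragment_bytes(delay_budget_ms, link_bps):
    """Largest fragment whose serialization time fits within the delay budget."""
    return int(delay_budget_ms * link_bps // 8000)

LINK_BPS = 56_000
print(f"1,500-byte MTU: {serialization_ms(1500, LINK_BPS):.1f} ms")                 # 214.3 ms
print(f"128-byte fragment: {serialization_ms(128, LINK_BPS):.1f} ms")               # 18.3 ms
print(f"fragment for a 10 ms budget: {max_fragment_bytes(10, LINK_BPS)} bytes")     # 70 bytes
print(f"G.729, 40-byte headers: {serialization_ms(20 + 40, LINK_BPS):.1f} ms")      # 8.6 ms
print(f"G.729, 4-byte CRTP header: {serialization_ms(20 + 4, LINK_BPS):.1f} ms")    # 3.4 ms
```

The same arithmetic explains why both techniques target slow links: On a multimegabit link, a full-size MTU serializes in a few milliseconds, and the 36 bytes saved by CRTP matter far less.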

VoIP Call-Signaling Protocols

Three major VoIP protocols are used in call signaling. The first standard applied to support interoperability between VoIP systems was the ITU-T’s H.323. While it has the advantage of being a mature standard, there are some negatives associated with it, including its complexity, its tuning for PC conferencing rather than telephony, its very bad scaling properties, and its difficulty working over firewalls. The second protocol that came into play is MGCP, which advocates a centralized control architecture. It is fairly simple: It models current PSTN call control architecture but requires high-reliability and high-availability call servers. The third protocol is SIP, which advocates a decentralized control architecture. SIP is transaction based, which means it is a good match for what’s called the stupid network paradigm, where it is relatively easy to build in scalability and reliability and there are built-in security mechanisms.

H.323

H.323 is a protocol suite defined by the ITU-T for voice transmission over packet networks such as the Internet. First published in 1996, H.323 reached Version 5 in 2003. In addition to voice applications, H.323 provides mechanisms for video communication and data collaboration. H.323 defines a centralized architecture for creating multimedia applications that use VoIP, and it is an umbrella specification that includes various other ITU standards.

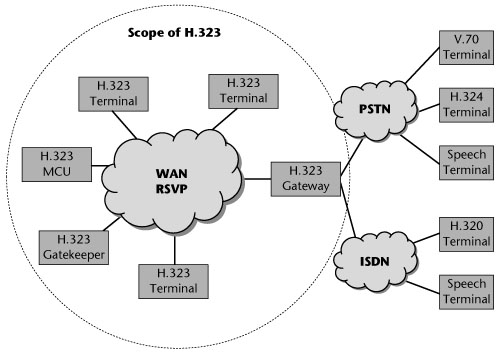

The H.323 architecture includes the following components (see Figure 9.4):

- Terminals—A terminal is the end device of every connection. It provides real-time two-way communications with another H.323 terminal, gateway, or MCU. This communication consists of speech, speech and data, speech and video, or a combination of speech, data, and video.

- Gateways—Gateways establish the connections between the terminals in the H.323 network and the terminals that belong to networks with different protocol stacks, such as the traditional PSTN network, SIP, or Megaco endpoints.

- Gatekeepers—Gatekeepers are responsible for translating between telephone numbers and IP addresses. They also manage the bandwidth and provide mechanisms for terminal registration and authentication. A gatekeeper also provides services such as call transfer and call forwarding. The main gatekeeper functions fall into two categories: mandatory services and optional services. Mandatory services include address translation, admission control, bandwidth control, and zone management. Optional services include call control signaling, call authorization, bandwidth management and reservation, call management, and directory services.

- Multipoint control units (MCUs)—MCUs establish multipoint conferences. An MCU consists of a mandatory multipoint controller (MC), which handles call signaling and conference control, and an optional multipoint processor (MP), which handles switching or mixing of media streams and sometimes real-time transcoding of the received audio/video streams.

Figure 9.4 The H.323 architecture

Five types of information exchange are enabled in the H.323 architecture: audio; digitized voice; digitized video; data, including files or images; and communication control, which involves the exchange of supported functions, control of logical channels, and control of connections and sessions (i.e., the setup and teardown).

H.323 has a number of strengths. For one thing, it is an ITU standard. In addition, it is a mature protocol with many large-scale deployments. It has widespread vendor support and market acceptance, and it facilitates interoperability between vendors. Finally, it has defined standards for supplementary services, the network retains the call state for the duration of the call (providing greater call control for the user), and application services are available through the gatekeeper and best-of-breed application platforms.

H.323 suffers from some limitations as well: As mentioned earlier, it is complex, it is tuned for PC conferencing rather than telephony, it has very bad scaling properties, and getting it to work reliably over firewalls is quite a challenge, especially if both parties in a call are behind firewalls (e.g., only four TCP connections per call are allowed). Maintaining call state in the network actually increases the cost to scale. Many soft phones are proprietary, so they are not widely deployed.

Megaco and MGCP

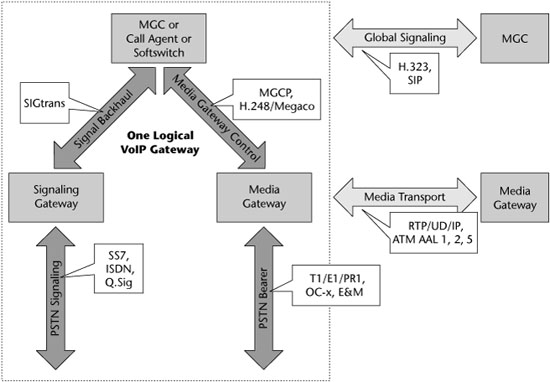

Both Megaco (Media Gateway Control) and MGCP (Media Gateway Control Protocol) are protocols for control of elements in a physically decomposed multimedia gateway, which enables separation of call control from media conversion. This means that the system is composed of a call agent, at least one media gateway whose function is to perform the conversion of media signals between circuits and packets, and at least one signaling gateway when connected to the PSTN. Both Megaco and MGCP are media/device control protocols. They both embrace a philosophy in which the network is smart and the endpoint is dumb. Services are provided by intelligent network elements.

So why are there two standards, Megaco and MGCP? As is usually the case, it is the result of different parties with different interests and agendas. Megaco was created by joint efforts of the IETF and the ITU. Megaco is the IETF name and is defined in IETF RFC 3525. Within the ITU it is known as ITU Recommendation H.248. MGCP originated with Cisco and is defined in an informational (nonstandard) IETF document, RFC 3435. Megaco is less widely implemented than MGCP. Megaco and MGCP are client/server protocols, so they allow service providers to have more control over subscribers through the use of more network intelligence. This is in contrast to H.323 and SIP, which are both peer-to-peer protocols. With peer-to-peer protocols, all the intelligence is distributed to the network edge, embedded in the terminating devices or endpoints; this requires only a simple core network and offers great scalability, but it reduces the network’s control over the user.

Megaco addresses the relationship between a media gateway, which converts circuit-switched voice to packet-based traffic, and a media gateway controller (also referred to as a call agent or softswitch), which dictates the service logic of that traffic. Megaco instructs a media gateway to connect streams coming from outside a packet or cell data network onto a packet or cell stream such as RTP. Megaco is similar to MGCP from an architectural standpoint and in terms of the controller-to-gateway relationship, but Megaco supports a broader range of networks, such as ATM.

As shown in Figure 9.5, MGCP is a VoIP protocol used between elements of a decomposed multimedia gateway that consists of a call agent, which contains the call control intelligence, and a media gateway, which contains the media functions (i.e., conversion from TDM voice to VoIP). Media gateways contain endpoints on which the call agent can create, modify, and delete connections in order to establish and control media sessions with other multimedia endpoints. A media gateway is typically a network element that provides conversion between the audio signals carried on telephone circuits and data packets carried over the Internet or over other packet networks. The call agent can instruct the endpoints to detect certain events and generate signals. The endpoints automatically communicate changes in service state to the call agent. Furthermore, the call agent can audit endpoints as well as the connections on endpoints. MGCP assumes a call control architecture where the call control intelligence is outside the gateways and handled by call agents. It assumes that call agents will synchronize with each other to send coherent commands and responses to the gateways under their control. MGCP does not define a mechanism for synchronizing call agents. It is, in essence, a master/slave protocol where the gateways are expected to execute commands sent by the call agent.

Figure 9.5 Megaco and MGCP

MGCP assumes a connection model where the basic constructs are endpoints and connections. Endpoints are sources and/or sinks of data and can be physical or virtual. Creation of physical endpoints requires hardware installation, while creation of virtual endpoints can be done by software. Connections can be either point to point or multipoint. A point-to-point connection is an association between two endpoints with the purpose of transmitting data between those endpoints. When that association is established for both endpoints, data transfer between them can take place. A multipoint connection is created by connecting the endpoint to a multipoint session. Connections can be established over several types of bearer networks. In the MGCP model, the gateways focus on the audio signal translation function, and the call agent handles the call-signaling and call-processing functions.
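MGCP commands are plain-text lines, so the connection model is easy to see on the wire. The sketch builds a CreateConnection (CRCX) command that asks an endpoint to create a connection within a call and parses the gateway's reply, following the message layout of the RFC 3435 examples. The endpoint name, call ID, and transaction ID are hypothetical, and the parser stops at the blank line that precedes any SDP body the gateway returns.

```python
def build_crcx(transaction_id, endpoint, call_id,
               packetization_ms=20, codec="PCMU", mode="recvonly"):
    """Call agent side: ask a gateway endpoint to create a connection."""
    lines = [
        f"CRCX {transaction_id} {endpoint} MGCP 1.0",
        f"C: {call_id}",                          # call the connection belongs to
        f"L: p:{packetization_ms}, a:{codec}",    # local connection options
        f"M: {mode}",                             # connection mode
    ]
    return "\r\n".join(lines) + "\r\n"

def parse_response(text):
    """Return (response code, transaction ID, parameter dict) from a gateway reply."""
    header, *rest = text.splitlines()
    code, tid = header.split(" ", 2)[:2]
    params = {}
    for line in rest:
        if not line:
            break                                 # blank line: an SDP body follows
        name, _, value = line.partition(":")
        params[name.strip()] = value.strip()
    return int(code), int(tid), params

request = build_crcx(1204, "aaln/1@gw.example.net", "A3C47F21456789F0")
```

A reply such as "200 1204 OK" followed by an "I:" line tells the call agent that the connection exists and gives the connection identifier it will use in later modify or delete commands.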

SIP

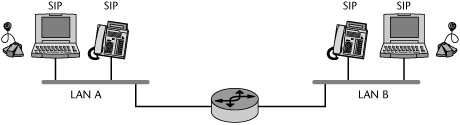

SIP, which was originally standardized in RFC 2543 and is now defined by RFC 3261, is a peer-to-peer protocol in which end devices, known as user agents, initiate sessions. SIP is designed in conformance with the Internet model. It is an end-to-end signaling protocol, which means that all the logic is stored in end devices, except the routing of SIP messages. State is also stored in end devices only. There is no single point of failure with SIP, and networks designed this way scale well. The price of this distributed, scalable design is higher message overhead, because messages are sent end to end. The aim of SIP is to provide the same functionality as the traditional PSTN, but with an end-to-end design that makes SIP networks much more powerful and open to the implementation of new services.

SIP is an application-layer control protocol that can establish, modify, and terminate multimedia sessions. (Examples of a session include Internet telephone calls, distribution of multimedia, multimedia conferences, and distribution of computer games.) SIP can also invite participants to already existing sessions, such as a multicast conference. Media can be added to and removed from an existing session. SIP transparently supports name-mapping and redirection services, which make personal mobility possible. Users can maintain a single externally visible identifier, regardless of their network locations.

SIP supports five facets of establishing and terminating multimedia communications: user location (the determination of the end system to be used for communication), user availability (the determination of the willingness of the called party to engage in communications), user capabilities (the determination of the media and media parameters to be used), session setup (the establishment of session parameters at both the called and calling parties), and session management (the transfer and termination of sessions, modification of session parameters, and invocation of services).

SIP can be used with other IETF protocols to build a complete multimedia architecture. For example, it can be used with RTP for transporting real-time data and providing QoS feedback, with Real-Time Streaming Protocol (RTSP) for controlling delivery of streaming media, with MGCP for controlling gateways to the PSTN, or with Session Description Protocol (SDP) for describing multimedia sessions. Although SIP should be used in conjunction with other protocols to provide complete services to users, the basic functionality and operation of SIP do not depend on any other protocols.

SIP, which works with both IPv4 and IPv6, operates as follows for Internet telephony sessions: Callers and callees are identified by SIP addresses. When making a SIP call, a caller locates the appropriate server and then sends a SIP request. The most common SIP operation is the invitation. Instead of directly reaching the intended callee, a SIP request may be redirected or may trigger a chain of new SIP requests by proxies. Users can register their locations with SIP servers; SIP addresses can be embedded in Web pages and, therefore, can be integrated as part of powerful applications such as click-to-talk.

The purpose of SIP is just to make the communication possible (see Figure 9.6). The communication itself must be achieved by another means and possibly another protocol. As mentioned earlier, two protocols that are most often used along with SIP are RTP and SDP. RTP is used to carry the real-time multimedia data, including audio, video, and text. RTP makes it possible to encode and split the data into packets and transport the packets over the Internet. SDP is used to describe and encode the capabilities of session participants. This description is then used to negotiate the characteristics of the session so that all the devices can participate. For example, the description is used in negotiation of the codecs used to encode media so all the participants will be able to decode it and in negotiation of the transport protocol to be used.
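A minimal INVITE carrying an SDP offer shows how SIP and SDP divide the work: The SIP headers route and identify the request, while the SDP body describes the media (here, one G.711 µ-law audio stream over RTP). The addresses use reserved example domains and documentation IP addresses, and the helper names are hypothetical; a real user agent would also handle responses, retransmissions, and authentication.

```python
import uuid

def build_sdp_offer(ip, rtp_port, session_id=2890844526):
    """SDP offer for one audio stream using G.711 µ-law (static RTP payload type 0)."""
    return "\r\n".join([
        "v=0",
        f"o=- {session_id} {session_id} IN IP4 {ip}",
        "s=-",
        f"c=IN IP4 {ip}",
        "t=0 0",
        f"m=audio {rtp_port} RTP/AVP 0",
        "a=rtpmap:0 PCMU/8000",
    ]) + "\r\n"

def build_invite(caller, callee, local_ip, local_port=5060, rtp_port=49170):
    """Build a SIP INVITE with the headers RFC 3261 requires plus an SDP body."""
    body = build_sdp_offer(local_ip, rtp_port)
    branch = "z9hG4bK" + uuid.uuid4().hex[:12]   # RFC 3261 branch values start with this cookie
    tag = uuid.uuid4().hex[:8]
    call_id = f"{uuid.uuid4().hex}@{local_ip}"
    user = caller.split("@")[0]
    headers = [
        f"INVITE sip:{callee} SIP/2.0",
        f"Via: SIP/2.0/UDP {local_ip}:{local_port};branch={branch}",
        "Max-Forwards: 70",
        f"To: <sip:{callee}>",
        f"From: <sip:{caller}>;tag={tag}",
        f"Call-ID: {call_id}",
        "CSeq: 1 INVITE",
        f"Contact: <sip:{user}@{local_ip}:{local_port}>",
        "Content-Type: application/sdp",
        f"Content-Length: {len(body.encode('utf-8'))}",
    ]
    return "\r\n".join(headers) + "\r\n\r\n" + body

invite = build_invite("alice@example.com", "bob@example.org", "192.0.2.10")
```

The callee answers with its own SDP in a 200 OK, and the two sides then exchange RTP directly using the addresses, ports, and codec agreed on in the offer and answer.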

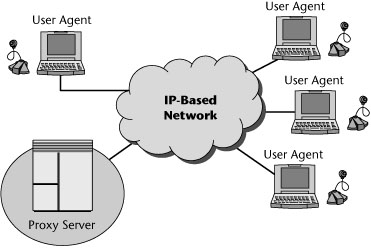

SIP Network Elements

Basic SIP elements include user agents, proxies, registrars, and redirect servers (see Figure 9.7). User agents usually, but not necessarily, reside on a user’s computer in the form of an application; user agents can also be cellular phones, PSTN gateways, PDAs, automated interactive voice response (IVR) systems, and so on. A user agent is often described in terms of two roles: the user agent server (UAS) and the user agent client (UAC). The UAS and UAC are logical entities only; each user agent actually contains both. The UAC is the part of the user agent that sends requests and receives responses; the UAS is the part of the user agent that receives requests and sends responses.

Figure 9.7 SIP network elements

A proxy server is an optional SIP component. Proxy servers handle routing of SIP signaling, but they don’t initiate SIP messages. User agents can send messages to a proxy server. The most important task of a proxy server is to route session invitations closer to the callee. The session invitation usually traverses a set of proxies until it finds one that knows the actual location of the callee. Such a proxy then forwards the session invitation directly to the callee, and the callee then accepts or declines the session invitation.

A registrar server is another optional SIP component. It is a special SIP entity that handles registration from SIP user agents but does not route SIP messages. It receives registrations from users, extracts information about their current locations (such as IP address, port, and user name), and then stores the information in local databases. A registrar is very often a logical entity only and is usually collocated with proxy servers.

A redirect server is yet another optional SIP component. A redirect server receives requests and looks up the intended recipient of a request in the location database created by a registrar. It then creates a list of current locations of the user and sends this list to the request originator. The redirect server does not route SIP messages; rather, it returns a redirect to the user agent for direct routing.
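The registrar and redirect server described above can share one location database. The sketch stores address-of-record bindings with expiry times and answers lookups the way a redirect server would: with 302 (Moved Temporarily) and a contact list, or with 404 (Not Found). It is an in-memory toy; the class and method names are hypothetical, and there is no authentication or persistence.

```python
import time

class LocationService:
    """Toy location database shared by a registrar and a redirect server."""

    def __init__(self, clock=time.monotonic):
        self.bindings = {}   # address-of-record -> {contact URI: expiry time}
        self.clock = clock

    def register(self, aor, contact, expires=3600):
        """Registrar role: store a contact binding, or remove it when expires is 0."""
        contacts = self.bindings.setdefault(aor, {})
        if expires == 0:
            contacts.pop(contact, None)
        else:
            contacts[contact] = self.clock() + expires

    def redirect(self, aor):
        """Redirect-server role: return (status code, live contacts) instead of forwarding."""
        now = self.clock()
        live = [c for c, exp in self.bindings.get(aor, {}).items() if exp > now]
        if not live:
            return 404, []   # Not Found
        return 302, live     # Moved Temporarily; the caller retries at these contacts
```

A proxy could consult the same database but forward the INVITE to the contact itself, which is the practical difference between the proxy and redirect roles.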

SIP and Presence Systems

Presence is defined as the ability, willingness, desire, and capability of a user to communicate across media and end devices and even time and space. Presence systems collect and distribute presence information to interested parties such as other users or applications. Policy (that is, who is allowed to see what and when) is central to presence. The value of presence is based on the richness of the data it has access to. Presence is the ability to see in real time where someone is, how that person prefers to be reached, and even what the person is doing.

Today, only one in seven business calls is completed successfully. Instead, we get busy signals or are routed to voicemail. Users spend a lot of time trying many different numbers for the same person and calling many numbers in succession, trying to reach someone. Presence, therefore, has great value in improving the productivity of an organization because it can see ahead of time whether a call will succeed. It can tell the caller which is the best way to reach a user, and it can allow a caller to quickly see who among a set of candidates is available for a call.

Accuracy is paramount to presence. The greater the accuracy, the greater the value of presence. The worse the accuracy, the lower the value. Productivity enhancement depends on accurate presence data, and accuracy is achieved by combining multiple sources of presence data. There are many sources for presence data within an enterprise, such as detecting whether somebody is on a call or off a call, based on information from enterprise phones; determining whether a person is in a meeting or out of a meeting, based on information from the enterprise calendar systems; determining whether a person is in a conference or out of a conference, based on enterprise conferencing systems; or determining whether a person has logged in or logged out of the enterprise IM system.

Enterprise presence applications show presence on IP PBX phones and on PC-based IM applications. They can also support presence-based call routing as well as voicemail ringback. Two competing standards are involved in presence. SIMPLE (SIP for Instant Messaging and Presence Leveraging Extensions) is a set of SIP extensions for IM and presence supported by Microsoft, IBM, Sun, and Novell. The competing Extensible Messaging and Presence Protocol (XMPP) is an XML-based, extensible open standard that drives the Jabber IM client (www.jabber.com).

For all the good news about presence, there are also arguments against it. Most revolve around IM and the perception that it is intrusive and, at times, invasive. Most companies today use some form of IM, mostly through public services. The industry forecasts that we are only one to three years away from embedding presence in enterprise applications. Of course, standards, privacy, and security remain major concerns.

SIP is creating new opportunities for platform providers that are introducing new soft phones. Proprietary soft phones incorporate SIP-based presence capabilities across both voice and IM, secure SIP-based IM behind the corporate firewall, IM archiving, click-to-talk voice functionality, speech-to-text functionality, and specialty modes for road warriors and telecommuters. There are also open-source SIP products. Not only do platform vendors have to worry about soft phones displacing their high-priced hard phones, but they also have to worry about losing market share to open-source providers or to Microsoft.

Currently, SIP has no video specifications. When a vendor states that it uses SIP for video, this means only that SIP is used for signaling; it does not imply interoperability with other SIP video clients. In the near term, desktop video will be dominated by SIP-based endpoints, while the traditional group conferencing market will remain H.323 based; the two will meet through SIP-to-H.323 gateways, as needed.

The SIP Forum (www.sipforum.org) and the IETF SIP protocol process are making it possible for many new SIP-based products to enter the market. SIP could disrupt the handset business, blurring the distinction between desk phones and mobiles because SIP can cross many boundaries, including desk phones, mobiles, PCs, PDAs, soft phones, intelligent appliances, and wearables.

SIP’s Strengths and Weaknesses

SIP’s strengths include tremendous industry support and widespread development. SIP soft phones, Ethernet phones, and application servers are available to integrate with IP clients and IM. SIP facilitates application development, and only minimal call state is maintained in the network. SIP is central to all the latest solutions for telephony, ranging from hosted services to proprietary platforms, and from handsets to soft phones to open-source software. The concepts behind SIP are disruptive and much more dramatic than VoIP on its own. The IETF still has a substantial amount of work to do to replicate in SIP the hundreds of TDM PBX calling features, but SIP is not just telephony: It involves many applications, such as presence, that enable capabilities that are not possible in the TDM world.

One shortcoming of SIP is the current shortage of commercial and large-scale deployments, which results in a lack of real-world experience. Another problem is the lack of call state in the network, which complicates billing and security. And of course, SIP is a moving target, with rapid development in progress. It is likely to be three to five years before presence and real-time communication standards stabilize.

ENUM: Telephone Number Mapping

How do you find a telephone number on the Internet? How do you find islands of connectivity across domain boundaries? The answer is E.164 Number Mapping (ENUM). ENUM, originally defined in RFC 2916 and later superseded by RFC 3761, is an IETF protocol that will assist in the convergence of the PSTN and the IP network. It maps a telephone number from the PSTN to Internet services: You put a telephone number in and get a URL out.

ENUM was developed as a solution to the question of how to find services on the Internet by using only a telephone number and how telephones, which have an input mechanism limited to 12 keys on a keypad, can be used to access Internet services. ENUM resolves a complete international telephone number to a series of URLs by using an architecture based on the Domain Name System (DNS). ENUM puts telephone numbers into the DNS, so it allows for a wide range of applications based solely on a phone number. Probably the most exciting application is an improvement in VoIP. Other applications include addressing for fax machines, e-mail, IM, and Web sites. The possibilities are enormous.

The ENUM protocol is the result of the work of the IETF's Telephone Number Mapping working group (www.enum.org). The charter of this group was to define a DNS-based architecture and protocols for mapping a telephone number to a uniform resource identifier (URI) that can be used to contact a resource associated with that number. (URI syntax is defined in RFC 2396.) The protocol itself is defined in a standards-track RFC. It builds on ITU-T Recommendation E.164, which describes the international public telecommunication numbering plan.

How ENUM Works

ENUM makes extensive use of Naming Authority Pointer (NAPTR) resource records, defined in RFC 2915, to identify the available services for contacting a specific node identified by an E.164 number. In a nutshell, ENUM involves the following steps:

1. ENUM turns a phone number into a fully qualified domain name (FQDN). It first adds the city (or area) code and the country code. For example, 555-1234 dialed in Washington, DC, becomes +1-202-555-1234, where 202 is the area code, 1 is the North American country code, and the + indicates that the number is a fully qualified E.164 number. ENUM then removes all characters except the digits and reverses their order (e.g., +1-202-555-1234 becomes 43215552021). Finally, it places dots between the digits and appends the domain E164.ARPA (e.g., 4.3.2.1.5.5.5.2.0.2.1.E164.ARPA).

2. ENUM issues a DNS query on the FQDN created in step 1.

3. DNS returns a list of URIs that contain information about what resources, services, and applications are associated with that specific phone number.
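Step 1 is pure string manipulation, so it fits in a few lines of Python. The helper names are hypothetical. `to_e164` assumes a simple North American-style dial plan in which the country and area codes are simply prefixed to the dialed number. Steps 2 and 3 would then query DNS for NAPTR records at the resulting name. That requires a resolver library and is not shown here.

```python
import re


def to_e164(dialed: str, country_code: str, area_code: str) -> str:
    """Expand a locally dialed number into +<country><area><subscriber>.

    Naive prefixing (an assumption); real dial plans are more complex.
    """
    return "+" + country_code + area_code + re.sub(r"\D", "", dialed)


def e164_to_enum_domain(number: str, suffix: str = "e164.arpa") -> str:
    """Turn a fully qualified E.164 number into its ENUM domain name."""
    if not number.startswith("+"):
        raise ValueError("expected a fully qualified E.164 number beginning with '+'")
    digits = re.sub(r"\D", "", number)          # drop '+', dashes, spaces
    return ".".join(reversed(digits)) + "." + suffix


full = to_e164("555-1234", country_code="1", area_code="202")
print(full)                       # +12025551234
print(e164_to_enum_domain(full))  # 4.3.2.1.5.5.5.2.0.2.1.e164.arpa
```

The sketch emits the suffix in lowercase. DNS names are case-insensitive, so this is the same domain as 4.3.2.1.5.5.5.2.0.2.1.E164.ARPA.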

An important feature of the ENUM protocol is that more than one type of contact information can be stored in the DNS record that belongs to a specific ENUM number. An ENUM record associated with The LIDO Organization might contain instructions for a VoIP call (an h323: or sip: URI), a fax call (a fax: URI), and e-mail communications (a mailto: URI). Additional services can be developed in the future and included in ENUM name records. The phone number can therefore serve as the single contact number for multiple types of communication, such as voice, fax, e-mail, mobile, text messaging, location-based services, and Web pages.
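The following Python sketch shows how one DNS entry can carry several kinds of contact information. It processes a set of NAPTR records of the kind step 3 returns. Each record's regexp field is applied to the E.164 number, including its leading +, to produce a URI.

Several details are assumptions:

- The sample records and the example.com addresses are hypothetical.
- The `E2U+<service>` spelling of the services field follows RFC 3761; RFC 2916 wrote it as `sip+E2U`.
- The client logic is simplified. It returns every terminal ("u" flag) match sorted by order and then preference, rather than implementing the full Dynamic Delegation Discovery System (DDDS) rules.

```python
import re
from typing import List, NamedTuple, Optional


class Naptr(NamedTuple):
    """One NAPTR resource record (fields as defined in RFC 2915)."""
    order: int
    preference: int
    flags: str
    services: str
    regexp: str
    replacement: str


def apply_naptr_regexp(regexp: str, aus: str) -> str:
    """Apply a NAPTR regexp field such as '!^.*$!sip:info@example.com!'.

    The first character is the delimiter; the rest splits into pattern,
    substitution, and flags. This naive split does not handle an escaped
    delimiter inside the pattern (a simplification).
    """
    delim = regexp[0]
    _, pattern, subst, flags = regexp.split(delim)
    re_flags = re.IGNORECASE if "i" in flags else 0
    return re.sub(pattern, subst, aus, count=1, flags=re_flags)


def enum_uris(records: List[Naptr], aus: str, wanted: Optional[str] = None) -> List[str]:
    """Return terminal URIs sorted by (order, preference).

    `wanted` optionally filters on an enumservice such as 'sip' or 'mailto'.
    """
    uris = []
    for rec in sorted(records, key=lambda r: (r.order, r.preference)):
        if rec.flags.lower() != "u":
            continue  # non-terminal rules would need further lookups; skipped here
        if wanted and rec.services.lower() != f"e2u+{wanted.lower()}":
            continue
        uris.append(apply_naptr_regexp(rec.regexp, aus))
    return uris


# Hypothetical records for one number; the AUS is the E.164 number with '+'.
SAMPLE_RECORDS = [
    Naptr(10, 100, "u", "E2U+sip", "!^.*$!sip:info@example.com!", "."),
    Naptr(20, 100, "u", "E2U+mailto", "!^.*$!mailto:info@example.com!", "."),
    Naptr(10, 200, "u", "E2U+h323", "!^.*$!h323:info@example.com!", "."),
]

print(enum_uris(SAMPLE_RECORDS, "+12025551234"))
print(enum_uris(SAMPLE_RECORDS, "+12025551234", wanted="mailto"))
```

A calling application would try the URIs in the order returned. An e-mail client would ask only for the mailto entry, while a VoIP client would ask for sip or h323. In each case the application starts from the same telephone number.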

ENUM does not replace the numeric IPv4 or IPv6 addresses used to communicate directly over IP, and it performs no conversion of signaling messages or media streams. Instead, ENUM is a framework for mapping and processing addresses of different network types. It provides another way to determine the destination to be used to initiate communication over a next-generation network. Although ENUM will help facilitate VoIP calls, VoIP calls do not require ENUM, and ENUM implementation is not mandatory, at least not at this point. VoIP calls can be made entirely without ENUM by using their own Internet addressing scheme (e.g., sip:user@host.com).

The Future of ENUM

The Internet Architecture Board (IAB; www.iab.org) and ITU Study Group 2 are discussing a collaboration on the operational administration and delegation issues related to deployment of ENUM-based services. As you can imagine, this requires extensive consultation with administrators of resources derived from the international E.164 numbering plan, including national and international numbering plan administrators.

Under current practice, service providers generally develop their own address-mapping solutions and negotiate their own traffic exchange arrangements among themselves. Experts predict a gradual shift toward public ENUM as the number of IP voice endpoints grows. The following ENUM structures are possible:

- Private ENUM—Service and equipment providers are finding ways to make money on private ENUM, or ENUM-like, proprietary mapping and signaling. ENUM creates an opportunity for a third party to build the technology and then make money by charging fees to all the providers that have numbers stored in the system. In this scenario, the third party would earn fractions of a penny per number, per month. A grander vision would include transport service between VoIP providers, combining call routing and transmission. The service providers would need enough customers and traffic to warrant such services.

- User ENUM—User ENUM gives end users on the Internet and end users on the PSTN a way to find the services of other end users on the public Internet. In this case, ENUM service providers provide a form of electronic business card or basic buddy list. The phone number becomes a universal key that is globally accessible by all potential correspondents of the user. With user ENUM, the data is public. Anyone who knows the universal key (that is, the phone number) can access the information, which may have privacy implications. Even if the user subscribes to several applications and services, the user remains the only party with complete control over the set of identifiers. User ENUM is a capability provided for end users and is optional for both the calling user and the called user.

- Infrastructure ENUM—Network operators using IP-based technology within their networks cannot rely on an optional technology used by end users. They need an independent routing mechanism to find the ingress points to their networks. Using DNS and ENUM technology for this purpose, as described in RFC 3761, is called infrastructure ENUM (also called carrier ENUM or operator ENUM). The basic premise of infrastructure ENUM is to provide information only to IP service providers and, in some cases, only to selected peers. The end user typically has no access to this information or cannot use it. This type of system must be implemented as an independent system.

If each IP communications service provider supplies data only for the numbers it hosts itself, later merging the separate trees should not be a problem. If, on the other hand, providers enter data for numbers they do not host themselves (e.g., numbers for which they provide transit services), later merging the trees will cause problems. Therefore, infrastructure ENUM is not recommended for providing transit information.

Another application for infrastructure ENUM technology is to provide access to national number portability information, which is currently stored in databases. However, this information will have only national significance (e.g., national routing numbers). As a result, this data cannot be used in supranational infrastructure ENUM implementations.

The reality is that ENUM is not currently required in networks, given the types of applications that carriers and service providers are supporting. In terms of U.S. domestic policy, the U.S. government endorses moving forward with ENUM based on the concept of an industry-managed limited liability company, similar to the arrangement used for number portability. This involves an arm’s-length contractual relationship with an administrative entity. It is similar to existing relationships with ICANN (www.icann.org) for IP address space, top-level domain names, and root server management; with NeuStar (www.neustar.com) for numbering plan and portability management; and with VeriSign (www.verisign.com) for the .com and .net registries.

The U.S. ENUM Forum (www.enumf.org) is developing policies and taking steps to implement ENUM in the United States. Some early participants include AT&T, Sprint, SBC, Verizon, Neustar, Cox Cable & Wireless, Cisco, and Telcordia. However, it is anticipated that it will be a while before the United States sees public ENUM. Several national forums are under way in the United Kingdom, Austria, Sweden, Japan, the Netherlands, Germany, Brazil, Poland, and elsewhere. A number of resources are available on the Web, including the approved ENUM delegation list (www.ripe.net/enum/request-archives) and the ITU ENUM Web page (www.itu.int/osg/spu/enum/index.html).

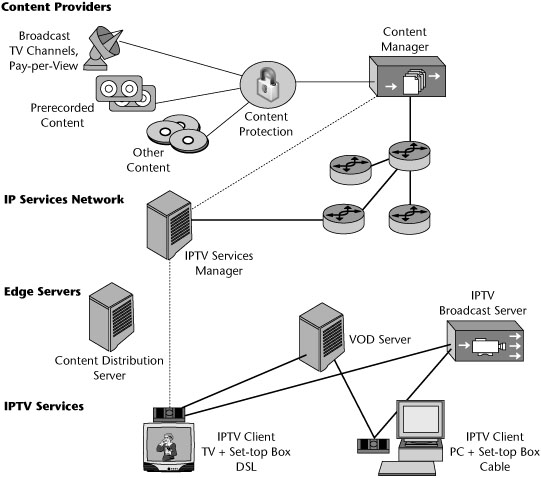

IPTV

IPTV is generally described as a system that delivers digital television service to a subscriber using IP over a broadband connection, often provided in conjunction with video-on-demand (VOD), Internet services, and VoIP, as part of triple-play services (a combination of voice, data, and video services over the same infrastructure). In its simplest definition, IPTV is the delivery of television content through technologies used for the World Wide Web rather than traditional formats.

Some say that incumbent telcos have developed their current interest in offering video or TV services as a result of fear—fear that cable operators are beginning to encroach on their territory with residential voice services, fear that competition is eroding the number of access lines being deployed, and fear that traditional landline revenues will continue to fall even further. IPTV therefore holds great promise for telcos. But more importantly, with IPTV, telcos and cable providers alike can offer triple-play services. It is also important to note that IPTV is not just a replication of the passive cable TV viewing environment of old; instead, it offers services including interactive gaming, access to massive libraries of video content on demand, and e-commerce activities, with a view to future features such as energy management and security services.