Text Entry by Gaze: Utilizing Eye Tracking

9.1 INTRODUCTION

Text entry by eyes is essential for severely disabled people with motor disorders who have no other means of communication. By using a computer equipped with an eye-tracking device, people suffering from amyotrophic lateral sclerosis or the locked-in syndrome, for instance, can produce text by using the focus of gaze as a means of input.

In one sense, text entry by eye gaze is quite similar to any screen-based text entry technique, such as the on-screen keyboards used with tablet PCs. The interface is more or less the same, only the interaction technique for pointing and selecting changes. Instead of a stylus or other pointing device, eye gaze is used. However, this change in the interaction technique brings with it fundamental differences, giving rise to a number of design issues that make text entry by eye gaze a unique technique with its own set of research problems.

The fundamental reason for this is that the eye is a perceptual organ: eyes are normally used for observation, not for control. Nevertheless, gaze direction does indicate the focus of the viewer’s visual attention (Just & Carpenter, 1976). Thus, despite eyes being primarily a perceptual organ, gaze can be considered as a natural means of pointing. It is easy to focus on items by looking at them (Stampe & Reingold, 1995). However, gaze provides information only about the direction of the user’s interest, not the user’s intentions. On-screen objects cannot simply be activated whenever the gaze touches them. Doing so would cause the so-called Midas touch problem (Jacob, 1991): everywhere you look, something gets activated. At least one additional bit of information is needed to avoid the Midas touch, such as a click from a separate switch or an “eye press” by staring at a button for a predetermined amount of time (“dwell time”).

The inaccuracy of the measured point of gaze presents another challenge. Most of the current eye-tracking devices achieve an accuracy of 0.5° (equivalent to a region of approximately 15 pixels on a 17-in. display with a resolution of 1024 × 768 pixels viewed from a distance of 70 cm). Even if eye trackers were perfectly accurate, the size of the fovea restricts the practical accuracy to about 0.5–1°. Everything inside the foveal region is seen in detail. Visual attention can be retargeted within the foveal region at will without actually moving the eyes. Thus, an absolute, pixel-level accuracy may never be reached by gaze pointing alone.
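The conversion from angular accuracy to on-screen pixels can be sketched as follows. This is a minimal illustration, not a tracker specification: the function name is hypothetical, and it assumes square pixels, a flat screen viewed head-on, and that the full nominal diagonal is viewable. Under these assumptions the example setup gives a value in the range of 15 to 20 pixels; the exact figure depends on the viewable area of the display.

```python
import math


def visual_angle_to_pixels(angle_deg, distance_cm, diagonal_in, res_x, res_y):
    """Approximate extent, in pixels, of a visual angle on a flat screen."""
    # Length on the screen plane subtended by the angle, centred on the line of sight.
    extent_cm = 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)
    # Pixel density derived from the diagonal, assuming square pixels and
    # that the full nominal diagonal is viewable (an assumption).
    diagonal_cm = diagonal_in * 2.54
    pixels_per_cm = math.hypot(res_x, res_y) / diagonal_cm
    return extent_cm * pixels_per_cm


if __name__ == "__main__":
    # Setup from the text: 0.5 degrees, 70 cm, 17-in. display, 1024 x 768.
    px = visual_angle_to_pixels(0.5, 70, 17, 1024, 768)
    print(f"0.5 degrees covers roughly {px:.0f} pixels")
```

The same function shows why on-screen keys must be considerably larger than the nominal tracker accuracy: a 1° foveal region covers about twice as many pixels.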

The accuracy of eye tracking is also very much dependent on successful calibration. Before an eye-tracking device can be used for gaze pointing, it has to be calibrated for each user. The calibration is done by showing a few (typically 5–12) points on screen and asking the user to look at them one by one. The camera takes a picture of the eye when the user looks at the calibration points. This then enables the computer to analyze any eye image while the user is looking at the screen and associate the image with screen coordinates.
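The idea of associating eye measurements with screen coordinates can be illustrated with a deliberately simplified sketch. It assumes that the tracker has already reduced each eye image to a two-dimensional feature (here called `eye_x`, `eye_y`, hypothetical names) and that an independent linear mapping per axis is adequate. Real devices typically use richer features and mappings, so this is an illustration of the principle only.

```python
def fit_axis(eye_values, screen_values):
    """Least-squares fit of screen = gain * eye + offset for one axis."""
    n = len(eye_values)
    mean_eye = sum(eye_values) / n
    mean_screen = sum(screen_values) / n
    variance = sum((e - mean_eye) ** 2 for e in eye_values)
    covariance = sum(
        (e - mean_eye) * (s - mean_screen)
        for e, s in zip(eye_values, screen_values)
    )
    gain = covariance / variance
    offset = mean_screen - gain * mean_eye
    return gain, offset


def calibrate(samples):
    """samples: list of ((eye_x, eye_y), (screen_x, screen_y)) pairs."""
    eye_x = [eye[0] for eye, _ in samples]
    eye_y = [eye[1] for eye, _ in samples]
    screen_x = [screen[0] for _, screen in samples]
    screen_y = [screen[1] for _, screen in samples]
    return fit_axis(eye_x, screen_x), fit_axis(eye_y, screen_y)


def to_screen(model, eye_point):
    """Map an eye feature to estimated screen coordinates."""
    (gain_x, offset_x), (gain_y, offset_y) = model
    return gain_x * eye_point[0] + offset_x, gain_y * eye_point[1] + offset_y


# Five hypothetical calibration points: four corners and the centre.
CALIBRATION = [
    ((0.2, 0.3), (0, 0)),
    ((0.8, 0.3), (1024, 0)),
    ((0.2, 0.7), (0, 768)),
    ((0.8, 0.7), (1024, 768)),
    ((0.5, 0.5), (512, 384)),
]

if __name__ == "__main__":
    model = calibrate(CALIBRATION)
    print(to_screen(model, (0.35, 0.6)))
```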

In addition to the design challenges, gaze-based interaction also provides some new opportunities. Eyes are extremely fast. Eye movements anticipate actions: people typically look at things before they act on them (Land & Furneaux, 1997). Hence, eyes have the potential to enhance interaction.

We will continue by presenting different techniques for text entry by gaze, followed by a review of various evaluations concerning the speed of the entry by gaze and detailed design issues. We close with suggestions for further reading.

9.2 DIFFERENT APPROACHES TO TEXT ENTRY BY GAZE

Systems that utilize eye tracking for text entry have existed since the 1970s (Majaranta & Räihä, 2002). In fact, the first real-time applications using eye tracking in human–computer interaction were targeted at people with disabilities (Jacob & Karn, 2003). Eyes can be used for text entry in various ways. In this section we first focus on systems that utilize information from the gaze direction (direct gaze pointing). Then, a brief review of other ways of using the eyes is given.

9.2.1 Text Entry by Direct Gaze Pointing

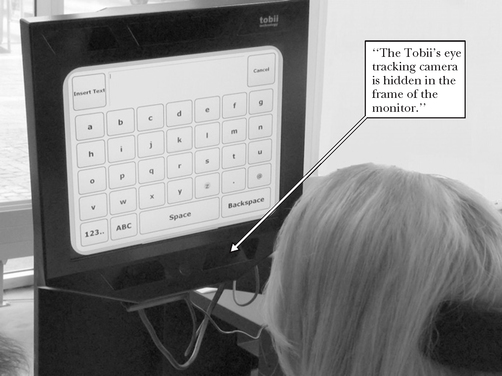

The most common way to use gaze for text entry is direct pointing by looking at the desired letter. A typical setup has an on-screen keyboard with a static layout, an eye-tracking device that tracks the user’s gaze, and a computer that analyzes the user’s gaze behavior (see Fig. 9.1).

FIGURE 9.1 The Tobii eye-tracking device has a camera integrated into the frame of the monitor that shows the on-screen keyboard.

To type by gaze, the user focuses on the desired letter by looking at one of the keys on the on-screen keyboard. The system gives feedback on the focused item, for example, by highlighting the focused item or by moving a “gaze cursor” over the focused key. The focused item can be selected by using, for instance, a separate switch, a blink, a wink, or even a wrinkle or any other (facial) muscle activity. Muscle activity is typically measured by electromyography (see, e.g., Surakka et al., 2003), though blinks and winks can also be detected from the same video signal used to analyze eye movements.

For severely disabled people, dwell time is the best and often the only way of selection (“eye press”). A dwell means a prolonged gaze: the user needs to fixate on the key for longer than a predefined threshold (typically, 500–1000 ms) in order for the key to be selected. Requiring the user to fixate for a long time helps in preventing false selections, but it is uncomfortable for the user, as fixations longer than 800 ms are often broken by blinks or saccades¹ (Stampe & Reingold, 1995).
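Dwell-time selection can be sketched as a small state machine that is fed one gaze sample at a time. The class and parameter names below are hypothetical, and two design choices are assumptions rather than properties of any particular system: the timer restarts whenever the focused key changes, and a key fires only once per dwell, so the user must look away before the same key can be selected again.

```python
from typing import Optional


class DwellSelector:
    """Selects a key once the gaze has rested on it for threshold_ms."""

    def __init__(self, threshold_ms: float = 700.0) -> None:
        self.threshold_ms = threshold_ms
        self._key: Optional[str] = None   # key currently under the gaze
        self._start_ms = 0.0              # time the gaze arrived on that key
        self._fired = False               # whether this dwell already selected

    def update(self, key: Optional[str], t_ms: float) -> Optional[str]:
        """Feed one gaze sample; return the key if it is selected now."""
        if key != self._key:
            # Focus moved to another key (or off the keyboard): restart the timer.
            self._key, self._start_ms, self._fired = key, t_ms, False
            return None
        dwell_complete = t_ms - self._start_ms >= self.threshold_ms
        if key is not None and not self._fired and dwell_complete:
            self._fired = True  # assumption: one selection per dwell
            return key
        return None


if __name__ == "__main__":
    selector = DwellSelector(threshold_ms=500)
    samples = [(0, "A"), (250, "A"), (500, "A"), (750, "A"), (1000, "B")]
    for t_ms, key in samples:
        selected = selector.update(key, t_ms)
        if selected is not None:
            print(f"{t_ms} ms: selected {selected}")
```

Because the threshold is a constructor parameter, the same sketch covers both novice settings (long dwell) and expert settings (short dwell).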

The inaccuracy of the measured point of gaze was a considerable problem especially in the early days of eye tracking. Therefore, the keys on the screen had to be quite large. For example, the first version of the ERICA system (Hutchinson et al., 1989) had only six selectable items available on the screen at a time. The letters were organized in a tree-structured menu hierarchy. The user first selected a group of letters and then either another group of letters or the single target letter. Typing was slow, as it took from two to four menu selections to select a single letter. Letters were arranged in the hierarchy based on word frequencies, so that the expected number of steps for text entry was minimized (Frey et al., 1990).

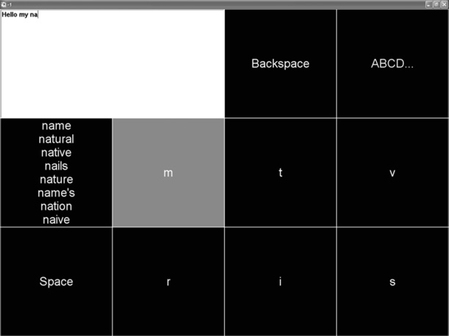

Layouts with large keys are still needed and used in today’s systems. Even though the state-of-the-art eye trackers are fairly accurate, the so-called “low-cost systems” still do not reach the accuracy needed for a Qwerty keyboard. For example, the GazeTalk system (Hansen et al., 2001) was developed with a standard Web camera in mind (Hansen et al., 2002). It divides the screen into a 3 × 4 grid (see Fig. 9.2). The six cells on the bottom right contain the six most likely letters to continue the word that is being entered (shown in the two top left cells). The leftmost entry on the middle row provides shorthand access to the eight most likely completions: by activating that button, the screen changes into one on which those words appear in the cells in the two bottom rows. If none of the suggested continuations (words or letters) is correct, the user has access to the “A to Z” button that appears in the bottom row of cells with the next hierarchical level, as in the ERICA system described above.

Finally, even if the eye trackers were perfectly accurate, systems with large buttons are still useful. In addition to the accuracy limitations due to the size of the fovea, some medical conditions cause involuntary head movements or eye tremors, which prevent getting a good calibration (Donegan et al., 2005).
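The idea of filling a handful of large keys with the most likely next letters can be sketched with a toy frequency table. This is not GazeTalk's actual language model: the function name, the word list, and the counts are all hypothetical, and the ranking is a simple prefix match over whole words.

```python
from collections import Counter

# Hypothetical word frequencies, for illustration only.
WORD_COUNTS = {
    "the": 50,
    "then": 10,
    "there": 8,
    "this": 20,
    "that": 15,
    "to": 30,
}


def likely_next_letters(prefix, word_counts, n=6):
    """Rank the letters that most often follow prefix in the word list."""
    votes = Counter()
    for word, count in word_counts.items():
        if word.startswith(prefix) and len(word) > len(prefix):
            # The letter right after the prefix gets the word's weight.
            votes[word[len(prefix)]] += count
    return [letter for letter, _ in votes.most_common(n)]


if __name__ == "__main__":
    print(likely_next_letters("th", WORD_COUNTS))
```

With `n=6`, the result could populate six letter cells; when fewer than six continuations exist, the remaining cells would have to be filled by some fallback, which this sketch leaves open.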

9.2.2 Text Entry by Eye Switches and Gaze Gestures

Voluntary eye blinks can be used as binary switches (see, e.g., Grauman et al., 2003). For text entry, blinks are usually combined with a “scanning” technique (see Chap. 15), in which letters are organized into a matrix. The system moves the focus automatically by scanning the alphabet matrix line by line. The highlighted line is selected by an eye blink. Then the individual letters on the selected line are scanned through and again the user blinks when the desired letter is highlighted.
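The cost of row-by-row scanning can be made concrete with a short sketch. The matrix layout and function names are hypothetical, and the sketch assumes that scanning restarts from the first row after every selection and that each highlight lasts a fixed `step_ms`.

```python
# Hypothetical alphabetical layout; real systems often order letters by frequency.
ALPHABET_MATRIX = ["abcdef", "ghijkl", "mnopqr", "stuvwx", "yz"]


def scan_steps(matrix, letter):
    """Return (row_steps, column_steps): highlights shown before each blink."""
    for row_index, row in enumerate(matrix):
        col_index = row.find(letter)
        if col_index != -1:
            return row_index + 1, col_index + 1
    raise ValueError(f"{letter!r} is not in the matrix")


def scan_time_ms(matrix, text, step_ms):
    """Total scanning time for text, ignoring blink and reaction time."""
    total_steps = sum(sum(scan_steps(matrix, ch)) for ch in text)
    return total_steps * step_ms


if __name__ == "__main__":
    print(scan_steps(ALPHABET_MATRIX, "h"))
    print(scan_time_ms(ALPHABET_MATRIX, "hi", step_ms=1000))
```

The step counts make it clear why letter placement matters: letters in the first rows and columns are reached with far fewer highlights than those near the end of the matrix.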

In addition to eye blinks, coarse eye movements can also be used as switches. The Eye-Switch Controlled Communication Aids by Ten Kate et al. (1979) used large (about 15°) eye movements to the left and to the right as switches. It, too, applied the scanning technique. The user could start the scanning by glancing to the left and select the currently focused item by glancing to the right.

I4Control (Fejtová et al., 2004) can be considered to be a four-direction eye-operated joystick, as looking in any of the four directions (left, right, up, down) causes the cursor to move in that direction until the eye returns to the home position (center). The cursor can also be stopped by blinking. A blink emulates a click or double-click. Text entry by I4Control is achieved by moving the mouse cursor over the desired key on an on-screen keyboard and selecting the key by an eye blink.

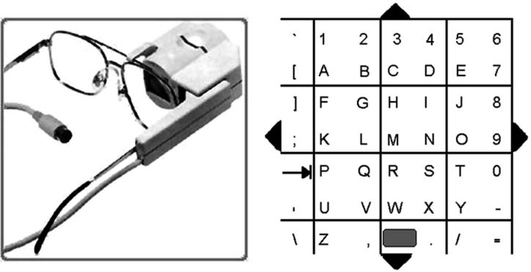

The four different eye movements recognized by I4Control can be considered to be four switches, each activated by a different glance or eye gesture. Several eye gestures can also be combined into one operation, just as several key presses are used to enter a letter in the multitap method for mobile phones. This approach is used in VisionKey (Kahn et al., 1999), in which an eye tracker and a keyboard display (Fig. 9.3, right) are attached to eyeglass frames (Fig. 9.3, left). As the key chart is attached in front of the user’s eye, it is important to make sure that plain staring at a letter does not select it. The VisionKey selection method avoids the Midas touch problem by using a two-level selection method, i.e., two consecutive eye gestures for activating a selection. To select a character, the user must first gaze at the edge of the chart that corresponds to the location of the character in its block. For example, if the user wants to select the letter “G”, the user first glances at the upper right corner of the chart and then looks at the block where “G” is located (or simply looks at “G”). After a predefined dwell time the selected key is highlighted to confirm a successful selection.

FIGURE 9.3 VisionKey is attached to eyeglass frames (left). It has a small screen integrated into it (right) (http://www.eyecan.ca).

Using eye blinks or gaze gestures (instead of direct gaze pointing) is needed by people who are able to move their eyes only in one direction or who have trouble fixating their gaze due to their medical condition (Chapman, 1991; Donegan et al., 2005).

9.2.3 Text Entry by Continuous Gaze Gestures

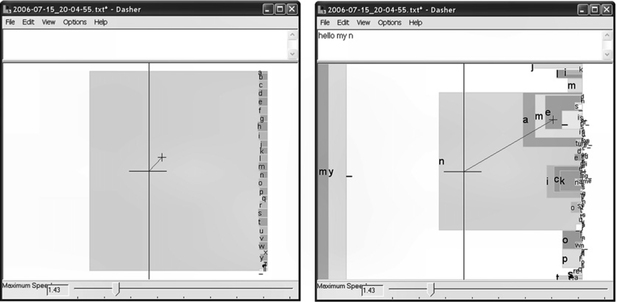

The techniques presented in Section 9.2.2 made use of discrete, consecutive gaze gestures. Dasher (Ward & MacKay, 2002) is a zooming interface that is operated by continuous pointing gestures. At the beginning, the letters of the alphabet are located in a column on the right side of the screen (see Fig. 9.4). Letters are ordered alphabetically (Fig. 9.4, left). The user moves the cursor to point at the region that contains the desired letter by looking at the letter. The area of the selected letter starts to zoom in (grow) and move to the left, closer to the center of the screen (Fig. 9.4, right). Simultaneously, the language model of the system predicts the most probable next letters. The areas of the most probable letters start to grow (compared to other, not as probable, letters) within the chosen region. This brings the next most probable letters closer to the current cursor position, thus minimizing the distance and time to select the next letter(s). The letter is typed when it crosses the horizontal line on the center of the screen. Canceling the last letter is done by looking to the left; the letter then moves back to the right side of the screen.

FIGURE 9.4 Dasher facilitates text entry by navigation through a zooming world of letters. In the initial state, the letters are ordered alphabetically on the right side of the screen (image on the left). As the user looks at the desired letter, its area starts to grow and simultaneously the language prediction system gives more space to the next most probable letters. In the image on the right, the user is in the middle of writing “name,” with “n” already selected. Dasher is freely available online at http://www.inference.phy.cam.ac.uk/dasher/ and http://www.cogain.org.
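The way predicted letters receive more screen space can be sketched as a proportional split of a vertical interval. This is a simplified illustration and not Dasher's implementation: the function names and the probabilities are hypothetical, and the real system uses a full language model and a continuously zooming display.

```python
def allocate_regions(probabilities, top=0.0, bottom=1.0):
    """Split [top, bottom) among letters in proportion to their probability."""
    total = sum(probabilities.values())
    regions = {}
    start = top
    for letter in sorted(probabilities):  # keep alphabetical order on screen
        height = (bottom - top) * probabilities[letter] / total
        regions[letter] = (start, start + height)
        start += height
    return regions


def letter_at(regions, y):
    """Return the letter whose region contains the vertical gaze position y."""
    for letter, (start, end) in regions.items():
        if start <= y < end:
            return letter
    return None


if __name__ == "__main__":
    # Hypothetical probabilities for the letter following "n".
    first = allocate_regions({"a": 0.5, "e": 0.3, "o": 0.2})
    # The region of "a" is subdivided again for the letter after "na".
    nested = allocate_regions({"m": 0.6, "t": 0.4}, *first["a"])
    print(first)
    print(nested)
    print(letter_at(first, 0.6))
```

Because each chosen region is subdivided in turn, a single sweep of the gaze through nested regions can select several characters, which is the property the text attributes to continuous gestures.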

Dasher’s mode-free continuous operation makes it especially suitable for gaze as only one bit of information is required: the direction of gaze. No additional switches or dwell time are needed to make a selection or to cancel. Furthermore, instead of adding separate buttons or lists with the next most probable words, Dasher embeds the predictions into the writing process itself. Many successive characters can be selected by a single gesture, and often-used words are easier and faster to write than rare words. This not only speeds up the text entry process but also makes it easier: In a comparative evaluation, users made fewer mistakes with Dasher than with a standard Qwerty keyboard.

The continuous gestures used in Dasher make it a radically different technique compared to all the others we have discussed. Some gestures can essentially select more than one character (the selection point does not have to be moved, since the display moves dynamically), which can speed up text entry. The most likely characters occupy a large portion of the display space, so that gestures do not always need to be accurate. Dasher can be used with any input device that is capable of gesturing. It has been implemented on a pocket PC to be used by a stylus and can even be controlled by breathing using a special breath-mouse.

9.3 CASE STUDIES AND GUIDELINES

The speed of text entry by eye gaze depends on a number of factors. The evaluation methods and metrics discussed in the earlier chapters can be applied, but it is typical that even with the same basic technique, many parameters, such as the threshold for dwell-time selection, can be adjusted, and this affects the theoretical maximum speed obtainable by a technique. Also, learning to master text entry by eye typing requires a long learning period. Gips et al. (1996) report that “for people with severe disabilities it can take anywhere from 15 minutes to many months to acquire eye control skill to run the system.” The speed of real experts has not been systematically measured for any of the eye-controlled text entry systems.

With on-screen keyboards and dwell-time selection (the most common setup), text entry can never be very fast. Assuming, for instance, a 500-ms dwell time (usually too short for all but the most experienced eye typists), and a 40-ms saccade from one key to the next, the maximum speed obtainable would be 22 WPM with error-free performance and no time for cognitive processing to search for the next key from the keyboard. Measured entry speeds have been significantly lower. A starting speed with a dwell time of 900 ms was on the order of 7 WPM in the controlled experiments reported by Majaranta et al. (2006), which is in line with previous results, e.g., those by Frey et al. (1990). However, performance improved slightly yet significantly during just four trials. Changing the dwell time to 450 ms had a remarkable effect of improving the text entry speed to about 10 WPM. Not surprisingly, the error rates increased, but were still on the order of 1% of all characters entered.
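The upper bound quoted above follows from simple arithmetic, sketched here. The function name is hypothetical, and the sketch assumes the common text entry convention of five characters per word, error-free input, and no time for visual search.

```python
def max_wpm(dwell_ms, saccade_ms, chars_per_word=5):
    """Theoretical maximum words per minute for dwell-time typing."""
    ms_per_char = dwell_ms + saccade_ms          # one dwell plus one saccade per key
    chars_per_minute = 60_000 / ms_per_char
    return chars_per_minute / chars_per_word     # assumed convention: 5 chars per word


if __name__ == "__main__":
    for dwell in (900, 500, 450):
        print(f"dwell {dwell} ms: at most {max_wpm(dwell, 40):.1f} WPM")
```

For the 500-ms dwell time and 40-ms saccade of the example, each character costs 540 ms, which gives about 111 characters per minute, or roughly 22 WPM. Measured speeds fall well below such bounds because real typing also includes search time and error correction.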

Hansen and Hansen (2006) experimented with text entry by gaze using a common, “off-the-shelf” video camera with the GazeTalk system. They observed text entry speeds of 3–5 WPM by untrained users using large (3 × 4) on-screen buttons. It is not surprising that systems requiring hierarchical navigation or multiple gestures are slower than on-screen Qwerty keyboards with accurate eye trackers, although the prediction and completion features can improve the text entry speed. Their use depends heavily on both individual styles and extended use, so they are difficult to evaluate without longitudinal studies.

The language of the text also has an effect on text entry speed. All the figures given so far are for English. GazeTalk and Dasher also support entering text in Japanese. Itoh et al. (2006) report a typing speed of 22–24 Kanji characters per minute, with performance improving from 19 to 23–25 characters per minute over seven short trials over 3 days. Both systems reached these text entry rates.

The Dasher system does not have such intrinsic limitations for maximum text entry speed as the dwell-based systems. It is, in fact, the fastest of the current gaze-based text entry techniques. Ward and MacKay (2002) report that after an hour of practice, their users could write up to 25 WPM and experts were able to achieve 34 WPM. The results are based on only a few participants and a relatively short practice time. Controlled experiments with more participants and longitudinal studies with expert users would be interesting.

In addition to the standard metrics, some additional metrics that are pertinent just to text entry by eye gaze have been proposed (see, e.g., Majaranta et al., 2006; Aoki et al., 2006). Such metrics focus on gaze behavior and its interaction with text entry events.

9.3.2 Experiments for Guiding Design Decisions

General guidelines on feedback in graphical user interfaces may not be suitable as such for dwell-time selection. There is a noteworthy difference between using dwell time as an activation command compared to, for example, a button click. With a button click, the user makes the selection and defines the exact moment when the selection is made. Using dwell time, the user only initiates the action; the system makes the actual selection after a predefined interval. Furthermore, when using gaze for text entry, the locus of gaze is engaged in the input process: the user needs to look at the letter to type it. To review the text written so far, the user needs to move the gaze from the virtual keyboard to the typed text field. As discussed below, proper feedback can significantly facilitate the tedious task of text entry by gaze. Even a slight improvement in performance makes a difference in a repetitive task of text entry, in which the effect accumulates.

Majaranta et al. (2006) conducted three experiments to study the effects of feedback and dwell time on speed and accuracy during text entry by gaze. Results showed that the type of feedback significantly affects text entry speed, accuracy, gaze behavior, and subjective experience. For example, adding a simple audible “click” after each selection significantly facilitates text entry by gaze. Compared with plain visual feedback, the added auditory feedback increases text entry speed and improves accuracy. It confirms the selection by giving a sense of finality that does not surface through visual feedback alone. The participants’ gaze paths showed that added auditory feedback significantly reduced the need to review and verify the typed text.

Spoken feedback is useful for novices using long dwell times: speaking out the letters as they are typed significantly helps in reducing errors. However, with short dwell times, spoken feedback is problematic since speaking a letter takes time. People tend to pause to listen to the speech, which introduces double-entry errors: the same letter is unintentionally typed twice (Majaranta et al., 2006). This, in turn, reduces the text entry speed.

Most participants preferred an audible click over spoken or plain visual feedback because it not only clearly marks selection but also supports the typing rhythm. Typing rhythm is especially important with short dwell times, where the user no longer waits for feedback but learns to take advantage of the rhythm inherent in the dwell time duration.

For novices using a long dwell time, it is useful to give extra feedback on the dwell-time progress. This can be done by animation or by giving two-level feedback: first on the focus and then, after the dwell time has run out, feedback on selection. Showing the focus first gives the user the possibility of canceling the selection before reaching the dwell-time threshold. Moreover, it is not natural to fixate on a target for a long time. Animated feedback helps in maintaining focus on the target. However, with short dwell times, there is no time to give extra feedback to the user. Two-level feedback (focus first, then selection) is especially confusing and distracting if the interval between the two stages is too short.

Majaranta et al. (2006) conclude that the feedback should be matched with the dwell time. Short dwell times require simplified feedback, while long dwell times allow extra information on the eye-typing process. One should also keep in mind that the same dwell time (e.g., 500 ms) may be “short” for one user and “long” for another. Therefore, the user should be able to adjust the dwell-time threshold as well as the feedback parameters and attributes.

The need to adjust parameters is of utmost importance for people with disabilities. Donegan et al. (2005) emphasize that user requirements for gaze-based communication systems are all about choice. The system needs to be flexible in order to accommodate the varied needs of the users with varied disabilities. For example, due to the medical condition, the user may have involuntary head or eye movements that prevent accurate calibration. Thus, the size of the selectable objects on screen needs to be adjustable. For text entry, this means that only a few letters can be shown on screen at a time, which in turn requires special keyboard layout designs and word prediction, if one wants to achieve an adequate text entry speed.

Many users with severe disabilities also have visual impairment of some kind. Thus, in addition to varying selection methods (blink, dwell time, switch), a wide range of text output styles is needed, including a choice of font, background colors, and supporting auditory feedback.

9.4 FURTHER READING

For understanding the prospects and problems of text entry by gaze, it is instrumental to know in more detail how eye-tracking devices work and to understand their limitations. The best general introduction to eye tracking is the book by Duchowski (2003). The proceedings of the biennial conference series on Eye Tracking Research and Applications are accessible through the ACM Digital Library. They give an up-to-date view of the current research.

Eye trackers have been used for a variety of purposes. Jacob (1993) and Jacob and Karn (2003) provide good general introductions to the application areas. Recent comprehensive taxonomies of the various applications have been published by Hyrskykari et al. (2005) and Hyrskykari (2006).

Text entry by gaze is intended for users with disabilities. There are also other gaze-controlled applications intended for the same user group. Many of these, as well as reports on user experiences and requirements, are made available through the Web site (www.cogain.org) of the COGAIN Network of Excellence on Communication by Gaze Interaction. The site also contains a catalog of different eye-tracking systems.

An alternative to eye tracking is head tracking, in which the camera follows a marker on the user’s forehead. The cursor on the screen can be moved by moving the head, leaving the eyes free for observation instead of command and control. All the techniques discussed in this chapter also lend themselves to head control. Comparisons of gaze vs head control are given by Bates and Istance (2003) and Hansen et al. (2003). Gaze and head movements can also be used together (Gips et al., 1993).

We have discussed a variety of techniques for text entry by eye gaze. There are many influential systems, often used by a large number of disabled users, that use similar techniques. These include at least the Eyegaze system by LC Technologies (Cleveland, 1994), QuickGlance by EyeTech Digital Systems (Rasmusson et al., 1999), and VISIOBOARD by MetroVision (Charlier et al., 1997).

ACKNOWLEDGMENTS

This work was supported by the COGAIN Network of Excellence on Communication by Gaze Interaction.

REFERENCES

Aoki H., Hansen J.P., Itoh K. Towards remote evaluation of gaze typing systems. Proceedings of COGAIN 2006, 2006, 96–103. Available at http://www.cogain.org/results/reports/COGAIN-D3.1.pdf

Bates R., Istance H.O. Why are eye mice unpopular? A detailed comparison of head and eye controlled assistive technology pointing devices. Universal Access in the Information Society. 2003;2:280–290.

Chapman J.E. The use of eye-operated computer system in locked-in syndrome. Proceedings of the Sixth Annual International Conference on Technology and Persons with Disabilities—CSUN ′91, 20–23 March 1991, Los Angeles, CA. 1991.

Charlier J., Buquet C., Dubus R., Hugeux J.P., Degroc B. VISIOBOARD: a new gaze command system for handicapped subjects. Medical and Biological Engineering and Computing. 1997;35:461–462.

Cleveland N. Eyegaze human-computer interface for people with disabilities. Proceedings of 1st Automation Technology and Human Performance Conference, Washington, DC. 1994. Available at http://www.eyegaze.com/doc/cathuniv.htm

Donegan M., Oosthuizen L., Bates R., Daunys G., Hansen J.P., Joos M., Majaranta P., Signorile I. D3.1 user requirements report with observations of difficulties users are experiencing. Communication by Gaze Interaction (COGAIN), IST-2003-511598: Deliverable 3.1, 2005. Available at http://www.cogain.org/results/reports/COGAIN-D3.1.pdf

Duchowski A.T. Eye tracking methodology: Theory and practice. London: Springer-Verlag; 2003.

Fejtová M., Fejt J., Lhotská L. Controlling a PC by eye movements: The MEMREC project. In: Miesenberger K., Klaus J., Zagler W., Burger D., eds. Proceedings of Computers Helping People with Special Needs: 9th international conference (ICCHP 2004). Lecture Notes in Computer Science, 3118. 2004, 770–773.

Frey L.A., White K.P., Jr., Hutchinson T.E. Eye-gaze word processing. IEEE Transactions on Systems, Man, and Cybernetics. 1990;20:944–950.

Gips J., Dimattia P., Curran F.X., Olivieri P. Using EagleEyes—An electrodes based device for controlling the computer with your eyes—to help people with special needs. In: Klaus J., Auff E., Kremser W., Zagler W., eds. Interdisciplinary aspects on computers helping people with special needs. Vienna: Oldenburg; 1996:630–635.

Gips J., Olivieri C.P., Tecce J.J. Direct control of the computer through electrodes placed around the eyes. In: Smith M.J., Salvendy G., eds. Human-computer interaction: Applications and case studies. Amsterdam: Elsevier; 1993:630–635.

Grauman K., Betke M., Lombardi J., Gips J., Bradski G.R. Communication via eye blinks and eyebrow raises: Video-based human-computer interfaces. Universal Access in the Information Society. 2003;2:359–373.

Hansen D.W., Hansen J.P. Eye typing with common cameras. In: Proceedings of the Symposium on Eye Tracking Research & Applications—ETRA 2006. New York: ACM Press; 2006:55.

Hansen D.W., Hansen J.P., Nielsen M., Johansen A.S., Stegmann M.B. Eye typing using Markov and active appearance models. In: Proceedings of the Sixth IEEE Workshop on Applications of Computer Vision—WACV ′02. Los Alamitos, CA: IEEE Computer Society; 2002:132–136.

Hansen J.P., Hansen D.W., Johansen A.S. Bringing gaze-based interaction back to basics. In: Stephanidis C., ed. Universal access in HCI: Towards an information society for all. Mahwah, NJ: Lawrence Erlbaum; 2001:325–328.

Hansen J.P., Johansen A.S., Hansen D.W., Itoh K., Mashino S. Command without a click: Dwell time typing by mouse and gaze selections. In: Rauterberg M., Menozzi M., Wesson J., eds. Proceedings of Human-Computer Interaction—INTERACT ′03. Amsterdam: IOS Press; 2003:121–128.

Hutchinson T.E., White K.P., Martin W.N., Reichert K.C., Frey L.A. Human-computer interaction using eye-gaze input. IEEE Transactions on Systems, Man, and Cybernetics. 1989;19:1527–1534.

Hyrskykari A. Eyes in attentive interfaces: Experiences from creating iDict, a gaze-aware reading aid. Dissertations in interactive technology, No. 4, Department of Computer Sciences, University of Tampere. 2006. Published electronically in Acta Electronica Universitatis Tamperensis, 531. Available at http://acta.uta.fi/pdf/951-44-6643-8.pdf

Hyrskykari A., Majaranta P., Räihä K.-J. From gaze control to attentive interfaces. In: Proceedings of the 11th International Conference on Human-Computer Interaction—HCII 2005, Vol. 7, Universal access in HCI: Exploring new interaction environments. Mahwah, NJ: Lawrence Erlbaum Associates, Inc.; 2005. CD-ROM.

Itoh K., Aoki H., Hansen J.P. A comparative usability study of two Japanese gaze typing systems. In: Proceedings of the Symposium on Eye Tracking Research & Applications—ETRA 2006. New York: ACM Press; 2006:59–66.

Jacob R.J.K. The use of eye movements in human-computer interaction techniques: What you look at is what you get. ACM Transactions on Information Systems. 1991;9:152–169.

Jacob R.J.K. Eye movement-based human-computer interaction techniques: Toward non-command interfaces. In: Hartson H.R., Hix D., eds. Advances in human-computer interaction, Vol. 4. Norwood, NJ: Ablex Publishing; 1993:151–190.

Jacob R.J.K. Eye tracking in advanced interface design. In: Barfield W., Furness T.A., eds. Virtual environments and advanced interface design. New York: Oxford University Press; 1995:258–288.

Jacob R.J.K., Karn K.S. Eye tracking in human-computer interaction and usability research: Ready to deliver the promises (section commentary). In: Hyönä J., Radach R., Deubel H., eds. The mind’s eye: Cognitive and applied aspects of eye movement research. Amsterdam: Elsevier; 2003:573–605.

Just M.A., Carpenter P.A. Eye fixations and cognitive processes. Cognitive Psychology. 1976;8:441–480.

Kahn D.A., Heynen J., Snuggs G.L. Eye-controlled computing: The VisionKey experience. Proceedings of the Fourteenth International Conference on Technology and Persons with Disabilities—CSUN ′99, 15–20 March 1999, Los Angeles, CA. 1999.

Land M.F., Furneaux S. The knowledge base of the oculomotor system. Philosophical Transactions: Biological Sciences. 1997;352:1231–1239.

Majaranta P., Räihä K.-J. Twenty years of eye typing: Systems and design issues. In: Proceedings of the Symposium on Eye Tracking Research & Applications—ETRA 2002. New York: ACM Press; 2002:15–22.

Majaranta P., MacKenzie I.S., Aula A., Räihä K.-J. Effects of feedback and dwell time on eye typing speed and accuracy. Universal Access in the Information Society. 2006;5:199–208.

Rasmusson D., Chappell R., Trego M. Quick Glance: Eye-tracking access to the Windows95 operating environment. Proceedings of the Fourteenth International Conference on Technology and Persons with Disabilities—CSUN ′99, Los Angeles. 1999.

Stampe D.M., Reingold E.M. Selection by looking: A novel computer interface and its application to psychological research. In: Findlay J.M., Walker R., Kentridge R.W., eds. Eye movement research: Mechanisms, processes and applications. Amsterdam: Elsevier; 1995:467–478.

Surakka V., Illi M., Isokoski P. Voluntary eye movements in human-computer interaction. In: Hyönä J., Radach R., Deubel H., eds. The mind’s eye: Cognitive and applied aspects of eye movement research. Amsterdam: Elsevier; 2003:473–491.

Ten Kate J.H., Frietman E.E.E., Willems W., Ter Haar Romeny B.M., Tenkink E. Eye-switch controlled communication aids. Proceedings of the 12th International Conference on Medical & Biological Engineering, 19–24 August 1979, Jerusalem, Israel. 1979.

Ward D.J., MacKay D.J.C. Fast hands-free writing by gaze direction. Nature. 2002;418:838.

¹ Our eyes are usually stable for only a brief period at a time. Such fixations typically last 200–600 ms (Jacob, 1995). Saccades occur between fixations when we move our eyes. Saccades are ballistic movements that last about 30–120 ms.