In this chapter, we will build on the material in previous chapters to meet the real-life challenges of both large and small networks with relatively demanding applications or users. The sample configurations in this chapter are based on the assumption that your packet-filtering setups will need to accommodate services you run on your local network. We will mainly look at this challenge from a Unix perspective, focusing on SSH, email, and web services (with some pointers on how to take care of other services).

This chapter is about the things to do when you need to combine packet filtering with services that must be accessible outside your local network. How much this complicates your rule sets will depend on your network design, and to a certain extent, on the number of routable addresses you have available. We’ll begin with configurations for official, routable addresses, and then move on to situations with as few as one routable address and the PF-based work-arounds that make the services usable even under these restrictions.

How complicated is your network? How complicated does it need to be?

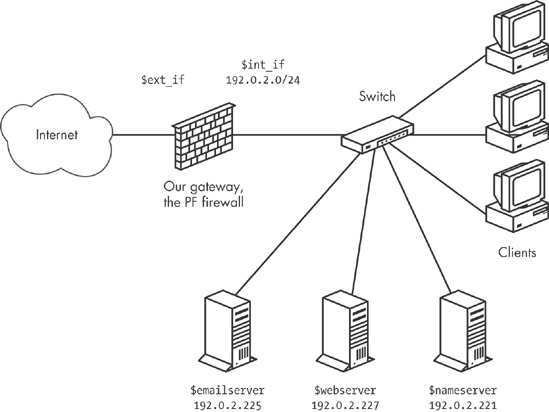

We’ll start with the baseline scenario of the sample clients from Chapter 3 that we set up behind a basic PF firewall, with access to a range of services hosted elsewhere but no services running on the local network. These clients get three new neighbors: a mail server, a web server, and a file server. In this scenario, we use official, routable addresses, since it makes life a little easier. Another advantage of this approach is that with routable addresses, we can let two of the new machines run DNS for our example.com domain: one as the master and the other as an authoritative slave.[24]

Note

For DNS, it always makes sense to have at least one authoritative slave server somewhere outside your own network (in fact, some top-level domains will not let you register a domain without it). You may also want to arrange for a backup mail server to be hosted elsewhere. Keep these things in mind as you build your network.

At this stage, we keep the physical network layout fairly simple. We put the new servers into the same local network as the clients, possibly in a separate server room, but certainly on the same network segment or switch as the clients. Conceptually, the new network looks something like Figure 5-1.

With the basic parameters for the network in place, we can start setting up a sensible rule set for handling the services we need. Once again, we start from the baseline rule set and add a few macros for readability.

The macros we need come rather naturally from the specifications:

We assume that the file server does not need to be accessible to the outside world, unless we choose to set it up with a service that needs to be visible outside the local network, such as an authoritative slave name server for our domain. Then, with the macros in hand, we add the pass rules. Starting with the web server, we make it accessible to the world with the following:

pass proto tcp to $webserver port $webports

On a similar note, we let the world talk to the mail server:

pass proto tcp to $emailserver port $email

This lets clients anywhere have the same access as the ones in your local network, including a few mail-retrieval protocols that run without encryption. That’s common enough in the real world, but you might want to consider your options if you are setting up a new network.

For the mail server to be useful, it needs to be able to send mail to hosts outside the local network, too:

pass log proto tcp from $emailserver to port smtp

Keeping in mind that the rule set starts with a block all rule, this means that only the mail server is allowed to initiate SMTP traffic from the local network to the rest of the world. If any of the other hosts on the network need to send email to the outside world or receive email, they need to use the designated mail server. This could be a good way to ensure, for example, that you make it as hard as possible for any spam-sending zombie machines that might turn up in your network to actually deliver their payloads.

Finally, the name servers need to be accessible to clients outside our network who look up the information about example.com and any other domains for which we answer authoritatively:

pass inet proto { tcp, udp } to $nameservers port domainHaving integrated all the services that need to be accessible from the outside world, our rule set ends up looking roughly like this:

ext_if = "ep0" # macro for external interface - use tun0 or pppoe0 for PPPoE

int_if = "ep1" # macro for internal interface

localnet = $int_if:network

webserver = "192.0.2.227"

webports = "{ http, https }"

emailserver = "192.0.2.225"

email = "{ smtp, pop3, imap, imap3, imaps, pop3s }"

nameservers = "{ 192.0.2.221, 192.0.2.223 }"

client_out = "{ ssh, domain, pop3, auth, nntp, http,

https, cvspserver, 2628, 5999, 8000, 8080 }"

udp_services = "{ domain, ntp }"

icmp_types = "{ echoreq, unreach }"

block all

pass quick inet proto { tcp, udp } from $localnet to port $udp_services

pass log inet proto icmp all icmp-type $icmp_types

pass inet proto tcp from $localnet to port $client_out

pass inet proto { tcp, udp } to $nameservers port domain

pass proto tcp to $webserver port $webports

pass log proto tcp to $emailserver port $email

pass log proto tcp from $emailserver to port smtpThis is still a fairly simple setup, but unfortunately, it has one potentially troubling security disadvantage. The way this network is designed, the servers that offer services to the world at large are all in the same local network as your clients, and you would need to restrict any internal services to only local access. In principle, this means that an attacker would need to compromise only one host in your local network to gain access to any resource there, putting the miscreant on equal footing with any user in your local network. Depending on how well each machine and resource are protected from unauthorized access, this could be anything from a minor annoyance to a major headache.

In the next section, we will look at some options for segregating the services that need to interact with the world at large from the local network.

In the previous section, you saw how to set up services on your local network and make them selectively available to the outside world through a sensible PF rule set. For more fine-grained control over access to your internal network, as well as the services you need to make visible to the rest of the world, add a degree of physical separation. Even a separate virtual local area network (VLAN) will do nicely.

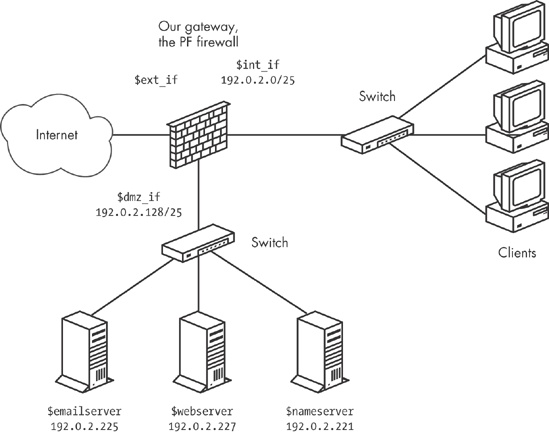

Achieving the physical and logical separation is fairly easy: Simply move the machines that run the public services to a separate network, attached to a separate interface on the gateway. The net effect is a separate network that is not quite part of your local network, but not entirely in the public part of the Internet either. Conceptually, the segregated network looks like Figure 5-2.

Note

Think of this little network as a zone of relative calm between the territories of hostile factions. It is no great surprise that a few years back, someone coined the phrase demilitarized zone, or DMZ for short, to describe this type of configuration.

For address allocation, you can segment off an appropriately sized chunk of your official address space for the new DMZ network. Alternatively, you can move those parts of your network that do not have a specific need to run with publicly accessible and routable addresses into a NAT environment. Either way, you end up with at least one more interface on which to filter. As you will see later, it is possible to run a DMZ setup in all-NAT environments as well, if you are really short of official addresses.

The adjustments to the rule set itself do not need to be extensive. If necessary, you can change the configuration for each interface. The basic rule set logic remains, but you may need to adjust the definitions of the macros (webserver, mailserver, nameservers, and possibly others) to reflect your new network layout.

In our example, we could choose to segment off the part of our address range where we have already placed our servers. If we leave some room for growth, we can set up the new dmz_if on a /25 subnet with a network address and netmask of 192.0.2.128/255.255.255.128. This leaves us with the range 192.0.2.129 through 192.0.2.254 as the usable address range for hosts in the DMZ. With that configuration and no changes in the IP addresses assigned to the servers, you do not really need to touch the rule set at all for the packet filtering to work after setting up a physically segregated DMZ. That is a nice side effect, which could be due to either laziness or excellent long-range planning. Either way, it underlines the importance of having a sensible address-allocation policy in place.

It might be useful to tighten up your rule set by editing your pass rules so the traffic to and from your servers is allowed to pass only on the interfaces that are actually relevant to the services:

pass in on $ext_if proto { tcp, udp } to $nameservers port domain

pass in on $int_if proto { tcp, udp } from $localnet to $nameservers

port domain

pass out on $dmz_if proto { tcp, udp } to $nameservers port domain

pass in on $ext_if proto tcp to $webserver port $webports

pass in on $int_if proto tcp from $localnet to $webserver port $webports

pass out on $dmz_if proto tcp to $webserver port $webports

pass in log on $ext_if proto tcp to $mailserver port smtp

pass in log on $int_if proto tcp from $localnet to $mailserver port $email

pass out log on $dmz_if proto tcp to $mailserver port smtp

pass in on $dmz_if from $mailserver to port smtp

pass out log on $ext_if proto tcp from $mailserver to port smtpYou could choose to make the other pass rules that reference your local network interface-specific, too, but if you leave them intact, they will continue to work.

Once you have set up services to be accessible to the world at large, one likely scenario is that over time, one or more of your services will grow more sophisticated and resource-hungry, or simply attract more traffic than you feel comfortable serving from a single server.

There are a number of ways to make several machines share the load of running a service, including ways to fine-tune the service itself. For the network-level load-balancing, PF offers the basic functionality you need via redirection to tables or address pools. In fact, you can implement a form of load balancing without even touching your pass rules.

Take the web server in our example. We already have the macro for the public IP address (webserver = "192.0.2.227"), which, in turn, is associated with the hostname that your users have bookmarked, possibly www.example.com. When the time comes to share the load, set up the required number of identical, or at least equivalent, servers, and then alter your rule set slightly to introduce the redirection. First, define a table that holds the addresses for your web server pool:

table <webpool> persist { 192.0.2.214, 192.0.2.215, 192.0.2.216, 192.0.2.217 }Then perform the redirection:

match in on $ext_if protp tcp to $webserver port $webports

rdr-to <webpool> round-robinUnlike the redirections in earlier examples, such as the FTP proxy in Chapter 3, this rule sets up all members of the webpool table as potential redirection targets for incoming connections intended for the webports ports on the webserver address. Each incoming connection that matches this rule is redirected to one of the addresses in the table, spreading the load across several hosts. You may choose to retire the original web server once the switch to this redirection is complete, or let it be absorbed in the new web server pool.

On PF versions earlier than OpenBSD 4.7, the equivalent rule is as follows:

rdr on $ext_if proto tcp to $webserver port $webports -> <webpool> round-robin

In both cases, the round-robin option means that PF shares the load between the machines in the pool by cycling through the table of redirection address sequentially.

When it is essential for accesses from each individual source address to always go to the same host in the back end (for example, if the service depends on client- or session-specific parameters that will be lost if new connections hit a different host in the back end), you can add the sticky-address option to make sure that new connections from a client are always redirected to the same machine behind the redirection as the initial connection. The downside to this option is that PF needs to maintain source-tracking data for each client, and the default value for maximum source nodes tracked is set at 10,000, which may be a limiting factor. (See Chapter 9 for advice on adjusting this and similar limit values.)

When even load distribution is not an absolute requirement, selecting the redirection address at random may be appropriate:

match in on $ext_if proto tcp to $webserver port $webports

rdr-to <webpool> randomNote

On pre-OpenBSD 4.7 PF versions, the random option is not supported for redirection to tables or lists of addresses.

Even organizations with large pools of official, routable addresses have opted to introduce NAT between their load-balanced server pools and the Internet at large. This technique works equally well in various NAT-based setups, but moving to NAT offers some additional possibilities and challenges.

After you have been running a while with load balancing via round-robin redirection, you may notice that the redirection does not automatically adapt to external conditions. For example, unless special steps are taken, if a host in the list of redirection targets goes down, traffic will still be redirected to the IP addresses in the list of possibilities.

Clearly, a monitoring solution is needed. Fortunately, the OpenBSD base system provides one. The relay daemon relayd[25] interacts with your PF configuration, providing the ability to weed out nonfunctioning hosts from your pool. Introducing relayd into your setup, however, may require some minor changes to your rule set.

The relayd daemon works in terms of two main classes of services that it refers to as redirects and relays. It expects to be able to add or subtract hosts’ IP addresses to or from the PF tables it controls. The daemon interacts with your rule set through a special-purpose anchor named relayd (and in pre-OpenBSD 4.7 versions, also a redirection anchor, rdr-anchor, with the same name).

To see how we can make our sample configuration work a little better by using relayd, we’ll look back at the load-balancing rule set. Starting from the top of your pf.conf file, add the anchor for relayd to fill in:

anchor "relayd/*"

On pre-OpenBSD 4.7 versions, you also need the redirection anchor, like this:

rdr-anchor "relayd/*" anchor "relayd/*"

In the load-balancing rule set, we had the following definition for our web server pool:

table webpool persist { 192.0.2.214, 192.0.2.215, 192.0.2.216, 192.0.2.217 }It has this match rule to set up the redirection:

match in on $ext_if proto tcp to $webserver port $webports

rdr-to <webpool> round-robinOr on pre-OpenBSD 4.7 versions, you would use the following:

rdr on $ext_if proto tcp to $webserver port $webports -> <webpool> round-robin

To make this configuration work slightly better, we remove the redirection and the table, and let relayd handle the redirection by setting up its own versions inside the anchor. (Do not remove the pass rule, however, because your rule set will still need to have a pass rule that lets traffic flow to the IP addresses in relayd’s tables.)

Once the pf.conf parts have been taken care of, we turn to relayd’s own relayd.conf configuration file. The syntax in this configuration file is similar enough to pf.conf to make it fairly easy to read and understand. First, we add the macro definitions we will be using later:

web1="192.0.2.214" web2="192.0.2.215" web3="192.0.2.216" web4="192.0.2.217" webserver="192.0.2.227" sorry_server="192.0.2.200"

All of these correspond to definitions we could have put in a pf.conf file. The default checking interval in relayd is 10 seconds, which means that a host could be down for almost 10 seconds before it is taken offline. Being cautious, we’ll set the checking interval to 5 seconds to minimize visible downtime, with the following line:

interval 5 # check hosts every 5 seconds

Now we make a table called webpool that uses most of the macros:

table <webpool> { $web1, $web2, $web3, $web4 }For reasons we will return to shortly, we define one other table:

table <sorry> { $sorry_server }At this point, we are ready to set up the redirect:

redirect www {

listen on $webserver port 80 sticky-address

tag relayd

forward to <webpool> check http "/status.html" code 200 timeout 300

forward to <sorry> timeout 300 check icmp

}This says that connections to port 80 should be redirected to the members of the webpool table. The sticky-address option has the same effect here as with the rdr-to in PF rules: New connections from the same source IP address (within the time interval defined by the timeout value) are redirected to the same host in the back-end pool as the previous ones.

The relayd daemon should check to see if a host is available by asking it for the file /status.html, using the protocol HTTP, and expecting the return code to be equal to 200. This is the expected result for a client asking a running web server for a file it has available.

No big surprises so far, right? The relayd daemon will take care of excluding hosts from the table if they go down. But what if all the hosts in the webpool table go down? Fortunately, the developers thought of that too, and introduced the concept of backup tables for services. This is the last part of the definition for the www service, with the table sorry as the backup table: The hosts in the sorry table take over if the webpool table becomes empty. This means that you need to configure a service that is able to offer a “Sorry, we’re down” message in case all the hosts in your web pool fail.

With all of the elements of a valid relayd configuration in place, you can enable your new configuration. Reload your PF rule set, and then start relayd. If you want to check your configuration before actually starting relayd, you can use the -n command-line option to relayd:

$ sudo relayd -nIf your configuration is correct, relayd displays the message configuration OK and exits.

To actually start the daemon, we do not need any command-line flags, so the following sequence reloads your edited PF configuration and enables relayd.

$sudo pfctl -f /etc/pf.conf$sudo relayd

With a correct configuration, both commands will silently start, without displaying any messages. You can check that relayd is running with top or ps. In both cases, you will find three relayd processes, roughly like this:

$ ps waux | grep relayd

_relayd 9153 0.0 0.1 776 1424 ?? S 7:28PM 0:00.01 relayd:

pf update engine (relayd)

_relayd 6144 0.0 0.1 776 1440 ?? S 7:28PM 0:00.02 relayd:

host check engine (relayd)

root 3217 0.0 0.1 776 1416 ?? Is 7:28PM 0:00.01 relayd:

parent (relayd)In almost all cases, you will want to enable relayd at startup. You do that by adding this line to your rc.conf.local file:

relayd_flags="" # for normal use: ""

However, once the configuration is enabled, most of your interaction with relayd will happen through the relayctl administration program. In addition to letting you monitor status, relayctl lets you reload the relayd configuration and selectively disable or enable hosts, tables, and services. You can even view service status interactively, like this:

$ sudo relayctl show summary

Id Type Name Avlblty Status

1 redirect www active

1 table webpool:80 active (2 hosts)

1 host 192.0.2.214 100.00% up

2 host 192.0.2.215 0.00% down

3 host 192.0.2.216 100.00% up

4 host 192.0.2.217 0.00% down

2 table sorry:80 active (1 hosts)

5 host 127.0.0.1 100.00% upIn this example, the web pool is seriously degraded, with only two of four hosts up and running. Fortunately, the backup table is still functioning. All tables are active with at least one host up. For tables that no longer have any members, the Status column changes to empty. Asking relayctl for host information shows the status information in a host-centered format:

$ sudo relayctl show hosts

Id Type Name Avlblty Status

1 table webpool:80 active (3 hosts)

1 host 192.0.2.214 100.00% up

total: 11340/11340 checks

2 host 192.0.2.215 0.00% down

total: 0/11340 checks, error: tcp connect failed

3 host 192.0.2.216 100.00% up

total: 11340/11340 checks

4 host 192.0.2.217 0.00% down

total: 0/11340 checks, error: tcp connect failed

2 table sorry:80 active (1 hosts)

5 host 127.0.0.1 100.00% up

total: 11340/11340 checksIf you need to take a host out of the pool for maintenance (or any time-consuming operation), you can use relayctl to disable it like this:

$ sudo relayctl host disable 192.0.2.217In most cases, the operation will display command succeeded to indicate that the operation completed successfully. Once you have completed maintenance and put the machine online, you can reenable it as part of relayd’s pool with this command:

$ sudo relayctl host enable 192.0.2.217Again, you should see the message command succeeded almost immediately to indicate that the operation was successful.

In addition to the basic load-balancing demonstrated here, relayd has been extended in recent OpenBSD versions to offer several features that make it attractive in more complex settings. For example, it can now handle Layer 7 proxying or relaying functions for HTTP and HTTPS, including protocol handling with header append and rewrite, URL path append and rewrite, and even session and cookie handling. The protocol handling needs to be tailored to your application. For example, the following is a simple HTTPS relay for load-balancing the encrypted web traffic from clients to the web servers.

http protocol "httpssl" {

header append "$REMOTE_ADDR" to "X-Forwarded-For"

header append "$SERVER_ADDR:$SERVER_PORT" to "X-Forwarded-By"

header change "Keep-Alive" to "$TIMEOUT"

query hash "sessid"

cookie hash "sessid"

path filter "*command=*" from "/cgi-bin/index.cgi"

ssl { sslv2, ciphers "MEDIUM:HIGH" }

tcp { nodelay, sack, socket buffer 65536, backlog 128 }

}This protocol handler definition demonstrates a range of simple operations on the HTTP headers, and sets both SSL parameters and specific TCP parameters to optimize connection handling. The header options operate on the protocol headers, inserting the values of the variables by either appending to existing headers (append) or changing the content to a new value (change).

The URL and cookie hashes are used by the load-balancer to select to which host in the target pool the request is forwarded. The path filter specifies that any get request, including the first quoted string as a substring of the second, is to be dropped. The ssl options specify that only SSL version 2 ciphers are accepted, with key lengths in the medium-to-high range; in other words, 128 bits or more.[26] Finally, the tcp options specify nodelay to minimize delays, specify the use of the selective acknowledgment method (RFC 2018), and set the socket buffer size and the maximum allowed number of pending connections the load-balancer keeps track of. These options are examples only; in most cases, your application will perform well with these settings at their default values.

The relay definition using the protocol handler follows a pattern that should be familiar from the earlier definition of the www service:

relay wwwssl {

# Run as a SSL accelerator

listen on $webserver port 443 ssl

protocol "httpssl"

table <webhosts> loadbalance check ssl

}Still, your SSL-enabled web applications will likely benefit from a slightly different set of parameters.

Note

We’ve added a check ssl, assuming that each member of the webhosts table is properly configured to complete an SSL handshake. Depending on your application, it may be useful to look into keeping all SSL processing in relayd, thus offloading the encryption-handling tasks from the back ends.

Finally, for CARP-based failover of the hosts running relayd on your network (see Redundancy and Failover: CARP and pfsync in Redundancy and Failover: CARP and pfsync), relayd can be configured to support CARP interaction by setting the CARP demotion counter for the specified interface groups at shutdown or startup.

Like all parts of the OpenBSD system, relayd comes with informative man pages. For the angles and options not covered here (there are a few), dive into the man pages for relayd, relayd.conf, and relayctl, and start experimenting to find just the configuration you need.

[24] In fact, the example.com network here lives in the 192.0.2.0/24 block, which is set aside in RFC 3330 as reserved for example and documentation use. We use this address range mainly to differentiate from the NAT examples elsewhere in this book, which use addresses in the “private” RFC 1918 address space.

[25] Originally introduced in OpenBSD 4.1 under the name hoststated, the daemon has seen active development (mainly by Reyk Floeter and Pierre-Yves Ritschard) over several years, including a few important changes to the configuration syntax, and was renamed relayd in time for the OpenBSD 4.3 release.

[26] See the OpenSSL man page for further explanation of cipher-related options.