Overview

C# and .NET provide a highly effective way to run concurrent code, making it easy to perform complex and often time-consuming actions. In this chapter, you will explore the various patterns that are available, from creating tasks using the Task factory methods, to linking tasks together with continuations, before moving on to the async/await keywords, which vastly simplify such code. By the end of this chapter, you will see how C# can be used to execute code that runs concurrently and often produces results far quicker than a single-threaded application.

Introduction

Concurrency is a generalized term that describes the ability of software to do more than one thing at the same time. By harnessing the power of concurrency, you can provide a more responsive user interface by offloading CPU-intensive activities from the main UI thread. On the server side, processing operations in parallel takes advantage of modern multi-processor and multi-core architectures to achieve scalability.

Multithreading is a form of concurrency whereby multiple threads are used to perform operations. This is typically achieved by creating many Thread instances and coordinating operations between them. It is regarded as a legacy implementation, having largely been replaced by parallel and async programming; you may well find it used in older projects.

Parallel programming is a class of multithreading where similar operations are run independently of each other. Typically, the same operation is repeated using multiple loops, where the parameters or target of the operation themselves vary by iteration. .NET provides libraries that shield developers from the low-level complexities of thread creation. The phrase embarrassingly parallel is often used to describe an activity that requires little extra effort to be broken down into a set of tasks that can be run in parallel, often where there are few interactions between sub-tasks. One such example of parallel programming could be counting the number of words found in each text file within a folder. The job of opening a file and scanning through the words can be split into parallel tasks. Each task executes the same lines of code but is given a different text file to process.
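The word-counting idea can be sketched roughly as follows. To keep the sketch self-contained, it assumes the text of each file has already been loaded into memory; the WordCounter class and its CountWords method are hypothetical names chosen for illustration, and Parallel.ForEach is only one of several ways .NET could run the iterations in parallel.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class WordCounter
{
    // Counts the words in each named document in parallel.
    // The dictionary keys stand in for file names (hypothetical input).
    public static IDictionary<string, int> CountWords(IDictionary<string, string> documents)
    {
        // A thread-safe dictionary, because several iterations may write at once.
        var counts = new ConcurrentDictionary<string, int>();

        // Each iteration runs the same code against a different document.
        Parallel.ForEach(documents, doc =>
        {
            var words = doc.Value.Split(
                new[] { ' ', '\t', '\r', '\n' },
                StringSplitOptions.RemoveEmptyEntries);
            counts[doc.Key] = words.Length;
        });

        return counts;
    }
}
```

Calling WordCounter.CountWords with two or three short documents returns one count per key. No iteration depends on another, which is what makes the job embarrassingly parallel.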

Asynchronous programming is a more recent form of concurrency where an operation, once started, will complete at some point in the future, and the calling code is able to continue with other operations. This completion is often known as a promise (or a future in other languages) and is implemented through the Task class and its generic Task<T> equivalent. In C# and .NET, async programming has become the preferred means to achieve concurrent operations.

A common application of asynchronous programming is where multiple slow-running or expensive dependencies need to be initialized and marshaled prior to calling a final step that should be called only when all or some of the dependencies are ready to be used. For example, a mobile hiking application may need to wait for a reliable GPS satellite signal, a planned navigation route, and a heart-rate monitoring service to be ready before the user can start hiking safely. Each of these distinct steps would be initialized using a dedicated task.

Another very common use case for asynchronous programming occurs in UI applications where, for example, saving a customer's order to a database could take 5-10 seconds to complete. This may involve validating the order, opening a connection to a remote server or database, packaging and sending the order in a format that can be transmitted over the wire, and then finally waiting for confirmation that the customer's order has been successfully stored in a database. In a single-threaded application, the UI thread would be blocked for all of this time, and the delay would soon be noticed by the user. The application would become unresponsive until the operation was completed. In this scenario, the user may rightly think the application has crashed and may try to close it. That is not an ideal user experience.

Such issues can be mitigated by using async code that performs any of the slow operations using a dedicated task for each. These tasks may choose to provide feedback as they progress, which the UI's main thread can use to notify the user. Overall, the operation should complete sooner, thus freeing the user to continue interacting with the app. In modern applications, users have come to expect this method of operation. In fact, many UI guidelines suggest that if an operation may take more than a few seconds to complete, then it should be performed using async code.

Note that when code is executing, whether it's synchronous or asynchronous code, it is run within the context of a Thread instance. In the case of asynchronous code, this Thread instance is chosen by the .NET scheduler from a pool of available threads.

The Thread class has various properties but one of the most useful is ManagedThreadId, which will be used extensively throughout this chapter. This integer value serves to uniquely identify a thread within your process. By examining Thread.ManagedThreadId, you can determine that multiple thread instances are being used. This can be done by accessing the Thread instance from within your code using the static Thread.CurrentThread property.

For example, if you started five long-running tasks and examined the Thread.ManagedThreadId for each, you would observe five unique IDs, possibly numbered as two, three, four, five, and six. In most cases, the thread with ID number one is the process's main thread, created when the process first starts.
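A minimal sketch of this observation is shown next. The ThreadIdDemo class and the 100-millisecond pause are assumptions made for illustration, and the IDs returned, as well as how many of them are distinct, depend on how the thread pool schedules the work on your machine.

```csharp
using System;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class ThreadIdDemo
{
    // Starts the requested number of tasks and returns the ManagedThreadId
    // that each one observed while it was running.
    public static int[] CollectThreadIds(int taskCount)
    {
        var tasks = Enumerable.Range(0, taskCount)
            .Select(_ => Task.Run(() =>
            {
                Thread.Sleep(100); // simulate some work (arbitrary duration)
                return Thread.CurrentThread.ManagedThreadId;
            }))
            .ToArray();

        // Block until every task has finished, then read each result.
        Task.WaitAll(tasks);
        return tasks.Select(t => t.Result).ToArray();
    }
}
```

Printing the array returned by ThreadIdDemo.CollectThreadIds(5) alongside the caller's own Thread.CurrentThread.ManagedThreadId shows which pool threads were used.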

Keeping track of thread IDs can be quite useful, especially when you have time-consuming operations to perform. As you have seen, using concurrent programming, multiple operations can be executed at the same time, rather than using a traditional single-threaded approach, where one operation must complete before a subsequent operation can start.

In the physical world, consider the case of building a train tunnel through a mountain. Starting at one side of a mountain and tunneling through to the other side could be made considerably faster if two teams started on opposite sides of the mountain, both tunneling toward each other. The two teams could be left to work independently; any issues experienced by a team on one side should not have an adverse effect on the other side's team. Once both sides have completed their tunneling, there should be one single tunnel, and the construction could then continue with the next task, such as laying the train line.

The next section will look at using the C# Task class, which allows you to execute blocks of code at the same time and independently of each other. Consider again the example of the UI app, where the customer's order needs to be saved to a database. For this, you would have two options:

Option 1 is to create a C# Task that performs each step one after another:

- Validate the order.

- Connect to the server.

- Send the request.

- Wait for a response.

Option 2 is to create a C# Task for each of the steps, executing each in parallel where possible.

Both options achieve the same end result, freeing the UI's main thread to respond to user interactions. Option 1 may well be slower to finish, but the upside is that it requires simpler code. Option 2 would be the preferred choice, as you are offloading multiple steps, so it should complete sooner. However, this could involve additional complexity, as you may need to coordinate the individual tasks as they complete.

In the upcoming sections, you will first look at how Option 1 could be approached, that is, using a single Task to run blocks of code, before moving on to the complexity of Option 2, where multiple tasks are used and coordinated.

Running Asynchronous Code Using Tasks

The Task class is used to execute blocks of code asynchronously. Its usage has been somewhat superseded by the newer async and await keywords, but this section will cover the basics of creating tasks as they tend to be pervasive in larger or mature C# applications and form the backbone of the async/await keywords.

In C#, there are three ways to schedule asynchronous code to run using the Task class and its generic equivalent Task<T>.

Creating a New Task

You'll start off with the simplest form, one that performs an operation but does not return a result to the caller. You can declare a Task instance by calling any of the Task constructors and passing in an Action-based delegate. This delegate contains the actual code to be executed at some point in the future. Many of the constructor overloads allow cancellation tokens and creation options to further control how the Task runs.

Some of the commonly used constructors are as follows:

- public Task(Action action): The Action delegate represents the body of code to be run.

- public Task(Action action, CancellationToken cancellationToken): The CancellationToken parameter can be used as a way to interrupt the code that is running. Typically, this is used where the caller has been provided with a means to request that an operation be stopped, such as adding a Cancel button that a user can press.

- public Task(Action action, TaskCreationOptions creationOptions): TaskCreationOptions offers a way to control how the Task is run, allowing you to provide hints to the scheduler that a certain Task might take extra time to complete. This can help when running related tasks together.

The following are the most often used Task properties:

- public bool IsCompleted { get; }: Returns true if the Task completed (completion does not indicate success).

- public bool IsCompletedSuccessfully { get; }: Returns true if the Task completed successfully.

- public bool IsCanceled { get; }: Returns true if the Task was canceled prior to completion.

- public bool IsFaulted { get; }: Returns true if the Task has thrown an unhandled exception prior to completion.

- public TaskStatus Status { get; }: Returns an indicator of the task's current status, such as Canceled, Running, or WaitingToRun.

- public AggregateException Exception { get; }: Returns the exception, if any, that caused the Task to end prematurely.

Note that the code within the Action delegate is not executed until sometime after the Start() method is called. This may well be some milliseconds after and is determined by the .NET scheduler.
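To see how these properties behave, consider the following sketch. The TaskStateDemo class and its RunAndDescribe method are hypothetical helpers rather than part of the chapter's code; the sketch starts an unstarted Task, waits for it, and then reports its final state.

```csharp
using System;
using System.Threading.Tasks;

public static class TaskStateDemo
{
    // Starts the task, waits for it to finish, and summarizes its final state.
    public static string RunAndDescribe(Task task)
    {
        task.Start();
        try
        {
            task.Wait(); // rethrows any failure wrapped in an AggregateException
        }
        catch (AggregateException)
        {
            // Swallowed here so that the final state can be inspected below.
        }

        // Task.Exception is null unless the task faulted.
        var error = task.Exception?.InnerException?.GetType().Name ?? "none";
        return $"{task.Status}: IsCompleted={task.IsCompleted}, IsFaulted={task.IsFaulted}, Exception={error}";
    }
}
```

Passing new Task(() => { }) yields a RanToCompletion status. Passing a Task whose delegate throws an InvalidOperationException yields Faulted, with IsCompleted still true, which illustrates that completion does not indicate success.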

Start here by creating a new VS Code console app, adding a utility class named Logger, which you will use in the exercises and examples going forward. It will be used to log a message to the console along with the current time and current thread's ManagedThreadId.

The steps for this are as follows:

- Change to your source folder.

- Create a new console app project called Chapter05 by running the following command:

source>dotnet new console -o Chapter05

- Rename the Program.cs file (generated by the console template) to Logger.cs and remove all the template code.

- Be sure to include the System and System.Threading namespaces. System.Threading contains the threading-based classes:

using System;

using System.Threading;

namespace Chapter05

{

- Mark the Logger class as static so that it can be used without having to create an instance to use:

public static class Logger

{

Note

If you use the Chapter05 namespace, then the Logger class will be accessible to code in examples and activities, provided they also use the Chapter05 namespace. If you prefer to create a folder for each example and exercise, then you should copy the file Logger.cs into each folder that you create.

- Now declare a static method called Log that is passed a string message parameter:

public static void Log(string message)

{

Console.WriteLine($"{DateTime.Now:T} [{Thread.CurrentThread.ManagedThreadId:00}] {message}");

}

}

}

When invoked, this will log a message to the console window using the WriteLine method. In the preceding snippet, the string interpolation feature in C# is used to define a string using the $ symbol; here, :T will format the current time (DateTime.Now) into a time-formatted string and :00 is used to include Thread.ManagedThreadId with a leading 0.

Thus, you have created the static Logger class that will be used throughout the rest of this chapter.
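The effect of the :T and :00 format specifiers can be checked in isolation with a small sketch. The LogFormat class is a hypothetical helper that takes the time and thread ID as parameters instead of reading them from the environment, and it uses the invariant culture (an assumption made here so that the result does not vary with regional settings, whereas Logger.Log uses the current culture).

```csharp
using System;
using System.Globalization;

public static class LogFormat
{
    // Builds a log line in the same shape as Logger.Log, but from explicit
    // inputs and with the invariant culture for a predictable result.
    public static string Format(DateTime time, int threadId, string message)
    {
        return string.Format(
            CultureInfo.InvariantCulture,
            "{0:T} [{1:00}] {2}",   // T = long time pattern, 00 = at least two digits
            time, threadId, message);
    }
}
```

For example, LogFormat.Format(new DateTime(2024, 1, 1, 10, 47, 34), 3, "Inside taskA") returns 10:47:34 [03] Inside taskA.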

Note

You can find the code used for this example at https://packt.link/cg6c5.

In the next example, you will use the Logger class to log details when a thread is about to start and finish.

- Start by adding a new class file called TaskExamples.cs:

using System;

using System.Threading;

using System.Threading.Tasks;

namespace Chapter05.Examples

{

class TaskExamples

{

- The Main entry point will log that taskA is being created:

public static void Main()

{

Logger.Log("Creating taskA");

- Next, add the following code:

var taskA = new Task(() =>

{

Logger.Log("Inside taskA");

Thread.Sleep(TimeSpan.FromSeconds(5D));

Logger.Log("Leaving taskA");

});

Here, the simplest Task constructor is passed an Action lambda statement, which is the actual target code that you want to execute. The target code writes the message Inside taskA to the console. It pauses for five seconds using Thread.Sleep to block the current thread, thus simulating a long-running activity, before finally writing Leaving taskA to the console.

- Now that you have created taskA, confirm that it will only invoke its target code when the Start() method is called. You will do this by logging a message immediately before and after the method is called:

Logger.Log($"Starting taskA. Status={taskA.Status}");

taskA.Start();

Logger.Log($"Started taskA. Status={taskA.Status}");

Console.ReadLine();

}

}

}

- Copy the Logger.cs file to the same folder as the TaskExamples.cs example.

- Next run the console app to produce the following output:

10:47:34 [01] Creating taskA

10:47:34 [01] Starting taskA. Status=Created

10:47:34 [01] Started taskA. Status=WaitingToRun

10:47:34 [03] Inside taskA

10:47:39 [03] Leaving taskA

Note that the task's status is WaitingToRun even after you've called Start. This is because you are asking the .NET scheduler to schedule the code to run—that is, to add it to its queue of pending actions. Depending on how busy your application is with other tasks, it may not run immediately after you've called Start.

Note

You can find the code used for this example at https://packt.link/DHxt3.

In earlier versions of C#, this was the main way to create and start Task objects directly. It is no longer recommended and is only included here as you may find it used in older code. Its usage has been replaced by the Task.Run or Task.Factory.StartNew static factory methods, which offer a simpler interface for the most common usage scenarios.

Using Task.Factory.StartNew

The static method Task.Factory.StartNew contains various overloads that make it easier to create and configure a Task. Notice how the method is named StartNew. It creates a Task and automatically starts it for you. The .NET team recognized that there is little value in creating a Task that is not immediately started after it is first created. Typically, you would want the Task to start performing its operation right away.

The first parameter is the familiar Action delegate to be executed, followed by optional cancellation tokens, creation options, and a TaskScheduler instance.

The following are some of the common overloads:

- Task.Factory.StartNew(Action action): The Action delegate contains the code to execute, as you have seen previously.

- Task.Factory.StartNew(Action action, CancellationToken cancellationToken): Here, CancellationToken coordinates the cancellation of the task.

- Task.Factory.StartNew(Action<object> action, object state, CancellationToken cancellationToken, TaskCreationOptions creationOptions, TaskScheduler scheduler): The TaskScheduler parameter allows you to specify a type of low-level scheduler responsible for queuing tasks. This option is rarely used.
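As an illustration of the last and most detailed overload, the following sketch passes every argument explicitly. StartNewDemo and StartReport are hypothetical names, and the choice of TaskCreationOptions.LongRunning and TaskScheduler.Default is an assumption made purely to show where each argument goes.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class StartNewDemo
{
    // Starts a task through the five-parameter overload.
    // The state object is handed to the delegate as its only argument.
    public static Task StartReport(string name, CancellationToken token)
    {
        return Task.Factory.StartNew(
            state => Console.WriteLine(
                $"{state} running on thread {Thread.CurrentThread.ManagedThreadId}"),
            name,                             // state passed to the delegate
            token,                            // lets the caller request cancellation
            TaskCreationOptions.LongRunning,  // hint that the work may take a while
            TaskScheduler.Default);           // queue using the default scheduler
    }
}
```

A caller could write StartNewDemo.StartReport("report", CancellationToken.None).Wait() to run the delegate and block until it has finished.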

Consider the following code, which uses the first and simplest overload:

var taskB = Task.Factory.StartNew(() =>
{
    Logger.Log("Inside taskB");
    Thread.Sleep(TimeSpan.FromSeconds(3D));
    Logger.Log("Leaving taskB");
});

Logger.Log($"Started taskB. Status={taskB.Status}");

Console.ReadLine();

Running this code produces the following output:

21:37:42 [01] Started taskB. Status=WaitingToRun

21:37:42 [03] Inside taskB

21:37:45 [03] Leaving taskB

From the output, you can see that this code achieves the same result as creating a Task but is more concise. The main point to consider is that Task.Factory.StartNew was added to C# to make it easier to create tasks that are started for you. It was preferable to use StartNew rather than creating tasks directly.

Note

The term Factory is often used in software development to represent methods that help create objects.

Task.Factory.StartNew provides a highly configurable way to start tasks, but in reality, many of the overloads are rarely used and need a lot of extra parameters to be passed to them. As such, Task.Factory.StartNew itself has also become somewhat obsolete in favor of the newer Task.Run static method. Still, Task.Factory.StartNew is briefly covered here as you may see it used in legacy C# applications.

Using Task.Run

The alternative and preferred static factory method, Task.Run, has various overloads and was added later to .NET to simplify and shortcut the most common task scenarios. It is preferable for newer code to use Task.Run to create started tasks, as far fewer parameters are needed to achieve common threading operations.

Some of the common overloads are as follows:

- public static Task Run(Action action): Contains the Action delegate code to execute.

- public static Task Run(Action action, CancellationToken cancellationToken): Additionally contains a cancellation token used to coordinate the cancellation of a task.
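The second overload might be used along the following lines. This is a sketch under stated assumptions: CancellableWork is a hypothetical class, and the loop length and 50-millisecond pause are arbitrary. The delegate checks the token cooperatively, because a running task is not interrupted automatically when cancellation is requested.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class CancellableWork
{
    // Starts a task that works in small steps and stops early
    // if cancellation is requested through the token.
    public static Task Start(CancellationToken token)
    {
        return Task.Run(() =>
        {
            for (var step = 0; step < 100; step++)
            {
                // Throws OperationCanceledException once the token is canceled.
                token.ThrowIfCancellationRequested();
                Thread.Sleep(50); // simulate one unit of work
            }
        }, token);
    }
}
```

To try it, create a CancellationTokenSource, pass its Token to CancellableWork.Start, and call Cancel on the source a short time later. Because the same token was passed to Task.Run, the task ends with IsCanceled set to true rather than IsFaulted.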

For example, consider the following code:

var taskC = Task.Run(() =>

{

Logger.Log("Inside taskC");

Thread.Sleep(TimeSpan.FromSeconds(1D));

Logger.Log("Leaving taskC");

});

Logger.Log($"Started taskC. Status={taskC.Status}");

Console.ReadLine();

Running this code will produce the following output:

21:40:27 [03] Inside taskC

21:40:27 [01] Started taskC. Status=WaitingToRun

21:40:28 [03] Leaving taskC

As you can see, the output is pretty similar to the outputs of the previous two code snippets. Each example waits for a shorter time than its predecessor before the associated Action delegate completes.

The main difference is that creating a Task instance directly is an obsolete practice but will allow you to add an extra logging call before you explicitly call the Start method. That is the only benefit in creating a Task directly, which is not a particularly compelling reason to do so.

Running all three examples together produces this:

21:45:52 [01] Creating taskA

21:45:52 [01] Starting taskA. Status=Created

21:45:52 [01] Started taskA. Status=WaitingToRun

21:45:52 [01] Started taskB. Status=WaitingToRun

21:45:52 [01] Started taskC. Status=WaitingToRun

21:45:52 [04] Inside taskB

21:45:52 [03] Inside taskA

21:45:52 [05] Inside taskC

21:45:53 [05] Leaving taskC

21:45:55 [04] Leaving taskB

21:45:57 [03] Leaving taskA

You can see various ManagedThreadIds being logged and that taskC completes before taskB, which completes before taskA, due to the decreasing number of seconds specified in the Thread.Sleep calls in each case.

It is preferable to favor either of the two static methods, but which should you use when scheduling a new task? Task.Run should be used for the majority of cases where you simply need to offload some work onto the thread pool. Internally, Task.Run defers to Task.Factory.StartNew, in effect supplying default values for the cancellation token, creation options, and scheduler.

Task.Factory.StartNew should be used where you have more advanced requirements, such as defining where tasks are queued, by using any of the overloads that accept a TaskScheduler instance, but in practice, this is seldom the requirement.
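The relationship between the two methods can be sketched as a pair of wrappers. The .NET team's write-up on Task.Run versus Task.Factory.StartNew describes Task.Run(action) as equivalent to the StartNew call shown in ViaStartNew; the RunVersusStartNew class itself is a hypothetical name used only for this comparison.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class RunVersusStartNew
{
    // The short form: suitable for most offloading scenarios.
    public static Task ViaRun(Action work)
    {
        return Task.Run(work);
    }

    // The long form, spelling out the defaults that Task.Run applies.
    public static Task ViaStartNew(Action work)
    {
        return Task.Factory.StartNew(
            work,
            CancellationToken.None,
            TaskCreationOptions.DenyChildAttach,
            TaskScheduler.Default);
    }
}
```

Both methods return a started Task that runs the delegate on the thread pool, so the shorter form is usually sufficient unless you need different options or a custom scheduler.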

Note

You can find more information on Task.Run and Task.Factory.StartNew at https://devblogs.microsoft.com/pfxteam/task-run-vs-task-factory-startnew/ and https://blog.stephencleary.com/2013/08/startnew-is-dangerous.html.

So far, you have seen how small tasks can be started, each with a small delay before completion. Such delays can simulate the effect caused by code accessing slow network connections or running complex calculations. In the following exercise, you'll extend your Task.Run knowledge by starting multiple tasks that run increasingly longer numeric calculations.

This serves as an example to show how potentially complex tasks can be started and allowed to run to completion in isolation from one another. Note that in a traditional synchronous implementation, the throughput of such calculations would be severely restricted, owing to the need to wait for one operation to complete before the next one can commence. It is now time to practice what you have learned through an exercise.

Exercise 5.01: Using Tasks to Perform Multiple Slow-Running Calculations

In this exercise, you will create a recursive function, Fibonacci, which calls itself twice to calculate a cumulative value. This is an example of potentially slow-running code rather than using Thread.Sleep to simulate a slow call. You will create a console app that repeatedly prompts for a number to be entered. The larger this number, the longer each task will take to calculate and output its result. The following steps will help you complete this exercise:

- In the Chapter05 folder, add a new folder called Exercises. Inside that folder, add a new folder called Exercise01. You should have the folder structure as Chapter05\Exercises\Exercise01.

- Create a new file called Program.cs.

- Add the recursive Fibonacci function as follows. You can save a little processing time by returning 1 if the requested iteration is less than or equal to 2:

using System;

using System.Globalization;

using System.Threading;

using System.Threading.Tasks;

namespace Chapter05.Exercises.Exercise01

{

class Program

{

private static long Fibonacci(int n)

{

if (n <= 2)

return 1L;

return Fibonacci(n - 1) + Fibonacci(n - 2);

}

- Add the static Main entry point to the console app and use a do-loop to prompt for a number to be entered.

- Use int.TryParse to convert the entered string into an integer, ignoring any input that is not a valid number:

public static void Main()

{

string input;

do

{

Console.WriteLine("Enter number:");

input = Console.ReadLine();

if (!string.IsNullOrEmpty(input) && int.TryParse(input, NumberStyles.Any, CultureInfo.CurrentCulture, out var number))

- Define a lambda statement that captures the current time using DateTime.Now, calls the slow-running Fibonacci function, and logs the time taken to run:

{

Task.Run(() =>

{

var now = DateTime.Now;

var fib = Fibonacci(number);

var duration = DateTime.Now.Subtract(now);

Logger.Log($"Fibonacci {number:N0} = {fib:N0} (elapsed time: {duration.TotalSeconds:N0} secs)");

});

}

The lambda is passed to Task.Run and will be started by Task.Run shortly, freeing the do-while loop to prompt for another number.

- The program shall exit the loop when an empty value is entered:

} while (!string.IsNullOrEmpty(input));

}

}

}

- Run the console app and start by entering the numbers 1 and then 2. As these are very quick calculations, they both return in under one second.

Note

When you build this program, the compiler will show a warning similar to "Converting null literal or possible null value to non-nullable type". This is a reminder that Console.ReadLine can return null, so the input variable could hold a null value.

Enter number:1

Enter number:2

11:25:11 [04] Fibonacci 1 = 1 (elapsed time: 0 secs)

Enter number:45

11:25:12 [04] Fibonacci 2 = 1 (elapsed time: 0 secs)

Enter number:44

Enter number:43

Enter number:42

Enter number:41

Enter number:40

Enter number:10

11:25:35 [08] Fibonacci 41 = 165,580,141 (elapsed time: 4 secs)

11:25:35 [09] Fibonacci 40 = 102,334,155 (elapsed time: 2 secs)

11:25:36 [07] Fibonacci 42 = 267,914,296 (elapsed time: 6 secs)

Enter number: 39

11:25:36 [09] Fibonacci 10 = 55 (elapsed time: 0 secs)

11:25:37 [05] Fibonacci 43 = 433,494,437 (elapsed time: 9 secs)

11:25:38 [06] Fibonacci 44 = 701,408,733 (elapsed time: 16 secs)

Enter number:38

11:25:44 [06] Fibonacci 38 = 39,088,169 (elapsed time: 1 secs)

11:25:44 [05] Fibonacci 39 = 63,245,986 (elapsed time: 2 secs)

11:25:48 [04] Fibonacci 45 = 1,134,903,170 (elapsed time: 27 secs)

Notice how the ManagedThreadId is [04] for both 1 and 2. This shows that the same thread was used by Task.Run for both iterations. By the time 2 was entered, the previous calculation had already been completed, so .NET decided to reuse thread 04. The same thread was used again for the value 45, which took 27 seconds to complete and so finished last, even though it was the third value requested.

You can see that entering values above 40 causes the elapsed time to increase quite dramatically (for each increase by one, the time taken almost doubles). Starting with higher numbers and descending downward, you can see that the calculations for 41, 40, and 42 were all completed before 44 and 43, even though they were started at similar times. In a few instances, the same thread appears twice. Again, this is .NET re-using idle threads to run the task's action.

Note

You can find the code used for this exercise at https://packt.link/YLYd4.

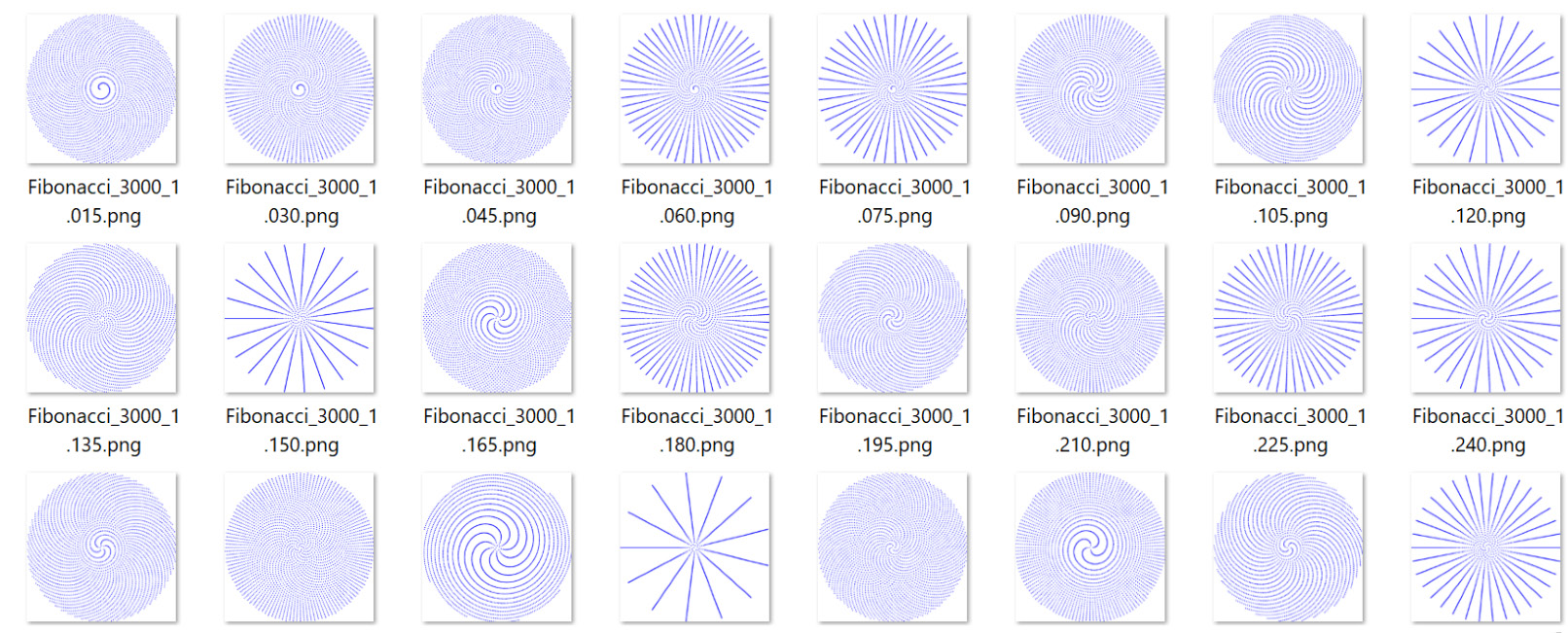

Coordinating Tasks

In the previous Exercise 5.01, you saw how multiple tasks can be started and left to run to completion without any interaction between the individual tasks. One such scenario is a process that needs to search a folder looking for image files, adding a copyright watermark to each image file found. The process can use multiple tasks, each working on a distinct file. There would be no need to coordinate each task and its resulting image.

Conversely, it is quite common to start various long-running tasks and only continue when some or all of the tasks have completed; maybe you have a collection of complex calculations that need to be started and can only perform a final calculation once the others have completed.

In the Introduction section, it was mentioned that a hiking application needed a GPS satellite signal, navigation route, and a heart rate monitor before it could be used safely. Each of these dependencies can be created using a Task and only when all of them have signaled that they are ready to be used should the application then allow the user to start with their route.

Over the next sections, you will cover various ways offered by C# to coordinate tasks. For example, you may have a requirement to start many independent tasks, each running a complex calculation, and need to calculate a final value once all of those tasks have completed. Alternatively, you may want to start downloading data from multiple websites but cancel any downloads that are taking too long to complete. The next section covers waiting for tasks, with and without a time limit.

Waiting for Tasks to Complete

Task.Wait can be used to wait for an individual task to complete. If you are working with multiple tasks, then the static Task.WaitAll method will wait for all tasks to complete. The WaitAll overloads allow cancellation and timeout options to be passed in, with most returning a Boolean value to indicate success or failure, as you can see in the following list:

- public static bool WaitAll(Task[] tasks, TimeSpan timeout): This is passed an array of Task items to wait for. It returns true if all of the tasks complete within the maximum time period specified (TimeSpan allows specific units such as hours, minutes, and seconds to be expressed).

- public static void WaitAll(Task[] tasks, CancellationToken cancellationToken): This is passed an array of Task items to wait for, and a cancellation token that can be used to coordinate the cancellation of the tasks.

- public static bool WaitAll(Task[] tasks, int millisecondsTimeout, CancellationToken cancellationToken): This is passed an array of Task items to wait for and a cancellation token that can be used to coordinate the cancellation of the tasks. millisecondsTimeout specifies the number of milliseconds to wait for all tasks to complete by.

- public static void WaitAll(params Task[] tasks): This is passed an array of Task items (or a comma-separated list of tasks) to wait for, with no timeout.
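The TimeSpan overload can be exercised with a short sketch. WaitAllDemo and AllFinishWithin are hypothetical names, and each task simply sleeps for the given number of milliseconds to stand in for real work.

```csharp
using System;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class WaitAllDemo
{
    // Starts one task per delay and reports whether all of them
    // finished before the timeout elapsed.
    public static bool AllFinishWithin(TimeSpan timeout, params int[] delaysMs)
    {
        var tasks = delaysMs
            .Select(ms => Task.Run(() => Thread.Sleep(ms)))
            .ToArray();

        // Blocks the calling thread for at most the timeout period.
        return Task.WaitAll(tasks, timeout);
    }
}
```

With a two-second timeout and delays of 50 and 100 milliseconds, the method returns true. With a 100-millisecond timeout and a task that sleeps for two seconds, it returns false, although that task still runs to completion in the background.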

If you need to wait for any task to complete from a list of tasks, then you can use Task.WaitAny. All of the WaitAny overloads return either the index number of the first completed task or -1 if a timeout occurred (the maximum amount of time to wait for).

For example, if you pass an array of five Task items and the last Task in that array completes first, then WaitAny returns the value 4 (array indexes always start counting at zero).

- public static int WaitAny(Task[] tasks, int millisecondsTimeout, CancellationToken cancellationToken): This is passed an array of Task items to wait for, the number of milliseconds to wait for any Task to complete by, and a cancellation token that can be used to coordinate the cancellation of the tasks.

- public static int WaitAny(params Task[] tasks): This is passed an array of Task items to wait for any Task to be completed.

- public static int WaitAny(Task[] tasks, int millisecondsTimeout): Here, you pass the number of milliseconds to wait for any tasks to complete.

- public static int WaitAny(Task[] tasks, CancellationToken cancellationToken): This is passed a cancellation token that can be used to coordinate the cancellation of the tasks.

- public static int WaitAny(Task[] tasks, TimeSpan timeout): This is passed the maximum time period to wait for.
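The index and timeout behavior of WaitAny can be sketched in the same way. WaitAnyDemo and FirstToFinish are hypothetical names, and the sleeping tasks are placeholders for real work.

```csharp
using System;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class WaitAnyDemo
{
    // Starts one task per delay and returns the array index of the first
    // task to finish, or -1 if none finished within the timeout.
    public static int FirstToFinish(int timeoutMs, params int[] delaysMs)
    {
        var tasks = delaysMs
            .Select(ms => Task.Run(() => Thread.Sleep(ms)))
            .ToArray();

        return Task.WaitAny(tasks, timeoutMs);
    }
}
```

Calling FirstToFinish(5000, 2000, 100, 2000) returns 1, because the second task has by far the shortest delay. Calling FirstToFinish(50, 1000, 1000) returns -1, because neither task completes within 50 milliseconds.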

Calling Wait, WaitAll, or WaitAny will block the current thread, which can negate the benefits of using a task in the first place. For this reason, it is preferable to call these from within an awaitable task, such as via Task.Run as the following example shows.

The code creates outerTask with a lambda statement, which itself then creates two inner tasks, inner1 and inner2. WaitAny is used to get the index of the first inner task to complete. In this example, inner2 will complete first as it pauses for a shorter time, so the resulting index value will be 1:

TaskWaitAnyExample.cs

var outerTask = Task.Run(() =>
{
    Logger.Log("Inside outerTask");
    var inner1 = Task.Run(() =>
    {
        Logger.Log("Inside inner1");
        Thread.Sleep(TimeSpan.FromSeconds(3D));
    });
    var inner2 = Task.Run(() =>
    {
        Logger.Log("Inside inner2");
        Thread.Sleep(TimeSpan.FromSeconds(2D));
    });

    Logger.Log("Calling WaitAny on outerTask");

You can find the complete code here: http://packt.link/CicWk.

When the code runs, it produces the following output:

15:47:43 [04] Inside outerTask

15:47:43 [01] Press ENTER

15:47:44 [04] Calling WaitAny on outerTask

15:47:44 [05] Inside inner1

15:47:44 [06] Inside inner2

15:47:46 [04] Waitany index=1

The application remains responsive because you called WaitAny from inside a Task. You have not blocked the application's main thread. As you can see, thread ID 01 has logged this message: 15:47:43 [01] Press ENTER.

This type of pattern can be used in cases where you need to fire and forget a task. For example, you may want to log an informational message to a database or a log file, but it is not essential that the flow of the program is altered if either task fails to complete.

A common progression from fire-and-forget tasks is those cases where you need to wait for several tasks to complete within a certain time limit. The next exercise will cover this scenario.

Exercise 5.02: Waiting for Multiple Tasks to Complete Within a Time Period

In this exercise, you will start three long-running tasks and decide your next course of action depending on whether they all complete within a randomly selected time span.

Here, you will see the generic Task<T> class being used. The Task<T> class includes a Result property that can be used to access the result of the Task (in this exercise, it is a string-based generic, so Result will be a string type). You won't use the Result property here as the purpose of this exercise is to show that void and generic tasks can be waited for together. Perform the following steps to complete this exercise:

- Add the main entry point to the console app:

using System;

using System.Threading;

using System.Threading.Tasks;

namespace Chapter05.Exercises.Exercise02

{

class Program

{

public static void Main()

{

Logger.Log("Starting");

- Declare a variable named taskA, passing Task.Run a lambda that pauses the current thread for 5 seconds:

var taskA = Task.Run( () =>

{

Logger.Log("Inside TaskA");

Thread.Sleep(TimeSpan.FromSeconds(5));

Logger.Log("Leaving TaskA");

return "All done A";

});

- Create two more tasks using the method group syntax:

var taskB = Task.Run(TaskBActivity);

var taskC = Task.Run(TaskCActivity);

As you may recall, this shorter syntax can be used if the compiler can determine the type of argument required for a zero- or single-parameter method.

- Now pick a random maximum timeout in seconds. Random.Next excludes its upper bound, so the timeout will be between one and nine seconds. This means that one or more of the tasks may not complete before the timeout period has elapsed:

var timeout = TimeSpan.FromSeconds(new Random().Next(1, 10));

Logger.Log($"Waiting max {timeout.TotalSeconds} seconds...");

Note that each of the tasks will still run to completion as you have not added a mechanism to stop executing the code inside the body of the Task.Run Action lambda.

- Call WaitAll, passing in the three tasks and the timeout period:

var allDone = Task.WaitAll(new[] {taskA, taskB, taskC}, timeout);

Logger.Log($"AllDone={allDone}: TaskA={taskA.Status}, TaskB={taskB.Status}, TaskC={taskC.Status}");

Console.WriteLine("Press ENTER to quit");

Console.ReadLine();

}

This will return true if all tasks complete in time. You will then log the status of all tasks and wait for Enter to be pressed to exit the application.

- Finish off by adding two slow-running methods, one that returns a string and one that returns void:

private static string TaskBActivity()

{

Logger.Log($"Inside {nameof(TaskBActivity)}");

Thread.Sleep(TimeSpan.FromSeconds(2));

Logger.Log($"Leaving {nameof(TaskBActivity)}");

return "";

}

private static void TaskCActivity()

{

Logger.Log($"Inside {nameof(TaskCActivity)}");

Thread.Sleep(TimeSpan.FromSeconds(1));

Logger.Log($"Leaving {nameof(TaskCActivity)}");

}

}

}

Each will log a message when entering and leaving the method, with a pause of a few seconds in between. The nameof expression is used to include the name of the method for extra logging information. When examining log files, it is often useful to see the name of the method that was called, and nameof keeps that name correct if the method is renamed, unlike a hardcoded string literal.

- Upon running the code, you will see the following output:

14:46:28 [01] Starting

14:46:28 [04] Inside TaskBActivity

14:46:28 [05] Inside TaskCActivity

14:46:28 [06] Inside TaskA

14:46:28 [01] Waiting max 7 seconds...

14:46:29 [05] Leaving TaskCActivity

14:46:30 [04] Leaving TaskBActivity

14:46:33 [06] Leaving TaskA

14:46:33 [01] AllDone=True: TaskA=RanToCompletion, TaskB=RanToCompletion, TaskC=RanToCompletion

Press ENTER to quit

While running the code, a seven-second timeout was randomly picked by the runtime. This allowed all tasks to complete in time, so true was returned by WaitAll and all tasks had a RanToCompletion status at that point. Notice that the thread ID, in square brackets, is different for all three tasks.

- Run the code again:

14:48:20 [01] Starting

14:48:20 [01] Waiting max 2 seconds...

14:48:20 [05] Inside TaskCActivity

14:48:20 [06] Inside TaskA

14:48:20 [04] Inside TaskBActivity

14:48:21 [05] Leaving TaskCActivity

14:48:22 [04] Leaving TaskBActivity

14:48:22 [01] AllDone=False: TaskA=Running, TaskB=Running, TaskC=RanToCompletion

Press ENTER to quit

14:48:25 [06] Leaving TaskA

This time the runtime picked a two-second maximum wait time, so the WaitAll call times out with false being returned.

You may have noticed from the output that Inside TaskBActivity can sometimes appear before Inside TaskCActivity. This demonstrates the .NET scheduler's queuing mechanism. When you call Task.Run, you are asking the scheduler to add this to its queue. There may only be a matter of milliseconds between the time that you call Task.Run and when it invokes your lambda, but this can depend on how many other tasks you have recently added to the queue; a greater number of pending tasks could increase that time period.

Interestingly, the output shows Leaving TaskBActivity, but the taskB status was still Running just after WaitAll finished waiting. This indicates that there can sometimes be a very slight delay between a task's code finishing and its status being updated.

Some three seconds after the Press ENTER to quit prompt appears, Leaving TaskA is logged. This shows that the code within any timed-out tasks will continue to run, and .NET will not stop it for you.

Note

You can find the code used for this exercise at https://packt.link/5lH0o.
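The exercise noted that a timed-out task keeps running because nothing asks it to stop. One common way to add such a mechanism is a CancellationTokenSource, which the task's code checks cooperatively. The following is only a sketch under that assumption; it is not part of the exercise code, and the names CancelAfterTimeoutSketch and RunWithTimeout are hypothetical:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class CancelAfterTimeoutSketch
{
    // Runs a slow task, waits up to 'timeout', then asks the task to stop.
    // Returns the task's final status.
    public static TaskStatus RunWithTimeout(TimeSpan timeout)
    {
        using var cts = new CancellationTokenSource();
        var token = cts.Token;

        var slowTask = Task.Run(() =>
        {
            // Simulate 50 small units of work, checking the token between them.
            for (var i = 0; i < 50; i++)
            {
                token.ThrowIfCancellationRequested();
                Thread.Sleep(TimeSpan.FromMilliseconds(100));
            }
        }, token);

        var completedInTime = slowTask.Wait(timeout);
        if (!completedInTime)
        {
            cts.Cancel(); // request cancellation; the task stops at its next check
            try
            {
                slowTask.Wait(); // observe the outcome of the cancellation
            }
            catch (AggregateException ex) when (ex.InnerException is OperationCanceledException)
            {
                // Expected: the task ended early because cancellation was requested.
            }
        }

        return slowTask.Status;
    }

    public static void Main()
    {
        Console.WriteLine($"Status={RunWithTimeout(TimeSpan.FromMilliseconds(300))}");
    }
}
```

With a 300-millisecond timeout, the task is cancelled long before its five seconds of simulated work are done, so its status ends up as Canceled rather than Running. Cancellation is cooperative: code that never checks the token would still run to completion.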

Continuation Tasks

So far, you have created tasks that are independent of one another, but what if you need to continue a task with the results of the previous task? Rather than blocking the current thread, by calling Wait or accessing the Result property, this can be achieved using the Task ContinueWith methods.

These methods return a new task, referred to as a continuation task, or more simply, a continuation, which can consume the results of the previous task, known as the antecedent.

As with standard tasks, they do not block the caller thread. There are several ContinueWith overloads available, many allowing extensive customization. A few of the more commonly used overloads are as follows:

- public Task ContinueWith(Action<Task<TResult>> continuationAction): This takes an Action<Task<TResult>> delegate to run when the previous task completes.

- public Task ContinueWith(Action<Task<TResult>> continuationAction, CancellationToken cancellationToken): This takes a delegate to run and a cancellation token that can be used to coordinate the cancellation of the continuation.

- public Task ContinueWith(Action<Task<TResult>> continuationAction, TaskScheduler scheduler): This also takes a delegate to run, along with a low-level TaskScheduler that can be used to queue the continuation.

- public Task ContinueWith(Action<Task<TResult>> continuationAction, TaskContinuationOptions continuationOptions): This takes a delegate to run, with the behavior of the continuation specified using TaskContinuationOptions. For example, specifying NotOnCanceled indicates that you do not want the continuation to be called if the previous task is canceled.

Continuations have an initial WaitingForActivation status. The .NET runtime will execute the continuation once the antecedent task or tasks have completed. It is important to note that you do not need to start a continuation, and attempting to do so will result in an exception.
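The status and start-up behavior described above can be observed with a short sketch. It assumes a TaskCompletionSource as the antecedent, purely so that the continuation cannot run before it is inspected; the class and method names are hypothetical:

```csharp
using System;
using System.Threading.Tasks;

public static class ContinuationStatusSketch
{
    // Returns the continuation's status before the antecedent completes,
    // whether calling Start on it threw, and its status afterwards.
    public static (TaskStatus Before, bool StartThrew, TaskStatus After) Inspect()
    {
        // A TaskCompletionSource gives an antecedent that is completed by hand,
        // so the continuation cannot run before it is inspected.
        var source = new TaskCompletionSource<double>();

        Task continuation = source.Task.ContinueWith(
            prev => Console.WriteLine($"Antecedent status={prev.Status}"),
            TaskContinuationOptions.NotOnCanceled);

        var before = continuation.Status;

        var startThrew = false;
        try
        {
            continuation.Start(); // continuations are started by .NET, not by the caller
        }
        catch (InvalidOperationException)
        {
            startThrew = true;
        }

        source.SetResult(76.44); // completing the antecedent triggers the continuation
        continuation.Wait();
        return (before, startThrew, continuation.Status);
    }

    public static void Main()
    {
        var (before, startThrew, after) = Inspect();
        Console.WriteLine($"Before={before}, StartThrew={startThrew}, After={after}");
    }
}
```

The continuation reports WaitingForActivation until the antecedent is completed, the Start call is rejected with an InvalidOperationException, and the final status is RanToCompletion.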

The following example simulates calling a long-running function, GetStockPrice (this may be some sort of web service or database call that takes a few seconds to return):

ContinuationExamples.cs

class ContinuationExamples
{
    public static void Main()
    {
        Logger.Log("Start...");
        Task.Run(GetStockPrice)
            .ContinueWith(prev =>
            {
                Logger.Log($"GetPrice returned {prev.Result:N2}, status={prev.Status}");
            });

        Console.ReadLine();
    }

You can find the complete code here: http://packt.link/rpNcx.

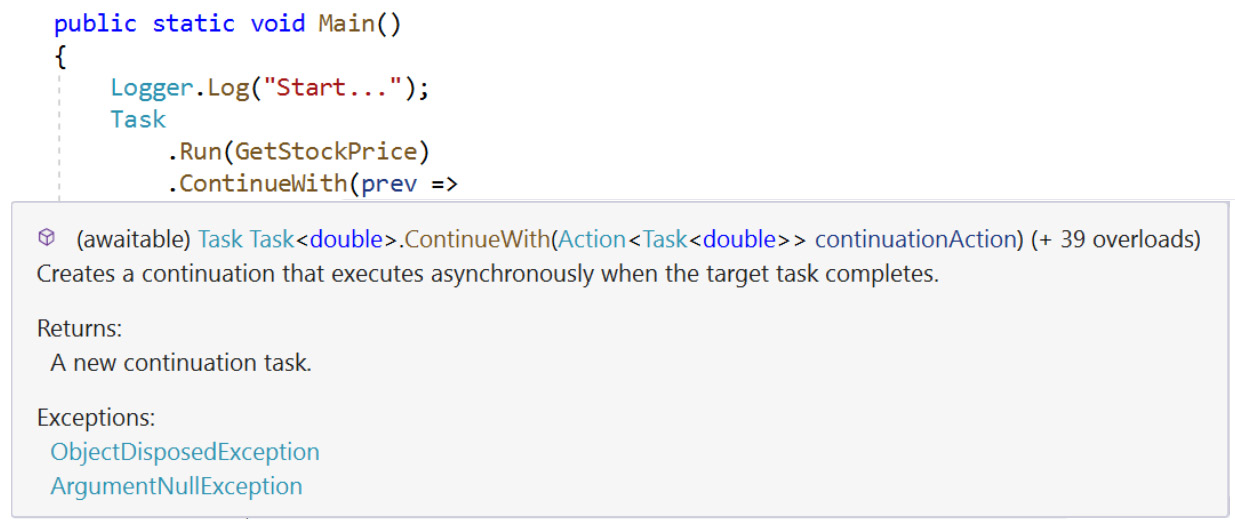

The call to GetStockPrice returns a double, which results in the generic Task<double> being passed to as a continuation (see the highlighted part). The prev parameter is a generic Action of type Task<double>, allowing you to access the antecedent task and its Result to retrieve the value returned from GetStockPrice.

If you hover your mouse over the ContinueWith method, you will see the IntelliSense description for it as follows:

Figure 5.1: ContinueWith method signature

Note

The ContinueWith method has various options that can be used to fine-tune behavior, and you can get more details about them from https://docs.microsoft.com/en-us/dotnet/api/system.threading.tasks.taskcontinuationoptions.

Running the example produces an output similar to the following:

09:30:45 [01] Start...

09:30:45 [03] Inside GetStockPrice

09:30:50 [04] GetPrice returned 76.44, status=RanToCompletion

In the output, thread [01] represents the console's main thread. The task that called GetStockPrice was executed by thread [03], yet the continuation was executed using a different thread, thread [04].

Note

You can find the code used for this example at https://packt.link/rpNcx.

The continuation running on a different thread may not be a problem, but it certainly will be an issue if you are working on UWP, WPF, or WinForms UI apps where it's essential that UI elements are updated using the main UI thread (unless you are using binding semantics).

It is worth noting that the TaskContinuationOptions.OnlyOnRanToCompletion option can be used to ensure the continuation only runs if the antecedent task has run to completion first. For example, you may create a Task that fetches customers' orders from a database and then use a continuation task to calculate the average order value. If the previous task fails or is canceled by the user, then there is no point in wasting processing power to calculate the average if the user no longer cares about the result.
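To illustrate, here is a minimal sketch of the order-average scenario, assuming a hypothetical in-memory fetch in place of a real database call. When the antecedent fails, a continuation created with OnlyOnRanToCompletion is never run and ends up canceled:

```csharp
using System;
using System.Linq;
using System.Threading.Tasks;

public static class OnlyOnRanToCompletionSketch
{
    // Returns the final status of a continuation attached with OnlyOnRanToCompletion.
    public static TaskStatus ContinuationStatus(bool antecedentFails)
    {
        // Hypothetical stand-in for fetching order values from a database.
        Task<double[]> fetchOrders = Task.Run(() =>
        {
            if (antecedentFails)
                throw new InvalidOperationException("Database unavailable");
            return new[] { 10.0, 20.0, 30.0 };
        });

        // The average is only calculated if the fetch ran to completion.
        Task<double> average = fetchOrders.ContinueWith(
            prev => prev.Result.Average(),
            TaskContinuationOptions.OnlyOnRanToCompletion);

        try
        {
            average.Wait();
        }
        catch (AggregateException)
        {
            // Waiting on a canceled continuation throws; the status below says why.
        }

        return average.Status;
    }

    public static void Main()
    {
        Console.WriteLine($"Fetch succeeds: {ContinuationStatus(antecedentFails: false)}");
        Console.WriteLine($"Fetch fails:    {ContinuationStatus(antecedentFails: true)}");
    }
}
```

When the fetch succeeds, the continuation reports RanToCompletion; when it fails, the continuation reports Canceled and its lambda is never invoked.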

If you access the Task<T> Result property on a failed or canceled antecedent task, this will result in an AggregateException being thrown. This will be covered in more detail later.
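Ahead of that fuller coverage, the following sketch shows the wrapping behavior in its simplest form. It assumes a hypothetical GetStockPrice that always fails, and a continuation that catches the AggregateException raised when it reads the antecedent's Result:

```csharp
using System;
using System.Threading.Tasks;

public static class FaultedResultSketch
{
    // Hypothetical price lookup that always fails.
    private static double GetStockPrice() =>
        throw new InvalidOperationException("Price service offline");

    // Returns a description of what the continuation saw when it read prev.Result.
    public static string DescribeFailure()
    {
        Task<string> continuation = Task.Run(() => GetStockPrice())
            .ContinueWith(prev =>
            {
                try
                {
                    return $"Price={prev.Result}";
                }
                catch (AggregateException ex)
                {
                    // The original exception is wrapped inside the AggregateException.
                    return $"{prev.Status}: {ex.InnerException?.Message}";
                }
            });

        return continuation.Result;
    }

    public static void Main()
    {
        Console.WriteLine(DescribeFailure());
    }
}
```

The continuation itself completes normally here, because it handles the exception; the string it returns is "Faulted: Price service offline".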

Using Task.WhenAll and Task.WhenAny with Multiple Tasks

You have seen how a single task can be used to create a continuation task, but what if you have multiple tasks and need to continue with a final operation when any or all of the previous tasks have completed?

Earlier, the Task.WaitAny and Task.WaitAll methods were used to wait for tasks to complete, but these block the current thread. This is where Task.WhenAny and Task.WhenAll can be used. They return a new Task that completes when any, or all, of the preceding tasks have completed, and a continuation can then be attached to that task.

There are four WhenAll overloads, two that return a Task and two that return a generic Task<T> allowing the task's result to be accessed:

- public static Task WhenAll(IEnumerable<Task> tasks): This continues when the collection of tasks completes.

- public static Task WhenAll(params Task[] tasks): This continues when the array of tasks completes.

- public static Task<TResult[]> WhenAll<TResult>(params Task<TResult>[] tasks): This continues when the array of generic Task<T> items completes.

- public static Task<TResult[]> WhenAll<TResult>(IEnumerable<Task<TResult>> tasks): This continues when the collection of generic Task<T> items completes.

WhenAny has a similar set of overloads but returns the Task or Task<T> that is the first task to complete. You'll next perform a few exercises showing WhenAll and WhenAny in practice.
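Before the exercises, the following sketch shows the two methods side by side. SlowValue is a hypothetical helper that returns a value after a pause, and the blocking Result calls are there only to keep the sketch short:

```csharp
using System;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class WhenAllWhenAnySketch
{
    // Hypothetical slow call that returns 'value' after 'delayMs' milliseconds.
    private static Task<int> SlowValue(int value, int delayMs) =>
        Task.Run(() =>
        {
            Thread.Sleep(delayMs);
            return value;
        });

    // WhenAll produces a Task<int[]>; the continuation sums the array of results.
    public static int SumWhenAllComplete()
    {
        Task<int> sum = Task
            .WhenAll(SlowValue(1, 300), SlowValue(2, 100), SlowValue(3, 200))
            .ContinueWith(all => all.Result.Sum());

        return sum.Result; // blocks here only to keep the sketch short
    }

    // WhenAny produces a Task<Task<int>>; the inner task is the first one to finish.
    public static int FirstToComplete()
    {
        Task<Task<int>> any = Task.WhenAny(SlowValue(1, 1500), SlowValue(2, 100));
        return any.Result.Result;
    }

    public static void Main()
    {
        Console.WriteLine($"Sum of all results: {SumWhenAllComplete()}");
        Console.WriteLine($"First to complete: {FirstToComplete()}");
    }
}
```

The WhenAll result array holds one entry per task, in the order the tasks were passed in rather than the order in which they finished. With the delays used here, the task returning 2 normally wins the WhenAny race.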

Exercise 5.03: Waiting for All Tasks to Complete

Say you have been asked by a car dealer to create a console application that calculates the average sales value for cars sold across different regions. A dealership is a busy place, but they know it may take a while to fetch and calculate the average. For this reason, they want to enter a maximum number of seconds that they are prepared to wait for the average calculation. Any longer and they will leave the app and ignore the result.

The dealership has 10 regional sales hubs. To calculate the average, you need to first invoke a method called FetchSales, which returns a list of CarSale items for each of these regions.

Each call to FetchSales could be to a potentially slow-running service (you will implement random pauses to simulate such a delay) so you need to use a Task for each as you can't know for sure how long each call will take to complete. You also do not want slow-running tasks to affect other tasks, but to calculate a valid average, it's important to have all results returned before continuing.

Create a SalesLoader class that implements IEnumerable<CarSale> FetchSales() to return the car sales details. Then, a SalesAggregator class should be passed a list of loaders (in this exercise, there will be up to 10 loader instances, one for each region). The aggregator will wait for all loaders to finish using Task.WhenAll before continuing with a task that calculates the average across all regions.

Perform the following steps to do so:

- First, create a CarSale record. The constructor accepts two values, the name of the car and its sale price (name and salePrice):

using System;

using System.Collections.Generic;

using System.Globalization;

using System.Linq;

using System.Threading;

using System.Threading.Tasks;

namespace Chapter05.Exercises.Exercise03

{

public record CarSale

{

public CarSale(string name, double salePrice)

=> (Name, SalePrice) = (name, salePrice);

public string Name { get; }

public double SalePrice { get; }

}

- Now create an interface, ISalesLoader, that represents the sales data loading service:

public interface ISalesLoader

{

public IEnumerable<CarSale> FetchSales();

}

It has just one call, FetchSales, returning an enumerable of type CarSale. For now, it's not important to know how the loader works; just that it returns a list of car sales when called. Using an interface here allows using various types of loader as needed.

- Use the aggregator class to call an ISalesLoader implementation:

public static class SalesAggregator

{

public static Task<double> Average(IEnumerable<ISalesLoader> loaders)

{

It is declared as static as there is no state between calls. Define an Average function that is passed an enumerable of ISalesLoader items and returns a generic Task<Double> for the final average calculation.

- For each of the loader parameters, use a LINQ projection to pass a loader.FetchSales method to Task.Run:

var loaderTasks = loaders.Select(ldr => Task.Run(ldr.FetchSales));

return Task

.WhenAll(loaderTasks)

.ContinueWith(tasks =>

Each of these will return a Task<IEnumerable<CarSale>> instance. WhenAll is used to create a single task that continues when all of the loader tasks have completed via a ContinueWith call.

- Use the LINQ SelectMany method to grab all of the CarSale items from every loader call result, before calling the LINQ Average method on the SalePrice property of each CarSale item:

{

var average = tasks.Result

.SelectMany(t => t)

.Average(car => car.SalePrice);

return average;

});

}

}

}

- Implement the ISalesLoader interface from a class called SalesLoader:

public class SalesLoader : ISalesLoader

{

private readonly Random _random;

private readonly string _name;

public SalesLoader(int id, Random rand)

{

_name = $"Loader#{id}";

_random = rand;

}

The constructor will be passed an int variable used for logging and a Random instance to help create a random number of CarSale items.

- Your ISalesLoader implementation requires a FetchSales function. Include a random delay of 1 or 2 seconds (the upper bound passed to Random.Next is exclusive) to simulate a less reliable service:

public IEnumerable<CarSale> FetchSales()

{

var delay = _random.Next(1, 3);

Logger.Log($"FetchSales {_name} sleeping for {delay} seconds ...");

Thread.Sleep(TimeSpan.FromSeconds(delay));

You want to test how your application behaves with various time delays, which is why the Random class is used.

- Use Enumerable.Range and _random.Next to pick a random number from one to four (again, the upper bound of five is exclusive):

var sales = Enumerable

.Range(1, _random.Next(1, 5))

.Select(n => GetRandomCar())

.ToList();

foreach (var car in sales)

Logger.Log($"FetchSales {_name} found: {car.Name} @ {car.SalePrice:N0}");

return sales;

}

This is the total number of CarSale items to return using your GetRandomCar function.

- Use the GetRandomCar function to generate a CarSale item with a random manufacturer's name from a hardcoded list.

- Use the _carNames.Length property to pick a random index number between zero and four for the car's name:

private readonly string[] _carNames = { "Ford", "BMW", "Fiat", "Mercedes", "Porsche" };

private CarSale GetRandomCar()

{

var nameIndex = _random.Next(_carNames.Length);

return new CarSale(

_carNames[nameIndex], _random.NextDouble() * 1000);

}

}

- Now, create your console app to test this out:

public class Program

{

public static void Main()

{

var random = new Random();

const int MaxSalesHubs = 10;

string input;

do

{

Console.WriteLine("Max wait time (in seconds):");

input = Console.ReadLine();

if (string.IsNullOrEmpty(input))

continue;

Your app will repeatedly ask for a maximum time that the user is prepared to wait while data is downloaded. Once all the data has been downloaded, the app will use this to calculate an average price. Pressing Enter alone will result in the program loop ending. MaxSalesHubs is the maximum number of sales hubs to request data for.

- Convert the entered value into an int type, then use Enumerable.Range again to create a random number of new SalesLoader instances (MaxSalesHubs is 10, but because Random.Next excludes its upper bound, between one and nine loaders are created):

if (int.TryParse(input, NumberStyles.Any, CultureInfo.CurrentCulture, out var maxDelay))

{

var loaders = Enumerable.Range(1, random.Next(1, MaxSalesHubs))

.Select(n => new SalesLoader(n, random))

.ToList();

- Pass loaders to the static SalesAggregator.Average method to receive a Task<Double>.

- Call Wait, passing in the maximum wait time:

var averageTask = SalesAggregator.Average(loaders);

var hasCompleted = averageTask.Wait( TimeSpan.FromSeconds(maxDelay));

If the Wait call returns in time, hasCompleted will be true. Result is read only in that case, because reading Result on a task that has not finished blocks the calling thread until it does, which would defeat the timeout.

- Finish off by checking hasCompleted and log a message accordingly:

if (hasCompleted)

{

Logger.Log($"Average={averageTask.Result:N0}");

}

else

{

Logger.Log("Timeout!");

}

}

} while (input != string.Empty);

}

}

}

- When running the console app and entering a short maximum wait of 1 second, you see three loader instances randomly created:

Max wait time (in seconds):1

10:52:49 [04] FetchSales Loader#1 sleeping for 1 seconds ...

10:52:49 [06] FetchSales Loader#3 sleeping for 1 seconds ...

10:52:49 [05] FetchSales Loader#2 sleeping for 1 seconds ...

10:52:50 [04] FetchSales Loader#1 found: Mercedes @ 362

10:52:50 [04] FetchSales Loader#1 found: Ford @ 993

10:52:50 [06] FetchSales Loader#3 found: Fiat @ 645

10:52:50 [05] FetchSales Loader#2 found: Mercedes @ 922

10:52:50 [06] FetchSales Loader#3 found: Ford @ 9

10:52:50 [05] FetchSales Loader#2 found: Porsche @ 859

10:52:50 [05] FetchSales Loader#2 found: Mercedes @ 612

10:52:50 [01] Timeout!

Each loader sleeps for 1 second (you can see various thread IDs are logged) before returning a random list of CarSale records. You soon reach the maximum timeout value, hence the message Timeout! with no average value displayed.

- Enter a larger timeout period of 10 seconds:

Max wait time (in seconds):10

20:08:41 [05] FetchSales Loader#1 sleeping for 2 seconds ...

20:08:41 [12] FetchSales Loader#4 sleeping for 1 seconds ...

20:08:41 [08] FetchSales Loader#2 sleeping for 1 seconds ...

20:08:41 [11] FetchSales Loader#3 sleeping for 1 seconds ...

20:08:41 [15] FetchSales Loader#5 sleeping for 2 seconds ...

20:08:41 [13] FetchSales Loader#6 sleeping for 2 seconds ...

20:08:41 [14] FetchSales Loader#7 sleeping for 1 seconds ...

20:08:42 [08] FetchSales Loader#2 found: Porsche @ 735

20:08:42 [08] FetchSales Loader#2 found: Fiat @ 930

20:08:42 [11] FetchSales Loader#3 found: Porsche @ 735

20:08:42 [12] FetchSales Loader#4 found: Porsche @ 735

20:08:42 [08] FetchSales Loader#2 found: Porsche @ 777

20:08:42 [11] FetchSales Loader#3 found: Ford @ 500

20:08:42 [12] FetchSales Loader#4 found: Ford @ 500

20:08:42 [12] FetchSales Loader#4 found: Porsche @ 710

20:08:42 [14] FetchSales Loader#7 found: Ford @ 144

20:08:43 [05] FetchSales Loader#1 found: Fiat @ 649

20:08:43 [15] FetchSales Loader#5 found: Ford @ 779

20:08:43 [13] FetchSales Loader#6 found: Porsche @ 763

20:08:43 [15] FetchSales Loader#5 found: Fiat @ 137

20:08:43 [13] FetchSales Loader#6 found: BMW @ 415

20:08:43 [15] FetchSales Loader#5 found: Fiat @ 853

20:08:43 [15] FetchSales Loader#5 found: Porsche @ 857

20:08:43 [01] Average=639

Entering a value of 10 seconds allows all 7 randomly created loaders to complete in time and the average value of 639 to be calculated.

Note

You can find the code used for this exercise at https://packt.link/kbToQ.
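The loader in this exercise relies on Random.Next(minValue, maxValue), whose upper bound is exclusive. That is why the sample output only ever shows delays of 1 or 2 seconds. The following sketch, with hypothetical names, makes the range visible by collecting the distinct values produced over many draws:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class RandomRangeSketch
{
    // Collects the distinct values produced by Random.Next(min, max) over many draws.
    public static SortedSet<int> DistinctValues(int min, int max, int draws = 10_000)
    {
        var random = new Random();
        return new SortedSet<int>(
            Enumerable.Range(0, draws).Select(_ => random.Next(min, max)));
    }

    public static void Main()
    {
        // The same bounds that the exercise uses for the delay and the sales count.
        Console.WriteLine($"Next(1, 3): {string.Join(", ", DistinctValues(1, 3))}");
        Console.WriteLine($"Next(1, 5): {string.Join(", ", DistinctValues(1, 5))}");
    }
}
```

Over thousands of draws, Next(1, 3) yields only 1 and 2, and Next(1, 5) yields 1 to 4. To include the upper value, pass one more than the maximum you want.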

So far, this chapter has considered the various ways that individual tasks can be created and how static Task methods are used to create tasks that are started for you. You saw how Task.Factory.StartNew is used to create configured tasks, albeit with a longer set of configuration parameters. The Task.Run methods, which were added to .NET more recently, are preferable for most regular scenarios because of their more concise signatures.

Using continuations, single and multiple tasks can be left to run in isolation, only continuing with a final task when all or any of the preceding tasks have run to completion.

Now it is time to look at the async and await keywords to run asynchronous code. These keywords are a relatively new addition to the C# language. The Task.Factory.StartNew and Task.Run methods can be found in older C# applications, but hopefully, you will see that async/await provides a much clearer syntax.

Asynchronous Programming

So far, you have created tasks and used the static Task factory methods to run and coordinate such tasks. In earlier versions of C#, these were the only ways to create tasks.

The C# language now provides the async and await keywords to mark a method as asynchronous. This is the preferred way to run asynchronous code. Using the async/await style results in less code and the code that is created is generally easier to grasp and therefore easier to maintain.

Note

You may often find that legacy concurrent applications that were originally created using the Task.Factory.StartNew methods are subsequently updated to use the equivalent Task.Run methods or are updated directly to the async/await style.

The async keyword indicates that the method may return to the caller before it has completed its operations; the caller should therefore wait for it to complete at some point in time.

Adding the async keyword to a method instructs the compiler that it may need to generate additional code to create a state machine. In essence, a state machine extracts the logic from your original method into a series of delegates and local variables that allows code to continue onto the next statement following an await expression. The compiler generates delegates that can jump back to the same location in the method once they have completed.

Note

You don't normally see this extra compiled code, but if you are interested in learning more about state machines in C#, visit https://devblogs.microsoft.com/premier-developer/dissecting-the-async-methods-in-c.

Adding the async keyword does not mean that all or any part of the method will actually run in an asynchronous manner. When an async method is executed, it starts off running synchronously until it comes to a section of code with the await keyword. At this point, the awaitable block of code (in the following example, the BuildGreetings call is awaitable because it returns a Task) is checked to see if it has already been completed. If so, the method continues executing synchronously. If not, the asynchronous method is paused and returns an incomplete Task to the caller. This Task will be completed once the async code has finished.

In the following console app, the entry point, static Main, has been marked as async and given the Task return type. An async Main entry point must return Task or Task<int> rather than void or int, so that there is a task to wait on before the console app closes:
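The difference between the two paths can be seen in a small sketch. It assumes a hypothetical Work method that awaits a task supplied by the caller, and uses a TaskCompletionSource so the caller decides whether that task is already complete:

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class AsyncFlowSketch
{
    // Records a message, awaits 'gate', then records another message.
    private static async Task Work(Task gate, List<string> log)
    {
        log.Add("Work: before await");   // always runs synchronously
        await gate;                       // pauses only if 'gate' has not completed
        log.Add("Work: after await");
    }

    // Returns true if Work had already finished by the time it returned to the caller.
    public static bool FinishedSynchronously(bool gateAlreadyComplete, List<string> log)
    {
        var gate = new TaskCompletionSource<bool>();
        if (gateAlreadyComplete)
            gate.SetResult(true);

        Task work = Work(gate.Task, log);
        bool finished = work.IsCompleted;
        log.Add($"Caller: work finished = {finished}");

        if (!gateAlreadyComplete)
            gate.SetResult(true);  // let the paused method resume
        work.Wait();
        return finished;
    }

    public static void Main()
    {
        var log = new List<string>();
        FinishedSynchronously(gateAlreadyComplete: true, log);
        FinishedSynchronously(gateAlreadyComplete: false, log);
        log.ForEach(Console.WriteLine);
    }
}
```

When the awaited task is already complete, Work runs straight through and the caller receives a finished task. When it is not, the caller gets control back after the first log entry, and the "after await" entry appears only once the gate is completed.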

AsyncExamples.cs

using System;
using System.Threading;
using System.Threading.Tasks;

namespace Chapter05.Examples
{
    public class AsyncExamples
    {
        public static async Task Main()
        {
            Logger.Log("Starting");
            await BuildGreetings();

            Logger.Log("Press Enter");
            Console.ReadLine();

You can find the complete code here: http://packt.link/CsCek.

Running the example produces an output like this:

18:20:31 [01] Starting

18:20:31 [01] Morning

18:20:41 [04] Morning...Afternoon

18:20:42 [04] Morning...Afternoon...Evening

18:20:42 [04] Press Enter

As soon as Main runs, it logs Starting. Notice how the ThreadId is [01]. As you saw earlier, the console app's main thread is numbered 1; it is displayed as 01 because the Logger.Log method uses the 00 format string, which adds a leading 0 to numbers in the range zero to nine.

Then the asynchronous method BuildGreetings is called. It sets the string message variable to "Morning" and logs the message. The ThreadId is still [01]; this is currently running synchronously.

So far, you have been using Thread.Sleep to block the calling thread in order to simulate long-running operations, but async/await makes it easier to simulate slow actions using the static Task.Delay method and awaiting that call. Task.Delay returns a task, so it can also be used in continuation tasks.

Using Task.Delay, you will make two distinct awaitable calls (one that waits for 10 seconds and the second for two seconds), before continuing and appending to your local message string. The two Task.Delay calls could have been any method in your code that returns a Task.
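As a rough sketch of how awaited delays combine, the following program times two Task.Delay calls awaited one after the other, and then the same two delays started together and awaited with Task.WhenAll. The class and method names are hypothetical and the delays are shortened to milliseconds:

```csharp
using System;
using System.Diagnostics;
using System.Threading.Tasks;

public static class DelaySketch
{
    // Awaits two delays one after the other; elapsed time is roughly their sum.
    public static async Task<TimeSpan> Sequential(TimeSpan first, TimeSpan second)
    {
        var watch = Stopwatch.StartNew();
        await Task.Delay(first);
        await Task.Delay(second);
        return watch.Elapsed;
    }

    // Starts both delays, then awaits them together; elapsed time is roughly the longer one.
    public static async Task<TimeSpan> Concurrent(TimeSpan first, TimeSpan second)
    {
        var watch = Stopwatch.StartNew();
        await Task.WhenAll(Task.Delay(first), Task.Delay(second));
        return watch.Elapsed;
    }

    public static async Task Main()
    {
        var a = TimeSpan.FromMilliseconds(400);
        var b = TimeSpan.FromMilliseconds(200);

        var sequential = await Sequential(a, b);
        var concurrent = await Concurrent(a, b);

        Console.WriteLine($"Sequential: {sequential.TotalMilliseconds:N0} ms");
        Console.WriteLine($"Concurrent: {concurrent.TotalMilliseconds:N0} ms");
    }
}
```

Exact timings vary from run to run, but the sequential version should take noticeably longer than the concurrent one. Unlike Thread.Sleep, neither version holds a thread while it waits.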

The great thing here is that each awaited section gets its correct state in the order that it was declared in the code, irrespective of waiting 10 (or two) seconds prior. The thread IDs have all changed from [01] to [04]. This tells you that a different thread is running these statements. Even the very last Press Enter message is logged by a different thread from the original one.

Async/await makes it easier to run a series of task-based operations, and it can be used interchangeably with the familiar WhenAll, WhenAny, and ContinueWith methods.

The following example shows how multiple async/await calls can be applied at various stages in a program using a mixture of various awaitable calls. This simulates an application that makes a call to a database (FetchPendingAccounts) to fetch a list of user accounts. Each user in the pending accounts list is given a unique ID (using a task for each user).

Based on the user's region, an account is then created in the northern region or the other region, again, using a task for each. Finally, an awaitable Task.WhenAll call signals that everything has been completed.

using System;

using System.Collections.Generic;

using System.Linq;

using System.Threading.Tasks;

namespace Chapter05.Examples

{

Use an enum to define a RegionName:

public enum RegionName { North, East, South, West };

A User record constructor is passed a userName and the user's region:

public record User

{

public User(string userName, RegionName region)

=> (UserName, Region) = (userName, region);

public string UserName { get; }

public RegionName Region { get; }

public string ID { get; set; }

}

AccountGenerator is the main controlling class. It contains an async CreateAccounts method that can be awaited by a console app (this is implemented at the end of the example):

public class AccountGenerator

{

public async Task CreateAccounts()

{

Using the await keyword, you define an awaitable call to FetchPendingAccounts:

var users = await FetchPendingAccounts();

For each one of the users returned by FetchPendingAccounts, you make an awaitable call to GenerateId. This shows that a loop can contain multiple awaitable calls. The runtime will set the user ID for the correct user instance:

foreach (var user in users)

{

var id = await GenerateId();

user.ID = id;

}

Using the LINQ Select function, you create a list of tasks. For each user, a Northern or Other account is created based on the user's region (each one of the calls is a Task per user):

var accountCreationTasks = users.Select(

user => user.Region == RegionName.North

? Task.Run(() => CreateNorthernAccount(user))

: Task.Run(() => CreateOtherAccount(user)))

.ToList();

The list of account creation tasks is awaited using the static WhenAll call. Once this completes, UpdatePendingAccounts will be called, passing in the updated user list. This shows that you can pass lists of tasks between async statements:

Logger.Log($"Creating {accountCreationTasks.Count} accounts");

await Task.WhenAll(accountCreationTasks);

var updatedAccountTask = UpdatePendingAccounts(users);

await updatedAccountTask;

Logger.Log($"Updated {updatedAccountTask.Result} pending accounts");

}

The FetchPendingAccounts method returns a Task containing a list of users (here you simulate a delay of 3 seconds using Task.Delay):

private async Task<List<User>> FetchPendingAccounts()

{

Logger.Log("Fetching pending accounts...");

await Task.Delay(TimeSpan.FromSeconds(3D));

var users = new List<User>

{

new User("AnnH", RegionName.North),

new User("EmmaJ", RegionName.North),

new User("SophieA", RegionName.South),

new User("LucyG", RegionName.West),

};

Logger.Log($"Found {users.Count} pending accounts");

return users;

}

GenerateId uses Task.FromResult to generate a globally unique ID using the Guid class. Task.FromResult is used when you want to return a result but do not need to create a running task as you would with Task.Run:

private static Task<string> GenerateId()

{

return Task.FromResult(Guid.NewGuid().ToString());

}

The two Task<bool> methods create either a northern account or an other account. Here, you return true to indicate that each account creation call was successful, regardless:

private static async Task<bool> CreateNorthernAccount(User user)

{

await Task.Delay(TimeSpan.FromSeconds(2D));

Logger.Log($"Created northern account for {user.UserName}");

return true;

}

private static async Task<bool> CreateOtherAccount(User user)

{

await Task.Delay(TimeSpan.FromSeconds(1D));

Logger.Log($"Created other account for {user.UserName}");

return true;

}

Next, UpdatePendingAccounts is passed a list of users. For each user, you create a task that simulates a slow-running call to update that user, and then return a count of the number of users that were updated:

private static async Task<int> UpdatePendingAccounts(IEnumerable<User> users)

{

var updateAccountTasks = users.Select(usr => Task.Run(

async () =>

{

await Task.Delay(TimeSpan.FromSeconds(2D));

return true;

}))

.ToList();

await Task.WhenAll(updateAccountTasks);

return updateAccountTasks.Count(t => t.Result);

}

}

Finally, the console app creates an AccountGenerator instance and waits for CreateAccounts to finish before writing an All done message:

public static class AsyncUsersExampleProgram

{

public static async Task Main()

{

Logger.Log("Starting");

await new AccountGenerator().CreateAccounts();

Logger.Log("All done");

Console.ReadLine();

}

}

}

Running the console app produces this output:

20:12:38 [01] Starting

20:12:38 [01] Fetching pending accounts...

20:12:41 [04] Found 4 pending accounts

20:12:41 [04] Creating 4 accounts

20:12:42 [04] Created other account for SophieA

20:12:42 [07] Created other account for LucyG

20:12:43 [04] Created northern account for EmmaJ

20:12:43 [05] Created northern account for AnnH

20:12:45 [05] Updated 4 pending accounts

20:12:45 [05] All done

Here, you can see that thread [01] writes the Starting message. This is the application's main thread. Note, too, that the main thread also writes Fetching pending accounts... from the FetchPendingAccounts method. This is still running synchronously as the awaitable block (Task.Delay) has not yet been reached.

Threads [04], [05], and [07] create the four user accounts, as you used Task.Run to call the CreateNorthernAccount or CreateOtherAccount methods. Thread [05] runs the last statement in CreateAccounts: Updated 4 pending accounts. The thread numbers might differ on your system because .NET uses an internal pool of threads, and the thread chosen for each piece of work depends on which pool threads are free at the time.

Note

You can find the code used for this example at https://packt.link/ZIK8k.

Async Lambda Expressions

Chapter 3, Delegates, Events, and Lambdas, looked at lambda expressions and how they can be used to create succinct code. You can also use the async keyword with lambda expressions, for example, to create an event handler that contains awaitable calls.
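Before looking at an event handler, it may help to see the smallest form of an async lambda. The sketch below assigns one to a Func<int, Task<int>> delegate and awaits its result. The AsyncLambdaSketch class, the DoubleLater field, and the 50 ms delay are hypothetical names and values chosen for illustration:

```csharp
using System;
using System.Threading.Tasks;

public static class AsyncLambdaSketch
{
    // An async lambda assigned to a delegate type that returns Task<int>.
    public static readonly Func<int, Task<int>> DoubleLater = async value =>
    {
        // Simulated asynchronous work; 50 ms is an arbitrary value.
        await Task.Delay(50);
        return value * 2;
    };

    public static async Task Main()
    {
        var result = await DoubleLater(21);
        Console.WriteLine(result); // prints 42
    }
}
```

Because the lambda is marked async, the compiler wraps the returned int in a Task<int>, just as it does for an async method.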

The following example uses the WebClient class to show two different ways to download data from a website (downloading web data is covered in greater detail in Chapter 8, Creating and Using Web API Clients, and Chapter 9, Creating API Services):

using System;

using System.Net;

using System.Net.Http;

using System.Threading.Tasks;

namespace Chapter05.Examples

{

public class AsyncLambdaExamples

{

public static async Task Main()

{

const string Url = "https://www.packtpub.com/";

using var client = new WebClient();

Here, you add your own event handler to the WebClient class DownloadDataCompleted event using a lambda statement that is prefixed with the async keyword. The compiler will allow you to add awaitable calls inside the body of the lambda.

This event is raised when an asynchronous download completes; in this example, as the output shows, that is the DownloadDataTaskAsync call made later in Main. The handler awaits Task.Delay to simulate some extra processing, after which the remaining code may resume on a different thread:

client.DownloadDataCompleted += async (sender, args) =>

{

Logger.Log("Inside DownloadDataCompleted...looking busy");

await Task.Delay(500);

Logger.Log("Inside DownloadDataCompleted..all done now");

};

You first invoke the DownloadData method, passing in your URL, and then log the length of the web data received. This call blocks the main thread until the data has been downloaded, which is why the task-based asynchronous version, DownloadDataTaskAsync, is the recommended alternative. The blocking call looks like this:

Logger.Log($"DownloadData: {Url}");

var data = client.DownloadData(Url);

Logger.Log($"DownloadData: Length={data.Length:N0}");

Next, you request the same URL using DownloadDataTaskAsync. You simply await the returned task, and the code after the await runs once the download has completed. As you can see, this requires less code than an event handler and has a cleaner syntax:

Logger.Log($"DownloadDataTaskAsync: {Url}");

var downloadTask = client.DownloadDataTaskAsync(Url);

var downloadBytes = await downloadTask;

Logger.Log($"DownloadDataTaskAsync: Length={downloadBytes.Length:N0}");

Console.ReadLine();

}

}

}

Running the code produces this output:

19:22:44 [01] DownloadData: https://www.packtpub.com/

19:22:45 [01] DownloadData: Length=278,047

19:22:45 [01] DownloadDataTaskAsync: https://www.packtpub.com/

19:22:45 [06] Inside DownloadDataCompleted...looking busy

19:22:45 [06] DownloadDataTaskAsync: Length=278,046

19:22:46 [04] Inside DownloadDataCompleted..all done now

Note

When building the program, you may see the following warning: "Warning SYSLIB0014: 'WebClient.WebClient()' is obsolete: 'WebRequest, HttpWebRequest, ServicePoint, and WebClient are obsolete. Use HttpClient instead.'". Here, Visual Studio has suggested that the HttpClient class be used, as WebClient has been marked as obsolete.

DownloadData is logged by thread [01], the main thread, which is blocked for around one second until the download completes. The size of the downloaded data is then logged using the data.Length property.

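The DownloadDataTaskAsync request is also started by thread [01]. Once the download completes, thread [06] logs the first message from the DownloadDataCompleted event handler and then the length of the downloaded data. Finally, the delayed code inside the DownloadDataCompleted event handler completes on thread [04].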
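Since the SYSLIB0014 warning recommends HttpClient, here is a minimal sketch of what an equivalent awaitable download might look like with HttpClient.GetByteArrayAsync. It is an illustration only, not part of the original example. The HttpClientSketch class, the DownloadLengthAsync method, and the StubHandler (which returns a fixed three-byte body so that the sketch runs without a network connection) are all hypothetical:

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading;
using System.Threading.Tasks;

public static class HttpClientSketch
{
    // Hypothetical stub: returns a fixed 3-byte body without touching the network.
    public sealed class StubHandler : HttpMessageHandler
    {
        protected override Task<HttpResponseMessage> SendAsync(
            HttpRequestMessage request, CancellationToken cancellationToken)
        {
            var response = new HttpResponseMessage(HttpStatusCode.OK)
            {
                Content = new ByteArrayContent(new byte[] { 1, 2, 3 })
            };
            return Task.FromResult(response);
        }
    }

    public static async Task<int> DownloadLengthAsync(HttpClient client, string url)
    {
        // GetByteArrayAsync is awaitable, so no completion event handler is needed.
        byte[] bytes = await client.GetByteArrayAsync(url);
        return bytes.Length;
    }

    public static async Task Main()
    {
        // Assumption: the stub stands in for a real server. Construct HttpClient
        // without a handler argument to make a real request instead.
        using var client = new HttpClient(new StubHandler());
        var length = await DownloadLengthAsync(client, "https://www.packtpub.com/");
        Console.WriteLine($"GetByteArrayAsync: Length={length:N0}");
    }
}
```

With the stub in place, the logged length is 3; against a real server, it would be the size of the downloaded page.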

Note

You can find the code used for this example at https://packt.link/IJEaU.

Canceling Tasks

Task cancelation is a two-step approach:

- You need to add a way to request a cancelation.

- Any cancelable code needs to support this.

You cannot provide cancelation without both mechanisms in place.

Typically, you will start a long-running task that supports cancelation and provide the user with the ability to cancel the operation by pressing a button on a UI. There are many real-world examples where such cancelation is needed. One is image processing, where multiple images need to be altered and the user should be able to cancel the remainder of the work if they run out of time. Another common scenario is sending multiple data requests to different web servers and canceling the slow-running or pending requests as soon as the first response is received.
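The second scenario can be sketched as follows. This is a simplified illustration under stated assumptions rather than production code: the FirstResponseSketch class and the QueryServerAsync method are hypothetical, and each web request is simulated with Task.Delay using made-up server names and latencies:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class FirstResponseSketch
{
    // Hypothetical stand-in for a web request: waits, then returns the server name.
    private static async Task<string> QueryServerAsync(
        string server, TimeSpan latency, CancellationToken token)
    {
        // Task.Delay observes the token and ends early if cancelation is requested.
        await Task.Delay(latency, token);
        return server;
    }

    public static async Task<string> FirstResponseAsync()
    {
        using var cts = new CancellationTokenSource();
        var requests = new[]
        {
            QueryServerAsync("slow", TimeSpan.FromSeconds(5), cts.Token),
            QueryServerAsync("fast", TimeSpan.FromMilliseconds(100), cts.Token),
            QueryServerAsync("medium", TimeSpan.FromSeconds(2), cts.Token)
        };

        // WhenAny completes as soon as any one of the requests finishes.
        var winner = await Task.WhenAny(requests);

        // Ask the remaining requests to stop; they end in the Canceled state.
        cts.Cancel();
        return await winner;
    }

    public static async Task Main()
    {
        Console.WriteLine($"First response from: {await FirstResponseAsync()}");
    }
}
```

Here the "fast" request wins, and the other two simulated requests are canceled rather than being left to run for several more seconds.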

In C#, CancellationTokenSource acts as the top-level object that initiates a cancelation request. Its Token property, a CancellationToken, is passed to concurrent or slow-running code, which can periodically check the cancelation status and act upon it. Ideally, you would not want low-level methods to arbitrarily cancel high-level operations, hence the separation between the source and the token: code that holds only the token can observe a request but cannot initiate one.

There are various CancellationTokenSource constructors, including one that will initiate a cancel request after a specified time has elapsed. Here are a few of the CancellationTokenSource members, which offer various ways to initiate and inspect a cancelation request:

- public bool IsCancellationRequested { get; }: This returns true if a cancellation has been requested for this token source (a caller has called the Cancel method). This can be inspected at intervals in the target code.

- public CancellationToken Token { get; }: The CancellationToken that is linked to this source object. It is often passed to Task.Run overloads, allowing .NET to check the status before starting pending tasks, and allowing your own code to check it while running.

- public void Cancel(): Initiates a request for cancellation.

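- public void Cancel(bool throwOnFirstException): Initiates a request for cancelation and determines how exceptions thrown by registered callbacks are handled. If true, the first exception propagates immediately and the remaining callbacks are not processed; if false, all callbacks are run and any exceptions are collected into an AggregateException.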

- public void CancelAfter(int millisecondsDelay): Schedules a cancel request after a specified number of milliseconds.
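The members listed above can be tried out with a short sketch. The TokenSourceSketch class and its method names are hypothetical, and the 100 ms and 500 ms values are arbitrary choices that simply leave enough time for the timed cancel request to fire:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class TokenSourceSketch
{
    // Returns the IsCancellationRequested flag before and after a timed cancel fires.
    public static async Task<(bool Before, bool After)> TimedCancelAsync()
    {
        // Constructor overload that requests cancelation after the given delay.
        using var cts = new CancellationTokenSource(TimeSpan.FromMilliseconds(100));
        bool before = cts.IsCancellationRequested;

        // Wait well past the 100 ms deadline before checking again.
        await Task.Delay(TimeSpan.FromMilliseconds(500));
        bool after = cts.IsCancellationRequested;
        return (before, after);
    }

    // Calls Cancel explicitly and reads the status back through the Token property.
    public static bool ManualCancel()
    {
        using var cts = new CancellationTokenSource();
        cts.Cancel();
        return cts.Token.IsCancellationRequested;
    }

    public static async Task Main()
    {
        var (before, after) = await TimedCancelAsync();
        Console.WriteLine($"Timed cancel: before={before}, after={after}");
        Console.WriteLine($"Manual cancel: {ManualCancel()}");
    }
}
```

Calling CancelAfter(100) on a source created with the parameterless constructor should behave in the same way as the timed constructor used here.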

The CancellationToken returned by the Token property of CancellationTokenSource contains various methods and properties that code can use to detect a cancelation request:

- public bool IsCancellationRequested { get; }: This returns true if a cancellation has been requested for this token.

- public CancellationTokenRegistration Register(Action callback): Allows code to register a delegate that will be executed by the system if this token is canceled.

- public void ThrowIfCancellationRequested(): Calling this method will result in OperationCanceledException being thrown if a cancellation has been requested. This is typically used to break out of loops.
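As a rough illustration of these token members, the sketch below registers a callback and uses ThrowIfCancellationRequested to break out of a loop. The TokenMembersSketch class, the Run method, and the choice to cancel during the third iteration are assumptions made purely for demonstration:

```csharp
using System;
using System.Threading;

public static class TokenMembersSketch
{
    public static (bool CallbackRan, int Iterations) Run()
    {
        using var cts = new CancellationTokenSource();
        CancellationToken token = cts.Token;

        // The registered delegate runs when the token is canceled.
        bool callbackRan = false;
        using var registration = token.Register(() => callbackRan = true);

        int iterations = 0;
        try
        {
            for (int i = 0; i < 10; i++)
            {
                // Throws OperationCanceledException once cancelation has been requested.
                token.ThrowIfCancellationRequested();
                iterations++;

                // Simulate a user canceling part-way through the work.
                if (i == 2) cts.Cancel();
            }
        }
        catch (OperationCanceledException)
        {
            // Expected: the loop was abandoned at the start of the fourth iteration.
        }

        return (callbackRan, iterations);
    }

    public static void Main()
    {
        var (callbackRan, iterations) = Run();
        Console.WriteLine($"Callback ran: {callbackRan}, iterations completed: {iterations}");
    }
}
```

Three iterations complete before the loop is abandoned, and the callback has run by the time Cancel returns.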

Throughout the previous examples, you may have spotted that CancellationToken can be passed to many of the Task methods. For example, Task.Run, Task.Factory.StartNew, and Task.ContinueWith all have overloads that accept CancellationToken.

.NET will not try to interrupt or stop any of your code once it is running, no matter how many times you call Cancel on a CancellationTokenSource. Essentially, you pass these tokens into target code, but it is up to that code to periodically check the cancelation status whenever it can, such as within a loop, and then decide how it should act upon it. This makes logical sense; how would .NET know at what point it was safe to interrupt a method, maybe one that has hundreds of lines of code?
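A cooperative check of this kind might look like the following sketch, in which a worker polls IsCancellationRequested between units of work and returns normally once a request is seen. The CooperativeLoopSketch class, the CountUntilCanceled method, and the timings are hypothetical:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class CooperativeLoopSketch
{
    // Performs units of simulated work until the token reports a cancel request.
    public static int CountUntilCanceled(CancellationToken token)
    {
        int completed = 0;
        while (!token.IsCancellationRequested)
        {
            // Simulated unit of work; 20 ms is an arbitrary value.
            Thread.Sleep(20);
            completed++;
        }

        // The method decides for itself how to stop: here it just returns its progress.
        return completed;
    }

    public static async Task Main()
    {
        using var cts = new CancellationTokenSource();
        var worker = Task.Run(() => CountUntilCanceled(cts.Token));

        // Let the worker run briefly, then request cancelation.
        await Task.Delay(200);
        cts.Cancel();

        int completed = await worker;
        Console.WriteLine($"Completed {completed} units before stopping");
    }
}
```

Because the worker returns rather than throws, its task finishes normally; the exact number of completed units will vary from run to run.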

Passing CancellationToken to Task.Run only tells the task scheduler that it need not start the task's action if the token has already been canceled. Once the action has started, .NET will not stop that running code for you. The running code itself must observe the cancelation status.

This is analogous to a pedestrian waiting to cross a road at a set of traffic lights. Motor vehicles can be thought of as tasks that have been started elsewhere. When the pedestrian arrives at the crossing and presses the button (calling Cancel on CancellationTokenSource), the traffic lights should eventually change to red so that the moving vehicles are requested to stop. It is up to each individual driver to observe that the light has changed to red (IsCancellationRequested) and then decide to stop their vehicle. The traffic light (the .NET runtime) does not forcibly stop each vehicle. If a driver notices that the vehicle behind is too close and that stopping abruptly may result in a collision, they may decide not to stop immediately. A driver who is not observing the traffic light status at all may fail to stop.
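The behavior of Task.Run when it is given a token can be demonstrated with a small sketch. The TaskRunTokenSketch class and both method names are hypothetical, and the 200 ms sleep merely simulates work that ignores the token:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class TaskRunTokenSketch
{
    // Token canceled before the task starts: the action never runs.
    public static async Task<(bool ActionRan, TaskStatus Status)> CancelBeforeStartAsync()
    {
        using var cts = new CancellationTokenSource();
        cts.Cancel();

        bool actionRan = false;
        var task = Task.Run(() => { actionRan = true; }, cts.Token);
        try
        {
            await task;
        }
        catch (OperationCanceledException)
        {
            // Awaiting a canceled task throws; the action itself was skipped.
        }

        return (actionRan, task.Status);
    }

    // Token canceled after the task has started: the action is not interrupted.
    public static async Task<(bool Finished, TaskStatus Status)> CancelAfterStartAsync()
    {
        using var cts = new CancellationTokenSource();
        using var started = new ManualResetEventSlim();

        bool finished = false;
        var task = Task.Run(() =>
        {
            started.Set();
            Thread.Sleep(200); // Simulated work that never checks the token.
            finished = true;
        }, cts.Token);

        started.Wait(); // Make sure the action is running before canceling.
        cts.Cancel();
        await task;

        return (finished, task.Status);
    }

    public static async Task Main()
    {
        Console.WriteLine(await CancelBeforeStartAsync());
        Console.WriteLine(await CancelAfterStartAsync());
    }
}
```

In the first case the task ends as Canceled without running its action; in the second, the action ignores the request and the task ends as RanToCompletion.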

The next sections continue with exercises that show async/await in action. They cover some of the commonly used options for canceling tasks, how to control whether pending tasks should be allowed to run to completion or be interrupted, and when you should aim to catch exceptions.

Exercise 5.04: Canceling Long-Running Tasks

You will create this exercise in two parts:

- The first part uses a Task that returns a double-based result.

- The second part provides a fine-grained level of control by inspecting the Token.IsCancellationRequested property.

Perform the following steps to complete this exercise:

- Create a class called SlowRunningService. As the name suggests, the methods inside the service have been designed to be slow to complete:

using System;

using System.Globalization;

using System.Threading;

using System.Threading.Tasks;

namespace Chapter05.Exercises.Exercise04

{

public class SlowRunningService

{

- Add the first slow-running operation, Fetch, which is passed a delay time (implemented with a simple Thread.Sleep call), and the cancellation token, which you pass to Task.Run:

public Task<double> Fetch(TimeSpan delay, CancellationToken token)

{

return Task.Run(() =>

{

var now = DateTime.Now;

Logger.Log("Fetch: Sleeping");

Thread.Sleep(delay);

Logger.Log("Fetch: Awake");

return DateTime.Now.Subtract(now).TotalSeconds;

},

token);

}

When Fetch is called, the token may get canceled before the sleeping thread awakes.

- Add a console app to test whether Fetch will just stop running or return a number. Here, use a default delay (DelayTime) of 3 seconds:

public class Program

{

private static readonly TimeSpan DelayTime = TimeSpan.FromSeconds(3);

- Add a helper function to prompt for a maximum number of seconds that you are prepared to wait. If a valid number is entered, convert the value entered into a TimeSpan:

private static TimeSpan? ReadConsoleMaxTime(string message)

{

Console.Write($"{message} Max Waiting Time (seconds):");

var input = Console.ReadLine();

if (int.TryParse(input, NumberStyles.Any, CultureInfo.CurrentCulture, out var intResult))

{

return TimeSpan.FromSeconds(intResult);

}

return null;

}

- Add a standard Main entry point for the console app. This is marked async and returns a Task:

public static async Task Main()

{

- Create an instance of the service. You will use the same instance in a loop, shortly:

var service = new SlowRunningService();

- Now add a while loop that repeatedly asks for a maximum delay time:

Console.WriteLine($"ETA: {DelayTime.TotalSeconds:N} seconds");

TimeSpan? maxWaitingTime;

while (true)

{

maxWaitingTime = ReadConsoleMaxTime("Fetch");

if (maxWaitingTime == null)

break;

This allows you to try various values to see how they affect the cancel token and the results you receive back. In the case of a null value, you will break out of the while loop.