It has been a long journey: almost 300 pages about a couple of new elements in HTML. Who would have thought there was this much to learn? Realistically, though, we are only at the start of what will be possible with audio and video in the coming years. Right now we are only seeing the most basic functionality of multimedia implemented on the Web. Once the technology stabilizes, publishers and users will follow, and with them businesses, and further requirements and technologies will be developed. For now, however, we have a fairly complete overview of existing functionalities.

Before giving you a final summary of everything that we have analyzed in this book, let us mention two more areas of active development.

Two further topics deserve a brief mention: metadata and quality of service metrics. Both of these topics sound rather ordinary, but they enable functionalities that are quite amazing.

The W3C has a Media Annotations Working Group[192]. This group has been chartered to create "an ontology and API designed to facilitate cross-community data integration of information related to media objects in the Web, such as video, audio, and images". In other words: part of the work of the Media Annotations Working Group is to come up with a standardized API to expose and exchange metadata of audio and video resources. The aim behind this is to facilitate interoperability in search and annotation.

In the Audio API chapter we have already come across something related: a means to extract key information about the encoding parameters from an audio resource through the properties audio.mozChannels, audio.mozSampleRate, and audio.mozFrameBufferLength.

The API that the Media Annotations Working Group is proposing is a bit more generic and higher level. The proposal is to introduce a new Object into HTML which describes a media resource. Without going into too much detail, the Object introduces functions to expose a list of properties. Examples are media resource identifiers, information about the creation of the resource, about the type of content, content rights, distribution channels, and ultimately also technical properties such as frame size, codec, frame rate, sampling rate, and number of channels.

While the proposal is still a bit rough around the edges and could be simplified, the work certainly identifies a list of interesting properties of a media resource that are often carried by the media resource itself. In that respect, it aligns with some of the requests from archival organizations and the media industry, including the captioning industry, to make such information available through an API.

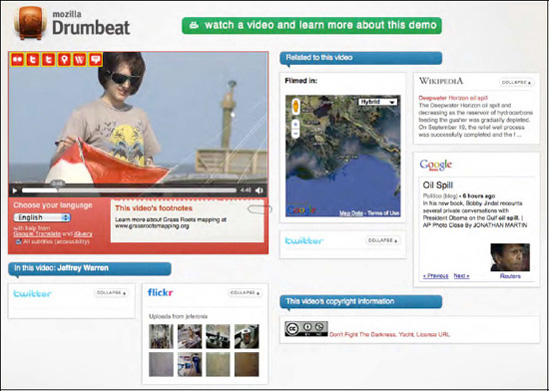

Interesting new applications are possible when such information is made available. An example application is the open source Popcorn.js semantic video demo[193]. Popcorn.js is a JavaScript library that connects a video, its metadata, and its captions dynamically with related content from all over the Web. It basically creates a mash-up that changes over time as the video content and its captions change.

Figure A-1 shows a screenshot of a piece of content annotated and displayed with Popcorn.js.

A collection of statistics about the playback quality of media elements will be added to the media element in the near future. Concrete metrics are useful for monitoring the quality of service (QoS) that a user perceives, for benchmarking, and for helping sites determine the bitrate at which their streaming should be started. We would have used this functionality in measuring the effectiveness of Web Workers in Chapter 7 had it been available. Even more importantly, if QoS statistics are continuously available, a JavaScript developer can use them to implement adaptive HTTP streaming.

We have come across adaptive HTTP streaming already in Chapter 2 in the context of protocols for media delivery. We mentioned that Apple, Microsoft, and Adobe offer solutions for MPEG-4, but that no solutions yet exist for other formats. Once playback statistics exist in all browsers, it will be possible to implement adaptive HTTP streaming for any format in JavaScript. This is also preferable to an immediate implementation of support for a particular manifest format in browsers, even though Apple has obviously already done that with Safari and m3u8, which is the format to support for delivery to the iPhone and iPad.

So, what are the statistics that are under discussion for a QoS API? Mozilla has an experimental implementation of mozDownloadRate and mozDecodeRate[194] for the HTMLMediaElement API. These respectively capture the rate at which a resource is being downloaded in bytes per second, and the rate at which it is being decoded in bytes per second. Further, there are additional statistics for video called mozDecodedFrames, mozDroppedFrames, and mozDisplayedFrames, which respectively count the number of decoded, dropped, and displayed frames for a media resource. These allow identification of a bottleneck either as a network or CPU issue.
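To illustrate how such counters might be used to tell a network bottleneck from a CPU bottleneck, here is a minimal sketch. It assumes that the experimental Mozilla properties named above are present on the element (they may not be in any given browser, in which case the values fall back to 0). The function names `readStats` and `classifyBottleneck`, the `requiredRate` parameter, and the 5% drop threshold are all hypothetical choices made for this example.

```javascript
// Copy the experimental statistics into a plain object.
// ASSUMPTION: the moz* properties are the experimental ones described in
// the text; missing or non-numeric values are treated as 0.
function readStats(video) {
  return {
    downloadRate: Number(video.mozDownloadRate) || 0,   // bytes per second
    decodedFrames: Number(video.mozDecodedFrames) || 0,
    droppedFrames: Number(video.mozDroppedFrames) || 0
  };
}

// Classify a snapshot as "network", "cpu", or "none".
// requiredRate: bytes per second needed for real-time playback (hypothetical
// input that the page author would have to know or estimate).
// maxDropRatio: tolerated share of dropped frames (hypothetical threshold).
function classifyBottleneck(stats, options = {}) {
  const { requiredRate = 0, maxDropRatio = 0.05 } = options;

  // Data arrives more slowly than playback consumes it: network problem.
  if (stats.downloadRate < requiredRate) {
    return "network";
  }

  // Data arrives fast enough, but many decoded frames never get shown:
  // the decoder or renderer cannot keep up, which points at the CPU.
  const dropRatio = stats.decodedFrames > 0
    ? stats.droppedFrames / stats.decodedFrames
    : 0;
  if (dropRatio > maxDropRatio) {
    return "cpu";
  }

  return "none";
}
```

A page could call `classifyBottleneck(readStats(video), { requiredRate: 125000 })` on a timer and react to the result, for example by switching to a smaller resource.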

Note that Adobe has a much more extensive interface for Flash[195]. A slightly different set of QoS metrics for use in adaptive HTTP streaming is suggested in the WHATWG wiki[196]:

downloadRate: The current server-client bandwidth (read-only)

videoBitrate: The current video bitrate (read-only)

droppedFrames: The total number of frames dropped for this playback session (read-only)

decodedFrames: The total number of frames decoded for this playback session (read-only)

height: The current height of the video element (already exists)

videoHeight: The current height of the video file (already exists)

width: The current width of the video element (already exists)

videoWidth: The current width of the video file (already exists)

These would also allow identification of the current bitrate that a video has achieved, which can be compared to the requested one and can help make a decision to switch to a higher or lower bitrate stream. We can be sure that we will see adaptive HTTP streaming implementations shortly after such an API has entered the specification and is supported in browsers.
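The switching decision described above can be sketched as a small function. This is only an illustration under stated assumptions: the metrics object uses the names proposed in the WHATWG wiki list, `downloadRate` and `videoBitrate` are assumed to share the same unit (bits per second), and the function name `chooseBitrate`, the 0.8 safety factor, and the 10% drop threshold are hypothetical.

```javascript
// Pick the bitrate of the stream to play next.
// available: list of bitrates offered by the server (bits per second).
// metrics:   { downloadRate, videoBitrate, droppedFrames, decodedFrames }
//            ASSUMPTION: names follow the proposed QoS metrics; both rates
//            are in bits per second.
// safety:    fraction of the measured bandwidth we dare to use (hypothetical).
function chooseBitrate(available, metrics, safety = 0.8) {
  const sorted = [...available].sort((a, b) => a - b);
  const budget = metrics.downloadRate * safety;

  // Highest bitrate that fits the bandwidth budget; lowest one as fallback.
  let choice = sorted[0];
  for (const rate of sorted) {
    if (rate <= budget) {
      choice = rate;
    }
  }

  // If the client drops many frames, bandwidth is not the limit, so step
  // down to the next bitrate below the one currently playing.
  const dropRatio = metrics.decodedFrames > 0
    ? metrics.droppedFrames / metrics.decodedFrames
    : 0;
  if (dropRatio > 0.1) {
    const lower = sorted.filter((r) => r < metrics.videoBitrate).pop() ?? sorted[0];
    choice = Math.min(choice, lower);
  }

  return choice;
}
```

A player would evaluate this periodically and, when the result differs from the current `videoBitrate`, load the matching stream and seek to the current playback position.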

This concludes the discussion of HTML5 media technologies under active development.

In this book we have taken a technical tour of HTML5 <audio> and <video>.

The Introduction told the story behind the formats and technologies that we have arrived at today and, in particular, explained why we don't have a single baseline codec for either <audio> or <video>. This is obviously a poor situation for content providers, but the technology has been developed around it and there are means to deal with this. Ultimately, the availability of browser plugins—such as Adobe Flash, VLC, and Cortado for Ogg Theora—can help a content provider deliver only a single format without excluding audiences on browsers that do not support that format natively.

In the Audio and Video Elements chapter we had our first contact with creating and publishing audio and video content through the <audio> and <video> elements. We dug deep into the new markup defined for <audio>, <video>, and <source>, including all their content attributes. We took a brief look at open source transcoding tools that are used to get our content into a consistent format for publishing. We briefly explained how to publish the content to a Web server and how it is delivered over HTTP. We concluded the chapter with a comparison of user interfaces to the media elements between browsers, in particular paying attention to the support of accessibility in implemented player controls.

Chapter 3 on CSS3 Styling saw us push the boundaries of how to present audio and video content online. Simply by being native elements in HTML, <audio> and <video> are able to make use of the amazing new functionalities of CSS3 including transitions, transforms, and animations. We also identified some shortcomings for video in CSS3, in particular that reflections cannot be achieved through CSS alone, that the marquee property is too restricted to create a video scroller, and that video cannot be used as a background to a web page. However, we were able to experiment with some amazing new displays for video collections: one about a pile of smartphones where you can basically pick one up and watch the video on it, and one with video playing on the faces of a 3D spinning cube.

The JavaScript API chapter saw us dig deep into the internal workings of the <audio>, <video>, and <source> elements. The interface of these media elements is very rich and provides a web developer with much control. It is possible to set and read the content attribute values through this interface. Particular features of the underlying media resources are exposed, such as their intrinsic width and height. It is also possible to monitor the state and control the playback functionalities of the resources. We concluded this chapter with an implementation of a video player with our own custom controls that make use of many of the JavaScript API attributes, states, events, and methods.

At this point, we reached quite a sophisticated understanding of the HTML5 media elements and their workings. These first four chapters provided a rather complete introduction. The next three chapters focused on how <audio> and <video> would interact with other elements of HTML5, in particular SVG, Canvas, and Web Workers.

In HTML5 Media and SVG we used SVG to create further advanced styling. We used SVG shapes, patterns, or manipulated text as masks for video, implemented overlay controls for videos in SVG, placed gradients on top of videos, and applied filters to the image content, such as blur, black-and-white, sepia, or line masks. We finished this section by looking at the inclusion of <video> as a native element in SVG or through <foreignObject>. This, together with SVG transformations, enabled the creation of video reflections or the undertaking of edge detection.

For further frame- and pixel-based manipulation and analysis, SVG isn't quite the right tool. In Chapter 6, HTML5 Media and Canvas, we focused on such challenges by handing video data through to one or more <canvas> elements. We demonstrated video frames bouncing through space, efficient tiling of video frames, color masking of pixels, 3D rendering of frames, ambient color frames for video, and transparency masks on video. These functions allowed us to replicate some of the masking, text drawing, and reflection functionalities we had previously demonstrated in SVG. We finished the chapter with a demonstration of how user interactions can also be integrated into Canvas-manipulated video, even though such needs are better satisfied through SVG, which allows attachment of events to individual graphical objects.

In Chapter 7, about HTML5 Media and Web Workers, we experimented with means of speeding up CPU-intensive JavaScript processes by using new HTML5 parallelization functionality. A Web Worker is a JavaScript process that runs in parallel to the main process and communicates with it through message passing. Image data can be posted from and to the main process. Thus, Web Workers are a great means to introduce sophisticated Canvas processing of video frames in parallel into a web page, without putting a burden on the main page; it continues to stay responsive to user interaction and can play videos smoothly. We experimented with parallelization of motion detection, region segmentation, and face detection.

A limiting factor is the need to pass every single video frame that needs to be analyzed by a Web Worker through a message. This massively reduces the efficiency of the Web Worker. There are discussions in the WHATWG and W3C about giving Web Workers more direct access to video and image content to avoid this overhead.
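As a reminder of what this per-frame message passing looks like, the following is a minimal sketch of a worker script that performs a very simple form of motion detection. It is not the implementation from Chapter 7: the function name `countChangedPixels`, the message shape `{ pixels }`, and the brightness threshold of 30 are assumptions made for this illustration.

```javascript
// Count the pixels whose brightness changed by more than `threshold`
// between two frames. Both arguments are RGBA byte arrays of equal length,
// such as the `data` field of a Canvas ImageData object.
function countChangedPixels(previous, current, threshold = 30) {
  let changed = 0;
  for (let i = 0; i < current.length; i += 4) {
    const before = (previous[i] + previous[i + 1] + previous[i + 2]) / 3;
    const after = (current[i] + current[i + 1] + current[i + 2]) / 3;
    if (Math.abs(after - before) > threshold) {
      changed++;
    }
  }
  return changed;
}

// Worker wiring: only runs when this file is loaded as a Web Worker.
// The main page is assumed to post one message per frame, for example:
//   worker.postMessage({ pixels: context.getImageData(0, 0, w, h).data });
if (typeof importScripts === "function") {
  let previous = null;
  self.onmessage = (event) => {
    const current = event.data.pixels;
    const changed = previous ? countChangedPixels(previous, current) : 0;
    previous = current;
    // Send only the small result back; the frame itself stays in the worker.
    self.postMessage({ changed: changed });
  };
}
```

Every frame is copied into the worker through `postMessage`, which is exactly the overhead discussed above; the analysis itself is cheap by comparison.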

All chapters through Chapter 7 introduced technologies that have been added to the HTML5 specifications and are supported in several browsers. The three subsequent chapters reported on further new developments that have only received trial implementations in browsers, but are rather important. Initial specifications exist, but we will need to see more work on these before there will be interoperable implementations in multiple browsers.

In Chapter 8, on the HTML5 Audio API, we introduced two complementary pieces of work for introducing audio data manipulation functionalities into HTML5. The first proposal is by Mozilla and it creates a JavaScript API to read audio samples directly from an <audio> or <video> element to allow it to be rendered, for example, as a waveform or a spectrum. It also has functionality to write audio data to an <audio> or <video> element. Through the combination of both and through writing processing functions in JavaScript, any kind of audio manipulation is possible. The second proposal is by Google and introduces an audio filter network API into JavaScript with advanced pre-programmed filters such as gain, delay, panning, low-pass, high-pass, channel splitting, and convolution.

The chapter on Media Accessibility and Internationalization introduced usability requirements for <audio> and <video> elements with special regard to sensory-impaired and foreign-language users. Transcriptions, video descriptions, captions, sign translations, subtitles, and navigation markers were discussed as formats that create better access to audio-visual content. Support for these alternative content technologies is finding its way into HTML5. We saw the newly defined <track> element, a JavaScript API, and the WebSRT file format as currently proposed specifications to address a large number of the raised requirements.

Finally, Chapter 10, Audio and Video Devices, presented early experiments on the <device> element and the Stream API, which together with Web Sockets allow the use of web browsers for video conferencing. We referred to experiments made by Ericsson on an unreleased version of WebKit that demonstrated how such live audio or video communication can be achieved. Many of the components are already specified, so we were able to explain them in example code. We also analyzed how recording from an audio or video device may be possible, and demonstrated how to use Web Sockets to create a shared viewing experience of video presentations across several participating web browser instances.

I hope your journey through HTML5 media was enjoyable, and I wish you many happy hours developing your own unique applications with these amazing new elements.

[192] See http://www.w3.org/2008/WebVideo/Annotations/

[193] See http://webmademovies.etherworks.ca/popcorndemo/

[194] See http://www.bluishcoder.co.nz/2010/08/24/experimental-playback-statistics-for-html-video-audio.html

[195] See http://help.adobe.com/en_US/FlashPlatform/reference/actionscript/3/flash/net/NetStreamInfo.html

[196] See http://wiki.whatwg.org/wiki/Adaptive_Streaming#QOS_Metrics