4

Vision Two ‒ Virtual Worlds Appear

As in the previous chapters of Part II, this chapter looks at an industry vertical: Technology, Media, and Telecommunications (TMT). Since this vertical goes to the core of what Spatial Computing is about, this chapter is necessarily longer than the others that focus on industry verticals.

New realities are appearing thanks to Virtual Reality and Augmented Reality. In a few years, though, the capabilities of VR and AR will change and morph into more sophisticated Spatial Computing glasses, which will arrive with massive increases in bandwidth thanks to 5G and new AI capabilities, which are due in part to the R&D being done on autonomous vehicles. Here we dive into some of the fundamentals of the TMT vertical, as well as the profound changes we expect.

From 2D to 3D

As we look back at the technology industry, especially at personal computers and mobile phones, which customarily present two-dimensional images on a flat screen, we can see an underlying set of goals, even beliefs. These include connecting everyone together, giving you "superpowers" to analyze business data with spreadsheets, and the ability to communicate better with photos and videos. Previous generations of technology were just about giving us better tools than the mechanical ones that existed before, whether the printing press or the old rotary phones that so many of us now-old people had in our homes.

Increasing the Bandwidth

Elon Musk puts it best: the goal is to increase the bandwidth to and from each human. Now he's investing in a new kind of computing with his company Neuralink that includes "jacking in" and hooking a computer directly up to your brain. That will take a lot longer to show up for most people than Spatial Computing, simply because of the cost of opening up a human skull and having a robot surgically implant tiny wires directly in a brain.

One way to look at it is via our current computing interfaces. If you have a thought that you want to communicate to other people, you probably use a mouse or a finger on a screen to open an application or a window, and you use a keyboard to type a message. That's pretty much the same whether you want to communicate in a Microsoft Word document, an SMS text message, an email, or a Tweet on Twitter. On the other side, your reader sees what you wrote on a screen, and that person's eyes and visual perception system translate it into something their brain can understand, process, store, integrate, and then maybe reply to.

That process of reading is pretty fast in comparison to the process of writing. There's friction in communication. We're feeling it right now as we type these words into Microsoft Word and are trying to imagine you reading this book.

The process isn't a whole lot faster even if we use video. After all, you can even have someone, or, better yet, an AI, read this text to you while you walk down the street. That would be hard to do if we made a video. (Note: We previously discussed the relevance of AI, including Machine Learning, Deep Learning, and Computer Vision in Chapter 2, Four Paradigms and Six Technologies, so we won't replicate that information here.)

Plus, you can skim and do other things with text that video makes very hard. Your mind, on the other hand, would far rather watch a movie than read a movie script, or watch a football game on TV than read a report about it later.

Where we are going with this is that the tech industry is spending billions of dollars in an attempt to make it easier to communicate and work with each other, or even to better enjoy the natural world. We see the ultimate effect of this in the "jacking-in" fantasy that Elon Musk and others are trying to make a reality. Some take a more negative view, like John von Neumann and his term "the singularity," which was later popularized by Vernor Vinge in his 1993 essay in which he wrote that it would signal the end of the human era. Scary stuff, but the need to communicate our thoughts not just with other people but with machines, as we are doing now with Siri, Alexa, Google Assistant, and Facebook's Portal, is opening up new ways of communicating with machines. Elon Musk's Neuralink, which uses a robot to hook up tiny wires directly to a human brain, is the most forward approach we've seen actually being put to use. That said, we don't think we'll sign up for that in the early exploratory years of the company!

In its current form, such technology brings with it deep side effects and, as of today, costs hundreds of thousands of dollars due to the necessity of a surgical procedure to install something like Neuralink. If you have, for example, Parkinson's, you may get such a technology implanted by the end of the 2020s, but the rest of us will be stuck wearing some form of on-the-face computing, whether eyeglasses or contact lenses, for the foreseeable future.

Change is Coming

Spatial Computing is evolving quickly, with several different device types (which we'll go into more depth about in a bit), but they all require putting screens on your face. We dream of a lightweight pair of glasses that will bring the whole spectrum of Spatial Computing experiences that cover the reality-virtuality continuum introduced by researcher Paul Milgram. He said that soon humans would be able to experience extended reality, or XR, along a spectrum from "completely real" on one side to "completely virtual" on the other.

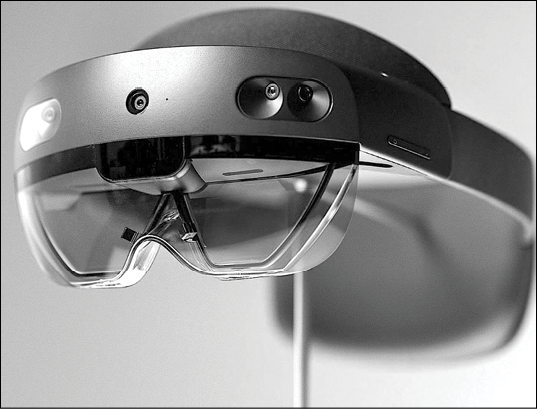

Today we have devices like Microsoft's HoloLens 2 that give us tastes of XR, but they aren't really great devices for experiencing the "completely virtual" side of the spectrum, opting to let the user see the real world instead of presenting big, glorious high-resolution virtual screens.

Within the next three years, or certainly by 2025, we'll see devices that let you switch between real and virtual so quickly and seamlessly that some might start to lose track of the reality of what they are experiencing. We'll cover the pros and cons of that in the later chapters here but it's also good for us to slow down and revisit the technology that's here today so that we can have a decent conversation about where things have been and where they might be going.

We argue that it's easier for you to understand what, for example, a Macintosh could do if you knew what previous computers could do and the design constraints that were placed on earlier devices and ecosystems. Our kids forget why the Dodge tool in Adobe Photoshop looks like a lollipop, but those of us who used to work in darkrooms with chemicals and enlargers remember using a tool like that to make parts of photos lighter. The same will happen here.

First, we constantly get pushback every time we explain that you will soon be forced to wear something on your face to fully appreciate the higher communication speed that Spatial Computing brings to your brain.

Yes, why glasses? Why can't we get to this "high bandwidth to and from the brain" world with standard monitors?

Thomas Furness, the American inventor and VR pioneer, asked himself just that question back in the 1960s when he was designing cockpits for pilots in the military. He, and many others, have tried over the years to come up with better monitors. The US government funded HDTV research for just that reason. The answer lies in how we evolved over millions of years. Evolution, or some might say God, gave us two eyes that perceive the analog world in three dimensions, and a large part of our brains is dedicated to processing the information our eyes take in.

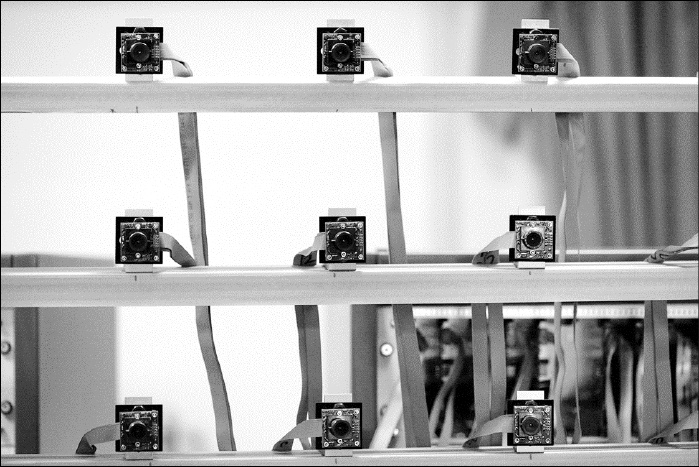

If we want to take in more and get the bandwidth to our brains higher, we need to move our monitors onto our eyes. If we want to improve the outbound bandwidth from our brains to the computing world, we need to use voice, hands, eyes, and movement and add more sensors to let us communicate with computers with just our thoughts. All of this requires wearing sensors or microphones either on our faces or heads. There is no way around this except in special situations. Andy Wilson, one of Microsoft's top human/machine researchers up in Redmond, Washington, has a lab with dozens of projectors and computers where he can do a lot of this kind of stuff in one room, sort of like the Holodeck in Star Trek. But even if his room is successful, most of us don't want to compute only while sitting in a single place, and most humans simply don't have the space in their homes and offices for such a contraption anyway.

We see this in high-end uses already. The pilots who fly the F-35 fighter jets tell us they are forced to wear an AR helmet simply to fly the plane. "I'll never lose to an F-16," one pilot at Nellis Air Force Base told us.

"Why not?" we asked.

"Because I can see you, thanks to my radar systems and the fact that your plane isn't stealthy, but you can't see me. Oh, and I can stop and you can't."

Soon many of us will be forced to follow the F-35 pilot in order to maximize our experiences in life. Those of us who already wear corrective lenses, which are about 60 percent of us, will have an easier time, since we already are forced to wear glasses simply to see. We see a world coming, though, where Spatial Computing devices bring so much utility that everyone will wear them, at least occasionally.

This will bring profound changes to humanity. We are already seeing that in VR. Users of VR are already experiencing a new form of entertainment and media, one that promises to be a bigger wave than, say, the invention of cinema, TV, or radio was in previous generations. This new wave builds on the shoulders of those previous innovations and even subsumes them all: wearing the glasses of 2025, you will still be able to read a magazine, watch a TV show, or listen to your favorite radio station (or the podcasts that replace them). As all of these improve, we are seeing new kinds of experiences, new kinds of services, and even a new culture evolving.

To those whom we have told this story, much of this seems improbable, weird, dystopian, and scary. We have heard that with each of the previous paradigm shifts too, but because this puts computing on your face, the resistance is a bit more emotional. We see a world coming over the next few years where we mostly will get over it.

Emergence as a Human Hook

So why do you need to put on a headset? Well, when you're inside the headset, you'll be able to experience things you've never been able to experience on a computer monitor before. But what's the purpose?

The Brain and VR

Why do human beings like playing in VR? Emergence is one reason, and we'll cover a couple of others in a bit. What is emergence? When applied to video games, emergence is the feeling you get when complex situations and unexpected interactions arise from relatively simple game dynamics. The same feelings are why we go to movies. It's why we like taking regular drives on a curvy road. It's why we like holding a baby for the first time. It's why we like falling in love. All of these create positive chemical reactions in our brains that are similar to the emergence that happens in video games. VR enables this more frequently. Since the 1970s and 1980s, board games and table-top role-playing games such as Cosmic Encounter or Dungeons and Dragons have featured intentional emergence as a primary game function by supplying players with relatively simple rules or frameworks for play that intentionally encourage them to explore creative strategies or interactions and exploit them toward the achievement of victory or a given goal. VR makes all that much more powerful.

If we have sharper and more capable screens, we can experience things that make our brains happier or, if you will, reach a flow state, which is the euphoria that both workers and surfers report as they do something that brings high amounts of emergence.

The Right Tool for the Job

The thing is, we're going to need different kinds of headsets for different things. VR headsets separate us from others. They aren't appropriate to wear in a shopping mall, on a date night, or in a business meeting. But, in each of these situations, we still want digital displays to make our lives better and to give us that feeling of emergence.

It's the same reason we would rather watch a football game on a high-resolution HDTV, instead of the low-resolution visuals of our grandparents' TVs. It's why we tend to upgrade our phones every few years to get better screens and faster processors. When we watch sports or movies on higher-resolution screens or bigger screens, or both, we can experience emergence at a higher rate.

Emergence is also why notifications on our phones are so darn addictive. Every time a new notification arrives, a new hit of dopamine arrives with it. Marketers and social networks have used these game dynamics to get us addicted and build massive businesses by keeping us staring at our feeds of new items. VR promises to take our dopamine levels from these mechanisms to new levels. In later chapters, we'll cover some of the potential downsides of doing that, but here we'll discover why Spatial Computing devices are seen as a more powerful way to cause these dopamine hits, and thus, a promise to build massive businesses.

These dopamine hits, along with powerful new ways to be productive, are coming from a range of new devices―everything from tiny little screens that bring new forms of your phone's notification streams, to devices that so completely fool your brain that they are being used to train airplane pilots, police, and retail workers.

The "Glassholes" Show the Way

It started with a jump out of a dirigible at a Google programming conference back in June 2012. The jumpers were wearing a new kind of computer: one that put a little screen and a camera right near their right eye. They broadcast a video feed from the devices to everyone watching. The demo was so compelling that people jumped up to be the first to order one (we were among the first in line).

Defeated Expectations

That said, the demo oversold what would actually materialize. Before we got ours, we thought it would be an experience so futuristic that we had to put down $1,500 to be the first to experience it. Now, don't get us wrong, the first year of wearing Google Glass was pretty fun, mostly. People were highly interested in seeing it. At the NextWeb conference, attendees stood in line for an hour to try ours out. Most people walked away thinking they had seen the future, even though all they saw was a tiny screen that barely worked when we talked to it with "Hey, Google" commands.

Photo credit: Maryam Scoble. This photo of coauthor Robert Scoble wearing Google Glass in the shower went viral and has been on BBC TV many times, along with many other media outlets.

As we got to wear ours, it became clear that Google Glass wasn't that futuristic and certainly didn't meet expectations. Robert Scoble's wife, Maryam, upon seeing it for the first time, asked, "Will it show me anything about my friends?" Truth is: no, it didn't, but that was an expectation. There were other expectations too, set up by that first demo. The camera wasn't that good. Certainly, it wasn't as good as everyone thought it was, which later became a problem since people thought it was constantly recording and uploading, or, worse, doing facial recognition on everyone.

The expectations were, though, that it was a magical device that you could look at anything with and learn more about that person or thing. Those expectations were never really corrected by either Google or the people who owned them, who soon gained a derisive name: "Glassholes." This derisive tone came about after a journalist named Sarah Slocum wore a pair into a bar and was, in her words, accosted due to wearing them. After that, we saw more businesses putting up signs forbidding users from wearing them, and Google soon gave up trying to sell them to consumers.

Where Did Things Go Wrong?

We are frequently asked, how did Google Glass go so wrong? And what does it mean for the next wave of products about to arrive?

Photo credit: Google. Here is a closer look at the original Google Glass, which had a small transparent screen, along with a camera, microphone, speaker, and a battery.

We think it mostly came down to that dramatic launch and the expectations it caused, but there was a basket of new problems that humans had never considered until Google forced this into public view. Doing such a spectacular launch with people jumping out of dirigibles over a San Francisco convention center with thousands of people in the audience, all watching on huge screens, with millions of other viewers around the world, just set a bunch of expectations that it couldn't meet.

We list the main complaints many had here:

- The screen was too small.

- The three-dimensional sensors weren't yet ready.

- The Graphics Processing Units (GPUs) were very underpowered so the graphics that it could show were very rudimentary.

- The battery life wasn't great.

- It didn't have screens or cameras for both eyes, so it wasn't appropriate for doing more serious AR like what Microsoft's HoloLens 2 does today.

- The cameras weren't as good as in your standard GoPro.

- The screens were of far lower resolution and size than even your cheapest phone at the time (and compared to today's devices, are like a postage stamp compared to a letter-sized piece of paper).

Worse yet, Google didn't expect the social contract problems that showed up months after they shipped. Humans had evolved to look into each other's eyes to verify and communicate trust, and here was a new device messing with that. Many people focused negative attention on the camera. This touched on privacy expectations that hadn't been explored by previous technologies, and the lack of utility or affordability just added into a perfect storm of negative PR that it couldn't escape from.

That said, Google Glass was, and is, an important product that set the table for a whole range of new products. At a minimum, it was the first computer worn on the face that most people heard about, and it got a whole industry thinking about putting computers on people's faces. But this is a hard space to get right. Why? Because it's so damn personal. The computer is on your face in front of your eyes, or as we learned earlier this year from a new start-up, Mojo Vision, actually on your eyes in the form of a contact lens. This isn't like a desktop computer that you can hide in an office and only use when you aren't trying to be social. When fully expressed, these new computing forms change what it means to compute and what it means to be human.

What It Means to Be Human

Whoa. Change what it means to be human? Yes. Soon you'll be able to do things with these glasses that futurists have been predicting for decades: everything from ubiquitous computing to next-generation AR that changes how we perceive the real world in deep ways. Qualcomm's new prototypes have seven cameras on a device about the size of, or smaller than, Google Glass, which only had one camera. One of these cameras watches your mouth with Artificial Intelligence that was barely being conceived when Google Glass was announced. The others watch your eyes and the real world in high detail that bears little resemblance to what Glass' low-resolution camera was able to do.

It's important for us to pause a moment and take a bird's-eye view of the Spatial Computing landscape, though, and get a good look at all the devices and where they came from. Spatial Computing is a big tent that includes products that are small and lightweight, like Google Glass, all the way to devices like the Varjo, which, as of 2020, opened with an initial cost of $10,000 and needed to be tethered to a $3,000 PC. The Varjo is big and heavy, but the experience of wearing one is totally futuristic compared to the early Google Glass prototypes.

No longer are we stuck with a tiny postage stamp of a screen. Inside the Varjo, you have huge high-resolution screens. Where Google Glass is like a mobile TV on a kitchen counter, Varjo's device is like being in an IMAX theater. With it, you can simulate flying in an Airbus Jet's cockpit. Where the Google Glass could barely show a few words of text, the Varjo lets you feel like you are in the cockpit as a pilot, with tons of displays blinking away and everything so photorealistic that you feel like you are looking at hundreds of real knobs and levers in a real plane. Out the window, while wearing the Varjo, you can see cities and landscapes emerge, along with runways from a distant airport you were supposed to land at. All simulated, yes, but your brain sees so much resolution it thinks it is real.

Photo credit: Varjo. The highest-resolution VR headset, as of early 2020, is the Varjo XR-1. This $10,000 device is used by Volvo to design and test future car designs.

As 2020 evolves, you'll see a spectrum of devices with far more features and capabilities soon escape from R&D labs―including a small pair of glasses. Focals by North is a good example, and one that's similar to what Google Glass tried to do. On the other side of the spectrum are VR headsets from Valve, Varjo, Oculus, and others. We'll detail them here to give you context on the market and the kinds of devices that fit under the Spatial Computing tent.

When we say these will change what it means to be human, we mean that soon, the way that you experience the real world will be completely changed by AR. Already Snapchat is changing faces, floors, and buildings with a simplified version of AR. Soon every part of your human experience will be augmented as new Spatial Computing glasses, headsets, and other devices appear. The Varjo is giving us an early taste of just how complete these changes could be―inside the headset, you experience a virtual world in such detail that you no longer want to turn it off. It is being used to train pilots, and when we got a demo, the virtual cockpit that was presented was so sharp and colorful that we imagine that someday we'll just experience the whole world that way. These changes will deeply change human life. We predict that the changes will be deeper than those brought to us by the automobile or telephone.

Embodiment, Immersion, Presence, Empathy, and Emergence

In February 2014, Mark Zuckerberg visited Stanford's Virtual Human Interaction Lab. Prof. Jeremy Bailenson and his students walked Facebook's founder and CEO through a variety of demos of how VR could change people's experiences. He walked across a plank that made him feel the fear of falling off. He experienced how VR could help with a variety of brain ailments and played a few games. Bailenson ran us through the same demos years later and said we had the same experiences, including being freaked out by finding ourselves standing on a narrow piece of wood after the floor virtually dropped away to reveal a deep gap. Bailenson adeptly demonstrates how VR enables powerful emergence, along with a few new ways to cause our brains to be entertained: embodiment and immersion, not to mention a few other emotions, like the vertigo felt on that wooden plank.

Embodiment and Immersion

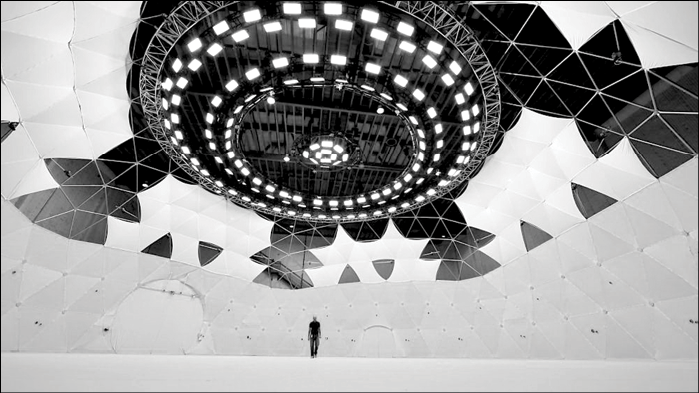

Embodiment means you can take the form of either another person or an animal. Bailenson showed us how embodiment could give us empathy for others. In one demonstration we experienced life as a black child. In another demo, we had the experience of being a homeless man who was harassed on a bus. Chris Milk, a VR developer, turned us into a variety of forms in his experience "Life of Us" that he premiered at the Sundance Film Festival in 2017. We watched as people instantly flew as they discovered that they had wings, among other forms, from being a tadpole to a stock trader running down Wall Street.

Immersion is the feeling that you are experiencing something real, but in a totally virtual world, in other words, being present in that world. The perception is created by surrounding the user of the VR system with images, sound, and other stimuli that provide an engrossing total environment.

Photo credit: Facebook. The $400 Oculus Quest changed the market by making VR far easier thanks to having no cords and no need for a powerful PC to play high-end VR games and other experiences.

Within a few months of getting the demos from Bailenson and his team, Zuckerberg would acquire Oculus for $2 billion. Oculus was started a couple of years prior in Irvine, California, by Palmer Luckey, Brendan Iribe, Michael Antonov, and Nate Mitchell. The early VR device was a headset that had specialized displays, positional audio, and an infrared tracking system. Sold on Kickstarter, Oculus raised $2.4 million, which was 10 times what was expected.

Evolution

Since 2012, Oculus has evolved. The headset was paired with controllers that let a user touch, grab, poke, move, shoot, slap, and do a few other things inside virtual worlds. A new term, 6DOF, got popular around the same time. That stands for Six Degrees of Freedom and means that both the rotation and the position of the headset and controllers are tracked, so they can move freely inside a virtual world. Previous headsets, including the current Oculus Go, were 3DOF, which meant that while you could turn your head, you couldn't physically move left, right, forward, or backward. To experience the full range of things in VR, you'll want a true 6DOF headset and controllers.

Systems that aren't 6DOF won't deliver the full benefits of immersion, presence, embodiment, emergence, or the empathy that they can bring. That said, there are quite a few cheaper headsets on the market and they are plenty capable of a range of tasks. 3DOF headsets are great for viewing 360-degree videos, or lightweight VR for educational uses that don't require navigating around a virtual world, or the other benefits that 6DOF brings. It is this addition of 6DOF to both the controllers and the headset that really set the stage for the paradigm shift that Spatial Computing will deliver.
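For readers who think in code, the difference between 3DOF and 6DOF can be sketched in a few lines of Python. This is only an illustration under our own assumptions: the `Pose` class and the `tracked_pose` function are hypothetical names, not part of any headset vendor's software. The sketch models a 3DOF headset as one that reports head rotation but leaves the wearer pinned to a fixed point.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Pose:
    """Hypothetical head pose: three rotations plus three translations."""
    yaw: float = 0.0    # degrees, turning the head left or right
    pitch: float = 0.0  # degrees, looking up or down
    roll: float = 0.0   # degrees, tilting the head sideways
    x: float = 0.0      # meters, stepping left or right
    y: float = 0.0      # meters, crouching or standing taller
    z: float = 0.0      # meters, stepping forward or backward


def tracked_pose(actual: Pose, dof: int) -> Pose:
    """Return the part of the wearer's real movement that the headset sees."""
    if dof == 6:
        # 6DOF: rotation and position both carry into the virtual world.
        return actual
    if dof == 3:
        # 3DOF: rotation is kept, but position is treated as fixed
        # (an assumption of this sketch: the wearer stays at the origin).
        return replace(actual, x=0.0, y=0.0, z=0.0)
    raise ValueError(f"unsupported DOF count: {dof}")


# The wearer turns 90 degrees and takes half a step forward.
movement = Pose(yaw=90.0, z=0.5)
print(tracked_pose(movement, dof=3))
print(tracked_pose(movement, dof=6))
```

In the 3DOF case the turn survives but the step forward is lost, which is why such headsets suit 360-degree video better than experiences that ask you to walk around.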

Photo credit: Robert Scoble. Facebook had huge signs when Oculus Quest first showed up, showing the market advantages Facebook has in getting its products featured.

As we open the 2020s, the product that got the most accolades last year was the $400 Oculus Quest from Facebook. This opened up VR to lots of new audiences. Before it came out most of the other VR headsets, and certainly the 6DOF ones, were "tethered" to a powerful PC. Our first VR system was an HTC Vive connected to a PC with an Nvidia GPU and cost more than $2,500. Today, for most uses, a $400 Quest is just as good, and for the few more serious video games or design apps that need more graphic power, you can still tether to a more powerful PC with an optical cable that costs about $80.

Facebook has the Edge

Facebook has other unnatural advantages, namely its two billion users, along with the social graph they bring (Facebook has a contact list of all of those users and their friends). That gives Facebook market power to subsidize VR devices for years and keep their prices down. The closest market competitor, Sony's PlayStation VR, is a more expensive proposition and requires a tether to the console.

The Quest comes with a different business model, though, that some find concerning: advertising. In late 2019, Facebook announced it would use data collected on its VR headsets to improve advertising that its various services show its users.

Photo credit: Robert Scoble. Here Scoble's son, Ryan, plays on Oculus Quest while his dad charges his Tesla at a supercharger.

This gives Facebook a near monopoly in sub-$500 devices that bring full-blown VR and it is the only one that brings this in a non-tethered way.

Yes, Sony's PlayStation VR has sold more units, at five million, which seems impressive until you remember that the device has been on the market since 2016, and the experience of using a PlayStation VR is far inferior to using the Quest with its better controllers, a better selection of things to do, and the integration into Facebook's social graph, which enables new kinds of social VR that others will find difficult to match. The Quest sold about half a million units between when it launched in May 2019 and January 2020. The Quest was one of the hottest products during Christmas 2019 and was sold out for months. Even well into April 2020, the Quest remained sold out and could be found on eBay for a hefty premium over its $400 retail price.

What is notable is the shifting of price points. When PlayStation VR first came on the market, it was a lot cheaper for a kid to get when compared to buying a $2,000 gaming PC and a $900 headset, like the HTC Vive. The Quest obliterated that price advantage, and it's the business subsidy that Facebook is giving to its Oculus division that's turning the industry on its head. Anyone who wants to go mainstream has to figure out how to deal with that price differential due to the advertising-subsidized pricing that Facebook has. Google could also do the same, but its forays into Spatial Computing, including Glass and the Daydream VR headset systems that used mobile phones, have fallen flat with consumers because they didn't provide the full VR magic of embodiment, immersion, presence, and so on.

Market Evolution

Our prediction is that Facebook will consolidate much of the VR market due to its pricing, social element, and the advantages that come with having a war chest of billions of dollars to put into content. Its purchase of Beat Saber's owner, Beat Games, in late 2019 showed that it was willing to buy companies that make the most popular games on its platform, at least in part to keep those games off of other platforms that will soon show up. Beat Saber, in which you use light sabers to slice cubes flying at you, was the top-selling VR game in 2019. This consolidation won't hit all pieces of the market, though. Some will resist getting into Facebook's world due to its continued need for your data to be able to target ads to you. Others, including enterprise companies, will want to stay away from advertising-supported platforms. For that, we visited VMware's Spatial Computing lab in its headquarters in Palo Alto.

Photo credit: Robert Scoble. A wide range of headsets hang on the wall inside VMware's Spatial Computing lab in its Palo Alto headquarters.

At VMware, they buy and test all the headsets, so they can see all the different approaches various VR and AR headset manufacturers are taking. VMware's main business is selling virtual machines to run the world's internet infrastructure inside data centers and manage all the personal computers that connect to it.

Why is it investing in Spatial Computing? Because it is building an array of management infrastructure for enterprises to use, and sees a rapidly growing demand for Spatial Computing. Walmart, for instance, bought more than 10,000 VR headsets for its training initiatives. Simply loading an OS on that many headsets is a daunting challenge, even more so if corporate IT managers want to keep them up to date, keep them focused on one experience, and make sure that they haven't been hacked and that they have appropriate access to corporate networks and to other workers.

Inside VMware Labs, Matt Coppinger, director, and Alan Renouf, senior product line manager, walked us through the headsets and the work that they are doing with both VR and AR. They told us about enterprises who decided against going with Facebook's solution because Facebook wanted to control the software loaded on the headset too tightly. Lots of enterprises want to control everything for a variety of reasons, from the ease of use that comes from getting rid of everything but one app that's already running when you put on the headset, to being able to control which corporations the collected personal data is shared with.

They both split up the market into different use cases:

- 6DOF tethered/high end: This is where Varjo reigns, with its ultra-high resolution and low latency, which is why Volvo uses it for car design. The headsets at the high end have sharper screens and a wider field of view, which makes them the best choice for architecture, design, and use cases where you have highly detailed and complex things to work on, like an Airbus cockpit simulation for teaching pilots to fly.

- 6DOF tethered/mid-market and lower market: Valve Index, HTC Vive, HTC Vive Cosmos, and Oculus Rift headset products fit here. These tethered headsets allow the use of Nvidia's latest GPUs, so they are great for working on three-dimensional design, architecture, and factory work, but are much more affordable than the $10,000 Varjo. Most of these will run $1,500 to $3,000 for a decent PC and about $1,000 for the headset systems.

- 6DOF self-contained: The Oculus Quest. This is the most exciting part of the VR market, they say, because it brings true VR at an affordable price with the least amount of hassle due to no cord and no external PC being required.

- 3DOF self-contained: The Oculus Go, which is a headset that only does three degrees of freedom, has lots of corporate lovers for low-end, 360-degree media viewing and training with a minimal amount of interactivity or navigation. This is the headset that Walmart did all its training on, although it's now switching to the Quest to get more interactive-style training and simulations.
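
The difference between 3DOF and 6DOF in the list above can be sketched in a few lines of Python. This is only an illustration, not any vendor's API: the `Pose` class and the `apply_tracking` function are hypothetical names invented here. The idea is that a 3DOF headset such as the Oculus Go reports only how your head rotates, while a 6DOF headset such as the Quest also reports where your head moves.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Pose:
    """Hypothetical head pose: three rotation axes plus three position axes."""
    yaw: float = 0.0    # degrees, turning left/right
    pitch: float = 0.0  # degrees, looking up/down
    roll: float = 0.0   # degrees, tilting the head sideways
    x: float = 0.0      # meters, stepping left/right
    y: float = 0.0      # meters, crouching/standing
    z: float = 0.0      # meters, stepping forward/back


def apply_tracking(real_motion: Pose, dof: int) -> Pose:
    """Return the pose a headset can report for a given real-world motion."""
    if dof == 6:
        return real_motion
    if dof == 3:
        # Rotation is kept; positional movement is simply not tracked.
        return replace(real_motion, x=0.0, y=0.0, z=0.0)
    raise ValueError("dof must be 3 or 6")


# The wearer turns their head 30 degrees and steps half a meter forward.
motion = Pose(yaw=30.0, z=0.5)
print(apply_tracking(motion, dof=3))
print(apply_tracking(motion, dof=6))
```

In the 3DOF case the step forward disappears from the reported pose, which is why such headsets suit seated 360-degree viewing but not experiences where you walk, lean, or reach.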

Keep in mind we have been through many waves of VR devices. VR has existed in the military since the mid-1960s. In the 1990s, people had VR working on Silicon Graphics machines (if you know what those are, that dates you to at least 40 years old or so). We flew plane simulators on such systems. But the VR form factor back then was huge and a Silicon Graphics machine cost more than $100,000.

We tend to think of that phase as an R&D phase, where the only uses possible were either military or experiences that cost a lot of money per minute. One of our friends had opened a retail shop where you could pay $50 for a few minutes inside one to simulate flying a fighter jet. Since most of us couldn't afford the fuel for a real jet, this was as close as we were going to get and it was pretty fun. Today's version of the same is an LBE (Location-based Entertainment) experience, where you can pay $20 to $50 for an average of 15 minutes per person to be "inside a movie" at Sandbox VR, The Void, Dreamscape Immersive, and others.

2014's New Wave

Instead of looking so far back, though, we see the new wave of Spatial Computing really started in 2014 when Facebook bought Oculus. That purchase by Mark Zuckerberg really started up a whole set of new projects and caused the world to pay attention to VR with fresh eyes.

We still remember getting a "Crescent Bay" demo, one of the first demos available on the Oculus Rift headset, in the back room at the Web Summit Conference in Ireland in 2014. That headset was tethered to a PC. Two little sensors were on a table in front of us. Once we were in the headset, they handed us controllers. It started out in darkness. When the demo started, we found ourselves on the top of a skyscraper.

It was the first time we had felt vertigo; our brains freaked out because it was so real-feeling due to the immersive power of VR that we thought we might fall to our deaths.

Photo credit: Facebook. The original Oculus Rift VR system, with its two sensors and controllers. Not seen here is the PC with a big GPU inside, usually made by Nvidia, that it's tethered to.

Now how did a computer make us scared of falling to our death? Well, the headset, as it was moved around, showed a 360-degree world. We felt like we were really on top of a skyscraper and could fall over the edge. This was far different than any computer or medium we had ever seen or experienced before. The power of putting highly tracked screens on our face that could reveal a virtual world was like magic. We barely noticed the cord that was hanging from a device over our head leading to a PC on the side of our play area.

Later, after we bought our own, we would come to see the limitations of the sensor system. It built a virtual room that we couldn't really move out of. In our case, in our homes, it was a virtual box that was a few feet wide by a few feet long. The cord and the sensors kept us from leaving that box and those limitations started to chafe. We couldn't play VR while on the road traveling. We couldn't take it to shopping malls. Trying to show people VR in their backyards proved very difficult. Getting even two of the systems to work meant getting black curtains to separate the two systems, since the sensors didn't like being next to another set that was trying to operate.

Photo credit: HTC. The HTC Vive was popular because of its superior tracking system. These black boxes, called "Lighthouses," were aimed at a VR player and sprayed a pattern of invisible light that let the system track the headset and controllers accurately. This type of tracking system is still used in many high-end headsets.

Let's talk about those sensors because how tracking is done is a key differentiator between headsets. HTC Vive is still a favorite amongst heavy users of VR, in part, because its tracking system is so good. It consists of two black boxes (you could use more, if needed, in, say, a large space) that spray invisible light on a VR user walking around. This invisible light is used to track headsets, controllers, and newer trackers that users could put on objects, their feet, and other things. These black boxes, called "Lighthouses," are better at tracking than the ones Facebook sold with its Oculus Rift system. The original Oculus ones were simpler cameras that weren't quite as exact and couldn't track as many things as the HTC system did.

Photo credit: Robert Scoble. A girl plays with an HTC Vive. Here you see the tether to a gaming PC.

A lot of these advantages, though, have been whittled away by the Oculus team, which shipped quite a few updates to make its system work better. Finally, with the Oculus Quest, the older "outside-in" tracking system was replaced by four cameras on the front of the headset, implementing a newer tracking system that doesn't require putting boxes or cameras around a VR user.

Inside-out

We believe this newer system, which industry people call "inside-out" tracking because the sensors are looking from the headset "out" into the real world, is better for Spatial Computing because it frees users from having to calibrate sensors and place boxes or cameras around, and it also means you have the freedom to walk around much bigger spaces. In a few years, that should lead to glasses you can wear and play with virtually anywhere without worrying about the tracking.

The Oculus "inside-out" tracking also shows up in its Rift S model, which replaces the earlier Rift, with its two external trackers. Why does Facebook have three models – the Go, the Quest, and the Rift S? The Quest is optimized for low-cost 6DOF. The Rift S has the best performance. Compared to the Quest, graphics inside look sharper and nicer and the field of view is a little larger. The Go is a low-cost media viewer and we don't even like including it in a discussion about VR's capabilities because it is only 3DOF and can't let you do interactive games or experiences the way the Quest or the Rift S can.

Competing with the Rift S is the Valve Index, which got many gamers to salivate with its higher specs, including a sharp set of 2880x1600 monitors with a 130-degree field of view. The Oculus Quest, for comparison, has 1440x1600 pixels per eye, a slower 72 Hz refresh rate, and a smaller field of view at around 100 degrees. The negative for all that performance is that the Valve Index requires a user to be tethered to an expensive gaming PC.
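
To see how resolution and field of view interact, here is a rough back-of-the-envelope sketch in Python. It rests on assumptions that are ours, not the manufacturers': we treat the 2880x1600 figure as the combined width of two panels (1,440 pixels per eye), we divide pixels evenly across the quoted field of view, and we ignore lens distortion, panel type, and refresh rate. The function name `pixels_per_degree` is hypothetical.

```python
def pixels_per_degree(horizontal_pixels_per_eye: int, fov_degrees: float) -> float:
    """Rough angular sharpness: horizontal pixels spread evenly over the field of view."""
    if fov_degrees <= 0:
        raise ValueError("field of view must be positive")
    return horizontal_pixels_per_eye / fov_degrees


# Assumption: 2880x1600 is the combined figure for two panels, i.e. 1440 wide per eye.
index_ppd = pixels_per_degree(2880 // 2, 130)
# The Quest figure in the text is already per eye (1440 pixels wide).
quest_ppd = pixels_per_degree(1440, 100)

print(f"Valve Index: {index_ppd:.1f} pixels per degree")
print(f"Oculus Quest: {quest_ppd:.1f} pixels per degree")
```

Under these simplifications, a wider field of view spreads a similar number of pixels over more degrees, so headline resolution alone doesn't tell you how sharp a headset will look. Treat the numbers as an illustration of the trade-off rather than a measurement.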

Photo credit: Valve. The Valve Index got a lot of great reviews from gamers, who valued its high-resolution screens with a wide field of view.

Finally, the road to bringing us this magic has been quite rocky. Even the best headsets haven't sold more than a few million units; the Quest struggled to get to half a million by the end of 2019, and many attempts at bringing the masses into VR have failed. Two notable failures are Google Daydream and Microsoft's Mixed Reality licensed headsets.

Why did these do so poorly in the market? Well, for different reasons.

Mistakes Were Made

The Google Daydream headset, and a similar idea from Samsung, let users plop their phones into a headset. This idea promised low-cost VR, but it didn't deliver very well. First of all, the mobile phones that were on the market three years ago didn't have hugely powerful GPUs, so the graphic capabilities were muted. Worse, mobile phones often got too hot because of this underpowered nature, and VR pushed the phone's capabilities harder than anything else could, draining the batteries quickly on devices people needed for a lot more than just playing in VR.

Ours crashed a lot, too, due to the processing demands that heated everything up. The weight of the phone, too, meant that these headsets weren't very comfortable, with a lot of weight out in front of your nose.

What really killed them, though, was that they were only 3DOF and there just wasn't enough content to view. The people who might have hyped them up had just gotten their full 6DOF systems from HTC or Facebook and weren't likely to spend hours playing on underpowered mobile-based headsets.

Photo credit: Google. One of the attempts that didn't go well with consumers was the Google Daydream headset that let you drop your mobile phone into this device to do VR. But it wasn't very full-featured; it couldn't do 6DOF, for instance, and only ever had very limited content developed for it.

The Microsoft Mixed Reality headsets tried to fix many of these problems but also failed due to a lack of customers, mostly because the people who really cared about VR saw these attempts as underpowered, with poor sensors, and not having much marketing behind them. Translation: the cool games and experiences were showing up on the HTC Vive or Oculus Rifts. Things like Job Simulator, which every child under the age of 13 knew about due to the brilliant influencer marketing of its owner, Owlchemy Labs, which now is owned by Google, were selling like hotcakes, and games like that needed the more powerful headsets.

We tend to think it was a mistake, too, for Microsoft to call these VR headsets "mixed reality." They came after Microsoft had released the critically acclaimed HoloLens, which we'll talk about a lot more in a bit, and which was a true mixed reality experience where monsters could come out of your actual walls. These VR devices weren't that, so the name confused the market and left people wondering why Microsoft didn't just call them VR headsets. Either way, these are gone now except for the Samsung HMD Odyssey, and the Oculus Quest has set the scene for the next wave of Spatial Computing products.

We are seeing products in R&D that bear very little resemblance to the big, black headsets that are on the market as the 2020s open up. In fact, at CES 2020, Panasonic showed off a new VR prototype that looked like a steampunk pair of lenses and was much smaller than the Oculus Rift. Oculus co-founder Palmer Luckey stirred up some excitement on Twitter when he posted a photo of the Panasonic and praised it (we doubt it will do well in the market due to its poor viewing angle, lack of content, and lack of 6DOF).

Photo credit: Microsoft. The original Microsoft-licensed VR headsets from Lenovo, Dell, Acer, and HP [from left to right and top to bottom]. The Samsung HMD Odyssey is missing from the photo, as it came out many months later than these, was relatively more expensive, and had the reputation of being the best quality of the bunch. Only the Odyssey is still available for direct purchase at a $499 retail price.

Either way, VR is here now, and the magic it brings of immersion, embodiment, and presence sets the stage for a whole range of new capabilities that will show up over the next few years.

The Spectrum ‒ Augmented Reality from Mobile to Mixed Reality

The popularity of Amazon's Alexa and its competitors shows us that humans are hungry for a new kind of computing. This form of computing lets us see new layers of information on top of the existing world. It is computing that surrounds us, walks with us through shopping malls and assists us while working out. This kind of computing could potentially even save our lives as it collects our visual, biometric and textual data.

Closer Computers

To make it possible to get this level of human-machine interface, we will need to get computers closer to our brains and certainly our eyes.

We can do some of this kind of computing by holding a phone in our hands, which is already used for AR by kids playing Minecraft Earth or Pokémon Go. Holding a mobile phone, though, won't get us to the promised land of true Spatial Computing. It must be said, however, that developers are using mobile to build a variety of things that are important to pay attention to.

As the 2020s open, we are seeing some of these wearables evolving in the marketplace with a spectrum of devices.

On one side, we have very lightweight devices that look similar to the Google Glass device. A good example of these lightweight devices is Focals by North, which got some hype at the Consumer Electronics Show (CES) 2020, but there are a number of them from Vuzix, Epson, Nreal, and others. These have screens that show you a variety of information overlaid on top of the real world and do it with a form factor that looks like a pair of sunglasses.

At the other end of the spectrum are devices that completely change our visual experience as we move around in the real world. These devices, which include Microsoft's HoloLens 2, could be seen as a new kind of "mixed-reality" device that brings advanced graphical features and a ton of sensors, along with a new form and Artificial Intelligence, to see, process, and display all sorts of new experiences, both visual and auditory, that profoundly change how we interact with the real world.

It is these higher-end devices that have us dreaming of a radically new way of computing, which is what we are covering in this book: Spatial Computing. With these devices, you're wearing seven cameras along with a bunch of other sensors and because of the amount of processing that needs to be done and the kinds of visual displays that need to be included, these devices tend to be both heavier and more expensive than devices, for instance, like Focals by North.

It is the near future, though, that has us most excited. Qualcomm announced a new XR2 chipset and reference design that will make possible powerful AR devices, ones that will soon make the HoloLens 2 seem underpowered, heavy, and overpriced. We're expecting a wave of new devices to be shipped in 2021 based on the XR2 with new optics that will be far better than anything we've seen to date.

Let's go back, though, to where AR came from.

The Origins and Limitations of Augmented Reality

For us, the potential for AR came up in 2011 as Peter Meier, then CTO of a small AR company in Munich, Germany, took us into the snow outside of its offices and showed us dragons on the building across the street. These virtual dragons were amazing, but the demo was done with a webcam on a laptop, which was hardly easy for consumers to do. Earlier attempts, like Layar, which was an AR platform for mobile phones, didn't hit with consumers because the mobile phones of the day were underpowered, and most people didn't appreciate AR on small screens. These early efforts, though, did wake up a lot of people, including us, to the possibilities of AR. They also woke up Apple, which started working earnestly on Spatial Computing.

Within a few years, Apple had purchased that company, Metaio, and Peter works there today. Since then, Apple has purchased dozens of companies that do various pieces of Spatial Computing, and that acquisition trend continues into 2020. While we were typing this, Apple bought another company that does AI that will prove important to the future we describe here. Metaio's work was the foundation of a new set of capabilities built into iPhones. Developers know this AR framework as "ARKit." Google has a competing framework for Android users called "ARCore."

There are now thousands of applications that use ARKit, ranging from apps to let you measure things to apps that radically change the real world in terms of everything from shopping to new forms of video games. If you visit Apple's store at its new headquarters in Cupertino you will be handed an iPad where you can see an augmented display of the building across the street from the store.

Touch the screen and the roof pops off so you can see what it's like inside. Touch it again and you'll learn about the solar panels that provide clean energy to the building. Touch it again and you can see a wind analysis layer of the building.

Photo credit: IKEA. IKEA Place lets you move furniture around in your house and try it out to see if it fits your lifestyle before you buy it and unpack it, only to discover it doesn't work.

It is apps like these that show the power of AR, but they also show you the limitations, of which there are many. Let's dig into a few of the limitations of mobile AR:

- You have to hold the phone: This keeps you from being able to use more capable controllers, or even your hands, to touch, manipulate, or shoot virtual items.

- Tracking often doesn't work well: This is especially the case in dark rooms or places that don't have a lot of detail, and in moving vehicles like planes or buses, tracking is almost impossible to get. Apple, even in its headquarters, put a grid pattern on top of the building model so that the camera on the iPad would be able to figure out where it was and align virtual items accurately and quickly.

- Occlusion doesn't always work: Occlusion means that virtual items properly cover up things behind them. Or, if a human or, say, a cat walks in front of the virtual item, the virtual item disappears behind that moving human or animal properly.

- Virtual items don't look natural for a variety of other reasons: It's hard for your iPhone to be able to properly place shadows under virtual items that match real-world lighting conditions. It's also hard to make virtual items detailed enough to seem natural. For things like virtual cartoons, that might not be a big deal, but if you want to "fool" your user into thinking a virtual item is part of the real world it's hard to do that because of the technical limitations inherent in today's phones and the software that puts these virtual items on top of the real world.
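
The occlusion item in the list above comes down to a per-pixel depth comparison, which can be sketched as follows. This is a minimal illustration, assuming the device already knows how far away the real scene is at every pixel (estimating that depth is exactly the hard part on a phone). The `composite` function and the tiny one-row "images" are hypothetical and not taken from ARKit or ARCore.

```python
from typing import List, Optional


def composite(real_pixels: List[str],
              real_depth_m: List[float],
              virtual_pixels: List[Optional[str]],
              virtual_depth_m: List[float]) -> List[str]:
    """Draw a virtual pixel only where it is closer to the camera than the real scene."""
    out = []
    for real, r_depth, virt, v_depth in zip(
            real_pixels, real_depth_m, virtual_pixels, virtual_depth_m):
        if virt is not None and v_depth < r_depth:
            out.append(virt)   # virtual item is in front: it covers the real world
        else:
            out.append(real)   # real object is in front, or nothing virtual here
    return out


# One row of a camera image: a wall 3 m away, with a cat walking past at 1 m.
real = ["wall", "wall", "cat", "cat", "wall"]
real_depth = [3.0, 3.0, 1.0, 1.0, 3.0]

# A virtual chair placed 2 m away, covering the middle three pixels.
far = float("inf")
virtual = [None, "chair", "chair", "chair", None]
virtual_depth = [far, 2.0, 2.0, 2.0, far]

print(composite(real, real_depth, virtual, virtual_depth))
```

The chair covers the wall behind it but disappears behind the cat, which is the behavior the text describes. When the depth estimate for the real scene is wrong or missing, the comparison gives the wrong answer and the virtual item appears to float in front of things it should be behind.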

Photo credit: Niantic. Pokémon Go lets you capture characters on top of the real world in its popular mobile game, which generated about a billion dollars in revenue in 2019.

The other problem that most people don't think about when they are holding their mobile phone is that their screen is actually fairly small. Even the biggest iPhones or Samsung devices are only a few inches across. That hardly is enough to give your brain anything close to the immersion, embodiment, and other advantages of VR. The problem of having to hold the phone or tablet is the most daunting, actually, particularly for workers. Imagine working on a factory line where you need both of your hands to insert a part into a product. Now, imagine having to put down that part to hold up a phone to augment your work and see details on what you need to do next to properly hook that part up.

Or, if you are playing video games, imagine trying to play paintball, or go bowling with your friends. The act of holding a phone, especially with a tiny screen, limits your ability to recreate the real-world version of those games.

This is why companies like Apple, Qualcomm, Magic Leap, Facebook, Google, and others have already spent billions of dollars to develop some form of wearable AR glasses, blades, or head-mounted displays, if you wish to call them that.

Wearable AR

The industry has been attempting to have a go at this for quite some time and already there have been some notable failures.

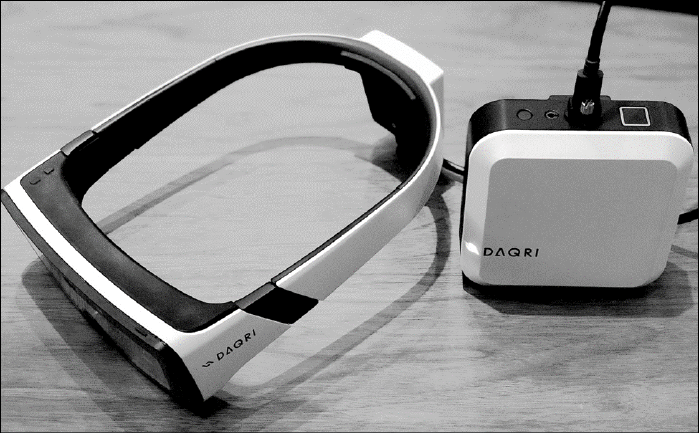

ODG, Meta, DAQRI, Intel Vaunt, and others populate the AR "burial zone" and insiders expect more to come. This is really a risky game for big companies. Why?

Well, to really take on the mass market, a company will need the following:

- Distribution, stores: The ability to get people to try on these devices.

- A brand: Because these computers will be worn on your face, consumers will be far more picky about brands. Brands that are perceived as nerdy will face headwinds. Already, Facebook announced it is working with Luxottica, which owns brands like Ray-Ban, Oakley, and many others.

- A supply chain: If their product does prove successful, tens of millions will buy it. There are only a few companies that can make those quantities of products and use the latest miniaturization techniques, along with the latest materials science, to make devices lightweight, strong, and flexible to absorb the blows that will come with regular use.

- Marketing: People don't yet know why they need head-mounted displays, so they will need to be shown the advantages. This will take many ads and other techniques to get consumers to understand why these are better than a smartphone alone.

- Content: If you get a pair of $2,000 glasses home and you can't watch TV or Netflix, or play a lot of different games, or use them at work with a variety of different systems, you will think you got ripped off. Only a few companies can get developers to build these, along with making the content deals happen.

- Design and customer experience: Consumers have, so far, rejected all the attempts that have come before. Why? Because the glasses that are on the market as of early 2020 generally have a ton of design and user experience problems, from being too heavy to not having sharp enough visuals, to looking like an alien force made them. Only a few companies have the ability to get where consumers want them to be. One company we have heard about has built thousands of different versions of its glasses and it hasn't even shipped yet. The cost of doing that kind of design work runs into hundreds of millions of dollars.

- Ecosystem: When we got the new Apple Watch and the new Apple AirPods Pros in late 2019, we noted that turning the knob on the Watch (Apple calls it the Digital Crown) causes the audio on the headphones to be adjusted. This kind of ecosystem integration will prove very difficult for companies that don't have phones, watches, headphones, computers, and TV devices on the market already.

- Data privacy: These devices will have access to more data about you than your smartphones do. Way more. We believe consumers are getting astute about the collection of this kind of data, which may include analysis of your face, your vascular system (blood vessels), your eyes and what you are looking at, your voice, your gait and movement, and more. Only a few companies can deal satisfactorily with regulators concerning this data and can afford the security systems to protect this data from getting into the wrong hands.

This is why, when Meron Gribetz, the founder of Meta, told us that he thought he had a chance to be disruptive to personal computers, we argued with him. He thought the $200 million or so that had been invested in his firm would be enough. We saw that a competitive firm, Magic Leap, had already raised more than a billion dollars and thought that meant doom for his firm. We feel Magic Leap, even with its current war chest of $2.7 billion, doesn't have enough capital to build all eight of these required aspects from the preceding list. In fact, there have been news reports that indicate that Magic Leap is currently for sale. In April 2020, Magic Leap announced massive layoffs of about 50 percent of its workforce, or more than 1,000 people, and refocused away from attempting to sell to consumers.

We turned out to be right about Meta, for his firm was later decimated and sold for parts. Others, too, had the same dream of coming out of the woodwork and leaving a stamp on the world. ODG's founder Ralph Osterhout had quite a bit of early success in the field. He invented night-vision scope devices for the military and that got him the ability to have a production line and team in downtown San Francisco to come up with new things.

He sold some of his original patents to Microsoft where they were used on the HoloLens product. But, like Meron's company, his firm couldn't stay in the market.

Photo credit: Robert Scoble. Meron Gribetz, founder of Meta, shows off how his headset could augment the world and let you use your hands to work.

These early attempts, though, were important. They woke developers up to the opportunities that are here if all the pieces can be put together and they saw the power of a new way of working, and a new way of living, thanks to AR.

Photo credit: Intel. Intel Vaunt promised great-looking smart glasses with innovative digital displays, but didn't provide the features that potential customers would expect.

They weren't alone in their dream of changing computing for all humans, either. Some other notable failures tell us a bit about where we are going in the future. Intel's Vaunt aimed at fixing a ton of problems with the other early attempts. It had no camera due to the PR problems that Google Glass got into and the last version could only show two-dimensional overlay visuals in black and white. It also had no speaker or microphone. It looked like an everyday pair of glasses.

Too Much and Too Little

It was Intel's fear of the social problems of smart devices on your face, though, that doomed it. In April 2018, it announced it was canceling the Vaunt project. To do AR and the virtualized screens that would give these devices real utility beyond just being a bigger display on your face, the device needs to know where it is in the real world.

Not including cameras meant no AR. No microphones meant you couldn't use your voice to control the devices. No speakers meant you still needed to add on a pair of headphones, which increased complexity, and consumers just decided to stay away. Not to mention Intel didn't own a brand anyone wants on their face and didn't have the marketing muscle or the stores to get people to try them on and get the proper fit and prescription lenses.

DAQRI, on the other hand, went to the other side of the spectrum. They included pretty great AR and had a camera and much better displays. Workers wearing them could use them for hours to, say, inspect oil refinery pipes and equipment.

Photo credit: Robert Scoble. DAQRI's headset gathered hundreds of millions in investment and showed promise for enterprise workers, but never took off because of its tether and high cost.

Where Intel threw out any features that made the glasses seem weird or antisocial, DAQRI kept them in. Its headset was big, bulky, and had a tether to a processing pack you would keep on your belt or in a backpack. It appeared very capable, and because it was aimed at enterprise users who didn't need to worry about looking cool or having a luxury brand associated with the product, it seemed like it would see more success. And for a while it did, except for one thing: the price tag.

Many corporate employees heard about this system, gave DAQRI a call, then found out the costs involved, which could run into hundreds of thousands of dollars, maybe even millions, and that killed many projects. In September 2019, it joined the failure pile and shut down most operations.

Getting Past the Setbacks

Now, if we thought the failures would rule the day, we wouldn't have written this book. Here, perspective rules. There were lots of personal computing companies that went out of business before Apple figured out the path to profitability (Apple itself almost went bankrupt after it announced the Macintosh). Luckily, the opportunity for improving how we all compute is still keeping many innovators energized. For instance, at CES 2020, Panasonic grabbed attention with a new pair of glasses. Inside were lenses from Kopin, started by John Fan.

Photo credit: Robert Scoble. John Fan of Kopin shows off how his lenses work in a demo. His lenses and displays are now in Panasonic's latest head-mounted displays and soon, he promises, others.

One thing that's held back the industry has been the quality of lenses and displays, and Fan is one of the pioneers trying to fix the problems. What problems? Well, if you wear a Magic Leap or a HoloLens, you'll see virtual images that are pretty dark, don't have good contrast, and are fairly blurry, through optics that are fairly thick. These devices also have a small viewing area, usually around 50 degrees, compared to the 100 degrees on offer in most VR headsets. Because these new displays and lenses need to display virtual images while letting a user see through to the real world, they also are very difficult to manufacture, which is why a Magic Leap is priced at $2,500 and a HoloLens is $3,500 (as of January 2020, but we expect these prices to come down pretty rapidly over the next few years).

Today, there are only a few approaches to getting virtual images into your eyes. One approach is waveguides. Here, tiny little structures in something that looks like glass bounce light dozens of times from projectors until the right pixel gets to the right place and is reflected into your eyes. You can't really see these extremely small structures with the naked eye, but this process makes for a flat optic that can be fitted into a head-mounted display. It has lots of disadvantages, the most significant of which is that waveguides are typically not very efficient.

The ones in the first HoloLens presented virtual images that could barely be seen outdoors, for instance. They also present eye-strain problems because it is hard to make these kinds of optics present images at various focal depths. Getting virtual images close to your eyes? Forget it. Even images that are a few feet away don't usually work well at various depths or take into account things in the real world, which puts strain on your eyes. Two terms optics experts talk a lot about are vergence and accommodation.

Vergence and Accommodation

Vergence is how your eyes move inward as you try to focus on things that are closer and closer to you. Cross-eyed? That's vergence and if your glasses don't align images well as they get closer, they can cause your eyes to get much more tired than when they have to focus on real-world items for a long time.

Accommodation refers to how your eye changes shape―and how the lenses in your eyes move to focus on things close up. The eye often uses cues from the real world to figure out how to do that and in smart glasses, these cues aren't available because everything is presented on a single focal plane. Eye strain appears again as an issue.
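
The mismatch between these two cues can be put into rough numbers. The sketch below uses standard geometry: the vergence angle follows from the distance between your eyes and the distance to the object, and focusing demand is usually expressed in diopters (one divided by the distance in meters). The 63 mm eye spacing and the 2-meter focal plane are assumptions chosen for illustration, not the specifications of any particular pair of glasses, and the function names are hypothetical.

```python
import math


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle between the two eyes' lines of sight when both fixate a point straight ahead."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))


def accommodation_diopters(distance_m: float) -> float:
    """Focusing demand on the eye's lens, in diopters (1 / distance in meters)."""
    return 1.0 / distance_m


def focus_mismatch_diopters(virtual_distance_m: float, focal_plane_m: float) -> float:
    """Gap between where the eyes converge and where the display forces them to focus."""
    return abs(accommodation_diopters(virtual_distance_m)
               - accommodation_diopters(focal_plane_m))


FOCAL_PLANE_M = 2.0  # assumed single focal plane of the glasses

for d in (0.5, 1.0, 2.0, 4.0):
    print(f"object at {d} m: vergence {vergence_angle_deg(d):.1f} deg, "
          f"focus mismatch {focus_mismatch_diopters(d, FOCAL_PLANE_M):.2f} D")
```

The closer a virtual object is drawn, the more your eyes turn inward while the display keeps them focused at the same fixed distance, so the mismatch grows quickly for near objects and shrinks to zero only at the focal plane itself.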

Microsoft with HoloLens 2, its latest AR device, went in a different direction―it is using a series of lasers, pointed into tiny mirrors that reflect that light into the back of your eye, which allows you to see virtual images. So far, this approach brings other problems. Some users say they see a flicker, while others say that colors don't look correct.

Either way, we haven't seen one monitor/lens system yet that fixes all these problems. The closest we've seen is one from Lumus, but because it stayed with waveguides, it needed huge projectors pushing light into the optics. This might be good for something as big as a HoloLens (which weighs about a pound) but the industry knows it needs to ship products that are closer to what Intel's Vaunt was trying to do if it wants to be popular with users.

We hear new optics approaches are coming within the next few years, which is what will drive a new wave of devices, but even those spending billions are no longer hyping things up. Both Facebook's founder Mark Zuckerberg and Magic Leap's founder Rony Abovitz have been much more muted when discussing the future with the press lately. Zuckerberg now says he believes we will get glasses that replace mobile phones sometime in the next decade.

Fast, Cheap, or Good

The saying goes that when designing a product, you can have it cheap, fast, or good, except you can only have two of the three. By "good," we mean well designed and appealing for the user: a good and intuitive user interface, appealing aesthetics, and so on. If you've got a "good," fast product, it's not going to be cheap. If it's both fast and cheap, it's unlikely to have had enough time and expertise put into the design process to bring you a "good" user experience. Spatial Computing product designers will have to make other choices on top of this canonical choice as well. Lightweight or the best optics? A cheap price tag or the best performance? Private or advertising-supported? Split the devices up into their component parts and you see the trade-offs that will have to be made to go after various customer contexts. Aiming a product at someone riding a mountain bike will lead to different trade-offs from aiming one at a construction worker inspecting pipes, for instance.

Since we assume you, our reader, will be putting Spatial Computing to work in your business, we recognize you will need to evaluate the different approaches and products that come to market. These are the things you will need to evaluate for your projects. For instance, having the best visuals might be most important to you, and you are willing to go with a bigger, and heavier, device to get them.
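One simple way to make such an evaluation explicit is a weighted score. The sketch below is only an illustration: the device names, the 1-to-10 scores, and the weights are all made-up placeholders rather than measurements of any real product, and you would substitute your own criteria and ratings.

```python
from typing import Dict


def weighted_score(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Average of the scores, weighted by how much each criterion matters."""
    total_weight = sum(weights.values())
    return sum(weights[name] * scores[name] for name in weights) / total_weight


# Hypothetical priorities for a project where visuals matter most.
weights = {"optics": 5, "weight": 1, "battery": 2, "price": 2}

# Hypothetical 1-10 ratings; placeholders, not data about real devices.
devices = {
    "big_headset": {"optics": 9, "weight": 3, "battery": 6, "price": 3},
    "light_glasses": {"optics": 4, "weight": 9, "battery": 4, "price": 8},
}

ranked = sorted(devices,
                key=lambda name: weighted_score(devices[name], weights),
                reverse=True)

if __name__ == "__main__":
    for name in ranked:
        print(name, round(weighted_score(devices[name], weights), 2))
```

With these placeholder weights the bigger, heavier device ranks first; shift the weight toward comfort or price and the ranking flips, which is the trade-off in miniature.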

Photo credit: Robert Scoble. DigiLens Display at Photonics West in 2019. Photonics West is where the industry's optics experts go to show off their latest work and here a potential customer tries on DigiLens' latest offering.

Here are the 10 technology areas where devices will compete:

- The monitors and optics: These control what you see. The ones that present more data in a bigger field of view with more pixels, or higher resolution, are usually found in bigger and more expensive devices rather than in those on the lightweight side of the scale.

- The GPU: GPU speed means how many polygons per second can be processed. The more powerful the GPU in your device, the more detailed the virtual items presented can be. Again, the more powerful ones will be in devices that can supply the battery power these will need and the heat handling that comes along with more powerful chips.

- Battery: If you want to use your device for more hours without needing to charge, you'll need a bigger and heavier battery. There will also be various approaches to charging that you will need to consider.

- Wireless capabilities: Will it have a 5G chip so it can be used on the new high-speed wireless networks? Or will it only have Wi-Fi capabilities, like current Microsoft HoloLens devices? That's great if you'll only use it at home or work, where Wi-Fi abounds, but makes it harder to use elsewhere.

- The sensors: Newer and bigger devices will have several cameras and a variety of other sensors: one to look at your mouth for data to build avatars and study your sentiment, one for your eyes, another to gather light data so it can adjust the monitor to be brighter or darker depending on conditions, and four for the world, two black-and-white ones for tracking and two color ones for photography and scanning items. In addition, there's usually an Inertial Measurement Unit (IMU), which watches how the unit moves. An IMU is actually a group of sensors: an accelerometer, a gyroscope, and a magnetometer (which acts as a compass). These give the system quite a bit of data about how the headset is moving. The top-of-the-line units also have some three-dimensional sensors. All these sensors add many new capabilities, including the ability to have virtualized monitors that stick to surfaces such as real-world tables, or AR characters, masks, items, and such.

- AI chip: Newer devices will add on chips to decipher what the unit is seeing and to start predicting the next moves of both the user and others in their field of view.

- Corrective lenses: Many users will need custom lenses inserted, or systems that can change the prescription of the system. Apple and others have patented various methods to bend lenses to supply different corrective prescriptions.

- Audio: We are seeing array microphones in some high-end units (an array has a number of microphones that are joined together in software so that they work in noisier situations, such as a factory floor). Also, a variety of speakers are available, with more on the way. Some even vibrate the bones in your face, which passes audio waves to your inner ear.

- Processing: Some systems are all self-contained, like the Microsoft HoloLens, where everything is in the unit on your head. Others, like Nreal and Magic Leap, need to be tethered to processor packs you wear, or to your phone.

- The frame and case: Some design their products to be resistant to a large amount of abuse, others design them to be hyper-light and beautiful. There will be a variety of different approaches to water resistance and shock resistance, and trade-offs between expensive new materials that can provide flexibility and reduce device weight while looking amazing, versus cheaper materials that might be easier to replace if broken.

Each product that we'll see on the market will have various capabilities in each of these areas and trade-offs to be made.
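To give a feel for how the IMU readings mentioned in the sensors item turn into an estimate of head movement, here is a minimal sketch of a complementary filter, one common textbook way to blend a gyroscope with an accelerometer. We are not claiming any particular headset works this way; the axis convention, the blend factor of 0.98, and the function names are assumptions for illustration.

```python
import math


def accel_pitch_deg(ax: float, ay: float, az: float) -> float:
    """Pitch implied by the direction of gravity in the accelerometer reading.
    Assumes z points up through the top of the head when the user is level."""
    return math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))


def complementary_filter(pitch_deg: float, gyro_rate_dps: float,
                         accel_pitch: float, dt: float,
                         alpha: float = 0.98) -> float:
    """Blend the gyroscope (smooth, but drifts over time) with the
    accelerometer (noisy, but does not drift). alpha is an assumed factor."""
    gyro_estimate = pitch_deg + gyro_rate_dps * dt
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch


if __name__ == "__main__":
    pitch = 10.0  # deliberately wrong starting guess, in degrees
    for _ in range(500):  # headset sitting still and level
        level = accel_pitch_deg(0.0, 0.0, 9.81)
        pitch = complementary_filter(pitch, 0.0, level, dt=0.01)
    print(f"pitch after 500 samples: {pitch:.4f} degrees")
```

The gyroscope tracks fast head turns while the accelerometer slowly pulls the estimate back toward the true direction of gravity, so the initial error fades away instead of accumulating.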

Differences To Be Aware Of

We won't go into depth on each, since we're not writing this book to help the product designers who work inside Apple, Facebook, or others, but we want to point out some differences that business strategists, planners, and product managers will need to be aware of.

For instance, let's look at the lenses. Generally, you'll have to know where you will attempt to use these devices. Will they be outside in bright sunlight? That's tough for most of the current products to do, so it might limit your choices. Lumus, an optics company from Israel, showed us some of its lenses that would work outdoors. The company said they are capable of about 10,000 nits (a unit of measure for how bright a monitor is), versus the 300 to 500 nits in many current AR headsets, which only work in fairly low light.
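A quick calculation shows why the nit figures matter. A see-through display can only add light on top of whatever the real world is already sending through the lens, so the contrast of a virtual image is the display light plus the background light, divided by the background light. The sketch below assumes a sunlit background of 5,000 nits and a lens that passes all of it; both are placeholder assumptions, not measurements.

```python
def see_through_contrast(display_nits: float, background_nits: float,
                         transmission: float = 1.0) -> float:
    """Contrast ratio of a virtual image on an additive see-through display.

    transmission is the fraction of real-world light the lens lets through
    (1.0 means a perfectly clear lens, which is an idealization).
    """
    seen_background = background_nits * transmission
    return (seen_background + display_nits) / seen_background


if __name__ == "__main__":
    ASSUMED_SUNLIT_NITS = 5000.0  # placeholder for a bright outdoor scene
    for nits in (500.0, 10000.0):
        ratio = see_through_contrast(nits, ASSUMED_SUNLIT_NITS)
        print(f"{nits:.0f}-nit display outdoors: contrast {ratio:.1f}:1")
```

Under these assumptions a 500-nit display yields a contrast of only about 1.1 to 1, which is nearly invisible, while a 10,000-nit display reaches about 3 to 1. Tinting the lens (a lower transmission value) is the other lever designers can pull.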

The problem is that Lumus' solution, at least as it was presented to us in 2018, was expensive and fairly big. This is appropriate for a device the size of a HoloLens, but you won't see those in glasses anytime soon. The Lumus lenses were a lot sharper than others we had experienced before and since, and had a bigger field of view, but product designers haven't yet included those in products, in part because of their big size and higher cost.

At the other end of the scale were lenses from Kopin and DigiLens. DigiLens showed us glasses in early 2019 that were very affordable (devices would be about $500 retail) but had a tiny field of view: about 15 degrees, versus the 50 degrees that Lumus showed us (which is about what the HoloLens 2 has), and with less resolution, too. So, reading a newspaper or doing advanced AR won't happen with the DigiLens products, at least with what we've seen up to early 2020.

So, let's talk about how these products get made. When we toured Microsoft Research back in 2004, we met Gary Starkweather. He was the inventor of the laser printer. That interview is still up on Microsoft's Channel 9 site at https://channel9.msdn.com/Blogs/TheChannel9Team/Kevin-Schofield-Tour-of-Microsoft-Research-Social-Software-Hardware.

In the preceding video, Starkweather talks about his research into the future of computing. Starkweather was looking for ways to build new kinds of monitors that would give us ubiquitous computing. In hindsight, this was the beginning of where the HoloLens came from. In a separate lab at Microsoft Research nearby, Andy Wilson was working on software to "see" hands and gestures we could make to show the computer what to do, among other research he and his team were doing.

Wilson is still working on advancing state-of-the-art human-machine interfaces at Microsoft Research today. Starkweather, on the other hand, died while we were writing this book, but his work was so important to the field that we wanted to recognize it here.

From Kinect to the HoloLens

This early research from the mid-2000s soon became useful as three-dimensional sensors started coming on the scene. In 2010, Microsoft announced the Kinect for Xbox 360. This was a three-dimensional sensor that attempted to bring new capabilities to its video game system. The system could now "see" users standing in front of it. Microsoft had licensed that technology from a small, then unknown Israeli start-up, PrimeSense.

Why was Kinect important? It soon became clear that Kinect wouldn't be a success in the video game world, but Microsoft's researchers saw another way to use that three-dimensional sensor technology. Shrink it down, put it on your face and aim it at the room, instead of players. That would let a wearable computer map the room out, adding new computing capabilities to the room itself.

That's exactly what Alex Kipman and his team did on the first HoloLens. That device had four cameras and one of those three-dimensional depth sensors that mapped out the room, then converted that map into a sheet of polygons (little triangles). You can still see that sheet of triangles once in a while when using the HoloLens and needing to do calibration.
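To make the idea of turning a depth map into a sheet of triangles concrete, here is a minimal sketch. It assumes a simple pinhole camera model with made-up parameters (fx, fy, cx, cy) and a complete depth reading for every pixel; it illustrates the general technique and is not Microsoft's actual pipeline, which has to cope with missing readings and merge many frames over time.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]
Triangle = Tuple[int, int, int]


def depth_to_mesh(depth: List[List[float]], fx: float, fy: float,
                  cx: float, cy: float) -> Tuple[List[Vertex], List[Triangle]]:
    """Turn a grid of depth readings (meters) into 3D points and triangles.

    fx, fy, cx, cy are assumed pinhole-camera parameters (focal lengths and
    image center, in pixels).
    """
    rows, cols = len(depth), len(depth[0])

    # Back-project every pixel (u, v) with depth z into a 3D point.
    vertices: List[Vertex] = []
    for v in range(rows):
        for u in range(cols):
            z = depth[v][u]
            vertices.append(((u - cx) * z / fx, (v - cy) * z / fy, z))

    # Split every square of four neighboring pixels into two triangles.
    triangles: List[Triangle] = []
    for v in range(rows - 1):
        for u in range(cols - 1):
            i = v * cols + u
            triangles.append((i, i + 1, i + cols))
            triangles.append((i + 1, i + cols + 1, i + cols))
    return vertices, triangles


if __name__ == "__main__":
    flat_wall = [[2.0] * 3 for _ in range(3)]  # a wall 2 meters away
    verts, tris = depth_to_mesh(flat_wall, fx=1.0, fy=1.0, cx=1.0, cy=1.0)
    print(len(verts), "vertices,", len(tris), "triangles")
```

Even this toy version shows why the triangle count climbs so quickly: every additional pixel of depth data adds roughly two more triangles to the sheet.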

Photo credit: Microsoft. HoloLens 2 brings a much more comfortable headset with way better gesture recognition, along with a flip-up screen so workers don't need to remove it as often.

The sensors on the front of a Spatial Computing device, like a Microsoft HoloLens, build a virtual layer on top of the real world where virtual items can be placed. Software developers soon became adept at manipulating this virtual layer, which many call the "AR Cloud." This database of trillions of virtual triangles laid on top of the real world provides the foundation for everything to come, from simple text to fully augmented or virtual characters running around.

When the HoloLens was introduced, it brought a new computing paradigm. It's useful to look at just some of the new things it did that weren't possible before:

- Screens can now be virtualized: Before HoloLens, if you wanted a new computer screen you had to buy one from a retailer and hook it up. They are big, awkward, heavy, and expensive. With HoloLens you just gesture with your hands or tell the system to give you a new screen. Then you can grab that virtual screen and place it anywhere in the room around you. There aren't any limits on how many screens you can get, either.

- Surfaces can be changed: When using RoboRaid, one of the games we bought for our HoloLens, aliens could blow holes in our real walls and then crawl through them to attack. The effect was quite stunning and showed developers that you could "mix" reality in a whole new way, keeping the real world but replacing parts of it with virtual pieces.

- Everything is three-dimensional (and not like those glasses at the movies): The first thing you figure out when you get a HoloLens is that you can drop holograms, which are three-dimensional items that move, nearly everywhere. We have a clown riding a bicycle around our feet as we write this paragraph, for instance. That might not sound that interesting, but you can view your business data in three dimensions too. Over at BadVR, a start-up in Santa Monica, California, founder Suzie Borders showed us quite a few things, from shopping malls where you could see traffic data on the actual floor to factories where you could see data streaming from every machine. This new way of visualizing the world maps to how the human brain sees the real world, so it has deep implications for people who do complex jobs, such as surgeons.

- Everything is remembered: Our little bicycle-riding hologram will still be here even a year later, still riding in the same spot; the monitors we placed around our office stick in the same place. Data we leave around stays where we left it. Microsoft calls these "Azure Spatial Anchors" and has given developers new capabilities regarding this new idea.

- Voice first: The HoloLens has four microphones, arranged in a way to make it easy for it to hear your commands. You can talk to the HoloLens and ask it to do a variety of things and it'll do them and talk back. While you can do the same with Siri on an iPhone, this was built into the system at a deep level and feels more natural because it's on your head and always there.

- Gesture- and hand-based systems: Ever wanted to punch your computer? Well, HoloLens almost understands that. Instead, you open your hand and a variety of new capabilities pop up out of thin air. You can also grab things with your hands, and poke at them now too, all thanks to the sensors on the front of the device that are watching your hands, and the AI chip that makes sense of it all.

Given this combination of technologies and capabilities, which are superior to those that the Magic Leap or any other current AR headset has, we believe that the HoloLens historically will be viewed as a hugely important product that showed the world Spatial Computing at a depth we hadn't seen before.

Technology Takes a Leap

All others would use the HoloLens as a measuring stick, which brings us to Magic Leap. Magic Leap gathered investors with a promise that it would make a big magic leap into this new kind of computing. In hindsight, we don't think the investors knew how advanced Microsoft's efforts were. Magic Leap's first round of $540 million came back in 2014, two years before HoloLens hit the market.

Photo credit: Magic Leap. The Magic Leap One is an attractive consumer-targeted product that has more graphical capabilities than HoloLens, but requires a tether to a processor pack, which HoloLens doesn't need.

The hype before Magic Leap shipped its first product, the ML1, was that it constituted a real graphics breakthrough, with optics that were way ahead of others in the market. That didn't come true, at least not in its first product, and as mentioned, Magic Leap's future as a going concern is unknown as this book goes to press.