2

Four Paradigms and Six Technologies

Introduction

You aren't reading this book to learn about small new features of existing apps, but rather to more deeply understand what we are calling the "Fourth Paradigm" of personal computing―Spatial Computing.

This chapter will bring you up to date on the technology you need to know to understand where Spatial Computing came from, where it's going, and why it's so fundamentally different from the desktops and mobile phones that came before, even though it uses a lot of technology invented for the machines of earlier paradigms. Spatial Computing is the fourth paradigm, and we see it as the most personal of all the personal computing paradigms so far. We will cover six technologies that enable Spatial Computing to work: Optics and Displays, Wireless and Communications, Control Mechanisms (Voice and Hands), Sensors and Mapping, Compute Architectures (new kinds of Cloud Computing, for instance), and Artificial Intelligence (Decision Systems).

The Four Paradigms

What do we mean by "paradigm"? The evolution of the user experience is the easiest way to understand this. The First Paradigm marked the beginning of personal computing, with a text-based interface. The Second Paradigm added graphics and color.

Movement and mobility were added with the Third Paradigm in the form of mobile phones. With Spatial Computing, the Fourth Paradigm, computing escapes from small screens and can be all around us. We define Spatial Computing as computing that humans, virtual beings, or robots move through. It includes what others define as ambient computing, ubiquitous computing, or mixed reality. We see this age as also including things like autonomous vehicles, delivery robots, and the component technologies that support this new kind of computing, whether laser sensors, Augmented Reality, Machine Learning and Computer Vision, or new kinds of displays that let people view and manipulate 3D-centric computing.

Seven industries will be dramatically upended by the 3D thinking that Spatial Computing brings, and we will go into more detail on the impacts on those industries in later chapters. Soon, all businesses will need to think in 3D, and this shift will be difficult for many. Why can we say that with confidence? Because the shift has started already.

The Oculus Quest, which shipped in May 2019, is an important product for one reason: it proves that a small, self-contained computer worn on your face can bring fantastic visual worlds you can move around in and interact with. It proves that Spatial Computing has arrived, with a device many can afford and that is easy to use. It's based on a Qualcomm chipset that is already years old as you are reading this, and it costs $400, with controllers that can move freely in space and control it all! Now that our bodies can move within a digital landscape, the Spatial Computing age, that is, "the Fourth Paradigm of Personal Computing," has arrived and will only expand as new devices arrive this year and the next. What we are seeing now are the Spatial Computing counterparts of products like the Apple II or the IBM PC of earlier paradigms.

The Quest isn't alone as evidence that a total shift is underway. Some mobile phones now have 3D sensors on both the front and the back, and brands are spending big bucks bringing Augmented Reality experiences to hundreds of millions of phones and seeing higher engagement and sales as a result.

Look at mobile phones and the popularity of Augmented Reality games like Minecraft Earth and Niantic's Harry Potter: Wizards Unite last summer. You play these while walking around the real world. A ton of new technologies are working in the background to make these 3D games possible.

How is this fourth paradigm of computing different? It is additive, including the technologies that came before it, even as it brings qualitatively different kinds of technology to our eyes and world. Spatial Computing promises to do what the previous three paradigms have failed to do: to map computing to humans in a deep way. Elon Musk says it will increase our bandwidth; what he means is that our computers will be able to communicate with each of us, and us with our technology, in a far more efficient way than we've ever done before. Paradigm one kicked it off by enabling us to converse with our own computer, one that was in our homes for the first time, through a keyboard. That brought a revolution, and the next paradigm promises a huge amount of change, but to understand the amplitude of that change, we should look back to the world that Steve Wozniak and Steve Jobs brought us in 1977.

Paradigm One – The Arrival of the Personal Computer

The Apple II is as important as the Oculus Quest, even though most people alive have never used one. The Quest brought a new kind of Spatial Computing device to the market, one biased mostly toward Virtual Reality, where you can only see a virtual world while the real world is hidden from view. This $400 device was the first from Facebook's Oculus division that let you move freely through virtual worlds without sensors placed around you and without a wire from the headset to a PC. It was all self-contained and it powered on instantly, which dramatically increased usage numbers.

Where the Quest let everyday people think about owning a VR headset for the first time, the Apple II acted in the same way for those back in the late 1970s, allowing people to own a personal computer for the first time! As the 1980s began, a lot of people, not just governments or big businesses, had access to computers. Four decades later, we are seeing the same trend with Spatial Computing.

The Apple II, and later the IBM PC, which ran Microsoft's DOS (for Disk Operating System), brought microprocessor-based computing into consumer-focused products and enabled an entire industry to be built up around them. By the end of the 1980s, literally every desk inside some corporations had a personal computer sitting on it, and the names of those who made them, such as Michael Dell, are still well known today.

We expect that Spatial Computing will similarly lift previously unknown people up to wealth and household name status.

Photo credit: Robert Scoble. Steve Wozniak stands next to an Apple II at the Computer History Museum. He is the last person to have the design of an entire computer, from the processor to the memory to the display drivers, in his head. After this, teams took on the engineering of all those parts.

Speaking of Dell, he doesn't get credit for starting the personal computing age, but he did make personal computing more accessible to the masses. Companies like Dell did this by licensing Microsoft's operating system and putting it in lower-cost machines, which let them make many sales. We foresee a similar pattern playing out in Spatial Computing. We expect Apple to enter the market in late 2020, but believe that its first products will be very protective of privacy, tethered wirelessly to the iPhone, and much more expensive than, say, competitive products from Google and Facebook, not to mention those from Chinese companies.

It is hard to see how early computers that could only display simple black-and-white text on a screen could be relevant to Spatial Computing, but the concepts they introduced, such as files, printing, and saving, remain relevant even today. Back in the beginning, there were no graphics; computers were far too big to hold (much less dream about putting in your pocket) and were much harder to use. Those of us who learned computing back then remember having to look up commands in manuals to do something as simple as printing, and then having to type those commands in.

Spatial Computing, or computing you can move through, is arriving alongside much improved voice technology. Companies like Otter.ai are transcribing our speech, and systems like Apple's Siri, Amazon's Alexa, Google's Assistant, and others are waiting for us to speak to them.

We imagine that, soon, you will be able to just say something like "Hey Siri, can you print out my report?" and it will be so. This new world of convenience that is being ushered in is, in our opinion, far preferable to the days of code and command lines that we saw during the first days of personal computing!

The first computers arrived with little excitement or fanfare. The first ones had only a few applications to choose from, such as a basic recipe database and a couple of games. Plus, Apple founders Steve Wozniak and Steve Jobs were still in their twenties, and the first machines were popular mostly with engineers and technical people who had dreamed of owning their own personal computers. Those days remind us a lot of the current Virtual Reality market. At the time of writing this book, only a few million VR headsets have sold. In its first year, only a few thousand Apple IIs sold. It was held back because the machines were fairly expensive (in today's dollars, they cost more than $10,000) and because they were hard to use; the first people using them had to memorize lots of text commands to type in.

It's funny to hear complaints of "There's not enough to do on an Oculus Quest," which we heard frequently last summer. Hello, you can play basketball with your friends in Rec Room! Try going back to 1977, when the first machines basically didn't do anything and, worse, you had to load the handful of apps that were out back then from tape, a process that took minutes and frequently didn't work at all. Wozniak told us his wife back then lost her entire thesis project on an Apple II, and even he couldn't figure out how to recover it. Those problems are far less frequent in these days of automatic saving to cloud computing servers.

Why is the Apple II, along with its competitors and precursors, so important? What was really significant was that people like Dan Bricklin and Bob Frankston bought one. Bricklin was then a student at Harvard Business School, and the pair saw the potential in the machine to do new things. In their case, they saw how it could help businesspeople. You might already know the rest of the story; they invented the digital spreadsheet.

The app they developed, VisiCalc, changed businesses forever and became the reason many people bought Apple IIs. "VisiCalc took 20 hours of work per week for some people, turned it out in 15 minutes, and let them become much more creative," Bricklin says.

The Apple II ended up selling fewer than 6 million units. The Apple II, and its competitors that would soon come, such as the IBM PC, did something important, though―they provided the scale the industry needed to start shrinking the components, making them much faster, and reducing their cost, which is what computing would need to do to sell not just a few million machines, but billions―which it did in the next two paradigms.

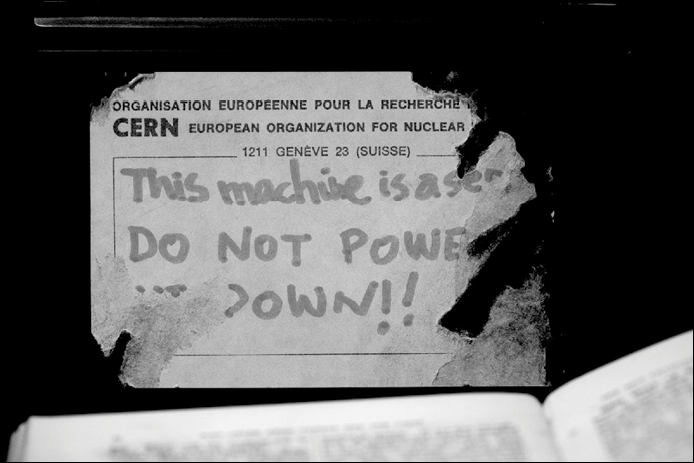

Photo credit: Robert Scoble. Label on Tim Berners-Lee's NeXT computer on which he invented the World Wide Web while working at CERN. The NeXT operating system still survives in today's Macintosh and iOS, and is one of the best examples of concepts pioneered at Xerox Palo Alto Research Center in the 1970s.

Paradigm Two – Graphical Interfaces and Thinking

In 1984, the launch of the Apple Macintosh popularized graphical computing. Instead of typing text commands to, say, print a document, you clicked an icon. That made computing much easier, and it also enabled a new wave of desktop publishing apps. By the time Windows 95 arrived, finally bringing the idea to the mass market, the entire technology stack was built around graphics. This greatly increased the size of tech companies and led to profitable new lines of software for Microsoft and Adobe, setting the stage for Spatial Computing.

This graphical approach was much easier to use than learning to type commands to copy files and print. Now, you could just click on a printer icon or a save icon. This increased accessibility brought many new users into computing. The thing to take away here is that with this shift, and each of the paradigm shifts that followed, computing made a massive move toward working more like humans do, and handling computer tasks became much easier. Spatial Computing will complete this move (Google's Tilt Brush in VR still uses many of the icons developed in this era to do things like choose brushes or save and delete files).

It was a massive increase in the number of computer users (many stores had long lines of people waiting to buy Windows 95) that gave Microsoft, in particular, the resources to invest in R&D labs that led directly to the development of HoloLens 25 years after Windows 95's huge release.

Also, it moved many graphic designers off typesetting machines and into computing, a shift that accelerated with the popularity of the web, which also reached the mainstream on Windows 95 and Macintosh. When Tim O'Reilly and Dale Dougherty popularized the term Web 2.0 in 2004, even Bill Gates didn't understand how important having people interacting on web pages would be.

Weblogs were springing up by the millions, and e-commerce sites like Amazon and eBay were early adopters of techniques that let parts of web pages change without being completely refreshed. Today, WordPress is used by about 20 percent of the web, but back then, Gates and his lieutenant Steven Sinofsky didn't see the business value in Web 2.0 and refused to consider a purchase when Robert Scoble, a coauthor of this book, suggested one while he worked as a strategist at Microsoft. He is now Chief Strategy Officer at Infinite Retina.

The web was starting to "come alive," and so were desktops and laptops, thanks to new video gaming technology. Nvidia, founded in 1993, was now seeing rapid growth as computing got cheaper and better. Where a megabyte of RAM cost $450 in the late 1980s, by 2005 a gigabyte was running at $120. Meanwhile, internet speeds escaped the very slow modem age and increased enough that new video services like YouTube and social networks, including LinkedIn, Twitter, and Facebook, were able to appear. Technology continues to get cheaper and smaller to this day. Today, billions of transistors fit into a chip about the size of your fingernail (the chip that does self-driving in a Tesla has about 6 billion), and memory now costs about $15 for 64-GB chips. It is this decrease in cost and increase in capabilities that is bringing us Spatial Computing.
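To put the memory prices quoted above on a common footing, the short Python sketch below converts each one to dollars per megabyte. It uses only the three figures from this paragraph, in nominal dollars with no inflation adjustment, and it assumes a gigabyte is 1,024 megabytes; the names in it are our own, for illustration.

```python
from __future__ import annotations

# Memory prices quoted in the text: (era, price in USD, capacity in MB).
# Nominal dollars, no inflation adjustment; 1 GB is treated as 1,024 MB.
PRICE_POINTS = [
    ("late 1980s", 450.0, 1),       # $450 for 1 MB
    ("2005", 120.0, 1024),          # $120 for 1 GB
    ("2019", 15.0, 64 * 1024),      # about $15 for 64 GB
]


def dollars_per_mb(price_usd: float, capacity_mb: int) -> float:
    """Cost of one megabyte at a given price point."""
    return price_usd / capacity_mb


def price_drop_factors() -> dict[str, float]:
    """How many times cheaper each era's memory is than the late-1980s baseline."""
    _, base_price, base_mb = PRICE_POINTS[0]
    baseline = dollars_per_mb(base_price, base_mb)
    return {era: baseline / dollars_per_mb(price, mb) for era, price, mb in PRICE_POINTS}


if __name__ == "__main__":
    for era, factor in price_drop_factors().items():
        print(f"{era:>10}: {factor:,.0f}x cheaper per MB than the late 1980s")
```

Taken at face value, those figures mean memory got about 3,840 times cheaper per megabyte by 2005 and close to two million times cheaper by 2019.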

This new user interface paradigm, while easier than typing text commands, was still difficult for many to use. Many calls to Microsoft's support lines showed that people had trouble figuring out how a mouse worked, and even dragging icons around the screen was daunting and scary to many. Those of us who grew up around computers saw it as easy, but many didn't. While the visual metaphors were there, the disconnect between moving a mouse on a desk and controlling a cursor on the screen meant that computers still didn't work the way we did. While most figured it out, computing had another problem: it wasn't designed to fit in your hand or pocket, which is the vision that Douglas Engelbart, among other pioneers, had for all of us. Engelbart was the genius who, back in the late 1960s, showed the world the technology that it wouldn't get until the Macintosh arrived in 1984. Before he died, he told us he had an unfinished dream: making computing even more personal, so that you could communicate with computers with your hands and eyes. He predicted not only the move to mobile, but the move to truly Spatial Computing.

This brings us to Paradigm Three: Mobile.

Paradigm Three – Mobile

Humans aren't happy being tied to desks to do their work, and that restlessness enabled a new industry and a new paradigm of personal computing to arrive, one that brought computing off of desks and laps and into your hand. This paradigm shift enabled billions to get on the internet for the first time (we've seen very poor people in China and other places riding bikes while talking on their smartphones) and would be the platform that many new companies would build upon, thanks to new sensors, ubiquitous data networks, and new kinds of software that were designed for these devices we all now hold.

This third technology shift started in places like Waterloo, Ontario (RIM's Blackberry) and Helsinki (Nokia). For years, these two companies, along with Palm, with its Treo, and a few others, set a new direction for the technology industry. They produced products that fit in your hand and didn't seem to be very powerful computers at the time. Mostly, they were aimed at helping you take a few notes (Treo) or make a call, with the added ability to take a photo (Nokia) or send a few text messages to coworkers (RIM's Blackberry). This turned into quite an important industry. Nokia alone, at its peak in 2000, accounted for four percent of its country's GDP and 70 percent of the Helsinki Stock Exchange's market capitalization.

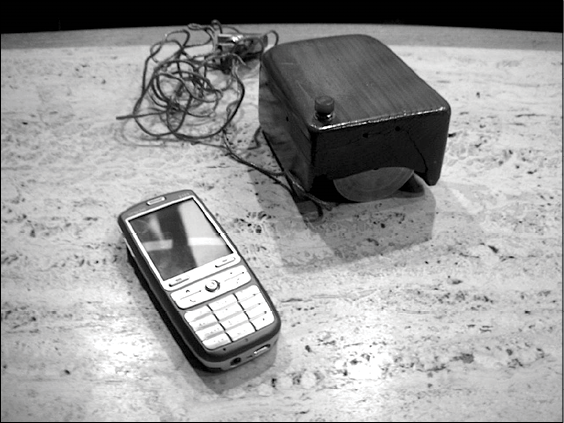

Photo credit: Robert Scoble. A Microsoft Windows Phone, circa 2006, sits next to the original mouse, circa 1968, on Douglas Engelbart's coffee table.

What they didn't count on was that Steve Jobs would return to Apple. With the help of Microsoft, which poured capital into the failing Apple of the late 1990s, he brought Apple back, first by rejuvenating the Macintosh line to keep its faithful happy, then with the introduction of the iPod. Now, the iPod didn't seem to be a device that would help Apple compete with the Blackberries, Treos, and Nokias, but it helped Jobs build teams that could build small, handheld devices and figure out how to market them. That effort crushed other portable audio players, and did so in a way that gave Jobs the confidence, and cash, to invest in other devices like phones.

A few of the engineers who built the iPod were asked by Jobs to come up with ideas for a new device that would merge what they learned with the iPod and add in phone capabilities. The early prototypes looked much more like an iPod than the product we all know today.

That team all carried Treos and studied the other devices, Andy Grignon told us. He was one of the dozen who worked on the first prototypes. They saw that while early devices were useful because they could be carried around, they were hard to use for most things other than making phone calls. Many didn't have keyboards that were easy to type on, for instance, and even the ones that did have keyboards, like the RIM devices, were hard to use for surfing the web or doing things like editing photos.

He told us that Jobs forbade him from hiring anyone who had worked on one of these competitive products, or even from hiring anyone who had worked in the telecom industry. Jobs wanted new thinking.

On January 9, 2007, Steve Jobs introduced the iPhone. That day, we were at the big Consumer Electronics Show getting reactions from Blackberry and Nokia execs. They demonstrated the hubris that often comes from being on top: "Cupertino doesn't know how to build phones," one told us. They totally missed that there was an unserved market―one that not only wanted to use devices while walking around, but also wanted to do far more than just make a call or take a photo once in a while. Their devices were too hard to use for other tasks, and their arrogance kept them from coming up with a device that made it easy.

Around that time, the web had become something that everyone was using for all sorts of things that Tim Berners-Lee, the inventor of the web, could never have imagined. With iPhones, and later Android phones, we could easily use the full web on our mobile devices while walking around, using our fingers to zoom into articles on the New York Times, just as Steve Jobs had demoed months earlier from a stage in San Francisco.

It was this combination of an easy-to-use device and sensors that could add location-based context to apps that formed the basis of many new companies, from Uber to Instagram, born within a few years of the iPhone launch. That wave showed something significant had happened to the world of technology, and it set up the conditions for the next battle over where the tech industry will go next: Spatial Computing.

Paradigm Four – Spatial Computing

You might notice a theme here. Each paradigm builds upon the paradigm that came before, bringing real breakthroughs in user experience. With our mobile phones, tablets, and computers, there's still one glaring problem: they don't work like humans do. Paradigm Four is bringing a perfect storm of usability breakthroughs.

Even a young child knows how to pick up a cup and put it in the dishwasher or fill it with milk. But this same child is forced to use computing that doesn't work like that. Instead of grabbing with her hand, she has to touch a screen, or use a mouse, to manipulate objects on a flat screen.

In Spatial Computing, she will just grab a virtual cup like she would a real one in the real world. This will enable many new people to get into computing and will make all of our tasks easier, and introduce new ways of living.

This move to a 3D world isn't just happening in computing, either. We experienced an off-Broadway play, "Sleep No More," a reimagining of Shakespeare's Macbeth in which you walk through the sets, with action happening all around you. Even New York theater is starting to move from a rectangular stage to productions that surround the audience in 360 degrees. It's a powerful move, one that totally changes entertainment and the audience's expectations of it.

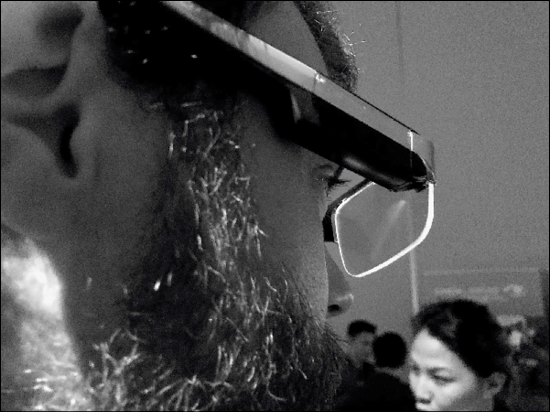

Photo credit: Robert Scoble. Qualcomm shows off Spatial Computing/AR Glasses of the future at an event in 2017.

If Spatial Computing only introduced new 3D thinking, that would be absolutely huge. But it's joined by new kinds of voice interfaces. Those of us who have an Amazon Echo or a Google Home device already know that you can talk to computers now and ask them to do things. Within a year or two, you will be having entire conversations with computers. Also coming at the same time are powerful new AIs that can "see" things in your environment. Computer Vision will make getting information about things, plants, and people much easier.

A perfect storm is arriving, one that will make computing more personal, easier to use, and more powerful. This new form of computing will disrupt seven industries, at a minimum, which we will go into deeply in the rest of this book. In the next section, we'll look at how this storm of change will impact technology itself.

Six Technologies

The storm will bring major advances in six new technologies: Optics and Displays, Wireless and Communications, Control Mechanisms (Voice and Hands), Sensors and Mapping, Compute Architectures (new kinds of Cloud Computing, for instance), and Artificial Intelligence (Decision Systems). For the rest of this chapter, we'll explore the implications of the fourth computing paradigm for each of these technologies.

Optics and Displays

The purest forms of Spatial Computing will be experienced while wearing a head-mounted display, most likely in the form of glasses. In these glasses, you will experience either Augmented Reality (where you see some of the real world, along with some virtual items either placed on top, or replacing real-world items) or Virtual Reality (where you experience only a virtual world).

Paul Milgram, an industrial engineering professor who specializes in human factors, went further than dividing the world into these two poles. He described them as the two ends of a reality-virtuality continuum, which includes mixed reality (where the real and the virtual are mixed). People will continue to mix up all the terms, and we expect that whatever Apple calls them when it introduces its brand of Spatial Computing devices is what may stick, just as it calls its high-resolution screens "Retina Displays."

Photo credit: Robert Scoble. DigiLens prototypes, as displayed at SPIE Photonics West 2019, a conference focusing on optics. As of early 2020, glasses like these still haven't gotten good enough, or small enough, but a raft of new technologies in R&D labs promises to change all that by 2025.

Other terms used to describe various experiences on the spectrum between Augmented and Virtual Reality? Computer-mediated reality, extended reality, simulated reality, and transreality gaming. Now, you probably get why we prefer to just lump all of these under one term: Spatial Computing.

There is already a range of Spatial Computing products on the market, from Google Glass on one side, with its tiny screen, to Microsoft HoloLens on the other, with an approach that puts virtual images on top of the real world in a very compelling way. Yet none of these approaches has succeeded with consumers, due to deep flaws such as heavy weight, low battery life, and blurry, dim screens, not to mention that, since they haven't sold many units, developers haven't supported them with millions of apps like the ones available on mobile phones.

In talking with Qualcomm, which makes the chipsets in most of these products, we learned that there are two approaches that will soon dominate the market: one where you see through the virtual layer to the real world, and one that passes the real world to your eyes through cameras and screens.

Both fit into the Spatial Computing family, since they present computing you can move around in, but they have completely different use cases.

Examples of see-through devices are Magic Leap's ML1 and Microsoft's HoloLens, which let you see the real world directly through their lenses while using a waveguide to display virtual items on top of it.

In November 2019, reports leaked that Apple is planning head-mounted displays (HMDs) that take a different approach: a completely opaque display that, when worn, presents the real world "passed through" cameras to its tiny, high-resolution screens. This passthrough approach, first introduced in spring of 2019 in the Varjo VR-1, brings you a superwide field of view (FOV), something that Magic Leap and HoloLens can't yet match, along with extremely high resolution and better color than Magic Leap and HoloLens can bring you. The same leak, reported in The Information, said that Apple is also planning a lighter pair of see-through glasses for 2023. That matches plans at other companies, like Facebook, which has a four-year strategy for building its own pair of Augmented Reality glasses.
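One way to see why a wide field of view and high resolution pull against each other is angular resolution, measured in pixels per degree. The Python sketch below assumes pixels are spread evenly across the field of view and ignores lens distortion; the panel width and FOV figures are hypothetical, and the 60-pixels-per-degree target is the commonly cited figure for 20/20 vision (one pixel per arcminute).

```python
def pixels_per_degree(horizontal_pixels: int, horizontal_fov_deg: float) -> float:
    """Average angular resolution, assuming pixels are spread evenly across the FOV."""
    if horizontal_fov_deg <= 0:
        raise ValueError("field of view must be positive")
    return horizontal_pixels / horizontal_fov_deg


def pixels_needed(horizontal_fov_deg: float, target_ppd: float = 60.0) -> int:
    """Horizontal pixels required to reach target_ppd across the whole FOV.

    60 pixels per degree is one pixel per arcminute, the commonly cited
    resolving limit of 20/20 vision.
    """
    return round(horizontal_fov_deg * target_ppd)


if __name__ == "__main__":
    # Hypothetical: the same 2,000-pixel-wide panel stretched over a
    # narrow versus a wide field of view.
    for fov in (50.0, 100.0):
        print(f"{fov:.0f} deg FOV: {pixels_per_degree(2000, fov):.0f} ppd, "
              f"{pixels_needed(fov):,} px needed for 60 ppd")
```

Under these assumptions, doubling the field of view with the same panel halves the angular resolution (from 40 to 20 pixels per degree), and matching eye-limited sharpness across 100 degrees would take about 6,000 horizontal pixels per eye.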

The passthrough designs, though, have some significant limitations―other people can't see your eyes, so they are not great to use in situations where you need to talk with other people in the real world, and, because you are seeing a virtual representation of the real world, they won't be good for military or police, who need to see the real world in order to shoot guns. The latency and imperfections in bringing the real world to your eyes in such an approach might prove troublesome in applications that require immediacy. Similarly, we wouldn't trust them in everyday situations where you could be in danger if your awareness is compromised, like if you are a worker using a bandsaw, or someone driving a car, or even if you are trying to walk around a shopping mall.
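A rough way to see why latency matters for passthrough: while your head turns, the camera image you see lags behind where the world really is by about the head's angular speed multiplied by the motion-to-photon latency. The Python sketch below applies that first-order estimate; it assumes a constant head speed and no motion prediction (real headsets use prediction to shrink this error), and the speeds, latencies, and pixels-per-degree figure are hypothetical.

```python
def misregistration_deg(head_speed_deg_per_s: float, latency_ms: float) -> float:
    """Angular offset between the displayed and the real world during a head turn.

    First-order estimate: constant angular speed, no motion prediction.
    """
    return head_speed_deg_per_s * latency_ms / 1000.0


def misregistration_px(head_speed_deg_per_s: float, latency_ms: float,
                       pixels_per_degree: float) -> float:
    """The same offset expressed in display pixels."""
    return misregistration_deg(head_speed_deg_per_s, latency_ms) * pixels_per_degree


if __name__ == "__main__":
    # Hypothetical: a brisk 100 deg/s head turn on a 20 pixel-per-degree display.
    for latency in (5.0, 20.0, 50.0):
        print(f"{latency:>4.0f} ms -> {misregistration_deg(100.0, latency):.1f} deg, "
              f"{misregistration_px(100.0, latency, 20.0):.0f} px")
```

With these assumed numbers, 20 ms of lag during a quick head turn leaves the passthrough view about 2 degrees behind reality, the kind of gap that matters to someone running a bandsaw or driving.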

That said, a passthrough device would be amazing for replacing monitors. Another way to look at it is that passthrough will be biased toward Virtual Reality, where you see mostly virtual worlds or items and very little, if any, of the real world. See-through displays, on the other hand, will be biased toward Augmented Reality, where you are mostly seeing the real world with virtual characters or items, or virtual screens overlaid or replacing parts of the real world. Each is a member of the Spatial Computing family, and has different use cases and benefits.

We are hearing that, by 2022, these passthrough devices will present you with a virtual monitor that is both bigger and higher resolution than the screens most of our friends had in their homes in 2019, and we know many people who have 4K projection screens in their entertainment rooms.

We don't know which approach will win with consumers, but we have come up with some theories by trying out the different approaches for ourselves.

Enterprise workers who need to walk around machines, or police/military users, will tend to go with something like the HoloLens.

These devices, at least as of early 2020, use optics systems that guide light to your eye through glass with tiny structures, or mirrors, that bounce light projected from tiny projectors or lasers into your eyes, giving you an image. Why do we say workers or the military will go with these? Because they let you see through the glass to the real world. The analog world is hard to properly represent virtually with low latency, and these optics don't even try.
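Many waveguide combiners keep the projected light trapped inside the glass by total internal reflection, bouncing it along until gratings or partial mirrors redirect it toward the eye. The Python sketch below computes the critical angle from Snell's law under simplified assumptions (a flat glass plate surrounded by air, angles measured from the surface normal); the refractive indices are illustrative, not the values any particular product uses.

```python
import math


def critical_angle_deg(n_glass: float, n_outside: float = 1.0) -> float:
    """Critical angle for total internal reflection, from Snell's law.

    Light inside the glass striking the surface at more than this angle
    (measured from the surface normal) is reflected back inside.
    """
    if n_glass <= n_outside:
        raise ValueError("total internal reflection needs a denser inner medium")
    return math.degrees(math.asin(n_outside / n_glass))


def stays_in_waveguide(ray_angle_deg: float, n_glass: float) -> bool:
    """True if a ray at this angle from the normal is trapped by TIR."""
    return ray_angle_deg > critical_angle_deg(n_glass)


if __name__ == "__main__":
    for n in (1.5, 1.8):  # ordinary glass vs. a higher-index glass (illustrative)
        print(f"n = {n}: critical angle {critical_angle_deg(n):.1f} deg")
```

Higher-index glass has a smaller critical angle, so it can trap a wider range of ray angles, which is one reason index matters when designers try to widen the field of view.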

The devices that require lots of cameras, sensors, and powerful GPUs to properly align virtual images with the analog world will remain fairly big and heavy. The HoloLens weighs more than a pound, and it doesn't even include a 5G radio or batteries that can keep it powered for more than a few hours.

We can't imagine running, or skiing, with such a big contraption on our heads, not to mention that it makes the wearer look antisocial in public, which explains why there are small, though underpowered, devices like the Focals by North and Google Glass. We find these smaller devices lacking in the ability to present text in a satisfying way, and they have many limitations, from a very small field of view to not enough power to do Augmented Reality, which is where we believe the real power of on-face wearable devices lies. These are great for wearing while doing, say, inventory at a warehouse, or for seeing notifications like a smartwatch might display, but they can't display huge virtual monitors and can't even attempt Virtual Reality or true Augmented Reality-style entertainment apps.

Bigger devices with optics from companies like Kopin or Lumus, which makes the display in Lenovo's glasses, and DigiLens, which got an investment from Niantic, the company behind Pokémon Go, are pushing the bleeding edge, with many others coming soon.

You should be aware of these different approaches, though, and the pros and cons of each. For instance, people who mostly work at desks, whether sitting at a Starbucks, or a corporate office, won't often need to see the real world, so they may prefer the wider field of view, the better color, and higher resolution of passthrough devices like the Varjo, and what we expect Apple to be working on, among others.

Why do we see passthrough as the ones that may win with consumers by 2022? New micro LED displays are coming on the market from companies like Sony and Mojo Vision, which have super high-resolution displays that are smaller than your fingernails.

These displays offer better power efficiency, and some can do things like completely darken the sky you are looking at, or replace it with virtual images. That lets wearers experience both VR and AR, often one after another, in all sorts of lighting conditions, potentially with wraparound screens, at a lower cost than the techniques used in HoloLens.

We can see a world where we'll own two or maybe even three pairs of glasses for different things. Additionally, we might use a very different headset, like the Varjo, when we go to a shopping mall to play high-end VR in Location-based Entertainment retail settings such as those from The Void, Spaces, Sandbox VR, Hologate, or Dreamscape Immersive. These are places where you pay $20-$30 or more to have much more immersive experiences than you can at home, but for the purposes of this book, we'll focus on enterprise and consumer uses of Spatial Computing and not these high-end uses, although you should try them out, since our families and team members love them as well.

One thing we want you to realize is that much better devices will soon arrive; some may have even been announced between us finishing this book and the time you read it. Expect this section of our book to be out of date soon after printing as technologies come out of the lab.

As we've been talking with people about these different approaches, many people believe that small GPUs will not be able to power such high-resolution displays. That might be true if displays behaved like the ones on your desktop, which have the same resolution all the way across the display.

VR pioneer Tom Furness told us that won't be the case for long, thanks to a new technique called "foveated rendering." What he and his researchers realized is that the human eye sees color and high resolution sharply only in a small region at the center of its vision, which falls on the fovea of the retina. Since Spatial Computing devices are worn, and some include eye sensors, they can play visual tricks on our eyes, he says, by putting high resolution, or tons of polygons, only right where our eyes are looking. The rest of the screen can be rendered at lower resolution or, at a minimum, with fewer polygons, thus "fooling" your eyes into believing that the entire display is, say, an 8K one, while only the region you are looking at is rendered at that resolution. This technique is already being used in some VR headsets, like the Varjo, and products that use it can double apparent resolution without putting extra strain on the GPUs or computers driving them.
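
A rough sketch of the idea, assuming an eye tracker that reports where you are looking as horizontal and vertical angles in degrees: render at full resolution inside a small central radius and fade toward lower resolution in the periphery. The 5-degree fovea radius, 20-degree falloff, 25 percent minimum scale, and the function names are illustrative assumptions, not how Varjo or any other headset actually implements it.

```python
import math

def resolution_scale(point_deg, gaze_deg, fovea_radius_deg=5.0,
                     falloff_deg=20.0, min_scale=0.25):
    """Fraction of full per-axis resolution to render at point_deg.

    Both points are (horizontal, vertical) angles in degrees within the
    field of view. All thresholds are illustrative assumptions.
    """
    # Angular distance (eccentricity) from where the eye is looking.
    ecc = math.hypot(point_deg[0] - gaze_deg[0], point_deg[1] - gaze_deg[1])
    if ecc <= fovea_radius_deg:
        return 1.0  # full detail where the fovea is pointed
    # Fade linearly from full resolution down to min_scale in the periphery.
    t = min((ecc - fovea_radius_deg) / falloff_deg, 1.0)
    return 1.0 - t * (1.0 - min_scale)

def relative_pixel_cost(gaze_deg, fov_deg=100.0, step_deg=1.0):
    """Pixels shaded relative to rendering the whole view at full resolution."""
    n = int(fov_deg / step_deg)
    half = fov_deg / 2
    total = 0.0
    for i in range(n):
        for j in range(n):
            p = (-half + (i + 0.5) * step_deg, -half + (j + 0.5) * step_deg)
            # A per-axis scale s shades s * s as many pixels.
            total += resolution_scale(p, gaze_deg) ** 2
    return total / (n * n)

print(f"{relative_pixel_cost((0.0, 0.0)):.0%} of full-resolution pixel work")
```

Because a per-axis scale of s shades only s times s as many pixels, even a gentle falloff removes most of the pixel work outside the gaze point, which is where the GPU savings come from.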

Other problems also need to be solved. With the HoloLens, you can't bring virtual items close to your face. Why is that? Because of two effects: accommodation, which is how the muscles in your eyes adjust to focus on closer items, and vergence, which is how your eyes rotate toward each other when looking at something close. Current optics and microdisplays have a hard time supporting both at once.
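
The conflict can be put in numbers. The vergence angle for an object at distance d, with the eyes an interpupillary distance (IPD) apart, is 2·atan(IPD / 2d), and the focus the eye needs is 1/d diopters. A sketch, assuming a 63 mm IPD and a fixed optical focal plane of 2 m (both assumptions for illustration), shows how the mismatch grows as a virtual object approaches your face:

```python
import math

IPD_M = 0.063          # assumed interpupillary distance (63 mm)
FOCAL_PLANE_M = 2.0    # assumed fixed focal distance of the headset optics

def vergence_deg(distance_m, ipd_m=IPD_M):
    """Angle between the two eyes' lines of sight when fixating at distance_m."""
    return math.degrees(2 * math.atan(ipd_m / (2 * distance_m)))

def diopters(distance_m):
    """Focusing demand (accommodation) for an object at distance_m."""
    return 1.0 / distance_m

def vergence_accommodation_mismatch(object_m, focal_plane_m=FOCAL_PLANE_M):
    """Diopters between where the eyes converge and where the optics force focus."""
    return abs(diopters(object_m) - diopters(focal_plane_m))

for d in (2.0, 0.5, 0.25):
    print(f"{d:>4} m: vergence {vergence_deg(d):5.2f} deg, "
          f"mismatch {vergence_accommodation_mismatch(d):.2f} D")
```

At 2 m the two cues agree. At a quarter meter your eyes converge as if to focus at 4 diopters while the optics still demand 0.5, a 3.5-diopter gap.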

The holy grail of displays was actually invented about 20 years ago by Furness. It uses a low-power laser to scan a single pixel across the back of your retina. No one has been able to manufacture such a display at scale yet, but Furness said prototypes in his lab actually helped a few people regain some sight in their blind eyes, since the laser penetrates scar tissue that some people have built up on the back of their eyes due to birth defects or injuries. We hope to see a working version of such a display, which, if a company can build it, promises to use very little power and give amazing images, since they are painted right onto our retinas. That said, the display technology we will have on our eyes within a year of this book's publication will be very impressive indeed.

Now that we've discussed the future of Optics and Displays, let's look at the other pieces of the Spatial Computing puzzle that are also rapidly evolving.

Wireless and Communications

The screens mentioned in the previous section are going to be data-hungry. If our sources are correct, the wearable screens on our faces will need 8K and probably much higher resolution videos to give you a sharp view of the world around you. We are hearing of companies testing glasses with 32K videos that wrap around you in 360 degrees.

To deliver such a viewing experience, you'll need a huge amount of data, all while the glasses are also uploading data streaming from the cameras and sensors on their front.
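
Some rough arithmetic shows why. Uncompressed video needs width × height × bits per pixel × frames per second. A sketch, assuming 24-bit color, 90 frames per second, and an illustrative 100:1 codec compression ratio (real ratios vary widely with content and codec):

```python
def raw_video_gbps(width, height, fps, bits_per_pixel=24):
    """Uncompressed video bitrate in gigabits per second."""
    return width * height * bits_per_pixel * fps / 1e9

def compressed_mbps(raw_gbps, compression_ratio):
    """Bitrate after an assumed codec compression ratio, in megabits per second."""
    return raw_gbps * 1000 / compression_ratio

raw = raw_video_gbps(7680, 4320, 90)   # one 8K stream at an assumed 90 fps
print(f"raw: {raw:.1f} Gbps")          # raw: 71.7 Gbps
print(f"compressed 100:1: {compressed_mbps(raw, 100):.0f} Mbps")  # compressed 100:1: 717 Mbps
```

Even with heavy compression, a single 8K stream lands in the hundreds of megabits per second, before counting a second eye, higher frame rates, or the sensor data flowing the other way.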

Plus, engineers in labs are telling us that they are trying to remove as much computing from the glasses as possible and put that computation up on new kinds of cloud servers. For such a scheme to work, we'll need not only much more bandwidth than we have today, but we'll need very low latency.

Enter 5G. 5G brings us three benefits. First, very high bandwidth: users are getting about 10 times more bandwidth than they got with LTE on mobile phones. Second, low latency: 5G has a latency of about two milliseconds to a cell tower, while today's LTE latency is roughly 10 times higher. That matters if you are trying to play a video game with someone else; imagine everything lagging or slowing down. The third benefit comes into play if you visit sports arenas or concert venues with thousands of other people: 5G promises that many times more devices can connect per antenna. We remember the day we went to the World Series at AT&T Park in San Francisco and couldn't send a text message or make a phone call on our iPhones, despite AT&T putting the best radios it had into the park.

As of 2020, we don't expect many of you to have 5G, but it is already being put into sports arenas, and cities with high population densities. 5G also has some significant problems. The highest frequencies can't go through walls easily and you need to be much closer to antennas than with LTE. Telecom experts tell us that rollouts will be slow to most neighborhoods and you will probably need to upgrade your Wi-Fi in order to distribute such fast bandwidth inside office buildings and homes.

When will you get 5G in your home? If you live in a high-density city, probably by 2022. If you live in a rural area, though, you might not see it for most of the 2020s, because the fastest 5G requires being very close to a 5G tower. That said, in 2019, we got an AT&T fiber line and Wi-Fi 6 in our homes, which already gives us most of the benefits of 5G without waiting. Wi-Fi 6 is the newest version of the Wi-Fi standard and shipped on iPhones for the first time in late 2019. Unfortunately, very few people have access to a gigabit fiber line, our Wi-Fi 6 router cost about $400, and we bought another $500 worth of extenders. Very few people will want to put more than $1,000 into new Wi-Fi 6 equipment just so they can have the fastest speeds in the neighborhood!

Another place where 5G will be rolled out more quickly? Inside factories. Here, 5G pays real dividends because virtualized factory floors can make things much more efficient, and many factories have thousands of sensors and thousands of robots that can overwhelm older communications infrastructure.

It is the new software architectures that 5G enables, however, that will be most exciting. Google Stadia gives you a little taste. Instead of loading games on your phone or your glasses that can be many gigabytes in size, you just livestream them. This kind of scheme will enable much more complex processing to be done on huge server farms, serving just streams of pixels down to the optics on your eyes. We can imagine a day, soon, if it hasn't happened already by the time this book is published, where you say something like "Hey Siri, play Minecraft Earth" and the game just starts playing without loading anything.

The thing with all this bandwidth is that it's very hard to use on older equipment. Even with tons of 4K video streaming, we can't saturate our gigabit internet line. It's Spatial Computing that will use all the bandwidth that 5G, or a Wi-Fi 6/gigabit internet line, will bring, and we'll need a much more efficient way to control the multiple video screens and the Augmented Reality that will surround us as we wear new Spatial Computing glasses. This is why Qualcomm is already building wearable devices that have seven cameras, most of which will be used for new kinds of controls for this new highly interactive world.

Control Mechanisms (Voice, Eyes, and Hands)

When we put a HoloLens on someone for the first time, what do they try to do? Grab for one of the holograms they see with their hands. Obviously, this is something that we would never attempt to do with a 2D interface such as a computer screen or a smartphone screen.

This human need to try to touch, grab, and manipulate is so powerful that it's easy to see that controlling computing inside an AR or VR headset won't be anything like it is on a traditional laptop or desktop machine with its mouse and keyboard. There are so many business and consumer uses in Spatial Computing for this kind of direct manipulation with your hands.

Photo credit: Robert Scoble. A woman uses the Icaros VR fitness device at a tradeshow in Germany in 2018. This shows that Spatial Computing controls will include not just hand controls, but sensors on the face, and other places that can enable new kinds of fun experiences.

At the same time, new voice-first technologies are coming fast and furious. At WWDC in 2019, Apple showed off new voice commands that could run the entire operating system of a Macintosh simply by talking. Many of us are already used to using voice for some things, whether with Siri on iPhones, Alexa on Amazon Echo devices, or Google Assistant on Android phones, apps, or Google Home devices. Others, like Samsung's Bixby, are also coming along, and specialized voice apps and services, like Otter, are further encouraging us to talk to our devices to perform tasks, take notes, or do other work.

While most people will judge devices by how well they handle hand gestures, we see that it's the combination of input methods, whether it's using your voice, your hands, your eyes, or even typing or moving a phone, that makes Spatial Computing so powerful.

It's the first time we can really experience hands-free computing. Surgeons, for instance, are starting to use Spatial Computing because they can control cameras and other equipment just by talking.

Haptic controls, which add realism to many use cases, are also evolving quickly. The standard controllers that come with, say, an Oculus Quest or HTC Vive provide some haptic feedback, like vibrating when you shoot a gun, but an entire industry is springing up as Spatial Computing glasses and VR headsets become more popular: companies make gloves that let you touch and feel things, and suits that capture your entire body's motion and even, in the case of the $15,000 Teslasuit, let a wearer feel hot or cold, along with other sensations passed to the skin through dozens of transducers.

That said, the standard sensors in headsets alone bring major new capabilities, even if you don't add on some of these other accessories.

What's really going on is that we're seeing a convergence of technologies that's making all of this possible. Sensors are getting cheap enough to be included in consumer-grade devices. AI is getting good enough that we are close to having full conversations with our computers, as Google demonstrated in 2018 with its Duplex demo, in which an AI called a local business and talked with the human who answered the phone, and the human was none the wiser.

Computer Vision and Machine Learning make hand gestures much more accurate. Andy Wilson showed us how gestures worked at Microsoft Research way back in 2005 (he directs the perception and interaction research group there: https://www.microsoft.com/en-us/research/people/awilson/). He wrote algorithms that could "see" his fingers with a camera. Another algorithm was taught to tell whether he had touched his finger and thumb together. Then he zoomed in on a map just by using his hands in mid-air. Today, we see that same technique used in Microsoft's HoloLens, though it has become much more advanced than the simple touch-your-fingers-together algorithm.
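
Wilson's system learned to recognize the pinch from camera images. The sketch below swaps in a much simpler geometric rule to show the logic once a hand tracker has done the hard part: it assumes hypothetical 3D fingertip positions in meters, treats thumb and index tips closer than 2 cm as a pinch, and uses a wider 4 cm release threshold (hysteresis) so the gesture doesn't flicker. Two-handed zoom is then the ratio of the current to the starting distance between the pinching hands.

```python
import math

class PinchDetector:
    """Hysteresis pinch detector on thumb/index fingertip positions (meters).

    Fingertip positions are assumed to come from some hand tracker; the
    thresholds are illustrative assumptions.
    """

    def __init__(self, close_m=0.02, open_m=0.04):
        self.close_m = close_m   # fingers must come this close to start a pinch
        self.open_m = open_m     # and separate this far to end it
        self.pinching = False

    def update(self, thumb_tip, index_tip):
        gap = math.dist(thumb_tip, index_tip)
        if self.pinching and gap > self.open_m:
            self.pinching = False
        elif not self.pinching and gap < self.close_m:
            self.pinching = True
        return self.pinching

def zoom_factor(start_left, start_right, left, right):
    """Two-handed zoom: current hand separation divided by the starting one."""
    return math.dist(left, right) / math.dist(start_left, start_right)

detector = PinchDetector()
for index_x in (0.05, 0.01, 0.03, 0.05):  # index fingertip closing, then opening
    print(index_x, detector.update((0.0, 0.0, 0.0), (index_x, 0.0, 0.0)))
```

The gap between the two thresholds is what keeps a pinch held at 3 cm from rapidly toggling on and off as tracking jitters.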

Back then, though, gestures only did one or two things and weren't accurate if the sensor couldn't clearly see your thumb touching your finger. Today, AIs pick up small patterns on the back of your hand from your muscles contracting so that many more gestures work, and the ones you try might not even be fully visible to the sensors on the front of your Spatial Computing glasses.

These capabilities make Spatial Computing devices awesome for remote assistance, hands-free work, and all sorts of new interactions with computing or robots. Snap your fingers and you can be shooting at monsters crawling toward you in a video game.

The thing businesses need to know here is that users will come to this world from wherever they are, and you will need to do a lot more testing with people to make sure they can use your services well. Accessibility is a huge opportunity. Apple demonstrated this when a man who had no use of his hands and who uses a wheelchair could still send messages, manipulate photos, and perform other tasks, all with his voice.

It is hard to imagine just how deeply user interfaces will soon change. Eye sensors will facilitate some major leaps in the way we interact with our technology. We can see a day when we are in the shopping mall and ask our glasses, "Hey Siri, what's the price of that?" fully expecting an answer about the shirt we are actually looking at. Indeed, all the major companies have purchased companies that make eye sensors. In part, this is because eye sensors can be used to further develop high-resolution displays that pack tons of polygons into the spot where you are actually looking, using the foveated rendering technique, but we bet they also see the business opportunities of making user interfaces understand us much better by knowing what we are looking at, along with the biometric security that will come, too.
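
Resolving "that" in a question like the one above is, at its core, a ray-casting problem. A sketch, assuming an eye tracker that yields a gaze ray (origin and direction) in the same coordinates as the recognized objects, each approximated by a bounding sphere; `SceneObject` and the shelf contents are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class SceneObject:
    label: str
    center: tuple   # (x, y, z) in meters, in the same frame as the gaze ray
    radius: float   # bounding-sphere radius in meters

def gaze_target(eye_origin, gaze_dir, objects):
    """Return the nearest object whose bounding sphere the gaze ray hits, or None."""
    length = math.sqrt(sum(c * c for c in gaze_dir))
    d = tuple(c / length for c in gaze_dir)  # unit gaze direction
    best, best_t = None, math.inf
    for obj in objects:
        oc = tuple(c - o for c, o in zip(obj.center, eye_origin))  # eye -> center
        t_mid = sum(a * b for a, b in zip(oc, d))  # closest approach along the ray
        if t_mid < 0:
            continue  # object is behind the viewer
        miss_sq = sum(a * a for a in oc) - t_mid ** 2  # squared ray-to-center distance
        if miss_sq > obj.radius ** 2:
            continue  # ray passes outside the sphere
        t_hit = t_mid - math.sqrt(obj.radius ** 2 - miss_sq)  # front surface
        if t_hit < best_t:
            best, best_t = obj, t_hit
    return best

shelf = [SceneObject("blue shirt", (0.0, 0.0, 3.0), 0.3),
         SceneObject("red jacket", (1.0, 0.0, 3.0), 0.3)]
target = gaze_target((0.0, 0.0, 0.0), (1.0, 0.0, 3.0), shelf)
print(target.label if target else "nothing")  # red jacket
```

The assistant can then attach the returned label to the spoken question before looking up a price.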

Another need for eye sensors is biometric multifactor identity. It turns out our eyes are like our fingerprints: uniquely ours. So, if someone else puts on our glasses, they won't be able to access our private information, and the glasses will work for us again once they hand them back. Add to that identity systems that use voice, heart rate, and the blood vessels in skin that cameras can see even where the human eye can't, along with patterns like gait and hand movements, and computing soon will be able to know it's you with a very high degree of accuracy, increasing the security of everything you do and finally getting rid of passwords everywhere.
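
Why does stacking factors help so much? If each check is fooled independently, an impostor has to beat all of them, so the false-accept rates multiply; the catch is that a genuine user must pass all of them too, so false rejects accumulate. The sketch below uses placeholder rates, not measured figures for any real iris, voice, or gait system, and independence is a strong assumption that real factors only partly satisfy.

```python
from math import prod

def combined_false_accept_rate(rates):
    """Chance an impostor passes every check, assuming checks fail independently."""
    return prod(rates)

def combined_false_reject_rate(rates):
    """Chance a genuine user fails at least one check (same independence assumption)."""
    return 1 - prod(1 - r for r in rates)

# Placeholder per-factor rates, for illustration only.
false_accepts = {"iris": 1e-4, "voice": 1e-2, "gait": 1e-1}
false_rejects = {"iris": 0.01, "voice": 0.01}

print(combined_false_accept_rate(false_accepts.values()))
print(combined_false_reject_rate(false_rejects.values()))
```

That trade-off is one reason systems tend to combine weighted scores rather than requiring every factor to pass outright.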

Eye sensors will also be loved by marketers, since it's possible they will know if you actually looked at an ad or got excited by a new product display at a store. These new capabilities will lead to many new privacy and control concerns.

We've seen companies like Umoove, which lets us control phone screens using only our eyes, but its approach seemed clunky, not well integrated, and, truth be told, not all that accurate. That won't be the case as more eye sensors are built into Spatial Computing glasses.

Speaking of Siri, as of 2019, she isn't all that good, but Apple is working on improving her to the point where you can do everything, from finding a restaurant to editing a video, just by talking to her. Ask her something like "How many people are checked in on Foursquare at the Half Moon Bay Ritz?" and you will quickly learn her limitations. The problem isn't hearing: she understands us just fine (although if you don't speak English well, or have a strong accent or other non-standard speech patterns, she fails there too).

Foursquare actually has an answer to that question, along with an API that lets other programmers get to it. Siri's abilities, though, are hard-coded, and Apple's programmers haven't yet gotten around to such an obscure feature, so she fails by bringing in an answer from Bing that makes no sense.
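
A caricature of that failure mode, not Siri's actual design: an assistant whose skills are a fixed table of hard-coded triggers answers only what its programmers anticipated and hands everything else to a web search. The intents, handlers, and `web_search` function here are hypothetical.

```python
def handle_query(text, intents, fallback):
    """Send the query to the first hard-coded intent whose trigger appears in it.

    Anything the developers never anticipated drops through to `fallback`.
    """
    lowered = text.lower()
    for trigger, handler in intents.items():
        if trigger in lowered:
            return handler(text)
    return fallback(text)

# Hypothetical hard-coded skills; none of them knows about Foursquare check-ins.
INTENTS = {
    "weather": lambda q: "weather skill",
    "set a timer": lambda q: "timer skill",
}

def web_search(query):
    return f"web results for: {query}"

print(handle_query("What's the weather in Half Moon Bay?", INTENTS, web_search))
print(handle_query("How many people are checked in on Foursquare at the Half Moon Bay Ritz?",
                   INTENTS, web_search))
```

The second query is understood word for word, yet it still lands in the generic fallback because no one wrote a handler that calls Foursquare.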

Where is this all going? Well, Magic Leap has already started demonstrating a virtual being that it calls "Mica." Mica will stand or sit with you, interact with you in full conversations, and even play games with virtual game pieces with you. Those capabilities were shown at the Game Developers Conference in early 2019.

The thing about Mica is that it is years away from being able to fool us, in every situation, into believing it is as good a conversationalist as a human, but that's not the point. Amazon Echo/Alexa and Google Assistant/Home demonstrate that something is going on here: we are increasingly using our voice to control our computing, and much better AI is coming between now and 2025 that will adapt to even difficult-to-understand voices, as well as to much more complex queries that go far beyond the Foursquare example.

As systems get more data about where we are, what we are doing, what we are looking at, what or who we are interacting with, and what our preferences are, these virtual beings and assistants will become much better. Businesses will increasingly run on them, and your customers will choose businesses that better support their assistants. A "Hey Siri, can you get me some Chinese food for lunch?" type of query might soon decide that your Chinese restaurant is the one it'll bring thousands of customers to. Why you, rather than a competitor? Maybe you put your menu online, maybe you are better at social media, maybe you got better Yelp ratings, or maybe your staff is wearing Apple glasses and can answer queries for Apple customers faster, so Siri starts to prefer your business.

Put all of these together and you'll see a computing system that works the way you do with other people: by gesturing and touching, talking, and using your eyes to focus attention on things.

That said, a major way we'll navigate around is, well, by moving around, and there's some major new technology that's been developed for sensing where we are, and what surrounds us. You'll see versions of this technology used in robots, autonomous cars, and in Augmented Reality glasses.

Sensors and Mapping

We humans take moving around the real world for granted. It seems easy to us, something we've done ever since our parents bundled us up and brought us home as newborns.

Computers, though, couldn't understand the real world until recently. They didn't have "eyes" with which to see it and didn't have even a basic understanding of how to move around in it, that is, until the mobile phone came along and we started teaching them by building digital maps.

At first, those digital maps were mere lines where streets are, with a few dots of data along the street. Today, however, our glasses, our cars, and robots in the street are mapping that street out with billions of points of data, and now this same process is happening inside our factories, hospitals, shopping malls, and homes.

Photo credit: Luminar Technologies. Here, a street is mapped out in 3D by Luminar Technologies' solid-state LIDAR.

Mapbox, the map provider that hundreds of thousands of apps use, showed us a future of maps that only became possible once mobile phones were ubiquitous enough to gather huge amounts of data on every street. It showed us how it could build an entire map of a freeway, including lanes, from huge numbers of mobile check-ins. Every time you open Yelp or Foursquare, or use Snapchat, among other apps, Mapbox gets another little point of location data, and if you get enough of these points, which it calls "pings," a new kind of map becomes possible, thanks to enormous databases of all that data combined with Machine Learning that makes sense of the data streaming into its servers.
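
This is not Mapbox's method; it is a deliberately simple stand-in to show how lanes can emerge from pings alone. Assume each ping has already been projected onto the freeway as a lateral offset in meters from the road's edge (a hypothetical preprocessing step), histogram those offsets, and treat well-populated peaks as lane centers. The bin width, threshold, and sample data are illustrative.

```python
from collections import Counter

def estimate_lane_centers(lateral_offsets_m, bin_m=0.5, min_count=3):
    """Histogram lateral offsets and return the centers of well-populated peak bins."""
    bins = Counter(int(x // bin_m) for x in lateral_offsets_m)
    centers = []
    for b, count in sorted(bins.items()):
        if count < min_count:
            continue  # too few pings to trust; likely GPS noise
        # A peak is at least as busy as its left neighbor and busier than its right.
        if count >= bins.get(b - 1, 0) and count > bins.get(b + 1, 0):
            centers.append((b + 0.5) * bin_m)
    return centers

# Hypothetical pings: two clusters (two lanes) plus a couple of stray readings.
pings = [1.6, 1.7, 1.8, 1.9, 1.6, 1.7, 2.1,
         5.1, 5.2, 5.3, 5.4, 5.2, 3.4]
print(estimate_lane_centers(pings))  # [1.75, 5.25]
```

With millions of pings instead of a dozen, the peaks sharpen and the same idea can separate lanes that are only a few meters apart.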

Then there is data collected by cameras or new kinds of 3D sensors, like time-of-flight lasers, which measure the world very accurately by timing how long a beam of light takes to reflect off a surface and return to the sensor. The 3D sensor on the front of a modern iPhone is a related kind of depth sensor, though it works by projecting a pattern of infrared dots rather than by timing light. Today, Apple mostly uses that sensor for Augmented Reality avatars, or for face detection to unlock your phone, but in the future, those sensors will do a lot more.
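
The time-of-flight principle reduces to one line of arithmetic: light covers the distance twice, out and back, so distance = c × t / 2. A minimal sketch (the function names are ours) also shows why these sensors need extremely precise timing:

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458

def tof_distance_m(round_trip_s):
    """Distance to a surface from the round-trip time of a light pulse."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2  # halve: out and back

def timing_needed_s(depth_resolution_m):
    """Round-trip timing precision required to resolve a given depth difference."""
    return 2 * depth_resolution_m / SPEED_OF_LIGHT_M_PER_S

print(f"{tof_distance_m(20e-9):.3f} m")          # 2.998 m
print(f"{timing_needed_s(0.01) * 1e12:.1f} ps")  # 66.7 ps
```

Resolving depth to a centimeter means timing the echo to within roughly 67 picoseconds, which is why time-of-flight hardware is so specialized.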

A Tesla car, driving down the same street, images the street as a point cloud of 3D data, and on many streets a Tesla passes every few seconds, gathering even more data.

Soon every stop sign, tree, pole, lane marker, restaurant sign, and far less significant detail will be mapped by many companies. Apple alone drove four million miles with 360-degree cameras and 3D sensors in an attempt to make its maps better. Other companies have driven even further.

These early efforts to map the world are great, but the number of data points all these companies have collected is growing exponentially. The next frontier will be to map out everything.

Our friends who have worked at Uber tell us it is keeping track of the sensor data coming from its drivers' mobile phones. It can tell whether streets have a lot of potholes just because those drivers' phones are shaking. The auto insurance company Go says it has maps of which streets have more accidents than others. It and other companies are layering all sorts of things on top of the digital map, from sensor readings coming off of machines to other location data from various databases, similar to how Zillow shows you the quality of school districts on top of homes you might be considering buying.
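
A sketch of how crowd-sourced bump detection could work, assuming each hypothetical reading is (driver, road segment, vertical acceleration in m/s²) and that a jolt more than 3 m/s² away from gravity counts as a bump. Requiring several different drivers to hit the same spot is our illustrative way of filtering out noise; Uber's actual method isn't described here.

```python
from collections import defaultdict

GRAVITY_M_S2 = 9.81

def bumpy_segments(readings, threshold_m_s2=3.0, min_drivers=3):
    """Road segments where at least min_drivers distinct drivers felt a jolt."""
    drivers_by_segment = defaultdict(set)
    for driver_id, segment_id, vertical_accel in readings:
        # Subtract gravity so a phone riding over smooth road reads roughly zero.
        if abs(vertical_accel - GRAVITY_M_S2) > threshold_m_s2:
            drivers_by_segment[segment_id].add(driver_id)
    # Requiring several different drivers filters out one phone being dropped.
    return sorted(seg for seg, drivers in drivers_by_segment.items()
                  if len(drivers) >= min_drivers)

readings = [
    ("d1", "Main St 100-200", 14.2), ("d2", "Main St 100-200", 15.0),
    ("d3", "Main St 100-200", 13.5), ("d1", "Oak Ave 1-50", 9.9),
    ("d4", "Oak Ave 1-50", 16.0),  # one spike, maybe a dropped phone
    ("d2", "Oak Ave 1-50", 9.7),
]
print(bumpy_segments(readings))  # ['Main St 100-200']
```

Once flagged, each segment becomes one more layer sitting on top of the base map.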

Luminar Technologies' CEO, Austin Russell (whose company builds LIDAR sensors for autonomous cars), told us how his sensors work: they map out the real world with solid-state sensors that can see up to 250 meters away. The high-resolution point cloud they generate is processed by Artificial Intelligence that quickly identifies virtually every feature on the street. Other companies take that data and build high-resolution contextual maps that future cars can then use. Russell explained to us how these maps are nothing like the maps humans have used before. These maps, he says, aren't simple lines like you would see on your mobile phone, but a complete 3D representation of the street, or of the surroundings of wherever you are.
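
Each LIDAR return is essentially a distance plus the direction the beam was pointing. A minimal sketch of how a sensor's returns become a 3D point cloud, assuming angles in degrees and an x-forward, y-left, z-up convention (our choice; real sensors document their own frames and apply calibration):

```python
import math

def lidar_return_to_xyz(range_m, azimuth_deg, elevation_deg):
    """Convert one LIDAR return to x (forward), y (left), z (up) in meters."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    ground = range_m * math.cos(el)  # distance projected onto the horizontal plane
    return (ground * math.cos(az), ground * math.sin(az), range_m * math.sin(el))

def to_point_cloud(returns):
    """returns: iterable of (range_m, azimuth_deg, elevation_deg) tuples."""
    return [lidar_return_to_xyz(r, az, el) for r, az, el in returns]

# Three hypothetical returns: a car ahead, a pole to the left, a sign far off and slightly up.
cloud = to_point_cloud([(25.0, 0.0, 0.0), (8.0, 90.0, 0.0), (250.0, 0.0, 2.0)])
for point in cloud:
    print(tuple(round(c, 2) for c in point))
```

A real sensor produces hundreds of thousands of these points per second, and it is that cloud the AI then labels.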

The glasses of the future will do something very similar. Companies like the recently acquired 6D.ai had been using the cameras on mobile phones to build a crude (as of 2019) 3D map of the world. 6D.ai had been calling it an AR Cloud, but it is really a sheet of polygons stretched over every surface that a particular camera sees. AR Cloud is a new term that incorporates a technology called SLAM, which stands for Simultaneous Localization and Mapping. SLAM comes out of robotics research and is closely related to the techniques that let NASA's Mars rovers navigate the surface of Mars without humans being involved. Basically, it is how a robot can see the real world and move around in it. AR Cloud takes that further by providing a framework for where virtual items can be placed.

This is how Augmented Reality works at a base level. In order to put a virtual item onto the real world, you need a "digital twin" of that world. We don't like that term, because in reality, we see some places having dozens of digital twins. Times Square in New York, for instance, will be scanned by Apple, Facebook, and Microsoft, along with dozens of car companies and transportation companies, and eventually by robots from Amazon and other companies that will roll through there delivering food and products, each building its own digital twin.
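
Placing a virtual item with an AR Cloud comes down to coordinate frames. A minimal 2D sketch, assuming SLAM has already localized the device in a shared map (the hard part, not shown): the item is stored relative to an anchor in the map, and any device that knows its own pose in that map can work out where to draw it. Real systems use full 3D positions and orientations; `Pose2D` and the numbers here are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Pose2D:
    x: float
    y: float
    heading: float  # radians, counter-clockwise from the map's x-axis

    def transform(self, px, py):
        """Map a point from this pose's local frame into the map frame."""
        c, s = math.cos(self.heading), math.sin(self.heading)
        return (self.x + c * px - s * py, self.y + s * px + c * py)

    def inverse_transform(self, mx, my):
        """Map a point from the map frame into this pose's local frame."""
        dx, dy = mx - self.x, my - self.y
        c, s = math.cos(self.heading), math.sin(self.heading)
        return (c * dx + s * dy, -s * dx + c * dy)

# A shared anchor stored in the map, and a virtual item 1 m in front of it.
anchor = Pose2D(10.0, 0.0, math.pi / 2)
item_in_map = anchor.transform(1.0, 0.0)

# Any device that SLAM has localized in the same map can find the item.
device = Pose2D(10.0, -2.0, math.pi / 2)
item_for_device = device.inverse_transform(*item_in_map)
print(tuple(round(v, 3) for v in item_for_device))  # about 3 m straight ahead
```

Because the item is tied to the anchor rather than to any one device, every phone or pair of glasses that localizes against the same map sees it in the same real-world spot.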

This digital twin need not look anything like the real world to humans. At Niantic, the company behind Pokémon Go, Ross Finman's team has such a digital twin, containing only data about the places where people play games built on its platform: parks, shopping malls, sports arenas, that kind of thing. The data in its platform doesn't look like the real world to a human. Niantic's "map" then converts that data into contextual information about the real world. Is there water nearby? Is this a park where children play, or where adults hike? That data lets them build better games, by putting specific Pokémon characters near water, and others where there are, say, lots of forests, and their worldwide database is doubling in size every few months.

The holy grail, though, is to have a detailed map of every surface that surrounds a human. Factories like the ones Audi and Volkswagen run have already done just that. They used cameras and 3D sensors to build a high-resolution virtual version of their factory floors using a system from a company called Visualix, which tracks everything in the factory down to centimeter accuracy, its CTO Michael Bucko told us. Why? For two reasons: training and design.

The company can use that virtual factory floor to train employees on new jobs using VR headsets before they even see the real factory. It can also use the same virtual factory floor to work out how to redesign part of it to hold, for instance, a new set of robots, and to design workspaces for the humans who will need to interact with those robots. It won't stop there, either. Workers who wear Augmented Reality headsets will be able to get assistance while working on the real factory floor. Re'flekt has already built such a system for Audi workers. They can even leave videos on the real factory floor for the next shift, explaining how to use a new piece of equipment. This is especially useful if a worker is retiring and will be walking out the door with decades of knowledge about how that equipment actually works. These videos can be left on the real equipment by attaching them to the "digital twin," which actually lives in a database up in the cloud. To the human it looks real, but it is all virtual, and all enabled by sensors and cameras that have mapped out the factory floor.

Bucko says Visualix's hundreds of customers are using its SLAM mapping and positioning technology to build the foundation for Augmented Reality, as well as the digital twin that thousands of robots will use to navigate inside, say, a huge warehouse or retail store.

Your kitchen will soon be the same as that factory. The cameras on the front of your glasses will build a 3D map of your kitchen (Microsoft's HoloLens and even the $400 Oculus Quest have four cameras that constantly map out the room you are in at high resolution). This doesn't take your privacy away; it's simply how these devices work, so that you can play Virtual Reality games or use Augmented Reality. On the HoloLens, you sometimes even catch a glimpse of the polygon sheet it's producing on your walls, tables, and floor. It then uses that sheet of little triangles to put virtual items on top.
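
One common way to put a virtual item "on top" of that mesh is to cast a ray, for example from the headset along your gaze or a pointing hand, and find the first triangle it hits. A sketch using the standard Möller–Trumbore ray-triangle intersection test; the tiny two-triangle floor stands in for a real spatial mesh, and nothing here is the HoloLens API.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Moller-Trumbore: distance t along the ray to the triangle, or None."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle's plane
    inv = 1.0 / det
    tvec = sub(origin, v0)
    u = dot(tvec, p) * inv  # first barycentric coordinate
    if u < 0 or u > 1:
        return None
    q = cross(tvec, e1)
    v = dot(direction, q) * inv  # second barycentric coordinate
    if v < 0 or u + v > 1:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None  # ignore hits behind the ray origin

def place_on_mesh(origin, direction, triangles):
    """Nearest point where the ray meets the mesh, where a virtual item could sit."""
    best = None
    for tri in triangles:
        t = ray_triangle(origin, direction, *tri)
        if t is not None and (best is None or t < best):
            best = t
    if best is None:
        return None
    return tuple(o + best * d for o, d in zip(origin, direction))

# A 2 m x 2 m floor patch at y = 0, made of two triangles.
floor = [((-1, 0, -1), (1, 0, -1), (1, 0, 1)),
         ((-1, 0, -1), (1, 0, 1), (-1, 0, 1))]
print(place_on_mesh((0.0, 1.5, 0.0), (0.0, -1.0, 0.0), floor))  # (0.0, 0.0, 0.0)
```

A real room mesh has many more triangles, so engines typically add a spatial index to avoid testing every one, but the per-triangle test is the same.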

Today, we don't have enough bandwidth or power in our devices to upload that sheet to the cloud, but we can see how, in the future, you will want every room in your home mapped out this way, along with every place you typically use these devices in: your car, your office, even stores. Today, these maps, or sheets of polygons, are stored locally, partly for privacy reasons, but also partly because, again, the small batteries and processors inside just can't handle a lot of uploading and downloading and comparing of these sheets yet. That will start to change dramatically this year, as this process of seeing the real world and uploading and downloading a digital twin of it gets more efficient.

Visual mapping with cameras, LIDARs, and 3D sensors isn't all that's going on here, either.

Once these sheets of polygons, or even higher-resolution point clouds, hit a computer, you'll see systems that categorize this data. Mobileye showed what we mean at CES 2019. This autonomous car company, founded in Israel and now owned by Intel, not only builds a digital twin of the real world, but as users drive by, say, a restaurant, it ingests that data into its AI systems and categorizes it into a useful database that sits on top of the map. Now it knows where your favorite Chinese restaurant is. It also knows what is next to it. So, future drivers can say "navigate me to my favorite Chinese restaurant" and it will take them right there.

The dream is to go further than any of this, to a memory aid. After all, if these imaging and categorization systems are already able to map out where your favorite restaurants are, they can also do things like remember where you left your phone or your keys, even warning you if you walk away from a table in that restaurant without picking up your phone. They could say to you, "Hey dummy, you left your phone on the table inside the restaurant."
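
The bookkeeping behind such a reminder is simple once the hard parts, recognizing a phone in camera frames and knowing where you are, are solved. A sketch, assuming a hypothetical object detector that reports labels with 2D map positions, and a 5 m alert distance we picked for illustration:

```python
import math

class ObjectMemory:
    """Remembers where personal items were last seen and flags ones left behind."""

    def __init__(self, personal_items, alert_distance_m=5.0):
        self.personal_items = set(personal_items)
        self.alert_distance_m = alert_distance_m
        self.last_seen = {}  # label -> (x, y) in the map frame

    def observe(self, label, position):
        """Record a detection from the (hypothetical) object detector."""
        self.last_seen[label] = position

    def where_is(self, label):
        return self.last_seen.get(label)

    def left_behind(self, user_position):
        """Personal items last seen farther away than the alert distance."""
        return sorted(
            label for label in self.personal_items
            if label in self.last_seen
            and math.dist(self.last_seen[label], user_position) > self.alert_distance_m
        )

memory = ObjectMemory({"phone", "keys"})
memory.observe("phone", (2.0, 3.0))   # set down on the restaurant table
memory.observe("menu", (2.0, 3.0))    # seen, but not something you carry
memory.observe("keys", (19.0, 3.0))   # still with you near the door
print(memory.left_behind((20.0, 3.0)))  # ['phone']
```

Anything the glasses keep seeing near you gets its position refreshed, so only items that stop moving with you trigger the warning.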

Soon, these databases will remember everything in your world and will notice if you move things around, or where you left your keys. Why? Because these companies will face demand to build much more realistic Augmented and Virtual Reality experiences. Virtual beings will even sit on chairs with you in your kitchen and have conversations with you. To do that properly, they will need to be fully situationally aware, knowing not just that there's a chair there, but the context of that chair. After all, don't you behave differently when sitting in a chair at church, or school, or at a noisy bar? So, too, will the virtual beings in our future glasses.

That sensor and map data will lead into new computing architectures, too, particularly when paired with the high bandwidth of 5G and new Wi-Fi.

Computing Architectures

Computing Architectures have also gone through major paradigm shifts, and as we move into Spatial Computing, they will see another major shift: to decentralized systems. Databases that were once stored on a single server, and then on multiple ones, have moved to virtual Cloud servers. We saw that move first-hand working inside Microsoft and particularly Rackspace. When Rackspace started in the late 1990s, it installed servers in racks for customers. Today, those customers buy virtual servers that can be started up within seconds.

This move led to Amazon Web Services becoming the dominant way that start-ups build their infrastructure. Today, Amazon offers dozens of different kinds of virtualized servers, from databases to ones with beefy GPUs for image processing. It also went from having one big data center to having many, spread across regions around the world.

The goal is to get computing as close to users as possible. Some of that is for redundancy, yes: if a data center in one region fails, the others kick in and the service stays up. Today, though, as we move into Spatial Computing, there are a couple of other reasons: to reduce latency, and to reduce the cost of building systems that will handle very heavyweight workloads. See, we are still controlled by physics. A packet of data can't travel faster than the speed of light, and, in fact, it is slowed down by each piece of equipment it travels through.
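
To see why physics matters, a back-of-the-envelope sketch: light in optical fiber travels at roughly two-thirds of its speed in a vacuum, about 200 km per millisecond, before any switch or router adds its own delay. The distances and the optional per-hop delay below are illustrative assumptions, compared against the roughly 11 ms a 90 Hz headset has to draw each frame.

```python
FIBER_KM_PER_MS = 200.0       # light in optical fiber covers roughly 200 km per millisecond
FRAME_BUDGET_MS = 1000 / 90   # time per frame at an assumed 90 Hz refresh rate

def min_round_trip_ms(distance_km, hops=0, per_hop_ms=0.0):
    """Lower bound on round-trip time: fiber propagation plus per-hop equipment delay."""
    propagation = 2 * distance_km / FIBER_KM_PER_MS
    switching = 2 * hops * per_hop_ms  # each hop is crossed on the way out and back
    return propagation + switching

for label, km in [("nearby server", 10), ("regional data center", 500),
                  ("another continent", 12000)]:
    rtt = min_round_trip_ms(km)
    print(f"{label}: {rtt:.1f} ms ({rtt / FRAME_BUDGET_MS:.1f} frames at 90 Hz)")
```

A server on another continent costs many frames of delay from propagation alone, no matter how fast the last link to your glasses is.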

Plus, as we move our businesses from, say, things that work like e-commerce systems that serve web pages, to ones that look and work more like interactive video games, traditional infrastructure that's centralized will start to crack and break, not to mention becoming very expensive.

This is why many companies are moving their databases off Oracle, preferring instead to spread them across hundreds of virtualized servers, or even to use serverless architectures that let companies like Amazon or Microsoft handle all that infrastructure work.

Photo credit: Robert Scoble. A bunch of wires serving 5G radios in Las Vegas' T-Mobile Arena. Just a very small part of how Spatial Computing experiences will get to your glasses and mobile devices.

As we move to 5G, which gets that packet from a cell tower to your glasses or your phone with very little latency, the latency elsewhere in the system will become the bottleneck. Already, we are seeing new computing architectures that push the systems that draw polygons on your glasses' screen out to the edge of the network―in other words, on the glasses themselves or a box that's sitting in your home or office or somewhere else nearby.

But some data still needs to travel elsewhere. Think about playing a virtual shooting game in VR. If you are playing against a neighbor who lives on your street or in your apartment building, you might see everything slow down due to latency. Why? Well, that packet might need to travel from your home in, say, Mumbai, all the way to one of the mega-huge data centers in Las Vegas and back, passing through dozens of switches and routers, not to mention fiber or, worse, copper cable. Just because you have two-millisecond latency to your cell tower doesn't mean that you will have a good experience shooting at your neighbor in a video game, or collaborating with a coworker on a new product design.

As a result, businesses will increasingly be forced to buy cloud computing resources closer to the people playing the game. The standard architecture used by businesses is to spread data over three to ten regions, or data centers. That won't be enough, though, in this new highly interactive 5G-enabled Spatial Computing world. Packets getting to major data centers will still introduce too much latency and cost, because if everything is centralized, you need massive server power to deal with the loads that will soon come due to new customer demands like interactive VR experiences.

A new architecture is evolving that some are calling "fog." It takes a three-tier approach: "cloud" refers to massive data centers far away, "fog" to servers closer to you, and "edge" to servers that are built into your devices or sit very close to you in your home or office. Your main servers might be on Amazon or Microsoft Azure hundreds or thousands of miles away, which we will still call cloud, but a new set of smaller data centers will evolve to serve parts of cities, or neighborhoods. This new layer is called fog because it sits between users and the huge data centers that Amazon, Microsoft, IBM, and Google run for their cloud infrastructure, just as real fog sits between a real-world cloud (the huge data center) and the ground (for example, your computer). In this way, many packets won't travel far at all, especially if you are only trying to shoot your neighbor virtually. These new smaller servers, or fog servers, don't need to be massive machines, because they will support far fewer users. So, this three-tier approach will scale to many new application types.
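One way to picture the three tiers is as a placement decision made for each workload according to how much latency it can tolerate. The sketch below is a hypothetical policy, not a description of any vendor's platform: the tier names come from the text, but the round-trip figures are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    round_trip_ms: float  # hypothetical typical round trip from the user to this tier


# Ordered from closest to the user to farthest away. The latencies are illustrative only.
TIERS = [
    Tier("edge", 2.0),    # on the glasses, or a box in the home or office
    Tier("fog", 10.0),    # a small data center serving a neighborhood or part of a city
    Tier("cloud", 80.0),  # a massive regional data center far away
]


def place_workload(latency_budget_ms: float) -> str:
    """Return the most centralized tier whose round trip still fits the latency budget."""
    for tier in reversed(TIERS):
        if tier.round_trip_ms <= latency_budget_ms:
            return tier.name
    raise ValueError(f"no tier can meet a {latency_budget_ms} ms budget")


for task, budget_ms in [("render a frame", 5), ("resolve a shot at a neighbor", 20), ("nightly analytics", 1000)]:
    print(f"{task}: {place_workload(budget_ms)}")
```

Preferring the most centralized tier that still meets the budget keeps the smaller edge and fog servers free for latency-critical work; a production scheduler would also weigh load, cost, and where the data already lives.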

Businesses that come into Spatial Computing will need to build using this new three-tier approach, pushing as much computing power as possible out close to users. Older businesses will find that moving from a heavily centralized infrastructure, where everything lives in huge data centers, to a decentralized one will be culturally and financially challenging, but it must be done to support not just these new use cases, but also the many new users we expect (and the increasing demands on infrastructure we expect they will bring).

While that is all happening, we foresee the need for a new kind of infrastructure: content management systems. Designing standard business web pages that keep track of the images and text that need to be translated into local languages is fairly simple compared to managing, say, different Pokémon characters for each city around the world. We visited EchoAR to get a look at one of these new Spatial Computing management systems. They showed us how users could even change their avatars, and how the system would distribute those new avatars in real time to other gamers, or even to corporate users, around the world.
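The two jobs described here, serving a different 3D asset per city and pushing avatar changes to everyone in real time, can be sketched as a small in-memory store. Everything in this example (the class, its methods, and the asset paths) is hypothetical and is not EchoAR's API; a real system would replace the callback list with network connections to each client.

```python
from typing import Callable, Dict, List

Listener = Callable[[str, str], None]


class SpatialContentStore:
    """Hypothetical store for location-specific 3D assets and user avatars."""

    def __init__(self, default_asset: str) -> None:
        self.default_asset = default_asset
        self.assets_by_city: Dict[str, str] = {}
        self.avatars: Dict[str, str] = {}
        self.listeners: List[Listener] = []

    def asset_for(self, city: str) -> str:
        # Fall back to a global default when a city has no local variant.
        return self.assets_by_city.get(city, self.default_asset)

    def subscribe(self, listener: Listener) -> None:
        self.listeners.append(listener)

    def update_avatar(self, user_id: str, avatar_uri: str) -> None:
        self.avatars[user_id] = avatar_uri
        # Push the change to every subscribed client immediately.
        for listener in self.listeners:
            listener(user_id, avatar_uri)


store = SpatialContentStore(default_asset="characters/default.glb")
store.assets_by_city["Tokyo"] = "characters/tokyo.glb"
received = []
store.subscribe(lambda user, uri: received.append((user, uri)))
store.update_avatar("player42", "avatars/robot.glb")
print(store.asset_for("Tokyo"), store.asset_for("Lagos"), received)
```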

Add it all up and CIOs inside businesses will need to learn new systems and budget differently for the new workloads that are rapidly evolving.

Artificial Intelligence (Decision Systems)

AI has gone way beyond telling the difference between dogs and cats. Today's AI, in the form of Machine Learning and Deep Learning, and often with the aid of Computer Vision, is used for everything from building virtual cities, to driving autonomous vehicles and robots, to helping answer your emails.

All of these elements provide the situational "awareness" that is necessary for software running in a machine to present the appropriate images, and possibly accompanying sound, to the person who is viewing Virtual Reality or Augmented Reality experiences or riding in an autonomous vehicle.

Before we go deeper into how AI is generally used for Spatial Computing, let's define what AI, Machine Learning, Deep Learning, and Computer Vision are.

AI is the simulation of human intelligence using software and accompanying apps or machines. Machine Learning, which is sometimes not included as a branch of AI since it is seen as its least robust form, automates analytical model building by identifying patterns in data and making decisions based on those perceived patterns.

Deep Learning is a more robust Machine Learning technique that uses artificial neural networks with multiple hidden layers, sometimes hundreds of them. These networks can be trained in a "supervised" way, on large amounts of labeled data, or in an "unsupervised" way, on data that carries no labels, and they then make decisions. A multi-layered neural network is essentially a system of mathematical functions, stacked in layers, that work together to decide what pattern is present in a particular dataset.
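To make "layers" concrete, the plain-Python sketch below passes an input through two hidden layers and an output layer. Each layer multiplies its inputs by weights, adds biases, and applies a simple function. The weights here are hand-picked, hypothetical numbers; in real Deep Learning they are learned during training, and networks are vastly larger.

```python
import math


def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum of the inputs plus a bias, per neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def relu(values):
    return [max(0.0, v) for v in values]


def sigmoid(x):
    return 1 / (1 + math.exp(-x))


# Hypothetical weights and biases: (weights per neuron, bias per neuron) for each layer.
LAYERS = [
    ([[0.5, -0.2], [0.3, 0.8]], [0.0, 0.1]),   # hidden layer 1
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),   # hidden layer 2
    ([[1.2, -0.7]], [0.05]),                   # output layer
]


def forward(x):
    """Run the input through each hidden layer, then squash the output to a 0-1 score."""
    h = x
    for weights, biases in LAYERS[:-1]:
        h = relu(dense(h, weights, biases))
    out_weights, out_biases = LAYERS[-1]
    return sigmoid(dense(h, out_weights, out_biases)[0])


print(forward([1.0, 2.0]))
```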

If the Deep Learning is supervised, it uses labeled data as training inputs, that is, data that has been identified, collected, and labeled according to particular attributes, such as visual data labeled "car," "traffic sign," or "store front." The output of supervised Deep Learning is a determination of whether new data fed to it matches the training data in terms of those attributes, for example, whether a new image contains a car.
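The core idea of learning from labels can be shown without a neural network. The sketch below swaps in a much simpler supervised method, a nearest-centroid classifier: it averages the labeled examples for each label and then assigns new data to the closest average. The two features (an aspect ratio and a fraction of red pixels) and all the numbers are hypothetical.

```python
import math


def train_centroids(examples):
    """examples: list of (feature_vector, label). Returns a mapping label -> mean feature vector."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Return the label whose mean training example is closest to the new data."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


# Hypothetical labeled training data: (aspect ratio, fraction of red pixels).
training = [
    ([2.0, 0.1], "car"),
    ([2.2, 0.3], "car"),
    ([1.0, 0.8], "traffic sign"),
    ([0.9, 0.7], "traffic sign"),
]
centroids = train_centroids(training)
print(predict(centroids, [2.1, 0.15]), predict(centroids, [1.0, 0.9]))
```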

If the Deep Learning is unsupervised, there is no labeled training dataset and the outcomes are not known in advance. The system learns on its own, using its mathematical algorithms to find patterns, such as natural groupings, in the data. It is the unsupervised version of Deep Learning that has caught everyone's imagination. Both supervised and unsupervised Deep Learning are used for autonomous vehicles, but it is unsupervised learning that enables the vehicle to make a decision as to whether it should change lanes or suddenly stop. There is also reinforcement learning, which is neither purely supervised nor unsupervised: the system learns by trial and error, guided by rewards and penalties, and it is often combined with the other two approaches. Learning from experience in this way produces outcomes that closely mimic human decision-making.
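A classic example of finding structure without labels is k-means clustering. It is not Deep Learning, but it shows the unsupervised idea in a few lines: the algorithm is handed unlabeled points and a number of groups, and it discovers the groupings on its own. The points below are made up, and the initialization (taking the first k points) is a simplification chosen to keep the sketch deterministic.

```python
import math


def kmeans(points, k, iterations=10):
    """Group unlabeled points into k clusters by repeatedly moving cluster centers."""
    centroids = [list(p) for p in points[:k]]  # simple deterministic initialization
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            # Assign each point to its nearest current center.
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        for i, members in enumerate(clusters):
            if members:
                # Move each center to the mean of the points assigned to it.
                centroids[i] = [sum(coords) / len(members) for coords in zip(*members)]
    return centroids, clusters


# Unlabeled data: the algorithm is never told which points belong together.
points = [(0, 0), (10, 10), (0, 1), (1, 0), (10, 11), (11, 10)]
centroids, clusters = kmeans(points, k=2)
print(clusters)
```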

Computer Vision plays a very big part in both basic Machine Learning and Deep Learning. Analogous to human vision, it is the mechanism by which both digital images and real-life images are "sensed," with image attributes then sent to the learning mechanism, which in this case is Machine Learning or Deep Learning. When applied to digital images, Computer Vision is often called image processing. For instance, many image processing pipelines convert color images to grayscale, because this reduces the amount of data the system has to deal with during identification. If color is one of the attributes that needs to be categorized, it is recorded separately, for example by way of a digital tag. For real-life images, Computer Vision uses cameras that feed visual data through its algorithms, which identify the elements of the images and reduce everything to numerical data. That data can then be fed through supervised Machine Learning and Deep Learning algorithms, first as training data, if the Computer Vision algorithms have labeled it finely enough, or, otherwise, through unsupervised Deep Learning algorithms.
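The grayscale reduction mentioned above is easy to see in code. The sketch uses one common convention, the ITU-R BT.601 luma weights, which count green most heavily because the eye is most sensitive to it; other pipelines use different weights. Each pixel shrinks from three values to one.

```python
# ITU-R BT.601 luma weights (one common convention; others exist).
R_WEIGHT, G_WEIGHT, B_WEIGHT = 0.299, 0.587, 0.114


def to_grayscale(image):
    """image: rows of (r, g, b) tuples with 0-255 channels -> rows of 0-255 intensities."""
    return [[round(R_WEIGHT * r + G_WEIGHT * g + B_WEIGHT * b) for r, g, b in row] for row in image]


# A tiny 2x2 image: red, green, blue, and white pixels.
image = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (255, 255, 255)]]
gray = to_grayscale(image)

color_values = sum(len(row) * 3 for row in image)
gray_values = sum(len(row) for row in gray)
print(gray, f"{color_values} values reduced to {gray_values}")
```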

In Spatial Computing, AI algorithms coupled with Computer Vision are used extensively. It is not hard to understand why, since Spatial Computing often deals with first identifying and then predicting the movement of three-dimensional objects; it can even work to identify and predict what you, a robot, or an autonomous vehicle will do next, or where you or it will look, within a Spatial Computing environment. This is possible because AI algorithms, coupled with Computer Vision, produce a high level of situational "awareness" within the software that can then be used to make predictions about three-dimensional qualities and movements. New virtual beings, like "Mica," which Magic Leap demonstrated in 2019, can even walk around and interact with real and virtual items in your room, thanks to this technology.
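Predicting where a tracked object will be next can start from a very simple baseline: assume it keeps its most recent velocity. The sketch below does exactly that from two observed 3D positions. Real systems use far more sophisticated filters and learned models; this is only meant to show the shape of the problem, and the positions and timestamps are made up.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def predict_position(p0: Vec3, t0: float, p1: Vec3, t1: float, t: float) -> Vec3:
    """Extrapolate the position at time t, assuming the object keeps its latest velocity."""
    dt = t1 - t0
    velocity = tuple((b - a) / dt for a, b in zip(p0, p1))
    return tuple(p + v * (t - t1) for p, v in zip(p1, velocity))


# A person observed at two moments (meters, seconds); where will they be at t = 3 s?
print(predict_position((0.0, 0.0, 0.0), 0.0, (1.0, 2.0, 0.0), 1.0, 3.0))
```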

Drones and autonomous vehicles are other examples where digital situational "awareness" is necessary for Spatial Computing. For drones, the need for situational "awareness" goes beyond moving through space without colliding with other objects. Drones used for delivering packages, such as those Amazon is championing, as well as those used for warfare, have objectives that require identifying objects and predicting the correct trajectory, tasks that AI algorithms and Computer Vision can perform. Similar machines that benefit from AI systems include food and parcel delivery robots that travel on city streets, as well as robots used in manufacturing and logistics that operate in irregular environments.
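The most basic layer of that awareness, not colliding, can be sketched as a closest-approach check: given the current positions and velocities of a drone and an obstacle, when will they be nearest to each other, and how near? The sketch assumes both keep constant velocity, and the safety radius is a hypothetical value.

```python
import math

SAFETY_RADIUS_M = 2.0  # hypothetical minimum clearance for this drone


def closest_approach(drone_pos, drone_vel, obstacle_pos, obstacle_vel):
    """Return (time_s, distance_m) of closest approach, assuming both keep constant velocity."""
    rel_pos = [o - d for d, o in zip(drone_pos, obstacle_pos)]
    rel_vel = [ov - dv for dv, ov in zip(drone_vel, obstacle_vel)]
    speed_sq = sum(v * v for v in rel_vel)
    if speed_sq == 0:
        t = 0.0  # no relative motion, so the gap never changes
    else:
        # The gap |rel_pos + rel_vel * t| is smallest at t = -(rel_pos . rel_vel) / |rel_vel|^2;
        # clamp to 0 because a closest approach in the past does not matter.
        t = max(0.0, -sum(p * v for p, v in zip(rel_pos, rel_vel)) / speed_sq)
    gap = [p + v * t for p, v in zip(rel_pos, rel_vel)]
    return t, math.hypot(*gap)


# Drone flying along x at 1 m/s; a stationary obstacle sits 1 m off its path.
t, distance = closest_approach((0, 0, 10), (1, 0, 0), (5, 1, 10), (0, 0, 0))
if distance < SAFETY_RADIUS_M:
    print(f"Replan: obstacle passes within {distance:.1f} m in {t:.1f} s")
```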

Another example of a machine that benefits from AI systems is the autonomous vehicle. Smaller delivery robots and autonomous vehicles are similar in that both must seamlessly navigate city streets; however, the complexity of the rules of the road, the more sophisticated machinery, and the human payload warrant a much more robust Deep Learning system, one that incorporates Computer Vision and uses reinforcement learning.
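Reinforcement learning in its simplest form is a "multi-armed bandit": an agent repeatedly picks an action, receives a reward, and updates its estimate of how good each action is. The sketch below applies that idea to a made-up lane decision. The reward ranges are hypothetical stand-ins for what a simulator might assign; real driving systems learn over vastly richer states and actions.

```python
import random

ACTIONS = ["keep lane", "change lane"]
# Hypothetical reward ranges a simulator might assign to each action in one situation.
REWARD_RANGES = {"keep lane": (0.8, 1.2), "change lane": (0.0, 0.4)}


def learn_action_values(steps=200, epsilon=0.1, seed=0):
    """Epsilon-greedy bandit: mostly exploit the best-known action, sometimes explore."""
    rng = random.Random(seed)
    # Optimistic initial estimates push the agent to try every action at least once.
    estimates = {a: 5.0 for a in ACTIONS}
    counts = {a: 0 for a in ACTIONS}
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)               # explore
        else:
            action = max(ACTIONS, key=estimates.get)   # exploit
        reward = rng.uniform(*REWARD_RANGES[action])
        counts[action] += 1
        # Incremental sample average: nudge the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


estimates, counts = learn_action_values()
print(max(estimates, key=estimates.get), estimates, counts)
```

The agent is never told which action is correct; it discovers that keeping the lane pays off better by trying both and tracking the rewards.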

Beyond these physical machines, AI systems also power applications that integrate the virtual with the textual.

Machine Learning really started to hit its stride when it became the system of choice for running the user interface of bots: those text-based, mostly reactive "assistants" that companies in insurance, telecom, retail, entertainment, and other industries still use in their website direct messaging systems and for customer phone calls.

Virtual assistants, such as Siri, Amazon Alexa, and Google Assistant, are essentially voice-driven bots built into branded hardware, such as the iPhone, Amazon Echo, and Google Nest Hub. They become relevant to Spatial Computing when paired with devices such as AR glasses (to a lesser degree, they are already useful spatially when used together with a smartphone and a mobile AR app).

When Apple comes out with its AR glasses, we fully expect it to offer a version of Siri as the voice interface. In the future, we expect voice navigation and commands, paired with natural hand manipulation, to serve as computing's user interface.

Characters that embody a bot virtually are called synthetic or AI characters, though it will be a long while before these virtual characters truly have unsupervised Deep Learning capabilities. These synthetic characters can be made three-dimensional, and thus spatial, and can be used in VR as well as AR apps and experiences. Their uses go beyond entertainment into sales and marketing, training, customer relations, and other business uses: basically, anywhere an expert is needed to provide relevant information.

Further in the future, AI using unsupervised algorithmic systems could potentially create a culture and government for its own embodied bots, such as robots, which would ideally be in the service of humanity. These beings would be the ultimate in what Spatial Computing could bring. We choose to be optimistic about this possibility.

Back to the present. Since many tasks in Spatial Computing cannot be accomplished without the use of AI algorithms often coupled with Computer Vision, AI's impact on the business landscape cannot be overemphasized.

Evolution

We will be covering seven industry verticals in this book: Transportation; Technology, Media, and Telecommunications (TMT); Manufacturing; Retail; Healthcare; Finance; and Education. All of them are in the beginning stages of being transformed by AI-enhanced Spatial Computing. We will go into more detail on these transformations when we address each vertical in its own chapter.

The evolution of personal computing, from a text-based interface to the three-dimensional one of Spatial Computing, fulfills our need for the directness and ease that innovation brings. Spatial Computing's 3D interface jumpstarts a new, higher productivity curve.

The current technologies that fuel Spatial Computing will inevitably be enhanced and deepened with new technologies, all of which will take computing to new places that we cannot even imagine at this time.

In Part II, we provide you with a roadmap for the disruptions that Spatial Computing will cause in seven industries, starting with the transportation industry.