The New Civil War

In 1964, American 13-year-olds took the First International Math Study, ranking eleventh on a list of twelve countries.1

“Our Nation is at risk. Our once unchallenged preeminence in commerce, industry, science, and technological innovation is being overtaken by competitors throughout the world.”2 Those words were written in 1983 by an independent commission tasked with evaluating the current landscape of education in the United States and recommending a prescriptive path forward. The 65-page report gave a blistering account of America's eroding position in the global education race. It was laced with incendiary language intended to provoke response and action:

What was unimaginable a generation ago has begun to occur—others are matching and surpassing our educational attainments. If an unfriendly foreign power had attempted to impose on America the mediocre educational performance that exists today, we might well have viewed it as an act of war. As it stands, we have allowed this to happen to ourselves.... We have, in effect, been committing an act of unthinking, unilateral educational disarmament.3

At the time, this might have been perceived as a commission's flair for the dramatic (or a well-crafted piece of propaganda designed to convince then-President Ronald Reagan to abandon his plans to abolish the U.S. Department of Education4). However, according to a recent nonpartisan assessment of the topic, the “war” metaphor may have proven more prophecy than hyperbole. A 2012 Council on Foreign Relations report, U.S. Education Reform and National Security, warns that the country's anemic educational performance is a threat to its national security, stating, “Educational failure puts the United States' future economic prosperity, global position, and physical safety at risk.”5

Although the United States spends more on K-12 public education than many other countries, its results are not commensurate. When American 15-year-olds are compared with their peers in other industrialized countries, the United States ranks fourteenth in reading, twenty-fifth in math, and seventeenth in science. More than one-quarter of U.S. high school students fail to graduate in four years, a figure that approaches 40 percent among minorities. Fewer than a quarter of U.S. high school students meet college-ready standards. Even among college-bound seniors, fewer than half meet these standards, forcing more college students to take remedial classes. Adding to the problem, three-fourths of U.S. citizens aged 17–24 are not qualified to serve in the military because they are physically unfit, have criminal records, or lack the critical skills needed in modern warfare (including basic knowledge of world geography). The report goes on to say that this lack of preparedness threatens the United States on five national security fronts: economic growth and competitiveness, physical safety, intellectual property, U.S. global awareness, and U.S. unity and cohesion.6 Considering that the United States spends more than 5 percent of its GDP7 on readying tomorrow's workforce, one expects better than mediocrity.

In healthcare, a sector that exceeds 15 percent of GDP (making it the single largest component of U.S. GDP) and costs more than $7,000 per capita (the highest of any country in the world on both metrics8), one expects excellence. However, excellent is hardly the word to describe the current U.S. healthcare system, which spends nearly 2.5 times as much on health as the median country in the Organization for Economic Cooperation and Development (OECD), yet has fewer practicing physicians, nurses, and acute-care bed days per capita than that median country.9 The point is further accentuated when one considers that in almost all other advanced industrial countries, comparable healthcare spending covers virtually everyone, whereas the United States leaves nearly 50 million people uninsured.10 The trend shows no signs of abating: U.S. healthcare costs are expected to double over the next decade.11 A key contributor to spiraling healthcare costs is the American lifestyle, which has left at least one in three Americans obese, a condition that spawns other chronic diseases. According to David Squires, primary author of a Commonwealth Fund study on U.S. healthcare, the United States suffers from “a failure to effectively manage these chronic conditions that make up an increasing share of the disease burden.”12 With chronic diseases estimated to afflict more than six in 10 Baby Boomers by 2030, one can expect healthcare costs to continue to soar accordingly. The situation is exacerbated by a shortage of registered nurses that is projected to exceed 250,000 by 2025,13 the result of an aging nursing workforce combined with a deficit of qualified candidates entering the profession (partly a consequence of a lagging educational system that is failing to produce the next generation of employees).

Despite the obvious differences between education and healthcare as independent sectors of the economy, the similarities are compelling. Both critically serve the public at large. Both are significant beneficiaries of national investment and discourse. Both have characteristics similar to enterprises, yet they also serve the private market in unique ways—either by preparing tomorrow's employees or caring for today's workforce. Yet, neither is attaining the level of performance one would expect despite the level of spending and focus. What's more, both sectors possess long-term implications for the nation's ability to compete and cooperate on a world stage.

As in the private sector, evidence suggests that technology (or the lack of it) is already playing a role in shaping the current situation in the public sector. A 2006 study by authors at Johns Hopkins found the United States lagging as much as a dozen years behind other industrialized countries in healthcare information technology, a cornerstone of which is the electronic health record (EHR).14 Although the EHR can reduce costly errors and duplication, the United States trails its counterparts in EHR usage, a significant gap given the Commonwealth Fund's finding that Americans are more likely than people in other advanced nations to experience medical problems stemming from uncoordinated care.15 In education, the outlook is no better, with the United States falling behind other countries in equipping classrooms for twenty-first-century skill development. South Korea, which has the highest college attainment rate in the world, will phase out physical textbooks and replace them with digital versions by 2015. Even Uruguay, a developing country by comparison, provides a computer for every student.16

However, technology is hardly a panacea for the considerable issues facing citizens of this country. In fact, it has contributed to some of them. The Commonwealth Fund points to excessive use of high-priced technology (including MRIs and CT scans) as a major contributing factor to the United States' escalating healthcare costs. In education, the jury is still out on technology's impact on classroom learning, which depends largely on how instructors incorporate such technology into an integrated curriculum. When this point is overlooked, the results can backfire. For example, a national study in the 1990s found a negative relationship between how frequently students used school computers and their scholastic achievement.17

Yet, whatever the debate over technology's positive or negative contributions to these public sectors, technology is being adopted nonetheless. At home, students and patients are using it to further their academic pursuits and to manage complex healthcare conditions, respectively. Academics and clinicians are bringing personal technology into the workplace to serve their stakeholders. Institutions are leveraging the latest technology trends to address increasingly formidable challenges. Fortunately, there is much to be learned from these trailblazers about how to apply technology to some of the country's most daunting problems. As an example, consider how the healthcare field is tackling opportunities associated with big data. It turns out that Watson, IBM's artificial intelligence supercomputer, is capable of more than beating the toughest Jeopardy! champions or becoming the latest employee of financial services giant Citigroup; it is starting to make waves in healthcare. WellPoint Inc. plans to use Watson to monitor patients and offer support to physicians. According to WellPoint's CIO Andrew Lang, “We're the first to bring the Watson solution to the market, and our first focus is on a diagnosis and treatment for oncology. Then, we're moving both vertically and horizontally from that space to explore other partnerships with Watson and IBM.”18 Potential adjacencies for Watson include helping manage the complex and chronic conditions that have become the bane of the country's healthcare system. By ingesting a record of a patient's progress over time, Watson can use that history to drive continuity of care. As Lang states, “It would dramatically improve the health of our patients and improve the quality of physician treatments and diagnoses. Ultimately, through Watson, we want to reduce costs and promote best practices around diagnoses. We want to get better results while spending less money.”19

Indeed, big data in healthcare extends far beyond even the most interesting applications for Watson. According to IDC, the big data market is expected to grow from $30 billion in 2011 to close to $34 billion in 2012, in part because of increased use in the healthcare industry.20 This investment is more than offset by the potential benefits in reducing healthcare overhead, with administrative inefficiencies estimated to cost providers $100–$150 billion annually.21 The EHR is the centerpiece of the transformation, although over time it will be augmented with data from medical activities that overwhelmingly occur outside the doctor's office. For example, adherence to prescribed medication is of particular importance in managing disease. Unfortunately, studies show that fewer than half of Americans take their medications as prescribed. Noncompliance is blamed for 30–40 percent of hospital admissions for seniors over 65, roughly 125,000 deaths per year, and a healthcare tab of $290 billion annually.22 To address the need, a new category of pill bottle caps has entered the scene, complete with digital intelligence to track how long it has been since the bottle was last opened, alert patients with reminders when medication is due, and send weekly compliance reports to physicians over a wireless network. In independent studies in which such caps have been deployed, patients using the technology showed nearly a 34 percent increase in compliance with dosage instructions.23 Such ongoing data can be used to monitor and chart a patient's progress in managing stubborn chronic diseases during daily life, creating a more holistic and accurate view of that patient's unique medical history.
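The cap behavior described above (tracking time since the last opening, reminding the patient when a dose is due, and producing a weekly compliance report) can be sketched in a few lines. This is a hypothetical model for illustration only, not any vendor's firmware or API: the class name `SmartCap`, the fixed once-a-day dosing interval, the one-hour grace window, and the definition of compliance as the share of scheduled doses taken within that window are all assumptions.

```python
from datetime import datetime, timedelta


class SmartCap:
    """Hypothetical model of a connected pill-bottle cap (illustrative only)."""

    def __init__(self, start, dose_interval=timedelta(hours=24),
                 grace=timedelta(hours=1)):
        self.start = start                  # when the dosing schedule began
        self.dose_interval = dose_interval  # assumed fixed interval between doses
        self.grace = grace                  # assumed tolerance around each due time
        self.openings = []                  # timestamps when the cap was opened

    def record_opening(self, when):
        self.openings.append(when)

    def time_since_last_opening(self, now):
        # None if the bottle has never been opened
        if not self.openings:
            return None
        return now - max(self.openings)

    def reminder_due(self, now):
        # Remind once a full dosing interval has passed since the last opening
        elapsed = self.time_since_last_opening(now)
        if elapsed is None:
            return now >= self.start
        return elapsed >= self.dose_interval

    def compliance(self, period_start, period_end):
        # Share of doses scheduled in [period_start, period_end) that were
        # taken (cap opened) within the grace window around their due time
        due = self.start
        scheduled = taken = 0
        while due < period_end:
            if due >= period_start:
                scheduled += 1
                if any(abs(t - due) <= self.grace for t in self.openings):
                    taken += 1
            due += self.dose_interval
        return taken / scheduled if scheduled else None

    def weekly_report(self, week_start):
        # The summary a physician might receive once a week
        week_end = week_start + timedelta(days=7)
        return {"week_of": week_start.date().isoformat(),
                "compliance": self.compliance(week_start, week_end)}


# Usage: a once-daily 8:00 schedule where the patient skips one day out of seven
cap = SmartCap(datetime(2012, 1, 2, 8, 0))
for day in range(7):
    if day != 3:
        cap.record_opening(datetime(2012, 1, 2, 8, 15) + timedelta(days=day))
print(cap.weekly_report(datetime(2012, 1, 2, 8, 0)))
```

Treating "the cap was opened near the due time" as "the dose was taken" is the simplifying assumption that makes such devices cheap; it cannot tell whether the pill was actually swallowed.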

In education, big data is giving academics similar visibility into each student's distinctive learning approach. In 2011, Stanford University placed three computer science courses online and embarked on an unprecedented study of how students learn. Although other studies have tackled the knotty topic, they have done so by using small groups of students and comparing results across classrooms, leading to uncertain and delayed research conclusions. The Stanford experiment is revolutionizing the approach by studying the real-time mouse clicks and interactions of 20,000 online students. According to Daphne Koller, a professor at the Stanford Artificial Intelligence Laboratory, “If 5,000 people had the same wrong answer, it's obvious a concept is not getting through, and you have a clear path that shows where students went wrong.”24

Beyond merely detecting where a curriculum may be missing its objective, big data can be used to tailor educational content to each student's specific learning needs. In 2011, the Bill and Melinda Gates Foundation provided $1 million to launch an ongoing data mining effort involving a half dozen online universities. The project has produced a database measuring 33 variables for the online coursework of 640,000 students, tracking student performance and retention across a broad range of demographic factors. In a surprising break from conventional wisdom, early findings suggest that at-risk students do better if they ease into online education with a small number of courses, as opposed to the full course load required for maximum federal aid. The data set has the potential to yield tremendous insight into the learning patterns of small subgroups, such as targeted minorities. Although the study is far from complete, it is already being heralded as a potential Match.com for aspiring college students. According to Sebastian Diaz, the project's senior statistician, “Rather than just going on rankings done by a particular news agency, [students and parents] could really look at tailoring which institution provides the best fit for a particular individual student.”25 Lest one believe that big data's role in education is limited to higher education, where online coursework is the norm, consider that 40 states have virtual schools or state-led initiatives. In fact, 30 states and Washington, DC, are home to statewide full-time online schools, which generate millions of mouse clicks and interactions that can be studied to understand how younger students learn and to modify or personalize curriculum accordingly.26

Yet, big data is only as useful as it is available. In both healthcare and education, the data tsunami is fueled by Internet-connected devices and the clouds connecting them. According to CompTIA's 2011 Third Annual Healthcare IT Insights and Opportunities study, the prevalence of mobile technology among practitioners is rivaled only by its adoption by their patients. One-quarter of healthcare providers currently use tablets in their practice. Another 21 percent expect to do so in the next year. More than half already use a smartphone for work purposes. Perhaps most telling, two-thirds of healthcare providers say that implementing or improving their use of mobile technologies ranks as a high- or mid-level priority over the next 12 months.27

These numbers point to additional opportunities for cloud-based solutions, where the demand for immediate and simple access to data stimulates appetite for centralized IT services. The same trends are also creating a bevy of new challenges for healthcare providers. Although more than eight in 10 healthcare providers use mobile devices to collect, store, and/or transmit some form of personal health information (PHI), nearly half admit to not taking proper steps to secure their devices.28 Some industry experts predict an increase in the number of class-action lawsuits in 2012 as a result of PHI violations. As such, although mobile technology is on the rise, many healthcare providers remain cautious about BYOD policies. In the 2012 Alcatel-Lucent study, healthcare organizations were among the most aggressive in rejecting BYOD outright: two in five healthcare organizations prohibit the use of personal technology in the workplace, compared with just one in five education respondents. Among the top reasons cited for a more cautious BYOD approach was fear of viruses and malware infiltrating the network, of particular importance given security concerns surrounding electronic protected health information (EPHI). According to at least one expert, “With EPHI being accessed from a multitude of mobile devices, risks of contamination of systems by a virus introduced from a mobile device used to transmit EPHI significantly increase. Mobile devices and BYOD policies leave a healthcare organization open to potential data breaches.”29 These concerns add pressure on the IT function in healthcare organizations, whether or not a policy outright forbids the use of personal technology in the workplace.
According to the 2012 Alcatel-Lucent study, more than two in five healthcare IT respondents report being contacted more often by their internal customers because employees who bring personal technology into the workplace run into problems using it for work purposes (compared with just one in five education IT respondents).

Although educators are far more permissive with BYOD than their healthcare counterparts, they face a different, and equally unenviable, set of challenges. Mobile technology is certainly popular among educators. In the 2012 Alcatel-Lucent study, educators are more likely than respondents in other sectors to own tablets (61%), use a personal 4G/LTE mobile device for work purposes (31%), and pay out of their own pockets for cellular data services (3G/4G) used for work (21%). Although educators' fervent adoption of mobility is best explained by the changing complexion of their students, most of whom come equipped with a sophisticated mobile device perennially attached to their hips, mobility in the classroom suffers from a lack of compelling evidence about its effective use in the curriculum. Compounding the problem is the mismatch between the time required to form an informed opinion about a technology's impact on learning and the speed with which the technology itself evolves. Soon after the iPad was introduced, enthusiastic schools began permitting the device in classrooms and learning environments, despite a lack of evidence to justify its acceptance. Interestingly, schools had rallied behind laptops in much the same way just a few years earlier. In fact, a 2010 study by Project RED, a research initiative linked with the One-to-One Institute (which supports one-to-one laptop initiatives in K-12 schools), found that most schools that had integrated laptops and other digital learning tools into learning were not using the technology to its full potential.30 But despite the challenges, there is hope for educators overwhelmed by technology options that seem to germinate daily.
As more sophisticated data analysis accompanies the deluge of mouse clicks and keyboard entries inside and outside the classroom, educators' understanding of technology's role in the learning process can begin to catch up with the pace of technological change itself. Until then, educators will rely on increasingly sophisticated mobile device management capabilities that can be incorporated into the classroom, at least giving instructors insight into whether such devices are aiding or hindering a legitimate learning environment.

These advanced mobile and cloud management tools may not be far from availability, if collaboration solutions in the classroom are any indicator. Just a few years ago, teachers were struggling with whether to incorporate social networking tools in their instruction. Although Facebook is the seemingly unstoppable social networking juggernaut among consumers, it lacks the management oversight teachers need to keep students focused on coursework. Enter a new range of collaboration tools purpose-built for teachers striving to incorporate interactive learning while protecting the integrity of their curriculum. One such solution is Gaggle, which allows students to join only at the invitation of teachers, does not allow students to have private conversations, and has filters to detect inappropriate language, bullying references, and pornography. Gaggle and its ilk address concerns that have plagued teachers since social networking became unavoidable in the classroom. Nancy Willard, the executive director of the Center for Safe and Responsible Internet Use, comments that, although these academic interactive environments look and feel reminiscent of Facebook, “... the emphasis is different. You're not trying to pick someone up for a date. This is where you're trying to focus on ‘Romeo and Juliet.’”31

Moreover, many of these academically tailored solutions offer far more than simple collaboration, including cloud-based storage and multimedia capabilities. For teachers interested in having students collaborate with experts and instructors across the globe, Skype offers a classroom-tailored service. Through a variety of educational partnerships, Skype provides a way to search for and connect instructors and experts with classrooms around the world. Penguin Young Readers Group connects authors with students for discussions about books and writing, whereas the New York Philharmonic offers interactions with esteemed musicians.32 Indeed, Skype has permeated the classroom, with educators in the 2012 Alcatel-Lucent study among its heaviest users. Educators also value videoconferencing outside classroom instruction, as a tool for interacting with their peers. In the 2012 Alcatel-Lucent study, when educators were asked to choose which statement came closer to their view:

- Videoconferencing is better than audioconferencing since nonverbal communication is not sacrificed and richer interactions can result;

or

- Audioconferencing is better than videoconferencing because it allows employees to multitask through otherwise mundane meetings;

more than two-thirds selected the first option, the highest of any professional segment tested.

In contrast, healthcare providers had among the lowest preferences for videoconferencing, with only a slight majority (59%) preferring it to audioconferencing. Perhaps the lower appetite has something to do with the nascent state of telemedicine, especially compared with the relatively booming online education market. According to the CompTIA study, only 14 percent of healthcare professionals report actively following news and trends in telemedicine, for which patient consultation via videoconferencing is seen as a primary benefit. Only one in 10 healthcare providers intends to use videoconferencing for patient interaction in the next 12 months,33 which may help explain why the technology was rated so poorly as a means of employee engagement in the 2012 Alcatel-Lucent study. Yet the subdued interest may be more a function of onerous or ill-fitted videoconferencing solutions on the market today than of a lack of demand. When asked about a service that would seamlessly engage multiple individuals in a high-definition video and collaboration conference through any device (one in which participants control their virtual environment with simple hand gestures and annoying background noises are effortlessly muted), healthcare professionals were the most enthusiastic cohort. Specifically, more than three in 10 indicated that they would be very likely to use such a service if it were made available. With $1 billion in annual federal support to study telemedicine and its benefits, and nearly one-fifth of Americans living in areas where primary care physicians are scarce, telemedicine may finally be due for a surge.
Still, critics question whether doctors will be able to detect subtle cues via a remote, albeit high-definition, connection that sacrifices the sense of touch.34 In yet another demonstration of how these technology trends are intertwined, perhaps this sensory gap can be offset by a wealth of big data that tracks a patient's progress as never before.

Indeed, whether one explores big data, cloud, mobility, or collaboration, the inextricable connection among these technologies yields an effect in which the whole is greater than the sum of the parts. When dealing with some of the most formidable challenges facing the nation, such synergies are welcome ammunition in a metaphorical war. However, although healthcare and education are taking similar shots in the court of public opinion, there are profound differences in how each sector will embrace technology's role in addressing its unique challenges:

- A new power relationship is emerging in healthcare, with technology at the center: Advanced technology has been a leading contributor to exorbitant healthcare costs. However, the emerging healthcare consumerism trend seeks to redefine the relationships among patients, clinicians, and insurance providers. Among other goals, the movement seeks to put more information in the hands of patients to help them make informed decisions about providers, insurance coverage, and treatment. It seeks to give patients full transparency into their own medical records so that errors, omissions, or derogatory information can be quickly resolved. At its most advanced stage, healthcare consumerism seeks to empower patients with personalized development plans and corresponding incentives designed to change lifestyle, another major contributor to escalating healthcare expenditures. This power shift, which transforms a patient from a passive observer of his healthcare plan into an active partner with those who serve him, will be enabled by affordable and pervasive technology options (including telemedicine and remote monitoring capabilities) that can have a long-term impact on lifestyle. When coupled with financial incentives, the potential to reduce bloated healthcare costs is profound. In a six-month experiment involving 139 patients with hypertension, researchers measured the effectiveness of rewards linked to a connected pill bottle cap. Participants in the experimental group received up to $90 if they adhered to their medication schedule at least 80 percent of the time. The difference in compliance between this cohort and the control group (which received neither the connected pill bottle cap nor an incentive) was significant: those in the control group were compliant 71 percent of the time, whereas those in the experimental group met their schedules 99 percent of the time.
Such a variation could yield significant healthcare savings when extrapolated to the broader population of chronic disease sufferers.

Beyond the potential cost savings, patients are ready to be empowered. According to a 2012 Deloitte study of 4,000 consumers, 44 percent report being very interested in videoconferencing for follow-up visits, and 41 percent are eager to try self-monitoring devices that send electronic readings to doctors over the network.35 Despite the enthusiasm among early adopters, consumers and clinicians who are unwilling to experiment with such innovative technologies share the same primary concern: protecting the security of personal health information. However, given the surprising number of clinicians who already fail to protect this information on their mobile devices, security concerns will likely not be the impediment to adoption. Instead, advanced mobile data management and cloud-based solutions will increasingly be relied on to mitigate the data risks created by these same clinicians, for all their professed concern about security.

- Educators will experiment, demanding a new relationship between IT and HR: With administrators leading the way, technology will play an increasingly important role in creating new learning environments. Instructors are following suit, driven by the need to adapt to changing student expectations. However, a lack of training for instructors will continue to limit the effectiveness of technology in and beyond the classroom, leading to results that fall short of the potential benefits. This is, at least in part, due to the velocity of technology adoption, driven by newer crops of more advanced digital natives entering the classroom. As a result, academics will place greater demands on IT professionals. In the 2012 Alcatel-Lucent study, only 35 percent of education respondents indicate that the training provided when their organization introduces new technologies is more than adequate or sufficient (compared with half of healthcare respondents). One in five educators rates their training as subpar or poor.

At the same time, IT professionals in this sector are challenged to keep up with the pace of technology while keeping an eye on essential IT concerns. Nearly one-third cite their biggest challenge as the move toward cloud-based services, which creates new security, reliability, and performance challenges for IT to manage (the highest percentage of IT respondents of any industry tested). However, IT is also stepping up its response and embracing other trends in the organization, including consumerization, with a welcoming attitude. Nearly half agree that employees' bringing personal technology into the workplace has allowed the IT function in their organization to become more strategic, no longer encumbered with purchasing and maintaining hardware for employees. Their internal customers appear to agree: 60 percent of educators indicate that IT equips them with innovative tools, services, or capabilities that foster a healthy culture. This is particularly important given that one in five educators states that their organizational culture is due for serious change, making educators the most eager cohort in support of cultural reform.

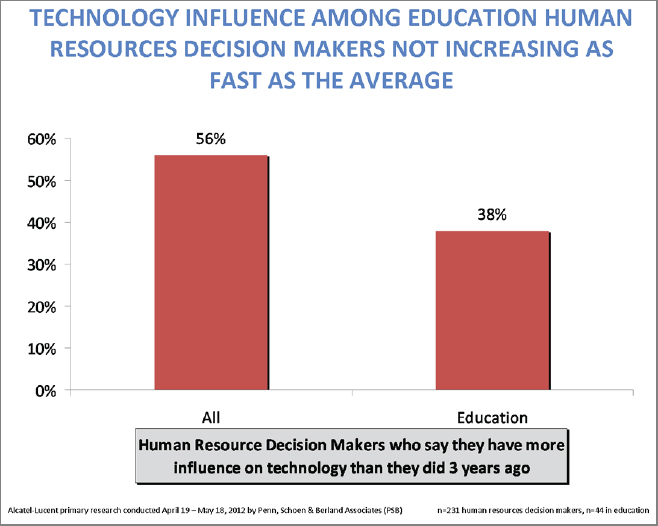

Finally, while educators appear to respect the importance of technology within their organizations (with executives driving nearly half of deployment decisions, compared with fewer than one-third on average across all industries in the Alcatel-Lucent study), much work remains to be done in tightening the critical connection between HR and IT. Teachers are increasingly accepting technology's role in curriculum and professional development. According to a 2011 Project Tomorrow study of more than 36,000 K-12 teachers nationwide, one-third of teachers are involved in an online professional learning community (compared with just one in five in 2007). Nearly two-thirds of instructors regularly download music for classroom use (up from under 40% in 2007). However, many still crave resources and training on how to incorporate digital resources in the classroom more effectively. More than half of teachers want access to an online collection of vetted, content-specific courses to help them incorporate digital content into their learning environment. Nearly 40 percent want face-to-face professional development for the same purpose.36 Despite teachers' accelerating adoption and the gaps that remain in making them fully comfortable with new learning environments, HR professionals appear to be underestimating their own role. According to the 2012 Alcatel-Lucent study, more than 50 percent of HR professionals in the education sector admit that their influence over technology decisions has not changed in the past three years. Strikingly, close to 10 percent admit that their influence has actually decreased over that period. To ensure that technology is not underutilized in and beyond the classroom, IT and HR must work cooperatively to equip instructors with both tools and training.

Education and healthcare are the critical engines of citizen welfare in this country. And for all that has changed in each of these sectors over the past several decades, much remains the same. Each finds itself the subject of discourse, even criticism, as a beneficiary of significant taxpayer investment. Each is struggling to prove its value as the United States continues to be outranked by other countries that are getting better results with less money. Yet the United States isn't so much competing with other countries as it is competing with itself. Take those mediocre scores in education as an example. According to Andreas Schleicher, the head of education indicators and analysis for the Organization for Economic Cooperation and Development, from a statistical perspective, “There is no decline on any measure that we have for the United States.” Instead, he says, the issue is that “the rate of improvement in other countries, in terms of getting more people into school and educating them well, is steeper.”37 Other countries are raising their game, and there is much the United States can learn from their success, while acknowledging the unique cultural nuances that set each country apart. However, becoming infatuated with the latest ratings and rankings, whether in education, healthcare, or other global indicators, can be counterproductive if one assumes that other countries are the competition when, in fact, the battle begins at home.

Education and healthcare are not the only sectors in the spotlight when comparing the United States with its global peers. A 2011 report by the Information Technology and Innovation Foundation ranked countries on the basis of their innovation. It states, “It is worth reiterating that in 2000 the United States ranked first, a position it likely held for the majority of the post-war period, but in a decade it has fallen to fourth. At this rate, where will the United States rank at the end of the next decade?” Such findings are bound to provoke a response, and President Obama issued just such a challenge in his 2011 State of the Union address: “We need to out-innovate, out-educate, and out-build the rest of the world.”38

Yet this new arms race is not without its critics, who are quick to point out that the United States operates as part of a global economy, not as an island deserving of wartime preservation. In fact, they cite evidence of a slightly negative correlation between R&D investment and GDP growth. They make the case that a rising tide lifts all boats and that the United States will benefit from developments made abroad. In his article “No One Can Win the Future,” Konstantin Kakaes argues that although Democrats and Republicans agree on the question “How can America compete with the rest of the world, especially China?” it is the wrong question to start with. He submits:

Imagine that someone in a lab in China cured cancer tomorrow. Technology spreads quickly—that cancer cure would be applied in the United States (and throughout the world) with tremendous benefit for human welfare. In no way would we be worse off. Sure, a Chinese pharmaceutical company would make money. But so would the pharmacist who sold the drug in the United States and the doctor who prescribed it. American researchers would build on the Chinese discovery. American workers would be more productive, and American families would have their sarcoma-ridden loved ones' futures restored to them. The money made by the notional Chinese pharmaceutical firm is inconsequential compared with the worldwide effect of the miracle cure.39

So, although the United States will continue to fight to regain its status as a globally recognized innovative bellwether, perhaps there is relief, rather than anxiety, in knowing that other countries are seeking the same.