Chapter 13

Troubleshooting Multicast

This chapter covers the following topics:

IGMP Protocol Operation

IGMP Configuration and Verification

PIM Protocol Operation

PIM Configuration and Verification

Multicast and Virtual Port-channels (vPC)

Ethanalyzer Examples For Multicast

Multicast traffic is found in nearly every network deployed today. The concept of multicast communication is easy to understand: a host transmits a message that is intended for multiple recipients. Those recipients are enabled to listen specifically for the multicast traffic of interest and ignore the rest, which supports the efficient use of system resources. However, bringing this simple concept to life in a modern network can be confusing and is often misunderstood. This chapter introduces multicast communication using Cisco NX-OS. After discussing the fundamental concepts, it presents examples that demonstrate how to verify that the control plane and data plane are functioning as intended. Multicast is a broad topic, and including an example for every feature is not possible. The chapter primarily focuses on the most common deployment options for IPv4; it does not cover multicast communication with IPv6.

Multicast Fundamentals

Network communication is often described as being one of the following types:

Unicast (one-to-one)

Broadcast (one-to-all)

Anycast (one-to-nearest-one)

Multicast (one-to-many)

The concept of unicast traffic is simply a single source host sending packets to a single destination host. Anycast is another type of unicast traffic, with multiple destination devices sharing the same network layer address. The traffic originates from a single host with a destination anycast address. Packets follow unicast routing to reach the nearest anycast host, where routing metrics determine the nearest device.

Broadcast and multicast both provide a method of one-to-many communication on a network. What makes multicast communication different from broadcast communication is that broadcast traffic must be received and processed by each host that receives it. This typically results in using system resources to process frames that end up being discarded. Multicast traffic, in contrast, is processed only by devices that are interested in receiving the traffic. Multicast traffic is also routable across Layer 3 (L3) subnet boundaries, whereas broadcast traffic is typically constrained to the local subnet. Figure 13-1 demonstrates the difference between broadcast and multicast communication behavior.

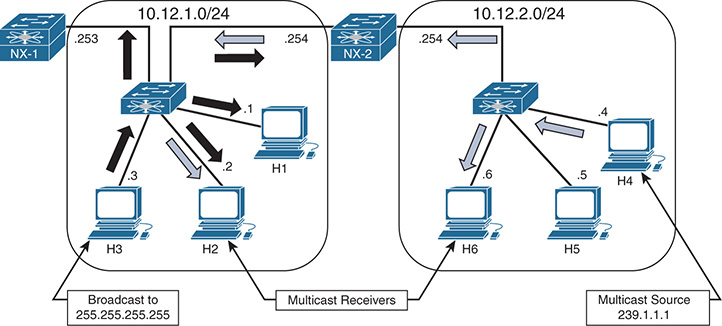

Figure 13-1 Multicast and Broadcast Communication

NX-2 is configured to route between the two L3 subnets in Figure 13-1. Host 3 sends a broadcast packet with a destination IP address of 255.255.255.255 and a destination MAC address of ff:ff:ff:ff:ff:ff. The broadcast traffic is represented by the black arrows. The L2 switch floods the broadcast packet out all ports, and every device in the 10.12.1.0/24 subnet receives it. Host 1 is the only device running an application that needs to receive this broadcast; receiving the packets on every other device results in wasted bandwidth and packet processing. NX-2 receives the broadcast but does not forward the packet to the 10.12.2.0/24 subnet. This behavior limits the scope of communication to devices that are within the same broadcast domain or L3 subnet. Figure 13-1 demonstrates the potential inefficiency of using broadcasts when certain hosts do not need to receive those packets.

Host 4 is sending multicast traffic, represented by the white arrows, to the group address 239.1.1.1. The L2 switch handles these multicast packets differently and floods them only to Host 6 and to NX-2, which is acting as an L3 multicast router (mrouter). NX-2 performs multicast routing and forwards the traffic to the L2 switch, which finally forwards the packets to Host 2. NX-1 does not receive the multicast traffic because the L2 switch does not consider it to be an mrouter. If NX-1 were reconfigured as a multicast router with interested receivers attached, it would receive the packet and multicast route it again toward its receivers on other subnets. This hypothetical behavior of NX-1 demonstrates that the scope of a multicast packet is limited by the time to live (TTL) value set in the IP header by the multicast source, not by an L3 subnet boundary as with broadcasts. Scope is also limited by administrative boundaries, access control lists (ACLs), or protocol-specific filtering techniques.

Multicast Terminology

The terminology used to describe the state and behaviors of multicast must be defined before diving further into concepts. Table 13-1 lists the multicast terms, with the corresponding definitions used throughout this chapter.

Table 13-1 Multicast Terminology

| Term | Definition |
|---|---|
| mroute | An entry in the Multicast Routing Information Base (MRIB). Different types of mroute entries are associated with the source tree or the shared tree. |
| Incoming interface (IIF) | The interface of a device on which multicast traffic is expected to be received. |
| Outgoing interface (OIF) | The interface of a device out of which multicast traffic is expected to be transmitted, toward receivers. |
| Outgoing interface list (OIL) | The OIFs on which traffic is sent out of the device toward interested receivers for a particular mroute entry. |
| Group address | Destination IP address for a multicast group. |
| Source address | The unicast address of a multicast source. Also referred to as a sender address. |
| L2 replication | The act of duplicating a multicast packet at the branch points along a multicast distribution tree. Replication for multicast traffic at L2 is done without rewriting the source MAC address or decrementing the TTL, and the packets stay inside the same broadcast domain. |
| L3 replication | The act of duplicating a multicast packet at the branch points along a multicast distribution tree. Replication for multicast traffic at L3 requires PIM state and multicast routing. The source MAC address is updated and the TTL is decremented by the multicast router. |
| Reverse Path Forwarding (RPF) check | Compares the IIF for multicast group traffic to the routing table entry for the source IP address or the RP address. Ensures that multicast traffic flows only away from the source. |
| Multicast distribution tree (MDT) | Multicast traffic flows from the source to all receivers over the MDT. This tree can be shared by all sources (a shared tree), or a separate distribution tree can be built for each source (a source tree). The shared tree can be one-way or bidirectional. |
| Protocol Independent Multicast (PIM) | Multicast routing protocol that is used to create MDTs. |
| RP tree (RPT) | The MDT between the last-hop router (LHR) and the PIM RP. Also referred to as the shared tree. |
| Shortest-path tree (SPT) | The MDT between the LHR and the first-hop router (FHR) connected to the source. Typically follows the shortest path as determined by unicast routing metrics. Also known as the source tree. |
| Divergence point | The point where the RPT and the SPT diverge toward different upstream devices. |
| Upstream | A device that is relatively closer to the source along the MDT. |
| Downstream | A device that is relatively closer to the receiver along the MDT. |
| Sparse mode | Protocol Independent Multicast Sparse mode (PIM SM) relies on explicit joins from a PIM neighbor before sending traffic toward the receiver. |
| Dense mode | PIM Dense mode (PIM DM) relies on flood-and-prune forwarding behavior. All possible receivers are sent the traffic until a prune is received from uninterested downstream PIM neighbors. NX-OS does not support PIM DM. |
| Rendezvous point (RP) | The multicast router that is the root of the PIM SM shared multicast distribution tree. |
| Join | A type of PIM message, but more generically, the act of a downstream device requesting traffic for a particular group or source. This can result in an interface being added to the OIL. |
| Prune | A type of PIM message, but more generically, the act of a downstream device indicating that traffic for the group or source is no longer requested by a receiver. This can result in the interface being removed from the OIL if no other downstream PIM neighbors are present. |
| First-hop router (FHR) | The L3 router that is directly adjacent to the multicast source. The FHR performs registration of the source with the PIM RP. |
| Last-hop router (LHR) | The L3 router that is directly adjacent to the multicast receiver. The LHR initiates a join to the PIM RP and initiates switchover from the RPT to the SPT. |
| Intermediate router | An L3 multicast-enabled router that forwards packets for the MDT. |

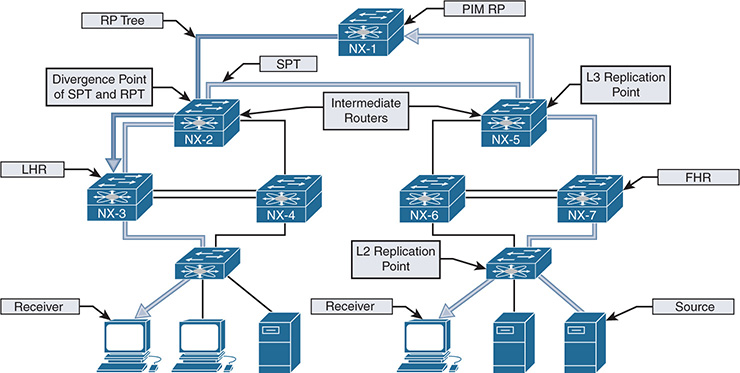

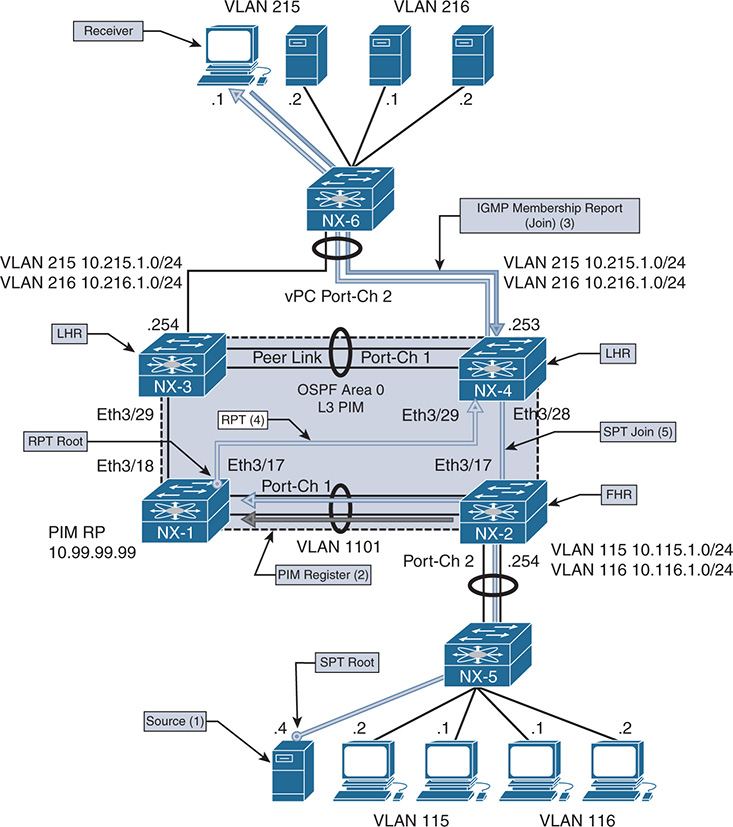

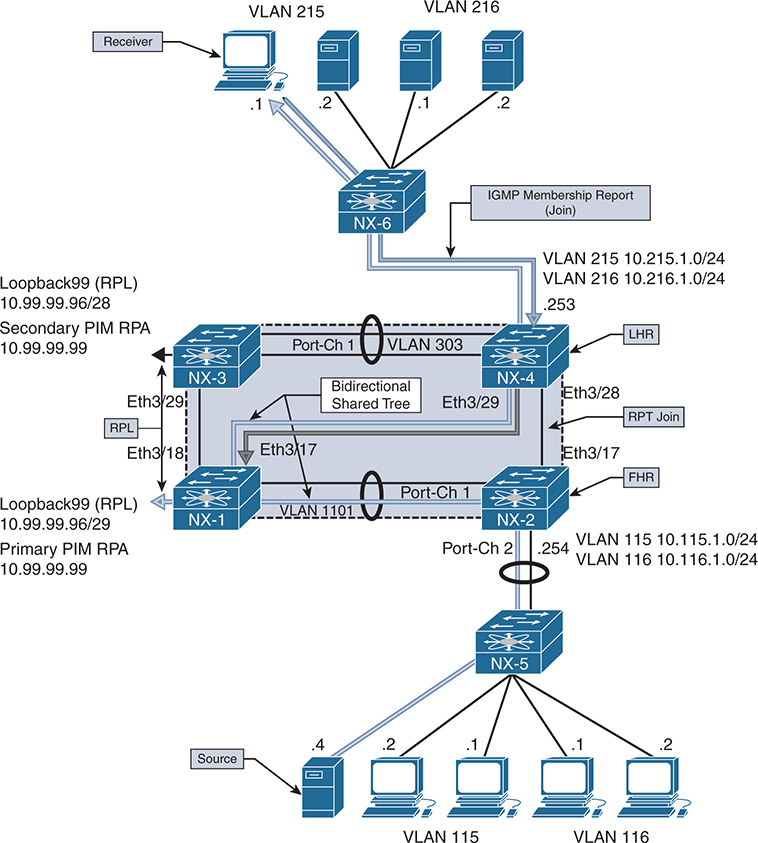

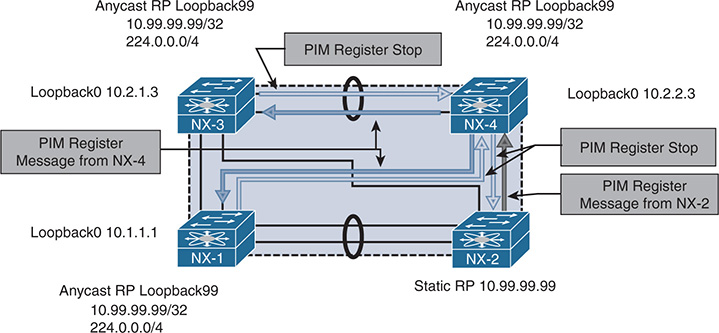

The example multicast topology in Figure 13-2 illustrates the terminology in Table 13-1.

Figure 13-2 Visualizing Multicast Terminology

Figure 13-2 illustrates a typical deployment of PIM Sparse mode any-source multicast (ASM). The end-to-end traffic flow from the source to the receiver is made possible through several intermediate steps to build the MDT:

Step 1. Register the source with the PIM RP.

Step 2. Establish the RPT from the RP to the receiver.

Step 3. Establish the SPT from the source to the receiver.

When troubleshooting a multicast problem, determining which of these intermediate steps are completed guides the investigation based on the current state of the network. Each intermediate step consists of different checks, conditions, and protocol state machines that this chapter explores in depth.

Note

Figure 13-2 shows both the RP tree and the source tree in the diagram, for demonstration purposes. This state does not persist in reality because NX-3 prunes itself from the RP tree and receives the group traffic from the source tree.

Layer 2 Multicast Addresses

At L2, hosts communicate using Media Access Control (MAC) addresses. A MAC address is 48 bits in length and uniquely identifies a network interface card (NIC) on the LAN segment. MAC addresses are represented by a 12-digit hexadecimal number in the format 0012.3456.7890 or 00:12:34:56:78:90.

The MAC address used by a host is typically assigned by the manufacturer and is referred to as the burned-in address (BIA). When two hosts in the same IP subnet communicate, the destination address of the L2 frame is set to the target device's MAC address. As frames are received, if the destination MAC address matches the BIA of the host, the frame is accepted and handed to higher layers for further processing.

Broadcast messages between hosts are sent to the reserved address FF:FF:FF:FF:FF:FF. A host receiving a broadcast message must process the frame and pass its contents to a higher layer, where the frame is either discarded or acted upon by an application. As mentioned previously, when an application's traffic does not need to be received by every host on the network, multicast avoids the inefficiencies of broadcast communication.

Multicast communication requires a way of identifying frames at Layer 2 that are not broadcasts but can still be processed by one or more hosts on the LAN segment. This allows hosts that are interested in this traffic to process the frames and permits hosts that are not interested to throw away the frames and save processing and buffer resources.

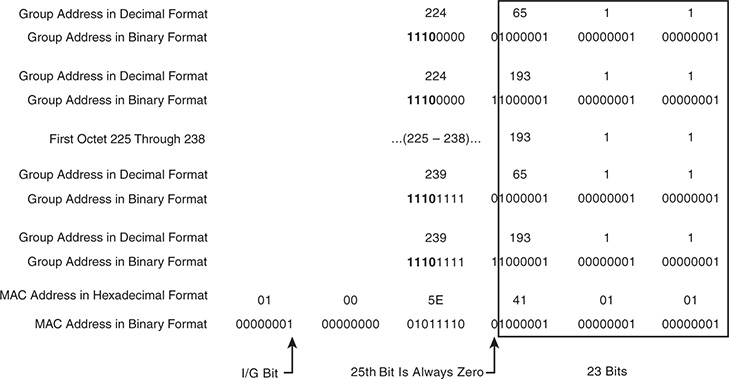

The multicast MAC address differentiates multicast from unicast or broadcast frames at Layer 2. The range of multicast MAC addresses reserved in RFC 1112 is 01:00:5E:00:00:00 to 01:00:5E:7F:FF:FF. The first 24 bits are always 01:00:5E. The first byte contains the individual/group (I/G) bit, which is set to 1 to indicate a multicast MAC address. The 25th bit is always 0, which leaves 23 bits of the address remaining. The Layer 3 group address is mapped to the remaining 23 bits to form the complete multicast MAC address (see Figure 13-3).

Figure 13-3 Mapping Layer 3 Group Address to Multicast MAC Address

When the addresses are expanded in binary format, it is clear that multiple L3 group addresses must map to the same multicast MAC address. In fact, 32 L3 multicast group addresses map to each multicast MAC address, because 9 bits of the 32-bit L3 group address are not mapped into the multicast MAC address. The 4 high-order bits of the first octet are always 1110 (remember that the first octet of a multicast group address is in the range 224 to 239), and the remaining 4 bits of the first octet are variable. The high-order bit of the second octet is also ignored when the L3 group address is mapped to the multicast MAC address; the corresponding position in the MAC address is the 25th bit, which is always set to 0. Combined, these 5 variable bits allow 32 (2^5) combinations, which explains why 32 multicast groups map to each multicast MAC address.
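The bit arithmetic above can be checked with a short Python sketch that uses only the standard ipaddress module. The function names group_to_mac and groups_sharing_mac are illustrative, not part of any NX-OS tool. The first function keeps the low-order 23 bits of the group and places them behind the 01:00:5E prefix; the second enumerates the 32 groups produced by varying the 5 unmapped bits.

```python
import ipaddress

MAC_PREFIX = 0x01005E  # first 24 bits of every IPv4 multicast MAC (RFC 1112)


def group_to_mac(group: str) -> str:
    """Map an IPv4 multicast group address to its multicast MAC address."""
    addr = ipaddress.IPv4Address(group)
    if not addr.is_multicast:
        raise ValueError(f"{group} is not in 224.0.0.0/4")
    low23 = int(addr) & 0x7FFFFF          # only the low-order 23 bits are mapped
    mac = (MAC_PREFIX << 24) | low23       # the 25th bit of the MAC stays 0
    return ":".join(f"{(mac >> shift) & 0xFF:02x}" for shift in range(40, -1, -8))


def groups_sharing_mac(group: str) -> list:
    """Return the 32 group addresses that map to the same MAC as `group`."""
    low23 = int(ipaddress.IPv4Address(group)) & 0x7FFFFF
    # The 5 variable, unmapped bits are the low 4 bits of octet 1 and the
    # high-order bit of octet 2, i.e. bit positions 27..23 of the address.
    return [
        str(ipaddress.IPv4Address((0xE0 << 24) | (v << 23) | low23))
        for v in range(32)
    ]


print(group_to_mac("239.1.1.1"))            # 01:00:5e:01:01:01
print(groups_sharing_mac("239.1.1.1")[:3])  # ['224.1.1.1', '224.129.1.1', '225.1.1.1']
```

Feeding any of the 32 returned groups back into group_to_mac yields the same MAC address, which is exactly the 32:1 overlap described in the text.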

For a host, this overlap means that if its NIC is programmed to listen to a particular multicast MAC address, it could receive frames for multiple multicast groups. For example, imagine that a source on a LAN segment is generating traffic to the multicast groups 233.65.1.1, 239.65.1.1, and 239.193.1.1. All these groups map to the same multicast MAC address (01:00:5E:41:01:01). If the host is interested only in packets for 239.65.1.1, it cannot differentiate the groups at L2. All the frames are passed to a higher layer, where the uninteresting frames are discarded and the interesting frames are sent to the application for processing. The 32:1 overlap must be considered when deciding on a multicast group addressing scheme. It is also advisable to avoid groups of the form X.0.0.Y and X.128.0.Y, because they map to the same multicast MAC addresses as 224.0.0.Y in the Local Network Control Block. Switches flood frames for these MAC addresses out all ports in the same VLAN.
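To apply both guidelines when planning an addressing scheme, a sketch such as the following can flag groups whose MAC address falls in 01:00:5E:00:00:xx (the range shared with 224.0.0.0/24) and groups that collide with each other at L2. The function names and the example address plan are hypothetical.

```python
import ipaddress
from collections import defaultdict


def low23(group: str) -> int:
    """Return the 23 bits of an IPv4 multicast group that reach the MAC address."""
    addr = ipaddress.IPv4Address(group)
    if not addr.is_multicast:
        raise ValueError(f"{group} is not in 224.0.0.0/4")
    return int(addr) & 0x7FFFFF


def shares_mac_with_link_local(group: str) -> bool:
    """True for X.0.0.Y and X.128.0.Y, whose MAC matches that of 224.0.0.Y."""
    return low23(group) >> 8 == 0  # the mapped bits of octets 2 and 3 are all zero


def mac_collisions(groups):
    """Bucket the planned groups by their mapped bits; keep buckets with >1 group."""
    buckets = defaultdict(list)
    for g in groups:
        buckets[low23(g)].append(g)
    return [sorted(b) for b in buckets.values() if len(b) > 1]


plan = ["239.0.0.10", "239.1.1.1", "239.129.1.1", "239.128.0.5", "239.2.2.2"]
print([g for g in plan if shares_mac_with_link_local(g)])  # ['239.0.0.10', '239.128.0.5']
print(mac_collisions(plan))                                 # [['239.1.1.1', '239.129.1.1']]
```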

Layer 3 Multicast Addresses

IPv4 multicast addresses are identified by the value of the first octet, which falls in the range 224 to 239. The full range of 224.0.0.0 to 239.255.255.255 is also referred to as the Class D range. Viewed in binary format, a multicast address always has the first 4 bits of the first octet set to 1110. The concept of subnetting does not apply to multicast because each address identifies an individual multicast group. However, various address blocks within the 224.0.0.0/4 multicast range serve specific purposes. The Internet Assigned Numbers Authority (IANA) lists the multicast address ranges provided in Table 13-2.

Table 13-2 IPv4 Multicast Address Space Registry

| Designation | Multicast Address Range |
|---|---|
| Local Network Control Block | 224.0.0.0 to 224.0.0.255 |
| Internetwork Control Block | 224.0.1.0 to 224.0.1.255 |
| AD-HOC Block I | 224.0.2.0 to 224.0.255.255 |
| Reserved | 224.1.0.0 to 224.1.255.255 |
| SDP/SAP Block | 224.2.0.0 to 224.2.255.255 |
| AD-HOC Block II | 224.3.0.0 to 224.4.255.255 |
| Reserved | 224.5.0.0 to 224.251.255.255 |
| DIS Transient Groups | 224.252.0.0 to 224.255.255.255 |
| Reserved | 225.0.0.0 to 231.255.255.255 |
| Source-Specific Multicast Block | 232.0.0.0 to 232.255.255.255 |
| GLOP Block | 233.0.0.0 to 233.251.255.255 |
| AD-HOC Block III | 233.252.0.0 to 233.255.255.255 |
| Unicast Prefix-based IPv4 Multicast Addresses | 234.0.0.0 to 234.255.255.255 |
| Reserved | 235.0.0.0 to 238.255.255.255 |
| Organization-Local Scope | 239.0.0.0 to 239.255.255.255 |
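When reading packet captures or mroute tables, it can help to classify a group address against Table 13-2 programmatically. The following Python sketch transcribes the table into a list; IANA_BLOCKS and classify_group are illustrative names, and the list should be rechecked against the current IANA registry before being relied on.

```python
import ipaddress

# (first address, last address, designation), transcribed from Table 13-2
IANA_BLOCKS = [
    ("224.0.0.0", "224.0.0.255", "Local Network Control Block"),
    ("224.0.1.0", "224.0.1.255", "Internetwork Control Block"),
    ("224.0.2.0", "224.0.255.255", "AD-HOC Block I"),
    ("224.1.0.0", "224.1.255.255", "Reserved"),
    ("224.2.0.0", "224.2.255.255", "SDP/SAP Block"),
    ("224.3.0.0", "224.4.255.255", "AD-HOC Block II"),
    ("224.5.0.0", "224.251.255.255", "Reserved"),
    ("224.252.0.0", "224.255.255.255", "DIS Transient Groups"),
    ("225.0.0.0", "231.255.255.255", "Reserved"),
    ("232.0.0.0", "232.255.255.255", "Source-Specific Multicast Block"),
    ("233.0.0.0", "233.251.255.255", "GLOP Block"),
    ("233.252.0.0", "233.255.255.255", "AD-HOC Block III"),
    ("234.0.0.0", "234.255.255.255", "Unicast Prefix-based IPv4 Multicast Addresses"),
    ("235.0.0.0", "238.255.255.255", "Reserved"),
    ("239.0.0.0", "239.255.255.255", "Organization-Local Scope"),
]


def classify_group(group: str) -> str:
    """Return the Table 13-2 designation for an IPv4 multicast address."""
    addr = ipaddress.IPv4Address(group)
    if not addr.is_multicast:  # the first 4 bits are not 1110
        raise ValueError(f"{group} is not in 224.0.0.0/4")
    for first, last, name in IANA_BLOCKS:
        if ipaddress.IPv4Address(first) <= addr <= ipaddress.IPv4Address(last):
            return name
    raise LookupError(f"{group} is not covered by the table")  # unreachable for 224.0.0.0/4


for g in ("224.0.0.13", "224.0.1.40", "232.1.1.1", "239.1.1.1"):
    print(g, "->", classify_group(g))
```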

The Local Network Control Block is used for protocol communication traffic. Examples are the all-routers address 224.0.0.2 and the All OSPF routers address 224.0.0.5. Addresses in this range should not be forwarded by any multicast router, regardless of the TTL value carried in the packet header. In practice, protocol packets that use the Local Network Control Block are almost always sent with a TTL of 1.

The Internetwork Control Block is used for protocol communication traffic that is forwarded by a multicast router between subnets or to the Internet. Examples include Cisco-RP-Announce 224.0.1.39, Cisco-RP-Discovery 224.0.1.40, and NTP 224.0.1.1.
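The two forwarding rules above, combined with the TTL scoping described earlier in the chapter, can be expressed as a small decision function. This is a deliberately simplified sketch: may_route is a hypothetical name, and it ignores RPF checks, OIL contents, ACLs, and administrative boundaries, all of which a real multicast router also evaluates.

```python
import ipaddress

LOCAL_NETWORK_CONTROL = ipaddress.IPv4Network("224.0.0.0/24")


def may_route(group: str, ttl: int) -> bool:
    """Simplified check: may a multicast router forward this packet to another subnet?"""
    addr = ipaddress.IPv4Address(group)
    if addr in LOCAL_NETWORK_CONTROL:
        return False  # never routed, regardless of the TTL in the header
    # The router decrements the TTL; a packet that would leave with TTL 0 is dropped,
    # so the packet must arrive with a TTL greater than 1.
    return ttl > 1


print(may_route("224.0.0.5", 255))  # False: OSPF uses the Local Network Control Block
print(may_route("224.0.1.40", 16))  # True: Auto-RP discovery is routed between subnets
print(may_route("239.1.1.1", 1))    # False: the TTL expires at this router
```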

Table 13-3 provides the well-known multicast addresses used by control plane protocols from the Local Network Control Block and from the Internetwork Control Block. It is important to become familiar with these specific reserved addresses so they are easily identifiable while troubleshooting a control plane problem.

Table 13-3 Well-Known Reserved Multicast Addresses

| Description | Multicast Address |
|---|---|
| All Hosts in this subnet (all-hosts group) | 224.0.0.1 |
| All Routers in this subnet (all-routers) | 224.0.0.2 |
| All OSPF routers (AllSPFRouters) | 224.0.0.5 |
| All OSPF DRs (AllDRouters) | 224.0.0.6 |
| All RIPv2 routers | 224.0.0.9 |
| All EIGRP routers | 224.0.0.10 |
| All PIM routers | 224.0.0.13 |
| VRRP | 224.0.0.18 |
| IGMPv3 | 224.0.0.22 |
| HSRPv2 and GLBP | 224.0.0.102 |
| NTP | 224.0.1.1 |
| Cisco-RP-Announce (Auto-RP) | 224.0.1.39 |
| Cisco-RP-Discovery (Auto-RP) | 224.0.1.40 |
| PTPv1 | 224.0.1.129 to 224.0.1.132 |
| PTPv2 | 224.0.1.129 |

The Source-Specific Multicast Block is used by SSM, an extension of PIM Sparse mode that is described later in this chapter. It is optimized for one-to-many applications when the host application is aware of the specific source IP address of a multicast group. Knowing the source address eliminates the need for a PIM RP and does not require any multicast routers to maintain state on the shared tree.

The Organization-Local Scope is also known as the Administratively Scoped Block. These addresses are the multicast equivalent of RFC 1918 unicast IP addresses: an organization assigns addresses from this range as needed. These addresses are not publicly routed or administered by IANA.

NX-OS Multicast Architecture

The multicast architecture of NX-OS inherits the same design principles as the operating system itself. Each component process is fully modular, creating the foundation for high availability (HA), reliability, and scalability.

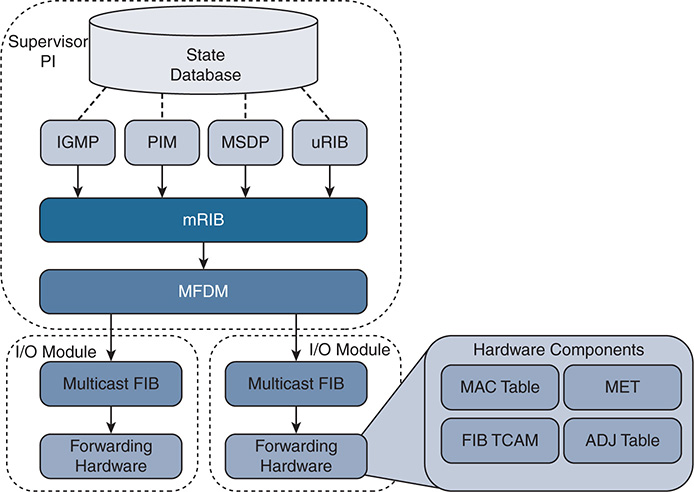

The NX-OS HA architecture allows for stateful process restart and in-service software upgrades (ISSU) with minimal disruption to the data plane. As Figure 13-4 shows, the architecture is distributed: platform-independent (PI) components run on the supervisor module, and hardware-specific forwarding components run on the I/O modules or system application-specific integrated circuits (ASICs).

Figure 13-4 NX-OS Multicast Architecture

This common architecture is used across all NX-OS platforms. However, each platform can implement the forwarding components differently, depending on the capabilities of the specific hardware ASICs.

Each protocol, such as Internet Group Management Protocol (IGMP), Protocol Independent Multicast (PIM), and Multicast Source Discovery Protocol (MSDP), operates independently with its own process state, which is stored using the NX-OS Persistent Storage Services (PSS). Message and Transactional Services (MTS) is used to communicate and exchange protocol state messages with other services, such as the Multicast Routing Information Base (MRIB).

The MRIB is populated by its client protocols, such as PIM, IGMP, MSDP, and IP, which create the multicast routing state entries. These mroute entries describe the relationship of the router to a particular MDT. After the MRIB creates the mroute state, it pushes this state to the Multicast Forwarding Distribution Manager (MFDM).

The MRIB interacts with the Unicast Routing Information Base (URIB) to obtain routing protocol metrics and next-hop information used during Reverse Path Forwarding (RPF) lookups. Any multicast packets that are routed by the supervisor in the software forwarding path are also handled by the MRIB.
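The RPF lookup that the MRIB performs against the URIB can be illustrated with a toy model: find the longest-prefix match for the source address (or the RP address for a shared-tree entry) and compare the resulting interface with the interface the packet arrived on. The routing table contents and interface names below are hypothetical, and the sketch assumes a single best path (no ECMP and no static mroutes).

```python
import ipaddress
from typing import Dict, Optional

# Hypothetical unicast routing table (URIB): prefix -> interface toward that prefix
URIB: Dict[str, str] = {
    "10.1.1.0/24": "Ethernet1/1",
    "10.0.0.0/8": "Ethernet1/2",
    "0.0.0.0/0": "Ethernet1/3",
}


def rpf_interface(address: str, rib: Dict[str, str]) -> Optional[str]:
    """Longest-prefix-match lookup of the source (or RP) address."""
    addr = ipaddress.IPv4Address(address)
    best = None
    for prefix, iface in rib.items():
        net = ipaddress.IPv4Network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, iface)
    return best[1] if best else None


def rpf_check(address: str, arrival_iface: str, rib: Dict[str, str]) -> bool:
    """Pass only if the packet arrived on the interface used to reach `address`."""
    return rpf_interface(address, rib) == arrival_iface


print(rpf_check("10.1.1.10", "Ethernet1/1", URIB))  # True: arrived on the RPF interface
print(rpf_check("10.1.1.10", "Ethernet1/2", URIB))  # False: non-RPF arrival
```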

MFDM is an intermediary between the MRIB and the platform-forwarding components. It is responsible for taking the mroute state from the MRIB and allocating platform resources for each entry. MFDM translates the MRIB into data structures that the platform components understand. The data structures are then pushed from MFDM to each I/O module, in the case of a distributed platform such as the Nexus 7000 series. In a nonmodular platform, MFDM distributes its information to the platform-forwarding components.

The Multicast Forwarding Information Base (MFIB) programs the (*, G), (S, G), and RPF entries it receives from MFDM into hardware forwarding tables known as FIB ternary content-addressable memory (TCAM). The TCAM is a high-speed memory space that stores a pointer to the adjacency. The adjacency is then used to obtain the Multicast Expansion Table (MET) index. The MET index points to information about the OIFs and how to replicate and forward the packet to each downstream interface. Many platforms and I/O modules have dedicated replication ASICs. The steps described here vary based on the type of hardware a platform uses, and troubleshooting at this depth typically involves working with Cisco TAC. Table 13-4 maps each multicast component to the show commands used to verify its state.

Table 13-4 CLI Commands for Each Multicast Component

| Component | CLI Command |
|---|---|
| IGMP | show ip igmp route, show ip igmp groups, show ip igmp snooping groups |
| PIM | show ip pim route |
| MSDP | show ip msdp route, show ip msdp sa-cache |
| URIB | show ip route |
| MRIB | show routing ip multicast [group] [source], show ip mroute |
| MFDM | show forwarding distribution ip multicast route, show forwarding distribution ip igmp snooping |
| Multicast FIB | show forwarding ip multicast route module [module number] |
| Forwarding Hardware | show system internal forwarding ip multicast route, show system internal ip igmp snooping |
| TCAM, MET, ADJ Table | Varies by platform and hardware type |

When Virtual Device Contexts (VDC) are used with the Nexus 7000 series, all of the previously mentioned PI components are unique to the VDC. Each VDC has its own PIM, IGMP, MRIB, and MFDM processes. However, in each I/O module, the system resources are shared among the different VDCs.

Replication

Multicast communication is efficient because a single packet from the source can be replicated many times as it traverses the MDT toward receivers located along different branches of the tree. Replication can occur at L2 when multiple receivers are in the same VLAN on different interfaces, or at L3 when multiple downstream PIM neighbors have joined the MDT from different OIFs.

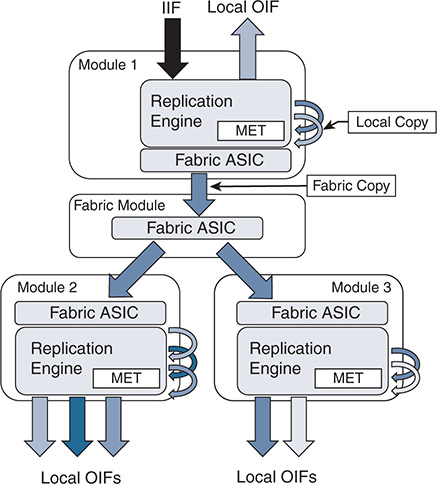

Replication of multicast traffic is handled by specialized hardware, which is different on each Nexus platform. In the case of a distributed platform with different I/O modules, egress replication is used (see Figure 13-5).

Figure 13-5 Egress Multicast Replication

The benefit of egress replication is that it allows all modules of the system to share the load of packet replication, which increases the forwarding capacity and scalability of the platform. As traffic arrives from the IIF, the following happens:

The packet is replicated for any receivers on the local module.

A copy of the packet is sent to the fabric module.

The fabric module replicates additional copies of the packet, one for each module that has an OIF.

At each egress module, additional packet copies are made for each local receiver based on the contents of the MET table.
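The four steps above can be modeled in a few lines of Python to show where each copy of a packet is made. This is a conceptual sketch, not how any Nexus ASIC is programmed: egress_replicate is a hypothetical name, and the sketch assumes that the fabric does not send a copy back to the ingress module and that nothing is sent to the fabric when every OIF is local.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def egress_replicate(ingress_module: int, oifs: List[Tuple[int, str]]) -> Dict[str, object]:
    """Model the egress-replication steps for one packet.

    `oifs` lists (module, interface) pairs taken from the mroute OIL.
    """
    per_module: Dict[int, List[str]] = defaultdict(list)
    for module, iface in oifs:
        per_module[module].append(iface)

    # Step 1: copies for receivers on the ingress module itself
    local = per_module.get(ingress_module, [])
    # Steps 2-3: one copy goes to the fabric, which makes one copy per other module with an OIF
    remote_modules = sorted(m for m in per_module if m != ingress_module)
    # Step 4: each egress module replicates to its own OIFs (from its local MET)
    egress = {m: per_module[m] for m in remote_modules}
    return {
        "ingress_local": local,
        "to_fabric": 1 if remote_modules else 0,
        "fabric_copies": len(remote_modules),
        "egress": egress,
    }


result = egress_replicate(3, [(3, "Ethernet3/1"), (4, "Ethernet4/1"),
                              (4, "Ethernet4/2"), (7, "Ethernet7/5")])
print(result["fabric_copies"])  # 2: one copy each for modules 4 and 7
print(result["egress"])         # {4: ['Ethernet4/1', 'Ethernet4/2'], 7: ['Ethernet7/5']}
```

The fabric makes only one copy per module, no matter how many OIFs that module has; the per-interface copies are spread across the egress modules.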

The MET tables on each module contain a list of local OIFs. For improved scalability, each module maintains its own MET tables. In addition, multicast forwarding entries that share the same OIFs can share the same MET entries, which further improves scalability.
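The MET-sharing optimization can be sketched as deduplication by OIF set: routes whose local OIFs are identical point to the same MET index. The data layout below is illustrative only; real MET entries are platform-specific hardware structures, and build_met is a hypothetical name.

```python
from typing import Dict, FrozenSet, List, Tuple

Route = Tuple[str, str]  # (source, group); "*" for a shared-tree entry


def build_met(routes: Dict[Route, FrozenSet[str]]) -> Tuple[Dict[Route, int], Dict[int, List[str]]]:
    """Assign MET indexes so that routes with identical local OIF sets share one entry."""
    index_by_oifs: Dict[FrozenSet[str], int] = {}
    route_to_met: Dict[Route, int] = {}
    for route, oifs in routes.items():
        if oifs not in index_by_oifs:
            index_by_oifs[oifs] = len(index_by_oifs)  # allocate a new MET entry
        route_to_met[route] = index_by_oifs[oifs]     # otherwise reuse the existing one
    met_table = {idx: sorted(oifs) for oifs, idx in index_by_oifs.items()}
    return route_to_met, met_table


routes = {
    ("*", "239.1.1.1"): frozenset({"Ethernet4/1", "Ethernet4/2"}),
    ("10.1.1.10", "239.1.1.1"): frozenset({"Ethernet4/2", "Ethernet4/1"}),
    ("*", "239.2.2.2"): frozenset({"Ethernet4/3"}),
}
route_to_met, met_table = build_met(routes)
print(route_to_met)    # {('*', '239.1.1.1'): 0, ('10.1.1.10', '239.1.1.1'): 0, ('*', '239.2.2.2'): 1}
print(len(met_table))  # 2
```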

Protecting the Central Processing Unit

Multicast traffic can be directed to the Supervisor CPU for a number of reasons. A few possibilities include these:

Non-RPF traffic used to generate a PIM Assert message

A packet in which the TTL has expired in transit

The initial packet from a new source used to create a PIM register message

IGMP membership reports used to create entries in the snooping table

Multicast control plane packets for PIM or IGMP

NX-OS uses control plane policing (CoPP) policies to protect the supervisor CPU from excessive traffic. The individual CoPP classes used for multicast traffic vary from platform to platform, but they all serve an important role: to protect the device. Leaving CoPP enabled is always recommended, although exceptional cases require modifying some of the classes or policer rates. The currently applied CoPP policy is viewed with the show policy-map interface control-plane command. Table 13-5 provides additional detail about the default CoPP classes related to multicast traffic.

Table 13-5 CoPP Classes for Multicast

| CoPP Class | Description |
|---|---|
| copp-system-p-class-multicast-router | Matches multicast control plane protocols such as MSDP, PIM messages to ALL-PIM-ROUTERS (224.0.0.13), and PIM register messages (unicast) |
| copp-system-p-class-multicast-host | Matches IGMP packets |
| copp-system-p-class-normal | Matches traffic from directly connected multicast sources that is used to build PIM register messages |
| class-default | Catch-all class for any packets that do not match another CoPP class |

In addition to CoPP, which polices traffic arriving at the supervisor, the Nexus 7000 series uses a set of hardware rate limiters (HWRL). The hardware rate limiters exist on each I/O module and control the amount of traffic that can be directed toward the supervisor. The status of the HWRLs is viewed with the show hardware rate-limiter command (see Example 13-1).

Example 13-1 Nexus 7000 Hardware Rate Limiters

! Output omitted for brevity
Units for Config: packets per second
Allowed, Dropped & Total: aggregated since last clear counters
rl-1: STP and Fabricpath-ISIS
rl-2: L3-ISIS and OTV-ISIS
rl-3: UDLD, LACP, CDP and LLDP
rl-4: Q-in-Q and ARP request
rl-5: IGMP, NTP, DHCP-Snoop, Port-Security, Mgmt and Copy traffic

Module: 3
Rate-limiter PG Multiplier: 1.00

R-L Class            Config         Allowed         Dropped             Total
+------------------+--------+---------------+---------------+-----------------+
L3 mtu                  500               0               0                 0
L3 ttl                  500              12               0                12
L3 control            10000               0               0                 0
L3 glean                100               1               0                 1
L3 mcast dirconn       3000              13               0                13
L3 mcast loc-grp       3000               2               0                 2
L3 mcast rpf-leak       500               0               0                 0
L2 storm-ctrl       Disable
access-list-log         100               0               0                 0
copy                  30000         7182002               0           7182002
receive               30000        27874374               0          27874374
L2 port-sec             500               0               0                 0
L2 mcast-snoop        10000           34318               0             34318
L2 vpc-low             4000               0               0                 0
L2 l2pt                 500               0               0                 0
L2 vpc-peer-gw         5000               0               0                 0
L2 lisp-map-cache      5000               0               0                 0
L2 dpss                 100               0               0                 0
L3 glean-fast           100               0               0                 0
L2 otv                  100               0               0                 0
L2 netflow            48000               0               0                 0
L3 auto-config          200               0               0                 0
Vxlan-peer-learn        100               0               0                 0

Table 13-6 describes each multicast HWRL.

Table 13-6 Hardware Rate Limiters for Multicast

| R-L Class | Description |
|---|---|
| L3 mcast dirconn | Packets for which the source is directly connected. These packets are sent to the CPU to generate PIM register messages. |
| L3 mcast loc-grp | Packets sent to the CPU at the LHR to trigger SPT switchover. |
| L3 mcast rpf-leak | Packets sent to the CPU to create a PIM assert message. |
| L2 mcast-snoop | IGMP membership reports, queries, and PIM hello packets punted to the CPU for IGMP snooping. |

As with the CoPP policy, disabling any of the HWRLs that are enabled by default is not advised. In most deployments, no modification to the default CoPP or HWRL configuration is necessary.

If excessive traffic to the CPU is suspected, incrementing matches or drops in a particular CoPP class or HWRL provides a hint about what traffic is arriving. For additional detail, an Ethanalyzer capture can be used to examine the CPU-bound traffic.
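Spotting incrementing counters is easier when two collections of show hardware rate-limiter output taken a few seconds apart are compared. The following Python sketch parses the rows of one module's section (any line whose last four fields are integers) and reports the classes whose counters grew. The function names are hypothetical; snap1 reuses rows from Example 13-1, and snap2 is an invented second snapshot for illustration only. For output that covers several modules, each module's section would need to be parsed separately, because class names repeat.

```python
from typing import Dict

Counters = Dict[str, Dict[str, int]]


def parse_hwrl(output: str) -> Counters:
    """Parse rate-limiter rows (Config, Allowed, Dropped, Total) for one module.

    Legend, header, and rows such as 'L2 storm-ctrl Disable' are skipped.
    """
    counters: Counters = {}
    for line in output.splitlines():
        fields = line.split()
        if len(fields) >= 5 and all(f.isdigit() for f in fields[-4:]):
            config, allowed, dropped, total = map(int, fields[-4:])
            counters[" ".join(fields[:-4])] = {
                "config": config, "allowed": allowed, "dropped": dropped, "total": total,
            }
    return counters


def incrementing(before: Counters, after: Counters, field: str = "dropped") -> Dict[str, int]:
    """Return the classes whose `field` counter grew between two snapshots."""
    return {
        name: after[name][field] - before[name][field]
        for name in after
        if name in before and after[name][field] > before[name][field]
    }


snap1 = """
L3 mcast dirconn       3000      13     0     13
L3 mcast loc-grp       3000       2     0      2
L2 storm-ctrl       Disable
L2 mcast-snoop        10000   34318     0  34318
"""
snap2 = """
L3 mcast dirconn       3000    9013   250   9263
L3 mcast loc-grp       3000       2     0      2
L2 storm-ctrl       Disable
L2 mcast-snoop        10000   34400     0  34400
"""
print(incrementing(parse_hwrl(snap1), parse_hwrl(snap2)))             # {'L3 mcast dirconn': 250}
print(incrementing(parse_hwrl(snap1), parse_hwrl(snap2), "allowed"))  # {'L3 mcast dirconn': 9000, 'L2 mcast-snoop': 82}
```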

NX-OS Multicast Implementation

Many network environments consist of a mix of Cisco NX-OS devices and other platforms. It is therefore important to understand the differences in default behavior between NX-OS and Cisco IOS devices. NX-OS has the following differences:

Multicast does not have to be enabled globally.

Certain features (PIM, MSDP) must be enabled before they are configurable. IGMP is automatically enabled when PIM is enabled.

Removing a feature removes all related configuration.

PIM dense mode is not supported.

Multipath support is enabled by default. This allows multicast traffic to be load-balanced across equal-cost multipath (ECMP) routes.

Punted multicast data packets are not replicated in software by default; configure ip routing multicast software-replicate only if this behavior is needed.

PIM IPsec AH-MD5 neighbor authentication is supported.

PIM snooping is not supported.

IGMP snooping uses an IP-based forwarding table by default. IGMP snooping based on MAC address table lookup is a configurable option.

NX-OS platforms might require the allocation of TCAM space for multicast routes.

Static Joins

In general, static joins should not be required when multicast has been correctly configured. However, this is a useful option for troubleshooting in certain situations. For example, if a receiver is not available, a static join is used to build multicast state in the network.

NX-OS offers the ip igmp join-group [group] [source] interface command, which configures the NX-OS device as a multicast receiver for the group. Providing the source address is not required unless the join is for IGMPv3. This command forces NX-OS to issue an IGMP membership report and join the group as a host. All packets received for the group address are processed in the control plane of the device. This command can prevent packets from being replicated to other OIFs and should be used with caution.

The second option is the ip igmp static-oif [group] [source] interface command, which statically adds an OIF to an existing mroute entry and forwards packets to the OIF in hardware. The source option is used only with IGMPv3. It is important to note that if this command is being added to a VLAN interface, you must also configure a static IGMP snooping table entry with the ip igmp snooping static-group [group] [source] interface [interface name] VLAN configuration command to actually forward packets.
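Because a static OIF on a VLAN interface needs two separate pieces of configuration, it can help to generate them together so that the snooping entry is not forgotten. The following Python sketch only builds configuration text; the VLAN, group, and port values are hypothetical, and the exact command syntax should be confirmed against the configuration guide for the platform and release in use.

```python
from typing import List, Optional


def static_oif_config(vlan_id: int, group: str, ports: List[str],
                      source: Optional[str] = None) -> List[str]:
    """Build the L3 and L2 configuration that a static OIF on an SVI needs."""
    src = f" source {source}" if source else ""  # source is used only with IGMPv3
    lines = [
        f"interface Vlan{vlan_id}",
        f"  ip igmp static-oif {group}{src}",  # adds the SVI to the mroute OIL
        f"vlan configuration {vlan_id}",
    ]
    # Without a static snooping entry, no L2 ports in the VLAN forward the group
    lines += [f"  ip igmp snooping static-group {group}{src} interface {p}" for p in ports]
    return lines


print("\n".join(static_oif_config(115, "239.115.115.1", ["Ethernet3/19"])))
```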

Clearing an MROUTE Entry

A common way to clear the data structures associated with a multicast routing entry is to use the clear ip mroute command. In Cisco IOS platforms, this command is effective in clearing the entry. However, in NX-OS, the data structures associated with a particular mroute entry might have come from any MRIB client protocol. NX-OS provides the commands necessary to clear the individual MRIB client entries. In NX-OS 7.3, the clear ip mroute * command was enhanced to automatically clear the individual client protocols as well as the MRIB entry. In older releases of NX-OS, it is necessary to issue additional commands to completely clear an mroute entry from the MRIB and all associated client protocols:

clear ip mroute * clears entries from the MRIB.

clear ip pim route * clears PIM entries created by PIM join messages.

clear ip igmp route * clears IGMP entries created by IGMP membership reports.

clear ip mroute data-created * clears MRIB entries created by receiving multicast data packets.
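The release dependency above can be captured in a small helper that returns the commands needed to fully clear mroute state. This sketch assumes that releases later than 7.3 keep the enhanced clear ip mroute * behavior; the function name and the (major, minor) version tuple are illustrative.

```python
from typing import List, Tuple

FULL_CLEAR: List[str] = [
    "clear ip mroute *",               # MRIB entries
    "clear ip pim route *",            # PIM entries created by PIM join messages
    "clear ip igmp route *",           # IGMP entries created by membership reports
    "clear ip mroute data-created *",  # MRIB entries created by multicast data packets
]


def mroute_clear_commands(release: Tuple[int, int]) -> List[str]:
    """Commands needed to completely clear mroute state on a (major, minor) release."""
    if release >= (7, 3):
        return FULL_CLEAR[:1]  # clear ip mroute * also clears the client protocols
    return list(FULL_CLEAR)


print(mroute_clear_commands((6, 2)))
print(mroute_clear_commands((7, 3)))  # ['clear ip mroute *']
```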

Multicast Boundary and Filtering

The Cisco IOS equivalent of a multicast boundary does not exist in NX-OS. In Cisco IOS, the multicast boundary command is a filter applied to an interface to create an administratively scoped boundary where multicast traffic can be filtered on the interface. The following control plane and data plane filtering techniques are used to create an administrative boundary in NX-OS:

Filter PIM join messages: ip pim jp-policy [route-map] [in | out]

Filter IGMP membership reports: ip igmp report-policy [route-map]

Data traffic filter: ip access-group [ACL] [in | out]

In addition, the ip pim border command can be configured on an interface to prevent the forwarding of any Auto-RP, bootstrap, or candidate-RP messages.

Event-Histories and Show Techs

NX-OS provides event-histories, which are an always-on log of significant process events for enabled features. In many cases, the event-history log is detailed enough for troubleshooting without additional debugging. The various event-history logs for multicast protocols and processes are referenced throughout this chapter for troubleshooting purposes. Certain troubleshooting situations call for an increase in the default event-history size because of the large volume of protocol messages. Each event-history type can be increased in size independently of the other types. For PIM, the event-history size is increased with the ip pim event-history [event type] size [small | medium | large] configuration command. For IGMP, it is increased with the ip igmp event-history [event type] size [small | medium | large] configuration command.

Each feature or service related to forwarding multicast traffic in NX-OS has its own show tech-support [feature] output. These commands typically collect the majority of the data for a problem in a single output that can be analyzed offline after the fact. The tech support file contains configurations, data structures, and event-history output for each specific feature. If a problem is encountered and the time to collect information is limited, the following NX-OS tech support commands can be captured and redirected to individual files in bootflash for later review:

show tech-support ip multicast

show tech-support forwarding l2 multicast vdc-all

show tech-support forwarding l3 multicast vdc-all

show tech-support pixm

show tech-support pixmc-all

show tech-support module all

Knowing what time the problem might have occurred is critical so that the various system messages and protocol events can be correlated in the event-history output. If the problem occurred in the past, some or all of the event-history buffers might have wrapped and the events related to the problem condition could be gone. In such situations, increasing the size of certain event-history buffers might be useful for when the problem occurs again.

After collecting all the data, the files can be combined into a single archive and compressed for Cisco support to investigate the problem.

Providing an exhaustive list of commands for every possible situation is impossible. However, the provided list will supply enough information to narrow the scope of the problem, if not point to a root cause. Also remember that multicast problems are rarely isolated to a single device, which means it could be necessary to collect the data set from a peer device or PIM neighbor as well.

IGMP

Hosts use IGMP to dynamically join and leave a multicast group through the LHR. With IGMP, a host can join or leave a group at any time. Without IGMP, a multicast router has no way of knowing when interested receivers reside on one of its interfaces or when those receivers are no longer interested in the traffic, and the bandwidth and resource efficiencies of a multicast network would be severely diminished. Imagine if every multicast router sent traffic for each group on every interface! For that reason, hosts and routers must support IGMP if they are configured to support multicast communication. In the NX-OS implementation of IGMP, a single IGMP process serves all virtual routing and forwarding (VRF) instances. If Virtual Device Contexts (VDCs) are used, a separate IGMP process runs in each VDC.

IGMPv1 was defined in RFC 1112 and provided a state machine and the messaging required for hosts to join and leave multicast groups by sending membership reports to the local router. Finding a device using IGMPv1 in a modern network is uncommon, but an overview of its operation is provided for historical purposes so that the differences and evolution in IGMPv2 and IGMPv3 are easier to understand.

A multicast router configured for IGMPv1 periodically sends query messages to the All-Hosts address 224.0.0.1. Each host that is a member of a group then waits for a random time interval, within the bounds of a report delay timer, and sends a membership report using the group address as the destination address. When the router receives the membership report, it knows that at least one host on the segment is a current member of the multicast group, and it starts forwarding the group traffic onto the segment. Using the group address as the destination of the membership report has a functional purpose: other receivers for the group on the same network hear the report and can suppress their own report messages, which reduces the volume of IGMP traffic on the segment. A multicast router needs to receive only a single membership report to begin sending traffic onto the segment.
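The report suppression behavior described above can be illustrated with a small simulation. This is a simplified sketch, not an implementation of any host IP stack: the function name and the propagation parameter are hypothetical, and the delays are supplied by the caller instead of being drawn at random, so the outcome is deterministic. The host whose timer expires first sends the report; any other member whose timer expires before that report reaches it also transmits.

```python
from typing import Dict, List

def reporting_hosts(delays: Dict[str, float], propagation: float = 0.0) -> List[str]:
    """Return the member hosts that transmit a report after one IGMPv1 query.

    delays maps each member host to the random delay (seconds) it picked.
    propagation is a hypothetical time for the first report to reach the
    other members; a host whose timer fires before it hears that report
    still transmits. Every other member cancels (suppresses) its report.
    """
    if not delays:
        return []
    first = min(delays.values())
    return sorted(
        host for host, delay in delays.items()
        if delay == first or delay < first + propagation
    )

if __name__ == "__main__":
    delays = {"host-a": 3.0, "host-b": 1.0, "host-c": 7.5}
    print(reporting_hosts(delays))                   # ['host-b']
    print(reporting_hosts(delays, propagation=2.5))  # ['host-a', 'host-b']
```

Either way, the router needs only one of these reports to start forwarding the group onto the segment.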

When a host wants to join a new multicast group, it can immediately send a membership report for the group; it does not have to wait for a query message from a multicast router. However, when a host wants to leave a group, IGMPv1 does not provide a way to indicate this to the local multicast router. The host simply stops responding to queries. If the router receives no further membership reports after three queries, it determines that interested receivers are no longer present and prunes the interface from the OIL.

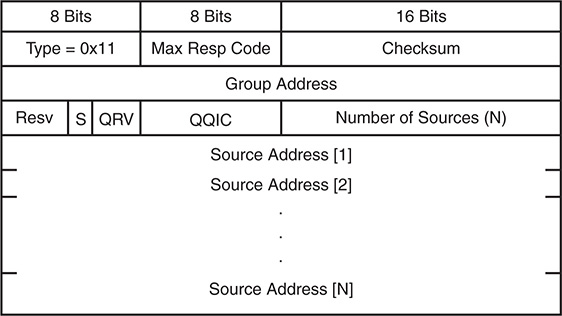

IGMPv2

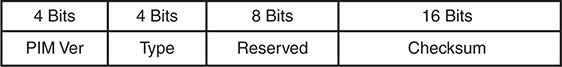

Defined in RFC 2236, IGMPv2 provides additional functionality over IGMPv1. Implementing this functionality required new message types to be defined. Figure 13-6 shows the IGMP message format.

Figure 13-6 IGMP Message Format

The IGMPv2 message fields are defined in the following list:

Type:

0x11 Membership query (general query or group specific query)

0x12 Version 1 membership report (used for backward compatibility)

0x16 Version 2 membership report

0x17 Leave group

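Max Response Time: Meaningful only in membership query messages; it is set to zero in all other message types. It specifies the maximum time, in units of 1/10 second, that a host can wait before sending a responding report. It is used to tune the response time of hosts and the leave latency observed when the last member decides to leave the group.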

Checksum: Used to ensure the integrity of the IGMP message.

Group Address: Set to zero in a general query and set to the group address when sending a group specific query. In a membership report or leave group message, the group address is set to the group being reported or left.
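To make the field layout concrete, the following sketch packs and parses the 8-byte IGMPv2 message using the type codes listed above and the standard Internet checksum (RFC 1071). The function names are hypothetical helpers for illustration only; they are not part of NX-OS or any Cisco API, and the IP header (TTL 1, Router Alert option) is not built here.

```python
import socket
import struct

IGMP_TYPES = {
    0x11: "Membership query",
    0x12: "Version 1 membership report",
    0x16: "Version 2 membership report",
    0x17: "Leave group",
}

def internet_checksum(data: bytes) -> int:
    """16-bit one's complement of the one's complement sum of 16-bit words."""
    if len(data) % 2:
        data += b"\x00"
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:  # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF

def build_igmpv2(msg_type: int, group: str, max_resp_tenths: int = 0) -> bytes:
    """Build an IGMPv2 message; Max Response Time is in units of 1/10 second."""
    group_bytes = socket.inet_aton(group)
    unsummed = struct.pack("!BBH4s", msg_type, max_resp_tenths, 0, group_bytes)
    checksum = internet_checksum(unsummed)  # computed with the checksum field zeroed
    return struct.pack("!BBH4s", msg_type, max_resp_tenths, checksum, group_bytes)

def parse_igmpv2(packet: bytes) -> dict:
    """Decode the four IGMPv2 fields and verify the checksum."""
    msg_type, max_resp, _checksum, group = struct.unpack("!BBH4s", packet[:8])
    return {
        "type": IGMP_TYPES.get(msg_type, hex(msg_type)),
        "max_resp_seconds": max_resp / 10,
        "checksum_ok": internet_checksum(packet[:8]) == 0,  # valid message sums to zero
        "group": socket.inet_ntoa(group),
    }

if __name__ == "__main__":
    query = build_igmpv2(0x11, "0.0.0.0", 100)  # general query, 10-second max response
    print(query.hex())                          # 1164ee9b00000000
    print(parse_igmpv2(build_igmpv2(0x16, "239.215.215.1")))
```

A general query carries group 0.0.0.0, while a report or leave carries the group being reported or left.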

Note

IP packets carrying IGMP messages have the TTL set to 1 and the router alert option set in the IP header, to force routers to examine the packet contents.

In IGMPv2, a querier election takes place whenever more than one multicast router is present on the network segment. Upon startup, each multicast router sends an IGMP general query message to the All-Hosts group 224.0.0.1. When a router receives a general query message from another multicast router, it compares the source IP address of that query with its own interface address, and the router with the lowest IP address assumes the role of the querier. The querier is then responsible for sending query messages on the network segment, and the other routers stop sending queries.
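The election rule is simple, but one detail matters when scripting around it: addresses must be compared numerically, not as strings. The sketch below uses Python's standard ipaddress module; the function names are hypothetical, and 10.115.1.3 is a hypothetical second router on the segment.

```python
import ipaddress
from typing import Iterable

def elect_querier(router_addresses: Iterable[str]) -> str:
    """Return the IGMPv2 querier: the router with the lowest IP address.

    Addresses are compared numerically; comparing the strings would rank
    "10.115.1.254" below "10.115.1.3" and pick the wrong router.
    """
    return str(min(ipaddress.IPv4Address(addr) for addr in router_addresses))

def remains_querier(own_address: str, query_source: str) -> bool:
    """A router keeps the querier role only if its address is lower than the
    source address of a general query heard from another router."""
    return ipaddress.IPv4Address(own_address) < ipaddress.IPv4Address(query_source)

if __name__ == "__main__":
    routers = ["10.115.1.254", "10.115.1.3"]
    print(elect_querier(routers))                        # 10.115.1.3
    print(min(routers))                                  # 10.115.1.254 (string order, wrong)
    print(remains_querier("10.115.1.254", "10.115.1.3")) # False
```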

The process of joining a multicast group is similar in IGMPv2 to IGMPv1. A host responds to general queries as well as group-specific queries with a membership report message. A host implementation chooses a random time to respond, between zero seconds and the max-response-interval sent in the query message. A host can also send an unsolicited membership report when a new group is joined to initiate the flow of multicast traffic on the segment.

The leave group message was defined to address the IGMPv1 problem in which a host could not explicitly inform the network after deciding to leave a group. This message type informs the router that a host no longer needs the multicast group, so that the router can determine whether any members remain on the segment. If a host was the last member to send a membership report on the segment, it should send a leave group message when it no longer wants to receive the group traffic. The leave group message is sent to the All-Routers multicast address 224.0.0.2. When the querier receives this message, it responds with a group-specific query, which is another enhancement over IGMPv1. The group-specific query is sent to the multicast group address, to ensure that any host still listening on the group receives it. The querier sends these queries at the last member query interval, and if no membership report is received in response, the router prunes the interface from the OIL.

IGMPv3

IGMPv3 was specified in RFC 3376. It provides the host functionality required for Source-Specific Multicast (SSM). SSM allows a receiver to join not only a multicast group address but also a specific source address for that group, so applications running on a multicast receiver host can request traffic from specific sources.

In IGMPv3, the interface state of the host includes a filter mode and source list. The filter mode can be include or exclude. When the filter mode is include, traffic is requested only from the sources in the source list. If the filter mode is exclude, traffic is requested for any source except the ones present in the source list. The source list is an unordered list of IP unicast source addresses, which can be combined with the filter mode to implement source-specific logic. This allows IGMPv3 to signal only the sources of interest to the receiver in the protocol messages.
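The filter mode and source list combine into a single membership test for each source. The sketch below models one interface's state for one group; the class and function names are hypothetical, and 10.215.1.2 is a hypothetical second source used alongside the source 10.215.1.1 from this chapter's example.

```python
from dataclasses import dataclass
from typing import FrozenSet

@dataclass(frozen=True)
class InterfaceState:
    """IGMPv3 per-interface, per-group state: a filter mode plus a source list."""
    filter_mode: str                       # "include" or "exclude"
    sources: FrozenSet[str] = frozenset()  # unordered set of unicast source addresses

def source_requested(state: InterfaceState, source: str) -> bool:
    """Return True if traffic from `source` is requested under this state."""
    if state.filter_mode == "include":
        return source in state.sources      # only the listed sources
    if state.filter_mode == "exclude":
        return source not in state.sources  # every source except the listed ones
    raise ValueError(f"unknown filter mode: {state.filter_mode!r}")

if __name__ == "__main__":
    ssm_join = InterfaceState("include", frozenset({"10.215.1.1"}))
    any_source = InterfaceState("exclude")  # exclude nothing: every source is wanted
    print(source_requested(ssm_join, "10.215.1.1"))    # True
    print(source_requested(ssm_join, "10.215.1.2"))    # False
    print(source_requested(any_source, "10.215.1.2"))  # True
```

An include list with specific sources is how an SSM join is expressed, while exclude mode with an empty list is equivalent to an any-source join.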

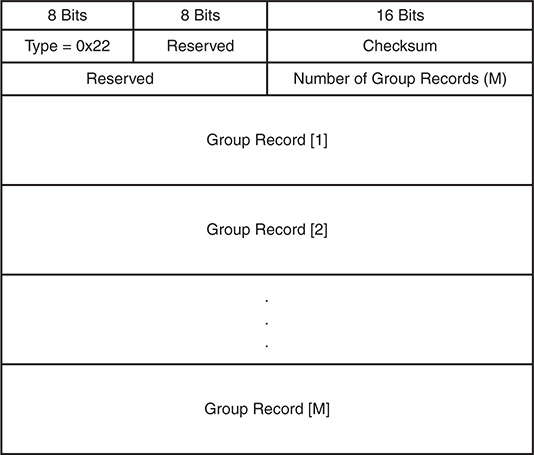

Figure 13-7 provides the IGMPv3 membership query message format, which includes several new fields when compared to the IGMPv2 membership query message, although the message type remains the same (0x11).

Figure 13-7 IGMPv3 Membership Query Message Format

The IGMPv3 membership query message fields are defined as follows:

Type 0x11: Membership query (general query, group specific query, or group and source specific query). These messages are differentiated by the contents of the group address and source address fields.

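Max Resp Code: Encodes the maximum time allowed for a host to send a responding report, in units of 1/10 second. Values below 128 represent the time directly; values of 128 and above use a floating-point (exponent and mantissa) encoding. This field enables the operator to tune the burstiness of IGMP traffic and the leave latency.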

Checksum: Ensures the integrity of the IGMP message. It is calculated over the entire IGMP message.

Group Address: Set to zero for a general query and set to the group address for group-specific or group-and-source-specific queries.

Resv: Set to zero and ignored on receipt.

S Flag: When set to 1, suppresses normal timer updates that routers perform when receiving a query.

QRV: Querier’s robustness variable. Used to overcome a potential packet loss. It allows a host to send multiple membership report messages to ensure that the querier receives them.

QQIC: Querier’s query interval code. Provides the querier’s query interval (QQI) in seconds, using the same encoding as the Max Resp Code field.

Number of Sources: Specifies how many sources are present in the query.

Source Address: Specific source unicast IP addresses.
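The Max Resp Code and QQIC fields share one encoding defined in RFC 3376: a value below 128 is used as is, while a value of 128 or more is split into a 3-bit exponent and a 4-bit mantissa. The following sketch decodes both fields; the function names are hypothetical helpers, not an NX-OS interface.

```python
def decode_igmpv3_code(code: int) -> int:
    """Decode an IGMPv3 Max Resp Code or QQIC byte (RFC 3376 encoding)."""
    if not 0 <= code <= 0xFF:
        raise ValueError("code must fit in one byte")
    if code < 128:
        return code                     # small values are literal
    exp = (code >> 4) & 0x07            # bits 1-3: exponent
    mant = code & 0x0F                  # bits 4-7: mantissa
    return (mant | 0x10) << (exp + 3)   # floating-point form

def max_resp_seconds(max_resp_code: int) -> float:
    """Max Resp Code is expressed in units of 1/10 second."""
    return decode_igmpv3_code(max_resp_code) / 10

def query_interval_seconds(qqic: int) -> int:
    """QQIC is expressed in seconds."""
    return decode_igmpv3_code(qqic)

if __name__ == "__main__":
    print(max_resp_seconds(100))        # 10.0
    print(query_interval_seconds(125))  # 125
    print(decode_igmpv3_code(0x80))     # 128
    print(decode_igmpv3_code(0xFF))     # 31744
```

Common settings such as a 10-second maximum response time (code 100) or a 125-second query interval fall below 128 and are therefore carried literally.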

Several differences appear when compared to IGMPv2. The most significant is the capability to have group and source specific queries, enabling query messages to be sent for specific sources of a multicast group.

The membership report message type for IGMPv3 is identified by the message type 0x22 and involves several changes when compared to the membership report message used in IGMPv2. Receiver hosts use this message type to report the current membership state of their interfaces, as well as any change in the membership state to the local multicast router. Hosts send this message to multicast routers using the group IP destination address of 224.0.0.22. Figure 13-8 shows the format of the membership report for IGMPv3.

Figure 13-8 IGMPv3 Membership Report Message Format

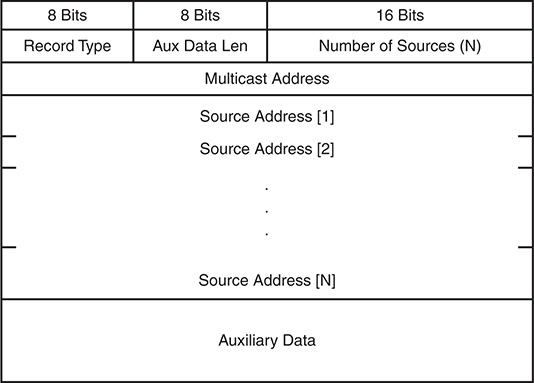

Each group record in the membership report uses the format shown in Figure 13-9.

Figure 13-9 IGMPv3 Membership Report Group Record Format

The IGMPv3 membership report message fields are defined in the following list:

Type 0x22: IGMPv3 membership report

Reserved: Set to zero on transmit and ignored on receipt

Checksum: Verifies the integrity of the message

Number of Group Records: Provides the number of group records present in this membership report

Group Record: A block of fields that provides the sender’s membership in a single multicast group on the interface from which the report was sent

The fields in each group record are defined here:

Record Type: The type of group record.

Current-State Record: The current reception state of the interface

Mode_is_include: Filter mode is include

Mode_is_exclude: Filter mode is exclude

Filter-Mode-Change Record: Indication that the filter mode has changed

Change_to_Include_Mode: Filter mode change to include

Change_to_Exclude_Mode: Filter mode change to exclude

Source-List-Change Record: Indication that the source list has changed, not the filter mode

Allow_New_Sources: List new sources being requested

Block_Old_Sources: List sources no longer being requested

Aux Data Len: Length of the auxiliary data in the group record, in units of 32-bit words.

Number of Sources: How many sources are present in this group record.

Multicast Address: The multicast group this record pertains to.

Source Address: The unicast IP address of a source for the group.

Auxiliary Data: Additional information pertaining to the group record. IGMPv3 does not define any auxiliary data, so the Aux Data Len should be set to zero and any auxiliary data received should be ignored.

Additional Data: Accounted for in the IGMP checksum, but any data beyond the last group record is ignored.
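The report header and group record layout above can be walked with a short parser. This is an illustrative sketch only: the function and class names are hypothetical, the checksum is not verified, and the sample report in the usage block is constructed by hand rather than captured from a device. The numeric record type values come from RFC 3376.

```python
import socket
import struct
from typing import List, NamedTuple

# Record Type values defined in RFC 3376
RECORD_TYPES = {
    1: "MODE_IS_INCLUDE",
    2: "MODE_IS_EXCLUDE",
    3: "CHANGE_TO_INCLUDE_MODE",
    4: "CHANGE_TO_EXCLUDE_MODE",
    5: "ALLOW_NEW_SOURCES",
    6: "BLOCK_OLD_SOURCES",
}

class GroupRecord(NamedTuple):
    record_type: str
    group: str
    sources: List[str]

def parse_igmpv3_report(packet: bytes) -> List[GroupRecord]:
    """Parse the group records of an IGMPv3 membership report (checksum not verified)."""
    # Header: Type, Reserved, Checksum, Reserved, Number of Group Records
    msg_type, _, _, _, num_records = struct.unpack("!BBHHH", packet[:8])
    if msg_type != 0x22:
        raise ValueError(f"not an IGMPv3 membership report: type {msg_type:#04x}")
    records: List[GroupRecord] = []
    offset = 8
    for _ in range(num_records):
        # Record Type, Aux Data Len, Number of Sources, Multicast Address
        rtype, aux_words, num_sources, group = struct.unpack(
            "!BBH4s", packet[offset:offset + 8])
        offset += 8
        sources = [socket.inet_ntoa(packet[offset + 4 * i:offset + 4 * i + 4])
                   for i in range(num_sources)]
        offset += 4 * num_sources
        offset += 4 * aux_words  # Aux Data Len counts 32-bit words; contents are ignored
        records.append(GroupRecord(RECORD_TYPES.get(rtype, f"UNKNOWN({rtype})"),
                                   socket.inet_ntoa(group), sources))
    return records

if __name__ == "__main__":
    # Hand-built report: one MODE_IS_INCLUDE record for 239.215.215.1, source 10.215.1.1
    report = (struct.pack("!BBHHH", 0x22, 0, 0, 0, 1)
              + struct.pack("!BBH4s", 1, 0, 1, socket.inet_aton("239.215.215.1"))
              + socket.inet_aton("10.215.1.1"))
    print(parse_igmpv3_report(report))
```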

The most significant difference in the IGMPv3 membership report when compared to the IGMPv2 membership report is the inclusion of the group record block data. This is where the IGMPv3-specific functionality for the filter mode and source list is implemented.

IGMPv3 is backward compatible with previous versions of IGMP and still follows the same general state machine mechanics. When a host or router running an older version of IGMP is detected, IGMPv1 and IGMPv2 messages are translated into their IGMPv3 equivalents. For example, an IGMPv2 membership report for 239.1.1.1 is represented in IGMPv3 as an exclude-mode record with an empty source list, which requests traffic from all sources for the group.
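The translation can be summarized as a lookup from the older message types to IGMPv3 state-change records. The mapping below follows the router-side compatibility rules in RFC 3376 (a v1 or v2 report becomes a change to exclude mode with no sources, and a v2 leave becomes a change to include mode with no sources); the function and type names are hypothetical.

```python
from typing import FrozenSet, NamedTuple

class V3Record(NamedTuple):
    record_type: str
    group: str
    sources: FrozenSet[str]

def translate_legacy_message(igmp_type: int, group: str) -> V3Record:
    """Map an IGMPv1/IGMPv2 host message to its IGMPv3 equivalent record."""
    if igmp_type in (0x12, 0x16):
        # v1 or v2 membership report: exclude nothing, so every source is requested
        return V3Record("CHANGE_TO_EXCLUDE_MODE", group, frozenset())
    if igmp_type == 0x17:
        # v2 leave group: include nothing, so no source is requested any longer
        return V3Record("CHANGE_TO_INCLUDE_MODE", group, frozenset())
    raise ValueError(f"not an IGMPv1/IGMPv2 report or leave: {igmp_type:#04x}")

if __name__ == "__main__":
    print(translate_legacy_message(0x16, "239.1.1.1"))
    print(translate_legacy_message(0x17, "239.1.1.1"))
```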

As in IGMPv2, the querier still sends general queries to the All-Hosts group 224.0.0.1. Hosts respond with a membership report message, which now includes specific sources in a source list and include or exclude logic in the record type field. Hosts that want to join a new multicast group or source send unsolicited membership reports. When leaving a group or a specific source, a host sends a membership report containing a state-change record (for example, a change to include mode with an empty source list, or a block of old sources) to indicate the change in state. The leave group message found in IGMPv2 is not used in IGMPv3. If no other members remain for the group or source, the querier sends a group-specific or group-and-source-specific query before pruning the interface. The multicast router keeps an interface state table for each group and source and updates it as needed when an include or exclude update is received in a group record.

IGMP Snooping

Without IGMP snooping, a switch must flood multicast packets to each port in a VLAN to ensure that every potential group member receives the traffic. Obviously, bandwidth and processing efficiency are reduced if ports on the switch do not have an interested receiver attached. IGMP snooping inspects (or “snoops on”) the higher-layer protocol communication traversing the switch. Looking into the contents of IGMP messages allows the switch to learn where multicast routers and interested receivers for a group are attached. IGMP snooping operates in the control plane by optimizing and suppressing IGMP messages from hosts, and operates in the data plane by installing multicast MAC address and port-mapping entries into the local multicast MAC address table of the switch. The entries created by IGMP snooping are installed in the same MAC address table as unicast entries. Despite the fact that different commands are used for viewing the entries installed by normal unicast learning and IGMP snooping, they share the same hardware resources provided by the MAC address table.

An IGMP snooping switch listens for IGMP query messages and PIM hello messages to determine which ports are connected to mrouters. When a port is determined to be an mrouter port, it receives all multicast traffic in the VLAN so that appropriate control plane state on the mrouter is created and sources are registered with the PIM RP, if applicable. The snooping switch also forwards IGMP membership reports to the mrouter to initiate the flow of multicast traffic to group members.

Host ports are discovered by listening for IGMP membership report messages. The membership reports are evaluated to determine which groups and sources are being requested, and the appropriate forwarding entries are added to the multicast MAC address table or IP-based forwarding table. An IGMP snooping switch should not forward membership reports to hosts: IGMPv1 and IGMPv2 hosts that hear another member's report suppress their own, so the switch would stop seeing reports on those hosts' ports and could not track them as group members.

If a multicast packet for the Local Network Control Block (224.0.0.0/24) arrives, it might need to be flooded on all ports. This is because devices can listen for groups in this range without sending a membership report for the group, and suppressing those packets could interrupt control plane protocols.

IGMP snooping is a separate process from the IGMP control plane process and is enabled by default in NX-OS. No user configuration is required to have the basic functionality running on the device. NX-OS builds its IGMP snooping table based on the group IP address instead of the multicast MAC address for the group. This behavior allows optimal forwarding even when multiple L3 group addresses map to the same multicast MAC address. Such overlap occurs because only the low-order 23 bits of the group address are copied into the MAC address, so 32 group addresses share each multicast MAC address. The output in Example 13-2 demonstrates how to verify the IGMP snooping state and lookup mode for a VLAN.
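The address overlap that motivates IP-based lookup is easy to demonstrate. The sketch below applies the standard IPv4 multicast-to-Ethernet mapping (the 01:00:5e prefix followed by the low-order 23 bits of the group address); the function name is hypothetical, and 224.87.215.1 is chosen only because it collides with the group used in this chapter.

```python
import ipaddress

def multicast_mac(group: str) -> str:
    """Return the Ethernet MAC address that an IPv4 multicast group maps to."""
    addr = ipaddress.IPv4Address(group)
    if not addr.is_multicast:
        raise ValueError(f"{group} is not an IPv4 multicast address")
    low23 = int(addr) & 0x7FFFFF     # only the low-order 23 bits survive
    mac = 0x01005E000000 | low23     # 01:00:5e prefix for IPv4 multicast
    return ":".join(f"{(mac >> shift) & 0xFF:02x}" for shift in range(40, -8, -8))

if __name__ == "__main__":
    for group in ("239.215.215.1", "224.87.215.1", "239.1.1.1"):
        print(group, multicast_mac(group))
    # 239.215.215.1 and 224.87.215.1 both map to 01:00:5e:57:d7:01
```

A switch that forwards on the MAC address alone would deliver both groups to receivers of either one, which is the suboptimal forwarding that IP-based lookup avoids.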

Example 13-2 Verify IGMP Snooping

Global IGMP Snooping Information:

IGMP Snooping enabled

Optimised Multicast Flood (OMF) enabled

IGMPv1/v2 Report Suppression enabled

IGMPv3 Report Suppression disabled

Link Local Groups Suppression enabled

IGMP Snooping information for vlan 115

IGMP querier present, address: 10.115.1.254, version: 2, i/f Po1

Switch-querier disabled

IGMPv3 Explicit tracking enabled

IGMPv2 Fast leave disabled

IGMPv1/v2 Report suppression enabled

IGMPv3 Report suppression disabled

Link Local Groups suppression enabled

Router port detection using PIM Hellos, IGMP Queries

Number of router-ports: 1

Number of groups: 1

VLAN vPC function disabled

Active ports:

Po1 Po2 Eth3/19

It is possible to configure the device to use a MAC address–based forwarding mechanism on a per-VLAN basis, although it can lead to suboptimal forwarding because of address overlap. This option is configured in the VLAN configuration submode in Example 13-3.

Example 13-3 Enable MAC Address Lookup Mode

NX-2(config-vlan-config)# layer-2 multicast lookup mac

If multicast traffic arrives for a group that a host has not requested via a membership report message, those packets are forwarded to the mrouter ports only, by default. This is called optimized multicast flooding in NX-OS and is shown as enabled by default in Example 13-2. If this feature is disabled, traffic for an unknown group is flooded to all ports in the VLAN.
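The forwarding decision for a snooping switch, including the effect of optimized multicast flooding and the Local Network Control Block exception, can be sketched as a small function. This is a simplified model under stated assumptions, not the NX-OS forwarding logic: it ignores vPC peer-link rules, IGMPv3 source filtering, and hardware details, and the function name and the ingress port Po2 in the usage block are hypothetical. The port names otherwise match the VLAN 115 example in this chapter.

```python
import ipaddress
from typing import Dict, Set

LINK_LOCAL = ipaddress.IPv4Network("224.0.0.0/24")

def egress_ports(group: str, ingress: str, all_ports: Set[str],
                 mrouter_ports: Set[str], members: Dict[str, Set[str]],
                 omf_enabled: bool = True) -> Set[str]:
    """Return the ports a multicast frame for `group` is sent out of."""
    if ipaddress.IPv4Address(group) in LINK_LOCAL:
        out = set(all_ports)                  # Local Network Control Block: flood
    elif group in members:
        out = members[group] | mrouter_ports  # known group: receivers plus mrouter ports
    elif omf_enabled:
        out = set(mrouter_ports)              # unknown group with OMF: mrouter ports only
    else:
        out = set(all_ports)                  # unknown group without OMF: flood the VLAN
    out.discard(ingress)                      # never send a frame back out its ingress port
    return out

if __name__ == "__main__":
    ports = {"Po1", "Po2", "Eth3/19"}
    mrouters = {"Po1"}
    groups = {"239.215.215.1": {"Eth3/19"}}
    print(egress_ports("239.215.215.1", "Po2", ports, mrouters, groups))  # Po1, Eth3/19
    print(egress_ports("239.1.1.1", "Po2", ports, mrouters, groups))      # Po1 only
```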

Note

Optimized multicast flooding should be disabled in IPv6 networks to avoid problems related to neighbor discovery (ND) that rely specifically on multicast communication. This feature is disabled with the no ip igmp snooping optimised-multicast-flood command in VLAN configuration mode.

IGMP membership reports are suppressed by default to reduce the number of messages the mrouter receives. Recall that the mrouter needs to receive a membership report from only one host for the interface to be added to the OIL for a group.
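Report suppression on the snooping switch can be modeled as forwarding only the first report seen for each group within a query interval. The sketch below is a simplification under that assumption: the function name is hypothetical, it ignores timers and IGMPv3 reports, and the hosts 10.115.1.5 and 10.115.1.6 are hypothetical additions to the receiver 10.115.1.4 from this chapter's example.

```python
from typing import Iterable, List, Set, Tuple

def reports_to_mrouter(reports: Iterable[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Return the (host, group) reports forwarded toward the mrouter port.

    `reports` are the IGMPv1/IGMPv2 reports received in arrival order
    during one query interval.
    """
    forwarded: List[Tuple[str, str]] = []
    seen_groups: Set[str] = set()
    for host, group in reports:
        if group in seen_groups:
            continue  # the mrouter already knows this group has a member
        seen_groups.add(group)
        forwarded.append((host, group))
    return forwarded

if __name__ == "__main__":
    received = [("10.115.1.4", "239.215.215.1"),
                ("10.115.1.5", "239.215.215.1"),
                ("10.115.1.6", "239.1.1.1")]
    print(reports_to_mrouter(received))
```

The switch still records every reporting host port for forwarding; only the copies sent to the mrouter are reduced.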

NX-OS has several options available when configuring IGMP snooping. Most of the configuration is applied per VLAN, but certain parameters can be configured only globally. Global values apply to all VLANs. Table 13-7 provides the default configuration parameters for IGMP snooping that apply globally on the switch.

Table 13-7 IGMP Snooping Global Configuration Parameters

| Parameter | CLI Command | Description |
|---|---|---|
| IGMP snooping | ip igmp snooping | Enables IGMP snooping on the active VDC. The default is enabled. Note: If the global setting is disabled, all VLANs are treated as disabled, whether they are enabled or not. |
| Event-history | ip igmp snooping event-history { vpc \| igmp-snoop-internal \| mfdm \| mfdm-sum \| vlan \| vlan-events } size buffer-size | Configures the size of the IGMP snooping history buffers. The default is small. |
| Group timeout | ip igmp snooping group-timeout { minutes \| never } | Configures the group membership timeout for all VLANs on the device. |
| Link-local groups suppression | ip igmp snooping link-local-groups-suppression | Configures link-local groups suppression on the device. The default is enabled. |
| Optimise-multicast-flood (OMF) | ip igmp optimise-multicast-flood | Configures OMF on all VLANs. The default is enabled. |
| Proxy | ip igmp snooping proxy general-inquiries [ mrt seconds ] | Enables the snooping function to proxy reply to general queries from the multicast router while also sending round-robin general queries on each switchport with the specified MRT value. The default is 5 seconds. |
| Report suppression | ip igmp snooping report-suppression | Limits the membership report traffic sent to multicast-capable routers on the device. When you disable report suppression, all IGMP reports are sent as is to multicast-capable routers. The default is enabled. |
| IGMPv3 report suppression | ip igmp snooping v3-report-suppression | Configures IGMPv3 report suppression and proxy reporting on the device. The default is disabled. |

Table 13-8 provides the IGMP snooping configuration parameters, which are configured per VLAN. The per-VLAN configuration is applied in the vlan configuration [vlan-id] submode.

Table 13-8 IGMP Snooping per-VLAN Configuration Parameters

| Parameter | CLI Command | Description |
|---|---|---|
| IGMP snooping | ip igmp snooping | Enables IGMP snooping on a per-VLAN basis. The default is enabled. |
| Explicit tracking | ip igmp snooping explicit-tracking | Tracks IGMPv3 membership reports from individual hosts for each port on a per-VLAN basis. The default is enabled. |
| Fast leave | ip igmp snooping fast-leave | Enables the software to remove the group state when it receives an IGMP leave report without sending an IGMP query message. This parameter is used for IGMPv2 hosts when no more than one host is present on each VLAN port. The default is disabled. |
| Group timeout | ip igmp snooping group-timeout { minutes \| never } | Modifies or disables the default behavior of expiring IGMP snooping group membership after three missed general queries. |
| Last member query interval | ip igmp snooping last-member-query-interval seconds | Sets the interval that the software waits after sending an IGMP query to verify that a network segment no longer has hosts that want to receive a particular multicast group. If no hosts respond before the last member query interval expires, the software removes the group from the associated VLAN port. Values range from 1 to 25 seconds. The default is 1 second. |
| Optimize-multicast-flood | ip igmp optimised-multicast-flood | Configures OMF on the specified VLAN. The default is enabled. |
| Proxy | ip igmp snooping proxy general-queries [ mrt seconds ] | Enables the snooping function to proxy reply to general queries from the multicast router while also sending round-robin general queries on each switchport with the specified MRT value. The default is 5 seconds. |
| Snooping querier | ip igmp snooping querier ip-address | Configures a snooping querier on an interface when you do not enable PIM because multicast traffic does not need to be routed. |
| Query timeout | ip igmp snooping querier-timeout seconds | Query timeout value for IGMPv2. The default is 255 seconds. |
| Query interval | ip igmp snooping query-interval seconds | Time between query transmissions. The default is 125 seconds. |
| Query max response time | ip igmp snooping query-max-response-time seconds | Max response time for query messages. The default is 10 seconds. |
| Startup count | ip igmp snooping startup-query-count value | Number of queries sent at startup. The default is 2. |
| Startup interval | ip igmp snooping startup-query-interval seconds | Interval between queries at startup. The default is 31 seconds. |
| Robustness variable | ip igmp snooping robustness-variable value | Configures the robustness value for the specified VLANs. The default is 2. |
| Report suppression | ip igmp snooping report-suppression | Limits the membership report traffic sent to multicast-capable routers on a per-VLAN basis. When you disable report suppression, all IGMP reports are sent as is to multicast-capable routers. The default is enabled. |
| Static mrouter port | ip igmp snooping mrouter interface interface | Configures a static connection to a multicast router. The interface to the router must be in the selected VLAN. |
| Layer 2 static group | ip igmp snooping static-group group-ip-addr [ source source-ip-addr ] interface interface | Configures a Layer 2 port of a VLAN as a static member of a multicast group. |
| Link-local groups suppression | ip igmp snooping link-local-groups-suppression | Configures link-local groups suppression on a per-VLAN basis. The default is enabled. |
| IGMPv3 report suppression | ip igmp snooping v3-report-suppression | Configures IGMPv3 report suppression and proxy reporting on a per-VLAN basis. The default is enabled per VLAN. |
| Version | ip igmp snooping version value | Configures the IGMP version number for the specified VLANs. |

In a pure L2 deployment of multicast, a snooping querier must be configured. This applies to situations in which PIM is not enabled on any interfaces, no mrouter is present, and no multicast traffic is being routed between VLANs.
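Several defaults in Table 13-8 follow from the IGMPv2 timer formulas in RFC 2236, which helps when reasoning about how long state survives after queries are missed. The sketch below computes those derived values from the robustness variable, query interval, query response interval, and last member query interval; the class and property names are hypothetical, and the formulas are the RFC's rather than a description of NX-OS internals. With the defaults, the other-querier-present interval works out to the 255-second querier timeout, and one quarter of the query interval (31.25 seconds) corresponds to the 31-second startup interval.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class IgmpTimers:
    """Derived IGMPv2 timers (RFC 2236 formulas); all values in seconds."""
    robustness: int = 2
    query_interval: float = 125.0
    query_response_interval: float = 10.0
    last_member_query_interval: float = 1.0

    @property
    def group_membership_interval(self) -> float:
        # How long a group stays active on an interface without a report
        return self.robustness * self.query_interval + self.query_response_interval

    @property
    def other_querier_present_interval(self) -> float:
        # How long a non-querier waits before taking over as querier
        return self.robustness * self.query_interval + self.query_response_interval / 2

    @property
    def startup_query_interval(self) -> float:
        return self.query_interval / 4

    @property
    def last_member_query_time(self) -> float:
        # Last member query count defaults to the robustness variable
        return self.robustness * self.last_member_query_interval

if __name__ == "__main__":
    t = IgmpTimers()
    print(t.group_membership_interval)       # 260.0
    print(t.other_querier_present_interval)  # 255.0
    print(t.startup_query_interval)          # 31.25
    print(t.last_member_query_time)          # 2.0
```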

Note

When vPC is configured with IGMP snooping, configuring the same IGMP parameters on both vPC peers is recommended. IGMP state is synchronized between vPC peers with Cisco Fabric Services (CFS).

IGMP Verification

IGMP is enabled by default when PIM is enabled on an interface. Troubleshooting IGMP problems typically involves scenarios in which the LHR does not have an mroute entry populated by IGMP and the problem needs to be isolated to the LHR, the L2 infrastructure, or the host itself. Often IGMP snooping must be verified during this process because it is enabled by default and therefore plays an important role in delivering the queries to hosts and delivering the membership report messages to the mrouter.

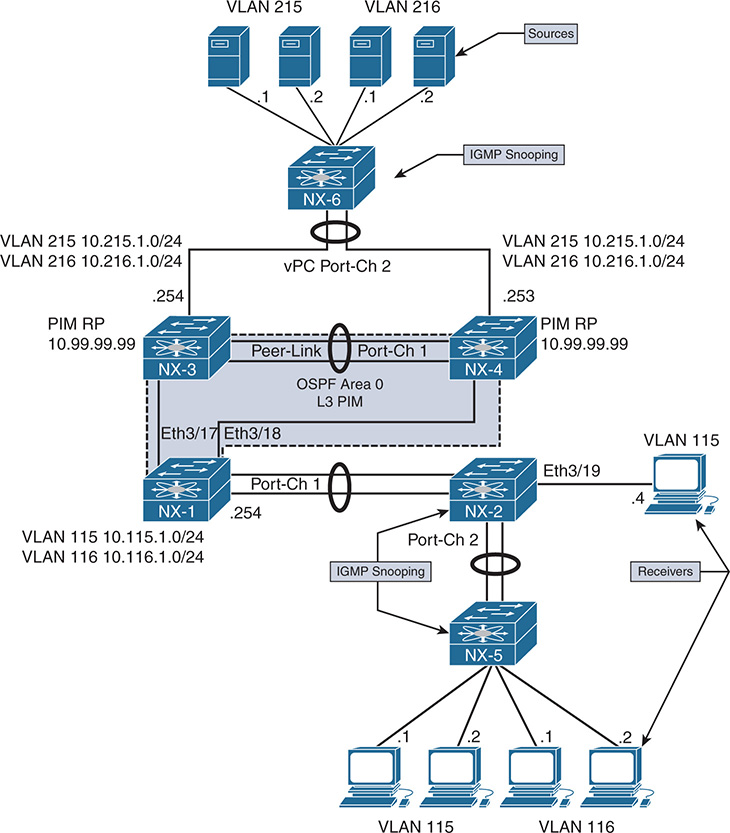

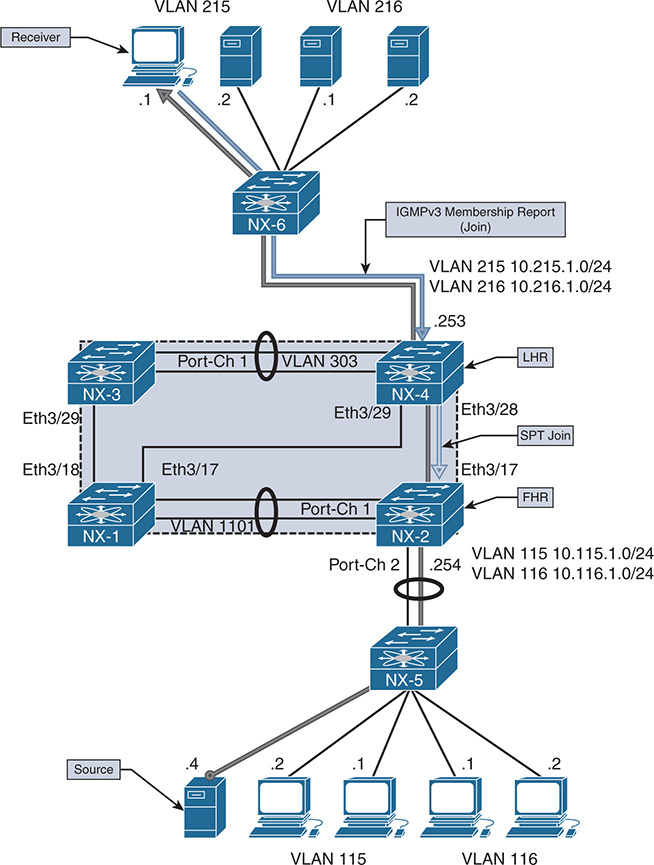

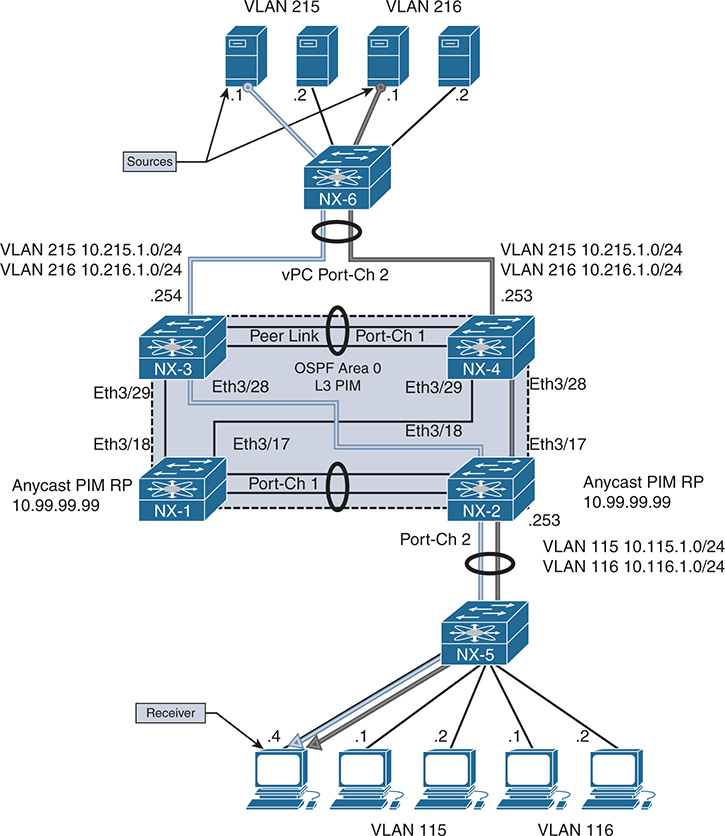

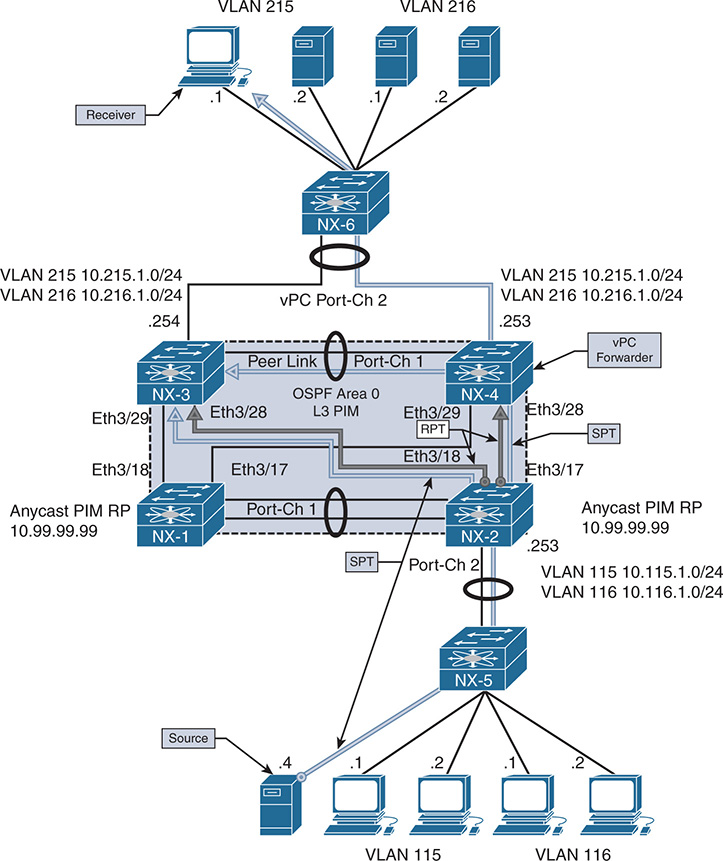

In the topology in Figure 13-10, NX-1 is acting as the LHR for receivers in VLAN 115 and VLAN 116. NX-1 is also the IGMP querier for both VLANs. NX-2 is an IGMP snooping switch that is not performing any multicast routing. All L3 devices are configured for PIM ASM, with an anycast RP address shared between NX-3 and NX-4.

Figure 13-10 IGMP Verification Example Topology

If a receiver is not getting multicast traffic for a group, verify IGMP for correct state and operation. To begin the investigation, the following information is required:

Multicast Group Address: 239.215.215.1

IP address of the source: 10.215.1.1

IP address of the receiver: 10.115.1.4

LHR: NX-1

Scope of the problem: The groups, sources, and receivers that are not functioning

The purpose of IGMP is to inform the LHR that a receiver is interested in group traffic. At the most basic level, this is communicated through a membership report message from the receiver and should create a (*, G) state at the LHR. In most circumstances, checking the mroute at the LHR for the presence of the (*, G) is enough to verify that at least one membership report was received. The OIL for the mroute should contain the interface on which the membership report was received. If this check passes, typically the troubleshooting follows the MDT to the PIM RP or source to determine why traffic is not arriving at the receiver.

In the following examples, no actual IGMP problem condition is present because the (*, G) state exists on NX-1. Instead of troubleshooting a specific problem, this section reviews the IGMP protocol state and demonstrates the command output, process events, and methodology used to verify functionality.

Verification begins on NX-2, the IGMP snooping switch connected to receiver 10.115.1.4, and works across the L2 network toward the mrouter, NX-1. Example 13-4 contains the output of show ip igmp snooping vlan 115; VLAN 115 is the VLAN in which the receiver connects to NX-2. This output is used to verify that IGMP snooping is enabled and that the mrouter port is detected.

Example 13-4 IGMP Snooping Status for VLAN 115

Global IGMP Snooping Information:

IGMP Snooping enabled

Optimised Multicast Flood (OMF) enabled

IGMPv1/v2 Report Suppression enabled

IGMPv3 Report Suppression disabled

Link Local Groups Suppression enabled

Optimised Multicast Flood (OMF) enabled

IGMPv3 Explicit tracking enabled

IGMPv2 Fast leave disabled

IGMPv1/v2 Report suppression enabled

IGMPv3 Report suppression disabled

Link Local Groups suppression enabled

Router port detection using PIM Hellos, IGMP Queries

Active ports:

Po1 Po2 Eth3/19

The Number of Groups field indicates that one group is present. The show ip igmp snooping groups vlan 115 command is used to obtain additional detail about the group, as in Example 13-5.

Example 13-5 VLAN 115 IGMP Snooping Group Membership

Type: S - Static, D - Dynamic, R - Router port, F - Fabricpath core port

Vlan Group Address Ver Type Port list

115 */* - R Po1

The last reporter is seen using the detail keyword, shown in Example 13-6.

Example 13-6 Detailed VLAN 115 IGMP Snooping Group Membership

IGMP Snooping group membership for vlan 115

Group addr: 239.215.215.1

Group ver: v2 [old-host-timer: not running]

IGMPv2 member ports:

IGMPv1/v2 memb ports:

Eth3/19 [0 GQ missed], cfs:false, native:true

vPC grp peer-link flag: include

M2RIB vPC grp peer-link flag: include

Note

If MAC-based multicast forwarding were configured for VLAN 115, the multicast MAC table entry could be confirmed with the show hardware mac address-table [module] [VLAN identifier] command. No software MAC table entry appears in the output of show mac address-table multicast [VLAN identifier], which is expected.

NX-2 is configured to use IP-based lookup for IGMP snooping. The show forwarding distribution ip igmp snooping vlan [VLAN identifier] command in Example 13-7 is used to find the platform index, which directs the frames to the correct output interfaces. The platform index is also known as the Local Target Logic (LTL) index. This command provides the Multicast Forwarding Distribution Manager (MFDM) entry, which was discussed in the “NX-OS Multicast Architecture” section of this chapter.

Example 13-7 IGMP Snooping MFDM Entry

Vlan: 115, Group: 239.215.215.1, Source: 0.0.0.0

Route Flags: 0

Outgoing Interface List Index: 13

Reference Count: 2

Platform Index: 0x7fe8

Vpc peer link exclude flag clear

Number of Outgoing Interfaces: 2

The Ethernet3/19 interface is populated by the membership report from the receiver. The Port-channel 1 interface is included as an outgoing interface because it is the mrouter port. Verify the platform index as shown in Example 13-8 to ensure that the correct interfaces are present and match the previous MFDM output. The show system internal pixm info ltl [index] command obtains the output from the Port Index Manager (PIXM). The IFIDX/RID is 0xd, which matches the Outgoing Interface List Index of 13.

Example 13-8 Verify the Platform LTL Index

MCAST LTLs allocated for VDC:1

============================================

LTL IFIDX/RID LTL_FLAG CB_FLAG

0x7fe8 0x0000000d 0x00 0x0002

mi | v5_f3_fpoe | v4_fpoe | v5_fpoe | clp_v4_l2 | clp_v5_l2 | clp20_v4_l3 | clp_cr_v4_l3 | flag | proxy_if_index

0x3 | 0x3 | 0x0 | 0x3 | 0x0 | 0x3 | 0x3 | 0x3 | 0x0 | none

Member info

------------------

IFIDX LTL

---------------------------------

Eth3/19 0x0012

Po1 0x0404
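The comparison between Example 13-7 and Example 13-8 comes down to two checks: the hexadecimal IFIDX/RID must equal the decimal Outgoing Interface List Index, and the LTL member interfaces must match the MFDM outgoing interfaces. The following Python sketch automates those two checks against text copied from the outputs. The function names are hypothetical, and it assumes the MFDM interface names (Ethernet3/19 and Port-channel 1 in the text) have already been normalized to the short form PIXM prints.

```python
def parse_member_info(rows: list[str]) -> dict[str, int]:
    """Parse 'IFIDX LTL' rows from the PIXM 'Member info' table."""
    members = {}
    for row in rows:
        ifidx, ltl = row.split()
        members[ifidx] = int(ltl, 16)
    return members

def check_platform_index(ifidx_rid: str, oil_index: int,
                         member_rows: list[str], mfdm_oifs: list[str]) -> list[str]:
    """Return mismatches between the PIXM LTL entry and the MFDM entry."""
    problems = []
    if int(ifidx_rid, 16) != oil_index:  # 0x0000000d must equal 13
        problems.append(f"IFIDX/RID {ifidx_rid} does not match OIL index {oil_index}")
    members = set(parse_member_info(member_rows))
    expected = set(mfdm_oifs)
    for name in sorted(expected - members):
        problems.append(f"{name} is in the MFDM OIL but not in the LTL")
    for name in sorted(members - expected):
        problems.append(f"{name} is in the LTL but not in the MFDM OIL")
    return problems

# Values taken from Examples 13-7 and 13-8
print(check_platform_index("0x0000000d", 13,
                           ["Eth3/19 0x0012", "Po1 0x0404"],
                           ["Eth3/19", "Po1"]))  # []
```

An empty list means the hardware LTL agrees with the software MFDM entry; any string returned points at the specific interface or index that differs.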

Note

If the IFIDX of interest is a port-channel, the physical interface is found by examining the LTL index of the port-channel. Chapter 5, “Port-Channels, Virtual Port-Channels, and FabricPath,” demonstrates the port-channel load balance hash and how to find the port-channel member link that will be used to transmit the packet.

At this point, the available show commands have verified both the IGMP snooping control plane and the forwarding plane state for the group. NX-OS also provides several useful event-history records for IGMP and other multicast protocols. The event-history output collects significant events from the process and stores them in a circular buffer. In most situations, the event-history records for multicast protocols provide the same level of detail that is available with process debugs.
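A circular buffer holds a fixed number of entries and silently discards the oldest one when a new event arrives. The following toy Python model, built on collections.deque with maxlen, illustrates that behavior. It is a conceptual sketch, not NX-OS code; the EventHistory class and its capacity are hypothetical, and the messages are abbreviated from Example 13-9.

```python
from collections import deque

class EventHistory:
    """Toy bounded event log illustrating circular-buffer behavior (not NX-OS code)."""

    def __init__(self, capacity: int):
        # deque with maxlen drops the oldest entry automatically once full
        self._buf = deque(maxlen=capacity)

    def log(self, timestamp: str, message: str) -> None:
        self._buf.append((timestamp, message))

    def show(self) -> list[str]:
        # Display the most recent event first
        return [f"{ts} {msg}" for ts, msg in reversed(self._buf)]

hist = EventHistory(capacity=3)
hist.log("02:19:33.721623", "Received v2 General query from 10.115.1.254 on Po1")
hist.log("02:19:33.721639", "Report timer not running")
hist.log("02:19:33.729962", "Received v2 report: group 239.215.215.1 from 10.115.1.4 on Eth3/19")
hist.log("02:19:33.729973", "Updated oif Eth3/19 for (*, 239.215.215.1) entry")
for line in hist.show():
    print(line)
```

With a capacity of three, the fourth event pushes the general query out of the buffer. The same effect applies on a busy switch: the relevant event-history should be collected soon after the problem occurs, before newer events overwrite it.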

The show ip igmp snooping internal event-history vlan command provides a sequence of IGMP snooping events for VLAN 115 and the group of interest, 239.215.215.1. Example 13-9 shows the reception of a general query message from Port-channel 1, as well as the membership report message received from 10.115.1.4 on Eth3/19. Event-history entries are listed with the most recent event first, so the sequence is read from the bottom up.

Example 13-9 IGMP Snooping VLAN Event-History

239.215.215.1|General

(*, 239.215.215.1) came on Eth3/19

02:19:33.729973 igmp [7177]: [7314]: SN: <115> Updated oif Eth3/19 for (*, 239.215.215.1) entry

02:19:33.729962 igmp [7177]: [7314]: SN: <115> Received v2 report: group 239.215.215.1 from 10.115.1.4 on Eth3/19

02:19:33.721639 igmp [7177]: [7314]: SN: <115> Report timer not running. ..starting with MRT expiry 10 for group: 239.215.215.1

02:19:33.721623 igmp [7177]: [7314]: SN: <115> Received v2 General query from 10.115.1.254 on Po1

The Ethanalyzer tool provides a way to capture packets at the netstack component level in NX-OS. This is an extremely useful tool for troubleshooting any control plane protocol exchange. In Example 13-10, an Ethanalyzer capture filtered for IGMP packets clearly shows the receipt of the general query messages, as well as the membership report from 10.115.1.4. Ethanalyzer output is directed to local storage with the write option. The file can then be copied off the device for a detailed protocol examination, if needed.

Example 13-10 Ethanalyzer Capture of IGMP Messages on NX-2

1 02:29:24.420135 10.115.1.254 -> 224.0.0.1 IGMPv2 Membership Query, general

2 02:29:24.421061 10.115.1.254 -> 224.0.0.1 IGMPv2 Membership Query, general

3 02:29:24.430482 10.115.1.4 -> 239.215.215.1 IGMPv2 Membership Report group 239.215.215.1
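Ethanalyzer decodes the IGMP header for you. To show what it is reading, the following minimal Python sketch builds and decodes the 8-byte IGMPv2 message defined in RFC 2236: a type field (0x11 query, 0x16 v2 report, 0x17 leave), a maximum response time in tenths of a second, a one's complement checksum, and the group address. A general query carries group 0.0.0.0. The function names are hypothetical, and the sketch handles IGMPv2 only, not the longer IGMPv3 formats.

```python
import socket
import struct

# IGMP message types (RFC 2236)
IGMP_TYPES = {
    0x11: "Membership Query",
    0x12: "IGMPv1 Membership Report",
    0x16: "IGMPv2 Membership Report",
    0x17: "Leave Group",
}
HEADER = struct.Struct("!BBH4s")  # type, max resp time (1/10 s), checksum, group

def inet_checksum(data: bytes) -> int:
    """16-bit one's complement checksum (RFC 1071)."""
    if len(data) % 2:
        data += b"\x00"
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF

def build_igmpv2(msg_type: int, max_resp_tenths: int, group: str) -> bytes:
    group_raw = socket.inet_aton(group)
    csum = inet_checksum(HEADER.pack(msg_type, max_resp_tenths, 0, group_raw))
    return HEADER.pack(msg_type, max_resp_tenths, csum, group_raw)

def describe_igmpv2(pkt: bytes) -> str:
    if len(pkt) < HEADER.size:
        raise ValueError("truncated IGMP message")
    if inet_checksum(pkt[:HEADER.size]) != 0:  # a valid message sums to zero
        raise ValueError("bad IGMP checksum")
    msg_type, max_resp, _csum, group_raw = HEADER.unpack(pkt[:HEADER.size])
    group = socket.inet_ntoa(group_raw)
    name = IGMP_TYPES.get(msg_type, f"unknown type 0x{msg_type:02x}")
    if msg_type == 0x11:
        scope = "general" if group == "0.0.0.0" else f"group-specific {group}"
        return f"{name}, {scope}, max resp {max_resp / 10:.1f} s"
    return f"{name} group {group}"

query = build_igmpv2(0x11, 100, "0.0.0.0")       # like the query from 10.115.1.254
report = build_igmpv2(0x16, 0, "239.215.215.1")  # like the report from 10.115.1.4
print(describe_igmpv2(query))
print(describe_igmpv2(report))
```

The max response time of 100 tenths corresponds to the 10-second value configured on the querier in this topology.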

NX-OS maintains statistics for IGMP snooping at both the global and VLAN level. These statistics are viewed with either the show ip igmp snooping statistics global command or the show ip igmp snooping statistics vlan [VLAN identifier] command. Example 13-11 shows the statistics for VLAN 115 on NX-2. The VLAN output also includes the global statistics. The per-VLAN counters are useful for confirming how many IGMP and PIM messages of each type are being received on a VLAN. If additional packet-level details are needed, using Ethanalyzer with an appropriate filter is recommended.

Example 13-11 NX-2 VLAN 115 IGMP Snooping Statistics

Global IGMP snooping statistics: (only non-zero values displayed)

Packets received: 3783

Packets flooded: 1882

vPC PIM DR queries fail: 2

vPC PIM DR updates sent: 6

vPC CFS message response sent: 19

vPC CFS message response rcvd: 16

vPC CFS unreliable message sent: 403

vPC CFS unreliable message rcvd: 1632

vPC CFS reliable message sent: 16

vPC CFS reliable message rcvd: 19

STP TCN messages rcvd: 391

IM api failed: 1

VLAN 115 IGMP snooping statistics, last reset: never (only non-zero values displayed)

Packets received: 666

IGMPv2 reports received: 242

IGMPv2 queries received: 267

IGMPv2 leaves received: 4

PIM Hellos received: 1065

IGMPv2 reports suppressed: 1

IGMPv2 leaves suppressed: 2

Queries originated: 2

IGMPv2 proxy-leaves originated: 1

Packets sent to routers: 242

STP TCN received: 18

vPC Peer Link CFS packet statistics:

IGMP packets (sent/recv/fail): 300/150/0

IGMP Filtering Statistics:

Router Guard Filtering Statistics:

With NX-2 verified, the examination moves to the LHR, NX-1. NX-1 is the mrouter for VLAN 115 and the IGMP querier. The IGMP state on NX-1 is verified with the show ip igmp interface vlan 115 command, as in Example 13-12.

Example 13-12 NX-1 IGMP Interface VLAN 115 State

IGMP Interfaces for VRF "default"

Vlan115, Interface status: protocol-up/link-up/admin-up

IP address: 10.115.1.254, IP subnet: 10.115.1.0/24

Active querier: 10.115.1.254, version: 2, next query sent in: 00:00:06

Membership count: 1

Old Membership count 0

IGMP max response time: 10 secs, configured value: 10 secs

IGMP startup query interval: 31 secs, configured value: 31 secs

IGMP startup query count: 2

IGMP last member mrt: 1 secs

IGMP last member query count: 2

IGMP group timeout: 260 secs, configured value: 260 secs

IGMP querier timeout: 255 secs, configured value: 255 secs

IGMP unsolicited report interval: 10 secs

IGMP robustness variable: 2, configured value: 2

IGMP reporting for link-local groups: disabled

IGMP interface enable refcount: 1

IGMP interface immediate leave: disabled

IGMP VRF name default (id 1)

IGMP Report Policy: None

IGMP State Limit: None

IGMP interface statistics: (only non-zero values displayed)

General (sent/received):

v2-queries: 999/1082, v2-reports: 0/1266, v2-leaves: 0/15

Errors:

Interface PIM DR: Yes

Interface vPC SVI: No

Interface vPC CFS statistics:

DR queries sent: 1

DR queries rcvd: 1

DR updates sent: 1

DR updates rcvd: 3
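Several timers in Example 13-12 are not independent settings; RFC 2236 derives them from the robustness variable, the query interval, and the max response time. The following Python sketch reproduces the derived values. It assumes a query interval of 125 seconds, the RFC 2236 default, because the query interval itself does not appear in the output; the class name is hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class IgmpV2Timers:
    robustness: int = 2          # "IGMP robustness variable" in Example 13-12
    query_interval: int = 125    # assumption: RFC 2236 default, not shown in the output
    max_response_time: int = 10  # "IGMP max response time"

    @property
    def group_membership_interval(self) -> int:
        # RFC 2236: Robustness * Query Interval + Query Response Interval
        return self.robustness * self.query_interval + self.max_response_time

    @property
    def other_querier_present_interval(self) -> float:
        # RFC 2236: Robustness * Query Interval + Query Response Interval / 2
        return self.robustness * self.query_interval + self.max_response_time / 2

    @property
    def startup_query_interval(self) -> float:
        # RFC 2236: one quarter of the Query Interval
        return self.query_interval / 4

    @property
    def startup_query_count(self) -> int:
        # RFC 2236: defaults to the Robustness Variable
        return self.robustness

t = IgmpV2Timers()
print(t.group_membership_interval)       # 260  -> "IGMP group timeout: 260 secs"
print(t.other_querier_present_interval)  # 255.0 -> "IGMP querier timeout: 255 secs"
print(t.startup_query_interval)          # 31.25 -> shown as 31 secs
print(t.startup_query_count)             # 2
```

If a derived value on a device does not match this arithmetic, one of the inputs, usually the query interval or max response time, has been changed from its default and should be compared across all routers on the segment.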

The membership report NX-2 forwarded from the host is received on Port-channel 1. The query messages and membership reports are viewed in the show ip igmp internal event-history debugs output in Example 13-13. When the membership report message is received, NX-1 determines that state needs to be created.

Example 13-13 NX-1 IGMP Debugs Event-History

debugs events for IGMP process

04:39:34.349013 igmp [7011]: : Processing report for (*, 239.215.215.1) (Vlan115), mrt: 10 sec

04:39:34.335543 igmp [7011]: : Sending SVI query packet to IGMP-snooping module

04:39:34.335541 igmp [7011]: : Send General v2 Query on Vlan115 (mrt:10 sec)

IGMP creates a route entry based on the received membership report in VLAN 115. The IGMP route entry is shown in the output of Example 13-14.

Example 13-14 IGMP Route Entry on NX-1

IGMP Connected Group Membership for VRF "default" - 1 total entries

Type: S - Static, D - Dynamic, L - Local, T - SSM Translated

Group Address Type Interface Uptime Expires Last Reporter

239.215.215.1 D Vlan115 01:59:49 00:03:49 10.115.1.4

IGMP must also inform the MRIB so that an appropriate mroute entry is created. This is seen in the show ip igmp internal event-history igmp-internal output in Example 13-15. An IGMP update is sent to the MRIB process buffer through Message and Transactional Services (MTS). Note that IGMP receives notification from MRIB that the message was processed, and the message buffer is then reclaimed.

Example 13-15 IGMP Event-History of Internal Events

igmp-internal events for IGMP process

04:39:34.354419 igmp [7011]: [7564]: MRIB: Processing ack: reclaiming buffer 0x0x967cbe4, xid 0xffff000c, count 1

04:39:34.354416 igmp [7011]: [7564]: Received Message from MRIB minor 16

04:39:34.353742 igmp [7011]: [7566]: default: Sending IGMP update-route buffer 0x0x967cbe4, xid 0xffff000c, count 1 to MRIB

04:39:34.353738 igmp [7011]: [7566]: default: Moving MRIB txlist member marker to version 12

04:39:34.353706 igmp [7011]: [7566]: Inserting IGMP update-update for

The message identifier 0xffff000c is used to track this message in the MRIB process events. Example 13-16 shows the MRIB processing of this message from the show routing ip multicast event-history rib output.

Example 13-16 MRIB Creating (*, G) State

04:39:34.355736 mrib [7170]::RPF change for (*, 239.215.215.1/32) (10.99.99.99)

04:39:34.354246 mrib [7170]::Doing multi-route add for "igmp"

04:39:34.354126 mrib [7170]:: OIF : Vlan115

04:39:34.354099 mrib [7170]::"igmp" add route (*, 239.215.215.1/32) (list-00000000)[1],rpf Null 0.0.0.0(0.0.0.0), iod 0, mdt_encap_index 0, bidir: 0, multi-route

04:39:34.353994 mrib [7170]::update IPC message (type:mts) from "igmp", 1 routes

When the MRIB process receives the MTS message from IGMP, an mroute is created for (*, 239.215.215.1/32) and the MFDM is informed. The RPF toward the PIM RP (10.99.99.99) is then confirmed and added to the entry.
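Because each process keeps its own event-history, following a single transaction means interleaving two outputs by timestamp. The following Python sketch merges the IGMP entries from Example 13-15 with the MRIB entries from Example 13-16 into one oldest-first timeline using heapq.merge. The entries are abbreviated, the helper names are hypothetical, and comparing timestamps as text assumes both outputs come from the same device within the same day.

```python
import heapq

# Abbreviated from Example 13-15 (newest first, as displayed)
IGMP_EVENTS = [
    "04:39:34.354419 igmp: MRIB: Processing ack: reclaiming buffer xid 0xffff000c",
    "04:39:34.354416 igmp: Received Message from MRIB minor 16",
    "04:39:34.353742 igmp: Sending IGMP update-route buffer xid 0xffff000c to MRIB",
    "04:39:34.353738 igmp: Moving MRIB txlist member marker to version 12",
    "04:39:34.353706 igmp: Inserting IGMP update-update for",
]
# Abbreviated from Example 13-16 (newest first, as displayed)
MRIB_EVENTS = [
    "04:39:34.355736 mrib: RPF change for (*, 239.215.215.1/32) (10.99.99.99)",
    "04:39:34.354246 mrib: Doing multi-route add for \"igmp\"",
    "04:39:34.354126 mrib: OIF : Vlan115",
    "04:39:34.354099 mrib: \"igmp\" add route (*, 239.215.215.1/32)",
    "04:39:34.353994 mrib: update IPC message (type:mts) from \"igmp\", 1 routes",
]

def timestamp_key(line: str) -> str:
    # Zero-padded HH:MM:SS.microseconds sorts correctly as text within one day
    return line.split(" ", 1)[0]

def timeline(*histories: list[str]) -> list[str]:
    # Each history is newest first; reverse to oldest first, then merge the sorted streams
    return list(heapq.merge(*(reversed(h) for h in histories), key=timestamp_key))

for event in timeline(IGMP_EVENTS, MRIB_EVENTS):
    print(event)
```

The merged timeline shows the update leaving IGMP, MRIB logging the MTS message and adding the route with Vlan115 as the OIF, the acknowledgment returning to IGMP, and finally the RPF change toward the RP.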

The output of show ip mroute in Example 13-17 confirms that a (*, G) entry has been created by IGMP and the OIF was also populated by IGMP.

Example 13-17 IGMP Created MROUTE Entry on NX-1

IP Multicast Routing Table for VRF "default"

(*, 232.0.0.0/8), uptime: 10:08:39, pim ip

Incoming interface: Null, RPF nbr: 0.0.0.0

Outgoing interface list: (count: 0)

(*, 239.215.215.1/32), uptime: 01:59:08, igmp ip pim

Incoming interface: Ethernet3/18, RPF nbr: 10.1.13.3

(10.215.1.1/32, 239.215.215.1/32), uptime: 02:14:30, pim mrib ip

Incoming interface: Ethernet3/17, RPF nbr: 10.2.13.3

Outgoing interface list: (count: 1)

Vlan115, uptime: 01:59:08, mrib

Note

Additional events occur after this point when traffic arrives from the source, 10.215.1.1. The arrival of data traffic from the RP triggers a PIM join toward the source and creation of the (S, G) mroute. This is explained in the “PIM Any Source Multicast” section later in this chapter.

PIM Multicast

PIM is the multicast routing protocol used to build the shared trees and shortest-path trees that facilitate the distribution of multicast traffic in an L3 network. As the name suggests, PIM was designed to be protocol independent. PIM essentially creates a multicast overlay network built upon the information available from the underlying unicast routing topology. The term protocol independent reflects the fact that PIM can use the unicast routing information in the Routing Information Base (RIB) from any source protocol, such as EIGRP, OSPF, or BGP. The unicast routing table provides PIM with the relative location of sources, rendezvous points, and receivers, which is essential to building a loop-free MDT.
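The way PIM consumes the unicast RIB can be illustrated with a reverse path forwarding (RPF) lookup: a longest-prefix match toward the source or RP address, with no interest in which protocol installed the route. In the following Python sketch, the RIB contents and protocols are hypothetical; they were chosen only so that the results agree with the RPF interfaces shown in Example 13-17. The sketch ignores ECMP, static mroutes, and other RPF selection details.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Route:
    prefix: ipaddress.IPv4Network
    protocol: str  # source protocol; the RPF lookup never inspects it
    interface: str
    next_hop: ipaddress.IPv4Address

def make_route(prefix: str, protocol: str, interface: str, next_hop: str) -> Route:
    return Route(ipaddress.IPv4Network(prefix), protocol, interface,
                 ipaddress.IPv4Address(next_hop))

def rpf_lookup(rib: list[Route], address: str) -> Optional[Route]:
    """Longest-prefix match toward a source or RP address."""
    addr = ipaddress.IPv4Address(address)
    candidates = [r for r in rib if addr in r.prefix]
    return max(candidates, key=lambda r: r.prefix.prefixlen, default=None)

# Hypothetical RIB with routes learned from three different protocols
RIB = [
    make_route("10.99.99.99/32", "ospf", "Ethernet3/18", "10.1.13.3"),
    make_route("10.215.1.0/24", "eigrp", "Ethernet3/17", "10.2.13.3"),
    make_route("10.0.0.0/8", "bgp", "Ethernet3/18", "10.1.13.3"),
]

for target in ("10.99.99.99", "10.215.1.1"):  # RP, then source
    r = rpf_lookup(RIB, target)
    print(target, "->", r.interface, r.next_hop, f"(learned via {r.protocol})")
```

The RP resolves to Ethernet3/18 and the source to Ethernet3/17, matching the incoming interfaces of the (*, G) and (S, G) entries on NX-1, even though each route came from a different protocol.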

PIM is designed to operate in one of two modes: dense mode or sparse mode. Dense mode (DM) operates under the assumption that receivers are densely distributed throughout the network, so all PIM neighbors should receive the traffic. In this mode of operation, multicast traffic is flooded to all downstream neighbors. If the group traffic is not required, the neighbor prunes itself from the tree. This is referred to as a push model because traffic is pushed from the root of the tree toward the leaves, with the assumption that there are many leaves and they are all interested in receiving the traffic. NX-OS does not support PIM dense mode because PIM sparse mode offers several advantages and is the most popular mode deployed in modern data centers.

PIM sparse mode (SM) is based on a pull model. The pull model assumes that receivers are sparsely distributed throughout the network and that it is therefore more efficient to forward traffic only to the PIM neighbors that explicitly request it. PIM sparse mode works well for distributing multicast whether receivers are sparsely or densely populated in the topology. Because of its explicit join behavior, it has become the preferred mode for deploying multicast.
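The difference between the push and pull models can be seen by counting which links carry traffic. The following Python sketch is a deliberately simplified model: the six-router tree and the single receiver are hypothetical, and timers, asserts, RPF checks, and the RP are all ignored. The push function models the initial dense-mode flood before any prunes; the pull function forwards only on branches that have sent an explicit join because a receiver sits below them.

```python
Tree = dict[str, list[str]]  # router -> downstream PIM neighbors

def has_receiver(tree: Tree, receivers: set[str], node: str) -> bool:
    """True if the node or any router below it has an interested receiver."""
    return node in receivers or any(has_receiver(tree, receivers, c) for c in tree[node])

def push_links(tree: Tree, node: str) -> list[tuple[str, str]]:
    """Push model: flood to every downstream neighbor before any prune arrives."""
    links = []
    for child in tree[node]:
        links.append((node, child))
        links.extend(push_links(tree, child))
    return links

def pull_links(tree: Tree, receivers: set[str], node: str) -> list[tuple[str, str]]:
    """Pull model: forward only toward neighbors whose subtree joined the group."""
    links = []
    for child in tree[node]:
        if has_receiver(tree, receivers, child):
            links.append((node, child))
            links.extend(pull_links(tree, receivers, child))
    return links

TREE: Tree = {"R1": ["R2", "R3"], "R2": ["R4", "R5"], "R3": ["R6"],
              "R4": [], "R5": [], "R6": []}
RECEIVERS = {"R5"}

print(len(push_links(TREE, "R1")))         # 5 links flooded
print(pull_links(TREE, RECEIVERS, "R1"))   # [('R1', 'R2'), ('R2', 'R5')]
```

After prunes, a dense-mode tree converges to the same two links, but it starts by flooding all five and floods again whenever prune state expires; sparse mode never sends traffic down a branch that did not ask for it.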

The role of PIM in the process of distributing multicast traffic from a source to a receiver is described by the following responsibilities:

Registering multicast sources with the PIM RP (ASM)

Joining an interested receiver to the MDT

Deciding which tree should be joined on behalf of the receiver

If multiple PIM routers exist on the same L3 network, determining which PIM router will forward traffic