CHAPTER 2

MPEG—Organization and Standard

You may have heard or read the abbreviation “MPEG,” and you may attach various associations to this four-letter term. Chances are that you associate it either with video on DVDs or with digital broadcast television. Other people immediately think of audio files on the Web, or of Napster and similar MP3 file-sharing systems. However, what MPEG actually stands for and what it represents is not generally known. For the average consumer, there is no immediate need to know. Many people don’t understand in detail how a mobile phone works or what GSM stands for, and they can still make use of these technologies. It is much the same with MPEG: it is mostly invisible to the end consumer.

However, if one is doing business in a marketplace where MPEG represents a relevant technology, more knowledge about the facts behind this famous abbreviation is necessary. MPEG stands for “Moving Picture Experts Group.” In order to better understand any of the MPEG standards, and MPEG-4 in particular, it is helpful to have some insight into what sort of animal MPEG is as an organizational body, how it works, where it lives, and so on.

In this chapter, we will elaborate on the organizational concepts behind MPEG. In order to better grasp MPEG-4, it is helpful to have a basic understanding of how and why the MPEG standards were created, and who is actually pushing for those standards. So we will begin by describing MPEG in terms of organizational entities.

2.1. MPEG—the Standardization Body

In public discussion, the label MPEG is used to denote completely different things. For some people, MPEG is a standard for video compression or just a compression technology; for others it is a file format for audio and video. Still others think of MPEG as a group, or a company, or an organization. Some people may have no clear picture of what MPEG actually is, and consider those who use it to be a funky crowd of video aficionados. In this section, we attempt to shed some light on different aspects of MPEG, when considering it as an organization.

2.1.1. Structure of ISO

Let’s start with the meaning of the abbreviation, which stands for “Moving Picture Experts Group.” MPEG is actually a nickname for a working group of the International Organization for Standardization, abbreviated as ISO (notice the flipping of the letters in the abbreviation). If you want to spend some time browsing through the ISO’s Web site, you can find it under www.iso.ch [1]. The ISO’s Web site provides a neat explanation of why the letters in the abbreviation ISO appear to be mixed up. Because “International Organization for Standardization” would have different abbreviations in different languages (for example, IOS in English; OIN in French, for Organisation internationale de normalisation), it was decided at the outset to use a word derived from the Greek “isos,” meaning “equal.” Thus, whatever the country, whatever the language, the short form of the organization’s name is always ISO.

Furthermore, from the Web site you can learn that the ISO is a network of national standards institutes comprising 147 member bodies. A member body of ISO is the national body “most representative of standardization in its country.” Only one such body per country is accepted for membership of the ISO. Member bodies are entitled to participate and exercise full voting rights on any technical committee and policy committee of ISO. A correspondent member is usually an organization in a country that does not yet have a fully developed national standards activity. Correspondent members do not take an active part in the technical and policy development work, but they are entitled to be kept fully informed about the work that is of interest to them. Subscriber membership has been established for countries with very small economies. Subscriber members pay reduced membership fees that nevertheless allow them to maintain contact with international standardization.

ISO has its Central Secretariat in Geneva, Switzerland, which coordinates the organization. ISO is a non-governmental organization (NGO). Therefore, unlike the United Nations, the national members of ISO are not delegations of the governments of those countries but come from both the public and private sectors. This status matters because the membership is mixed: many members are part of the governmental structure of their respective countries, or are mandated by their government, while others have their roots uniquely in the private sector, having been set up by national partnerships of industry associations. The following are a few examples of members in the ISO: Japan is represented by the JISC (Japanese Industrial Standards Committee), the U.S. is represented by ANSI (American National Standards Institute), and Germany by the DIN (Deutsches Institut für Normung).

ISO’s national members pay subscriptions that meet the operational cost of ISO’s Central Secretariat. The dues paid by each member are in proportion to the country’s GNP and trade figures. Another source of revenue is the sale of standards, which covers 30% of the budget. However, the operations of the central office represent only about one fifth of the cost of the system’s operation. The main costs are borne by the organizations that manage the specific projects or loan experts to participate in the technical work. These organizations are, in effect, subsidizing the technical work by paying the travel costs of the experts and allowing them time to work on their ISO assignments.

Each of those national standards organizations establishes its own set of rules on who can be a member and how to join and how the national standardization work is administered. In many cases, it is the companies residing in a country which are the paying members of the national standards organization. The representatives of the companies working in standardization on a national level will get accredited by the national organization to participate in the international MPEG meetings and have access to the corresponding documentation, which is shared among the members of MPEG.

In the course of finalizing and publishing an international ISO standard, various ballots are held in which the ISO members, i.e., the national organizations, can vote to either accept the standard or reject it. Each country has just one vote. Therefore, the companies engaged in MPEG standardization have to discuss and agree on a national level in order to cast a vote for or against the adoption of a standard. This can lead to interesting situations when different parts of a multinational corporation participate in ballots as part of different national organizations. Wouldn’t it be ironic if, say, Siemens in Germany had to go with a national vote submitted by the DIN that differs from the vote submitted by ANSI, which represents the U.S. branch of the company?

The final draft International Standard (FDIS) is circulated to all ISO member bodies by the ISO Central Secretariat for a final Yes/No vote within a period of 2 months. The text is approved as an International Standard if a ⅔ majority of the P-members of the TC/SC are in favor and not more than ¼ of the total number of votes cast are negative. If these approval criteria are not met, the standard is referred back to the originating Technical Committee for reconsideration in light of the technical reasons submitted in support of the negative votes received.
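
The two approval criteria can be written down as a small check. The following is a minimal sketch in Python, not an official ISO procedure: the function name `fdis_approved` and its parameters are hypothetical, and it assumes that abstentions are simply left out of the counts and that the two-thirds majority is taken over the P-member votes actually cast.

```python
def fdis_approved(p_yes: int, p_no: int, total_yes: int, total_no: int) -> bool:
    """Check the two FDIS approval criteria described in the text.

    p_yes, p_no         -- Yes/No votes cast by P-members of the TC/SC
    total_yes, total_no -- Yes/No votes cast by all voting member bodies
    Assumption: abstentions are excluded from every count.
    """
    p_cast = p_yes + p_no
    total_cast = total_yes + total_no
    if p_cast == 0 or total_cast == 0:
        return False  # no votes cast, nothing to approve

    # Criterion 1: at least two thirds of the P-member votes are in favor.
    # Integer arithmetic avoids rounding problems with 2/3.
    two_thirds_in_favor = 3 * p_yes >= 2 * p_cast

    # Criterion 2: not more than one quarter of all votes cast are negative.
    negatives_within_limit = 4 * total_no <= total_cast

    return two_thirds_in_favor and negatives_within_limit
```

For instance, with 20 of 25 P-member votes in favor and 8 negative votes out of 38 cast overall, both criteria hold. With 12 of 20 P-member votes in favor, the two-thirds majority is missed, and the draft would go back to the Technical Committee.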

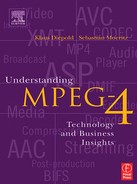

Within the ISO organization, MPEG is more formally referred to as “Coding of Moving Pictures and Audio,” which is a slightly less appealing name for the group. However, since ISO is less concerned with finding appealing names than with being well organized, MPEG is referred to by the string ISO/IEC/JTC1/SC29/WG11. This string describes the route one has to take through the organizational chart of the ISO to arrive at the Working Group 11, which is MPEG. But let’s see what additional information about the structure of ISO the route down the organizational chart reveals.

ISO/IEC has a Joint Technical Committee, JTC1, which deals with standardization in the field of information technology (IEC, the International Electrotechnical Commission, is yet another standardization body). The ISO has more than 200 technical committees dealing with all sorts of standardization activities. For example, there is TC176, which deals with quality management and quality assurance. This is where the ISO9000 suite of standards originates. On the next level, we see SC29 (Subcommittee 29), which deals with “coding of audio, picture, multimedia and hypermedia information.” A parallel subcommittee is SC24, which handles computer graphics and image processing. SC24 has issued standards such as VRML (Virtual Reality Modeling Language). Finally, we arrive at the Working Group level, abbreviated WG. MPEG is assigned to WG11. MPEG is not alone; sibling working groups exist, such as WG1 (coding of still pictures) and WG12 (multimedia and hypermedia). (Refer to Figure 2.1 to get a visual overview of the organizational structure.) These working groups are better known by their corresponding nicknames, JPEG (Joint Photographic Experts Group) and MHEG (Multimedia and Hypermedia Experts Group), respectively.

Figure 2.1 Organizational overview of ISO and MPEG.
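
To make the route through the organizational chart concrete, the designation string can be split into its levels. This is only an illustrative sketch: the function `describe_route` and the lookup table `LEVELS` are hypothetical names, and the table covers just the level prefixes mentioned in this section.

```python
import re

# Level prefixes as they appear in this section (illustrative, not exhaustive).
LEVELS = {
    "JTC": "Joint Technical Committee",
    "TC": "Technical Committee",
    "SC": "Subcommittee",
    "WG": "Working Group",
}

def describe_route(designation: str) -> list[str]:
    """Expand a designation such as 'ISO/IEC/JTC1/SC29/WG11' level by level."""
    steps = []
    for part in designation.split("/"):
        match = re.fullmatch(r"([A-Z]+)(\d+)", part)
        if match is None:
            # No trailing number: treat the part as an organization name.
            steps.append(f"{part} (organization)")
        else:
            prefix, number = match.groups()
            steps.append(f"{LEVELS.get(prefix, prefix)} {number}")
    return steps

for step in describe_route("ISO/IEC/JTC1/SC29/WG11"):
    print(step)
```

Reading the printed steps from top to bottom follows the same path as the chart, ending at Working Group 11, which is MPEG.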

2.1.2. MPEG—the Agenda

What is MPEG as a group actually doing? What is it producing? As a partial answer, let’s have a look at the official description of MPEG’s “Area of Work,” which reads as follows:

Development of international standards for compression, decompression, processing, and coded representation of moving pictures, audio, and their combination, in order to satisfy a wide variety of applications.

In other words, MPEG creates a technical specification for the (de)compression of all sorts of audio-visual data. MPEG is not concentrating on one particular field of application, but rather tries to supply standardized technology at a fairly generic level in order to embrace as many application fields as possible.

The program of work for the MPEG working group, as taken from the “Terms of Reference” to be found at the ISO Web site, reads as follows:

• MPEG shall serve as the responsible body within ISO/IEC for recommending a set of standards consistent with the Area of Work. MPEG shall cooperate with other standardization bodies dealing with similar applications.

• MPEG shall consider requirements for interworking with other applications such as telecommunications and broadcasting, with other image coding algorithms defined by other SC29 working groups and with other picture and audio coding algorithms defined by other standardization bodies.

• MPEG shall define methods for the subjective assessment of quality of audio, moving pictures, and their combination for the purpose of the area of work. MPEG shall assess characteristics of implementation technologies realizing coding algorithms of audio, moving picture, and their combination. MPEG shall assess characteristics of digital storage and other delivery media targets of the standards developed by WG11.

• MPEG shall develop standards of coding of moving pictures, audio, and their combination, taking into account quality of coded media, effective implementation, and constraints from delivery media.

• MPEG shall propose standards for the coded representation of moving picture and audio information, and information consisting of moving pictures and audio in combination. MPEG shall propose standards for protocols associated with coded representation of moving pictures, audio, and their combination.

2.1.3. MPEG Meetings

In order to fulfill its mission as stated in the program of work, MPEG holds meetings about four times a year, in locations scattered around the world. An average MPEG meeting has 250 to 350 participants from more than 20 countries. The meetings serve as a point of personal communication and cooperation to fuse the many results that have been created all over the world. Most importantly, consensus on all technical questions must be achieved to actually generate a final product, which is an MPEG standard. A typical MPEG meeting lasts 5 days, starting on a Monday morning and ending on a Friday night. These days are packed with activities and concentrated work to push ahead toward finalizing a standard.

The work continues between the meetings as the delegates return to their companies, research organizations, or universities. For this purpose, each meeting creates a list of so-called ad-hoc groups. Each ad-hoc group is convened by a chairman, and its goal is formulated as a mandate: to work on specific problems or questions that need to be resolved or answered by the next meeting. Whoever feels entitled and competent to contribute can join the ad-hoc group. Most of the correspondence is handled via e-mail reflectors, and occasionally meetings are held. The e-mail reflector and the meetings are organized by the chairman, who is usually an acclaimed expert on the particular issue at hand. By the time the next MPEG meeting is held, the ad-hoc group needs to present a written report about its findings and discussions. The report is expected to contain recommendations for MPEG to accept or discuss during the meeting. An ad-hoc group officially ceases to exist as soon as the next MPEG meeting begins. However, it may well be re-established at the end of that meeting if the problem or issue is not fully resolved and needs further work. In fact, a lot of work toward the standard is done under the umbrella of ad-hoc groups. Somewhere between 10 and 20 ad-hoc groups are established at the end of each meeting.

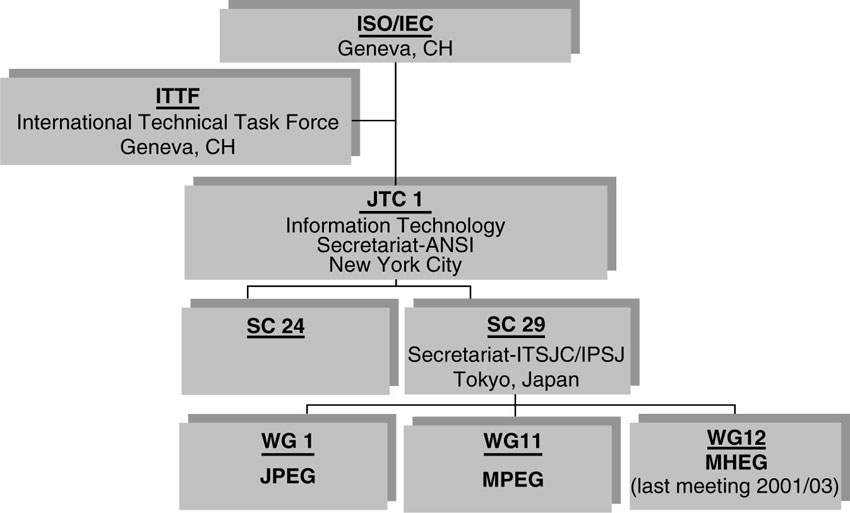

2.1.4. MPEG Subgroups

We have arrived at the Working Group level within the ISO org chart, and MPEG has been identified as WG11. The actual group counts several hundred people worldwide, actively contributing to the creation of MPEG standards. An MPEG meeting of 250 to 350 people is too big a group to be convened as one single crowd, and the topics dealt with are quite diverse and not of interest to all participants. Therefore, MPEG itself is subdivided into subgroups. Each of the subgroups deals with a specific topic and includes all the experts in that particular field. A subgroup is managed by a chairman, who convenes the subgroup meetings and reports to the MPEG convener. While the individual chairpersons change over time, the MPEG convener, Leonardo Chiariglione, has been a constant throughout the years since he started MPEG in the late 1980s. More information on Leonardo Chiariglione and his involvement with multimedia standards can be found in [2]. Figure 2.2 depicts the various subgroups of MPEG.

Figure 2.2 Subgroups of MPEG.

The following sections describe the subgroups in MPEG and their respective agendas.

2.1.4.1. Requirements Subgroup

The task of the requirements group is to collect the technical requirements that the new standard shall satisfy. This sounds rather modest, but there are MPEG delegates who consider the requirements group as the head (though not the brain) of MPEG. The requirements group also deals heavily with strategic questions. Profiling policies are discussed within this group, as are the definitions of the profiles. Profiling discussions typically occur toward the end of a standardization campaign, since the technical groups need to first conclude much of their technical work. We will go over the concept of profiles and levels in a later chapter.

The requirements group also collects new ideas and suggestions for new work items or emerging application domains that may require standardization of technology. In addition, this group gathers experts from various fields (audio, video, systems, etc.) to discuss the overall strategy and decision-making procedures and policies for activities such as establishing profiles. Even though the requirements group produces quite a number of documents throughout the process, none of these documents will become a part of the finished standard.

2.1.4.2. Video Subgroup

The video subgroup combines all technical experts who are concerned with video signal processing. This is where the new video compression technologies are actually proposed, discussed, and tested, and where the technical decisions are made about which video coding technology will make it into the standard. The video group is responsible for the technical content that is specified in Part 2, the visual part of the MPEG standard. (We will tell you more about the individual parts of MPEG standards in a later chapter of this book.) Traditionally, the video subgroup is the largest group in MPEG.

2.1.4.3. Audio Subgroup

The audio subgroup is very similar to the video group, i.e., it is a gathering of internationally acclaimed technical experts. The group deals with all aspects of audio coding and audio-signal processing. Note that this is the group where MP3, the Internet surfers’ favorite music file format, was specified, among other technologies in the field. Hence, the audio subgroup is responsible for the text of the standards document that contains the audio specification (typically Part 3 of an MPEG standard, but also Part 7 in MPEG-2, which specifies Advanced Audio Coding).

2.1.4.4. Systems Subgroup

The third big technical group is the systems subgroup. This group also consists of international experts who deal with all technical questions concerning the combination and packetization of audio and visual data along with other ancillary data. Part 1, the systems part of most MPEG standards, is produced by the systems group. Traditionally, the results from the systems group are extremely important for things like getting a TV system actually going. However, the public relations for the systems group has not always reflected the importance of its accomplishments.

2.1.4.5. Test Subgroup

The test group is another example of a technical subgroup whose accomplishments are publicly underrepresented. Nevertheless, since MPEG puts an emphasis on thoroughly assessing the quality of its work, testing the standardized technology to verify that the new standard meets the requirements is another essential part of MPEG. The test subgroup is responsible for designing, specifying, performing, and evaluating the various types of testing activities of MPEG. As a major responsibility, the test group issues Part 4 of the MPEG standards, which describes the methodology to test for compliance with the specifications in the video, audio, and systems parts of the standard. Part 4 of the standards is traditionally one of the last parts to be issued and published, because the test group has to wait until the other technical groups are done with their job and until the requirements subgroup has concluded its profiling discussion.

Since testing is not necessarily a field where companies and individuals can position their Intellectual Property Rights (IPR) or patents, this is a group where the head count of participants is always subject to variations.

2.1.4.6. Implementation Study Group

The implementation study group (ISG) is another technical subgroup. Its task is to advise the big technical groups regarding implementation questions. A typical question ISG deals with is which of two algorithms, A or B, is more complex, and by how much. This question often comes up when the audio or video group needs to decide which algorithm to pick for the specification, in particular if both alternatives produce about the same result in terms of coding performance. Other than that, ISG does not produce a normative part of an MPEG standard. As part of the MPEG-4 activities, ISG creates a technical report, which includes a reference hardware description for various coding tools.

2.1.4.7. Synthetic Natural Hybrid Coding (SNHC) Subgroup

MPEG-4 contains technologies that process synthetic visual data. Synthetic in this context includes techniques from computer graphics and animation, that is, from a field where the moving pictures and images are synthesized by the computer rather than captured by a camera. It has been found in the course of the MPEG-4 project that this domain is characterized by different experts, a different culture, and other technical features when compared to the video subgroup. Those differences made it beneficial to combine all efforts and experts for synthetic visual data in a separate subgroup. The resulting SNHC subgroup does not issue a separate part of the MPEG standard. SNHC instead merges the results and specifications with the work of the video subgroup to jointly create the visual part of MPEG.

2.1.4.8. Liaison

MPEG as a standards working group is not alone in the world of technology standards. There are numerous organizations and technical groups that deal with topics related to the MPEG work items. In order to avoid duplicated efforts and conflicting specifications, MPEG has created liaisons to those organizations. Typical examples are the liaisons to DVB (Digital Video Broadcasting) and SMPTE (Society of Motion Picture and Television Engineers). Both organizations are creating specifications for their particular field of application, and those specifications use MPEG standards as building blocks. It is important for all parties involved to stay in touch, in order to foster the development and adoption of complementary standards and to avoid conflicts or mismatches.

Another very important task for the liaison group is to coordinate the cooperation with the Video Coding Experts Group (VCEG), a separate group of video-coding experts under the control of the ITU (International Telecommunication Union). VCEG has created the other family of video coding standards, denoted by abbreviations like H.261, H.262, H.263, and more recently, H.264. Traditionally, there has been a kind of cooperation/competition between MPEG and VCEG. The constructive cooperation between the two groups is evidenced by the fact that H.262 and the video part of MPEG-2 actually contain identical text, which was developed jointly. The competition part is represented by the race for the best video coding technology during the MPEG-4 project, where VCEG won the beauty contest against the MPEG-4 codec with the development of its H.26L technology. Based on this result, VCEG and MPEG joined forces to establish the Joint Video Team (JVT), which developed the H.26L design further to arrive at what is now MPEG-4 AVC (Advanced Video Coding) in the ISO world and H.264 in the ITU world.

2.1.4.9. Heads of Delegation

Each national delegation participating in MPEG assigns a head of delegation (HoD). All those HoDs meet in an assembly to discuss and decide on various logistical and organizational matters of relevance to running MPEG. HoDs also have the pleasure of deciding on future meeting locations.

2.1.5. Other Standardization Organizations

In addition to the ISO, there are the ITU (whose radiocommunication sector was formerly known as the CCIR), the IEEE (Institute of Electrical and Electronics Engineers), and the ETSI (European Telecommunications Standards Institute) at the international level, as well as national standardization bodies such as DIN and ANSI.

Parallel to those standards bodies, there are quite a number of industry consortia that are also pushing actively for standardized technology, mostly for particular fields of application or markets. Examples are the DVB (Digital Video Broadcasting) project, DAVIC (Digital Audio-Visual Council), the DVD Forum, IETF (Internet Engineering Task Force), Web3D, W3C, DMTF (Distributed Management Task Force), 3GPP (3rd Generation Partnership Project), and ISMA (Internet Streaming Media Alliance), to name just a few.

In some cases, standards are set by individual companies when they release products that turn out to be so successful that they reach the status of a de-facto standard. Examples of this type of standard are the audio cassette introduced by Philips, the audio Compact Disc (CD) proposed by Philips and Sony, the analog VCR introduced by JVC, and finally, the Windows operating system by Microsoft. Alternatively, a company can submit its technology to a standards body such as ISO and ask for it to be standardized. Sun Microsystems with Java is an example of this last procedure.

2.2. MPEG—the Standardization Process

There exist quite a number of de-facto standards and specifications in the audio-visual field. Most of them have been developed and deployed by companies to serve the needs of a particular industry. There are other cases where the standard is the result of one product being successfully introduced to the market, where it has become universally accepted. Take the Philips compact audio cassette as a classic example. For the most part, this style of standardization has resulted in products, services, and applications pertaining to one industry not being interoperable with those of another industry. As long as the borders between industries are clear, this does not constitute a major problem. Such borders are built on a tight integration of services and technology, i.e., a particular technology is intimately tied to one particular service. This was particularly true in the good old days of analog technology. Take for example the standard telephone system which, way back when, was used almost exclusively for voice communications. Only later, with the advent of analog modems, did the telephone networks come to be used for transmitting faxes or other data services.

Today, audio and video content is communicated as digital signals, or bits. The bare bits can be transmitted over any type of transmission channel, including telephone networks, which have increasingly been transformed into data networks. The Internet is a packet-switched data transmission network, which doesn’t care what the transported bits actually represent. It could be voice data (IP telephony), video (media streaming), or just plain old file transfer, so the same audio and video content shows up in many different services. This lack of interoperability is a major problem for those producing content, those consuming it, and the service providers in between. The problem originates from the fact that the universality of digital data networks removes the traditional separation between media, services, industries, and even products.

In former times, a telephone network was only a telephone network and was entirely distinct from a television network, which again was entirely different from a radio network. Different technologies were used for each of these networks. The separation was even more pronounced for the companies and industries associated with the networks. A telephone network company took care of the telephone network and the pertaining voice service. This business was completely different from operating a television network. A telephone network operator was not in competition with a television network operator. A TV set was usable for watching TV or videos exclusively. With digital technology, all those boundaries are vanishing. A telephone network operator can start to distribute video and audio content through his network, just as a television network operator can think about offering competitive telecommunication services based on his infrastructure. A consumer who owns a computer can use it for watching movies, listening to music, sending e-mails, or even making long-distance phone calls over the Internet. More problems come from the ability to copy digital data without any loss of fidelity or quality. This leads to yet another topic, the field of digital rights management, which we will not cover here.

From its earliest stages, MPEG has taken a distinctive approach. When developing a standard, participation of all industries potentially affected by the new technology has been sought and obtained. Look at the large number of individuals from diverse industries attending MPEG meetings and subscribing to MPEG e-mail reflectors. Requirements from a wide range of industries have been collected, and a set of commonly shared technologies capable of satisfying the fundamental requirements of all participating industries has been developed and standardized.

As explained in the previous section, MPEG can rightfully be considered as a group. However, MPEG also incorporates a specific standardization program or process. This process has been shaped through years of intense standardization work practiced by the many MPEG colleagues. In particular, the MPEG Convener has initiated and brought forward this process by means of his dedication and vision. Therefore, the acronym MPEG is intimately connected to the name of Leonardo Chiariglione.

Let’s have a look at the process that has ultimately led to successful media standards such as MPEG-1, MPEG-2, and, of course, MPEG-4. There is no such thing as MPEG-3. Why this is will be explained a little later in this book. But before we dive into the description of the standardization process, we will discuss briefly the challenges standardization has to face. The MPEG process will then be shown as one method of coping with those challenges.

2.2.1. Challenges for the Standardization Process

Any standardization process, and MPEG is no exception, is exposed to a number of challenges, which make standardization a difficult and sometimes frustrating endeavor. The thing that makes MPEG particularly challenging is the fact that it is located in the field of information technology, which can be characterized by extremely fast innovation cycles and a global approach to developing products and services. Keep this in mind as you read the following sections.

2.2.1.1. Frozen Technology

Formulating a standard implies that the parties agree on a set of technological features, which will be subsequently specified in detail and kept fixed over some period of time. Any selection of technological features for the standard represents a mere snapshot of the technology available at the time the standard is crafted. This may lead to a situation in which the standard consists of frozen technology that no longer follows the most recent trends in research and advanced development. The result is that the standard is not adopted by industry, since complying with the standard cuts the industry off from further improvements.

2.2.1.2. Obsolete Technology

The challenge of obsolete technology being adopted as part of a standard is very closely related to the case described in the previous subsection. This can happen if the finalization of the standardization process is delayed unduly until the technology selected has turned stale and obsolete. Timeliness of publication for a new standard is therefore of prime importance. Again, the above situation will lead to the standard not being adopted, since nobody wants to have products and services which are tied to a standard embodying last year’s technology.

2.2.1.3. Premature Technology

A standards body may be tempted to select premature technology in a misguided effort to escape the problem of adopting technology that will be obsolete by the time the standard is published. Premature technology means that it becomes evident after the standardization campaign is finished that the technology does not work the way it was expected to. The result is that the standard will not be used, since nobody wants to build products based on a standard that doesn’t work.

2.2.1.4. Over- and Under-Specification

If a standard is tuned to match a particular application field, then it may turn out that the technology tries to fix too many details of the application field. Therefore, it can’t live outside of the original target market and application, which unnecessarily limits the applicability and success of the standard. This situation represents a case of over-specification.

Underspecification is the opposite case: the standard leaves the implementer with a substantial number of loose ends. Expressed in other words, the standard does not provide sufficient technical detail to allow the implementation of standards-compliant products without additional design choices. This way, interoperability between products is sacrificed. Another case of underspecification arises if the standard comprises many alternative solutions for achieving a particular functionality. These alternatives are often called “options,” and the implementer either chooses one solution or opts for implementing all of them. The first situation again puts interoperability in jeopardy, while the second tends to make products unnecessarily costly.

2.2.1.5. Timeliness of a Standard

Information technology is a fast-moving field. This sounds like a fairly obvious statement. However, from a standards point of view this fact makes standardization a difficult task. Besides the challenges mentioned so far, it is also important to have a standard that will allow the industry to begin building products and setting up services by the time the markets are in existence. It is definitely too late to start a standardization effort once the selling of potentially incompatible products has started. At that point, a product or a product family would need to be adopted that could serve as a de facto standard. It is therefore important to have the standards ready in time, which implies that standards people need to have a magic crystal ball to foresee future opportunities, predict the upcoming technologies, and thus begin the standards development process early enough.

2.2.2. Patents and Intellectual Property Rights

Standards and patents—a contradiction? This is a tricky subject, which we will shed some light on in the chapter dealing with the business interface. ISO, and hence MPEG, acknowledge the existence of Intellectual Property Rights (IPRs) and patents. Here we are talking about the patents and IPRs associated with the technology contained in the standard. There are also IPRs and copyrights associated with media items such as a movie or a piece of music. We will defer the latter discussion to another chapter.

To date, ISO has not dealt with issues of licensing of MPEG technology, as this is certainly outside of the jurisdiction of such an organization. Licensing of technology is a subject that should be addressed and handled by the companies or individuals involved. However, ISO does have a policy to deal with patents. This policy requires companies who have actively contributed technology to the standard to sign a so-called “Patent Statement.” This document simply states that the respective company will grant access to the patents associated with the standard on non-discriminatory and reasonable terms. This only applies to products that claim to be compliant with MPEG.

Times are changing, and so are the established patterns of doing business, due to new technical means and a changing environment. This also creates a new challenge for standardization. Until recently, traditional communication standards were implemented in terms of physical devices (i.e., the technology was embodied in hardware implementations). The pertaining intellectual property rights are associated with the hardware device carrying the technology, which is protected by patents. With the advent of more recent digital technologies, a standard is likely to be implemented by a processing algorithm that runs on a programmable device, i.e., the implementation takes place via a software application executed on a PC or a similar programmable device. Actually, the standard becomes a piece of computer code whose IPR protection is achieved by protecting the copyright of the source code. Digital networks, such as the Internet or wireless communication networks, are pervasive, and it is possible for a PC or a PDA to run a multiplicity of algorithms downloaded from the network.

According to the traditional pattern of the ISO dealing with IPRs, a patent holder is supposed to grant fair, reasonable, and non-discriminatory terms to a licensee. However, there are significant possibilities for the patent holder to actually discriminate against the licensee. The discrimination occurs because the patent holder is assuming a certain business model that is based on the existence of hardware, whereas the licensee may have a completely different model that is based on the existence of a programmable device and software. Questions naturally arise concerning how to interpret the notion of “fair, reasonable and non-discriminatory” in this context and how the environment for such policies has changed through the advance of technology.

There are more changes that create new challenges for standardization. Digital technologies cause convergence. In the analog domain, there is a clear separation between the devices and systems that make communication possible and the messages themselves. Take rental videos as an example. When a video cassette is rented by a consumer for use on a home video player, a remuneration is paid to the holders of the copyright for the movie. The holders of patent rights for the video recording system receive a remuneration at the time the player is purchased. In the digital domain, an audio-visual presentation may be composed of some digitally encoded pieces of audio and video, text, and drawings. There is a software application that manages the user interaction and the access to the different components of the presentation. If the application runs on a programmable device, the intellectual property can only be associated with the bits, that is, with the digital content and the executable program code, which may have been downloaded from the network. Since, in a digital world, the playback device (i.e., the software application) as well as the content (i.e., the movie) are nothing but bits, they can easily be copied without loss of functionality or quality and distributed to other consumers. This can happen without creating a stream of money to remunerate either the implementer of the playback software or the creator of the digital media item. This is the root of all activities to prevent piracy of software, video, or audio. The case of the MP3 audio format serves as a good example of both aspects. The software players for MP3 audio files were distributed freely until the inventors of the technology stepped in and asked for patent licenses. The fair remuneration for the content owners in this context is still an ongoing discussion.

2.2.3. Principles of MPEG Standardization

Since the technological landscape changed from analog to digital, with all the associated implications, it was essential that standard makers modify the way in which standards are created. Standards must offer interoperability across countries, services, and applications, rather than follow a “system-driven approach” in which the value of a standard is limited to a specific, vertically integrated system. This brings us to the toolkit approach, in which a standard must provide a minimum set of relevant tools that, once assembled according to industry needs, provide the maximum interoperability at a minimum of complexity and cost. The success of MPEG standards is mainly based on this toolkit approach, bounded by the “one functionality, one tool” principle. In conclusion, MPEG wants to offer the users interoperability and flexibility, at the lowest cost and level of complexity.

In the previous section, we listed a number of challenges the standardization process has to face. MPEG—or Leonardo Chiariglione, the MPEG convener—has developed a vision and an approach that apparently circumvents possible pitfalls and shortcomings. The current subsection presents a list of concepts by which MPEG is governed to produce successful standards.

2.2.3.1. A Priori Standardization

If a standards body is to serve the needs of a community of industries, it must start the development of standards well ahead of the time the standard will be needed. This requires a fully functioning and dedicated strategic planning function, totally up to date on the evolution of the technology and the state of research.

2.2.3.2. Systems vs. Tools

Many industry-specific standards bodies create standards that fail because they attempt to specify complete systems to completely match specific applications at hand. As soon as such applications are slightly transformed, the corresponding standard may lose its validity. Furthermore, each industry then requests its particular set of standards. This hampers economy of scale as well as the quick adaptation of technology to new product trends and innovative applications.

For a specification to be long-lived and to support economy of scale, the responsible standards bodies should collect different industries under one umbrella, where each industry needs standards based on the same technology. The target products and services of course may differ from industry to industry. This goal is accomplished if a standard like MPEG comes in individual components that are commonly referred to as “tools.” Each industry then has the choice to pick tools from the standard and combine them according to the needs originating from the application. This way a hierarchy of standards can be created using lower-level specifications as fixed building blocks. As a good example of this concept, consider the Digital Video Broadcasting system specification, which builds on top of the MPEG-2 standard as a basic building block for audio and video coding.

2.2.3.3. Specify the Minimum

When standards bodies are made up of a single industry, it is very convenient to add to a standard those nice little aspects that bring it nearer to a product specification. This is the case with industry standards or standards used to enforce the concept of “guaranteed quality” so dear to broadcasters and telecommunication operators because of their “public service” nature. To guard against the threat of over-specification, only the minimum that is necessary for interoperability should be specified. The extra elements that are desirable for an industry may be unnecessary for—or even alienate—another industry.

2.2.3.4. One Functionality—One Tool

This is a strict rule within MPEG, which is based on common sense. Too many failures in standards are known to have been caused by too many options. The reader may wonder why the existence of options and choice is considered to be detrimental to the quality of a standard, when this is usually taken as a bonus. If there is choice in tools for the same functionality, how would all the receivers out in the field know which choices have been taken by the transmitters at the sending side? As a solution to this dilemma, a receiver has to either incorporate all alternative tools for one functionality, or the designer himself has to make a choice. In the one case, the receiver device is unnecessarily burdened by superfluous tools and thus is overly expensive. In the other case, no one can guarantee that there is interoperability between receivers and transmitters of different manufacturers.
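The dilemma can be made concrete with a small sketch. The functionality and tool names below are invented for illustration and are not taken from any MPEG specification; the sketch only assumes that a transmitter picks one tool per functionality and that a receiver can decode a stream only if it implements every tool the transmitter picked.

```python
# Hypothetical standard that offers alternative tools for the same functionality.
# All names are invented for illustration only.
STANDARD_OPTIONS = {
    "transform": {"tool_a", "tool_b"},
    "entropy_coding": {"tool_x", "tool_y", "tool_z"},
}


def can_decode(receiver: dict[str, set[str]], transmitter: dict[str, str]) -> bool:
    """Return True if the receiver implements every tool the transmitter chose."""
    return all(
        tool in receiver.get(functionality, set())
        for functionality, tool in transmitter.items()
    )


def tool_count(receiver: dict[str, set[str]]) -> int:
    """Number of tools the receiver has to implement."""
    return sum(len(tools) for tools in receiver.values())


# The transmitter picks exactly one tool per functionality.
transmitter = {"transform": "tool_b", "entropy_coding": "tool_z"}

# Case 1: the receiver incorporates all alternatives (always works, but costly).
full_receiver = STANDARD_OPTIONS

# Case 2: the receiver designer made a choice of one tool per functionality.
lean_receiver = {"transform": {"tool_a"}, "entropy_coding": {"tool_z"}}

print(can_decode(full_receiver, transmitter), tool_count(full_receiver))
print(can_decode(lean_receiver, transmitter), tool_count(lean_receiver))
```

In this toy setup the full receiver carries five tools where two would suffice, while the lean receiver fails as soon as the transmitter's choice differs from its own in a single functionality. With only one tool per functionality, neither problem can occur.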

2.2.3.5. Relocation of Tools

When a standard is defined by a single industry, there is generally a certain agreement about where a given functionality resides in the system. In a multi-industry environment, this is usually not the case because the location of a function in the communication chain is often associated with the value added by a certain industry. The technology must be defined not only in a generic way, but also in such a way that the technology can be located at different points in the system.

2.2.3.6. Verification of the Standard

It is not enough to produce a standard and collect cool technology. After the work is completed, evidence must be given to the industry that the work done indeed satisfies the requirements originally agreed upon, which may be considered as “product specification” for standardization. This is obviously also an important promotional tool for the acceptance of the standard in the marketplace.

At the end of a standardization campaign, the new standard will be verified by formal tests. Besides the verification of functionalities, the verification tests include the assessment of the coding performance for video and audio codecs. To this end, the new codecs are compared with previous versions of MPEG standards or carefully selected reference codecs. Setting up and performing meaningful verification tests can be a challenge and can require substantial effort and know-how. The MPEG group also has the ambition for its tests to be as objective and unbiased as possible. This is considered the only feasible way for the standard to gain credibility in the marketplace.

The challenge is not to test just one particular implementation of the standard and compare it to one particular product. More specifically, MPEG doesn’t want to test the performance of a particular encoder, as this is not covered by the standard. Comparing optimized commercial encoders with a reference implementation doesn’t give meaningful results. If you have ever tried to make sense out of results originating from, for example, a test performed by press people, you may have an idea how difficult this can be. Results of MPEG tests can be found on MPEG’s home page http://www.chiariglione.com [3].

2.2.4. What Is Actually Standardized by MPEG?

Standardization in general and MPEG in particular are facing a whole collection of challenges, which have been listed previously. One fundamentally important approach taken by MPEG to deal with those challenges is that it refrains from specifying a video or audio encoding machinery. To some people, that’s surprising. However, this fact has to be stressed and underlined several times as it is an essential aspect for any MPEG standard.

MPEG only specifies the bit stream syntax and the decoder semantics. MPEG specifies the bit stream by setting the rules for how to line up the ones and zeros in a sequence, in order to form a legal serial bit stream that carries the compressed media data. Specifying the decoder semantics means that MPEG specifies the meaning of those bits so that a decoder unambiguously knows how to process the incoming serial bit stream in order to recreate the original video data.

The actual encoding process, that is, the mechanism for creating the bits for the compressed representation of the original video data, is not covered by the standard. This holds for any type of visual data as well as for audio. Similar concepts are employed for other types of media standards as well, such as JPEG, JPEG2000, and the family of ITU video codecs denoted by H.26x.

This concept of seemingly restricted standardization enables the further progress of encoding technology. The lack of encoder specification allows for competition around who has the best encoder (best image quality for the bit), the fastest encoder, or the cheapest encoder. Competition on the decoder side concerns price, performance, and features of the decoding device.

How does this concept then play out? Everybody can create an encoder to his or her liking. In theory, you can even use a text editor to create a legal video file that can be played back on a compliant decoder. The only requirement is that the created bit stream has its syntax bits set according to the specification. Nowhere in the standard does it say anything about the pictures needing to look good. The fact that a coded bit stream adheres to the specification set out by MPEG does not automatically mean that the decoded images will look good when played back by a decoder and watched on a screen. The achievable quality of the decoded audio or video streams is determined by the capabilities of the encoder. And the encoder can be anything from a very simple and cheap piece of software that has to rely on manual interaction from a human operator, resulting in a very slow encoding process, to a highly sophisticated real-time–capable device that has gone through many cycles of optimization.
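The division of labor between syntax, decoder semantics, and an unspecified encoder can be illustrated with a toy example. The bit stream layout below is purely hypothetical (an 8-bit start code, 12-bit width and height, an 8-bit sample count, then 8-bit samples) and has nothing to do with the real MPEG syntax; it only sketches how a decoder follows fixed rules while the stream itself may come from any source, including a hand-typed hex string.

```python
from dataclasses import dataclass

START_CODE = 0xA5  # hypothetical marker, not an MPEG start code


@dataclass
class Picture:
    width: int
    height: int
    samples: list


class BitReader:
    """Reads big-endian bit fields from a byte string."""

    def __init__(self, data: bytes):
        self.data = data
        self.pos = 0  # current position, counted in bits

    def read(self, nbits: int) -> int:
        value = 0
        for _ in range(nbits):
            byte_index, bit_index = divmod(self.pos, 8)
            if byte_index >= len(self.data):
                raise ValueError("bit stream ended unexpectedly")
            bit = (self.data[byte_index] >> (7 - bit_index)) & 1
            value = (value << 1) | bit
            self.pos += 1
        return value


def decode(data: bytes) -> Picture:
    """The 'standardized' part: syntax order plus the meaning of each field."""
    reader = BitReader(data)
    if reader.read(8) != START_CODE:
        raise ValueError("not a legal stream: bad start code")
    width = reader.read(12)
    height = reader.read(12)
    count = reader.read(8)
    samples = [reader.read(8) for _ in range(count)]
    return Picture(width, height, samples)


def encode(picture: Picture) -> bytes:
    """One possible encoder; assumes every field fits its bit width."""
    header = (
        (START_CODE << 32)
        | (picture.width << 20)
        | (picture.height << 8)
        | len(picture.samples)
    )
    return header.to_bytes(5, "big") + bytes(picture.samples)


# A stream "typed in a text editor": start code, width 16, height 8, 2 samples.
hand_typed = bytes.fromhex("a5 010 008 02 ff 00".replace(" ", ""))
print(decode(hand_typed))
print(encode(decode(hand_typed)) == hand_typed)
```

The decoder neither knows nor cares whether the bytes came from `encode` or from the keyboard. It also makes no judgment about whether the two samples form a sensible picture, which mirrors the point that a legal stream is not necessarily a good-looking one.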

The MPEG-4 standard opens new frontiers in the way users will play with, create, re-use, access, and consume audiovisual content. The MPEG-4 object-based representation approach, where a scene is modeled as a composition of objects, both natural and synthetic, with which the user may interact, is at the heart of the MPEG-4 technology.

2.2.5. The Standardization Process

MPEG follows a development process, the major steps of which will be explained in the next subsections.

2.2.5.1. Exploration Phase

MPEG is regularly exploring new fields of application in order to plan for new work items. In an initial step for strategic planning, MPEG members are asked for input in order to identify relevant new applications. Members understand “relevance” to mean that the applications feature properties that make it necessary or at least beneficial to develop a standard for these markets to unfold. This is a point that needs careful consideration by the group as a whole to avoid launching work items that will lead nowhere.

Through a sequence of technical discussions, MPEG identifies the technical functionalities that are needed by the applications taken into consideration. This discussion takes place in open seminars and workshops where industry experts are invited to present and discuss their subjects. The exploration phase typically lasts for about 6 to 12 months, depending on the extent of the search.

2.2.5.2. Requirements Phase

Based on those identified functionalities, MPEG collects and describes the technical requirements in such a way that a set of requirements can be identified that are common for different applications across the areas of interest. MPEG also identifies requirements that are not common but still relevant. In this phase, a scope of work is established.

As a final step in this phase, a public call for proposals is issued, asking all interested parties to submit relevant technology to fulfill the identified requirements and functionalities. The development of the requirements takes about 6 to 12 months, which somewhat parallels the exploration phase.

2.2.5.3. Competitive Phase

In the competitive phase, all submitted proposals are evaluated for their technical merits and compared to each other. The evaluation follows a well-defined, adequate, and fair process. The “beauty contest” can entail subjective testing, objective comparisons (e.g., coding performance and complexity), and evaluation by experts to fully assess the strengths and weaknesses of the submissions. In any case, the detailed evaluation process has been developed by MPEG members a priori, and is published openly, together with the call itself. This way all submitters of new technology know in advance how the performance of proposals will be assessed. As a result of the evaluation, the technology best addressing the requirements is selected as a starting solution, the base on which further development efforts towards the final standard will build. The competitive phase covers about 3 to 6 months, which partly overlaps with the requirements phase.

2.2.5.4. Collaborative Phase

During the collaborative phase, all the MPEG members collectively improve and complete the most promising tools identified at the evaluation. The group identifies the individual pieces of technology that offer excellent solutions to certain elements of the requirements and subsequently integrates them into the starting solution, which has been selected by the end of the competitive phase. So begins a collaborative process to draft and improve the standard. The collaboration includes the definition and improvement of a “Working Model,” which embodies an early version of the standard. The Working Model evolves by comparing different alternative tools with those already in the Working Model. This process is based on the so-called “Core Experiments” (CE), which will be explained in more detail in Section 2.2.7.2.

The collaborative phase is the major strength of the MPEG process—hundreds of the top experts in the world, from dozens of companies and universities, work together for a common goal. It is therefore no surprise that this super-team traditionally achieves excellent technical results, justifying the need for most companies to at least follow the process, even if they cannot be directly involved.

The collaborative phase lasts for 1 year following the completion of the competitive phase. The total process up to this point takes about 2 years.

2.2.6. Further Steps and Updates

The standardization process doesn’t actually end with the publication of the agreed-upon standard. The standard and its parts are a living thing that continues to develop even after it has “grown up.” This occurs by means of updates to the standard. These can be done in various ways, depending on the type of update. However, special emphasis is given to maintaining backward compatibility of the specification whenever this is a viable option, although it is not always possible. The latest addition of video coding technology to the visual part of MPEG-4 is commonly referred to as MPEG-4 AVC (Advanced Video Coding) or, alternatively, H.264. This is an example of how the standard can be updated while sacrificing backward compatibility in favor of significant technical improvements. A similar process has taken place in the audio coding regime, where AAC (Advanced Audio Coding) has been added to MPEG-2 as a separate part, which is not backward compatible with formats such as MP3.

The options for updating the standard allow new technology to be added as it becomes available and as it shows significant performance gains over the existing specification.

Another need to change the standard originates from the detection of errors in the specification after its completion. There is a particular process to amend the standard or to correct errors in the specification that are detected after completion and publication of the documents. To this end, MPEG issues Corrigenda to include the fixes in an updated version of the standard. A Corrigendum is a particular type of document to describe fixes for technical problems that have been detected in an already finalized and published part of a standard.

MPEG always keeps its door open and is alert to monitor the progress made in the ongoing search for technology enhancements. To aid in this process, MPEG issues “Calls for Evidence,” which allow interested parties to present new/improved technologies within the scope of work covered by MPEG’s charter. The new technology needs to demonstrate significant benefits to trigger new standardization actions. Those actions may include issuing amendments to the existing standards or initiating an entirely new standard.

2.2.7. Standardization Work in the Collaborative Phase

The previously described process is not rigid. Some steps may be taken more than once and iterations are sometimes needed. This was the case during the MPEG-4 project. The time schedule, however, is always closely observed by MPEG, and the convener in particular. Although all technical decisions are made by consensus, the process keeps running at a fast pace, allowing MPEG to deliver technical solutions and a stable standard in a timely manner.

As stated above, two working tools play a major role in the collaborative development phase that follows the initial competitive phase: the Working Model and Core Experiments (CE). Both elements will be described in more detail below.

2.2.7.1. Working Model

In MPEG-1, the (video) working model was called Simulation Model (SM); in MPEG-2, the (video) working model was called Test Model (TM). Every once in a while, you may run across a statement that tests of MPEG-2 video coding have been done on the basis of TM-5. This refers to the MPEG-2 Test Model version 5, which was the final version of the test model during MPEG-2. This version has been implemented in software for test purposes. It is freely available as open-source software and is sometimes loosely referred to as the “Eckard coder,” named after Stefan Eckard, a PhD student at Technische Universität München (TUM), who was responsible for a substantial part of the software implementation work.

In MPEG-4, the various working models were called “Verification Models” (VM). Besides the VMs for video, there were independent VMs for the audio, SNHC, and systems developments. A Verification Model consists of a textual description of the current version of the respective system, as well as a corresponding software implementation such that experiments performed by multiple independent parties will be based on a common framework. Hence, the experiments should produce essentially identical results. The VMs enable the checking of the relative performance benefits of different tools, as well as the measuring of performance improvements of selected tools.

The first MPEG-4 VMs were built after screening the submissions that came in answer to the call for proposals. The first VM (for each technical area) was not the best proposal but a combination of the best tools, independent of the proposal that they belonged to. Each VM includes an encoder (non-normative tools) as well as a corresponding decoder (normative tools). Even though the encoder will not be standardized, it is needed in order to create the common framework that allows the performing of adequate evaluation and comparison of coding tools. The goal is to continuously include in the VM the incremental improvements of the technology. After the first VMs were established, new tools were brought to MPEG-4 and were evaluated inside the VMs following a core experiment procedure. The VMs evolved through various versions as core experiments verified the inclusion of new techniques, or proved that included techniques should be substituted. At each version, only the best performing tools were part of the VM. If any part of a proposal was selected for inclusion, the proposer had to provide the corresponding source code for integration into the VM software under the conditions specified by MPEG.

2.2.7.2. Core Experiments

Now let’s clarify the term “Core Experiment,” which was mentioned above. The improvement of the VMs starts with a first set of core experiments defined at the conclusion of the evaluation of the proposals. The core experiments process allows for multiple, independent, directly comparable experiments to be performed to determine whether or not a proposed tool has merit. Proposed tools target the substitution of a tool in the VM or the direct inclusion in the VM to provide a new relevant functionality. Improvements and additions to the VMs are decided based on the results of core experiments. A core experiment has to be completely and uniquely defined, so that the results are unambiguous. In addition to the specification of the tool to be evaluated, a core experiment also specifies the conditions to be used, again so the results can be compared. A core experiment is proposed by one or more MPEG experts and is accepted by consensus, providing that two or more independent experts agree to perform the experiment.

It is important to realize that neither the Verification Models, nor any of the Core Experiments, will end up in the standard itself, as these are just working tools to ease the development process. Although it is not easy at this stage to tell how many core experiments have been performed in MPEG-4—dozens, for certain—it is safe to say that the group reached its goal by continuously improving upon the technology to be included in the standard.

2.2.8. Interoperability and Compliance

One of the major reasons to create standards is to achieve interoperability. This term appears in any discussion dealing with standards. Interoperability should be seen as closely related to the topic of compliance. Let’s discuss both those concepts, since they are central to any MPEG standard and MPEG-4 in particular.

2.2.8.1. Interoperability

A standard specifies technology for use in devices. If the devices are implemented according to the standard specification, all devices, irrespective of the specific manufacturer, are working more or less in the same way, or at least in a specified way. This implies that devices originating from different manufacturers can be interchanged without interrupting the functionality offered by the device. If this sort of exchangeability is achieved, we call the individual devices “interoperable.” Note that interoperability does not imply that the products of all manufacturers are identical. This would preclude competition, which is certainly not the intention of MPEG. However, there must be a minimum set of rules that every product or device is complying with, such that products originating from different companies can work together in a seamless fashion. That’s what a user expects from his or her cell phone or TV set, or even from the paper he or she puts into a printer or copy machine; that is, irrespective of who has manufactured the phone, the user would like to be able to place or receive calls without even thinking of the type of telephone his or her communication partner on the other end is using.

In the context of this book, we talk about interoperability if the encoder device of company A produces bit streams such that the decoder for company B can decode those streams correctly. Decoding correctly means that the decoder for company B can create a reconstruction of the original media data that is practically identical to the reconstruction generated by the decoder of company A. Devices are truly interoperable if this scenario also works the other way round; i.e., decoder A can reproduce the media data that have been created by encoder B so that they are practically identical to the decoding of the same data by decoder B.

If there are only two parties involved, then this doesn’t appear to be a significant challenge for either company A or company B. However, consider the real world for a second, where there are many solution providers offering their products in the marketplace. It is a challenge then to make sure that all decoders from all companies can decode each other’s streams successfully. It is hardly possible that all companies can cooperate at a level to achieve a complete mutual testing. Imagine how difficult it might be if the companies are competitors.
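A rough count shows why complete mutual testing does not scale. The sketch below assumes, purely for illustration, that every vendor ships one encoder and one decoder, and that checking against a shared specification costs one encoder check and one decoder check per vendor; neither assumption comes from the MPEG process itself.

```python
def cross_vendor_pairs(vendors: int) -> int:
    """Encoder/decoder pairings between different vendors (A->B and B->A)."""
    return vendors * (vendors - 1)


def conformance_checks(vendors: int) -> int:
    """Assumed cost of testing each vendor's encoder and decoder once
    against a common specification instead of against each other."""
    return 2 * vendors


for n in (2, 10, 50):
    print(n, cross_vendor_pairs(n), conformance_checks(n))
```

For two vendors the difference is negligible, but for ten vendors the mutual approach already needs 90 pairings against 20 checks under these assumptions, and the gap widens quadratically from there.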

As end users, we are used to buying cell phones, for example, from a wide range of companies. We expect that we can use any of those phones, which are labeled as implementing the GSM standard, to perform mobile communications in any GSM network. We don’t care who is building the GSM network components used in the telephone infrastructure, nor do we care which company’s cell phone our communication partner is using, and we shouldn’t have to. This is what the standard is supposed to achieve: true interoperability, which does not come for free.

2.2.8.2. Compliance

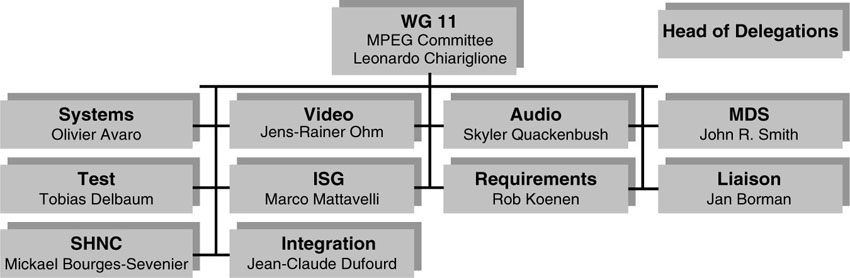

Interoperability is achieved by requesting the devices to be compliant with the standard. But what does compliance mean and how is it achieved? First, recall that the standard only specifies the bit stream syntax and the decoder. Thus, we talk about compliant bit streams and compliant decoders. Strictly speaking, there is no such thing as a compliant encoder. However, we may use this term loosely if we agree that a compliant encoder is a device that is producing legal and thus compliant bit streams.

To make a bit stream compliant, the standard defines which syntactic elements are allowed to appear in a stream. For a decoder to be compliant, the standard defines how the decoder is supposed to act on the received bit stream of digital data offered to the input.

The standard specifies so-called “conformance points.” Each conformance point consists of two ingredients: 1) the list of syntactic elements that are admissible in a stream; and 2) quantitative bounds on parameters such as maximum permissible image size, frame rate, bit rate, and sampling rate. Such conformance points are defined by the profiles and levels of the specification, which we will discuss in more detail in a later chapter.

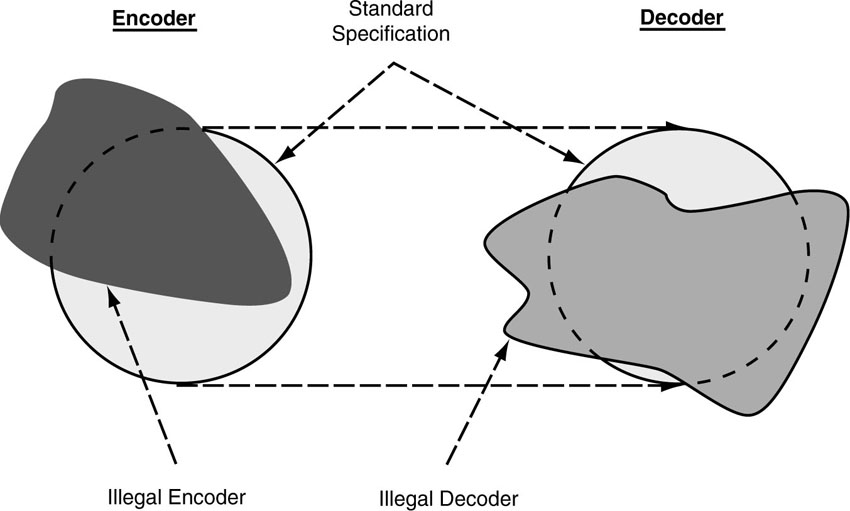

A decoder that is compliant with a given conformance point must be able to decode all compliant bit streams, i.e., all bit streams that adhere to the specified syntax and stay within the specified upper bounds on quantitative parameters such as maximum bit rate and maximum image size. In other words, the decoder has to implement all decoding tools that the standard requires, and provide at least as much memory and processing power as necessary. The decoder may offer additional features, more computing power, or more memory without causing a problem: it may do more than the specification requires, but it may never do less and still be compliant. See Figure 2.3 for a graphic representation of this.

Figure 2.3 Concept of a compliant decoder and a matching encoder.

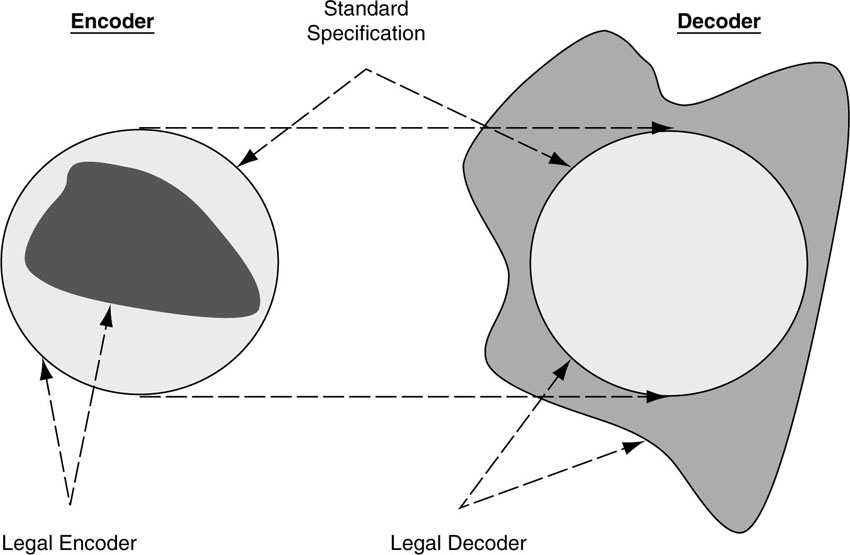

As mentioned before, the encoder is not explicitly addressed by the standard. However, the encoder is not allowed to produce bit streams that exceed the specified capabilities of a compliant decoder. To meet this requirement, the encoder need not use all of the coding tools that are specified; it may restrict itself to a subset. In Figure 2.4, a scenario is depicted schematically in which the decoder fails to be compliant and the encoder fails to produce compliant bit streams.

Figure 2.4 Violation of the concept of compliance.
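The relationship between conformance points, compliant bit streams, and compliant decoders can be sketched in a few lines of code. This is only an illustrative model under simplifying assumptions, not the procedure defined by the standard: the class names, the choice of parameters, the tool names, and all numbers are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    """Quantitative bounds (hypothetical selection of parameters)."""
    width: int
    height: int
    frame_rate: float
    bit_rate: int  # bits per second

    def within(self, other: "Limits") -> bool:
        """True if every parameter is no larger than the one in `other`."""
        return (self.width <= other.width
                and self.height <= other.height
                and self.frame_rate <= other.frame_rate
                and self.bit_rate <= other.bit_rate)


@dataclass(frozen=True)
class ConformancePoint:
    """Admissible syntactic elements plus upper bounds on parameters."""
    tools: frozenset
    limits: Limits


def stream_is_compliant(tools_used, params, point):
    """A stream may use a subset of the tools and must stay within the bounds."""
    return frozenset(tools_used) <= point.tools and params.within(point.limits)


def decoder_is_compliant(tools_supported, capacity, point):
    """A decoder must support every tool and handle at least the bounds."""
    return (point.tools <= frozenset(tools_supported)
            and point.limits.within(capacity))


# Made-up conformance point; the tool names and numbers are illustrative only.
point = ConformancePoint(frozenset({"tool_a", "tool_b"}),
                         Limits(352, 288, 30.0, 384_000))

# An encoder may use fewer tools and smaller parameters than allowed.
print(stream_is_compliant({"tool_a"}, Limits(176, 144, 15.0, 64_000), point))
# A decoder may offer more than required, but never less.
big = Limits(720, 576, 30.0, 2_000_000)
print(decoder_is_compliant({"tool_a", "tool_b", "tool_c"}, big, point))
print(decoder_is_compliant({"tool_a"}, big, point))
```

The asymmetry is the point of the sketch: for a stream the subset test runs from the stream to the conformance point, while for a decoder it runs from the conformance point to the decoder. The last call fails because that decoder lacks a required tool, even though its memory and processing capacity are more than sufficient.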

2.2.9. Examples of Successful Standards

Let us give you a few examples of successful standards that have shaped the technology scene and provided stable technology, helping to enable sustained market growth and long-term business development.

2.2.9.1. NTSC/PAL Broadcast Television

NTSC and PAL are standards for analog color television predominantly in the U.S. and Europe, respectively. The NTSC standard was finalized and implemented in 1953, while PAL started in 1961 with black and white pictures and turned into a color TV standard by 1968. Both standards are still used today. There are billions of TV sets around the world—probably even more TV sets than telephones. Business has been good and competition fierce, while the technology has been kept transparent to the end users, in spite of technical improvements to the TV sets as well as the TV cameras and the sender infrastructure. If you happen to still own an operational NTSC TV set from the 1950s, it probably still produces good pictures today. Longevity and widespread use of the standard helped both to develop markets by bringing the cost for end consumers down, and to guarantee the longevity of the investment for the operators.

2.2.9.2. GSM—Mobile Cell-Phones

Telecommunications is a domain in which many standards were developed and deployed over a long period of time. The telecommunications services and markets would not have evolved without the existence of standards. In particular, consider the case of GSM—a European standard for mobile communications, which is used in most countries around the world. A user can take his GSM cell phone from Germany to Australia, and still be reachable at his regular phone number without having to know how it actually works. It is conceivable that the mobile communications market would not have lifted off the ground with this incredible speed and worldwide success without the existence of such a standard. Here are just a few additional facts to demonstrate the enormous success of the GSM standard:

• GSM has about 1 billion users worldwide.

• GSM can be used in 200 countries around the world. To offer some perspective, note that McDonald’s is present in 100 countries, Coca Cola in 130 countries, and the United Nations in 180 countries.

• At its peak, the income for the EU by exporting GSM equipment throughout the world exceeded the revenues made by selling cars!

There are several factors that were responsible for this huge success of GSM, such as the technical quality of the standard, which was fostered by the joint willingness of all participating parties to come to an agreement (strong Memorandum of Understanding) through cooperation, and to standardize the result as an open specification. Also, the roll-out of GSM was not seriously slowed by patent issues. The existence of the GSM standard was the single most important reason for the explosion of the mobile communication market as we have experienced it throughout the last decade.

If you happen to still own a GSM cell phone of the first generation, you may notice that it still works flawlessly in today’s system. The people responsible for the standard were very professional in formulating upgrade policies that guaranteed the backward compatibility of the latest systems with previous versions. This professionalism is responsible for the longevity of the GSM standard. This longevity in turn makes long-term investments in GSM infrastructure economically reasonable. Finally, there is no shortage of competition at the service and application level as well as on the manufacturers’ side. All this competition helped to shape the market and to make mobile communication a compelling offering to the general public. In contrast to this, think of the situation when dealing with updates and upgrades in the PC world, or the evolution of streaming media in terms of market sizes and revenues. Both cases show what a difference it makes to lack a commonly accepted standard that provides longevity and broad market acceptance.

A standards story that makes worthwhile reading is the development of the DVB standard (Digital Video Broadcasting) for building digital TV systems in Europe. A good starting point to learn more about the DVB project is the Web site www.dvb.org.

2.2.9.3. Paper Formats, ASCII, and Programming Languages

The existence of the DIN standard for document sizes, be it A formats or B formats, is yet another example of a successful standard. Based on the standardized document formats, it is easy to exchange documents, to build printers, copy machines, and so on. It is so omnipresent that we do not actively think about the benefits originating from such a standard.

Similar statements are true when it comes to the ASCII code or to the standardization of programming languages such as ANSI C to achieve portability of source code.

There are many more such examples of successful standards in our daily lives.

2.3. The MPEG Saga So Far

Before going further with the topic of MPEG-4, we would like to review the MPEG saga so far. As mentioned previously, there are other standardization organizations dealing with compression technology, most notably the ITU, which has a working group called the VCEG (Video Coding Experts Group). Formally, this group is part of ITU-T Study Group 16. There is both cooperation and competition between MPEG and the ITU when it comes to coding video. In this section, we will highlight the connections between the two groups regarding video coding. The ITU is more concerned with standards for communications applications. The main focus for the ITU has been on video conferencing or video telephony, whereas MPEG is more concerned with, but not confined to, consumer applications and consumer electronics.

2.3.1. Pre-MPEG Accomplishments

There have been standards for compression before the existence of MPEG. MPEG actually started in 1988. Before the first MPEG standard was completed, there was another very successful standard published—JPEG (Joint Photographic Experts Group), which specifies compression for still images. JPEG is also a standard issued by the ISO, by Working Group 1 of SC29. Think of JPEG as the older brother of the MPEG standards.

Besides the ISO, there are also a number of excellent standards in the field of audio-visual compression issued by the ITU. The ITU’s video coding standards are typically named H.26x, where x stands for digits currently ranging from 1 up to 4. The first real video coding standard, predating the first MPEG standard, was H.261. This standard already comprised the fundamental concept of a motion-compensated hybrid video codec, which is the basis of all video coding standards to date. This basic concept has been improved over time by new standards and adapted to certain application domains to achieve progressively better compression. H.261 target bit rates for compressed video lie in the range between 64 kbit/s and 2 Mbit/s. In fact, the standard is sometimes referred to as p×64, since the target bit rates are multiples of 64 kbit/s. Interestingly enough, the development of this standard was largely driven by the vision of teleconferencing over ISDN channels, which can be bundled in multiples of 64 kbit/s. The H.261 standard is still used today, largely because both its encoding and its decoding algorithms are simple.
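The bit rate steps of H.261 follow directly from the bundling of 64 kbit/s ISDN channels. As a small worked example, the sketch below lists the resulting target rates. It assumes that the multiplier (called p here) runs from 1 to 30, which gives a top rate of 1920 kbit/s and matches the range of roughly 64 kbit/s to 2 Mbit/s quoted above; the function name is chosen only for this illustration.

```python
ISDN_CHANNEL_KBITS = 64  # capacity of one bundled ISDN channel in kbit/s


def h261_target_rates(max_channels: int = 30) -> list:
    """Target bit rates in kbit/s for p = 1 .. max_channels bundled channels."""
    return [p * ISDN_CHANNEL_KBITS for p in range(1, max_channels + 1)]


rates = h261_target_rates()
print(rates[0], rates[1], rates[-1])  # lowest step, second step, highest step
```

With the assumed range, the lowest step is 64 kbit/s, the second is 128 kbit/s, and the highest is 1920 kbit/s.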

Various software implementations as open source code are available on the Web. The technology is conceptually simple and already includes many of the fundamental principles for coding video. H.261 was first published in 1990. The fact that the teleconferencing business did not really take off was another motivation for researchers around the world to find even better video and audio compression, as well as to enable the video telephony business.

2.3.2. MPEG-1: Coding for Digital Storage Media

The MPEG saga officially began with the development of the MPEG-1 standard, which is officially referred to as ISO/IEC 11172. MPEG-1 was designed with the target in mind of storing and retrieving video and audio on a compact disc (CD). The standardization effort was finished in November 1992, when MPEG-1 was approved. CD-ROM drives at that time were limited to a bit rate of about 1.5 Mbit/s. This is why MPEG-1 is usually only credited with supporting bit rates up to 1.5 Mbit/s for video and audio. However, note that MPEG-1 is not actually constrained to this bit rate, but can be used for higher bit rates and image sizes. For example, DirecTV started out with their direct satellite-based broadcasting service using MPEG-1 codecs for compressing standard TV resolution images. They later switched to MPEG-2, as soon as the new standard was finished and corresponding products were available. MPEG-1 audio coding is also used for compression in the DAB (Digital Audio Broadcasting) system, a terrestrial transmission system for delivering digital radio services as a replacement for conventional FM systems.

The Video CD (VCD) uses the MPEG-1 standard, which has turned out to be a success mainly in China, where the format is often used to distribute movie material. The distribution model didn’t include a working royalty system and the recognition and protection of copyrights. However, in spite of this deficiency, decoder hardware manufacturers didn’t complain about the business. MPEG-1 has also been used as a format for video clips on the Web in its early days. So far in China alone, more than 70 million Video CD players have been sold.

MP3 (MPEG-1 Audio Layer III) has turned into a buzzword denoting high-quality audio on the Web, used for downloading music and sharing it over peer-to-peer networks. It has changed forever the way people experience music. MP3 files are small enough to be downloaded from the Internet or stored on small mobile devices, while offering stereo audio of acceptable quality. In this regard, MP3 audio coding technology has created a new market and a new paradigm for content use. With peer-to-peer file-sharing systems such as Napster and Gnutella, it is clear that MP3 also has changed the world for people in the music business. This is a somewhat two-edged success story for the MPEG-1 standard. Whatever the business implications are, the technology opened up a completely new way of dealing with high-quality audio material, most notably with music on the Internet. The case of copyrights on music and illegal copying and distribution of music titles is still open and widely discussed. Once the rights of consumers and copyright holders find a point of equilibrium, the future of MP3-coded digital music will be ensured.

Personal computers using the Microsoft Windows operating system have a native MPEG-1 player installed. In addition, many compact and lightweight portable MPEG-1 cameras exist, which have become increasingly popular. MPEG-1 audio and video compression is still in use due to the wide availability of hardware and software solutions, and the familiarity of developers and users alike with the technology and products.

It is also still in use among video hobbyists and students for the distribution of downloaded movie material, much to the dismay of the major studios in Hollywood. The format is being used for creating low-budget CDs to store and play movies instead of using more costly DVDs. VCDs can be played back on most DVD players. SVCD is an extension of the VCD, which uses MPEG-2 codecs instead of MPEG-1. Other than the above examples, the MPEG-1 video system business appears to be on the decline.

All in all, MPEG-1 has been a substantial success, even though it has been used for a number of applications which were not necessarily envisioned at the time the standard was developed. It is actually not so unusual for technology to be developed for a certain application domain, only to find use in another field, as well.

2.3.3. MPEG-2/H.262 Coding for Digital TV and DVD

Two years after MPEG-1 was released, in November 1994, MPEG delivered its next stellar product, the MPEG-2 standard, which is officially referred to as ISO/IEC 13818. The driving force and main motivation behind MPEG-2 was to offer compression technology to support the migration to digital television services. MPEG-2 is the result of cooperation between the ITU and MPEG. Thus, the same technology has been standardized by the ITU under the title H.262. The arrival of this standard triggered the creation of an entirely new industry, digital television. DirecTV, Canal+, and Premiere, for instance, are all digital television broadcast services that are based on the MPEG-2 standard.