2. Motion Capture Case Studies and Controversy

Chapter outline

Digital Humans and the Uncanny Valley

Motion capture, performance capture, Satan's rotoscope—call it what you may, it is a technology that rose to the top of the hype machine among entertainment industry watchers, and then became a topic of major controversy for various reasons.

The first of many problems was the initial perception of what could be done with motion capture. Years ago, when the technology was first used to generate animated characters in three dimensions, the results amazed most people because nobody had ever seen realistic human motion used for 3D character animation. Many people in the industry thought they had found the replacement for the traditional character animator. However, because the captured motion data available in those days was very noisy and expensive, it quickly became clear that it wasn't going to replace manual labor any time soon. In addition, off-the-shelf computer animation software with user-friendly interfaces such as Maya started to emerge, which helped more artistic and less technically oriented people enter the business. Character animators started to work in three dimensions.

In the late 1980s, the concept of the motion capture service bureau was born. Equipped with the latest in optical and electromagnetic technology, these studios detected the need for cheaper methods of character animation. The video game industry quickly became the first entertainment sector to sign up, finding the data fast and cost-effective. In fact, video game developers are still the number one consumers of optical motion capture services, with about 70% of the total usage. Larger video game publishers, such as Electronic Arts, have in-house motion capture studios that their game developers use, but smaller developers still use the service bureaus.

The next logical market for service bureaus to pursue was film and television, but there was one problem: The applications were very different from video games. Video game characters were extremely low detail, and the motion data didn't need to be very clean. Most characters didn't even need to stay firmly on the ground. Also, most service bureaus didn't have anyone familiar with computer animation or even performance animation, and motion capture equipment manufacturers had even less knowledge of the subject, especially since their main market wasn't entertainment. This lack of knowledge combined with the desire to break into the entertainment industry drove manufacturers and service providers to make the same outrageous claims that had been made years ago: “Motion capture can replace character animators.” This time around, the technology was more affordable and readily available for purchase, so the studios and the press started listening. The media still loves to show people geared up with markers, especially if it is a celebrity performing for a digital character. On almost every motion capture job that I have worked on, there has been a “behind the scenes” crew videotaping the session. Motion capture would be the headline even if it wasn't ultimately used on the project. Many media reports on the subject also claimed that the technology would eventually replace traditional animators.

Some studio executives who got wind of the “new” technology through the media decided to study the possibility of integrating it into their special effects and CG animation projects. Most of these studies consisted of having some data captured by a motion capture studio and then testing it on a particular character. The results were misleading, because the studies didn't really include what the motion capture people went through to clean up the data and apply it to the character. They only considered cost, but didn't take into account that motion capture studios were often discounting the price of the data in order to get in the door. In addition, the motions studied were usually walk cycles and other simple moves that are less complicated to process than the average movements for a typical film or game project.

This motion capture frenzy was further fueled by the success of Toy Story as the first CG animated feature-length film. Performance animation was seen as a way to achieve the same success at a fraction of the cost. It was also heralded as the key to cost-effective CG television cartoons. At TSi, there was a period when studios would show up and ask us to bid on capturing data for full-length feature film projects or television shows without even having a specific project yet. It really didn't matter what the content was, as long as it was motion captured. In the studios' views, such a project would cost less and guarantee great press exposure, especially if an actual movie star performed the character.

Project-driven purchases of expensive motion capture equipment happened at many studios, including Warner Brothers, DreamWorks, and smaller companies. In almost every case, the equipment did not end up being used for the project for which it was intended, resulting in budget and creative problems and sometimes even the cancellation of projects. These studios were led to believe that any character-animated shot could be achieved with motion capture at a lower cost, even shots with cartoon characters that required stylized motion and complex interactions with objects and other characters. In many cases, character animators were handed captured motion data and asked to modify it, a big mistake in my opinion, which only served to generate more heat against the technology.

For many years, motion capture couldn't find a place in the film industry. Some studios used it to create digital stunts and some background character work, but the technology had an overall bad reputation in the industry. It wasn't until 2004, when Robert Zemeckis released The Polar Express, that motion capture started to find its place in film, although not without some new controversy.

The Screen Actors Guild first noticed performance capture when James Cameron released Titanic in 1997. The guild became concerned during the shooting of The Polar Express, and that concern has grown with the proliferation of performance capture films to the point that SAG has recently formed a national Performance Capture Committee. The guild will soon try to renegotiate its master contract with producers to cover performance capture under the same terms as other acting work.

Furthermore, none of the actors on Avatar was nominated for an Oscar, even though the film broke all box-office records and was nominated for Academy Awards in most categories. And to make things even more interesting, the Academy has recently ruled that performance capture films can no longer be considered for Academy Awards in the Best Animated Feature category.

Regardless of who authors or owns the performance and whether performance capture should be treated as any other acting job, there is still the issue of look and appropriateness. What kinds of characters should be performed versus animated? Is it photo-real or stylized humans? Stunts only or also main performances? Nonhuman characters? Cartoons?

Performance capture has been used successfully in creating photo-real humans for films like Titanic and others that didn't include main performances. It has also been used successfully in creating stylized humans in films like Monster House. Nonhuman photo-real creatures are clearly a great use of performance capture, as evidenced by Gollum and the Na'vi in Avatar. Even cartoony characters have been created successfully; the perfect example is Happy Feet, which went on to win the Academy Award for Best Animated Feature and will be the only performance capture film to do so. Films like The Polar Express and Beowulf had success, but some say their look fell short, which is attributed to the fact that the characters were intended to look as real as possible.

Digital Humans and the Uncanny Valley

During my career in visual effects, I have sometimes had to suggest whether a certain shot should be carried out using performance animation. Even though I am very careful in suggesting the use of motion capture and do so only when I feel that it is vital for the shot, I still meet with some resistance from the character animation group. Years ago, suggesting the use of motion capture was like telling a character animator that his or her job could be done faster and better in some other way. Also, motion capture had a reputation for being unreliable and unpredictable, and animators were afraid of being stuck with having to fix problems associated with it. Many of today's experienced character animators who work with computer-generated (CG) images have had such encounters. Many of them have stories about having to clean up and modify captured motion data, only to end up replacing the whole thing with keyframe animation. I saw this firsthand on The Polar Express, and I've heard animators claim that most mocap data was dropped in favor of keyframe animation in projects like the Lord of the Rings films and even Avatar. Also, many animators dislike the look of performance animation. Some say it is so close to reality that it looks gruesome on CG human characters; others say it is far from real. In the past few years, things have changed and motion capture has repaired most of its reputation. It is no longer such a divisive, almost religious issue in the industry, but there are still some who resist it strongly.

The end result of applying performance animation to digital human characters can indeed look strange, but it isn't only because of the performance data. Creating a digital human is a very difficult endeavor. Since we know what a human looks like down to the last detail, anything that is not perfect will jump out. We have seen this effect in most digital humans and there's even a name for it: the “Uncanny Valley.” It isn't clear what causes it, but some people think that it is all due to the eyes. Our brains are so well trained to know what a human is supposed to look like that something as small as a twitch in the eye or the wrong dilation of the pupil can make it all seem strange.

Jerome Chen was the visual effects supervisor for Beowulf and The Polar Express. He traces the origins of the term “Uncanny Valley” to Japanese robotics, where the intention was to create a synthetic clone of a human and make it as lifelike as possible. “The theory of uncanny valley is the closer you approach to making something artificially human the higher the level of revulsion occurs in the human observing,” points out Chen, “which is why if you have very stylized animation, like there's no issue of uncanny valley in terms of mocap being used for Monster House, because the characters are really stylized. The problem with [The] Polar [Express] and Beowulf is not the fact that they were performance captured, I think it had to do to the fact that maybe the characters weren't stylized enough. It was the level of realism in the designs of what the characters looked like.”

In most digital human attempts, animators end up creating the eyes' performance. There have been attempts to capture eye lines and eye motion using electronic sensors, but most of those attempts haven't worked because the final eye line almost always differs from the one at the capture stage. When animators work on the eye movements they usually animate the voluntary motions, but eyes have many involuntary movements that end up being left behind, and without those it is difficult to sell a digital human. Also, how many people know how to create a gaze versus a look? Sounds easy, but it really is quite complicated. There are several kinds of eye motions and lots of research on the subject. Saccadic motions, for example, are fast simultaneous motions of both eyes in the same direction. These occur differently depending on whether the person is listening to someone else or talking to someone. There are other involuntary motions such as microsaccades, oculovestibular reflexes, and others. Also, body language may be linked to eye motions and eye line in a much deeper way than we know and our subconscious can tell the difference.

Eyes and perhaps skin and minor facial expressions are the reason why we have been able to take digital humans 98% of the way, but the remaining 2% is the most elusive. Creatures that look close to human but not quite have been very successful because there's no point of reference for the last 2%. A perfect example is the Na'vi creatures in Avatar. “If we had a human character we would shoot them live action,” says Jon Landau, producer of Avatar, “that's how we would approach it.”

Jerome Chen thinks that it also has to do with the fact that when we are looking at synthetic humans on screen we wonder why they weren't photographed for real. “Essentially you become frustrated, because all you're doing is picking things that are missing,” he says. “It's an artistic choice. If the director wants to pursue the photorealistic human and you are in the position of helping him create that vision you have to go along for that ride, and you learn a lot. There're some valuable lessons you learn in trying to accomplish that, which is why digital stunt doubles have become state of the art now.”

Relevant Motion Capture Accounts

In the recent past, the use of performance animation in a project was largely driven by cost. Many projects failed or fell into trouble due to lack of understanding, because the decision to use motion capture was taken very lightly and made by the wrong people and for the wrong reasons. Some of these projects entered into production with the client's informed knowledge that motion capture may or may not work, and a budget that supported this notion. Other clients blindly adopted performance animation as the primary production tool, based on a nonrepresentative test or no test at all, and almost always ran into trouble. In some cases, the problems occurred early in production, resulting in manageable costs; in other cases, the problems weren't identified until expenses were so excessive that the projects had to be reworked or cancelled.

Projects such as Marvin the Martian in the 3rd Dimension, the first incarnation of Shrek, Casper, and Total Recall were unsuccessful in using performance animation for different reasons. Others, such as Titanic and Batman and Robin, were successful to a certain degree: They were able to use some of the performance animation, but the keyframe animation workload was larger than expected. A few projects managed to successfully determine early in production whether it was feasible to use motion capture or not. Disney's Mighty Joe Young, Dinosaur, and Spider-Man are good examples of projects in which motion capture was tested well in advance and ended up being discarded.

In live-action films, large crowd scenes and background human stunt actions are some of the best applications. Character animation pieces, such as Toy Story or Shrek, will almost never have a use for performance animation, but let's not forget Happy Feet.

Total Recall

In 1989, Metrolight Studios attempted what was supposed to be the first use of optical motion capture in a feature film. The project was perfect for this technology and was planned and budgeted around it, but it somehow became the first failure of motion capture in a feature film.

The project was the futuristic epic Total Recall, starring Arnold Schwarzenegger. The planned performance animation was for a skeleton sequence in which Arnold's character, the pursuing guards, and some extras, including a dog, would cross through an X-ray machine. The idea was for characters to have an X-ray look.

Metrolight hired an optical motion capture equipment manufacturer to capture and postprocess the data. The motion capture session had to be on location in Mexico City, and the manufacturer agreed to take its system there for the shoot. The manufacturer also sent an operator to install and operate the system.

“We attached retro-reflective markers to Arnold Schwarzenegger, about 15 extras, and a dog,” recalls George Merkert, who was the visual effects producer for the sequence, “then we photographed the action of all of these characters as they went through their performances.” Regarding the placement of the markers, Merkert recalls that the operator “advised Tim McGovern, the visual effects supervisor, and me about where the markers should be on the different characters. We placed the markers according to his directions.”

They captured Arnold's performances separately. The guards were captured two at a time, and the extras were captured in groups of up to 10 at a time. Even by today's standards, capturing that many performers in an optical stage is a difficult proposition unless the equipment is state of the art. “It seemed a little strange to me; I didn't see how we could capture that much data, but the operator guaranteed us that everything was going to be okay,” recalls Merkert. The motion capture shoot went smoothly as far as anybody could tell, but nobody knew much about motion capture except for the operator sent by the manufacturer. Therefore, nobody would have been able to tell if anything went wrong.

After the shoot, the operator packed up the system and went back to the company's headquarters in the United States to process the captured data, but no usable data was ever delivered to Metrolight. “He [the operator] needed to do a step of computing prior to providing the data to us so that we could use it in our animation, and we never got beyond that step. He was never able to successfully process the information on even one shot. We got absolutely no usable data for any of our shots,” says Merkert. “They had excuses that it was shot incorrectly, which it may well have been. My response to that was, ‘Your guy was there, telling us how to shoot it. We would have been happy to shoot the motion capture any way that you specified. The real problem is that you never told us what that right way was even though we asked you every few minutes what we could do to make sure this data gets captured correctly.’” The production even sent people to the motion capture manufacturer to see if anything could be done to salvage any data, but all of it was unusable.

“I think what happened is that their process just entirely melted down, didn't work. They couldn't process the data and they were unwilling to say so because they thought they would get sued. It wound up costing my company maybe three hundred thousand dollars extra,” notes Merkert. “Regardless that the motion capture simply didn't work, we were still responsible to deliver to our client. The only way we could do that was by using the videotapes, which were extremely difficult to use for motion tracking. Unfortunately, because the motion capture company advised that we do it in this way, we had lit the motion capture photography in such a way that you could hardly see the characters to track them. You could see the retro-reflective balls attached to the characters very well, but you couldn't see the body forms of the characters very well.” Optical systems in those days worked only in very dark environments. Almost no lighting could be used other than the lighting emitted from the direction of the cameras. “Our efforts were very successful, however. Tim McGovern won the 1990 Academy Award for Best Visual Effects for the skeleton sequence in Total Recall,” he adds.

Many lessons can be learned from this experience. First, a motion capture equipment manufacturer is not an animation studio, and thus may have no idea of the requirements of animation. In this case, the operator didn't even know the system well enough to decide whether it should be used to capture more than one person at a time. This brings us to the second lesson: Motion capture always seems to be much easier than it really is. It must be approached carefully, using operators with a track record. Extra personnel are also needed to make sure nobody is creating any noise or touching the cameras. Third, if possible, never capture more than the proven number of performers at a time. In this example, it was clear that each performer could have been captured separately because they didn't interact, but it was decided not to do so. Fourth, both the captured data and calibration must be checked before the performers take off the markers and leave the stage, and extra takes of everything must be captured for safety. Fifth, marker setups have to be designed by experienced technical directors and not by operators who know little about animation setup requirements. Sixth, when working on an unknown remote location, the motion capture system has to be tested before and during the session. Extra care must be taken because lighting conditions and camera placements are always different from those in the controlled environment of a regular motion capture studio.

Shell Oil

After 1992, many companies were involved in commercial work using motion capture. A campaign that was especially prominent was one for Shell Oil that featured dancing cars and gasoline pumps. It was produced by R. Greenberg and Associates in New York using a Flock of Birds electromagnetic device manufactured by Ascension Technology Corporation.

Fred Nilsson, now a senior animator at PDI, worked at R. Greenberg in 1994 when the Shell campaign was produced. “We got the main skeleton guys from Softimage to come down and they taught us how to build a skeleton for motion capture,” recalls Nilsson. “We set up a skeleton that had goals where all the motion capture points were, and then there was another skeleton on top that was a parent of all the joints of the other skeleton, so that we could capture the motion and then animate on top of the other skeleton.” The main complaint that Nilsson had about the motion capture data was that the characters were never firm on the floor, but the second skeleton was used by the animators to ground the characters, with the help of inverse kinematics.
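The layering Nilsson describes, with the captured motion kept intact underneath and corrections animated on top, can be illustrated with a deliberately simplified sketch. It is not the R. Greenberg or Softimage setup: it stands in for the inverse kinematics pass with a one-axis correction on a foot's height, and the names `grounding_offsets`, `apply_layer`, and `contact_threshold` are hypothetical.

```python
from typing import List, Sequence


def grounding_offsets(foot_heights: Sequence[float],
                      floor: float = 0.0,
                      contact_threshold: float = 2.0) -> List[float]:
    """Return a per-frame vertical offset for the correction layer.

    When the captured foot is within contact_threshold of the floor, the
    offset cancels the error so the foot lands exactly on the floor.
    All other frames get an offset of 0.0 and stay as captured.
    """
    offsets = []
    for height in foot_heights:
        error = height - floor
        offsets.append(-error if abs(error) <= contact_threshold else 0.0)
    return offsets


def apply_layer(captured: Sequence[float],
                offsets: Sequence[float]) -> List[float]:
    """Final motion = untouched captured curve + correction layer."""
    return [c + o for c, o in zip(captured, offsets)]


if __name__ == "__main__":
    captured_foot = [1.5, -0.5, 10.0]   # hypothetical heights, arbitrary units
    layer = grounding_offsets(captured_foot)
    print(apply_layer(captured_foot, layer))
```

Because the captured curve is never overwritten, the correction can be adjusted or removed at any time. A hard threshold like this one would produce visible pops at the start and end of each contact; a real setup would ease the correction in and out.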

The skeleton that contained the final motion was used to deform a grid, which was used to deform the final character—a car or gas pump. Because of the strange proportions of the characters, a lot of cleanup work was necessary. They also had to deal with interference. “We were on a sound stage and there was some big green screen wall that was all metal and it was really interfering,” says Nilsson.

“A year later we did three more Shell spots and all of us said no, we don't want to use motion capture,” recalls Nilsson. They animated the last three spots by hand and it took about the same time to produce them. “They turned out a lot better,” says Nilsson.

In this particular case, the biggest benefit gained by the use of performance animation was the extensive press coverage that Shell obtained because of the “new” technology that was used to animate the cars and pumps. The free publicity didn't dissipate with the creation of the last three spots by keyframe animation, since most people thought that performance animation was used on those as well.

Marvin the Martian in the 3rd Dimension

Marvin the Martian in the 3rd Dimension was a 12-minute stereoscopic film produced for the Warner Brothers Movie World theme park in Australia in 1996. It was meant to look like a traditional cartoon, but because it was stereoscopic, it had to be created using two different angles that represent the views from both the right and left eyes. The best way to achieve this was to create all the character animation using a computer. The challenge was to make sure the rendering and motion looked true to the traditionally animated cartoon.

In 1994, Warner Brothers acquired a Motion Analysis optical motion capture system, thinking that they could have actors perform the parts of the Warner Brothers cartoon characters. Among the companies involved in the project were Will Vinton Studios, PDI, Metrolight Studios, Atomix, and Warner Brothers Imaging Technology (WBIT). “They asked if we could do Daffy Duck with motion capture,” recalls Eric Darnell, director of Madagascar. After several months of testing, Warner Brothers decided to scrap the motion capture idea and use traditional animation after all. They ended up providing pencil-drawn keyframes to all the 3D animation studios to use as reference for motion. The Warner Brothers motion capture equipment was auctioned in 1997, after WBIT closed its doors.

The Pillsbury Doughboy

In 1996, TSi was approached by Leo Burnett, the advertising agency that handles the Pillsbury account, to create two spots with the Pillsbury Doughboy using performance animation. The agency had already explored the technology once by doing a spot using an electromagnetic tracker at Windlight Studios, and now they wanted to try optical motion capture.

The first step was for us to produce a test with the Doughboy performing some extreme motions. We decided to bring in a dancer/choreographer we had often employed, because she had a petite figure and was a good actress who could probably come as close to performing the Doughboy as any human could. Since the client didn't specify what kinds of motions they were looking for, we decided to have the Doughboy dance to the tune of M.C. Hammer's “U Can't Touch This.” We captured the main dance and a couple of background dances we would use for background characters and, after postprocessing, converted them to the Alias format.

The main dance was applied to the Doughboy, and the background dancers were mapped to models of Pillsbury products. The resulting piece was quite disturbing: the Pillsbury Doughboy performing a sexy dance surrounded by three or four cylindrical chocolate-chip cookie-dough dancers doing a pelvic-motion-intensive step. It wasn't exactly what you'd imagine the Doughboy would do, but they did say “extreme motions.” I was a little worried about what the client's reaction would be, but I figured that if they really wanted to use motion capture for the Doughboy, they had a right to see the kind of realism in the motion they would get. We sent the test to Chicago.

The day after we sent the test, we got a call from producers at Leo Burnett. Their first comment was that the dark lighting that we used would never be used on a Doughboy commercial. We explained that the point of the test was for them to see the motion, not the lighting, and that the lighting had been approached that way to match the sexy nature of the motion. As for the motion, Leo Burnett said that it was offensive to their client (Pillsbury), but that it had proven the point. They gave us two Doughboy spots to do simultaneously.

Part of the deal was that we would set up a motion capture session that the Leo Burnett and Pillsbury people could attend. We used the same woman who performed the sexy Doughboy for the test. One of the shots involved the Doughboy vaulting over a dough package using a spoon as a pole. We knew that at the time we couldn't use captured motion data for the interactions of the spoon and the Doughboy, but we captured it anyway, because our client wanted this method used on all their shots. We figured we could use it for timing, and that's exactly what we did. All the other shots were standard Doughboy fare: the Doughboy pulling a dish, smelling the steam from baked products, just standing and talking, and, of course, being poked in the belly. The session went very smoothly. Our clients and their clients were very interested in the technology that we were using and had a lot of fun watching the performance.

The data was processed and converted to the Alias format, where we already had set up the Doughboy model for the test. A big problem we had on the test was that the legs of the Doughboy were so short that, even after retargeting them as best we could at the time, they would never stay on the ground and look right. We knew we would run into this problem again while working on the shots for the commercials. Benjamin Cheung was the technical director in charge of applying the data and lighting the Doughboy. He used only portions of the data, throwing away most of the legs' and some of the arms' motions, particularly when they interacted with the spoon. We probably used only 15% of the captured data on the character, and the rest just as reference for poses and timing. We were already expecting that, so it wasn't a surprise and we didn't miss our deadline.

The client, as we had agreed, stayed faithful to the captured performance and didn't make any major animation changes. It was a very smooth pair of projects, and although we were charging very low rates, we managed to make a decent profit. The project would have cost a little more if it had been keyframed, but it wouldn't have made a huge difference.

The main risk on a project such as this concerns the art direction, such as when a client who is not familiar with performance animation suddenly discovers that the motion looks different from what was expected and starts making changes. In this case it didn't happen because we did our homework to educate the client on what our system could do; however, they did not return for more motion-captured Doughboys, preferring to go back to keyframe animation. I was not surprised.

Batman and Robin

PDI had provided a CG Batman stuntman for Batman Forever, using optical motion capture as a means to collect the performance of a gymnast performing on a stage doing flips and other movements on rings. Batman and Robin required much more elaborate digital stunts, many of them happening in the air. PDI was again contracted to create these stunts using motion capture.

The motion capture services were provided by Acclaim Entertainment, the computer game company that had the video game rights for the Batman property and that had earlier provided the motion capture services for Batman Forever. They used their proprietary four-camera optical system to capture all the necessary motions for the stunt sequences.

Acclaim's team captured several of the shots at Acclaim's motion capture stage in New York, except for the shots that belonged to the sequence in which Batman and Robin skyboard away from an exploding rocket while in pursuit of Mr. Freeze (played by Arnold Schwarzenegger), trying to recover a diamond he had stolen. Because all the action in this sequence happened in the air, John Dykstra, visual effects supervisor, decided to bring Acclaim's optical motion capture equipment to a vertical wind tunnel used for military training. The tunnel was located at the Fort Bragg military base in North Carolina, and the performers were not actors, but members of the US Army's Golden Knights parachuting team. Dykstra directed the performances in the tunnel, and Acclaim processed the data and delivered it to PDI.

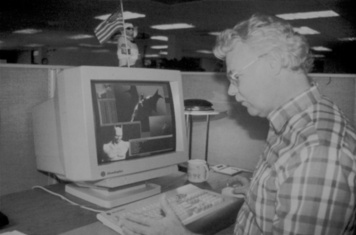

“We took the skeleton files from Acclaim and converted them to a setup,” explains Richard Chuang, who was the digital effects supervisor for PDI. “We also converted all the motion data into our own animation space.” Dick Walsh, character technical director at PDI, had set up a system that allowed them to adjust the differences in physical attributes between the digital model and the performer (Figure 2.1). “We actually had a certain flexibility with the use of motion capture data from different performers on the same character,” recalls Chuang. The data was then used to move a skeleton that would drive PDI's muscle system, also written by Walsh. In addition, animators had the ability to blend the captured data with keyframe animation, with or without inverse kinematics. The system didn't require animators to modify the motion data. They worked on clean curves and were able to blend the animation and the captured data using animatable ratios. The system was especially useful when blending between captured motion cycles.

Figure 2.1 Dick Walsh working on the Batman character setup. Photo courtesy of PDI.

“In both cases we ended up using probably around 20% motion capture and 80% animation,” notes Chuang, referring to both Batman films. “What we found is that because the motion is always captured in a stage, it really has nothing to do with the final performance you need for the film. For the director to have control of the final creative, we ended up taking motion capture as a starting point, and then animating on top of it. Then we'd be able to dial between motion capture and animation whenever we needed to.”
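The idea of dialing between captured and keyframed motion with an animatable ratio can be illustrated with a minimal sketch. This is not PDI's system, whose internals are not described here. The function name `blend_channels`, the sample values, and the convention that a ratio of 0 means pure capture and 1 means pure keyframe are all assumptions made for illustration.

```python
def blend_channels(mocap, keyframe, ratio):
    """Blend two animation channels frame by frame.

    ratio[i] = 0.0 keeps the captured value, 1.0 keeps the keyframed value.
    All three lists hold one entry per frame and must have the same length.
    """
    if not (len(mocap) == len(keyframe) == len(ratio)):
        raise ValueError("channels and ratio curve must have the same length")
    blended = []
    for m, k, r in zip(mocap, keyframe, ratio):
        r = min(1.0, max(0.0, r))  # clamp the ratio to [0, 1]
        blended.append((1.0 - r) * m + r * k)
    return blended


# Hypothetical elbow rotation (degrees) over five frames.
mocap_elbow = [10.0, 12.0, 15.0, 13.0, 11.0]
keyed_elbow = [10.0, 20.0, 40.0, 20.0, 10.0]
ratio_curve = [0.0, 0.5, 1.0, 0.5, 0.0]  # animator-controlled curve

print(blend_channels(mocap_elbow, keyed_elbow, ratio_curve))
# prints [10.0, 16.0, 40.0, 16.5, 11.0]
```

Blending a single angle linearly is a simplification. A production setup would more likely blend full joint rotations, for example as quaternions, but the principle of an animatable ratio is the same.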

Part of the problem with motion capture in this case was that the motions were for the main action of the sequence and the director wasn't present to direct the performance. When you capture motion for background characters, it is okay for the director to delegate the task, but in a case such as this, the director would expect to have first-hand creative control over the final performance. That meant the performance would have to be modified by the PDI team to his specification. Larry Bafia, animation director at PDI's commercial and film effects division, worked on the air sequence. “It was the first time that I ever had to deal with motion capture data at all, so I had no idea what I was getting into at the time,” recalls Bafia. “This is a character that comes from a comic book and it really has to look like a superhero,” he adds. “Some of the poses had to be exaggerated and it wasn't something that a human could do in a wind tunnel.” The team kept most of the captured data for the motions of the character's torso, especially the actions of weight shifting on the skyboard. They used keyframe animation on many shots to enhance the pose of the arms and to animate the fingers, which were not included in the motion data. Inverse kinematics was used mostly on the legs.

The motion capture session was directed by the visual effects supervisor, John Dykstra, who tried to capture as many different shots as possible for the director to choose from. This was done before background plates were available, so the final camera angles and framing were not yet known. “What you do is you capture all the possibilities, and then based on that you try to edit something that will fit your film,” says Chuang. “But by the time you get your background plates you can end up with something completely different.”

PDI technical directors prepared desktop movies of all the captured motions so that, after the plates became available, the team could decide which actions would apply for each particular shot. “One of the problems was the fact that a lot of the shots were long enough where the particular motion capture cycle didn't have quite enough activity,” recalls Bafia. Because of this, the team had to blend several captured motions into single shots. An example of this is the shot in which Robin catches the diamond after Mr. Freeze loses it. “I had to start the front end of the shot with one piece of motion capture where [Robin] is basically staying alive and balancing himself on the board and getting ready for spotting this diamond and catching it,” recalls Bafia. After catching the diamond, Robin had to do a spin-around, not unlike that of a surfer. “Because of the restrictions inside the wind tunnel, the guy could do the spin but he had to pull up early so that he wouldn't fall too far and drop down toward the fan that was actually suspending him,” says Bafia. PDI had to use inverse kinematics to maintain the character's feet on the board, and keyframe animation to blend between two motion files that had a reversed feet stance—all this while keeping the elements in the captured data that John Dykstra was interested in maintaining.

The fact that the performers weren't aware of where the final camera would be located was a problem that translated into the performance. “I would like to see a situation where you actually try motion capture after you shoot the film versus before, so you know where the background plate is going to be, and the director directs the action accordingly,” notes Chuang.

Regardless of the expected problems with captured performances, the resulting shots were extremely successful. PDI had the experience to use whatever they could out of the motion data and discard the rest. Therefore, the project was completed in a timely manner, the clients were happy, and PDI made a good profit. The difference between this and other projects using motion capture was that for Batman and Robin, motion capture was used to achieve a certain look, not to save money.

Shrek

Before the original Shrek animated film started production, DreamWorks Feature Animation had been developing it to be mostly based on performance animation.

The decision to use performance animation on Shrek was based not only on cost (which indeed played a big part in the decision), but also on the desire to achieve a certain look. Before Shrek was even in preproduction, PropellerHead Design (Rob Letterman, Jeffrey Abrams, Andy Waisler, and Loren Soman) produced a test of a similarly shaped character called “Harry” for a potential television show for HBO. The motion capture for the test was done at TSi.

For the Harry test, the propellerheads (as they were known in the Shrek production) prepared an appliance that was worn by their performer. The appliance would add the volume that the performer needed to approach the proportions of Harry. We had never seen this done before, and it seemed like a good idea. During our first of two sessions, we placed the markers on the appliance and captured the body motions with our optical system. Tracking the data was challenging because it was an uncommon setup, but we managed to get it done with our ancient six-camera system.

In the second session, we were supposed to capture Harry's finger motion. We had never tried to capture the motion of fingers with an optical system, and we didn't guarantee that it would work. It was strictly a developmental session. We placed the small markers that we usually used on face capture on the finger joints. The performer sat down in a chair, and we immobilized his hands as much as possible because the wrist movement had already been captured in the previous session and we knew we couldn't capture the fingers if the wrists were moving. We captured several passes of the actor moving his fingers with the timing of the dialogue, but in the end it was impossible to track those motions, and we had to scratch the idea of optical finger capture. Facial capture wasn't even tested for this project.

The propellerheads finished the Harry test, filling in the additional animation, plus texture and lighting. The end result was stunning. The Harry test laid the foundation for the production of Shrek at DreamWorks. The propellerheads would coproduce the film, which would be animated using techniques similar to those in the Harry test: optical motion capture with body extensions or appliances.

The DreamWorks studio in Glendale was geared up with Silicon Graphics computers running different animation platforms and a brand new 10-camera Motion Analysis optical motion capture system. A production staff was assembled and production of a 30-second test began. Character animators started to work with the optical motion data. Several months later, the test was shown to Jeffrey Katzenberg, DreamWorks' cofounder and the partner responsible for animated films. Shortly thereafter, the production was halted and the project went back into development. The propellerheads were no longer involved. Exactly what happened is still a mystery, but motion capture was ruled out for this project. Among other things, the production made a mistake by using experienced character animators to work with the captured motion data, even from the stage of retargeting. It is not certain if that actually affected the test, but it would have eventually become a big problem.

Godzilla

Another character that was supposed to be animated by performance was Godzilla, in the 1998 film of the same name. Future Light had developed a real-time motion capture system based on optical hardware by Northern Digital. The system was connected to a Silicon Graphics Onyx with a Reality Engine, and it could render an animated creature in real time. Future Light was a division of Santa Monica Studios and a sister company of Vision Art, one of the CG studios involved in the visual effects production for Godzilla. Director Roland Emmerich liked the system because he could see the creature motion in real time, so the production proceeded with the idea of using the system as much as possible for the animation of the creature.

The character design was proportionally close to the human body, so it was possible to map human motion into the character's skeleton without much retargeting. According to Karen Goulekas, associate visual effects supervisor, the motion capture worked technically, but it didn't yield the results they were looking for esthetically, so they decided to abort that strategy and use keyframe animation instead. In the end, the animation for all the creatures, including Godzilla and its offspring, was done with keyframe animation.

“The reason we pulled the plug on using the motion capture was, very simply, because the motion we captured from the human actor could not give us the lizard-like motion we were seeking. The mocap could also not reflect the huge mass of Godzilla either,” adds Goulekas. “During our keyframe tests, we found that the Godzilla motion we wanted was one that maintained the sense of huge mass and weight, while still moving in a graceful and agile manner. No human actor could give us this result.” In addition, they had Godzilla running at speeds as high as 200 mph, with huge strides; this proved to be impossible to capture.

In general, using human motion to portray a creature like Godzilla, even if it is proportionally close to a human, is a mistake. In previous Godzilla films, it was always a guy in a suit performing the creature. In this version as initially conceived, it would also be a guy in a suit, except it would be a digital suit.

Sinbad: Beyond the Veil of Mists

Sinbad: Beyond the Veil of Mists was the first full-length CG feature that used mostly performance animation. The film was produced by Improvision, a Los Angeles production company, and Pentafour Software and Exports, one of India's largest and most successful software companies.

The story concerns an evil magician named Baracca, who switches places with a king by giving him a potion. The king's daughter, who is the only one who knows that the switch took place, escapes, eventually running into Sinbad. The Veil of Mists refers to a mythical place where Sinbad and the princess have to go to find the answer to defeating Baracca and saving the king. The main character, Sinbad, was voiced by Brendan Fraser. Additional cast included Leonard Nimoy, John Rhys-Davies, Jennifer Hale, and Mark Hamill.

With an initial budget of $7 million, it is no secret that motion capture was adopted by the producers primarily as a cost-effective alternative to character animation. They had originally planned to finish the whole film in 6 months, thinking that using motion capture would be a huge timesaver. Evan Ricks, codirector of the picture, was approached by the producers with a rough script. “They already knew that they wanted to do motion capture,” recalls Ricks, who is a cofounder of Rezn8, a well-known CG production and design studio in Los Angeles. He told the producers that a film of this magnitude would probably take at least 2 years of production, but they insisted on trying to finish it on schedule.

Given the constraint that the production would have to utilize motion capture, Ricks initially explored the possibility of using digital puppeteering to animate the characters in real time, using that as a basis for animators to work on. After conducting a few tests, they found that there weren't enough off-the-shelf software tools available to produce this kind of project in real time, so they decided to use an optical motion capture setup.

Motion capture would be used for human characters' body motions only. They couldn't find a good solution at that time to capture facial gestures optically, so they tried to use digital puppeteering instead. Finally, they decided that all facial animation would be achieved by shape interpolation. Pentafour had acquired a Motion Analysis optical motion capture system that was sitting for some time unused in India, but the production decided to use the services of the California-based House of Moves and their Vicon 370E optical system. The reason was that in Hollywood there were more resources and expertise readily available for the shoot, such as stages, actors, set builders, and motion capture specialists.

The production rented a large sound stage at Raleigh Studios and started building all the props and sets that the actors would interact with during the capture session. They even built a ship that sat on a gimbal that could be rocked to simulate the motion of the ocean. “That introduced all kinds of problems in itself,” notes Ricks, “because all of a sudden you don't know exactly where the ground is and you have to subtract that all out.”
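Subtracting out the motion of the gimbal amounts to expressing each marker relative to the moving deck instead of the stage floor. The sketch below shows that arithmetic under stated assumptions: the deck's rotation and position are taken as known for each frame (for example, from markers fixed to the ship, which is an assumption and not something the production is reported to have done), and the function names are hypothetical.

```python
import math


def rotation_about_x(angle_deg):
    """Rotation matrix for a roll of angle_deg degrees about the x axis."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0],
            [0.0, c, -s],
            [0.0, s, c]]


def to_world_space(point_local, rotation, origin):
    """Apply the rigid transform p = R * q + t."""
    return [sum(rotation[r][c] * point_local[c] for c in range(3)) + origin[r]
            for r in range(3)]


def to_platform_space(point_world, rotation, origin):
    """Undo the platform motion: q = R^T * (p - t)."""
    d = [point_world[i] - origin[i] for i in range(3)]
    # The inverse of a rotation matrix is its transpose.
    return [sum(rotation[r][c] * d[r] for r in range(3)) for c in range(3)]


# Hypothetical frame: the deck has rolled 10 degrees and risen 2 units.
deck_rotation = rotation_about_x(10.0)
deck_origin = [0.0, 2.0, 0.0]

marker_on_deck = [0.5, 0.0, 1.0]  # where the marker sits relative to the deck
marker_seen = to_world_space(marker_on_deck, deck_rotation, deck_origin)
recovered = to_platform_space(marker_seen, deck_rotation, deck_origin)
print([round(v, 6) for v in recovered])
```

Once every marker is expressed in deck space, the deck behaves like a fixed floor, and the rocking can be reapplied later to the whole scene.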

In addition to the people from House of Moves, the production had the services of Demian Gordon, who was brought in as motion capture manager. Gordon had worked extensively with motion capture before when he was at EA Sports. His job was to help break down the script, work closely with House of Moves, help design marker setups for all characters, and find solutions to problems that arose during production.

The House of Moves' Vicon system was brought into the sound stage and installed using a special rig that rose about 30 ft off the floor, which kept all seven cameras away from the action on the stage. In addition, special outfits were made for the performers to wear during the sessions.

Ricks wanted to shoot the motion capture sessions on tape from many angles as if it were a live television show. He was planning to edit a story reel after the motion capture was done and to use it while producing the CG images. A camera crew was hired initially, but was eventually dismissed by the production company because of its high cost. “We paid the price later,” says Ricks. “The camera can really help the director to visualize what's going to be on the screen. I guess you can stand by and use a finder, but with live action, the very next morning you can see dailies. You can see what you actually shot and if the angle works. With motion capture, you get a single reference and end up with mechanics more than cinema.”

Even if they had been able to tape all the captured shots, they had to deal with the motion capture system's inability to synchronize video and capture scan rates. They couldn't use SMPTE time code, because at that time no optical system was able to generate it, although it is a very important aspect of production work. According to Ricks, the lack of a good way to synchronize all the elements played a big part in the extension of the production schedule.
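To show why time code matters, here is a minimal sketch of the arithmetic that a shared time code makes possible: finding the capture sample that coincides with a given video frame. The 30 fps non-drop-frame video rate, the 120 Hz capture rate, and the function names are assumptions chosen for illustration. Drop-frame time code, as used with 29.97 fps video, needs extra handling that is not shown.

```python
def timecode_to_frames(timecode, fps=30):
    """Convert non-drop-frame 'HH:MM:SS:FF' time code to a frame count."""
    hours, minutes, seconds, frames = (int(part) for part in timecode.split(":"))
    if frames >= fps:
        raise ValueError("frame field must be smaller than the frame rate")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def video_frame_to_sample(timecode, capture_start, video_fps=30, capture_hz=120):
    """Return the index of the capture sample that coincides with a video frame.

    capture_start is the time code at which the capture began recording.
    """
    elapsed = (timecode_to_frames(timecode, video_fps)
               - timecode_to_frames(capture_start, video_fps))
    if elapsed < 0:
        raise ValueError("time code precedes the start of the capture")
    return round(elapsed * capture_hz / video_fps)


# A video frame 2 seconds and 15 frames after the capture started.
print(video_frame_to_sample("01:00:02:15", "01:00:00:00"))
# prints 300
```

Without a common time reference, this alignment has to be done by eye, shot by shot, using visual cues in the video.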

Because of the compressed schedule, the crew had to capture thousands of shots in about 2 months. According to Ricks, 4 months would have been ideal. “We weren't able to finish all the storyboarding before we started the capture, so sometimes we would be literally looking over the editor's shoulder, telling him what we wanted, or looking at new storyboards that had just been drawn, and then go over to the stage around the corner and capture it. Not the ideal way to work.”

Typical problems during the motion capture sessions included performers losing or relocating markers. On projects of this magnitude, it becomes very difficult to keep track of exact marker placements, which creates inconsistencies in the motion data. They also had to deal with obstruction problems, where cameras didn't have a direct line of sight for all markers.

Many of the shots had more than one performer interacting at the same time. They were able to capture up to four characters simultaneously, although it became somewhat problematic to clean up the data. “A lot of it took place aboard ship, and there're six people on the crew, so there's a lot of interactions,” says Ricks. Fighting sequences were especially difficult to capture, particularly when somebody would fall on the floor and lose markers.

The production was set up so that all design work, preproduction, initial modeling of characters, and motion capture work were done in the United States. The animation side, including the application of captured data, modeling of sets and secondary characters, shading, and rendering, would be handled by the Pentafour CG studio in India, which was a relatively new studio whose experience was mainly in the production of art for CD-ROM titles. Ricks wanted to use very experienced people to handle the most challenging parts of the project, creatively and technically, leaving the supporting tasks to the inexperienced crew.

Overall, the first 6 months of production had been extremely productive. Evan Ricks and Alan Jacobs had managed to rewrite the script practically from scratch, while Ricks and the production designer developed the look. They hired the staff and cast, recorded the voice track, storyboarded, built sets to be used for motion capture, tested motion capture alternatives, and designed and built all the digital characters and sets. They even stopped regular production for over a month to create a teaser for the Cannes Film Festival. All the motion capture was also finished within those first 6 months. Everything was looking great and the staff was energized. “I was continually told by visitors and investors how beautiful our designs were, and how new and different as opposed to a ‘Disney’ look,” says Ricks.

Unfortunately, after the completion of the motion capture, the producers had a mistaken perception of the work left and didn't understand what had been achieved in those 6 months. In their view, most of the technical hurdles in the production had been surpassed. Not only did they not see the need to fulfill repeated requests to hire additional experienced personnel, but they also started laying off their most experienced staff members. Finally, the remainder of the production was moved to the Pentafour studio in India.

The difficult part started after House of Moves finished postprocessing and delivered the data. “There was a serious underestimation in how long it takes to weight the characters properly,” notes Ricks, who describes some bizarre problems with the deformations of the characters. The deformation setup was much more time consuming than they had originally estimated. The problem was augmented by the fact that they relied exclusively on off-the-shelf software, and there's a lack of commercial tools for handling captured motion data. A project of this scale would normally have some sort of in-house R&D support, but the budget didn't provide for it.

The mechanical setups for the characters were created using rotational data. For a large project like this, with so many characters and interactions, it would have been beneficial to create customized setups using also the translational data, using markers as goals for inverse kinematics or as blended constraints. When using rotational data, the mechanical setup has already been done by the motion capture studio, and not necessarily in the best possible way for the project at hand. “[House of Moves] hadn't done tons of this stuff at that point either, and they had to staff up. It was a learning experience for everybody,” notes Ricks, “but they did a very good job under the circumstances.”

A typical problem with captured motion data is in the unmatched interactions between characters and props or other characters. The crew in India was responsible for cleaning up all these problems, including the inability to lock the characters' feet on the floor. This particular problem can be fixed by using inverse kinematics, but it can also be hidden by framing the shots in such a way that the characters' contact with the floor is not visible. Ricks was trying not to let this problem rule the framing of shots.
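The inverse kinematics fix for sliding feet can be sketched with a planar two-bone solver: given a hip position from the captured data and a point where the ankle should stay locked, it returns the joint angles that place the ankle there. This is a generic textbook construction, not the tool used on the production. The function names, the side-view 2D simplification, and the leg dimensions are assumptions.

```python
import math


def two_bone_ik(hip, target, thigh_len, shin_len):
    """Planar two-bone IK.

    Returns (hip_angle, knee_angle) in radians. hip_angle is the thigh
    direction measured from the +x axis; knee_angle is the shin rotation
    relative to the thigh. Only one of the two mirror solutions is returned.
    """
    dx = target[0] - hip[0]
    dy = target[1] - hip[1]
    dist = math.hypot(dx, dy)
    # Clamp to the reachable range so an out-of-reach target extends the leg.
    dist = min(max(dist, abs(thigh_len - shin_len)), thigh_len + shin_len)
    # Law of cosines gives the knee bend.
    cos_knee = (dist * dist - thigh_len ** 2 - shin_len ** 2) / (2.0 * thigh_len * shin_len)
    cos_knee = min(1.0, max(-1.0, cos_knee))
    knee_angle = math.acos(cos_knee)
    hip_angle = math.atan2(dy, dx) - math.atan2(
        shin_len * math.sin(knee_angle),
        thigh_len + shin_len * math.cos(knee_angle))
    return hip_angle, knee_angle


def ankle_position(hip, hip_angle, knee_angle, thigh_len, shin_len):
    """Forward kinematics, used here to check the IK result."""
    knee_x = hip[0] + thigh_len * math.cos(hip_angle)
    knee_y = hip[1] + thigh_len * math.sin(hip_angle)
    return (knee_x + shin_len * math.cos(hip_angle + knee_angle),
            knee_y + shin_len * math.sin(hip_angle + knee_angle))


# Hypothetical frame: captured hip position and the spot where the foot is planted.
hip = (0.0, 0.9)
locked_ankle = (0.2, 0.05)
angles = two_bone_ik(hip, locked_ankle, 0.45, 0.45)
print(ankle_position(hip, angles[0], angles[1], 0.45, 0.45))
```

Running the solver on every frame in which the foot should be planted keeps the ankle fixed while the captured hip motion is preserved.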

As Ricks predicted, the length of the production of the film was close to 2 years. “If you deconstructed just about any recent film, you'd find out what the director originally wanted and what actually took place are very often two different things, and that was no different here,” says Ricks.

“The key is to use this experience to identify the strengths of mocap, to realize that its value is currently not as broad as many think. It is a new camera, not an end in itself,” concludes Ricks.

The Polar Express

Robert Zemeckis had teamed up with Tom Hanks to bring the famous children's book The Polar Express to the screen. The original idea was to give the film the same painterly look as Chris Van Allsburg's book. The methodology to achieve the look would be to use live-action characters shot in front of green screen in order to place them in digitally generated backgrounds. Once the elements were integrated, some kind of filter would be applied to give it the look.

A day was scheduled to bring Tom Hanks to a soundstage at Sony Pictures to do a green screen test. Ken Ralston and Scott Stokdyk were the visual effects supervisors for the test. I was helping Scott with the preparations and we thought it would be interesting to try to capture Tom's face and see how accurate looking we could get it. One must remember that while there had been some interesting facial motion capture examples at the time, there still wasn't a believable side-by-side test of the face of a known person, so we weren't exactly sure what the result would look like.

We had Vicon bring a system to the soundstage for the day of the test. Once Tom was there, we spent most of the time shooting the green screen. Afterward, we were given a few minutes to do our thing. We scanned Tom's head with a laser scanner and slapped about 50 markers on his face, in places that I thought would give us the movement we needed. We then had Tom say a few lines in front of the cameras. Nobody but Scott and I had any hope that this test would turn into something. Ken said to me that he was sure it wasn't going to succeed and Zemeckis didn't even pay attention to us when we were doing it. The day after the test almost everyone had forgotten we captured anything. I was the only person working on the motion capture test. Everyone else in the crew was working on the green screen test.

After several days, we started seeing the first green screen tests in dailies. They weren't looking good at all. The static elements in the shot were fine with the painterly look, but the live-action actors looked like they had a skin disease.

In the meantime, I was working away on the mocap test. I had already written a facial muscle-based solver that was used on Stuart Little II for talking cats and I was modifying it to work with captured data. Once I set up the system with Tom's facial model and started driving it with the data we captured, I was happily surprised. The test actually looked like Tom and it was moving the right way. It clearly required more data but you could see Tom in there.

After several weeks, the painterly look tests weren't looking any better. I hadn't been asked to show anything as most people had forgotten or assumed what I was doing didn't work. When I finally showed it in dailies, Ken Ralston looked at it and said “this may actually work. Let's show it to Bob.” Once Zemeckis saw it, he decided to pull the plug on the green screen test and use mocap for The Polar Express. I wasn't expecting that reaction. I still didn't know how to extrapolate from that test into a whole 90-minute film with lots of characters interacting with each other. This was a scary time, but it got worse when I was told that I had to have the ability to capture face and body together and we'd start shooting in about 5 months.

Producer Jody Echegaray and I had to start by choosing the right equipment and assembling the team. We started talking to the different equipment manufacturers and also interviewing all the possible candidates. We knew that we pretty much had to hire almost anyone who had any experience with motion capture to cover the scope of this project. Our first hire was Demian Gordon, who would be our motion capture supervisor. He had just finished working on the Matrix sequels and was able to bring with him a lot of good people to fill many of the slots in our team. Part of his expertise was also the design of camera layouts for capture stages, so I asked him to calculate how many cameras would be needed to meet the spec that we were asked to deliver. He estimated that we needed no less than 70 to 80 cameras to cover the face and body markers of up to four people at a time.

None of the existing manufacturers had the capabilities we needed, so we started to talk to them about working with us to daisy chain several of their systems together. As we told some of them what we were thinking, most decided to pass and at least one of them told us we didn't know what we were doing. The only manufacturer willing to work with us was Vicon, and between them and us we started designing the final layout, calibration methodology, and pipeline to achieve the final spec. Brian Nilles, Vicon's CEO, was instrumental in having his engineering team work on their software to allow us to hook up five Vicon systems together. All of us were in constant communication, and as soon as they could ship some equipment we started to assemble a massive truss for placing cameras inside a soundstage at the Culver Studios. At the beginning, the assembled system was very delicate and just one wrong setting would make it malfunction. There was also the issue of calibration, as there was no known way to do this with a daisy-chained system. Initially, we had to calibrate five systems separately and then merge the calibrations, but eventually Vicon provided us with software to merge them on the fly. The problem was that the calibration had to be seen by all cameras and apparently in some particular order. We had to come up with a “dance” that D.J. Hauck, our head mocap operator, had to perform perfectly every time in order for the calibration to take. In the next couple of months, we came up with protocols and procedures for everything that could happen and started educating the production team on what to expect and what was possible and what wasn't.

We ended up with three different mocap stages: the main stage, where body and facial data would be captured, and two larger stages, where only body data would be captured. The main stage ended up with 84 cameras; 70 of them were Vicon cameras and would be used strictly for face capture. The rest of the cameras were operated by Giant Studio and would be used for body capture. Giant Studio was also responsible for the operations of the two larger sets, one of which was 36,000 ft³ (30 × 60 × 20 ft), the largest ever done at the time and used for large captures with many performers. The other large stage was 27,000 ft³ (30 × 30 × 30 ft) and was used mostly for stunt action because it had more height. The main stage was only 1300 ft³ (10 × 10 × 13 ft high).

We felt the stage was ready to shoot only about a week before the actual production would start. We held a massive meeting with all the different groups that would be on set and with the director and we went step by step over what was to happen on set. I also had to answer hundreds of questions from people who had never even heard about motion capture and were about to be dropped in the middle of the largest motion capture effort ever done. After that day, we started training the different groups about their new tasks. The makeup artists' job was to apply face markers and also to make sure that the marker sets were always complete during the shoot. Wardrobe people were in charge of mocap suits and body markers. Grips were in charge of bringing props, which had to be built mostly with chicken wire, on and off the stage. Also, the first assistant director and production assistants had to be educated about their new roles to get the stage ready for shooting.

I have to say that the crew for that shoot was ideal. Zemeckis understood immediately what he could and couldn't do. The makeup crew, led by Dan Striepeke, had to place 152 markers on up to 24 faces every day before shooting could begin, and they learned to do it very quickly and very accurately. Demian Gordon, our stage supervisor, had no problem stopping the shoot if any problem arose. He was never intimidated by Zemeckis, Hanks, or anyone else and because of that we never had any data surprises. We shot for 45 days without a major problem and everyone enjoyed the process. I heard Zemeckis say that he would never shoot a live-action movie again. He was so excited that he brought lots of people to see the stage. Among the people I saw, there were several studio heads and famous actors and directors like Steven Spielberg, James Cameron, and others.

An important aspect of the way Zemeckis decided to shoot his film is that he didn't want to frame shots on set or even look at real-time graphics of the performances. The small set was designed as an intimate setting where he could work with the actors. Not many people were allowed to stand inside the set.

When the shoot was done, we had to move into postproduction. We set up three departments to deal with the captured data. First was the Rough Integration Group, whose job was to create an assembled sequence from all the different pieces captured in different passes and stages. This sequence was played in real time, and the director of photography would design the shots using a virtual camera setup. During The Polar Express we called that process Wheels, and it was specifically designed for Zemeckis' style of filmmaking.

The second group was the data-tracking group, whose responsibility was to track and deliver facial data from the shots created and used by editorial. The third group, called “Final Integration,” would bring each shot into Maya and integrate body and face data with the camera created by the DP. The rest of the pipeline was more typical of an animated feature. Shots went on to animation, layout, cloth, effects, color and lighting, and finally compositing.

“[The] Polar Express was in terms of motion capture probably the most groundbreaking, not necessarily on the imaging side, but because of the technology that had to be put together. Nobody had actually done body and face together at that point,” says Jerome Chen, visual effects supervisor.

Beowulf

Robert Zemeckis' second directorial effort in the performance capture arena was Beowulf. His initial goal was, as with The Polar Express, to create stylized environments and characters, but again the project evolved into the almost-photo-real realm. “Zemeckis wants to see realistic textures, realistic aspects to the environment and to the characters,” recalls Jerome Chen. “He doesn't gravitate toward the stylized designs; he likes it to be realistic. … Once again you are starting out trying to be stylized and ending up wanting to see more detail in the characters once you see realistic motion on them.”

Beowulf's pipeline was almost identical to the one used for The Polar Express. The main difference was in the data acquisition. Imageworks upgraded to Vicon's latest hardware, which included cameras with much higher resolution and the ability to accommodate many more of them: over 250, to be specific. The volume was bigger, so all the capture was done in a single stage. Also, the crew had a lot more experience from having done The Polar Express and Monster House. “A lot of the protocols established in the stage capture were now perfected,” says Chen. “We budgeted more time in Beowulf to do facial animation, particularly preserving volume in the face and on the lips.” The facial system in Beowulf was different from the one used on The Polar Express. It was mostly driven by FACS (Facial Action Coding System, described in Chapter 1), where actors would have a number of predetermined expressions and an algorithm would decide the optimal combination of expressions for each frame. They also paid a lot of extra attention to the eyes.
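The actual algorithm used on Beowulf is not described here, but the general idea of finding an optimal combination of predetermined expressions for a frame can be sketched as a bounded least-squares problem. In the sketch below, each expression is a list of marker displacements from the neutral face, and the solver looks for weights between 0 and 1 whose weighted sum best matches the captured frame. The function name, the projected gradient descent method, and the tiny data set are all assumptions for illustration.

```python
def solve_expression_weights(basis, target, iterations=500):
    """Find weights in [0, 1] so that sum(w_i * basis_i) approximates target.

    basis  : list of expressions, each a list of displacements from neutral
    target : captured displacements for one frame (same length as each expression)
    Uses projected gradient descent on the squared error.
    """
    count = len(basis)
    size = len(target)
    weights = [0.0] * count
    # Conservative step size: the sum of squared entries bounds the curvature.
    total = sum(v * v for shape in basis for v in shape)
    step = 1.0 / total if total > 0.0 else 0.0
    for _ in range(iterations):
        # Difference between the current combination and the captured frame.
        residual = [sum(weights[i] * basis[i][j] for i in range(count)) - target[j]
                    for j in range(size)]
        for i in range(count):
            gradient = sum(basis[i][j] * residual[j] for j in range(size))
            # Take a gradient step, then project back into [0, 1].
            weights[i] = min(1.0, max(0.0, weights[i] - step * gradient))
    return weights


# Three hypothetical expressions described by four displacement values each.
expressions = [
    [1.0, 0.0, 0.0, 0.0],  # e.g. brow raise
    [0.0, 1.0, 1.0, 0.0],  # e.g. smile
    [0.0, 0.0, 1.0, 2.0],  # e.g. jaw open
]
# A captured frame built as 0.5, 0.25, and 0.75 of the expressions above.
frame = [0.5, 0.25, 1.0, 1.5]

print([round(w, 3) for w in solve_expression_weights(expressions, frame)])
```

A production solver would work on hundreds of markers and many more expressions, and would likely add smoothing between frames, but the per-frame fit follows the same principle.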

The Wheels process, now called Camera Layout, was still a major piece in the postproduction process as all the shots were designed there. For Zemeckis' style of filmmaking, that process worked very well because he is a big fan of complex camera work. Camera Layout allowed him to experiment and tweak the camera angles as many times as he wanted.

Avatar

The main difference between the production pipelines of The Polar Express/Beowulf and Avatar is in the way the camera work is designed. Zemeckis likes to have a director of photography design the shots well after the actual capture, while Cameron likes to design his cameras while he's capturing the performances. For that purpose, he used a virtual camera he called “Simulcam” that was captured at the same time and in the same way as the performers. It was very important to have real-time feedback in order to achieve this and Giant Studio was able to provide it.

Avatar had been in the works for many years but it didn't start production until 2005. “Avatar wasn't done before because the technology didn't exist to do the movie before,” says Jon Landau, producer. “People get confused about what the technology was. They think it's the 3D. It has nothing to do with the 3D. It was the facial performance of the CG characters. We knew that for this movie to work the characters needed to be emotional and engaging, and that wasn't there.”

The second large difference between Avatar and its predecessors was the fact that the facial data acquisition was done using a helmet-mounted camera and no facial markers. Image-based analysis was used to obtain the facial sampling. “Over the years, we did some testing ourselves in another project called Brother Termite, where we did some image based facial capture. That proved to us that there was some validity there. Then in seeing things like Gollum in Lord of the Rings and what other people were doing we felt in 2005 that if we pushed the technology that we could be the impetus to get it to where we needed it to go,” says Jon.

I asked Jon about his take on motion capture and what their intention was when they decided to use it for Avatar. “We don't call it motion capture, we call it performance capture. We wanted the creative choices of performance to be made by actors and not made by animators, and that takes nothing away from great animators, because the animators are a very important step in the process, but the decisions that are made of when to stare and not blink and when to twitch—those are made by actors because that's what they do. The animators were responsible for showing that performance came through in the CG characters and that is a huge skill unto itself. Performance capture is not a money saver to us, but a performance saver. If an actor gets that great performance, they have to only do it once. In fact it's a pure way of acting. Sigourney Weaver, on her first day on the set I said to her, so what do you think, and she said to me, It's easier than working on a theatre stage, because on a theatre stage you have to remember to play out to the audience, you can't always play off of your actors. Here I can play off of my actors and I can move and do what I want. It's liberating and I know that what I do, you will realize,” he adds.

The Polar Express, Monster House, and Beowulf all had performers wear facial markers. It's just a matter of taste, as we did give Zemeckis the choice of having a helmet with cameras. He thought actors wouldn't like it and it would hurt the facial performance. The drawback of not using helmets is a smaller capture volume, because the cameras surrounding it need to be able to see all the facial markers. Zemeckis was happy with this compromise because he wanted a small, intimate space where he could interact with the actors better. Cameron's style is different in the sense that he likes to edit his film as he's shooting it; in fact, he would stop shooting for days or weeks to edit what was shot and then continue shooting again. That is why the camera work had to be done already, and why he needed real-time body and facial feedback in order to build his cut so early in the process.

“We stand on the shoulders of those that came before us. We ride on top of what [The] Polar Express did and Polar was an incredible first step in this arena,” he concludes.
