Chapter 2

Exploring the Current State of Virtual Reality

IN THIS CHAPTER

Reviewing the various form factors for virtual reality

Comparing the features of current virtual reality hardware

Surveying the available types of virtual reality controllers

Exploring some of the current issues with virtual reality

Assessing virtual reality adoption rates

With virtual reality (VR) developments in a state of constant flux, it’s an exciting (and frenetic) time to be involved with VR. Stopping and taking stock of where these developments are headed is important. Are we racing toward mass adoption of VR, propelling us to the peak of the fourth wave of technological change within the next year or two? Or, as some critics have suggested, is the current surge just another misstep in VR’s development cycle: a promising rise of the tide, followed by a washout that leaves companies high and dry and VR wallowing for another decade in the “Trough of Disillusionment”?

Many experts believe that VR will begin to see mainstream adoption by 2021 to 2023. By that time, VR headsets will likely be on their third or fourth generation of technology, and many of the issues that exist in 2018 will have been solved.

This chapter takes a look at the current state of the technology (as of this writing). Many first-generation devices have been released, with many second-generation (or first-and-a-half generation) devices announced as well. Understanding where VR is now will help you make your own predictions about where the technology is headed, and decide for yourself where we are in the VR cycle.

Looking at the Available Form Factors

Most VR hardware manufacturers seem to be driving toward a similar form factor, generally a headset/integrated audio/motion controller combination. That form factor may indeed be the best base setup for VR experiences, but the lack of variety in mass consumer hardware could also point to a lack of innovation. Perhaps years down the road, VR’s form factor will change entirely. For now, most VR hardware takes the form of a headset, but every company has designed its own version of how that headset should look and feel.

To keep the focus of this book somewhat manageable, I mainly focus on the consumer head-mounted displays (HMDs) with the largest consumer base. Currently, those are the Oculus Rift, HTC Vive, Windows Mixed Reality, Samsung Gear VR, PlayStation VR, Google Daydream, and Google Cardboard. These are the first-generation consumer VR headsets with the broadest reach at this time, though the list will change in the future. Even if another headset comes along, it will likely share many of the same evaluation criteria I include here. Many of the upcoming second-generation devices (see Chapter 4) can be evaluated against the same criteria. Becoming familiar with the various options, benefits, and drawbacks of various hardware executions will enable you to evaluate any new entries into the market, not just the hardware mentioned here.

For consumer-grade VR, HTC Vive and Oculus Rift currently sit at the high end of our VR experience graph. Without a doubt, they offer some of the most realistic experiences of any VR hardware to date. Those experiences come at a cost, however — both for the headsets themselves and for the separate hardware required to power them.

Microsoft’s Windows Mixed Reality VR headsets, a new addition to the VR market, are in the same high-end tier of VR experiences as the Vive and the Rift. Don’t let the name fool you, though. Mixed Reality is just how Microsoft is branding its VR headsets — there is nothing “mixed reality” about them at this time — though the name could point to a convergence of VR and AR in the future. Will the products eventually function as both a VR headset and as an augmented reality (AR) headset via a camera pass-through image of the surrounding environment? That could be the direction Microsoft would like to take it, but that capability doesn’t fully exist yet in its current batch of headsets.

Without one definitive Windows Mixed Reality VR headset to use as a baseline, it can be difficult to compare apples to apples for evaluation purposes. For example, the Acer Windows Mixed Reality headset may have different baseline specs than, say, the HP Windows Mixed Reality headset. That said, the specifications of most Windows Mixed Reality VR headsets generally place them toward the higher end of VR experiences.

The PlayStation VR offers a take on VR by a game console manufacturer. Sony’s PlayStation VR doesn’t require a separate PC to run it, but it does require a Sony PlayStation gaming console. Reviewers have praised the PlayStation VR’s ease of use, price point, and game selection, but they’ve knocked the lack of a room-scale experience, the slightly underperforming controllers, and the lower resolution per eye versus the higher-end headsets listed here.

Table 2-1 compares some of the available desktop VR headsets. For legibility, I provide separate tables for the higher-end “desktop” VR experiences, which require external devices to power them, typically computers or game consoles, and the lower-end mobile VR experiences, which work with mobile devices such as smartphones. (The mobile device info is coming up later in the chapter, in Table 2-2.) This is not to say that one experience is necessarily a better choice than the other. Both have different sets of strengths and weaknesses. For example, you may require the most powerful or most immersive experience you can buy — in which case, externally powered “desktop” VR experiences are for you. Or, perhaps image fidelity is not as much of a concern and you require mobility for your VR headset, making the mobile VR headsets a better fit for your personal needs.

TABLE 2-1 Virtual Reality Desktop Headset Comparison

| | HTC Vive | Oculus Rift | Windows Mixed Reality | PlayStation VR |
|---|---|---|---|---|
| Platform | Windows or Mac | Windows | Windows | PlayStation 4 |
| Experience | Stationary, room-scale | Stationary, room-scale | Stationary, room-scale | Stationary |
| Field of view | 110 degrees | 110 degrees | Varies (100 degrees) | 100 degrees |
| Resolution per eye | 1,080 x 1,200 OLED | 1,080 x 1,200 OLED | Varies (1,440 x 1,440 LCD) | 1,080 x 960 OLED |
| Headset weight | 1.2 pounds | 1.4 pounds | Varies (0.375 pound) | 1.3 pounds |
| Refresh rate | 90 Hz | 90 Hz | Varies (60–90 Hz) | 90–120 Hz |
| Controllers | Dual motion wand controllers | Dual motion controllers | Dual motion controllers, inside-out tracking | Dual PlayStation Move controllers |

TABLE 2-2 Virtual Reality Mobile Headset Comparison

| | Samsung Gear VR | Google Daydream | Google Cardboard |
|---|---|---|---|
| Platform | Android | Android | Android, iOS |
| Experience | Stationary | Stationary | Stationary |
| Field of view | 101 degrees | 90 degrees | Varies (90 degrees) |
| Resolution | 1,440 x 1,280 Super AMOLED | Varies (Pixel XL 1,440 x 1,280 AMOLED) | Varies |
| Headset weight | 0.76 pound without phone | 0.49 pound without phone | Varies (0.2 pound without phone) |
| Refresh rate | 60 Hz | Varies (minimum 60 Hz) | Varies |
| Controllers | Headset touchpad, single motion controller | Single motion controller | Single headset button |

Windows Mixed Reality headsets come in many variations with varying specifications. The values in parentheses in the Windows Mixed Reality column of Table 2-1 are the specs for the Acer AH101 Mixed Reality Headset, a popular Windows Mixed Reality headset.

Slightly lower on the performance and features scale for first-generation consumer VR are mobile-powered devices such as the Google Daydream and Samsung Gear VR. These devices require little more than a relatively low-cost headset and a compatible higher-end Android smartphone, making them a good entry-level choice for the curious first-time user.

At the low end of the first generation of consumer VR headsets are mobile-powered headsets such as the Google Cardboard, so named for the fact that the original Cardboard was little more than a few specially designed lenses and a folded cardboard container for your mobile device. Google Cardboard relies on little more than some inexpensive parts and your mobile device to create a VR headset, and almost any newer mobile device — iOS or Android — can run the required Google Cardboard software. However, due to its lack of specialization, the Google Cardboard VR experience doesn’t provide the level of experience offered by dedicated headsets.

As with Windows Mixed Reality headsets, Google doesn’t necessarily manufacture all Google Cardboard headsets. The Cardboard specifications are freely available on Google’s website. Other manufacturers have also produced a number of Google Cardboard variations, such as Mattel’s View-Master VR and DodoCase’s SMARTvr. All the Cardboard variations use similar technology and offer similar levels of support.

Table 2-2 compares some of the available options for mobile VR headsets. It can be difficult to provide direct specifications for the mobile executions because each headset may support multiple mobile devices and, thus, not have a single specification it adheres to.

Keeping those general categories in mind, let’s take a look at the total sales and reach of the VR headsets mentioned so far. All companies have remained relatively quiet concerning their true sales numbers, but the figures in Table 2-3 are the reported forecast via Statista as of November 2017 (www.statista.com/statistics/752110/global-vr-headset-sales-by-brand/). You’ll notice Google Cardboard figures are higher than those listed by Statista. That’s because Cardboard has been around longer than the two years tracked here. Google self-reported more than 10 million Cardboards having shipped worldwide as of February of 2017.

TABLE 2-3 Virtual Reality Headset Units Sold

| Device | Units Sold |
|---|---|
| HTC Vive | 1.35 million |
| Oculus Rift | 1.1 million |
| Sony PlayStation VR | 3.35 million |
| Samsung Gear VR | 8.2 million |
| Google Daydream | 2.35 million |
| Google Cardboard | More than 10 million |

Surprisingly, the lower-quality experience offered by Google Cardboard appears not to have hurt its adoption numbers, making it the apparent winner thus far in the VR headset adoption race. Samsung’s Gear VR is showing strong adoption numbers for a midlevel user experience. Google Daydream and PlayStation VR show decent numbers; their lower adoption likely reflects release dates nearly two years after Google Cardboard and a year after the Gear VR. HTC Vive and Oculus Rift show numbers that reflect the premium price tag associated with their experiences. Windows Mixed Reality, with its late 2017 release date, does not have sales figures available.

It shouldn’t come as a complete shock that consumers are preferring to enter the VR market with an inexpensive option such as the Cardboard. With an unknown technology, consumers appear to be entering the market somewhat warily, and only the most bleeding-edge early adopters have been purchasing the more expensive, higher-end headsets.

However, it should lead you to think about what these sales numbers mean for the future of VR. The numbers show decent adoption rates, but nothing like the massive adoption seen by other technology such as gaming systems. As a comparison, the PlayStation 4 (the system that powers PlayStation VR) sold one million consoles within its first 24 hours at retail.

Additionally, there is currently a steep drop-off between the lower- and higher-end headsets, and you should consider just how many of those who first experience VR on one of the lower-end headsets will end up converting to a higher-end headset. Could mobile VR sales actually be cannibalizing higher-end VR sales for this generation and unintentionally harming future VR sales?

The lower-end VR headsets offer (predictably) a lower-end experience. Cardboard has a noble goal (democratizing the VR experience by getting it into the hands of as many users as possible), but it may also lead to users who believe that their VR experience within Cardboard is representative of the current generation of VR, which is not the case. Even some of the midlevel systems, such as Daydream and Gear VR, do not offer the same level of immersiveness that the higher-end VR headsets, such as Vive or Rift, do. I take a deeper look at this potential problem later in this chapter.

Focusing on Features

Besides price and headset design, there are also a number of different approaches each manufacturer is taking in regard to the VR experience it offers. The following sections look at some of the most important VR features.

Room-scale versus stationary experience

Room-scale refers to the ability of a user to freely walk around the play area of a VR experience, with his real-life movements tracked into the digital environment. For first-generation VR devices, this will require extra equipment outside of the headset, such as infrared sensors or cameras, to monitor the user’s movement in 3D space. Want to stroll over to the school of fish swimming around you underwater? Crawl around on the floor of your virtual spaceship chasing after your robot dog? Walk around and explore every inch of a 3D replica of Michelangelo’s David? Provided your real-world physical space has room for you to do so, you can do these things in a room-scale experience.

A stationary experience, on the other hand, is just what it sounds like: a VR experience designed around the user remaining seated or standing in a single location for the bulk of the experience. Currently, the higher-end VR devices (such as the Vive, Rift, or WinMR headsets) allow for room-scale experiences, while the lower-end, mobile-based experiences do not.

Room-scale experiences can feel much more immersive than stationary experiences, because a user’s movement is translated into their digital environment. If a user wants to walk across the digital room, she simply walks across the physical room. If she wants to reach under a table, she simply squats down in the physical world and reaches under the table. Doing the same in a stationary experience would require movement via a joystick or similar hardware, which pulls the user out of the experience and makes it feel less immersive. In the real world, we experience our reality through movement in physical space; the VR experiences that allow that physical movement go a long way toward feeling more “real.”

Room-scale digital experiences must also show users where real-world physical barriers exist, displaying those boundaries in the digital world to prevent users from running into doors and walls.

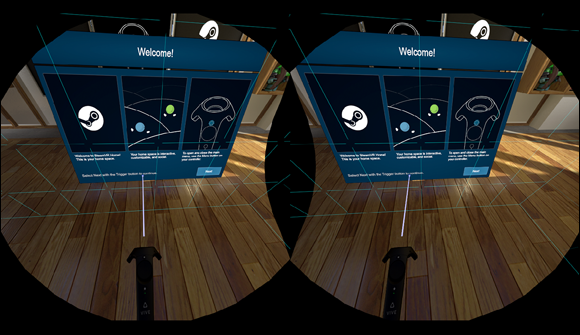

Figure 2-1 illustrates how the HTC Vive headset currently addresses this issue. When a user wearing the headset wanders too close to a real-life barrier (as defined during room setup), a dashed-line green “hologram wall” alerts the user to the obstacle. It isn’t a perfect solution, but considering the challenges of movement in VR, it works well enough for this generation of headsets. Perhaps a few generations down the road, headsets will be able to detect real-world obstacles automatically and flag them in the digital world.

FIGURE 2-1: The “hologram wall” border as seen in HTC Vive.
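To make the idea concrete, here is a minimal Python sketch of how a boundary warning like the one in Figure 2-1 might work. It is not HTC’s implementation. The rectangular play area, the `PlayArea` and `boundary_opacity` names, and the 0.5-meter warning distance are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class PlayArea:
    """Hypothetical rectangular play area centered on the origin, in meters."""
    width: float  # extent along the x axis
    depth: float  # extent along the z axis

    def distance_to_edge(self, x: float, z: float) -> float:
        """Distance from a floor position to the nearest edge (negative if outside)."""
        return min(self.width / 2 - abs(x), self.depth / 2 - abs(z))


def boundary_opacity(area: PlayArea, x: float, z: float,
                     warn_distance: float = 0.5) -> float:
    """Return how visible the boundary grid should be: 0.0 (hidden) to 1.0 (solid).

    warn_distance is an assumed tuning value, not a figure from any real headset.
    """
    d = area.distance_to_edge(x, z)
    if d >= warn_distance:
        return 0.0  # comfortably inside: keep the grid hidden
    if d <= 0.0:
        return 1.0  # at or past the edge: show the grid fully
    return 1.0 - d / warn_distance  # fade in as the user approaches the edge


area = PlayArea(width=3.0, depth=2.0)
print(boundary_opacity(area, 0.0, 0.0))   # user in the center of the room
print(boundary_opacity(area, 1.25, 0.0))  # user a quarter meter from a wall
```

Fading the grid in gradually, rather than snapping it on, is one way to warn users without yanking them out of the experience. A real system would track the controllers as well as the headset.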

Many room-scale VR experiences also require users to travel distances far greater than their physical space can accommodate. The solution for traveling in stationary experiences is generally a simple choice. Because a user can’t physically move about in a stationary experience, either the entire experience takes place in a single location or a different method of locomotion is used throughout (for example, using a controller to move a character in a video game). Room-scale experiences introduce a different set of issues. A user can now move around in the virtual world, but only the distance allowed by each user’s unique physical setup. Some users may be able to physically walk a distance of 20 feet in VR. Other users’ physical VR play areas may be tight, and they may only have 7 feet of space to physically walk that can be emulated in the virtual environment.

VR developers now have some tough choices to make regarding how to enable the user to move around in both the physical and virtual environments. What happens if the user needs to reach an area slightly outside of the usable physical space in the room? Or around the block? Or miles away?

If a user needs to travel across the room to pick up an item, in room-scale VR he may be able to simply walk to the object. If he needs to travel a great distance in room-scale, however, issues start to arise. In these instances, developers need to determine when to let a user physically move to close-proximity objects, but also when to help a user reach objects farther away. These problems are solvable, but with VR still in its relative infancy, the best practices for VR developers regarding these solutions are still being experimented with (see Chapter 7).
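One common way to frame that decision is sketched below in Python: let the user walk to anything that falls inside the physical play area, and fall back to an assisted move (here, a teleport) for anything beyond it. This is only an illustration of the trade-off, not a recommended best practice. The `Rig` class, the `reach` function, and the rectangular play area are hypothetical names and simplifications.

```python
from dataclasses import dataclass


@dataclass
class Rig:
    """Hypothetical mapping between play-area (physical) and virtual floor coordinates."""
    offset_x: float = 0.0
    offset_z: float = 0.0

    def to_virtual(self, phys_x: float, phys_z: float):
        return (phys_x + self.offset_x, phys_z + self.offset_z)

    def to_physical(self, virt_x: float, virt_z: float):
        return (virt_x - self.offset_x, virt_z - self.offset_z)

    def teleport(self, phys_x: float, phys_z: float, virt_x: float, virt_z: float):
        """Shift the mapping so the user's current physical spot lands on the virtual target."""
        self.offset_x = virt_x - phys_x
        self.offset_z = virt_z - phys_z


def reach(rig: Rig, user_phys, target_virt, half_width: float, half_depth: float) -> str:
    """Return "walk" if the target lies inside the play area, otherwise teleport there."""
    tx, tz = rig.to_physical(*target_virt)
    if abs(tx) <= half_width and abs(tz) <= half_depth:
        return "walk"  # close enough to reach with real footsteps
    rig.teleport(*user_phys, *target_virt)
    return "teleport"


rig = Rig()
print(reach(rig, (0.0, 0.0), (1.0, 0.5), half_width=1.5, half_depth=1.0))   # nearby item
print(reach(rig, (0.5, 0.0), (10.0, 0.0), half_width=1.5, half_depth=1.0))  # far-off item
```

After a teleport, the user keeps the same physical position but the virtual world is shifted around them, so nearby objects at the destination are again within walking distance.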

Inside-out tracking

Currently only the higher-end consumer headsets offer room-scale experiences. These high-end headsets typically require a wired connection to a computer, and users often end up awkwardly stepping over wires as they move about in room-scale. This wired problem is generally twofold: Wiring is required for the image display within the headset, and wiring is required for tracking the headset in physical space.

Headset manufacturers have been trying to solve this wired display issue, and many of the second generation of VR headsets are being developed with wireless solutions in mind. In the meantime, companies such as DisplayLink and TPCast are also researching ways to stream video to a headset without the need for a wired connection.

On the tracking side, both the Vive and the Rift are currently limited by their externally based outside-in tracking systems, where the headset and controllers are tracked via an external device. Additional hardware (called sensors for the Rift and lighthouses for the Vive) is placed around the room where the user will be moving around while in VR space. These sensors are separate from the headset itself. Placing them about the room allows for extremely accurate tracking of the user’s headset and controllers in 3D space, but users are limited to movement within the sensors’ field of view. When the user moves outside that space, tracking is lost.

Figure 2-2 shows the setup for the first-generation HTC Vive, which requires you to mount lighthouses around the space you want to track. You then define your “playable” space by dragging the controllers around the available area (which must be within visible range of the lighthouses). This process defines the area you’re able to move in. Many first-generation room-scale headsets handle their space definition similarly.

FIGURE 2-2: HTC Vive room setup.

In contrast, inside-out tracking places the sensors within the headset itself, removing the need for external tracking sensors. It relies on the headset to interpret depth and acceleration cues from the real-world environment in order to coordinate the user’s movement in VR. Windows Mixed Reality headsets currently utilize inside-out tracking.

Even though most high-end first-generation VR headsets still require tethering to a computer or external sensors, companies are finding creative ways to work around these issues. Companies such as the VOID have implemented their own innovative solutions that offer a glimpse of what sort of experiences a fully self-contained VR headset could offer. The VOID is a location-based VR company offering what they term hyper-reality, allowing users to interact with digital elements in a physical way.

The cornerstone of the VOID’s technology is their backpack VR system. The backpack/headset/virtual gun system allows the VOID to map out entire warehouses’ worth of physical space and overlay it with a one-to-one digital environment of the physical space. The possibilities this creates are endless. Where there is a plain door in the real world, the VOID can create a corresponding digital door oozing with slime and vines. What might be a nondescript gray box in the real world can become an ancient oil lamp to light the user’s way through the fully digital experience.

The backpack form factor the VOID currently utilizes is likely not one that will see success at a mass consumer scale. It’s cumbersome, expensive, and likely too complex to serve a mass audience. However, for the location-based experiences the VOID provides, it works well and gives a glimpse of the level of immersion VR could offer once untethered from cords and cables.

Both HTC and Oculus appear to be gearing up to ship wireless headsets as early as 2018, with both the HTC Vive Focus (already released in China) and Oculus’s upcoming Santa Cruz developer kits utilizing inside-out tracking.

Haptic feedback

Haptic feedback, the use of the sense of touch to provide information to the end user, is already built into a number of existing VR controllers. The Xbox One controller, the HTC Vive Wands, and the Oculus Touch controllers all have the option to rumble/vibrate to provide the user some contextual information: You’re picking an item up. You’re pressing a button. You’ve closed a door.

However, the feedback these controllers provide is limited, similar to your mobile device vibrating when it receives a notification. Although it’s a nice first step and better than no feedback whatsoever, the industry needs to push haptics much further to truly simulate the physical world while inside the virtual. There are a number of companies looking to solve the issue of touch within VR.

Go Touch VR has developed a VR touch system to be worn on one or more fingers to simulate physical touch in VR. The Go Touch VR is little more than a device that straps to the ends of your fingers and pushes with various levels of force against your fingertips. Go Touch VR claims that the device can generate a surprisingly realistic sensation of grabbing a physical object in the digital world.

Other companies, such as Tactical Haptics, are looking to solve the haptic feedback problem within the controller. Using a series of sliding plates in the surface handle of their Reactive Grip controller, they claim to be able to simulate the types of friction forces you would feel when interacting with physical objects. When hitting a ball with a tennis racquet, you would feel the racquet push down against your grip. When moving heavy objects, you would feel greater force pushing against your hand than when moving lighter objects. When painting with a brush, you would feel the brush pull against your hand as if you were dragging it over paper or canvas. Tactical Haptics claims to be able to emulate each of these scenarios far more precisely than the simple vibration most controllers currently allow.

On the far end of the scale of haptics in VR are companies such as HaptX and bHaptics, developing full-blown haptic gloves, vests, suits, and exoskeletons.

bHaptics is currently developing the wireless TactSuit. The TactSuit includes a haptic mask, haptic vest, and haptic sleeves. Powered by eccentric rotating mass vibration motors, it distributes these vibration elements over the face, front and back of the vest, and sleeves. According to bHaptics, this allows for a much more refined immersive experience, allowing users to “feel” an explosion, a weapon’s recoil, or a punch to the chest.

HaptX is one of the companies exploring the farthest reaches of haptics in VR with its HaptX platform. HaptX is creating smart textiles to allow you to feel texture, temperature, and shape of objects. It’s currently prototyping a haptic glove to take virtual input and apply realistic touch and force feedback to VR. But HaptX takes a step beyond the standard vibrating points of most haptic hardware. HaptX has invented a textile that pushes against a user’s skin via embedded microfluidic air channels that can provide force feedback to the end user.

HaptX claims that its use of technology provides a far superior experience to those devices that only incorporate vibration to simulate haptics. When combined with the visuals of VR, HaptX’s system takes users a step closer to fully immersive VR experiences. HaptX’s system could carry through its technology to the realization of a full-body haptic platform, allowing you to truly feel VR. Figure 2-3 shows an example of HaptX’s latest glove prototype for VR.

Courtesy of HaptX

FIGURE 2-3: HaptX VR gloves.

Audio

As VR seeks to emulate reality as closely as possible, it must engage other senses besides sight and touch. Smell and taste simulation are (perhaps thankfully) likely far off from reaching mass consumer adoption, but 3D audio is ready for its day in the sun. Engaging the user’s sense of hearing plays an important role in creating a realistic experience. Audio and visuals work together to provide a sense of presence and space for the user and help to establish the feeling of being there. Directional audio cues, alongside visual cues, can also be vital in guiding users through a digital experience.

Human hearing itself is three-dimensional; we can distinguish the 3D direction that a sound is coming from, the general distance from the source, and so on. Simulating that effect is necessary for a user to feel as if she’s experiencing audio as she would in the real world. 3D audio simulation has existed for a while, but often as a solution without a problem. With the rise of VR, 3D audio has found itself a market that can help propel it forward (and vice versa).

Most current headsets (even lower-end devices such as Google Cardboard) have support for spatial audio. Spatial audio takes into consideration the fact that the user’s ears are on opposite sides of the head and adjusts sounds appropriately. Sounds coming from the right will reach the user’s left ear with a slight delay (because the sound wave has farther to travel to reach the ear on the far side of the head). Before spatial audio, apps simply played sounds coming from the left in the left speaker, or coming from the right in the right speaker, cross-fading between the two.
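The size of that delay can be estimated with a short Python sketch. It uses Woodworth’s spherical-head approximation, a standard textbook model, rather than anything a particular headset or audio engine is known to use. The head radius and speed of sound are assumed average values, and the function names are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # meters per second in air at roughly 20 °C (assumed)
HEAD_RADIUS = 0.0875    # meters; an assumed average, since real heads vary


def interaural_time_difference(azimuth_deg: float) -> float:
    """Woodworth's approximation of the arrival-time gap between the ears, in seconds.

    azimuth_deg: 0 is straight ahead, +90 is directly right, -90 is directly left.
    A positive result means the sound reaches the right ear first.
    """
    theta = math.radians(max(-90.0, min(90.0, azimuth_deg)))
    return (HEAD_RADIUS / SPEED_OF_SOUND) * (theta + math.sin(theta))


def ear_delays(azimuth_deg: float):
    """Return (left_delay, right_delay) in seconds; the nearer ear gets no delay."""
    itd = interaural_time_difference(azimuth_deg)
    return (itd, 0.0) if itd >= 0 else (0.0, -itd)


left, right = ear_delays(90.0)  # a sound directly to the user's right
print(f"left ear delayed by {left * 1000:.2f} ms, right ear by {right * 1000:.2f} ms")
```

For a sound directly to one side, the far ear hears it roughly two-thirds of a millisecond late under these assumptions. That gap is tiny, but the brain uses it as a strong directional cue. Real spatial audio systems combine this timing difference with level and frequency filtering, which this sketch leaves out.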

Standard stereo recordings consist of two different channels of audio signal, recorded with two microphones spaced apart. This recording approach can create a loose sense of space, with panning of the sound between each channel. Binaural recordings are two-channel recordings created by using special microphones that simulate a human head. This allows for extremely realistic playback through headphones. For live audio in VR, binaural audio recordings can create a very realistic experience for the end user.

Considering Controllers

Early virtual experience users discovered that, although the visuals are important, the experience quickly falls flat if you don’t have a system of input that matches the visuals in fidelity of experience. Users may be fully immersed in the visuals of a VR experience, but as soon as they try to move their hands or feet and find those movements not reflected in the virtual world, the immersion crumbles.

“The virtual reality experience is not going to be complete with just the visual side,” Oculus Rift founder Palmer Luckey told The Verge. “You absolutely need to have an input and output system that is fully integrated, so you not only have a natural way to view the virtual world, but also a natural way to interact with it.”

The following are various input devices and features you will come across as you delve into the world of VR. Some are very simple, and some are incredibly complex. Each offers a vastly different take on how interaction in the virtual world should occur. Sometimes the simplest input solution may serve the experience the best, such as a gaze to trigger an action. Other times, nothing fills out the immersion experience better than a fully realized digital hand mimicking the movements of a user’s physical hand.

Toggle button

The toggle button is so simple it barely warrants an entry here. However, it’s also currently the only physical input method for the virtual world on the Google Cardboard, the best-selling VR headset (based solely on the number of devices sold at the time of this writing).

Little more than a simple on/off tap switch (or magnetometer toggle), the humble button needs little introduction beyond perhaps pointing users to where it’s located. Click, and actions are triggered.

Integrated hardware touchpad

Some hardware manufacturers, such as Samsung (on the Gear VR), have taken the idea of an integrated hardware button a step further by incorporating a full touchpad on the side of their headsets. Figure 2-4 shows Samsung’s integrated touchpad (1) used for tapping, swipes, and clicks, as well as an integrated Home button (2) and Back button (3).

Courtesy of Oculus

FIGURE 2-4: Samsung Gear VR’s integrated touchpad.

The touchpad allows for better interaction than the simple toggle button. The touchpad gives the user the freedom to swipe horizontally or vertically, tap on items, toggle volume, and back out of content as needed. The touchpad also provides a backup means of control if the user misplaces a device’s motion controller.

One drawback is that integrated control solutions need ways to communicate with the device running the experience. For example, mobile VR headsets using integrated hardware controls may require a micro-USB (or similar) connection to the mobile device. In addition, because the touchpad may not have a natural integration with the virtual world (simulating the controller in the virtual world), it can pull the user out of the experience.

Gaze controls

Gaze controls can be baked into any VR application. They are a popular means of VR interaction, especially in applications where interaction is more passive than active. (Think of gazing at a control for a set period of time to trigger that control versus actively using a touchpad or motion controller.) Video applications and photo viewers, where a user may be more passively engaged with the content, are good examples of applications that often utilize gaze controls.

And gaze controls are not for passive interaction alone. Gaze, in combination with other methods of interaction (such as hardware buttons or controllers), is often used in VR to trigger interactions. As eye tracking (discussed later in this chapter) becomes more popular, gaze controls will likely see even more usage.
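The “gaze at a control for a set period of time” pattern is often called a dwell timer. Here is a minimal Python sketch of one. The `DwellSelector` class, its method names, and the two-second default are hypothetical, and in a real application the engine would supply the gazed-at target and the frame time.

```python
from typing import Optional


class DwellSelector:
    """Triggers a target once the gaze has rested on it for dwell_seconds."""

    def __init__(self, dwell_seconds: float = 2.0):
        self.dwell_seconds = dwell_seconds  # assumed default, tune per application
        self._target: Optional[str] = None
        self._elapsed = 0.0

    def update(self, gazed_target: Optional[str], dt: float) -> Optional[str]:
        """Call once per frame with the target under the gaze (or None) and the frame time.

        Returns the target's id on the frame it triggers, otherwise None.
        """
        if gazed_target != self._target:
            # The gaze moved to something else (or to nothing): restart the timer.
            self._target = gazed_target
            self._elapsed = 0.0
            return None
        if gazed_target is None:
            return None
        self._elapsed += dt
        if self._elapsed >= self.dwell_seconds:
            self._elapsed = 0.0  # re-arm so the control doesn't fire every frame
            return gazed_target
        return None

    @property
    def progress(self) -> float:
        """Fraction of the dwell time completed, useful for drawing a fill ring."""
        return min(self._elapsed / self.dwell_seconds, 1.0)


selector = DwellSelector(dwell_seconds=1.0)
for frame in range(4):
    fired = selector.update("play_button", dt=0.5)
    print(frame, fired)
```

Resetting the timer whenever the gaze leaves the target keeps a passing glance from triggering anything, which matters when the user is simply looking around.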

A reticle in VR is any graphic used to help indicate where a user is gazing. For headsets that do not include eye tracking, this is typically the center of a user’s vision. Often just a simple dot or crosshair, the reticle typically sits on top of all elements as a way to help visualize what a user will currently be selecting. A reticle directly in the center of view offers a simple solution until more sophisticated eye tracking in headsets becomes mainstream.

Figure 2-5 shows an example of a reticle in use in VR. The reticle helps a user know where his gaze is focused within a virtual scene.

FIGURE 2-5: A reticle in use in VR.
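As a rough illustration of how a center-of-view reticle can be placed, the Python sketch below casts a ray straight out from the headset and puts the reticle on the first target it hits. Treating targets as spheres, the function names, and the 2-meter fallback distance are all simplifying assumptions. Real engines provide their own ray-casting tools.

```python
import math


def gaze_hit_distance(origin, direction, center, radius):
    """Distance along the gaze ray to a spherical target, or None if the ray misses.

    direction must be a unit vector; the viewer is assumed to be outside the sphere.
    """
    to_center = [c - o for o, c in zip(origin, center)]
    t_closest = sum(a * b for a, b in zip(to_center, direction))
    dist_sq = sum(a * a for a in to_center) - t_closest ** 2
    if t_closest < 0 or dist_sq > radius ** 2:
        return None  # target is behind the viewer or off to the side
    t_hit = t_closest - math.sqrt(radius ** 2 - dist_sq)
    return t_hit if t_hit >= 0 else None


def reticle_position(origin, direction, targets, default_distance=2.0):
    """Place the reticle on the nearest target hit, or at a fixed distance if none is hit.

    targets is a list of (center, radius) pairs.
    """
    hits = [d for d in (gaze_hit_distance(origin, direction, c, r) for c, r in targets)
            if d is not None]
    distance = min(hits) if hits else default_distance
    return tuple(o + distance * d for o, d in zip(origin, direction))


head = (0.0, 0.0, 0.0)
forward = (0.0, 0.0, -1.0)  # looking down the negative z axis
button = ((0.0, 0.0, -5.0), 1.0)
print(reticle_position(head, forward, [button]))
```

Drawing the reticle at the depth of whatever it is resting on, instead of at a fixed distance, helps it read as touching the object.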

Keyboard and mouse

Some VR headsets utilize variations of a standard mouse and/or keyboard for interaction. The problem with such setups can be that there is no way to see the keyboard from inside the headset. Even the best touch typists have trouble typing when unable to at least glance down at the keyboard.

Using a mouse can also be problematic. In standard 2D digital worlds, such as on desktop PCs, the mouse has long been the standard tool for shifting the view to “look around.” In the 3D world, though, the headset gaze should control what a user is seeing. In a few early applications, both the mouse and gaze controls could shift a user’s view, but this interaction had the potential to be very disorienting, as the mouse pulled the gaze one way and the user’s physical gaze pulled another.

Some VR apps still support keyboards and mice, but those input methods have mostly fallen out of fashion as the main input in favor of more integrated input solutions. However, these new integrated solutions can come with a drawback of their own. With a keyboard no longer available as the main input device, how can long-form text be entered into applications?

A number of different control options have been put forth to solve that problem. Logitech has created a proof-of-concept VR accessory that allows HTC Vive users to see a representation of their physical keyboard in the virtual space. With a tracker connected to your keyboard, Logitech’s software places a 3D model of the keyboard in your VR space right on top of the physical version, an interesting execution that could help the touch typists of the world.

Fully digital text input solutions exist as well. Jonathan Ravasz’s Punch Keyboard is a predictive keyboard that enables users to type using motion controllers as drumsticks. Figure 2-6 shows the Punch Keyboard in use. Moving forward, VR application developers will need to find and standardize on better text input methods if they are to see mass consumer adoption.

FIGURE 2-6: Jonathan Ravasz’s Punch Keyboard in use.

Standard gamepads

Many headsets and controllers support standard gamepads or videogame controllers, a familiar input solution for many gamers. The original Oculus Rift even shipped with an Xbox One controller, a gamepad many gamers were already familiar with (see Figure 2-7).

Used with permission from Microsoft

FIGURE 2-7: The Xbox One controller.

Gamepads are a familiar input method for many VR users who are also gamers, and they were a good first step away from keyboard and mouse. As an input solution in VR, however, they typically don’t feel as integrated as some of the other input options. Most VR headsets seem to be moving away from relying on standard gamepads as the main source of input for VR, preferring instead more integrated motion controllers.

Motion controllers

Motion controllers, once mainly a slightly gimmicky device for 2D PC games, have quickly become the de facto standard for interaction in VR. Nearly all the larger headset manufacturers have released a set of motion controllers compatible with their headsets.

Figure 2-8 displays a pair of Oculus Touch motion controllers, the latest set of controllers packaged with the Oculus Rift. HTC and Microsoft both have similar variations on the same theme, both offering a pair of untethered motion controllers.

Courtesy of Oculus

FIGURE 2-8: A pair of Oculus Touch motion controllers.

A controller ideally should be nearly invisible to the end user. When utilizing many of the input methods previously discussed in this chapter, users must make conscious decisions outside of the VR experience to do so: I am now clicking a button on the side of the headset. I am now hunting/pecking for the W key on the keyboard. Motion controllers take a step in the direction of eliminating the issue of input being separate from your VR experience. Motion controllers are typically visualized within the VR experience, and can begin to feel like a natural extension of your hand. Many of the higher-end VR controllers also offer six degrees of freedom of movement, allowing even deeper immersion with these methods of input.

Not to be left behind by the higher-end headsets, first-generation mid-tier mobile headset options (Gear VR and Daydream) have included motion controls of their own. These options have been simplified from the higher-end headsets and are typically realized as a single controller with a variety of features (a touchpad, volume controls, Back/Home buttons, and so on). The controller also has a representation in the digital world, allowing the user to “see” what his hand is doing in the real world. Unlike the higher-end motion controllers, these controllers typically only offer three degrees of freedom (only tracking their rotation in the virtual space).
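One way to picture the difference between three and six degrees of freedom is to look at what data the controller reports. The sketch below is purely illustrative; the class and field names are assumptions, not any vendor's API.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class ControllerPose:
    """Hypothetical snapshot of what a motion controller reports."""
    # Rotation as (yaw, pitch, roll) in degrees: available on every
    # motion controller discussed here.
    rotation: Tuple[float, float, float]
    # Position as (x, y, z) in meters: only reported by controllers
    # that also track where they are in space.
    position: Optional[Tuple[float, float, float]] = None

    @property
    def degrees_of_freedom(self) -> int:
        # Three rotational axes, plus three positional axes if tracked.
        return 6 if self.position is not None else 3
```

A rotation-only controller can tell the app where it is pointing, but not whether you have reached forward or lifted your arm. That missing position data is why apps for these controllers typically anchor the virtual controller near where a hand would rest.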

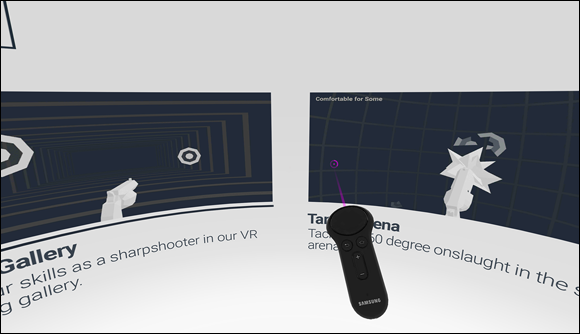

Figure 2-9 displays what a user would see in VR when using the Samsung Gear VR controller. There’s a virtual representation of the real device, enabling the user to target items in VR.

FIGURE 2-9: Samsung Gear VR controller in VR.

Although they’re less sophisticated than the higher-end motion control options, even these simple one-handed controllers can provide a much more integrated experience for VR than the previously discussed options. The ability to “see” the controller in the virtual space and track its physical movement is an enormous step toward immersing the user into the virtual world and incorporating a user’s physical movements into the virtual space.

The higher-end headset options (such as the Rift, Vive, and Windows Mixed Reality) have a pair of wireless motion controllers. The motion controllers have some slight differences (a touchpad on each Vive wand, shown in Figure 2-10, versus the analog joysticks of the Oculus Touch), but they share many similar qualities overall.

Courtesy of HTC Vive

FIGURE 2-10: A pair of HTC Vive “wand” motion controllers.

These high-end controller pairs allow for incredibly precise (sub-millimeter) object detection. Being able to look down and see a visual representation of the controllers moving in tandem with your physical body is another important step in making the VR experience truly immersive.

Many VR applications have started to target only motion controllers as their main input device moving forward. Motion controls appear to be the standard for interaction in VR for now. However, a plethora of other options is available for interacting in VR, with many more being developed.

Hand tracking

Hand tracking enables headsets to track the user’s hands in VR without any additional hardware worn on the extremities. And some people consider hand tracking the next evolutionary step from motion controllers.

A number of companies are exploring this avenue for VR applications, and in AR applications, this method of control is seeing even more development. Companies such as Leap Motion have been working on hand tracking extensively, both inside and outside of VR, for years. Leap Motion initially launched its hand tracking controller in 2012, intended for use with 2D screens. With the rise of VR, the company pivoted its technology to cover VR as well, seeing the potential its technology could have for interaction in that space.

Unlike motion controllers, where the visual in VR is typically a controller, wand, virtual “fake” hand or similar model, hand tracking typically brings a representation of your actual hand into the virtual space. You pinch your fingers, and your digital hand pinches. You flex your thumbs, and your digital hand flexes its thumbs. You give the peace sign, and your digital hand does as well. Viewing a digital representation of your physical hands in VR, with full finger tracking, is a bit of a mind-bending experience. It can feel as if you’re trying on a new body. You may find yourself staring at your hand in VR, opening and closing your fist, just to watch your digital representation do the same.

One drawback of hand tracking is that, although the tracking itself can be impressive, it lacks the tactile feedback a user would expect if interacting in the real world. Picking up a box in VR using hand tracking alone does not give the tactile feedback of picking up a box in the real world, which can feel awkward to many users.

Hand tracking will likely continue to take a back seat to motion controllers for standard consumer VR experiences for the near future. However, hand tracking will eventually find a place in VR. It’s too special an experience to not eventually find a way to be utilized within the digital world.

Eye tracking

In 2016, a company called FOVE released the first VR headset with built-in eye tracking. Facebook, Apple, and Google also all acquired eye-tracking startups for their various VR and AR hardware devices, signaling eye tracking as a field to watch.

Eye tracking has the potential to make a user’s experience in VR much more intuitive. Most of the current generation of headsets (outside of entries such as the FOVE) determine only where the user’s head is turned, not necessarily where the user is actually looking.

As discussed earlier in this chapter, most headsets use a reticle that sits directly in the middle of a user’s view to inform the user where her gaze is centered. However, in the real world, people often focus their vision somewhere else besides directly in front of their faces. Even using a computer screen, often directly in front of your gaze, your eyes are often straying to the bottom or top of the screen to select various menus, or down to your keyboard or mouse, even though your head may remain stationary.

Eye tracking also makes possible foveated rendering, a technique in which only the area the eye is focused on is rendered at full resolution. Figure 2-11 shows an example of how foveated rendering would play out in VR. With the eye focused on one area, the center foveated region would remain at full resolution. The secondary blended region would create a step between the full-resolution area and the much lower-resolution peripheral region.

FIGURE 2-11: An example of foveated rendering at work.
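The three regions in Figure 2-11 can be sketched as a simple function that picks a resolution scale based on how far a point on the display is from where the eye is looking. The region sizes, the peripheral scale, and the linear blend are all assumptions chosen for illustration; actual implementations vary by hardware and rendering engine.

```python
def resolution_scale(angle_from_gaze_deg,
                     foveal_deg=10.0,        # hypothetical foveated region radius
                     blend_deg=25.0,         # hypothetical outer edge of blend region
                     peripheral_scale=0.25): # hypothetical peripheral resolution
    """Return the fraction of full resolution to render at, given the
    angular distance between a screen region and the user's gaze point."""
    if angle_from_gaze_deg <= foveal_deg:
        return 1.0                           # center region: full resolution
    if angle_from_gaze_deg >= blend_deg:
        return peripheral_scale              # periphery: much lower resolution
    # Blended region: ramp linearly from full down to peripheral resolution.
    t = (angle_from_gaze_deg - foveal_deg) / (blend_deg - foveal_deg)
    return 1.0 + t * (peripheral_scale - 1.0)
```

The payoff is that the headset spends most of its rendering effort on the small area the user can actually see sharply, which is why accurate eye tracking is a prerequisite.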

Although FOVE is focused on eye tracking within its own headset, other developers such as Tobii and 7invensun are focusing on creating foveated rendering hardware that can be added to existing VR consumer devices.

With most major headset manufacturers remaining mum on their plans for adding eye tracking to their next generation of devices, it’s likely that onboard eye tracking for headsets may be a generation or two out, though it’s definitely a development to keep an eye on.

… and much more

Voice control and speech may begin to see deeper uses as an interaction method with VR. Windows Mixed Reality headsets already allow speech commands, and most headsets with integrated microphones can support voice recognition as well. Plus, speech recognition and conversational user interfaces (UIs) are fields that are currently seeing heavy development and investment outside of VR/AR. Although speech recognition wasn’t a clear area of focus for some of the first generation of VR headsets, it’s a natural method of input that will likely see heavier development in the VR space in the near future.

On the hardware front, there is no lack of innovation in the methods of input space for VR. Some of the specialty options range from a vast collection of VR gun controllers, to foot controllers such as the 3D Rudder or SprintR, to full-blown platforms for walking or running in VR such as the Virtuix Omni treadmill, shown in Figure 2-12.

Courtesy of Virtuix

FIGURE 2-12: Virtuix’s Omni treadmill for locomotion in VR.

Many controller options may find their way into niche spaces in arcades or other one-off experiences. The real trick is finding which peripherals will be the next big thing in VR, and which will end up falling by the wayside.

The VR controller landscape is nothing if not extremely large and ever expanding. And for good reason: No one has yet discovered the “one true way” to mimic our physical experience in VR. Perhaps human perception of reality is so complex that there will never be one true way to mimic our interaction with the physical world. Or perhaps VR will reveal input methods we only wish we could incorporate into our physical world.

Now and in the near future, the standard VR experience appears to be rallying around a combination of gaze interaction and motion controllers for the broad set of interactions VR hopes to emulate. But who knows what the far future holds?

Recognizing the Current Issues with VR

Consumer-grade VR is steadily getting lighter, cheaper, and more polished, but it still has a number of technical hurdles to overcome to truly reach its mass consumer potential. Fortunately, renewed interest in VR over the past few years has led to an influx of investments in the field, which should accelerate uncovering solutions. This section explores some of the major issues facing VR today and how some companies are working to resolve them.

Simulator sickness

Early HMD devices generated widespread user complaints of motion sickness. This issue alone was enough to derail early VR mass consumer devices such as Sega VR and Nintendo’s Virtual Boy. It’s an issue that modern headset manufacturers still grapple with.

Motion sickness can occur when there are inconsistent signals between your inner ear’s vestibular sense of motion and what your eyes are seeing. Because your brain senses these signals are inconsistent, it then assumes that your body is sick, potentially from a toxin or some other affliction. At that point, your brain may decide to induce headaches, dizziness, disorientation, and nausea. VR headset use can induce a type of motion sickness that doesn’t necessarily involve any real motion; researchers have dubbed it simulator sickness.

There are a number of ways to combat simulator sickness, including some unconventional approaches. One study by Purdue University’s Department of Computer Graphics Technology suggested adding a “virtual nose” to every VR application to add a stabilizing effect for the user. Virtualis LLC is commercializing this virtual nose, naming it nasum virtualis. Embedding the nose in the user’s field of view acts as a fixed point of reference to ease VR sickness. Your physical nose appears in your line of sight in real life, but you often don’t perceive it there. Similarly, most users in Virtualis’s studies didn’t even notice the virtual nose in VR, but they reported a 13.5 percent drop in severity of sickness and an increase of time spent in the simulator.

Figure 2-13 displays how a virtual nose may appear to users in VR.

FIGURE 2-13: Using a virtual nose to prevent simulator sickness.

The most effective way that VR developers can combat simulator sickness, however, is to minimize latency between a user’s physical motion and the headset’s response. In the real world, there is no latency between the movement of our heads and the visual response of the world around us. Reproducing that lack of latency in the headset is of paramount importance.

With the proliferation of VR on low-powered mobile devices, it’s more important than ever to keep the frames per second (FPS) each headset can display as high as possible. Doing so enables the visuals in the headset to stay aligned with the user’s movement.
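A quick bit of arithmetic shows why high frame rates are demanding. The time available to draw each frame is simply one second divided by the frame rate. The sketch below uses example frame rates and render times chosen for illustration; the function names are hypothetical.

```python
def frame_budget_ms(fps):
    """Milliseconds available to render one frame at a given frame rate."""
    return 1000.0 / fps


def fits_budget(render_time_ms, fps):
    """True if a frame that takes render_time_ms can be shown on time."""
    return render_time_ms <= frame_budget_ms(fps)


# Example frame rates only; check your target headset's actual refresh rate.
for rate in (30, 60, 90):
    print(f"{rate} FPS leaves {frame_budget_ms(rate):.1f} ms per frame")
```

A scene that takes 14 ms to render would fit comfortably at 60 FPS but miss the deadline at 90 FPS, and every missed deadline is a moment where the visuals lag behind the user's head.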

Here are some other suggestions for avoiding simulator sickness when creating or using VR apps:

- Ensure the headset is properly adjusted. If the virtual world seems blurry when trying on your headset, most likely the headset needs adjustment. Most headsets enable users to tweak the device’s fit and distance from their eyes to eliminate any blurriness. Be sure your headset is properly adjusted before going into any VR experience.

- Sit down. For some people, the sense of stability that being seated provides helps them overcome their motion sickness.

- Keep text legible. Avoid reading or using small-font text in VR, and keep text use to a minimum (just a few words at a time).

- No unexpected movement. When developing, never move the camera programmatically without reason. The user should feel that any motion results from his own movement or from an interaction he triggered.

- Avoid acceleration. It is possible to move virtual cameras without triggering simulator sickness, but that motion needs to be smooth. Avoid accelerating or decelerating a user when motion in the virtual space needs to occur.

- Always track the user’s movements. Do not reverse the camera against a user’s motion or stop tracking the user’s head position. A user’s view must update with her head movement.

- Avoid fixed-view items. Fixed-view items (items that do not change when the view changes, such as a pop-up to inform the user of something in the middle of the screen or a heads-up display [HUD]) are fairly common in 2D gaming today. This mechanic does not work well in VR, however, because it isn’t something users are accustomed to in the real world.
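For developers, the "Avoid acceleration" suggestion in the list above might look something like the following sketch, which moves a virtual camera toward a destination at a constant speed, with no easing in or out. The function name and the values used are hypothetical.

```python
import math


def step_toward(position, target, speed, dt):
    """Advance the camera toward target at a constant speed (units per
    second) for one frame of length dt seconds. No ramp-up or slow-down."""
    delta = [t - p for p, t in zip(position, target)]
    dist = math.sqrt(sum(d * d for d in delta))
    step = speed * dt
    if dist <= step:
        return tuple(target)  # close enough: arrive exactly, never overshoot
    # Move a fixed distance along the straight line to the target.
    return tuple(p + d / dist * step for p, d in zip(position, delta))
```

Called once per frame, this keeps the camera's velocity unchanged from the first frame of motion to the last. An eased animation that looks pleasant on a flat screen changes speed continuously, which is exactly what this suggestion warns against.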

As devices become more powerful, simulator sickness due to low FPS should, in theory, become less common. The more powerful the device, the better the ability to keep the visuals and movement of the virtual world tracked to the user’s physical movement, reducing the main cause of simulator sickness.

However, although we have far surpassed the processing power of computers of old, we still run into software that often runs slower than games of 20 years ago. In general, the more powerful our hardware gets, the more we tend to ask of it. Better visuals! More items on screen! Larger fields of view! More effects! Knowing the potential causes of simulator sickness should help you navigate these issues should they appear.

The screen-door effect

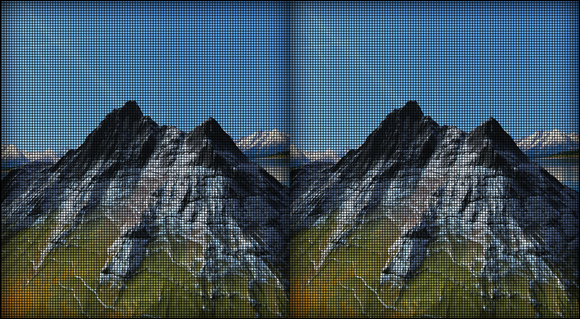

Put on any older VR headset, or some of the current smartphone-powered VR headsets, and look closely at the image produced in the headset. Depending on the resolution of the device you’re using, you may notice “lines” in between the displayed pixels. As a child, you may have noticed the same thing if you sat too close to your older TV set. This issue is called the screen-door effect, for its resemblance to looking at the world through a screen door. Although this problem has long been solved for televisions of today with extremely high resolutions, it has been reintroduced in some VR headsets.

Figure 2-14 displays an exaggerated example of the screen-door effect as seen in VR. (An actual screen-door effect would occur on a pixel-by-pixel basis, not shown here.) With the display so close to a user’s face, the grid of space between pixels can begin to become apparent.

FIGURE 2-14: The screen-door effect in VR.

This effect is most noticeable in displays with lower resolutions, such as older headsets or some smartphones, many of which were never intended to be used primarily as VR machines, held inches from your nose and magnified via optic lenses.
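A rough way to compare displays is to estimate how many pixels cover each degree of the user's field of view. The sketch below is a simplification that ignores lens distortion, and the numbers passed in are made-up examples rather than the specifications of any real device.

```python
def pixels_per_degree(horizontal_pixels_per_eye, horizontal_fov_deg):
    """Approximate angular pixel density, assuming pixels are spread
    evenly across the field of view (real lenses do not do this exactly)."""
    return horizontal_pixels_per_eye / horizontal_fov_deg


# Hypothetical example: the same pixel count looks far coarser when
# stretched across a wide VR field of view than across a distant screen.
headset = pixels_per_degree(1080, 100)   # display magnified to fill your view
television = pixels_per_degree(1920, 30) # screen viewed from across the room
print(f"headset: {headset:.1f} px/deg, television: {television:.1f} px/deg")
```

The lower the number, the larger each pixel (and each gap between pixels) appears to the eye, which is why a phone screen that looks razor sharp in your hand can look grainy inside a headset.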

Various proposals have been put forth to solve this issue. For example, LG has suggested placing a “light diffuser” between the screen and lenses, though most people agree the real fix will be higher-resolution displays. High-definition displays should alleviate the screen-door effect, as they have for TV, but they’ll require more processing power to run. As with simulator sickness, the hope is that the better the hardware, the less likely this effect will be to occur. With a little luck, the screen-door effect should become a relic of the past within the next generation or two of VR headsets.

Movement in VR

Moving through the digital environment of VR is still an issue. Higher-end headsets, such as Vive and Rift, allow users to be tracked throughout a room, but not much farther than that. Anything more requires some sort of movement mechanic built into the application itself, or specialized hardware beyond what most consumers likely have available (as discussed in the “Considering Controllers” section earlier in this chapter).

Movement over large distances in VR will likely be an ongoing logistical problem for application developers. Even utilizing some of the solutions listed previously, movement in VR that does not correspond to physical motion can trigger simulator sickness in some users. And even if you could guarantee an omni-directional treadmill for every user to track his or her movement, there are often large distances users will not want to cover walking. Plus, users with limited mobility may be unable to traverse distances on foot. Movement is an issue that hardware and software developers will need to work together to solve. And there are solutions available. I discuss some of the potential solutions for movement in VR in Chapter 7.

Health effects

Health risks are perhaps the largest unknown on this list. The Health and Safety guidelines for Oculus Rift caution against use if the user is pregnant, elderly, tired, or suffering from heart conditions. They also warn that users might experience severe dizziness, seizures, or blackouts. Scary-sounding stuff! There are also big unknowns as to the long-term health effects of VR. Researchers have yet to thoroughly study the impact of long-term use of VR headsets on eyesight and the brain.

Initial studies have generally shown that most adverse health effects are short-term, with little lasting effect on the user. However, as users begin to stay in VR space for longer stretches at a time, further studies will be required to discover any long-term effects of VR usage.

In the meantime, VR companies seem to be erring on the side of caution regarding the potential long-term effects. As Sarah Sharples, president of the Chartered Institute of Ergonomics and Human Factors, said in an interview with The Guardian, “Absolutely there are potentially negative effects of using VR. The most important thing that we should do is just to be cautious and sensible. But we should not let that stop us from taking advantage of the massive potential this technology offers as well.”

Cannibalization of the market

One final worry has to do with the VR market as a whole. The mobile market (and specifically the cheapest implementation, Google Cardboard) holds a massive adoption advantage over the higher-end headsets (refer to Table 2-3). And perhaps for good reason. It’s easier for a consumer to stomach a $20 purchase of a low-end mobile VR headset than to save up a few hundred dollars for a higher-end model.

Predictably, low-end headsets tend to offer lower-end experiences. A user may dismiss a low-end VR system as little more than a toy, believing it represents the current level of VR immersion, when nothing could be further from the truth. However, perception can often become reality. Could the proliferation of low-end VR implementations serve to harm VR’s adoption in the long run, cannibalizing its own market?

The sales figures of lower-end devices have likely made some companies sit up and take notice. Many manufacturers appear to be focusing on a multi-tiered strategy for their next generation of headsets, offering experiences ranging from low end to high end for consumers. For example, Oculus co-founder Nate Mitchell claimed in an interview with Ars Technica that Oculus would focus on a three-headset strategy for its next generation of consumer headsets, with the standalone Oculus Go, set to be released in 2018, as its lower-end standalone device, followed by the Oculus Santa Cruz, a mid-tier headset experience. Similarly, HTC has released the HTC Vive Pro as a higher-end device, with the standalone HTC Vive Focus (released in China), focused more on the mid-tier market. For a more in-depth discussion of the various market segments of upcoming hardware, see Chapter 4.

In the long run, there is likely a broad enough market base to support all variations of VR. With the advent of the next generation of headsets, it will be interesting to see which devices make the biggest inroads with consumers. The near future will likely bring a rise in midrange mobile device headsets, while the higher-end, external PC-based headsets cater to those going all-in on high-end devices. The latter is a smaller demographic, but one that is willing to spend more to get the best-in-class experience that VR can offer.

Assessing Adoption Rates

Research firms such as Gartner Research estimate that mass adoption of VR will occur by 2020 to 2023. Based on the current state of VR hardware, features, and issues explored in this chapter, that estimate feels appropriate, though VR will need to see strong adoption of the upcoming second-generation consumer devices (releasing soon) to get there.

The adoption rate of headsets, especially the low- to mid-tier headsets such as Google Cardboard and Gear VR, shows that the public is ready to dip its toes into the water of VR. Manufacturers are still trying to determine what sort of headsets the public wants, and this first round of headsets has given them figures to work with and make decisions against.

Today’s consumers only have access to the first-generation mass consumer VR headsets. Some companies (such as Oculus, Google, and HTC) have announced plans for their next generation of headsets, but other companies (like Microsoft) are just now getting their first generation of devices into the VR market (in the case of Microsoft, the Windows Mixed Reality VR headsets launched in late fall of 2017).

Although many of these first-generation headsets offer impressive immersive experiences, it’s clear that within this first generation, no manufacturer has completely figured out what will work best for mass consumer adoption in the VR space. For each headset version released, each VR app created, more and more knowledge is gained and companies are able to adjust their road maps and home in on creating truly great (and commercially viable) VR devices.

Mark Zuckerberg, Facebook CEO, recently announced a goal of “getting one billion people into virtual reality.” That’s an incredibly lofty goal. For comparison, the Internet, which most people would consider ubiquitous throughout the globe, has 3.2 billion users worldwide. For VR to reach close to that number of users would take a massive level of adoption.

However, many corporations have a vested interest in VR’s success, and many consumers want it to succeed. The next few years will be pivotal for the development of VR. This time period will likely paint a picture of the type of growth we can expect to see for VR, as it strives to hit mass consumer adoption. When headset manufacturers are able to fully dial in the experience aligning with consumers’ affordability sweet spot, Zuckerberg’s goal may not be out of reach.

Windows Mixed Reality is not a brand of hardware; instead, it’s a mixed reality platform that includes specifications that hardware providers can follow to create their own Windows Mixed Reality headsets. (Google Cardboard, discussed later in this chapter, functions in a similar fashion.) To use a personal computing metaphor, you might think of the HTC Vive or Oculus Rift as Apple, in that each manufacturer creates and markets its own headset hardware, controlling all aspects. On the other hand, Microsoft controls only the software specifications for Windows Mixed Reality; it doesn’t necessarily create its own hardware. Various manufacturers can create their own headsets and sell them under the Windows Mixed Reality name, provided they meet Microsoft’s specifications. However, the AR headset Microsoft HoloLens (which Microsoft does produce) also technically falls under the Windows Mixed Reality platform. It can all get very confusing. In this chapter (and throughout the book), when I discuss Windows Mixed Reality, it is generally in relation to the line of immersive VR headsets. When discussing the Microsoft HoloLens, I typically refer to it directly by name.

You may hear stationary experiences referred to a few different ways: standing, seated, stationary, desk-scale, and so on. They all mean the same thing: You can’t move about in physical space in the VR experience.

OLED stands for organic light emitting diode. Known for its ability to display absolute blacks and extremely bright whites, OLED generally compares favorably to LCD in its contrast ratios and power consumption.

Room-scale is not without its own set of drawbacks. Room-scale experiences can require a fairly large empty physical space if a user wants to walk around in VR without bumping into physical obstacles. Having entire rooms of empty space dedicated to VR setups in homes isn’t practical for most of us, though there are various tricks that developers can use to combat this lack of space (see