Chapter 4

VR Headsets and Other Human–VR Interfaces

This chapter covers the current means of interfacing with virtual reality. From VR headsets to controllers and haptic vests, the hardware market is booming, and engineers are looking for new, intuitive ways to make participants feel even more present in the virtual world. The complexity and cost of current VR headsets are often cited as potential threats to the success of the VR industry, so it is vital to make interface technology more affordable and intuitive. This chapter lists the most important specs and requirements of current VR headsets and what to look for in the near future.

A Note on Virtual Reality Sickness

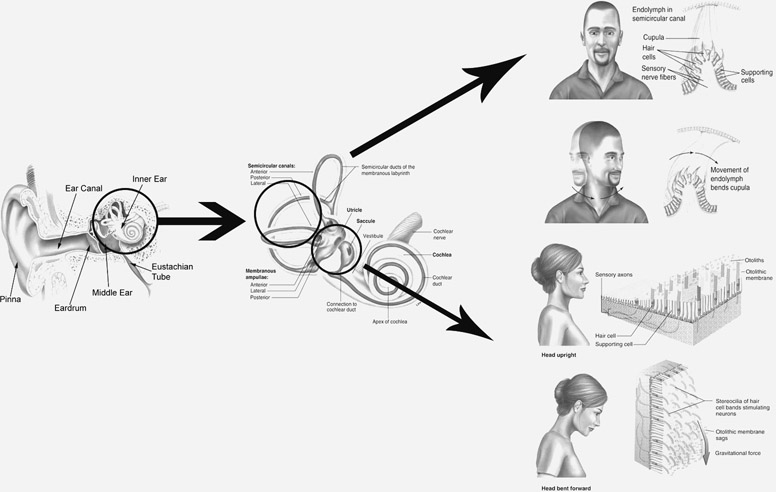

What causes virtual reality sickness? Our bodies are trained to detect and react to conflicting information coming from our vision and from the vestibular system, located in the inner ear.

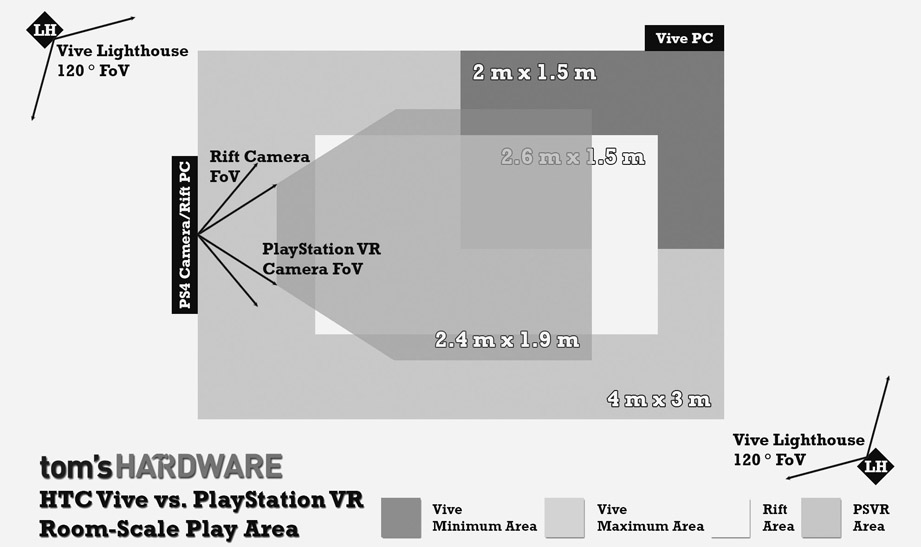

Figure 4.1 The human vestibular system and how it detects head movements – source: https://goo.gl/LTeKiK

The vestibular system is the sensory system that provides the leading contribution to the sense of balance and spatial orientation for the purpose of coordinating movement with balance.

The brain uses information from the vestibular system to understand the body’s position and acceleration from moment to moment. When this information conflicts directly with the information coming from the visual system, it can cause symptoms like nausea and vertigo. A fun yet unverified theory on why this conflict leads to nausea states that a long time ago (a few thousand years), humans were at risk of eating a lethal mushroom. Because the first symptoms of poisoning were vertigo and a dysfunctional vestibular system, our bodies learned to automatically “expel” whatever was in the stomach to get rid of the dangerous mushroom, hence the nausea. The danger is long gone, but our bodies remember, and this is why some people are subject to motion sickness.

In virtual reality, the participant’s field of vision is completely filled by the VR experience. When the participant moves in the virtual world while standing or sitting still in the real world, the conflict between what we see (we are moving in VR) and what we feel (we are static in the real world) leads to vertigo and nausea. This specific type of motion sickness is called virtual reality sickness.

How to avoid virtual reality sickness? Moving the camera (or the FPS character in the case of a game engine-based VR experience) while the participant stays still is the leading cause of virtual reality sickness. Here are a few basic rules to follow to prevent it:

- Absolutely no rotating/panning the camera. The left–right movements are left to the participants themselves, who can choose to look around or not.

- Absolutely no tilting the camera. The up–down movements are also left to the participants themselves, who can choose to look around or not.

- Make sure the horizon is level. If the horizon is tilted while the participant’s vestibular system reports that it should be flat, it can cause vertigo and loss of balance.

- If moving the camera, favor slow, motion-controlled movement. The hair cells in the inner ear can only detect acceleration, not steady movement. Acceleration is a change of speed, so if the camera speed is perfectly constant, the chances of motion sickness are reduced.

- Changes of direction are also a form of acceleration, so it is best to always move the camera in a straight line.
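The last two rules can be checked mechanically on a planned camera path. The Python sketch below samples camera positions once per frame and estimates acceleration with a finite difference; a change of speed or a change of direction both show up as non-zero acceleration. The function name and the 0.05 m/s² threshold are hypothetical choices for illustration, not an established comfort standard.

```python
import math

def flag_accelerating_frames(positions, dt, threshold=0.05):
    """Return indices of frames where the camera accelerates.

    positions: (x, y, z) camera positions in meters, one per frame.
    dt: time between frames in seconds.
    threshold: acceleration (m/s^2) above which a frame is flagged; a
               hypothetical comfort limit, not a published standard.
    """
    flagged = []
    for i in range(1, len(positions) - 1):
        prev, cur, nxt = positions[i - 1], positions[i], positions[i + 1]
        # Second-order central difference: a ~ (p[i+1] - 2*p[i] + p[i-1]) / dt^2.
        # A speed change or a change of direction both make this non-zero.
        accel = [(n - 2 * c + p) / dt ** 2 for p, c, n in zip(prev, cur, nxt)]
        if math.sqrt(sum(a * a for a in accel)) > threshold:
            flagged.append(i)
    return flagged

DT = 1 / 90  # one frame at 90Hz
# Constant speed in a straight line: 1cm per frame along -z.
straight = [(0.0, 0.0, -0.01 * i) for i in range(10)]
# Same speed, but the path turns 90 degrees at frame 4.
turn = [(0.0, 0.0, -0.01 * i) for i in range(5)] + [(0.01 * j, 0.0, -0.04) for j in range(1, 5)]

print(flag_accelerating_frames(straight, DT))  # []
print(flag_accelerating_frames(turn, DT))      # [4]
```

Even though the speed never changes on the second path, the corner is flagged, which is exactly why direction changes count as acceleration for the inner ear.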

In room-scale VR experiences, participants are free to move physically in the real world, and their movements are tracked and matched in the virtual world. If the latency is below a certain threshold, this can be entirely free of VR sickness, as the information coming from the vestibular and visual systems matches. For live-action VR, “motion-controlled chairs” inspired by 4D theaters (with seats programmed to match the exact movement on the screen) are a promising way to prevent motion sickness. The company Positron offers a motion simulator that matches the camera movement of each VR experience, but this type of solution is unfortunately too big and expensive to become a producer/consumer (“prosumer”) product, and is for now only available at location-based VR events like festivals or arcades.

Figure 4.2 Positron Voyager chair at the SXSW festival in 2017

Head-Mounted Displays (HMDs)

A head-mounted display, or HMD, is a device worn on the head or as part of a helmet, with a small display optic in front of the eyes. In Chapter 1, we saw that the history of VR is linked to and dependent on the development of HMD technology. In fact, the current VR craze started in 2012, when Oculus raised over US$2 million on Kickstarter to make its VR headset; the company was then bought by Facebook for about $2 billion in 2014. Smartphone technology, which made high-resolution OLED screens, accelerometers, and gyroscopes affordable, finally made a high-quality, six degrees of freedom VR interface accessible, kick-starting the booming VR industry as we know it today.

Basics

A VR HMD is usually made of two basic components: a high-definition screen to display stereoscopic content and head motion-tracking sensors. These sensors may include gyroscopes, accelerometers, cameras, etc. When participants move their heads to look in different directions, the sensors send the corresponding data to the VR player, which adjusts the view.

Virtual reality headsets have very strict latency requirements, latency being the time between a change in head position and seeing its effect on the display. If the system is too slow to react to head movement, the participant can experience virtual reality sickness, as explained above.

According to a Valve engineer, the ideal latency would be 7–15 milliseconds. A major component of this latency is the refresh rate of the high-resolution display. A refresh rate of 90Hz and above is required for VR.
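Since the display only updates once per refresh, the frame time (the inverse of the refresh rate) bounds how quickly a head movement can show up on screen. The sketch below treats the 15ms upper end of the range above as a simplified worst-case budget; real latency also includes sensing, rendering, and scan-out, which are not modeled here. `LATENCY_BUDGET_MS` is an illustrative name.

```python
def frame_time_ms(refresh_hz):
    """Time between two display refreshes, in milliseconds."""
    return 1000.0 / refresh_hz

# Upper end of the 7-15ms latency range quoted above.
LATENCY_BUDGET_MS = 15.0

for hz in (60, 90, 120):
    ft = frame_time_ms(hz)
    share = 100 * ft / LATENCY_BUDGET_MS
    print(f"{hz}Hz: {ft:.1f}ms per frame ({share:.0f}% of a {LATENCY_BUDGET_MS:.0f}ms budget)")
```

At 60Hz a single frame (about 16.7ms) already exceeds the 15ms budget, while 90Hz (about 11.1ms) leaves some room for the rest of the pipeline.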

Figure 4.3 OSVR Hacker Dev Kit (2015)

There are two types of VR headset: mobile VR and high-end “tethered” VR. Mobile VR uses a smartphone as both the screen and the computing power for the headset; it is currently only capable of three degrees of freedom. The best-known mobile VR solutions are the Samsung Gear VR, compatible with selected Samsung phones, and Google Cardboard and Daydream. High-end “tethered” VR uses an external computer and cameras/sensors for positional tracking to allow six degrees of freedom. The best-known tethered VR headsets are the Oculus Rift and HTC Vive, which use a Windows PC with a high-performance graphics card for computing power, and the PlayStation VR, which uses the PlayStation 4 and subsequent models. Figure 4.4 lists the most widely used VR headsets at the time of writing.

A third category is worth mentioning: stand-alone VR headsets, which will feature internal computing capabilities and inside-out tracking to achieve six degrees of freedom. Many headset manufacturers are currently working on this technology, and we can expect a new generation of VR headsets to reach the market in the next two or three years.

Tracking

When it comes to presence, accurate sub-millimeter tracking is the most important feature after the visuals: it makes the visuals in VR appear as they would in reality, no matter where your head is positioned. Different technologies are used separately or together to track movement with either three or six degrees of freedom. In the first case (pan, roll, tilt), it is called “head tracking”; in the second case (pan, roll, tilt, front/back, left/right, and up/down), it is called “positional tracking.” The IMU, or inertial measurement unit, is an electronic sensor composed of accelerometers, gyroscopes, and magnetometers. It measures a device’s acceleration, rotation rate, and orientation relative to gravity and magnetic north. In virtual and augmented reality, an IMU is used to perform rotational tracking for HMDs: it measures the rotational movements of pitch, yaw, and roll, hence “head tracking.”
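The difference between the two cases can be sketched in a few lines of Python. A 3DoF system only has an orientation, so the virtual camera stays put when the participant leans; a 6DoF system also applies the tracked position. The axis convention (y up, looking along -z, positive yaw turning left) and the function names are assumptions for illustration, not any particular SDK’s API.

```python
import math

def forward_vector(yaw, pitch):
    """Viewing direction for a head orientation given in radians.

    Assumed convention: y is up, the participant initially looks along -z,
    positive yaw (pan) turns left, positive pitch (tilt) looks up. Roll turns
    the image around the viewing axis, so it does not change this vector.
    """
    return (-math.sin(yaw) * math.cos(pitch),
            math.sin(pitch),
            -math.cos(yaw) * math.cos(pitch))

def camera_pose(orientation, position, dof):
    """Return the (orientation, position) a renderer would use.

    orientation: (yaw, pitch, roll) from the IMU ("head tracking").
    position: (x, y, z) in meters from positional tracking.
    With only 3 degrees of freedom there is no position data, so the
    virtual camera stays at the origin whatever the head does.
    """
    if dof == 3:
        return orientation, (0.0, 0.0, 0.0)
    if dof == 6:
        return orientation, position
    raise ValueError("dof must be 3 or 6")

# The participant turns 90 degrees left and leans 10cm forward.
head_orientation = (math.pi / 2, 0.0, 0.0)
head_position = (-0.10, 0.0, 0.0)  # after the turn, "forward" is -x

print(forward_vector(*head_orientation[:2]))
print(camera_pose(head_orientation, head_position, 3))
print(camera_pose(head_orientation, head_position, 6))
```

Both systems turn the view correctly, but only the 6DoF one moves the camera when the participant leans in.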

The lack of positional tracking on mobile VR systems (they only do head tracking) is one of the reasons they lack the heightened sense of presence that high-end VR offers. Positional tracking currently relies on external sensors (like the Oculus Rift’s camera), which is not feasible on a mobile device.

Accelerometer and Gyroscope

An accelerometer measures proper acceleration, that is, acceleration relative to free fall. For example, an accelerometer at rest on the surface of the Earth measures an upward acceleration of about 9.81 m/s² (1 g) due to Earth’s gravity. In tablet computers, smartphones, and digital cameras, accelerometers are used so that images on screens are always displayed upright. Accelerometers are also used in drones for flight stabilization.
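Keeping a screen upright works because, at rest, the accelerometer reading is just the gravity vector expressed in the device’s own axes. The Python sketch below recovers the tilt from that vector; the axis convention (x right, y towards the top edge, z out of the screen) and the function name are assumptions for illustration.

```python
import math

def tilt_from_gravity(ax, ay, az):
    """Estimate a device's tilt (degrees) from its accelerometer while at rest.

    At rest, the accelerometer reads only the reaction to gravity: about
    9.81 m/s^2 pointing "up" in the device's own axes. Assumed axes: x to the
    right of the screen, y towards its top edge, z out of the screen.
    Returns (rotation about x, rotation about y). Heading (rotation about the
    vertical) cannot be recovered this way, because gravity does not change with it.
    """
    about_x = math.degrees(math.atan2(ay, az))
    about_y = math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))
    return about_x, about_y

print(tilt_from_gravity(0.0, 0.0, 9.81))   # lying flat, screen up
print(tilt_from_gravity(0.0, 9.81, 0.0))   # standing upright in portrait
```

The blind spot in the docstring (no heading) is one reason accelerometers are paired with gyroscopes and magnetometers.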

Originally, a gyroscope was a spinning wheel or disc whose axis of rotation is free to assume any orientation by itself. It is the same principle that is used in Steadicams. While the wheel spins, the orientation of its axis is unaffected by tilting or rotation of the mounting, according to the conservation of angular momentum. Gyroscopes are therefore useful for measuring or maintaining orientation. Integrating the gyroscope into consumer electronics has allowed for more accurate recognition of movement in 3D space than the accelerometer alone, which was previously the only motion sensor in a number of smartphones. Gyroscopes and accelerometers are combined in smartphones and most VR headsets to obtain more robust direction and motion sensing. Together, an accelerometer and a gyroscope can achieve accurate head tracking.
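The gyroscopes in phones and headsets are tiny MEMS sensors that report a rotation rate, which must be integrated to get an angle, so any small bias slowly accumulates into drift. The accelerometer gives a drift-free but noisy tilt angle from gravity. A complementary filter is one simple way to combine the two; the sketch below is a generic illustration of that technique, not the fusion algorithm of any specific headset, and the 0.98 weight and 0.5°/s bias are made-up values.

```python
def integrate_gyro(rates_dps, dt, start_deg=0.0):
    """Angle from integrating gyroscope rates alone: smooth, but any bias accumulates."""
    angle = start_deg
    for rate in rates_dps:
        angle += rate * dt
    return angle

def complementary_filter(rates_dps, accel_angles_deg, dt, alpha=0.98, start_deg=0.0):
    """Blend gyroscope and accelerometer estimates of one tilt angle.

    Each step trusts the gyroscope for fast changes (weight alpha) and pulls
    the estimate slightly towards the accelerometer's gravity-based angle
    (weight 1 - alpha), which is noisy but does not drift. alpha is a tuning
    assumption, not a value from any particular headset.
    """
    angle = start_deg
    for rate, accel_angle in zip(rates_dps, accel_angles_deg):
        angle = alpha * (angle + rate * dt) + (1 - alpha) * accel_angle
    return angle

# A headset sitting still for 10s, sampled at 100Hz, whose gyroscope has a
# constant bias of 0.5 deg/s (a made-up figure for illustration).
dt = 0.01
gyro = [0.5] * 1000
accel = [0.0] * 1000
print(f"gyro only: {integrate_gyro(gyro, dt):.2f} deg")
print(f"fused:     {complementary_filter(gyro, accel, dt):.2f} deg")
```

The gyro-only estimate drifts by 5° in ten seconds, while the fused estimate settles at a small constant offset instead of growing.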

Figure 4.5 The components of an IMU: accelerometer, gyroscope, and magnetometer

Magnetometers

A magnetometer is a device that measures magnetic fields. It can act as a compass by detecting magnetic north, and can therefore tell which direction it is facing on the surface of the Earth. Magnetometers in smartphones are not used for tracking purposes (they are not accurate enough), but some developers have repurposed the magnetometer for use with the Google Cardboard: when a magnetic ring is slid up and down over another magnet, the resulting fluctuation in the field is registered as a button click.
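How Google’s own code detects the Cardboard magnet is not described here, so the Python sketch below is a hypothetical approach: it tracks the slowly changing background field (mostly Earth’s, changing as the head turns) with a moving average and reports a click when the field magnitude jumps well above it. The threshold, smoothing factor, and cooldown are made-up tuning values.

```python
import math

def detect_magnet_clicks(samples, threshold=30.0, cooldown_samples=10):
    """Find Cardboard-style magnet "clicks" in magnetometer readings.

    samples: (mx, my, mz) readings in microtesla, in time order.
    threshold: jump in field magnitude (uT) that counts as a click (hypothetical).
    cooldown_samples: readings ignored after a click so one pull counts once.
    Returns the indices of the samples where clicks start.
    """
    clicks = []
    baseline = None
    cooldown = 0
    for i, (mx, my, mz) in enumerate(samples):
        magnitude = math.sqrt(mx * mx + my * my + mz * mz)
        if baseline is None:
            baseline = magnitude
        elif cooldown > 0:
            cooldown -= 1  # still inside a click: don't let it pollute the baseline
        elif abs(magnitude - baseline) > threshold:
            clicks.append(i)
            cooldown = cooldown_samples
        else:
            # Exponential moving average follows slow changes as the head turns.
            baseline = 0.95 * baseline + 0.05 * magnitude
    return clicks

calm = [(0.0, 50.0, 0.0)]    # roughly Earth-strength field
pulled = [(0.0, 150.0, 0.0)]  # magnet slid into place
readings = calm * 20 + pulled * 5 + calm * 20 + pulled * 5 + calm * 5
print(detect_magnet_clicks(readings))
```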

Laser Sensors

Valve’s Lighthouse positional tracking system, used by the HTC Vive headset and its controllers, relies on lasers. Two base stations placed around the room sweep the area with flashing lasers.

The HTC Vive headset and SteamVR controllers are covered in small sensors that detect these lasers as they go by. When a flash occurs, the headset simply starts counting until it “sees” which of its photosensors gets hit by a laser beam, and uses the relationship between where that photosensor sits on the headset and when the beam hit it to mathematically calculate its exact position relative to the base stations in the room.
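Because the laser rotates at a constant speed, the time between the sync flash and the hit converts directly into an angle, and a horizontal plus a vertical sweep give a direction from the base station to that photosensor. The Python sketch below assumes a rotor turning 60 times per second and a convention where 90° means straight ahead; both are illustrative assumptions, and combining many such rays into a full pose is not shown.

```python
import math

ROTOR_HZ = 60.0  # assumed sweep rate: the laser rotor turns 60 times per second

def sweep_angle_deg(seconds_since_sync, rotor_hz=ROTOR_HZ):
    """Angle the rotating laser had reached when it hit a photosensor.

    The base station flashes when a sweep starts; the photosensor times how
    long the laser takes to reach it. The rotor turns at a constant speed,
    so elapsed time converts directly into an angle.
    """
    return 360.0 * rotor_hz * seconds_since_sync

def direction_to_sensor(horizontal_deg, vertical_deg):
    """Unit vector from the base station towards the photosensor.

    Hypothetical convention: each sweep angle is measured from the start of
    its sweep, so 90 degrees means straight ahead (+z). The horizontal sweep
    constrains x/z and the vertical sweep constrains y/z.
    """
    tx = math.tan(math.radians(horizontal_deg - 90.0))
    ty = math.tan(math.radians(vertical_deg - 90.0))
    norm = math.sqrt(tx * tx + ty * ty + 1.0)
    return (tx / norm, ty / norm, 1.0 / norm)

# Hit 1/240s after the horizontal sync flash: a quarter turn, i.e. 90 degrees.
h = sweep_angle_deg(1 / 240)
# Hit 1/160s after the vertical sync flash: 135 degrees.
v = sweep_angle_deg(1 / 160)
print(h, v, direction_to_sensor(h, v))
```

The sensor in this example lies straight ahead horizontally and 45° above the base station’s axis.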

Hit enough of these photosensors with a laser at once, and they form a “pose”: a 3D shape that tells you not only where the headset is, but also the direction it is facing. No camera-based optical tracking is required; it is all about timing.

The system cleverly integrates all of these data to determine the rotation of the devices and their position in 3D space, aided by high-speed on-board IMUs in each device. This system is extremely accurate: according to Oliver “Doc-Ok” Kreylos (PhD in Computer Science), with both base stations tracking the Vive headset, the jitter of the system is around 0.3mm. This means that the headset appears to jump around within a sphere about 0.3mm across, even though in reality it is sitting absolutely still. Fortunately, this sub-millimeter jitter is so small that it goes completely unnoticed by our visual system and brain. Although the base stations need to be synced together (optically, or via the included sync cable) and require power, they are not connected to your PC or the HMD. Unlike camera-based systems, which track markers on the headset from the outside, here the HMD itself collects the data and sends it, along with information from the IMUs, to the PC to be processed.
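A jitter figure like the one above can be estimated by logging the reported position of a motionless headset and measuring how widely the samples spread. The Python sketch below uses the sphere centered on the average position as a simple approximation (not the smallest enclosing sphere); the sample log is made up for illustration.

```python
import math

def jitter_diameter_mm(samples_mm):
    """Approximate jitter of a tracker from positions logged while it sits still.

    Returns the diameter of the sphere, centered on the average position, that
    contains every sample. This is a simple approximation, not the smallest
    possible enclosing sphere.
    """
    n = len(samples_mm)
    center = tuple(sum(s[axis] for s in samples_mm) / n for axis in range(3))
    radius = max(math.dist(s, center) for s in samples_mm)
    return 2.0 * radius

# Hypothetical log of a motionless headset, in millimeters.
log = [(0.15, 0.0, 0.0), (-0.15, 0.0, 0.0), (0.0, 0.1, 0.0), (0.0, -0.1, 0.0)]
print(f"jitter: {jitter_diameter_mm(log):.2f}mm")
```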

Visible Light

The PlayStation VR headset uses a “visible light” system for positional tracking: the participant wears a headset with nine LED lights on it, and the PlayStation camera tracks them. A significant difference from single-camera systems is that the PlayStation camera has two cameras for stereo depth perception. The camera can only see items within its cone-shaped field of view, and occlusion or lighting can sometimes cause problems (strong natural lighting in the room, TV screens, and reflective surfaces like mirrors). Although the PlayStation VR headset has only nine LEDs, they are shaped in such a way that the camera can determine their orientation, and stereo depth perception can work out their position.

Figure 4.7 The PlayStation VR and its nine LED lights

The PlayStation VR also features an IMU for accurate head tracking.

Infrared Light

A series of 20 infrared LEDs embedded in the Oculus Rift headset forms the basis of what Oculus calls the Constellation Tracking System. These markers, laid out almost like a constellation, are picked up by the Oculus sensors, which are designed to detect the light of the markers frame by frame. These frames are then processed by Oculus software on your computer to determine where the headset is in space.

Just like the visible light tracking system, infrared positional tracking can suffer badly from occlusion: if anything gets between too many infrared markers and a Rift sensor, the markers are blocked, or occluded, and tracking becomes impossible. It is now possible to use up to three cameras/sensors to avoid this issue and make the Oculus Rift “room-scale.” The tracking range of a camera is limited by both its optics and its image resolution: beyond a certain distance, the headset is too far away for the camera to resolve enough detail to track it accurately. There is also a dead zone of 90cm in front of the camera where it cannot track the HMD, probably because it cannot focus properly on the headset. The headset also contains a gyroscope, accelerometer, and magnetometer that track its 360° orientation.
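The resolution limit can be made concrete with a pinhole camera model: the number of pixels the headset covers in the image falls in proportion to its distance. The Python sketch below uses hypothetical figures (a 20cm-wide headset, a 1280-pixel-wide sensor, a 90° field of view), not the Rift sensor’s specifications.

```python
import math

def pixels_across(object_width_m, distance_m, image_width_px, horizontal_fov_deg):
    """Approximate width, in pixels, of an object seen by a camera (pinhole model)."""
    # Width of the scene covered by the image at that distance.
    view_width_m = 2.0 * distance_m * math.tan(math.radians(horizontal_fov_deg) / 2.0)
    return object_width_m / view_width_m * image_width_px

# Hypothetical figures: a 20cm-wide headset, a 1280-pixel-wide sensor, 90 degree FoV.
for d in (1.0, 2.0, 4.0):
    print(f"{d:.0f}m: {pixels_across(0.20, d, 1280, 90.0):.0f} px")
```

Each doubling of the distance halves the pixels available to locate the headset’s markers, until there are too few to track accurately.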

Magnetic Tracking

Magnetic tracking relies on measuring the intensity of the magnetic field in various directions. There is typically a base station that generates AC, DC, or pulsed DC excitation. As the distance between the measurement point and the base station increases, the strength of the magnetic field decreases. If the measurement point is rotated, the distribution of the magnetic field across the various axes changes, which allows its orientation to be determined as well. Magnetic tracking has been implemented in several products, such as the Razer Hydra VR controllers.

Magnetic tracking accuracy can be good in controlled environments (the Hydra specs are 1.0mm positional accuracy and 1° rotational accuracy), but magnetic tracking is subject to interference from conductive materials near the emitter or sensor and from magnetic fields generated by other electronic devices.

Figure 4.9 The Razer Hydra controllers and base station

Optical Tracking

Fiducial markers: A camera tracks markers such as predefined patterns or QR codes. The camera recognizes each marker, and if multiple markers are placed in known positions, the position and orientation of the tracked object can be calculated. An earlier version of the StarVR headset used this method.

Figure 4.10 An earlier version of the StarVR headset with fiducial markers

Active markers: An active optical system is commonly used for mocap (motion capture). It triangulates positions either by illuminating one LED at a time very quickly, or by lighting multiple LEDs and using software to identify them by their relative positions (one group of LEDs for a VR headset, another for a controller, etc.). This system has a high signal-to-noise ratio, resulting in very low marker jitter and, in turn, high measurement resolution (often down to 0.1mm within the calibrated volume). The company PhaseSpace uses active markers for its VR positional tracking technology. The new version of the StarVR headset uses this system in combination with an inertial sensor (IMU).
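Identifying LEDs is what makes active markers robust. One illustrative scheme, assumed here rather than taken from PhaseSpace or StarVR documentation, is for each LED to blink its own binary code over a few camera frames; reading each light spot’s on/off sequence then reveals which LED it is. The data and names in this Python sketch are hypothetical, and it assumes the spots have already been followed from frame to frame.

```python
def decode_blink_ids(frames, code_length):
    """Identify active LED markers from the on/off codes they blink.

    frames: one dict per camera frame, mapping a tracked light spot to True
            if it was lit in that frame, False otherwise.
    code_length: number of frames in each LED's code.
    Returns {spot: marker_id}, reading the code most significant bit first.
    """
    if len(frames) < code_length:
        raise ValueError("not enough frames to read a full code")
    ids = {}
    for spot in frames[0]:
        marker_id = 0
        for frame in frames[:code_length]:
            marker_id = (marker_id << 1) | int(frame[spot])
        ids[spot] = marker_id
    return ids

# Two light spots observed over three frames (hypothetical data).
observed = [
    {"spot_a": True,  "spot_b": False},
    {"spot_a": False, "spot_b": True},
    {"spot_a": True,  "spot_b": True},
]
print(decode_blink_ids(observed, 3))
```

Once every spot has an ID, the software knows which LEDs belong to the headset and which to a controller, and can triangulate each one from several cameras.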

Structured Light

Structured light is the process of projecting a known pattern (often grids or horizontal bars) onto a scene. The way the light deforms when striking surfaces allows vision systems to calculate the depth and surface information of the objects in the scene, as in structured light 3D scanners. No current VR headset uses this technology, but Google’s augmented reality solution “Project Tango” does.

Figure 4.12 Structured light 3D scanning – photo credit: Gabriel Taubin / Douglas Lanman

Inside-Out Tracking and Outside-In Tracking

All of the current VR headsets with positional tracking use an “outside-in” tracking method, in which tracking cameras are placed in the external environment with the tracked device (HMD) within their view. Outside-in tracking requires complex and often expensive equipment: the HMD, one or more sensors/cameras, a computing station, and connectivity between all the elements. Inside-out tracking, on the other hand, places the tracking camera/sensor on the HMD itself, which observes its surroundings to determine its own position in real time, like Google’s “Project Tango.”

Most VR headset manufacturers are currently developing inside-out tracking for their HMDs, including Oculus, as announced at the Oculus Connect 3 event in 2016. Outside-in tracking, by contrast, is limited to the area covered by the cameras/sensors. Figure 4.13 compares the positional tracking “play area” of three of the headsets mentioned above.

The StarVR uses active marker technology, which means the play area is scalable depending on the number of cameras used to track the headset, up to 30x30 meters.

Visual Quality

The different display technologies and optics each headset uses greatly impact image quality, as well as the feeling of immersion. There are four important elements when it comes to high-end VR: display resolution, optics quality, refresh rate, and field of view.

Resolution

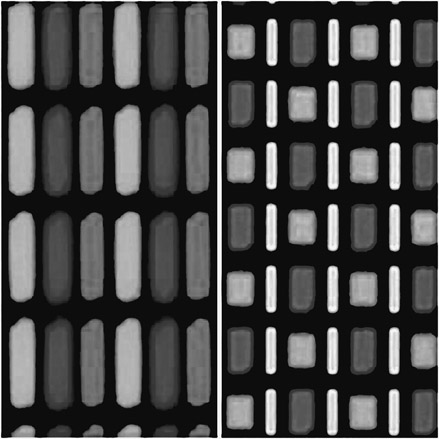

Both the Rift and the Vive have a resolution of 1080x1200 pixels per eye, while the PlayStation VR has 960x1080 pixels per eye; however, its screen’s RGB (red, green, blue) stripe matrix is superior to the Samsung PenTile matrix of the other HMD displays.

On a PenTile matrix screen, each pixel has only two subpixels instead of three, and there are twice as many green subpixels as red or blue ones. This is why the PlayStation VR’s perceived resolution is similar to that of the Vive or Rift. The StarVR headset, however, has a whopping resolution of 5120x1440 pixels, but its field of view is also much larger (210°), so its resolution per degree is supposedly higher (this has not been confirmed by the manufacturer). Human vision has an angular resolution of 70 pixels per degree, while current VR headsets usually offer around 10 pixels per degree, which explains why people often complain about “seeing the pixels.” Because a single display is magnified and stretched across a wide field of view, its flaws become much more apparent.
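The arithmetic behind these comparisons fits in a short Python sketch. It assumes two subpixels per pixel for PenTile and three for an RGB stripe, and estimates pixels per degree naively as horizontal pixels per eye divided by horizontal field of view, ignoring lens distortion and the overlap between the eyes. The function names are illustrative.

```python
# Subpixels per pixel for the two layouts discussed above.
SUBPIXELS_PER_PIXEL = {"rgb_stripe": 3, "pentile": 2}

def subpixel_count(width_px, height_px, layout):
    """Total number of subpixels on one eye's display."""
    return width_px * height_px * SUBPIXELS_PER_PIXEL[layout]

def pixels_per_degree(horizontal_px_per_eye, horizontal_fov_deg):
    """Average angular resolution; a naive estimate that ignores lens distortion."""
    return horizontal_px_per_eye / horizontal_fov_deg

vive_or_rift = subpixel_count(1080, 1200, "pentile")  # 1080x1200 per eye
psvr = subpixel_count(960, 1080, "rgb_stripe")        # 960x1080 per eye
print(vive_or_rift, psvr)
print(f"{pixels_per_degree(1080, 100):.1f} pixels per degree")
# Horizontal pixels per eye needed to reach 70 pixels per degree over 100 degrees:
print(70 * 100)
```

Despite its lower pixel count, the PlayStation VR panel ends up with 20% more subpixels per eye (3,110,400 against 2,592,000), and matching human acuity over a 100° field of view would take about 7,000 horizontal pixels per eye.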

For mobile VR, the resolution depends on the smartphone used in the headset. The Pixel XL (compatible with Daydream) has a resolution of 2560x1440 pixels, while the Samsung Galaxy S8 (compatible with Samsung Gear VR) has 2960x1440 pixels. These numbers can be misleading: mobile VR seems to have a higher resolution than high-end “tethered” VR headsets, but it will not necessarily offer a better visual presentation. Even the cheapest compatible graphics card in the Rift’s connected PC will allow for a much richer graphical environment than you will typically find in Gear VR games or apps.

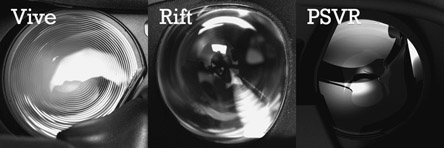

Lenses

The choice of lenses for VR headsets determines the final field of view as well as the image quality. Because the screen is placed very close to the eyes, the lenses must have a short focal length to magnify it. Some headset manufacturers, like HTC and Oculus, have opted for Fresnel lenses. A Fresnel lens reduces the amount of material required compared to a conventional lens by dividing the lens into a set of concentric annular sections.

Figure 4.15 HMD lens comparison – source: https://goo.gl/JY1Jwp

The Oculus Rift’s lenses are hybrid Fresnel lenses with very fine ridges combined with a regular convex lens, which reduces the “I can see pixels” problem. The Rift’s hybrid lenses also have a larger sweet spot and more consistent focus across their visual field, meaning they are more forgiving about how you position the HMD in front of your eyes. Fresnel lenses can help achieve a wider field of view, but the ridges of the lens can become visible in high-contrast scenes, even more so when the headset is not perfectly aligned with the eyes.

The PlayStation VR, on the other hand, has opted for a regular lens and is capitalizing on the quality of its screen instead. VR headset lenses often produce significant distortion and chromatic aberration, which have to be compensated for in the VR player itself.

Refresh Rate

As stated above, a refresh rate of 90Hz is a requirement for virtual reality in order to limit the effects of virtual reality sickness and offer a heightened sense of presence. Unfortunately, most of the mobile VR headsets are limited to 60Hz. PlayStation VR, on the other hand, offers a 120Hz refresh rate.

Field of View

There are no discernible differences between the horizontal field of view (FoV) of the HTC Vive, the Oculus Rift, the mobile VR headsets, and the PlayStation VR, which are all announced at approximately 100°, while the horizontal FoV of human vision, including peripheral vision, is about 220°. This means that VR headsets cover only about half of our normal vision, as if we were wearing blinders, which greatly impacts the sense of presence. However, the StarVR and an upcoming Panasonic VR headset use a double-Fresnel design allowing for a super-wide field of view of approximately 210° horizontally.

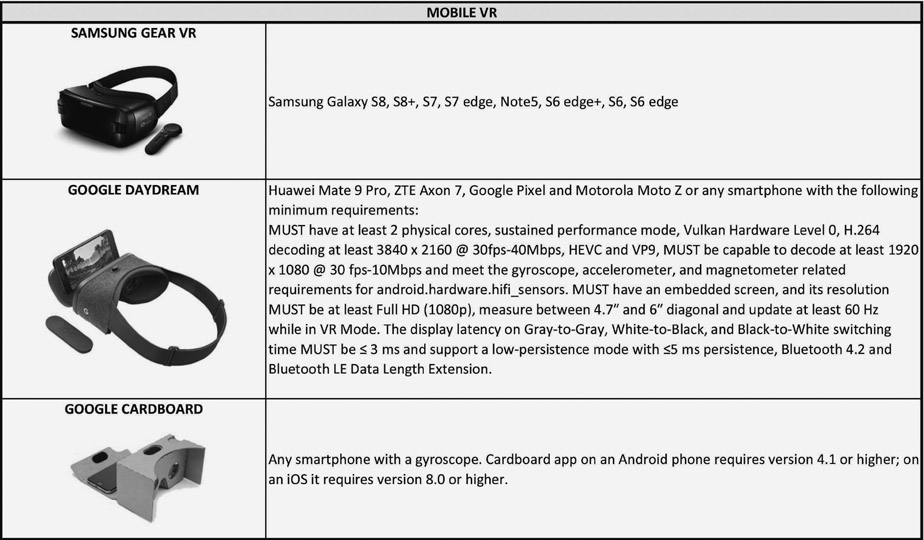

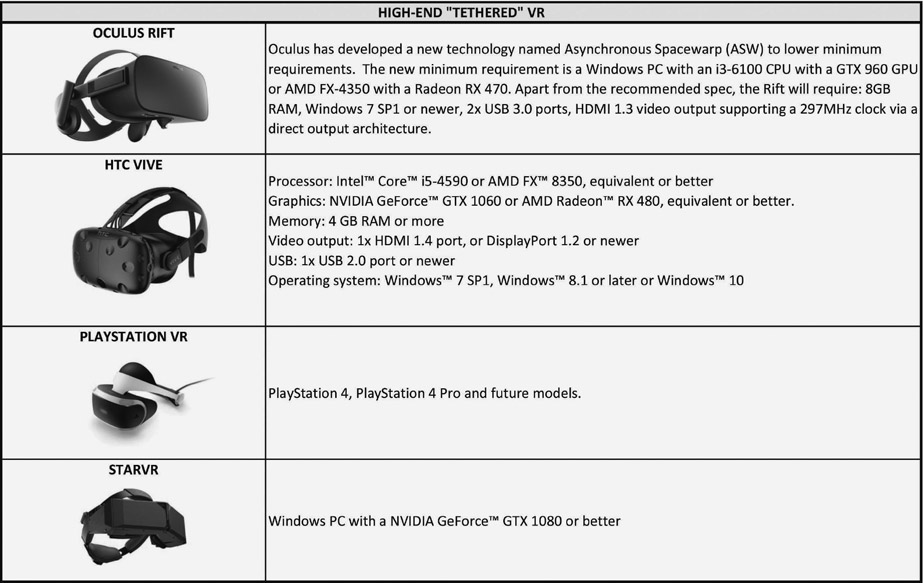

Minimum PC/Mobile Requirements

Most mobile VR headsets are compatible only with a few specific smartphones, and high-end tethered headsets require specific graphics cards and computing power. The exception is headsets such as the affordable Google Cardboard, which are compatible with most current smartphones as long as they have an IMU for head tracking.

Steve Schklair, Founder, 3ality Technica and 3mersiv

What’s limiting a lot of film and television professionals from getting into VR is simply the quality of the image in the mobile viewing device. Mass market displays look terrible. People who have never worn a headset, after they get over the shock of being able to look around and see everything and think it’s so cool, their first question is why are the images so fuzzy? It’s not fuzzy, there’s just lack of resolution. The fact is, you can see all the pixels. That’s got to go away.

Figure 4.17 Minimum mobile requirements for mobile VR HMDs

Current minimum requirements for VR headsets are shown in Figure 4.18.

Figure 4.18 Minimum PC requirements for high-end VR HMDs

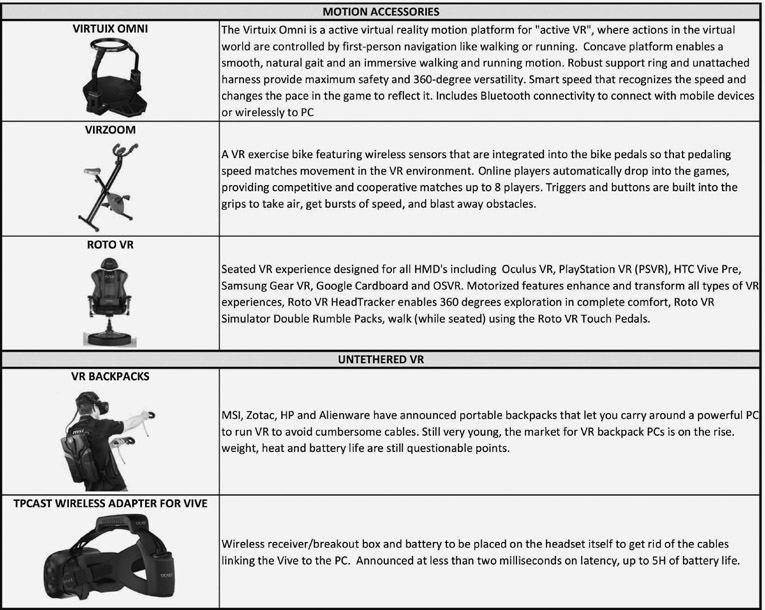

Controllers and Accessories

Controllers and accessories can make virtual reality even more immersive by improving the way we interact with the virtual environment. Hand tracking and the ability to easily manipulate virtual objects are of the utmost importance. Treadmills, haptic vests, and wireless solutions for the high-end VR market are booming. Figures 4.20 to 4.24 give a short list of controllers and accessories available as of the first quarter of 2017. The list is likely to grow quickly as new VR headset solutions hit the market.

Figure 4.22