By the time the first Web 2.0 conversations started, the first incarnations of MP3.com and Napster were both effectively history. Neither of them was particularly well liked by the music industry, for reasons that feed into Web 2.0 but aren’t critical to the comparison between them. Their business stories share a common thread: a major shift in the way music is distributed. The way they went about actually transferring music files was very different, however, mirroring the Akamai/BitTorrent story in many ways.

Some of the technical patterns illustrated by this comparison are:

Service-Oriented Architecture

Software as a Service

Participation-Collaboration

The Synchronized Web

Collaborative Tagging

Declarative Living and Tag Gardening

Persistent Rights Management

You can find more information on these patterns in Chapter 7.

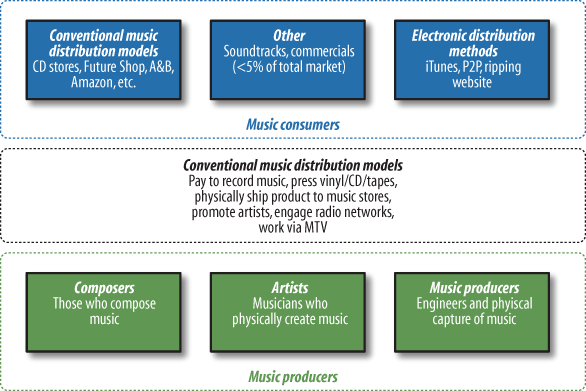

The music industry has historically been composed of three main groups: those who create music (writing, recording, or producing it); those who consume it; and those who are part of the conventional recording and music distribution industry, who sit in the middle (see Figure 3-8).

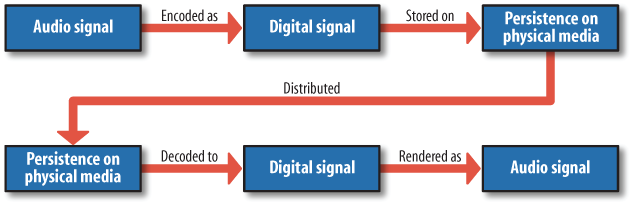

Historically, music publishing and distribution has been done via physical media, from 78s to CDs. If you abstract the pattern of this entire process, you can easily see that the storage of music on physical media is grossly inefficient (see Figure 3-9).

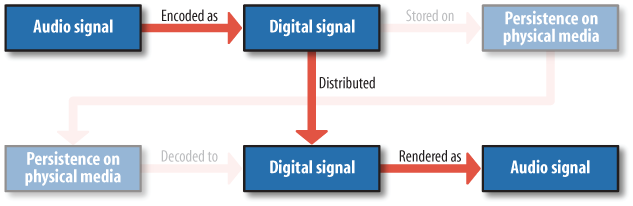

Figure 3-9 contains two “Digital Signal” points in the sequence. Persisting the signal to some form of physical storage medium is unnecessary for people who are capable of working directly with the digital signal. If the source provides a digital signal, that signal can travel to its ultimate target in digital form, and the media is consumed as a digital signal, then why would it make sense to use a physical storage medium (such as CD, vinyl, or tape) as an intermediate step? The shift to digital MP3 files has made the middle steps unnecessary. Figure 3-10 depicts a simpler model that has many advantages, except to those whose business models depend on physical distribution.

For instance, this new pattern is better for the environment, because it does not involve turning petroleum products into records and CDs, or transporting physical goods thousands of miles. It satisfies people’s cravings for instant gratification, and it lets consumers store the music on physical media if they want, by burning CDs or recording digital audio tapes.

The old model also had one massive stumbling block: it arguably suppressed a large percentage of artists. For a conventional record company to sign a new artist, it must make a substantial investment in that artist. This covers costs associated with such things as recording the music, building the die for pressing it into physical media, and printing CD case covers, as well as the costs associated with manufacturing and distributing the media. The initial costs are substantial: even an artist who perhaps produces only 250,000 CDs may cost a record company $500,000 to sign initially. This doesn’t include the costs of promoting the artist or making music videos. Estimates vary significantly, but it’s our opinion that as a result, the conventional industry signs only one out of every 10,000 artists or so. If a higher percentage were signed, it might dilute each artist’s visibility and ability to perform. After all, there are only so many venues and only so many people willing to go to live shows.

An industry size issue compounds this problem. If the global market were flooded with product, each artist could expect to capture a certain portion of that market. For argument’s sake, let’s assume that each artist garners 1,000 CD sales on average. Increasing the total number of artists would cause each artist’s share of the market to decrease. For the companies managing the physical inventory, it’s counterproductive to have too much product available in the marketplace. As more products came to market, the dilution factor would impact sales of existing music to the point where it might jeopardize the record company’s ability to recoup its initial investment in each artist.
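The dilution argument can be made concrete with a few lines of arithmetic. The sketch below assumes a fixed total market, which is a simplification: the figure of 10,000 artists is a hypothetical value chosen only so that the average works out to the 1,000 CDs per artist used above, and the function name is ours.

```python
def average_sales_per_artist(total_market_units: int, artist_count: int) -> float:
    """Average unit sales per artist when a fixed market is split evenly."""
    if artist_count <= 0:
        raise ValueError("artist_count must be positive")
    return total_market_units / artist_count


# Assumption: 10,000 artists at 1,000 CDs each gives a fixed market of 10,000,000 units.
FIXED_MARKET_UNITS = 10_000 * 1_000

baseline = average_sales_per_artist(FIXED_MARKET_UNITS, 10_000)
after_doubling = average_sales_per_artist(FIXED_MARKET_UNITS, 20_000)
print(baseline, after_doubling)
```

Under these assumptions, doubling the number of artists halves the average sales per artist, which is the effect that makes recouping a fixed up-front investment harder.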

Note

Love’s Manifesto, a speech given by Courtney Love during a music conference, illuminates several of the problems inherent in the music industry today and is a brilliant exposé of what is wrong with the industry as a whole (pun intended) and the realities faced by artists. You can read the speech online at http://www.indie-music.com/modules.php?name=News&file=article&sid=820.

Producers and online distributors of digital music benefit from two major cost reductions. In addition to not having to deal with physical inventory and all its costs, they also offload the cost of recording music onto the artists, minimizing some of the risk associated with distributing the work of previously unsigned bands. These companies often adopt a more “hands off” approach. Unlike conventional record companies, online MP3 retailers can easily acquire huge libraries of thousands of new, previously unsigned artists. They don’t need to censor whose music they can publish based on their perceptions of the marketplace, because adding tracks to their labels poses minimal risk. (They do still face some of the same legal issues as their conventional predecessors, though—notably, those associated with copyright.)

This approach also has significant benefits for many artists. Instead of having to convince a record company that they’ll sell enough music to make the initial outlay worthwhile, new independent artists can go directly to the market and build their own followings, demonstrating to record companies why they’re worth signing. AFI, for example, was the first MySpace band to receive more than 500,000 listens in one day. Self-promotion and building up their own followings allow clever artists to avoid record companies while still achieving some success.

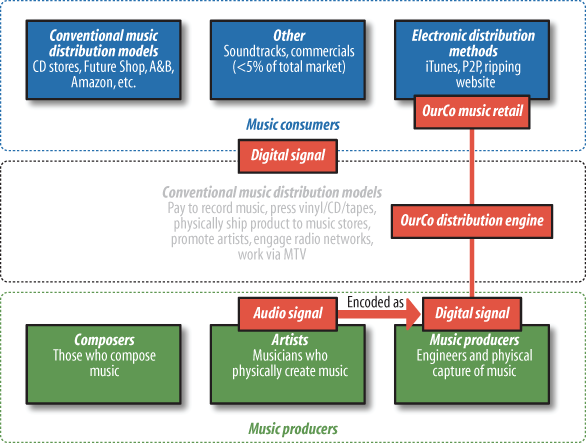

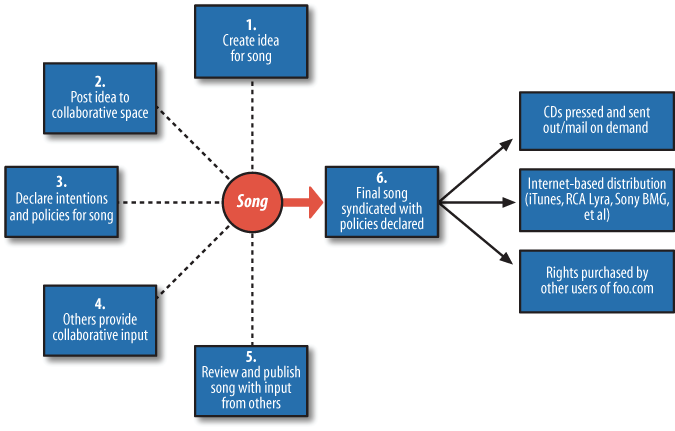

In this model, artists become responsible for creating their own music. Once they have content, they may publish their music via companies such as Napster and MP3.com. Imagine a fictional company called OurCo. OurCo can assimilate the best of both the old and the new distribution models and act as a private label distribution engine, as depicted in Figure 3-11.

When analyzing P2P infrastructures, we must recognize the sophistication of the current file-sharing infrastructures. The concepts of a web of participation and collaboration form the backbone of how resources flow and stream in Web 2.0. Napster is a prime example of how P2P networks can become popular in a short time and—in stark contrast to MP3.com—can embrace the concepts of participation and collaboration among users.

MP3.com, started because its founder realized that many people were searching for “mp3,” was originally launched as a website where members could share their MP3 files with each other.

Note

The original MP3.com ceased to operate at the end of 2003. CNET now operates the domain name, supplying artist information and other metadata regarding audio files.

The first iteration of MP3.com featured charts defined by genre and geographical area, as well as statistical data for artists indicating which of their songs were more popular. Artists could subscribe to a free account, a Gold account, or a Platinum account, each providing additional features and stats. Though there was no charge for downloading music from MP3.com, people did have to sign up with an email address, and online advertisements were commonplace across the site. Although MP3.com hosted songs from known artists, the vast majority of the playlist comprised songs by unsigned or independent musicians and producers. Eventually MP3.com launched “Pay for Play,” which was a major upset to the established music industry. The idea was that each artist would receive payments based on the number of listens or downloads from the MP3.com site.

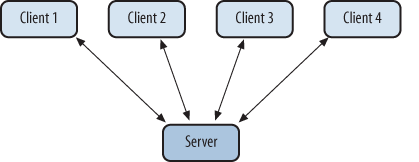

The original technical model that MP3.com employed was a typical client/server pattern using a set of centralized servers, as shown in Figure 3-13.

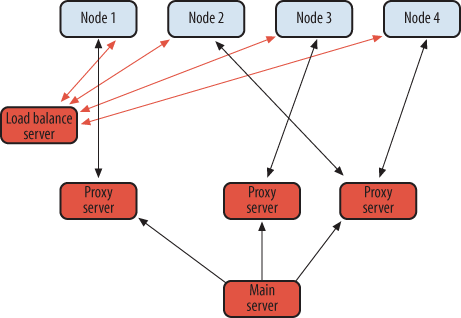

MP3.com engineers eventually changed to a new model (perhaps due to scalability issues) that used a set of federated servers acting as proxies for the main server. This variation of the original architectural pattern—depicted in Figure 3-14 using load balancing and clusters of servers—was a great way to distribute resources and balance loads, but it still burdened MP3.com with the expense of hosting files. (Note that in a P2P system, clients are referred to as “nodes,” as they are no longer mere receivers of content: each node in a P2P network is capable of acting as both client and server.)

In Figure 3-14, all nodes first communicate with the load balancing server to find out where to resolve or retrieve the resources they require. The load balancing server replies with the information based on its knowledge of which proxies are in a position to serve the requested resources. Based on that information, each node makes a direct request to the appropriate node. This pattern is common in many web architectures today.
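The two-step exchange described here (ask the load balancer where a resource lives, then request it directly) can be sketched in a few lines. This is an in-process illustration, not MP3.com's actual implementation: the class names, the `fetch` helper, and the least-busy selection policy are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class ProxyServer:
    """A federated server holding copies of some files (hypothetical model)."""
    name: str
    files: set[str]
    active_requests: int = 0

    def serve(self, filename: str) -> str:
        if filename not in self.files:
            raise KeyError(f"{self.name} does not host {filename}")
        self.active_requests += 1
        return f"{filename} served by {self.name}"


@dataclass
class LoadBalancer:
    """Knows which proxies are in a position to serve which resources."""
    proxies: list[ProxyServer] = field(default_factory=list)

    def locate(self, filename: str) -> ProxyServer:
        candidates = [p for p in self.proxies if filename in p.files]
        if not candidates:
            raise LookupError(f"no proxy hosts {filename}")
        # Assumed policy: choose the least busy proxy that holds the file.
        return min(candidates, key=lambda p: p.active_requests)


def fetch(balancer: LoadBalancer, filename: str) -> str:
    proxy = balancer.locate(filename)  # step 1: ask where the resource lives
    return proxy.serve(filename)       # step 2: request it directly from that server
```

Note that the file itself never passes through the load balancer; it only answers the "where" question, which is what keeps it from becoming a bandwidth bottleneck.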

Napster took a different path. Rather than maintaining the overhead of a direct client/server infrastructure, Napster revolutionized the industry by introducing the concept of a shared, decentralized P2P architecture. It worked quite differently from the typical client/server model but was very similar conceptually to the BitTorrent model. One key central component remained: keeping lists of all of the peers for easy searching. This component not only created scalability issues, but also exposed the company to the legal liability that ultimately did it in.

Napster also introduced a pattern of “Opting Out, Not Opting In.” As soon as you downloaded and installed the Napster client software, you became, by default, part of a massive P2P network of music file sharers. Unless you specifically opted out, you remained part of the network. This allowed Napster to grow at an exponential rate. It also landed several Napster users in legal trouble, as they did not fully understand the consequences of installing the software.

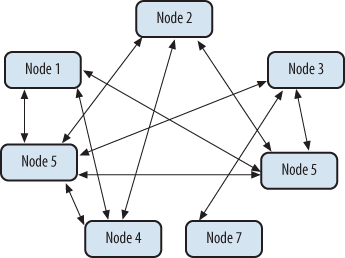

P2P architectures can generally be classified into two main types. The first is a pure P2P architecture where each node acts as a client and a server. There is no central server or DNS-type node to coordinate traffic, and all traffic is routed based on each node’s knowledge of other nodes and the protocols used. BitTorrent, for example, can operate in this mode. This type of network architecture (also referred to as an ad hoc architecture) works when nodes are configured to act as both servers and clients. It is similar conceptually to how mobile radios work, except that it uses a point-to-point cast rather than a broadcast communication protocol. Figure 3-15 depicts this type of network.
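To illustrate how resources can be located without any central server, the following sketch simulates a hop-limited query that spreads from neighbor to neighbor. The text does not prescribe a particular routing protocol, so this flooding approach is an assumption made for illustration, as are the `Peer` class and the `max_hops` parameter. A real network would send messages between machines rather than walk objects in one process.

```python
from collections import deque


class Peer:
    """A node that acts as both client and server (hypothetical model)."""

    def __init__(self, name, files=()):
        self.name = name
        self.files = set(files)
        self.neighbors = []  # peers this node knows about directly

    def connect(self, other):
        """Create a two-way link between this peer and another."""
        if other not in self.neighbors:
            self.neighbors.append(other)
            other.neighbors.append(self)

    def search(self, filename, max_hops=3):
        """Return names of other peers within max_hops that hold filename."""
        seen = {self}
        queue = deque([(self, 0)])
        hits = []
        while queue:
            peer, hops = queue.popleft()
            if peer is not self and filename in peer.files:
                hits.append(peer.name)
            if hops < max_hops:
                # Forward the query only to neighbors that have not seen it yet.
                for neighbor in peer.neighbors:
                    if neighbor not in seen:
                        seen.add(neighbor)
                        queue.append((neighbor, hops + 1))
        return hits
```

The hop limit is the main design trade-off in this kind of search: a small limit keeps traffic down but can miss files held by distant peers.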

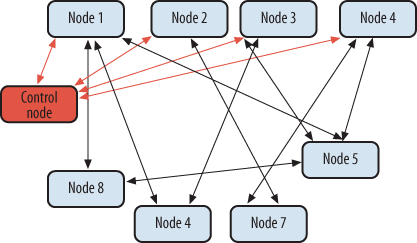

In this pure-play P2P network, no central authority determines or orchestrates the actions of the other nodes. By comparison, a centrally orchestrated P2P network includes a central authority that takes care of orchestration and essentially acts as a traffic cop, as shown in Figure 3-16.

The control node in Figure 3-16 keeps track of the status and libraries of each peer node to help orchestrate where other nodes can find the information they seek. Peers themselves store the information and can act as both clients and servers. Each node is responsible for updating the central authority regarding its status and resources.
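A control node of this kind is essentially a registry that peers report to and query. The sketch below is one hypothetical way to model it: the `report` and `locate` method names and the timeout used to decide whether a peer is still online are assumptions, not details taken from any real system.

```python
import time


class ControlNode:
    """Central authority that tracks each peer's status and library."""

    def __init__(self, timeout_seconds=60.0):
        self.timeout = timeout_seconds
        self._libraries = {}  # peer id -> set of titles
        self._last_seen = {}  # peer id -> time of last report

    def report(self, peer_id, titles, now=None):
        """Called by a peer to update its status and resources."""
        self._libraries[peer_id] = set(titles)
        self._last_seen[peer_id] = time.monotonic() if now is None else now

    def locate(self, title, now=None):
        """Return ids of recently seen peers that hold the given title."""
        now = time.monotonic() if now is None else now
        return sorted(
            peer_id
            for peer_id, titles in self._libraries.items()
            if title in titles and now - self._last_seen[peer_id] <= self.timeout
        )
```

The optional `now` argument exists only so the staleness logic can be exercised deterministically; in normal use each peer would simply call `report` at regular intervals.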

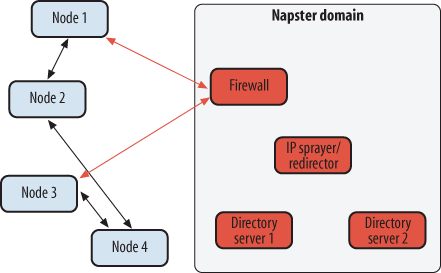

Napster itself was a sort of hybrid P2P system, allowing direct P2P traffic and maintaining some control over resources to facilitate resource location. Figure 3-17 shows the classic Napster architecture.

Napster’s central directories tracked the titles of content in each P2P node. When users signed up for and downloaded Napster, they ended up with the P2P node software running on their own machines. This software pushed information to the Napster domain. Each node searching for content first communicated with the IP Sprayer/Redirector via the Napster domain. The IP Sprayer/Redirector maintained knowledge of the state of the entire network via the directory servers and redirected requesting nodes to peers that were able to fulfill their requests. Napster, and other companies such as LimeWire, are based on hybrid P2P patterns because they also allow direct node-to-node ad hoc connections for some types of communication.
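The hybrid flow described above (publish titles centrally, look up a source centrally, transfer directly between nodes) can be sketched end to end. This is a simplified in-process model under stated assumptions: `Node`, `Directory`, and `download` are hypothetical names, and the directory and redirector roles are collapsed into one class for brevity.

```python
class Node:
    """Client software on a user's machine; holds the actual files."""

    def __init__(self, name, library=None):
        self.name = name
        self.library = dict(library or {})  # title -> file contents

    def send(self, title):
        """Serve a file directly to another node."""
        return self.library[title]


class Directory:
    """Central index of titles only; it never stores or relays file contents."""

    def __init__(self):
        self._index = {}  # title -> list of nodes holding it

    def publish(self, node):
        """Record which titles a node holds (pushed by the node software)."""
        for title in node.library:
            holders = self._index.setdefault(title, [])
            if node not in holders:
                holders.append(node)

    def redirect(self, title, requester):
        """Point the requester at another node that holds the title, if any."""
        for node in self._index.get(title, []):
            if node is not requester:
                return node
        return None


def download(requester, directory, title):
    source = directory.redirect(title, requester)
    if source is None:
        return False
    requester.library[title] = source.send(title)  # direct node-to-node transfer
    directory.publish(requester)                   # requester now shares the file too
    return True
```

In this sketch every completed download adds another source for the file, which reflects how the default sharing behavior let the network's capacity grow with its user base.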

Both Napster and MP3.com, despite now being defunct, revolutionized the music industry. MySpace.com has since added a new dimension into the mix: social networking. Social networking layered on top of the music distribution model continues to evolve, creating new opportunities for musicians and fans.