Chapter 8. Web application firewalls and client-side filters

Information in this chapter:

• Bypassing WAFs

• Client-Side Filters

Abstract

Different types of filtering devices can be used to protect Web applications from attacks such as Structured Query Language injections and cross-site scripting. For some applications, it is difficult to implement internal controls to protect against Web attacks due to the high cost of retrofitting existing code. Even worse, it may be impossible to make changes to code due to licensing agreements or lack of source code. To add defenses to these kinds of Web applications, external solutions must be considered. Web application firewalls (WAFs) and client-side filters are commonly used to detect (and sometimes block) Web attacks. However, both WAFs and client-side filters have filtering limitations which an attacker can exploit. In addition to discussing techniques for bypassing WAFs, this chapter uses code examples to show how to bypass NoScript and Internet Explorer filters, and explains how to perform denial-of-service attacks against poorly constructed regular expressions.

Key words: Web application firewall, Client-side filter, Blacklisting mode, Whitelisting mode, NoScript plug-in, XSS Filter, Trigger string

Defenses against Web attacks such as SQL injections and cross-site scripting can be implemented in many places. In this chapter, we discuss the evolution and present state of defenses against these types of Web attacks.

Traditionally, applications were responsible for providing their own protection, and would thus contain specific input filtering and output encoding controls meant to block malicious attacks. Even today, this remains a common, sensible, and recommended practice. The types of controls found in Web applications range from poorly thought out blacklists to carefully designed and highly restrictive whitelists. Most fall somewhere in the middle.

Expecting Web application developers to know enough about defending against Web attacks is often unrealistic. As such, many organizations have security specialists develop internal libraries for defending against Web attacks. Along with solid coding standards to ensure proper use of these libraries, many Web applications are able to provide much stronger defenses. Similarly, open source libraries and APIs were developed to protect Web applications. The Enterprise Security API library, known as ESAPI, provided by the Open Web Application Security Project (OWASP), is a perfect example.

For some applications, it is difficult to implement internal controls to protect against Web attacks due to the high cost of retrofitting existing code. Even worse, it may be impossible to make changes to code due to licensing agreements or lack of source code. To add defenses to these kinds of Web applications, external solutions must be considered. Many intrusion detection and prevention systems are capable of filtering Web traffic for malicious traffic. Web application firewalls (WAFs) are also commonly used to detect (and sometimes block) Web attacks. Many commercial WAFs are available, along with several freely available (usually open source) alternatives. WAFs can be difficult to customize for a particular application, making it difficult to run them in “whitelisting mode.” It is common to find WAFs deployed in “blacklisting mode,” making them more vulnerable to bypasses and targeted attacks.

Most open source WAFs have a publicly accessible demo application showing the effectiveness of their filtering, and sometimes the WAF's administrative interface as well. Some commercial vendors also provide publicly accessible demo pages; unfortunately, most do not. Spending some time with the administrative interfaces and/or bypassing the built-in filters is a great way to practice many of the techniques discussed in this book. After some practice, security penetration testers can learn to recognize the general strengths and weaknesses of WAFs, which can help them to hone their Web application attack skills. The following is a list of a few publicly accessible demo WAF pages:

• http://demo.phpids.org Hacking on the filters is highly encouraged.

• www.modsecurity.org/demo/ Began incorporating the PHPIDS filters in summer 2009.

• http://waf.barracuda.com/cgi-mod/index.cgi Log in as guest with no password.

• http://xybershieldtest.com/ A demo application for Xybershield (http://xybershield.com).

Identifying public Web sites that make use of WAFs is fairly straightforward. However, hacking on such sites without permission is never recommended! Stick with sites where it is safe and encouraged to do so, such as http://demo.phpids.org.

Bypassing WAFs

All WAFs can be bypassed. As such, they should never be relied on as a primary mitigation for some vulnerability. At best, they can be considered as a temporary band-aid to hinder direct exploitation of a known attack until a more permanent solution can be deployed. Finding bypasses for most WAFs is, sadly, quite easy. It would not be fair to call out any particular WAF vendor as being worse than the others (and legally it is probably best to avoid doing this). So, to demonstrate various bypasses, let us review a list of different attacks along with the modified versions which are no longer detected by the unnamed WAF. Table 8.1 lists the bypasses; credit for several of these vectors goes to Alexey Silin (LeverOne), Johannes Dahse (Reiners), and Roberto Salgado (LightOS). 1

1Silin A, Dahse J, Salgado R. Sla.ckers.org posts, dated March 2007 through August 2010. http://sla.ckers.org/forum/read.php?12,30425,page=1.

Most WAFs are built around a list of blacklisting filters that are meant to detect malicious attacks. Some allow for various optimizations, such as profiling the target Web application, thereby allowing for more aggressive filtering. The more customized the rules can be, the better. However, to do this takes time and detailed knowledge of both the target application and the WAF. Additionally, false positive detection rates will likely increase, resulting in a potentially broken application. As such, blacklisting mode seems to be the standard deployed mode for filters.
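To see why a list of blacklisting filters is fragile, consider the following minimal Python sketch. The two rules and the `is_blocked` helper are hypothetical and are not taken from any real WAF; they only illustrate how a signature written for one spelling of an attack can miss an equivalent spelling.

```python
import re

# Hypothetical blacklist rules (assumptions for illustration, not real WAF rules).
BLACKLIST = [
    re.compile(r"union\s+select", re.IGNORECASE),  # naive SQL injection signature
    re.compile(r"<script", re.IGNORECASE),         # naive cross-site scripting signature
]


def is_blocked(value: str) -> bool:
    """Return True when any blacklist rule matches the submitted value."""
    return any(rule.search(value) for rule in BLACKLIST)


# The textbook payloads are caught ...
caught_sqli = is_blocked("1 UNION SELECT password FROM users")
caught_xss = is_blocked("<script>alert(0)</script>")

# ... but equivalent payloads slip through: an SQL comment stands in for the
# whitespace, and an event handler replaces the script element.
missed_sqli = is_blocked("1 UNION/**/SELECT password FROM users")
missed_xss = is_blocked("<img src=x onerror=alert(0)>")

print(caught_sqli, caught_xss, missed_sqli, missed_xss)
```

Each bypass can be answered with another rule, but every added rule describes only one more known-bad pattern, which is why blacklisting mode tends to trail behind attackers.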

Most WAF vendors keep their actual filters as closely guarded secrets. After all, it is much easier for attackers to find a bypass for the filters if they can see what they are trying to bypass. Unfortunately, this adds only a thin layer of obscurity, and most determined attackers will easily be able to bypass such filters, even without seeing the actual rules. However, some WAF developers, especially the open source ones, have fully open rules. These filters can (and do) receive much more scrutiny by skilled penetration testers, allowing the overall quality of the filters to be higher. In the interest of full disclosure, it is essential to point out that none of the authors are completely impartial; Mario Heiderich was one of the original developers and a maintainer for PHPIDS, while Eduardo Vela, Gareth Heyes, and David Lindsay have each spent countless hours developing bypasses for the PHPIDS filters.

Ideally, a WAF should be configured in a whitelisting mode where all legitimate requests to the application are allowed and anything else is blocked by default. This requires that the target Web application be known and well understood, and all access URLs along with GET and POST parameters be mapped out. Then, the WAF can be heavily tuned to allow only these valid requests and to block everything else. When this is done properly, the work and skill level required from an attacker are significantly raised.
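As a rough illustration of whitelisting mode, the Python sketch below allows a request only when its path and every parameter appear in a known map. The `ALLOWED` map, the `/page` path, the `name` parameter, and its pattern are assumptions made up for this example; a real deployment would derive them from a full inventory of the target application.

```python
import re
from urllib.parse import parse_qsl, urlsplit

# Hypothetical whitelist: each known path maps its parameters to a strict pattern.
ALLOWED = {
    "/page": {"name": re.compile(r"[A-Za-z]{1,32}")},
}


def is_allowed(url: str) -> bool:
    """Allow a request only if the path and all parameters are whitelisted."""
    parts = urlsplit(url)
    rules = ALLOWED.get(parts.path)
    if rules is None:
        return False  # unknown URL: blocked by default
    for key, value in parse_qsl(parts.query, keep_blank_values=True):
        pattern = rules.get(key)
        if pattern is None or pattern.fullmatch(value) is None:
            return False  # unknown parameter or unexpected value
    return True


print(is_allowed("http://www.example.org/page?name=Alice"))
print(is_allowed("http://www.example.org/page?name=Alice<script>alert(0)</script>"))
print(is_allowed("http://www.example.org/page?name=Alice&debug=1"))
print(is_allowed("http://www.example.org/admin"))
```

The default-deny structure is the important part: nothing needs to be known about attack strings, only about what legitimate requests look like.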

Tuning a WAF takes considerable time up front, plus additional time to maintain and tweak the rules. Even after all this work is done, the whitelisting filters may still be bypassed.

Effectiveness

The effectiveness of the various WAFs varies greatly. Needless to say, a determined attacker could bypass any of them. There also appears to be little to no correlation between the price of a WAF and its effectiveness at blocking malicious attacks. This does not reflect particularly well for WAF vendors that tout themselves as the market leader of WAFs or whose product costs are as high as the salary of a full-time security consultant.

Another troubling point to consider when contemplating the purchase of a WAF is that while it is attempting to limit the exploitability of a vulnerable Web application, the WAF also increases the attack surface of a target organization. The WAF itself may be the target of and vulnerable to malicious attacks. For example, a WAF may be vulnerable to cross-site scripting, SQL injection, denial-of-service attacks, remote code execution vulnerabilities, and so on. Once the target company's network is compromised, an attacker has gained a valuable foothold into the company from which additional attacks may be launched.

These types of weaknesses have been found in all types of WAF products as well, regardless of reputation and price. For example, one popular (and expensive) WAF used by many companies had a reflected cross-site scripting vulnerability which was disclosed in May 2009. Sjoerd Resink found the vulnerability on a page where users are redirected when they do not have a valid session. This was possible because a GET parameter was base64-decoded before being reflected onto a login page which included session information, including presently set cookie values. However, to exploit the issue, a nonguessable token value must also be included in a separate GET parameter and the token must match with the rest of the request. This prevented the base64 value from being directly modified. However, a clever workaround was to first set a cookie with the cross-site scripting payload. Next, the attacker could visit a URL which redirected him to the vulnerable page. The server would then generate the vulnerable base64-encoded payload and associated valid token! All the attacker would have to do then is to copy the redirected URL and coerce others into visiting the same link. Additional details on the vulnerability are available at https://www.fox-it.com/uploads/pdf/advisory_xss_f5_firepass.pdf.

According to recently collected Building Security In Maturity Model (BSIMM) data at http://bsimm2.com/, 36% (11 of 30) of the surveyed organizations use WAFs, or something similar, to monitor input to software to detect attacks. 2 Regardless of the effectiveness of WAFs, companies are clearly finding justifications to include them in their security budgets.

2Migues S, Chess B, McGraw G. The BSIMM2 Web page. http://bsimm2.com/. Accessed June 2010.

One of the leading drivers for this increase over the past several years is the Payment Card Industry (PCI) Data Security Standard (DSS). In particular, Section 6.6 of the standard specifies that public-facing Web applications which process credit card data must protect against known Web attacks through one of two methods. In the first method, a manual or automated assessment may be performed on a yearly basis, and after any changes to the application are made. In the second method, a WAF can be installed to protect the application. 3 Automated and manual assessments require skilled security professionals and are thus rather expensive. Many corporations, for better or for worse, view WAFs as the cheaper alternative.

3PCI Security Standards Council. “About the PCI Data Security Standard (DSS).” https://www.pcisecuritystandards.org/security_standards/pci_dss.shtml. Accessed August 2010.

Client-side filters

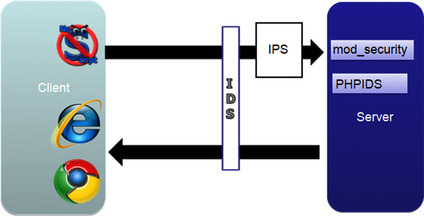

In the early 2000s, people started to explore the idea of blocking Web attacks within Web browsers. This was a rather novel idea at the time, considering that vulnerabilities such as cross-site scripting and SQL injection are typically thought to be Web application (server-side) issues. The main advantage of implementing defenses within the browser is that users are protected by default against vulnerabilities in all Web applications. See Figure 8.1 for a diagram showing how client-side filters relate to more traditional types of WAFs. The downside is that for filters to be generic enough to be enabled all the time, they must also be highly targeted and thus limited in scope. Therefore, Web applications cannot rely on browser-based defenses to block all malicious attacks. However, users of Web applications can still enjoy what limited protections they do provide. From an attacker's point of view, being able to bypass browser defenses makes it much easier to target users who would be otherwise protected.

Figure 8.1 How client-side filters fit in, compared with traditional filters.

The first serious implementation of a browser-based protection against Web vulnerabilities occurred in 2005 when Giorgio Maone released a Firefox plug-in called NoScript. At the time, Maone was primarily concerned about protecting himself against a particular vulnerability in Firefox 1.0.3 (https://bugzilla.mozilla.org/show_bug.cgi?id=292691). Having previously developed another popular Firefox extension, he was reluctant to just switch to another browser while the vulnerability was being fixed. Additionally, Maone was disillusioned with standard zero-day browser mitigation advice, namely to “Disable JavaScript” and “Don't browse to untrusted websites.” JavaScript is essential for access to many Web sites. Plus, the trustworthiness of a Web site is impossible to determine until you have navigated to the site! So, Maone sought a solution that would allow both of these pieces of advice to make sense. After a few days of intense work, NoScript was born, with the purpose to allow JavaScript to be executed only on trusted sites and disabled for everything else. 4

One limitation to the original NoScript design was that if a trusted Web site was compromised by something such as cross-site scripting, NoScript would not block the attack. Maone refined NoScript to be able to handle these types of situations. In 2007, he added specific cross-site scripting filters to NoScript so that even a trusted Web application would not be able to execute JavaScript, provided that NoScript could clearly identify it as malicious. This type of comprehensive security has helped to propel NoScript to become one of the most popular Firefox extensions over the past few years. 5 Perhaps more importantly, the success of NoScript, including the specific cross-site scripting filters, publicly demonstrated the effectiveness of browser-based defenses to prevent targeted malicious Web attacks.

NoScript has a lot of security features built in besides just blocking third-party scripts and cross-site scripting filtering. Check out some of its other innovative features at http://noscript.net/.

5Add-ons for Firefox Web page. The page lists NoScript as the third most downloaded extension with 404,199 downloads per week. https://addons.mozilla.org/en-US/firefox/extensions/?sort=downloads. Accessed August 8, 2010.

During the early to mid-2000s, researchers working on Web security at Microsoft were also internally designing specific filters to mitigate cross-site scripting attacks. Originally, the XSS Filter was made available only to internal Microsoft employees. 6 In March 2009, the XSS Filter became public with the release of Internet Explorer 8.

In 2009 and 2010, Google worked on developing its own set of client-side cross-site scripting filters, known as XSS Auditor, to be included in Chrome. The internal workings of XSS Auditor differ substantially from NoScript and Microsoft's XSS Filter; however, the end result is the same. As of the time of this writing, XSS Auditor is still in beta mode and is not enabled by default in the latest version of Chrome.

Bypassing client-side filters

Client-side filters must be generic enough to work with any Web site. As such, they are sometimes limited in scope to avoid false positives (David Ross's compatibility tenets). However, for the types of attacks they do attempt to block, they should do so very effectively; otherwise, it would be simple for attackers to modify their attack techniques to account for the possibility of any potential client-side filters that their victims might be using.

NoScript's filters are, in general, quite aggressive and attempt to block all types of attacks. They do this by analyzing all requests for malicious attacks. Whenever a request is detected that appears to have a malicious component, the request is blocked. This is notably different from Internet Explorer's approach, which is to look at outbound requests as well as incoming responses. As a result of these factors, the NoScript filters are more subject to both false positives and false negatives. On the plus side, as a Firefox extension, NoScript is able to quickly respond to any bypasses, and thus the window of exposure for its users can be kept relatively small.

IE8 filters

The Internet Explorer filters are much narrower in scope. There are roughly two dozen filters, and each has been carefully developed and tested, accounting for some of the particular details and quirks in how Internet Explorer parses HTML. The following regular expressions show the 23 most current versions of the filters (as of summer 2010):

1.

(v|(&[#()[].]x?0*((86)|(56)|(118)|(76));?))([ ]|(&[#()[].]x?0*(9|(13)|(10)|A|D);?))*(b|(&[#()[].]x?0*((66)|(42)|(98)|(62));?))([ ]|(&[#()[].]x?0*(9|(13)|(10)|A|D);?))*(s|(&[#()[].]x?0*((83)|(53)|(115)|(73));?))([ ]|(&[#()[].]x?0*(9|(13)|(10)|A|D);?))*(c|(&[#()[].]x?0*((67)|(43)|(99)|(63));?))([ ]|(&[#()[].]x?0*(9|(13)|(10)|A|D);?))*{(r|(&[#()[].]x?0*((82)|(52)|(114)|(72));?))}([ ]|(&[#()[].]x?0*(9|(13)|(10)|A|D);?))*(i|(&[#()[].]x?0*((73)|(49)|(105)|(69));?))([ ]|(&[#()[].]x?0*(9|(13)|(10)|A|D);?))*(p|(&[#()[].]x?0*((80)|(50)|(112)|(70));?))([ ]|(&[#()[].]x?0*(9|(13)|(10)|A|D);?))*(t|(&[#()[].]x?0*((84)|(54)|(116)|(74));?))([ ]|(&[#()[].]x?0*(9|(13)|(10)|A|D);?))*(:|(&[#()[].]x?0*((58)|(3A));?)).

2.

(j|(&[#()[].]x?0*((74)|(4A)|(106)|(6A));?))([ ]|(&[#()[].]x?0*(9|(13)|(10)|A|D);?))*(a|(&[#()[].]x?0*((65)|(41)|(97)|(61));?))([ ]|(&[#()[].]x?0*(9|(13)|(10)|A|D);?))*(v|(&[#()[].]x?0*((86)|(56)|(118)|(76));?))([ ]|(&[#()[].]x?0*(9|(13)|(10)|A|D);?))*(a|(&[#()[].]x?0*((65)|(41)|(97)|(61));?))([ ]|(&[#()[].]x?0*(9|(13)|(10)|A|D);?))*(s|(&[#()[].]x?0*((83)|(53)|(115)|(73));?))([ ]|(&[#()[].]x?0*(9|(13)|(10)|A|D);?))*(c|(&[#()[].]x?0*((67)|(43)|(99)|(63));?))([ ]|(&[#()[].]x?0*(9|(13)|(10)|A|D);?))*{(r|(&[#()[].]x?0*((82)|(52)|(114)|(72));?))}([ ]|(&[#()[].]x?0*(9|(13)|(10)|A|D);?))*(i|(&[#()[].]x?0*((73)|(49)|(105)|(69));?))([ ]|(&[#()[].]x?0*(9|(13)|(10)|A|D);?))*(p|(&[#()[].]x?0*((80)|(50)|(112)|(70));?))([ ]|(&[#()[].]x?0*(9|(13)|(10)|A|D);?))*(t|(&[#()[].]x?0*((84)|(54)|(116)|(74));?))([ ]|(&[#()[].]x?0*(9|(13)|(10)|A|D);?))*(:|(&[#()[].]x?0*((58)|(3A));?)).

3.

<st{y}le.*?>.*?((@[i\])|(([:=]|(&[#()[].]x?0*((58)|(3A)|(61)|(3D));?)).*?([(\]|(&[#()[].]x?0*((40)|(28)|(92)|(5C));?))))

4.

[/+ “‘']st{y}le[/+ ]*?=.*?([:=]|(&[#()[].]x?0*((58)|(3A)|(61)|(3D));?)).*?([(\]|(&[#()[].]x?0*((40)|(28)|(92)|(5C));?))

5.

<OB{J}ECT[/+ ].*?((type)|(codetype)|(classid)|(code)|(data))[/+ ]*=

6.

<AP{P}LET[/+ ].*?code[/+ ]*=

7.

[/+ “‘']data{s}rc[+ ]*?=.

8.

<BA{S}E[/+ ].*?href[/+ ]*=

9.

<LI{N}K[/+ ].*?href[/+ ]*=

10.

<ME{T}A[/+ ].*?http-equiv[/+ ]*=

11.

<?im{p}ort[/+ ].*?implementation[/+ ]*=

12.

<EM{B}ED[/+ ].*?SRC.*?=

13.

[/+ “‘']{o}nccc+?[+ ]*?=.

14.

<.*[:]vmlf{r}ame.*?[/+ ]*?src[/+ ]*=

15.

<[i]?f{r}ame.*?[/+ ]*?src[/+ ]*=

16.

<is{i}ndex[/+ >]

17.

<fo{r}m.*?>

18.

<sc{r}ipt.*?[/+ ]*?src[/+ ]*=

19.

<sc{r}ipt.*?>

20.

["'][]*(([^a-z0-9~_:'"])|(in)).*?(((l|(\u006C))(o|(\u006F))({c}|(\u00{6}3))(a|(\u0061))(t|(\u0074))(i|(\u0069))(o|(\u006F))(n|(\u006E)))|((n|(\u006E))(a|(\u0061))({m}|(\u00{6}D))(e|(\u0065)))).*?=

21.

["'][]*(([^a-z0-9~_:'"])|(in)).+?(({[.]}.+?)|({[[]}.*?{[]]}.*?))=

22.

["'].*?{)}[]*(([^a-z0-9~_:'"])|(in)).+?{(}

23.

["'][]*(([^a-z0-9~_:'"])|(in)).+?{(}.*?{)}

These filters are essentially regular expressions, with one addition: the neuter character for each filter, which is the character that is replaced when the filter fires, is surrounded by curly braces to emphasize its importance.

Some filters mark multiple neuter characters in curly braces since the regular expression may match in different places.

The filters look a lot more complicated than they really are. The first two simply detect the strings vbscript: and javascript:, allowing for various encodings of the letters. Filters 3 and 4 detect CSS-related injections that utilize the word style as either an HTML element or an element's attribute. Filters 5, 6, 8 through 12, and 14 through 19 each detect the injection of a specific HTML element such as iframe, object, or script. Filters 7 and 13 look for the datasrc attribute and any sort of attribute event handler such as onerror, onload, or onmouseover. Finally, filters 20 through 23 each detect injections in JavaScript that require the attacker to first escape from a single- or double-quoted string.

The general case of detecting cross-site scripting injections into arbitrary JavaScript was determined to be too difficult to handle since JavaScript can be encoded and obfuscated in endless ways (as discussed in Chapters 3 and 4). However, one of the most common cross-site scripting scenarios involving data reflected into JavaScript is the scenario in which the attacker can control the value of a quoted string. To do anything malicious, the attacker must first escape from the string using a literal single- or double-quote character. This extra requirement provided enough of a “hook” that Microsoft felt it could develop filters covering the string escape followed by most of the ways that arbitrary JavaScript can be executed after the string escape.

IE8 bypasses

The Internet Explorer 8 filters, though limited in scope, are well constructed and difficult to attack. As tight as the filters are, though, they are still not bulletproof. Since the release of Internet Explorer 8, several direct and indirect bypasses have been identified. In particular, at least a few bypasses have emerged for the filters which detect injections into quoted JavaScript strings. Listed here are some of the more interesting bypasses:

1.

"+{valueOf:location, toString: [].join,0:'jav\x61script:alert\x280)',length:1}//

This string could be injected into a JavaScript string. It would escape the string and then execute an alert, bypassing several of the filters along the way. In particular, Filter 20 attempts to prevent values from being assigned to the location object. This injection bypasses the filter by not using any equals sign to assign a string value to the location object (which in JavaScript will force a new page to load and can execute JavaScript via the javascript: URI scheme). This injection also bypasses Filter 2 by encoding the string javascript using an encoding not covered in the filter. Filters 22 and 23 also played a part because they detect injected JavaScript that uses parentheses to invoke functions; as such, no function calls could be used in the injection.

2.

foo='&js_xss=";alert(0)//

This injection can be used to escape from a JavaScript string to perform cross-site scripting. The injection requires two GET (or POST) parameters to be set: the first is a fake (if needed) parameter and the second is for the real injection. Filters 19 through 23 each incorrectly identify the start of the injection. They determine the potential attack to be '&js_xss=";alert(0)//. When this string (or something closely resembling it) is not found in the response body, no blocking occurs. However, since the real injection, ";alert(0)//, slips through undetected, the filters are effectively bypassed.

3.

";x:[document.URL='jav\x61script:alert\x280)']//

This injection can also be used to escape from a JavaScript string. Filter 21 should detect this very string; however, a problem with the regular expression engine appears to prevent a match from occurring. Filter 21 contains three important parts, labeled (1), (2), and (3) in item 4, which reproduces the filter.

4.

["'][]*(([^a-z0-9~_:'"])|(in)).+?(({[.]}.+?)|({[[]}.*?{[]]}.*?))=

(1) .+?   (2) {[.]}.+?   (3) {[[]}.*?{[]]}.*?

The first important part of Filter 21, as referenced in the introduction to the preceding bypass code example, is a nongreedy matcher of any number of characters. The second is a literal period character followed by arbitrary text. The third is arbitrary text surrounded by brackets (and followed by some more arbitrary text, but this part is not important). Note that either the second or the third subexpression must match, since they are separated by the or character. So, when the regular expression engine is parsing this particular injection, the first subexpression will initially match just x: (the third and fourth characters in the injection) since it is a nongreedy match and the bracket allows matching to continue in subexpression 3. The closing bracket in subexpression 3 does not come until the third-to-last character of the injection, leaving the trailing // to match against the .*?. The regular expression then just needs to match against an equals sign to be complete. However, there is no final equals sign to match; thus the regular expression engine should unwind back to the point where subexpression 1 is matching at the beginning of the injection. As far as can be determined, this unwinding does not fully occur; if it did, a check for a literal period in subexpression 2 would match the period in document.URL (and then the final equals sign in the regular expression would match the equals sign following document.URL).
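The backtracking argument above can be checked against an engine that does unwind fully. The Python sketch below is only an approximation: Python's `re` module stands in for Internet Explorer's regular expression engine, the neuter braces are dropped, the empty `[]` class is assumed to have been a space class, and the payload's `\x61` and `\x28` escapes are assumed to be written with literal backslashes. The helper `decode_js_hex_escapes` is a hypothetical name introduced for this example.

```python
import re

# Approximation of Filter 21 (assumptions: no neuter braces, "[]" read as "[ ]").
FILTER_21 = re.compile(
    r'''["'][ ]*(([^a-z0-9~_:'"])|(in)).+?(([.].+?)|([\[].*?[\]].*?))='''
)

# Bypass 3 as it would appear in the request (raw string keeps the backslashes).
BYPASS_3 = r'''";x:[document.URL='jav\x61script:alert\x280)']//'''


def decode_js_hex_escapes(text: str) -> str:
    """Resolve \\xNN escapes the way a JavaScript string literal would."""
    return re.sub(
        r"\\x([0-9A-Fa-f]{2})",
        lambda m: chr(int(m.group(1), 16)),
        text,
    )


# A fully backtracking engine abandons subexpression 3 (no trailing "="),
# lets subexpression 1 grow, and then matches the period in document.URL.
match = FILTER_21.search(BYPASS_3)
matched_text = match.group(0) if match else None

# The request contains neither "javascript:" nor "(", yet the JavaScript
# string literal evaluates to both.
decoded = decode_js_hex_escapes(BYPASS_3)

print(matched_text)
print(decoded)
```

Under these assumptions the pattern does match, ending at the equals sign after document.URL, which is consistent with the observation that the original engine stopped unwinding too early rather than with a gap in the filter itself.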

Attacking Internet Explorer 8's filters

There are several important things to consider when developing browser-based cross-site scripting filters. The primary considerations were nicely outlined by David Ross, a software security engineer at Microsoft. In a blog post at http://blogs.technet.com/b/srd/archive/2008/08/19/ie-8-xss-filter-architecture-implementation.aspx, Ross outlines three key factors: compatibility, performance, and security. Compatibility is important so that Web page authors do not have to make any changes to existing (or future) content for things to “work.” Performance is important because users and authors will be extremely put off by a noticeable increase in page load times. Finally, security is important because the whole point is to reduce risk to users, not increase it. 7

7Ross D. IEBlog. July 2, 2008. “IE8 Security Part IV: The XSS Filter.” http://blogs.msdn.com/b/ie/archive/2008/07/02/ie8-security-part-iv-the-xss-filter.aspx. Crosby S, Wallach D. August 2003 USENIX presentation on denial-of-service attacks abusing regular expressions. http://www.cs.rice.edu/~scrosby/hash/slides/USENIX-RegexpWIP.2.ppt.

Implementing browser-based cross-site scripting filters securely can be difficult. Microsoft learned this the hard way when it was discovered that its XSS Filter could be used to enable cross-site scripting on Web sites that were otherwise immune to cross-site scripting attacks. To understand how this came about, we must first understand the XSS Filter's design and implementation details.

The design of Internet Explorer 8's XSS Filter can be understood as a potential three-step process. The first step is to analyze outbound requests for potential cross-site scripting attacks. For performance reasons, certain outbound requests are not checked, such as when a Web page makes a request to its same origin according to the browser same-origin policy. 8 Second, whenever a potential attack is detected, the server's response is fully analyzed, looking for the same attack pattern seen in the request. This helps to eliminate false positives. This also means persistent cross-site scripting attacks are not detected (as with Chrome and NoScript). If the second step confirms that a cross-site scripting attack is underway, the final step is to alter the attack string in the server response so as to prevent the attack from occurring.

8Zalewski M. June 30, 2010. “Browser Security Handbook.” http://code.google.com/p/browsersec/wiki/Part2#Same-origin_policy.

To detect malicious attacks in outgoing requests, a series of regular expressions are used which identify malicious attacks. These filters are referred to as heuristics filters. Every time one of the heuristics filters makes a match, a dynamic regular expression is generated to look for that attack pattern in the response. This regular expression must be dynamic since the Web server may change the attack string in certain ways.

The method used to neutralize attacks is also very important in terms of how the XSS Filter operates. Microsoft chose to use a “neutering” technique whereby a single character in the attack string is changed to the # character. The attack string itself may occur in multiple places in the server's response, so the neutering mechanism must be applied every place the dynamic regular expression matches.

Consider an example in which a browser makes a GET request for http://www.example.org/page?name=Alice<script>alert(0)</script>. This URL is checked against each heuristics filter. One of the filters looks for strings such as <script and so a positive match is made. Therefore, when the response from the server arrives, it is also checked against a dynamically generated filter. The response contains the string <h1>Welcome Alice<script>alert(0)</script>!</h1>. The dynamic filter matches the <script again, and so the neutering mechanism is applied before the page is rendered. In this case, the r in script is changed to #. The rendered page thus displays the string Welcome Alice<sc#ipt>alert(0)</script>! rather than executing the alert script.
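The three steps (heuristic match on the request, dynamic match on the response, neutering) can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not Microsoft's implementation: a single heuristic, `<sc(r)ipt`, stands in for the script filters, the dynamic regular expression is just the escaped attack string, and the function name `xss_filter` is hypothetical.

```python
import re
from urllib.parse import unquote

# Simplified heuristic (assumption); group 1 marks the neuter character.
HEURISTIC = re.compile(r"<sc(r)ipt", re.IGNORECASE)


def xss_filter(url: str, response: str) -> str:
    """Model the request check, the response check, and the neutering step."""
    # Step 1: look for a potential attack in the outbound request.
    hit = HEURISTIC.search(unquote(url))
    if hit is None:
        return response

    # Step 2: build a dynamic pattern for the same attack string.
    attack = hit.group(0)
    offset = hit.start(1) - hit.start(0)
    dynamic = re.compile(re.escape(attack), re.IGNORECASE)

    # Step 3: replace the neuter character with "#" wherever the pattern matches.
    def neuter(found: re.Match) -> str:
        text = found.group(0)
        return text[:offset] + "#" + text[offset + 1:]

    return dynamic.sub(neuter, response)


url = "http://www.example.org/page?name=Alice<script>alert(0)</script>"
page = "<h1>Welcome Alice<script>alert(0)</script>!</h1>"
print(xss_filter(url, page))
# prints <h1>Welcome Alice<sc#ipt>alert(0)</script>!</h1>
```

If the request is flagged but the attack string never appears in the response, the substitution finds nothing and the page is returned unchanged, which mirrors how the second step suppresses false positives.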

When the XSS Filter was originally released, there were (at least) three scenarios in which its neutering mechanism could be abused.

Abuse Scenario 1

The XSS Filter could, and still can, be used to block legitimate scripts on a Web page. On some Web sites, client-side scripts may be used for security purposes. Disabling such scripts can have security-related consequences. For example, a common mitigation for clickjacking attacks is to use JavaScript which prevents the target page from being embedded in a frame. The attack method itself is rather straightforward. Say that a target page avoids clickjacking using inline JavaScript which prevents framing. All the attacker must do is to provide a gratuitous GET parameter such as &foo=<script in the URL to the page being targeted in the attack. The XSS Filter will flag the <script string in the outbound request and then neuter any inline <script tag in the response. Thus, the antiframing JavaScript included in the response will be disabled by the filter.
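A minimal Python sketch of this abuse follows. It models only the behavior described above: if any request parameter contains `<script`, every `<script` in the response is neutered. The function name `neuter_script_tags`, the `/transfer` URL, and the frame-busting snippet are assumptions made for illustration.

```python
import re
from urllib.parse import parse_qsl, urlsplit


def neuter_script_tags(url: str, response: str) -> str:
    """Neuter every <script in the response when the request contains <script."""
    params = parse_qsl(urlsplit(url).query, keep_blank_values=True)
    if not any(re.search(r"<script", value, re.IGNORECASE) for _, value in params):
        return response
    # Replace the "r" with "#", as in the neutering example for <script.
    return re.sub(r"(<sc)r(ipt)", r"\1#\2", response, flags=re.IGNORECASE)


# Hypothetical target page protected by an inline antiframing script.
target_page = (
    "<html><head><script>if (top !== self) { top.location = self.location; }"
    "</script></head><body>Transfer funds</body></html>"
)

normal = neuter_script_tags("http://www.example.org/transfer?id=1", target_page)
abused = neuter_script_tags("http://www.example.org/transfer?id=1&foo=<script", target_page)

print(normal == target_page)
print(abused)
```

In the abused response the antiframing code is no longer inside a script element, so it never runs and the page can be framed.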

Abuse Scenario 2

The second abuse scenario is similar to the first, though the attack itself is quite different. In certain situations, it may be possible for an attacker to control the text within a JavaScript string but not be able to escape from the JavaScript string or script. This may be the case when quotes and forward slashes are stripped before including the attacker-controlled string in a response. If this string is persistent and the attacker can inject < and > characters, the attacker could persist a string such as <img src=x:x onerror=alert(0) alt=x>. Note that it must be a persistent injection; otherwise, the XSS Filter would neuter this string when it is reflected from the server.

<script>name='<img src=x:x onerror=alert(0) alt=x>'; … </script>

In the preceding example, the img tag inside the quoted string is controlled by the attacker.

At this point, the persistent injection is not directly exploitable, since the attacker is only in control of a JavaScript string and nothing else. However, the attacker can now provide a gratuitous GET parameter (the same as in abuse scenario 1) along with a request to the target page. This will neuter the script tag containing the attacker-controlled JavaScript string. Neutering the script tag ensures that Internet Explorer will parse the contents of the script as HTML. When the attacker-controlled string is parsed, the parser will see the start of the image tag and treat it as such. Therefore, the attacker's onerror script will be executed.
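The effect of neutering the script tag can be approximated with Python's standard `html.parser` module. This is only an analogy: Python's parser is not Internet Explorer's, and the `TagCollector` class and `start_tags` helper are hypothetical names. The sketch shows the general principle that text inside a script element is not parsed as markup, whereas the same text inside an unrecognized element is.

```python
from html.parser import HTMLParser


class TagCollector(HTMLParser):
    """Record every start tag the parser recognizes, with its attributes."""

    def __init__(self):
        super().__init__()
        self.tags = []

    def handle_starttag(self, tag, attrs):
        self.tags.append((tag, dict(attrs)))


def start_tags(markup: str):
    collector = TagCollector()
    collector.feed(markup)
    collector.close()
    return collector.tags


original = "<script>name='<img src=x:x onerror=alert(0) alt=x>'; </script>"
neutered = original.replace("<script", "<sc#ipt", 1)

# Inside a real script element the img text is just script content.
original_tags = start_tags(original)

# Once the element is no longer a script, the img tag and its onerror
# handler are parsed as ordinary HTML.
neutered_tags = start_tags(neutered)

print(original_tags)
print(neutered_tags)
```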

Microsoft issued a patch for this in July 2010. The fix was to avoid neutering in the first place when the XSS Filter detects a <script tag. Instead, the XSS Filter will disable all scripts on the target page and avoid parsing any inline scripts, thus avoiding any incorrect parsing of the scripts’ contents.

Abuse Scenario 3

The third and most severe scenario for abusing the XSS Filter was responsibly disclosed to Microsoft in September 2009. Microsoft then issued a patch for the vulnerability in January 2010.

Two of the original filters released in Internet Explorer were intended to neuter equals signs in JavaScript to prevent certain cross-site scripting scenarios. If an attacker injected a malicious string such as ";location='javascript:alert(0)' one of the filters would be triggered and the script would be neutered to ";location#'javascript:alert(0)'.

The problem with both of these filters was that, as with the other abuse cases, an attacker could supply a gratuitous GET parameter to neuter naturally occurring equals signs on a page. More specifically, essentially any equals sign used in an HTML attribute could be neutered. For example, <a href="/path/to/page.html">my homepage</a> could be changed to <a href#"/path/to/page.html">my homepage</a>. At first glance, this may seem like an unfortunate but nonsecurity-related change. However, this particular change affects how Internet Explorer parses attribute name/value pairs.

Most modern browsers consider a / character as a separator between two name/value pairs, just like a space character. Also, when Internet Explorer is parsing the attributes in an element and encounters something such as href#"/ when it is expecting a new attribute, it treats the entire string like an attribute name which is missing the equals sign and value part. The trailing / is then interpreted as a separator between attributes, so whatever follows will be treated as a new attribute! This is the key that allows the neutering of equals signs to be abused for malicious purposes.

For example, say that users of a social media Web site can specify their home page in an anchor tag on the Web site's profile.html page (hopefully this does not represent a big stretch of your imagination). This is a very common scenario and typical cross-site scripting attacks are prevented by blocking or encoding quote characters and ensuring that the attribute itself is properly quoted in the first place. Characters such as / and standard alphanumeric characters are typically not encoded, as these are very common characters to find in a URL. If the attacker can also inject an equals sign unfiltered and unencoded, as is frequently the case, we have the makings of an exploitable scenario.

The attacker would set up the attack by injecting an href value of http://example.org/foo/onmouseover=alert(0)//bar.html, resulting in an HTML attribute such as <a href="http://example.org/foo/onmouseover=alert(0)//bar.html">my homepage</a>. Note that this could be a completely legitimate URL, though the attack still works even if it is not.

Use a double forward slash at the end of a JavaScript string which is injected as an unquoted attribute value. This helps to ensure that nothing following the injected string will be parsed as JavaScript.

The attacker would then construct a “trigger string” that would neuter the equals sign being used as part of the href attribute. Finally, the attacker would take the URL to the profile.html Web page and append a gratuitous GET parameter containing a suitable trigger string. Continuing the preceding example, the following string could do the trick:

http://example.org/profile.html?name=attacker&gratuitous="me.gif"></img><a%20href=

If a victim who was using a vulnerable version of Internet Explorer 8 clicked on this malicious link, her browser would make a request for the page, triggering the heuristics filter. When the server response came back, the filter would detect a malicious attack (though not the real one) since the trigger string was specially constructed to trigger the neutering. The browser would then neuter the target equals sign and proceed to render the page. The anchor tag for the attacker's home page would be <a href#"http://example.org/foo/onmouseover=alert(0)//bar.html">my homepage</a>. The initial href#"http: would be interpreted as a malformed attribute, as would the strings example.org and foo. Finally, the string onmouseover=alert(0) gets parsed as a true name/value pair so that when the victim next moves the mouse pointer over the link, the alert(0) script will fire.
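The parsing behavior described above can be sketched with a toy attribute tokenizer. It is a simplified model written for illustration, not Internet Explorer's parser. It assumes that whitespace and / separate attributes, that a name ends at a separator or an equals sign, and that an unquoted value runs to the next whitespace. The function name is hypothetical.

```python
def parse_attributes(attr_text):
    """Toy tokenizer for the text between a tag name and the closing '>'.

    Assumptions (a simplified model of the behavior described in the text):
    whitespace and "/" separate attributes; a name ends at a separator or
    "="; quoted values end at the matching quote; unquoted values end at
    whitespace.
    """
    separators = " \t\r\n/"
    attrs = []
    i, n = 0, len(attr_text)
    while i < n:
        # Skip separators between attributes.
        while i < n and attr_text[i] in separators:
            i += 1
        if i >= n:
            break
        start = i
        while i < n and attr_text[i] not in separators and attr_text[i] != "=":
            i += 1
        name, value = attr_text[start:i], None
        if i < n and attr_text[i] == "=":
            i += 1
            if i < n and attr_text[i] in "\"'":
                quote = attr_text[i]
                end = attr_text.find(quote, i + 1)
                if end == -1:
                    end = n
                value = attr_text[i + 1:end]
                i = end + 1
            else:
                start = i
                while i < n and not attr_text[i].isspace():
                    i += 1
                value = attr_text[start:i]
        attrs.append((name, value))
    return attrs


url = "http://example.org/foo/onmouseover=alert(0)//bar.html"
intact = parse_attributes('href="%s"' % url)
neutered = parse_attributes('href#"%s"' % url)
```

With the equals sign intact, the model yields a single href attribute. With it neutered, the model yields three valueless names followed by an onmouseover attribute whose value is alert(0)//bar.html", which is why the trailing double slash matters: it turns the leftover text into a JavaScript comment.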

The preceding example targeted the href value of an anchor tag. In theory, any attribute could have been targeted, provided a couple of fairly low hurdles were cleared. First, the attacker had to be able to identify a suitable trigger string. Based on a sampling of vulnerable pages observed before this vulnerability was patched, this condition was never a limitation. Second, if characters such as forward slashes, equals signs, and white spaces were filtered, the injection would likely not succeed. Again, in the sampling of vulnerable pages taken, this was never a limitation.

Before Microsoft patched Internet Explorer 8 in January 2010, pretty much all major Web sites could be attacked using this vulnerability. In particular, Web sites that were relatively free from other types of cross-site scripting issues were exposed since this vulnerability fell outside the lines of standard cross-site scripting mitigations.

One positive change made to Internet Explorer 8's filtering mechanism as a result of this particular attack scenario is that the browser now recognizes a special response header which allows Web site owners to control the manner by which scripts are disabled. By default, Internet Explorer will neuter the attack as described. If the response headers from a Web site include the following:

X-XSS-Protection: 1;mode=block

the browser will simply not render the page at all. Although less user-friendly, this is definitely more secure than the neutering method. At present, it is recommended that all Web sites wishing to take advantage of IE8's filters enable this header.
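As a minimal sketch of how a site might send this header, the following Python WSGI wrapper adds it to every response. The wrapper name is hypothetical, and the sketch assumes the application is served through a WSGI-compatible server. Only the header name and value come from the text above.

```python
def with_xss_block_mode(app):
    """Wrap a WSGI application so every response carries the header.

    Hypothetical helper for illustration; any existing X-XSS-Protection
    header set by the application is replaced.
    """
    def wrapped(environ, start_response):
        def start_with_header(status, headers, exc_info=None):
            # Drop any earlier value, then append the block-mode header.
            headers = [(k, v) for k, v in headers
                       if k.lower() != "x-xss-protection"]
            headers.append(("X-XSS-Protection", "1;mode=block"))
            return start_response(status, headers, exc_info)
        return app(environ, start_with_header)
    return wrapped


def demo_app(environ, start_response):
    """Trivial application used only to exercise the wrapper."""
    start_response("200 OK", [("Content-Type", "text/html")])
    return [b"<p>hello</p>"]


application = with_xss_block_mode(demo_app)
```

Setting the header in one wrapper, rather than page by page, makes it harder to miss a response.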

Denial of service with regular expressions

Nearly all WAF filters utilize regular expressions in one form or another to detect malicious input. If the regular expressions are not properly constructed, they can be abused to cause denial-of-service vulnerabilities.

Regular expressions can be evaluated using various techniques. One common technique is to use a finite state machine to model the matching of the test string. The state machine includes various transitions from one state to another based on the regular expression. As each character in the test string is processed, a match is attempted against all possible transition states until an allowed state is found. The process then repeats with the next character. One scenario that will occur is that for a given character, no possible transition states are allowed. In other words, a dead end has been reached since the given character did not match any allowed transition states. In this case, the overall match does not necessarily fail. Rather, the state machine must backtrack to an earlier state (and an earlier character) and continue to try to find acceptable transition states.

Consider the following regular expression:

A(B+)+C

If a test string of ABBBD is given, it is easy to see that a match will not be made. However, a finite state machine-based parser would have to try each potential state before it can determine that the string will not match. In fact, this particular string is somewhat of a worst-case scenario in that the state machine must traverse down many dead ends before determining that the overall string will not match. The number of different paths that must be attempted grows exponentially with the number of Bs provided in the input string.

Now, matching short strings such as ABBBD can be done very quickly in a regular expression engine. However, the string ABBBBBBBBBBBBBBBBBBBBBBBBD will take considerably longer. How could an attacker exploit this issue? Well, if a regular expression used in a WAF has a pattern similar to A(B+)+C and the regular expression engine uses a backtracking finite state machine approach, the attacker could easily construct a worst-case input string that would take the WAF a very long time to process.
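The exponential growth can be made concrete with a small hand-written backtracking matcher for the single pattern A(B+)+C. This is a simplified model for illustration, not the implementation of any particular regular expression engine. It counts how many times the matcher reaches the point of testing for the final C, and the function name is hypothetical.

```python
def count_c_attempts(text):
    """Backtracking match of A(B+)+C anchored at the start of text.

    Returns (matched, attempts), where attempts is the number of times the
    matcher tried to match the final "C". Simplified model for illustration.
    """
    attempts = 0

    def match_groups(pos):
        nonlocal attempts
        # Find how far the run of Bs extends from pos.
        end = pos
        while end < len(text) and text[end] == "B":
            end += 1
        # One group B+ may stop anywhere in the run; try longest first.
        for stop in range(end, pos, -1):
            # Greedy outer "+": first try to match another group.
            if match_groups(stop):
                return True
            # Otherwise try to finish the match with "C".
            attempts += 1
            if text.startswith("C", stop):
                return True
        return False

    if not text.startswith("A"):
        return False, attempts
    return match_groups(1), attempts


if __name__ == "__main__":
    for n in (3, 5, 10, 15):
        print(n, count_c_attempts("A" + "B" * n + "D"))
```

For a failing input with n Bs, this model makes 2^n - 1 attempts (7 for ABBBD), so each additional B roughly doubles the work, while a matching input such as ABBBC succeeds on the first attempt.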

Vulnerable patterns tend to appear quite regularly in complicated regular expressions, particularly when the regular expression developer is not aware of the issue. Listed here are several real-world examples of regular expressions that were developed to match valid e-mail addresses, each of which is vulnerable:

[a-z]+@[a-z]+([a-z.]+\.)+[a-z]+

The preceding filter was used in SpamAssassin many years ago.[9]

[9] Crosby S, Wallach D. August 2003 USENIX presentation on denial-of-service attacks abusing regular expressions. http://www.cs.rice.edu/~scrosby/hash/slides/USENIX-RegexpWIP.2.ppt.

^[a-zA-Z]+(([',.-][a-zA-Z])?[a-zA-Z]*)*\s+<(\w[-._\w]*\w@\w[-._\w]*\w\.\w{2,3})>$|^(\w[-._\w]*\w@\w[-._\w]*\w\.\w{2,3})$

The preceding filter was formerly used in Regex Library.[10]

[10] Weidman A, Roichman A. December 10, 2009. "Securing Applications with Checkmarx Source Code Analysis." www.checkmarx.com/Upload/Documents/PDF/20091210_VAC-REGEX_DOS-Adar_Weidman.pdf.

^[-a-z0-9~!$%^&*_=+}{'?]+(\.[-a-z0-9~!$%^&*_=+}{'?]+)*@([a-z0-9_][-a-z0-9_]*(\.[-a-z0-9_]+)*\.(aero|arpa|biz|com|coop|edu|gov|info|int|mil|museum|name|net|org|pro|travel|mobi|[a-z][a-z])|([0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}))(:[0-9]{1,5})?$

The preceding filter was created to match against all legitimate e-mail addresses (and nothing else).[11]

[11] Guillaume A. Mi-Ange blog. March 11, 2009. "The best regexp possible for email validation even in javascript." http://www.mi-ange.net/blog/msg.php?id=79&lng=en.

Consider now what could happen if several worst-case input strings are submitted in rapid succession. At some point, the WAF itself may stop working and will not be able to handle new input. At this point, either access to the target application will be blocked (when the WAF is deployed in active blocking mode) or the WAF will no longer be able to parse new input (when the WAF is deployed in passive mode), meaning malicious content may be passed on to the target application undetected. Either result is a failure from a security point of view.

Denial-of-service attacks abusing regular expressions were first discussed during a USENIX presentation in 2003 by Scott Crosby and Dan Wallach. Their presentation slides are available at www.cs.rice.edu/~scrosby/hash/slides/USENIX-RegexpWIP.2.ppt. Abusing regular expressions in a Web scenario was further explored by Checkmarx researchers Adar Weidman and Alex Roichman during security conferences held in 2009. They coined the issue “ReDoS,” short for “Regular Expression Denial of Service,” as described at www.checkmarx.com/Upload/Documents/PDF/20091210_VAC-REGEX_DOS-Adar_Weidman.pdf.

Many other interesting types of vulnerabilities found in regular expressions were discussed in a presentation by Will Drewry and Tavis Ormandy at the WOOT 2008 security conference (part of the 17th USENIX Security Symposium). Details are available in their paper, “Insecure Context Switching: Inoculating regular expressions for survivability,” which is located online at www.usenix.org/event/woot08/tech/full_papers/drewry/drewry_html/.

Summary

Different types of filtering devices can be used to protect Web applications. Both WAFs and client-side filters have filtering limitations which an attacker can exploit. Putting together many of the ideas and techniques covered in this book, we can see how a variety of filters can be bypassed and attacked. These attacks range from abusing cross-site scripting filters, which results in universal cross-site scripting, to performing denial-of-service attacks against poorly constructed regular expressions.
