Chapter 11. Touch

In This Chapter

• Using mouse events for tap and double tap gestures

• Understanding how touch events are promoted to mouse events

• Registering for touch notifications using the Touch.FrameReported event

• Using manipulation events to consolidate touch input from multiple fingers

• Using a RenderTransform to move, resize, and rotate a UIElement via touch

• Animating an element in response to a flick gesture

• Best practices when designing touch friendly interfaces

Windows Phone devices come equipped with capacitive touch screens that offer a smooth, accurate, multitouch-enabled experience. By adding touch and gesture support to your apps, you can greatly enhance the user experience.

You can handle touch input in your Silverlight for Windows Phone app in a number of ways, including the following:

• Mouse events

• TouchPoint class

• Manipulation events

• UIElement gesture events

• Silverlight Toolkit for Windows Phone gestures

Mouse events are an easy way to get basic touch support, and allow you to detect simple, one-finger gestures such as tap and double tap.

The TouchPoint class provides a low-level input system that you can use to respond to all touch activity in the UI. The TouchPoint class is used by the higher-level touch input systems.

Manipulation events are UIElement events used to handle more complex gestures, such as multitouch gestures and gestures that use inertia and velocity data.

UIElement and Toolkit gestures further consolidate the low-level touch API into a set of gesture-specific events and make it easy to handle complex single and multitouch gestures.

This chapter explores each of these approaches in detail. The chapter presents an example app demonstrating how to move, resize, rotate, and animate a UIElement using gestures and concludes by looking at the best practices for optimizing your user interfaces for touch input.

The Windows Phone Emulator does not support multitouch input using the mouse; therefore, multitouch apps must be tested on a development computer that supports touch input, or on an actual phone device.

Handling Touch with Mouse Events

Silverlight mouse events can be used to detect a simple, one-finger gesture. Mouse event handlers can be quickly added to your app and provide an easy way to get basic touch support.

For more complex gestures, additional code is required to translate manipulation values to detect the actual gesture type. As you see in later sections of this chapter, there are easier higher-level APIs for handling more complex gestures.

In this chapter, the term touch point is used to describe the point of contact between a finger and the device screen.

The UIElement class includes the following mouse events:

• MouseEnter—Raised when a touch point enters the bounding area of a UIElement.

• MouseLeave—Raised when the user taps and moves his finger outside the bounding area of the UIElement.

• MouseLeftButtonDown—Raised when the user touches a UIElement.

• MouseLeftButtonUp—Raised when a touch point is removed from the screen while it is over a UIElement (or while a UIElement holds mouse capture).

• MouseMove—Raised when the coordinate position of the touch point changes while over a UIElement (or while a UIElement holds mouse capture).

• MouseWheel—This event is not used on a touch-driven UI.

The following example responds to the MouseLeftButtonDown, MouseLeftButtonUp, and MouseLeave events to change the background color of a Border control. See the following excerpt from the MouseEventsView.xaml file:

<Grid x:Name="ContentPanel" Grid.Row="1" Margin="12,0,12,0">

<Border

Tap="HandleTap"

MouseLeftButtonDown="HandleLeftButtonDown"

MouseLeftButtonUp="HandleLeftButtonUp"

MouseLeave="HandleMouseLeave"

Height="100"

Width="200"

Background="{StaticResource PhoneAccentBrush}" />

</Grid>

The event handlers in the code-beside change the color of the Border (see Listing 11.1).

Listing 11.1. MouseEventsView Class

public partial class MouseEventsView : PhoneApplicationPage

{

readonly SolidColorBrush dragBrush = new SolidColorBrush(Colors.Orange);

readonly SolidColorBrush normalBrush;

public MouseEventsView()

{

InitializeComponent();

normalBrush = (SolidColorBrush)Resources["PhoneAccentBrush"];

}

void HandleLeftButtonDown(object sender, MouseButtonEventArgs e)

{

Border border = (Border)sender;

border.Background = dragBrush;

}

void HandleLeftButtonUp(object sender, MouseButtonEventArgs e)

{

Border border = (Border)sender;

border.Background = normalBrush;

}

void HandleMouseLeave(object sender, MouseEventArgs e)

{

Border border = (Border)sender;

border.Background = normalBrush;

}

}

The MouseButtonEventArgs class derives from MouseEventArgs, which in turn derives from RoutedEventArgs. Routed events are discussed in more detail later in this chapter.

While mouse events provide a simple way to respond to touch in your app, they do not come equipped to support complex gestures or multitouch, nor do they provide any built-in means for ascertaining more detailed touch information, such as touch velocity.

Fortunately, as you see later in the chapter, a number of alternative touch APIs do just that. For now though, turn your attention to the Touch and TouchPoint classes that represent the low-level touch API.

Touch and TouchPoint Classes

The low-level interface for touch in Silverlight is the TouchPoint class. Unlike mouse events, the TouchPoint class is designed exclusively for touch without a pointing device in mind. A TouchPoint instance represents a finger touching the screen.

TouchPoint has four read-only properties, described in the following list:

• Action—Represents the type of manipulation and can have one of the three following values:

• Down—Indicates that the user has made contact with the screen.

• Move—Indicates that the touch point has changed position.

• Up—Indicates that the touch point has been removed.

• Position—Retrieves the X and Y coordinate position of the touch point, as a System.Windows.Point. This point is relative to the top-left corner of the element beneath the touch point.

• Size—This is supposed to get the rectangular area that is reported as the touch-point contact area. The value reported on Windows Phone, however, always has a width and height of 1, making it of no use on the phone.

• TouchDevice—Retrieves the specific device type that produced the touch point. This property allows you to retrieve the UIElement beneath the touch point via its TouchDevice.DirectlyOver property. The TouchDevice.Id property allows you to distinguish between fingers touching the display.

The Touch class is an application-level service used to register for touch events. It contains a single public static member, the FrameReported event. This event is global for your app and is raised whenever a touch event occurs.

To register for touch notifications, you subscribe to the Touch.FrameReported event like so:

Touch.FrameReported += HandleFrameReported;

A handler for the FrameReported event accepts a TouchFrameEventArgs argument, as shown:

void HandleFrameReported(object sender, TouchFrameEventArgs args)

{

.../* method body */

}

A frame is a time slice where one or more touch events have occurred. When the FrameReported event is raised, there may be up to four TouchPoint objects.

TouchFrameEventArgs contains four public members, which are described in the following list:

• Timestamp property—An int value that indicates when the touch event occurred.

• GetPrimaryTouchPoint(UIElement relativeTo)—Retrieves the TouchPoint representing the first finger that touched the screen. A UIElement can be specified to make the TouchPoint object’s Position property relative to that element. If a UIElement is not specified (it is null), then the returned TouchPoint is relative to the top left of the page.

• GetTouchPoints(UIElement relativeTo)—Retrieves the collection of TouchPoints for the frame. The UIElement parameter works the same way as it does for GetPrimaryTouchPoint. The parameter does not restrict the set of TouchPoints returned; all are returned and only the Position of each TouchPoint is affected.

• SuspendMousePromotionUntilTouchUp()—Prevents touch events from turning into mouse events. See the following section for a more detailed explanation.
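
Because GetTouchPoints returns every touch point in the frame, a handler usually needs some per-finger bookkeeping keyed by TouchDevice.Id. The following is a minimal sketch of such bookkeeping, not code from the sample project. The TouchTracker class, the TrackedPoint struct, and the SketchTouchAction enum are hypothetical names introduced for illustration; the enum merely stands in for the TouchAction values so that the sketch compiles on its own.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the Down, Move, and Up values of TouchAction.
public enum SketchTouchAction { Down, Move, Up }

// Hypothetical value type holding the last known position of one finger.
public struct TrackedPoint
{
    public double X;
    public double Y;
}

// Hypothetical helper that remembers each active finger by its device id.
public class TouchTracker
{
    readonly Dictionary<int, TrackedPoint> positions
        = new Dictionary<int, TrackedPoint>();

    // Number of fingers currently known to be in contact with the screen.
    public int ActiveCount
    {
        get { return positions.Count; }
    }

    // Call once per touch point in a frame. In a FrameReported handler the
    // arguments would come from TouchPoint.TouchDevice.Id, TouchPoint.Action,
    // and TouchPoint.Position.
    public void Update(int deviceId, SketchTouchAction action, double x, double y)
    {
        if (action == SketchTouchAction.Up)
        {
            // The finger has left the screen; stop tracking it.
            positions.Remove(deviceId);
            return;
        }

        // Down and Move both record the latest position.
        positions[deviceId] = new TrackedPoint { X = x, Y = y };
    }

    // Retrieves the last recorded position for a finger, if it is still tracked.
    public bool TryGetPosition(int deviceId, out TrackedPoint point)
    {
        return positions.TryGetValue(deviceId, out point);
    }
}
```

A FrameReported handler could loop over e.GetTouchPoints(null) and pass each touch point's id, action, and position to Update. Keeping the bookkeeping in a plain class like this makes it possible to exercise the logic without a device.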

Mouse Event Promotion

With the advent of Windows Phone, Silverlight has transitioned from being a browser and desktop only technology with a primarily mouse-driven UI, to a mobile device technology with a touch-driven UI.

To allow controls that were originally designed to work with mouse events to continue to function, the touch system was engineered so that touch events are automatically turned into (promoted to) mouse events. If a touch event is not suspended using the SuspendMousePromotionUntilTouchUp method, then mouse events are automatically raised.

To prevent a touch event from being promoted to a mouse event, call the SuspendMousePromotionUntilTouchUp method within the FrameReported event handler, like so:

void HandleFrameReported(object sender, TouchFrameEventArgs e)

{

TouchPoint primaryTouchPoint = e.GetPrimaryTouchPoint(null);

if (primaryTouchPoint != null

&& primaryTouchPoint.Action == TouchAction.Down)

{

e.SuspendMousePromotionUntilTouchUp();

/* custom code */

}

}

The SuspendMousePromotionUntilTouchUp method can be called only when the TouchPoint.Action property is equal to TouchAction.Down; otherwise, an InvalidOperationException is thrown.

In most cases, calling SuspendMousePromotionUntilTouchUp is something you should avoid, because doing so prevents the functioning of any controls that rely solely on mouse events and those that have not been built using the touch-specific API.

Handling the Touch.FrameReported Event

The following example shows how to change the color of a Border control by responding to the Touch.FrameReported event. Within the TouchPointView XAML file is a named Border control as shown:

<Grid x:Name="ContentPanel" Grid.Row="1" Margin="12,0,12,0">

<Border x:Name="border"

Height="100"

Width="200"

Background="{StaticResource PhoneAccentBrush}" />

</Grid>

The code-beside file, TouchPointView.xaml.cs, subscribes to the Touch.FrameReported event within the page constructor. The event handler retrieves the primary touch point, and if the TouchPoint is above the Border, then the Border object’s Background is switched (see Listing 11.2).

Listing 11.2. TouchPointView Class

public partial class TouchPointView : PhoneApplicationPage

{

readonly SolidColorBrush directlyOverBrush

= new SolidColorBrush(Colors.Orange);

readonly Brush normalBrush;

public TouchPointView()

{

InitializeComponent();

normalBrush = border.Background;

Touch.FrameReported += HandleFrameReported;

}

void HandleFrameReported(object sender, TouchFrameEventArgs e)

{

TouchPoint primaryTouchPoint = e.GetPrimaryTouchPoint(null);

if (primaryTouchPoint == null

|| primaryTouchPoint.TouchDevice.DirectlyOver != border)

{

return;

}

if (primaryTouchPoint.Action == TouchAction.Down)

{

border.Background = directlyOverBrush;

}

else

{

border.Background = normalBrush;

}

}

}

Using the Touch and TouchPoint API provides you with the ability to respond to touch at a very low level. Subsequent sections of this chapter look at two higher level abstractions of the Touch and TouchPoint APIs, namely manipulation events and the Silverlight Toolkit for Windows Phone, beginning with manipulation events.

Manipulation Events

Manipulation events consolidate the touch activities of one or two fingers. Manipulation events combine individual touch point information, provided by the Touch.FrameReported event, and interpret them into a higher-level API with velocity, scaling, and translation information.

Moreover, unlike the Touch.FrameReported event, which provides touch notifications for your entire interface, a manipulation event is specific to the UIElement to which it is associated.

The manipulation events comprise three UIElement events, which are described in the following list:

• ManipulationStarted—Raised when the user touches the UIElement.

• ManipulationDelta—Raised when a second touch point is placed on the element, and when a touch input changes position. This event can occur multiple times during a manipulation. For example, if the user drags a finger across the screen, the ManipulationDelta event occurs multiple times during the finger’s movement.

• ManipulationCompleted—Raised when the user’s finger, or fingers, leaves the UIElement, and when any inertia applied to the element is complete.

These three events are routed events; their event arguments derive from the RoutedEventArgs class. In case you are not familiar with routed events, a routed event can be handled by an ancestor element in the visual tree. Routed events bubble upward through the visual tree until they are either handled, as indicated by the Handled property of the event arguments, or reach the root element of the visual tree. This means that you can subscribe to a manipulation event at, for example, the page level, which gives you the opportunity to handle manipulation events for all elements in the page.

As you might expect, a manipulation begins with the ManipulationStarted event, followed by zero or more ManipulationDelta events, and then a single ManipulationCompleted event.

The event arguments for all three manipulation events have the following shared properties:

• OriginalSource (provided by the RoutedEventArgs base class)—This is the object that raised the manipulation event.

• ManipulationContainer—This is the topmost enabled UIElement being touched. Touch points on different UIElements provide a separate succession of manipulation events and are distinguished by the ManipulationContainer property. The OriginalSource and ManipulationContainer properties are in most cases the same. Internally, ManipulationContainer is assigned to the OriginalSource property when a manipulation event is raised.

• ManipulationOrigin—This property, of type Point, indicates the location of the touch point relative to the top-left corner of the ManipulationContainer element. If two touch points exist on the element, then the ManipulationOrigin property indicates the middle position between the two points.

• Handled—A property of type bool that allows you to halt the bubbling of the routed event up the visual tree.

Handling Manipulation Events

When a manipulation begins, the ManipulationStarted event is raised. The event handler accepts a ManipulationStartedEventArgs parameter, as shown:

void HandleManipulationStarted(object sender, ManipulationStartedEventArgs e)

{

/* method body */

}

Contrary to the low-level TouchPoint API, there is no need to store the id of the touch point to track its motion. In fact, the manipulation events do not offer individual touch point information. If you need that information, then the low-level TouchPoint API may be more suitable.

During manipulation, the ManipulationDelta event is raised when a touch point is added to the ManipulationContainer element, or when the position of a touch point on the element changes. The event handler accepts a ManipulationDeltaEventArgs parameter, as shown:

void HandleManipulationDelta(object sender, ManipulationDeltaEventArgs e)

{

/* method body */

}

ManipulationDeltaEventArgs provides you with the most recent and the accumulated manipulation data. The following is a list of its properties, which have not yet been covered:

• CumulativeManipulation—Gets the accumulated changes of the current manipulation, as a ManipulationDelta instance. ManipulationDelta has the following two properties:

• Scale—A Point indicating the horizontal and vertical scale amounts, relevant during multitouch manipulation.

• Translation—A Point indicating the horizontal and vertical positional offset.

• DeltaManipulation—Gets the most recent changes of the current manipulation, as a ManipulationDelta.

• IsInertial—Gets whether the ManipulationDelta event was raised during inertia, that is, after the finger has lost contact with the element. In my experience the value of this property is always false during the ManipulationDelta event. This property is of more use during handling of the ManipulationCompleted event, as you soon see.

• Velocities—Gets the rates of the most recent changes to the manipulation. This property is of type ManipulationVelocities, which is a class with the following two properties:

• ExpansionVelocity—Gets a Point representing the rate at which the manipulation was resized.

• LinearVelocity—Gets a Point representing the speed of the linear motion.

Inertia is applied automatically to a manipulation and is based on the velocity of the manipulation. Both the ManipulationStartedEventArgs and ManipulationDeltaEventArgs classes contain a Complete method, which allows you to forcibly finish the manipulation event sequence; calling it raises the ManipulationCompleted event and prevents the application of inertia.

The ManipulationCompleted event handler accepts a ManipulationCompletedEventArgs parameter, as shown:

void HandleManipulationCompleted(

object sender, ManipulationCompletedEventArgs e)

{

/* method body */

}

ManipulationCompletedEventArgs provides you with the final velocities and overall manipulation data. The following is a list of its properties, which have not yet been covered:

• FinalVelocities—Gets the final expansion and linear velocities for the manipulation.

• IsInertial—Gets whether the ManipulationCompleted event occurred during inertia. If the manipulation consisted of a single touch point, a value of true is indicative of a flick gesture.

• TotalManipulation—Gets a ManipulationDelta object containing two Points representing the total scale and translation values for the manipulation.
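
To make the relationship between IsInertial, FinalVelocities, and a flick more concrete, the following minimal sketch classifies a completed manipulation. It is an illustration under stated assumptions rather than the framework's own logic: the FlickSketch class and its minSpeed threshold are hypothetical, and the threshold is expressed in whatever units the velocity is reported in.

```csharp
using System;

// Hypothetical helper for deciding whether a completed manipulation
// looks like a flick.
public static class FlickSketch
{
    // isInertial: the value of ManipulationCompletedEventArgs.IsInertial.
    // velocityX, velocityY: the X and Y components of
    //     FinalVelocities.LinearVelocity.
    // minSpeed: an assumed, app-specific threshold; tune it by experiment.
    public static bool IsFlick(
        bool isInertial, double velocityX, double velocityY, double minSpeed)
    {
        if (!isInertial)
        {
            // The finger came to rest before it was lifted.
            return false;
        }

        // Combine the two velocity components into a single speed.
        double speed = Math.Sqrt(velocityX * velocityX + velocityY * velocityY);
        return speed >= minSpeed;
    }
}
```

In a ManipulationCompleted handler, the call might look like FlickSketch.IsFlick(e.IsInertial, e.FinalVelocities.LinearVelocity.X, e.FinalVelocities.LinearVelocity.Y, 500), where 500 is an arbitrary starting value.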

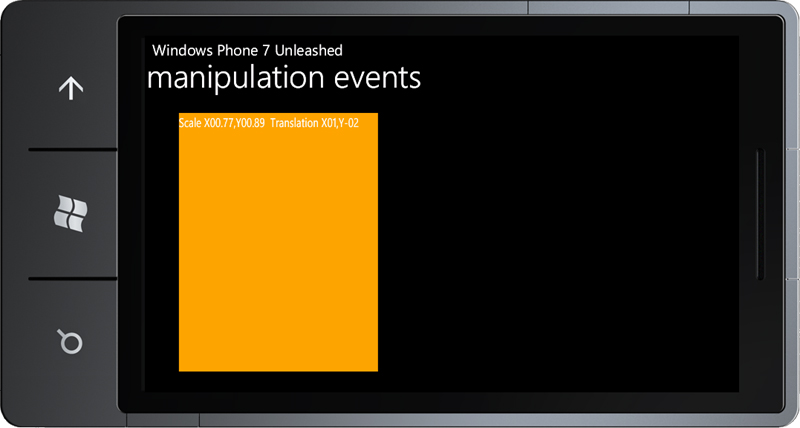

Manipulation Events Example

The following example illustrates how to move and scale a UIElement using the various manipulation events.

Within the ManipulationEventsView XAML file, there is a Border control defined as shown:

<StackPanel x:Name="ContentPanel" Grid.Row="1" Margin="12,0,12,0">

<Border

Height="400"

Width="400"

Background="{StaticResource PhoneAccentBrush}"

ManipulationStarted="HandleManipulationStarted"

ManipulationDelta="HandleManipulationDelta"

ManipulationCompleted="HandleManipulationCompleted">

<TextBlock x:Name="TextBlock_Message" />

<Border.RenderTransform>

<CompositeTransform x:Name="compositeTransform"/>

</Border.RenderTransform>

</Border>

</StackPanel>

When the user touches the Border, the ManipulationStarted event is raised, calling the code-beside handler, which sets the background color of the Border. As the user moves her fingers, the ManipulationDelta event is raised repeatedly; each time, the location and scale of the Border are adjusted using the CompositeTransform (see Listing 11.3).

Listing 11.3. ManipulationEventsView Class

public partial class ManipulationEventsView : PhoneApplicationPage

{

readonly SolidColorBrush startManipulationBrush

= new SolidColorBrush(Colors.Orange);

Brush normalBrush;

public ManipulationEventsView()

{

InitializeComponent();

}

void HandleManipulationStarted(

object sender, ManipulationStartedEventArgs e)

{

Border border = (Border)sender;

normalBrush = border.Background;

border.Background = startManipulationBrush;

}

void HandleManipulationDelta(object sender, ManipulationDeltaEventArgs e)

{

compositeTransform.TranslateX += e.DeltaManipulation.Translation.X;

compositeTransform.TranslateY += e.DeltaManipulation.Translation.Y;

if (e.DeltaManipulation.Scale.X > 0

&& e.DeltaManipulation.Scale.Y > 0)

{

compositeTransform.ScaleX *= e.DeltaManipulation.Scale.X;

compositeTransform.ScaleY *= e.DeltaManipulation.Scale.Y;

}

}

void HandleManipulationCompleted(

object sender, ManipulationCompletedEventArgs e)

{

Border border = (Border)sender;

border.Background = normalBrush;

TextBlock_Message.Text = string.Empty;

}

}

Pinching or stretching the Border modifies the CompositeTransform scale values, which resizes the control. A tap and drag gesture modifies the CompositeTransform translation values, which repositions the control (see Figure 11.1).

Figure 11.1. A Border is moved and resized in response to manipulation events.
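
Listing 11.3 multiplies the scale by every delta it receives, so repeated pinching can shrink the Border until it is too small to touch, or stretch it well beyond the screen. One possible refinement, sketched here under assumptions, is to clamp the resulting scale. The ScaleSketch class and its min and max parameters are hypothetical and are not part of the sample project.

```csharp
using System;

// Hypothetical helper that applies a scale delta while keeping the
// result within assumed bounds.
public static class ScaleSketch
{
    // currentScale: the present ScaleX or ScaleY of the transform.
    // deltaScale: the matching component of DeltaManipulation.Scale.
    // min, max: assumed limits chosen by the app.
    public static double ApplyScale(
        double currentScale, double deltaScale, double min, double max)
    {
        // Ignore factors of zero or less, mirroring the check in Listing 11.3.
        if (deltaScale <= 0)
        {
            return currentScale;
        }

        double next = currentScale * deltaScale;

        // Clamp the new scale to the allowed range.
        return Math.Max(min, Math.Min(max, next));
    }
}
```

Inside HandleManipulationDelta, the assignment could then read compositeTransform.ScaleX = ScaleSketch.ApplyScale(compositeTransform.ScaleX, e.DeltaManipulation.Scale.X, 0.5, 3.0), with the same pattern for ScaleY. The limits 0.5 and 3.0 are arbitrary examples.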

UIElement Touch Gesture Events

Gestures are a high-level way of interpreting touch input data and include a set of common motions, such as tapping, flicking, and pinching. Controls, such as the WebBrowser, handle gestures such as the pinch gesture internally, allowing the user to pan and zoom in and out of content. By responding to gestures you can provide a natural and immersive experience for your users.

Support for basic single touch gestures has been incorporated into the Silverlight for Windows Phone SDK. Support for more complex gestures is provided by the Silverlight for Windows Phone Toolkit, discussed in the next section.

The sample code for this section is located in the UIElementTouchEventsView.xaml and UIElementTouchEventsView.xaml.cs files, in the Touch directory of the WindowsPhone7Unleashed.Examples project.

The UIElement class comes equipped to handle the following three touch gestures:

• Tap

• Double tap

• Hold

The UIElement includes three corresponding routed events: Tap, DoubleTap, and Hold.

The three gesture events can be subscribed to in XAML, as the following excerpt demonstrates:

<Grid x:Name="ContentPanel" Grid.Row="1" Margin="12,0,12,0">

<Border

Tap="HandleTap"

DoubleTap="HandleDoubleTap"

Hold="HandleHold"

Height="100"

Width="200"

Background="{StaticResource PhoneChromeBrush}">

<TextBlock x:Name="textBlock"

HorizontalAlignment="Center" VerticalAlignment="Center"

Style="{StaticResource PhoneTextLargeStyle}"/>

</Border>

</Grid>

Figure 11.2 shows the sample page responding to the double tap gesture.

Figure 11.2. UIElementTouchEventsView page

Event handlers for all three events share the same signature and accept a GestureEventArgs object. GestureEventArgs contains a Boolean Handled property, which allows you to stop the event from continuing to bubble up the visual tree, and a GetPosition method that allows you to retrieve the location coordinates of where the touch occurred.

Each gesture supported by UIElement is examined in the following sections.

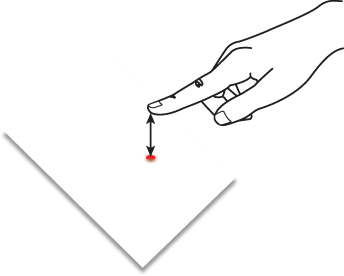

Tap Gesture

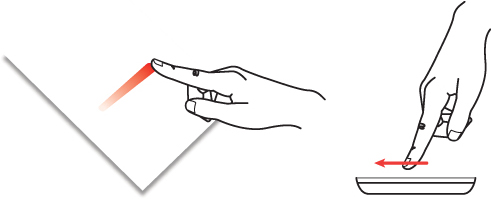

The simplest of all the gestures, a tap occurs when a finger touches the screen momentarily (see Figure 11.3).

Figure 11.3. Tap gesture

This gesture is analogous to a single-click performed with a mouse. The tap gesture can be broken down into the following two parts:

• Finger down provides touch indication.

• Finger up executes the action.

The Tap event is raised when the user performs a tap gesture. If the user’s finger remains in contact with the display for longer than one second, the Tap event is not raised.

An event handler for the Tap event is shown in the following excerpt:

void HandleTap(object sender, GestureEventArgs e)

{

textBlock.Text = "tap";

}

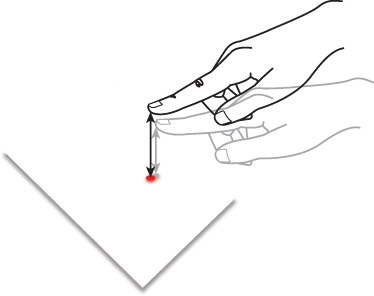

Double Tap Gesture

The double tap gesture occurs when a finger quickly touches the screen twice (see Figure 11.4). This gesture is analogous to a double-click performed with a mouse.

Figure 11.4. Double tap gesture

The double tap is primarily designed to be used to toggle between the in and out zoom states of a control.

An event handler for the DoubleTap event is shown in the following excerpt:

void HandleDoubleTap(object sender, GestureEventArgs e)

{

textBlock.Text = "double tap";

}

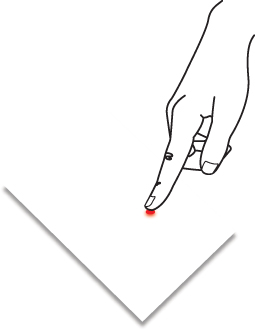

Hold Gesture

The hold gesture is performed by touching the screen while continuing contact for a period of time (see Figure 11.5).

Figure 11.5. Hold gesture

The UIElement.Hold event is raised when the user touches the screen for one second.

An event handler for the Hold event is shown in the following excerpt:

void HandleHold(object sender, GestureEventArgs e)

{

textBlock.Text = "hold";

}

As an aside, the UIElement Hold event is used by the Silverlight Toolkit’s ContextMenu component. When used in this way, the hold gesture mimics the right-click of a mouse.

UIElement and Silverlight Toolkit gesture events (discussed in the next section) are raised only if the UIElement is detected beneath the touch point. Make sure that the Background property of the UIElement is not null. If a background is not defined, touch events pass through the host control, and gestures are not detected. To achieve a see-through background, while still maintaining gesture support, set the background property of the UIElement to Transparent.

Silverlight Toolkit Gestures

Silverlight for Windows Phone Toolkit, introduced in Chapter 9, “Silverlight Toolkit Controls,” abstracts low-level touch events and further consolidates touch event data into a set of events representing the various single and multitouch gesture types.

Toolkit gestures harness the Touch.FrameReported event (described in the previous section “Touch and TouchPoint Classes”) to provide high-level gesture information.

Unless you require low-level touch point data, UIElement and Toolkit gestures are the recommended way to add single and multitouch capabilities to your apps.

There is some overlap between the UIElement touch gesture events and the Toolkit gestures API; both allow you to handle tap, double tap, and hold gestures.

The first release of the Windows Phone SDK did not include baked-in support for these three gestures, and they were subsequently added with the 7.1 release of the SDK. In the meantime, the Toolkit had already included gesture support, so the presence of these gestures in the Toolkit remains as somewhat of a legacy feature. It is therefore recommended that you use the UIElement touch gesture events, rather than the Toolkit gesture API, for the tap, double tap, and hold gestures.

Getting Started with Toolkit Gestures

Unlike the other techniques seen in this chapter, Toolkit gestures are provided as a separate assembly, as part of the Silverlight for Windows Phone Toolkit package.

You can obtain the Silverlight for Windows Phone Toolkit from the Toolkit website on CodePlex (http://silverlight.codeplex.com/). See Chapter 9 for more information.

The two main classes for working with Toolkit gestures are the GestureService and GestureListener classes. GestureService is used only to attach a GestureListener to a UIElement. Like manipulation events, Toolkit gestures are used in conjunction with a UIElement. Once attached, the GestureListener monitors the element for gestures that the element can support, such as tap, hold, pinch, and flick.

GestureListener allows you to specify handlers for each gesture type. The following example shows a Border control with subscriptions to these events via the attached GestureListener:

<Border Background="{StaticResource PhoneAccentBrush}">

<toolkit:GestureService.GestureListener>

<toolkit:GestureListener

GestureBegin="HandleGestureBegin"

GestureCompleted="HandleGestureCompleted"

Tap="HandleTap"

DoubleTap="HandleDoubleTap"

Hold="HandleHold"

DragStarted="HandleDragStarted"

DragDelta="HandleDragDelta"

DragCompleted="HandleDragCompleted"

Flick="HandleFlick"

PinchStarted="HandlePinchStarted"

PinchDelta="HandlePinchDelta"

PinchCompleted="HandlePinchCompleted">

</toolkit:GestureListener>

</toolkit:GestureService.GestureListener>

</Border>

The GestureListener class is designed for Silverlight’s event-driven model. The class effectively wraps the low-level Silverlight touch mechanism to perform automatic conversion of the Touch.FrameReported events into unique Silverlight events for each gesture type.

GestureListener Events in Detail

This section looks at each gesture and the events that correspond to each.

Tap Gesture

When a tap gesture is detected, then the GestureListener.Tap event is raised. The event handler accepts a GestureEventArgs parameter, as shown:

void HandleTap(object sender, GestureEventArgs e)

{

/* method body. */

}

The GestureListener events are quasi routed events. That is, they behave like routed events, yet their event arguments do not inherit from the RoutedEventArgs class. Like the RoutedEventArgs class, the GestureEventArgs class includes an OriginalSource property that allows you to retrieve a reference to the object that raised the event, which may differ from the sender argument provided to the event handler.

The routing mechanism for gesture events also allows you to handle any or all of the gesture events at a higher level in the visual tree. For example, to listen for a Tap event that may occur in a child element, you can subscribe to the Tap event at the page level, like so:

<phone:PhoneApplicationPage

x:Class="DanielVaughan.WindowsPhone7Unleashed.Examples.GesturesView"

... >

<toolkit:GestureService.GestureListener>

<toolkit:GestureListener Tap="HandleTapAtPageLevel" />

</toolkit:GestureService.GestureListener>

...

</phone:PhoneApplicationPage>

The routing mechanism gives you the power to control whether an event should continue to bubble up the visual tree. Set the GestureEventArgs.Handled property to true to prevent the event from being handled at a higher level (in this case by the page level handler), as shown in the following example:

void HandleTap(object sender, GestureEventArgs e)

{

e.Handled = true;

}

By setting the Handled property to true, the HandleTapAtPageLevel method is not called.

The GestureEventArgs class is the base class for other gesture event arguments as shown later in this section.

Double Tap Gesture

When a double tap gesture is detected, the GestureListener.DoubleTap event is raised. The event handler accepts a GestureEventArgs parameter, as shown:

void HandleDoubleTap(object sender, GestureEventArgs e)

{

/* method body. */

}

Hold Gesture

When a hold gesture is detected, then the GestureListener.Hold event is raised. The handler accepts a GestureEventArgs parameter, as shown:

void HandleHold(object sender, GestureEventArgs e)

{

/* method body. */

}

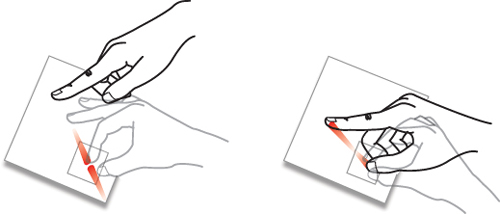

Drag Gesture

The drag gesture is performed by touching the screen and moving the finger in any direction while still in contact with the screen (see Figure 11.6).

Figure 11.6. Drag gesture

The drag gesture is normally used to reorder items in a list or to reposition an element by direct manipulation.

The following three events are used to detect and coordinate activities during a drag gesture:

• DragStarted—Raised when the drag gesture begins, which is as soon as motion is detected following a touch event.

• DragDelta—Raised while a drag gesture is underway and the location of the touch point changes.

• DragCompleted—Raised on touch release after a drag, or when a second touch point is added.

When a drag gesture is detected, the GestureListener.DragStarted event is raised. The event handler accepts a DragStartedGestureEventArgs parameter, as shown:

void HandleDragStarted(object sender, DragStartedGestureEventArgs e)

{

/* method body. */

}

DragStartedGestureEventArgs provides you with the initial direction of the drag gesture via its Direction property, which can be either Horizontal or Vertical depending on the predominant direction.

As the user moves a finger during a drag gesture, the GestureListener.DragDelta event is raised. The event handler accepts a DragDeltaGestureEventArgs parameter, as shown:

void HandleDragDelta(object sender, DragDeltaGestureEventArgs e)

{

/* method body. */

}

The DragDeltaGestureEventArgs parameter provides the difference between the current location of the touch point and its previous location. The DragDeltaGestureEventArgs has the following three properties:

• Direction—Provides the predominant direction of the drag gesture, either Horizontal or Vertical.

• HorizontalChange—The horizontal (x axis) difference in pixels between the current touch point location and the previous location. The value of this property is negative if the drag occurs from right to left.

• VerticalChange—The vertical (y axis) difference in pixels between the current touch point location and the previous location. The value of this property is negative if the drag occurs in an upward direction.
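The per-event nature of these values can be sketched in plain C#. The DragTracker class below is a hypothetical helper, not part of the Toolkit; it assumes you pass it the HorizontalChange and VerticalChange values from each DragDelta event and shows how the individual deltas add up to the total displacement of the drag.

```csharp
using System;

// Hypothetical helper (not a Toolkit type): sums the per-event drag deltas.
public sealed class DragTracker
{
    public double TotalX { get; private set; }
    public double TotalY { get; private set; }

    // Call once per DragDelta event with e.HorizontalChange and e.VerticalChange.
    public void Accumulate(double horizontalChange, double verticalChange)
    {
        TotalX += horizontalChange;
        TotalY += verticalChange;
    }

    // True when the accumulated movement is at least as horizontal as it is vertical.
    public bool IsMostlyHorizontal
    {
        get { return Math.Abs(TotalX) >= Math.Abs(TotalY); }
    }
}
```

For instance, two right-to-left deltas of -5 and -3 pixels accumulate to a TotalX of -8, reflecting the negative sign convention described above.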

When the user completes the drag gesture by removing the touch point, the GestureListener.DragCompleted event is raised. This event provides the opportunity to conclude any activities that were being performed during the drag gesture. The handler accepts a DragCompletedGestureEventArgs parameter, as shown:

void HandleDragCompleted(object sender, DragCompletedGestureEventArgs e)
{
    /* method body. */
}

In addition to the data provided by the DragDeltaGestureEventArgs, the DragCompletedGestureEventArgs argument includes a VerticalVelocity property and a HorizontalVelocity property that measure the velocity of the drag. Both values are often zero, because the user commonly stops the dragging motion before removing the touch point.

The example presented later in this section explores the drag gesture in greater detail and shows how the drag gesture can be used to change the location of UIElements on a page.

Flick Gesture

A flick gesture is performed by dragging a finger across the screen and lifting the finger without stopping (see Figure 11.7). A flick gesture normally moves content from one area to another.

Figure 11.7. Flick gesture

When a flick gesture is detected, the GestureListener.Flick event is raised. The event handler accepts a FlickGestureEventArgs parameter, as shown:

void HandleFlick(object sender, FlickGestureEventArgs e)
{
    /* method body. */
}

FlickGestureEventArgs provides you with the direction and velocity of the flick gesture via the following four properties:

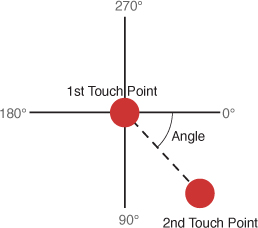

• Angle—A double value, measured in degrees, indicating the angle of the flick. A downward flick results in an angle between 0 and 180 degrees; an upward flick in an angle between 180 and 360 degrees (see Figure 11.8). This value is calculated using the horizontal and vertical velocity of the flick.

Figure 11.8. Origin of the FlickGestureEventArgs.Angle property

• Direction—An Orientation value of either Horizontal or Vertical, indicating whether the flick was more horizontal than vertical, or vice versa.

• HorizontalVelocity—A double value indicating the horizontal (X) velocity of the flick.

• VerticalVelocity—A double value indicating the vertical (Y) velocity of the flick.
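The relationship between the velocity values and the Angle property can be illustrated with a small calculation. The following is a sketch only: the FlickMath class and its method are hypothetical, and the formula is one plausible calculation that agrees with the ranges described above (0 to 180 degrees for a downward flick, 180 to 360 degrees for an upward flick), not necessarily the Toolkit's own implementation. It assumes screen coordinates, in which y increases downward.

```csharp
using System;

// Hypothetical helper: derives a flick angle from its velocity components.
public static class FlickMath
{
    // Returns an angle in degrees in the range [0, 360), measured from the
    // positive x axis toward the positive y axis (downward on screen).
    public static double AngleFromVelocity(
        double horizontalVelocity, double verticalVelocity)
    {
        double degrees =
            Math.Atan2(verticalVelocity, horizontalVelocity) * 180.0 / Math.PI;

        // Atan2 yields negative angles for upward motion; shift them into [180, 360).
        return degrees < 0 ? degrees + 360.0 : degrees;
    }
}
```

Under these assumptions, a flick straight down produces an angle of about 90 degrees and a flick straight up an angle of about 270 degrees.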

In the example code, presented later in this section, you see how to animate a UIElement in response to a flick gesture.

Gesture events are not necessarily mutually exclusive. For example, when a flick occurs, the GestureListener.DragCompleted event is raised along with the GestureListener.Flick event.

Pinch Gesture

A pinch gesture is performed by pressing two fingers on the screen and moving either or both fingers together (see Figure 11.9). A stretch gesture is performed by moving the fingers apart. Both gestures are detected using the GestureListener.Pinch event.

Figure 11.9. Pinch and stretch gestures

The pinch gesture is the only multitouch gesture supported by the GestureListener and is typically used to zoom in or out of a page or element.

The following three events are used to detect and coordinate activities during a pinch gesture:

• PinchStarted—Raised when the pinch gesture begins, and occurring as soon as motion is detected when at least two fingers are touching the screen

• PinchDelta—Raised while a pinch gesture is underway and the location of either touch point changes

• PinchCompleted—Raised when either touch point is released after a pinch

When a pinch gesture is detected, the GestureListener.PinchStarted event is raised. The event handler accepts a PinchStartedGestureEventArgs parameter, as shown:

void HandlePinchStarted(object sender, PinchStartedGestureEventArgs e)
{
    /* method body. */
}

PinchStartedGestureEventArgs provides you with the initial angle and distance between the two touch points via the following two properties:

• Angle—A double value, measured in degrees, indicating the angle from the first touch point, the first to make contact with the screen, to the second touch point (see Figure 11.10)

Figure 11.10. Determining the pinch angle

• Distance—A double value indicating the distance in pixels between the two touch points

As the user moves either finger during a pinch gesture, the GestureListener.PinchDelta event is raised. The handler accepts a PinchGestureEventArgs parameter, as shown:

void HandlePinchDelta(object sender, PinchGestureEventArgs e)
{
    /* method body. */
}

The PinchGestureEventArgs argument provides the distance and angle of the touch points compared to the original touch points that were registered when the pinch gesture began. PinchGestureEventArgs has the following two properties:

• DistanceRatio—Provides the ratio of the current distance between touch points divided by the original distance between the touch points

• TotalAngleDelta—Provides the difference between the angles of the current touch positions and the original touch positions
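To make the two properties concrete, the following sketch computes equivalent values from raw touch coordinates. The PinchMath class and its method names are hypothetical and are not Toolkit APIs; the sketch simply applies the definitions given above, assuming the original distance is nonzero.

```csharp
using System;

// Hypothetical helper: reproduces the pinch values from raw touch coordinates.
public static class PinchMath
{
    // Straight-line distance in pixels between two touch points.
    public static double Distance(double x1, double y1, double x2, double y2)
    {
        double dx = x2 - x1;
        double dy = y2 - y1;
        return Math.Sqrt(dx * dx + dy * dy);
    }

    // Angle in degrees from the first touch point to the second.
    public static double Angle(double x1, double y1, double x2, double y2)
    {
        return Math.Atan2(y2 - y1, x2 - x1) * 180.0 / Math.PI;
    }

    // Current distance divided by the distance when the pinch began.
    public static double DistanceRatio(double originalDistance, double currentDistance)
    {
        return currentDistance / originalDistance;
    }

    // Difference between the current angle and the angle when the pinch began.
    public static double TotalAngleDelta(double originalAngle, double currentAngle)
    {
        return currentAngle - originalAngle;
    }
}
```

A ratio greater than 1 indicates a stretch (the fingers have moved apart), and a ratio less than 1 indicates a pinch. For example, fingers that start 100 pixels apart and end 150 pixels apart give a ratio of 1.5.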

The PinchGestureEventArgs derives from MultiTouchGestureEventArgs, which also provides a GetPosition method that allows you to determine the position of the first or second touch point relative to a specified UIElement. The method accepts a UIElement and an int value indicating the index of a touch point, either 0 or 1.

When the user completes the pinch gesture by removing either or both touch points, the GestureListener.PinchCompleted event is raised. This event provides the opportunity to conclude any activities that were being performed during the pinch gesture, such as finalizing the locations of elements. The handler accepts a PinchGestureEventArgs parameter, as shown:

void HandlePinchCompleted(object sender, PinchGestureEventArgs e)
{
    /* method body. */
}

The PinchGestureEventArgs argument provides the same information as described for the GestureListener.PinchDelta event.

GestureBegin and GestureCompleted Events

In addition to the gesture-specific events, two events are raised before and after every gesture event. These are the GestureBegin and GestureCompleted events. Handlers for these events resemble the following:

void HandleGestureBegin(object sender, GestureEventArgs e)
{
    /* method body. */
}

void HandleGestureCompleted(object sender, GestureEventArgs e)
{
    /* method body. */
}

Gesture Sample Code

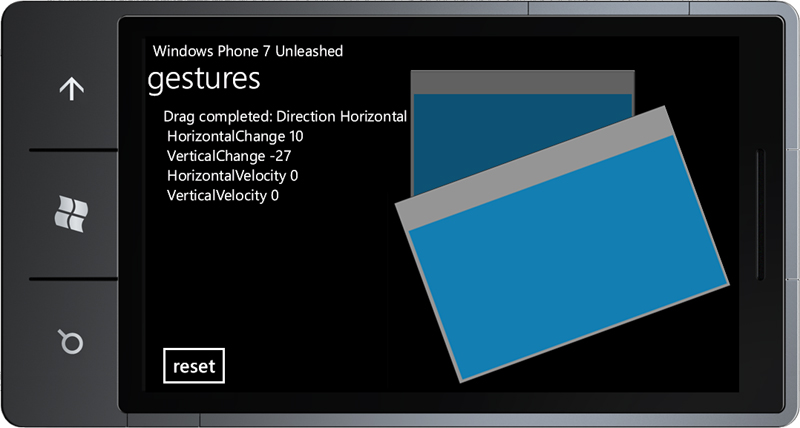

This section looks at the GesturesView page in the downloadable sample code and demonstrates the various events of the GestureListener class. You see how to move, rotate, and resize a UIElement using gestures. You also see how to provide an animation that responds to a flick gesture to send a UIElement hurtling across a page.

The view contains a Border. Within the Border we attach the GestureListener and subscribe to its various events:

<Grid x:Name="ContentPanel" Grid.Row="1" Margin="12,0,12,0">
    <TextBlock x:Name="messageBlock"
               Style="{StaticResource PhoneTextNormalStyle}"
               VerticalAlignment="Top" />
    <Button Content="reset" Click="Button_Click"
            VerticalAlignment="Bottom"
            HorizontalAlignment="Left" />
    <Border x:Name="border"
            Width="300" Height="200"
            BorderBrush="{StaticResource PhoneBorderBrush}"
            BorderThickness="4,32,4,4"
            Background="{StaticResource PhoneAccentBrush}"
            RenderTransformOrigin="0.5,0.5"
            Opacity=".8"
            CacheMode="BitmapCache">
        <Border.RenderTransform>
            <CompositeTransform x:Name="compositeTransform"/>
        </Border.RenderTransform>
        <toolkit:GestureService.GestureListener>
            <toolkit:GestureListener
                Tap="HandleTap"
                DoubleTap="HandleDoubleTap"
                Hold="HandleHold"
                DragStarted="HandleDragStarted"
                DragDelta="HandleDragDelta"
                DragCompleted="HandleDragCompleted"
                Flick="HandleFlick"
                PinchStarted="HandlePinchStarted"
                PinchDelta="HandlePinchDelta"
                PinchCompleted="HandlePinchCompleted">
            </toolkit:GestureListener>
        </toolkit:GestureService.GestureListener>
    </Border>
</Grid>

A CompositeTransform is used to change the position, size, and rotation of the Border.

The following sections walk through each gesture event and show how the Border control is manipulated in response.

Handling the Tap, DoubleTap, and Hold Events

When the user performs a tap gesture, the HandleTap method in the code-beside is called. This method resets the CompositeTransform translate values to 0, which returns the Border to its original location.

void HandleTap(object sender, GestureEventArgs e)
{
    compositeTransform.TranslateX = compositeTransform.TranslateY = 0;
}

When the user performs a double tap gesture, the HandleDoubleTap method is called, which resets the amount of scaling applied to the Border, returning it to its original size.

void HandleDoubleTap(object sender, GestureEventArgs e)
{
    compositeTransform.ScaleX = compositeTransform.ScaleY = 1;
}

When a hold gesture is performed, the HandleHold method is called. This, in turn, calls the ResetPosition method, resetting the size and location of the Border.

void HandleHold(object sender, GestureEventArgs e)
{
    ResetPosition();
}

void ResetPosition()
{
    compositeTransform.TranslateX = compositeTransform.TranslateY = 0;
    compositeTransform.ScaleX = compositeTransform.ScaleY = 1;
    compositeTransform.Rotation = 0;
}

Dragging the Border Control

When a drag gesture is performed, the HandleDragStarted method is called. This method changes the background of the Border, like so:

void HandleDragStarted(object sender, DragStartedGestureEventArgs e)
{
    border.Background = dragBrush;
}

An event handler for the DragDelta event moves the element by the drag amount, via the translation properties of the CompositeTransform:

void HandleDragDelta(object sender, DragDeltaGestureEventArgs e)
{
    compositeTransform.TranslateX += e.HorizontalChange;
    compositeTransform.TranslateY += e.VerticalChange;
}

When the drag gesture completes, the DragCompleted event handler resets the Border background:

void HandleDragCompleted(object sender, DragCompletedGestureEventArgs e)
{
    border.Background = normalBrush;
}

Rotating and Scaling in Response to a Pinch Gesture

When a pinch gesture is detected, the PinchStarted event handler records the amount of rotation currently applied to the element, along with the scale of the element:

double initialAngle;
double initialScale;

void HandlePinchStarted(object sender, PinchStartedGestureEventArgs e)
{
    border.Background = pinchBrush;
    initialAngle = compositeTransform.Rotation;
    initialScale = compositeTransform.ScaleX;
}

A change in the pinch gesture raises the PinchDelta event, at which point the rotation is applied to the CompositeTransform, and the element is scaled up or down using the PinchGestureEventArgs.DistanceRatio property. Because TotalAngleDelta and DistanceRatio are relative to the start of the gesture, they are combined with the values recorded in HandlePinchStarted rather than with the current transform values:

void HandlePinchDelta(object sender, PinchGestureEventArgs e)
{
    compositeTransform.Rotation = initialAngle + e.TotalAngleDelta;
    compositeTransform.ScaleX
        = compositeTransform.ScaleY = initialScale * e.DistanceRatio;
}

The completion of a pinch gesture sees the border’s background restored, as shown:

void HandlePinchCompleted(object sender, PinchGestureEventArgs e)
{
    border.Background = normalBrush;
}

Flick Gesture Animation

This section presents a technique for animating a UIElement in response to a flick gesture. When a flick occurs, we create a Storyboard with a timeline animation, which performs a translation on the UIElement.

The detection of a flick gesture causes the Flick event handler, named HandleFlick, to be called. This method calculates a destination point for the border control based on the velocity of the flick. It then creates an animation for the border using the custom AddTranslationAnimation method. See the following excerpt:

const double brakeSpeed = 10;

void HandleFlick(object sender, FlickGestureEventArgs e)
{
    Point currentPoint = new Point(
        (double)compositeTransform.GetValue(
            CompositeTransform.TranslateXProperty),
        (double)compositeTransform.GetValue(
            CompositeTransform.TranslateYProperty));

    double toX = currentPoint.X + e.HorizontalVelocity / brakeSpeed;
    double toY = currentPoint.Y + e.VerticalVelocity / brakeSpeed;
    Point destinationPoint = new Point(toX, toY);

    var storyboard = new Storyboard { FillBehavior = FillBehavior.HoldEnd };
    AddTranslationAnimation(
        storyboard, border, currentPoint, destinationPoint,
        new Duration(TimeSpan.FromMilliseconds(500)),
        new CubicEase {EasingMode = EasingMode.EaseOut});

    storyboard.Begin();
}
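The destination calculation in HandleFlick can be isolated as a worked example. The FlickDestination class below is a hypothetical stand-in that mirrors the toX and toY arithmetic from the excerpt; the velocity values mentioned afterward are made up for illustration.

```csharp
// Hypothetical helper mirroring the destination arithmetic in HandleFlick.
public static class FlickDestination
{
    // Projects a coordinate forward by the flick velocity, damped by brakeSpeed.
    // A larger brakeSpeed produces a shorter travel distance.
    public static double Project(double current, double velocity, double brakeSpeed)
    {
        return current + velocity / brakeSpeed;
    }
}
```

With a brakeSpeed of 10, an element at TranslateX 50 that is flicked with a horizontal velocity of 2000 is sent to 50 + 2000 / 10 = 250. A negative velocity, such as -1500 from a TranslateX of 0, sends the element in the opposite direction, to -150.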

The static method AddTranslationAnimation creates two DoubleAnimation objects: one for the horizontal axis, the other for the vertical axis. Each animation is assigned to the border’s CompositeTransform, as shown:

static void AddTranslationAnimation(Storyboard storyboard,
    FrameworkElement targetElement,
    Point fromPoint,
    Point toPoint,
    Duration duration,
    IEasingFunction easingFunction)
{
    var xAnimation = new DoubleAnimation
    {
        From = fromPoint.X,
        To = toPoint.X,
        Duration = duration,
        EasingFunction = easingFunction
    };

    var yAnimation = new DoubleAnimation
    {
        From = fromPoint.Y,
        To = toPoint.Y,
        Duration = duration,
        EasingFunction = easingFunction
    };

    AddAnimation(
        storyboard,
        targetElement.RenderTransform,
        CompositeTransform.TranslateXProperty,
        xAnimation);

    AddAnimation(
        storyboard,
        targetElement.RenderTransform,
        CompositeTransform.TranslateYProperty,
        yAnimation);
}
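To see why the flicked element decelerates as it approaches its destination, it helps to look at the values an eased animation produces over time. The following sketch is an approximation, not the framework's code: the EaseOutSketch class is hypothetical, and it assumes that a CubicEase in EaseOut mode follows the standard cubic ease-out curve, 1 - (1 - t)^3, where t is the normalized progress of the animation.

```csharp
using System;

// Hypothetical helper approximating a DoubleAnimation with a cubic ease-out.
public static class EaseOutSketch
{
    // Standard cubic ease-out curve; progress is clamped to the range [0, 1].
    public static double CubicEaseOut(double progress)
    {
        double t = Math.Max(0.0, Math.Min(1.0, progress));
        double remaining = 1.0 - t;
        return 1.0 - remaining * remaining * remaining;
    }

    // Value of the animated property at the given progress between from and to.
    public static double Interpolate(double from, double to, double progress)
    {
        return from + (to - from) * CubicEaseOut(progress);
    }
}
```

Under this assumption, an animation from 0 to 200 has already reached 175 at the halfway point, so most of the travel happens early and the element slows smoothly to a stop.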

The static method AddAnimation associates an animation Timeline with a DependencyObject, which in this case is the CompositeTransform assigned to the Border's RenderTransform property:

static void AddAnimation(
    Storyboard storyboard,
    DependencyObject dependencyObject,
    DependencyProperty targetProperty,
    Timeline timeline)
{
    Storyboard.SetTarget(timeline, dependencyObject);
    Storyboard.SetTargetProperty(timeline, new PropertyPath(targetProperty));
    storyboard.Children.Add(timeline);
}

When launched, the GesturesView page allows the user to flick the Border element across the page. A reset button exists to reset the position of the Border if it happens to leave the visible boundaries of the page (see Figure 11.11).

Figure 11.11. The Border can be moved, rotated, resized, and flicked in response to gesture events.

Toolkit gestures offer a high-level way of interpreting touch input data and make it easy to provide a natural and immersive experience for your users, without the bother of tracking low-level touch events.

Designing Touch Friendly User Interfaces

The design and layout of your user interface influences how easy your app is to use with touch input. When designing your UI, it is important to ensure that page elements are big enough and far enough apart to be conducive to touch input. It is also important to consider the nature of mobile devices, and that your app may be used in conditions where touch precision is compromised, such as standing aboard a moving train. Adhering to the advice presented in this section helps you to ensure that your apps are touch friendly.

Three Components of Touch

Touch UI can be broken down into the following three components:

• Touch target—The area defined to accept touch input, which is not visible to the user

• Touch element—The visual indicator of the touch target, which is visible to the user

• Touch control—A touch target that is combined with a touch element that the user touches

The touch target can be larger than the touch element, but should never be smaller than it. The touch element should never be smaller than 60% of the touch target.

Sizing and Spacing Constraints

Providing adequate spacing between touch targets helps to prevent users from selecting the wrong touch control. Touch targets should not be smaller than 34 pixels (9 mm) square and should provide at least 8 pixels (2 mm) between touchable controls (see Figure 11.12).

Figure 11.12. Touch target sizing and spacing constraints

In exceptional cases, controls can be smaller, but they should never be smaller than 26 pixels (7 mm) square.

It is recommended that some touch targets be made larger than 34 pixels (9 mm) when the touch target is a frequently touched control or the target resides close to the edge of the screen.
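The sizing and spacing figures above lend themselves to a simple check during design or testing. The TouchGuidelines class below is a hypothetical helper, not an SDK type; its constants come directly from the guidance in this section, and the method names are chosen for illustration only.

```csharp
// Hypothetical helper encoding the sizing and spacing guidance from this section.
public static class TouchGuidelines
{
    public const double RecommendedMinTargetSize = 34; // pixels (9 mm)
    public const double AbsoluteMinTargetSize = 26;    // pixels (7 mm)
    public const double MinSpacing = 8;                // pixels (2 mm)
    public const double MinElementToTargetRatio = 0.6; // element >= 60% of target

    // True when the touch target is at least 34 x 34 pixels.
    public static bool MeetsRecommendedSize(double width, double height)
    {
        return width >= RecommendedMinTargetSize
            && height >= RecommendedMinTargetSize;
    }

    // True when the touch target is at least 26 x 26 pixels (exceptional cases only).
    public static bool MeetsAbsoluteMinimum(double width, double height)
    {
        return width >= AbsoluteMinTargetSize
            && height >= AbsoluteMinTargetSize;
    }

    // True when the gap between two touchable controls is at least 8 pixels.
    public static bool HasAdequateSpacing(double gap)
    {
        return gap >= MinSpacing;
    }

    // The touch element must not exceed the touch target,
    // and must be at least 60% of the target's size.
    public static bool IsElementSizeValid(double elementSize, double targetSize)
    {
        return elementSize <= targetSize
            && elementSize >= targetSize * MinElementToTargetRatio;
    }
}
```

For example, a 34-pixel touch target with a 20-pixel touch element fails the last check, because 60% of 34 is 20.4 pixels.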

General Guidelines

The following is a list of some general guidelines when designing a touch-friendly interface:

• When designing your interface, you should allow all basic or common tasks to be completed using a single finger.

• Touch controls should respond to touch immediately. A touch control with even a slight lag, or one that seems slow when transitioning, has a negative impact on the user experience.

• Processing that would otherwise reduce the responsiveness of the UI should be performed on a background thread. If an operation takes a long time, consider providing incremental feedback.

• Try to use a gesture in the manner for which it was intended. For example, avoid using a gesture as a shortcut to a task.

Summary

This chapter began by looking at mouse events. Mouse events are an easy way to get basic touch support and allow you to detect simple, one-finger gestures such as tap and double tap.

The chapter examined the TouchPoint class and illustrated how it provides a low-level input system that you can use to respond to all touch activity in the UI.

The UIElement manipulation events also were discussed. Manipulation events are used to handle more complex gestures, such as multitouch gestures and gestures that use inertia and velocity data.

The chapter explored the gesture support in the Silverlight for Windows Phone SDK and the Silverlight for Windows Phone Toolkit, and you saw how they further consolidate the low-level touch API into a set of gesture-specific events and make it easy to handle complex single and multitouch gestures.

Finally, the chapter looked at the best practices for optimizing your user interfaces for touch input.