20

Greedy Method

This chapter discusses the greedy method and demonstrates the strategy over the Knapsack problem, Prim’s and Kruskal’s spanning tree extraction algorithms and Dijkstra’s Single Source Shortest Path problem.

20.1. Introduction

The greedy method is an algorithm design technique, which is applicable to problems defined by an objectivefunction that needs to be maximized or minimized, subject to some constraints. Given n inputs to the problem, the aim is to obtain a subset of n, that satisfies all the constraints, in which case it is referred to as a feasible solution. A feasible solution that serves to obtain the best objective function value (maximal or minimal) is referred to as the optimal solution.

The greedy method proceeds to obtain the feasible and optimal solution, by considering the inputs one at a time. Hence, an implementation of the greedy method-based algorithm is always iterative in nature. Contrast this with the implementation of Divide and Conquer based algorithms discussed in Chapter 19, which is always recursive in nature.

20.2. Abstraction

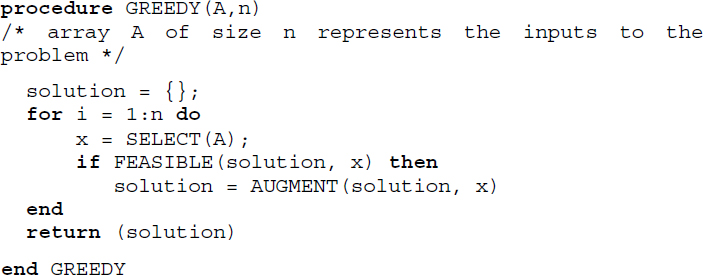

The abstraction of the greedy method is shown in Figure 20.1. Here, the function SELECT(A) makes a prudent selection of the input depending on the problem. Function FEASIBLE() ensures that the solution satisfies all constraints imposed on the problem and AUGMENT() augments the current input which is a feasible solution to the solution set. Observe how the for loop ensures consideration of inputs one by one, justifying the iterative nature of greedy algorithms.

Figure 20.1 Abstraction of the greedy method

The application of the greedy method in the design of solutions to the knapsack problem, extraction of minimum cost spanning trees using Prim’s and Kruskal’s algorithms and Dijkstra’s single source shortest path problem, are discussed in the ensuing sections.

20.3. Knapsack problem

The knapsack problem is a classic problem to illustrate the greedy method. The problem deals with n objects with weights (W1, W2, …Wn ) and with profits or prices (P1, P2, …Pn). A knapsack with a capacity of M units is available. In other words, the knapsack can handle only a maximum weight of M, when objects with varying weights are packed into it. A person is allowed to own as many objects, on the conditions that (i) the total weight of all objects selected cannot exceed the capacity of the knapsack and (ii) objects can be dismantled and parts of them can be selected.

From a practical standpoint, a person while selecting the objects would try to choose those that would fetch high profits, hoping to maximize the profit arising out of owning the objects. Thus, maximizing profit is the objective function of the problem. The fact that objects selected can be dismantled and that their total weight cannot exceed the capacity of the knapsack are the constraints.

Let (O1, O2, … On) be n objects, and (p1, p2, … pn) and (w1, w2, … wn) be their profits and weights, respectively. Let M be the capacity of the knapsack and (x1, x2, … xn) be the proportion of objects which are selected. The mathematical formulation of the knapsack problem is as follows:

subject to

The objective function describes the maximization of the total profits. The constraints describe the total weight of the objects selected to be bound by M and the proportion of objects selected to lie between [0,1], where xi = 0 denotes rejection, xi = 1 denotes the selection of the whole object and anything in between [0, 1] denotes the selection of a part of the object after dismantling it.

20.3.1. Greedy solution to the knapsack problem

20.3.1.1. Strategy 1

A greedy solution to the knapsack problem involves selecting the objects one by one such that the addition of each object into the knapsack increases the profit value, subject to the capacity of the knapsack. This could mean ordering the objects in the non-increasing order of their profits and selecting them one by one until capacity M is exhausted. Will this strategy yield the optimal solution?

20.3.1.2. Strategy 2

Another “more-the-merrier” strategy could insist on ordering the objects in the non-decreasing order of their weights and pack as many objects as possible into the knapsack, subject to the capacity M, hoping to maximize profit by a larger selection of objects. Will this strategy yield the optimal solution?

20.3.1.3. Strategy 3

A third strategy could be to order the objects according to the non-increasing order of profit per unit weight (profit/weight), that is ![]() , and select objects one by one until the capacity M of the knapsack is exhausted. Will this strategy yield the optimal solution?

, and select objects one by one until the capacity M of the knapsack is exhausted. Will this strategy yield the optimal solution?

It can be observed that all the three strategies are greedy method based since they revolve around selecting the object one by one while trying to satisfy the constraints and aiming to maximize the objective function. Let us explore these strategies over an example knapsack problem.

20.4. Minimum cost spanning tree algorithms

Given a connected undirected graph G = (V, E), where V is the set of vertices and E is the set of edges, a subgraph T = (V, E′), where E′ ⊆ E is a spanning tree if T is a tree.

It is possible to extract many spanning trees from a graph G. When G is a connected, weighted and undirected graph, where each edge involves a cost, then a minimum cost spanning tree is a spanning tree that has the minimum cost. Section 9.5.2 of Chapter 9, Volume 2, details the concepts and construction of minimum spanning trees using two methods, which are, Prim’s algorithm (Algorithm 9.4) and Kruskal’s algorithm (Algorithm 9.5).

Both the algorithms adopt the greedy method of algorithm design, despite differences in their approach to obtaining the minimum cost spanning tree.

20.4.1. Prim’s algorithm as a greedy method

Prim’s algorithm selects the minimum cost edge one by one, to optimize the cost of the spanning tree and proceeds to construct the spanning tree after ensuring that the inclusion of the edge does not violate the constraints of (i) edge forming a cycle and (ii) edge staying connected, with the spanning tree under construction. Once all the vertices V have made their appearances in the constructed spanning tree, the algorithm abruptly terminates discarding any leftover edges, while declaring the minimum cost spanning tree as its output.

Thus, in the case of Prim’s algorithm, the objective function is the minimization of the cost of the spanning tree and the constraints ensure the connectedness and acyclicity of the spanning tree, when each edge is added to the tree. The algorithm adopts the greedy method of design by selecting the edges one by one while working to obtain the feasible solution at every stage until the optimal solution is arrived at in the final step.

The time complexity of Prim's algorithm is O(n2), where n is the number of vertices in the graph.

20.4.2. Kruskal’s algorithm as a greedy method

Kruskal’s algorithm, on the other hand, selects a minimum cost edge one by one, just as Prim’s algorithm does, but with a huge difference in that, the selected edges build a forest and not necessarily a tree, during the construction. However, the selection of edges ensures that no cycles are formed in the forest.

Kruskal’s algorithm, therefore, works over a forest of trees until no more edges can be considered for inclusion and the number of edges in the forest equals (n-1), at which stage, the algorithm terminates and outputs the minimum cost spanning tree that is generated.

Thus, in the case of Kruskal’s algorithm, the objective function is the minimization of the cost of the spanning tree and the constraint ensures the acyclicity of trees in the forest. The algorithm also adopts the greedy method of design where the edges are selected one by one, obtaining the feasible solution at each stage until the optimal solution, which is the minimum cost spanning tree is obtained in the final stage.

The time complexity of Kruskal’s algorithm is O(e. log e), where e is the number of edges.

20.5. Dijkstra’s algorithm

The single source shortest path problem concerns finding the shortest path from a node termed source in a weighted digraph, to all other nodes connected to it. For example, given a network of cities, a single source shortest path problem defined over it proceeds to find the shortest path from a given city (source) to all other cities connected to it.

Dijkstra’s algorithm obtains an elegant solution to the single source shortest path problem and has been detailed in section 9.5.1 of Chapter 9, Volume 2, Algorithm 9.3, illustrates the working of Dijkstra's algorithm. The algorithm works by selecting nodes that are closest to the source node, one by one, and ensuring that ultimately the shortest paths from the source node to all other nodes are the minimum. Thus, Dijkstra’s algorithm adopts the greedy method to solve the single source shortest path problem and reports a time complexity of O(N2), where N is the number of nodes in the weighted digraph.

Summary

- The greedy method works on problems which demand an optimal solution, as defined by an objective function, subject to constraints that determine the feasibility of the solution.

- The greedy method selects inputs one by one ensuring that the constraints imposed on the problem are satisfied at every stage. Hence, the greedy method based algorithms are conventionally iterative.

- The knapsack problem, construction of minimum spanning trees using Prim’s and Kruskal’s algorithms and Dijktra’s algorithm for the single source shortest path problem are examples of greedy methods at work.

20.6. Illustrative problems

Review questions

- Why do greedy methods advocate iteration during their implementation?

- Find the optimal solution for the instance of the knapsack problem shown below, using the greedy method:

Number of objects, n = 7, knapsack capacity M = 20, profits (p1, p2, p3, p4, p5 p6, p7) = (20, 10, 30, 5, 15, 25, 18) and weights (w1, w2, w3, w4, w5, w6, w7) = (4, 2, 6, 5, 4, 3, 3).

- Demonstrate how Kruskal’s algorithm and Prim’s algorithm, adopting greedy methods, obtain the minimum cost spanning tree of the graph shown below.

- Let us suppose that n binary trees describe n files to be optimally merged using the greedy method, as explained in illustrative problem 20.2. The structure of the nodes of the binary trees is shown below:

| LCHILD | SIZE | RCHILD |

where LCHILD and RCHILD denote links to the left and right child nodes respectively, and SIZE denotes the number of records in the files.

Initially, all the binary trees possess just a single node (root node) that indicates the size of the file.

Write an algorithm, which begins with the list of n binary trees comprising single nodes and builds a forest of binary trees following the optimal merge pattern and eventually outputs a single binary tree that describes the complete two-way merge tree.

- For the job sequencing using the deadlines problem, detailed in illustrative problem 20.3, an instance of which has been described below, find the optimal solution using the greedy method.

Number of jobs n = 5, profits (p1, p2, p3, p4, p5) = (110, 50, 70, 100, 30) and deadlines in time units (d1, d2, d3, d4, d5) = (2, 2, 3, 1, 4), with each job requiring a unit of time for completion.

Programming assignments

- The GREEDYMETHOD_KNAPSACK() procedure was discussed in section 20.3.1. Implement the procedure and test it over the instance of the knapsack problem as discussed in review question 2.

- Implement the algorithm constructed for the optimal merge pattern problem discussed in review question 4 using a programming language that supports the pointers.