CHAPTER TWENTY-TWO

Network Flow

GRAPHS, DIGRAPHS, AND networks are just mathematical abstractions, but they are useful in practice because they help us to solve numerous important problems. In this chapter, we extend the network problem-solving model to encompass a dynamic situation where we imagine material flowing through the network, with different costs attached to different routes. These extensions allow us to tackle a surprisingly broad variety of problems with a long list of applications.

We see that these problems and applications can be handled within a few natural models that we can relate to one another through reduction. There are several different ways, all of which are technically equivalent, to formulate the basic problems. To implement algorithms that solve them all, we settle on two specific problems, develop efficient algorithms to solve them, then develop algorithms that solve other problems by finding reductions to the known problems.

In real life, we do not always have the freedom of choice that this idealized scenario suggests, because not all pairs of reduction relationships between these problems have been proved, and because few optimal algorithms for solving any of the problems are known. Perhaps no efficient direct solution to a given problem has yet been invented, and perhaps no efficient reduction that directly relates a given pair of problems has yet been devised. The network-flow formulation that we cover in this chapter has been successful not only because simple reductions to it are easy to define for many problems, but also because numerous efficient algorithms for solving the basic network-flow problems have been devised.

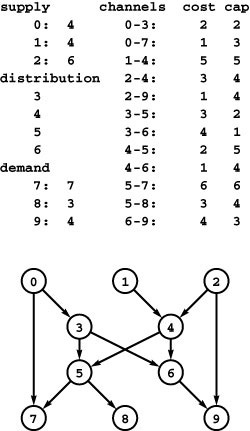

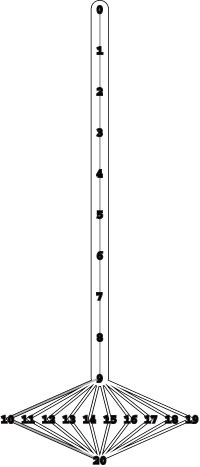

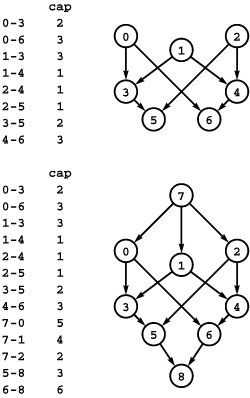

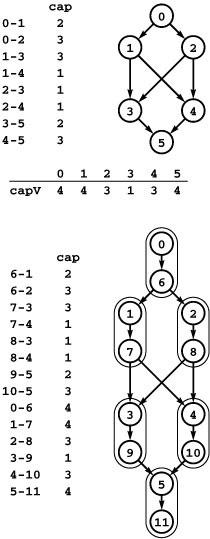

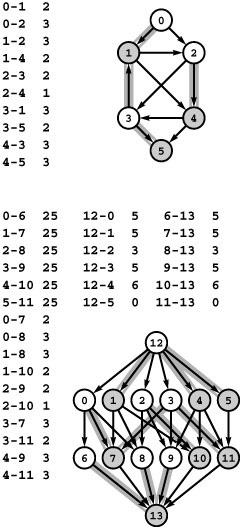

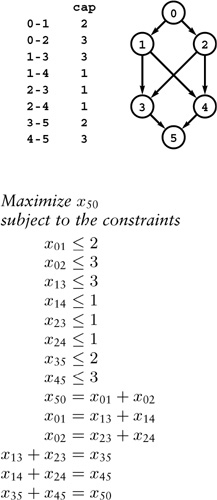

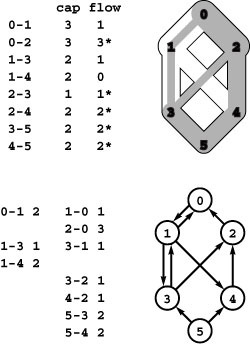

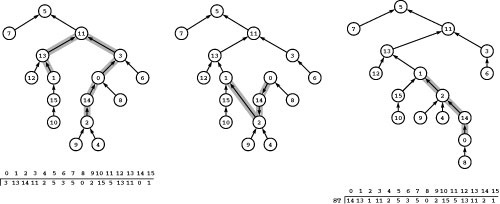

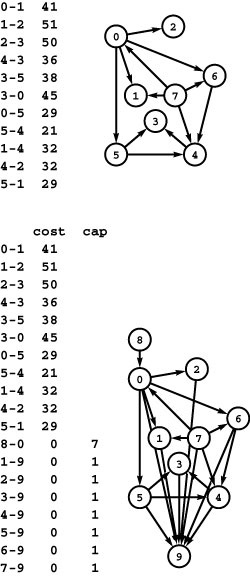

Figure 22.1 Distribution problem

In this instance of the distribution problem, we have three supply vertices (0 through 2), four distribution points (3 through 6), three demand vertices (7 through 9), and twelve channels. Each supply vertex has a rate of production; each demand vertex a rate of consumption; and each channel a maximum capacity and a cost per unit distributed. The problem is to minimize costs while distributing material through the channels (without exceeding capacity anywhere) such that the total rate of material leaving each supply vertex equals its rate of production; the total rate at which material arrives at each demand vertex equals its rate of consumption; and the total rate at which material arrives at each distribution point equals the total rate at which material leaves.

The following examples illustrate the range of problems that we can handle with network-flow models, algorithms, and implementations. They fall into general categories known as distribution problems, matching problems, and cut problems, each of which we examine in turn. We indicate several different related problems, rather than lay out specific details in these examples. Later in the chapter, when we undertake to develop and implement algorithms, we give rigorous descriptions of many of the problems mentioned here.

In distribution problems, we are concerned with moving objects from one place to another within a network. Whether we are distributing hamburger and chicken to fast-food outlets or toys and clothes to discount stores along highways throughout the country—or software to computers or bits to display screens along communications networks throughout the world—the essential problems are the same. Distribution problems typify the challenges that we face in managing a large and complex operation. Algorithms to solve them are broadly applicable and are critical in numerous applications.

Merchandise distribution A company has factories, where goods are produced; distribution centers, where the goods are stored temporarily; and retail outlets, where the goods are sold. The company must distribute the goods from factories through distribution centers to retail outlets on a regular basis, using distribution channels that have varying capacities and unit distribution costs. Is it possible to get the goods from the warehouses to the retail outlets such that supply meets demand everywhere? What is the least-cost way to do so? Figure 22.1 illustrates a distribution problem.

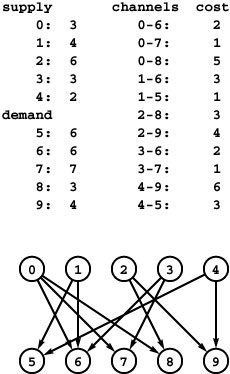

Figure 22.2 illustrates the transportation problem, a special case of the merchandise-distribution problem where we eliminate the distribution centers and the capacities on the channels. This version is important in its own right and is significant (as we see in Section 22.7) not just because of important direct applications but also because it turns out not to be a “special case” at all—indeed, it is equivalent in difficulty to the general version of the problem.

Communications A communications network has a set of requests to transmit messages between servers that are connected by channels (abstract wires) that are capable of transferring information at varying rates. What is the maximum rate at which information can be transferred between two specified servers in the network? If there are costs associated with the channels, what is the cheapest way to send the information at a given rate that is less than the maximum?

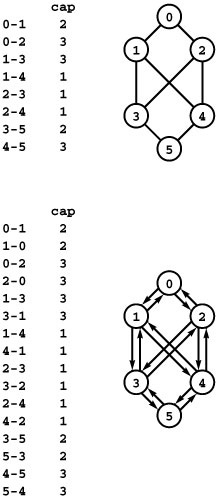

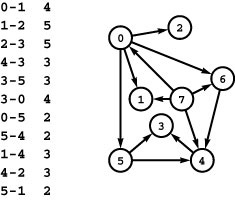

Figure 22.2 Transportation problem

The transportation problem is like the distribution problem, but with no channel-capacity restrictions and no distribution points. In this instance, we have five supply vertices (0 through 4), five demand vertices (5 through 9), and twelve channels. The problem is to find the lowest-cost way to distribute material through the channels such that supply exactly meets demand everywhere. Specifically, we require an assignment of weights (distribution rates) to the edges such that the sum of weights on outgoing edges equals the supply at each supply vertex; the sum of weights on ingoing edges equals the demand at each demand vertex; and the total cost (sum of weight times cost for all edges) is minimized over all such assignments.

Traffic flow A city government needs to formulate a plan for evacuating people from the city in an emergency. What is the minimum amount of time that it would take to evacuate the city, if we suppose that we can control traffic flow so as to realize the minimum? Traffic planners also might formulate questions like this when deciding which new roads, bridges, or tunnels might alleviate rush-hour or vacation-weekend traffic problems.

In matching problems, the network represents the possible ways to connect pairs of vertices, and our goal is to choose among the connections (according to a specified criterion) without including any vertex twice. In other words, the chosen set of edges defines a way to pair vertices with one another. We might be matching students to colleges, applicants to jobs, courses to available hours for a school, or members of Congress to committee assignments. In each of these situations, we might imagine a variety of criteria defining the characteristics of the matches sought.

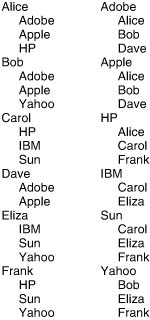

Job placement A job-placement service arranges interviews for a set of students with a set of companies; these interviews result in a set of job offers. Assuming that an interview followed by a job offer represents mutual interest in the student taking a job at the company, it is in everyone’s best interests to maximize the number of job placements. Figure 22.3 is an example illustrating that this task can be complicated.

Minimum-distance point matching Given two sets of N points, find the set of N line segments, each with one endpoint from each of the point sets, with minimum total length. One application of this purely geometric problem is in radar tracking systems. Each sweep of the radar gives a set of points that represent planes. We assume that the planes are kept sufficiently well spaced that solving this problem allows us to associate each plane’s position on one sweep to its position on the next, thus giving us the paths of all the planes. Other data-sampling applications can be cast in this framework.

In cut problems, such as the one illustrated in Figure 22.4, we remove edges to cut networks into two or more pieces. Cut problems are directly related to fundamental questions of graph connectivity that we first examined in Chapter 18. In this chapter, we discuss a central theorem that demonstrates a surprising connection between cut and flow problems, substantially expanding the reach of network-flow algorithms.

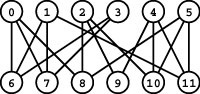

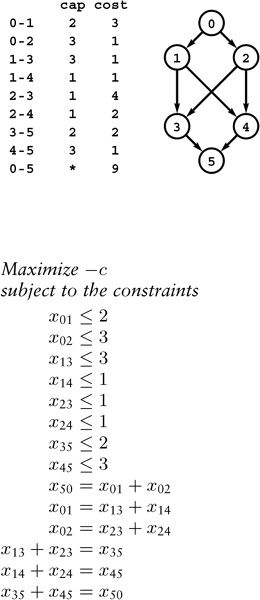

Figure 22.3 Job placement

Suppose that we have six students, each needing jobs, and six companies, each needing to hire a student. These two lists (one sorted by student, the other sorted by company) give a list of job offers, which indicate mutual interest in matching students and jobs. Is there some way to match students to jobs so that every job is filled and every student gets a job? If not, what is the maximum number of jobs that can be filled?

Network reliability A simplified model considers a telephone network as consisting of a set of wires that connect telephones through switches such that there is the possibility of a switched path through trunk lines connecting any two given telephones. What is the maximum number of trunk lines that could be cut without any pair of switches being disconnected?

Cutting supply lines A country at war moves supplies from depots to troops along an interconnected highway system. An enemy can cut off the troops from the supplies by bombing roads, with the number of bombs required to destroy a road proportional to that road’s width. What is the minimum number of bombs that the enemy must drop to ensure that no troops can get supplies?

Each of the applications just cited immediately suggests numerous related questions, and there are still other related models, such as the job-scheduling problems that we considered in Chapter 21. We consider further examples throughout this chapter, yet still treat only a small fraction of the important, directly related practical problems.

The network-flow model that we consider in this chapter is important not just because it provides us with two simply stated problems to which many of the practical problems reduce but also because we have efficient algorithms for solving the two problems. This breadth of applicability has led to the development of numerous algorithms and implementations. The solutions that we consider illustrate the tension between our quest for implementations of general applicability and our quest for efficient solutions to specific problems. The study of network-flow algorithms is fascinating because it brings us tantalizingly close to compact and elegant implementations that achieve both goals.

We consider two particular problems within the network-flow model: the maxflow problem and the mincost-flow problem. We see specific relationships among these problem-solving models, the shortest-path model of Chapter 21, the linear-programming (LP) model of Part 8, and numerous specific problem models including some of those just discussed.

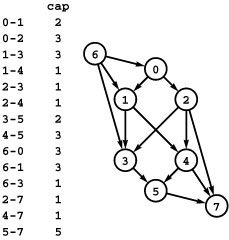

Figure 22.4 Cutting supply lines

This diagram represents the roads connecting an army’s supply depot at the top to the troops at the bottom. The black dots represent an enemy bombing plan that would separate troops from supplies. The enemy’s goal is to minimize the cost of bombing (perhaps assuming that the cost of cutting an edge is proportional to its width), and the army’s goal is to design its road network to maximize the enemy’s minimum cost. The same model is useful in improving the reliability of communications networks and many other applications.

At first blush, many of these problems might seem to be completely different from network-flow problems. Determining a given problem’s relationship to known problems is often the most important step in developing a solution to that problem. Moreover, this step is often significant because, as is usual with graph algorithms, we must understand the fine line between trivial and intractable problems before we attempt to develop implementations. The infrastructure of problems and the relationships among the problems that we consider in this chapter provides a helpful context for addressing such issues.

In the rough categorization that we began with in Chapter 17, the algorithms that we examine in this chapter demonstrate that network-flow problems are “easy,” because we have straightforward implementations that are guaranteed to run in time proportional to a polynomial in the size of the network. Other implementations, although not guaranteed to run in polynomial time in the worst case, are compact and elegant and have been proved to solve a broad variety of other practical problems, such as the ones discussed here. We consider them in detail because of their utility. Researchers still seek faster algorithms, in order to enable huge applications and to save costs in critical ones. Ideal optimal algorithms that are guaranteed to be as fast as possible are yet to be discovered for network-flow problems.

On the one hand, some of the problems that we reduce to network-flow problems are known to be easier to solve with specialized algorithms. In principle, we might consider implementing and improving these specialized algorithms. Although that approach is productive in some situations, efficient algorithms for solving many of the problems (other than through reduction to network flow) are not known. Even when specialized algorithms are known, developing implementations that can outperform good network-flow codes can be a significant challenge. Moreover, researchers are still improving network-flow algorithms, and the possibility remains that a good network-flow algorithm might outperform known specialized methods for a given practical problem.

On the other hand, network-flow problems are special cases of the even more general LP problems that we discuss in Part 8. Although we could (and people often do) use an algorithm that solves LP problems to solve network-flow problems, the network-flow algorithms that we consider are simpler and more efficient than are those that solve LP problems. But researchers are still improving LP solvers, and the possibility remains that a good algorithm for LP problems might—when used for practical network-flow problems—outperform all the algorithms that we consider in this chapter.

The classical solutions to network-flow problems are closely related to the other graph algorithms that we have been examining, and we can write surprisingly concise programs that solve them, using the algorithmic tools we have developed. As we have seen in many other situations, good algorithms and data structures can achieve substantial reductions in running times. Development of better implementations of classical generic algorithms is still being studied, and new approaches continue to be discovered.

In Section 22.1 we consider basic properties of flow networks, where we interpret a network’s edge weights as capacities and consider properties of flows, which are a second set of edge weights that satisfy certain natural constraints. Next, we consider the maxflow problem, which is to compute a flow that is best in a specific technical sense. In Sections 22.2 and 22.3, we consider two approaches to solving the maxflow problem, and examine a variety of implementations. Many of the algorithms and data structures that we have considered are directly relevant to the development of efficient solutions of the maxflow problem. We do not yet have the best possible algorithms to solve the maxflow problem, but we consider specific useful implementations. In Section 22.4, to illustrate the reach of the maxflow problem, we consider different formulations, as well as other reductions involving other problems.

Maxflow algorithms and implementations prepare us to discuss an even more important and general model known as the mincost-flow problem, where we assign costs (another set of edge weights) and define flow costs, then look for a solution to the maxflow problem that is of minimal cost. We consider a classic generic solution to the mincost-flow problem known as the cycle-canceling algorithm; then, in Section 22.6, we give a particular implementation of the cycle-canceling algorithm known as the network simplex algorithm. In Section 22.7, we discuss reductions to the mincost-flow problem that encompass, among others, all the applications that we just outlined.

Network-flow algorithms are an appropriate topic to conclude this book for several reasons. They represent a payoff on our investment in learning basic algorithmic tools such as linked lists, priority queues, and general graph-search methods. The graph-processing classes that we have studied lead immediately to compact and efficient class implementations for network-flow problems. These implementations take us to a new level of problem-solving power and are immediately useful in numerous practical applications. Furthermore, studying their applicability and understanding their limitations sets the context for our examination of better algorithms and harder problems—the undertaking of Part 8.

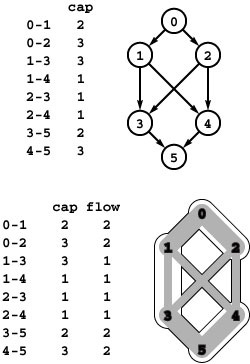

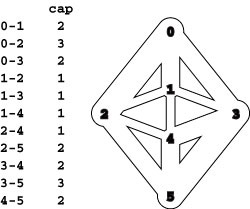

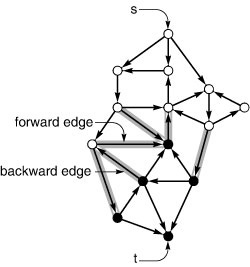

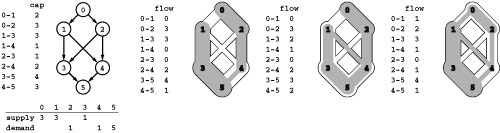

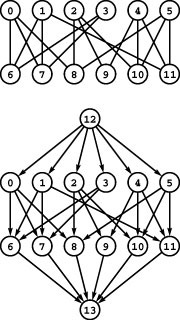

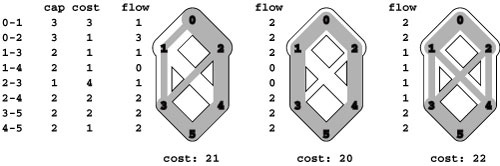

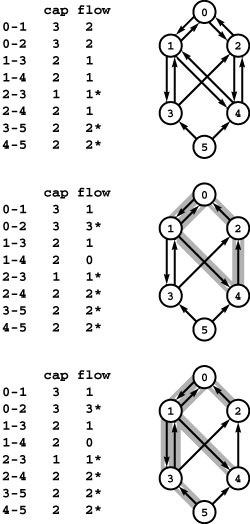

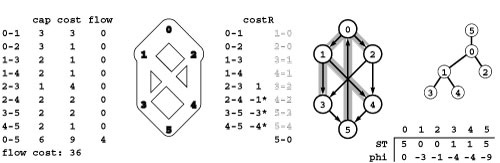

Figure 22.5 Network flow

A flow network is a weighted network where we interpret edge weights as capacities (top). Our objective is to compute a second set of edge weights, bounded by the capacities, which we call the flow. The bottom drawing illustrates our conventions for drawing flow networks. Each edge’s width is proportional to its capacity; the amount of flow in each edge is shaded in gray; the flow is always directed down the page from a single source at the top to a single sink at the bottom; and intersections (such as 1-4 and 2-3 in this example) do not represent vertices unless labeled as such. Except for the source and the sink, flow in is equal to flow out at every vertex: For example, vertex 2 has 2 units of flow coming in (from 0) and 2 units of flow going out (1 unit to 3 and 1 unit to 4).

22.1 Flow Networks

To describe network-flow algorithms, we begin with an idealized physical model in which several of the basic concepts are intuitive. Specifically, we imagine a collection of interconnected oil pipes of varying sizes, with switches controlling the direction of flow at junctions, as in the example illustrated in Figure 22.5. We suppose further that the network has a single source (say, an oil field) and a single sink (say, a large refinery) to which all the pipes ultimately connect. At each vertex, the flowing oil reaches an equilibrium where the amount of oil flowing in is equal to the amount flowing out. We measure both flow and pipe capacity in the same units (say, gallons per second).

If every switch has the property that the total capacity of the ingoing pipes is equal to the total capacity of the outgoing pipes, then there is no problem to solve: We simply fill all pipes to full capacity. Otherwise, not all pipes are full, but oil flows through the network, controlled by switch settings at the junctions, such that the amount of oil flowing into each junction is equal to the amount of oil flowing out. But this local equilibrium at the junctions implies an equilibrium in the network as a whole: We prove in Property 22.1 that the amount of oil flowing into the sink is equal to the amount flowing out of the source. Moreover, as illustrated in Figure 22.6, the switch settings at the junctions determine this amount of flow from source to sink in nontrivial ways. Given these facts, we are interested in the following question: What switch settings will maximize the amount of oil flowing from source to sink?

We can model this situation directly with a network (a weighted digraph, as defined in Chapter 21) that has a single source and a single sink. The edges in the network correspond to the oil pipes, the vertices correspond to the junctions with switches that control how much oil goes into each outgoing edge, and the weights on the edges correspond to the capacity of the pipes. We assume that the edges are directed, specifying that oil can flow in only one direction in each pipe. Each pipe has a certain amount of flow, which is less than or equal to its capacity, and every vertex satisfies the equilibrium condition that the flow in is equal to the flow out.

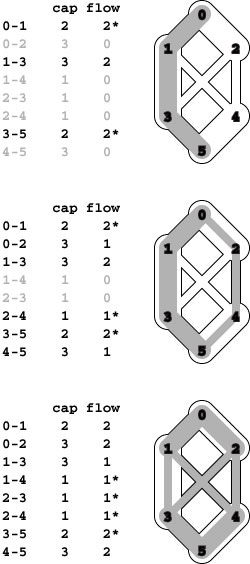

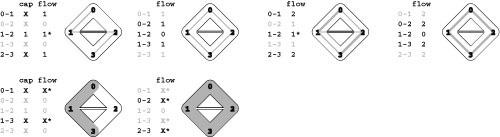

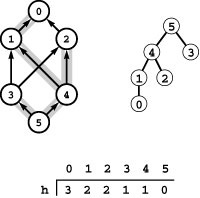

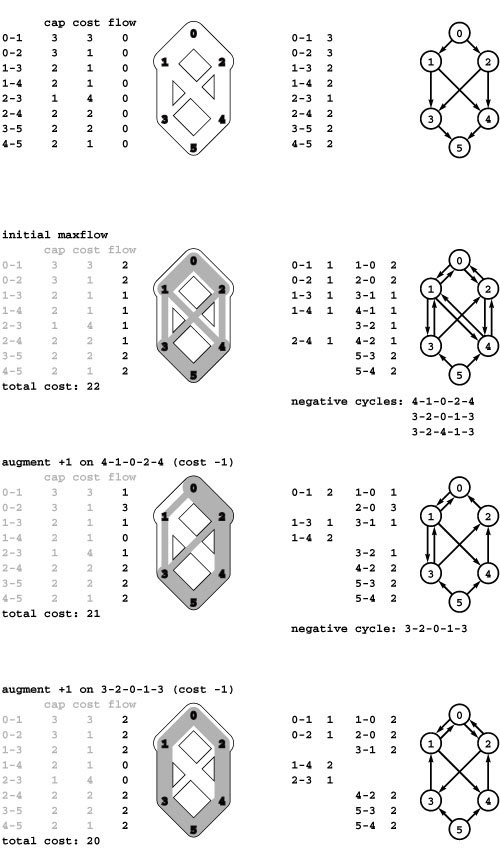

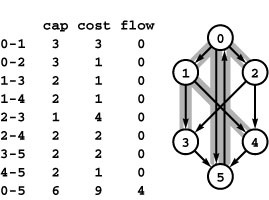

Figure 22.6 Controlling flow in a network

We might initialize the flow in this network by opening the switches along the path 0-1-3-5, which can handle 2 units of flow (top), and by opening switches along the path 0-2-4-5 to get another 1 unit of flow in the network (center). Asterisks indicate full edges.

Since 0-1, 2-4, and 3-5 are full, there is no direct way to get more flow from 0 to 5, but if we change the switch at 1 to redirect enough flow to fill 1-4, we open up enough capacity in 3-5 to allow us to add flow on 0-2-3-5, giving a maxflow for this network (bottom).

This flow-network abstraction is a useful problem-solving model that applies directly to a variety of applications and indirectly to still more. We sometimes appeal to the idea of oil flowing through pipes for intuitive support of basic ideas, but our discussion applies equally well to goods moving through distribution channels and to numerous other situations.

The flow model directly applies to a distribution scenario: We interpret the flow values as rates of flow, so that a flow network describes the flow of goods in a manner precisely analogous to the flow of oil. For example, we can interpret the flow in Figure 22.5 as specifying that we should be sending two items per time unit from 0 to 1 and from 0 to 2, one item per time unit from 1 to 3 and from 1 to 4, and so forth.

Another way to interpret the flow model for a distribution scenario is to interpret flow values as amounts of goods so that a flow network describes a one-time transfer of goods. For example, we can interpret the flow in Figure 22.5 as describing the transfer of four items from 0 to 5 in the following three-step process: First, send two items from 0 to 1 and two items from 0 to 2, leaving two items at each of those vertices. Second, send one item each from 1 to 3, 1 to 4, 2 to 3, and 2 to 4, leaving two items each at 3 and 4. Third, complete the transfer by sending two items from 3 to 5 and two items from 4 to 5.

As with our use of distance in shortest-paths algorithms, we are free to abandon any physical intuition when convenient because all the definitions, properties, and algorithms that we consider are based entirely on an abstract model that does not necessarily obey physical laws. Indeed, a prime reason for our interest in the network-flow model is that it allows us to solve numerous other problems through reduction, as we see in Sections 22.4 and 22.6. Because of this broad applicability, it is worthwhile to consider precise statements of the terms and concepts that we have just informally introduced.

Definition 22.1 We refer to a network with a designated source s and a designated sink t as an st-network.

We use the modifier “designated” here to mean that s does not necessarily have to be a source (vertex with no incoming edges) and t does not necessarily have to be a sink (vertex with no outgoing edges), but that we nonetheless treat them as such, because our discussion (and our algorithms) will ignore edges directed into s and edges directed out of t. To avoid confusion, we use networks with a single source and a single sink in examples; we consider more general situations in Section 22.4. We refer to s and t as “the source” and “the sink,” respectively, in the st-network because those are the roles that they play in the network. We also refer to the other vertices in the network as the internal vertices.

Definition 22.2 A flow network is an st-network with positive edge weights, which we refer to as capacities. A flow in a flow network is a set of nonnegative edge weights, which we refer to as edge flows, satisfying the conditions that no edge’s flow is greater than that edge’s capacity and that the total flow into each internal vertex is equal to the total flow out of that vertex.

We refer to the total flow into a vertex (the sum of the flows on its incoming edges) as the vertex’s inflow and the total flow out of a vertex (the sum of the flows on its outgoing edges) as the vertex’s outflow. By convention, we set the flow on edges into the source and edges out of the sink to zero, and in Property 22.1 we prove that the source’s outflow is always equal to the sink’s inflow, which we refer to as the network’s value. With these definitions, the formal statement of our basic problem is straightforward.

Maximum flow Given an st-network, find a flow such that no other flow from s to t has larger value. For brevity, we refer to such a flow as a maxflow and the problem of finding one in a network as the maxflow problem. In some applications, we might be content to know just the maxflow value, but we generally want to know a flow (edge flow values) that achieves that value.
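The conditions of Definition 22.2 and the value of a flow can be checked mechanically. The following is a minimal sketch, not the implementation developed later in the chapter: the FlowEdge record and the functions inflow, outflow, isFlow, and sampleFlow are hypothetical names introduced only for illustration. The edge flows in sampleFlow are the ones described for Figure 22.5; the capacities are assumed values chosen to be consistent with the discussion of Figure 22.6.

```cpp
#include <cassert>
#include <vector>

// Hypothetical edge record for this sketch (not the book's EDGE class).
struct FlowEdge { int v, w, cap, flow; };

// Sum of the flows on the edges leaving vertex x.
int outflow(const std::vector<FlowEdge>& edges, int x)
{
    int sum = 0;
    for (const FlowEdge& e : edges)
        if (e.v == x) sum += e.flow;
    return sum;
}

// Sum of the flows on the edges entering vertex x.
int inflow(const std::vector<FlowEdge>& edges, int x)
{
    int sum = 0;
    for (const FlowEdge& e : edges)
        if (e.w == x) sum += e.flow;
    return sum;
}

// True if the edge flows satisfy Definition 22.2 in a network with
// vertices 0..V-1, designated source s, and designated sink t.
bool isFlow(const std::vector<FlowEdge>& edges, int V, int s, int t)
{
    assert(0 <= s && s < V && 0 <= t && t < V);
    for (const FlowEdge& e : edges)
        if (e.flow < 0 || e.flow > e.cap) return false;   // capacity constraint
    for (int x = 0; x < V; ++x)
        if (x != s && x != t && inflow(edges, x) != outflow(edges, x))
            return false;                                 // local equilibrium
    return true;
}

// Flows as described for Figure 22.5; capacities are assumed values.
std::vector<FlowEdge> sampleFlow()
{
    return {
        {0, 1, 2, 2}, {0, 2, 3, 2}, {1, 3, 3, 1}, {1, 4, 1, 1},
        {2, 3, 1, 1}, {2, 4, 1, 1}, {3, 5, 2, 2}, {4, 5, 3, 2}
    };
}
```

With source 0 and sink 5, isFlow accepts this example, and outflow at the source and inflow at the sink both evaluate to 4, the value of the flow. Raising the flow on 1-3 to 2 stays within the assumed capacity but breaks equilibrium at vertex 1, so isFlow rejects it.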

Variations on the problem immediately come to mind. Can we allow multiple sources and sinks? Should we be able to handle networks with no sources or sinks? Can we allow flow in either direction in the edges? Can we have capacity restrictions for the vertices instead of or in addition to the restrictions for the edges? As is typical with graph algorithms, separating restrictions that are trivial to handle from those that have profound implications can be a challenge. We investigate this challenge and give examples of reducing to maxflow a variety of problems that seem different in character, after we consider algorithms to solve the basic problem, in Sections 22.2 and 22.3.

Figure 22.7 Flow equilibrium

This diagram illustrates the preservation of flow equilibrium when we merge sets of vertices. The two smaller figures represent any two disjoint sets of vertices, and the letters represent flow in sets of edges as indicated: A is the amount of flow into the set on the left from outside the set on the right, x is the amount of flow into the set on the left from the set on the right, and so forth. Now, if we have flow equilibrium in the two sets, then we must have

A + x = B + y

for the set on the left and

C + y = D + x

for the set on the right. Adding these two equations and canceling the x + y terms, we conclude that

A + C = B + D,

or inflow is equal to outflow for the union of the two sets.

The characteristic property of flows is the local equilibrium condition that inflow be equal to outflow at each internal vertex. There is no such constraint on capacities; indeed, the imbalance between total capacity of incoming edges and total capacity of outgoing edges is what characterizes the maxflow problem. The equilibrium constraint has to hold at each and every internal vertex, and it turns out that this local property determines global movement through the network, as well. Although this idea is intuitive, it needs to be proved.

Property 22.1 Any st-flow has the property that outflow from s is equal to the inflow to t.

Proof: (We use the term st-flow to mean “flow in an st-network.”) Augment the network with an edge from a dummy vertex into s, with flow and capacity equal to the outflow from s, and with an edge from t to another dummy vertex, with flow and capacity equal to the inflow to t. Then, we can prove a more general property by induction: Inflow is equal to outflow for any set of vertices (not including the dummy vertices).

This property is true for any single vertex, by local equilibrium. Now, assume that it is true for a given set of vertices S and that we add a single vertex v to make the set S′ = S ∪ {v}. To compute inflow and outflow for S′, note that each edge from v to some vertex in S reduces outflow (from v) by the same amount as it reduces inflow (to S); each edge to v from some vertex in S reduces inflow (to v) by the same amount as it reduces outflow (from S); and all other edges provide inflow or outflow for S′ if and only if they do so for S or v. Thus, inflow and outflow are equal for S′, and the value of the flow is equal to the sum of the values of the flows of v and S minus the sum of the flows on the edges connecting v to a vertex in S (in either direction).

Applying this property to the set of all the network’s vertices, we find that the source’s inflow from its associated dummy vertex (which is equal to the source’s outflow) is equal to the sink’s outflow to its associated dummy vertex (which is equal to the sink’s inflow).
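The general property used in this proof (inflow equals outflow for every set of vertices) can be checked by brute force on a small example. The sketch below is only an illustration under stated assumptions: instead of two dummy vertices it closes the network with a single return edge from the sink to the source, carrying the value of the flow, which plays the same role as the dummy edges. The names FlowEdge, netFlow, everySetBalanced, and sampleCirculation are hypothetical, and the flows are those described for Figure 22.5.

```cpp
#include <cassert>
#include <vector>

// Hypothetical edge record for this sketch: a directed edge v-w and its flow.
struct FlowEdge { int v, w, flow; };

// Inflow minus outflow for the vertex set encoded by the bitmask `set`
// (bit x is 1 if vertex x belongs to the set).
int netFlow(const std::vector<FlowEdge>& edges, unsigned set)
{
    int net = 0;
    for (const FlowEdge& e : edges) {
        bool vIn = ((set >> e.v) & 1u) != 0;
        bool wIn = ((set >> e.w) & 1u) != 0;
        if (!vIn && wIn) net += e.flow;   // edge enters the set
        if (vIn && !wIn) net -= e.flow;   // edge leaves the set
    }
    return net;
}

// True if inflow equals outflow for every one of the 2^V vertex sets.
bool everySetBalanced(const std::vector<FlowEdge>& edges, int V)
{
    assert(0 < V && V < 32);              // the bitmask must fit in an unsigned
    for (unsigned set = 0; set < (1u << V); ++set)
        if (netFlow(edges, set) != 0) return false;
    return true;
}

// The flow described for Figure 22.5, plus an assumed return edge 5-0
// that carries the value of the flow (4) back to the source.
std::vector<FlowEdge> sampleCirculation()
{
    return {
        {0, 1, 2}, {0, 2, 2}, {1, 3, 1}, {1, 4, 1},
        {2, 3, 1}, {2, 4, 1}, {3, 5, 2}, {4, 5, 2},
        {5, 0, 4}
    };
}
```

Every set is balanced in this example. If the return edge is dropped, the set {0, 1, 2} has net flow -4: four units leave it and nothing enters, which is the value of the flow showing up as an imbalance.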

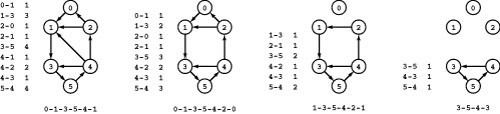

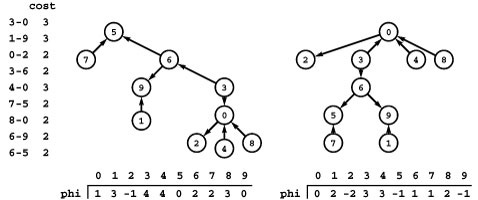

Figure 22.8 Cycle flow representation

This figure demonstrates that the circulation at left decomposes into the four cycles 1-3-5-4-1, 0-1-3-5-4-2-0, 1-3-5-4-2-1, 3-5-4-3, with weights 2, 1, 1, and 3, respectively. Each cycle’s edges appear in its respective column, and summing each edge’s weight from each cycle in which it appears (across its respective row) gives its weight in the circulation.

Corollary The value of the flow for the union of two sets of vertices is equal to the sum of the values of the flows for the two sets minus the sum of the weights of the edges that connect a vertex in one to a vertex in the other.

Proof: The proof just given for a set S and a vertex v still works if we replace v by a set T (which is disjoint from S) in the proof. An example of this property is illustrated in Figure 22.7.

We can dispense with the dummy vertices in the proof of Property 22.1, augment any flow network with an edge from t to s with flow and capacity equal to the network’s value, and know that inflow is equal to outflow for any set of nodes in the augmented network. Such a flow is called a circulation, and this construction demonstrates that the maxflow problem reduces to the problem of finding a circulation that maximizes the flow along a given edge. This formulation simplifies our discussion in some situations. For example, it leads to an interesting alternate representation of flows as a set of cycles, as illustrated in Figure 22.8.

Given a set of cycles and a flow value for each cycle, it is easy to compute the corresponding circulation by following through each cycle and adding the indicated flow value to each edge. The converse property is more surprising: We can find a set of cycles (with a flow value for each) that is equivalent to any given circulation.

Property 22.2 (Flow decomposition theorem) Any circulation can be represented as flow along a set of at most E directed cycles.

Proof: A simple algorithm establishes this result. Iterate the following process as long as there is any edge that has flow: Starting with any edge that has flow, follow any edge leaving that edge’s destination vertex that has flow and continue until encountering a vertex that has already been visited (a cycle has been detected). Go back around the cycle to find an edge with minimal flow; then reduce the flow on every edge in the cycle by that amount. Each iteration of this process reduces the flow on at least one edge to 0, so there are at most E cycles.
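The process in this proof can be sketched directly in code. The version below is an illustration under simplifying assumptions, not the book's implementation: it stores the circulation in a V-by-V matrix (so it assumes no parallel edges), and the names Matrix, Cycle, decompose, rebuild, and sampleCirculation are hypothetical. The sample weights are obtained by summing the four cycles listed in the caption of Figure 22.8.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

using Matrix = std::vector<std::vector<int>>;   // f[v][w] = flow on edge v-w

struct Cycle {
    std::vector<int> vertices;  // v0, v1, ..., vk-1; the edge vk-1 to v0 closes it
    int amount;                 // flow carried around the cycle
};

// Decompose a circulation into cycles, following the proof of Property 22.2.
std::vector<Cycle> decompose(Matrix f)
{
    const int V = static_cast<int>(f.size());
    std::vector<Cycle> cycles;
    for (;;) {
        // Find the tail vertex of any edge that still has flow.
        int start = -1;
        for (int v = 0; v < V && start < 0; ++v)
            for (int w = 0; w < V; ++w)
                if (f[v][w] > 0) { start = v; break; }
        if (start < 0) break;                     // no flow left: done

        // Follow edges with flow until a vertex is seen for the second time.
        std::vector<int> path, pos(V, -1);
        int v = start;
        while (pos[v] < 0) {
            pos[v] = static_cast<int>(path.size());
            path.push_back(v);
            int next = -1;
            for (int w = 0; w < V; ++w)
                if (f[v][w] > 0) { next = w; break; }
            assert(next >= 0);                    // guaranteed by equilibrium
            v = next;
        }

        // The cycle is the part of the path from the first visit to v onward.
        Cycle c;
        c.vertices.assign(path.begin() + pos[v], path.end());
        const int k = static_cast<int>(c.vertices.size());
        c.amount = f[c.vertices[k - 1]][c.vertices[0]];
        for (int i = 0; i + 1 < k; ++i)
            c.amount = std::min(c.amount, f[c.vertices[i]][c.vertices[i + 1]]);
        for (int i = 0; i < k; ++i)               // at least one edge drops to 0
            f[c.vertices[i]][c.vertices[(i + 1) % k]] -= c.amount;
        cycles.push_back(c);
    }
    return cycles;
}

// Inverse operation: add each cycle's amount to every edge on the cycle.
Matrix rebuild(const std::vector<Cycle>& cycles, int V)
{
    Matrix f(V, std::vector<int>(V, 0));
    for (const Cycle& c : cycles) {
        const int k = static_cast<int>(c.vertices.size());
        for (int i = 0; i < k; ++i)
            f[c.vertices[i]][c.vertices[(i + 1) % k]] += c.amount;
    }
    return f;
}

// Edge weights derived by summing the cycles in the caption of Figure 22.8.
Matrix sampleCirculation()
{
    Matrix f(6, std::vector<int>(6, 0));
    f[0][1] = 1; f[1][3] = 4; f[2][0] = 1; f[2][1] = 1; f[3][5] = 7;
    f[4][1] = 2; f[4][2] = 2; f[4][3] = 3; f[5][4] = 7;
    return f;
}
```

Because each iteration follows the lowest-numbered outgoing edge that has flow, this sketch happens to recover the four cycles of Figure 22.8 on the sample input, with amounts 2, 1, 1, and 3. A different choice of edges could yield a different, equally valid decomposition. The scan for an edge with flow is quadratic in V per iteration, which is acceptable for illustration but not for large networks.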

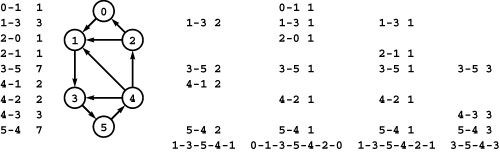

Figure 22.9 Cycle flow decomposition process

To decompose any circulation into a set of cycles, we iterate the following process: Follow any path until encountering a node for the second time, then find the minimum weight on the indicated cycle, then subtract that weight from each edge on the cycle and remove any edge whose weight becomes 0. For example, the first iteration is to follow the path 0-1-3-5-4-1 to find the cycle 1-3-5-4-1, then subtract 1 from the weights of each of the edges on the cycle, which causes us to remove 4-1 because its weight becomes 0. In the second iteration, we remove 0-1 and 2-0; in the third iteration, we remove 1-3, 4-2, and 2-1; and in the fourth iteration, we remove 3-5, 5-4, and 4-3.

Figure 22.9 illustrates the process described in the proof. For st-flows, applying this property to the circulation created by the addition of an edge from t to s gives the result that any st-flow can be represented as flow along a set of at most E directed paths, each of which is either a path from s to t or a cycle.
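The process in the proof of Property 22.2 can be sketched in code. The following is a minimal illustration, not one of the chapter's programs: it assumes a circulation stored as a plain edge list, and the names `FlowEdge`, `Cycle`, and `decompose` are hypothetical. It favors clarity over speed, rescanning the edge list at every step.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct FlowEdge { int v, w, flow; };                   // directed edge v-w carrying flow
struct Cycle { std::vector<int> edges; int amount; };  // edge indices and the cycle's flow value

// Decompose a circulation (inflow equals outflow at every vertex) into cycles.
// The edge list is taken by value, so the caller's flows are left untouched.
std::vector<Cycle> decompose(int V, std::vector<FlowEdge> edges) {
    std::vector<Cycle> cycles;
    for (;;) {
        // Find any edge that still has flow.
        int start = -1;
        for (int i = 0; i < (int)edges.size(); i++)
            if (edges[i].flow > 0) { start = i; break; }
        if (start == -1) break;

        // Follow edges that have flow until a vertex is reached a second time.
        std::vector<int> posOnPath(V, -1);  // position in path of the edge leaving v
        std::vector<int> path;              // edge indices, in order of traversal
        int v = edges[start].v;
        while (posOnPath[v] == -1) {
            int next = -1;
            for (int i = 0; i < (int)edges.size(); i++)
                if (edges[i].v == v && edges[i].flow > 0) { next = i; break; }
            assert(next != -1);             // equilibrium guarantees an outgoing edge with flow
            posOnPath[v] = (int)path.size();
            path.push_back(next);
            v = edges[next].w;
        }

        // The edges from posOnPath[v] to the end of the path form the cycle.
        Cycle c;
        c.edges.assign(path.begin() + posOnPath[v], path.end());
        c.amount = edges[c.edges[0]].flow;
        for (int e : c.edges) c.amount = std::min(c.amount, edges[e].flow);
        for (int e : c.edges) edges[e].flow -= c.amount;  // at least one edge drops to 0
        cycles.push_back(c);
    }
    return cycles;
}
```

Because every iteration empties at least one edge, the number of cycles returned never exceeds the number of edges, which is the bound that the property states.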

Corollary Any st-network has a maxflow such that the subgraph induced by nonzero flow values is acyclic.

Proof: Cycles that do not contain t-s do not contribute to the value of the flow, so we can change the flow to 0 along any such cycle without changing the value of the flow.

Corollary Any st-network has a maxflow that can be represented as flow along a set of at most E directed paths from s to t.

Proof: Immediate.

This representation provides a useful insight into the nature of flows that is helpful in the design and analysis of maxflow algorithms.

On the one hand, we might consider a more general formulation of the maxflow problem where we allow for multiple sources and sinks. Doing so would allow our algorithms to be used for a broader range of applications. On the other hand, we might consider special cases, such as restricting attention to acyclic networks. Doing so might make the problem easier to solve. In fact, as we see in Section 22.4, these variants are equivalent in difficulty to the version that we are considering. Therefore, in the first case, we can adapt our algorithms and implementations to the broader range of applications; in the second case, we cannot expect an easier solution. In our figures, we use acyclic networks because the examples are easier to understand when they have an implicit flow direction (down the page), but our implementations allow networks with cycles.

To implement maxflow algorithms, we use the GRAPH class of Chapter 20, but with pointers to a more sophisticated EDGE class. Instead of the single weight that we used in Chapters 20 and 21, we use pcap and pflow private data members (with cap() and flow() public member functions that return their values) for capacity and flow, respectively. Even though networks are directed graphs, our algorithms need to traverse edges in both directions, so we use the undirected graph representation from Chapter 20 and the member function from to distinguish u-v from v-u.

This approach allows us to separate the abstraction needed by our algorithms (edges going in both directions) from the client’s concrete data structure and leaves a simple goal for our algorithms: Assign values to the flow data members in the client’s edges that maximize flow through the network. Indeed, a critical component of our implementations involves a changing network abstraction that is dependent on flow values and implemented with EDGE member functions. We will consider an EDGE implementation (Program 22.2) in Section 22.2.

Since flow networks are typically sparse, we use an adjacency-lists-based GRAPH representation like the SparseMultiGRAPH implementation of Program 20.5. More important, typical flow networks may have multiple edges (of varying capacities) connecting two given vertices. This situation requires no special treatment with SparseMultiGRAPH, but with an adjacency-matrix–based representation, clients have to collapse such edges into a single edge.

In the network representations of Chapters 20 and 21, we used the convention that weights are real numbers between 0 and 1. In this chapter, we assume that the weights (capacities and flows) are all m-bit integers (between 0 and $2^m - 1$). We do so for two primary reasons. First, we frequently need to test for equality among linear combinations of weights, and doing so can be inconvenient in floating-point representations. Second, the running times of our algorithms can depend on the relative values of the weights, and the parameter $M = 2^m$ gives us a convenient way to bound weight values. For example, the ratio of the largest weight to the smallest nonzero weight is less than M. The use of integer weights is but one of many possible alternatives (see, for example, Exercise 20.8) that we could choose to address these problems.

We sometimes refer to edges as having infinite capacity, or, equivalently, as being uncapacitated. That might mean that we do not compare flow against capacity for such edges, or we might use a sentinel value that is guaranteed to be larger than any flow value.

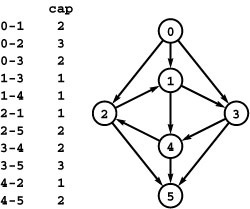

Figure 22.10 Flow network for exercises

This flow network is the subject of several exercises throughout the chapter.

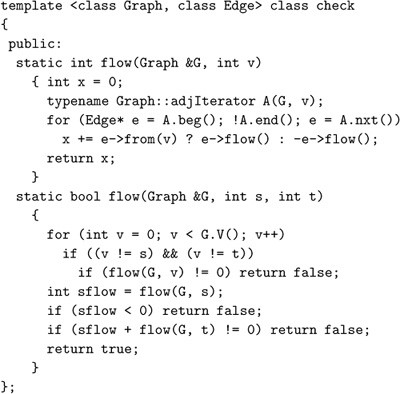

Program 22.1 is a client function that checks whether a flow satisfies the equilibrium condition at every node and returns that flow’s value if the flow does. Typically, we might include a call to this function as the final action of a maxflow algorithm. Despite our confidence as mathematicians in Property 22.1, our paranoia as programmers dictates that we also check that the flow out of the source is equal to the flow into the sink. It might also be prudent to check that no edge’s flow exceeds that edge’s capacity and that the data structures are internally consistent (see Exercise 22.12).
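To make the checks just described concrete, here is a minimal sketch under simplifying assumptions. It is not Program 22.1: it works on a plain edge list rather than the GRAPH and EDGE classes, the names `FlowEdge` and `checkFlow` are hypothetical, and it assumes that the flow's value is nonnegative so that -1 can signal failure.

```cpp
#include <cassert>
#include <vector>

struct FlowEdge { int v, w, flow; };   // directed edge v-w carrying flow

// Returns the value of the flow, or -1 if the flow is not in equilibrium.
long long checkFlow(int V, const std::vector<FlowEdge>& edges, int s, int t) {
    assert(s != t);
    std::vector<long long> net(V, 0);  // outflow minus inflow at each vertex
    for (const FlowEdge& e : edges) {
        net[e.v] += e.flow;
        net[e.w] -= e.flow;
    }
    for (int v = 0; v < V; v++)
        if (v != s && v != t && net[v] != 0) return -1;  // imbalance at an internal vertex
    if (net[s] != -net[t]) return -1;  // flow out of the source must equal flow into the sink
    return net[s];
}
```

The final test is redundant in exact arithmetic, since balance at every internal vertex forces it, but it is the kind of inexpensive consistency check that the text recommends.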

Exercises

• 22.1 Find two different maxflows in the flow network shown in Figure 22.10.

22.2 Under our assumption that capacities are positive integers less than M, what is the maximum possible flow value for any st-network with V vertices and E edges? Give two answers, depending on whether or not parallel edges are allowed.

• 22.3 Give an algorithm to solve the maxflow problem for the case that the network forms a tree if the sink is removed.

• 22.4 Give a family of networks with E edges having circulations where the process described in the proof of Property 22.2 produces E cycles.

22.5 Write an EDGE class that represents capacities and flows as real numbers between 0 and 1 that are expressed with d digits to the right of the decimal point, where d is a fixed constant.

• 22.6 Write a program that builds a flow network by reading edges (pairs of integers between 0 and V − 1) with integer capacities from standard input. Assume that the capacity upper bound M is less than $2^{20}$.

22.7 Extend your solution to Exercise 22.6 to use symbolic names instead of integers to refer to vertices (see Program 17.10).

• 22.8 Find a large network online that you can use as a vehicle for testing flow algorithms on realistic data. Possibilities include transportation networks (road, rail, or air), communications networks (telephone or computer connections), or distribution networks. If capacities are not available, devise a reasonable model to add them. Write a program that uses the interface of Program 22.2 to implement flow networks from your data, perhaps using your solution to Exercise 22.7. If warranted, develop additional private functions to clean up the data, as described in Exercises 17.33–35.

22.9 Write a random-network generator for sparse networks with capacities between 0 and $2^{20}$, based on Program 17.7. Use a separate class for capacities and develop two implementations: one that generates uniformly distributed capacities and another that generates capacities according to a Gaussian distribution. Implement client programs that generate random networks for both weight distributions with a well-chosen set of values of V and E, so that you can use them to run empirical tests on graphs drawn from various distributions of edge weights.

22.10 Write a random-network generator for dense networks with capacities between 0 and $2^{20}$, based on Program 17.8 and edge-capacity generators as described in Exercise 22.9. Write client programs to generate random networks for both weight distributions with a well-chosen set of values of V and E, so that you can use them to run empirical tests on graphs drawn from these models.

• 22.11 Write a program that generates V random points in the plane, then builds a flow network with edges (in both directions) connecting all pairs of points within a given distance d of each other (see Program 3.20), setting each edge’s capacity using one of the random models described in Exercise 22.9. Determine how to set d so that the expected number of edges is E.

• 22.12 Modify Program 22.1 to also check that flow does not exceed capacity for all edges.

• 22.13 Find all the maxflows in the network depicted in Figure 22.11. Give cycle representations for each of them.

22.14 Write a function that reads values and cycles (one per line, in the format illustrated in Figure 22.8) and builds a network having the corresponding flow.

22.15 Write a client function that finds the cycle representation of a network’s flow using the method described in the proof of Property 22.2 and prints values and cycles (one per line, in the format illustrated in Figure 22.8).

• 22.16 Write a function that removes cycles from a network’s st-flow.

• 22.17 Write a program that assigns integer flows to each edge in any given digraph that contains no sinks and no sources such that the digraph is a flow network that is a circulation.

• 22.18 Suppose that a flow represents goods to be transferred by trucks between cities, with the flow on edge u-v representing the amount to be taken from city u to v in a given day. Write a client function that prints out daily orders for truckers, telling them how much and where to pick up and how much and where to drop off. Assume that there are no limits on the supply of truckers and that nothing leaves a given distribution point until everything has arrived.

Figure 22.11 Flow network with cycle

This flow network is like the one depicted in Figure 22.10, but with the direction of two of the edges reversed, so there are two cycles. It is also the subject of several exercises throughout the chapter.

22.2 Augmenting-Path Maxflow Algorithms

An effective approach to solving maxflow problems was developed by L. R. Ford and D. R. Fulkerson in 1962. It is a generic method for

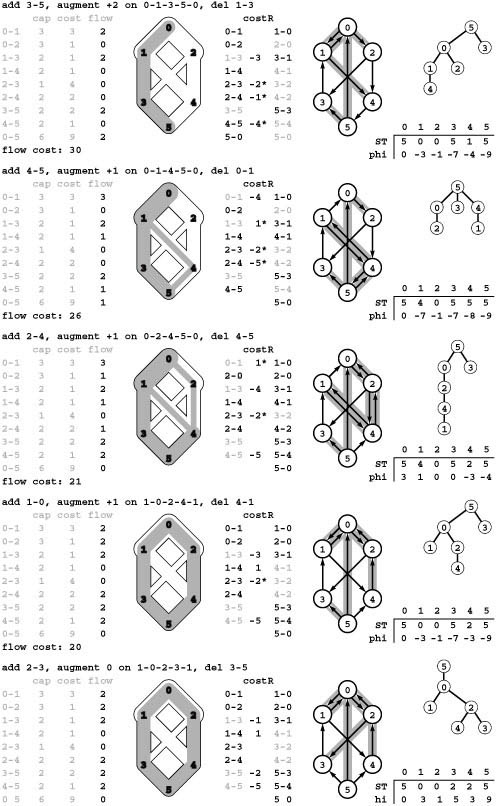

Figure 22.12 Augmenting flow along a path

This sequence shows the process of increasing flow in a network along a path of forward and backward edges. Starting with the flow depicted at the left and reading from left to right, we increase the flow in 0-2 and then 2-3 (additional flow is shown in black). Then we decrease the flow in 1-3 (shown in white) and divert it to 1-4 and then 4-5, resulting in the flow at the right.

increasing flows incrementally along paths from source to sink that serves as the basis for a family of algorithms. It is known as the Ford– Fulkerson method in the classical literature; the more descriptive term augmenting-path method is also widely used.

Consider any directed path (not necessarily a simple one) from source to sink through an st-network. Let x be the minimum of the unused capacities of the edges on the path. We can increase the network’s flow value by at least x, by increasing the flow in all edges on the path by that amount. Iterating this action, we get a first attempt at computing flow in a network: Find another path, increase the flow along that path, and continue until all paths from source to sink have at least one full edge (so that we can no longer increase flow in this way). This algorithm will compute the maxflow in some cases, but will fall short in other cases. Figure 22.6 illustrates a case where it fails.

To improve the algorithm such that it always finds a maxflow, we consider a more general way to increase the flow, along any path from source to sink through the network’s underlying undirected graph. The edges on any such path are either forward edges, which go with the flow (when we traverse the path from source to sink, we traverse the edge from its source vertex to its destination vertex) or backward edges, which go against the flow (when we traverse the path from source to sink, we traverse the edge from its destination vertex to its source vertex). Now, for any path with no full forward edges and no empty backward edges, we can increase the amount of flow in the network by increasing flow in forward edges and decreasing flow in backward edges. The amount by which the flow can be increased is limited by the minimum of the unused capacities in the forward edges and the flows in the backward edges. Program 22.12 depicts an example. In the new flow, at least one of the forward edges along the

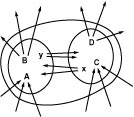

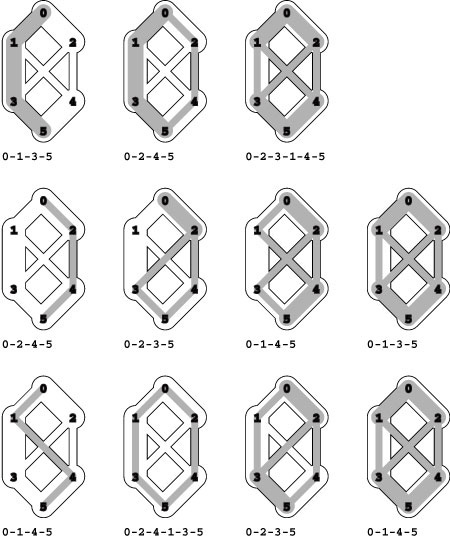

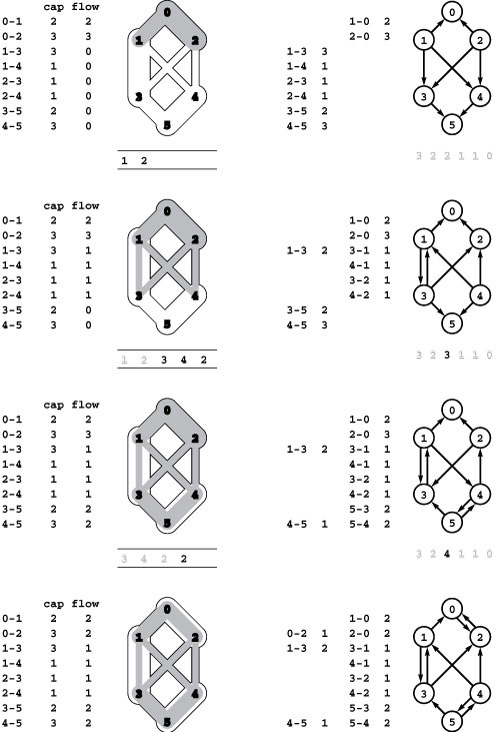

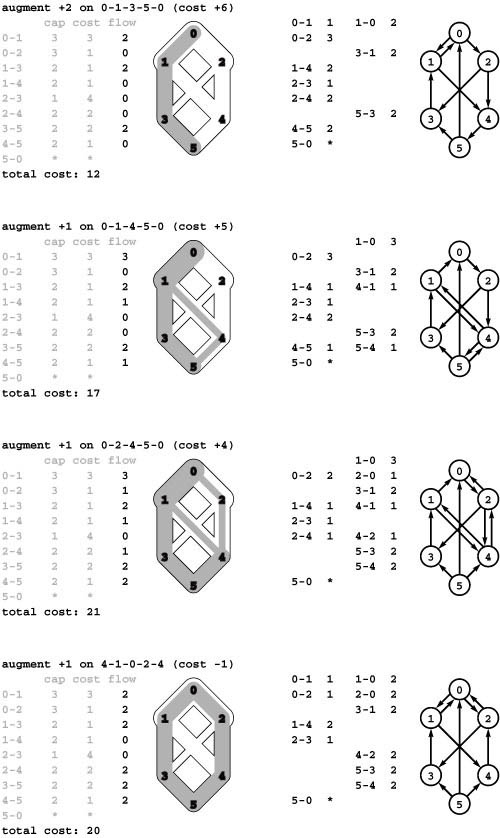

Figure 22.13 Augmenting-path sequences

In these three examples, we augment a flow along different sequences of augmenting paths until no augmenting path can be found. The flow that results in each case is a maximum flow. The key classical theorem in the theory of network flows states that we get a maximum flow in any network, no matter what sequence of paths we use (see Property 22.5).

path becomes full or at least one of the backward edges along the path becomes empty.

The process just sketched is the basis for the classical Ford–Fulkerson maxflow algorithm (augmenting-path method). We summarize it as follows:

Start with zero flow everywhere. Increase the flow along any path from source to sink with no full forward edges or empty backward edges, continuing until there are no such paths in the network.

Remarkably, this method always finds a maxflow, no matter how we choose the paths. Like the MST method discussed in Section 20.1 and the Bellman–Ford shortest-paths method discussed in Section 21.7, it is a generic algorithm that is useful because it establishes the correctness of a whole family of more specific algorithms. We are free to use any method whatever to choose the path.
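One way to see the generic method in action is the following minimal sketch. It is only one arbitrary instance of the method: it assumes a plain edge list instead of the chapter's GRAPH and EDGE classes, uses depth-first search to choose paths, and the names `FlowEdge`, `augment`, and `maxflow` are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <climits>
#include <vector>

struct FlowEdge { int v, w, cap, flow; };   // directed edge v-w

// Depth-first search for a path from v to t that has no full forward edges
// and no empty backward edges. On success, adjusts the flow along the path
// and returns the amount by which the flow increased; otherwise returns 0.
int augment(std::vector<FlowEdge>& edges, std::vector<bool>& seen,
            int v, int t, int limit) {
    if (v == t) return limit;
    seen[v] = true;
    for (FlowEdge& e : edges) {
        if (e.v == v && !seen[e.w] && e.flow < e.cap) {      // forward edge with unused capacity
            int d = augment(edges, seen, e.w, t, std::min(limit, e.cap - e.flow));
            if (d > 0) { e.flow += d; return d; }
        } else if (e.w == v && !seen[e.v] && e.flow > 0) {   // backward edge that has flow
            int d = augment(edges, seen, e.v, t, std::min(limit, e.flow));
            if (d > 0) { e.flow -= d; return d; }
        }
    }
    return 0;
}

// Start with zero flow everywhere; augment until no augmenting path remains.
int maxflow(int V, std::vector<FlowEdge>& edges, int s, int t) {
    assert(s != t);
    for (FlowEdge& e : edges) e.flow = 0;
    int value = 0;
    for (;;) {
        std::vector<bool> seen(V, false);
        int d = augment(edges, seen, s, t, INT_MAX);
        if (d == 0) break;
        value += d;
    }
    return value;
}
```

On a network with edges 0-1, 1-2, 0-2, 1-3, and 2-3, all of capacity 1, this search first sends a unit along 0-1-2-3 and then must use 1-2 as a backward edge (path 0-2-1-3) to reach the maxflow value of 2, which is exactly the situation that forward-only augmentation cannot handle.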

Figure 22.13 illustrates several different sequences of augmenting paths that all lead to a maxflow for a sample network. Later in this section, we examine several algorithms that compute sequences of augmenting paths, all of which lead to a maxflow. The algorithms differ in the number of augmenting paths they compute, the lengths of the paths, and the costs of finding each path, but they all implement the Ford–Fulkerson algorithm and find a maxflow.

To show that any flow computed by any implementation of the Ford–Fulkerson algorithm indeed has maximal value, we show that this fact is equivalent to a key fact known as the maxflow–mincut theorem. Understanding this theorem is a crucial step in understanding network-flow algorithms. As suggested by its name, the theorem is based on a direct relationship between flows and cuts in networks, so we begin by defining terms that relate to cuts.

Recall from Section 20.1 that a cut in a graph is a partition of the vertices into two disjoint sets, and a crossing edge is an edge that connects a vertex in one set to a vertex in the other set. For flow networks, we refine these definitions as follows (see Figure 22.14).

Definition 22.3 An st-cut is a cut that places vertex s in one of its sets and vertex t in the other.

Each crossing edge corresponding to an st-cut is either an st-edge that goes from a vertex in the set containing s to a vertex in the set containing t, or a ts-edge that goes in the other direction. We sometimes refer to the set of crossing edges as a cut set. The capacity of an st-cut in a flow network is the sum of the capacities of that cut’s st-edges, and the flow across an st-cut is the difference between the sum of the flows in that cut’s st-edges and the sum of the flows in that cut’s ts-edges.
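These two definitions translate directly into code. The sketch below assumes a plain edge list and a boolean vector marking the vertices on the source side of the cut; the names `FlowEdge`, `CutInfo`, and `evaluateCut` are hypothetical.

```cpp
#include <cassert>
#include <vector>

struct FlowEdge { int v, w, cap, flow; };   // directed edge v-w
struct CutInfo { int capacity; int flow; };

// inS[v] is true if v belongs to the set containing s.
CutInfo evaluateCut(const std::vector<FlowEdge>& edges, const std::vector<bool>& inS) {
    CutInfo r = {0, 0};
    for (const FlowEdge& e : edges) {
        assert(e.v < (int)inS.size() && e.w < (int)inS.size());
        if (inS[e.v] && !inS[e.w]) {          // st-edge: counts toward capacity and flow
            r.capacity += e.cap;
            r.flow += e.flow;
        } else if (!inS[e.v] && inS[e.w]) {   // ts-edge: its flow is subtracted
            r.flow -= e.flow;
        }
    }
    return r;
}
```

For example, take edges 0-1, 1-2, 0-2, 1-3, and 2-3, all of capacity 1, with one unit of flow along 0-1-2-3. For the cut that puts 0 and 2 on the source side, the st-edges 0-1 and 2-3 give capacity 2, and the flow across the cut is 1 + 1 − 1 = 1 because the ts-edge 1-2 carries one unit.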

Removing a cut set divides a connected graph into two connected components, leaving no path connecting any vertex in one to any vertex in the other. Removing all the edges in an st-cut of a network leaves no path connecting s to t in the underlying undirected graph, but adding any one of them back could create such a path.

Cuts are the appropriate abstraction for the application mentioned at the beginning of the chapter where a flow network describes the movement of supplies from a depot to the troops of an army. To cut off supplies completely and in the most economical manner, an enemy might solve the following problem.

Figure 22.14 st-cut terminology

An st-network has one source s and one sink t. An st-cut is a partition of the vertices into a set containing s (white) and another set containing t (black). The edges that connect a vertex in one set with a vertex in the other (highlighted in gray) are known as a cut set. A forward edge goes from a vertex in the set containing s to a vertex in the set containing t; a backward edge goes the other way. There are four forward edges and two backward edges in the cut set shown here.

Minimum cut Given an st-network, find an st-cut such that the capacity of no other cut is smaller. For brevity, we refer to such a cut as a mincut, and to the problem of finding one in a network as the mincut problem.

The mincut problem is a generalization of the connectivity problems that we discussed briefly in Section 18.6. We analyze specific relationships in detail in Section 22.4.

The statement of the mincut problem includes no mention of flows, and these definitions might seem to digress from our discussion of the augmenting-path algorithm. On the surface, computing a mincut (a set of edges) seems easier than computing a maxflow (an assignment of weights to all the edges). On the contrary, the key fact of this chapter is that the maxflow and mincut problems are intimately related. The augmenting-path method itself, in conjunction with two facts about flows and cuts, provides a proof.

Property 22.3 For any st-flow, the flow across each st-cut is equal to the value of the flow.

Proof: This property is an immediate consequence of the generalization of Property 22.1 that we discussed in the associated proof (see Figure 22.7). Add an edge t-s with flow equal to the value of the flow such that inflow is equal to outflow for any set of vertices. Then, for any st-cut where $C_s$ is the vertex set containing s and $C_t$ is the vertex set containing t, the inflow to $C_s$ is the inflow to s (the value of the flow) plus the sum of the flows in the backward edges across the cut; and the outflow from $C_s$ is the sum of the flows in the forward edges across the cut. Setting these two quantities equal establishes the desired result.

Property 22.4 No st-flow’s value can exceed the capacity of any st-cut.

Proof: The flow across a cut certainly cannot exceed that cut’s capacity, so this result is immediate from Property 22.3.

In other words, cuts represent bottlenecks in networks. In our military application, an enemy that is not able to cut off army troops completely from their supplies could still be sure that supply flow is restricted to at most the capacity of any given cut. We certainly might imagine that the cost of making a cut is proportional to its capacity in this application, thus motivating the invading army to find a solution to the mincut problem. More important, these facts also imply, in particular, that no flow can have value higher than the capacity of any minimum cut.

Figure 22.15 All st-cuts

This list gives, for all the st-cuts of the network at left, the vertices in the set containing s, the vertices in the set containing t, forward edges, backward edges, and capacity (sum of capacities of the forward edges). For any flow, the flow across all the cuts (flow in forward edges minus flow in backward edges) is the same. For example, for the flow in the network at left, the flow across the cut separating 0 1 3 and 2 4 5 is 2 + 1 + 2 (the flow in 0-2, 1-4, and 3-5, respectively) minus 1 (the flow in 2-3), or 4. This calculation also results in the value 4 for every other cut in the network, and the flow is a maximum flow because its value is equal to the capacity of the minimum cut (see Property 22.5). There are two minimum cuts in this network.

Property 22.5 (Maxflow–mincut theorem) The maximum value among all st-flows in a network is equal to the minimum capacity among all st-cuts.

Proof: It suffices to exhibit a flow and a cut such that the value of the flow is equal to the capacity of the cut. The flow has to be a maxflow because no other flow value can exceed the capacity of the cut and the cut has to be a minimum cut because no other cut capacity can be lower than the value of the flow (by Property 22.4). The Ford–Fulkerson algorithm gives precisely such a flow and cut: When the algorithm terminates, identify the first full forward or empty backward edge on every path from s to t in the graph. Let $C_s$ be the set of all vertices that can be reached from s with an undirected path that does not contain a full forward or empty backward edge, and let $C_t$ be the remaining vertices. Then, t must be in $C_t$, so $(C_s, C_t)$ is an st-cut, whose cut set consists entirely of full forward or empty backward edges. The flow across this cut is equal to the cut’s capacity (since forward edges are full and the backward edges are empty) and also to the value of the network flow (by Property 22.3).

This proof also establishes explicitly that the Ford–Fulkerson algorithm finds a maxflow. No matter what method we choose to find an augmenting path, and no matter what paths we find, we always end up with a cut whose flow is equal to its capacity, and therefore also is equal to the value of the network’s flow, which therefore must be a maxflow.
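The construction in the proof of Property 22.5 can be carried out mechanically once a maxflow is in hand. The following sketch assumes a plain edge list whose flow values already form a maxflow; the names `FlowEdge`, `sourceSide`, and `cutCapacity` are hypothetical.

```cpp
#include <cassert>
#include <vector>

struct FlowEdge { int v, w, cap, flow; };   // directed edge v-w

// Marks the vertices reachable from s along undirected paths that contain
// no full forward edge and no empty backward edge.
std::vector<bool> sourceSide(int V, const std::vector<FlowEdge>& edges, int s) {
    assert(0 <= s && s < V);
    std::vector<bool> inCs(V, false);
    std::vector<int> stack;
    stack.push_back(s);
    inCs[s] = true;
    while (!stack.empty()) {
        int v = stack.back();
        stack.pop_back();
        for (const FlowEdge& e : edges) {
            int next = -1;
            if (e.v == v && e.flow < e.cap) next = e.w;    // forward edge that is not full
            else if (e.w == v && e.flow > 0) next = e.v;   // backward edge that is not empty
            if (next != -1 && !inCs[next]) { inCs[next] = true; stack.push_back(next); }
        }
    }
    return inCs;
}

// Capacity of the cut: sum of the capacities of the edges leaving the source side.
int cutCapacity(const std::vector<FlowEdge>& edges, const std::vector<bool>& inCs) {
    int c = 0;
    for (const FlowEdge& e : edges)
        if (inCs[e.v] && !inCs[e.w]) c += e.cap;
    return c;
}
```

If the sink turns out to be on the source side, the flow passed in was not a maxflow, since an augmenting path still exists. Otherwise the capacity returned by `cutCapacity` equals the flow's value, as the theorem asserts.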

Another implication of the correctness of the Ford–Fulkerson algorithm is that, for any flow network with integer capacities, there exists a maxflow solution where the flows are all integers. Each augmenting path increases the flow by a positive integer (the minimum of the unused capacities in the forward edges and the flows in the backward edges, all of which are always positive integers). This fact justifies our decision to restrict our attention to integer capacities and flows. It is possible to design a maxflow with noninteger flows, even when capacities are all integers (see Exercise 22.23), but we do not need to consider such flows. This restriction is important: Generalizing to allow capacities and flows that are real numbers can lead to unpleasant anomalous situations. For example, the Ford–Fulkerson algorithm might lead to an infinite sequence of augmenting paths that does not even converge to the maxflow value (see reference section).

The generic Ford–Fulkerson algorithm does not specify any particular method for finding an augmenting path. Perhaps the most natural way to proceed is to use the generalized graph-search strategy of Section 18.8. To this end, we begin with the following definition.

Definition 22.4 Given a flow network and a flow, the residual network for the flow has the same vertices as the original and one or two edges in the residual network for each edge in the original, defined as follows: For each edge v-w in the original, let f be the flow and c the capacity. If f is positive, include an edge w-v in the residual with capacity f ; and if f is less than c, include an edge v-w in the residual with capacity c-f.

If v-w is empty (f is equal to 0), there is a single corresponding edge v-w with capacity c in the residual; if v-w is full (f is equal to c), there is a single corresponding edge w-v with capacity f in the residual; and if v-w is neither empty nor full, both v-w and w-v are in the residual with their respective capacities.
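Definition 22.4 can be turned into a short function that builds the residual network explicitly. This is for illustration only, under the assumption of a plain edge-list representation; the names `FlowEdge`, `ResidualEdge`, and `residualNetwork` are hypothetical.

```cpp
#include <cassert>
#include <vector>

struct FlowEdge { int v, w, cap, flow; };   // edge v-w in the original network
struct ResidualEdge { int v, w, cap; };     // edge v-w in the residual network

std::vector<ResidualEdge> residualNetwork(const std::vector<FlowEdge>& edges) {
    std::vector<ResidualEdge> res;
    for (const FlowEdge& e : edges) {
        assert(0 <= e.flow && e.flow <= e.cap);
        if (e.flow > 0) {                   // flow present: edge w-v with capacity f
            ResidualEdge back = {e.w, e.v, e.flow};
            res.push_back(back);
        }
        if (e.flow < e.cap) {               // unused capacity: edge v-w with capacity c-f
            ResidualEdge fwd = {e.v, e.w, e.cap - e.flow};
            res.push_back(fwd);
        }
    }
    return res;
}
```

An empty edge contributes one residual edge, a full edge contributes one reversed residual edge, and a partially filled edge contributes both.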

Program 22.2 defines the EDGE class that we use to implement the residual network abstraction with class function members. With such

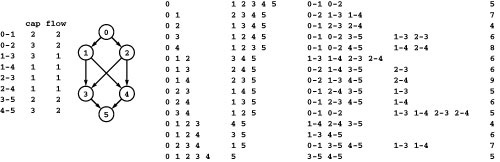

Figure 22.16 Residual networks (augmenting paths)

Finding augmenting paths in a flow network is equivalent to finding directed paths in the residual network that is defined by the flow. For each edge in the flow network, we create an edge in each direction in the residual network: one in the direction of the flow with weight equal to the unused capacity, and one in the opposite direction with weight equal to the flow. We do not include edges of weight 0 in either case. Initially (top), the residual network is the same as the flow network with weights equal to capacities. When we augment along the path 0-1-3-5 (second from top), we fill edges 0-1 and 3-5 to capacity so that they switch direction in the residual network, we reduce the weight of 1-3 to correspond to the remaining flow, and we add the edge 3-1 of weight 2. Similarly, when we augment along the path 0-2-4-5, we fill 2-4 to capacity so that it switches direction, and we have edges in either direction between 0 and 2 and between 4 and 5 to represent flow and unused capacity. After we augment along 0-2-3-1-4-5 (bottom), no directed paths from source to sink remain in the residual network, so there are no augmenting paths.

an implementation, we continue to work exclusively with pointers to client edges. Our algorithms work with the residual network, but they are actually examining capacities and changing flow (through edge pointers) in the client’s edges. The member functions from and P|other| allow us to process edges in either orientation: e.other(v) returns the endpoint of e that is not v. The member functions capRto and addflowRto implement the residual network: If e is a pointer to an edge v-w with capacity c and flow f, then e->capRto(w) is c-f and e->capRto(v) is f; e->addflowRto(w, d) adds d to the flow; and e->addflowRto(v, d) subtracts d from the flow.
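The behavior of these member functions can be illustrated with a minimal class that follows the description above. This is a sketch consistent with the text, not a reproduction of Program 22.2, whose details may differ; the constructor signature and the accessors `v()` and `w()` are assumptions.

```cpp
#include <cassert>

class EDGE {
    int pv, pw, pcap, pflow;
public:
    EDGE(int v, int w, int cap) : pv(v), pw(w), pcap(cap), pflow(0) {}
    int v() const { return pv; }
    int w() const { return pw; }
    int cap() const { return pcap; }
    int flow() const { return pflow; }
    bool from(int v) const { return pv == v; }
    int other(int v) const { return from(v) ? pw : pv; }
    // Residual capacity toward v: the flow if v is the edge's origin
    // (flow that could be diverted), the unused capacity otherwise.
    int capRto(int v) const { return from(v) ? pflow : pcap - pflow; }
    // Push d units toward v in the residual network.
    void addflowRto(int v, int d) {
        assert(0 <= d && d <= capRto(v));
        pflow += from(v) ? -d : d;
    }
};
```

For an edge 0-1 of capacity 5 carrying 3 units, `capRto(1)` is 2 and `capRto(0)` is 3; calling `addflowRto(0, 1)` then reduces the flow to 2.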

Residual networks allow us to use any generalized graph search (see Section 18.8) to find an augmenting path, since any path from source to sink in the residual network corresponds directly to an augmenting path in the original network. Increasing the flow along the path implies making changes in the residual network: For example, at least one edge on the path becomes full or empty, so at least one edge in the residual network changes direction or disappears (but our use of an abstract residual network means that we just check for positive capacity and do not need to actually insert and delete edges). Figure 22.16 shows a sequence of augmenting paths and the corresponding residual networks for an example.

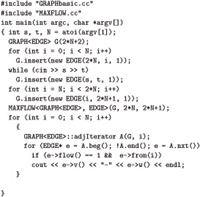

Program 22.3 is a priority-queue–based implementation that encompasses all these possibilities, using the slightly modified version of our PFS graph-search implementation from Program 21.1 that is shown in Program 22.4. This implementation allows us to choose among several different classical implementations of the Ford–Fulkerson algorithm, simply by setting priorities so as to implement various data structures for the fringe.

As discussed in Section 21.2, using a priority queue to implement a stack, queue, or randomized queue for the fringe data structure incurs an extra factor of lg V in the cost of fringe operations. Since we could avoid this cost by using a generalized-queue ADT in an implementation like Program 18.10 with direct implementations, we assume when analyzing the algorithms that the costs of fringe operations are constant in these cases. By using the single implementation in Program 22.3, we emphasize the direct relationships among various Ford–Fulkerson implementations.

Although it is general, Program 22.3 does not encompass all implementations of the Ford–Fulkerson algorithm (see, for example, Exercises 22.36 and 22.38). Researchers continue to develop new ways to implement the algorithm. But the family of algorithms encompassed by Program 22.3 is widely used, gives us a basis for understanding computation of maxflows, and introduces us to straightforward implementations that perform well on practical networks.

As we soon see, these basic algorithmic tools get us simple (and useful, for many applications) solutions to the network-flow problem. A complete analysis establishing which specific method is best is a complex task, however, because their running times depend on

• The number of augmenting paths needed to find a maxflow

• The time needed to find each augmenting path

These quantities can vary widely, depending on the network being processed and on the graph-search strategy (fringe data structure).

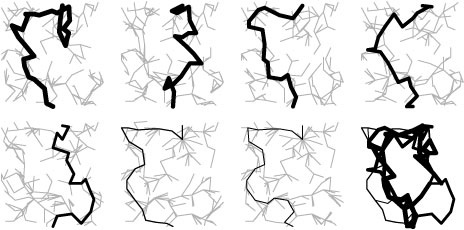

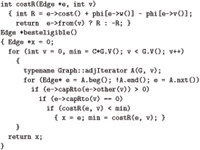

Perhaps the simplest Ford–Fulkerson implementation uses the shortest augmenting path (as measured by the number of edges on the path, not flow or capacity). This method was suggested by Edmonds and Karp in 1972. To implement it, we use a queue for the fringe, either by using the value of an increasing counter for P or by using a queue ADT instead of a priority-queue ADT in Program 22.3. In this case, the search for an augmenting path amounts to breadth-first search (BFS) in the residual network, precisely as described in Sections 18.8 and 21.2. Figure 22.17 shows this implementation of the Ford–Fulkerson method in operation on a sample network. For brevity, we refer to this method as the shortest-augmenting-path maxflow algorithm. As is evident from the figure, the lengths of the augmenting paths form a nondecreasing sequence. Our analysis of this method, in Property 22.7, proves that this property is characteristic.

Figure 22.17 Shortest augmenting paths

This sequence illustrates how the shortest-augmenting-path implementation of the Ford–Fulkerson method finds a maximum flow in a sample network. Path lengths increase as the algorithm progresses: The first four paths in the top row are of length 3; the last path in the top row and all of the paths in the second row are of length 4; the first two paths in the bottom row are of length 5; and the process finishes with two paths of length 7 that each have a backward edge.
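The shortest-augmenting-path method can be sketched compactly if we are willing to store residual capacities in a dense matrix. That representation is an assumption made here for brevity (it collapses parallel edges and is not the adjacency-lists representation used by Program 22.3), and the function name is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <climits>
#include <queue>
#include <vector>

// r[v][w] is the residual capacity from v to w; initially the capacity of v-w.
// The matrix is taken by value, so the caller's capacities are not modified.
int shortestAugmentingPathMaxflow(std::vector<std::vector<int> > r, int s, int t) {
    const int V = (int)r.size();
    assert(s != t);
    int value = 0;
    for (;;) {
        // BFS in the residual network, recording each vertex's parent on the search tree.
        std::vector<int> parent(V, -1);
        std::queue<int> fringe;
        parent[s] = s;
        fringe.push(s);
        while (!fringe.empty() && parent[t] == -1) {
            int v = fringe.front();
            fringe.pop();
            for (int w = 0; w < V; w++)
                if (parent[w] == -1 && r[v][w] > 0) { parent[w] = v; fringe.push(w); }
        }
        if (parent[t] == -1) return value;   // no augmenting path remains

        // Find the bottleneck on the path, then push that much flow along it.
        int d = INT_MAX;
        for (int w = t; w != s; w = parent[w]) d = std::min(d, r[parent[w]][w]);
        for (int w = t; w != s; w = parent[w]) {
            r[parent[w]][w] -= d;            // less unused capacity in the path's direction
            r[w][parent[w]] += d;            // more flow that could later be diverted back
        }
        value += d;
    }
}
```

Because BFS visits vertices in order of their distance from the source, each path found has the fewest possible edges among all augmenting paths in the current residual network.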

Another Ford–Fulkerson implementation suggested by Edmonds and Karp is the following: Augment along the path that increases the flow by the largest amount. The priority value P that is used in Program 22.3 implements this method. This priority makes the algorithm choose edges from the fringe to give the maximum amount of flow that can be pushed through a forward edge or diverted from a backward edge. For brevity, we refer to this method as the maximum-capacity–augmenting-path maxflow algorithm. Figure 22.18 illustrates the algorithm on the same flow network as that in Figure 22.17.
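A minimal sketch of the maximum-capacity idea follows. It assumes a dense matrix of residual capacities and uses `std::priority_queue` as the fringe, so it is an illustration of the technique rather than the PFS implementation of Programs 22.3 and 22.4; the function name is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <climits>
#include <queue>
#include <utility>
#include <vector>

// r[v][w] is the residual capacity from v to w; initially the capacity of v-w.
int maxCapacityAugmentingPathMaxflow(std::vector<std::vector<int> > r, int s, int t) {
    const int V = (int)r.size();
    assert(s != t);
    int value = 0;
    for (;;) {
        std::vector<int> best(V, 0);      // largest bottleneck known for a path from s to v
        std::vector<int> parent(V, -1);
        std::vector<bool> done(V, false);
        std::priority_queue<std::pair<int, int> > fringe;  // (bottleneck, vertex), largest first
        best[s] = INT_MAX;
        fringe.push(std::make_pair(INT_MAX, s));
        while (!fringe.empty()) {
            int v = fringe.top().second;
            fringe.pop();
            if (done[v]) continue;        // stale entry for a vertex already finished
            done[v] = true;
            if (v == t) break;
            for (int w = 0; w < V; w++) {
                int b = std::min(best[v], r[v][w]);
                if (!done[w] && b > best[w]) {
                    best[w] = b;
                    parent[w] = v;
                    fringe.push(std::make_pair(b, w));
                }
            }
        }
        if (best[t] == 0) return value;   // the sink is unreachable in the residual network

        int d = best[t];                  // capacity of the path just found
        for (int w = t; w != s; w = parent[w]) {
            r[parent[w]][w] -= d;
            r[w][parent[w]] += d;
        }
        value += d;
    }
}
```

The search has the same structure as a priority-first shortest-paths search, except that the priority of a vertex is the bottleneck capacity of the best path known to it and larger values are taken from the fringe first.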

These are but two examples (ones we can analyze!) of Ford–Fulkerson implementations. At the end of this section, we consider others. Before doing so, we consider the task of analyzing augmenting-path methods in order to learn their properties and, ultimately, to decide which one will have the best performance.

Figure 22.18 Maximum-capacity augmenting paths

This sequence illustrates how the maximum-capacity–augmenting-path implementation of the Ford–Fulkerson method finds a maxflow in a sample network. Path capacities decrease as the algorithm progresses, but their lengths may increase or decrease. The method needs only nine augmenting paths to compute the same maxflow as the one depicted in Figure 22.17.

In trying to choose among the family of algorithms represented by Program 22.3, we are in a familiar situation. Should we focus on worst-case performance guarantees, or do those represent a mathematical fiction that may not relate to networks that we encounter in practice? This question is particularly relevant in this context, because the classical worst-case performance bounds that we can establish are much higher than the actual performance results that we see for typical graphs.

Many factors further complicate the situation. For example, the worst-case running time for several versions depends not just on V and E, but also on the values of the edge capacities in the network. Developing a maxflow algorithm with fast guaranteed performance has been a tantalizing problem for several decades, and numerous methods have been proposed. Evaluating all these methods for all the types of networks that are likely to be encountered in practice, with sufficient precision to allow us to choose among them, is not as clear-cut as is the same task for other situations that we have studied, such as typical practical applications of sorting or searching algorithms.

Keeping these difficulties in mind, we now consider the classical results about the worst-case performance of the Ford–Fulkerson method: one general bound and two specific bounds, one for each of the two augmenting-path algorithms that we have examined. These results serve more to give us insight into characteristics of the algorithms than to allow us to predict performance to a sufficient degree of accuracy for meaningful comparison. We discuss empirical comparisons of the methods at the end of the section.

Figure 22.19 Two scenarios for the Ford–Fulkerson algorithm

This network illustrates that the number of iterations used by the Ford–Fulkerson algorithm depends on the capacities of the edges in the network and the sequence of paths chosen by the implementation. It consists of four edges of capacity X and one of capacity 1. The scenario depicted at the top shows that an implementation that alternates between using 0-1-2-3 and 0-2-1-3 as augmenting paths (for example, one that prefers long paths) would require X pairs of iterations like the two pairs shown, each pair incrementing the total flow by 2. The scenario depicted at the bottom shows that an implementation that chooses 0-1-3 and then 0-2-3 as augmenting paths (for example, one that prefers short paths) finds the maximum flow in just two iterations.

If edge capacities are, say, 32-bit integers, the scenario depicted at the top would be billions of times slower than the scenario depicted at the bottom.

Property 22.6 Let M be the maximum edge capacity in the network. The number of augmenting paths needed by any implementation of the Ford–Fulkerson algorithm is at most equal to VM.

Proof: Any cut has at most V edges, of capacity M, for a total capacity of VM. Every augmenting path increases the flow through every cut by at least 1, so the algorithm must terminate after VM passes, since all cuts must be filled to capacity after that many augmentations.

As discussed below, such a bound is of little use in typical situations because M can be a very large number. Worse, it is easy to describe situations where the number of iterations is proportional to the maximum edge capacity. For example, suppose that we use a longest augmenting-path algorithm (perhaps based on the intuition that the longer the path, the more flow we put on the network’s edges). Since we are counting iterations, we ignore, for the moment, the cost of computing such a path. The (classical) example shown in Figure 22.19 shows a network for which the number of iterations of a longest augmenting-path algorithm is equal to the maximum edge capacity. This example tells us that we must undertake a more detailed scrutiny to know whether other specific implementations use substantially fewer iterations than are indicated by Property 22.6.
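The two scenarios of Figure 22.19 can be replayed with a short simulation. This is a sketch under stated assumptions: the residual network is a dictionary of dictionaries, and the helper names `make_network`, `augment`, and `count_iterations` are hypothetical. The path sequences are fixed by hand rather than found by a search, because the goal is only to count iterations.

```python
def make_network(X):
    """Residual network of Figure 22.19: four edges of capacity X, one of capacity 1."""
    res = {u: {} for u in range(4)}
    for u, v, c in [(0, 1, X), (0, 2, X), (1, 3, X), (2, 3, X), (1, 2, 1)]:
        res[u][v] = c
        res[v][u] = 0       # backward edge starts empty
    return res

def augment(res, path):
    """Push as much flow as possible along path; return the amount pushed."""
    hops = list(zip(path, path[1:]))
    d = min(res[u][v] for u, v in hops)
    for u, v in hops:
        res[u][v] -= d
        res[v][u] += d
    return d

def count_iterations(X, paths):
    """Cycle through the given paths until one of them carries no more flow."""
    res = make_network(X)
    flow = iterations = 0
    while True:
        d = augment(res, paths[iterations % len(paths)])
        if d == 0:
            return flow, iterations
        flow += d
        iterations += 1

# Long paths first: every augmentation adds only 1 unit of flow.
print(count_iterations(5, [[0, 1, 2, 3], [0, 2, 1, 3]]))   # (10, 10)
# Short paths first: two augmentations suffice.
print(count_iterations(5, [[0, 1, 3], [0, 2, 3]]))         # (10, 2)
```

With X = 5 the long-path schedule needs 2X = 10 augmentations to reach the maxflow of 2X, while the short-path schedule needs two, whatever the value of X.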

For sparse networks and networks with small integer capacities, Property 22.6 does give an upper bound on the running time of any Ford–Fulkerson implementation that is useful.

Corollary The time required to find a maxflow is $O(VEM)$, which is $O(V^2 M)$ for sparse networks.

Proof: Immediate from the basic result that generalized graph search is linear in the size of the graph representation (Property 18.12). As mentioned, we need an extra lg V factor if we are using a priority-queue fringe implementation.

The proof actually establishes that the factor of M can be replaced by the ratio between the largest and smallest nonzero capacities in the network (see Exercise 22.25). When this ratio is low, the bound tells us that any Ford–Fulkerson implementation will find a maxflow in time proportional to the time required to (for example) solve the all-shortest-paths problem, in the worst case. There are many situations where the capacities are indeed low and the factor of M is of no concern. We will see an example in Section 22.4.

When M is large, the $VEM$ worst-case bound is high; but it is pessimistic, as we obtained it by multiplying together worst-case bounds that derive from contrived examples. Actual costs on practical networks are typically much lower.

From a theoretical standpoint, our first goal is to discover, using the rough subjective categorizations of Section 17.8, whether or not the maximum-flow problem for networks with large integer weights is tractable (solvable by a polynomial-time algorithm). The bounds just derived do not resolve this question, because the maximum weight $M = 2^m$ could grow exponentially with V and E. From a practical standpoint, we seek better performance guarantees. To pick a typical practical example, suppose that we use 32-bit integers (m = 32) to represent edge weights. In a graph with hundreds of vertices and thousands of edges, the corollary to Property 22.6 says that we might have to perform hundreds of trillions of operations in an augmenting-path algorithm. If we are dealing with millions of vertices, this point is moot, not only because we will not have weights as large as $2^{1000000}$, but also because $V^3$ and $VE$ are so large as to make the bound meaningless. We are interested both in finding a polynomial bound to resolve the tractability question and in finding better bounds that are relevant for situations that we might encounter in practice.
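To see where the estimate of hundreds of trillions comes from, here is the arithmetic for one assumed instance. The values V = 100 and E = 1000 are round figures chosen here for illustration; only m = 32 comes from the text.

$$
VEM = 100 \cdot 1000 \cdot 2^{32} \approx 10^{5} \cdot 4.29 \times 10^{9} \approx 4.3 \times 10^{14}
$$

That is roughly 430 trillion operations in the worst case, even though the network itself is small.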

Property 22.6 is general: It applies to any Ford–Fulkerson implementation at all. The generic nature of the algorithm leaves us with a substantial amount of flexibility to consider a number of simple implementations in seeking to improve performance. We expect that specific implementations might be subject to better worst-case bounds. Indeed, that is one of our primary reasons for considering them in the first place! Now, as we have seen, implementing and using a large class of these implementations is trivial: We just substitute different generalized-queue implementations or priority definitions in Program 22.3. Analyzing differences in worst-case behavior is more challenging, as indicated by the classical results that we consider next for the two basic augmenting-path implementations that we have considered.

First, we analyze the shortest-augmenting-path algorithm. This method is not subject to the problem illustrated in Figure 22.19. Indeed, we can use it to replace the factor of M in the worst-case running time with $VE/2$, thus establishing that the network-flow problem is tractable. We might even classify it as being easy (solvable in polynomial time on practical cases by a simple, if clever, implementation).

Property 22.7 The number of augmenting paths needed in the shortest-augmenting-path implementation of the Ford–Fulkerson algorithm is at most $VE/2$.

Proof: First, as is apparent from the example in Figure 22.17, no augmenting path is shorter than a previous one. To establish this fact, we show by contradiction that a stronger property holds: No augmenting path can decrease the length of the shortest path from the source s to any vertex in the residual network. Suppose that some augmenting path does so, and that v is the first such vertex on the path. There are two cases to consider: Either no vertex on the new shorter path from s to v appears anywhere on the augmenting path or some vertex w on the new shorter path from s to v appears somewhere between v and t on the augmenting path. Both situations contradict the minimality of the augmenting path.

Now, by construction, every augmenting path has at least one critical edge: an edge that is deleted from the residual network because it corresponds either to a forward edge that becomes filled to capacity or a backward edge that is emptied. Suppose that an edge u-v is a critical edge for an augmenting path P of length d. The next augmenting path for which it is a critical edge has to be of length at least d+2, because that path has to go from s to v, then along v-u, then from u to t. The first segment is of length at least 1 greater than the distance from s to u in P, and the final segment is of length at least 1 greater than the distance from v to t in P, so the path is of length at least 2 greater than P.

Since augmenting paths are of length at most V, these facts imply that each edge can be the critical edge on at most $V/2$ augmenting paths, so the total number of augmenting paths is at most $EV/2$.

Corollary The time required to find a maxflow in a sparse network is $O(V^3)$.

Proof: The time required to find an augmenting path is $O(E)$, so the total time is $O(VE^2)$. The stated bound follows immediately.
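The two facts used in the proof of Property 22.7 can be observed directly in a small experiment. The sketch below is an assumption-laden illustration rather than the book's code: it uses breadth-first search on a dictionary-based residual network and records the length of each augmenting path, so that we can check that lengths never decrease and that the path count stays within $VE/2$. The name `shortest_path_maxflow` is hypothetical.

```python
from collections import deque

def shortest_path_maxflow(n, edges, s, t):
    """Return (maxflow value, list of augmenting-path lengths in order)."""
    res = [dict() for _ in range(n)]
    for u, v, c in edges:
        res[u][v] = res[u].get(v, 0) + c
        res[v].setdefault(u, 0)

    total, lengths = 0, []
    while True:
        # BFS from s over edges with positive residual capacity.
        parent = [-1] * n
        parent[s] = s
        queue = deque([s])
        while queue and parent[t] == -1:
            u = queue.popleft()
            for v, c in res[u].items():
                if c > 0 and parent[v] == -1:
                    parent[v] = u
                    queue.append(v)
        if parent[t] == -1:
            return total, lengths       # sink unreachable: flow is maximal

        # Recover the path, then augment by its bottleneck capacity.
        path = [t]
        while path[-1] != s:
            path.append(parent[path[-1]])
        path.reverse()
        hops = list(zip(path, path[1:]))
        d = min(res[u][v] for u, v in hops)
        for u, v in hops:
            res[u][v] -= d              # a hop that reaches 0 is a critical edge
            res[v][u] += d
        total += d
        lengths.append(len(hops))
```

For any input with integer capacities, the returned list of lengths should be nondecreasing, and its size should not exceed V times E divided by 2.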

The quantity $V^3$ is sufficiently high that it does not provide a guarantee of good performance on huge networks. But that fact should not preclude us from using the algorithm on a huge network, because it is a worst-case performance result that may not be useful for predicting performance in a practical application. For example, as just mentioned, the maximum capacity M (or the maximum ratio between capacities) might be much less than V, so the corollary to Property 22.6 would provide a better bound. Indeed, in the best case, the number of augmenting paths needed by the Ford–Fulkerson method is the smaller of the outdegree of s or the indegree of t, which again might be far smaller than V. Given this range between best- and worst-case performance, comparing augmenting-path algorithms solely on the basis of worst-case bounds is not wise.

Still, other implementations that are nearly as simple as the shortest-augmenting-path method might admit better bounds or be preferred in practice (or both). For example, the maximum-augmenting-path algorithm used far fewer paths to find a maxflow than did the shortest-augmenting-path algorithm in the example illustrated in Figures 22.17 and 22.18. We now turn to the worst-case analysis of that algorithm.

First, just as for Prim’s algorithm and for Dijkstra’s algorithm (see Sections 20.6 and 21.2), we can implement the priority queue such that the algorithm takes time proportional to V2 (for dense graphs) or (E + V) log V (for sparse graphs) per iteration in the worst case, although these estimates are pessimistic because the algorithm stops

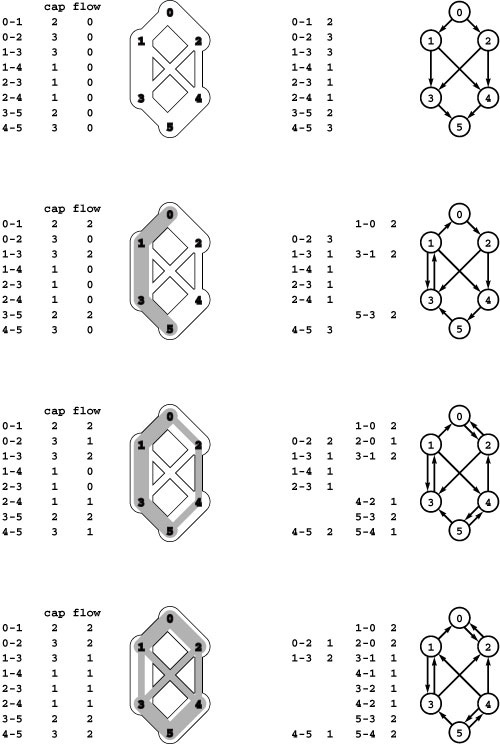

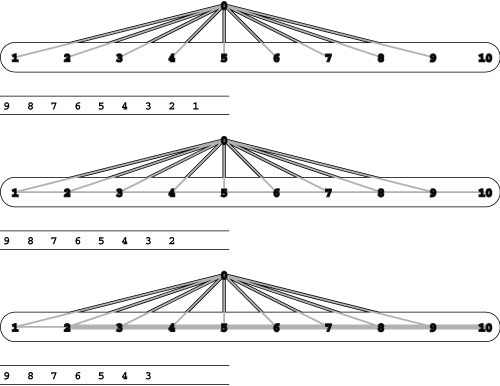

Figure 22.20 Stack-based augmenting-path search

This illustrates the result of using a stack for the generalized queue in our implementation of the Ford– Fulkerson method, so that the path search operates like DFS. In this case, the method does about as well as BFS, but its somewhat erratic behavior is rather sensitive to the network representation and has not been analyzed.

when it reaches the sink. We also have seen that we can do slightly better with advanced data structures. The more important and more challenging question is how many augmenting paths are needed.

Property 22.8 The number of augmenting paths needed in the maximal-augmenting-path implementation of the Ford–Fulkerson algorithm is at most 2E lg M.

Proof: Given a network, let F be its maxflow value. Let v be the value of the flow at some point during the algorithm as we begin to look for an augmenting path. Applying Property 22.2 to the residual network, we can decompose the flow into at most E directed paths that sum to F − v, so the flow in at least one of the paths is at least (F − v)/E. Now, either we find the maxflow sometime before doing another 2E augmenting paths or the value of the augmenting path after that sequence of 2E paths is less than (F − v)/(2E), which is less than one-half of the value of the maximum before that sequence of 2E paths. That is, in the worst case, we need a sequence of 2E paths to decrease the path value by a factor of 2. The first path value is at most M, which we need to decrease by a factor of 2 at most lg M times, so we have a total of at most lg M sequences of 2E paths.

Figure 22.21 Randomized augmenting-path search

This sequence shows the result of using a randomized queue for the fringe data structure in the augmenting-path search in the Ford–Fulkerson method. In this example, we happen upon the short high-capacity path and therefore need relatively few augmenting paths. While predicting the performance characteristics of this method is a challenging task, it performs well in many situations.

Corollary The time required to find a maxflow in a sparse network is $O(V^2 \lg M \lg V)$.

Proof: Immediate from the use of a heap-based priority-queue implementation, as for Properties 20.7 and 21.5.

For values of M and V that are typically encountered in practice, this bound is significantly lower than the $O(V^3)$ bound of the corollary to Property 22.7. In many practical situations, the maximum-augmenting-path algorithm uses significantly fewer iterations than does the shortest-augmenting-path algorithm, at the cost of a slightly higher bound on the work to find each path.
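To get a feel for how the three path-count bounds compare, the following sketch simply evaluates the formulas from Properties 22.6, 22.7, and 22.8. The function name and dictionary keys are made up for this illustration, and the sample values of V, E, and M are assumptions, not measurements.

```python
import math

def path_count_bounds(V, E, M):
    """Worst-case bounds on the number of augmenting paths."""
    return {
        "any": V * M,                           # Property 22.6
        "shortest": V * E / 2,                  # Property 22.7
        "max_capacity": 2 * E * math.log2(M),   # Property 22.8
    }

# Assumed example: 1000 vertices, 10000 edges, 32-bit capacities.
for name, bound in path_count_bounds(1000, 10000, 2**32).items():
    print(f"{name:>12}: {bound:.3g}")
```

For these assumed values the general bound is in the trillions, the shortest-path bound is five million, and the maximum-capacity bound is 640,000. These are bounds on path counts only; the cost of finding each path differs between the methods.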

There are many other variations to consider, as reflected by the extensive literature on maxflow algorithms. Algorithms with better worst-case bounds continue to be discovered, and no nontrivial lower bound has been proved—that is, the possibility of a simple linear-time algorithm remains. Although they are important from a theoretical standpoint, many of the algorithms are primarily designed to lower the worst-case bounds for dense graphs, so they do not offer substantially better performance than the maximum-augmenting-path algorithm for the kinds of sparse networks that we encounter in practice. Still, there remain many options to explore in pursuit of better practical maxflow algorithms. We briefly consider two more augmenting-path algorithms next; in Section 22.3, we consider another family of algorithms.

One easy augmenting-path algorithm is to use the value of a decreasing counter for P or a stack implementation for the generalized queue in Program 22.3, making the search for augmenting paths like depth-first search. Figure 22.20 shows the flow computed for our small example by this algorithm. The intuition is that the method is fast, is easy to implement, and appears to put flow throughout the network. As we will see, its performance varies remarkably, from extremely poor on some networks to reasonable on others.

Another alternative is to use a randomized-queue implementation for the generalized queue so that the search for augmenting paths is a randomized search. Figure 22.21 shows the flow computed for our small example by this algorithm. This method is also fast and easy to implement; in addition, as we noted in Section 18.8, it may embody good features of both breadth-first and depth-first search. Randomization is a powerful tool in algorithm design, and this problem represents a reasonable situation in which to consider using it.
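The idea that only the fringe discipline changes between these variants can be sketched as follows. This is a simplified illustration, not Program 22.3: it marks a vertex when it is first placed on the fringe and never updates fringe entries, and the `policy` strings, `find_path`, and `maxflow` are hypothetical names. Any path found in the residual network is a valid augmenting path, so all three policies reach the same maxflow value, although the number of paths used may differ.

```python
import random

def find_path(res, s, t, policy, rng):
    """Search the residual network for an s-t path using the given fringe policy."""
    parent = {s: s}
    fringe = [s]
    while fringe:
        if policy == "fifo":                    # queue: behaves like BFS
            u = fringe.pop(0)
        elif policy == "lifo":                  # stack: DFS-like search
            u = fringe.pop()
        else:                                   # randomized queue
            u = fringe.pop(rng.randrange(len(fringe)))
        if u == t:
            break
        for v, c in res[u].items():
            if c > 0 and v not in parent:
                parent[v] = u
                fringe.append(v)
    if t not in parent:
        return None
    path = [t]
    while path[-1] != s:
        path.append(parent[path[-1]])
    return path[::-1]

def maxflow(n, edges, s, t, policy="fifo", seed=0):
    """Return (maxflow value, number of augmenting paths used)."""
    rng = random.Random(seed)
    res = [dict() for _ in range(n)]
    for u, v, c in edges:
        res[u][v] = res[u].get(v, 0) + c
        res[v].setdefault(u, 0)
    total = paths = 0
    while True:
        path = find_path(res, s, t, policy, rng)
        if path is None:
            return total, paths
        hops = list(zip(path, path[1:]))
        d = min(res[u][v] for u, v in hops)
        for u, v in hops:
            res[u][v] -= d
            res[v][u] += d
        total += d
        paths += 1
```

Running `maxflow` with each of the three policies on the same network is a quick way to compare path counts while confirming that the flow values agree.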

We conclude this section by looking more closely at the methods that we have examined in order to see the difficulty of comparing them or attempting to predict performance for practical applications.

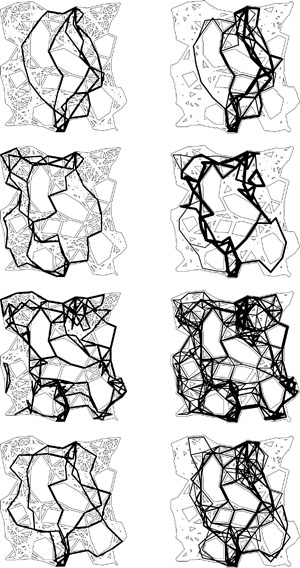

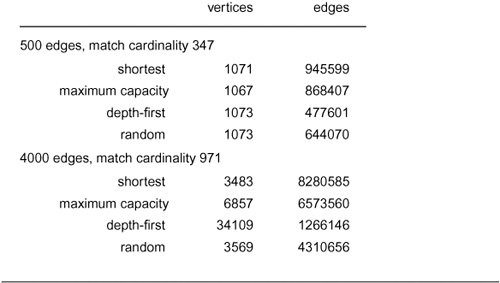

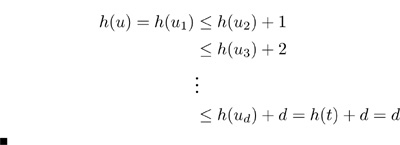

As a start in understanding the quantitative differences that we might expect, we use two flow-network models that are based on the Euclidean graph model that we have been using to compare other graph algorithms. Both models use a graph drawn from V points in the plane with random coordinates between 0 and 1 with edges connecting any two points within a fixed distance of each other. They differ in the assignment of capacities to the edges.
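The shared part of the two models, the underlying Euclidean graph, might be generated as in the sketch below. It covers only the points and edges; the assignment of capacities is deliberately left out because it is what distinguishes the two models. The function name `euclidean_graph`, the brute-force pair scan, and the `seed` parameter are assumptions made for this illustration.

```python
import math
import random

def euclidean_graph(V, d, seed=0):
    """Return (points, edges) for V random points in the unit square.

    Two points are joined by an edge when they lie within distance d.
    """
    rng = random.Random(seed)
    points = [(rng.random(), rng.random()) for _ in range(V)]
    edges = []
    for i in range(V):
        for j in range(i + 1, V):
            dx = points[i][0] - points[j][0]
            dy = points[i][1] - points[j][1]
            if math.hypot(dx, dy) <= d:     # quadratic scan; fine for small V
                edges.append((i, j))
    return points, edges
```

The quadratic scan over all pairs keeps the sketch short; for large V, a grid-based neighbor search would be the usual way to avoid examining every pair.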