CHAPTER EIGHTEEN

Graph Search

WE OFTEN LEARN properties of a graph by systematically examining each of its vertices and each of its edges. Determining some simple graph properties—for example, computing the degrees of all the vertices—is easy if we just examine each edge (in any order whatever). Many other graph properties are related to paths, so a natural way to learn them is to move from vertex to vertex along the graph’s edges. Nearly all the graph-processing algorithms that we consider use this basic abstract model. In this chapter, we consider the fundamental graph-search algorithms that we use to move through graphs, learning their structural properties as we go.
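The degree computation mentioned above can be sketched in a few lines. This is a minimal illustration, assuming the graph is given as a plain list of vertex pairs; the function name `degrees` and the edge-list format are assumptions made here, not part of the graph ADT used in this book.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Compute the degree of every vertex by examining each edge once, in
// whatever order the edges happen to be listed.
// Assumes an undirected graph with vertices 0..V-1; a self-loop v-v
// contributes 2 to the degree of v.
std::vector<int> degrees(int V, const std::vector<std::pair<int, int>>& edges) {
    std::vector<int> deg(V, 0);
    for (const auto& e : edges) {
        assert(e.first >= 0 && e.first < V && e.second >= 0 && e.second < V);
        ++deg[e.first];
        ++deg[e.second];
    }
    return deg;
}
```

No movement from vertex to vertex is needed here, which is what distinguishes this kind of property from the path-related properties that the rest of the chapter addresses.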

Graph searching in this way is equivalent to exploring a maze. Specifically, passages in the maze correspond to edges in the graph, and points where passages intersect in the maze correspond to vertices in the graph. When a program changes the value of a variable from vertex v to vertex w because of an edge v-w, we view it as equivalent to a person in a maze moving from point v to point w. We begin this chapter by examining a systematic exploration of a maze. By correspondence with this process, we see precisely how the basic graph-search algorithms proceed through every edge and every vertex in a graph.

In particular, the recursive depth-first search (DFS) algorithm corresponds precisely to the particular maze-exploration strategy of Section 18.1. DFS is a classic and versatile algorithm that we use to solve connectivity and numerous other graph-processing problems. The basic algorithm admits two simple implementations: one that is recursive, and another that uses an explicit stack. Replacing the stack with a FIFO queue leads to another classic algorithm, breadth-first search (BFS), which we use to solve another class of graph-processing problems related to shortest paths.

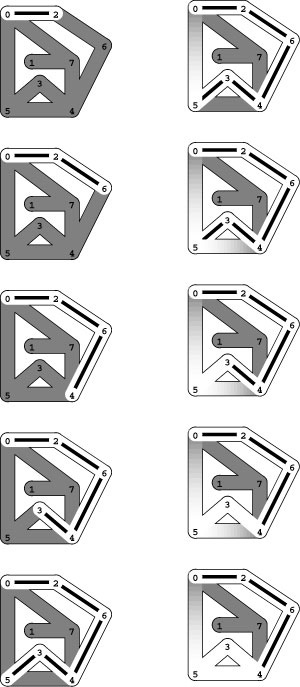

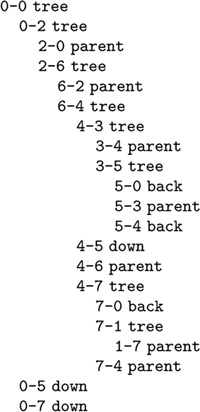

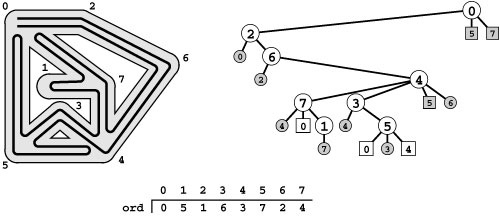

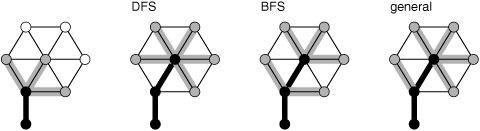

Figure 18.1 Exploring a maze

We can explore every passageway in a simple maze by following a simple rule such as “keep your right hand on the wall.” Following this rule in the maze at the top, we explore the whole maze, going through each passage once in each direction. But if we follow this rule in a maze with a cycle, we return to the starting point without exploring the whole maze, as illustrated in the maze at the bottom.

The main topics of this chapter are DFS, BFS, their related algorithms, and their application to graph processing. We briefly considered DFS and BFS in Chapter 5; we treat them from first principles here, in the context of search-based graph-processing classes, and use them to demonstrate relationships among various graph algorithms. In particular, we consider a general approach to searching graphs that encompasses a number of classical graph-processing algorithms, including both DFS and BFS.

As illustrations of the application of these basic graph-searching methods to solve more complicated problems, we consider algorithms for finding connected components, biconnected components, spanning trees, and shortest paths, and for solving numerous other graph-processing problems. These implementations exemplify the approach that we shall use to solve more difficult problems in Chapters 19 through 22.

We conclude the chapter by considering the basic issues involved in the analysis of graph algorithms, in the context of a case study comparing several different algorithms for finding the number of connected components in a graph.

18.1 Exploring a Maze

It is instructive to think about the process of searching through a graph in terms of an equivalent problem that has a long and distinguished history (see reference section): finding our way through a maze that consists of passages connected by intersections. This section presents a detailed study of a basic method for exploring every passage in any given maze. Some mazes can be handled with a simple rule, but most mazes require a more sophisticated strategy (see Figure 18.1). Using the terms maze instead of graph, passage instead of edge, and intersection instead of vertex makes a merely semantic distinction, but, for the moment, doing so will help to give us an intuitive feel for the problem.

One trick for exploring a maze without getting lost that has been known since antiquity (dating back at least to the legend of Theseus and the Minotaur) is to unroll a ball of string behind us. The string guarantees that we can always find a way out, but we are also interested in being sure that we have explored every part of the maze, and we do not want to retrace our steps unless we have to. To accomplish these goals, we need some way to mark places that we have been. We could use the string for this purpose as well, but we use an alternative approach that models a computer implementation more closely.

We assume that there are lights, initially off, in every intersection, and doors, initially closed, at both ends of every passage. We further assume that the doors have windows and that the lights are sufficiently strong and the passages sufficiently straight that we can determine, by opening the door at one end of a passage, whether or not the intersection at the other end is lit (even if the door at the other end is closed). Our goals are to turn on all the lights and to open all the doors. To reach them, we need a set of rules to follow, systematically. The following maze-exploration strategy, which we refer to as Trémaux exploration, has been known at least since the nineteenth century (see reference section):

(i) If there are no closed doors at the current intersection, go to step (iii). Otherwise, open any closed door to any passage leading out of the current intersection (and leave it open).

(ii) If you can see that the intersection at the other end of that passage is already lighted, try another door at the current intersection (step (i)). Otherwise (if you can see that the intersection at the other end of the passage is dark), follow the passage to that intersection, unrolling the string as you go, turn on the light, and go to step (i).

(iii) If all the doors at the current intersection are open, check whether you are back at the start point. If so, stop. If not, use the string to go back down the passage that brought you to this intersection for the first time, rolling the string back up as you go, and look for another closed door there (that is, return to step (i)).

Figures 18.2 and 18.3 depict a traversal of a sample graph and show that, indeed, every light is lit and every door is opened for that example. The figures depict just one of many possible outcomes of the exploration, because we are free to open the doors in any order at each intersection. Convincing ourselves that this method is always effective is an interesting exercise in mathematical induction.
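Rules (i) through (iii) can be simulated directly. The following is a minimal sketch, not an implementation from this book: the maze is assumed to be a list of passages between numbered intersections, each passage has one door per end, and the string is modeled as a stack of passages. The names `Exploration` and `tremaux` are hypothetical, and "open any closed door" is resolved by taking the first closed door in the order the passages were listed.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Outcome of one exploration (all names here are illustrative).
struct Exploration {
    std::vector<int> lightOrder;  // intersections in the order their lights go on
    bool allLit = false;          // is every light on at the end?
    bool allOpen = false;         // is every door open at the end?
    int finish = -1;              // intersection where we stop
};

// Tremaux exploration of a maze with intersections 0..V-1.
// Assumes no passage joins an intersection to itself.
Exploration tremaux(int V, const std::vector<std::pair<int, int>>& passages, int start) {
    assert(start >= 0 && start < V);
    const int P = static_cast<int>(passages.size());
    std::vector<std::vector<int>> at(V);   // at[v]: passages with an end at v
    for (int p = 0; p < P; ++p) {
        int a = passages[p].first, b = passages[p].second;
        assert(a >= 0 && a < V && b >= 0 && b < V && a != b);
        at[a].push_back(p);
        at[b].push_back(p);
    }
    std::vector<bool> lit(V, false);
    std::vector<bool> open(2 * P, false);  // open[2*p + end]: door at one end of p
    std::vector<int> string;               // passages the string runs through
    Exploration r;

    int cur = start;
    lit[cur] = true;
    r.lightOrder.push_back(cur);
    for (;;) {
        // Step (i): look for a closed door at the current intersection.
        int chosen = -1, end = 0;
        for (int p : at[cur]) {
            end = (passages[p].first == cur) ? 0 : 1;
            if (!open[2 * p + end]) { chosen = p; break; }
        }
        if (chosen != -1) {
            open[2 * chosen + end] = true;  // open it and leave it open
            int other = (end == 0) ? passages[chosen].second : passages[chosen].first;
            // Step (ii): follow the passage only if the far intersection is dark.
            if (!lit[other]) {
                string.push_back(chosen);             // unroll the string
                open[2 * chosen + (1 - end)] = true;  // walk through the far door
                cur = other;
                lit[cur] = true;
                r.lightOrder.push_back(cur);
            }
            continue;  // back to step (i)
        }
        // Step (iii): every door here is open.
        if (string.empty()) break;  // back at the start point: stop
        int p = string.back();      // roll the string back up
        string.pop_back();
        cur = (passages[p].first == cur) ? passages[p].second : passages[p].first;
    }
    r.finish = cur;
    r.allLit = true;
    for (bool b : lit) r.allLit = r.allLit && b;
    r.allOpen = true;
    for (bool b : open) r.allOpen = r.allOpen && b;
    return r;
}
```

If the passages named in the captions of Figures 18.2 and 18.3 are listed in the order 0-2, 2-6, 6-4, 4-3, 3-5, 5-0, 5-4, 4-7, 7-0, 7-1 (assuming the figures contain no others), a call `tremaux(8, passages, 0)` lights the intersections in the order 0, 2, 6, 4, 3, 5, 7, 1, which is the walk those captions describe. Listing the passages in a different order yields a different but equally complete exploration.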

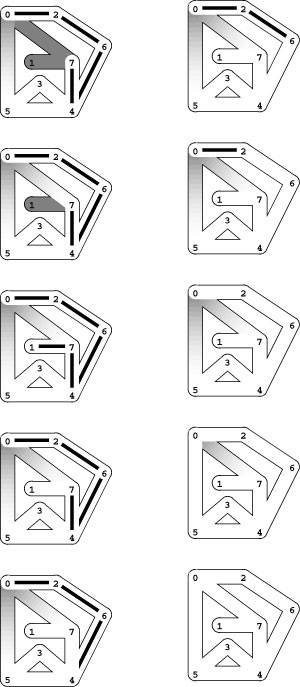

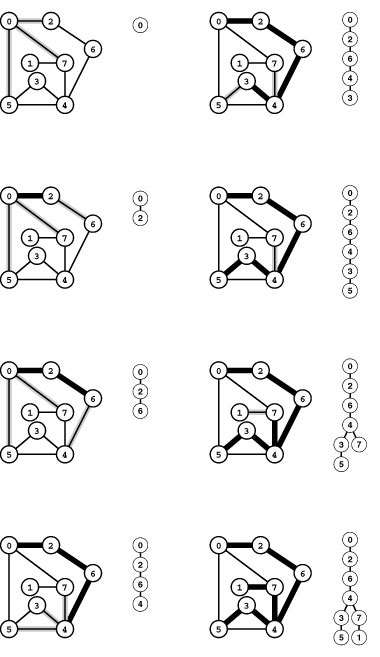

Figure 18.2 Trémaux maze exploration example

In this diagram, places that we have not visited are shaded (dark) and places that we have visited are white (light). We assume that there are lights in the intersections, and that, when we have opened doors into lighted intersections on both ends of a passage, the passage is lighted. To explore the maze, we begin at 0 and take the passage to 2 (left, top). Then we proceed to 6, 4, 3, and 5, opening the doors to the passages, lighting the intersections as we proceed through them, and leaving a string trailing behind us (left). When we open the door that leads to 0 from 5, we can see that 0 is lighted, so we skip that passage (top right). Similarly, we skip the passage from 5 to 4 (right, second from top), leaving us with nowhere to go from 5 except back to 3 and then back to 4, rolling up our ball of string. When we open the doorway from the passage from 4 to 5, we can see that 5 is lighted through the open door at the other end, and we therefore skip that passage (right, bottom). We never walked down the passage connecting 4 and 5, but we lighted it by opening the doors at both ends.

Figure 18.3 Trémaux maze exploration example (continued)

Next, we proceed to 7 (top left), open the door to see that 0 is lighted (left, second from top), and then proceed to 1 (left, third from top). At this point, most of the maze is traversed, and we use our string to take us back to the beginning, moving from 1 to 7 to 4 to 6 to 2 to 0. Back at 0, we complete our exploration by checking the passages to 5 (right, second from bottom) and 7 (bottom right), leaving all passages and intersections lighted. Again, the passages connecting 0 to 5 and 0 to 7 are both lighted because we opened the doors at both ends, but we did not walk through them.

Figure 18.4 Decomposing a maze

To prove by induction that Trémaux exploration takes us everywhere in a maze (top), we break it into two smaller pieces, by removing all edges connecting the first intersection with any intersection that can be reached from the first passage without going back through the first intersection (bottom).

Property 18.1 When we use Trémaux maze exploration, we light all lights and open all doors in the maze and end up back where we started.

Proof: To prove this assertion by induction, we first note that it holds, trivially, for a maze that contains one intersection and no passages: we just turn on the light. For any maze that contains more than one intersection, we assume the property to be true for all mazes with fewer intersections. It suffices to show that we visit all intersections, since we open all the doors at every intersection that we visit. Now, consider the first passage that we take from the first intersection, and divide the intersections into two subsets: (i) those that we can reach by taking that passage without returning to the start, and (ii) those that we cannot reach from that passage without returning to the start. Applying the inductive hypothesis, we know that we visit all intersections in (i) (ignoring any passages back to the start intersection, which is lit) and end up back at the start intersection. Then, applying the inductive hypothesis again, we know that we visit all intersections in (ii) (ignoring the passages from the start to intersections in (i), which are lit). •

From the detailed example in Figures 18.2 and 18.3, we see that there are four different possible situations that arise for each passage that we consider taking:

(i) The passage is dark, so we take it.

(ii) The passage is the one that we used to enter (it has our string in it), so we use it to exit (and we roll up the string).

(iii) The door at the other end of the passage is closed (but the intersection is lit), so we skip the passage.

(iv) The door at the other end of the passage is open (and the intersection is lit), so we skip it.

The first and second situations apply to any passage that we traverse, first at one end and then at the other end. The third and fourth situations apply to any passage that we skip, first at one end and then at the other end. Next, we see how this perspective on maze exploration translates directly to graph search.

Exercises

• 18.1 Assume that intersections 6 and 7 (and all the hallways connected to them) are removed from the maze in Figures 18.2 and 18.3, and a hallway is added that connects 1 and 2. Show a Trémaux exploration of the resulting maze, in the style of Figures 18.2 and 18.3.

• 18.2 Which of the following could not be the order in which lights are turned on at the intersections during a Trémaux exploration of the maze depicted in Figures 18.2 and 18.3?

0-7-4-5-3-1-6-2

0-2-6-4-3-7-1-5

0-5-3-4-7-1-6-2

0-7-4-6-2-1-3-5

• 18.3 How many different ways are there to traverse the maze depicted in Figures 18.2 and 18.3 with a Trémaux exploration?

18.2 Depth-First Search

Our interest in Trémaux exploration is that this technique leads us immediately to the classic recursive function for traversing graphs: To visit a vertex, we mark it as having been visited, then (recursively) visit all the vertices that are adjacent to it and that have not yet been marked. This method, which we considered briefly in Chapters 3 and 5 and used to solve path problems in Section 17.7, is called depth-first search (DFS). It is one of the most important algorithms that we shall encounter. DFS is deceptively simple because it is based on a familiar concept and is easy to implement; in fact, it is a subtle and powerful algorithm that we put to use for numerous difficult graph-processing tasks.

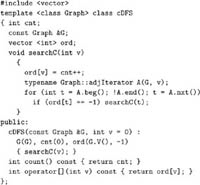

Program 18.1 is a DFS class that visits all the vertices and examines all the edges in a connected graph. Like the simple path-search functions that we considered in Section 17.7, it is based on a recursive function that keeps a private vector to mark vertices as having been visited. In this implementation, ord is a vector of integers that records the order in which vertices are visited. Figure 18.5 is a trace that shows the order in which Program 18.1 visits the edges and vertices for the example depicted in Figures 18.2 and 18.3 (see also Figure 18.17), when the adjacency-matrix graph implementation DenseGRAPH of Section 17.3 is used. Figure 18.6 depicts the maze-exploration process using standard graph drawings.
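To make the description concrete, here is a minimal sketch of a recursive DFS class in the spirit of the one just described. It is not Program 18.1 itself: `MatrixGraph` is a hypothetical stand-in for an adjacency-matrix graph class, and `ConnectedDFS`, `count`, and `operator[]` are names chosen for this illustration.

```cpp
#include <cassert>
#include <vector>

// Hypothetical adjacency-matrix graph, standing in for the graph ADT.
class MatrixGraph {
    int V_;
    std::vector<std::vector<bool>> adj_;
public:
    explicit MatrixGraph(int V) : V_(V), adj_(V, std::vector<bool>(V, false)) {}
    int V() const { return V_; }
    void insert(int v, int w) {
        assert(v >= 0 && v < V_ && w >= 0 && w < V_);
        adj_[v][w] = adj_[w][v] = true;  // undirected edge
    }
    bool edge(int v, int w) const { return adj_[v][w]; }
};

// Recursive DFS from a start vertex; ord[v] records when v was visited
// (-1 means "not yet seen").
class ConnectedDFS {
    const MatrixGraph& G;
    int cnt = 0;
    std::vector<int> ord;
    void searchC(int v) {
        ord[v] = cnt++;                       // mark v as visited
        for (int w = 0; w < G.V(); ++w)       // scan row v in numerical order
            if (G.edge(v, w) && ord[w] == -1)
                searchC(w);                   // follow an edge to an unseen vertex
    }
public:
    explicit ConnectedDFS(const MatrixGraph& g, int v = 0) : G(g), ord(g.V(), -1) {
        searchC(v);
    }
    int count() const { return cnt; }              // vertices reached from the start
    int operator[](int v) const { return ord[v]; } // visit order of v, or -1
};
```

For the graph with edges 0-2, 0-5, 0-7, 1-7, 2-6, 3-4, 3-5, 4-5, 4-6, 4-7 (the passages named in the captions of Figures 18.2 and 18.3), this sketch visits the vertices in the order 0, 2, 6, 4, 3, 5, 7, 1 when started at 0.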

These figures illustrate the dynamics of a recursive DFS and show the correspondence with Trémaux exploration of a maze. First, the vertex-indexed vector corresponds to the lights in the intersections: When we encounter an edge to a vertex that we have already visited (see a light at the end of the passage), we do not make a recursive

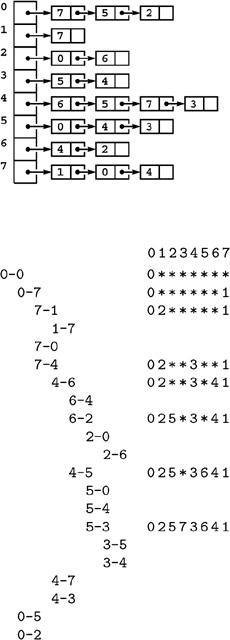

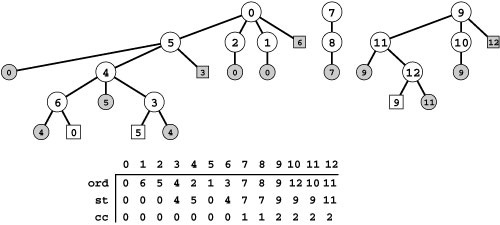

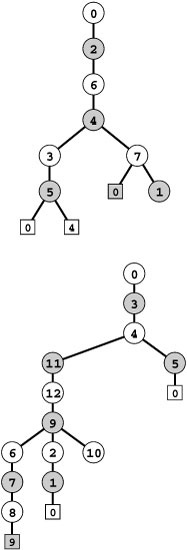

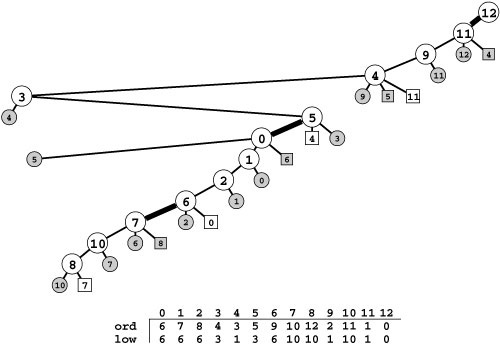

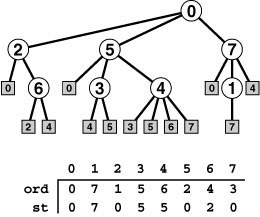

Figure 18.5 DFS trace

This trace shows the order in which DFS checks the edges and vertices for the adjacency-matrix representation of the graph corresponding to the example in Figures 18.2 and 18.3 (top) and traces the contents of the ord vector (right) as the search progresses (asterisks represent -1, for unseen vertices). There are two lines in the trace for every graph edge (once for each orientation). Indentation indicates the level of recursion.

call to follow that edge (go down that passage). Second, the function call–return mechanism in the program corresponds to the string in the maze: When we have processed all the edges adjacent to a vertex (explored all the passages leaving an intersection), we “return” (in both senses of the word).

In the same way that we encounter each passage in the maze twice (once at each end), we encounter each edge in the graph twice (once at each of its vertices). In Trémaux exploration, we open the doors at each end of each passage; in DFS of an undirected graph, we

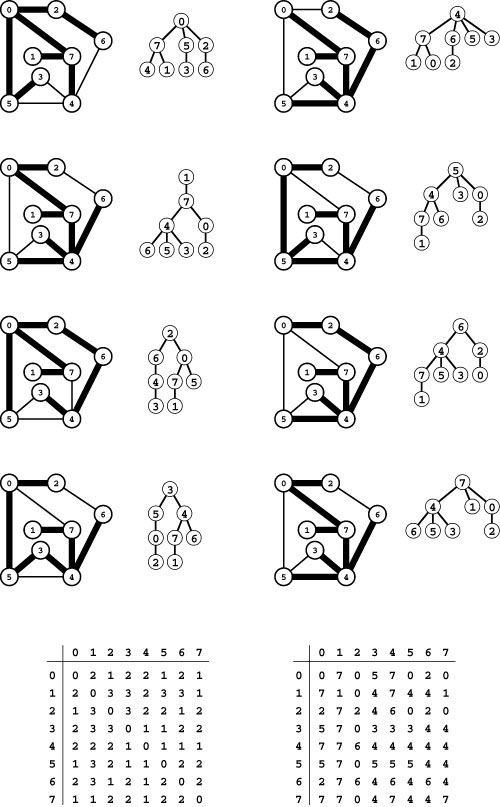

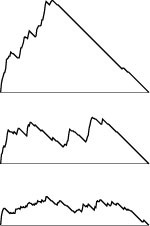

Figure 18.6 Depth-first search

These diagrams are a graphical view of the process depicted in Figure 18.5, showing the DFS recursive-call tree as it evolves. Thick black edges in the graph correspond to edges in the DFS tree shown to the right of each graph diagram. Shaded edges are the candidates to be added to the tree next. In the early stages (left) the tree grows down in a straight line, as we make recursive calls for 0, 2, 6, and 4 (left). Then we make recursive calls for 3, then 5 (right, top two diagrams); and return from those calls to make a recursive call for 7 from 4 (right, second from bottom) and to 1 from 7 (right, bottom).

Figure 18.7 DFS trace (adjacency lists)

This trace shows the order in which DFS checks the edges and vertices for the adjacency-lists representation of the same graph as in Figure 18.5.

check each of the two representations of each edge. If we encounter an edge v-w, we either do a recursive call (if w is not marked) or skip the edge (if w is marked). The second time that we encounter the edge, in the opposite orientation w-v, we always ignore it, because the destination vertex v has certainly already been visited (the first time that we encountered the edge).

One difference between DFS as implemented in Program 18.1 and Trémaux exploration as depicted in Figures 18.2 and 18.3, although it is inconsequential in many contexts, is worth taking the time to understand. When we move from vertex v to vertex w, we have not examined any of the entries in the adjacency matrix that correspond to edges from w to other vertices in the graph. In particular, we know that there is an edge from w to v and that we will ignore that edge when we get to it (because v is marked as visited). That decision happens at a different time than in Trémaux exploration, where we open the doors corresponding to the edge from v to w when we go to w for the first time, from v. If we were to close those doors on the way in and open them on the way out (having identified the passage with the string), then we would have a precise correspondence between DFS and Trémaux exploration.

Figure 18.6 also depicts the tree corresponding to the recursive calls as it evolves, in correspondence with Figure 18.5. This recursive-call tree, which is known as the DFS tree, is a structural description of the search process. As we see in Section 18.4, the DFS tree, properly augmented, can provide a full description of the search dynamics, in addition to just the call structure.

The order in which vertices are visited depends not just on the graph, but on its representation and ADT implementation. For example, Figure 18.7 shows the search dynamic when the SparseMultiGRAPH adjacency-lists implementation of Section 17.4 is used. For the adjacency-matrix representation, we examine the edges incident on each vertex in numerical order; for the adjacency-lists representation, we examine them in the order that they appear on the lists. This difference leads to a completely different recursive search dynamic, as would differences in the order in which edges appear on the lists (which occur, for example, when the same graph is constructed by inserting edges in a different order). Note also that the existence of parallel edges is inconsequential for DFS because any edge that is parallel to an edge that has already been traversed is ignored, since its destination vertex has been visited.
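The dependence on list order is easy to observe in a small experiment. The sketch below assumes a bare vector-of-vectors adjacency-lists representation in which each new neighbor is appended at the end of a list; that choice, and the names `buildLists`, `visit`, and `dfsOrder`, are assumptions made for illustration and do not describe the SparseMultiGRAPH implementation.

```cpp
#include <cassert>
#include <utility>
#include <vector>

using AdjLists = std::vector<std::vector<int>>;

// Build adjacency lists for an undirected graph, appending each new
// neighbor at the end of a list (an assumption; an implementation that
// inserts at the front would reverse every list).
AdjLists buildLists(int V, const std::vector<std::pair<int, int>>& edges) {
    AdjLists adj(V);
    for (const auto& e : edges) {
        assert(e.first >= 0 && e.first < V && e.second >= 0 && e.second < V);
        adj[e.first].push_back(e.second);
        adj[e.second].push_back(e.first);
    }
    return adj;
}

// Recursive DFS that records vertices in the order they are first visited.
void visit(const AdjLists& adj, int v, std::vector<bool>& marked, std::vector<int>& order) {
    marked[v] = true;
    order.push_back(v);
    for (int w : adj[v])  // edges are examined in list order
        if (!marked[w]) visit(adj, w, marked, order);
}

std::vector<int> dfsOrder(const AdjLists& adj, int start) {
    std::vector<bool> marked(adj.size(), false);
    std::vector<int> order;
    visit(adj, start, marked, order);
    return order;
}
```

Inserting the triangle's edges as 0-1, 0-2, 1-2 gives the visit order 0, 1, 2, whereas inserting the same edges as 0-2, 0-1, 1-2 gives 0, 2, 1. Both searches reach every vertex; only the dynamics differ.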

Despite all of these possibilities, the critical fact remains that DFS visits all the edges and all the vertices connected to the start vertex, regardless of the order in which it examines the edges incident on each vertex. This fact is a direct consequence of Property 18.1, since the proof of that property does not depend on the order in which the doors are opened at any given intersection. All the DFS-based algorithms that we examine have this same essential property. Although the dynamics of their operation might vary substantially depending on the graph representation and details of the implementation of the search, the recursive structure gives us a way to make relevant inferences about the graph itself, no matter how it is represented and no matter which order we choose to examine the edges incident upon each vertex.

Exercises

• 18.4 Add a public member function to Program 18.1 that returns the size of the connected component searched by the constructor.

18.5 Write a client program like Program 17.6 that scans a graph from standard input, uses Program 18.1 to run a search starting at each vertex, and prints out the parent-link representation of each spanning forest. Use the DenseGRAPH graph ADT implementation from Section 17.3.

• 18.6 Show, in the style of Figure 18.5, a trace of the recursive function calls made when a cDFS<DenseGRAPH> object is constructed for the graph

0-2 0-5 1-2 3-4 4-5 3-5.

Draw the corresponding DFS recursive-call tree.

18.7 Show, in the style of Figure 18.6, the progress of the search for the example in Exercise 18.6.

18.3 Graph-Search ADT Functions

DFS and the other graph-search methods that we consider later in this chapter all involve following graph edges from vertex to vertex, with the goal of systematically visiting every vertex and every edge in the graph. But following graph edges from vertex to vertex can lead us to all the vertices in only the same connected component as the starting vertex. In general, of course, graphs might not be connected, so we need one call on a search function for each connected component. We will typically use graph-search functions that perform the following steps until all of the vertices of the graph have been marked as having been visited:

• Find an unmarked vertex (a start vertex).

• Visit (and mark as visited) all the vertices in the connected component that contains the start vertex.

The method for marking vertices is not specified in this description, but we most often use the same method that we used for the DFS implementations in Section 18.2: We initialize all entries in a private vertex-indexed vector to a negative integer, and mark vertices by setting their corresponding entry to a nonnegative value. Using this procedure amounts to using a single bit (the sign bit) for the mark; most implementations are also concerned with keeping other information associated with marked vertices in the vector (such as, for the DFS implementation in Section 18.2, the order in which vertices are marked). The method for looking for a vertex in the next connected component is also not specified, but we most often use a scan through the vector in order of increasing index.
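The two steps and the marking convention just described can be sketched as follows. This is an illustration under assumptions, not the book's implementation: the graph is a bare vector-of-vectors adjacency-lists structure, and the names `markComponent` and `searchAll` are hypothetical.

```cpp
#include <cassert>
#include <vector>

using AdjLists = std::vector<std::vector<int>>;

// Visit (and mark) every vertex in the connected component containing v.
// A vertex is unmarked while its ord entry is negative.
void markComponent(const AdjLists& adj, int v, std::vector<int>& ord, int& cnt) {
    ord[v] = cnt++;  // nonnegative value: marked, and records the visit order
    for (int w : adj[v]) {
        assert(w >= 0 && w < static_cast<int>(adj.size()));
        if (ord[w] < 0) markComponent(adj, w, ord, cnt);
    }
}

// Search the whole graph: scan the vector in order of increasing index
// for an unmarked start vertex, then search its component.
// Returns the number of start vertices used (one per connected component).
int searchAll(const AdjLists& adj, std::vector<int>& ord) {
    ord.assign(adj.size(), -1);  // unmark all vertices
    int cnt = 0, starts = 0;
    for (int v = 0; v < static_cast<int>(adj.size()); ++v)
        if (ord[v] < 0) {
            ++starts;
            markComponent(adj, v, ord, cnt);
        }
    return starts;
}
```

Here the sign of each entry serves as the mark, and the magnitude carries the extra information (the visit order) mentioned above.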

We pass an edge to the search function (using a dummy self-loop in the first call for each connected component), instead of passing its destination vertex, because the edge tells us how we reached the vertex. Knowing the edge corresponds to knowing which passage led to a particular intersection in a maze. This information is useful in many DFS classes. When we are simply keeping track of which vertices we have visited, this information is of little consequence; but more interesting problems require that we always know from whence we came.

Program 18.2 is an implementation that illustrates these choices. Figure 18.8 gives an example that illustrates how every vertex is visited, by the effect on the ord vector of any derived class. Typically, the derived classes that we consider also examine all edges incident upon each vertex visited. In such cases, knowing that we visit all vertices tells us that we visit all edges as well, as in Trémaux traversal.

Program 18.3 is an example that shows how we derive a DFS-based class for computing a spanning forest from the SEARCH base class of Program 18.2. We include a private vector st in the derived class to hold a parent-link representation of the tree that we initialize in the constructor; define a searchC that is similar to searchC from Program 18.1, except that it takes an edge v-w as its argument and sets st[w] to v; and add a public member function that allows clients to learn the parent of any vertex. Spanning forests are of interest in many applications, but our primary interest in them in this chapter is their relevance in understanding the dynamic behavior of the DFS, the topic of Section 18.4.
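The idea of passing an edge and recording st[w] = v can be sketched without the base class. The following is a self-contained illustration, not Program 18.3: it folds the scan for start vertices into the constructor rather than deriving from SEARCH, uses a bare vector-of-vectors graph, and the names `Edge`, `SpanningForest`, and `parent` are chosen here for illustration.

```cpp
#include <cassert>
#include <vector>

using AdjLists = std::vector<std::vector<int>>;

struct Edge { int v, w; };  // the search reached w by way of v

// DFS that records a parent-link representation of a spanning forest.
class SpanningForest {
    const AdjLists& adj;
    int cnt = 0;
    std::vector<int> ord;  // visit order, -1 while unvisited
    std::vector<int> st;   // st[w] is the parent of w; a root is its own parent
    void searchC(Edge e) {
        int w = e.w;
        ord[w] = cnt++;
        st[w] = e.v;       // remember how we reached w
        for (int t : adj[w])
            if (ord[t] == -1) searchC(Edge{w, t});
    }
public:
    explicit SpanningForest(const AdjLists& g)
        : adj(g), ord(g.size(), -1), st(g.size(), -1) {
        for (int v = 0; v < static_cast<int>(adj.size()); ++v)
            if (ord[v] == -1) searchC(Edge{v, v});  // dummy self-loop starts a tree
    }
    int parent(int v) const {
        assert(v >= 0 && v < static_cast<int>(st.size()));
        return st[v];
    }
};
```

A client can follow `parent` links from any vertex up to the root of its tree; the dummy self-loop makes each root its own parent, so the walk stops when `parent(v) == v`.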

In a connected graph, the constructor in Program 18.2 calls searchC once for 0-0 and then finds that all the other vertices are marked. In a graph with more than one connected component, the constructor checks all the connected components in a straightforward manner. DFS is the first of several methods that we consider for searching a connected component. No matter which method (and no matter what graph representation) we use, Program 18.2 is an effective method for visiting all the graph vertices.

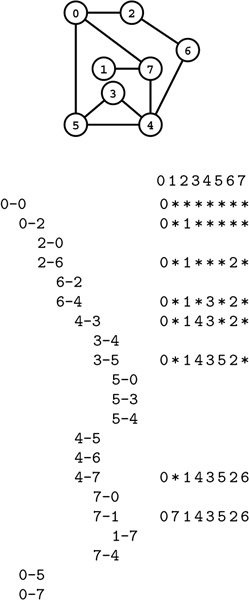

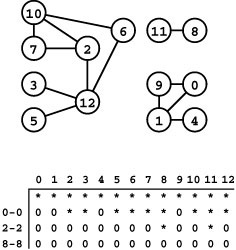

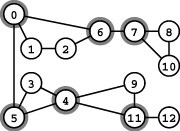

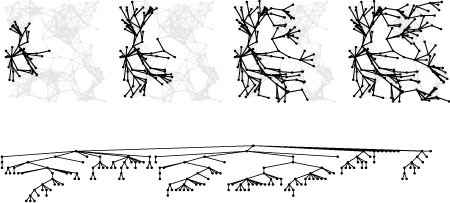

Figure 18.8 Graph search

The table at the bottom shows vertex marks (contents of the ord vector) during a typical search of the graph at the top. Initially, the function GRAPHsearch in Program 18.2 unmarks all vertices by setting the marks all to -1 (indicated by an asterisk). Then it calls search for the dummy edge 0-0, which marks all of the vertices in the same connected component as 0 (second row) by setting them to nonnegative values (indicated by 0s). In this example, it marks 0, 1, 4, and 9 with the values 0 through 3 in that order. Next, it scans from left to right to find the unmarked vertex 2 and calls search for the dummy edge 2-2 (third row), which marks the seven vertices in the same connected component as 2. Continuing the left-to-right scan, it calls search for 8-8 to mark 8 and 11 (bottom row). Finally, GRAPHsearch completes the search by discovering that 9 through 12 are all marked.

Property 18.2 A graph-search function checks each edge and marks each vertex in a graph if and only if the search function that it uses marks each vertex and checks each edge in the connected component that contains the start vertex.

Proof: By induction on the number of connected components. •

Graph-search functions provide a systematic way of processing each vertex and each edge in a graph. Generally, our implementations are designed to run in linear or near-linear time, by doing a fixed amount of processing per edge. We prove this fact now for DFS, noting that the same proof technique works for several other search strategies.

Property 18.3 DFS of a graph represented with an adjacency matrix requires time proportional to V².

Proof: An argument similar to the proof of Property 18.1 shows that searchC not only marks all vertices connected to the start vertex but also calls itself exactly once for each such vertex (to mark that vertex). An argument similar to the proof of Property 18.2 shows that a call to search leads to exactly one call to searchC for each graph vertex. In searchC, the iterator checks every entry in the vertex’s row in the adjacency matrix. In other words, the search checks each of the V² entries in the adjacency matrix precisely once. •

Property 18.4 DFS of a graph represented with adjacency lists requires time proportional to V + E.

Proof: From the argument just outlined, it follows that we call the recursive function precisely V times (hence the V term), and we examine each entry on each adjacency list (hence the E term; in an undirected graph each edge appears on two lists, for 2E entries in all). •
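The two terms in this bound can be checked empirically by instrumenting a search. The sketch below is an illustration only: it assumes a bare vector-of-vectors adjacency-lists graph, and `Cost`, `dfs`, and `measure` are hypothetical names.

```cpp
#include <cassert>
#include <vector>

using AdjLists = std::vector<std::vector<int>>;

// Counters for the two sources of work in an adjacency-lists DFS.
struct Cost {
    long calls = 0;    // recursive calls made (one per vertex)
    long entries = 0;  // adjacency-list entries examined
};

void dfs(const AdjLists& adj, int v, std::vector<bool>& marked, Cost& cost) {
    ++cost.calls;
    marked[v] = true;
    for (int w : adj[v]) {
        assert(w >= 0 && w < static_cast<int>(adj.size()));
        ++cost.entries;  // every list entry is looked at exactly once
        if (!marked[w]) dfs(adj, w, marked, cost);
    }
}

// Search every connected component and report the total work.
Cost measure(const AdjLists& adj) {
    std::vector<bool> marked(adj.size(), false);
    Cost cost;
    for (int v = 0; v < static_cast<int>(adj.size()); ++v)
        if (!marked[v]) dfs(adj, v, marked, cost);
    return cost;
}
```

For an undirected graph with V vertices and E edges stored this way, `measure` reports V calls and 2E entries, whatever order the lists are in.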

The primary implication of Properties 18.3 and 18.4 is that they establish the running time of DFS to be linear in the size of the data structure used to represent the graph. In most situations, we are also justified in thinking of the running time of DFS as being linear in the size of the graph, as well: If we have a dense graph (with the number of edges proportional to V²) then either representation gives this result; if we have a sparse graph, then we assume use of an adjacency-lists representation. Indeed, we normally think of the running time of DFS as being linear in E. That statement is technically not true if we are using adjacency matrices for sparse graphs or for extremely sparse graphs with E << V and most vertices isolated, but we can usually avoid the former situation, and we can remove isolated vertices (see Exercise 17.34) in the latter situation.

As we shall see, these arguments all apply to any algorithm that has a few of the same essential features of DFS. If the algorithm marks each vertex and examines all the latter’s incident edges (and does any other work that takes time per vertex bounded by a constant), then these properties apply. More generally, if the time per vertex is bounded by some function f(V, E), then the time for the search is guaranteed to be proportional to E + V f(V, E). In Section 18.8, we see that DFS is one of a family of algorithms that has just these characteristics; in Chapters 19 through 22, we see that algorithms from this family serve as the basis for a substantial fraction of the code that we consider in this book.
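One way to see where this bound comes from is to add up the work vertex by vertex. The following is a sketch under stated assumptions: an adjacency-lists representation of an undirected graph, a constant cost c per list entry examined (c is a symbol introduced here), and deg(v) denoting the number of entries on the list for v. Writing T for the total time,

$$T \;\le\; \sum_{v}\bigl(c\,\deg(v) + f(V,E)\bigr) \;=\; c\sum_{v}\deg(v) + V f(V,E) \;=\; 2cE + V f(V,E),$$

which is proportional to E + V f(V, E). Taking f to be a constant recovers the V + E bound of Property 18.4.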

Much of the graph-processing code that we examine is ADT-implementation code for some particular task, where we develop a class that does a basic search to compute structural information in other vertex-indexed vectors. We can derive the class from Program 18.2 or, in simple cases, just reimplement the search. Many of our graph-processing classes are of this nature because we typically can uncover a graph’s structure by searching it. We normally add code to the search function that is executed when each vertex is marked, instead of working with a more generic search (for example, one that calls a specified function each time a vertex is visited), solely to keep the code compact and self-contained. Providing a more general ADT mechanism for clients to process all the vertices with a client-supplied function is a worthwhile exercise (see Exercises 18.13 and 18.14).

In Sections 18.5 and 18.6, we examine numerous graph-processing functions that are based on DFS. In Sections 18.7 and 18.8, we look at other implementations of search and at some graph-processing functions that are based on them. Although we do not build this layer of abstraction into our code, we take care to identify the basic graph-search strategy underlying each algorithm that we develop. For example, we use the term DFS class to refer to any implementation that is based on the recursive DFS scheme. The simple-path–search class Program 17.11 and the spanning-forest class Program 18.3 are examples of DFS classes.

Many graph-processing functions are based on the use of vertex-indexed vectors. We typically include such vectors as private data members in class implementations, to hold information about the structure of graphs (which is discovered during the search) that helps us solve the problem at hand. Examples of such vectors are the deg vector in Program 17.11 and the ord vector in Program 18.1. Some implementations that we will examine use multiple vectors to learn complicated structural properties.

Our convention in graph-search functions is to initialize all entries in vertex-indexed vectors to -1, and to set the entries corresponding to each vertex visited to nonnegative values in the search function. Any such vector can play the role of the ord vector (marking vertices as visited) in Programs 18.2 and 18.3. When a graph-search function is based on using or computing a vertex-indexed vector, we often just implement the search and use that vector to mark vertices, rather than deriving the class from SEARCH or maintaining the ord vector.
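As an illustration of this convention, the sketch below labels each vertex with the index of its connected component and lets that same vector serve as the mark, with no separate ord vector. It assumes a bare vector-of-vectors adjacency-lists graph; the names `label` and `componentIds` are hypothetical.

```cpp
#include <cassert>
#include <vector>

using AdjLists = std::vector<std::vector<int>>;

// Assign component number c to every vertex reachable from v.
// id[w] == -1 means that w has not been visited yet.
void label(const AdjLists& adj, int v, int c, std::vector<int>& id) {
    id[v] = c;  // the computed value doubles as the visited mark
    for (int w : adj[v]) {
        assert(w >= 0 && w < static_cast<int>(adj.size()));
        if (id[w] == -1) label(adj, w, c, id);
    }
}

// Return a vertex-indexed vector of component numbers 0, 1, 2, ...
std::vector<int> componentIds(const AdjLists& adj) {
    std::vector<int> id(adj.size(), -1);  // initialize all entries to -1
    int components = 0;
    for (int v = 0; v < static_cast<int>(adj.size()); ++v)
        if (id[v] == -1) label(adj, v, components++, id);
    return id;
}
```

Two vertices are in the same connected component exactly when their entries in the returned vector are equal.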

The specific outcome of a graph search depends not just on the nature of the search function but also on the graph representation and even the order in which search examines the vertices. For specificity in the examples and exercises in this book, we use the term standard adjacency-lists DFS to refer to the process of inserting a sequence of edges into a graph ADT implemented with an adjacency-lists representation (Program 17.9), then doing a DFS with, for example, Program 18.3. For the adjacency-matrix representation, the order of edge insertion does not affect search dynamics, but we use the parallel term standard adjacency-matrix DFS to refer to the process of inserting a sequence of edges into a graph ADT implemented with an adjacency-matrix representation (Program 17.7), then doing a DFS with, for example, Program 18.3.

Exercises

18.8 Show, in the style of Figure 18.5, a trace of the recursive function calls made for a standard adjacency-matrix DFS of the graph

3-7 1-4 7-8 0-5 5-2 3-8 2-9 0-6 4-9 2-6 6-4.

18.9 Show, in the style of Figure 18.7, a trace of the recursive function calls made for a standard adjacency-lists DFS of the graph

3-7 1-4 7-8 0-5 5-2 3-8 2-9 0-6 4-9 2-6 6-4.

18.10 Modify the adjacency-matrix graph ADT implementation in Program 17.7 to use a dummy vertex that is connected to all the other vertices. Then, provide a simplified DFS implementation that takes advantage of this change.

18.11 Do Exercise 18.10 for the adjacency-lists ADT implementation in Program 17.9.

• 18.12 There are 13! different permutations of the vertices in the graph depicted in Figure 18.8. How many of these permutations could specify the order in which vertices are visited by Program 18.2?

18.13 Implement a graph ADT client function that calls a client-supplied function for each vertex in the graph.

18.14 Implement a graph ADT client that calls a client-supplied function for each edge in the graph. Such a function might be a reasonable alternative to GRAPHedges (see Program 17.2).

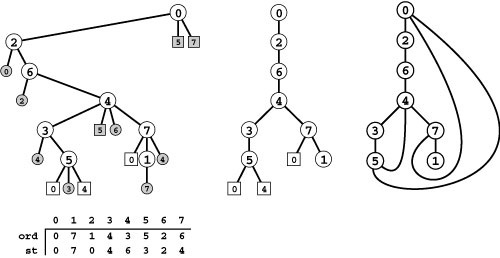

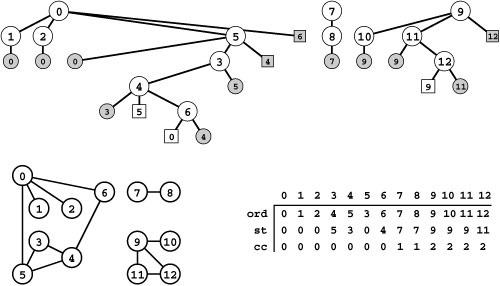

Figure 18.9 DFS tree representations

If we augment the DFS recursive-call tree to represent edges that are checked but not followed, we get a complete description of the DFS process (left). Each tree node has a child representing each of the nodes adjacent to it, in the order they were considered by the DFS, and a preorder traversal gives the same information as Figure 18.5: first we follow 0-0, then 0-2, then we skip 2-0, then we follow 2-6, then we skip 6-2, then we follow 6-4, then 4-3, and so forth. The ord vector specifies the order in which we visit tree vertices during this preorder walk, which is the same as the order in which we visit graph vertices in the DFS. The st vector is a parent-link representation of the DFS recursive-call tree (see Figure 18.6).

There are two links in the tree for every edge in the graph, one for each of the two times the search encounters the edge. The first is to an unshaded node and either corresponds to making a recursive call (if it is to an internal node) or to skipping a recursive call because it goes to an ancestor for which a recursive call is in progress (if it is to an external node). The second is to a shaded external node and always corresponds to skipping a recursive call, either because it goes back to the parent (circles) or because it goes to a descendant of the parent for which a recursive call is in progress (squares). If we eliminate shaded nodes (center), then replace the external nodes with edges, we get another drawing of the graph (right).

18.4 Properties of DFS Forests

As noted in Section 18.2, the trees that describe the recursive structure of DFS function calls give us the key to understanding how DFS operates. In this section, we examine properties of the algorithm by examining properties of DFS trees.

If we add external nodes to the DFS tree to record the moments when we skipped recursive calls for vertices that had already been visited, we get the compact representation of the dynamics of DFS illustrated in Figure 18.9. This representation is worthy of careful study. The tree is a representation of the graph, with a vertex corresponding to each graph vertex and an edge corresponding to each graph edge. We can choose to show the two representations of the edge that we process (one in each direction), as shown in the left part of the figure, or just one representation of each edge, as shown in the center and right parts of the figure. The former is useful in understanding that the algorithm processes each and every edge; the latter is useful in understanding that the DFS tree is simply another graph representation. Traversing the internal nodes of the tree in preorder gives the vertices in the order in which DFS visits them; moreover, the order in which we visit the edges of the tree as we traverse it in preorder is the same as the order in which DFS examines the edges of the graph.

Indeed, the DFS tree in Figure 18.9 contains the same information as the trace in Figure 18.5 or the step-by-step illustration of Trémaux traversal in Figures 18.2 and 18.3. Edges to internal nodes represent edges (passages) to unvisited vertices (intersections), edges to external nodes represent occasions where DFS checks edges that lead to previously visited vertices (intersections), and shaded nodes represent edges to vertices for which a recursive DFS is in progress (when we open a door to a passage where the door at the other end is already open). With these interpretations, a preorder traversal of the tree tells the same story as that of the detailed maze-traversal scenario.

To study more intricate graph properties, we classify the edges in a graph according to the role that they play in the search. We have two distinct edge classes:

• Edges representing a recursive call (tree edges)

• Edges connecting a vertex with an ancestor in its DFS tree that is not its parent (back edges)

When we study DFS trees for digraphs in Chapter 19, we examine other types of edges, not just to take the direction into account, but also because we can have edges that go across the tree, connecting nodes that are neither ancestors nor descendants in the tree.

Since there are two representations of each graph edge that each correspond to a link in the DFS tree, we divide the tree links into four classes, using the preorder numbers and the parent links (in the ord and st arrays, respectively) that our DFS code computes. We refer to a link from v to w in a DFS tree that represents a tree edge as

• A tree link if w is unmarked

• A parent link if w is the parent of v (st[v] is w)

and a link from v to w that represents a back edge as

• A back link if ord[w] < ord[v]

• A down link if ord[w] > ord[v]

Each tree edge in the graph corresponds to a tree link and a parent link in the DFS tree, and each back edge in the graph corresponds to a back link and a down link in the DFS tree.

In the graphical DFS representation illustrated in Figure 18.9, tree links point to unshaded circles, parent links point to shaded circles, back links point to unshaded squares, and down links point to shaded squares. Each graph edge is represented either as one tree link and one parent link or as one down link and one back link. These classifications are tricky and worthy of study. For example, note that even though parent links and back links both point to ancestors in the tree, they are quite different: A parent link is just the other representation of a tree link, but a back link gives us new information about the structure of the graph.

Figure 18.10 DFS trace (tree link classifications)

This version of Figure 18.5 shows the classification of the DFS tree link corresponding to each graph edge representation encountered. Tree edges (which correspond to recursive calls) are represented as tree links on the first encounter and parent links on the second encounter. Back edges are back links on the first encounter and down links on the second encounter.

The definitions just given provide sufficient information to distinguish among tree, parent, back, and down links in a DFS class implementation. Note that parent links and back links both have ord[w] < ord[v], so we must also know that w is not the parent of v (st[v] is not w) to know that v-w is a back link. Figure 18.10 depicts the result of printing out the classification of the DFS tree link for each graph edge as that edge is encountered during a sample DFS. It is yet another complete representation of the basic search process that is an intermediate step between Figure 18.5 and Figure 18.9.
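The classification can be sketched in a few lines of code. The following is a minimal illustration, not one of the numbered programs: it assumes a simple undirected graph (no self-loops or parallel edges) held in a plain vector of adjacency lists, and the names Adj, LinkClassifier, and trace are hypothetical. Here v is the vertex whose adjacency list is being scanned, so the parent test asks whether w is the parent of v.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Assumption: a simple undirected graph stored as adjacency lists,
// where adj[v] holds the neighbors of v (each edge appears twice).
using Adj = std::vector<std::vector<int>>;

struct LinkClassifier {
    const Adj& adj;
    std::vector<int> ord;            // preorder numbers, -1 if unvisited
    std::vector<int> st;             // parent links (a root is its own parent)
    std::vector<std::string> trace;  // one entry per edge representation
    int cnt = 0;

    explicit LinkClassifier(const Adj& g)
        : adj(g), ord(g.size(), -1), st(g.size(), -1) {
        for (int v = 0; v < static_cast<int>(g.size()); ++v)
            if (ord[v] == -1) { st[v] = v; dfs(v); }
    }

    void dfs(int v) {
        ord[v] = cnt++;
        for (int w : adj[v]) {
            assert(w >= 0 && w < static_cast<int>(adj.size()));
            const std::string edge =
                std::to_string(v) + "-" + std::to_string(w);
            if (ord[w] == -1) {            // first encounter of a tree edge
                trace.push_back(edge + " tree");
                st[w] = v;
                dfs(w);
            } else if (st[v] == w) {       // second encounter of a tree edge
                trace.push_back(edge + " parent");
            } else if (ord[w] < ord[v]) {  // first encounter of a back edge
                trace.push_back(edge + " back");
            } else {                       // second encounter of a back edge
                trace.push_back(edge + " down");
            }
        }
    }
};
```

Constructing a LinkClassifier for a graph fills trace with one classified link per edge representation, in the order in which the search encounters them.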

The four types of tree links correspond to the four different ways in which we treat edges during a DFS, as described (in maze-exploration terms) at the end of Section 18.1. A tree link corresponds to DFS encountering the first of the two representations of a tree edge, leading to a recursive call (to as-yet-unseen vertices); a parent link corresponds to DFS encountering the other representation of the tree edge (when going through the adjacency list on that first recursive call) and ignoring it. A back link corresponds to DFS encountering the first of the two representations of a back edge, which points to a vertex for which the recursive search function has not yet completed; a down link corresponds to DFS encountering a vertex for which the recursive search has completed at the time that the edge is encountered. In Figure 18.9, tree links and back links connect unshaded nodes, represent the first encounter with the corresponding edge, and constitute a representation of the graph; parent links and down links go to shaded nodes and represent the second encounter with the corresponding edge.

We have considered this tree representation of the dynamic characteristics of recursive DFS in detail not just because it provides a complete and compact description of both the graph and the operation of the algorithm, but also because it gives us a basis for understanding numerous important graph-processing algorithms. In the remainder of this chapter, and in the next several chapters, we consider a number of examples of graph-processing problems that draw conclusions about a graph’s structure from the DFS tree.

Search in a graph is a generalization of tree traversal. When invoked on a tree, DFS is precisely equivalent to recursive tree traversal; for graphs, using it corresponds to traversing a tree that spans the graph

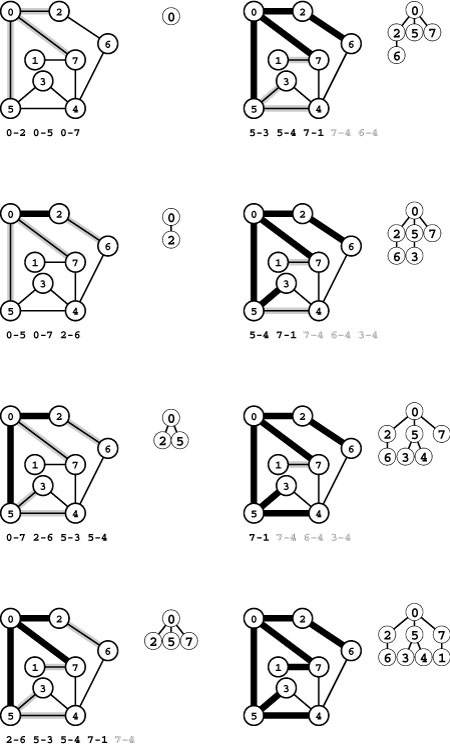

Figure 18.11 DFS forest

The DFS forest at the top represents a DFS of an adjacency-matrix representation of the graph at the bottom right. The graph has three connected components, so the forest has three trees. The ord vector is a preorder numbering of the nodes in the tree (the order in which they are examined by the DFS) and the st vector is a parent-link representation of the forest. The cc vector associates each vertex with a connected-component index (see Program 18.4). As in Figure 18.9, edges to circles are tree edges; edges that go to squares are back edges; and shaded nodes indicate that the incident edge was encountered earlier in the search, in the other direction.

and that is discovered as the search proceeds. As we have seen, the particular tree traversed depends on how the graph is represented. DFS corresponds to preorder tree traversal. In Section 18.6, we examine the graph-searching analog to level-order tree traversal and explore its relationship to DFS; in Section 18.7, we examine a general schema that encompasses any traversal method.

When traversing graphs with DFS, we have been using the ord vector to assign preorder numbers to the vertices in the order that we start processing them. We can also assign postorder numbers to vertices, in the order that we finish processing them (just before returning from the recursive search function). When processing a graph, we do more than simply traverse the vertices—as we shall see, the preorder and postorder numbering give us knowledge about global graph properties that helps us to accomplish the task at hand. Preorder numbering suffices for the algorithms that we consider in this chapter, but we use postorder numbering in later chapters.
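As a rough illustration of the two numberings, the sketch below records both in a single DFS. It assumes a plain vector of adjacency lists rather than the graph ADT, and the names Adj, PrePost, pre, and post are chosen for this example only.

```cpp
#include <cassert>
#include <vector>

// Assumption: adj[v] lists the neighbors of v in an undirected graph.
using Adj = std::vector<std::vector<int>>;

struct PrePost {
    std::vector<int> pre;   // order in which we start processing vertices
    std::vector<int> post;  // order in which we finish processing vertices
    int preCnt = 0, postCnt = 0;

    explicit PrePost(const Adj& adj)
        : pre(adj.size(), -1), post(adj.size(), -1) {
        for (int v = 0; v < static_cast<int>(adj.size()); ++v)
            if (pre[v] == -1) dfs(adj, v);
    }

    void dfs(const Adj& adj, int v) {
        pre[v] = preCnt++;                 // assigned on entry
        for (int w : adj[v]) {
            assert(w >= 0 && w < static_cast<int>(adj.size()));
            if (pre[w] == -1) dfs(adj, w);
        }
        post[v] = postCnt++;               // assigned just before returning
    }
};
```

The pre vector plays the same role as ord; the post vector is filled in just before each call returns, so a vertex always receives its postorder number after all of its descendants in the DFS tree.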

We describe the dynamics of DFS for a general undirected graph with a DFS forest that has one DFS tree for each connected component. An example of a DFS forest is illustrated in Figure 18.11.

With an adjacency-lists representation, we visit the edges connected to each vertex in an order different from that for the adjacency-matrix representation, so we get a different DFS forest, as illustrated in Figure 18.12. DFS trees and forests are graph representations that describe not only the dynamics of DFS but also the internal representation

Figure 18.12 Another DFS forest

This forest describes depth-first search of the same graph as Figure 18.11, but using an adjacency-lists representation, so the search order is different because it is determined by the order that nodes appear in adjacency lists. Indeed, the forest itself tells us that order: it is the order in which children are listed for each node in the tree. For instance, the nodes on 0’s adjacency list were found in the order 5 2 1 6, the nodes on 4’s list are in the order 6 5 3, and so forth. As before, all vertices and edges in the graph are examined during the search, in a manner that is precisely described by a preorder walk of the tree. The ord and st vectors depend upon the graph representation and the search dynamics and are different from Figure 18.11, but the vector cc depends on graph properties and is the same.

of the graph. For example, by reading the children of any node in Figure 18.12 from left to right, we see the order in which they appear on the adjacency list of the vertex corresponding to that node. We can have many different DFS forests for the same graph—each ordering of nodes on adjacency lists leads to a different forest.

Details of the structure of a particular forest inform our understanding of how DFS operates for particular graphs, but most of the important DFS properties that we consider depend on graph properties that are independent of the structure of the forest. For example, the forests in Figures 18.11 and 18.12 both have three trees (as would any other DFS forest for the same graph) because they are just different representations of the same graph, which has three connected components. Indeed, a direct consequence of the basic proof that DFS visits all the nodes and edges of a graph (see Properties 18.2 through 18.4) is that the number of connected components in the graph is equal to the number of trees in the DFS forest. This example illustrates the basis for our use of graph search throughout the book: A broad variety of graph-processing class implementations are based on learning graph properties by processing a particular graph representation (a forest corresponding to the search).

Potentially, we could analyze DFS tree structures with the goal of improving algorithm performance. For example, should we attempt to speed up an algorithm by rearranging the adjacency lists before starting the search? For many of the important classical DFS-based algorithms, the answer to this question is no, because they are optimal—their worst-case running time depends on neither the graph structure nor the order in which edges appear on the adjacency lists (they essentially process each edge exactly once). Still, DFS forests have a characteristic structure that is worth understanding because it distinguishes them from other fundamental search schema that we consider later in this chapter.

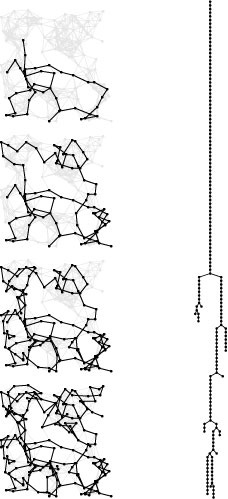

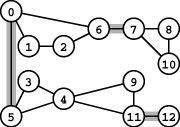

Figure 18.13 shows a DFS tree for a larger graph that illustrates the basic characteristics of DFS search dynamics. The tree is tall and thin, and it demonstrates several characteristics of the graph being searched and of the DFS process.

• There exists at least one long path that connects a substantial fraction of the nodes.

• During the search, most vertices have at least one adjacent vertex that we have not yet seen.

• We rarely make more than one recursive call from any node.

• The depth of the recursion is proportional to the number of vertices in the graph.

This behavior is typical for DFS, though these characteristics are not guaranteed for all graphs. Verifying facts of this kind for graph models of interest and various types of graphs that arise in practice requires detailed study. Still, this example gives an intuitive feel for DFS-based algorithms that is often borne out in practice. Figure 18.13 and similar figures for other graph-search algorithms (see Figures 18.24 and 18.29) help us understand differences in their behavior.

Exercises

18.15 Draw the DFS forest that results from a standard adjacency-matrix DFS of the graph

3-7 1-4 7-8 0-5 5-2 3-8 2-9 0-6 4-9 2-6 6-4.

18.16 Draw the DFS forest that results from a standard adjacency-lists DFS of the graph

3-7 1-4 7-8 0-5 5-2 3-8 2-9 0-6 4-9 2-6 6-4.

18.17 Write a DFS trace program to produce output that classifies each of the two representations of each graph edge as corresponding to a tree, parent, back, or down link in the DFS tree, in the style of Figure 18.10.

• 18.18 Write a program that computes a parent-link representation of the full DFS tree (including the external nodes), using a vector of E integers between 0 and V − 1. Hint: The first V entries in the vector should be the same as those in the st vector described in the text.

Figure 18.13 Depth-first search

This figure illustrates the progress of DFS in a random Euclidean near-neighbor graph (left). The figures show the DFS tree vertices and edges in the graph as the search progresses through 1/4, 1/2, 3/4, and all of the vertices (top to bottom). The DFS tree (tree edges only) is shown at the right. As is evident from this example, the search tree for DFS tends to be quite tall and thin for this type of graph (as it is for many other types of graphs commonly encountered in practice). We normally find a vertex nearby that we have not seen before.

• 18.19 Instrument the spanning-forest DFS class (Program 18.3) by adding member functions (and appropriate private data members) that return the height of the tallest tree in the forest, the number of back edges, and the percentage of edges processed to see every vertex.

• 18.20 Run experiments to determine empirically the average values of the quantities described in Exercise 18.19 for graphs of various sizes, drawn from various graph models (see Exercises 17.64–76).

• 18.21 Write a function that builds a graph by inserting edges from a given vector into an initially empty graph, in random order. Using this function with an adjacency-lists implementation of the graph ADT, run experiments to determine empirically properties of the distribution of the quantities described in Exercise 18.19 for all the adjacency-lists representations of large sample graphs of various sizes, drawn from various graph models (see Exercises 17.64–76).

18.5 DFS Algorithms

Regardless of the graph structure or the representation, any DFS forest allows us to identify edges as tree or back edges and gives us dependable insights into graph structure that allow us to use DFS as a basis for solving numerous graph-processing problems. We have already seen, in Section 17.7, basic examples related to finding paths. In this section, we consider DFS-based ADT function implementations for these and other typical problems; in the remainder of this chapter and in the next several chapters, we look at numerous solutions to much more difficult problems.

Cycle detection Does a given graph have any cycles? (Is the graph a forest?) This problem is easy to solve with DFS because any back edge in a DFS tree belongs to a cycle consisting of the edge plus the tree path connecting the two nodes (see Figure 18.9). Thus, we can use DFS immediately to check for cycles: A graph is acyclic if and only if we encounter no back (or down!) edges during a DFS. For example, to test this condition in Program 18.1, we simply add an else clause to the if statement to test whether t is equal to v. If it is, we have just encountered the parent link w-v (the second representation of the edge v-w that led us to w). If it is not, w-t completes a cycle with the edges from t down to w in the DFS tree. Moreover, we do not need to examine all the edges: We know that we must find a cycle or finish the search without finding one before examining V edges, because any graph with V or more edges must have a cycle. Thus, we can test whether a graph is acyclic in time proportional to V with the adjacency-lists representation, although we may need time proportional to V² (to find the edges) with the adjacency-matrix representation.
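The test just described might look as follows when written as a standalone function. This is a sketch under stated assumptions, not a modification of Program 18.1 itself: the graph is simple (no self-loops or parallel edges) and is stored as a plain vector of adjacency lists, and the names Adj, cycleFrom, and hasCycle are hypothetical.

```cpp
#include <cassert>
#include <vector>

// Assumption: adj[v] lists the neighbors of v in a simple undirected graph.
using Adj = std::vector<std::vector<int>>;

// Returns true as soon as a marked vertex other than the parent is found.
static bool cycleFrom(const Adj& adj, std::vector<int>& ord, int& cnt,
                      int v, int parent) {
    ord[v] = cnt++;
    for (int t : adj[v]) {
        assert(t >= 0 && t < static_cast<int>(adj.size()));
        if (ord[t] == -1) {
            if (cycleFrom(adj, ord, cnt, t, v)) return true;
        } else if (t != parent) {
            return true;   // v-t is a back edge, so it closes a cycle
        }                  // otherwise v-t is the parent link: ignore it
    }
    return false;
}

bool hasCycle(const Adj& adj) {
    std::vector<int> ord(adj.size(), -1);
    int cnt = 0;
    for (int v = 0; v < static_cast<int>(adj.size()); ++v)
        if (ord[v] == -1 && cycleFrom(adj, ord, cnt, v, v)) return true;
    return false;
}
```

Because the search stops at the first marked vertex that is not the parent, it never needs to look at more than about V edges before it either reports a cycle or finishes.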

Simple path Given two vertices, is there a path in the graph that connects them? We saw in Section 17.7 that a DFS class that can solve this problem in linear time is easy to devise.

Simple connectivity As discussed in Section 18.3, we determine whether or not a graph is connected whenever we use DFS, in linear time. Indeed, our graph-search strategy is based upon calling a search function for each connected component. In a DFS, the graph is connected if and only if the graph-search function calls the recursive DFS function just once (see Program 18.2). The number of connected components in the graph is precisely the number of times that the recursive function is called from GRAPHsearch, so we can find the number of connected components by simply keeping track of the number of such calls.

More generally, Program 18.4 illustrates a DFS class that supports constant-time connectivity queries after a linear-time preprocessing step in the constructor. It visits vertices in the same order as does Program 18.3. The recursive function uses a vertex as its second argument instead of an edge, since it does not need to know the identity of the parent. Each tree in the DFS forest identifies a connected component, so we arrange to decide quickly whether two vertices are in the same component by including a vertex-indexed vector in the graph representation, to be filled in by a DFS and accessed for connectivity queries. In the recursive DFS function, we assign the current value of the component counter to the entry corresponding to each vertex visited. Then, we know that two vertices are in the same component if and only if their entries in this vector are equal. Again, note that this vector reflects structural properties of the graph, rather than artifacts of the graph representation or of the search dynamics.
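To make the idea concrete, here is a minimal sketch of such a class. It is not Program 18.4: it assumes a plain vector of adjacency lists in place of the graph ADT, and the names Adj, Components, count, and connected are chosen for illustration.

```cpp
#include <cassert>
#include <vector>

// Assumption: adj[v] lists the neighbors of v in an undirected graph.
using Adj = std::vector<std::vector<int>>;

class Components {
    std::vector<int> id;   // id[v] is the component index of v
    int ccnt = 0;          // number of components found so far

    void dfs(const Adj& adj, int v) {
        id[v] = ccnt;      // label v with the current component counter
        for (int w : adj[v])
            if (id[w] == -1) dfs(adj, w);
    }

public:
    // Linear-time preprocessing: one DFS per connected component.
    explicit Components(const Adj& adj) : id(adj.size(), -1) {
        for (int v = 0; v < static_cast<int>(adj.size()); ++v)
            if (id[v] == -1) { dfs(adj, v); ++ccnt; }
    }

    int count() const { return ccnt; }

    // Constant-time query: compare the two component labels.
    bool connected(int s, int t) const {
        assert(s >= 0 && s < static_cast<int>(id.size()));
        assert(t >= 0 && t < static_cast<int>(id.size()));
        return id[s] == id[t];
    }
};
```

A client constructs one Components object per graph and then asks any number of connected queries, each of which costs a single comparison.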

Program 18.4 typifies the basic approach that we shall use in addressing numerous graph-processing tasks. We develop a task-specific class so that clients can create objects to perform the task. Typically, we invest preprocessing time in the constructor to compute private data about relevant structural graph properties that help us to provide efficient implementations of public query functions. In this case, the constructor

preprocesses with a (linear-time) DFS and keeps a private data member (the vertex-indexed vector id) that allows it to answer connectivity queries in constant time. For other graph-processing problems, our constructors might use more space, preprocessing time, or query time. As usual, our focus is on minimizing such costs, although doing so is often challenging. For example, much of Chapter 19 is devoted to solving the connectivity problem for digraphs, where achieving linear time preprocessing and constant query time, as in Program 18.4, is an elusive goal.

How does the DFS-based solution for graph connectivity in Program 18.4 compare with the union-find approach that we considered in Chapter 1 for the problem of determining whether a graph is connected, given an edge list? In theory, DFS is faster than union-find because it provides a constant-time guarantee, which union-find does not; in practice, this difference is negligible, and union-find is faster because it does not have to build a full representation of the graph. More important, union-find is an online algorithm (we can check whether two vertices are connected in near-constant time at any point), whereas the DFS solution preprocesses the graph to answer connectivity queries in constant time. Therefore, for example, we prefer union-find when determining connectivity is our only task or when we have a large number

Figure 18.14 A two-way Euler tour

Depth-first search gives us a way to explore any maze, traversing each passage in both directions. We modify Trémaux exploration to take the string with us wherever we go and take a back-and-forth trip on passages without any string in them that go to intersections that we have already visited. This figure shows a different traversal order than shown in Figures 18.2 and 18.3, primarily so that we can draw the tour without crossing itself. This ordering might result, for example, if the edges were processed in some different order when building an adjacency-lists representation of the graph; or, we might explicitly modify DFS to take the geometric placement of the nodes into account (see Exercise 18.26). Moving along the lower track leading out of 0, we move from 0 to 2 to 6 to 4 to 7, then take a trip from 7 to 0 and back because ord[0] is less than ord[7]. Then we go to 1, back to 7, back to 4, to 3, to 5, from 5 to 0 and back, from 5 to 4 and back, back to 3, back to 4, back to 6, back to 2, and back to 0. This path may be obtained by a recursive pre- and postorder walk of the DFS tree (ignoring the shaded vertices that represent the second time we encounter the edges) where we print out the vertex name, recursively visit the subtrees, then print out the vertex name again.

of queries intermixed with edge insertions but may find the DFS solution more appropriate for use in a graph ADT because it makes efficient use of existing infrastructure. Neither approach handles efficiently huge numbers of intermixed edge insertions, edge deletions, and connectivity queries; both require a separate DFS to compute the path. These considerations illustrate the complications that we face when analyzing graph algorithms; we explore them in detail in Section 18.9.

Two-way Euler tour Program 18.5 is a DFS-based class for finding a path that uses all the edges in a graph exactly twice—once in each direction (see Section 17.7). The path corresponds to a Trémaux exploration in which we take our string with us everywhere that we go, check for the string instead of using lights (so we have to go down the passages that lead to intersections that we have already visited), and first arrange to go back and forth on each back link (the first time that we encounter each back edge), then ignore down links (the second time that we encounter each back edge). We might also choose to ignore the back links (first encounter) and to go back and forth on down links (second encounter) (see Exercise 18.25).
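The tour can be sketched as a function that returns the sequence of vertices visited. This is an illustration rather than Program 18.5: it assumes a simple graph stored as a plain vector of adjacency lists, it covers only the connected component containing the start vertex, and the names Adj, tourFrom, and twoWayEulerTour are hypothetical.

```cpp
#include <cassert>
#include <vector>

// Assumption: adj[v] lists the neighbors of v in a simple undirected graph.
using Adj = std::vector<std::vector<int>>;

static void tourFrom(const Adj& adj, std::vector<int>& ord, int& cnt,
                     std::vector<int>& path, int v, int parent) {
    ord[v] = cnt++;
    for (int w : adj[v]) {
        if (ord[w] == -1) {
            path.push_back(w);                 // go down the tree link
            tourFrom(adj, ord, cnt, path, w, v);
            path.push_back(v);                 // come back up the same edge
        } else if (w != parent && ord[w] < ord[v]) {
            path.push_back(w);                 // back link: go there ...
            path.push_back(v);                 // ... and come straight back
        }
        // parent links and down links are ignored
    }
}

// Returns the vertices of a two-way Euler tour of start's component.
std::vector<int> twoWayEulerTour(const Adj& adj, int start) {
    assert(start >= 0 && start < static_cast<int>(adj.size()));
    std::vector<int> ord(adj.size(), -1);
    std::vector<int> path;
    path.push_back(start);
    int cnt = 0;
    tourFrom(adj, ord, cnt, path, start, start);
    return path;
}
```

For a connected graph with E edges, the returned sequence has 2E + 1 entries, since each edge contributes one step in each direction.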

Spanning forest Given a connected graph with V vertices, find a set of V − 1 edges that connects the vertices. If the graph has C connected components, find a spanning forest (with V − C edges). We have already seen a DFS class that solves this problem: Program 18.3.

Vertex search How many vertices are in the same connected component as a given vertex? We can solve this problem easily by starting a DFS at the vertex and counting the number of vertices marked. In a dense graph, we can speed up the process considerably by stopping the DFS after we have marked V vertices—at that point, we know that

Figure 18.15 Two-coloring a DFS tree

To two-color a graph, we alternate colors as we move down the DFS tree, then check the back edges for inconsistencies. In the tree at the top, a DFS tree for the sample graph illustrated in Figure 18.9, the back edges 5-4 and 7-0 prove that the graph is not two-colorable because of the odd-length cycles 4-3-5-4 and 0-2-6-4-7-0, respectively. In the tree at the bottom, a DFS tree for the bipartite graph illustrated in Figure 17.5, there are no such inconsistencies, and the indicated shading is a two-coloring of the graph.

no edge will take us to a vertex that we have not yet seen, so we will be ignoring all the rest of the edges. This improvement is likely to allow us to visit all vertices in time proportional to V log V, not E (see Section 18.8).
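A minimal sketch of this counting search, with the early stop, follows. It assumes a plain vector of adjacency lists, and the names Adj, visit, and componentSize are chosen for this example only.

```cpp
#include <cassert>
#include <vector>

// Assumption: adj[v] lists the neighbors of v in an undirected graph.
using Adj = std::vector<std::vector<int>>;

static void visit(const Adj& adj, std::vector<bool>& seen, int v, int& count) {
    seen[v] = true;
    ++count;
    for (int w : adj[v]) {
        // Once all V vertices are marked, the remaining edges cannot
        // lead anywhere new, so we stop scanning.
        if (count == static_cast<int>(adj.size())) return;
        if (!seen[w]) visit(adj, seen, w, count);
    }
}

// Number of vertices in the connected component that contains s.
int componentSize(const Adj& adj, int s) {
    assert(s >= 0 && s < static_cast<int>(adj.size()));
    std::vector<bool> seen(adj.size(), false);
    int count = 0;
    visit(adj, seen, s, count);
    return count;
}
```

The early return matters only when the component is the whole graph; otherwise the search has to run to completion to be sure that no further vertices are reachable.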

Two-colorability, bipartiteness, odd cycle Is there a way to assign one of two colors to each vertex of a graph such that no edge connects two vertices of the same color? Is a given graph bipartite (see Section 17.1)? Does a given graph have a cycle of odd length? These three problems are all equivalent: The first two are different nomenclature for the same problem; any graph with an odd cycle is clearly not two-colorable, and Program 18.6 demonstrates that any graph that is free of odd cycles can be two-colored. The program is a DFS-based ADT function implementation that tests whether a graph is bipartite, two-colorable, and free of odd cycles. The recursive function is an outline for a proof by induction that the program two-colors any graph with no odd cycles (or finds an odd cycle as evidence that a graph that is not free of odd cycles cannot be two-colored). To two-color a graph with a given color assigned to a vertex v, two-color the remaining graph, assigning the other color to each vertex adjacent to v. This process is equivalent to assigning alternate colors on levels as we proceed down the DFS tree, checking back edges for consistency in the coloring, as illustrated in Figure 18.15. Any back edge connecting two vertices of the same color is evidence of an odd cycle.
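The coloring process can be sketched as follows. This is an illustration in the spirit of the description above, not Program 18.6 itself: it assumes a plain vector of adjacency lists, and the names Adj, colorFrom, and isBipartite are hypothetical.

```cpp
#include <cassert>
#include <vector>

// Assumption: adj[v] lists the neighbors of v in an undirected graph.
using Adj = std::vector<std::vector<int>>;

// Colors v with c (0 or 1) and its unvisited neighbors with the other
// color; returns false if some edge joins two vertices of the same color.
static bool colorFrom(const Adj& adj, std::vector<int>& color, int v, int c) {
    color[v] = c;
    for (int w : adj[v]) {
        assert(w >= 0 && w < static_cast<int>(adj.size()));
        if (color[w] == -1) {
            if (!colorFrom(adj, color, w, 1 - c)) return false;
        } else if (color[w] == c) {
            return false;   // same color at both ends: an odd cycle
        }
    }
    return true;
}

bool isBipartite(const Adj& adj) {
    std::vector<int> color(adj.size(), -1);   // -1 means not yet colored
    for (int v = 0; v < static_cast<int>(adj.size()); ++v)
        if (color[v] == -1 && !colorFrom(adj, color, v, 0)) return false;
    return true;
}
```

Tree edges always join vertices on adjacent levels, which receive opposite colors, so only a back edge can trigger the failure case.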

These basic examples illustrate ways in which DFS can give us insight into the structure of a graph. They also demonstrate that we can learn various important graph properties in a single linear-time sweep through the graph, where we examine every edge twice, once in each direction. Next, we consider an example that shows the utility of DFS in discovering more intricate details about the graph structure, still in linear time.

Exercises

• 18.22 Implement a DFS-based cycle-testing class that preprocesses a graph in time proportional to V in the constructor to support public member functions for detecting whether a graph has any cycles and for printing a cycle if one exists.

18.23 Describe a family of graphs with V vertices for which a standard adjacency-matrix DFS requires time proportional to V² for cycle detection.

• 18.24 Implement the graph-connectivity class of Program 18.4 as a derived graph-search class, like Program 18.3.

• 18.25 Specify a modification to Program 18.5 that will produce a two-way Euler tour that does the back-and-forth traversal on down edges instead of back edges.

• 18.26 Modify Program 18.5 such that it always produces a two-way Euler tour that, like the one in Figure 18.14, can be drawn such that it does not cross itself at any vertex. For example, if the search in Figure 18.14 were to take the edge 4-3 before the edge 4-7, then the tour would have to cross itself; your task is to ensure that the algorithm avoids such situations.

18.27 Develop a version of Program 18.5 that sorts the edges of a graph in order of a two-way Euler tour. Your program should return a vector of edges that corresponds to a two-way Euler tour.

18.28 Prove that a graph is two-colorable if and only if it contains no odd cycle. Hint: Prove by induction that Program 18.6 determines whether or not any given graph is two-colorable.

• 18.29 Explain why the approach taken in Program 18.6 does not generalize to give an efficient method for determining whether a graph is three-colorable.

18.30 Most graphs are not two-colorable, and DFS tends to discover that fact quickly. Run empirical tests to study the number of edges examined by Program 18.6, for graphs of various sizes, drawn from various graph models (see Exercises 17.64–76).

• 18.31 Prove that every connected graph has a vertex whose removal will not disconnect the graph, and write a DFS function that finds such a vertex. Hint: Consider the leaves of the DFS tree.

18.32 Prove that every graph with more than one vertex has at least two vertices whose removal will not increase the number of connected components.

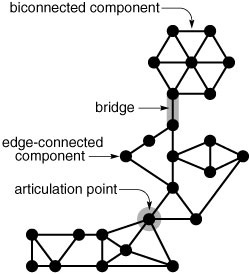

18.6 Separability and Biconnectivity

To illustrate the power of DFS as the basis for graph-processing algorithms, we turn to problems related to generalized notions of connectivity in graphs. We study questions such as the following: Given two vertices, are there two different paths connecting them?

If it is important that a graph be connected in some situation, it might also be important that it stay connected when an edge or a vertex is removed. That is, we may want to have more than one route between each pair of vertices in a graph, so as to handle possible failures. For example, we can fly from New York to San Francisco even if Chicago is snowed in by going through Denver instead. Or, we might imagine a wartime situation where we want to arrange our railroad network such that an enemy must destroy at least two stations to cut our rail lines. Similarly, we might expect the main communications lines in an integrated circuit or a communications network to be connected such that the rest of the circuit still can function if one wire is broken or one link is down.

These examples illustrate two distinct concepts: In the circuit and in the communications network, we are interested in staying connected if an edge is removed; in the air or train routes, we are interested in staying connected if a vertex is removed. We begin by examining the former in detail.

Figure 18.16 An edge-separable graph

This graph is not edge-connected. The edges 0-5, 6-7, and 11-12 (shaded) are separation edges (bridges). The graph has 4 edge-connected components: one comprising vertices 0, 1, 2, and 6; another comprising vertices 3, 4, 5, 9, and 11; another comprising vertices 7, 8, and 10; and the single vertex 12.

Definition 18.1 A bridge in a graph is an edge that, if removed, would separate a connected graph into two disjoint subgraphs. A graph that has no bridges is said to be edge-connected.

When we speak of removing an edge, we mean to delete that edge from the set of edges that define the graph, even when that action might leave one or both of the edge’s vertices isolated. An edge-connected graph remains connected when we remove any single edge. In some contexts, it is more natural to emphasize our ability to disconnect the graph rather than the graph’s ability to stay connected, so we freely use alternate terminology that provides this emphasis: We refer to a graph that is not edge-connected as an edge-separable graph, and we call bridges separation edges. If we remove all the bridges in an edge-separable graph, we divide it into edge-connected components or bridge-connected components: maximal subgraphs with no bridges. Figure 18.16 is a small example that illustrates these concepts.

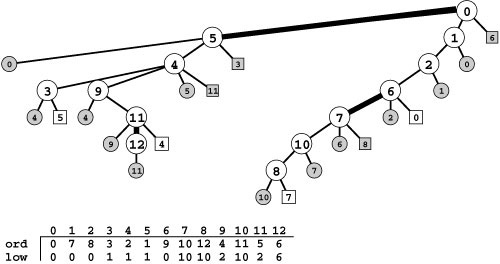

Finding the bridges in a graph seems, at first blush, to be a nontrivial graph-processing problem, but it actually is an application of DFS where we can exploit basic properties of the DFS tree that we have already noted. Specifically, back edges cannot be bridges because we know that the two nodes they connect are also connected by a path in the DFS tree. Moreover, we can add a simple test to our recursive DFS function to test whether or not tree edges are bridges. The basic idea, stated formally next, is illustrated in Figure 18.17.

Property 18.5 In any DFS tree, a tree edge v-w is a bridge if and only if there are no back edges that connect a descendant of w to an ancestor of w.

Proof: If there is such an edge, v-w cannot be a bridge. Conversely, if v-w is not a bridge, then there has to be some path from w to v in the graph other than w-v itself. Every such path has to have some such edge. •

Asserting this property is equivalent to saying that the only link in the subtree rooted at w that points to a node not in the subtree is the parent link from w back to v. This condition holds if and only if every path connecting any of the nodes in w’s subtree to any node that

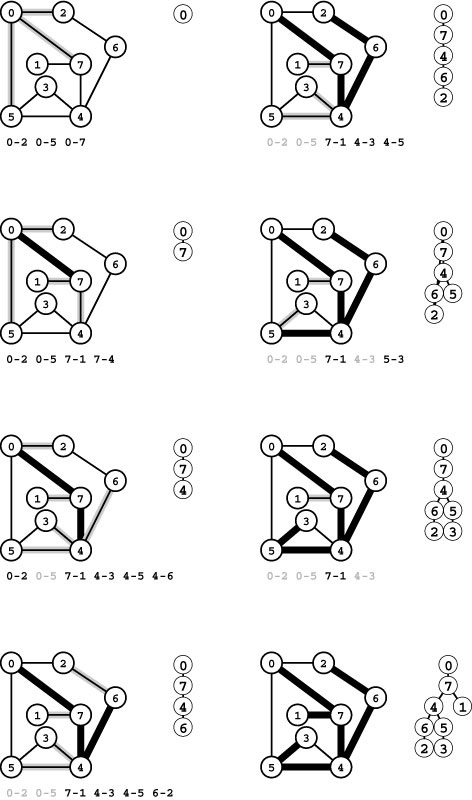

Figure 18.17 DFS tree for finding bridges

Nodes 5, 7, and 12 in this DFS tree for the graph in Figure 18.16 all have the property that no back edge connects a descendant with an ancestor, and no other nodes have that property. Therefore, as indicated, breaking the edge between one of these nodes and its parent would disconnect the subtree rooted at that node from the rest of the graph. That is, the edges 0-5, 11-12, and 6-7 are bridges. We use the vertex-indexed array low to keep track of the lowest preorder number (ord value) referenced by any back edge in the subtree rooted at the vertex. For example, the value of low[9] is 2 because one of the back edges in the subtree rooted at 9 points to 4 (the vertex with preorder number 2), and no other back edge points higher in the tree. Nodes 5, 7, and 12 are the ones for which the low value is equal to the ord value.

is not in w’s subtree includes v-w. In other words, removing v-w would disconnect from the rest of the graph the subgraph corresponding to w’s subtree.

Program 18.7 shows how we can augment DFS to identify bridges in a graph, using Property 18.5. For every vertex v, we use the recursive function to compute the lowest preorder number that can be reached by a sequence of zero or more tree edges followed by a single back edge from any node in the subtree rooted at v. If the computed number is equal to v’s preorder number, then there is no edge connecting a descendant with an ancestor, and we have identified a bridge. The computation for each vertex is straightforward: We proceed through the adjacency list, keeping track of the minimum of the numbers that we can reach by following each edge. For tree edges, we do the computation recursively; for back edges, we use the preorder number of the adjacent vertex. If the call to the recursive function for an edge w-t does not uncover a path to a node with a preorder number less than t’s preorder number, then w-t is a bridge.
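The computation just described can be sketched in Python. This is a minimal illustration under stated assumptions, not Program 18.7 itself: the function name find_bridges, the edge-list input, and the restriction to graphs without parallel edges are our own choices, while the arrays ord and low play the roles described in the text. The sample edge list is reconstructed from the captions of Figures 18.16 and 18.20.

```python
import sys

def find_bridges(num_vertices, edges):
    """Return the bridges of an undirected graph as sorted (v, w) pairs, v < w.

    Assumes no parallel edges (a doubled edge would be misread as a bridge).
    """
    sys.setrecursionlimit(max(sys.getrecursionlimit(), num_vertices + 100))
    adj = [[] for _ in range(num_vertices)]
    for v, w in edges:
        adj[v].append(w)
        adj[w].append(v)

    ord_ = [-1] * num_vertices  # preorder numbers; -1 marks an unvisited vertex
    low = [-1] * num_vertices   # lowest preorder number reachable from the subtree
    bridges = []
    counter = 0

    def search(parent, w):
        nonlocal counter
        ord_[w] = low[w] = counter
        counter += 1
        for t in adj[w]:
            if ord_[t] == -1:
                # Tree edge w-t: compute low[t] recursively.
                search(w, t)
                low[w] = min(low[w], low[t])
                if low[t] == ord_[t]:
                    # Nothing in t's subtree reaches above t, so w-t is a bridge.
                    bridges.append((min(w, t), max(w, t)))
            elif t != parent:
                # Back edge (or an edge to a descendant, which cannot lower low[w]).
                low[w] = min(low[w], ord_[t])

    for v in range(num_vertices):
        if ord_[v] == -1:
            search(v, v)
    return sorted(bridges)

# Edge list assumed from the captions of Figures 18.16 and 18.20.
SAMPLE_EDGES = [(0, 1), (1, 2), (2, 6), (6, 0), (0, 5), (3, 5), (4, 5), (3, 4),
                (4, 9), (9, 11), (4, 11), (11, 12), (6, 7), (7, 8), (8, 10), (7, 10)]

print(find_bridges(13, SAMPLE_EDGES))  # [(0, 5), (6, 7), (11, 12)]
```

The test t != parent skips the tree edge that led to w; without it, every tree edge would look like a back edge to the parent and no bridge would ever be reported.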

Property 18.6 We can find a graph’s bridges in linear time.

Proof: Program 18.7 is a minor modification to DFS that involves adding a few constant-time tests, so it follows directly from Properties 18.3 and 18.4 that finding the bridges in a graph requires time proportional to V² for the adjacency-matrix representation and to V + E for the adjacency-lists representation. •

In Program 18.7, we use DFS to discover properties of the graph. The graph representation certainly affects the order of the search, but it does not affect the results because bridges are a characteristic of the graph rather than of the way that we choose to represent or search the graph. As usual, any DFS tree is simply another representation of the graph, so all DFS trees have the same connectivity properties. The correctness of the algorithm depends on this fundamental fact. For example, Figure 18.18 illustrates a different search of the graph, starting from a different vertex, that (of course) finds the same bridges. Despite Property 18.6, when we examine different DFS trees for the same graph, we see that some search costs may depend not just on properties of the graph but also on properties of the DFS tree. For example, the amount of space needed for the stack to support the recursive calls is larger for the example in Figure 18.18 than for the example in Figure 18.17.

Figure 18.18 Another DFS tree for finding bridges

This diagram shows a different DFS tree than the one in Figure 18.17 for the graph in Figure 18.16, where we start the search at a different node. Although we visit the nodes and edges in a completely different order, we still find the same bridges (of course). In this tree, nodes 0, 7, and 11 are the ones for which the low value is equal to the ord value, so the edges connecting each of them to their parents (5-0, 6-7, and 12-11, respectively) are bridges.

As we did for regular connectivity in Program 18.4, we may wish to use Program 18.7 to build a class for testing whether a graph is edge-connected or to count the number of edge-connected components. If desired, we can proceed as for Program 18.4 to give clients the ability to create (in linear time) objects that can respond in constant time to queries that ask whether two vertices are in the same edge-connected component (see Exercise 18.36).
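One way such a class might look is sketched below in Python. It is only an illustration of the idea, not a solution taken from the text: the class name EdgeCC and its methods count and same_component are hypothetical, parallel edges are assumed absent, and the design (find the bridges, then label vertices by a second search that never crosses a bridge) is one of several reasonable choices.

```python
import sys

class EdgeCC:
    """Hypothetical helper: labels each vertex with its edge-connected component."""

    def __init__(self, num_vertices, edges):
        sys.setrecursionlimit(max(sys.getrecursionlimit(), num_vertices + 100))
        self._adj = [[] for _ in range(num_vertices)]
        for v, w in edges:
            self._adj[v].append(w)
            self._adj[w].append(v)
        # Pass 1: DFS with ord/low arrays to collect the bridges.
        self._ord = [-1] * num_vertices
        self._low = [-1] * num_vertices
        self._cnt = 0
        self._bridges = set()
        for v in range(num_vertices):
            if self._ord[v] == -1:
                self._find_bridges(v, v)
        # Pass 2: label vertices, never crossing a bridge.
        self._id = [-1] * num_vertices
        self._count = 0
        for v in range(num_vertices):
            if self._id[v] == -1:
                self._label(v)
                self._count += 1

    def _find_bridges(self, parent, w):
        self._ord[w] = self._low[w] = self._cnt
        self._cnt += 1
        for t in self._adj[w]:
            if self._ord[t] == -1:
                self._find_bridges(w, t)
                self._low[w] = min(self._low[w], self._low[t])
                if self._low[t] == self._ord[t]:
                    self._bridges.add(frozenset((w, t)))
            elif t != parent:
                self._low[w] = min(self._low[w], self._ord[t])

    def _label(self, start):
        # Iterative search that assigns the current component id.
        self._id[start] = self._count
        stack = [start]
        while stack:
            v = stack.pop()
            for t in self._adj[v]:
                if self._id[t] == -1 and frozenset((v, t)) not in self._bridges:
                    self._id[t] = self._count
                    stack.append(t)

    def count(self):
        """Number of edge-connected components."""
        return self._count

    def same_component(self, s, t):
        """Constant-time query after linear-time construction."""
        return self._id[s] == self._id[t]

# Edge list assumed from the captions of Figures 18.16 and 18.20.
SAMPLE_EDGES = [(0, 1), (1, 2), (2, 6), (6, 0), (0, 5), (3, 5), (4, 5), (3, 4),
                (4, 9), (9, 11), (4, 11), (11, 12), (6, 7), (7, 8), (8, 10), (7, 10)]
ecc = EdgeCC(13, SAMPLE_EDGES)
print(ecc.count(), ecc.same_component(3, 5), ecc.same_component(0, 5))  # 4 True False
```

Both passes touch each vertex and each edge a constant number of times, so construction stays linear for the adjacency-lists representation.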

We conclude this section by considering other generalizations of connectivity, including the problem of determining which vertices are critical to keeping a graph connected. By including this material here, we keep in one place the basic background material for the more complex algorithms that we consider in Chapter 22. If you are new to connectivity problems, you may wish to skip to Section 18.7 and return here when you study Chapter 22.

When we speak of removing a vertex, we also mean that we remove all its incident edges. As illustrated in Figure 18.19, removing either of the vertices on a bridge would disconnect a graph (unless the bridge were the only edge incident on one or both of the vertices), but there are also other vertices, not associated with bridges, that have the same property.

Definition 18.2 An articulation point in a graph is a vertex that, if removed, would separate a connected graph into at least two disjoint subgraphs.

We also refer to articulation points as separation vertices or cut vertices. We might use the term “vertex connected” to describe a graph that has no separation vertices, but we use different terminology based on a related characterization that turns out to be equivalent.

Definition 18.3 A graph is said to be biconnected if every pair of vertices is connected by two disjoint paths.

The requirement that the paths be disjoint is what distinguishes biconnectivity from edge connectivity. An alternate definition of edge connectivity is that every pair of vertices is connected by two edge-disjoint paths—these paths can have a vertex (but no edge) in common. Biconnectivity is a stronger condition: An edge-connected graph remains connected if we remove any edge, but a biconnected graph remains connected if we remove any vertex (and all that vertex’s incident edges). Every biconnected graph is edge-connected, but an edge-connected graph need not be biconnected. We also use the term separable to refer to graphs that are not biconnected, because they can be separated into two pieces by removal of just one vertex. The separation vertices are the key to biconnectivity.
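The distinction can be checked directly on a small example. The following Python sketch is a brute-force illustration, not an efficient algorithm: it removes each edge, and then each vertex, in turn and tests whether the remainder is still connected. The function names and the sample graph (two triangles that share vertex 2) are our own assumptions.

```python
def is_connected(vertices, edges):
    """True if the subgraph induced on `vertices` is connected."""
    vertices = set(vertices)
    if not vertices:
        return True
    adj = {v: [] for v in vertices}
    for v, w in edges:
        if v in vertices and w in vertices:  # ignore edges at removed vertices
            adj[v].append(w)
            adj[w].append(v)
    start = next(iter(vertices))
    seen = {start}
    stack = [start]
    while stack:
        v = stack.pop()
        for t in adj[v]:
            if t not in seen:
                seen.add(t)
                stack.append(t)
    return seen == vertices

def survives_any_edge_removal(vertices, edges):
    """True if the graph stays connected after deleting any single edge."""
    return all(is_connected(vertices, edges[:i] + edges[i + 1:])
               for i in range(len(edges)))

def survives_any_vertex_removal(vertices, edges):
    """True if the graph stays connected after deleting any single vertex."""
    return all(is_connected([u for u in vertices if u != v], edges)
               for v in vertices)

# Two triangles sharing vertex 2: no bridges, but vertex 2 is critical.
bowtie_vertices = [0, 1, 2, 3, 4]
bowtie_edges = [(0, 1), (1, 2), (2, 0), (2, 3), (3, 4), (4, 2)]
print(survives_any_edge_removal(bowtie_vertices, bowtie_edges))    # True
print(survives_any_vertex_removal(bowtie_vertices, bowtie_edges))  # False
```

The example graph is edge-connected but not biconnected: any two vertices in different triangles are joined by two edge-disjoint paths, but every such path passes through vertex 2.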

Property 18.7 A graph is biconnected if and only if it has no separation vertices (articulation points).

Proof: Assume that a graph has a separation vertex. Let s and t be vertices that would be in two different pieces if the separation vertex were removed. All paths between s and t must contain the separation vertex; therefore, the graph is not biconnected. The proof in the other direction is more difficult and is a worthwhile exercise for the mathematically inclined reader (see Exercise 18.40). •

Figure 18.19 Graph separability terminology

This graph has two edge-connected components and one bridge. The edge-connected component above the bridge is also biconnected; the one below the bridge consists of two biconnected components that are joined at an articulation point.

Figure 18.20 Articulation points (separation vertices)

This graph is not biconnected. The vertices 0, 4, 5, 6, 7, and 11 (shaded) are articulation points. The graph has five biconnected components: one comprising edges 4-9, 9-11, and 4-11; another comprising edges 7-8, 8-10, and 7-10; another comprising edges 0-1, 1-2, 2-6, and 6-0; another comprising edges 3-5, 4-5, and 3-4; and the single vertex 12. Adding an edge connecting 12 to 7, 8, or 10 would biconnect the graph.

We have seen that we can partition the edges of a graph that is not connected into a set of connected subgraphs, and that we can partition the edges of a graph that is not edge-connected into a set of bridges and edge-connected subgraphs (which are connected by bridges). Similarly, we can divide any graph that is not biconnected into a set of bridges and biconnected components, which are each biconnected subgraphs. The biconnected components and bridges are not a proper partition of the graph because articulation points may appear on multiple biconnected components (see, for example, Figure 18.20). The biconnected components are connected at articulation points, perhaps by bridges.

A connected component of a graph has the property that there exists a path between any two vertices in the component. Analogously, a biconnected component has the property that there exist two disjoint paths between any pair of its vertices.

We can use the same DFS-based approach that we used in Program 18.7 to determine whether or not a graph is biconnected and to identify the articulation points. We omit the code because it is very similar to Program 18.7, with an extra test to check whether the root of the DFS tree is an articulation point (see Exercise 18.43). Developing code to print out the biconnected components is also a worthwhile exercise that is only slightly more difficult than the corresponding code for edge connectivity (see Exercise 18.44).
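For readers who want to check their work on Exercise 18.43, one possible shape for such a function is sketched below in Python. It is an assumption-laden illustration rather than the text's solution: the name articulation_points and the edge-list input are hypothetical, and parallel edges are assumed absent. A nonroot vertex w is reported when some child t has no back edge from its subtree to a proper ancestor of w; the root is reported when it has more than one child in the DFS tree.

```python
import sys

def articulation_points(num_vertices, edges):
    """Return the sorted list of articulation points of an undirected graph."""
    sys.setrecursionlimit(max(sys.getrecursionlimit(), num_vertices + 100))
    adj = [[] for _ in range(num_vertices)]
    for v, w in edges:
        adj[v].append(w)
        adj[w].append(v)

    ord_ = [-1] * num_vertices  # preorder numbers; -1 marks an unvisited vertex
    low = [-1] * num_vertices
    cut = set()
    counter = 0

    def search(parent, w, is_root):
        nonlocal counter
        ord_[w] = low[w] = counter
        counter += 1
        children = 0
        for t in adj[w]:
            if ord_[t] == -1:
                children += 1
                search(w, t, False)
                low[w] = min(low[w], low[t])
                # t's subtree cannot reach above w, so removing w cuts it off.
                if not is_root and low[t] >= ord_[w]:
                    cut.add(w)
            elif t != parent:
                low[w] = min(low[w], ord_[t])
        # Extra test for the root: it separates its subtrees if it has several.
        if is_root and children > 1:
            cut.add(w)

    for v in range(num_vertices):
        if ord_[v] == -1:
            search(v, v, True)
    return sorted(cut)

# Edge list assumed from the captions of Figures 18.16 and 18.20.
SAMPLE_EDGES = [(0, 1), (1, 2), (2, 6), (6, 0), (0, 5), (3, 5), (4, 5), (3, 4),
                (4, 9), (9, 11), (4, 11), (11, 12), (6, 7), (7, 8), (8, 10), (7, 10)]
print(articulation_points(13, SAMPLE_EDGES))  # [0, 4, 5, 6, 7, 11]
```

The comparison is low[t] >= ord[w] rather than the equality test used for bridges, because a back edge that reaches w itself still leaves t's subtree dependent on w.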

Property 18.8 We can find a graph’s articulation points and biconnected components in linear time.

Proof: As for Property 18.6, this fact follows from the observation that the solutions to Exercises 18.43 and 18.44 involve minor modifications to DFS that amount to adding a few constant-time tests per edge. •

Biconnectivity generalizes simple connectivity. Further generalizations have been the subjects of extensive studies in classical graph theory and in the design of graph algorithms. These generalizations indicate the scope of graph-processing problems that we might face, many of which are easily posed but less easily solved.

Definition 18.4 A graph is k-connected if there are at least k vertex-disjoint paths connecting every pair of vertices in the graph. The vertex connectivity of a graph is the minimum number of vertices that need to be removed to separate it into two pieces.

In this terminology, “1-connected” is the same as “connected” and “2-connected” is the same as “biconnected.” A graph with an articulation point has vertex connectivity 1 (or 0), so Property 18.7 says that a graph is 2-connected if and only if its vertex connectivity is not less than 2. It is a special case of a classical result from graph theory, known as Whitney’s theorem, which says that a graph is k-connected if and only if its vertex connectivity is not less than k. Whitney’s theorem follows directly from Menger’s theorem (see Section 22.7), which says that the minimum number of vertices whose removal disconnects two vertices in a graph is equal to the maximum number of vertex-disjoint paths between the two vertices (to prove Whitney’s theorem, apply Menger’s theorem to every pair of vertices).

Definition 18.5 A graph is k–edge-connected if there are at least k edge-disjoint paths connecting every pair of vertices in the graph. The edge connectivity of a graph is the minimum number of edges that need to be removed to separate it into two pieces.

In this terminology, “2–edge-connected” is the same as “edge-connected” (that is, an edge-connected graph has edge connectivity greater than 1, and a graph with at least one bridge has edge connectivity 1). Another version of Menger’s theorem says that the minimum number of edges whose removal disconnects two vertices in a graph is equal to the maximum number of edge-disjoint paths between the two vertices, which implies that a graph is k–edge-connected if and only if its edge connectivity is not less than k.

With these definitions, we are led to generalize the connectivity problems that we considered at the beginning of this section.

st-connectivity What is the minimum number of edges whose removal will separate two given vertices s and t in a given graph? What is the minimum number of vertices whose removal will separate two given vertices s and t in a given graph?

General connectivity Is a given graph k-connected? Is a given graph k–edge-connected? What are the edge connectivity and the vertex connectivity of a given graph?

Although these problems are much more difficult to solve than are the simple connectivity problems that we have considered in this section, they are members of a large class of graph-processing problems that we can solve using the general algorithmic tools that we consider in Chapter 22 (with DFS playing an important role); we consider specific solutions in Section 22.7.
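To make Definitions 18.4 and 18.5 concrete before we reach those tools, the following Python sketch computes edge connectivity and vertex connectivity by exhaustive search. It is emphatically not one of the Chapter 22 algorithms: it tries every subset of edges or vertices, so its running time is exponential and it is useful only for checking tiny examples by hand. All function names are hypothetical.

```python
from itertools import combinations

def is_connected(vertices, edges):
    """True if the subgraph induced on `vertices` is connected."""
    vertices = set(vertices)
    if not vertices:
        return True
    adj = {v: [] for v in vertices}
    for v, w in edges:
        if v in vertices and w in vertices:
            adj[v].append(w)
            adj[w].append(v)
    start = next(iter(vertices))
    seen = {start}
    stack = [start]
    while stack:
        v = stack.pop()
        for t in adj[v]:
            if t not in seen:
                seen.add(t)
                stack.append(t)
    return seen == vertices

def edge_connectivity(vertices, edges):
    """Fewest edges whose removal disconnects the graph (exhaustive search)."""
    for k in range(len(edges) + 1):
        for removed in combinations(range(len(edges)), k):
            kept = [e for i, e in enumerate(edges) if i not in removed]
            if not is_connected(vertices, kept):
                return k
    return None  # a graph with fewer than two vertices cannot be separated

def vertex_connectivity(vertices, edges):
    """Fewest vertices whose removal disconnects the graph (exhaustive search)."""
    for k in range(len(vertices) - 1):  # always leave at least two vertices
        for removed in combinations(vertices, k):
            rest = [v for v in vertices if v not in removed]
            if not is_connected(rest, edges):
                return k
    return None  # no vertex set separates the graph (it is complete)

# Two triangles sharing vertex 2, and a cycle on four vertices.
bowtie = [(0, 1), (1, 2), (2, 0), (2, 3), (3, 4), (4, 2)]
cycle4 = [(0, 1), (1, 2), (2, 3), (3, 0)]
print(edge_connectivity(range(5), bowtie), vertex_connectivity(range(5), bowtie))  # 2 1
print(edge_connectivity(range(4), cycle4), vertex_connectivity(range(4), cycle4))  # 2 2
```

The first graph is 2–edge-connected but only 1-connected, which matches the observation that edge connectivity does not imply biconnectivity.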

Exercises

• 18.33 If a graph is a forest, all its edges are separation edges; but which vertices are separation vertices?

• 18.34 Consider the graph

3-7 1-4 7-8 0-5 5-2 3-8 2-9 0-6 4-9 2-6 6-4.

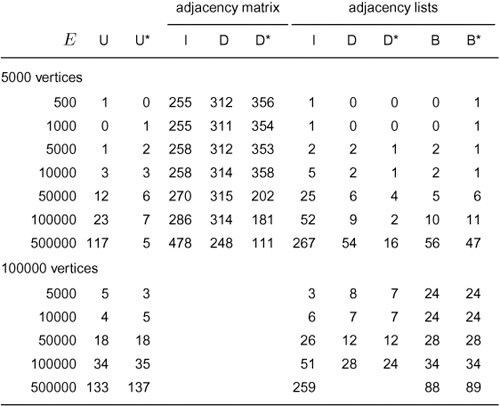

Draw the standard adjacency-lists DFS tree. Use it to find the bridges and the edge-connected components.