CHAPTER NINETEEN

Digraphs and DAGs

WHEN WE ATTACH significance to the order in which the two vertices are specified in each edge of a graph, we have an entirely different combinatorial object known as a digraph, or directed graph. Figure 19.1 shows a sample digraph. In a digraph, the notation s-t describes an edge that goes from s to t but provides no information about whether or not there is an edge from t to s. There are four different ways in which two vertices might be related in a digraph: no edge; an edge s-t from s to t; an edge t-s from t to s; or two edges s-t and t-s, which indicate connections in both directions. The one-way restriction is natural in many applications, easy to enforce in our implementations, and seems innocuous; but it implies added combinatorial structure that has profound implications for our algorithms and makes working with digraphs quite different from working with undirected graphs. Processing digraphs is akin to traveling around in a city where all the streets are one-way, with the directions not necessarily assigned in any uniform pattern. We can imagine that getting from one point to another in such a situation could be a challenge indeed.

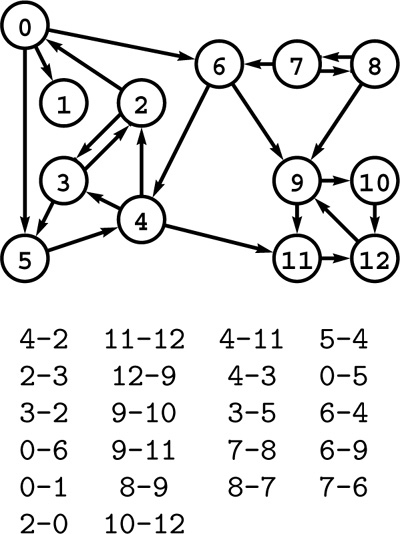

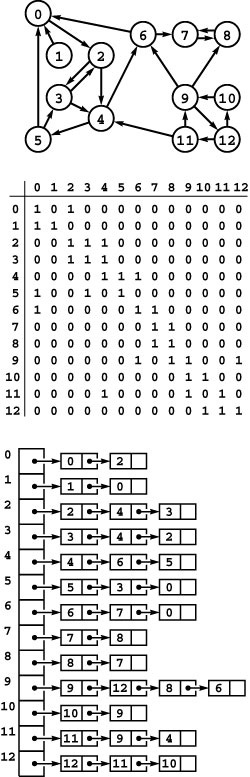

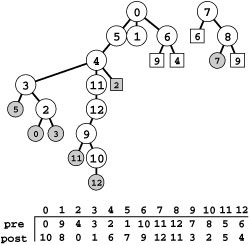

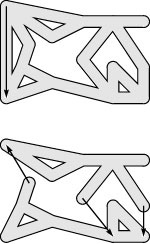

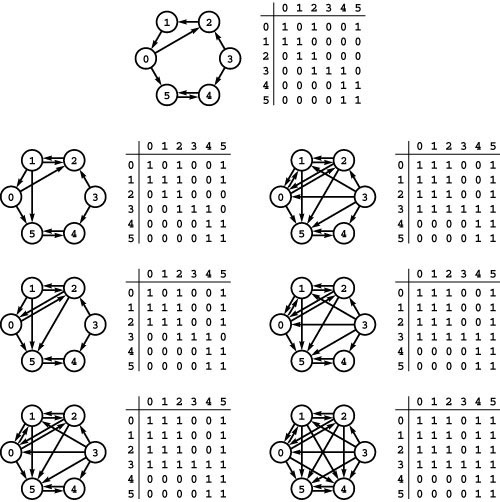

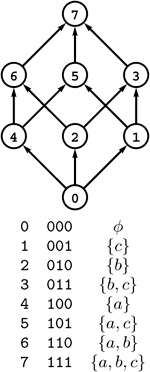

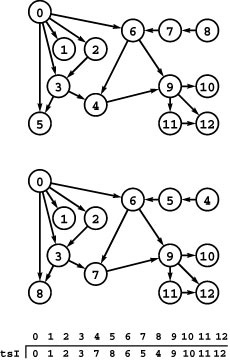

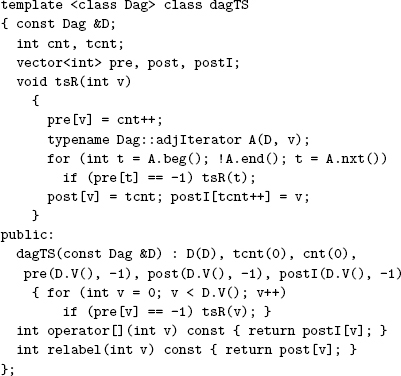

Figure 19.1 A directed graph (digraph)

A digraph is defined by a list of nodes and edges (bottom), with the order that we list the nodes when specifying an edge implying that the edge is directed from the first node to the second. When drawing a digraph, we use arrows to depict directed edges (top).

We interpret edge directions in digraphs in many ways. For example, in a telephone-call graph, we might consider an edge to be directed from the caller to the person receiving the call. In a transaction graph, we might have a similar relationship where we interpret an edge as representing cash, goods, or information flowing from one entity to another. We find a modern situation that fits this classic model on the Internet, with vertices representing Web pages and edges the links between the pages. In Section 19.4, we examine other examples, many of which model situations that are more abstract.

One common situation is for the edge direction to reflect a precedence relationship. For example, a digraph might model a manufacturing line: Vertices correspond to jobs to be done, and an edge exists from vertex s to vertex t if the job corresponding to vertex s must be done before the job corresponding to vertex t. Another way to model the same situation is to use a PERT chart: edges represent jobs and vertices implicitly specify the precedence relationships (at each vertex, all incoming jobs must complete before any outgoing jobs can begin). How do we decide when to perform each of the jobs so that none of the precedence relationships are violated? This is known as a scheduling problem. The problem makes no sense if the digraph has a cycle, so in such situations we are working with directed acyclic graphs (DAGs). We shall consider basic properties of DAGs and algorithms for this simple scheduling problem, which is known as topological sorting, in Sections 19.5 through 19.7. In practice, scheduling problems generally involve weights on the vertices or edges that model the time or cost of each job. We consider such problems in Chapters 21 and 22.

The number of possible digraphs is truly huge. Each of the $V^2$ possible directed edges (including self-loops) could be present or not, so the total number of different digraphs is $2^{V^2}$. As illustrated in Figure 19.2, this number grows very quickly, even by comparison with the number of different undirected graphs and even when V is small. As with undirected graphs, there is a much smaller number of classes of digraphs that are isomorphic to each other (the vertices of one can be relabeled to make it identical to the other), but we cannot take advantage of this reduction because we do not know an efficient algorithm for digraph isomorphism.

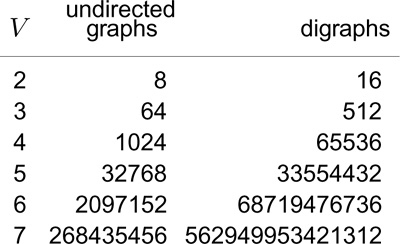

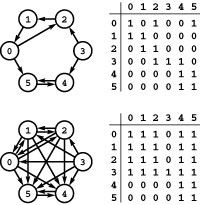

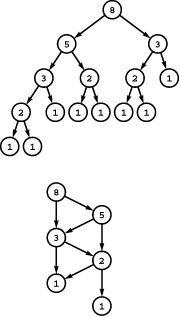

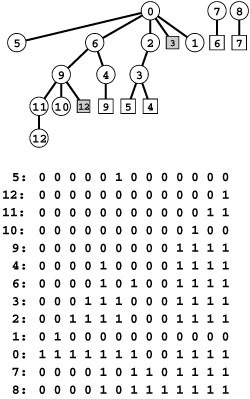

Figure 19.2 Graph enumeration

While the number of different undirected graphs with V vertices is huge, even when V is small, the number of different digraphs with V vertices is much larger. For undirected graphs, the number is given by the formula $2^{V(V+1)/2}$; for digraphs, the formula is $2^{V^2}$.
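Both formulas are easy to check numerically for small V. The following is a minimal sketch, with function names of our own choosing (they do not appear elsewhere in the text); it uses 64-bit arithmetic, so the results are exact only while the exponent stays below 64, which for digraphs means V no larger than 7.

```cpp
#include <cstdint>

// Number of different undirected graphs on V vertices, self-loops allowed:
// each of the V(V+1)/2 unordered pairs (including s-s) is present or absent.
// Exact only while V(V+1)/2 < 64.
std::uint64_t countGraphs(unsigned V)
  { return std::uint64_t(1) << (V*(V+1)/2); }

// Number of different digraphs on V vertices, self-loops allowed:
// each of the V*V ordered pairs is present or absent.
// Exact only while V*V < 64, that is, for V <= 7.
std::uint64_t countDigraphs(unsigned V)
  { return std::uint64_t(1) << (V*V); }
```

For V = 3, these functions give 64 undirected graphs and 512 digraphs.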

Certainly, any program will have to process only a tiny fraction of the possible digraphs; indeed, the numbers are so large that we can be certain that virtually all digraphs will not be among those processed by any given program. Generally, it is difficult to characterize the digraphs that we might encounter in practice, so we design our algorithms such that they can handle any possible digraph as input. On the one hand, this situation is not new to us (for example, virtually none of the 1000! permutations of 1000 elements have ever been processed by any sorting program). On the other hand, it is perhaps unsettling to know that, for example, even if all the electrons in the universe could run supercomputers capable of processing $10^{10}$ graphs per second for the estimated lifetime of the universe, those supercomputers would see far fewer than $10^{-100}$ percent of the 10-vertex digraphs (see Exercise 19.9).

This brief digression on graph enumeration perhaps underscores several points that we cover whenever we consider the analysis of algorithms and indicates their particular relevance to the study of digraphs. Is it important to design our algorithms to perform well in the worst case, when we are so unlikely to see any particular worst-case digraph? Is it useful to choose algorithms on the basis of average-case analysis, or is that a mathematical fiction? If our intent is to have implementations that perform efficiently on digraphs that we see in practice, we are immediately faced with the problem of characterizing those digraphs. Mathematical models that can convincingly describe the digraphs that we might expect in applications are even more difficult to develop than are models for undirected graphs.

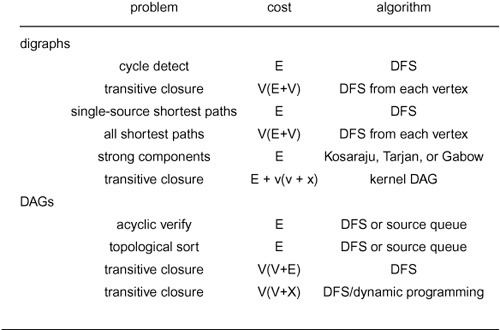

In this chapter, we revisit, in the context of digraphs, a subset of the basic graph-processing problems that we considered in Chapter 17, and we examine several problems that are specific to digraphs. In particular, we look at DFS and several of its applications, including cycle detection (to determine whether a digraph is a DAG); topological sort (to solve, for example, the scheduling problem for DAGs that was just described); and computation of the transitive closure and the strong components (which have to do with the basic problem of determining whether or not there is a directed path between two given vertices). As in other graph-processing domains, these algorithms range from the trivial to the ingenious; they are both informed by and give us insight into the complex combinatorial structure of digraphs.

Exercises

• 19.1 Find a large digraph somewhere online—perhaps a transaction graph in some online system, or a digraph defined by links on Web pages.

• 19.2 Find a large DAG somewhere online—perhaps one defined by function-definition dependencies in a large software system, or by directory links in a large file system.

19.3 Make a table like Figure 19.2, but exclude from the counts graphs and digraphs with self-loops.

19.4 How many digraphs are there that contain V vertices and E edges?

• 19.5 How many digraphs correspond to each undirected graph that contains V vertices and E edges?

• 19.6 How many digits do we need to express the number of digraphs that have V vertices as a base-10 number?

• 19.7 Draw the nonisomorphic digraphs that contain three vertices.

••• 19.8 How many different digraphs are there with V vertices and E edges if we consider two digraphs to be different only if they are not isomorphic?

• 19.9 Compute an upper bound on the percentage of 10-vertex digraphs that could ever be examined by any computer, under the assumptions described in the text and the additional ones that the universe has less than $10^{80}$ electrons and that the age of the universe will be less than $10^{20}$ years.

19.1 Glossary and Rules of the Game

Our definitions for digraphs are nearly identical to those in Chapter 17 for undirected graphs (as are some of the algorithms and programs that we use), but they are worth restating. The slight differences in the wording to account for edge directions imply structural properties that will be the focus of this chapter.

Definition 19.1 A digraph (or directed graph) is a set of vertices plus a set of directed edges that connect ordered pairs of vertices (with no duplicate edges). We say that an edge goes from its first vertex to its second vertex.

As we did with undirected graphs, we disallow duplicate edges in this definition but reserve the option of allowing them when convenient for various applications and implementations. We explicitly allow self-loops in digraphs (and usually adopt the convention that every vertex has one) because they play a critical role in the basic algorithms.

Definition 19.2 A directed path in a digraph is a list of vertices in which there is a (directed) digraph edge connecting each vertex in the list to its successor in the list. We say that a vertex t is reachable from a vertex s if there is a directed path from s to t.

We adopt the convention that each vertex is reachable from itself and normally implement that assumption by ensuring that self-loops are present in our digraph representations.
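Definition 19.2 translates directly into a test on consecutive pairs of vertices. The following is a minimal sketch, assuming a plain adjacency matrix of `bool` values rather than one of our graph classes; the names `AdjMatrix` and `isDirectedPath` are hypothetical.

```cpp
#include <cstddef>
#include <vector>

// Assumed representation: adj[s][t] is true if and only if edge s-t exists.
using AdjMatrix = std::vector<std::vector<bool>>;

// Return true if every vertex in the list has a directed edge to its
// successor in the list. A list with fewer than two vertices passes
// vacuously, which matches the convention that each vertex is
// reachable from itself.
bool isDirectedPath(const AdjMatrix& adj, const std::vector<int>& path)
{
  for (std::size_t i = 0; i + 1 < path.size(); i++)
    if (!adj[path[i]][path[i+1]]) return false;
  return true;
}
```

In a digraph with only the edges 0-1 and 1-2, the list 0-1-2 is a directed path, but the same vertices in the opposite order, 2-1-0, are not.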

Understanding many of the algorithms in this chapter requires understanding the connectivity properties of digraphs and the effect of these properties on the basic process of moving from one vertex to another along digraph edges. Developing such an understanding is more complicated for digraphs than for undirected graphs. For example, we might be able to tell at a glance whether a small undirected graph is connected or contains a cycle; these properties are not as easy to spot in digraphs, as indicated in the typical example illustrated in Figure 19.3.

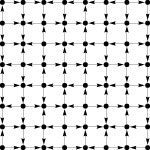

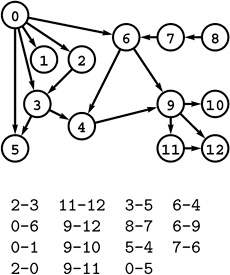

Figure 19.3 A grid digraph

This small digraph is similar to the large grid network that we first considered in Chapter 1, except that it has a directed edge on every grid line, with the direction randomly chosen. Even though the graph has relatively few nodes, its connectivity properties are not readily apparent. Is there a directed path from the upper left corner to the lower right corner?

While examples like this highlight differences, it is important to note that what a human considers difficult may or may not be relevant to what a program considers difficult—for instance, writing a DFS class to find cycles in digraphs is no more difficult than for undirected graphs. More important, digraphs and graphs have essential structural differences. For example, the fact that t is reachable from s in a digraph indicates nothing about whether s is reachable from t. This distinction is obvious, but critical, as we shall see.

As mentioned in Section 17.3, the representations that we use for digraphs are essentially the same as those that we use for undirected graphs. Indeed, they are more straightforward because we represent each edge just once, as illustrated in Figure 19.4. In the adjacency-lists representation, an edge s-t is represented as a list node containing t in the linked list corresponding to s. In the adjacency-matrix representation, we need to maintain a full V -by-V matrix and to represent an edge s-t by a 1 bit in row s and column t. We do not put a 1 bit in row t and column s unless there is also an edge t-s. In general, the adjacency matrix for a digraph is not symmetric about the diagonal.

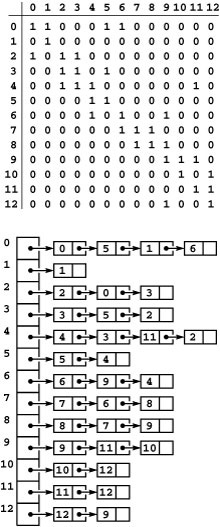

Figure 19.4 Digraph representations

The adjacency-matrix and adjacency-lists representations of a digraph have only one representation of each edge, as illustrated in the adjacency-matrix (top) and adjacency-lists (bottom) representation of the graph depicted in Figure 19.1. These representations both include self-loops at every vertex, which is typical in digraph processing.
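To make the one-representation-per-edge point concrete, the following sketch keeps both representations side by side in a single small type. `TinyDigraph` is a hypothetical illustration, not one of the Chapter 17 classes, and unlike the figure it does not add self-loops automatically.

```cpp
#include <vector>

// Hypothetical minimal digraph type that maintains an adjacency matrix
// and adjacency lists together, purely for illustration.
struct TinyDigraph
{
  std::vector<std::vector<bool>> adj;   // adj[s][t] is true iff edge s-t exists
  std::vector<std::vector<int>> lists;  // lists[s] holds every t with edge s-t

  explicit TinyDigraph(int n)
    : adj(n, std::vector<bool>(n, false)), lists(n) { }

  // Record the directed edge s-t exactly once; nothing is stored for t-s.
  void insert(int s, int t)
  {
    if (adj[s][t]) return;              // disallow duplicate edges
    adj[s][t] = true;
    lists[s].push_back(t);
  }

  bool edge(int s, int t) const { return adj[s][t]; }
};
```

After `insert(0, 1)`, the test `edge(0, 1)` succeeds but `edge(1, 0)` does not, and vertex 1 has an empty adjacency list: the matrix is not symmetric.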

There is no difference in these representations between an undirected graph and a directed graph with self-loops at every vertex and two directed edges for each edge connecting distinct vertices in the undirected graph (one in each direction). Thus, we can use the algorithms that we develop in this chapter for digraphs to process undirected graphs, provided that we interpret the results appropriately. In addition, we use the programs that we considered in Chapter 17 as the basis for our digraph programs: Our DenseGRAPH and SparseMultiGRAPH class implementations (Programs 17.7 through 17.10) build digraphs when the constructor has true as a second argument.

The indegree of a vertex in a digraph is the number of directed edges that lead to that vertex. The outdegree of a vertex in a digraph is the number of directed edges that emanate from that vertex. No vertex is reachable from a vertex of outdegree 0, which is called a sink; a vertex of indegree 0, which is called a source, is not reachable from any other vertex. A digraph where self-loops are allowed and every vertex has outdegree 1 is called a map (a function from the set of integers from 0 to V − 1 into itself). We can easily compute the indegree and outdegree of each vertex, and find sources and sinks, in linear time and space proportional to V, using vertex-indexed vectors (see Exercise 19.19).
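One pass through the edges suffices to fill the two vertex-indexed vectors. The following sketch works from a plain list of edges; the class name `DegreeCounts` and its interface are hypothetical. It makes one assumption that should be stated loudly: self-loops are skipped, so that the conventional self-loop at every vertex does not hide the sources and sinks.

```cpp
#include <utility>
#include <vector>

// Hypothetical helper class. Edges are (s, t) pairs meaning s-t.
// Assumption: self-loops are not counted in either degree.
class DegreeCounts
{
  std::vector<int> in, out;   // vertex-indexed degree counts
public:
  DegreeCounts(int V, const std::vector<std::pair<int,int>>& edges)
    : in(V, 0), out(V, 0)
  {
    for (const auto& e : edges)
      if (e.first != e.second)
        { out[e.first]++; in[e.second]++; }
  }
  int indegree(int v) const { return in[v]; }
  int outdegree(int v) const { return out[v]; }

  // A source has indegree 0; a sink has outdegree 0.
  int sources() const
  {
    int n = 0;
    for (int d : in) if (d == 0) n++;
    return n;
  }
  int sinks() const
  {
    int n = 0;
    for (int d : out) if (d == 0) n++;
    return n;
  }
};
```

This version recounts sources and sinks on each call; caching the two totals in the constructor would make those queries constant-time.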

The reverse of a digraph is the digraph that we obtain by switching the direction of all the edges. Figure 19.5 shows the reverse and its representations for the digraph of Figure 19.1. We use the reverse in digraph algorithms when we need to know from where edges come because our standard representations tell us only where the edges go. For example, indegree and outdegree change roles when we reverse a digraph.

Figure 19.5 Digraph reversal

Reversing the edges of a digraph corresponds to transposing the adjacency matrix but requires rebuilding the adjacency lists (see Figures 19.1 and 19.4).

For an adjacency-matrix representation, we could compute the reverse by making a copy of the matrix and transposing it (interchanging its rows and columns). If we know that the graph is not going to be modified, we can actually use the reverse without any extra computation by simply interchanging the vertices in our references to edges when we want to refer to the reverse. For example, an edge s-t in a digraph G is indicated by a 1 in adj[s][t]. Thus, if we were to compute the reverse R of G, it would have a 1 in adj[t][s]. We do not need to do so, however, if we base our client implementations on the edge test edge(s, t), because to switch to the reverse we just replace every such reference by edge(t, s). This opportunity may seem obvious, but it is often overlooked. For an adjacency-lists representation, the reverse is a completely different data structure, and we need to take time proportional to the number of edges to build it, as shown in Program 19.1.
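Both approaches to reversal can be sketched in a few lines. The code below is an illustration on plain `std::vector` structures, not Program 19.1; the type and function names are hypothetical.

```cpp
#include <cstddef>
#include <vector>

using AdjLists  = std::vector<std::vector<int>>;   // G[s] lists every t with edge s-t
using AdjMatrix = std::vector<std::vector<bool>>;  // adj[s][t] true iff edge s-t

// Adjacency lists: the reverse is a new structure. Each edge s-t in G
// becomes t-s in the result, in time proportional to V + E.
AdjLists reverseLists(const AdjLists& G)
{
  AdjLists R(G.size());
  for (std::size_t s = 0; s < G.size(); s++)
    for (int t : G[s])
      R[t].push_back(static_cast<int>(s));
  return R;
}

// Adjacency matrix: no copy is needed if clients go through an edge test.
inline bool edge(const AdjMatrix& adj, int s, int t)
  { return adj[s][t]; }

// The same query against the reverse digraph: interchange the arguments.
inline bool reverseEdge(const AdjMatrix& adj, int s, int t)
  { return edge(adj, t, s); }
```

The matrix version costs nothing but is valid only as long as every client reference to an edge goes through the edge test.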

Yet another option, which we shall address in Chapter 22, is to maintain two representations of each edge, in the same manner as we do for undirected graphs (see Section 17.3) but with an extra bit that indicates edge direction. For example, to use this method in an adjacency-lists representation we would represent an edge s-t by a node for t on the adjacency list for s (with the direction bit set to indicate that to move from s to t is a forward traversal of the edge) and a node for s on the adjacency list for t (with the direction bit set to indicate that to move from t to s is a backward traversal of the edge). This representation supports algorithms that need to traverse edges in digraphs in both directions. It is also generally convenient to include pointers connecting the two representations of each edge in such cases. We defer considering this representation in detail to Chapter 22, where it plays an essential role.

In digraphs, by analogy to undirected graphs, we speak of directed cycles, which are directed paths from a vertex back to itself, and simple directed paths and cycles, where the vertices and edges are distinct. Note that s-t-s is a cycle of length 2 in a digraph but that cycles in undirected graphs must have at least three distinct vertices.

In many applications of digraphs, we do not expect to see any cycles, and we work with yet another type of combinatorial object.

Definition 19.3 A directed acyclic graph (DAG) is a digraph with no directed cycles.

We expect DAGs, for example, in applications where we are using digraphs to model precedence relationships. DAGs not only arise naturally in these and other important applications, but also, as we shall see, in the study of the structure of general digraphs. A sample DAG is illustrated in Figure 19.6.

Figure 19.6 A directed acyclic graph (DAG)

This digraph has no cycles, a property that is not immediately apparent from the edge list or even from examining its drawing.

Directed cycles are therefore the key to understanding connectivity in digraphs that are not DAGs. An undirected graph is connected if there is a path from every vertex to every other vertex; for digraphs, we modify the definition as follows:

Definition 19.4 A digraph is strongly connected if every vertex is reachable from every vertex.

The graph in Figure 19.1 is not strongly connected because, for example, there are no directed paths from vertices 9 through 12 to any of the other vertices in the graph.

As indicated by strongly, this definition implies a relationship between each pair of vertices stronger than reachability. In any digraph, we say that a pair of vertices s and t are strongly connected or mutually reachable if there is a directed path from s to t and a directed path from t to s. (Our convention that each vertex is reachable from itself implies that each vertex is strongly connected to itself.) A digraph is strongly connected if and only if all pairs of vertices are strongly connected. The defining property of strongly connected digraphs is one that we take for granted in connected undirected graphs: If there is a path from s to t, then there is a path from t to s. In the case of undirected graphs, we know this fact because the same path fits the bill, traversed in the other direction; in digraphs, it must be a different path.

Another way of saying that a pair of vertices is strongly connected is that they lie on some directed cyclic path. Recall that we use the term cyclic path instead of cycle to indicate that the path does not need to be simple. For example, in Figure 19.1, 5 and 6 are strongly connected because 6 is reachable from 5 via the directed path 5-4-2-0-6 and 5 is reachable from 6 via the directed path 6-4-3-5; and these paths imply that 5 and 6 lie on the directed cyclic path 5-4-2-0-6-4-3-5, but they do not lie on any (simple) directed cycle. Note that no DAG that contains more than one vertex is strongly connected.

Like simple connectivity in undirected graphs, this relation is transitive: If s is strongly connected to t, and t is strongly connected to u, then s is strongly connected to u. Strong connectivity is an equivalence relation that divides the vertices into equivalence classes containing vertices that are strongly connected to each other. (See Section 19.4 for a detailed discussion of equivalence relations.) Again, strong connectivity provides a property for digraphs that we take for granted with respect to connectivity in undirected graphs.
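The definition of a strongly connected pair can be tested directly with two searches, one in each direction. The following brute-force sketch uses a recursive DFS on plain adjacency lists; the names are hypothetical, and this is not one of the efficient strong-component algorithms that we consider later in the chapter.

```cpp
#include <vector>

using AdjLists = std::vector<std::vector<int>>;  // G[s] lists every t with edge s-t

// Mark every vertex reachable from v, including v itself.
void mark(const AdjLists& G, int v, std::vector<bool>& seen)
{
  seen[v] = true;
  for (int w : G[v])
    if (!seen[w]) mark(G, w, seen);
}

// Is there a directed path from s to t?
bool reachable(const AdjLists& G, int s, int t)
{
  std::vector<bool> seen(G.size(), false);
  mark(G, s, seen);
  return seen[t];
}

// s and t are strongly connected if each is reachable from the other.
bool stronglyConnected(const AdjLists& G, int s, int t)
  { return reachable(G, s, t) && reachable(G, t, s); }
```

For instance, a digraph containing just the seven edges named in the two paths above (5-4, 4-2, 2-0, 0-6, 6-4, 4-3, and 3-5, which are only part of Figure 19.1) already makes 5 and 6 strongly connected. Each query costs time proportional to V + E in the worst case.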

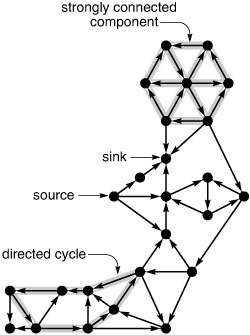

Figure 19.7 Digraph terminology

Sources (vertices with no edges coming in) and sinks (vertices with no edges going out) are easy to identify in digraph drawings like this one, but directed cycles and strongly connected components are more difficult to identify. What is the longest directed cycle in this digraph? How many strongly connected components with more than one vertex are there?

Property 19.1 A digraph that is not strongly connected comprises a set of strongly connected components (strong components, for short), which are maximal strongly connected subgraphs, and a set of directed edges that go from one component to another.

Proof: Like components in undirected graphs, strong components in digraphs are induced subgraphs of subsets of vertices: Each vertex is in exactly one strong component. To prove this fact, we first note that every vertex belongs to at least one strong component, which contains (at least) the vertex itself. Then we note that every vertex belongs to at most one strong component: If a vertex were to belong to two different components, then there would be paths through that vertex connecting vertices in those components to each other, in both directions, which contradicts the maximality of both components.

For example, a digraph that consists of a single directed cycle has just one strong component. At the other extreme, each vertex in a DAG is a strong component, so each edge in a DAG goes from one component to another. In general, not all edges in a digraph are in the strong components. This situation is in contrast to the analogous situation for connected components in undirected graphs, where every vertex and every edge belongs to some connected component, but similar to the analogous situation for edge-connected components in undirected graphs. The strong components in a digraph are connected by edges that go from a vertex in one component to a vertex in another but do not go back again.

Property 19.2 Given a digraph D, define another digraph K(D) with one vertex corresponding to each strong component of D and one edge in K(D) corresponding to each edge in D that connects vertices in different strong components (connecting the vertices in K that correspond to the strong components that it connects in D). Then, K(D) is a DAG (which we call the kernel DAG of D).

Proof: If K(D) were to have a directed cycle, then vertices in two different strong components of D would fall on a directed cycle, and that would be a contradiction.
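Once the strong components are known, the construction in Property 19.2 is a single pass through the edges. The following sketch assumes that a vertex-indexed vector `id` already gives the component label of each vertex (computing it is a separate problem); the function name `kernelEdges` is hypothetical. As a design choice of this sketch, parallel edges between the same two components are collapsed into one by storing the result in a set.

```cpp
#include <cstddef>
#include <set>
#include <utility>
#include <vector>

using AdjLists = std::vector<std::vector<int>>;  // D[s] lists every t with edge s-t

// Return the edges of K(D) as (from, to) pairs of component labels.
// Assumes id[v] is the strong-component label of vertex v.
std::set<std::pair<int,int>>
kernelEdges(const AdjLists& D, const std::vector<int>& id)
{
  std::set<std::pair<int,int>> K;
  for (std::size_t s = 0; s < D.size(); s++)
    for (int t : D[s])
      if (id[s] != id[t])                 // edge leaves its strong component
        K.insert(std::make_pair(id[s], id[t]));
  return K;
}
```

Edges with both endpoints in the same component, including self-loops, contribute nothing to the result.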

Figure 19.8 shows the strong components and the kernel DAG for a sample digraph. We look at algorithms for finding strong components and building kernel DAGs in Section 19.6.

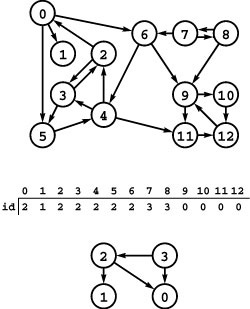

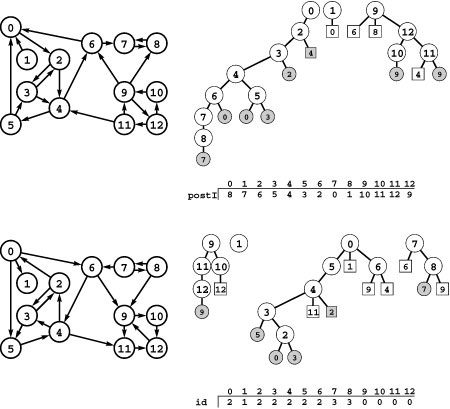

Figure 19.8 Strong components and kernel DAG

This digraph (top) consists of four strong components, as identified (with arbitrary integer labels) by the vertex-indexed array id (center). Component 0 consists of the vertices 9, 10, 11 and 12; component 1 consists of the single vertex 1; component 2 consists of the vertices 0, 2, 3, 4, 5, and 6; and component 3 consists of the vertices 7 and 8. If we draw the graph defined by the edges between different components, we get a DAG (bottom).

From these definitions, properties, and examples, it is clear that we need to be precise when referring to paths in digraphs. We need to consider at least the following three situations:

Connectivity We reserve the term connected for undirected graphs. In digraphs, we might say that two vertices are connected if they are connected in the undirected graph defined by ignoring edge directions, but we generally avoid such usage.

Reachability In digraphs, we say that vertex t is reachable from vertex s if there is a directed path from s to t. We generally avoid the term reachable when referring to undirected graphs, although we might consider it to be equivalent to connected because the idea of one vertex being reachable from another is intuitive in certain undirected graphs (for example, those that represent mazes).

Strong connectivity Two vertices in a digraph are strongly connected if they are mutually reachable; in undirected graphs, two connected vertices imply the existence of paths from each to the other. Strong connectivity in digraphs is similar in certain ways to edge connectivity in undirected graphs.

We wish to support digraph ADT operations that take two vertices s and t as arguments and allow us to test whether

• t is reachable from s

• s and t are strongly connected (mutually reachable)

What resource requirements are we willing to expend for these operations? As we saw in Section 17.5, DFS provides a simple solution for connectivity in undirected graphs that takes time proportional to V, but if we are willing to invest preprocessing time proportional to V + E and space proportional to V, we can answer connectivity queries in constant time. Later in this chapter, we examine algorithms for strong connectivity that have these same performance characteristics.

But our primary aim is to address the fact that reachability queries in digraphs are more difficult to handle than connectivity or strong connectivity queries. In this chapter, we examine classical algorithms that require preprocessing time proportional to VE and space proportional to $V^2$, develop implementations that can achieve constant-time reachability queries with linear space and preprocessing time for some digraphs, and study the difficulty of achieving this optimal performance for all digraphs.
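The tradeoff between preprocessing and query time can be illustrated by the most direct approach: run a DFS from every vertex and record the results in a V-by-V table. The following is a minimal sketch with a hypothetical class name, not one of the implementations developed later in the chapter. Its preprocessing time is proportional to V(V + E), which is proportional to VE when E is at least V, and its space is proportional to $V^2$.

```cpp
#include <vector>

using AdjLists = std::vector<std::vector<int>>;  // G[s] lists every t with edge s-t

// Hypothetical class: precompute all reachability answers, then answer
// each query with a single table lookup.
class Reachability
{
  std::vector<std::vector<bool>> tc;   // tc[s][t]: t is reachable from s

  // DFS from s; row s of the table doubles as the visited marks.
  void dfs(const AdjLists& G, int s, int v)
  {
    tc[s][v] = true;
    for (int w : G[v])
      if (!tc[s][w]) dfs(G, s, w);
  }
public:
  explicit Reachability(const AdjLists& G)
    : tc(G.size(), std::vector<bool>(G.size(), false))
  {
    for (int s = 0; s < static_cast<int>(G.size()); s++)
      dfs(G, s, s);
  }

  // Constant-time query.
  bool reachable(int s, int t) const { return tc[s][t]; }
};
```

Because each search starts by marking its own start vertex, every vertex is recorded as reachable from itself, whether or not the digraph contains self-loops.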

Exercises

• 19.10 Give the adjacency-lists structure that is built by Program 17.9 for the digraph

3-7 1-4 7-8 0-5 5-2 3-8 2-9 0-6 4-9 2-6 6-4.

• 19.11 Write a program to generate random sparse digraphs for a well-chosen set of values of V and E such that you can use it to run meaningful empirical tests on digraphs drawn from the random-edges model.

• 19.12 Write a program to generate random sparse graphs for a well-chosen set of values of V and E such that you can use it to run meaningful empirical tests on graphs drawn from the random-graph model.

• 19.13 Write a program that generates random digraphs by connecting vertices arranged in a $\sqrt{V}$-by-$\sqrt{V}$ grid to their neighbors, with edge directions randomly chosen (see Figure 19.3).

• 19.14 Augment your program from Exercise 19.13 to add R extra random edges (all possible edges equally likely). For large R, shrink the grid so that the total number of edges remains about V. Test your program as described in Exercise 19.11.

• 19.15 Modify your program from Exercise 19.14 such that an extra edge goes from a vertex s to a vertex t with probability inversely proportional to the Euclidean distance between s and t.

• 19.16 Write a program that generates V random intervals in the unit interval, all of length d, then builds a digraph with an edge from interval s to interval t if and only if at least one of the endpoints of s falls within t (see Exercise 17.75). Determine how to set d so that the expected number of edges is E. Test your program as described in Exercise 19.11 (for low densities) and as described in Exercise 19.12 (for high densities).

• 19.17 Write a program that chooses V vertices and E edges from the real digraph that you found for Exercise 19.1. Test your program as described in Exercise 19.11 (for low densities) and as described in Exercise 19.12 (for high densities).

• 19.18 Write a program that produces each of the possible digraphs with V vertices and E edges with equal likelihood (see Exercise 17.70). Test your program as described in Exercise 19.11 (for low densities) and as described in Exercise 19.12 (for high densities).

• 19.19 Implement a class that provides clients with the capability to learn the indegree and outdegree of any given vertex in a digraph, in constant time, after linear-time preprocessing in the constructor. Then add member functions that return the number of sources and sinks, in constant time.

• 19.20 Use your program from Exercise 19.19 to find the average number of sources and sinks in various types of digraphs (see Exercises 19.11–18).

• 19.21 Show the adjacency-lists structure that is produced when you use Program 19.1 to find the reverse of the digraph

3-7 1-4 7-8 0-5 5-2 3-8 2-9 0-6 4-9 2-6 6-4.

• 19.22 Characterize the reverse of a map.

19.23 Design a digraph class that explicitly provides clients with the capability to refer to both a digraph and its reverse, and provide an implementation, for any representation that supports edge queries.

19.24 Provide an alternate implementation for your class in Exercise 19.23 that maintains both orientations of edges on adjacency lists.

• 19.25 Describe a family of strongly connected digraphs with V vertices and no (simple) directed cycles of length greater than 2.

• 19.26 Give the strong components and a kernel DAG of the digraph

3-7 1-4 7-8 0-5 5-2 3-8 2-9 0-6 4-9 2-6 6-4.

• 19.27 Give a kernel DAG of the grid digraph shown in Figure 19.3.

19.28 How many digraphs have V vertices, all of outdegree k?

• 19.29 What is the expected number of different adjacency-lists representations of a random digraph? Hint: Divide the total number of possible representations by the total number of digraphs.

19.2 Anatomy of DFS in Digraphs

We can use our DFS code for undirected graphs from Chapter 18 to visit each edge and each vertex in a digraph. The basic principle behind the recursive algorithm holds: To visit every vertex that can be reached from a given vertex, we mark the vertex as having been visited, then (recursively) visit all the vertices that can be reached from each of the vertices on its adjacency list.

In an undirected graph, we have two representations of each edge, but the second representation that is encountered in a DFS always leads to a marked vertex and is ignored (see Section 18.2). In a digraph, we have just one representation of each edge, so we might expect DFS algorithms to be more straightforward. But digraphs themselves are more complicated combinatorial objects than undirected graphs, so this expectation is not justified. For example, the search trees that we use to understand the operation of the algorithm have a more complicated structure for digraphs than for undirected graphs. This complication makes digraph-processing algorithms more difficult to devise. For example, as we will see, it is more difficult to make inferences about directed paths in digraphs than it is to make inferences about paths in graphs.

As we did in Chapter 18, we use the term standard adjacency-lists DFS to refer to the process of inserting a sequence of edges into a digraph ADT implemented with an adjacency-lists representation (Program 17.9, invoked with true as the constructor’s second argument), then doing a DFS with, for example, Program 18.3. We use the parallel term standard adjacency-matrix DFS to refer to the process of inserting a sequence of edges into a digraph ADT implemented with an adjacency-matrix representation (Program 17.7, invoked with true as the constructor’s second argument), then doing a DFS with, for example, Program 18.3.

For example, Figure 19.9 shows the recursive-call tree that describes the operation of a standard adjacency-lists DFS on the sample digraph in Figure 19.1. Just as for undirected graphs, such trees have internal nodes that correspond to calls on the recursive DFS function for each vertex, with links to external nodes that correspond to edges that take us to vertices that have already been seen. Classifying the nodes and links gives us information about the search (and the digraph), but the classification for digraphs is quite different from the classification for undirected graphs.

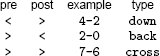

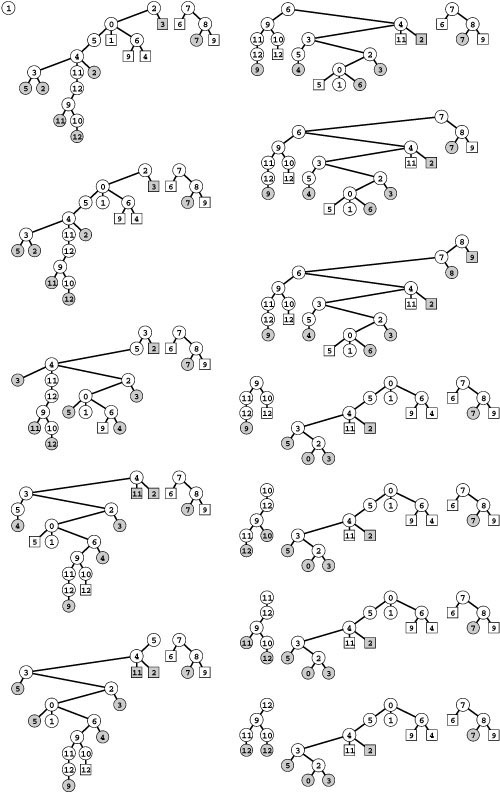

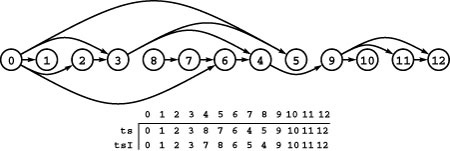

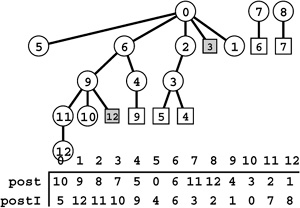

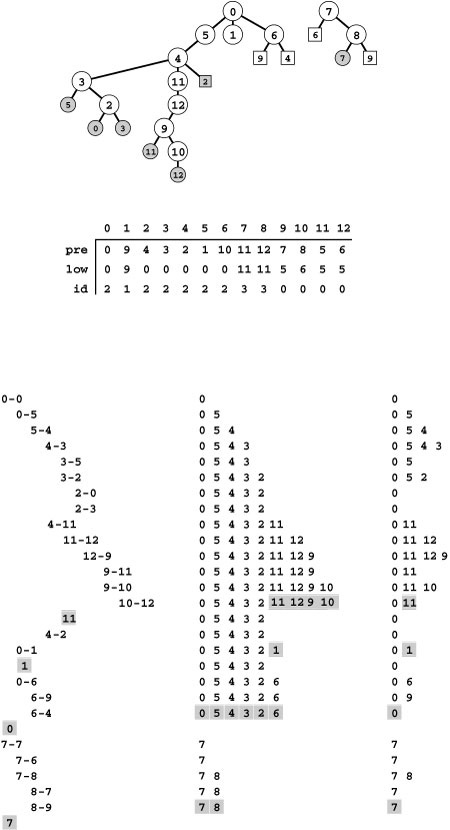

Figure 19.9 DFS forest for a digraph

This forest describes a standard adjacency-lists DFS of the sample digraph in Figure 19.1. External nodes represent previously visited internal nodes with the same label; otherwise the forest is a representation of the digraph, with all edges pointing down. There are four types of edges: tree edges, to internal nodes; back edges, to external nodes representing ancestors (shaded circles); down edges, to external nodes representing descendants (shaded squares); and cross edges, to external nodes representing nodes that are neither ancestors nor descendants (white squares). We can determine the type of an edge to a visited node by comparing the preorder and postorder numbers (bottom) of its source and destination.

For example, 7-6 is a cross edge because 7’s preorder and postorder numbers are both larger than 6’s.

In undirected graphs, we assigned each link in the DFS tree to one of four classes according to whether it corresponded to a graph edge that led to a recursive call and to whether it corresponded to the first or second representation of the edge encountered by the DFS. In digraphs, there is a one-to-one correspondence between tree links and graph edges, and they fall into four distinct classes:

• Those representing a recursive call (tree edges)

• Those from a vertex to an ancestor in its DFS tree (back edges)

• Those from a vertex to a descendant in its DFS tree (down edges)

• Those from a vertex to another vertex that is neither an ancestor nor a descendant in its DFS tree (cross edges)

A tree edge is an edge to an unvisited vertex, corresponding to a recursive call in the DFS. Back, cross, and down edges go to visited vertices. To identify the type of a given edge, we use preorder and postorder numbering (the order in which nodes are visited in preorder and postorder walks of the forest, respectively).

Property 19.3 In a DFS forest corresponding to a digraph, an edge to a visited node is a back edge if it leads to a node with a higher postorder number; otherwise, it is a cross edge if it leads to a node with a lower preorder number and a down edge if it leads to a node with a higher preorder number.

Proof: These facts follow from the definitions. A node’s ancestors in a DFS tree have lower preorder numbers and higher postorder numbers; its descendants have higher preorder numbers and lower postorder numbers. It is also true that both numbers are lower in previously visited nodes in other DFS trees, and both numbers are higher in yet-to-be-visited nodes in other DFS trees, but we do not need code that tests for these cases.

Program 19.2 is a DFS class that identifies the type of each edge in the digraph. Figure 19.10 illustrates its operation on the example digraph of Figure 19.1. During the search, testing to see whether an edge leads to a node with a higher postorder number is equivalent to testing whether a postorder number has yet been assigned. Any node for which a preorder number has been assigned but for which a postorder number has not yet been assigned is an ancestor in the DFS tree and will therefore have a postorder number higher than that of the current node.
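The classification rule can be sketched in a few lines of C++. This is not Program 19.2 itself; the struct name EdgeClassifier, the adjacency-lists type, and the trace format are assumptions made for illustration. The test on post[w] reflects the observation above that a visited node with no postorder number yet must be an ancestor.

```cpp
#include <string>
#include <vector>

// Hypothetical sketch (not Program 19.2): classifies every edge of a digraph
// given as adjacency lists, following the rule in Property 19.3.
struct EdgeClassifier {
    const std::vector<std::vector<int>>& adj;
    std::vector<int> pre, post;      // -1 means "not yet assigned"
    int preCount = 0, postCount = 0;
    std::vector<std::string> trace;  // entries such as "0-5 tree"

    explicit EdgeClassifier(const std::vector<std::vector<int>>& g)
        : adj(g), pre(g.size(), -1), post(g.size(), -1) {
        // Top-level search: start a new DFS tree at each unvisited vertex.
        for (int v = 0; v < static_cast<int>(adj.size()); ++v)
            if (pre[v] == -1) dfs(v);
    }

    void dfs(int v) {
        pre[v] = preCount++;
        for (int w : adj[v]) {
            const bool unvisited = (pre[w] == -1);
            std::string kind;
            if (unvisited) kind = "tree";               // leads to a recursive call
            else if (post[w] == -1) kind = "back";      // w is still active: an ancestor
            else if (pre[w] > pre[v]) kind = "down";    // finished descendant
            else kind = "cross";                        // finished, visited earlier
            trace.push_back(std::to_string(v) + "-" + std::to_string(w) + " " + kind);
            if (unvisited) dfs(w);
        }
        post[v] = postCount++;
    }
};
```

Constructing an EdgeClassifier from a named adjacency-lists vector (the struct keeps a reference to it) fills trace with one line per edge, in the order in which the DFS examines the edges.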

Figure 19.10 Digraph DFS trace

This DFS trace is the output of Program 19.2 for the example digraph in Figure 19.1. It corresponds precisely to a preorder walk of the DFS tree depicted in Figure 19.9.

As we saw in Chapter 17 for undirected graphs, the edge types are properties of the dynamics of the search, rather than of only the graph. Indeed, different DFS forests of the same graph can differ remarkably in character, as illustrated in Figure 19.11. For example, even the number of trees in the DFS forest depends upon the start vertex.

Despite these differences, several classical digraph-processing algorithms are able to determine digraph properties by taking appropriate action when they encounter the various types of edges during a DFS. For example, consider the following basic problem:

Directed cycle detection Does a given digraph have any directed cycles? (Is the digraph a DAG?) In undirected graphs, any edge to a visited vertex indicates a cycle in the graph; in digraphs, we must restrict our attention to back edges.

Property 19.4 A digraph is a DAG if and only if we encounter no back edges when we use DFS to examine every edge.

Proof: Any back edge belongs to a directed cycle that consists of the edge plus the tree path connecting the two nodes, so we will find no back edges when using DFS on a DAG. To prove the converse, we show that if the digraph has a cycle, then the DFS encounters a back

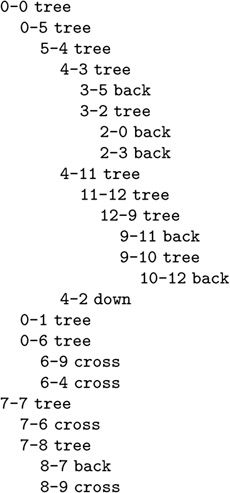

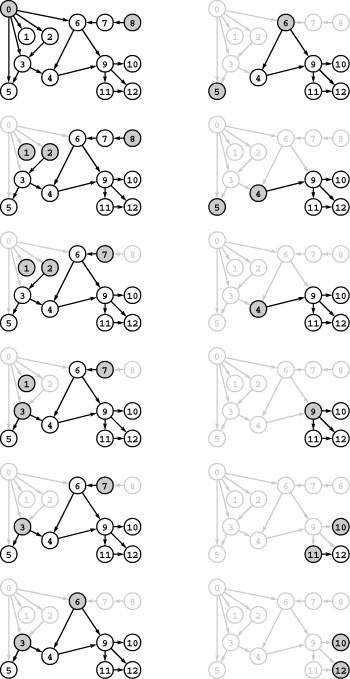

Figure 19.11 DFS forests for a digraph

These forests describes depth-first search of the same graph as Figure 19.9, when the graph search function checks the vertices (and calls the recursive function for the unvisited ones) in the order s, s+1, …, V-1, 0, 1, …, s-1 for each s. The forest structure is determined both by the search dynamics and the graph structure. Each node has the same children (the nodes on its adjacency list, in order) in every forest. The leftmost tree in each forest contains all the nodes reachable from its root, but reachability inferences about other nodes are complicated because of back, cross, and down edges. Even the number of trees in the forest depends on the starting node, so we do not necessarily have a direct correspondence between trees in the forest and strong components, the way that we did for components in undirected graphs. For example, we see that all vertices are reachable from 8 only when we start the DFS at 8.

edge. Suppose that v is the first of the vertices on the cycle that is visited by the DFS. That vertex has the lowest preorder number of all the vertices on the cycle. The edge that points to it will therefore be a back edge: It will be encountered during the recursive call for v (for a proof that it must be, see Property 19.5), and it points from some node on the cycle to v, a node with a lower preorder number (see Property 19.3).

We can convert any digraph into a DAG by doing a DFS and removing any graph edges that correspond to back edges in the DFS. For example, Figure 19.9 tells us that removing the edges 2-0, 3-5, 2-3, 9-11, 10-12, 4-2, and 8-7 makes the digraph in Figure 19.1 a DAG. The specific DAG that we get in this way depends on the graph representation and the associated implications for the dynamics of the DFS (see Exercise 19.37). This method is a useful way to generate large arbitrary DAGs randomly (see Exercise 19.76) for use in testing DAG-processing algorithms.
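Property 19.4 translates directly into a DAG test. The following is a minimal sketch, assuming an adjacency-lists digraph stored as a vector of vectors; the function names and the three-valued state array are hypothetical choices, not code from the text. An edge to a vertex whose recursive call is still active is a back edge.

```cpp
#include <vector>

// Hypothetical sketch: state[v] is 0 (unvisited), 1 (active: its recursive
// call has not returned), or 2 (finished).
bool dfsFindsBackEdge(const std::vector<std::vector<int>>& adj, int v,
                      std::vector<int>& state) {
    state[v] = 1;
    for (int w : adj[v]) {
        if (state[w] == 1) return true;  // edge to an active vertex: back edge
        if (state[w] == 0 && dfsFindsBackEdge(adj, w, state)) return true;
    }
    state[v] = 2;
    return false;
}

// Returns true if the digraph has a directed cycle (that is, it is not a DAG).
bool hasDirectedCycle(const std::vector<std::vector<int>>& adj) {
    std::vector<int> state(adj.size(), 0);
    for (int v = 0; v < static_cast<int>(adj.size()); ++v)
        if (state[v] == 0 && dfsFindsBackEdge(adj, v, state)) return true;
    return false;
}
```

Note that a doubly-connected pair of vertices and a self-loop both count as cycles here, since no parent link is skipped.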

Directed cycle detection is a simple problem, but contrasting the solution just described with the solution that we considered in Chapter 18 for undirected graphs gives insight into the necessity of considering the two types of graphs as different combinatorial objects, even though their representations are similar and the same programs work on both types for some applications. By our definitions, we seem to be using the same method to solve this problem as for cycle detection in undirected graphs (look for back edges), but the implementation that we used for undirected graphs would not work for digraphs. For example, in Section 18.5 we were careful to distinguish between parent links and back links since the existence of a parent link does not indicate a cycle (cycles in undirected graphs must involve at least three vertices). But to ignore links back to a node’s parents in digraphs would be incorrect; we do consider a doubly-connected pair of vertices in a digraph to be a cycle. Theoretically, we could have defined back edges in undirected graphs in the same way as we have done here, but then we would have needed an explicit exception for the two-vertex case. More important, we can detect cycles in undirected graphs in time proportional to V (see Section 18.5), but we may need time proportional to E to find a cycle in a digraph (see Exercise 19.32).

The essential purpose of DFS is to provide a systematic way to visit all the vertices and all the edges of a graph. It therefore gives us a basic approach for solving reachability problems in digraphs, although, again, the situation is more complicated than for undirected graphs.

Single-source reachability Which vertices in a given digraph can be reached from a given start vertex s? How many such vertices are there?

Property 19.5 With a recursive DFS starting at s, we can solve the single-source reachability problem for a vertex s in time proportional to the number of edges in the subgraph induced by the reachable vertices.

Proof: This proof is essentially the same as the proof of Property 18.1, but it is worth restating to underline the distinction between reachability in digraphs and connectivity in undirected graphs. The property is certainly true for a digraph that has one vertex and no edges. For any digraph that has more than one vertex, we assume the property to be true for all digraphs that have fewer vertices. Now, the first edge that we take from s divides the digraph into the subgraphs induced by two subsets of vertices (see Figure 19.12): (i) the vertices that we can reach by directed paths that begin with that edge and do not otherwise include s; and (ii) the vertices that we cannot reach with a directed path that begins with that edge without returning to s. We apply the inductive hypothesis to these subgraphs, noting that there are no directed edges from a vertex in the first subgraph to any vertex other than s in the second subgraph (such an edge would be a contradiction because its destination vertex should be in the first subgraph), that directed edges to s will be ignored because it has the lowest preorder number, and that all the vertices in the first subgraph have lower preorder numbers than any vertex in the second subgraph, so all directed edges from a vertex in the second subgraph to a vertex in the first subgraph will be ignored.
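As an illustration of Property 19.5, single-source reachability can be sketched as follows. The function names and the vector-of-vectors adjacency lists are assumptions for this example; the start vertex is counted as reachable from itself.

```cpp
#include <vector>

// Hypothetical sketch: marks every vertex reachable from v by directed paths.
void markReachable(const std::vector<std::vector<int>>& adj, int v,
                   std::vector<bool>& seen) {
    seen[v] = true;
    for (int w : adj[v])
        if (!seen[w]) markReachable(adj, w, seen);
}

// Returns a vector whose entry t is true if t is reachable from s.
std::vector<bool> reachableFrom(const std::vector<std::vector<int>>& adj, int s) {
    std::vector<bool> seen(adj.size(), false);
    markReachable(adj, s, seen);
    return seen;
}

// Counts the vertices reachable from s (including s itself).
int countReachable(const std::vector<std::vector<int>>& adj, int s) {
    int count = 0;
    for (bool r : reachableFrom(adj, s))
        if (r) ++count;
    return count;
}
```

Only the adjacency lists of reachable vertices are ever scanned, which is why the running time depends on the subgraph induced by those vertices rather than on the whole digraph.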

Figure 19.12 Decomposing a digraph

To prove by induction that DFS takes us everywhere reachable from a given node in a digraph, we use essentially the same proof as for Trémaux exploration. The key step is depicted here as a maze (top), for comparison with Figure 18.4. We break the graph into two smaller pieces (bottom), induced by two sets of vertices: Those vertices that can be reached by following the first edge from the start vertex without revisiting it (bottom piece), and those vertices that cannot be reached by following the first edge without going back through the start vertex (top piece). Any edge that goes from a vertex in the first set to the start vertex is skipped during the search of the first set because of the mark on the start vertex. Any edge that goes from a vertex in the second set to a vertex in the first set is skipped because all vertices in the first set are marked before the search of the second subgraph begins.

By contrast with undirected graphs, a DFS on a digraph does not give full information about reachability from any vertex other than the start node, because tree edges are directed and because the search structures have cross edges. When we leave a vertex to travel down a tree edge, we cannot assume that there is a way to get back to that vertex via digraph edges; indeed, there is not, in general. For example, there is no way to get back to 4 after we take the tree edge 4-11 in Figure 19.9. Moreover, when we ignore cross and down edges (because they lead to vertices that have been visited and are no longer active), we are ignoring information that they imply (the set of vertices that are reachable from the destination). For example, following the cross edge 6-9 in Figure 19.9 is the only way for us to find out that 10, 11, and 12 are reachable from 6.

To determine which vertices are reachable from another vertex, we apparently need to start over with a new DFS from that vertex (see Figure 19.11). Can we make use of information from previous searches to make the process more efficient for later ones? We consider such reachability questions in Section 19.7.

To determine connectivity in undirected graphs, we rely on knowing that vertices are connected to their ancestors in the DFS tree, through (at least) the path in the tree. By contrast, the tree path goes in the wrong direction in a digraph: There is a directed path from a vertex in a digraph to an ancestor only if there is a back edge from a descendant to that or a more distant ancestor. Moreover, connectivity in undirected graphs for each vertex is restricted to the DFS tree rooted at that vertex; in contrast, in digraphs, cross edges can take us to any previously visited part of the search structure, even one in another tree in the DFS forest. For undirected graphs, we were able to take advantage of these properties of connectivity to identify each vertex with a connected component in a single DFS, then to use that information as the basis for a constant-time ADT operation to determine whether any two vertices are connected. For digraphs, as we see in this chapter, this goal is elusive.

We have emphasized throughout this and the previous chapter that different ways of choosing unvisited vertices lead to different search dynamics for DFS. For digraphs, the structural complexity of the DFS trees leads to differences in search dynamics that are even more pronounced than those we saw for undirected graphs. For example, Figure 19.11 illustrates that we get marked differences for digraphs even when we simply vary the order in which the vertices are examined in the top-level search function. Only a tiny fraction of even these possibilities is shown in the figure; in principle, each of the V! different orders of examining vertices might lead to different results. In Section 19.7, we shall examine an important algorithm that specifically takes advantage of this flexibility, processing the unvisited vertices at the top level (the roots of the DFS trees) in a particular order that immediately exposes the strong components.

Exercises

19.30 Draw the DFS forest that results from a standard adjacency-lists DFS of the digraph

3-7 1-4 7-8 0-5 5-2 3-8 2-9 0-6 4-9 2-6 6-4.

19.31 Draw the DFS forest that results from a standard adjacency-matrix DFS of the digraph

3-7 1-4 7-8 0-5 5-2 3-8 2-9 0-6 4-9 2-6 6-4.

• 19.32 Describe a family of digraphs with V vertices and E edges for which a standard adjacency-lists DFS requires time proportional to E for cycle detection.

• 19.33 Show that, during a DFS in a digraph, no edge connects a node to another node whose preorder and postorder numbers are both smaller.

• 19.34 Show all possible DFS forests for the digraph

0-1 0-2 0-3 1-3 2-3.

Tabulate the number of tree, back, cross, and down edges for each forest.

19.35 If we denote the number of tree, back, cross, and down edges by t, b, c, and d, respectively, then we have t + b + c + d = E and t < V for any DFS of any digraph with V vertices and E edges. What other relationships among these variables can you infer? Which of the values are dependent solely on graph properties, and which are dependent on dynamic properties of the DFS?

• 19.36 Prove that every source in a digraph must be a root of some tree in the forest corresponding to any DFS of that digraph.

• 19.37 Construct a connected DAG that is a subgraph of Figure 19.1 by removing five edges (see Figure 19.11).

19.38 Implement a digraph class that provides the capability for a client to check that a digraph is indeed a DAG, and provide a DFS-based implementation.

19.39 Use your solution to Exercise 19.38 to estimate (empirically) the probability that a random digraph with V vertices and E edges is a DAG for various types of digraphs (see Exercises 19.11–18).

19.40 Run empirical studies to determine the relative percentages of tree, back, cross, and down edges when we run DFS on various types of digraphs (see Exercises 19.11–18).

19.41 Describe how to construct a sequence of directed edges on V vertices for which there will be no cross or down edges and for which the number of back edges will be proportional to V^2 in a standard adjacency-lists DFS.

• 19.42 Describe how to construct a sequence of directed edges on V vertices for which there will be no back or down edges and for which the number of cross edges will be proportional to V^2 in a standard adjacency-lists DFS.

19.43 Describe how to construct a sequence of directed edges on V vertices for which there will be no back or cross edges and for which the number of down edges will be proportional to V^2 in a standard adjacency-lists DFS.

• 19.44 Give rules corresponding to Trémaux traversal for a maze where all the passages are one-way.

• 19.45 Extend your solutions to Exercises 17.56 through 17.60 to include arrows on edges (see the figures in this chapter for examples).

19.3 Reachability and Transitive Closure

To develop efficient solutions to reachability problems in digraphs, we begin with the following fundamental definition.

Definition 19.5 The transitive closure of a digraph is a digraph with the same vertices but with an edge from s to t in the transitive closure if and only if there is a directed path from s to t in the given digraph.

In other words, the transitive closure has an edge from each vertex to all the vertices reachable from that vertex in the digraph. Clearly, the transitive closure embodies all the requisite information for solving reachability problems. Figure 19.13 illustrates a small example.

Figure 19.13 Transitive closure

This digraph (top) has just eight directed edges, but its transitive closure (bottom) shows that there are directed paths connecting 19 of the 30 pairs of vertices. Structural properties of the digraph are reflected in the transitive closure. For example, rows 0, 1, and 2 in the adjacency matrix for the transitive closure are identical (as are columns 0, 1, and 2) because those vertices are on a directed cycle in the digraph.

One appealing way to understand the transitive closure is based on adjacency-matrix digraph representations, and on the following basic computational problem.

Boolean matrix multiplication A Boolean matrix is a matrix whose entries are all binary values, either 0 or 1. Given two Boolean matrices A and B, compute a Boolean product matrix C, using the logical and and or operations instead of the arithmetic operations * and +, respectively.

The textbook algorithm for computing the product of two V-by-V matrices computes, for each s and t, the dot product of row s in the first matrix and column t in the second matrix, as follows:

    for (s = 0; s < V; s++)
      for (t = 0; t < V; t++)
        for (i = 0, C[s][t] = 0; i < V; i++)
          C[s][t] += A[s][i]*B[i][t];

This operation is defined for matrices comprising any type of entry for which 0, +, and * are defined. In particular, if we interpret a+b to be the logical or operation and a*b to be the logical and operation, then we have Boolean matrix multiplication. In C++, we can use the following version:

    for (s = 0; s < V; s++)
      for (t = 0; t < V; t++)
        for (i = 0, C[s][t] = 0; i < V; i++)
          if (A[s][i] && B[i][t]) C[s][t] = 1;

To compute C[s][t] in the product, we initialize it to 0, then set it to 1 if we find some value i for which A[s][i] and B[i][t] are both 1. Running this computation is equivalent to setting C[s][t] to 1 if and only if the result of a bitwise logical and of row s in A with column t in B has a nonzero entry.

Now suppose that A is the adjacency matrix of a digraph A and that we use the preceding code to compute C = A * A = A^2 (simply by changing the reference to B in the code into a reference to A). Reading the code in terms of the interpretation of the adjacency-matrix entries immediately tells us what it computes: For each pair of vertices s and t, we put an edge from s to t in C if and only if there is some vertex i for which there is both an edge from s to i and an edge from i to t in A. In other words, directed edges in A^2 correspond precisely to directed paths of length 2 in A. If we include self-loops at every vertex in A, then A^2 also has the edges of A; otherwise, it does not. This relationship between Boolean matrix multiplication and paths in digraphs is illustrated in Figure 19.14. It leads immediately to an elegant method for computing the transitive closure of any digraph.

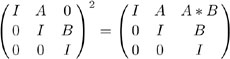

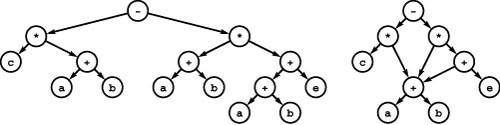

Figure 19.14 Squaring an adjacency matrix

If we put 0s on the diagonal of a digraph’s adjacency matrix, the square of the matrix represents a graph with an edge corresponding to each path of length 2 (top). If we put 1s on the diagonal, the square of the matrix represents a graph with an edge corresponding to each path of length 1 or 2 (bottom).

Property 19.6 We can compute the transitive closure of a digraph by constructing the latter’s adjacency matrix A, adding self-loops for every vertex, and computing A^V.

Proof: Continuing the argument in the previous paragraph, A^3 has an edge for every path of length less than or equal to 3 in the digraph, A^4 has an edge for every path of length less than or equal to 4 in the digraph, and so forth. We do not need to consider paths of length greater than V because of the pigeonhole principle: Any such path must revisit some vertex (since there are only V of them) and therefore adds no information to the transitive closure because the same two vertices are connected by a directed path of length less than V (which we could obtain by removing the cycle to the revisited vertex).

Figure 19.15 shows the adjacency-matrix powers for a sample digraph converging to transitive closure. This method takes V matrix multiplications, each of which takes time proportional to V^3, for a grand total of V^4. We can actually compute the transitive closure for any digraph with just ⌈lg V⌉ Boolean matrix-multiplication operations: We compute A^2, A^4, A^8, … until we reach an exponent greater than or equal to V. As shown in the proof of Property 19.6, A^t = A^V for any t > V; so the result of this computation, which requires time proportional to V^3 lg V, is A^V, the transitive closure.
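The repeated-squaring idea can be sketched as follows. The type alias BoolMatrix and the function names are assumptions introduced for this example; the product routine is the Boolean multiplication loop shown earlier, with an early exit once an entry is known to be 1.

```cpp
#include <cstddef>
#include <vector>

using BoolMatrix = std::vector<std::vector<bool>>;  // hypothetical alias

// Boolean product: c[s][t] is true iff a[s][i] and b[i][t] are both true for some i.
BoolMatrix booleanProduct(const BoolMatrix& a, const BoolMatrix& b) {
    const std::size_t n = a.size();
    BoolMatrix c(n, std::vector<bool>(n, false));
    for (std::size_t s = 0; s < n; ++s)
        for (std::size_t t = 0; t < n; ++t)
            for (std::size_t i = 0; i < n; ++i)
                if (a[s][i] && b[i][t]) { c[s][t] = true; break; }
    return c;
}

// Transitive closure by repeated squaring: add self-loops, then square until
// the exponent (1, 2, 4, 8, ...) is at least the number of vertices.
BoolMatrix closureBySquaring(BoolMatrix a) {
    const std::size_t n = a.size();
    for (std::size_t v = 0; v < n; ++v) a[v][v] = true;
    for (std::size_t exponent = 1; exponent < n; exponent *= 2)
        a = booleanProduct(a, a);
    return a;
}
```

Because of the self-loops, every vertex is reported as reachable from itself in the result.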

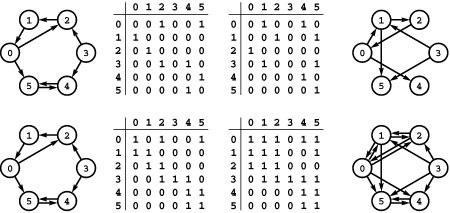

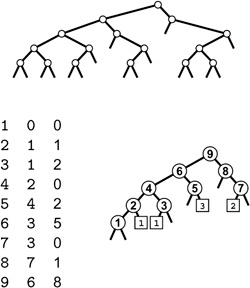

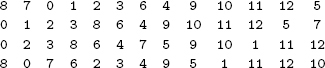

Figure 19.15 Adjacency matrix powers and directed paths

This sequence shows the first, second, third, and fourth powers (right, top to bottom) of the adjacency matrix at the top right, which gives graphs with edges for each of the paths of lengths at most 1, 2, 3, and 4, respectively, (left, top to bottom) in the graph that the matrix represents. The bottom graph is the transitive closure for this example, since there are no paths of length greater than 4 that connect vertices not connected by shorter paths.

Although the approach just described is appealing in its simplicity, an even simpler method is available. We can compute the transitive closure with just one operation of this kind, building up the transitive closure from the adjacency matrix in place, as follows:

    for (i = 0; i < V; i++)
      for (s = 0; s < V; s++)
        for (t = 0; t < V; t++)
          if (A[s][i] && A[i][t]) A[s][t] = 1;

This classical method, invented by S. Warshall in 1962, is the method of choice for computing the transitive closure of dense digraphs. The code is similar to the code that we might try to use to square a Boolean matrix in place: The difference (which is significant!) lies in the order of the for loops.

Property 19.7 With Warshall’s algorithm, we can compute the transitive closure of a digraph in time proportional to V^3.

Proof: The running time is immediately evident from the structure of the code. We prove that it computes the transitive closure by induction on i. After the first iteration of the loop, the matrix has a 1 in row s and column t if and only if we have either the paths s-t or s-0-t. The second iteration checks all the paths between s and t that include 1 and perhaps 0, such as s-1-t, s-1-0-t, and s-0-1-t. We are led to the following inductive hypothesis: The ith iteration of the loop sets the bit in row s and column t in the matrix to 1 if and only if there is a directed path from s to t in the digraph that does not include any vertices with indices greater than i (except possibly the endpoints s and t). As just argued, the condition is true when i is 0, after the first iteration of the loop. Assuming that it is true for the ith iteration of the loop, there is a path from s to t that does not include any vertices with indices greater than i+1 if and only if (i) there is a path from s to t that does not include any vertices with indices greater than i, in which case A[s][t] was set on a previous iteration of the loop (by the inductive hypothesis); or (ii) there is a path from s to i+1 and a path from i+1 to t, neither of which includes any vertices with indices greater than i (except endpoints), in which case A[s][i+1] and A[i+1][t] were previously set to 1 (by hypothesis), so the inner loop sets A[s][t].

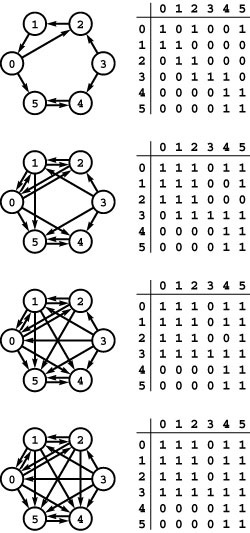

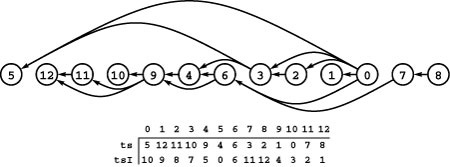

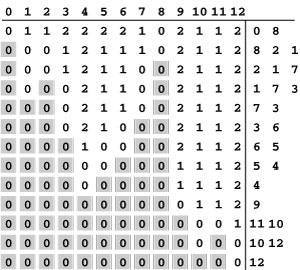

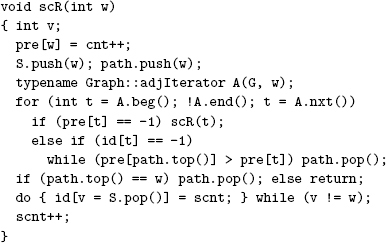

Figure 19.16 Warshall’s algorithm

This sequence shows the development of the transitive closure (bottom) of an example digraph (top) as computed with Warshall’s algorithm. The first iteration of the loop (left column, top) adds the edges 1-2 and 1-5 because of the paths 1-0-2 and 1-0-5, which include vertex 0 (but no vertex with a higher number); the second iteration of the loop (left column, second from top) adds the edges 2-0 and 2-5 because of the paths 2-1-0 and 2-1-0-5, which include vertex 1 (but no vertex with a higher number); and the third iteration of the loop (left column, bottom) adds the edges 0-1, 3-0, 3-1, and 3-5 because of the paths 0-2-1, 3-2-1-0, 3-2-1, and 3-2-1-0-5, which include vertex 2 (but no vertex with a higher number). The right column shows the edges added when paths through 3, 4, and 5 are considered. The last iteration of the loop (right column, bottom) adds the edges from 0, 1, and 2, to 4, because the only directed paths from those nodes to 4 include 5, the highest-numbered vertex.

We can improve the performance of Warshall’s algorithm with a simple transformation of the code: We move the test of A[s][i] out of the inner loop because its value does not change as t varies. This move allows us to avoid executing the t loop entirely when A[s][i] is zero. The savings that we achieve from this improvement depend on the digraph and are substantial for many digraphs (see Exercises 19.53 and 19.54). Program 19.3 implements this improvement and packages Warshall’s method such that clients can preprocess a digraph (compute the transitive closure), then compute the answer to any reachability query in constant time.
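The following sketch shows the shape of such a class. It is not Program 19.3: the class name WarshallClosure, the edge-list constructor, and the decision to treat every vertex as reachable from itself are assumptions made for this illustration.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical sketch of a Warshall-based abstract transitive closure.
class WarshallClosure {
    std::vector<std::vector<bool>> tc;  // tc[s][t] is true iff t is reachable from s
public:
    WarshallClosure(std::size_t V, const std::vector<std::pair<int, int>>& edges)
        : tc(V, std::vector<bool>(V, false)) {
        for (const auto& e : edges) tc[e.first][e.second] = true;
        for (std::size_t s = 0; s < V; ++s) tc[s][s] = true;  // assumed self-loops
        for (std::size_t i = 0; i < V; ++i)
            for (std::size_t s = 0; s < V; ++s)
                if (tc[s][i])  // test moved out of the t loop
                    for (std::size_t t = 0; t < V; ++t)
                        if (tc[i][t]) tc[s][t] = true;
    }
    // Constant-time reachability query after preprocessing.
    bool reachable(int s, int t) const { return tc[s][t]; }
};
```

A client would construct the object once from the digraph's edges, then call reachable(s, t) as often as needed.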

We are interested in pursuing more efficient solutions, particularly for sparse digraphs. We would like to reduce both the preprocessing time and the space because both make the use of Warshall’s method prohibitively costly for huge sparse digraphs.

In modern applications, abstract data types provide us with the ability to separate out the idea of an operation from any particular implementation so that we can focus on efficient implementations. For the transitive closure, this point of view leads to a recognition that we do not necessarily need to compute the entire matrix to provide clients with the transitive-closure abstraction. One possibility might be that the transitive closure is a huge sparse matrix, so an adjacency-lists representation is called for because we cannot store the matrix representation. Even when the transitive closure is dense, client programs might test only a tiny fraction of possible pairs of vertices, so computing the whole matrix is wasteful.

We use the term abstract transitive closure to refer to an ADT that provides clients with the ability to test reachability after preprocessing a digraph, like Program 19.3. In this context, we need to measure an algorithm not just by its cost to compute the transitive closure (preprocessing cost) but also by the space required and the query time achieved. That is, we rephrase Property 19.7 as follows:

Property 19.8 We can support constant-time reachability testing (abstract transitive closure) for a digraph, using space proportional to V^2 and time proportional to V^3 for preprocessing.

This property follows immediately from the basic performance characteristics of Warshall’s algorithm.

For most applications, our goal is not just to compute the transitive closure of a digraph quickly but also to support constant query time for the abstract transitive closure using far less space and far less preprocessing time than specified in Property 19.8. Can we find an implementation that will allow us to build clients that can afford to handle such digraphs? We return to this question in Section 19.8.

There is an intimate relationship between the problem of computing the transitive closure of a digraph and a number of other fundamental computational problems, and that relationship can help us to understand this problem’s difficulty. We conclude this section by considering two examples of such problems.

First, we consider the relationship between the transitive closure and the all-pairs shortest-paths problem (see Section 18.7). For digraphs, the problem is to find, for each pair of vertices, a directed path with a minimal number of edges.

Given a digraph, we initialize a V-by-V integer matrix A by setting A[s][t] to 1 if there is an edge from s to t and to the sentinel value V if there is no such edge. Our goal is to set A[s][t] equal to the length of (the number of edges on) a shortest directed path from s to t, using the sentinel value V to indicate that there is no such path. The following code accomplishes this objective:

    for (i = 0; i < V; i++)
      for (s = 0; s < V; s++)
        for (t = 0; t < V; t++)
          if (A[s][i] + A[i][t] < A[s][t])
            A[s][t] = A[s][i] + A[i][t];

This code differs from the version of Warshall’s algorithm that we saw just before Property 19.7 in only the if statement in the inner loop. Indeed, in the proper abstract setting, the computations are precisely the same (see Exercises 19.55 and 19.56). Converting the proof of Property 19.7 into a direct proof that this method accomplishes the desired objective is straightforward. This method is a special case of Floyd’s algorithm for finding shortest paths in weighted graphs (see Chapter 21). The BFS-based solution for undirected graphs that we considered in Section 18.7 also finds shortest paths in digraphs (appropriately modified). Shortest paths are the subject of Chapter 21, so we defer considering detailed performance comparisons until then.
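A self-contained version of this computation might look like the sketch below. The function name allPairsHopCounts and the edge-list parameter are assumptions for the example; the initialization and the triple loop follow the description above, including the use of V as the sentinel. Diagonal entries get no special treatment here, so the sketch is meant for pairs of distinct vertices.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical sketch: d[s][t] becomes the number of edges on a shortest
// directed path from s to t, or V (the sentinel) if there is no such path.
std::vector<std::vector<int>> allPairsHopCounts(
        std::size_t V, const std::vector<std::pair<int, int>>& edges) {
    const int none = static_cast<int>(V);  // sentinel: no path known
    std::vector<std::vector<int>> d(V, std::vector<int>(V, none));
    for (const auto& e : edges) d[e.first][e.second] = 1;
    for (std::size_t i = 0; i < V; ++i)
        for (std::size_t s = 0; s < V; ++s)
            for (std::size_t t = 0; t < V; ++t)
                if (d[s][i] + d[i][t] < d[s][t])
                    d[s][t] = d[s][i] + d[i][t];
    return d;
}
```

The sentinel works because any sum that involves a sentinel entry is larger than V and therefore never replaces an existing entry.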

Second, as we have seen, the transitive-closure problem is also closely related to the Boolean matrix-multiplication problem. The basic algorithms that we have seen for both problems require time proportional to V^3, using similar computational schema. Boolean matrix multiplication is known to be a difficult computational problem: Algorithms that are asymptotically faster than the straightforward method are known, but it is debatable whether the savings are sufficiently large to justify the effort of implementing any of them. This fact is significant in the present context because we could use a fast algorithm for Boolean matrix multiplication to develop a fast transitive-closure algorithm (slower by just a factor of lg V) using the repeated-squaring method illustrated in Figure 19.15. Conversely, we have a lower bound on the difficulty of computing the transitive closure:

Property 19.9 We can use any transitive-closure algorithm to compute the product of two Boolean matrices with at most a constant-factor difference in running time.

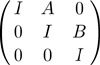

Proof: Given two V-by-V Boolean matrices A and B, we construct the following 3V-by-3V matrix:

Here, 0 denotes the V-by-V matrix with all entries equal to 0, and I denotes the V-by-V identity matrix with all entries equal to 0 except those on the diagonal, which are equal to 1. Now, we consider this matrix to be the adjacency matrix for a digraph and compute its transitive closure by repeated squaring. But we only need one step:

The matrix on the right-hand side of this equation is the transitive closure because further multiplications give back the same matrix. But this matrix has the V-by-V product A B in its upper-right corner. Whatever algorithm we use to solve the transitive-closure problem, we can use it to solve the Boolean matrix-multiplication problem at the same cost (to within a constant factor).
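The two displayed matrices in this proof do not appear in the text as given here. One block matrix that matches the description (blocks 0 and I as defined above, and the product of A and B in the upper-right corner after one squaring) is shown below. Treat it as an assumed reconstruction rather than the original display; all products are Boolean.

```latex
\[
\begin{pmatrix}
I & A & 0 \\
0 & I & B \\
0 & 0 & I
\end{pmatrix}^{2}
=
\begin{pmatrix}
I & A & AB \\
0 & I & B \\
0 & 0 & I
\end{pmatrix}
\]
```

Multiplying the right-hand matrix by the original matrix once more gives the same right-hand matrix, which is why a single squaring suffices.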

The significance of this property depends on the conviction of experts that Boolean matrix multiplication is difficult: Mathematicians have been working for decades to try to learn precisely how difficult it is, and the question is unresolved; the best known results say that the running time should be proportional to about V^2.5 (see reference section). Now, if we could find a linear-time (proportional to V^2) solution to the transitive-closure problem, then we would have a linear-time solution to the Boolean matrix-multiplication problem as well. This relationship between problems is known as reduction: We say that the Boolean matrix-multiplication problem reduces to the transitive-closure problem (see Section 21.6 and Part 8). Indeed, the proof actually shows that Boolean matrix multiplication reduces to finding the paths of length 2 in a digraph.

Despite a great deal of research by many people, no one has been able to find a linear-time Boolean matrix-multiplication algorithm, so we cannot present a simple linear-time transitive-closure algorithm. On the other hand, no one has proved that no such algorithm exists, so we hold open that possibility for the future. In short, we take Property 19.9 to mean that, barring a research breakthrough, we cannot expect the worst-case running time of any transitive-closure algorithm that we can concoct to be proportional to $V^2$. Despite this conclusion, we can develop fast algorithms for certain classes of digraphs. For example, we have already touched on a simple method for computing the transitive closure that is much faster than Warshall’s algorithm for sparse digraphs.

Property 19.10 With DFS, we can support constant query time for the abstract transitive closure of a digraph, with space proportional to $V^2$ and time proportional to $V(E + V)$ for preprocessing (computing the transitive closure).

Proof: As we observed in the previous section, DFS gives us all the vertices reachable from the start vertex in time proportional to E, if we use the adjacency-lists representation (see Property 19.5 and Figure 19.11). Therefore, if we run DFS V times, once with each vertex as the start vertex, then we can compute the set of vertices reachable from each vertex (the transitive closure) in time proportional to $V(E + V)$. The same argument holds for any linear-time generalized search (see Section 18.8 and Exercise 19.66).

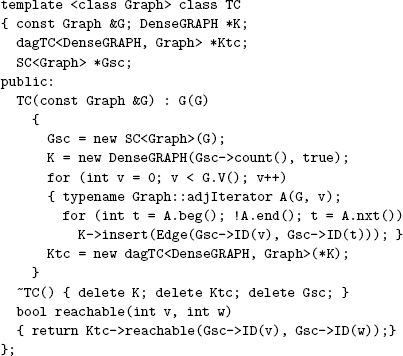

Program 19.4 is an implementation of this search-based transitive-closure algorithm. This class implements the same interface as does Program 19.3. The result of running this program on the sample digraph in Figure 19.1 is illustrated in the first tree in each forest in Figure 19.11.
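The idea behind the search-based method can be sketched in a few lines. The code below is not Program 19.4; it is a self-contained illustration under our own assumptions, with a hypothetical `SparseDigraph` class standing in for the graph ADT and a hypothetical `DfsTC` class that fills one row of a V-by-V reachability matrix per DFS.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical adjacency-lists digraph, standing in for the graph ADT.
class SparseDigraph {
public:
    explicit SparseDigraph(std::size_t v) : adj_(v) {}
    std::size_t V() const { return adj_.size(); }
    void insert(std::size_t v, std::size_t w)
    {
        assert(v < V() && w < V());
        adj_[v].push_back(w);
    }
    const std::vector<std::size_t>& neighbors(std::size_t v) const
    { return adj_[v]; }
private:
    std::vector<std::vector<std::size_t> > adj_;
};

// Transitive closure by V depth-first searches, one per start vertex.
// Preprocessing takes time proportional to V(E + V) and space
// proportional to V^2; each query is a single matrix lookup.
class DfsTC {
public:
    explicit DfsTC(const SparseDigraph& g)
        : g_(g), reach_(g.V(), std::vector<bool>(g.V(), false))
    {
        for (std::size_t s = 0; s < g.V(); s++) dfs(s, s);
    }
    bool reachable(std::size_t s, std::size_t t) const
    { return reach_[s][t]; }
private:
    // Row s of reach_ doubles as the visited marks for the search from s.
    void dfs(std::size_t s, std::size_t v)
    {
        reach_[s][v] = true;
        const std::vector<std::size_t>& nb = g_.neighbors(v);
        for (std::size_t i = 0; i < nb.size(); i++)
            if (!reach_[s][nb[i]]) dfs(s, nb[i]);
    }
    const SparseDigraph& g_;  // the digraph must outlive this object
    std::vector<std::vector<bool> > reach_;
};
```

Using the closure matrix itself as the set of visited marks avoids a separate vector per search. The recursion depth can reach V, so an explicit stack may be preferable for very long paths.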

For sparse digraphs, this search-based approach is the method of choice. For example, if E is proportional to V, then Program 19.4 computes the transitive closure in time proportional to $V^2$. How can it do so, given the reduction to Boolean matrix multiplication that we just considered? The answer is that this transitive-closure algorithm does indeed give an optimal way to multiply certain types of Boolean matrices (those with $O(V)$ nonzero entries). The lower bound tells us that we should not expect to find a transitive-closure algorithm that runs in time proportional to $V^2$ for all digraphs, but it does not preclude the possibility that we might find algorithms, like this one, that are faster for certain classes of digraphs. If such graphs are the ones that we need to process, the relationship between transitive closure and Boolean matrix multiplication may not be relevant to us.

It is easy to extend the methods that we have described in this section to provide clients with the ability to find a specific path connecting two vertices by keeping track of the search tree, as described in Section 17.8. We consider specific ADT implementations of this sort in the context of the more general shortest-paths problems in Chapter 21.

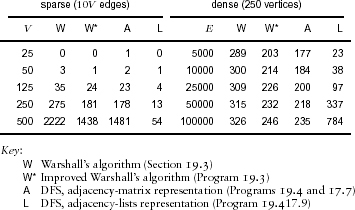

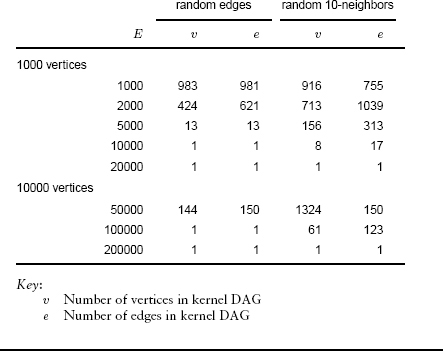

Table 19.1 shows empirical results comparing the elementary transitive-closure algorithms described in this section. The adjacency-lists implementation of the search-based solution is by far the fastest method for sparse digraphs. The implementations all compute an adjacency matrix (of size $V^2$), so none of them are suitable for huge sparse digraphs.

Table 19.1 Empirical study of transitive-closure algorithms

This table shows running times that exhibit dramatic performance differences for various algorithms for computing the transitive closure of random digraphs, both dense and sparse. For all but the adjacency-lists DFS, the running time goes up by a factor of 8 when we double V, which supports the conclusion that it is essentially proportional to $V^3$. The adjacency-lists DFS takes time proportional to $VE$, which explains the running time roughly increasing by a factor of 4 when we double both V and E (sparse graphs) and by a factor of about 2 when we double E (dense graphs), except that list-traversal overhead degrades performance for high-density graphs.

For sparse digraphs whose transitive closure is also sparse, we might use an adjacency-lists implementation for the closure so that the size of the output is proportional to the number of edges in the transitive closure. This number certainly is a lower bound on the cost of computing the transitive closure, which we can achieve for certain types of digraphs using various algorithmic techniques (see Exercises 19.64 and 19.65). Despite this possibility, we generally view the objective of a transitive-closure computation to be dense, so we can use a representation like DenseGRAPH that can easily answer reachability queries, and we regard transitive-closure algorithms that compute the matrix in time proportional to $V^2$ as being optimal since they take time proportional to the size of their output.

If the adjacency matrix is symmetric, it is equivalent to an undirected graph, and finding the transitive closure is the same as finding the connected components—the transitive closure is the union of complete graphs on the vertices in the connected components (see Exercise 19.48). Our connectivity algorithms in Section 18.5 amount to an abstract–transitive-closure computation for symmetric digraphs (undirected graphs) that uses space proportional to V and still supports constant-time reachability queries. Can we do as well in general digraphs? Can we reduce the preprocessing time still further? For what types of graphs can we compute the transitive closure in linear time? To answer these questions, we need to study the structure of digraphs in more detail, including, specifically, that of DAGs.
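For the symmetric case, the space savings are easy to see in code. The following sketch is an illustration under our own assumptions rather than the implementation from Section 18.5: the names `AdjLists`, `addEdge`, and `ComponentTC` are hypothetical. It labels each vertex with a component number and answers reachability queries by comparing two labels.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

typedef std::vector<std::vector<std::size_t> > AdjLists;

// Marker for vertices that have not yet been assigned a component.
const std::size_t kUnvisited = static_cast<std::size_t>(-1);

// Adds an undirected edge, that is, a symmetric pair of directed edges.
void addEdge(AdjLists& adj, std::size_t s, std::size_t t)
{
    assert(s < adj.size() && t < adj.size());
    adj[s].push_back(t);
    adj[t].push_back(s);
}

// Abstract transitive closure for a symmetric digraph: one
// vertex-indexed vector of component numbers, so space is proportional
// to V, and each query compares two entries.
class ComponentTC {
public:
    explicit ComponentTC(const AdjLists& adj) : id_(adj.size(), kUnvisited)
    {
        std::size_t count = 0;
        for (std::size_t s = 0; s < adj.size(); s++) {
            if (id_[s] != kUnvisited) continue;
            // Nonrecursive DFS that labels everything reachable from s.
            std::vector<std::size_t> stack(1, s);
            id_[s] = count;
            while (!stack.empty()) {
                const std::size_t v = stack.back();
                stack.pop_back();
                for (std::size_t i = 0; i < adj[v].size(); i++) {
                    const std::size_t w = adj[v][i];
                    if (id_[w] == kUnvisited) {
                        id_[w] = count;
                        stack.push_back(w);
                    }
                }
            }
            count++;
        }
    }
    bool reachable(std::size_t s, std::size_t t) const
    { return id_[s] == id_[t]; }
private:
    std::vector<std::size_t> id_;
};
```

Preprocessing here takes time proportional to V + E. The comparison of labels works only because reachability is symmetric; in a general digraph, two vertices can be related in one direction but not the other, so a single label per vertex does not suffice without further structure.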

Exercises

• 19.46 What is the transitive closure of a digraph that consists solely of a directed cycle with V vertices?

19.47 How many edges are there in the transitive closure of a digraph that consists solely of a simple directed path with V vertices?

• 19.48 Give the transitive closure of the undirected graph

3-7 1-4 7-8 0-5 5-2 3-8 2-9 0-6 4-9 2-6 6-4.

• 19.49 Show how to construct a digraph with V vertices and E edges with the property that the number of edges in the transitive closure is proportional to t, for any t between E and $V^2$. As usual, assume that E > V.

19.50 Give a formula for the number of edges in the transitive closure of a digraph that is a directed forest as a function of structural properties of the forest.

19.51 Show, in the style of Figure 19.15, the process of computing the transitive closure of the digraph

3-7 1-4 7-8 0-5 5-2 3-8 2-9 0-6 4-9 2-6 6-4

through repeated squaring.

19.52 Show, in the style of Figure 19.16, the process of computing the transitive closure of the digraph

3-7 1-4 7-8 0-5 5-2 3-8 2-9 0-6 4-9 2-6 6-4

with Warshall’s algorithm.

• 19.53 Give a family of sparse digraphs for which the improved version of Warshall’s algorithm for computing the transitive closure (Program 19.3) runs in time proportional to $VE$.

• 19.54 Find a sparse digraph for which the improved version of Warshall’s algorithm for computing the transitive closure (Program 19.3) runs in time proportional to $V^3$.

• 19.55 Develop a base class from which you can derive classes that implement both Warshall’s algorithm and Floyd’s algorithm. (This exercise is a version of Exercise 19.56 for people who are more familiar with abstract data types than with abstract algebra.)

• 19.56 Use abstract algebra to develop a generic algorithm that encompasses both Warshall’s algorithm and Floyd’s algorithm. (This exercise is a version of Exercise 19.55 for people who are more familiar with abstract algebra than with abstract data types.)

• 19.57 Show, in the style of Figure 19.16, the development of the all-shortest paths matrix for the example graph in the figure as computed with Floyd’s algorithm.

19.58 Is the Boolean product of two symmetric matrices symmetric? Explain your answer.

19.59 Add a public function member to Programs 19.3 and 19.4 to allow clients to use tc objects to find the number of edges in the transitive closure.

19.60 Design a way to maintain the count of the number of edges in the transitive closure by modifying it when edges are added and removed. Give the cost of adding and removing edges with your scheme.

• 19.61 Add a public member function for use with Programs 19.3 and 19.4 that returns a vertex-indexed vector that indicates which vertices are reachable from a given vertex.

• 19.62 Run empirical studies to determine the number of edges in the transitive closure, for various types of digraphs (see Exercises 19.11–18).

• 19.63 Consider the bit-matrix graph representation that is described in Exercise 17.23. Which method can you speed up by a factor of B (where B is the number of bits per word on your computer): Warshall’s algorithm or the DFS-based algorithm? Justify your answer by developing an implementation that does so.

• 19.64 Give a program that computes the transitive closure of a digraph that is a directed forest in time proportional to the number of edges in the transitive closure.

• 19.65 Implement an abstract–transitive-closure algorithm for sparse graphs that uses space proportional to T and can answer reachability requests in constant time after preprocessing time proportional to $VE + T$, where T is the number of edges in the transitive closure. Hint: Use dynamic hashing.

• 19.66 Provide a version of Program 19.4 that is based on generalized graph search (see Section 18.8), and run empirical studies to see whether the choice of graph-search algorithm has any effect on performance.

19.4 Equivalence Relations and Partial Orders

This section is concerned with basic concepts in set theory and their relationship to abstract–transitive-closure algorithms. Its purposes are to put the ideas that we are studying into a larger context and to demonstrate the wide applicability of the algorithms that we are considering. Mathematically inclined readers who are familiar with set theory may wish to skip to Section 19.5 because the material that we cover is elementary (although our brief review of terminology may be helpful); readers who are not familiar with set theory may wish to consult an elementary text on discrete mathematics because our treatment is rather succinct. The connections between digraphs and these fundamental mathematical concepts are too important for us to ignore.

Given a set, a relation among its objects is defined to be a set of ordered pairs of the objects. Except possibly for details relating to parallel edges and self-loops, this definition is the same as our definition of a digraph: Relations and digraphs are different representations of the same abstraction. The mathematical concept is somewhat more powerful because the sets may be infinite, whereas our computer programs all work with finite sets, but we ignore this difference for the moment.

Typically, we choose a symbol R and use the notation sRt as shorthand for the statement “the ordered pair (s, t) is in the relation R.” For example, we use the symbol “<” to represent the “less than” relation among numbers. Using this terminology, we can characterize various properties of relations. For example, a relation R is said to be symmetric if sRt implies that tRs for all s and t; it is said to be reflexive if sRs for all s. Symmetric relations are the same as undirected graphs. Reflexive relations correspond to graphs in which all vertices have self-loops; relations that correspond to graphs where no vertices have self-loops are said to be irreflexive.

A relation R is said to be transitive when sRt and tRu implies that sRu for all s, t, and u. The transitive closure of a relation is a well-defined concept; but instead of redefining it in set-theoretic terms, we appeal to the definition that we gave for digraphs in Section 19.3. Any relation is equivalent to a digraph, and the transitive closure of the relation is equivalent to the transitive closure of the digraph. The transitive closure of any relation is transitive.
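Because a relation on a finite set is just a digraph, each of these properties can be checked directly on an adjacency matrix. The sketch below is illustrative only: the `Relation` type and the function names are assumptions introduced here, with `r[s][t]` true exactly when sRt, and the matrix assumed to be square.

```cpp
#include <cassert>   // assert, for checks like those in the note below
#include <cstddef>
#include <vector>

// r[s][t] is true when the ordered pair (s, t) is in the relation.
typedef std::vector<std::vector<bool> > Relation;

// Every vertex has a self-loop.
bool isReflexive(const Relation& r)
{
    for (std::size_t s = 0; s < r.size(); s++)
        if (!r[s][s]) return false;
    return true;
}

// No vertex has a self-loop.
bool isIrreflexive(const Relation& r)
{
    for (std::size_t s = 0; s < r.size(); s++)
        if (r[s][s]) return false;
    return true;
}

// The adjacency matrix equals its transpose.
bool isSymmetric(const Relation& r)
{
    for (std::size_t s = 0; s < r.size(); s++)
        for (std::size_t t = 0; t < s; t++)
            if (r[s][t] != r[t][s]) return false;
    return true;
}

// Whenever sRt and tRu both hold, sRu must hold as well.
bool isTransitive(const Relation& r)
{
    const std::size_t n = r.size();
    for (std::size_t t = 0; t < n; t++)
        for (std::size_t s = 0; s < n; s++)
            if (r[s][t])
                for (std::size_t u = 0; u < n; u++)
                    if (r[t][u] && !r[s][u]) return false;
    return true;
}
```

For example, the "less than" relation on {0, 1, 2} passes `isIrreflexive` and `isTransitive` but fails `isSymmetric`. The transitivity check takes time proportional to $V^3$ in the worst case, the same as the basic transitive-closure algorithms; a relation is transitive precisely when taking its transitive closure (without adding self-loops) adds no new pairs.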

In the context of graph algorithms, we are especially interested in two particular transitive relations that are defined by further constraints. These two types, which are widely applicable, are known as equivalence relations and partial orders.

An equivalence relation ≡ is a transitive relation that is also reflexive and symmetric. Note that a symmetric, transitive relation that includes each object in some ordered pair must be an equivalence relation: If s ≡ t, then t ≡ s (by symmetry) and s ≡ s (by transitivity). Equivalence relations divide the objects in a set into subsets known as equivalence classes. Two objects s and t are in the same equivalence class if and only if s ≡ t. The following examples are typical equivalence relations:

Modular arithmetic Any positive integer k defines an equivalence relation on the set of integers, with s ≡ t (mod k) if and only if the remainder that results when we divide s by k is equal to the remainder that results when we divide t by k. The relation is obviously symmetric; a short proof establishes that it is also transitive (see Exercise 19.67) and therefore is an equivalence relation.

Connectivity in graphs The relation “is in the same connected component as” among vertices is an equivalence relation because it is symmetric and transitive. The equivalence classes correspond to the connected components in the graph.

When we build a graph ADT that gives clients the ability to test whether two vertices are in the same connected component, we are implementing an equivalence-relation ADT that provides clients with the ability to test whether two objects are equivalent. In practice, this correspondence is significant because the graph is a succinct representation of the equivalence relation (see Exercise 19.71). In fact, as we saw in Chapters 1 and 18, to build such an ADT we need to maintain only a single vertex-indexed vector.
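As a reminder of how little state such an ADT needs, here is a minimal sketch of an equivalence-relation class built on one index vector. It is not the code from Chapter 1: the class name `Equivalence` and its member names are assumptions, and for brevity it uses unweighted quick union with path halving, so it does not carry the logarithmic guarantees of the weighted variants.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Equivalence relation on the objects 0..n-1, stored as a forest in a
// single index vector: id_[x] is the parent of x, and a root is its own
// parent. Two objects are equivalent when they have the same root.
class Equivalence {
public:
    explicit Equivalence(std::size_t n) : id_(n)
    {
        for (std::size_t i = 0; i < n; i++) id_[i] = i;
    }

    // Declares s and t equivalent, merging their classes.
    void relate(std::size_t s, std::size_t t)
    {
        const std::size_t rs = find(s);
        const std::size_t rt = find(t);
        id_[rs] = rt;
    }

    // Tests whether s and t are in the same equivalence class.
    bool equivalent(std::size_t s, std::size_t t)
    {
        return find(s) == find(t);
    }

private:
    // Follows parent links to the root, halving the path along the way.
    std::size_t find(std::size_t x)
    {
        assert(x < id_.size());
        while (x != id_[x]) {
            id_[x] = id_[id_[x]];
            x = id_[x];
        }
        return x;
    }

    std::vector<std::size_t> id_;
};
```

Calling `relate(s, t)` for each edge s-t of a graph makes `equivalent` answer the question "are these two vertices in the same connected component?" Reflexivity, symmetry, and transitivity all follow from the fact that equivalence is defined by equality of roots.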

A partial order ≺ is a transitive relation that is also irreflexive. As a direct consequence of transitivity and irreflexivity, it is trivial to prove that partial orders are also asymmetric: If s