3

Classical Mathematical Models in Financial Engineering and Modern Portfolio Theory

3.1 An Introduction to the Cost of Money in the Financial Market

The Prevailing Financial Scenery

Much of financial engineering depends on an understanding of the perceived behavior of the financial market, particularly the stock markets that include daily trading of corporate and governmental stocks, bonds, mutual funds, derivatives, and many other commodities.

Some Current Observations in the United States: As of August 11, 2016

- Financial Interest Rates: The Cost of Money

A typical local (California) federally insured credit union is advertising the following typical savings and lending rates for the month of July, 2016:

- Money market savings account, dividend rate: 0.85% per annum

- Free checking account, dividend rate: 0.25% per annum

- Certificate account rate, dividend rate: 1.00% per annum

- Secured real estate loan rate: 4.00% per annum

- Credit card loan rate: 8.75% per annum

Remark: It certainly should not escape one's attention that such low levels of dividend rates in ordinary savings accounts may well be a prime incentive for one's interests in seeking higher returns in other avenues within the financial market or elsewhere!

- The Financial News Media

CNN reported in http://money.cnn.com that there are “5 Reasons Why Stocks May Keep Going Higher”:

“Most major stock market indexes in the U.S. are trading near their all-time highs. So why do so many investors…and even Wall Street experts…feel so lousy?”

“There is a growing skepticism that stocks can keep hitting new records. Yet, the market keeps grinding higher. Investors may be talking about how nervous they are. But the numbers tell a different story. The VIX, a measure of volatility often dubbed Wall Street's ‘Fear Gauge,’ is near its lowest level in a year. And CNN Money's Fear & Greed Index, which looks at the VIX and six other indicators of investor sentiment, has been showing signs of Extreme Greed in the market for the past month. Concerns about the U.K. Brexit (viz., United Kingdom of Britain exiting the European Union) vote initially rocked the market…(for less than a week)…but such worries, that Brexit could wind up being the 2016 equivalent of Lehman Brothers, almost turned out to be short-lived. Investors do not seem terribly concerned about the impact that the U.S. presidential election (2016 being such an election year in the United States) will have on stocks either – even though the gains may be modest –”

- For the Foreseeable Future.

Here are five outstanding reasons:

- Corporate America is getting healthier.

- Stocks could stay pricy for a while.

- The Fed (viz., U.S. Federal Reserve Banks—the central banking system) is still your friend!

- The (corporate) merger boom is not over yet!

- “Slow and steady wins the race!”

Conclusion: “Patient investors should be rewarded if they don't do anything crazy. We are invested conservatively and believe it is better to make money slowly than just take speculative bets. There are still ways to make money and do it smartly.”

Creative Financing

Indeed, there are virtually unlimited ways to create financing opportunities. Here are two examples:

- Fixed Rate Mortgage versus Adjustable Rate Mortgage (ARM) versus LIBOR ARM

- A fixed rate mortgage has the same payment for the entire term of the loan.

- An Adjustable Rate Mortgage (ARM) has a rate that can change, causing the monthly payment to increase or decrease or remain unchanged.

- LIBOR (London InterBank Offered Rate) is an index set by a group of London-based banks, and sometimes used as a base for U.S. adjustable rate mortgages.

- Seller-Financing

As an example, the owner of a real asset may offer his own line of credit to any acceptable buyers of the said asset.

3.2 Modern Theories of Portfolio Optimization

In making investment decisions in asset allocation, the modern portfolio theory focuses on potential return in relation to the concomitant potential risk. The strategy is to select and then evaluate individual investments (securities, bonds, funds, derivatives, commodities, etc.) as part of an overall portfolio rather than solely for their own strengths or weaknesses as an investment.

Asset allocation is therefore a primary tactic: it allows investors to create portfolios that obtain the strongest possible return without assuming a greater level of risk than they would like to bear.

Another critical feature of an acceptable portfolio theory is that investors must be rewarded, in terms of realizing a greater return, for assuming greater risk. Otherwise, there would be little motivation and incentive to make investments that might result in a loss of principal!

With such preconditions, two outstanding theories of portfolio allocation are presented herein:

- The Markowitz model

- The Black–Litterman model

There are numerous modifications/improvements for these “standard bearers” that maintain a fruitful area in research and development in mathematical finance and financial engineering. In this chapter, these two approaches will be presented, followed by the more favored modifications of these theories!

3.2.1 The Markowitz Model of Modern Portfolio Theory (MPT)

Modern Portfolio Theory

Modern portfolio theory, or mean variance analysis, is a mathematical model for building a portfolio of financial assets such that the expected return is maximized for a given level of risk, defined as the variance. Its key feature is as follows:

“The risks of the asset and the return of profits should not be assessed by themselves, but by how it contributes to an overall risk and return of the portfolio.”

3.2.1.1 Risk and Expected Return

The MPT assumes that investors are risk-averse: given two portfolios that offer the same expected return, investors will prefer the less risky one. Thus, an investor will take on increased risk only if compensated by higher expected returns. Conversely, an investor who wants higher expected returns must accept more risk.

This trade-off will be the same for all investors, but different investors will evaluate the trade-off differently based on individual risk-aversion characteristics. The implication is that a rational investor will not invest in a portfolio if a second portfolio exists with a more favorable risk-expected return profile—that is, if for that level of risk, an alternative portfolio exists that has better expected returns.

Under the model:

- Portfolio return is the proportion-weighted combination of the constituent assets' returns.

- Portfolio volatility is a function of the correlations ρij of the component assets, for all asset pairs (i, j).

In general:

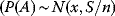

- Expected return:

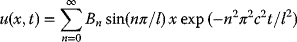

$$E(R_p) = \sum_i w_i \, E(R_i) \tag{3.1}$$

where

- Rp is the return on the portfolio,

- Ri is the return on asset i, and wi is the weighting of component asset i (i.e., the proportion of asset i in the portfolio).

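- Portfolio return variance σp² combines the variances of the individual components {σi², σj²} and their pairwise correlations, and is defined as follows:

$$\sigma_p^2 = \sum_i w_i^2 \sigma_i^2 + \sum_i \sum_{j \neq i} w_i w_j \sigma_i \sigma_j \rho_{ij} \tag{3.2}$$

where σi is the standard deviation of the returns on asset i and ρij is the correlation coefficient between the returns on assets i and j.

Alternatively, the expression can be written as follows:

$$\sigma_p^2 = \sum_i \sum_j w_i w_j \sigma_i \sigma_j \rho_{ij} \tag{3.3}$$

where ρij = 1 for i = j.

- Portfolio return volatility (standard deviation):

$$\sigma_p = \sqrt{\sigma_p^2} \tag{3.4}$$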

Thus, for a two-asset portfolio:

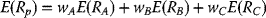

- Portfolio return:

$$E(R_p) = w_A E(R_A) + w_B E(R_B) = w_A E(R_A) + (1 - w_A) E(R_B) \tag{3.5}$$

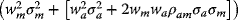

- Portfolio variance:

$$\sigma_p^2 = w_A^2 \sigma_A^2 + w_B^2 \sigma_B^2 + 2 w_A w_B \sigma_A \sigma_B \rho_{AB} \tag{3.6}$$

And, for a three-asset portfolio:

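- Portfolio return:

$$E(R_p) = w_A E(R_A) + w_B E(R_B) + w_C E(R_C) \tag{3.7}$$

- Portfolio variance:

$$\sigma_p^2 = w_A^2 \sigma_A^2 + w_B^2 \sigma_B^2 + w_C^2 \sigma_C^2 + 2 w_A w_B \sigma_A \sigma_B \rho_{AB} + 2 w_A w_C \sigma_A \sigma_C \rho_{AC} + 2 w_B w_C \sigma_B \sigma_C \rho_{BC} \tag{3.8}$$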
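The return and variance formulas above lend themselves to a short numerical check. The following is a minimal sketch in R, in which the weights, expected returns, standard deviations, and correlations are illustrative assumptions (they are not taken from the text). It computes the portfolio variance once in matrix form, following the double sum of Equation (3.3), and once by the term-by-term three-asset expansion, so that the two may be compared.

```r
# Sketch: portfolio expected return and variance for three assets.
# All inputs are illustrative assumptions.
w     <- c(0.5, 0.3, 0.2)        # weights w_i, summing to 1
mu    <- c(0.10, 0.06, 0.03)     # expected returns E(R_i)
sigma <- c(0.20, 0.12, 0.05)     # standard deviations sigma_i
rho   <- matrix(c(1.0, 0.3, 0.1,
                  0.3, 1.0, 0.2,
                  0.1, 0.2, 1.0), nrow = 3, byrow = TRUE)

# Covariance matrix: entry (i, j) is sigma_i * sigma_j * rho_ij
Sigma <- diag(sigma) %*% rho %*% diag(sigma)

mu_p  <- sum(w * mu)                    # weighted sum of expected returns
var_p <- drop(t(w) %*% Sigma %*% w)     # double sum over all pairs (i, j)
sd_p  <- sqrt(var_p)                    # portfolio volatility

# Term-by-term expansion of the three-asset variance, for comparison
var_p_expanded <- sum(w^2 * sigma^2) +
  2 * w[1] * w[2] * sigma[1] * sigma[2] * rho[1, 2] +
  2 * w[1] * w[3] * sigma[1] * sigma[3] * rho[1, 3] +
  2 * w[2] * w[3] * sigma[2] * sigma[3] * rho[2, 3]

c(mu_p = mu_p, var_p = var_p, var_p_expanded = var_p_expanded, sd_p = sd_p)
```

The matrix form is usually the more convenient of the two, since it does not change as assets are added.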

3.2.1.2 Diversification

An investor may reduce portfolio risk simply by holding combinations of instruments that are not perfectly positively correlated.

In other words, investors can reduce their exposure to individual asset risk by selecting a diversified portfolio of assets. Diversification may allow the same portfolio expected return with reduced risk. These ideas were first proposed by Markowitz and later reinforced by other economists and mathematicians who developed ideas on the limitation of variance through portfolio theory.

Thus, if all the asset pairs have correlations of 0 (viz., they are perfectly uncorrelated), the portfolio's return variance is the sum, over all assets, of the square of the fraction held in the asset times the asset's return variance (and the portfolio standard deviation is the square root of this sum).
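The zero-correlation case can be illustrated with a few lines of R. This is only a sketch under simplifying assumptions that are not in the text: every asset is assumed to have the same return variance, and the portfolio is equally weighted. Under those assumptions the sum described above reduces to the common variance divided by the number of assets.

```r
# Sketch: n uncorrelated assets with an assumed common variance,
# held in equal proportions w_i = 1/n.
sigma2 <- 0.04                    # assumed return variance of each asset
n      <- c(1, 2, 5, 10, 50)      # number of assets held

# Sum over assets of (fraction held)^2 * (asset variance)
var_p <- sapply(n, function(k) sum(rep(1 / k, k)^2 * sigma2))
sd_p  <- sqrt(var_p)

data.frame(n = n, var_p = var_p, sd_p = sd_p)
```

The portfolio variance falls as more uncorrelated assets are added, which is the diversification effect in its simplest form.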

3.2.1.3 Efficient Frontier with No Risk-Free Assets

As shown in the graph in Figure 3.1, every possible combination of the risky assets, without including any holdings of the risk-free asset, may be plotted in risk versus expected-return space, and the collection of all such possible portfolios defines a characteristic region in this space.

Figure 3.1 Efficient Frontier: The hyperbola, popularly known as the “Markowitz Bullet,” is the efficient frontier if no risk-free asset is available. (For a risk-free asset, the straight line is the efficient frontier.)

The left boundary of this region is a hyperbola, and the upper part of this boundary is the efficient frontier in the absence of a risk-free asset (called “the Markowitz Bullet”). Combinations along this upper edge represent portfolios (including no holdings of the risk-free asset) for which there is the lowest risk for a given level of expected return. Equivalently, a portfolio lying on the efficient frontier represents the combination offering the best possible expected return for the given risk level. The tangent to the hyperbola at the tangency point indicates the best possible Capital Allocation Line (CAL).

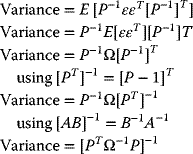

In the description and mathematical development of the MPT, matrices are generally preferred for calculations of the efficient frontier.

Remarks:

In matrix form, for a given “risk tolerance” q ∈ [0, ∞), the efficient frontier may be obtained by minimizing the following expression:

$$w^T \Sigma w - q \, R^T w$$

Here

- w is a vector of portfolio weights, with ∑i wi = 1. (The weights may be negative, which means investors can short a security.)

- Σ is the covariance matrix for the returns on the assets in the portfolio.

- q ≥ 0 is a risk tolerance factor, where 0 results in the portfolio with minimal risk and ∞ results in the portfolio infinitely far out on the frontier with both expected return and risk unbounded.

- R is a vector of expected returns.

- wᵀΣw is the variance of portfolio return.

- Rᵀw is the expected return on the portfolio.

The above optimization finds the point on the frontier at which the inverse of the slope of the frontier would be q if portfolio return variance instead of standard deviation were plotted horizontally. The frontier is parametric on q.
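As a rough illustration of how the frontier may be traced out parametrically in q, the sketch below solves the constrained minimization in closed form with a Lagrange multiplier for the budget constraint. The function name `frontier_weights` and the numerical inputs are assumptions introduced here for illustration; short sales are allowed, and no other constraints are imposed.

```r
# Sketch: minimise  w' Sigma w - q * R' w  subject to sum(w) = 1.
# Setting the gradient of the Lagrangian to zero gives
#   w(q) = Sigma^{-1} (q * R + lambda * 1) / 2,
# with lambda chosen so that the weights sum to one.
frontier_weights <- function(q, R, Sigma) {
  ones   <- rep(1, length(R))
  Si_R   <- solve(Sigma, R)       # Sigma^{-1} R
  Si_1   <- solve(Sigma, ones)    # Sigma^{-1} 1
  lambda <- (2 - q * sum(Si_R)) / sum(Si_1)
  (q * Si_R + lambda * Si_1) / 2
}

# Illustrative inputs for two risky assets (assumed values)
R     <- c(0.20, 0.04)
Sigma <- matrix(c( 0.05,   -0.0038,
                  -0.0038,  0.01), nrow = 2, byrow = TRUE)

q_grid <- c(0, 0.1, 0.5, 1)
W      <- sapply(q_grid, frontier_weights, R = R, Sigma = Sigma)  # one column per q
mu_p   <- drop(R %*% W)               # expected return R' w for each q
var_p  <- colSums(W * (Sigma %*% W))  # variance w' Sigma w for each q

data.frame(q = q_grid, mu_p = mu_p, var_p = var_p)
```

With q = 0 the solution is the minimum-variance portfolio; larger values of q move along the frontier toward higher expected return and higher variance.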

3.2.1.4 The Two Mutual Fund Theorem

An important result of the above analysis is the Two Mutual Fund Theorem that states:

“Any portfolio on the efficient frontier may be generated by using a combination of any two given portfolios on the frontier; the latter two given portfolios are the ‘mutual funds’ in the theorem's name.”

Thus, in the absence of a risk-free asset, an investor may achieve any desired efficient portfolio even if all that is available is a pair of efficient mutual funds:

- If the location of the desired portfolio on the frontier is between the locations of the two mutual funds, then both mutual funds may be held in positive quantities.

- If the desired portfolio is outside the range spanned by the two mutual funds, then one of the mutual funds must be sold short (held in negative quantity) while the size of the investment in the other mutual fund must be greater than the amount available for investment (the excess being funded by the borrowing from the other fund).
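The theorem can be checked numerically. The sketch below assumes the unconstrained mean-variance problem with risk tolerance q described in Section 3.2.1.3, solved in closed form; the helper `frontier_weights`, the helper `mix`, and all numerical inputs are illustrative assumptions. Because the frontier weights are an affine function of q, any frontier portfolio is a weighted combination of two others.

```r
# Sketch: reproduce a frontier portfolio from two frontier "mutual funds".
frontier_weights <- function(q, R, Sigma) {
  ones   <- rep(1, length(R))
  Si_R   <- solve(Sigma, R)
  Si_1   <- solve(Sigma, ones)
  lambda <- (2 - q * sum(Si_R)) / sum(Si_1)
  (q * Si_R + lambda * Si_1) / 2
}

# Three risky assets (assumed values)
R     <- c(0.10, 0.06, 0.03)
sigma <- c(0.20, 0.12, 0.05)
rho   <- matrix(c(1.0, 0.3, 0.1,
                  0.3, 1.0, 0.2,
                  0.1, 0.2, 1.0), nrow = 3, byrow = TRUE)
Sigma <- diag(sigma) %*% rho %*% diag(sigma)

q1 <- 0; q2 <- 1                           # risk tolerances of the two funds
fund1 <- frontier_weights(q1, R, Sigma)
fund2 <- frontier_weights(q2, R, Sigma)

# Share a of fund 1 solves  q_target = a * q1 + (1 - a) * q2
mix <- function(q_target) {
  a <- (q2 - q_target) / (q2 - q1)
  list(share_fund1 = a, share_fund2 = 1 - a,
       weights = a * fund1 + (1 - a) * fund2)
}

inside  <- mix(0.4)   # target between the two funds: both shares positive
outside <- mix(2.5)   # target beyond fund 2: fund 1 is sold short
```

For the target between the two funds, both shares are positive; for the target beyond fund 2, the share in fund 1 is negative and the share in fund 2 exceeds one, matching the two cases listed above.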

3.2.1.5 Risk-Free Asset and the Capital Allocation Line

A risk-free asset is the asset that pays a risk-free rate. In practice, short-term government securities (such as U.S. Treasury Bills) are considered a risk-free asset, because they pay a fixed rate of interest and have exceptionally low default risks (none, in the United States, so far!). The risk-free asset has zero variance in returns (being risk-free). It is also uncorrelated with any other asset (by definition, since its variance is zero).

As a result, when it is combined with any other asset or portfolio of assets, the change in return is linearly related to the change in risk as the proportions in the combination vary.

3.2.1.6 The Sharpe Ratio

In mathematical finance, the Sharpe Ratio (or the Sharpe Index, or the Sharpe Measure, or the Reward-to-Variability Ratio) is an index for examining the performance of an investment asset or a trading strategy: it measures the excess return (or risk premium) per unit of deviation, where the deviation is typically referred to as risk (it is thus based on a deviation risk measure). It is named after W.F. Sharpe (recipient of the 1990 Nobel Memorial Prize in Economic Sciences).

In application, the Sharpe Ratio is similar to the Information Ratio; whereas the Sharpe Ratio is the excess return of an asset over the return of a risk-free asset divided by the variability or standard deviation of returns, the Information Ratio is the active return to the most relevant benchmark index divided by the standard deviation of the active return.
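The two ratios described above may be sketched in R as follows. The return series, the benchmark series, and the risk-free rate are made-up illustrative data, and the function names `sharpe_ratio` and `information_ratio` are assumptions introduced here; sample means and sample standard deviations stand in for the expected values.

```r
# Sketch: sample Sharpe Ratio and Information Ratio.
# All data below are made-up illustrative values.
asset     <- c(0.021, -0.013, 0.034, 0.008, -0.004, 0.027)  # asset returns
benchmark <- c(0.015, -0.010, 0.025, 0.010, -0.006, 0.018)  # benchmark returns
rf        <- 0.001                                          # risk-free rate per period

# Excess return over the risk-free asset, per unit of standard deviation
sharpe_ratio <- function(r, rf) mean(r - rf) / sd(r - rf)

# Active return over the benchmark, per unit of its standard deviation
information_ratio <- function(r, b) {
  active <- r - b
  mean(active) / sd(active)
}

c(sharpe = sharpe_ratio(asset, rf),
  information = information_ratio(asset, benchmark))
```

The only difference between the two functions is the reference series: a constant risk-free rate in the first case, and a benchmark index in the second.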

3.2.1.7 The Capital Allocation Line (CAL)

When a risk-free asset is included, the half-line shown in Figure 3.1 becomes the new efficient frontier. It is tangent to the hyperbola at the pure risky portfolio with the highest Sharpe Ratio. Its vertical intercept represents a portfolio with 100% of holdings in the risk-free asset; the point of tangency with the hyperbola represents a portfolio with no risk-free holdings and 100% of assets held in the tangency portfolio:

- Points between these two positions are portfolios containing positive amounts of both the risky tangency portfolio and the risk-free asset.

- Points on the half-line beyond the tangency point are leveraged portfolios involving negative holdings of the risk-free asset (the latter has been sold short; in other words, the investor has borrowed at the risk-free rate) and an amount invested in the tangency portfolio equal to more than 100% of the investor's initial capital.

This efficient half-line is called the Capital Allocation Line (CAL), and its equation may be shown to be

$$E(R_C) = R_F + \sigma_C \, \frac{E(R_P) - R_F}{\sigma_P}$$

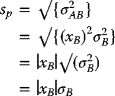

Figure 3.2 mu <- A*muA + B*muB.

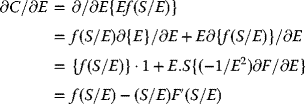

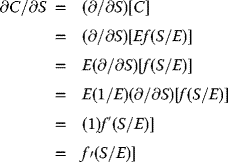

In Equation (3.11),

- P is the subportfolio of risky assets at the tangency with the Markowitz bullet.

- F is the risk-free asset.

- C is the combination of portfolios P and F.

Using the diagram, the introduction of the risk-free asset as a likely component of the portfolio has improved the range of risk-expected return combinations available because everywhere (except at the tangency portfolio) the half-line has a higher expected return than the hyperbola does at every possible risk level. That all points on the linear efficient locus can be achieved by a combination of holdings of the risk-free asset and the tangency portfolio is known as the “One Mutual Fund Theorem,” in which the mutual fund referred to is the tangency portfolio.
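A small numerical sketch may help to make the tangency portfolio and the CAL concrete. The expected returns, covariance matrix, and risk-free rate below are illustrative assumptions. The tangency weights are taken proportional to Σ⁻¹(R − Rf), a standard result for the unconstrained problem that holds when those entries sum to a positive number.

```r
# Sketch: tangency portfolio P and the Capital Allocation Line (CAL).
# R, Sigma, and the risk-free rate rf are illustrative assumptions.
R     <- c(0.20, 0.04)
Sigma <- matrix(c( 0.05,   -0.0038,
                  -0.0038,  0.01), nrow = 2, byrow = TRUE)
rf    <- 0.01

# Tangency weights, rescaled to sum to one
z   <- solve(Sigma, R - rf)
w_P <- z / sum(z)

mu_P     <- sum(w_P * R)
sd_P     <- sqrt(drop(t(w_P) %*% Sigma %*% w_P))
sharpe_P <- (mu_P - rf) / sd_P          # slope of the CAL

# CAL: expected return of a combination C as a function of its risk sd_C
cal <- function(sd_C) rf + sharpe_P * sd_C

# Fraction y held in P and (1 - y) in F; y > 1 means borrowing at rf
y    <- c(0, 0.5, 1, 1.5)
mu_C <- (1 - y) * rf + y * mu_P
sd_C <- y * sd_P

data.frame(y = y, sd_C = sd_C, mu_C = mu_C, on_CAL = cal(sd_C))
```

Every combination of P and F lies on the same straight line, which is the content of the “One Mutual Fund Theorem” stated above.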

3.2.1.8 Asset Pricing

Up to this point, the analysis describes the optimal behavior of an individual investor. Asset pricing theory depends on this analysis in the following way:

Since each investor holds the risky assets in identical proportions to each other, namely, in the proportions given by the tangency portfolio, in market equilibrium the risky assets' prices, and therefore their expected returns, will adjust so that the ratios in the tangency portfolio are the same as the ratios in which the risky assets are supplied to the market. Thus, relative supplies will equal relative demands:

Modern Portfolio Theory derives the required expected return for a correctly priced asset in this context.

3.2.1.9 Specific and Systematic Risks

- Specific risks are the risks associated with individual assets. Within a portfolio, these risks may be reduced through diversification, namely, canceling out each other. Specific risk is also called diversifiable, unique, unsystematic, or idiosyncratic risk.

- Systematic risks (also called portfolio risks or market risks) are risks common to all securities. Except through short selling, as discussed below, systematic risk cannot be diversified away within one market. Within the market portfolio, asset-specific risk may be diversified away to the extent possible. Systematic risks are, therefore, equated with the risks of the market portfolio.

Since a security will be purchased only if it improves the risk-expected return characteristics of the market portfolio, the relevant measure of the risk of a security is the risk it adds to the market portfolio, and not its risk in isolation. In this context, the volatility of the asset and its correlation with the market portfolio are historically observed and are, therefore, available for consideration.

Systematic risks within one market can be managed through a strategy of using both long and short positions within one portfolio, creating a “market-neutral” portfolio. Market-neutral portfolios will have a correlation of zero with the broader market.

3.2.2 Capital Asset Pricing Model (CAPM)

For a given asset allocation, the return depends on the price and total amount paid for the asset. The goal of the investment is that the price paid should ensure that the market portfolio's risk-return characteristics improve when the asset is added to it. The CAPM is an approach that derives the theoretical required expected return (i.e., discount rate) for an asset in a market, given the risk-free rate available to investors and the risk of the market as a whole.

The CAPM is usually expressed as follows:

$$E(R_i) = R_f + \beta_i \, [E(R_m) - R_f]$$

where β is the asset sensitivity to a change in the overall market, and is usually found via correlations on historical data, noting that

- β > 1: signifying more than average “riskiness” for the asset's contribution to overall portfolio risk;

- β < 1: signifying a lower than average risk contribution to the portfolio risk.

- [E(Rm) – Rf] is the market premium, the expected excess return of the market portfolio's expected return over the risk-free rate.

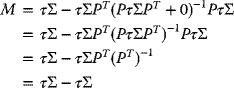

The above conclusions may be established as follows:

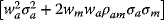

- The initial risks of the portfolio

When an additional risky asset a is added to the market portfolio m, the incremental impact on risk and expected return follows from the formulas for a two-asset portfolio. The results may then be used to derive the asset-appropriate discount rate as follows:

- The market portfolio's risk, with asset a added, = $w_m^2 \sigma_m^2 + [w_a^2 \sigma_a^2 + 2 w_m w_a \rho_{am} \sigma_a \sigma_m]$.

Hence, the risk added to the portfolio = $[w_a^2 \sigma_a^2 + 2 w_m w_a \rho_{am} \sigma_a \sigma_m]$, but the weight of the asset will be relatively low, namely, $w_a \ll 1$, hence $w_a^2 \approx 0$, so that the additional risk ≈ 2wmwaρamσaσm.

- Since the market portfolio's expected return, with asset a added, = $w_m E(R_m) + [w_a E(R_a)]$, the additional expected return = [waE(Ra)].

- On the other hand, if an asset a is accurately priced, the improvement in its risk-to-expected return ratio obtained by adding it to the market portfolio m will at least match the gains of spending that money on an increased stake in the market portfolio. The assumption here is that the investor will buy the asset with funds borrowed at the risk-free rate Rf. This is reasonable if $E(R_a) > R_f$.

Hence,

$$\frac{w_a \, [E(R_a) - R_f]}{2 w_m w_a \rho_{am} \sigma_a \sigma_m} = \frac{w_a \, [E(R_m) - R_f]}{2 w_m w_a \sigma_m \sigma_m}$$

that is,

$$E(R_a) = R_f + [E(R_m) - R_f] \, \frac{\rho_{am} \sigma_a \sigma_m}{\sigma_m \sigma_m}$$

or

$$E(R_a) = R_f + [E(R_m) - R_f] \, \frac{\sigma_{am}}{\sigma_{mm}}$$

where [σam]/[σmm] is the “beta” of the asset's return: the covariance between the asset's return and the market's return divided by the variance of the market return, which is the sensitivity of the asset price to movement in the market value of the portfolio.
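The final relationship is easily evaluated numerically. In the sketch below, the volatilities, the correlation with the market, the risk-free rate, and the expected market return are all illustrative assumptions; beta is computed as the covariance with the market divided by the market variance, as in the closing sentence of the derivation.

```r
# Sketch: beta and the CAPM required return from assumed inputs.
sigma_a <- 0.30     # volatility of asset a (assumed)
sigma_m <- 0.15     # volatility of the market portfolio (assumed)
rho_am  <- 0.6      # correlation of a with the market (assumed)
Rf      <- 0.02     # risk-free rate (assumed)
E_Rm    <- 0.08     # expected market return (assumed)

sigma_am <- rho_am * sigma_a * sigma_m   # covariance of a with the market
sigma_mm <- sigma_m^2                    # variance of the market
beta     <- sigma_am / sigma_mm

E_Ra <- Rf + beta * (E_Rm - Rf)          # CAPM required expected return

# The reward-to-added-risk ratios of the asset and of the market coincide
# (the common factors w_m and w_a cancel on both sides)
ratio_asset  <- (E_Ra - Rf) / (2 * rho_am * sigma_a * sigma_m)
ratio_market <- (E_Rm - Rf) / (2 * sigma_m * sigma_m)

c(beta = beta, E_Ra = E_Ra)
```

With these inputs beta exceeds one, so the required return on the asset lies above the expected market return.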

3.2.2.1 The Security Characteristic Line (SCL)

Equation (3.11) may be estimated statistically using the following regression equation, known as the SCL regression equation:

$$\text{SCL}: \quad R_{i,t} - R_f = \alpha_i + \beta_i \, (R_{M,t} - R_f) + \varepsilon_{i,t}$$

in which

- αi is the asset's alpha coefficient,

- βi is the asset's beta coefficient, and

- SCL is the Security Characteristic Line.
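A regression of this kind may be sketched with the base R function `lm`. The data below are simulated purely for illustration: the "true" alpha and beta, the return distributions, and the sample size are assumptions, and the series are already expressed as excess returns over the risk-free rate.

```r
# Sketch: estimating alpha and beta by an SCL-type regression
# on simulated excess returns (all parameters are assumptions).
set.seed(1)
n          <- 500
alpha_true <- 0.002
beta_true  <- 1.3

mkt_excess   <- rnorm(n, mean = 0.006, sd = 0.04)  # market excess returns
asset_excess <- alpha_true + beta_true * mkt_excess +
                rnorm(n, mean = 0, sd = 0.02)      # asset excess returns with noise

fit <- lm(asset_excess ~ mkt_excess)

alpha_hat <- unname(coef(fit)["(Intercept)"])      # estimated alpha
beta_hat  <- unname(coef(fit)["mkt_excess"])       # estimated beta
c(alpha_hat = alpha_hat, beta_hat = beta_hat)
```

The intercept estimates the asset's alpha and the slope estimates its beta; with real data, the two excess-return series would replace the simulated ones.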

If the Expected Return E(Ri) is calculated using CAPM, then the future cash flows of the asset may be discounted to their present value using this rate to establish the correct price for the asset.

Remarks:

- A riskier stock will have a higher beta and will be discounted at a higher rate.

- Less-sensitive stocks may have lower betas and may be discounted at a lower rate.

- Theoretically, an asset is correctly priced when its observed price is the same as its value calculated using the CAPM-derived discount rate.

- If the observed price is higher than the valuation, then the asset is overvalued; if the observed price is lower than the valuation, then the asset is undervalued.

- Despite its theoretical importance, critics of the Modern Portfolio Theory (MPT) question whether it is an ideal investment tool, because its model of financial markets does not match the real world in many ways.

- The risk, return, and correlation measures used by MPT are based on expected values, namely, they are mathematical statements about the future; the expected value of returns is

- explicit in the foregoing equations, and

- implicit in the definitions of variance and covariance.

In practice, financial analysts must rely on predictions based on historical records of asset return and volatility for these values in the model equations.

Often such expected values fail to take account of prevailing circumstances that did not exist when the historical data were generated.

- Probabilistic Characteristics: It should not escape one's attention that when using the Modern Portfolio Theory (MPT), financial analysts may need to estimate some key parameters from past market data because MPT attempts to model risk in terms of the likelihood of losses, without indicating why those losses might occur. Thus, the risk measurements used are probabilistic in nature, not structural. This is a major difference as compared to many alternative approaches to risk management.

- Estimation of Errors: In an MPT or mean-variance optimization analysis, accurate estimation of the Variance–Covariance matrix is critical. In this context, numerical forecasting by Monte Carlo simulation with the Gaussian copula and well-specified marginal distributions may be effective. The modeling process may be expected to adjust for empirical characteristics in stock returns such as autoregression, asymmetric volatility, skewness, and kurtosis. Neglecting to account for these factors may result in severe estimation errors in the correlations and in the Variance–Covariance matrix, with high negative biases.

- MPT: This has one important conceptual difference from the Probabilistic Risk Assessment (PRA) used in the risk assessment of (say) nuclear power plants. In the terminology of econometrics, a PRA is a structural model, in which the components of a system and their relationships are modeled using Monte Carlo simulations: if valve X fails, it causes a loss of back pressure on pump Y, which, in turn, causes a drop in flow to vessel Z, etc. However, in the Black–Scholes model and MPT, there is no attempt to explain an underlying structure to price changes. Various outcomes are simply given as probabilities. And, unlike the PRA, if there is no history of a particular system-level event such as a liquidity crisis, there is no way to compute its odds!

(If nuclear safety engineers computed risk this way, they would not be able to compute the odds of a nuclear meltdown at a particular plant until several similar events had occurred in the same reactor design and had produced realistic nuclear safety data!)

- Mathematical risk measurements are useful only to the extent that they reflect investors' true concerns: there is no point minimizing a variable that nobody cares about in practice. Modern Portfolio Theory (MPT) uses the mathematical concept of variance to quantify risk, and this might be acceptable if the assumptions of MPT, such as normally distributed returns, hold. For general return distributions, other risk measures might better reflect investors' true preferences.

3.2.3 Some Typical Simple Illustrative Numerical Examples of the Markowitz MPT Using R

To demonstrate the Markowitz MPT model, two numerical examples are selected:

- An illustrative example that may be treated with simple arithmetical calculations.

- An example selected from the R Package MarkowitzR, available from CRAN. For this example, the associated R program is run, and its numerical and graphical results are presented.

3.2.3.1 Markowitz MPT Using R: A Simple Example of a Portfolio Consisting of Two Risky Assets

This example introduces modern portfolio theory in a simple setting of only a single risk-free asset and two risky assets.

A Portfolio of Two Risky Assets

Consider an investment problem in which there are two nondividend-paying stocks: Stock A and Stock B, and over the next month let

- RA be the monthly simple return on the Stock A, and

- RB be the monthly simple return on the Stock B.

Since these returns will not be realized until the end of each month, their returns may be treated as random variables.

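Assume that the returns RA and RB are jointly normally distributed, and that the following information is available regarding the means, variances, and covariances of the probability distribution of the two returns.

The means µ, standard deviations σ, covariance σAB, and correlation coefficient ρAB are defined as follows:

$$\mu_A = E[R_A], \quad \sigma_A^2 = \mathrm{var}(R_A), \quad \mu_B = E[R_B], \quad \sigma_B^2 = \mathrm{var}(R_B),$$

$$\sigma_{AB} = \mathrm{cov}(R_A, R_B), \quad \rho_{AB} = \frac{\sigma_{AB}}{\sigma_A \sigma_B}$$

where E is the expectation, var is the variance, and cov is the covariance.

Generally, these values are estimated from historical return data for the two stocks. On the other hand, they may also be subjective estimates by an analyst!

For this exercise, one may assume that these values are taken as given (they are the values used in the R computations later in this section):

$$\mu_A = 0.2, \quad \sigma_A^2 = 0.05, \quad \mu_B = 0.04, \quad \sigma_B^2 = 0.01,$$

$$\sigma_A = 0.2236, \quad \sigma_B = 0.1000, \quad \sigma_{AB} = -0.0038, \quad \rho_{AB} = -0.17$$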

Remarks:

- For the monthly returns on each of the two stocks, the expected returns µA and µB are considered to be best estimated expectations. However, since the investment returns are random variables, one must recognize that the actual realized returns may be different from these expectations. The variances σA² and σB² provide some estimated measures of the uncertainty associated with these monthly returns.

- One may also consider the variances as measuring the risk associated with the investments:

- Assets with high return variability (or volatility) are often considered—understandably—to be risky.

- Assets with low return volatility are often thought to be safe.

- The covariance σAB may provide some probabilistic information about the direction of any linear dependence between returns:

- If σAB > 0, the two returns tend to move in the same direction.

- If σAB < 0, the two returns tend to move in opposite directions.

- If σAB = 0, the two returns tend to move independently.

- The strength of the linear dependence between the returns is provided by the correlation coefficient ρAB:

- If | ρAB | → 1, the returns track each other very closely (in opposite directions when ρAB is negative).

- If | ρAB | → 0, the returns show very little relationship.
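The link between the covariance and the correlation coefficient can be verified directly in R. The sketch below uses the variances and covariance that appear in the R computations later in this section; the variable `Sigma` is a name introduced here for the covariance matrix of the two returns.

```r
# Sketch: correlation coefficient implied by the covariance and variances.
sigmasqA <- 0.05
sigmasqB <- 0.01
sigAB    <- -0.0038

sigA  <- sqrt(sigmasqA)            # standard deviation of RA
sigB  <- sqrt(sigmasqB)            # standard deviation of RB
rhoAB <- sigAB / (sigA * sigB)     # correlation coefficient
round(rhoAB, 2)

# The same result from the covariance matrix, using stats::cov2cor
Sigma <- matrix(c(sigmasqA, sigAB,
                  sigAB,    sigmasqB), nrow = 2, byrow = TRUE)
cov2cor(Sigma)
```

The negative sign indicates that the two returns tend to move in opposite directions, and the small magnitude indicates that the linear relationship is weak.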

Assets Allocation

For a given amount of initial liquid asset, totaling T0, the task at hand is that one will fully invest all this amount in the two stocks A and B. The investment problem is the asset allocation decision: how much to allocate in asset A and how much in asset B?

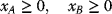

Let xA be the fraction of T0 to be invested in stock A and xB be the fraction of T0 to be invested in stock B.

The values of xA and xB may be positive, zero, or negative:

- Positive values imply long positions (purchases) in the asset.

- Negative values imply short positions (short sales) in the asset.

- Zero values imply no positions in the asset.

As all the wealth is invested in these two assets, it follows that

$$x_A + x_B = 1$$

Thus, if asset A is “shorted,” then the proceeds of the short sale will be used to buy more of asset B and vice versa. (To “short” an asset, one borrows the asset, usually from a broker, and then sells it; the proceeds from the short sale are usually kept on account with a broker, and often there may be some restrictions that prevent the use of these funds for the purchase of other assets. The short position is closed out when the asset is repurchased and then returned to the original owner. Should the asset drop in value, a gain is made on the short sale; if the asset increases in value, a loss is incurred.) Hence, the investment problem is to ascertain the values of xA and xB in such a way that the expected return of the investment is maximized for an acceptable level of risk.

The present investment in the two stocks forms a portfolio, and the shares of A and B are the portfolio shares or weights.

In this example, the return on the portfolio, Rp, over the following month is a random variable, given by

$$R_p = x_A R_A + x_B R_B \tag{3.23}$$

that is, a weighted average or linear combination of the random variables RA and RB. Moreover, as RA and RB are assumed to be jointly normally distributed, it follows from (3.23) that Rp is also normally distributed. Using the properties of linear combinations of random variables, (3.23) may be used to determine the mean and variance of the distribution of Rp, that is, the return of the entire portfolio.

Probable Expected Return and Variance of the Portfolio

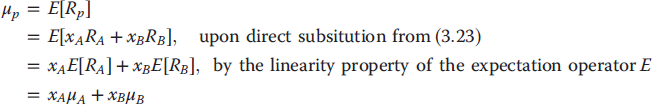

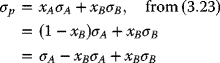

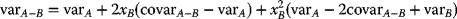

Again, according to (3.23), the mean, variance, and standard deviation of the distribution of the return on the portfolio may be readily derived as follows:

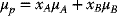

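- The mean of the portfolio µp is given by

$$\mu_p = E[R_p] = E[x_A R_A + x_B R_B] = x_A E[R_A] + x_B E[R_B]$$

namely,

$$\mu_p = x_A \mu_A + x_B \mu_B \tag{3.24}$$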

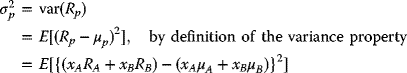

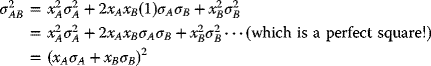

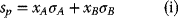

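- The variance of the portfolio σp² is given by

$$\sigma_p^2 = \mathrm{var}(R_p) = E[(R_p - \mu_p)^2]$$

upon direct substitutions, respectively, from (3.23) and (3.24):

$$\sigma_p^2 = E[(x_A R_A + x_B R_B - x_A \mu_A - x_B \mu_B)^2]$$

upon rearranging and recollecting terms

$$\sigma_p^2 = E[(x_A (R_A - \mu_A) + x_B (R_B - \mu_B))^2]$$

upon squaring the terms

$$\sigma_p^2 = E[x_A^2 (R_A - \mu_A)^2 + x_B^2 (R_B - \mu_B)^2 + 2 x_A x_B (R_A - \mu_A)(R_B - \mu_B)]$$

upon taking the expectation of each term and factoring out the weights

$$\sigma_p^2 = x_A^2 E[(R_A - \mu_A)^2] + x_B^2 E[(R_B - \mu_B)^2] + 2 x_A x_B E[(R_A - \mu_A)(R_B - \mu_B)]$$

namely,

$$\sigma_p^2 = x_A^2 \sigma_A^2 + x_B^2 \sigma_B^2 + 2 x_A x_B \sigma_{AB} \tag{3.25}$$

Finally,

$$\sigma_p = \sqrt{x_A^2 \sigma_A^2 + x_B^2 \sigma_B^2 + 2 x_A x_B \sigma_{AB}}$$

- These relationships imply that

$$R_p \sim N(\mu_p, \sigma_p^2)$$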

Remarks:

- Equation (3.25) shows that the variance of the portfolio is given as a weighted average of the variances of the individual component assets plus twice the product of the portfolio weights times the covariance between the component assets.

- Thus, if the portfolio weights are both positive, then a positive covariance will likely increase the portfolio variance since both returns tend to vary in the same direction. Likewise, a negative covariance may reduce the portfolio variance.

- Hence, assets with negatively correlated returns may be beneficial when building up a portfolio since the concomitant risk, as indicated by portfolio standard deviation, is reduced.

- Forming portfolios with positively correlated assets may reduce risk as long as the correlation is small.
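The expressions for the portfolio mean and variance can be checked by simulation. The following sketch draws jointly normal returns with the means, variances, and covariance used in the R computations of this section, forms the portfolio return for one choice of weights, and compares the sample moments with the formulas. The sample size, the seed, and the weights xA = xB = 0.5 are arbitrary assumptions.

```r
# Sketch: Monte Carlo check of the portfolio mean and variance formulas.
set.seed(42)
muA <- 0.2;         muB  <- 0.04
sigA <- sqrt(0.05); sigB <- sqrt(0.01)
sigAB <- -0.0038
rhoAB <- sigAB / (sigA * sigB)
xA <- 0.5; xB <- 1 - xA          # portfolio weights (assumed)

# Correlated normal returns built from two independent standard normals
n  <- 100000
zA <- rnorm(n)
zB <- rnorm(n)
RA <- muA + sigA * zA
RB <- muB + sigB * (rhoAB * zA + sqrt(1 - rhoAB^2) * zB)
Rp <- xA * RA + xB * RB          # portfolio return

# Formulas: weighted mean, and squared-weight variances plus covariance term
mu_p_formula  <- xA * muA + xB * muB
var_p_formula <- xA^2 * sigA^2 + xB^2 * sigB^2 + 2 * xA * xB * sigAB

rbind(mean     = c(simulated = mean(Rp), formula = mu_p_formula),
      variance = c(simulated = var(Rp),  formula = var_p_formula))
```

The simulated and theoretical values should agree up to sampling error, which shrinks as n grows.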

Remark:

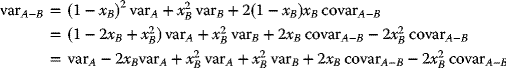

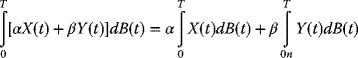

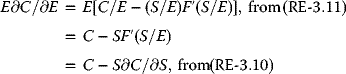

The following R-code* segment may be used for these computations:

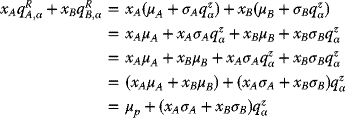

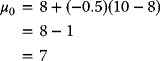

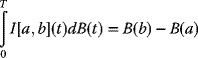

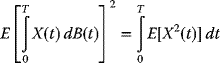

- mu <- A*muA + B*muB

- var <- A*A*sigA*sigA + B*B*sigB*sigB + 2*A*B*sigAB

*A comprehensive presentation of the R computer code is provided in Chapter 4.

Here, one may have recourse to using the R code to facilitate repetitive computations, in order to investigate results for other choices of xA, and hence xB.

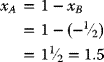

Thus, for

the following R code segment would undertake the computation. The results follow, together with some graphic presentations of the results.

In the R domain:

    > muA <- 0.2
    > sigmasqA <- 0.05
    > muB <- 0.04
    > sigmasqB <- 0.01
    > sigA <- 0.2236
    > sigB <- 0.1000
    > sigAB <- -0.0038
    > rhoAB <- -0.17
    >
    > A <- c(1, 0.75, 0.50, 0.25, 0, 1.5, -0.5)
    > B <- c(0, 0.25, 0.50, 0.75, 1, -0.5, 1.5)
    > mu <- A*muA + B*muB
    > var <- A*A*sigA*sigA + B*B*sigB*sigB + 2*A*B*sigAB
    >
    > mu # Outputting:
    [1] 0.20 0.16 0.12 0.08 0.04 0.28 -0.04
    >
    > var # Outputting:
    [1] 0.04999696 0.02732329 0.01309924 0.00732481
    [5] 0.01000000 0.12069316 0.04069924
    >
    > plot(A, mu) # Outputting: Figure 3.2
    > plot(A, var) # Outputting: Figure 3.3

Figure 3.3 var<- A*A*sigA*sigA + B*B*sigB*sigB + 2*A*B*sigAB.

Representing:

Representing:

Remarks:

- The variance of the portfolio is a weighted average of the variance of the individual assets plus twice the product of the portfolio weights times the covariance between the assets.

- If the portfolio weights are both positive, then a positive covariance will tend to increase the portfolio variance, because both returns tend to move in the same direction; likewise, a negative covariance will tend to reduce the portfolio variance.

- Hence, choosing assets with negatively correlated returns may be beneficial when forming portfolios because risk, as measured by portfolio standard deviation, is reduced.

- Note also that forming portfolios with positively correlated assets can also reduce risk as long as the correlation is not too large.

In the R domain:

    > muA <- 0.2
    > sigmasqA <- 0.05
    > muB <- 0.04
    > sigmasqB <- 0.01
    > sigA <- 0.2236
    > sigB <- 0.1000
    > sigAB <- -0.0038
    > rhoAB <- -0.17
    >
    > A <- c(1, 0.75, 0.50, 0.25, 0, 1.5, -0.5)
    > B <- c(0, 0.25, 0.50, 0.75, 1, -0.5, 1.5)
    > mu <- A*muA + B*muB
    > var <- A*A*sigA*sigA + B*B*sigB*sigB + 2*A*B*sigAB
    > sigma <- sqrt(var)
    >
    > mu
    [1] 0.20 0.16 0.12 0.08 0.04 0.28 -0.04
    >
    > sigma
    [1] 0.2236000 0.1652976 0.1144519 0.0855851
    [5] 0.1000000 0.3474092 0.2017405
    >
    > plot(A, sigma) # Outputting: Figure 3.4
    > RER <- mu/sigma
    > RER
    [1] 0.8944544 0.9679513 1.0484753 0.9347421
    [5] 0.4000000 0.8059660 -0.1982745
    >
    > plot(A, RER) # Outputting: Figure 3.5

Figure 3.4 plot(A, sigma).

Figure 3.5 plot(A, RER).

3.2.3.2 Evaluating a Portfolio

Consider an initial investment I0 in a portfolio of assets A and B, for which

- the return is to be given by (3.23) ,

- the expected return is to be given by (3.24) , and

- the variance is to be given by (3.25) .

These relationships show that

Now, for α ∈ (0, 1), the 100α% portfolio value-at-risk is

$$\mathrm{VaR}_{p,\alpha} = q_{\alpha}^{R_p} I_0,$$

where $q_{\alpha}^{R_p}$ is the α quantile of the distribution of Rp and is given by

$$q_{\alpha}^{R_p} = \mu_p + \sigma_p q_{\alpha}^{z},$$

where $q_{\alpha}^{z}$ is the α quantile of the standard normal distribution. If Rp is a continuously compounded return, then the implied simple return quantile is $\exp(q_{\alpha}^{R_p}) - 1$.

Relationship between the Portfolio VaR and the Individual Asset VaR

In general, the Portfolio VaR is not the weighted average of the Individual Asset VaRs. This may be seen in the following counterexample: consider the weighted average of the individual asset return quantiles for a two-asset portfolio (A, B), which may be expressed as

Remark:

The weighted asset quantile (3.30) is not equal to the portfolio quantile (3.29) unless ρAB = 1. Hence, weighted asset VaR, in general, is not equal to portfolio VaR because the quantile (3.30) ignores the correlation between RA and RB.
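The gap between the two quantiles can be checked numerically. The sketch below assumes normally distributed returns, the convention that VaR equals the return quantile times the initial investment, and the parameter values of this section's two-asset example; the equal weights, the level α = 0.05, and the initial investment `I0` are hypothetical illustration values.

```r
# Inputs: means, standard deviations and covariance of the two-asset example
muA <- 0.20; muB <- 0.04
sigA <- 0.2236; sigB <- 0.1000; sigAB <- -0.0038
xA <- 0.5; xB <- 0.5        # assumed portfolio weights
I0 <- 100000                # hypothetical initial investment
alpha <- 0.05               # assumed VaR level

qz   <- qnorm(alpha)        # alpha quantile of the standard normal distribution
mu_p <- xA * muA + xB * muB
sd_p <- sqrt(xA^2 * sigA^2 + xB^2 * sigB^2 + 2 * xA * xB * sigAB)

# Portfolio return quantile versus weighted average of the asset quantiles
q_port     <- mu_p + sd_p * qz
q_weighted <- xA * (muA + sigA * qz) + xB * (muB + sigB * qz)

print(c(VaR_portfolio = q_port * I0, VaR_weighted = q_weighted * I0))
```

Because the weighted quantile uses xAσA + xBσB in place of σp, it overstates the loss whenever the correlation is below 1 and both weights are positive.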

| µA | µB | σA² | σB² | σA | σB | σAB | ρAB |
|---|---|---|---|---|---|---|---|
| 0.2 | 0.04 | 0.05 | 0.01 | 0.2236 | 0.1000 | −0.0038 | −0.17 |

Remark: Note that

and

namely,

because

3.2.4 Management of Portfolios Consisting of Two Risky Assets

In the management of portfolios consisting of two risky assets, the approach may begin by constructing portfolios that are mean-variance efficient, using the following assumptions about the distribution of asset returns and the investors' behavior:

1. All returns are jointly normally distributed, with a distribution that does not change over the investment horizon. This implies that means, variances, and covariances of returns are constant over the investment horizon and completely characterize the joint distribution of returns.

2. Investors know the values of asset return means, variances, and covariances.

3. Investors are only concerned about the portfolio expected return and portfolio variance. Investors prefer portfolios with high expected return and dislike portfolios with high return variance.

With the above assumptions, it is possible to characterize the set of efficient portfolios, that is, those portfolios that have the highest expected return for a given level of risk as measured by portfolio variance. These portfolios are the investors' most likely choice.

For a numerical example of the management of portfolios consisting of two risky assets, use the data set in Table 3.2.

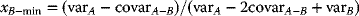

3.2.4.1 The Global Minimum-Variance Portfolio

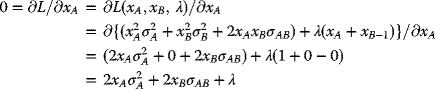

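Finding the global minimum-variance portfolio is a simple exercise in elementary calculus. The optimization may be defined as

$$\min_{x_A,\,x_B}\ \sigma_p^2 = x_A^2\sigma_A^2 + x_B^2\sigma_B^2 + 2x_Ax_B\sigma_{AB} \quad \text{subject to} \quad x_A + x_B = 1.$$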

This is a constrained optimization problem and may be solved in the following two ways:

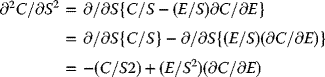

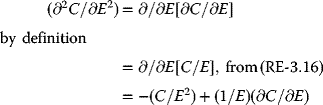

First Method: The method of substitution, namely, using the constraint relationship to eliminate one of the two variables (xA, xB), thereby transforming the constrained optimization problem (in two variables) into an unconstrained optimization problem in only one variable. Thus, inserting xB = 1 − xA (from xA + xB = 1) into the formula for $\sigma_p^2$ reduces the optimization problem to

$$\min_{x_A}\ \sigma_p^2 = x_A^2\sigma_A^2 + (1 - x_A)^2\sigma_B^2 + 2x_A(1 - x_A)\sigma_{AB} \qquad (3.36)$$

The condition for a local stationary point in the expression in (3.36) is that

The differentiation may be achieved using the chain rule:

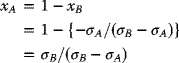

Using (3.37), one obtains the minimizing weight $x_A^{\min}$, given by

namely,

or

or

or

namely,

and

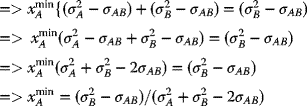

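Second Method: The Method of Auxiliary Lagrange Multipliers λ.

First, one puts the constraint xA + xB = 1 into a homogeneous form, writing

$$F_1 = x_A + x_B - 1 = 0,$$

as well as

$$F_2 = \sigma_p^2 = x_A^2\sigma_A^2 + x_B^2\sigma_B^2 + 2x_Ax_B\sigma_{AB}.$$

The Lagrangian Multiplier Function L is formed by adding to F2 the homogeneous constraint F1, multiplied by an auxiliary variable λ, the Lagrangian Multiplier, to give

$$L(x_A, x_B, \lambda) = x_A^2\sigma_A^2 + x_B^2\sigma_B^2 + 2x_Ax_B\sigma_{AB} + \lambda(x_A + x_B - 1) \qquad (3.39)$$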

Next, (3.39) is minimized, with respect to xA, xB, and λ, leading to three auxiliary conditions:

With the Lagrangian Multiplier Function L given by (3.39), one has

Combining (3.41a) and (3.41b), one obtains

namely,

namely,

namely,

namely,

namely,

or,

Finally, to obtain the required values $x_A^{\min}$ and $x_B^{\min}$, one may incorporate the third Lagrangian Multiplier condition (3.41c), namely, combining (3.42) and (3.41c):

Combining (3.43a) and (3.43b):

which is, of course, the same as (3.38a):

Finally,

which is, of course (again) (3.38b).
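Both methods can be checked numerically. The sketch below assumes that (3.38a) is the standard closed-form solution of the first-order condition, xA = (σB² − σAB)/(σA² + σB² − 2σAB), and uses the variances and covariance of the chapter's numerical example; the function name `port_var` and the search interval passed to `optimize()` are illustrative choices.

```r
# Variances and covariance of the two risky assets (chapter's example values)
sigmasqA <- 0.05
sigmasqB <- 0.01
sigAB    <- -0.0038

# Closed-form weights of the global minimum-variance portfolio
xA_min <- (sigmasqB - sigAB) / (sigmasqA + sigmasqB - 2 * sigAB)
xB_min <- 1 - xA_min

# Portfolio variance as a function of xA alone (method of substitution)
port_var <- function(xA) {
  xB <- 1 - xA
  xA^2 * sigmasqA + xB^2 * sigmasqB + 2 * xA * xB * sigAB
}

# Independent numerical check with a one-dimensional minimizer
opt <- optimize(port_var, interval = c(-1, 2), tol = 1e-10)

print(c(xA_min = xA_min, xB_min = xB_min, var_min = port_var(xA_min)))
print(c(xA_numeric = opt$minimum, var_numeric = opt$objective))
```

The closed-form and numerical minimizers should agree to within the tolerance of the search, and the resulting variance is below the variance of either asset held alone.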

Remarks:

- In the Method of Auxiliary Lagrange Multipliers, the Lagrange Multiplier, λ, is introduced at the beginning. After λ has helped to solve the problem, in a rather elegant but indirect way, it is no longer required in further analysis!

- In the mathematics of optimization, the Method of Lagrange Multipliers (named after the French mathematician Joseph Lagrange, 1811) is used as a methodology for locating the minima and the maxima of a function subject to a set of equality constraints. For instance, in Figure 3.7, consider the following optimization problem:

“To maximize f(x, y), subject to the constraint g(x, y) = 0.”

Figure 3.7 Method of Lagrange Multipliers: Finding x and y to maximize (or minimize) f(x, y), subject to a constraint (in red): g(x, y) = constant.

It is understood that both f and g have continuous first partial derivatives.

The Method of Lagrangian Multipliers

Introduce a new variable λ, called a Lagrange Multiplier and study the Lagrange Function (or the Lagrangian) defined by

where the λ term may be either added or subtracted. If f(x0, y0) is a maximum of f(x, y) for the original constrained problem, then there exists λ0 such that (x0, y0, λ0) is a stationary point for the Lagrange function (stationary points are those points where the partial derivatives of L are zero). However, not all stationary points yield a solution of the original problem. Thus, the method of Lagrange multipliers yields a necessary condition for optimality in constrained problems. Sufficient conditions for a minimum or maximum also exist (Figure 3.8).

Figure 3.8 Contour Map of Figure 3.7. The red line represents the constraint g(x, y) = c. The blue lines are contours of f(x, y). The solution is the point where the red line tangentially touches a blue contour. (Since d1 > d2, the solution is a maximization of the function f(x, y). Both ordinates and abscissae are arbitrary.)

Suggested Exercise for Computation Using R:

As an exercise, write a program in R to undertake all the computations.

3.2.4.2 Effects of Portfolio Variance on Investment Possibilities

For a portfolio of any two assets, A and B, the correlation between A and B may strongly affect the investment possibilities of the portfolio. Thus,

- If ρAB is close to 1, then the investment set approaches a linear relationship such that the return is close to a straight line connecting the portfolio with all the funds invested in asset B, namely, (xA, xB) = (0, 1), to the portfolio with all the funds placed in asset A only, that is, (xA, xB) = (1, 0).

- In a plot of µp (as the ordinate) versus σp (as the abscissa) (see Figure 1.2), as ρAB approaches 0, the set bows toward the µp-axis, and the power of diversification starts to make its presence felt! If ρAB = −1, the set will actually touch the µp-axis. In this case, the assets A and B are perfectly negatively correlated, and there exists a portfolio of A and B that has a positive expected return but zero variance! To determine the portfolio with $\sigma_p^2 = 0$ when ρAB = −1, one may use (3.44a) and (3.44b), and the condition that $\sigma_{AB} = \rho_{AB}\sigma_A\sigma_B$ (3.27)

to give

Since ρAB = −1

(3.45)

Now, from (3.44),

(3.44a)

And using (3.44b),

(3.44b)
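The zero-variance portfolio for ρAB = −1 can be verified with a few lines of R. The sketch assumes the closed form xA = σB/(σA + σB), which follows from the global minimum-variance weights when σAB = −σAσB, and reuses the means and standard deviations of the chapter's example purely for illustration (in that example the actual correlation is −0.17, not −1).

```r
# Illustration values (chapter's example); rho is set to -1 by assumption
sigA <- 0.2236; sigB <- 0.1000
muA  <- 0.20;   muB  <- 0.04
rho  <- -1
sigAB <- rho * sigA * sigB               # covariance implied by rho = -1

# Weights of the zero-variance portfolio
xA0 <- sigB / (sigA + sigB)
xB0 <- 1 - xA0

# Check: the portfolio variance vanishes while the expected return is positive
var0 <- xA0^2 * sigA^2 + xB0^2 * sigB^2 + 2 * xA0 * xB0 * sigAB
mu0  <- xA0 * muA + xB0 * muB
print(c(xA = xA0, xB = xB0, mu_p = mu0, var_p = var0))
```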

3.2.4.3 Introduction to Portfolio Optimization

For the efficient set of portfolios as described in Figure 3.6, the critical question will be

“Which portfolio should an investor choose? And why?”

Of the efficient portfolios, investors will select the one that closely supports their risk preferences. In general, risk-averse investors will prefer a portfolio that has low risk, namely, low volatility, and will prefer a portfolio close to the global minimum-variance portfolio.

On the other hand, risk-tolerant investors will ignore volatility and seek portfolios with high expected returns. These investors will choose portfolios with large amounts of asset A that may involve short-selling asset B.

3.2.5 Attractive Portfolios with Risk-Free Assets

In Section 3.1.4, an efficient set of portfolios in the absence of a risk-free asset was constructed. Now consider what happens when a risk-free asset is being introduced.

A risk-free asset is equivalent to a default-free pure discount bond that matures at the end of the assumed investment period. The risk-free rate, rf, may be represented by the nominal return on a low-risk bond. For example, if the investment period is 1 month, then the risk-free asset is a 30-day U.S. Treasury bill (T-bill), and the risk-free rate is the nominal rate of return on the T-bill. (The “default-free” assumption of U.S. national debt has been questioned owing to the possible, and probable, inability of the U.S. Congress to address the long-term debt problems of the U.S. government.)

If the portfolio holding of the risk-free asset is positive, then it is equivalent to “lending money” at the risk-free rate. If the portfolio holding is negative, then one is “borrowing” money at the risk-free rate!

3.2.5.1 An Attractive Portfolio with a Risk-Free Asset

Consider an arbitrary portfolio containing asset B; one may examine the consequences of introducing a risk-free asset (say, a T-Bill) into this portfolio.

Now the risk-free rate is constant (fixed) over the investment period, so that the risk-free asset has the following properties:

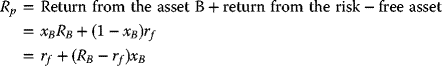

Then, within this portfolio,

- let the proportion of the investment in asset B = xB,

- then the proportion of the investment in T-Bills = (1 − xB), and hence the return of this portfolio is

Rp = (return owing to asset B) + (return owing to the risk-free T-Bills) = xB RB + (1 − xB) rf.

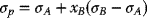

Hence, the portfolio return is given by

The term (RB − rf) is the Excess Return over the T-Bills return on asset B.

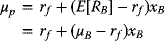

Hence, the Expected Return of the portfolio is

In (3.47), the factor (µB − rf) is the Expected Excess Return or Risk Premium on asset B.

Remarks:

- The risk premium is generally positive for risky assets, showing that investors will expect a higher return on the risky assets than the safe assets. This “risk premium” on the portfolio may be expressed in terms of the risk premium on asset B as follows, using (3.47):

(3.47)

(3.48)

(3.48)

- Thus, the more one invests in asset B, the higher the risk premium on the portfolio.

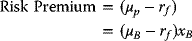

- Since the risk-free rate is constant, the portfolio variance depends only on the variability of asset B, and is given by

(3.49)

- Hence, the portfolio standard deviation is proportional to the standard deviation on asset B:

(3.50a)

which may be solved for xB:

- The Capital Allocation Line (CAL)—Combining (3.47) and (3.50b), one obtains the set of Efficient Portfolios:

(3.47)

(3.48)

(3.48)

or

Hence,

which is a straight line in the (σp,µp)-space, with slope {(µB − rf)/σB}, and ordinate intercept rf.

This is the Capital Allocation Line (CAL)—see Section 3.1.1.7.

The slope of this line is the Sharpe Ratio (SR)—see Section 3.1.1.6. It is a measure of the risk premium on the asset per unit risk—as measured by the standard deviation of the asset.

- Characteristics of a two-asset portfolio with one asset taking short positions: To appreciate the economic characteristics of a two-asset portfolio, one may consider the effects of combining two assets to form a portfolio. One can extend the analysis to cases in which short positions may be taken.

First, assume that one may either go long on the risk-free asset (lend) or take a short position in it (borrow) at the same interest rate, e1.

Let xA and xB be the proportions invested in assets A and B, respectively, and

let eA and eB be their respective returns.

Then, the Expected Return of the whole portfolio, ep, will be given by

and since

namely,

(3.52) may be written as

namely,

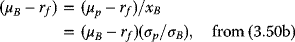

The variance of the portfolio, varp, will be a function of

- the proportions, xA and xB, invested in these assets,

- their return variances: varA and varB, and

- the covariance between their returns: covarA–B:

Upon substituting (1 − xB) for xA to derive an expression relating the variance of the portfolio to the amount invested in asset B:

namely,

and the standard deviation of return, SDR, is the square root of this variance:

For any two given assets in a portfolio, and without loss of generality, one may assume that Asset 1 has less risk and, concomitantly, smaller expected return.

Now, consider the risk-return trade-offs associated with different combinations of the two assets. Also, consider the shape of the curves for mean-variance and mean-standard deviation plots that result as more investment is added to the risky asset, namely, as x2 (for the risky asset) is increased and x1 (for the risk-free asset) is decreased.

3.2.5.1.1 Investments with One Risk-Free Asset and One Risky Asset

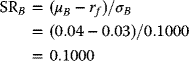

When the investment consists only of asset A and a risk-free asset (T-Bills), the Sharpe Ratio, SRA (viz., the excess return of an asset over the return of a risk-free asset divided by the variability or standard deviation of returns, see Section 3.1.1.6), is given by

$$SR_A = \frac{\mu_A - r_f}{\sigma_A} = \frac{0.2 - 0.03}{0.2236} = 0.7603.$$

On the other hand, when the investment consists only of asset B and T-Bills, the Sharpe Ratio, SRB, is

$$SR_B = \frac{\mu_B - r_f}{\sigma_B} = \frac{0.04 - 0.03}{0.1000} = 0.1000.$$

Here the data are taken from Table 3.2, and the risk-free rate, rf = 0.03, is the nominal return on the bond. Thus, for example, if the investment horizon is 1 month, then the risk-free asset is a 30-day U.S. Treasury Bill (T-Bill), and the risk-free rate rf is the nominal rate of return on the T-Bill.
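The two Sharpe Ratios, and the Capital Allocation Lines they define, can be reproduced as follows. This is a minimal sketch that uses the asset parameters and rf = 0.03 quoted in this section; the grid of portfolio standard deviations `sd_grid` is an arbitrary illustration choice.

```r
rf   <- 0.03                       # risk-free rate
muA  <- 0.20; sigA <- 0.2236       # asset A: expected return, standard deviation
muB  <- 0.04; sigB <- 0.1000       # asset B: expected return, standard deviation

# Sharpe Ratio = risk premium per unit of standard deviation
SR_A <- (muA - rf) / sigA
SR_B <- (muB - rf) / sigB
print(round(c(SR_A = SR_A, SR_B = SR_B), 4))

# Capital Allocation Lines: expected return as a linear function of sigma_p
sd_grid <- seq(0, 0.3, by = 0.1)   # assumed grid of portfolio standard deviations
cal_A <- rf + SR_A * sd_grid
cal_B <- rf + SR_B * sd_grid
print(data.frame(sd_p = sd_grid, mu_p_A = cal_A, mu_p_B = cal_B))
```

Both lines start at rf; the line through asset A is steeper, so for any given level of risk the combination of asset A with T-Bills offers the higher expected return.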

Remarks:

- The expected return-risk trade-off for these portfolios are linear.

Figure 3.9 plots the locus of mean-standard deviation combinations for values.

If xA and xB are the proportions invested in assets A and B, respectively, and µA and µB are their respective expected returns, then the expected return of the portfolio of these two assets, µp, will be given by

Since

hence,

for xB between 0 and 1 when xA = 6, µB = 10, σA = 0, σB = 15, and covarA–B = 0.

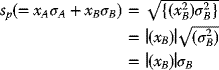

In this case, as in every case involving a riskless and a risky asset, the relationship is linear. This is easily seen. Recall that ep is always linear in xB as shown earlier. If asset A is risk-free, sp (= xA σA + xB σB) will also be linear in xB, since both

and covarA–B will equal zero. In such a case,

and covarA–B will equal zero. In such a case,

where |(xB)| denotes the absolute value of xB.

This result may be applied to cases where it is possible to take short positions. First, assume one can either go long on the risk-free asset (lend) or take a short position in it (borrow) at the same interest rate (eA). The above equations may then be applied. Figure 3.9 shows the results obtained by using leverage in this way. Thus, the point marked 1.5 is associated with xB = 1.5 and xA = −0.5. It shows that by “leveraging up” an investment in asset B by 50%, one may obtain a probability distribution of return on initial capital with an expected value of 12% and a standard deviation of 22.5%. The other points in the figure correspond to the indicated values of xB. Those above the original point involve borrowing (xA < 0) while those below it involve lending (xA > 0).

- The portfolios that are combinations of asset A and T-Bills have Expected Returns uniformly higher than the portfolios that are combinations of asset B and T-Bills. This result arises because the Sharpe Ratio of asset A, 0.7603, is higher than the Sharpe Ratio of asset B, 0.1000.

- The portfolios of asset A and T-Bills are more efficient relative to the portfolios of asset B and T-Bills.

- The Sharpe Ratio may be used as an index for ranking the return efficiencies of individual assets: Assets with higher Sharpe Ratios have superior risk-returns than assets with lower Sharpe Ratios. Thus, in financial engineering, analysts do rank assets on the basis of their Sharpe Ratios.

- For an investment portfolio consisting of a risky asset A and a risk-free asset (such as T-Bills), one may assume that the risk-free rate is constant (viz., fixed) over the investment period, during which it has the following special properties: for the risk-free asset, it is assumed that

$$E[r_f] = r_f \qquad (3.56a)$$

$$\mathrm{var}(r_f) = 0 \qquad (3.56b)$$

$$\mathrm{cov}(R_A, r_f) = 0 \qquad (3.56c)$$

Figure 3.9 Locus of mean standard deviations.

If xA denotes the share of investment in asset A and xf denotes the share of investment in the T-Bill portion of the portfolio, then xA + xf = 1.

For this investment, the portfolio return, Rp, is given by

namely,

Remarks:

- The quantity (µA – rf), in (3.55), is the Expected Excess Return, or Risk Premium on asset A (over and above a risk-free asset). For risky assets, the Expected Excess Return is typically positive. That is, investors will expect a higher return on more risky investments.

- The Expected Excess on the portfolio may be expressed in terms of Expected Excess on the risky asset A as follows:

Equation (3.59) shows that for this category of investment portfolio, the greater the proportion of risky asset A invested, the higher the Expected Excess.

- Since the risk-free return rate is constant, the variance of the whole portfolio will depend only on the variability of the risky asset A, and is given by

Hence, the portfolio standard deviation σp is proportional to the standard deviation of asset A:

from which one may solve for xA:

- The feasibility and efficiency of the portfolio are given by the following relationship: From (3.59):

and from (3.60c):

which is a straight line in the (µp, σp) space with slope {(µA − rf)/σA} and intercept rf.

The straight line (3.61) is the Capital Allocation Line (CAL) and the slope of CAL is called the Sharpe Ratio (SR) (formerly the Reward-to-Variability Ratio). It measures the ratio of the Expected Value of a zero-investment strategy to the Standard Deviation of that strategy. A special case of this approach involves a zero-investment strategy where funds are borrowed at a fixed rate of interest and invested in a risky asset!

Figure 3.10 plots the locus of mean-standard deviation combinations for values of xB between 0 and 1 when µA = 6, µB = 10, σA = 0, σB = 15 and covarA–B = 0.

As shown earlier,

- ep (= xAµA + xBµB) is always linear in xB, and

- If asset A is risk-free, sp will also be linear in xB, since both varA and covarA–B will equal zero. In such a case, sp = |xB| σB.

- If an investor shorts the risky asset (xB < 0) and invests the proceeds obtained from the short sale in the risk-free asset A, the standard equations apply. However, note that the variance will be positive, as will the standard deviation, since a negative number (xB) squared is always positive. Figure 3.11 shows the effects of negative xB values.

Figure 3.10 Effects of shorting the risky asset B.

Figure 3.11 A two-asset portfolio: 1 risk-free and 1 risky.
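The leverage and short-selling cases discussed above can be tabulated directly. The sketch uses the parameter values quoted for Figure 3.9 (µA = 6, µB = 10, σA = 0, σB = 15, all in percent); the particular grid of xB values is an assumption chosen to include one short position and two levered positions.

```r
# Asset A is risk-free (sigma_A = 0); asset B is risky. Values in percent.
muA <- 6; muB <- 10; sigB <- 15

xB <- c(-0.5, 0, 0.5, 1, 1.5, 2)   # assumed grid: short, unlevered, levered
xA <- 1 - xB                       # xA < 0 means borrowing at the risk-free rate

ep <- xA * muA + xB * muB          # expected return, linear in xB
sp <- abs(xB) * sigB               # standard deviation, |xB| * sigma_B

print(data.frame(xA = xA, xB = xB, ep = ep, sp = sp))
```

The row for xB = 1.5 reproduces the levered point described in the text, with an expected return of 12% and a standard deviation of 22.5%, while the row for xB = −0.5 shows that a short position still carries a positive standard deviation.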

3.2.5.1.2 Investments with One Risk-Free Asset and Two Risky Assets

Next consider a portfolio of two risky assets, A and B, and some T-Bills. In this case, the efficient set will still be a straight line in the (µp, σp) space with intercept rf. The slope of the efficient set, namely, the maximum Sharpe Ratio, is such that it is tangential to the efficient set constructed just using the two risky assets A and B.

Remarks:

- If one invests only in asset A and T-Bills, then this gives a Sharpe Ratio of

and the Capital Allocation Line (CAL) will intersect the efficiency parabola (say, at point A). Thus, this is certainly not the efficient set of portfolios.

- On the other hand, if one invests only in asset B and T-Bills, then this gives a Sharpe Ratio of

and the Capital Allocation Line (CAL) will intersect the efficiency parabola (say, at point B). Again, this is certainly not the efficient set of portfolios.

- Indeed, one could do better if one invests some combination of assets A and B, together with some T-Bills. And clearly, the most efficient portfolio would be one such that the CAL is tangential to the parabola. This Tangency Portfolio will consist of assets A and B such that the CAL is just tangential to the parabola.

- And so the set of efficient portfolios will consist of combinations of the Tangency Portfolio of assets A and B, together with some risk-free assets, such as T-Bills.

Remarks:

- If, during the process of allocation of assets, one takes short positions, then the foregoing result can be extended to such cases as well.

- For example, if in one investment portfolio, one goes long on the risk-free asset (viz., “lend”), or chooses a short position instead (viz., “borrow”) at the same rate of interest, e1, then the above analysis may be applied.

- The results obtained from applying this leverage method are illustrated in Figure 3.10. Thus, the point labeled 1.5 is associated with xB = 1.5 and xA = −0.5.

This shows that by leveraging up an investment in asset B by 50% (from xB = 1.0 to xB = 1.5), the investor may obtain a probability distribution of return on initial investment capital with

- an expected return value of 12% (as indicated on the ordinate, the “Expected return” axis), and

- a predicted standard deviation of 22.5% (as read on the abscissa, the “Standard Deviation” axis).

- In this figure, the other points correspond to the indicated values of xB = 0, 0.5, 1.0, and 2.0. Those above the unlevered point (xB = 1.0) imply borrowing, namely, xA < 0 and xB > 1, and those points below it imply lending.

- Should an investor first short the risky asset, namely, xB < 0, and then invest the short sale proceeds in the risk-free asset A, the analysis presented herein will still be applicable. However, in such cases, the variance and the standard deviation will be positive, since a negative number xB squared is positive. The effects of negative xB values are shown in Figure 3.11.

- In the world of business, it is a common practice that when moneys are borrowed, they usually come at a higher rate (say, 8%) than the rate at which they are being lent (say, 6%). This is illustrated in Figure 3.14.

This plot shows the loci of the µAB–σAB combinations plotted as two lines, where

and

Figure 3.14 Effects between rate differences for “borrowed” and “lent” funds.

The first line is associated with the lower lending rate, and the second line is associated with the higher borrowing rate. Again, one may assume that the risky asset offers an Expected Return of 10% and a Risk of 15%. This efficient investment system is shown in Figure 3.14 in which the efficient frontier is represented by solid lines, and the options that are available if the investor could lend at 8% are shown in the broken line.

Moreover, the rates charged for borrowing may increase with the loan amounts, so the locus of the σAB–µAB combinations may increase at a decreasing rate as the risk, σAB, increases beyond the amount for a full unlevered portfolio containing the risky asset: xB = 1. For such conditions, one may expect decreasing returns for taking risks!
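The kink produced by different lending and borrowing rates can be sketched numerically. The example assumes the rates quoted above (lending at 6%, borrowing at 8%) and a risky asset with an expected return of 10% and a risk of 15%; the variable names and the grid of xB values are hypothetical.

```r
lend_rate   <- 6     # rate earned on funds lent, in percent
borrow_rate <- 8     # rate paid on funds borrowed, in percent
muB  <- 10           # expected return of the risky asset, in percent
sigB <- 15           # risk (standard deviation) of the risky asset, in percent

xB   <- seq(0, 2, by = 0.5)                      # assumed grid of risky weights
rate <- ifelse(xB <= 1, lend_rate, borrow_rate)  # xB > 1 requires borrowing

ep <- (1 - xB) * rate + xB * muB   # expected portfolio return
sp <- xB * sigB                    # portfolio standard deviation

# Slope of each segment of the locus (return gained per unit of risk)
slope <- diff(ep) / diff(sp)
print(data.frame(xB = xB, ep = ep, sp = sp))
print(slope)
```

The slope falls from (10 − 6)/15 on the lending segment to (10 − 8)/15 on the borrowing segment, which is the flattening of the efficient frontier beyond xB = 1 described in the text.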

To Combine Two Perfectly Positively Correlated Risky Assets

If the two returns are perfectly positively correlated, then ρAB = 1, and (3.54) becomes

so that

Remarks:

- The notation |f(x)| denotes the absolute value of the enclosed expression f(x).

- Since neither σA nor σB is negative, when both xA and xB are nonnegative, the expression (xAσA + xBσB) in (3.55) will be nonnegative, and the absolute value of sp is understood implicitly. However, if one of the two x-values is sufficiently negative, then the absolute value of sp should be used explicitly.

- For long positions in the two assets, namely, xA, xB ≥ 0, consider the following combinations:

Since

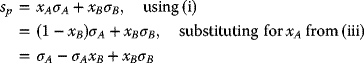

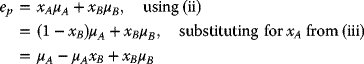

By substituting for xA in (i), using (iii), one obtains from (i):

or

(3.56)

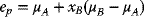

Similarly, by substituting for xA in (ii), using (iii), one obtains from (ii):

or

(3.57)

- All such relationships will lie on a straight line, which represents the two assets (see Figure 3.15).

- Extension of the values of xB to xB > 1 or xB < 0:

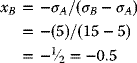

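Consider the Minimum-Variance Portfolio, namely, the combination that results in the least possible risk. To achieve a variance of 0, one may seek the value of xB for which sp = 0. Using (3.56) with sp = σA + xB(σB − σA):

$$\sigma_A + x_B(\sigma_B - \sigma_A) = 0.$$

Solving for xB,

$$x_B = -\frac{\sigma_A}{\sigma_B - \sigma_A} \qquad (3.58a)$$

and

$$x_A = 1 - x_B = \frac{\sigma_B}{\sigma_B - \sigma_A} \qquad (3.58b)$$

Applying this result to the foregoing numerical example (σA = 5, σB = 15), it may be seen that a risk-free portfolio may be obtained by using (3.58a):

$$x_B = -\frac{5}{15 - 5} = -0.5$$

and (3.58b):

$$x_A = \frac{15}{15 - 5} = 1.5$$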

- This portfolio may be achieved by taking a short position in asset B equal to half of the total investment funds and investing this amount, together with the original amount of funds, in asset A. This action may need the pledging of some other collateral to form an adequate guarantee to the lender of asset B that the short position may be covered whenever required.

- The need to create a risk-free portfolio by choosing offsetting positions in two perfectly positively correlated assets results in creating a configuration similar to the case for a risk-free asset combining with a risky asset. The following steps show how this goal may be achieved:

Let the expected return on the zero-variance portfolio be

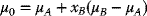

Hence, for the foregoing numerical examples, µA = 8, µB = 10, and xB = −0.5,

And the portfolio returns and risks may be obtained by considering any combinations of the risk-free asset A and either asset A or asset B. Either option provides the graph associated with a risk-free asset and a risky asset. This result is illustrated in Figure 3.16.

- In this case, as in every case involving a risk-free and a risky asset, the relationship is linear. This is easily seen. Recall that ep is always linear in xB, as shown earlier. If asset A is risk-free, sp will also be linear in xB, since both varA and covarA–B will equal zero. In such a case,

$$\mathrm{var}_p = x_B^2\,\mathrm{var}_B,$$

namely,

$$s_p = |x_B|\,\sigma_B,$$

where |xB| denotes the absolute value of xB. For cases in which it is possible to take short positions, this result is applicable: First assume one may either go long (viz., to lend) with the risk-free asset A or take a short position in it (viz., to borrow) at the same rate of interest µA. Then the foregoing formulas may be applied directly: This case has been illustrated in Figure 3.10 and Example 3.8.

- Figure 3.17 shows the locus of mean-standard deviation combinations for values of xB between 0 and 1, when µA = 6, µB = 10, σA = 0, σB = 15, and covarA–B = 0.

Figure 3.15 Two perfectly positively correlated risky assets: ρAB = 1, Point 1: Asset A, for which µA = 8, σA = 5; Point 2: Asset B, for which µB = 10, σB = 15.

Figure 3.16 Creating a risk-free portfolio: µ0 = 7, by choosing off-setting positions in two perfectly positively correlated assets: Point 1: Asset A, for which µA = 8, σA = 5; Point 2: Asset B, for which µB = 10, σB = 15.

Figure 3.17 Combining a risk-free asset and a risky asset when µA = 6, µB = 10, σA = 0, σB = 15, and covarA−B = 0.
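The risk-free combination of Figure 3.16 can be confirmed with a short computation. The sketch assumes perfectly positively correlated assets, so that sp = |xAσA + xBσB|, and uses the values quoted in the figure captions (µA = 8, σA = 5, µB = 10, σB = 15); the variable names are illustrative.

```r
# Two perfectly positively correlated risky assets (values in percent)
muA <- 8;  sigA <- 5
muB <- 10; sigB <- 15

# Offsetting positions that drive the portfolio standard deviation to zero
xB0 <- -sigA / (sigB - sigA)       # short position in asset B
xA0 <- 1 - xB0                     # levered long position in asset A

sp0 <- abs(xA0 * sigA + xB0 * sigB)   # portfolio risk when rho = 1
mu0 <- xA0 * muA + xB0 * muB          # expected return of the zero-variance portfolio

print(c(xA = xA0, xB = xB0, sp = sp0, mu0 = mu0))
```

The result is a short position of one half in asset B, a holding of 1.5 in asset A, zero risk, and an expected return of 7, in agreement with the caption of Figure 3.16.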

Again, in this special case, as in cases involving a risk-free asset and a risky asset, the relationship is linear. This observation may be shown as follows:

Since

$$e_p = \mu_A + x_B(\mu_B - \mu_A),$$

ep is linear in xB; and when asset A is risk-free, sp will also be linear in xB, since both varA and covarA–B will equal zero. Therefore,

$$\mathrm{var}_p = x_B^2\,\mathrm{var}_B$$

and

$$s_p = |x_B|\,\sigma_B,$$

where |xB| is the absolute value of xB.

Remark: It should not escape one's attention that when an investment involves short positions, additional liquid capital must be pledged to cover these short positions, as well as any possible shortfall between ending asset and liability values. Alternatively, a higher rate may have to be charged accordingly for all the short positions.

Remarks:

- The cases are coincidental at the endpoints (x1 = 1, x2 = 0) and (x1 = 0, x2 = 1).

- For all interior combinations, when the Correlation Coefficient ρAB < 1.0, risk is less than proportional to the risks of the two assets; the smaller the correlation coefficient, the greater the extent of risk reduction:

- The yellow curve, r12 = 1.0, provides no risk reduction, only risk-averaging.

- The red curve, r12 = 0.5, provides some risk reduction.

- The green curve, r12 = 0, provides some more risk reduction.

- The blue curve, r12 = −0.5, provides even more.
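The ordering of these curves can be reproduced numerically. The sketch below assumes two risky assets with standard deviations 5 and 15 (the values used elsewhere in this section) and evaluates the portfolio standard deviation on a coarse grid of weights for the four correlations named above; the helper `sd_for` is hypothetical.

```r
sigA <- 5; sigB <- 15              # assumed standard deviations, in percent
xB   <- seq(0, 1, by = 0.25)       # weight of the second asset
rhos <- c(1, 0.5, 0, -0.5)         # correlations of the four curves

# Portfolio standard deviation over the weight grid for one correlation
sd_for <- function(rho) {
  xA <- 1 - xB
  sqrt(xA^2 * sigA^2 + xB^2 * sigB^2 + 2 * xA * xB * rho * sigA * sigB)
}

tab <- sapply(rhos, sd_for)        # one column per correlation
colnames(tab) <- paste0("rho=", rhos)
print(cbind(xB = xB, round(tab, 3)))
```

The first and last rows coincide across all columns (the endpoints), while in every interior row the standard deviation falls as the correlation decreases.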

Remarks:

- |f(xA, xB)| represents the absolute value of the expression enclosed.

- If both xA and xB are nonnegative, namely, zero or positive, then the expression (xAσA + xBσB) will be nonnegative, since neither σA nor σB is negative.

- If either xA or xB is sufficiently negative, the expression (xAσA + xBσB) may become negative, and the absolute value should apply.

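- Risks: For combinations of long positions in these two assets, namely,

$$x_A \ge 0, \qquad x_B \ge 0, \qquad x_A + x_B = 1,$$

and in any such combination: σp = xAσA + xBσB, from (3.23),

namely,

$$\sigma_p = \sigma_A + x_B(\sigma_B - \sigma_A) \qquad (3.56)$$

- Similarly, for returns, for such combinations:

$$e_p = x_A\mu_A + x_B\mu_B,$$

namely,

$$e_p = \mu_A + x_B(\mu_B - \mu_A) \qquad (3.57)$$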

- In Figure 3.20, the σ–µ plot represents the following system:

Because both the risk and the return are linear in xB, such portfolios lie on a straight line connecting the points representing the two assets, namely, point 1: (σA = 5, µA = 8), and point 2: (σB = 15, µB = 10).

Figure 3.20 A portfolio of two perfectly positively correlated risky assets.
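The straight-line behavior for two perfectly positively correlated assets can be sketched in Python as follows. The sketch assumes the asset values quoted for Figure 3.20, weights that sum to one, and the risk expression |xAσA + xBσB| discussed above; the function names are hypothetical. The zero-risk weight is obtained by setting xAσA + xBσB = 0.

```python
def perfectly_correlated_portfolio(x_b, mu_a, sigma_a, mu_b, sigma_b):
    """Return (e_p, sigma_p) for weights (1 - x_b, x_b) when the correlation is +1."""
    x_a = 1.0 - x_b
    e_p = x_a * mu_a + x_b * mu_b
    sigma_p = abs(x_a * sigma_a + x_b * sigma_b)  # absolute value matters for short positions
    return e_p, sigma_p


def zero_risk_weight_b(sigma_a, sigma_b):
    """Weight x_b that solves (1 - x_b) * sigma_a + x_b * sigma_b = 0."""
    return -sigma_a / (sigma_b - sigma_a)


# Point 1: asset A; point 2: asset B (values quoted for Figure 3.20).
MU_A, SIGMA_A = 8.0, 5.0
MU_B, SIGMA_B = 10.0, 15.0

x_b_zero = zero_risk_weight_b(SIGMA_A, SIGMA_B)
e_zero, s_zero = perfectly_correlated_portfolio(x_b_zero, MU_A, SIGMA_A, MU_B, SIGMA_B)
print(f"zero-risk weights: x_A = {1.0 - x_b_zero}, x_B = {x_b_zero}")
print(f"zero-risk portfolio: e_p = {e_zero}, sigma_p = {s_zero}")
```

With these inputs the offsetting position is long 1.5 in asset A and short 0.5 in asset B, and the resulting risk-free return is 7, which agrees with the value µ0 = 7 quoted in the caption of Figure 3.16.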

Remarks:

- In Figure 3.21, the minimum-variance portfolio (in white) is a familiar and distinctive diagram (see Figures 3.9–3.12, 3.15, and 3.17) for portfolios that have one risk-free asset.

- Note that long positions in two perfectly negatively correlated assets are similar to the following:

- A long position in one of two perfectly positively correlated assets.

- A short position in the other asset.

- In many cases, asset correlations have values between −1 and +1.

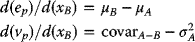

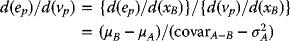

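- A general formula for the minimum-variance portfolio may be derived as follows: Start with a reduced-form equation in which the variance varp of a two-asset portfolio is expressed as a function of xB:

$$\mathrm{var}_p = (1 - x_B)^2\sigma_A^2 + x_B^2\sigma_B^2 + 2x_B(1 - x_B)\,\mathrm{covar}_{A-B} \qquad (3.54)$$

for the two-asset portfolio, assets A and B.

Differentiating both sides of (3.54) with respect to xB, namely, undertaking the ∂/∂xB operation on both sides of (3.54), the result is

$$\frac{\partial\,\mathrm{var}_p}{\partial x_B} = -2(1 - x_B)\sigma_A^2 + 2x_B\sigma_B^2 + 2(1 - 2x_B)\,\mathrm{covar}_{A-B} \qquad (3.55)$$

To obtain a stationary point, one sets (3.55) to zero and solves for the value of xB that provides the minimum-variance portfolio, namely, xB-min. The result is

$$x_B\left(\sigma_A^2 + \sigma_B^2 - 2\,\mathrm{covar}_{A-B}\right) = \sigma_A^2 - \mathrm{covar}_{A-B},$$

from which

$$x_{B\text{-min}} = \frac{\sigma_A^2 - \mathrm{covar}_{A-B}}{\sigma_A^2 + \sigma_B^2 - 2\,\mathrm{covar}_{A-B}} \qquad (3.56)$$

This minimum-variance portfolio may have a lower risk than either of the two component assets, and may also have a higher return!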
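As a numerical illustration, the following Python sketch computes the minimum-variance weight from the standard two-asset closed form xB-min = (σA² − covar) / (σA² + σB² − 2 covar), with covar = ρAB σA σB. The inputs (σA = 5, σB = 15, ρAB = 0) are illustrative assumptions, and the function names are hypothetical.

```python
import math


def portfolio_variance(x_b, sigma_a, sigma_b, rho_ab):
    """Variance of the two-asset portfolio with weights (1 - x_b, x_b)."""
    x_a = 1.0 - x_b
    covar_ab = rho_ab * sigma_a * sigma_b
    return (x_a * sigma_a) ** 2 + (x_b * sigma_b) ** 2 + 2.0 * x_a * x_b * covar_ab


def min_variance_weight_b(sigma_a, sigma_b, rho_ab):
    """Stationary point of the portfolio variance with respect to x_b."""
    covar_ab = rho_ab * sigma_a * sigma_b
    denom = sigma_a ** 2 + sigma_b ** 2 - 2.0 * covar_ab
    if denom == 0.0:
        # Happens only for equal risks with correlation +1: variance is constant in x_b.
        raise ValueError("variance does not depend on x_b; no unique minimum")
    return (sigma_a ** 2 - covar_ab) / denom


SIGMA_A, SIGMA_B, RHO_AB = 5.0, 15.0, 0.0   # illustrative inputs (assumption)

x_b_min = min_variance_weight_b(SIGMA_A, SIGMA_B, RHO_AB)
sigma_min = math.sqrt(portfolio_variance(x_b_min, SIGMA_A, SIGMA_B, RHO_AB))
print(f"x_B-min = {x_b_min:.4f}, minimum sigma_p = {sigma_min:.4f}")
```

For these uncorrelated assets, holding 10% in asset B gives a portfolio risk of about 4.74, which is below the risk of either asset held alone.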

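- Considering the point at which xB = 0, one has

$$\left.\frac{d(e_p)}{dx_B}\right|_{x_B=0} = \mu_B - \mu_A, \qquad \left.\frac{d(v_p)}{dx_B}\right|_{x_B=0} = 2\left(\mathrm{covar}_{A-B} - \sigma_A^2\right),$$

and

$$\left.\frac{d(e_p)}{d(v_p)}\right|_{x_B=0} = \frac{\mu_B - \mu_A}{2\left(\mathrm{covar}_{A-B} - \sigma_A^2\right)} \qquad (3.57)$$

Assuming that µB > µA, if the slope d(ep)/d(vp) is to be negative, then (3.57) shows that

$$\mathrm{covar}_{A-B} = \rho_{AB}\,\sigma_A\sigma_B < \sigma_A^2$$

or

$$\rho_{AB} < \frac{\sigma_A}{\sigma_B} \qquad (3.58)$$

For example, if σA = 5, σB = 15, µB > µA, and ρAB < 5/15, the minimum-variance portfolio will dominate asset A, resulting in both higher expected return and lower risk: a double bonus!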
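The dominance condition can be checked by sweeping the correlation coefficient. The Python sketch below assumes σA = 5 and σB = 15 as in the example, together with illustrative returns µA = 8 and µB = 10 (an assumption consistent with µB > µA), and uses the standard closed form for the two-asset minimum-variance weight. The function names are hypothetical.

```python
import math


def min_variance_portfolio(mu_a, mu_b, sigma_a, sigma_b, rho_ab):
    """Return (x_b, e_p, sigma_p) of the two-asset minimum-variance portfolio."""
    covar_ab = rho_ab * sigma_a * sigma_b
    x_b = (sigma_a ** 2 - covar_ab) / (sigma_a ** 2 + sigma_b ** 2 - 2.0 * covar_ab)
    x_a = 1.0 - x_b
    e_p = x_a * mu_a + x_b * mu_b
    var_p = (x_a * sigma_a) ** 2 + (x_b * sigma_b) ** 2 + 2.0 * x_a * x_b * covar_ab
    return x_b, e_p, math.sqrt(var_p)


def dominates_asset_a(mu_a, mu_b, sigma_a, sigma_b, rho_ab):
    """True when the minimum-variance portfolio has higher return and lower risk than A."""
    _, e_p, sigma_p = min_variance_portfolio(mu_a, mu_b, sigma_a, sigma_b, rho_ab)
    return e_p > mu_a and sigma_p < sigma_a


MU_A, MU_B, SIGMA_A, SIGMA_B = 8.0, 10.0, 5.0, 15.0   # returns are illustrative
THRESHOLD = SIGMA_A / SIGMA_B                          # 5/15 in the example

for rho in (-0.5, 0.0, 0.2, 0.5, 0.8):
    x_b, e_p, sigma_p = min_variance_portfolio(MU_A, MU_B, SIGMA_A, SIGMA_B, rho)
    flag = dominates_asset_a(MU_A, MU_B, SIGMA_A, SIGMA_B, rho)
    print(f"rho = {rho:5.2f}  x_B-min = {x_b:7.4f}  e_p = {e_p:6.3f}  "
          f"sigma_p = {sigma_p:6.3f}  dominates A: {flag}")
```

Below the threshold of 5/15 the minimum-variance weight in B is positive, so the portfolio earns more than asset A at lower risk; above the threshold the weight turns negative and the expected return falls below that of asset A.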

3.2.5.2 The Tangency Portfolio

Next, one may proceed to expand the foregoing analysis by considering portfolios consisting of asset A, asset B, and a risk-free asset (say, T-Bills). For this case, the efficient set will also be given by (3.51):

namely, a straight line in the (µp, σp) space with intercept rf. The slope of this efficient set is the maximum Sharpe Ratio; that is, the line is tangent to the efficient set consisting of only the two risky assets, A and B.

Tangent Portfolios are portfolios of stocks and bonds designed for long-term investors. To find the Tangent Portfolios, one may start by asking how much one would be prepared to lose in a worst-case scenario without dropping out of the market: 20%? 25%? or 33%? Once the maximum loss level is chosen, the Tangent Portfolios will try to deliver a high rate of return for that level of risk.

There is a human temptation to invert the BLASH (Buy-Low-And-Sell-High) rule! Most investors tend to take on large amounts of risk during good times (Buy High), and then sell out during bad times (Sell Low), ruining their returns in the process. The Tangent Portfolios are designed to let the investor do well enough during both good and bad times to stay in the markets throughout. This allows the investor to reap the long-term benefits from investing in stocks and bonds with a simple, low-maintenance solution.

Figure 3.22, same as Figure 3.1, illustrates the Efficient Frontier. The hyperbola is sometimes referred to as the Markowitz Bullet, and is the efficient frontier if no risk-free asset is available. With a risk-free asset, the straight line is the efficient frontier.

Figure 3.22 The tangency portfolio (same as Figure 3.1).

Efficient Frontiers

Different combinations of assets may produce different levels of return. The Efficient Frontier represents the best of these combinations, that is, those that produce the maximum expected return for a given level of risk. The efficient frontier is the basis for modern portfolio theory.

Example of Efficient Frontiers

Markowitz, in 1952 (see Figure 3.23), published a formal portfolio selection model in The Journal of Finance. He continued to develop and publish research on the subject over the next 20 years, eventually winning the 1990 Nobel Memorial Prize in Economic Sciences for his work on the efficient frontier and other contributions to modern portfolio theory. According to Markowitz, for every point on the efficient frontier, there is at least one portfolio that can be constructed from all available investments that has the expected risk and return corresponding to that point. An example is given here: Notice that the efficient frontier allows investors to understand how the expected return of a portfolio varies with the amount of risk taken.

Figure 3.23 The efficient frontier according to Markowitz (1952).

An important part of the efficient frontier is the relationship that the invested assets have with one another. Some assets' prices move in the same direction under similar circumstances, while others move in opposite directions. The more out of step that the assets in the portfolio are (i.e., the lower their covariance), the smaller the risk (standard deviation) of the portfolio that combines them. The efficient frontier is curved because there is a diminishing marginal return to risk. Each unit of risk added to a portfolio gains a smaller and smaller amount of return. When Markowitz introduced the efficient frontier, it was a seminal contribution to financial engineering science: One of its greatest contributions was its clear demonstration of the power of diversification.

Markowitz's theory relies on the claim that investors tend to choose, either purposely or inadvertently, portfolios that generate the largest possible returns with the least amount of risk. In other words, they seek out portfolios on the efficient frontier!

One should be aware that there is no single efficient frontier, because individual investors as well as portfolio managers can and do edit the number and characteristics of the assets in the investing universe to conform to their own specific needs. For example, one individual investor may require the portfolio to have a minimum dividend yield, or another client may rule out investments in ideologically (e.g., politically, ethically, ethnically, or religiously) nonpreferred industries. Thus, only the remaining assets are included in the efficient frontier calculations.

Recent Historical Performance of the Stock Market

Maximum Losses: For all the rolling 12-month periods from 2010 going back to 1926:

- The Tangent 20 portfolio had a maximum 1-year inflation-adjusted loss of 20%

- The Tangent 25 portfolio had a maximum 1-year inflation-adjusted loss of 25%

- The Tangent 33 portfolio had a maximum 1-year inflation-adjusted loss of 33%

The period 1926–2010 includes the following extraordinary events:

- The stock market crash of 1929

- The Great Depression

- World War II

- The Cold War

- Sputnik

- Assassination of a U.S. President

- Race riots

- The Vietnam War

- Inflation

- The stock market crash of 1987

- 9/11 (2001)

- The dot-com bubble and its collapse

- The panic of 2008

- and so on.

These estimates of losses form historical benchmarks of the bad times that the stock market might face!

The following table shows how various portfolios performed, after correcting for inflation, over rolling 12-month periods from 1926 to 2008, before adjusting for applicable taxes and other relevant expenses.

Remarks:

- The Tangent 20 portfolio delivered almost twice the average returns of a portfolio of Treasury Bills, with only slightly more risk of 1-year loss.

- The Tangent 33 portfolio delivered most of the returns of the U.S. stock market, with substantially less risk.

- These are hypothetical and historical notations for reference.

- There is no guarantee that these levels of risk or returns will be maintained in the future.

- Individual investment losses or gains may vary, depending on the levels of risk tolerance, investment objectives, and so on (Table 3.3).

Table 3.3 Estimated total stock market returns, 1926–2008.

| Portfolio | Average 12-month return (inflation-adjusted) | Worst 12-month return (inflation-adjusted) |
| --- | --- | --- |
| U.S. Stocks | 7.9% | −65% |
| Tangent 33 | 7.6% | −33% |
| Tangent 25 | 6.0% | −25% |
| Tangent 20 | 4.6% | −20% |
| T-Bills | 2.4% | −16% |

3.2.5.3 Computing for Tangency Portfolios

To estimate the Tangency Portfolio for a given set of two available assets A and B, the task consists of finding the values of xA and xB that maximize the Sharpe Ratio (SR) of a portfolio that is on the envelope of the parabola. This calls for solving the following constrained maximization problem:

$$\max_{x_A,\,x_B}\ \mathrm{SR}_p = \frac{\mu_p - r_f}{\sigma_p} \qquad (3.59a)$$

such that

$$\mu_p = x_A\mu_A + x_B\mu_B, \qquad \sigma_p^2 = x_A^2\sigma_A^2 + x_B^2\sigma_B^2 + 2x_Ax_B\,\mathrm{covar}_{A-B}, \qquad x_A + x_B = 1 \qquad (3.59b)$$

This problem, as stated in (3.59a) and (3.59b), may be reduced to

$$\max_{x_A}\ \frac{x_A(\mu_A - r_f) + (1 - x_A)(\mu_B - r_f)}{\left[x_A^2\sigma_A^2 + (1 - x_A)^2\sigma_B^2 + 2x_A(1 - x_A)\,\mathrm{covar}_{A-B}\right]^{1/2}}$$

for which the solutions are as follows:

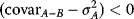

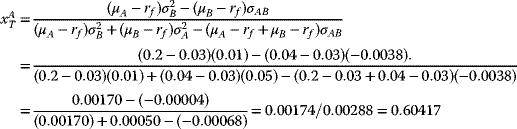

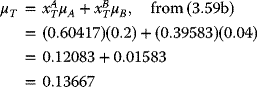

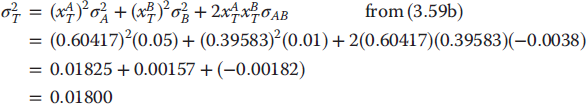

A Numerical Example for the Tangency Portfolio for the Sample Data

For the example data in Table 3.2 and using (3.60a) and (3.60b), one obtains

and

The expected return, variance, and standard deviation on this tangency portfolio are

Clearly, if repeated computations are required, simple R code may be used to undertake the numerical calculations involving (3.59) and (3.60).
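Such repeated computations may be sketched as follows. The sketch is written in Python rather than R, and it uses the standard two-asset closed form for the weights that maximize the Sharpe Ratio, which is assumed here to correspond to (3.60a) and (3.60b). The input values (µA = 8, µB = 10, σA = 5, σB = 15, ρAB = 0, rf = 6) are illustrative assumptions and are not the data of Table 3.2; the function names are hypothetical.

```python
import math


def portfolio_stats(x_a, mu_a, mu_b, sigma_a, sigma_b, rho_ab):
    """Expected return and standard deviation for weights (x_a, 1 - x_a)."""
    x_b = 1.0 - x_a
    covar_ab = rho_ab * sigma_a * sigma_b
    mu_p = x_a * mu_a + x_b * mu_b
    var_p = (x_a * sigma_a) ** 2 + (x_b * sigma_b) ** 2 + 2.0 * x_a * x_b * covar_ab
    return mu_p, math.sqrt(var_p)


def sharpe_ratio(x_a, mu_a, mu_b, sigma_a, sigma_b, rho_ab, r_f):
    """Sharpe Ratio (mu_p - r_f) / sigma_p of the portfolio with weight x_a in A."""
    mu_p, sigma_p = portfolio_stats(x_a, mu_a, mu_b, sigma_a, sigma_b, rho_ab)
    return (mu_p - r_f) / sigma_p


def tangency_weights(mu_a, mu_b, sigma_a, sigma_b, rho_ab, r_f):
    """Closed-form stationary point of the two-asset Sharpe Ratio problem."""
    covar_ab = rho_ab * sigma_a * sigma_b
    ex_a, ex_b = mu_a - r_f, mu_b - r_f          # excess returns over r_f
    numer = ex_a * sigma_b ** 2 - ex_b * covar_ab
    denom = ex_a * sigma_b ** 2 + ex_b * sigma_a ** 2 - (ex_a + ex_b) * covar_ab
    x_a = numer / denom
    return x_a, 1.0 - x_a


# Illustrative inputs (assumptions, not the data of Table 3.2).
MU_A, MU_B, SIGMA_A, SIGMA_B, RHO_AB, R_F = 8.0, 10.0, 5.0, 15.0, 0.0, 6.0

x_a_t, x_b_t = tangency_weights(MU_A, MU_B, SIGMA_A, SIGMA_B, RHO_AB, R_F)
mu_t, sigma_t = portfolio_stats(x_a_t, MU_A, MU_B, SIGMA_A, SIGMA_B, RHO_AB)
sr_t = (mu_t - R_F) / sigma_t
print(f"x_A = {x_a_t:.4f}, x_B = {x_b_t:.4f}")
print(f"mu_T = {mu_t:.4f}, sigma_T = {sigma_t:.4f}, Sharpe Ratio = {sr_t:.4f}")
```

The closed form is only a stationary point; for inputs other than these it is prudent to compare the resulting Sharpe Ratio against a grid of alternative weights before treating it as the maximum.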

3.2.6 The Mutual Fund Separation Theorem

Until this point, it has been shown that the efficient portfolios are combinations of two classes of assets:

- Tangency portfolios

- Risk-free assets, such as T-Bills

Hence, applying (3.56) and (3.57b), one may write the expected return and standard deviation of any efficient portfolio:

$$\mu_p = r_f + x_T(\mu_T - r_f), \qquad \sigma_p = x_T\sigma_T,$$

where

- xT represents the fraction of investments in the tangency portfolio,

- (1 − xT) represents the fraction of wealth invested in risk-free assets (e.g., T-Bills), and

- µT and σT represent, respectively, the expected return and standard deviation of the tangency portfolio.

This result is called the Mutual Fund Separation Theorem.

Remarks:

- The Tangency Portfolio may be considered as a mutual fund of two risky assets, in which the shares of the two risky assets are the tangency portfolio weights xA and xB determined from (3.60a) and (3.60b), and the T-Bills may be considered as a mutual fund of risk-free assets.

- The exact combination of the tangency portfolio and the T-Bills will depend on the risk preference of the investor: If the investor is highly risk-averse, then this investor may choose a portfolio with low volatility, namely, a portfolio with a very small weight in the tangency portfolio together with a very large weight in the T-Bills! Clearly, this option will produce a portfolio with an expected return close to the risk-free rate, and a variance that is nearly zero! On the other hand, if the investor can tolerate a large amount of risk, then the preferred portfolio will have a high expected return regardless of the volatility. This portfolio may consist of borrowing at the risk-free rate (known as “leveraging”) and investing the proceeds in the tangency portfolio to achieve an overall high expected return.
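The two-fund structure can be illustrated with a short Python sketch. It assumes the usual relations µp = rf + xT(µT − rf) and σp = xT σT for xT ≥ 0, and uses illustrative values rf = 6, µT = 9, and σT = 5 that are not taken from the text; the function name `efficient_portfolio` is hypothetical.

```python
def efficient_portfolio(x_t, r_f, mu_t, sigma_t):
    """Expected return and risk when a fraction x_t is held in the tangency portfolio.

    The remainder (1 - x_t) is held in T-Bills; x_t > 1 means borrowing at r_f.
    """
    if x_t < 0.0:
        raise ValueError("x_t must be nonnegative for an efficient portfolio")
    mu_p = r_f + x_t * (mu_t - r_f)
    sigma_p = x_t * sigma_t
    return mu_p, sigma_p


R_F, MU_T, SIGMA_T = 6.0, 9.0, 5.0   # illustrative inputs (assumption)

# Highly risk-averse, fully invested in the tangency fund, and leveraged investors.
for label, x_t in (("risk-averse", 0.1), ("fully invested", 1.0), ("leveraged", 1.5)):
    mu_p, sigma_p = efficient_portfolio(x_t, R_F, MU_T, SIGMA_T)
    print(f"{label:15s} x_T = {x_t:4.2f}   mu_p = {mu_p:6.2f}   sigma_p = {sigma_p:5.2f}")
```

All three investors hold the same risky fund and differ only in how much of it they hold, so every such portfolio has the same Sharpe Ratio as the tangency portfolio itself.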

3.2.7 Analyses and Interpretation of Efficient Portfolios

For a given risk level, as measured by the portfolio standard deviation, efficient portfolios have the highest expected returns. Equivalently, for those portfolios that yield expected returns above the T-Bill rate, the efficient portfolios may be characterized as those that have minimum risk, as measured by the portfolio standard deviation, for a given target expected return.

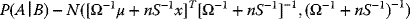

3.3 The Black–Litterman Model

With the foregoing discussion of asset allocation in terms of Markowitz's Modern Portfolio Theory, namely, the mean-variance approach to asset allocation and portfolio optimization, this is a suitable point of departure from the Markowitz model to the Black–Litterman model.

Asset allocation is the continuing decision facing an investor who must decide on the optimum allocation of the assets in the portfolio across a few (say up to 20) asset classes. For example, a globally invested mutual fund must select the proportions of the total investment for allocation to each major country or global financial region.

It is true that Modern Portfolio Theory (the mean-variance approach of Markowitz) may provide a plausible solution to this problem once the expected returns and covariances of the assets are available. However, while Modern Portfolio Theory is an important theoretical approach, its application encounters a serious problem: although the covariances of a few assets may be adequately estimated, it is difficult to obtain reasonable estimates of the expected returns!

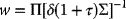

The Black–Litterman approach resolves this problem by the following:

- Not requiring the use of input estimates of expected return.

- Instead, it assumes that the initial expected returns are whatever is required so that the equilibrium asset allocation is equal to what one observes in the markets.

- The user is only required to state how one's assumptions about expected returns differ from the expected returns in the market, and to state one's degree of confidence in the alternative assumptions.

- From this, the Black–Litterman method computes the preferred Mean-Variance Efficient asset allocation.

In general, in the presence of portfolio constraints (for example, when short sales are not allowed), the way to find the optimal portfolio is to use the Black–Litterman model to generate the expected returns for the assets, and then use a mean-variance optimization procedure to solve the resultant optimization problem.
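The idea that the initial expected returns are "whatever is required" to reproduce the observed market allocation can be sketched in Python. The sketch uses the reverse-optimization relation commonly used in presentations of the Black–Litterman model, Π = δ Σ w, which is not stated in the text above and is therefore an assumption here. The risk-aversion coefficient δ, the covariance matrix, and the market weights are all hypothetical values chosen for illustration, as are the function names.

```python
def implied_equilibrium_returns(delta, cov, w_mkt):
    """Reverse optimization: returns for which w_mkt is the mean-variance optimum."""
    n = len(w_mkt)
    return [delta * sum(cov[i][j] * w_mkt[j] for j in range(n)) for i in range(n)]


def mean_variance_weights_2x2(delta, cov, pi):
    """Unconstrained mean-variance weights (1/delta) * inverse(cov) * pi, two assets only."""
    (a, b), (c, d) = cov
    det = a * d - b * c
    inv = [[d / det, -b / det], [-c / det, a / det]]
    return [sum(inv[i][j] * pi[j] for j in range(2)) / delta for i in range(2)]


DELTA = 2.5                          # hypothetical risk-aversion coefficient
COV = [[0.04, 0.006],                # hypothetical covariance matrix of returns
       [0.006, 0.01]]
W_MKT = [0.6, 0.4]                   # hypothetical observed market weights

PI = implied_equilibrium_returns(DELTA, COV, W_MKT)
W_BACK = mean_variance_weights_2x2(DELTA, COV, PI)
print("implied equilibrium returns:", [round(p, 4) for p in PI])
print("weights recovered from them:", [round(w, 4) for w in W_BACK])
```

Feeding the implied returns back into an unconstrained mean-variance optimization recovers the market weights, which is the sense in which no separate input estimate of expected returns is needed. The subsequent step of blending these returns with the investor's own views and confidence levels is not shown.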

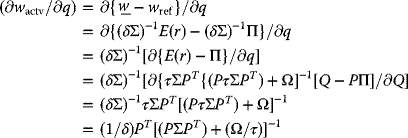

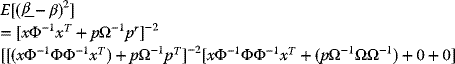

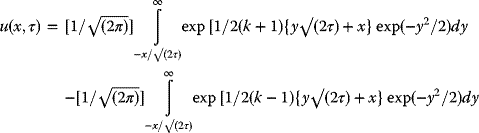

Efficient Frontier