2.5. Visual-Based Feature Extraction and Pattern Classification

This section discusses several commonly used feature extraction algorithms for visual-based biometric systems. The most important task of a feature extractor is to extract the discriminant information that is invariant to as many variations embedded in the raw data (e.g., scaling, translation, rotation) as possible. Since various biometric methods have different invariant properties (e.g., minutiae positions in fingerprint methods, texture patterns in iris approaches), it is important for the system designer to understand the natural characteristics of the biometric signals and the noise models that could possibly be embedded in the data acquisition process.

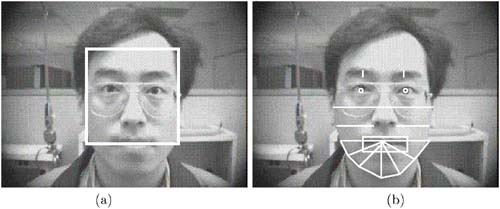

Table 2.3 shows several useful features in image-based biometric identification problems. Two main classes of feature extraction techniques apply to the recognition of digital images: geometric feature extraction and template feature extraction. Geometric features are obtained by measuring the geometric characteristics of several feature points from sensory images (e.g., length of the nose, or the distance between two minutiae). Template features are extracted by applying global-level processing to the subimage of interest. The techniques are based on template matching, where a two-dimensional array of intensity values (or more complicated features extracted from it) is involved. Figure 2.8 shows a human face as an example of the difference between these two feature extraction techniques. The nonimage-based biometric approaches (e.g., thermography [287], pen pressure and movement [278], and motion [29]) are not within the scope of this book.

Figure 2.8. Possible template used for a face recognition problem. (a) Image in the white box; (b) geometric measurements indicated in white lines.

| Template | Geometric Features | Auxiliary Information | |||||

|---|---|---|---|---|---|---|---|

| Low-Frequency Component | High-Frequency Component | Principal Component | Texture | High-Level Features | |||

| Gaussian Pyramid | Laplacian Pyramid— edge filtering | KL SVD APLEX | Gabor Filters— Wavelets | NN | |||

| Face | ○ | ○ | ○ | ○ | ○ | ○ | Color, motion, thermography |

| Palm | ○ | ○ | ○ | ||||

| Fingerprint | ○ | ○ | ○ | ○ | ○ | ||

| Iris/Retina | ○ | ○ | ○ | ○ | |||

| Signature | ○ | ○ | ○ | Pen movement, pen pressure | |||

2.5.1. Geometric Features

Geometric feature extraction techniques are widely used in many works of biometric identification. People measure geometric features either manually or automatically to verify identity. In an early work done by Kelly [178], 10 measurements are extracted manually for face recognition, including height, width of head, neck, shoulders, and hips. Kanade [173] uses an automatic approach to extract 16-dimensional vectors of facial features. Cox et al. [65] manually extract 30 facial features for a mixed-distance face recognition approach. Kaufman and Breeding [177] measure geometric features from face profile silhouettes. The ID3D Handkey system [10] automatically measures hand geometric features by an edge-detection technique. Gunn and Nixon [121] use an active contour model "snake" [174] to automatically extract the boundary of the human head. Most automatic fingerprint identification systems (AFIS) detect the location of minutiae (anomalies on a fingerprint map, such as ridge ending and ridge bifurcation) and measure their relative distances [56, 62, 198, 286, 295].

Feature point localization is a crucial step for geometric feature extraction. For example, two corners of an eye ("feature points") must be located before the width is measured. People have applied various techniques for automatic feature point localization because locating them manually is both tiresome and impractical. Automatic feature point localization techniques include the Hough transform [263], spatial filters [92, 299, 396], deformable template [126, 399], morphological filters [295, 399], Gabor wavelets [230], knowledge-based approaches [57], and neural networks [207, 323, 343]. The difficulty of feature point localization varies from application to application. For fingerprint approaches, the localizer is easier to design since all feature points are similar (most fingerprint approaches detect no more than two types of minutiae [295]). For face recognition methods, designing localizers is more complex because a much larger variation exists between various facial feature points (e.g., corners of the eye, tip of the nose, bottom of the chin).

One disadvantage to geometric feature extraction is that many feature points must be correctly localized to make a correct measurement. Compared to template-based approaches, which need only locate two to four feature points (to locate the template position) [211, 251], geometric methods often need to locate up to 100 feature points to extract meaningful geometric features.

2.5.2. Template Features

Template-based methods crop a particular subimage (the template) from the original sensory image, and extract features from the template by applying global-level processing, without a priori knowledge of the object's structural properties. Compared to geometric feature extraction algorithms, image template approaches need to locate far fewer points to obtain a correct template. For example, in the PDBNN face recognition system (cf. Chapter 8), only two points (left and right eyes) need to be located to extract a facial recognition template. Brunelli and Poggio [38] compared these two types of feature extraction methods on a face recognition problem. They found the template approach to be faster and able to generate more accurate recognition results than the geometric approach.

The template that is directly cropped out of the original image is not suitable for recognition without further processing, for the following reasons: (1) The feature dimensions of the original template are usually too high. A template of 320 x 240 pixels means a feature vector dimension of 76,800. It is impractical to use such a high-dimensional feature vector for recognition. (2) The original template can be easily corrupted by many types of variation. Table 2.3 lists five types of commonly used feature extraction methods for template-based approaches.

The most commonly used features are high-frequency components (or edge features). Although edge information is more prone to the influence of noise, it reflects more of the structural properties of an object. Many fingerprint approaches use directional edge filtering and thinning process to extract fingerprint structures [198, 295]. Rice analyzes the vein structures of human hands by extracting edge information from infrared images [310]. Wood et al. use a modified Laplacian of Gaussian (▿2G) operator to detect retina vessel structures [385].

Low-frequency components (or Gaussian pyramids) are good for removing high-frequency noise as well as for dimension reduction. Sung and Poggio use low-resolution images for face detection [343]. Rahardja et al. use the Gaussian pyramid structure to represent image data for their facial expression classification system [293]. Baldi uses low-pass filtering techniques as the first preprocessing step of his neural network fingerprint recognizer [16]. Golomb and Sejnowski [115] and Turk and Pentland [356] also use low-pass filtering and down-sampling as preprocessing steps for face recognition.

As shown in Table 2.3, high-frequency component features have been widely used for various biometric methods, including face recognition. In the face recognition system described in Lin et al. [211] (see also Chapter 8), low-frequency features were also adopted to assist in recognition.

2.5.3. Texture Features

Another useful feature for biometric identification is texture information. Wavelet methods are often used to extract texture information. For example, the FBI has adopted wavelet transform as the compression standard for fingerprint images [34]. Daugman uses Gabor wavelets to transform an iris pattern into a 256-byte "iris code" [67]. He claims the theoretical error rate could be as low as 10-6. Lades et al. also use Gabor wavelets as features in their dynamic link architecture (DLA)— an elastic matching type of neural network—for face recognition [194]. To effectively represent the vast number of facial features and reduce computational complexity, in Zhang and Guo [401], wavelet transform was used to decompose facial images so that the lowest resolution subband coefficients can be used for facial representation.

2.5.4. Subspace Projection for Feature Reduction

Raw image data usually involve very large dimensions. It is therefore necessary to reduce feature dimensions to facilitate an efficient face detector or classifier. There are several prominent subspace methods for dimensionality reduction: (1) unsupervised methods such as principal component analysis and independent component analysis; and (2) supervised methods such as linear discriminant analysis and support vector machines.

If the diversity of input patterns is sufficient to show statistical distribution properties, principal component analysis can be a simple and effective approach to feature extraction. Pentland et al. assume that the set of all possible face patterns occupies a small and parameterized subspace (i.e., the eigenface space) derived from the original high-dimensional input image space [250, 272, 356]. Therefore, they apply the PCA to obtain the eigenfaces—the principal components in the eigenspace—for face recognition.

To reduce the computational burden, facial features are first extracted by the PCA method [87, 356]. With supervised training, the features can be further processed by Fisher's linear discriminant analysis technique to acquire lower-dimensional discriminant patterns. In Er et al. [87], a paradigm was proposed whereby data information is encapsulated in determining the structure and initial parameters of the classifier prior to the actual learning phase.

One of the purposes of feature selection is to support the creation of a face-class model. In Sadeghi et al. [327], a method for face-class modeling in the eigenfaces space using a large-margin classifier, such as SVM, is presented. Two main issues were addressed: (1) the required number of eigenfaces to achieve an effective classification rate and (2) how to train the SVM for effective generalization. Moreover, different strategies for choosing the dimensions of the PCA space and their effectiveness in face-class modeling were analyzed.

It is well known that distribution of facial images, with perceivable variations in viewpoint and illumination or facial expression, is highly nonlinear and complex. Thus, it was no surprise that linear subspace methods, including PCA and LDA, were found to be unable to provide reliable solutions to cope with the complexities of facial variations [217]. The inadequacy of linear reduction can be effectively compensated for by using the nonlinear dimension reduction schemes discussed in the following subsection.

2.5.5. Neural Networks for Feature Selection

Recently, increasing attention has been placed on the use of neural networks to extract information for classification. To obtain a nonlinear model, nonlinear hidden-layers are adopted in a multi-layer network. One advantage of neural network feature extractors is their learning ability. To more effectively capture the nonlinear relationship between the compression and output layers and to facilitate the nonlinear dimension reduction effect, Feraund et al. [95] suggested that an additional hidden-layer (with reduced dimensions) be incorporated into the multi-layer networks. The weighting parameters in a neural network feature extractor can be trained so that the network can extract features that contain the most discriminant information for the subsequent classifier.

In fact, most neural network feature extractors are connected to the neural network classifiers so that both modules can be trained together. Examples of neural network feature extractors are the Baldi and Yves convolutional neural network for fingerprint recognition [16]; the Weng, Huang, and Ahuja Cresceptron for face detection [376]; Rowley's convolutional neural network for face detection [323]; and Lades's dynamic link architecture for face recognition. Lawrence et al. [195] combine a self-organizing map [184] and convolutional neural networks for face recognition. More than a 96% recognition rate has been reported on the face database from Olivetti Research Laboratory [329]. The neural network feature extraction approach was also successfully applied to the face recognition system described in Lin et al. [211] (see also Chapter 8), where two neural network components (i.e., face detector and eye localizer) are adopted to locate and normalize the facial templates.