8.6. Application Examples for Face Recognition Systems

The PDBNN face recognizer can be considered an extension of the PDBNN face detector. As mentioned in Section 7.5, PDBNN has the one-class-in-one-network (OCON) structure; that is, the PDBNN dedicates one subnet to each person to be recognized. For a K-person recognition problem, a PDBNN face recognizer therefore consists of K different subnets. As in the PDBNN detector, subnet i of the recognizer models the distribution of person i only and treats all patterns that do not belong to person i as "non-i" patterns.
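To make the OCON idea concrete, the following Python sketch scores a feature vector against one subnet per person and rejects inputs that no subnet claims. It assumes that each subnet is a Gaussian mixture with diagonal covariances whose log-likelihood serves as the discriminant, and that each person has a decision threshold; the class `Subnet`, the function `recognize`, the toy 2-D features, and all parameter values are hypothetical, and the PDBNN learning rules of Chapter 7 are not shown.

```python
import math
from dataclasses import dataclass


@dataclass
class Subnet:
    """One OCON subnet: a Gaussian mixture that models person i only (diagonal covariances assumed)."""
    name: str
    weights: list[float]           # cluster priors, summing to 1
    means: list[list[float]]       # one mean vector per cluster
    variances: list[list[float]]   # one per-dimension variance vector per cluster
    threshold: float               # decision threshold on the log-likelihood

    def log_likelihood(self, x):
        # log sum_r w_r * N(x; mu_r, diag(var_r)), evaluated with log-sum-exp for stability
        terms = []
        for w, mu, var in zip(self.weights, self.means, self.variances):
            log_n = -0.5 * sum(
                math.log(2 * math.pi * v) + (xi - m) ** 2 / v
                for xi, m, v in zip(x, mu, var)
            )
            terms.append(math.log(w) + log_n)
        top = max(terms)
        return top + math.log(sum(math.exp(t - top) for t in terms))


def recognize(subnets, x):
    """Return the best-scoring person, or None ("non-i" for every i) if the winner misses its threshold."""
    best = max(subnets, key=lambda s: s.log_likelihood(x))
    return best.name if best.log_likelihood(x) >= best.threshold else None


# Toy example with two enrolled people and 2-D features.
alice = Subnet("alice", [0.5, 0.5], [[0.0, 0.0], [1.0, 0.0]], [[0.1, 0.1], [0.1, 0.1]], threshold=-5.0)
bob = Subnet("bob", [1.0], [[5.0, 5.0]], [[0.2, 0.2]], threshold=-5.0)
print(recognize([alice, bob], [0.1, 0.0]))     # alice
print(recognize([alice, bob], [20.0, -20.0]))  # None (rejected as an intruder)
```

Because each subnet sees only its own person's parameters, adding a new person means adding one subnet rather than retraining a shared network.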

The network architecture, learning rules, and performance evaluation of multiple-subnet PDBNNs were discussed in Chapter 7. This section focuses on their application to face recognition, and the performance of the PDBNN face recognizer is evaluated through various experimental results. A hierarchical PDBNN face recognition system that exploits the discriminative power of PDBNNs is introduced in Section 8.5.7. This section also discusses several possible applications for the face recognition system.

The performance of the PDBNN face recognition system is examined from three aspects, each with a corresponding experiment. The first experiment trained and tested the PDBNN on three face databases of frontal-view face images (the type of database used by most face recognition research groups). The second experiment explored the PDBNN's superior ability to reject intruders, which makes it attractive for applications such as access control. The third experiment compared the PDBNN's performance under different variation factors.
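Intruder rejection is commonly quantified with the false acceptance rate (FAR, the fraction of intruders accepted) and the false rejection rate (FRR, the fraction of genuine users rejected) at a given decision threshold. The sketch below computes both and picks the candidate threshold that minimizes the larger of the two; the function names and the scores are made-up illustrations, not results from the experiments described here.

```python
def far_frr(genuine, intruder, threshold):
    """FAR: fraction of intruder scores accepted; FRR: fraction of genuine scores rejected."""
    far = sum(s >= threshold for s in intruder) / len(intruder)
    frr = sum(s < threshold for s in genuine) / len(genuine)
    return far, frr


def pick_threshold(genuine, intruder):
    """Try every observed score as a threshold; keep the one whose worse error rate is smallest."""
    candidates = sorted(set(genuine) | set(intruder))
    return min(candidates, key=lambda t: max(far_frr(genuine, intruder, t)))


# Illustrative confidence scores (made up, not experimental data).
genuine = [0.9, 0.8, 0.75, 0.4]
intruder = [0.1, 0.3, 0.5, 0.85]
t = pick_threshold(genuine, intruder)
print(t, far_frr(genuine, intruder, t))  # 0.75 (0.25, 0.25)
```

For access control, one would typically bias the threshold toward a lower FAR, accepting more false rejections in exchange.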

8.6.1. Network Security and Access Control

The PDBNN face recognition system was designed primarily for access control. The system diagram in Figure 8.7 fits directly into the distributed biometric access-control system of Figure 2.3. The sensory device for the PDBNN system is a video camera. The reference database can be stored either in a single central database or distributed across a network. Because of the OCON structure of PDBNNs, the face recognition network can be implemented on a smart card system: the parameters of the subnet representing a person can be encrypted and stored on that person's smart card. When approaching the access point, the user simply inserts the card into the reader and smiles at the camera. The advantage of the smart card system is that the local matcher need not store the reference data.
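A minimal sketch of the smart card check follows, assuming the card carries one person's subnet parameters (here as a JSON payload that has already been decrypted) and that the subnet is a diagonal-covariance Gaussian mixture scored by log-likelihood. The payload layout, `CARD_PAYLOAD`, `subnet_score`, and `verify` are hypothetical; encryption and feature extraction are omitted.

```python
import json
import math

# Hypothetical card payload: the enrolled user's subnet parameters, assumed already decrypted.
CARD_PAYLOAD = json.dumps({
    "user_id": "user-0042",
    "weights": [0.6, 0.4],
    "means": [[0.2, 0.1], [0.8, 0.3]],
    "variances": [[0.05, 0.05], [0.05, 0.05]],
    "threshold": -3.0,
})


def subnet_score(params, x):
    """Log-likelihood of x under the card holder's diagonal-covariance Gaussian mixture."""
    terms = []
    for w, mu, var in zip(params["weights"], params["means"], params["variances"]):
        log_n = -0.5 * sum(math.log(2 * math.pi * v) + (xi - m) ** 2 / v
                           for xi, m, v in zip(x, mu, var))
        terms.append(math.log(w) + log_n)
    top = max(terms)
    return top + math.log(sum(math.exp(t - top) for t in terms))


def verify(card_payload, live_features):
    """1:1 check against the claimed identity only; the local matcher keeps no reference data."""
    params = json.loads(card_payload)
    score = subnet_score(params, live_features)
    return score >= params["threshold"], score


accepted, _ = verify(CARD_PAYLOAD, [0.25, 0.1])
print(accepted)  # True
```

Since only the card holder's subnet is evaluated, the matcher performs a single verification rather than a search over all enrolled users.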

The face recognition access-control system can also be adapted for user authentication over a network. With advances in multimedia infrastructure, more and more PCs are equipped with video cameras, so a face image can be used to assist, if not replace, the text-based password in the identity authentication process. The same technique can also be used in applications such as video conferencing and videophones. Figure 8.12 shows several frames from a live sequence that demonstrate the recognition accuracy and processing speed of the PDBNN face recognition system.
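The "assist, if not replace" idea can be sketched as a login policy that combines a salted password hash with a face-recognition score. This is an illustration under stated assumptions: the face score is taken as given from some recognizer, and the record layout, `make_record`, `password_ok`, `authenticate`, and the threshold value are hypothetical; only the `hashlib` and `hmac` calls are standard library.

```python
import hashlib
import hmac
import os


def make_record(password, face_threshold, iterations=100_000):
    """Enrollment record: salted PBKDF2 password hash plus the user's face-score threshold."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    return {"salt": salt, "hash": digest, "iterations": iterations,
            "face_threshold": face_threshold}


def password_ok(record, password):
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(),
                                 record["salt"], record["iterations"])
    return hmac.compare_digest(digest, record["hash"])  # constant-time comparison


def authenticate(record, face_score, password=None, mode="assist"):
    """'assist': face AND password must pass; 'replace': the face score alone decides."""
    face_ok = face_score >= record["face_threshold"]
    if mode == "replace":
        return face_ok
    if mode == "assist":
        return face_ok and password is not None and password_ok(record, password)
    raise ValueError(f"unknown mode: {mode}")


record = make_record("s3cret", face_threshold=-3.0)
print(authenticate(record, face_score=-1.2, password="s3cret"))  # True
print(authenticate(record, face_score=-1.2, password="guess"))   # False
```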

Figure 8.12. A live sequence of the hierarchical face recognition system. (a) X Window interface; the image in the upper-left corner was acquired from a video camera in real time. (b) A person entering the camera range (time index: 0 sec). (c) The system detected the presence of a face; because the face in the image was too small, the system asked the person to stand closer. Text on picture: "Please step closer" (time index: 0.33 sec). (d) The person stood too close to the camera, so the system asked the person to step back. Text on picture: "Please lean back" (time index: 4.7 sec). (e) The system located the face. Text on picture: "Dominant Face/Object Found" (time index: 5 sec). (f) Facial features were extracted (time index: 6 sec). (g) Hairline features were extracted (time index: 6.33 sec). (h) The person was recognized. Text on picture: "Authorized: Shang Hung Lin" (time index: 9.1 sec). Note: Special sound effects (a doorbell sound and a human voice calling the name of the authorized person) were generated between 6.33 sec and 9.1 sec; the actual recognition took less than half a second. (i) The pictures of the three individuals most similar to the person under test (time index: 9.2 sec).
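The prompts in panels (c) through (e) suggest a simple feedback rule driven by the size of the detected face. The sketch below is a hypothetical version of that rule: it compares the face height with the frame height, and the ratio limits `min_ratio` and `max_ratio` are assumed values, not the ones used by the system in the figure.

```python
def distance_prompt(face_height_px, frame_height_px, min_ratio=0.2, max_ratio=0.6):
    """Map the detected face size to the user prompt; the ratio limits are hypothetical."""
    if face_height_px is None:
        return None  # no face detected yet: keep waiting
    ratio = face_height_px / frame_height_px
    if ratio < min_ratio:
        return "Please step closer"
    if ratio > max_ratio:
        return "Please lean back"
    return "Dominant Face/Object Found"


for h in (None, 30, 200, 100):
    print(h, distance_prompt(h, 240))
```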

8.6.2. Video Indexing and Retrieval

In many video applications, browsing through a large amount of video material to find relevant clips is an extremely important task [402]. A video database indexed by human faces allows users to efficiently retrieve video clips about a person of interest. For example, a film student can easily extract clips of a favorite actor from a movie archive, or a TV news reporter can quickly find clips in the news database that show the governor of California in the 1980s.

A video indexing and retrieval scheme based on human face recognition was proposed by Lin [206]. The scheme consists of three steps. First, the video sequence is segmented by a scene change detection algorithm [392], which indicates where each shot starts and ends. Each segment produced by scene change detection is treated as a story unit of the sequence. Second, a PDBNN face detector is invoked to find the segments most likely to contain human faces. A representative frame (Rframe) is selected from every video shot and fed to the face detector; the Rframes for which the detector gives high face detection confidence scores are annotated and serve as the indices for browsing. Third, a PDBNN face recognizer finds all shots containing the persons of interest based on the user's request. The PDBNN recognizer is composed of several subnets, each representing a person to be recognized in the video sequence. If an Rframe has a high confidence score on one of the subnets, it is considered to contain that particular person. Figure 8.13 shows the system diagram of this face-based video browsing scheme. Note that not everyone who appears in the video sequence is assigned a PDBNN subnet: no network needs to be built to recognize persons outside the cast of interest.
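The three steps can be sketched as a small pipeline. Several pieces here are assumptions rather than the scheme's actual components: scene changes are detected with a simple gray-level histogram difference (a stand-in, not necessarily the algorithm of [392]), the Rframe is taken to be the middle frame of each shot, frames are toy flat lists of pixel values, and `toy_detector` and `toy_anchor_subnet` are hypothetical stand-ins for the PDBNN detector and one recognizer subnet.

```python
def histogram(frame, bins=16):
    """Normalized gray-level histogram of a frame given as a flat list of 0-255 pixel values."""
    counts = [0] * bins
    for p in frame:
        counts[p * bins // 256] += 1
    return [c / len(frame) for c in counts]


def detect_shots(frames, cut_threshold=0.5):
    """Step 1: declare a cut wherever consecutive histograms differ by more than cut_threshold (L1)."""
    starts = [0]
    prev = histogram(frames[0])
    for i in range(1, len(frames)):
        cur = histogram(frames[i])
        if sum(abs(a - b) for a, b in zip(prev, cur)) > cut_threshold:
            starts.append(i)
        prev = cur
    ends = starts[1:] + [len(frames)]
    return list(zip(starts, ends))  # half-open (start, end) frame ranges


def build_face_index(frames, face_detector, det_threshold=0.5):
    """Step 2: keep shots whose Rframe (here: the middle frame) the detector scores as a face."""
    index = []
    for start, end in detect_shots(frames):
        rframe = (start + end - 1) // 2
        if face_detector(frames[rframe]) >= det_threshold:
            index.append((start, end, rframe))
    return index


def find_person(frames, index, person_subnet, rec_threshold=0.5):
    """Step 3: return the shots whose Rframe scores high on the requested person's subnet."""
    return [(s, e) for s, e, r in index if person_subnet(frames[r]) >= rec_threshold]


# Toy stand-ins for the PDBNN detector and one recognizer subnet (both hypothetical).
def mean(frame):
    return sum(frame) / len(frame)


def toy_detector(frame):
    return 0.9 if mean(frame) > 100 else 0.1


def toy_anchor_subnet(frame):
    return 0.95 if mean(frame) > 200 else 0.05


frames = [[20] * 4] * 5 + [[230] * 4] * 5 + [[128] * 4] * 5  # three synthetic "shots"
index = build_face_index(frames, toy_detector)
print(find_person(frames, index, toy_anchor_subnet))  # [(5, 10)]
```

The index from step 2 is built once; each user query only runs step 3 on the annotated Rframes.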

Figure 8.13. A PDBNN face-based video browsing system. The scene change detection algorithm divides the video sequence into a number of shots, and the face detector examines all representative frames to determine whether they contain human faces. If a frame does, the detector passes it to a face recognizer to determine whose face it is.

A preliminary experiment was conducted on a 4-minute news report sequence. This sequence, sampled at 15 frames/sec with a spatial resolution of 320 × 240 pixels, contained three persons: an anchorman, a male interviewee, and a female interviewee. The goal was to find all of the frames that contained the anchorman. The sequence was divided into 32 segments, whose representative frames are shown in Figure 8.14(a). The anchorman's face appeared in 7 segments (4 in frontal view, 3 in side view). The PDBNN face detector and eye localizer described earlier were again applied to pinpoint the face location in each representative frame; processing one frame took about 10 seconds. A PDBNN recognizer was then built to recognize the anchorman, using approximately 2 seconds of video (32 frames) as training examples. The network took about 1 minute to learn all of the training examples and only a few seconds to process all of the index frames. The four segments containing frontal views of the anchorman were all recognized with very high confidence scores (Figure 8.14(b)), and no other video segment was incorrectly identified as the anchorman.
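The outcome can be summarized as retrieval precision and recall. The counts below come from the experiment (7 anchorman segments, 4 frontal and 3 side view; the 4 frontal ones retrieved; no false positives), but the segment IDs are placeholders, since the text does not say which of the 32 segments they were. The helper `precision_recall` is a hypothetical name.

```python
def precision_recall(retrieved, relevant):
    """Precision and recall of a set of retrieved segment IDs against the relevant ones."""
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Placeholder segment IDs: only the counts are taken from the experiment.
frontal = {1, 9, 17, 25}
side = {5, 13, 21}
retrieved = set(frontal)

p, r = precision_recall(retrieved, frontal | side)
print(p, round(r, 3))                        # 1.0 0.571
print(precision_recall(retrieved, frontal))  # (1.0, 1.0)
```

Precision is perfect in both cases; recall is 4/7 over all anchorman segments but 1.0 over the frontal-view segments the method is designed to handle.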

Figure 8.14. (a) Representative frames of the news report sequence and (b) frames of the anchorman found by the PDBNN face recognizer.

Because this approach requires both eyes to be visible, the three side-view segments of the anchorman could not be recognized; overcoming this limitation is left for future work. The face detection step needed much more processing time than the face recognition step: for this news report sequence, detection took about 5 minutes (32 Rframes × 10 sec/frame). The long processing time results from the multiresolution search used to locate faces of different sizes. Several methods can reduce the total processing time. For example, the number of Rframes can be reduced by merging Rframes that are similar in color histogram or shape. Moreover, since the face detector's job is to locate and annotate faces in the sequence, it needs to run only once, so face detection time does not affect the system's online performance.
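The Rframe-merging idea can be sketched with color-histogram intersection: an Rframe is kept only if it is not too similar to any Rframe already kept, and the detection time scales with the number kept. The similarity measure, the 0.9 threshold, the toy 3-bin histograms, and the function names are assumptions for illustration; only the 10 sec/frame figure comes from the text.

```python
def histogram_intersection(h1, h2):
    """Similarity of two normalized histograms: 1.0 means identical."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def merge_similar_rframes(hists, sim_threshold=0.9):
    """Greedy merge: keep an Rframe only if it is not too similar to any Rframe already kept."""
    kept = []
    for i, h in enumerate(hists):
        if all(histogram_intersection(h, hists[k]) < sim_threshold for k in kept):
            kept.append(i)
    return kept


def detection_time_s(num_rframes, sec_per_frame=10.0):
    return num_rframes * sec_per_frame


print(detection_time_s(32))  # 320.0 s, i.e. about 5 minutes

# Toy 3-bin color histograms for four Rframes (illustrative values only).
hists = [[0.5, 0.5, 0.0], [0.48, 0.52, 0.0], [0.0, 0.1, 0.9], [0.5, 0.5, 0.0]]
kept = merge_similar_rframes(hists)
print(kept, detection_time_s(len(kept)))  # [0, 2] 20.0
```

The greedy pass is order-dependent and only "roughly" merges frames, which is acceptable here because a merged Rframe still lets the detector annotate the shot.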

8.6.3. Airport Security Application

Several field studies have suggested that it is still premature to claim reliable and robust performance for any face recognition product in airport security applications. On an optimistic note, Cognitec seems to have had some initial success: by November 2003, its automatic passport checking system, SmartGate, had successfully processed 62,000 transactions by 4,200 enrolled Qantas aircrew members at Sydney's airport. In contrast, disappointing results were reported at Boston's Logan International Airport after it tested both Identix and Viisage technologies in 2002 [145]: the system failed to identify positive matches 38% of the time. Although false positives based on an operator's decision did not exceed 1%, machine-generated false positives exceeded 50%. The major difference between Sydney's success and Boston's failure lies in the application settings in which the technology was deployed. Unlike the Boston trial, where illumination at checkpoints could change drastically, SmartGate was installed in a controlled lighting environment and received the testers' full cooperation.

In general, when an application is vulnerable to illumination and pose variation, poor performance and unacceptable results should be anticipated. A major R&D investment is still needed before face recognition can become a mature and effective commercial product for airport security applications.

8.6.4. Face Recognition Based on a Three-Dimensional CG Model

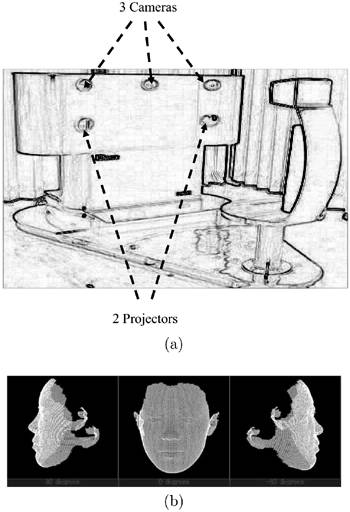

In conventional approaches to face recognition, facial features are extracted from two-dimensional facial images. The main problem with such features is that good performance depends critically on a straight viewing angle and well-controlled lighting conditions. To overcome these difficulties, Sakamoto et al. [328] proposed a novel three-dimensional computer graphics (CG) face model. A range finder, illustrated in Figure 8.15, was developed to acquire the three-dimensional face models. Facial depth (i.e., the distance between the face and the camera) is calibrated by projecting two point-source lights through sinusoidal grating films onto the facial surface, which allows the depth to be estimated at very fine resolution (on a scale of millimeters). This precision comes at a huge computational cost in the offline training phase: the range finder requires approximately 180 giga-operations (GOs) per person.

Figure 8.15. Generation of three-dimensional face CG models. (a) The range finder consists of three cameras and two projectors. To calibrate the depth, each projector projects a point-source light through a sinusoidal grating film, which allows the facial depth to be estimated at very fine (millimeter-scale) resolution. (b) Face images of the three-dimensional CG face model at three different angles: approximately −45°, 0°, and +45°. Adapted from Sakamoto et al. [328].

In the recognition phase, when an input query photo is presented, the registered CG faces are modified so that their adjusted pose and illumination conditions best match those of the input photo. The optimally adjusted CG face and the query photo can then be compared directly using the straightforward two-norm (Euclidean) distance. During this phase, the matching operation must be performed for all possible viewing angles (one angle per 5-degree interval), which amounts to a computational requirement of 10 GOs per person per query. A database containing 100 registrants therefore requires a total of 1 tera-operation (TO) (= 100 × 10 GOs) per query, which in turn implies a required real-time processing power of 1 tera-operation per second (TOPS) if the allowed recognition latency is 1 second. Such a speed far exceeds the capability of today's state-of-the-art digital signal processing technology. However, according to Moore's law on the rapid growth of integrated circuit technology, such a processing speed could become a reality in less than a decade.
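The matching loop and the throughput estimate can be sketched as follows. The renderer is a hypothetical stand-in (`toy_render`, which ignores illumination), the −45° to +45° angle range is an assumption borrowed from Figure 8.15(b), and the vectors are toy data; only the exhaustive per-angle two-norm comparison and the 10 GOs × 100 registrants / 1 s arithmetic follow the text.

```python
import math


def l2(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def best_match(query, registrants, render, angles):
    """Exhaustive search over every registrant and viewing angle; smallest 2-norm distance wins."""
    best = None
    for person_id, model in registrants.items():
        for angle in angles:
            d = l2(query, render(model, angle))
            if best is None or d < best[0]:
                best = (d, person_id, angle)
    return best


def required_tops(gops_per_person, registrants, latency_s):
    """Operations per query (GOs) divided by the allowed latency, expressed in tera-ops/s."""
    return gops_per_person * registrants / latency_s / 1000.0


# Toy renderer (hypothetical): a real system would re-render pose and illumination.
def toy_render(model, angle):
    return [v + angle / 100 for v in model]


registrants = {"a": [0.0, 0.0, 0.0], "b": [1.0, 1.0, 1.0]}
angles = range(-45, 50, 5)  # one angle per 5-degree interval over an assumed -45..+45 range
query = toy_render(registrants["b"], 10)
print(best_match(query, registrants, toy_render, angles))  # (0.0, 'b', 10)
print(required_tops(10, 100, 1.0))                        # 1.0
```

The cost grows linearly with both the number of registrants and the number of candidate angles, which is why the required throughput scales so quickly with database size.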

8.6.5. Opportunities for Commercial Applications

Face recognition technology can find attractive commercial opportunities when an application operates in a controlled environment or, even better, can expect user cooperation. In such cases, current face recognition technology usually works very well, as seen in Cognitec's passport checking system and Viisage's Illinois driver's license photo scan.

Another promising business opportunity arises in situations where false decisions cause only inconvenience and no real damage. For instance, Fujifilm's digital photo processing kiosk uses face recognition techniques to detect the presence of people in everyday photos. When human faces are detected, the kiosk balances the color based on the face region and makes the skin tone of the printed photo more vivid [140]. Automatic red-eye reduction and advanced autofocus are immediate extensions of this line of applications. Face recognition is also a promising add-on feature for video game products. Many face recognition companies (e.g., Viisage and Identix) already provide authorization software (such as screensavers) and data-mining tools for searching the Internet or image databases, for PCs and mobile devices. As the technology matures, it should find a broader range of applications, including automatic teller machine (ATM) access and gate control for buildings.