Chapter 5. Planning Your System’s Design

Parts I and II were theory—a comprehensive description of the graphical grammar and the tools needed to conceptualize reputation systems. The remaining chapters put all of that theory into practice. We describe how to define the requirements for a reputation model; design web interfaces for the gathering of user evaluations; provide patterns for the display and utilization of reputation; and provide advice on implementation, testing, tuning, and understanding community effects on your system.

Every reputation system starts as an idea, whether it comes from copying a competitor’s model or from doing something innovative. In our experience, that initial design motivation usually ignores the most important questions that should be asked before rushing into such a long-term commitment.

Asking the Right Questions

When you’re planning a reputation system—as in most endeavors in life—you’ll get much better answers if you spend a little time up front considering the right questions. This is the point where we pause to do just that. We explore some very simple questions: why are we doing this? What do we hope to get out of it? How will we know we’ve succeeded?

The answers to these questions undoubtedly will not be quite so simple. Community sites on the Web vary wildly in makeup, involving different cultures, customs, business models, and rules for behavior. Designing a successful reputation system means designing a system that’s successful for your particular set of circumstances. You’ll therefore need a fairly well-tuned understanding of your community and the social outcomes that you hope to achieve.

We’ll get you started. Some careful planning and consideration now will save you a world of heartache later.

Here are the questions that we help you answer in this chapter:

What are your goals for your application?

Is generating revenue based on your content a primary focus of your business?

Will user activity be a critical measure of success?

What about loyalty (keeping the same users coming back month after month)?

What is your content control pattern?

Who is going to create, review, and moderate your site’s content and/or community?

What services do staff, professional feed content, or external services provide?

What roles do the users play?

The answers to these questions will tell you how comprehensive a reputation system you need. In some cases, the answer will be none at all. Each content control pattern includes recommendations and examples of incentive models to consider for your system.

Given your goals and the content models, what types of incentives are likely to work well for you?

Should you rely on altruistic, commercial, or egocentric incentives, or some combination of those?

Will karma play a role in your system? Should it?

Understanding how the various incentive models have been demonstrated to work in similar environments will narrow down the choices you make as you proceed to Chapter 6.

What Are Your Goals?

Marney Beard was a longtime design and project manager at Sun Microsystems, and she had a wonderful guideline for participating in any team endeavor. Marney would say, “It’s all right to start selfish. As long as you don’t end there.” (Marney first gave this advice to her kids, but it turns out to be equally useful in the compromise-laden world of product development.)

So, following Marney’s excellent advice, we encourage you to—for a moment—take a very self-centered view of your plans for a rich reputation system for your website. Yes, ultimately your system will be a balance among your goals, your community’s desires, and the tolerances and motivations of everyone who visits the site. But for now, let’s just talk about you.

Both people and content reputations can be used to strengthen one or more aspects of your business. There is no shame in that. As long as we’re starting selfish, let’s get downright crass. How can imbuing your site with an historical sense of people and content reputation help your bottom line?

User engagement

Perhaps you’d like to deepen user engagement—either the amount of time that users spend in your community or the number and breadth of activities that they partake in. For a host of reasons, users who are more engaged are more valuable, both to your community and to your business.

Offering incentives to users may persuade them to try more of your offering than they would try by virtue of their own natural curiosity alone. Offline marketers have long been aware of this phenomenon; promotions such as sweepstakes, contests, and giveaways are all designed to influence customer behavior and deepen engagement.

User engagement can be defined in many different ways. Eric T. Peterson offers up a pretty good list of basic metrics for engagement. He posits that the most engaged users are those who do the following (list adapted from http://blog.webanalyticsdemystified.com/weblog/2006/12/how-do-you-calculate-engagement-part-i.html):

View critical content on your site

Have returned to your site recently (made multiple visits)

Return directly to your site (not by following a link) some of the time

Have long sessions, with some regularity, on your site

Have subscribed to at least one of your site’s available data feeds

This list is definitely skewed toward an advertiser’s or a content publisher’s view of engagement on the Web. It’s also loaded with subjective measures. (For example, what constitutes a “long” session? Which content is “critical”?) But that’s fine. We want subjective—at this point, we can tailor our reputation approach to achieve exactly what we hope to get out of it.
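To make the subjectivity concrete, here is a minimal sketch that turns the five criteria above into checks. Every threshold (`RECENT_DAYS`, `MIN_VISITS`, `LONG_SESSION_MINUTES`, `MIN_LONG_SESSIONS`) and the `VisitorStats` record are hypothetical placeholders, not values from Peterson's list; choosing them is exactly the tailoring step described above.

```python
from dataclasses import dataclass

# Hypothetical thresholds: the list above leaves "recent," "long," and
# "critical" undefined, so each site has to pick its own values.
RECENT_DAYS = 14
MIN_VISITS = 3
LONG_SESSION_MINUTES = 10
MIN_LONG_SESSIONS = 2

@dataclass
class VisitorStats:
    viewed_critical_content: bool  # the site decides which pages count as critical
    days_since_last_visit: int
    visit_count: int
    direct_visits: int             # visits that did not arrive via a link
    session_minutes: list          # length of each session, in minutes
    feed_subscriptions: int

def engagement_checks(v: VisitorStats) -> dict:
    """Evaluate each of the five criteria separately."""
    long_sessions = sum(1 for m in v.session_minutes if m >= LONG_SESSION_MINUTES)
    return {
        "critical_content": v.viewed_critical_content,
        "returned_recently": v.days_since_last_visit <= RECENT_DAYS
                             and v.visit_count >= MIN_VISITS,
        "returns_directly": v.direct_visits > 0,
        "long_sessions": long_sessions >= MIN_LONG_SESSIONS,
        "subscribed": v.feed_subscriptions >= 1,
    }

def engagement_score(v: VisitorStats) -> float:
    """Fraction of criteria met, from 0.0 (none) to 1.0 (all five)."""
    checks = engagement_checks(v)
    return sum(checks.values()) / len(checks)
```

Keeping the individual checks visible, rather than only the combined score, makes it easier to see which kind of engagement a given incentive actually moves.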

So what would be a good set of metrics to determine community engagement on your site? Again, the best answer to that question for you will be intimately tied to the goals that you’re trying to achieve.

Tara Hunt, as part of a longer discussion on Metrics for Healthy Communities, offers the following suggestions (list adapted from http://www.horsepigcow.com/2007/10/03/metrics-forhealthy-communities/):

The rate of attrition in your community, especially with new members.

The average length of time it takes for a newbie to become a regular contributor.

Multiple community crossover. If your members are part of many communities, how do they interact with your site? Flickr photos? Twittering? Etc.?

The number of both giving actions and receiving actions—for example, posters give (advice, knowledge, etc.), and readers receive. (See Figure 5-1.)

Community participation in tending and policing the community to keep it a nice place (for example, users who report content as spam, who edit a wiki for better layout, etc.).

This is a good list, but it’s still highly subjective. Once you decide how you’d like your users to engage with your site and community, you’ll need to determine how to measure that engagement.
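As one example of turning this list into numbers, the sketch below measures the first two items: new-member attrition and time to become a regular contributor. The definitions are assumptions made for illustration ("regular" means `REGULAR_THRESHOLD` contributions; a newcomer who contributes nothing in the first `ATTRITION_WINDOW` counts as lost), as is the shape of the `members` dictionary.

```python
from datetime import date, timedelta
from statistics import mean
from typing import Optional

# Hypothetical definitions; the list above leaves both open.
REGULAR_THRESHOLD = 5                  # contributions that make a member "regular"
ATTRITION_WINDOW = timedelta(days=30)  # no contribution this soon = lost newcomer

def days_to_regular(joined: date, contributions: list) -> Optional[int]:
    """Days from joining to the REGULAR_THRESHOLD-th contribution, or None."""
    ordered = sorted(contributions)
    if len(ordered) < REGULAR_THRESHOLD:
        return None
    return (ordered[REGULAR_THRESHOLD - 1] - joined).days

def new_member_attrition(members: dict, as_of: date) -> float:
    """Share of members (joined at least one window ago) who never
    contributed during their first ATTRITION_WINDOW."""
    eligible = [m for m in members.values() if as_of - m["joined"] >= ATTRITION_WINDOW]
    if not eligible:
        return 0.0
    lost = [m for m in eligible
            if not any(d - m["joined"] < ATTRITION_WINDOW for d in m["contributions"])]
    return len(lost) / len(eligible)

def mean_days_to_regular(members: dict) -> Optional[float]:
    """Average time to become a regular, over members who have done so."""
    times = [days_to_regular(m["joined"], m["contributions"]) for m in members.values()]
    times = [t for t in times if t is not None]
    return mean(times) if times else None
```

Members who joined too recently to judge are excluded from attrition, so a burst of sign-ups does not make the community look artificially healthy.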

Establishing loyalty

Perhaps you’re interested in building brand loyalty among your site’s visitors, establishing a relationship with them that extends beyond the boundaries of one visit or session. Yahoo! Fantasy Sports employs a fun reputation system, shown in Figure 5-2, enhanced with nicely illustrated trophies for achieving milestones (such as a winning season in a league) for various sports.

This simple feature serves many purposes: the trophies are fun and engaging, they may serve as an incentive for community members to excel at a sport, they help extend each user’s identity and give the user a way to express her own unique set of interests and biases to the community, and they are also an effective way of establishing a continuing bond with Fantasy Sports players—one that persists from season to season and sport to sport.

Any time a Yahoo! Fantasy Sports user is considering a switch to a competing service (fantasy sports in general is big business, and there are any number of very capable competitors), the existence of the service’s trophies provides tangible evidence of the switching cost for doing so: a reputation reset.

Coaxing out shy advertisers

Maybe you are concerned about your site’s ability to attract advertisers. User-generated content is a hot Internet trend that’s almost become synonymous with Web 2.0, but it has also been slow to attract advertisers—particularly big, traditional (but deep-pocketed) companies worried about displaying their own brand in the Wild West environment that’s sometimes evident on sites like YouTube or Flickr.

Once again, reputation systems offer a way out of this conundrum. By tracking the high-quality contributors and contributions on your site, you can guarantee to advertisers that their brand will be associated only with content that meets or exceeds certain standards of quality.

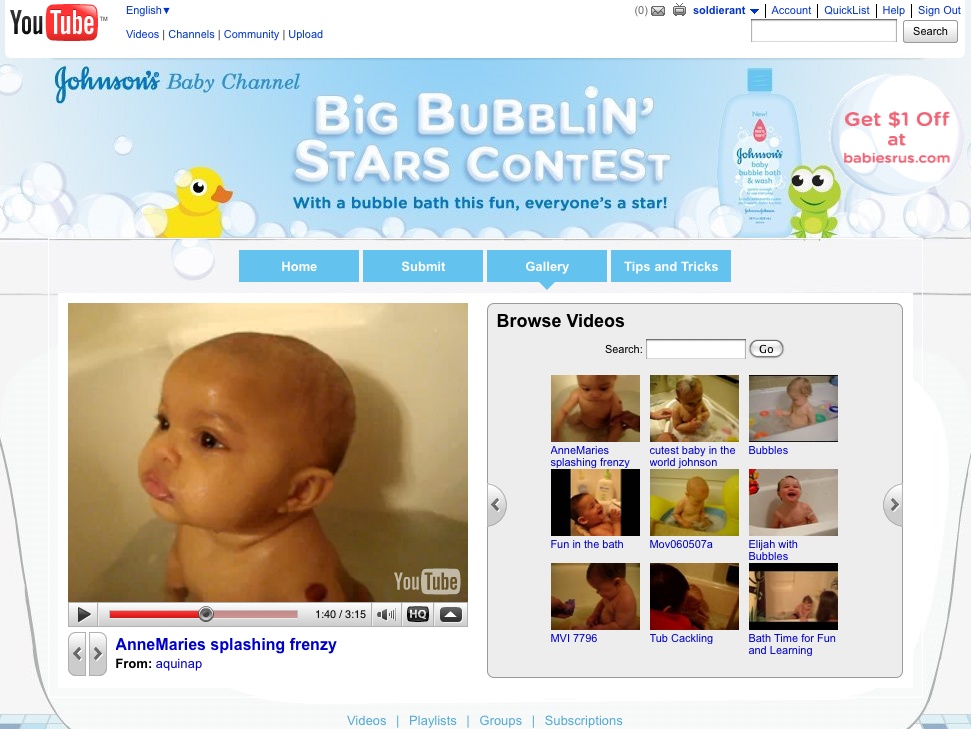

In fact, you can even craft your system to reward particular aspects of contribution. Perhaps, for instance, you’d like to keep a “clean contributor” reputation that takes into account a user’s typical profanity level and also factors in abuse reports filed against him. Without some form of filtering based on quality and legality, there’s simply no way that a prominent and respected advertiser like Johnson’s would associate its brand with YouTube’s user-contributed, typically anything-goes videos (see Figure 5-3).
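A “clean contributor” reputation like the one just described could be sketched as follows. The equal weights, the advertiser threshold, and the choice to count only abuse reports that moderators upheld are all hypothetical; the point is only that two signals (profanity rate and abuse rate) combine into one score that an ad server could check.

```python
from dataclasses import dataclass

# Hypothetical weights and threshold; a real system would tune these.
PROFANITY_WEIGHT = 0.5
ABUSE_WEIGHT = 0.5
AD_SAFE_THRESHOLD = 0.8

@dataclass
class ContributorHistory:
    posts: int
    posts_with_profanity: int
    upheld_abuse_reports: int   # reports that moderators confirmed

def clean_contributor_score(h: ContributorHistory) -> float:
    """Return a 0.0-1.0 score; 1.0 means no profanity and no upheld reports."""
    if h.posts == 0:
        return 0.0  # no history yet: treat as unproven rather than clean
    profanity_rate = h.posts_with_profanity / h.posts
    abuse_rate = min(1.0, h.upheld_abuse_reports / h.posts)
    score = 1.0 - (PROFANITY_WEIGHT * profanity_rate + ABUSE_WEIGHT * abuse_rate)
    return max(0.0, min(1.0, score))

def eligible_for_ads(h: ContributorHistory) -> bool:
    """Only contributors at or above the threshold appear next to ads."""
    return clean_contributor_score(h) >= AD_SAFE_THRESHOLD
```

Treating newcomers as unproven, rather than clean, errs on the side of the advertiser; a site that wants to welcome new contributors might choose the opposite default.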

Of course, another way to allay advertisers’ fears is by generally improving the quality (both real and perceived) of content generated by the members of your community.

Improving content quality

Reputation systems really shine at helping you make value judgments about the relative quality of content that users submit to your site. Chapter 8 focuses on the myriad techniques for filtering out bad content and encouraging high-quality contributions. For now, it’s only necessary to think of “content” in broad strokes. First, let’s examine content control patterns—patterns of content generation and management on a site. The patterns will help you make smarter decisions about your reputation system.

Content Control Patterns

The question of whether you need a reputation system at all and, if so, the particular models that will serve you best, are largely a function of how content is generated and managed on your site. Consider the workflow and life cycle of content that you have planned for your community, and the various actors who will influence that workflow.

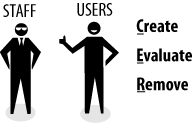

First, who will handle your community’s content? Will users be doing most of the content creation and management? Or staff? (“Staff” can be employees, trusted third-party content providers, or even deputized members of the community, depending on the level of trust and responsibility that you give them.)

In most communities, content control is a function of some combination of users and staff, so we’ll examine the types of activities that each might be doing. Consider all the potential activities that make up the content life cycle at a very granular level:

Who will draft the content?

Will anyone edit it or otherwise determine its readiness for publishing?

Who is responsible for actually publishing it to your site?

Can anyone edit content that’s live?

Can live content be evaluated in some way? Who will do that?

What effect does evaluation have on content?

Can an evaluator promote or demote the prominence of content?

Can an evaluator remove content from the site altogether?

You’ll ultimately have to answer all of these fine-grained questions, but we can abstract them somewhat at this stage. Right now, the questions you really need to pay attention to are these three:

Who will create the content on your site? Users or staff?

Who will evaluate the content?

Who has responsibility for removing content that is inappropriate?

There are eight different content control patterns for these questions—one for each unique combination of answers. For convenience, we’ve given each pattern a name, but the names are just placeholders for discussion, not suggestions for recategorizing your product marketing.
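Because each of the three questions has two possible answers (users or staff), there are 2 × 2 × 2 = 8 combinations. The lookup table below simply restates that mapping, using the pattern names from the sections that follow; the function name is illustrative.

```python
from itertools import product

# Who performs each activity: "staff" or "users".
PATTERNS = {
    # (creates, evaluates, removes): pattern name
    ("staff", "staff", "staff"): "Web 1.0",
    ("staff", "staff", "users"): "Bug report",
    ("staff", "users", "staff"): "Reviews",
    ("staff", "users", "users"): "Surveys",
    ("users", "staff", "staff"): "Submit-publish",
    ("users", "staff", "users"): "Agents",
    ("users", "users", "staff"): "Basic social media",
    ("users", "users", "users"): "The Full Monty",
}

def content_control_pattern(creates: str, evaluates: str, removes: str) -> str:
    """Name the pattern for one combination of answers."""
    return PATTERNS[(creates, evaluates, removes)]

# Two choices for each of three questions: every combination is covered once.
assert set(product(("staff", "users"), repeat=3)) == set(PATTERNS)
```

For the site in the tip that follows, you would look up two combinations: `("staff", "staff", "staff")` for the category tree and `("users", "staff", "staff")` for the content inside it.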

Tip

If you have multiple content control patterns for your site, consider them all and focus on any shared reputation opportunities. For example, you may have a community site with a hierarchy of categories that are created, evaluated, and removed by staff. Perhaps the content within that hierarchy is created by users.

In that case, two patterns apply: the staff-tended category tree is an example of the Web 1.0 content control pattern, and as such it can effectively be ignored when selecting your reputation models. Focus instead on the options suggested by the Submit-Publish pattern formed by the users populating the tree.

Web 1.0: Staff creates, evaluates, and removes

When your staff is in complete control of all of the content on your site—even if it is supplied by third-party services or data feeds—you are using a Web 1.0 content control pattern. There’s really not much a reputation system can do for you in this case; no user participation equals no reputation needs. Sure, you could grant users reputation points for visiting pages on your site or clicking indiscriminately, but to what end? Without some sort of visible result from participating, they will soon give up and go away.

Neither is it probably worth the expense to build a content reputation system for use solely by staff, unless you have a staff of hundreds evaluating tens of thousands of content items or more.

Bug report: Staff creates and evaluates, users remove

In this content control pattern, the site encourages users to petition for removal or major revision of corporate content—items in a database created and reviewed by staff. Users don’t add any content that other users can interact with. Instead, they provide feedback intended to eventually change the content. Examples include bug tracking and customer feedback platforms and sites, such as Bugzilla and GetSatisfaction. Each site allows users to tell the provider about an idea or problem, but it doesn’t have any immediate effect on the site or other users.

A simpler form of this pattern is when users simply click a button to report content as inappropriate, in bad taste, old, or duplicate. The software decides when to hide the content item in question. AdSense, for example, allows customers who run sites to mark specific advertisements as inappropriate matches for their site—teaching Google about their preferences as content publishers.

Typically, this pattern doesn’t require a reputation system; user participation is a rare event and may not even require a validated login. In cases where a large number of interactions per user are appropriate, a corporate reputation system that rates a user’s effectiveness at performing a task can quickly identify submissions from the best contributors.

This pattern resembles the submit-publish pattern (see Submit-publish: Users create, staff evaluates and removes), though the review process in this pattern typically is less socially oriented than the moderation process in that pattern (since the feedback is intended for the application operators only). These systems often contain strong negative feedback, which is crucial to understanding your business but isn’t appropriate for review by the general public.

Reviews: Staff creates and removes, users evaluate

This popular content control pattern—the first generation of online reputation systems—gave users the power to leave ratings and reviews of otherwise static web content, which then was used to produce ranked lists of like items. Early, and still prominent, sites using this pattern include Amazon.com and dozens of movie, local services, and product aggregators. Even blog comments can be considered user evaluation of otherwise tightly controlled content (the posts) on sites like BoingBoing or The Huffington Post.

The simplest form of this pattern is implicit ratings only, such as Yahoo! News, which tracks the most emailed stories for the day and the week. The user simply clicks a button labeled “Email this story,” and the site produces a reputation rank for the story.
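An implicit rating like this needs no explicit vote at all: each click is logged, and the ranking is just a count over a time window. The sketch below assumes a hypothetical log of `(story_id, timestamp)` pairs; it is not how Yahoo! News actually implemented its list.

```python
from collections import Counter
from datetime import datetime, timedelta

def most_emailed(events, now: datetime, window: timedelta, top_n: int = 10):
    """Rank story IDs by "Email this story" clicks in the `window` before `now`.

    `events` is an iterable of (story_id, timestamp) pairs, one per click.
    Returns a list of (story_id, click_count), highest count first.
    """
    cutoff = now - window
    counts = Counter(story for story, ts in events if cutoff < ts <= now)
    return counts.most_common(top_n)
```

Calling it once with `timedelta(days=1)` and once with `timedelta(weeks=1)` over the same log produces the daily and weekly lists.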

Historically, users who write reviews usually have been motivated by altruism (see Incentives for User Participation, Quality, and Moderation). Until strong personal communications tools arrived—such as social networking, news feeds, and multidevice messaging (connecting SMS, email, the Web, and so on)—users didn’t produce as many ratings and reviews as many sites were looking for. There were often more site content items than user reviews, leaving many content items (such as obscure restaurants or specialized books) without reviews.

Some site operators have tried to use commercial (direct payment) incentives to encourage users to submit more and better reviews. Epinions offered users several forms of payment for posting reviews. Almost all of those payment programs eventually were shut down, leaving only a revenue-sharing model for reviews that are tracked to actual purchases. In every other case, payment for reviews seemed to have created a strong incentive to game the system (by generating false was-this-helpful votes, for example), which actually lowered the quality of information on a site. Paying for participation almost never results in high-quality contributions.

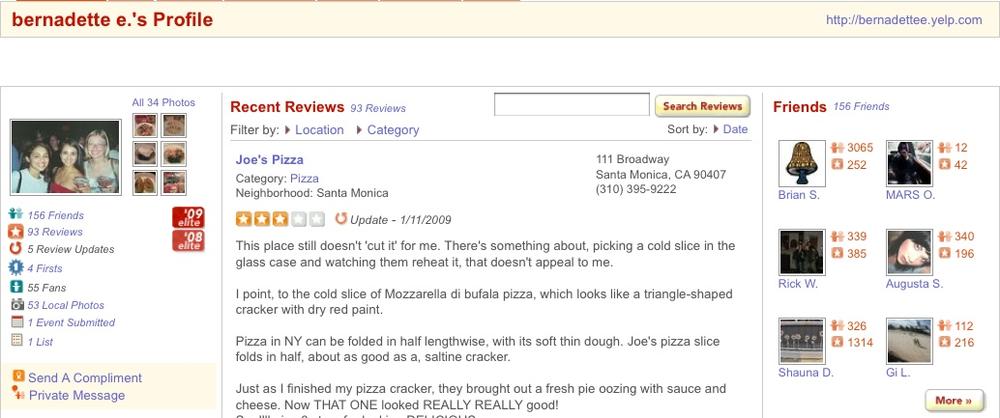

More recently, sites such as Yelp have created egocentric incentives for encouraging users to post reviews: Yelp lets other users rate reviewers’ contributions across dimensions such as “useful,” “funny,” and “cool,” and it tracks and displays more than 20 metrics of reviewer popularity. This configuration encourages more participation by certain mastery-oriented users, but it may result in an overly specialized audience for the site by selecting for people with certain tastes. Yelp’s whimsical ratings can be a distraction to older audiences, discouraging some from contributing.

What makes the reviews content control pattern special is that it is by and for other users. It’s why the was-this-helpful reputation pattern has emerged as a popular participation method in recent years—hardly anyone wants to take several minutes to write a review, but it only takes a second to click a thumb-shaped button. Now a review itself can have a quality score and its author can have the related karma. In effect, the review becomes its own context and is subject to a different content control pattern: Basic social media: Users create and evaluate, staff removes.
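Here is one way the review's quality score and its author's karma might be derived from was-this-helpful votes. Laplace smoothing (one imaginary vote on each side) is a swapped-in technique chosen for simplicity, and averaging review scores into karma is likewise an assumption; neither is prescribed by the pattern itself.

```python
from statistics import mean

def review_quality(helpful: int, not_helpful: int) -> float:
    """Smoothed share of "yes" votes; a review with no votes scores 0.5.

    Adding one imaginary vote to each side keeps a review with a single
    "yes" (2/3) from outranking one with 90 "yes" out of 100 (91/102).
    """
    return (helpful + 1) / (helpful + not_helpful + 2)

def author_karma(reviews: list) -> float:
    """Average quality of an author's reviews, given (helpful, not_helpful)
    pairs; 0.5 for an author with no reviews yet."""
    if not reviews:
        return 0.5
    return mean(review_quality(h, n) for h, n in reviews)
```

The smoothing matters most in exactly the situation the pattern creates: many reviews with only one or two clicks each.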

Surveys: Staff creates, users evaluate and remove

In the surveys content control pattern, users evaluate and eliminate content as fast as staff can feed it to them. This pattern’s scarcity in public web applications usually is related to the expense of supplying content of sufficient minimum quality. Consider this pattern a user-empowered version of the reviews content control pattern, where content is flowing so swiftly that only the fittest survive the users’ wrath. Probably the most obvious example of this pattern is the television program American Idol and other elimination competitions that depend on user voting to decide what is removed and what remains, until the best of the best is selected and the process begins anew. In this example, the professional judges are the staff who select the initial acts (the content) that the home audience will see perform from week to week, and the audience members who vote by telephone act as the evaluators and removers.
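The elimination mechanic at the heart of this pattern can be sketched in a few lines: tally the round's votes, remove the lowest scorer, and repeat until one remains. The tie-breaking rule and the input shapes are hypothetical; real competitions define their own.

```python
from collections import Counter

def elimination_round(contestants: list, votes: list) -> list:
    """Drop the contestant with the fewest votes this round.

    Votes for names not in `contestants` are ignored. Ties for last place
    go to whoever appears first in `contestants` (a hypothetical rule).
    """
    tally = Counter(v for v in votes if v in contestants)
    loser = min(contestants, key=lambda c: tally[c])
    return [c for c in contestants if c != loser]

def run_season(contestants: list, weekly_votes: list) -> list:
    """Apply one elimination per week until a single winner remains."""
    remaining = list(contestants)
    for votes in weekly_votes:
        if len(remaining) <= 1:
            break
        remaining = elimination_round(remaining, votes)
    return remaining
```

Note that the staff role here is entirely upstream: choosing which `contestants` enter the list in the first place.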

The keys to using this pattern successfully are as follows:

Keep the primary content flowing at a controlled rate appropriate for the level of consumption by the users, and keep the minimum quality consistent or improving over time.

Make sure that the users have the tools they need to make good evaluations and fully understand what happens to content that is removed.

Consider carefully what level of abuse mitigation reputation systems you may need to counteract any cheating. If your application will significantly increase or decrease the commercial or egocentric value of content, it will provide incentives for people to abuse your system. For example, this web robot helped win Chicken George a spot as a housemate on Big Brother: All Stars (from the Vote for the Worst website):

Click here to open up an autoscript that will continue to vote for chicken George every few seconds. Get it set up on every computer that you can, it will vote without you having to do anything.
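A first line of defense against scripts like that one is to cap how often any single voter can vote. The sliding-window limiter below is a hypothetical sketch; what counts as a `voter_id` (an account, a cookie, an IP address) is a design decision the pattern leaves to you.

```python
from collections import defaultdict, deque

class VoteRateLimiter:
    """Accept at most `max_votes` per voter within any `window_seconds` span."""

    def __init__(self, max_votes: int, window_seconds: float):
        self.max_votes = max_votes
        self.window = window_seconds
        # voter_id -> timestamps of that voter's accepted votes, oldest first
        self._history = defaultdict(deque)

    def allow(self, voter_id: str, now: float) -> bool:
        """Return True and record the vote if the voter is under the limit."""
        recent = self._history[voter_id]
        # Forget votes that have aged out of the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if len(recent) >= self.max_votes:
            return False
        recent.append(now)
        return True
```

A per-voter cap slows a single script but does nothing against the quoted advice to run it “on every computer that you can,” which is why abuse mitigation usually layers several reputation signals rather than relying on one limit.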

Submit-publish: Users create, staff evaluates and removes

In the submit-publish content control pattern, users create content that will be reviewed for publication and/or promotion by the site. Two common evaluation patterns exist for staff review of content: proactive and reactive. Proactive content review (or moderation) is when the content is not immediately published to the site and is instead placed in a queue for staff to approve or reject. Reactive content review trusts users’ content until someone complains and only then does the staff evaluate the content and remove it if needed.
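The difference between the two review styles comes down to where a new submission lands. In the hypothetical sketch below, proactive mode sends every submission to the staff queue first, while reactive mode publishes immediately and queues an item only when someone complains.

```python
from enum import Enum

class Moderation(Enum):
    PROACTIVE = "proactive"  # hold for staff approval before publishing
    REACTIVE = "reactive"    # publish now; staff review only after a complaint

class SubmissionDesk:
    def __init__(self, mode: Moderation):
        self.mode = mode
        self.published: list = []
        self.review_queue: list = []

    def submit(self, item: str) -> None:
        if self.mode is Moderation.PROACTIVE:
            self.review_queue.append(item)
        else:
            self.published.append(item)

    def complain(self, item: str) -> None:
        # A complaint puts a live item in front of staff.
        if item in self.published and item not in self.review_queue:
            self.review_queue.append(item)

    def staff_decide(self, item: str, approve: bool) -> None:
        """Approve (publish if not live) or reject (take down if live)."""
        self.review_queue.remove(item)
        if approve and item not in self.published:
            self.published.append(item)
        elif not approve and item in self.published:
            self.published.remove(item)
```

Proactive review keeps bad content off the site entirely at the cost of delay; reactive review publishes instantly but leaves bad content live until someone complains and staff respond.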

Some websites that display this pattern are television content sites, such as the site for the TV program Survivor. That site encourages viewers to send video to the program rather than posting it, and the site doesn’t publish a submission unless its sender is chosen for the show. Citizen news sites such as Yahoo! You Witness News accept photos and videos and screen them as quickly as possible before publishing them to their sites. Likewise, food magazine sites may accept recipe submissions that they check for safety and copyright issues before republishing.

Since the feedback loop for this content control pattern typically lasts days, or at best hours, and the number of submissions per user is minuscule, the main incentives that tend to drive people fall under the altruism category: “I’m doing this because I think it needs to be done, and someone has to do it.” Attribution should be optional but encouraged, and karma is often not worth calculating when the traffic levels are so low.

An alternative incentive that has proven effective to get short-term increases in participation for this pattern is commercial: offer a cash prize drawing for the best, funniest, or wackiest submissions. In fact, this pattern is used on many contest sites, such as YouTube’s Symphony Orchestra contest (http://www.youtube.com/symphony). YouTube had judges sift through user-submitted videos to find exceptional performers to fly to New York City for a live symphony concert performance of a new piece written for the occasion by the renowned Chinese composer Tan Dun, which was then republished on YouTube. As Michael Tilson Thomas, director of music, San Francisco Symphony, said:

How do you get to Carnegie Hall? Upload! Upload! Upload!

Agents: Users create and remove, staff evaluates

The agents content control pattern rarely appears as a standalone form of content control, but it often appears as a subpattern in a more complex system. The staff acts as a prioritizing filter of the incoming user-generated content, which is passed on to other users for simple consumption or rejection. A simple example is early web indexes, such as the 100% staff-edited Yahoo! Directory, which was the Web’s most popular index until web search demonstrated that it could better handle the Web’s exponential growth and the types of detailed queries required to find the fine-grained content available.

Agents are often used in hierarchical arrangements to provide scale, because each layer of hierarchy decreases the work on each individual evaluator several times over, which can make it possible for a few dozen people to evaluate a very large amount of user-generated content. We mentioned that the contest portion of American Idol was a surveys content control pattern, but talent selection initially goes through a series of agents, each prioritizing contestants and passing the most promising on to a judge, until some of the near-finalists (selected by yet another agent) appear on camera before the celebrity judges. The judges choose the talent (the content) for the season, but they don’t choose who appears in the qualification episodes—the producer does.
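A little arithmetic shows why layering scales. The sketch assumes hypothetical numbers: 100,000 submissions, each layer forwarding about one item in ten, and each agent able to review 2,000 items. Integer ceiling division avoids floating-point rounding.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer ceiling of a / b."""
    return -(-a // b)

def agent_workload(submissions: int, forward_one_in: int,
                   items_per_reviewer: int, layers: int) -> list:
    """(items seen, reviewers needed) for each layer of agents.

    Each layer forwards roughly one of every `forward_one_in` items it sees.
    """
    result = []
    items = submissions
    for _ in range(layers):
        result.append((items, ceil_div(items, items_per_reviewer)))
        items = ceil_div(items, forward_one_in)
    return result
```

With those assumptions, `agent_workload(100_000, 10, 2_000, 3)` gives `[(100000, 50), (10000, 5), (1000, 1)]`: the evaluators at the top see 1% of the raw submissions.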

The agents pattern generally doesn’t have many reputation system requirements, depending on how much power you invest in the users to remove content. In the case of the Yahoo! Directory, the company may choose to pay attention to the links that remain unclicked in order to optimize its content. If, on the other hand, your users have a lot of authority over the removal of content, consider the abuse mitigation issues raised in the surveys pattern (see Surveys: Staff creates, users evaluate and remove).

Basic social media: Users create and evaluate, staff removes

An application that lets users create and evaluate a significant portion of the site’s content is what people are calling basic social media these days. On most sites with a basic social media content control pattern, content removal is controlled by staff, for two primary reasons:

- Legal exposure

Compliance with local and international laws on content and who may consume it causes most site operators to draw the line on user control here. In Germany, for instance, certain Nazi imagery is banned from websites, even if the content is from an American user, so German sites filter for it. No amount of user voting will overturn that decision. U.S. laws that affect what content may be displayed and to whom include the Children’s Online Privacy Protection Act (COPPA) and the Child Online Protection Act (COPA), which govern children’s interaction with identity and advertising, and the Digital Millennium Copyright Act (DMCA), which requires sites with user-generated content to remove items that are alleged to violate copyright on the request of the content’s copyright holder.

- Minimum editorial quality and revenue exposure

When user-generated content is popular but causes the company grave business distress, it is often removed by staff. A good example of a conflict between user-generated content and business goals surfaces on sites with third-party advertising: Ford Motor Company wouldn’t be happy if one of its advertisements appeared next to a post that read, “The Ford Taurus sucks! Buy a Scion instead.” Even if there is no way to monitor for sentiment, often a minimum quality of contribution is required for the greater health of the community and business. Compare the comments on just about any YouTube video to those on popular Flickr photos. This suggests that the standard for content quality should be as high as cost allows.

Often, operators of new sites start out with an empty shell, expecting users to create and evaluate en masse, but most such sites never gather a critical mass of content creators, because the operators didn’t account for the small fraction of users who are creators (see Honor creators, synthesizers, and consumers). But if you bootstrap yourself past the not-enough-creators problem, through advertising, reputation, partnerships, and/or a lot of hard work, the feedback loop can start working for you (see The Reputation Virtuous Circle). The Web is filled with examples of significant growth with this content control pattern: Digg, YouTube, Slashdot, JPG Magazine, etc.

The challenge comes when you become as successful as you dreamed, and two things happen: people begin to value their status as a contributor to your social media ecosystem, and your staff simply can’t keep up with the site abuse that accompanies the increase in the site’s popularity. Plan to implement your reputation system for success—to help users find the best stuff their peers are creating and to allow them to point your moderation staff at the bad stuff that needs attention. Consider content reputation and karma in your application design from the beginning, because it’s often disruptive to introduce systems of users judging each other’s content after community norms are well established.

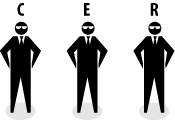

The Full Monty: Users create, evaluate, and remove

What? You want to give users complete control over the content? Are you sure? Before you decide, read the section Basic social media: Users create and evaluate, staff removes to find out why most site operators don’t give communities control over most content removal.

We call this content control pattern the Full Monty, after the musical about desperate blue-collar guys who’ve lost their jobs and have nothing to lose, so they let it all hang out at a benefit performance, dancing naked with only hats for covering. It’s kinda like that—all risk, but very empowering and a lot of fun.

There are a few obvious examples of appropriate uses of this pattern. Wikis were specifically designed for full user control over content (that is, if you have reason to trust everyone with the keys to the kingdom, get the tools out of the way). The Full Monty pattern works very well inside companies and nonprofit organizations, and even in ad hoc workgroups. In these cases, some other mechanism of social control is at work—for example, an employment contract or the risk of being shamed or banished from the group. Combined with the power for anyone to restore any damage (intentional or otherwise) done by another, these mechanisms provide enough control for the pattern to work.

But what about public contexts in which no social contract exists to define acceptable behavior? Wikipedia, for example, doesn’t really use this pattern: it employs an army of robots and professional editors who watch every change and enforce policy in real time. Wikipedia follows a pattern much more like the one described in Basic social media: Users create and evaluate, staff removes.

When no external social contract exists to govern users’ actions, you have a wide-open community, and you need to substitute a reputation system in order to place a value on the objects and the users involved in it. Consider Yahoo! Answers (covered in detail in Chapter 10). Yahoo! Answers decided to let users themselves remove content from display on the site because of the staff backlog. Because response time for abusive content complaints averaged 12 hours, most of the potential damage had already been done by the time the offending content was removed. By building a corporate karma system that allowed users to report abusive content, Yahoo! Answers dropped the average amount of time that bad content was displayed to 30 seconds. Sure, customer care staff was still involved with the hardcore problem cases of swastikas, child abuse, and porn spammers, but most abusive content came to be completely policed by users.
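The passage describes what Yahoo! Answers achieved, not exactly how; the sketch below is a hypothetical illustration of the general idea, not that site's actual model. Each reporter carries karma points, an item is hidden as soon as its reporters' combined karma reaches a threshold, and staff verdicts on the hard cases raise or lower the reporters' karma. All constants are assumptions, and integer points keep the sums exact.

```python
from collections import defaultdict

# Hypothetical parameters, in integer karma points.
HIDE_THRESHOLD = 10   # combined reporter karma needed to hide an item
STARTING_KARMA = 2    # a new reporter cannot hide anything alone
MAX_KARMA = 10        # a fully trusted reporter can hide an item alone
STEP = 1              # karma gained or lost per staff verdict

class AbuseReports:
    def __init__(self):
        self.karma = defaultdict(lambda: STARTING_KARMA)  # reporter -> points
        self.reports = defaultdict(set)                   # item -> reporters
        self.hidden = set()

    def report(self, reporter: str, item: str) -> bool:
        """Record a report; hide the item once reporter karma adds up.
        Returns whether the item is now hidden."""
        self.reports[item].add(reporter)
        if sum(self.karma[r] for r in self.reports[item]) >= HIDE_THRESHOLD:
            self.hidden.add(item)
        return item in self.hidden

    def staff_verdict(self, item: str, abusive: bool) -> None:
        """Staff confirm or overturn; reporters gain or lose karma."""
        delta = STEP if abusive else -STEP
        for r in self.reports.pop(item, set()):
            self.karma[r] = min(MAX_KARMA, max(0, self.karma[r] + delta))
        if abusive:
            self.hidden.add(item)
        else:
            self.hidden.discard(item)
```

Because hiding happens at report time rather than at review time, removal speed depends on how quickly trusted reporters see the content, not on the size of the staff backlog.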

Notice that catching bad content is not the same as identifying good content. In a universe where the users are in complete control, the best you can hope to do is encourage the kinds of contributions you want through modeling the behavior you want to see, constantly tweaking your reputation systems, improving your incentive models, and providing clear lines of communication between your company and customers.

Incentives for User Participation, Quality, and Moderation

Why do people do the things they do? If you believe classical economics, it’s because of incentives. An incentive creates an expectation in a person’s mind (of reward, or delight, or punishment) that leads them to behave in a certain way. If you’re going to attempt to motivate your users, you’ll need some understanding of incentives and how they influence behavior.

Predictably irrational

When analyzing what role reputation may have in your application, you need to look at what motivates your users and what incentives you may need to provide to facilitate your goals. Out of necessity, this will take us on a short side trip through the intersection of human psychology and market economics.

In Chapter 4 of his book Predictably Irrational (HarperCollins), Duke University professor of behavioral economics Dan Ariely describes a view of two separate incentive exchanges for doing work and the norms that set the rules for them; he calls them social norms and market norms.

Social norms govern doing work for other people because they asked you to—often because doing the favor makes you feel good. Ariely says these exchanges are “wrapped up in our social nature and our need for community. They are usually warm and fuzzy.” Market norms, on the other hand, are cold and mediated by wages, prices, and cash: “There’s nothing warm and fuzzy about [them],” writes Ariely. Market norms come from the land of “you get what you pay for.”

Social and market norms don’t mix well. Ariely gives several examples of the confusion that results when these incentive models mix. In one, he describes a hypothetical scene after a home-cooked family holiday dinner in which he offers to pay his mother $400, the outrage that would ensue, and the social damage that would take a long time to repair. In a second example, less purely hypothetical and more common, Ariely shows what happens when social and market norms are mixed in dating and sex. A guy takes a girl out on a series of expensive dates. Should he expect increased social interaction—maybe at least a passionate kiss? “On the fourth date he casually mentions how much this romance is costing him. Now he’s crossed the line (and has upset his date!). He should have known you can’t mix social and market norms—especially in this case—without implying that the lady is a tramp.”

Ariely goes on to detail an experiment that verifies that social and market exchanges differ significantly, at least when it comes to very small units of work. The work-effort he tested is similar to many of the reputation evaluations we’re trying to create incentives for. The task in the experiments was trivial: use a mouse to drag a circle into a square on a computer screen as many times as possible in five minutes. Three groups were tested: one group was offered no compensation for participating in the test, one group was offered 50 cents, and the last group was offered $5. Though the subjects who were paid $5 did more work than those who were paid 50 cents, the subjects who did the most work were the ones who were offered no money at all. When the money was replaced with a gift of the same value (a Snickers bar in place of 50 cents and a box of Godiva chocolates in place of $5), the work distinction went away—it seems that gifts operate in the domain of social norms, and the candy recipients worked as hard as the subjects who weren’t compensated. But when a price sticker was left on the chocolates so that the subjects could see the monetary value of the reward, it was again market norms that applied, and the striking difference in work results reappeared—with volunteers working harder than subjects who received priced candy.

Incentives and reputation

When considering how a content control pattern might help you develop a reputation system, be careful to consider two sets of needs: what incentives would be appropriate for your users in return for the tasks you are asking them to do on your behalf? And what particular goals do you have for your application? Each set of needs may point to a different reputation model—but try to accommodate both.

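Ariely talked about two categories of norms, social and market, but for reputation systems, we talk about three main groups of online incentive behaviors:

- Altruistic motivation

For the good of others

- Commercial motivation

To generate revenue

- Egocentric motivation

For self-gratification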

Interestingly, these behaviors map somewhat to social norms (altruistic and egocentric) and market norms (commercial and egocentric). Notice that egocentric motivation is listed as both a social and a market norm. This is because market-like reputation systems (like points or virtual currencies) are being used to create successful work incentives for egocentric users. In effect, egocentric motivation crosses the two categories in an entirely new virtual social environment (an online reputation-based incentive system) in which these social and market norms can coexist in ways that we might normally find socially repugnant in the real world. In reputation-based incentive systems, bragging can be good.

Altruistic or sharing incentives

Altruistic, or sharing, incentives reflect the giving nature of users who have something to share—a story, a comment, a photo, an evaluation—and who feel compelled to share it on your site. Their incentives are internal. They may feel an obligation to another user or to a friend, or they may feel loyal to (or despise) your brand.

Altruistic or sharing incentives fall into several categories:

Tit-for-tat or pay-it-forward incentives: “I do it because someone else did it for me first.”

Friendship incentives: “I do it because I care about others who will consume this.”

Know-it-all or crusader or opinionated incentives: “I do it because I know something everyone else needs to know.”

Other altruistic incentives: If you know of other incentives driven by altruism or sharing, please contribute them to the website for this book: http://buildingreputation.com.

When you’re considering reputation models that offer altruistic incentives, remember that these incentives exist in the realm of social norms; they’re all about sharing, not accumulating commercial value or karma points. Avoid aggrandizing users driven by altruistic incentives—they don’t want their contributions to be counted, recognized, ranked, evaluated, compensated, or rewarded in any significant way. Comparing their work to anyone else’s will actually discourage them from participating.

Tit-for-tat and pay-it-forward incentives

A tit-for-tat incentive is at work when a user has received a benefit either from the site or from the other users of the site, and then contributes to the site to return the favor. Early social sites, which contained only staff-provided content and followed a content control pattern such as reviews, provided no incentives to participate. On those sites, users most often indicated that they were motivated to contribute only because another user’s review on the site had helped them.

A pay-it-forward incentive (from the Catherine Ryan Hyde book of that title and the 2000 motion picture based on it) is at work when a user contributes to a site with the goal of improving the state of the world by doing an unrequested deed of kindness toward another, in the hope that the recipient will do the same thing for one or more other people, creating a never-ending and always expanding world of altruism. It can be a model for corporate reputation systems that track altruistic contributions as an indicator of community health.

Friendship incentives

In Fall 2004, when the Yahoo! 360° social network first introduced the vitality stream (later made popular by Facebook and Twitter as the personal news feed), it included activity snippets of various types, such as status and summary items that were generated by Yahoo! Local whenever a user’s friends wrote a review of a restaurant or hotel. From the day the vitality stream was launched, Yahoo! Local saw a sustained 45% increase in the number of reviews written daily. A Yahoo! 360° user was over 50 times more likely to write a review than a typical Yahoo! Local user.

The knowledge that friends would be notified when you wrote a review—in effect notifying them both of where you went and what you thought—became a much stronger altruistic motivator than the tit-for-tat incentive. There’s really no reputation system involved in the friendship incentive; it’s simply a matter of displaying users’ contributions to their friends through news feed events, in item searches, or whenever they happen to encounter a reputable entity that a friend evaluated.

Crusader, opinionated, and know-it-all incentives

Some users are motivated to contribute to a site by a passion of some kind. Some passions are temporary; for example, crusaders are often people who’ve had a terrible customer experience and wish to share their frustration with the anonymous masses, perhaps exacting some minor revenge on the business in question. Some passions stem from deeply held religious or political beliefs that users feel compelled to share; these are the opinionated. Know-it-all users’ passions emerge from topical expertise, and still other users are just killing time. In any case, people seem to have a lot to say that has very mixed commercial value. Just glancing at the comments on a popular YouTube video will show many of these motivations all jumbled together.

This group of altruistic incentives is a mixed bag. It can result in some great contributions as well as a lot of junk (as we mentioned in There’s a Whole Lotta Crap Out There). If you have reason to believe that a large portion of your most influential community members will be motivated by controversial ideas, carefully consider the costs of evaluation and removal in the content control pattern that you choose. Having a large community that is out of control can be worse than having no community at all.

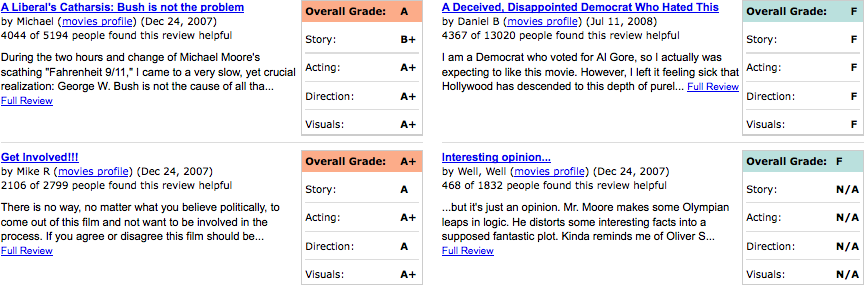

On any movie review site, look at the way people respond to one another’s reviews for hot-button movies like Fahrenheit 9/11 (Figure 5-4) or The Passion of the Christ. If the site offers “Was this review helpful?” voting, the reviews with the highest total votes are likely to be very polarized. Clearly, in these contexts the word helpful means “agreement with the review-writer’s viewpoint.”

Commercial incentives

Commercial incentives fall squarely in the range of Ariely’s market norms. They reflect people’s motivation to do something for money, though the money may not come in the form of direct payment from the user to the content creator. Advertisers have a nearly scientific understanding of the significant commercial value of something they call branding. Likewise, influential bloggers know that their posts build their brand, which often involves the perception of them as subject matter experts. The standing that they establish may lead to opportunities such as speaking engagements, consulting contracts, improved permanent positions at universities or prominent corporations, or even a book deal. A few bloggers may actually receive payment for their online content, but more are capturing commercial value indirectly.

Reputation models that exhibit content control patterns based on commercial incentives must communicate a much stronger user identity. They need strong and distinctive user profiles with links to each user’s valuable contributions and content. For example, as part of reinforcing her personal brand, an expert in textile design would want to share links to content that she thinks her fans will find noteworthy.

But don’t confuse the need to support strong profiles for contributors with the need for a strong or prominent karma system. When a new brand is being introduced to a market, whether it’s a new kind of dish soap or a new blogger on a topic, a karma system that favors established participants can be a disincentive to contribute content. A community decides how to treat newcomers—with open arms or with suspicion. An example of the latter is eBay, where all new sellers must “pay their dues” and bend over backward to get a dozen or so positive evaluations before the market at large will embrace them as trustworthy vendors. Whether you need karma in your commercial incentive model depends on the goals you set for your application. One possible rule of thumb: if users are going to pass money directly to other people they don’t know, consider adding karma to help establish trust.

There are two main subcategories of commercial incentives:

- Direct revenue incentives

Extracting commercial value (better yet, cash) directly from the user as soon as possible

- Branding incentives

Creating indirect value by promotion—revenue will follow later

Direct revenue incentives

A direct revenue incentive is at work whenever someone forks over money for access to a content contributor’s work and the payment ends up, sometimes via an intermediary or two, in the contributor’s hands. The mechanism for payment can be a subscription, a short-term contract, a transaction for goods or services, on-content advertising like Google’s AdSense, or even a PayPal-based tip jar.

When real money is involved, people take trust seriously, and reputation systems play a critical role in establishing trust. By far the most well-known and studied reputation system for online direct revenue business is eBay’s buyer and seller feedback (karma) reputation model. Without a way for strangers to gauge the trustworthiness of the other party in a transaction, no online auction market could exist.

When you’re considering reputation systems for an application with a direct revenue incentive, step back and make sure that you wouldn’t be better off with an altruistic or an egocentric incentive instead. Despite what you may have learned in school, money is not always the best motivator, and for consumers it’s a pretty big barrier to entry. The ill-fated Google Answers failed because it was based on a user-to-user direct revenue incentive model in which competing sites, such as WikiAnswers, provided similar results for free (financed, ironically, by using Google AdSense to monetize answer pages indexed by, you guessed it, Google).

Incentives through branding: Professional promotion

Various forms of indirect commercial incentives can together be referred to as branding, the process of professionally promoting people, goods, or organizations. The advertiser’s half of the direct revenue incentive model lives here, too. The goal is to expose the audience to your message and eventually capture value in the form of a sale, a subscriber, or a job.

Typically, the desired effects of branding are preceded by numerous branding activities: writing blog posts, running ads, creating widgets to be embedded on other sites, participating in online discussions, attending conferences, and so on. Reputation systems offer one way to close that loop by capturing direct feedback from consumers. They also help measure the success of branding activities.

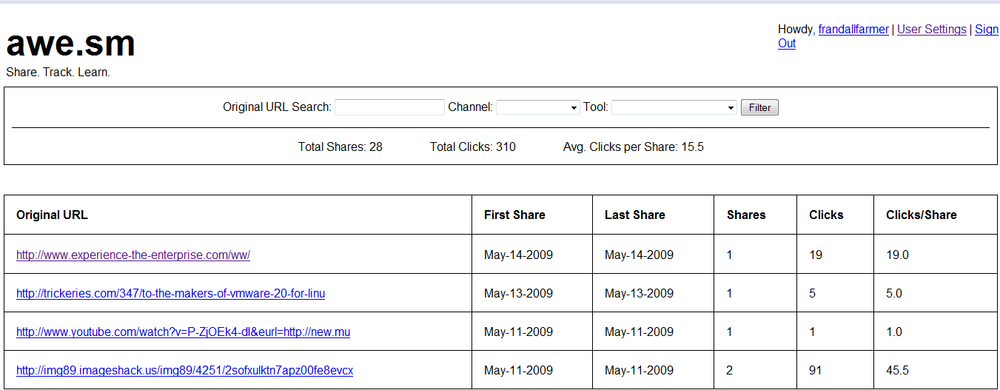

Take the simple act of sharing a URL on a social site such as Twitter. Without a reputation system, you have no idea how many people followed your link or how many other people shared it. The URL-shortening service Awe.sm, shown in Figure 5-5, provides both features: it tracks how many people click your link and how many different people shared the URL with others.
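The two features described above, counting clicks and counting distinct sharers, reduce to very simple bookkeeping. The sketch below illustrates that bookkeeping only; it does not reflect Awe.sm's actual implementation or API, and the `LinkTracker` class and its method names are hypothetical.

```python
from collections import defaultdict


class LinkTracker:
    """Counts clicks and distinct sharers per short link (hypothetical sketch)."""

    def __init__(self):
        self.clicks = defaultdict(int)   # short URL -> total click count
        self.sharers = defaultdict(set)  # short URL -> set of user IDs who shared it

    def record_share(self, url: str, user_id: str) -> None:
        # A set ignores repeat shares by the same user, so we count people, not posts.
        self.sharers[url].add(user_id)

    def record_click(self, url: str) -> None:
        self.clicks[url] += 1

    def stats(self, url: str) -> dict:
        # .get() avoids creating empty entries just by reading stats.
        return {
            "clicks": self.clicks.get(url, 0),
            "distinct_sharers": len(self.sharers.get(url, ())),
        }
```

Counting distinct sharers separately from raw clicks matters for branding: many clicks from one share suggest a single popular post, while many distinct sharers suggest the message is spreading on its own.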

For contributors who are building a brand, public karma systems are a double-edged sword. If a contributor is at the top of his market, his karma can be a big indicator of trustworthiness, but most karma scores can’t distinguish the inexperience of a newly registered account from complete incompetence, and this handicaps new entrants.

An application can address this experience-inequity by including time-limited scores in the karma mix. For example, B.F. Skinner was a world-renowned and respected behavioral scientist, but his high reputation has a weakness—it’s old. In certain contexts, it’s even useless. For example, his great reputation would do me no good if I were looking for a thesis advisor, because he’s been dead for almost 20 years.
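One way to include a time-limited score in the karma mix is to blend a recent-window total with the all-time total, so that recent activity counts for more and an old reputation slowly loses its dominance. The sketch below assumes each user's karma is a list of timestamped point events; the `karma_mix` function, the 90-day window, and the 0.7 weighting are hypothetical tuning choices, not recommendations.

```python
from datetime import datetime, timedelta


def karma_mix(events, now, window_days=90, recent_weight=0.7):
    """Blend an all-time score with a time-limited (recent-window) score.

    events: list of (timestamp, points) pairs for one user.
    window_days and recent_weight are hypothetical tuning parameters.
    """
    cutoff = now - timedelta(days=window_days)
    all_time = sum(points for _, points in events)
    recent = sum(points for ts, points in events if ts >= cutoff)
    # Recent points are counted in both terms, so active users get full credit.
    return recent_weight * recent + (1 - recent_weight) * all_time
```

Under these assumptions, a veteran who earned 100 points five years ago and nothing since scores about 30, while a newcomer who earned 40 points last week scores about 40. The newcomer is not handicapped by a short history, and the veteran's old reputation still counts for something.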

Egocentric incentives

Egocentric incentives are often exploited in the design of online computer games and many reputation-based websites. The simple desire to accomplish a task taps into deeply hardwired motivations described in behavioral psychology as classical and operant conditioning (which involves training subjects to respond to food-related stimuli) and schedules of reinforcement. This research indicates that people can be influenced to repeat simple tasks by providing periodic rewards, even a reward as simple as a pleasing sound.

But an individual animal’s behavior in the social vacuum of a research lab is not the same as the ways in which we very social humans reflect our egocentric behaviors to one another. Humans make teams and compete in tournaments. We follow leaderboards comparing ourselves to others and comparing groups that we associate ourselves with. Even if our accomplishments don’t help another soul or generate any revenue for us personally, we often want to feel recognized for them. Even if we don’t seek accolades from our peers, we want to be able to demonstrate mastery of something—to hear the message, “You did it! Good job!”

Therefore, in a reputation system based on egocentric incentives, user profiles are a key requirement. In this kind of system, users need someplace to show off their accomplishments—even if only to themselves. Almost by definition, egocentric incentives involve one or more forms of karma. Even with only a simple system of granting trophies for achievements, users will compare their collections to one another. New norms will appear that look more like market norms than social norms: people will trade favors to advance their karma, people will attempt to cheat to get an advantage, and those who feel they can’t compete will opt out altogether.

Egocentric incentives and karma do provide very powerful motivations, but they are almost antithetical to altruistic ones. The egocentric incentives of many systems have been overdesigned, leading to communities consisting almost exclusively of experts. Consider just about any online role-playing game that has survived more than three years. For example, to retain its highest-level users and the revenue stream they produce, World of Warcraft must continually produce new content targeted at those users. If it stops producing new content for its most dedicated users, its business will collapse. This elder-game focus stunts its own growth by all but abandoning improvements aimed at acquiring new users. When new users do arrive (usually in the wake of a marketing promotion), they end up playing alone because the veteran players are only interested in the new content and don’t want to bother going through the long slog of playing through the lowest levels of the game yet again.

We describe three subcategories of egocentric incentives:

- Fulfillment incentives

The desire to complete a task, assigned by oneself, a friend, or the application

- Recognition incentives

The desire for the praise of others

- The Quest for Mastery

Personal and private motivation to improve oneself

Fulfillment incentives

The simplest egocentric incentive is the desire to complete a task: to fulfill a goal as work or personal enjoyment. Many reputation model designs that tap the desire of users to complete a complex task can generate knock-on reputations for use by other users or in the system as a whole. For example, some free music sites tap users’ desire to personalize their own radio stations in order to gather ratings, which the sites can then use to recommend music to others. Not only can reputation models that fulfill preexisting user needs gather more reputation claims than altruistic and commercial incentives can, but the data typically is of higher quality because it more closely represents users’ true desires.

Recognition incentives

Outward-facing egocentric incentives are all related to personal or group recognition. They’re driven by admiration, praise, or even envy, and they’re focused exclusively on reputation scores. That’s all there is to it. Scores often are displayed on one or more public leaderboards, but they can also appear as events in a user’s news feed—for example, messages from Zynga’s Mafia Wars game that tell all your friends you became a Mob Enforcer before they did, or that you gave them the wonderful gift of free energy that will help them get through the game faster.

Recognition is a very strong motivator for many users, but not all. If, for example, you award accumulating points for user actions in a context where altruism or commercial incentives produce the best contributions, your highest-quality contributors will end up leaving the community, while those who churn out lower-value content fill your site with clutter.

Always consider implementing a quality-based reputation system alongside any recognition incentives to provide some balance between quality and quantity. In May 2009, Wired writer Nate Ralph wrote “Handed Keys to Kingdom, Gamers Race to Bottom,” in which he details examples of how Digg and the games LittleBigPlanet and City of Heroes were hijacked by people who gamed user recognition reputation systems to the point of seriously decreasing the quality of the applications’ key content. Like clockwork, it didn’t take long for reputation abuse to hit the iTunes application library as well.
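As an illustration of balancing quality against quantity, the sketch below scores a contributor by the number of contributions multiplied by their average quality, where the average is smoothed toward a prior (a simple Bayesian-style average) so that a handful of items cannot claim a perfect record. The `balanced_score` function, the idea of expressing quality as a helpful-vote fraction, and the prior values are hypothetical.

```python
def balanced_score(contributions, prior_quality=0.5, prior_weight=5):
    """Combine quantity and quality for a recognition leaderboard.

    contributions: list of quality ratings in [0, 1], one per contribution
    (for example, the fraction of "helpful" votes each one received).
    The average is smoothed toward prior_quality as if prior_weight extra
    contributions of that quality existed; both values are hypothetical.
    """
    n = len(contributions)
    smoothed_quality = (sum(contributions) + prior_quality * prior_weight) / (n + prior_weight)
    return n * smoothed_quality
```

With these assumptions, a user who posts 100 low-quality items (0.1 each) scores about 11.9, while a user who posts 20 high-quality items (0.9 each) scores 16.4. A pure activity count would rank the first user five times higher.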

Personal or private incentives: The quest for mastery

Personal or private forms of egocentric incentives can be summarized as motivating a quest for mastery. For example, when people play solitaire or do crossword or sudoku puzzles alone, they do it simply for the stimulation and to see if they can accomplish a specific goal; maybe they want to beat a personal score or time or even just manage to complete the game. The same is true for all single-player computer games, which are much more complex. Even multiple-player games, online and off, such as World of Warcraft or Scrabble, have strong personal achievement components, such as bonus multipliers or character mastery levels.

Notice especially that players of online single-player computer games—or casual game sites, as they are known in the industry—skew more female and older than most game-player stereotypes you may have encountered. According to the Consumer Electronics Association, 65% of the gamers are women. And women between the ages of 35 and 49 are more likely to visit online gaming websites than even teenage boys. So, if you expect more women than men to use your application, consider staying away from recognition incentives and using mastery-based incentives instead.

Common mastery incentives include achievements (feedback that the user has accomplished one of a set of multiple goals), ranks or levels with caps (acknowledgments of discrete increases in performance—for example, Ensign, Corporal, Lieutenant, and General—but with a clear achievable maximum), and performance scores such as percentage accuracy, where 100% is the desired perfect score.
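Ranks with caps and percentage scores are straightforward to compute. The sketch below maps a point total onto the rank names from the example above using a sorted threshold list, so that any total beyond the top threshold simply stays at the top rank. The point thresholds and the `rank_for` and `accuracy_percent` helpers are hypothetical.

```python
from bisect import bisect_right

# Hypothetical point thresholds for each rank; the last rank is the cap.
RANK_THRESHOLDS = [0, 100, 500, 2000]
RANK_NAMES = ["Ensign", "Corporal", "Lieutenant", "General"]


def rank_for(points: int) -> str:
    """Return the capped rank for a point total.

    bisect_right finds how many thresholds the total has reached; points past
    the last threshold still map to the top rank, so there is a clear maximum.
    """
    index = bisect_right(RANK_THRESHOLDS, max(points, 0)) - 1
    return RANK_NAMES[index]


def accuracy_percent(correct: int, attempts: int) -> float:
    """Performance score where 100.0 is a perfect, achievable maximum."""
    if attempts == 0:
        return 0.0
    return 100.0 * correct / attempts
```

For example, 99 points is still Ensign, exactly 100 points is Corporal, and a million points is General, the same as 2,000. Keeping the cap in a single list also makes it obvious when someone proposes adding a new tier above it.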

Resist the temptation to keep extending the top tier of your mastery incentives. Doing so would lead to user fatigue and abuse of the system. Let the user win. It’s OK. They’ve already given you a lot of great contributions and likely will move on to other areas of your site.

Consider Your Community

Introducing a reputation system into your community will almost certainly affect its character and behavior in some way. Some of these effects will be positive (we hope! I mean, that’s why you’re reading this book, right?). But there are potentially negative side effects to be aware of, too. It is almost impossible to predict exactly what community effects will result from implementing a reputation system because—and we bet you can guess what we’re going to say here—it is so bound to the particular context for which you are designing. But the following sections present a number of community factors to consider early in the process.

What are people there to do?

This question may seem simple, but it’s one that often goes unasked: what, exactly, is the purpose of this community? What are the actions, activities, and engagements that users expect when they come to your site? Will those actions be aided by the overt presence of content- or people-based reputations? Or will the mechanisms used to generate reputations (event-driven inputs, visible indicators, and the like) actually detract from the primary experience that your users are here to enjoy?

Is this a new community? Or an established one?

Many of the models and techniques that we cover in this book are equally applicable, whether your community is a brand-new, aspiring one or has been around a while and already has acquired a certain dynamic. However, it may be slightly more difficult to introduce a robust reputation system into an existing and thriving community than to have baked-in reputation from the beginning. Here’s why:

An established community already has shared mores and customs. Whether the community’s rules have been formalized or not, users do indeed have expectations about how to participate, including an understanding of what types of actions and behaviors are viewed as transgressive. The more established and the more strongly held those community values are, the more important it is for you to match your reputation system’s inputs and rewards to those values.

Some communities may have been around long enough to suffer from problems of scale that would not affect an early stage or brand-new site. The amount of conversation (or noise, depending on how you look at it) might already be overwhelming. And some sites face migration issues:

Should you grandfather in old content or just leave it out of the new system?

Will you expect people to go back and grade old content retroactively? (In all likelihood, they won’t.)

In particular, it may be difficult to make changes to an existing reputation system. Whether the changes are as trivial and opaque as tweaking some of the parameters that determine a video’s popularity ranking or as visible and significant as introducing a new level designation for top performers, you are likely to encounter resistance (or, at the very least, curiosity) from your community. You are, in effect, changing the rules of your community, so expect the typical reaction: some will welcome the changes, others (typically, users who benefited most under the old rules) will denounce them.

We don’t mean to imply, however, that designing a reputation system for a new, greenfield community is an easy task. For a new community, rather than identify the community characteristics that you’d like to enhance (or leave unharmed), your task is to imagine the community effects that you’re hoping to influence, then make smart decisions to achieve those outcomes.

The competitive spectrum

Is your community a friendly, welcoming place? Helpful? Collaborative? Argumentative or spirited? Downright combative? Communities can put a whole range of behaviors on display, and it can be dangerous to generalize too much about any specific community. But it’s important to at least consider the overall character of the community that you plan to influence through your reputation system.

A very telling aspect of community character (though it’s not the only one worth considering) is the level of perceived competitiveness in your community (see Figure 5-6). That aspect includes the individual goals of community members and to what degree those goals coexist peacefully or conflict. What are the actions that community members engage in? How might those actions impinge on the experiences of other community members? Do comparisons or contests among people produce the desired behaviors?

In general, the more competitive a group of people in a community is, the more appropriate it is to compare those people (and the artifacts that they generate) to one another.

Read that last bit again, and carefully. A common mistake made by product architects (especially for social web experiences) is assuming a higher level of competitiveness than what really exists. Because reputation systems and their attendant incentive systems are often intended to emulate the principles of engaging game designs, designers often gravitate toward the aggressively competitive—and comparative—end of the spectrum.

Even the intermediate stages along the spectrum can be deceiving. For example, where would you place a community like Match.com or Yahoo! Personals along the spectrum? Perhaps your first instinct was to say, “I would place a dating site firmly in the ‘competitive’ part of the spectrum.” People are competing for attention, right? And dates?

Remember, though, the entire context for reputation in this example. Most importantly, remember the desires of the person who is doing the evaluating on these sites. A visitor to a dating site probably doesn’t want competition, and she may not view her activity on the site as competitive at all but as a collaborative endeavor. She’s looking for a potential dating partner who meets her own particular criteria and needs—not necessarily “the best person on the site.”

Tip

“The Competitive Spectrum” is expanded upon in Christian Crumlish and Erin Malone’s Designing Social Interfaces (O’Reilly).

Better Questions

Our goal for this chapter was to get you asking the right questions about reputation for your application. Do you need reputation at all? How might it promote more and better participation? How might it conflict with your goals for community use of your application? We’ve given you a lot to consider in this chapter, but your answers to these questions will be invaluable as you dive into Chapter 6, where we teach you how to define the what, who, how, and limits of your reputation system.