CHAPTER 7

Infrastructure Security

This chapter covers the following topics from Domain 7 of the CSA Guidance:

• Cloud Network Virtualization

• Security Changes with Cloud Networking

• Challenges of Virtual Appliances

• SDN Security Benefits

• Microsegmentation and the Software Defined Perimeter

• Hybrid Cloud Considerations

• Cloud Compute and Workload Security

Companies in every industry need to assume that a software revolution is coming.

—Marc Andreessen

Although the title of this chapter is “Infrastructure Security,” we need to be aware that the cloud is truly possible only because of the software that is used to automate and orchestrate the customer’s use of the underlying physical infrastructure. I’m not sure exactly when Mr. Andreessen made the preceding statement, but I think it’s fair to say the software revolution is now officially here. The great news is that you will be a part of it as a cloud security professional. This chapter covers some of the new software-driven technologies that make the cloud possible.

Virtualization isn’t exactly new. Some claim that it started back in the 1960s, while others argue the virtualization we know today began in the 1990s. Either way, today’s virtualization is very different from what it was when it was essentially a way to run multiple virtual servers on a single physical computer. Workloads in a cloud environment are so much more than just virtual machines. Today’s cloud infrastructure uses software defined networking (SDN), containers, immutable instances, serverless computing, and other technologies that are covered in this chapter.

How is this virtual infrastructure protected? How are detection and response performed? Where are your log files being stored? Are they protected in the event of an account compromise? These are just some of the questions that arise with these new forms of virtualized environments. If your plan for protection is based on installing an agent on a server instance and your plan for vulnerability assessments in the cloud is limited to running a scanner against a known IP address, you need to rethink your options in this new environment.

The physical infrastructure is the basis for everything in computing, including the cloud. Infrastructure is the foundation of computers and networks upon which we build everything else, including the layer of abstraction and resource pooling that makes the cloud rapidly scalable, elastic, and, well, everything that it is. Everything covered in this domain applies to all cloud deployment models, from private to public clouds.

I won’t cover data center security principles such as the best number of candle watts required for a walkway versus a parking lot, or how rocks should be integrated into the landscape to protect the data center, because such items aren’t covered in the CSA Guidance and you won’t be tested on them. Although you may be disappointed that I won’t cover the merits of boulders versus metal posts as barriers, I will cover workload virtualization, network virtualization, and other virtualization technologies in this chapter.

I’ll begin by discussing the two areas (referred to as macro layers in the CSA Guidance) of infrastructure. First are the fundamental resources such as physical processors, network interface cards (NICs), routers, switches, storage area networks (SANs), network attached storage (NAS), and other items that form the pools of resources that were covered back in Chapter 1. These items are, of course, fully managed by the provider (or by your company if you choose to build your own private cloud). Next we have the virtual/abstracted infrastructure (aka virtual world, or metastructure) that is created by customers who pick and choose what they want from the resource pools. This virtual world is managed by the cloud user.

Throughout the chapter, we will be taking a look at some networking elements that operate behind the scenes and are managed by the provider. Although the backgrounder information provided here won’t be included on your CCSK exam, this information will help you gain an appreciation for some of the new technologies that are being used when you adopt cloud services. I’ll call out when subject material is for your information and not on the exam.

By the way, I’m including callouts to relevant requests for comment (RFCs) throughout this chapter. If you are unfamiliar with RFCs, they are formal documents from the Internet Engineering Task Force (IETF) that are usually associated with publishing a standard (such as TCP, UDP, DNS, and so on) or informational RFCs that deal with such things as architectures and implementations. These are included here in case you really want to dive headfirst into a subject. RFCs can always be considered the authoritative source for networking subjects, but you do not need to read the associated RFCs in preparation for your CCSK exam.

Cloud Network Virtualization

Network virtualization abstracts the underlying physical network and is used for the network resource pool. How these pools are formed, and their associated capabilities, will vary based on the particular provider. Underneath the virtualization are three networks that are created as part of an Infrastructure as a Service (IaaS) cloud: the management network, the storage network, and the service network. Figure 7-1 shows the three networks and the traffic that each supports.

Figure 7-1 Common networks underlying IaaS

We’ll check out the virtualization technologies that run on top of the underlying physical networks that make this whole cloud thing a reality. Before we go there, though, I think a crash review of the famed OSI reference model is required. There’s nothing cloud-specific in the following OSI backgrounder, but it is important for you to know about, if for nothing more than understanding what I mean when I say something “operates at layer 2.”

OSI Reference Model Backgrounder

The Open Systems Interconnection (OSI) reference model is a pretty ubiquitous model that many people are familiar with. Ever heard the term “layer 7 firewall” or “layer 2 switch”? That’s a nod to the OSI reference model layers. You will not be tested on the OSI reference model as part of the CCSK exam because the CSA considers it assumed knowledge. As such, I’m not going to get into every tiny detail of the model but will look at it from a high level.

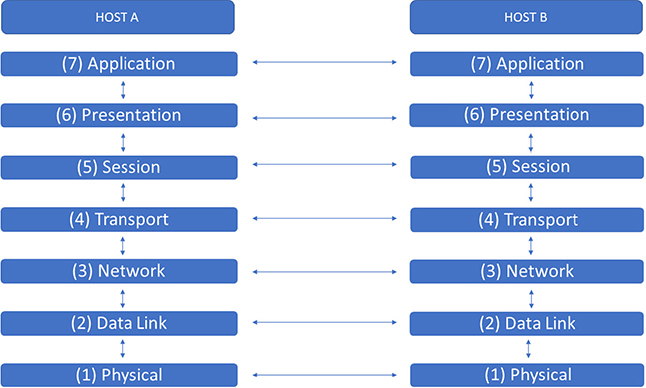

The OSI stack consists of seven layers (I call it a seven-layer bean dip), ranging from the application layer at the top (layer 7) down to the physical medium layer (layer 1). There’s a mnemonic to help us remember the OSI reference model: “All People Should Try New Diet Pepsi” (layer 7 to layer 1), or, alternatively, “Please Do Not Trust Sales People’s Advice” (layer 1 to layer 7). Figure 7-2 shows the OSI reference model in all its glory.

Figure 7-2 OSI reference model

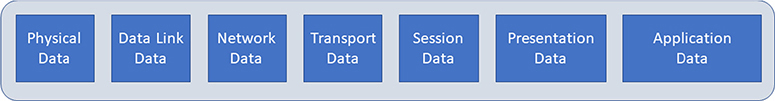

The reference model is reflected in how a packet is created. Application data is encapsulated (Figure 7-3) by each layer as it goes down the stack: each layer adds its own relevant information (such as the media access control [MAC] hardware address at layer 2) before the data is sent across the network. On the receiving machine, the corresponding layer data is stripped off at each layer as the data is passed up the stack.

Figure 7-3 Packet encapsulation at each layer on the OSI stack
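To make the encapsulation idea concrete, here is a minimal toy sketch in Python. The bracketed "headers" are made-up placeholders of my own, not real protocol formats; the only point is the order in which layers wrap the data on the way down and unwrap it on the way up.

```python
# Toy model of OSI-style encapsulation. The header byte strings are
# illustrative placeholders (assumptions), not real wire formats.

LAYERS = [  # ordered from the top of the stack downward
    ("transport", b"[TCP dst_port=443]"),
    ("network", b"[IP dst=203.0.113.10]"),
    ("data_link", b"[ETH dst=aa:bb:cc:dd:ee:ff]"),
]


def encapsulate(app_data: bytes) -> bytes:
    """Walk down the stack, prepending each layer's header."""
    unit = app_data
    for _name, header in LAYERS:
        unit = header + unit
    return unit


def decapsulate(frame: bytes) -> bytes:
    """Walk up the stack on the receiver, stripping the outermost header first."""
    unit = frame
    for name, header in reversed(LAYERS):
        if not unit.startswith(header):
            raise ValueError(f"unexpected {name} header")
        unit = unit[len(header):]
    return unit


frame = encapsulate(b"GET / HTTP/1.1")
```

Calling `decapsulate(frame)` hands back the original application data, which is all the receiving application ever sees.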

Table 7-1 highlights what happens at each layer of the OSI reference model.

Table 7-1 What Happens at Each Layer of the OSI Reference Model

VLANs

Virtual local area networks (VLANs) have been around for a very long time. VLAN technology was standardized by the Institute of Electrical and Electronics Engineers as IEEE 802.1Q (first published in 1998) and operates at layer 2 of the OSI model. VLAN technology essentially tags network frames (usually at the port on the switch to which a system is connected) to carve a physical network into separate broadcast domains. This creates a form of network segmentation, not isolation. Segmentation can work in a single-tenant environment like a trusted internal network but isn’t optimal in a cloud environment that is multitenant by nature.

Another issue when it comes to the use of VLANs in a cloud environment is address space. Per the IEEE 802.1Q standard, a network can support at most 4096 VLANs (the tag uses 12 bits for the VLAN ID, so 2^12 = 4096). That’s not a whole lot when you consider there are IaaS providers out there with more than a million customers.

VXLAN

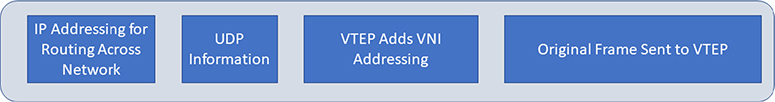

Virtual Extensible LAN (VXLAN) isn’t mentioned at all in the CSA Guidance, but core features of VXLAN are discussed as part of SDN, so you should know this material for your exam. (And, as a bonus, it will help you understand how SDN does what it does.) VXLAN is a network virtualization technology standard (RFC 7348, released in August 2014) that was created by VMware and Cisco (along with others) and supported by numerous vendors to address the scalability and isolation issues with VLANs. VXLAN encapsulates layer 2 frames within UDP packets by using a VXLAN Tunnel End Point (VTEP), essentially creating a tunneling scenario where the layer 2 packets are “hidden” while they traverse a network, using layer 3 (such as IP) addressing and routing capabilities. Inside these UDP packets, a VXLAN network identifier (VNI) is used for addressing; this packet encapsulation is shown in Figure 7-4. Unlike the VLAN model discussed earlier, VXLAN uses 24 bits for tagging purposes, meaning approximately 16.7 million addresses, thus addressing the scalability issue faced by normal VLANs.

Figure 7-4 Example of VXLAN packet encapsulation

As you can see in Figure 7-4, the original Ethernet frame is received by the tunnel endpoint, which then adds its own addressing, throws that into a UDP packet, and assigns a routable IP address; then this tunneled information is sent on its merry way to its destination, which is another tunnel endpoint. Using VXLAN to send this tunneled traffic over a standard network composed of routers (such as the Internet) is known as creating an overlay network (conversely, the physical network is called an underlay network). By encapsulating the long hardware addresses inside a routable protocol, you can extend the typical “virtual network” across office buildings or around the world (as shown in Figure 7-5).

Figure 7-5 Example of VXLAN overlay network
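If you'd like to see what that encapsulation looks like in bytes, the following sketch packs and parses the 8-byte VXLAN header laid out in RFC 7348 (8 flag bits, 24 reserved bits, the 24-bit VNI, and 8 more reserved bits). The function names are my own, and the sketch stops at the VXLAN header; the outer UDP, IP, and Ethernet headers that a real VTEP adds are left out.

```python
import struct

VXLAN_UDP_PORT = 4789        # IANA-assigned destination port (RFC 7348)
VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag: the VNI field is valid


def build_vxlan_header(vni: int) -> bytes:
    """Return the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: 8 flag bits followed by 24 reserved bits.
    # Word 2: 24-bit VNI followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from the first 8 bytes of a VXLAN payload."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return vni_word >> 8


def encapsulate(inner_ethernet_frame: bytes, vni: int) -> bytes:
    """What a tunnel endpoint would hand to UDP: VXLAN header + original frame."""
    return build_vxlan_header(vni) + inner_ethernet_frame


vlan_ids = 2**12    # 12-bit VLAN ID
vxlan_ids = 2**24   # 24-bit VNI
```

The last two lines are the scalability argument in arithmetic form: 4,096 identifiers for VLANs versus 16,777,216 for VXLAN.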

Networking Planes Backgrounder

Before we get to the SDN portion of this chapter, there’s one last area that we should explore regarding the different planes associated with networking equipment in general. Every networking appliance (such as a router or switch) contains three planes—management, control, and data—that perform different functions. The following is a quick discussion of each plane:

• Management plane Maybe this term sounds familiar? It should! Just as you manage the metastructure in a cloud via the management plane, you access the management plane to configure and manage a networking device. This plane exposes interfaces such as a CLI, APIs, and web-based graphical interfaces, which administrators connect to so they can manage a device. Long story short, you access the management plane to configure the control plane.

• Control plane This plane establishes how network traffic is controlled. It holds the initial configuration and is essentially the “brains” of the network device. This is where you configure routing protocols (such as Routing Information Protocol [RIP], Open Shortest Path First [OSPF], and so on), spanning tree algorithms, and other signaling processes. Essentially, you configure the networking logic in the control plane in advance so traffic will be processed properly by the data plane.

• Data plane This is where the magic happens! The data plane carries user traffic and is responsible for forwarding packets from one interface to another based on the configuration created at the control plane. The data plane uses a flow table to send traffic where it’s meant to go based on the logic dictated by the control plane.

Understanding the three planes involved is important before we get to the next topic: software defined networking. Just remember that the control plane is the brains and the data plane is like the traffic cop that sends packets to destinations based on what the control plane dictates.
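Here is a rough sketch of how the three planes divide the work inside a single traditional device. The `Router` class and its method names are hypothetical and invented for illustration; a real device computes its forwarding state from routing protocols rather than from a sorted dictionary.

```python
import ipaddress


class Router:
    """Toy single-box device that holds all three planes (illustrative only)."""

    def __init__(self):
        self.routes = {}      # control-plane state: prefix -> interface
        self.flow_table = []  # data-plane state, derived from the routes

    # Management plane: the interface an administrator calls.
    def configure_route(self, prefix: str, interface: str) -> None:
        self.routes[ipaddress.ip_network(prefix)] = interface
        self._recompute()

    # Control plane: decide in advance how traffic should be handled.
    def _recompute(self) -> None:
        # Most specific prefix first, so the first match is the best match.
        self.flow_table = sorted(
            self.routes.items(), key=lambda kv: kv[0].prefixlen, reverse=True
        )

    # Data plane: forward purely on the precomputed table.
    def forward(self, dst: str):
        addr = ipaddress.ip_address(dst)
        for prefix, interface in self.flow_table:
            if addr in prefix:
                return interface
        return None  # no matching entry, so the packet is dropped
```

Notice that `forward` never makes a decision of its own; it only looks up what the control plane already worked out.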

Software Defined Networking

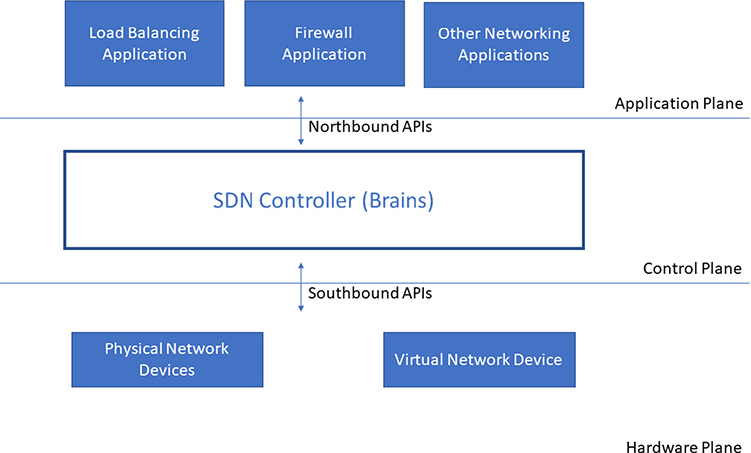

SDN is an architectural concept that enables centralized management and emphasizes the role of software in running networks to dynamically control, change, and manage network behavior. Centralized management is achieved by breaking out the control plane (brains) and making this plane part of an SDN controller that manages the data plane, which remains on the individual networking components (physical or virtual). Dynamic change and management are supplied through the application plane. All three of these planes (mostly) communicate via APIs. Figure 7-6 shows the various planes in an SDN environment.

Figure 7-6 Simplified SDN architecture

So SDN separates the control plane from the data plane. Wait…hold on. Those are already separate, right? Exactly so, but as I said in the previous section, in traditional networking gear, all three planes exist in the single hardware appliance. SDN moves the control plane from the actual networking device to an SDN controller. This consolidation and centralization of control result in a more agile and flexible networking environment. Remember that SDN isn’t a networking protocol, but VXLAN is a networking protocol. Quite often, as in the CSA Guidance, people will combine the two technologies when talking about SDN, but just remember that SDN is an architectural concept that can be realized by using a protocol such as VXLAN.

You can’t spell SDN without OpenFlow. Well, let me rephrase that: OpenFlow is integral to the whole SDN discussion and is referenced 34 times in informational RFC 7426, “SDN Layers and Architecture Terminology.” The OpenFlow protocol originated at Stanford University (version 1.0 was published in 2009), has been maintained by the Open Networking Foundation (ONF) since 2011, and is considered the enabler of SDN. In fact, many people refer to the southbound APIs as the “OpenFlow Specification.” OpenFlow is defined in RFC 7426 as “a protocol through which a logically centralized controller can control an OpenFlow switch. Each OpenFlow-compliant switch maintains one or more flow tables, which are used to perform packet lookups.”

There are multiple open source OpenFlow SDN controllers available in the marketplace, such as the OpenDaylight Project and Project Floodlight. In addition to the open source OpenFlow standard, many vendors have seen the power of SDN and have come up with their own proprietary SDN implementations (such as Cisco’s Application Centric Infrastructure [ACI] or Juniper Contrail).

The OpenFlow SDN controllers communicate with the OpenFlow-compliant networking devices over the southbound APIs, using the OpenFlow specification to configure and manage the flow tables. Communication between the controller and the applications occurs over the northbound interface. There is no standard communication method established for these northbound interfaces, but typically APIs are used.
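The controller-and-switch relationship can be sketched in a few lines. This is a conceptual model only: the class and method names are hypothetical, a plain method call stands in for the southbound protocol, and nothing here speaks real OpenFlow.

```python
class Switch:
    """Data plane only: holds a flow table and forwards by lookup."""

    def __init__(self, name: str):
        self.name = name
        self.flow_table = {}  # match (destination MAC) -> output port

    def install_flow(self, dst_mac: str, out_port: int) -> None:
        # Stands in for the southbound API that the controller calls.
        self.flow_table[dst_mac] = out_port

    def forward(self, dst_mac: str):
        return self.flow_table.get(dst_mac)  # None means no matching flow


class Controller:
    """Centralized control plane shared by many switches."""

    def __init__(self):
        self.switches = {}

    def register(self, switch: Switch) -> None:
        self.switches[switch.name] = switch

    def connect_host(self, dst_mac: str, ports_by_switch: dict) -> None:
        # Stands in for a northbound call: an application states its intent
        # and the controller programs every affected switch.
        for name, port in ports_by_switch.items():
            self.switches[name].install_flow(dst_mac, port)
```

One call to `connect_host` updates every switch on the path, which is the agility that centralizing the control plane is meant to buy you.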

Through the implementation of SDN (and enabling technologies), cloud providers can offer clients much higher flexibility and isolation. By design, cloud providers offer clients what they are generally accustomed to getting. For example, clients can select whatever IP range they want in the cloud environment, create their own routing tables, and architect the metastructure networking exactly the way they want it. This is all possible through the implementation of SDN (and related technologies). The SDN implementation not only hides all the underlying networking mechanisms from customers, but it also hides the network complexities from the virtual machines running in the provider’s network. All the virtual instance sees is the virtual network interface provided by the hypervisor, and nothing more.

Network Functions Virtualization

Network functions virtualization (NFV) is another area that isn’t covered in the CSA Guidance, so it won’t be on your test, but it’s a technology that I think is worth exploring a little bit. NFV is different from SDN, but the two can work together in a virtualized network, and you’ll often see “SDN/NFV” used in multiple publications.

The NFV specification was originally created by the European Telecommunications Standards Institute (ETSI) back in 2013. The goal of NFV is to transform network architectures by replacing physical network equipment that performs network functions (such as a router) with virtual network functions (VNFs) that could be run on industry-standard servers and deliver network functions through software. According to the ETSI, such an approach could decrease costs, improve flexibility, speed innovation through software-based service deployment, improve efficiencies through automation, reduce power consumption, and create open interfaces to enable different vendors to supply decoupled elements.

NFV requires a lot of virtualized resources, and as such, it requires a high degree of orchestration to coordinate, connect, monitor, and manage. I’m sure you can already see how SDN and NFV can work together to drive an open-virtualized networking environment that would benefit a cloud service provider. Remember the distinction between the two in that SDN separates the control plane from the underlying network devices and NFV can replace physical networking appliances with virtualized networking functions.

How Security Changes with Cloud Networking

Back in the good old days of traditional networking, security was a whole lot more straightforward than it is today. Back then, you may have had two physical servers with physical network cards that would send bits over a physical network, and then a firewall, intrusion prevention system (IPS), or another security control would inspect the traffic that traversed the network. Well, those days are gone in a cloud environment. Now, virtual servers use virtual network cards and virtual appliances. Although cloud providers do have physical security appliances in their environments, you’re never going to be able to ask your provider to install your own physical appliances in their cloud environment.

Pretty much the only commonality between the old days of physical appliance security controls and today’s virtual appliances is that both can be potential bottlenecks and single points of failure. Not only can appliances become potential bottlenecks, but software agents installed in virtual machines can also impact performance. Keep this in mind when architecting your virtual controls in the cloud, be they virtual appliances or software agents.

These new ways of securing network traffic in a cloud environment offer both challenges and benefits.

Challenges of Virtual Appliances

Keep in mind that both physical and virtual appliances can be potential bottlenecks and single points of failure. After all, virtual machines can crash just like their associated physical servers, and an improperly sized virtual appliance may not be able to keep up with the amount of processing that is actually required. Also, remember the costs associated with the virtual appliances that many vendors now offer in many Infrastructure as a Service (IaaS) environments.

Think about the following simplistic scenario for costs associated with these things: Suppose your cloud environment consists of two regions, both with six subnets, and the vendor recommends that their virtual appliance be placed in each subnet. This translates to a total of 12 virtual appliances required. Single point of failure, you say? Well, assuming the vendor supports failover (more on this later), you need to double the required virtual firewall appliances to 24. Say the appliance and instance cost a total of $1 an hour. That’s $24 an hour. You may be thinking that $24 an hour is not such a big deal. But unlike humans who work 8 hours a day (so I hear), appliances work 24 hours a day, so your super cheap $24 an hour means an annual cost of more than $210,000 (365 days equals 8760 hours, multiplied by $24, comes to $210,240). That’s for a super simple scenario! Now imagine your company has fully embraced the cloud, is running 70 accounts (for recommended isolation), and each account has two subnets (public and private) and a failover region as part of the company’s business continuity/disaster recovery (BC/DR) planning. That requires 280 (70 accounts × 2 subnets × 2 regions) appliances at $1 an hour, for a grand total of more than $2.4 million a year. Darn. I forgot the single point of failure aspect. Make that roughly $4.9 million a year. This is a great example of how architecture in the cloud can financially impact an organization.
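If you want to rerun that arithmetic with your own numbers, a small calculator like the following will do. The $1 hourly rate is the assumption used in the scenario above, not a real vendor price, and the function name is my own.

```python
HOURS_PER_YEAR = 365 * 24  # 8760


def annual_cost(appliances: int, hourly_rate: float = 1.0,
                redundant: bool = False) -> float:
    """Yearly cost of running appliances around the clock.

    redundant=True doubles the count to model a failover pair per appliance.
    """
    count = appliances * 2 if redundant else appliances
    return count * hourly_rate * HOURS_PER_YEAR


simple = annual_cost(2 * 6, redundant=True)         # 2 regions x 6 subnets, paired
large = annual_cost(70 * 2 * 2)                     # 70 accounts x 2 subnets x 2 regions
large_ha = annual_cost(70 * 2 * 2, redundant=True)  # same, with failover pairs
```

The three results are $210,240, $2,452,800, and $4,905,600 a year, respectively.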

On the other hand, the capabilities offered by virtual appliance vendors are all over the map. Some providers may support high availability and auto-scaling to take advantage of the elasticity of the cloud and offer other features to address potential performance bottleneck scenarios; others may not support these features at all. You must understand exactly what the provider is offering.

The vendors I particularly love (which shall remain nameless) are those that advertise that their “Next Generation Firewall” product offers multiple controls (firewall, intrusion detection system, intrusion prevention system, application control, and other awesome functionalities) and high availability through clustering, but when you actually read the technical documentation, nothing works in a cloud environment other than the core firewall service, and that cannot be deployed in HA mode in a cloud. This forces you into a single point of failure scenario.

It’s important that you identify virtual appliances that are designed to take advantage of not only elasticity but also the velocity of change associated with the cloud, such as moving across regions and availability zones. Another area to consider is the workloads being protected by the virtual appliance. Can a virtual appliance handle an auto-scaling group of web servers that suddenly jumps from five servers to fifty and then back to five in a matter of minutes, or can it handle changes to IP addresses associated with particular workloads? If the virtual appliance tracks everything by manually configured IP addresses, you’re immediately in trouble, because the IP addresses associated with particular virtual servers can change often, especially in an immutable environment (see the later section “Immutable Workloads Enable Security”).

Benefits of SDN Security

I’ve covered the purpose and basic architecture of SDN, but I didn’t specifically call out the security benefits associated with SDN that you will need to know for your CCSK exam. Here, then, are the security benefits associated with SDN according to the CSA Guidance:

• Isolation You know that SDN (through associated technologies) offers isolation by default. You also know that, thanks to SDN, you can run multiple networks in a cloud environment using the same IP range. There is no logical way these networks can directly communicate because of addressing conflicts. Isolation can be a way to segregate applications and services with different security requirements.

• SDN firewalls These may be referred to as “security groups.” Different providers may have different capabilities, but SDN firewalls are generally applied to the virtual network card of a virtual server. They are just like a regular firewall in that you make a “policy set” (aka firewall ruleset) that defines acceptable traffic for both inbound (ingress) and outbound (egress) network traffic. This means SDN firewalls have the granularity of host-based firewalls but are managed like a network appliance. As part of the virtual network itself, these firewalls can also be orchestrated. How cool is it that you can create a system that notices a ruleset change and automatically reverts back to the original setting and sends a notification to the cloud administrator? That’s the beauty of using provider-supplied controls that can be orchestrated via APIs.

• Deny by default SDN networks are typically deny-by-default-for-everything networks. If you don’t establish a rule that explicitly allows something, the packets are simply dropped.

• Identification tags The concept of identifying systems by IP address is dead in a cloud environment; instead, you need to use tagging to identify everything. This isn’t a bad thing; in fact, it can be a very powerful resource to increase your security. Using tags, you could automatically apply a security group to every server, where, for example, a tag states that a server is running web services.

• Network attacks Many low-level network attacks against your systems and services are eliminated by default. Sniffing other tenants’ traffic on an SDN network, for example, isn’t possible because of inherent isolation capabilities. Other attacks, such as ARP spoofing (forging ARP messages so that traffic meant for another system’s IP address is delivered to the attacker’s hardware address), can be eliminated by the provider using the control plane to identify and mitigate attacks. Note that this doesn’t necessarily stop all attacks immediately, but there are numerous research papers discussing the mitigation of many low-level attacks through the software-driven functionality of SDN.
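Two of the benefits above, deny by default and identification tags, are easy to picture in code. The following is a minimal sketch and not any provider's API; the `Rule` and `SecurityGroup` types, the `role` tag, and the `web-sg` group name are all assumptions made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Rule:
    direction: str  # "ingress" or "egress"
    protocol: str   # for example "tcp"
    port: int


@dataclass
class SecurityGroup:
    name: str
    rules: set = field(default_factory=set)

    def allows(self, direction: str, protocol: str, port: int) -> bool:
        # Deny by default: only an explicit rule permits traffic.
        return Rule(direction, protocol, port) in self.rules


# Hypothetical mapping from a workload tag to the group it should receive.
GROUP_FOR_ROLE = {
    "web": SecurityGroup("web-sg", {Rule("ingress", "tcp", 443)}),
}


def group_for(instance_tags: dict):
    """Pick a security group from the instance's 'role' tag, not its IP address."""
    return GROUP_FOR_ROLE.get(instance_tags.get("role"))


web = group_for({"role": "web", "env": "prod"})
```

Because the lookup keys on a tag, a web server that is replaced and comes back with a new IP address still lands in the same group.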

Microsegmentation and the Software Defined Perimeter

You know that a VLAN segments out networks. You can take that principle to create zones, where groupings of systems (based on classification, perhaps) can be placed into their own zones. This moves network architecture from the typical “flat network,” where network traffic is inspected in a “north–south” model (once past the perimeter, there is free lateral movement), toward a “zero-trust” network based on zones, and traffic can be inspected in both a “north–south” and “east–west” (or within the network) fashion. That’s the same principle behind microsegmentation—except microsegmentation takes advantage of network virtualization to implement a fine-grained approach to creating these zones.

As the networks themselves are defined in software and no additional hardware is required, it is far easier and more practical for you to implement smaller zones to create a more fine-grained approach to workload zoning: for example, grouping five web servers together in a microsegmented zone rather than creating a single demilitarized zone (DMZ) with hundreds of servers that shouldn’t need to access one another. This enables the implementation of fine-grained “blast zones,” where if one of the web servers is compromised, the lateral movement of an attacker would be limited to the five web servers, not every server in the DMZ. This fine-grained approach isn’t very practical with traditional zoning restrictions associated with using VLANs to group systems together (remember that VLANs have a maximum of 4096 identifiers versus 16.7 million for VXLAN).

Now this isn’t to say that implementing microsegmentation is a zero-cost effort. There may very well be an increase in operational costs associated with managing all of the networks and their connectivity requirements.
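The blast-zone idea can be expressed as a quick comparison. This sketch assumes free movement inside a zone and none across zones, which is a simplification, and the server names and the count of 200 servers are invented for illustration.

```python
def blast_radius(zones: dict, compromised: str) -> set:
    """Other hosts an attacker can reach laterally from a compromised host.

    Simplifying assumption: free movement within a zone, none across zones.
    """
    for members in zones.values():
        if compromised in members:
            return set(members) - {compromised}
    return set()


web = [f"web{i}" for i in range(1, 6)]         # five web servers
others = [f"srv{i}" for i in range(1, 196)]    # 195 unrelated servers

flat_dmz = {"dmz": web + others}
microsegmented = {"web-tier": web, "everything-else": others}
```

With the flat DMZ, a compromise of `web1` exposes the other 199 servers; with the microsegmented layout, it exposes only the other four web servers.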

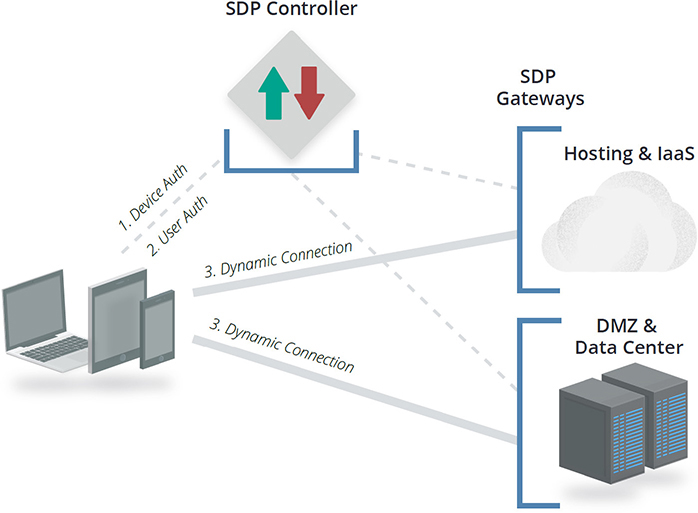

Building on the concepts discussed in SDN and microsegmentation, the CSA has developed a model called the Software Defined Perimeter (SDP). The SDP combines both device and user authentication to provision network access to resources dynamically. There are three components in SDP (as shown in Figure 7-7):

• An SDP client (agent) installed on a device

• The SDP controller that authenticates and authorizes SDP clients based on both device and user attributes

• The SDP gateway that serves to terminate SDP client network traffic and enforces policies in communication with the SDP controller

Figure 7-7 Software Defined Perimeter. (Used with permission of Cloud Security Alliance.)
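The interplay of the three SDP components can be sketched as follows. This is only a conceptual model of the roles listed above, not the CSA's specification: the device IDs, user names, resource names, and class names are all hypothetical, and real implementations authenticate with cryptographic credentials rather than dictionary lookups.

```python
# All identifiers below are invented for illustration.
TRUSTED_DEVICES = {"laptop-123"}
USER_ENTITLEMENTS = {"alice": {"payroll-app"}}


class SDPController:
    def authorize(self, device_id: str, user: str) -> set:
        """Return the resources this device and user pair may reach."""
        if device_id not in TRUSTED_DEVICES:
            return set()  # unknown device: nothing is reachable
        return set(USER_ENTITLEMENTS.get(user, set()))


class SDPGateway:
    def __init__(self, controller: SDPController):
        self.controller = controller

    def connect(self, device_id: str, user: str, resource: str) -> bool:
        # The gateway terminates the client's traffic and enforces
        # whatever the controller decided.
        return resource in self.controller.authorize(device_id, user)


gateway = SDPGateway(SDPController())
```

A request succeeds only when both the device and the user check out, so a valid user on an unrecognized device gets nothing.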

Additional Considerations for CSPs or Private Clouds

Unlike consumers of the cloud, CSPs are required to properly secure the physical aspects of a cloud environment that everything is built upon. A security failure at the physical layer can lead to devastating results, where all customers are impacted. As the consumer, you must always remember to ensure that the provider has acceptable security in place at the physical layer, because other tenants, especially in a public cloud, must be considered as untrusted—and even potentially adversarial. Private clouds should be treated the same way.

As mentioned, SDN offers the ability to maintain segregation and isolation for the multitenant environment. Providers must always consider all tenants as being potentially hostile, and, as such, CSPs must address the additional overhead associated with properly establishing and maintaining SDN security controls. Providers must also expose security controls to cloud consumers so they can appropriately manage their virtual network security.

Perimeter security still matters in a cloud environment. Providers should implement standard perimeter security controls such as distributed denial of service (DDoS) protection, IPS, and other technologies to filter out hostile traffic before it can impact consumers in the cloud environment. This is not to say that consumers should assume that the provider will block any potentially hostile network traffic at the perimeter. Consumers are still required to implement their own network security.

Finally, as far as reuse of hardware is concerned, providers should always be able to properly clean or wipe any resources (such as volumes) that have been released by clients before reintroducing them into the resource pool to be used by other customers.

Hybrid Cloud Considerations

Recall from Chapter 1 an example of a hybrid cloud—when a customer has their own data center and also uses cloud resources. As far as large organizations are concerned, this connection is usually made via a dedicated wide area network (WAN) link or across the Internet using a VPN. In order for your network architects to incorporate a cloud environment (especially IaaS), the provider has to support arbitrary network addressing as determined by the customer, so that cloud-based systems don’t use the same network address range used by your internal networks.
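Checking for address collisions before you connect the two environments is straightforward with Python's standard `ipaddress` module. The function name is my own; the overlap test itself is the library's `overlaps` method.

```python
import ipaddress


def overlapping(on_prem: list, cloud: list) -> list:
    """Return (on_prem, cloud) CIDR pairs whose address ranges collide."""
    clashes = []
    for a in map(ipaddress.ip_network, on_prem):
        for b in map(ipaddress.ip_network, cloud):
            if a.overlaps(b):
                clashes.append((str(a), str(b)))
    return clashes
```

For example, `overlapping(["10.0.0.0/16"], ["10.0.128.0/20"])` reports a clash, while a cloud range of `172.16.0.0/16` against the same data center range comes back empty.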

As a customer, you must ensure that both areas have the same levels of security applied. Consider, for example, a flat network in which anyone within a company can move laterally (east–west) through the network and can access cloud resources as a result. You should always consider the network link to your cloud systems as potentially hostile and enforce separation between your internal and cloud systems via routing, access controls, and traffic inspection (such as firewalls).

The bastion (or transit) virtual network is a network architecture pattern mentioned in the CSA Guidance to provide additional security to a network architecture in the cloud. Essentially, a bastion network can be defined as a network that data must go through in order to get to a destination. With this in mind, creating a bastion network and forcing all cloud traffic through it can act as a chokepoint (hopefully in a good way). You can tightly control this network (as you would with a bastion host) and perform all the content inspection that is required to protect traffic coming in and out of the data center and cloud environments. Figure 7-8 shows the bastion network integration between a cloud environment and a data center.

Figure 7-8 Bastion/transit network between the cloud and a data center

Cloud Compute and Workload Security

A workload is a unit of processing. It can be executed on a physical server, on a virtual server, in a container, or as a function on someone else’s virtual server—you name it. Workloads will always run on some processor and will consume memory, and the security of those items is the responsibility of the provider.

Compute Abstraction Technologies

The CSA Guidance covers four types of “compute abstraction” technologies that you will likely encounter in a cloud environment. I will introduce each of these technologies next, but you’ll find more detailed information regarding each technology in later chapters, which I’ll point out in each section.

Virtual Machines

These are the traditional virtual machines that you are probably accustomed to. This is the same technology offered by all IaaS providers. A virtual machine manager (aka hypervisor) is responsible for creating a virtual environment that “tricks” a guest OS (an instance in cloud terminology) into thinking that it is talking directly to the underlying hardware, but in reality, the hypervisor takes any request for underlying hardware (such as memory) and maps it to a dedicated space reserved for that particular guest machine. This shows you the abstraction (the guest OS has no direct access to the hardware) that hypervisors perform. Allocating particular memory spaces assigned to a particular guest environment demonstrates segregation and isolation (the memory space of one guest OS cannot be accessed by another guest OS on the same machine).
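If it helps to see abstraction and isolation side by side, the following toy model may be useful. It is purely conceptual and is not how any real hypervisor is implemented; the `ToyHypervisor` class, the tenant names, and the memory sizes are all invented for this sketch.

```python
class ToyHypervisor:
    """Conceptual model only: maps guest addresses into per-guest host regions."""

    def __init__(self, host_memory_size):
        self.host_memory_size = host_memory_size
        self.next_free = 0
        self.regions = {}  # guest name -> (host base address, region size)

    def create_guest(self, name, size):
        """Reserve a dedicated slice of host memory for one guest."""
        if self.next_free + size > self.host_memory_size:
            raise MemoryError("not enough host memory for guest")
        self.regions[name] = (self.next_free, size)
        self.next_free += size

    def translate(self, name, guest_address):
        """Map a guest address to a host address inside that guest's region."""
        base, size = self.regions[name]
        if not 0 <= guest_address < size:
            # Isolation: a guest can never name memory outside its own region.
            raise PermissionError(f"{name} tried to reach outside its region")
        return base + guest_address


hv = ToyHypervisor(host_memory_size=4096)
hv.create_guest("tenant-a", 1024)
hv.create_guest("tenant-b", 1024)

# Abstraction: both guests believe they are using address 0,
# but the host keeps the underlying locations apart.
print(hv.translate("tenant-a", 0), hv.translate("tenant-b", 0))
```

The guest never sees the host address (abstraction), and a request outside its reserved region is refused rather than mapped into a neighbor's space (isolation).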

The isolation qualities of a hypervisor make multitenancy possible. If this isolation were to fail, the entire multitenancy nature, and therefore business model, of cloud services would be thrown into question. Some past vulnerabilities, such as Meltdown and Spectre (and subsequent offshoots), have threatened to break memory isolation. Both of these vulnerabilities had to do with exploiting the manner by which many CPUs handled accessing memory spaces, essentially bypassing the isolation of memory spaces. This meant that if such a vulnerability were ever exploited in a multitenant environment, one tenant could be able to access the memory used by another tenant. Note that this was not a software vulnerability; it was a hardware vulnerability in the way processors handled memory, behavior that operating systems also leveraged.

Meltdown and Spectre vulnerabilities needed to be addressed (patched) by the cloud provider because they involved hardware issues, but as many operating systems were also using the same techniques of memory mapping, customers were advised to patch their own operating systems to ensure that one running application could not access the memory space of another application on the same virtual server. (You can read more about the Meltdown vulnerability by checking out CVE-2017-5754.) This example is not meant to say in any way that isolation is a “broken” security control, because it isn’t. In fact, I think it goes to show just how quickly cloud vendors can address a “black swan” vulnerability. Mostly used in the financial world, the term black swan describes an event that isn’t even considered a risk, yet would have a devastating impact if realized. This hardware vulnerability is by all means a black swan event, so much so that when it was discovered, researchers dismissed it as an impossibility. Lessons have been learned, and both hardware and software vendors continue to improve isolation capabilities.

I cover virtual machine security a bit more in Chapter 8.

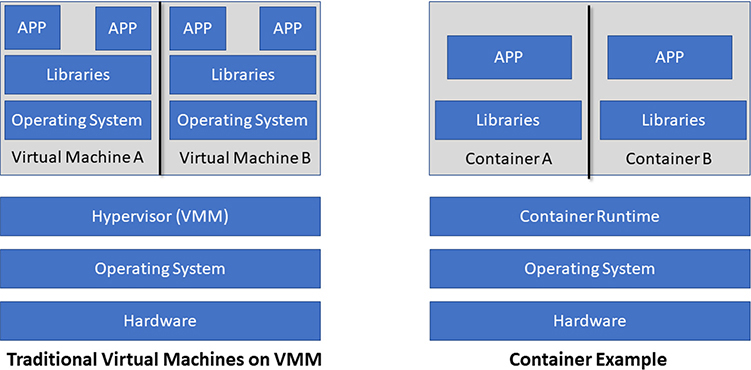

Containers

Containers are sort of an evolution of the virtual machines just covered. They are compute virtualization technologies, but they are applied differently. “A picture paints a thousand words” comes to mind here, so take a look at Figure 7-9 to see what containers are and how they differ from the standard virtualized machine running on top of a virtual machine manager.

Figure 7-9 Virtual machines versus containers

As you can see in the figure, containers differ from traditional virtual machines in that a container doesn’t have all the “bloat” of an operating system to deal with. (I mean, really, does your application require calc.exe in order to run?) Instead of cramming an operating system, required libraries, and the application itself into a 30GB package with a VM, you can place the application and any dependencies (such as libraries) the application requires in a much smaller package, or container if you will. With containers, the application uses a shared kernel and other capabilities of the base OS. The container provides code running inside a restricted environment with access only to the processes and capabilities defined in the container configuration. This technology isn’t specific to cloud technologies; containers themselves can run in a virtualized environment or directly on a single server.

Because a container is much smaller than a traditional virtual machine, it offers two primary benefits: First, a container can launch incredibly quickly because it involves no OS that needs time to boot up. This aspect can help you with agility. Second, a container can help with portability. Note that I said “help,” not “fully address,” portability. Moving a container is obviously a quick operation, but the container itself will require a supported shared kernel. But that only addresses the runtime (engine) dependency itself, and many providers will support the Docker Engine, which has become pretty much the default container engine today. Where portability can get derailed is in all of the other elements of containerization technology, such as container hosts, images, and orchestration via management systems (such as Docker Swarm or Kubernetes). You need to consider and address all of the aspects involved with containers if you plan on using containers to address portability across providers, or even between your data center and the cloud.

I discuss the various components of containers in Chapter 8.

Platform-Based Workloads

Platform-based workloads are defined in the CSA Guidance as anything running on a shared platform that isn’t a virtual machine or a container. As you can imagine, this encompasses an incredibly wide array of potential services. Examples given in the CSA Guidance include stored procedures running on a multitenant Platform as a Service (PaaS) database and a job running on a machine-learning PaaS. I’m going to stick with those two here, because I don’t want to add confusion to the subject. The main thing to remember for your CCSK exam is that although the provider may expose a limited amount of security options and controls, the provider is responsible for the security of the platform itself and, of course, everything else down to the facilities themselves, just like any other PaaS offering.

Serverless Computing

“Serverless,” in this context, is essentially a service exposed by the provider, where the customer doesn’t manage any of the underlying hardware or virtual machines and simply accesses exposed functions (such as running your Python application) on the provider’s servers. It’s “serverless” to you, but it absolutely runs on a backend, which could be built using containers, virtual machines, or specialized hardware platforms. The provider is responsible for securing everything about the platform they are exposing, all the way down to the facilities.

Serverless computing is discussed in Chapter 10.

How the Cloud Changes Workload Security

The main item that changes security in a cloud environment is multitenancy. It is up to the provider to implement segregation and isolation between workloads, and this should be one of the provider’s top priorities. Some providers may offer a dedicated instance capability, where one workload operates on a single physical server, but this option is usually offered at additional cost. This is true for both public and private cloud environments.

Immutable Workloads Enable Security

Immutable workloads are a key capability that a cloud customer with mature cloud processes will eventually implement. I say this because, in reality, most enterprises take a “like-for-like” approach (aka “We’ve always done it this way”) to cloud adoption at first. When you hear the term “immutable,” you should think of ephemeral, which means “lasting for a very short time.” However, immutable has some incredible security benefits. This section will cover what immutable workloads are and why you want to use them.

I always describe immutable servers as the “Vegas model of compute.” Unlike the traditional “pets” model of running servers where you patch them, take care of them, and they are around for years, the immutable model takes a “cattle” approach to server maintenance. The server runs, does its job, and then is terminated and replaced with a new server (some call this the “nuke and pave” approach). Now where does the “Vegas model” come into play? Well, if you’ve ever been to a casino and you sat at a table for a while, you will eventually see the dealer clap her hands and leave. Why do dealers do this? Because the casinos want to address any possible collusion between a dealer and a customer.

How does this translate to cybersecurity? You know that after an attacker successfully exploits a vulnerability, they’ll install a back door for continued access. The attacker knows it’s only a matter of time until you get around to patching the vulnerability. The attacker will slowly explore your network and siphon off data. It can take months until they achieve their ultimate goal. That said, in the typical approach, your system patch addresses the original vulnerability and does nothing to the back door that’s been installed. But in an immutable approach, you replace the server with a new instance from a patched server image, thus removing the vulnerability and the back door (assuming you do things properly!). You essentially “time out” your servers, just like Vegas times out casino dealers.

How is this done? It depends on how the provider exposes this functionality. Typically, you need to take advantage of the elasticity supplied by the cloud provider through the use of auto-scaling. You would state that you require, say, three identical web server instances running in an auto-scaling group (you can call this a cluster if you like). When the time comes (say, every 45 days), you would update the image with the latest patches (keep in mind that not all patches are security patches) and test the new image. Once you are happy with the image itself, you tell the auto-scaling group to use the new image for any instances it launches, and then you blow away (terminate) a server. The auto-scaling group will detect that an instance is missing and will build a new server instance based on that new image. At that point, one of your server instances will be built from the new image. Do your real-life error monitoring, and assuming everything passes, you rinse and repeat until the entire group is running the newly patched image.
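The rolling replacement just described can be sketched as a small simulation. This is not any provider's API; `ToyAutoScalingGroup`, `rolling_replace`, the image names, and the `healthy` check are all hypothetical stand-ins for whatever your provider and monitoring tools actually expose.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Instance:
    instance_id: str
    image: str


class ToyAutoScalingGroup:
    """Simulation only: keeps `desired` instances running from the current image."""

    def __init__(self, image, desired):
        self.image = image
        self.desired = desired
        self._ids = count(1)
        self.instances = []
        self.reconcile()

    def reconcile(self):
        """Launch instances from the current image until the desired count is met."""
        while len(self.instances) < self.desired:
            self.instances.append(Instance(f"i-{next(self._ids):04d}", self.image))

    def terminate(self, instance_id):
        self.instances = [i for i in self.instances if i.instance_id != instance_id]


def rolling_replace(group, new_image, healthy):
    """Replace old-image instances one at a time, checking health after each."""
    group.image = new_image
    for old in [i for i in group.instances if i.image != new_image]:
        group.terminate(old.instance_id)
        group.reconcile()  # the group notices the gap and builds a replacement
        if not healthy(group):
            raise RuntimeError("health check failed; stop the rollout")


group = ToyAutoScalingGroup(image="web-v1", desired=3)
rolling_replace(group, "web-v2", healthy=lambda g: len(g.instances) == g.desired)
print([(i.instance_id, i.image) for i in group.instances])
```

Notice that nothing in the loop patches a running server. Every instance that survives the rollout was built fresh from the tested image, which is the whole point of the approach.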

Sounds simple, right? Well, it is—at the metastructure level. Your applications need to be able to deal with this immutability as well, and that’s where things can become very complicated. For example, if you are using a single storage volume to host the operating system, application, and all the data, you’re probably going to need to re-architect your applications so that data is written to a location other than the volume used to run the OS.

The biggest “gotcha” in using an immutable approach? Manual changes made to instances. When you go immutable, you cannot allow any changes to be made directly to the running instances, because they will be blown away with the instances. Any and all changes must be made to the image, and then you deploy the image. To achieve this, you would disable any remote logins to the server instances.

I’ve provided an example of the pure definition of immutability. Of course, hybrid approaches can be taken, such as combining the preceding example with application updates that are pushed via some form of a centralized system. In this case, the basic requirement of restricting access to the servers directly is still in place, and the security of the system is still addressed, because everything is performed centrally and people are restricted from making changes directly on the server in question.

There are also other security benefits of an immutable approach. For example, whitelisting applications and processes requires that nothing on the server itself be changed. As such, arming the servers with some form of file integrity monitoring should also be easier, because if anything changes, you know you probably have a security issue on your hands.
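As a rough illustration of why file integrity monitoring gets easier when nothing is supposed to change, here is a minimal sketch using only the Python standard library. The `snapshot` and `diff` helpers and the file names are hypothetical; a real product would also watch permissions, ownership, and more.

```python
import hashlib
import tempfile
from pathlib import Path


def snapshot(root):
    """Return {relative path: SHA-256 hex digest} for every file under root."""
    root = Path(root)
    return {
        str(p.relative_to(root)): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def diff(baseline, current):
    """Report files added, removed, or modified since the baseline."""
    return {
        "added": sorted(set(current) - set(baseline)),
        "removed": sorted(set(baseline) - set(current)),
        "modified": sorted(
            path for path in set(baseline) & set(current)
            if baseline[path] != current[path]
        ),
    }


# Demonstration in a throwaway directory standing in for a server's file system.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "app.conf").write_text("debug = false\n")
    baseline = snapshot(tmp)  # taken when the instance is built from the image

    Path(tmp, "app.conf").write_text("debug = true\n")  # unexpected change
    Path(tmp, "backdoor.sh").write_text("#!/bin/sh\n")  # unexpected new file
    report = diff(baseline, snapshot(tmp))

print(report)
```

On a traditional server, you would have to sort legitimate changes from suspicious ones. On an immutable instance, any entry in the report deserves investigation.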

Requirements of Immutable Workloads

Now that you understand the immutable approach and its benefits, let’s look at some of the requirements of this new approach.

First, you need a provider that supports this approach—but that shouldn’t be an issue with larger IaaS providers. You also need a consistent approach to the process of creating images to account for patch and malware signature updates (although those could be performed separately, as discussed with the hybrid approach).

You also need to determine how security testing of the images themselves will be performed as part of the image creation and deployment process. This includes any source code testing and vulnerability assessments, as applicable. (You’ll read more about this subject in Chapter 10.)

Logging and log retention will require a new approach as well. In an immutable environment, you need to get the logs off the servers as quickly as possible, because the servers can disappear on a moment’s notice. This means you need to make sure that server logs are exported to an external collector as soon as possible.
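One way to picture “getting logs off the server quickly” is a log handler that ships each record the moment it is written, rather than leaving it in a local file. The sketch below uses Python's standard `logging` module; the `ForwardingHandler` class is my own illustration, and the in-memory list merely stands in for a remote collector. For real transports, the standard library offers handlers such as `logging.handlers.SysLogHandler`.

```python
import logging


class ForwardingHandler(logging.Handler):
    """Send each formatted record to an external collector as soon as it is emitted."""

    def __init__(self, send):
        super().__init__()
        self.send = send  # callable that ships one line off the instance

    def emit(self, record):
        try:
            self.send(self.format(record))
        except Exception:
            self.handleError(record)


collected = []  # stands in for a remote collector in this sketch

logger = logging.getLogger("web")
logger.setLevel(logging.INFO)
logger.propagate = False  # keep the demo output limited to our handler
handler = ForwardingHandler(collected.append)
handler.setFormatter(logging.Formatter("%(levelname)s %(name)s %(message)s"))
logger.addHandler(handler)

logger.info("instance started from image %s", "web-v2")
print(collected)
```

If the instance is terminated a second later, the record already lives somewhere else.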

All of these images need to have appropriate asset management. This may introduce increased complexity and additional management of the service catalog that maintains accurate information on all operational services and those being prepared to be run in production.

Finally, running immutable servers doesn’t mean that you don’t need to create a disaster recovery plan. You need to determine how you can securely store the images outside of the production environment. Using cross-account permissions, for example, you could copy an image to another account, such as a security account, for safekeeping. If your production environment is compromised by an attacker, they will probably delete not only your running instances but any images as well. But if you have copied these images elsewhere for safekeeping, you can quickly rebuild.

The Impact of the Cloud on Standard Workload Security Controls

With all the new approaches to running workloads beyond standard virtual machines, you’ll want to be familiar with some changes to traditional security for your CCSK exam. Here is a list of potential challenges you will face in different scenarios:

• Implementing software agents (such as host-based firewalls) may be impossible, especially in a serverless environment (see Chapter 10).

• Even if running agents is possible, you need to ensure that they are lightweight (meaning they don’t have a lot of overhead) and will work properly in a cloud environment (for example, they will keep up with auto-scaling). Remember that any application that relies on IP addresses to track systems (via either the agent side or the central console) is basically useless, especially in an immutable environment. CSA often refers to this as having an “ability to keep up with the rapid velocity of change” in a cloud environment. The bottom line is this: ask your current security vendor if they have “cloud versions” of their agents.

• Although this is by no means cloud specific, agents shouldn’t increase the attack surface of a server. Generally, the more ports that are open on a server, the larger the attack surface. Beyond this, if the agents consume configuration changes and/or signatures, you want to ensure that these are sourced from a trusted authoritative system such as your internal update servers.

• Speaking of using internal update services, there is little reason why these systems shouldn’t be leveraged by the systems in a cloud environment. Consider how you do this today in your traditional environment. Do you have centralized management tools in every branch office, or do you have a central management console at the head office and have branch office computers update from that single source? For these update services, you can consider a cloud server instance in the same manner as a server running in a branch office, assuming, of course, you have appropriate network paths established.

Changes to Workload Security Monitoring and Logging

Both security monitoring and logging of virtual machines are impacted by the traditional use of IP addresses to identify machines; this approach doesn’t work well in a highly dynamic environment like the cloud. Other technologies such as serverless won’t work with traditional approaches at all, because they offer no ability to implement agents. This makes both monitoring and logging more challenging.

Rather than using IP addresses, you must be able to use some other form of unique identifier. This could, for example, involve collecting some form of tagging to track down systems. Such identifiers also need to account for the ephemeral nature of the cloud. Remember that this applies both to the agents and to the centralized console. Speak with your vendors to make sure that they have a cloud version that tracks systems by using something other than IP addresses.
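Here is a small sketch of what tracking by identifier and tags, rather than by IP address, might look like inside a monitoring console. The `Inventory` class, instance IDs, and tag names are invented for illustration and do not reflect any particular vendor's data model.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    instance_id: str
    tags: dict
    ip_history: list = field(default_factory=list)


class Inventory:
    """Tracks systems by a stable identifier and tags; IPs are just attributes."""

    def __init__(self):
        self.assets = {}

    def observe(self, instance_id, ip, tags):
        asset = self.assets.setdefault(instance_id, Asset(instance_id, dict(tags)))
        asset.tags.update(tags)
        if ip not in asset.ip_history:
            asset.ip_history.append(ip)

    def find(self, **wanted):
        """Return assets whose tags match every requested key/value pair."""
        return [
            a for a in self.assets.values()
            if all(a.tags.get(k) == v for k, v in wanted.items())
        ]


inv = Inventory()
inv.observe("i-0001", "10.0.1.15", {"role": "web", "env": "prod"})
# Later, i-0001 is terminated and its address is handed to a different workload.
inv.observe("i-0002", "10.0.1.15", {"role": "batch", "env": "prod"})

print([a.instance_id for a in inv.find(role="web")])
```

A console keyed on 10.0.1.15 would merge these two systems into one record. Keyed on the instance identifier and tags, they stay distinct even though they shared an address.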

The other big area that involves change is logging. I mentioned that logs should be taken off a server as soon as possible in a virtual server environment, but there’s more to the story than just that. Logging in a serverless environment will likely require some form of logging being implemented in the code that you are running on the provider’s servers. Also, you need to look at the costs associated with sending back potentially large amounts of traffic to a centralized logging system or security information and event management (SIEM) system. You may want to consider whether your SIEM vendor has a means to minimize the amount of network traffic this generates through the use of some kind of “forwarder” and ask for their recommendations regarding forwarding of log data. For example, does the vendor recommend that network flow logs not be sent to the centralized log system due to overhead? If network traffic costs are a concern (and when is cost of operations not a concern?), you may need to sit down and create a plan on what log data is truly required and what isn’t.
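To show what “logging implemented in the code” can mean for a serverless function, here is a hedged sketch. It assumes the platform collects whatever the function writes to standard output, which you should confirm with your provider; the `handler` signature, the function name, and the field names are hypothetical.

```python
import json
import time


def log_event(function_name, request_id, level, message, **fields):
    """Write one JSON log line to stdout and return it."""
    line = json.dumps(
        {
            "ts": time.time(),
            "function": function_name,
            "request_id": request_id,  # identifies the invocation, not a server or IP
            "level": level,
            "message": message,
            **fields,
        },
        sort_keys=True,
    )
    print(line)
    return line


def handler(event, request_id):
    """Hypothetical entry point; real platforms define their own signature."""
    log_event("resize-image", request_id, "INFO", "request received",
              size=event.get("size"))
    # ... the function's actual work would happen here ...
    log_event("resize-image", request_id, "INFO", "request completed")
    return {"status": "ok"}


handler({"size": 128}, request_id="req-0001")
```

Because there is no server to install an agent on, the function itself has to emit everything you will later want to search on. Keeping each line small and structured also helps when you sit down to plan what is worth forwarding to your SIEM.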

Changes to Vulnerability Assessment

There are two approaches to performing vulnerability assessments (VAs). On one hand, some companies prefer to perform a VA from the viewpoint of an outsider, so they will place a scanner on the general Internet and perform their assessment with all controls (such as firewall, IPS, and so on) taken into consideration. Alternatively, some security professionals believe that a VA should be performed without those controls in place so that they can truly see all of the potential vulnerabilities in a system or application without a firewall hiding the vulnerabilities.

The CSA recommends that you perform a VA as close to the actual server as possible, and, specifically, VAs should focus on the image, not just the running instances. With this in mind, here are some specific recommendations from the CSA that you may need to know for your CCSK exam.

Providers will generally request that you notify them of any testing in advance. This is because the provider won’t be able to distinguish whether the scanning is being performed by a “good guy” or a “bad guy.” They’ll want the general information that is normally requested for internal testing, such as where the test is coming from (for example, the IP address of the test station), the target of the test, and the start time and end time. The provider may also throw in a clause stating that you are responsible for any damages you cause not just to your system but to the entire cloud environment. (Personally, I think the notion of a scan from a single test station taking out an entire cloud is highly unlikely, but if this is required, you’ll probably want someone with authority to sign off on the test request.)

As for testing the cloud environment itself, forget about it. The CSP will limit you to your use of the environment only. A PaaS vendor will let you test your application code but won’t let you test their platform. Same thing for SaaS—they won’t allow you to test their application. When you think of it, it makes sense after all, because in either example, if you take down the shared component (platform in PaaS or application in SaaS), everyone is impacted, not just you.

Performing assessments with agents installed on the server is best. In the deny-by-default world that is the cloud, controls may be in place (such as SDN firewalls or security groups) that block or hide any vulnerabilities. Having the deny-by-default blocking potentially malicious traffic is great and all, but what if the security group assigned to the instances changes, or if the instances are accidentally opened for all traffic? This is why CSA recommends performing VAs as close to the workload as possible.
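The difference between what a remote scanner sees and what an on-host agent sees can be sketched in a few lines. The rule model below is deliberately simplified (real security groups match on CIDR ranges and protocols), and the port numbers, source labels, and function names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AllowRule:
    port: int
    source: str  # a label such as "internet"; real rules use CIDR ranges


def is_allowed(rules, port, source):
    """Deny by default: traffic passes only if some rule explicitly allows it."""
    return any(r.port == port and r.source == source for r in rules)


def network_scan(rules, listening_ports, source):
    """Ports a remote scanner can reach through the security group."""
    return sorted(p for p in listening_ports if is_allowed(rules, p, source))


def agent_scan(listening_ports):
    """Ports an on-host agent sees, regardless of the security group."""
    return sorted(listening_ports)


rules = [AllowRule(443, "internet")]
listening = {443, 3306, 8080}  # services actually running on the instance

print("remote scanner sees:", network_scan(rules, listening, "internet"))
print("on-host agent sees: ", agent_scan(listening))
```

In this example the remote scan reports only port 443, while the agent also sees the services listening on 3306 and 8080. If someone later loosens the security group, whatever weaknesses those services carry are exposed, and only the agent-based assessment would have warned you in advance.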

Chapter Review

This chapter covered virtualization of both networking and workloads and how security changes for both in a cloud environment. For your CCSK exam, you should be comfortable with the technologies discussed in both the networking (such as SDN) and compute (such as virtual machines and immutable servers) sections. Most importantly, you need to be aware of how security changes as a result of these new technologies. From a more granular perspective, remember the following for your CCSK exam:

• The cloud is always a shared responsibility, and the provider is fully responsible for building the physical aspects of a cloud environment.

• SDN networking should always be implemented by a cloud provider. A lack of a virtualized network means a loss of scalability, elasticity, orchestration, and, most importantly, isolation.

• Remember that the isolation capabilities of SDN allow for the creation of multiple accounts/segments to limit the blast radius of an incident impacting other workloads.

• CSA recommends that providers leverage SDN firewalls to implement a deny-by-default environment. Any activity that is not expressly allowed by a client will be automatically denied.

• Network traffic between workloads in the same virtual subnet should always be restricted by SDN firewalls (security groups) unless communication between systems is required. This is an example of microsegmentation to limit east–west traffic.

• Ensure that virtual appliances can operate in a highly dynamic and elastic environment (aka “keep up with velocity of change”). Just like physical appliances, virtual appliances can be bottlenecks and single points of failure if they’re not implemented properly.

• For compute workloads, an immutable environment offers incredible security benefits. This approach should be leveraged by customers whenever possible.

• When using immutable servers, you can increase security by patching and testing images and replacing nonpatched instances with new instances built off the newly patched image.

• If using immutable servers, you should disable remote access and integrate file integrity monitoring because nothing in the running instances should change.

• Security agents should always be “cloud aware” and should be able to keep up with the rapid velocity of change (for example, they should never use IP addresses as system identifiers).

• As a best practice, get logs off servers and on to a centralized location as quickly as possible (especially in an immutable environment), because all servers must be considered ephemeral in the cloud.

• Providers may limit your ability to perform vulnerability assessments and penetration testing, especially if the target of the scans is their platform.

Questions

1. When you’re considering security agents for cloud instances, what should be a primary concern?

A. The vendor has won awards.

B. The vendor uses heuristic-based detection as opposed to signature-based detection.

C. The vendor selected for cloud server instances is the same vendor you use for internal instances.

D. The vendor agent does not use IP addresses to identify systems.

2. Which of the following is/are accurate statement(s) about the differences between SDN and VLAN?

A. SDN isolates traffic, which can help with microsegmentation. VLANs segment network nodes into broadcast domains.

B. VLANs have roughly 4,000 IDs, while SDN has more than 16 million.

C. SDN separates the control plane from the hardware device and allows for applications to communicate with the control plane.

D. All of the above are accurate statements.

3. When you’re using immutable servers, how should administrative access to the applistructure be granted to make changes to running instances?

A. Administrative access should be limited to the operations team. This is in support of the standard separation of duties approach to security.

B. Administrative access should be limited to the development team. This is in support of the new approach to software development, where the developers own the applications they build.

C. Administrative access should be restricted for everyone. Any changes made at the applistructure level should be made to the image, and a new instance is created using that image.

D. Administrative access to the applistructure is limited to the provider in an immutable environment.

4. Which of the following is the main purpose behind microsegmentation?

A. It is a fine-grained approach to grouping machines to make them easier to administer.

B. It is a fine-grained approach to grouping machines that limits blast radius.

C. Microsegmentation can leverage traditional VLAN technology to group machines.

D. Microsegmentation implements a zero-trust network.

5. Which of the following statements is accurate when discussing the differences between a container and a virtual machine?

A. A container contains the application and required dependencies (such as libraries). A virtual machine contains the operating system, application, and any dependencies.

B. A virtual machine can be moved to and from any cloud service provider, while a container is tied to a specific provider.

C. Containers remove the dependency of a specific kernel. Virtual machines can run on any platform.

D. All of the above are accurate statements.

6. What is the main characteristic of the cloud that impacts workload security the most?

A. Software defined networks

B. Elastic nature

C. Multitenancy

D. Shared responsibility model

7. Select two attributes that a virtual appliance should have in a cloud environment.

A. Auto-scaling

B. Granular permissions for administrators

C. Failover

D. Ability to tie into provider’s orchestration capability

8. Wendy wants to add an instance to her cloud implementation. When she attempts to add the instance, she is denied. She checks her permissions and nowhere does it say she is denied the permission to add an instance. What could be wrong?

A. Wendy is trying to launch a Windows server but has permission to create only Linux instances.

B. Wendy does not have root access to the Linux server she is trying to run.

C. This is because of the deny-by-default nature of the cloud. If Wendy is not explicitly allowed to add an instance, she is automatically denied by default.

D. Wendy is a member of a group that is denied access to add instances.

9. How is management centralized in SDN?

A. By removing the control plane from the underlying networking appliance and placing it in the SDN controller

B. By using northbound APIs that allow software to drive actions at the control layer

C. By using southbound APIs that allow software to drive actions at the control layer

D. SDN is a decentralized model

10. Before beginning a vulnerability assessment (VA) of one of your running instances, what should be done first?

A. Select a VA product that works in a cloud environment.

B. Determine whether a provider allows customers to perform a VA and if any advance notice is required.

C. Open all SDN firewalls to allow a VA to be performed.

D. Establish a time and date that you will access the provider’s data center so you can run the VA on the physical server your instance is running on.

Answers

1. D. The best answer is that the agent does not use IP addressing as an identification mechanism. Server instances in a cloud can be ephemeral in nature, especially when immutable instances are used. All the other answers are optional in nature and not priorities for cloud security agents.

2. D. All of the answers are correct.

3. C. Administrative access to the servers in an immutable environment should be restricted for everyone. Any required changes should be made to an image, and that image is then used to build a new instance. All of the other answers are incorrect.

4. B. The best answer is that the purpose behind implementing microsegmentation is to limit the blast radius if an attacker compromises a resource. Using microsegmentation, you are able to take a very fine-grained approach to grouping machines (such as five web servers in the DMZ, but not every system in the DMZ, can communicate). This answer goes beyond answer D, that microsegmentation creates a “zero-trust” network, so B is the better and more applicable answer.

5. A. A container contains the application and required dependencies (such as libraries). A virtual machine contains the operating system, application, and any dependencies.

6. C. The best answer is that multitenancy has the greatest impact on cloud security, and for this reason, cloud providers need to make sure they have very tight controls over the isolation capabilities within the environment. Although the other answers have merit, none of them is the best answer.

7. A, C. Auto-scaling and failover are the two most important attributes that a virtual appliance should have in a cloud environment. Any appliance can become a single point of failure and/or a performance bottleneck, and these aspects must be addressed by virtual appliances in a cloud environment. Granular permissions are a good thing to have, but they are not cloud specific. Finally, tying into a provider’s orchestration would be great, but this is not one of the two best answers. You may be thinking that elasticity is tying into the orchestration, and you would be correct. However, the degree of the integration isn’t mentioned. For an example of orchestration, does the virtual appliance have the ability to change a firewall ruleset based on the actions of a user in the cloud or a particular API being called? That’s the type of orchestration that would be ideal, but it requires the vendor to have very tight integration with a particular provider. This type of orchestration is usually native with the provider’s services (for example, a security group can be automatically changed based on a particular action).

8. C. A cloud provider should take a deny-by-default approach to security. Therefore, it is most likely that Wendy is not explicitly allowed to launch an instance. Although it is possible that Wendy is also a member of a group that is explicitly denied access to launch an instance, C is the better answer. Metastructure permissions are completely different from operating system permissions, so A and B are incorrect answers.

9. A. SDN is centralized by taking the “brains” out of the underlying networking appliance and placing this functionality in the SDN controller. Answer B is a true statement in that northbound APIs allow applications (or software if you will) to drive changes, but it does not answer the question posed. I suppose you could argue that C is also a true statement, but again, it doesn’t answer the question posed.

10. B. You should determine whether your provider allows customers to perform a VA of their systems. If they don’t and you haven’t checked, you may find yourself blocked, because the provider won’t know the source of the scan, which could be coming from a bad actor. An agent installed in the applistructure of a server will function regardless of whether the server is a virtual one in a cloud or a physical server in your data center. Opening all firewalls to perform a VA, answer C, would be a very unfortunate decision, because this may open all traffic to the world if it’s done improperly (any IP address on the Internet could have access to any port on the instance, for example). Finally, you are highly unlikely to gain access to a provider’s data center, let alone be given permission to run a VA against any provider-owned and -managed equipment.