CHAPTER 11

Data Security and Encryption

This chapter covers the following topics from Domain 11 of the CSA Guidance:

• Data Security Controls

• Cloud Data Storage Types

• Managing Data Migrations to the Cloud

• Securing Data in the Cloud

b692bb0826305047a235d7dda55ca2a0

This quote shows encryption (albeit not very strong) in action. Do yourself and your company a favor and never use MD5 as a way to “encrypt” credentials in code (in a cloud or in a data center). Bonus points if you run this string through an online MD5 “decryption” (hash lookup) tool.
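To make the point concrete, the following minimal sketch contrasts an unsalted MD5 digest with a salted, deliberately slow key-derivation function from the Python standard library. The function names and the iteration count are illustrative assumptions, not recommendations taken from the CSA Guidance.

```python
import hashlib
import hmac
import os

# Illustrative iteration count (an assumption); tune it to current guidance.
ITERATIONS = 100_000


def weak_md5(password):
    """Unsalted MD5: the same input always yields the same digest,
    so precomputed lookup tables can reverse common values."""
    return hashlib.md5(password.encode("utf-8")).hexdigest()


def hash_password(password, salt=None):
    """Derive a salted PBKDF2-HMAC-SHA256 digest; returns (salt, digest)."""
    if salt is None:
        salt = os.urandom(16)  # fresh random salt per credential
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, ITERATIONS
    )
    return salt, digest


def verify_password(password, salt, expected):
    """Recompute the digest with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)
```

Because each credential gets its own random salt, two users with the same password end up with different stored digests, which is exactly what an online MD5 lookup relies on not being the case.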

This book has previously addressed information risk management from a business perspective. In this chapter, we turn our attention to security controls that are key enforcement tools for implementing information and data governance. We need to be selective with our controls and take a risk-based approach to securing data, because not all data requires the same level of protection.

Data stored in a cloud does not require any changes to your data security approach. That said, there is a natural inclination among many business leaders to apply strong security controls to any data that is to be hosted in a cloud environment instead of sticking with a tried-and-true, risk-based approach, which will be far more secure and cost-effective than a blanket policy that protects all data (even data meant to be publicly consumed) with strong security controls.

Nobody ever said all data must be stored in a cloud. If your company deems that a particular data set is so critically important to the company that improper access by the provider would be devastating, then maybe that data should not be held in the cloud in the first place, encrypted or not.

When considering data security, you need to focus especially on the basics, which still matter. Once you get the basics right, you can move on to more advanced controls such as data encryption both at rest and in transit. After all, how important is “military-grade-strength encryption” when a file is accidentally set up to be accessed by anyone in the world?

Data Security Controls

When considering data security controls in cloud environments, you need to consider three main components:

• Determine which data is allowed to be stored in the cloud, based on data classifications (covered in Chapter 5) that address your legal and regulatory compliance requirements. Pay particular attention to permitted jurisdictions and storage media.

• Protect and manage data security in the cloud. This will involve establishing a secure architecture, proper access controls, encryption, detection capabilities, and other security controls as needed.

• Ensure compliance by establishing proper audit logging and putting backups and business continuity in place.

Cloud Data Storage Types

Back in Chapter 8, you learned about the physical and virtual implementations of storage. This chapter dives deeper into the different ways storage technologies are generally exposed to customers. Following are the most common ways storage can be consumed by customers of a cloud provider:

• Object storage This storage type is presented like a file system and is usually accessible via APIs or a front-end interface (such as the Web). Objects (such as files) can be made accessible to multiple systems simultaneously. This storage type can be less secure, as it has often been discovered to be accidentally made available to the public Internet. Examples of common object storage include Amazon S3, Microsoft Azure Blob Storage (binary large objects, or blobs), and Google Cloud Storage.

• Volume storage This is a storage medium such as a hard drive that you attach to your server instance. Generally a volume can be attached only to a single server instance at a time.

• Database Cloud service providers may offer customers a wide variety of database types, including commercial and open source options. Quite often, providers will also offer proprietary databases with their own APIs. These databases are hosted by the provider and use existing standards for connectivity. Databases offered can be relational or nonrelational. Examples of nonrelational databases include NoSQL, other key/value storage systems, and file system–based databases such as Hadoop Distributed File System (HDFS).

• Application/platform This storage is managed by the provider. Examples of application/platform storage include content delivery networks (CDNs), files stored in Software as a Service (SaaS) applications (such as a customer relationship management [CRM] system), caching services, and other options.

Regardless of the storage model in use, most CSPs employ redundant, durable storage mechanisms that use data dispersion (also called “data fragmentation of bit splitting” in the CSA Guidance). This process takes data (say, an object), breaks it up into smaller fragments, makes multiple copies of these fragments, and stores them across multiple servers and multiple drives to provide high durability (resiliency). In other words, a single file would not be located on a single hard drive, but would be spread across multiple hard drives.
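The fragment, copy, and spread steps described above can be sketched as follows. This is a simplified illustration only: the drive names, fragment size, and round-robin placement are assumptions, and real providers use their own (often more sophisticated) placement and coding schemes.

```python
from collections import defaultdict


def disperse(data, fragment_size, copies, drives):
    """Split data into fragments and place `copies` replicas of each
    fragment on distinct drives. Returns (placement, fragment_count)."""
    if copies > len(drives):
        raise ValueError("need at least as many drives as copies")
    fragments = [
        data[i:i + fragment_size] for i in range(0, len(data), fragment_size)
    ]
    placement = defaultdict(list)
    for index, fragment in enumerate(fragments):
        for replica in range(copies):
            # Round-robin placement: replicas of one fragment never share a drive.
            drive = drives[(index + replica) % len(drives)]
            placement[drive].append((index, fragment))
    return dict(placement), len(fragments)


def reassemble(placement, fragment_count, failed_drives=frozenset()):
    """Rebuild the object from whichever drives are still available."""
    recovered = {}
    for drive, stored in placement.items():
        if drive in failed_drives:
            continue
        for index, fragment in stored:
            recovered.setdefault(index, fragment)
    if len(recovered) != fragment_count:
        raise RuntimeError("too many drives failed; fragments are missing")
    return b"".join(recovered[i] for i in range(fragment_count))
```

With four drives and two copies of each fragment, the object can still be rebuilt after any single drive fails, and no single drive holds the whole file.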

Each of these storage types has different threats and data protection options, which can differ depending on the provider. For example, typically you can give individual users access to individual objects, but a storage volume is allocated to a virtual machine (VM) in its entirety. This means your approach to securing data in a cloud environment will be based on the storage model used.

Managing Data Migrations to the Cloud

Organizations need to have control over data that is stored with private and public cloud providers. This is often driven by the value of data and the laws and regulations that create compliance requirements.

To determine what data is “cloud friendly,” you need to have policies in place that state the types of data that can be moved, the acceptable service and deployment models, and the baseline security controls that need to be applied. For example, you may have a policy in place that states that personally identifiable information (PII) is allowed to be stored and processed only on specific CSP platforms in specific jurisdictions that are corporately sanctioned and have appropriate controls over storage of data at rest.

Once acceptable storage locations are determined, you must monitor them for activity using tools such as a database activity monitor (DAM) and file activity monitor (FAM). These controls are not only detective in nature but may also prevent large data migrations from occurring.

The following tools and technologies can be useful for monitoring cloud usage and any data transfers:

• Cloud access security broker (CASB) CASB (pronounced “KAS-bee”) systems were originally built to protect SaaS deployments and monitor their usage, but they have recently expanded to address some concerns surrounding Platform as a Service (PaaS) and Infrastructure as a Service (IaaS) deployments as well. You can use CASB to discover your actual usage of cloud services through multiple means such as network monitoring, integration with existing network gateways and monitoring tools, or even monitoring Domain Name System (DNS) queries. This could be considered a form of a discovery service. Once the various services in use are discovered, a CASB can monitor activity on approved services either through an API connection or inline (man-in-the-middle) interception. Quite often, the power of a CASB is dependent on its data loss prevention (DLP) capabilities (which can be either part of the CASB or an external service, depending on the CASB vendor’s capabilities).

• URL filtering URL filtering (such as a web gateway) may help you understand which cloud services your users are using (or trying to use). The problem with URL filtering, however, is that generally you are stuck in a game of “whack-a-mole” when trying to control which services are allowed to be used and which ones are not. URL filtering will generally use a whitelist or blacklist to determine whether or not users are permitted to access a particular web site.

• Data loss prevention A DLP tool may help detect data migrations to cloud services. You should, however, consider a couple of issues with DLP technology. First, you need to “train” a DLP to understand what is sensitive data and what is not. Second, a DLP cannot inspect traffic that is encrypted. Some cloud SDKs and APIs may encrypt portions of data and traffic, which will interfere with the success of a DLP implementation.

CASB Backgrounder

CASB began as an SaaS security control but has recently moved toward protecting both PaaS and IaaS. The CASB serves multiple purposes, the first of which is performing a discovery scan of currently used SaaS products in your organization. It does this by reading network logs that are taken from an egress (outbound) network appliance. As the network logs are read, a reverse DNS lookup is performed to map the IP addresses to provider domain names. The resulting report can then tell you what services are used and how often. Optionally, if the discovery service supports it, you may be able to see the users who are using particular SaaS services.
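The discovery step can be pictured with a short sketch. Everything here is hypothetical: the log format, the IP addresses (taken from documentation ranges), and the lookup table that stands in for real reverse DNS resolution. It is not how any particular CASB product works.

```python
from collections import Counter

# Hypothetical reverse-DNS results. A real tool would resolve addresses,
# for example with socket.gethostbyaddr(), and map hostnames to services.
REVERSE_DNS = {
    "203.0.113.10": "files.example-storage.com",
    "203.0.113.20": "app.example-crm.com",
}


def discover_services(log_lines, reverse_dns=REVERSE_DNS):
    """Count outbound connections per provider domain and record which
    users reached each one. Assumed log format: '<user> <destination-ip>'."""
    usage = Counter()
    users = {}
    for line in log_lines:
        user, dest_ip = line.split()
        domain = reverse_dns.get(dest_ip, "unknown")
        usage[domain] += 1
        users.setdefault(domain, set()).add(user)
    return usage, users
```

Calling `usage.most_common()` on the result gives the "what is used and how often" report, and the `users` mapping gives the optional per-user view.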

After the discovery is performed, CASB can be used as a preventative control to block access to SaaS products. This functionality, however, is being quickly replaced through the integration of DLP. Through integration with a DLP service, you can continue to allow access to an SaaS product, but you can control what is being done within that SaaS product. For example, if somebody uses Twitter, you can restrict certain keywords or statements from being sent to the platform. Consider, for example, a financial services company. A disclosure law states that recommendations cannot be made without disclosure regarding the company’s and individual’s ownership position of the underlying stock. In this case, you can allow people to use Twitter for promotion of the brokerage company itself, but you can restrict them from making expressions such as “Apple stock will go up $50 per share.”

Now that you know about CASB discovery and inline filtering capabilities, let’s move on to the API integration aspect that some CASBs can offer. When you are considering a CASB for API integration, you are looking to determine how people are using that cloud product by being able to see things about the metastructure of the offering. This is where CASB can tell you the number of logins that have occurred, for example, and other details about the cloud environment itself. You need to pay particular attention to whether the CASB vendor supports the platform APIs that you are actually consuming.

Concerning inline filtering versus API integration of CASBs, most vendors have moved toward a hybrid model that offers both. This is a maturing technology and is experiencing rapid changes. When you’re investigating CASB, it is very important that you perform vendor comparisons based on what you actually need versus what the CASB vendors offer. After all, who cares if a CASB supports the most APIs by volume if it doesn’t support any of the providers you use or plan to use in the near future? The same can be said for a CASB’s ability to integrate with external DLP solutions.

Regarding the use of CASB for control of PaaS and IaaS, this is a fairly new introduction to the majority of CASBs in the marketplace. You will get API coverage of only the metastructure for the most part. In other words, you cannot expect the CASB to act as some form of application security testing tool for the applications that you are running in a PaaS, for example, and you cannot expect to use a CASB to act as a centralized location to perform vulnerability assessment of instances in an IaaS environment.

Securing Cloud Data Transfers

To protect data as it is moving to a cloud, you need to focus on the security of data in transit. For example, does your provider support SSH File Transfer Protocol (SFTP), or does it require you to use File Transfer Protocol (FTP), which sends clear-text credentials across the Internet? Your vendor may expose an API to you that has strong security mechanisms in place, so there is no requirement on your behalf to increase security.

As far as encryption of data in transit is concerned, many of the approaches used today are the same approaches that have been used in the past. This includes Transport Layer Security (TLS), Virtual Private Network (VPN) access, and other secure means of transferring data. If your provider doesn’t offer these basic security controls, get a different provider—seriously.
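On the client side, enforcing TLS can be as simple as refusing old protocol versions and unverified certificates. The following is a minimal sketch using Python's standard `ssl` module; the function name is an assumption, and TLS 1.2 as the minimum is an illustrative choice rather than a requirement from the CSA Guidance.

```python
import ssl


def strict_tls_context():
    """Client-side TLS context that verifies the server certificate and
    hostname and rejects protocol versions older than TLS 1.2."""
    # create_default_context() enables certificate and hostname checks.
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

The returned context can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=strict_tls_context())`.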

Another option for ensuring encryption of data in transit is that of a proxy (aka hybrid storage gateway or cloud storage gateway). The job of the proxy device is to encrypt data using your encryption keys prior to it being sent out on the Internet and to your provider. This technology, while promising, has not achieved the expected rate of adoption that many, including myself, anticipated. Your provider may offer software versions of this technology, however, as a service to its customers.

When you’re considering transferring very large amounts of data to a provider, do not overlook shipping of hard drives to the provider if possible. Although data transfers across the Internet are much faster than they were ten years ago, I would bet that shipping 10 petabytes of data would be much faster than copying it over the Internet. Remember, though, that when you’re shipping data, your company may have a policy that states all data leaving your data center in physical form must be encrypted. If this is the case, talk with your provider regarding how best to do this. Many providers offer the capability of shipping drives and/or tapes to them. Some even offer proprietary hardware options for such purposes that they will ship to you.

Finally, some data transfers may involve data that you do not own or manage, such as data from public or untrusted sources. You should ensure that you have security mechanisms in place to inspect this data before processing or mixing it in with your existing data.

Securing Data in the Cloud

You need to be aware of only two core data security controls for your CCSK exam: access controls and encryption. Remember that access controls are your number-one controls. If you mess this up, all the other controls fall apart. Once you get the basics right (meaning access controls), then you can move on to implementing appropriate encryption of data at rest using a risk-based approach. Let’s look at these controls.

Cloud Data Access Controls

Access controls must be implemented properly in three main areas:

• Management plane These access controls are used to restrict access to the actions that can be taken in the CSP’s management plane. Most CSPs have deny-by-default access control policies in place for any new accounts that may be created.

• Public and internal sharing controls These controls must be planned and implemented when data is shared externally to the public or to partners. As you read in Chapter 1, several companies have found themselves on the front pages of national newspapers after getting these access controls wrong by making object storage available to the public.

• Application-level controls Applications themselves must have appropriate controls designed and implemented to manage access. This includes both your own applications built in PaaS as well as any SaaS applications your organization consumes.

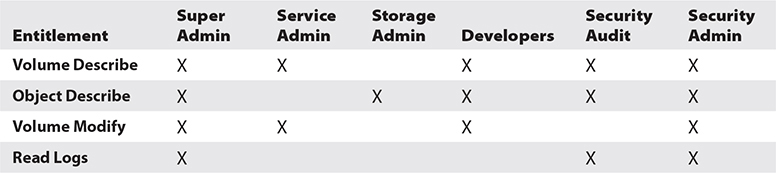

With the exception of application-level controls, your options for implementing access controls will vary based on the cloud service model and the provider’s specific features. To assist with planning appropriate access controls, you can use an entitlement matrix on platform-specific capabilities. This entitlement matrix is essentially a grid, similar to the following, that lists the users, groups, and roles with access levels for resources and functions:

After entitlements are established, you must frequently validate that your controls meet requirements, with a particular focus on public-sharing controls. You can establish alerts for all new public shares or for changes in permission that allow public access to quickly identify any overly permissive entitlements.
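An entitlement matrix and a public-share check can be expressed in a few lines. The roles, resources, actions, and the event format below are all hypothetical; a real implementation would read them from your provider's identity and logging services.

```python
# Hypothetical entitlement matrix: role -> resource -> allowed actions.
ENTITLEMENTS = {
    "security-admin": {
        "object-store": {"read", "write", "share"},
        "database": {"read"},
    },
    "developer": {
        "object-store": {"read", "write"},
        "database": {"read", "write"},
    },
    "auditor": {
        "object-store": {"read"},
        "database": {"read"},
    },
}


def is_allowed(role, resource, action):
    """Deny by default: only actions listed in the matrix are permitted."""
    return action in ENTITLEMENTS.get(role, {}).get(resource, set())


def find_public_shares(permission_events):
    """Return permission-change events that grant access to everyone.
    Assumed event format: a dict whose 'grantee' is 'public' for open shares."""
    return [e for e in permission_events if e.get("grantee") == "public"]
```

Feeding permission-change events through `find_public_shares` on a schedule, or as they arrive, is one simple way to surface overly permissive entitlements quickly.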

It is important that you understand the capabilities exposed by the provider to support appropriate access controls on all data under your control and that you build your entitlement matrix and implement these controls in the environment. This spans all data such as databases and all cloud data stores.

Storage (at Rest) Encryption and Tokenization

Before I discuss model-specific encryption options, I need to address the various ways that data can be protected at rest. The two technologies addressed in the CSA Guidance are encryption and tokenization. Both of these technologies make data unreadable to unauthorized users or systems that are trying to read your data.

Encryption scrambles the data to make it unreadable for the foreseeable future (well, until quantum computing becomes mainstream, at which point all bets are off—but I digress). Tokenization replaces each element of a data set with a random value. The tokenization system stores both the original data and the randomized version in a secure database for later retrieval.

Tokenization is a method proposed by the payment card industry (PCI) as a means to protect credit card numbers. In a PCI tokenization system, for example, a publicly accessible tokenization server can be used as a front end to protect actual credit card information that is held in a secure database in the back end. When a payment is processed, the vendor receives a token that acts like a reference ID that can be used to perform actions on a transaction such as refunds. At no time does the vendor need to store actual credit card information; rather, they store these tokens.
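The token-for-value exchange can be sketched with an in-memory stand-in for the secure back-end database. The class and method names are assumptions made for illustration; a production tokenization service would add authentication, auditing, and durable, protected storage.

```python
import secrets


class TokenVault:
    """In-memory stand-in for the secure database behind a tokenization server."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value):
        """Return a random token for `value`, reusing an existing token if present."""
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = secrets.token_hex(16)
        while token in self._token_to_value:  # guard against an unlikely collision
            token = secrets.token_hex(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token):
        """Look up the original value; only the vault can do this."""
        return self._token_to_value[token]
```

The token is random, so nothing about the card number can be computed from it; the vendor stores only the token and uses it as a reference ID.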

The CSA Guidance states that tokenization is often used when the format of the data is important. Format-preserving encryption (FPE) encrypts data but keeps the same structural format.

In the cloud, there are three components of an encryption system and two locations in which they can run (the cloud environment and your own data center). The three components are the data itself, the encryption engine, and key management that holds the encryption keys.

Any of these components can be run in any location. For example, your data could be in a cloud environment, and the encryption engine and key-management service that holds the keys could be within your data center. Really, any combination is possible. The combination will often be based on your risk appetite. One organization could be perfectly fine with all three being in a cloud environment, whereas another organization would require that all data be stored in a cloud environment only after being encrypted locally.

These security requirements will drive the overall design of your encryption architecture. When designing an encryption system, you should start with a threat model and answer some basic questions such as these:

• Do you trust the cloud provider to store your keys?

• How could the keys be exposed?

• Where should you locate the encryption engine to manage the threats you are concerned with?

• Which option best meets your risk tolerance requirements: managing the keys yourself or letting the provider do that for you?

• Is there separation of duties between storing the data encryption keys, storing the encrypted data, and storing the master key?

The answers to these and other questions will help guide your encryption system design for cloud services.

Now that you understand the high-level elements of encryption and obfuscation in a cloud environment, let’s look at how encryption can be performed in particular service models.

IaaS Encryption

When considering encryption in IaaS, you need to think about the two main storage offerings: volume-storage and object- and file-storage encryption.

Volume-storage encryption involves the following:

• Instance-managed encryption The encryption engine runs inside the instance itself. An example of this is the Linux Unified Key Setup (LUKS). The issue with instance-managed encryption is that the key itself is stored in the instance and protected with a passphrase. In other words, you could have AES-256 encryption secured with a passphrase of 1234.

• Externally managed encryption Externally managed encryption stores encryption keys externally, and a key to unlock data is issued to the instance on request.

Object-storage encryption involves the following:

• Client-side encryption In this case, data is encrypted using an encryption engine embedded in the application or client. In this model, you are in control of the encryption keys used to encrypt the data.

• Server-side encryption Server-side encryption is supplied by the CSP, who has access to the encryption key and runs the encryption engine. Although this is the easiest way to encrypt data, this approach requires the highest level of trust in a provider. If the provider holds the encryption keys, they may be forced (compelled) by a government agency to unencrypt and supply your data.

• Proxy encryption This is a hybrid storage gateway. This approach can work well with object and file storage in an IaaS environment, as the provider is not required to access your data in order to deliver services. In this scenario, the proxy handles all cryptography operations, and the encryption keys may be held within the appliance or by an external key-management service.

PaaS Encryption

Unlike IaaS, where there are a few dominant players, there are numerous PaaS providers, all with different capabilities as far as encryption is concerned. The CSA Guidance calls out three areas where encryption can be used in a PaaS environment:

• Application-layer encryption When you’re running applications in a PaaS environment, any required encryption services are generally implemented within the application itself or on the client accessing the platform.

• Database encryption PaaS database offerings will generally offer built-in encryption capabilities that are supported by the database platform. Examples of common encryption capabilities include Transparent Data Encryption (TDE), which encrypts the entire database, and field-level encryption, which encrypts only sensitive portions of the database.

• Other PaaS providers may offer encryption for various components that may be used by applications such as message queuing services.

SaaS Encryption

Encryption of SaaS is quite different from that of IaaS and PaaS. Unlike the other models, SaaS generally is used by a business to process data to deliver insightful information (such as a CRM system). The SaaS provider may also use the encryption options available for IaaS and PaaS providers. CSPs are also recommended to implement per-customer keys whenever possible to improve the enforcement of multitenancy isolation.

Key Management (Including Customer-Managed Keys)

Strong key management is a critical component of encryption. After all, if you lose your encryption keys, you lose access to any encrypted data, and if a bad actor has access to the keys, they can access the data. The main considerations concerning key-management systems according to the CSA Guidance are the performance, accessibility, latency, and security of the key-management system.

The following four key-management system deployment options are covered in the CSA Guidance:

• HSM/appliance Use a traditional hardware security module (HSM) or appliance-based key manager, which will typically need to be on premises (some vendors offer cloud HSM), and deliver the keys to the cloud over a dedicated connection. Given the size of the key material, many vendors will state that there is very little latency involved with this approach to managing keys for data held in a cloud environment.

• Virtual appliance/software A key-management system does not need to be hardware-based. You can deploy a virtual appliance or software-based key manager in a cloud environment to maintain keys within a provider’s environment to reduce potential latency or disruption in network communication between your data center and cloud-based systems. In such a deployment, you still own the encryption keys, and they cannot be used by the provider if legal authorities demand access to your data.

• Cloud provider service This key-management service is offered by the cloud provider. Before selecting this option, make sure you understand the security model and service level agreements (SLAs) to determine whether your key could possibly be exposed. You also need to understand that although this is the most convenient option for key management in a cloud environment, the provider has access to the keys and can be forced by legal authorities to hand over any data upon request.

• Hybrid This is a combination system, such as using an HSM as the root of trust for keys but then delivering application-specific keys to a virtual appliance that’s located in the cloud and manages keys only for its particular context.
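One way to picture the hybrid option is a key hierarchy in which application-specific keys are derived from a root key, so the root never has to leave the HSM. The sketch below uses HKDF (RFC 5869) built from the standard library; treating key delivery as derivation is an assumption made for illustration, and the function names are hypothetical. Real HSMs may instead generate and wrap keys.

```python
import hashlib
import hmac


def hkdf_sha256(root_key, context, length=32, salt=b""):
    """HKDF (RFC 5869) with SHA-256: extract a pseudorandom key from the
    root key, then expand it into `length` bytes bound to `context`."""
    hash_len = hashlib.sha256().digest_size
    if length > 255 * hash_len:
        raise ValueError("requested key is too long")
    # Extract step: an empty salt defaults to a string of zero bytes.
    prk = hmac.new(salt or b"\x00" * hash_len, root_key, hashlib.sha256).digest()
    # Expand step: chain HMAC blocks until enough output is produced.
    output, block, counter = b"", b"", 1
    while len(output) < length:
        block = hmac.new(
            prk, block + context + bytes([counter]), hashlib.sha256
        ).digest()
        output += block
        counter += 1
    return output[:length]


def derive_app_key(root_key, app_name):
    """Derive a 256-bit key that is specific to one application context."""
    return hkdf_sha256(root_key, context=app_name.encode("utf-8"))
```

Each virtual appliance would receive only the key for its own context, so compromising one application key reveals nothing about the root key or about keys for other applications.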

Many providers offer encryption by default with some services (such as object storage). In this scenario, the provider owns and manages the encryption keys. Because the provider holding the encryption keys may be seen as a risk, most providers generally implement systems that impose separation of duties. Using keys to gain access to customer data would then require collusion among multiple provider employees. Of course, if the provider is able to unencrypt the data (which they can if they manage the key and the engine), they must do so if required to by legal authorities. Customers should determine whether they can replace default provider keys with their own that will work with the provider’s encryption engine.

Customer-managed encryption keys are controlled to some extent by the customer. For example, a provider may expose a service that either generates or imports an encryption key. Once the key is in the system, the customer selects the individuals who can administer and/or use the key to encrypt and decrypt data. As the key is integrated with the provider’s encryption system, encrypted data can be accessed through the encryption engine created and managed by the provider. As with provider-managed keys, because these keys are accessible to the provider, the provider must use them to deliver data to legal authorities if required to do so.

If the data you are storing is so sensitive that you cannot risk a government accessing it, you have two choices: use your own encryption that you are in complete control of, or don’t process this data in a cloud environment.

Data Security Architecture

You know that cloud providers spend a lot of time and money ensuring strong security in their environments. Using provider-supplied services as part of your architecture can result in an increase of your overall security posture. For example, you can realize large architectural security benefits by something as simple as having cloud instances transfer data through a service supplied by the provider.

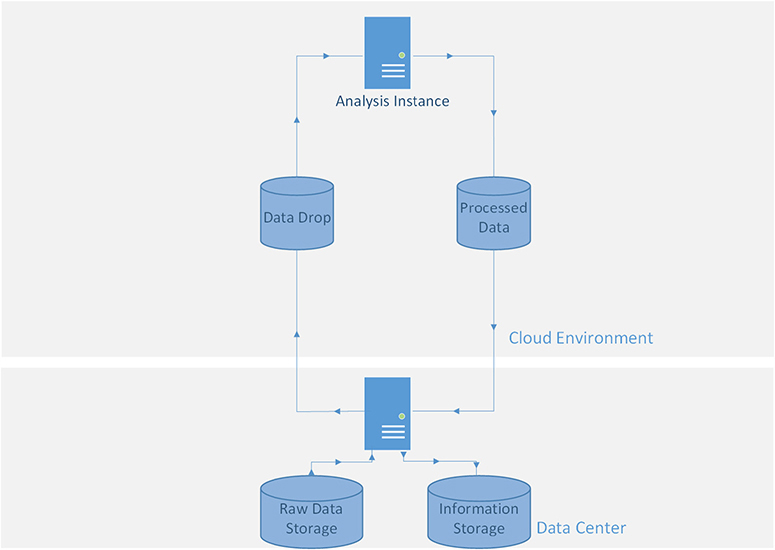

Consider, for example, a scenario in which you need to analyze a set of data. Using a secure architecture design (shown in Figure 11-1), you could copy the data to your provider’s object store. Your cloud analysis instance accesses the object storage, collects the data, and then processes the data. It then sends the processed information to the object storage and sends you a notification that your job is done. To retrieve the information you want, you connect to the storage directly and download the file(s). Your application does what is needed, and there is no path from a server running in a hostile network to your data center. This is just one example of the many new security architecture patterns possible with cloud.

Figure 11-1 Increasing security architecture by integrating provider service

In many cases, you will be able to find opportunities to incorporate provider services into your architecture that will be more scalable, cheaper, and more secure than traditional architecture patterns used in your current computing environment.

Monitoring, Auditing, and Alerting

When considering monitoring of your cloud environment, you need to have access to telemetry data from both the applistructure and metastructure layers. In other words, you need to collect logging from the server and applications (applistructure), as well as from the metastructure itself.

• Applistructure Collect event logs from servers and applications and deliver to security information and event management (SIEM). To collect database activity, consider a DAM solution.

• Metastructure Collect any data from the API activity occurring in your cloud environment, as well as logs from any service you may be consuming, such as any file access in object storage.

Strong monitoring, auditing, and alerting capabilities cannot be seen as optional. Copies of log data should be stored in a safe location, such as a separate logging account. You want to do this for multiple reasons, including chain of custody for legal purposes. Note, however, that log data will likely need to be accessible to administrators and engineers so they can troubleshoot issues. You may want to determine whether the provider offers the ability to replicate this data to the logging account. This way, administrators can access the logs without requiring any access to a centralized logging area.

Additional Data Security Controls

Numerous additional security controls can help augment security. The following sections address some options for securing systems and information in a cloud environment.

Cloud Platform/Provider-Specific Controls

It is essentially impossible to keep up with all of the various controls offered by cloud providers, especially IaaS providers. Providers may offer machine learning–based anomaly detection, intrusion prevention systems, layer 7 firewalls (such as web application firewalls), data classification systems, and more. In fact, provider offerings that are not designed for security can be leveraged to augment security. Examples include increasing security architecture through the implementation of a service that removes direct data paths, or even services that can tell you the various services consumed by an application running in a serverless environment.

Data Loss Prevention

A DLP system is both a preventative and detective control that can be used to detect potential breaches or misuse of data based on information it is programmed to identify. It can work with data in use (installed on an endpoint or server, for example), in motion (such as a network device), or at rest (such as scanning data in storage). DLP systems need to be configured to understand what is sensitive data and in what context it is considered sensitive. For example, the words “jump” and “roof” themselves are not words to be concerned about, but the phrase, “I’m going to jump off the roof,” is a very concerning statement. As with the statement, the individual pieces of information may not be considered sensitive or important, but in certain contexts, they are very much so. DLP can be configured to understand what a credit card number looks like, for example, to perform a test (a Luhn algorithm check) to ensure that it is a valid credit card number, and then to report or block that data from being sent to another system or outside of your network.
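The credit card example combines pattern matching with a Luhn check. Here is a minimal sketch of that combination; the regular expression and function names are assumptions for illustration, and commercial DLP products use far richer detection rules and context analysis.

```python
import re

# Candidate card numbers: 13 to 19 digits, optionally separated by spaces or hyphens.
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(number):
    """Luhn check: double every second digit from the right, subtract 9
    from any result above 9, and require the total to be divisible by 10."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    if not digits:
        return False
    total = 0
    for position, digit in enumerate(reversed(digits)):
        if position % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def find_card_numbers(text):
    """Return substrings that look like card numbers and pass the Luhn check."""
    return [m.group() for m in CARD_PATTERN.finditer(text) if luhn_valid(m.group())]
```

The Luhn step is what keeps a 16-digit order number from being flagged as a card number, which reduces false positives before the DLP reports or blocks the data.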

DLP is generally considered an SaaS security control. You know that DLP services are often used in a CASB to identify and stop potentially sensitive data from being sent to an SaaS product through integration with inline inspection. DLP functionality in a CASB can either be supplied as part of the CASB itself or it can integrate with an existing DLP platform. When investigating potential CASB solutions, you should be sure to identify the DLP functionality and whether it will integrate with an existing DLP platform you own. The success of your CASB to identify and protect sensitive data will rely heavily on the effectiveness of the DLP solution it is leveraging.

Your CSP may offer DLP capabilities as well. For example, cloud file storage and collaboration products may be able to scan uploaded files using preconfigured keywords and/or regular expressions in their DLP system.
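As a rough sketch of keyword and regular-expression scanning, consider the following. The rule names, the keywords, and the `scan_upload` function are hypothetical and are not taken from any provider's DLP service.

```python
import re
from typing import List

# Hypothetical rule set: a keyword rule and a pattern rule.
RULES = {
    "confidential_marker": re.compile(
        r"\b(confidential|internal use only)\b", re.IGNORECASE),
    "us_ssn_like": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def scan_upload(text: str) -> List[str]:
    """Return the sorted names of all rules that match the uploaded text."""
    return sorted(name for name, pattern in RULES.items()
                  if pattern.search(text))
```

A file whose scan returns any rule names could be quarantined or flagged for review, depending on policy.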

Enterprise Rights Management

Enterprise rights management (ERM, not to be confused with enterprise risk management) and digital rights management (DRM) are both security controls that govern access to data. While DRM is more of a mass-consumer control and typically used with media such as books, music, video games, and other consumer offerings, ERM is typically used as an employee security control that restricts the actions that can be performed on files, such as copy and paste operations, taking screenshots, printing, and other actions. Need to send a sensitive file to a partner but want to ensure the file isn’t copied? ERM can be used to protect the file (to a degree).

ERM and DRM technologies are seldom offered or applicable in a cloud environment. Both technologies rely on encryption, and as you know, encryption can break capabilities, especially with SaaS.

Data Masking and Test Data Generation

Data masking is an obfuscation technology that alters data while preserving its original format. Data masking can address multiple standards such as the Payment Card Industry Data Security Standard (PCI DSS), personally identifiable information (PII) standards, and protected health information (PHI) standards. Consider a credit card number, for example:

4000 3967 6245 5243

With masking, this is changed to

8276 1625 3736 2820

This example shows a substitution technique, where the credit card numbers are changed to perform the masking. Other data-masking techniques include encryption, scrambling, nulling out, and shuffling.
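Substitution and nulling out can both be sketched so that the original format (length and separators) is preserved. The function names below are assumptions made for illustration; a production masking tool would also need to handle concerns such as consistency across tables.

```python
import random


def mask_substitute(value: str, rng: random.Random = None) -> str:
    """Substitution: replace each digit with a random digit, keep separators."""
    if rng is None:
        rng = random.Random()
    return "".join(str(rng.randrange(10)) if ch.isdigit() else ch
                   for ch in value)


def mask_null_out(value: str, placeholder: str = "X") -> str:
    """Nulling out: replace each digit with a fixed placeholder character."""
    return "".join(placeholder if ch.isdigit() else ch for ch in value)
```

For example, `mask_null_out("4000 3967 6245 5243")` yields `XXXX XXXX XXXX XXXX`, while `mask_substitute` returns a different, randomly chosen 16-digit string in the same four-group layout.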

Data masking is generally performed in one of two ways, test data generation (often referred to as static data masking) or dynamic data masking:

• Test data generation: A data-masking application is used to extract data from a database, transform the data, and then duplicate it to another database in a development or test environment. This is generally performed so production data is not used during development.

• Dynamic data masking: Data is held within its original database, but the data stream is altered in real time (on the fly), typically by a proxy, depending on who is accessing the data. Take a payroll database, for example. If a developer accesses the data, salary information would be masked. But if an HR manager accesses the same data, she will see the actual salary data.
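The difference between the two approaches can be sketched with the payroll example. Everything here is hypothetical: the sample rows, the role names, and the functions `dynamic_view` and `generate_test_data` are assumptions for illustration, and a real deployment would typically do this in a proxy or in the database itself.

```python
import random

# Hypothetical production payroll rows.
PAYROLL = [
    {"employee": "A. Rivera", "salary": 82000},
    {"employee": "B. Chen", "salary": 97500},
]

# Assumed set of roles allowed to see real salary data.
UNMASKED_ROLES = {"hr_manager"}


def dynamic_view(rows, role):
    """Dynamic masking: mask on the fly per reader; stored data is untouched."""
    if role in UNMASKED_ROLES:
        return [dict(row) for row in rows]
    return [{**row, "salary": None} for row in rows]


def generate_test_data(rows, rng=None):
    """Static masking: build a transformed copy for a dev or test database."""
    if rng is None:
        rng = random.Random()
    return [
        {"employee": f"Employee {index}",
         "salary": rng.randrange(30000, 150000)}
        for index, _ in enumerate(rows, start=1)
    ]
```

With dynamic masking the same table answers differently depending on the caller's role. With test data generation, everyone who uses the copy sees the same transformed values.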

Enforcing Lifecycle Management Security

As mentioned, data residency can have significant legal consequences. Understand the methods exposed by your provider to ensure that data and systems are restricted to approved geographies. You will want to establish both preventative controls to prohibit individuals from accessing unapproved regions and detective controls to alert you if the preventative controls fail. Provider encryption can also be used to protect data that is accidentally moved across regions, assuming the encryption key is unavailable in the region to which data was accidentally copied.
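A preventative and a detective residency control might look something like the following sketch. The region labels, the inventory format, and both function names are hypothetical; in practice you would rely on the policy and inventory mechanisms your provider exposes.

```python
# Hypothetical list of approved geographies for this data set.
APPROVED_REGIONS = {"eu-central", "eu-west"}


def authorize_deployment(region: str) -> None:
    """Preventative control: refuse requests that target an unapproved region."""
    if region not in APPROVED_REGIONS:
        raise PermissionError(f"Region {region!r} is not approved for this data")


def find_residency_violations(inventory):
    """Detective control: list resources found outside approved regions."""
    return [resource["name"] for resource in inventory
            if resource["region"] not in APPROVED_REGIONS]
```

The detective check is meant to run on a schedule against a resource inventory so that you are alerted if the preventative control is bypassed or misconfigured.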

All controls need to be documented, tested, and approved. Artifacts of compliance may be required to prove that you are maintaining compliance in your cloud environment.

Chapter Review

This chapter addressed the recommendations for data security and encryption according to the CSA Guidance. You should be comfortable with the following items in preparation for your CCSK exam:

• Access controls are your single most important controls.

• All cloud vendors and platforms are different. You will need to understand the specific capabilities of the platforms and services that your organization is consuming.

• Leveraging cloud provider services can augment overall security. In many cases, a provider will offer controls at a lower cost than controls you would have to build and maintain yourself, and controls you build yourself often lack the same scalability or orchestration capabilities.

• Entitlement matrices should be built and agreed upon with a system owner to determine access controls. Once these are finalized, you can implement the access controls. The granularity of potential access controls will be dependent on cloud provider capabilities.

• Cloud access security brokers (CASBs) can be used to monitor and enforce policies on SaaS usage.

• Remember that there are three components involved with encryption (engine, keys, and data), and these components can be located in a cloud or a local environment.

• Customer-managed keys can be managed by a customer but are held by the provider.

• Provider-managed encryption can make encryption as simple as checking a box. This may address compliance, but you need to ensure that your provider’s encryption system is acceptable from a security perspective.

• Whenever the provider can access an encryption key, they may be forced to provide data to government authorities if required to do so by law. If this is an unacceptable risk, either encrypt the data locally with your own keys or avoid storing that data in a cloud environment.

• Using your own encryption (such as using an encryption proxy device) will generally break SaaS. Unlike IaaS and PaaS providers, SaaS providers are often used to process data into valuable information.

• Did I mention access controls are your most important controls?

• New architectural patterns are possible with the cloud. Integrating cloud provider services as part of your architecture can improve security.

• Security requires both protective and detective controls. If you can’t detect an incident, you can never respond to it. Make sure you are logging both API- and data-level activity and that logs meet compliance and lifecycle policy requirements.

• There are several standards for encryption and key-management techniques and processes. These include NIST SP 800-57, ANSI X9.69, and X9.73. (Note: Just know that these exist; you don’t need to study these standards in preparation for your CCSK exam.)

Questions

1. What should be offered by SaaS providers to enforce multitenancy isolation?

A. Provider-managed keys

B. Encryption based on AES-256

C. Per-customer keys

D. Customer-managed hardware security module

2. If your organization needs to ensure that data stored in a cloud environment will not be accessed without permission by anyone, including the provider, what can you do?

A. Use a local HSM and import generated keys into the provider’s encryption system as a customer-managed key.

B. Use an encryption key based on a proprietary algorithm.

C. Do not store the data in a cloud environment.

D. Use customer-managed keys to allow for encryption while having complete control over the key itself.

3. Which of the following controls can be used to transform data based on the individual accessing the data?

A. Enterprise rights management

B. Dynamic data masking

C. Test data generation

D. Data loss prevention

4. Why would an SaaS provider require that customers use provider-supplied encryption?

A. Data encrypted by a customer prior to being sent to the provider application may break functionality.

B. Customer-managed keys do not exist in SaaS.

C. SaaS cannot use encryption because it breaks functionality.

D. All SaaS implementations require that all tenants use the same encryption key.

5. Which of the following storage types is presented like a file system and is usually accessible via APIs or a front-end interface?

A. Object storage

B. Volume storage

C. Database storage

D. Application/platform storage

6. Which of the following should be considered your primary security control?

A. Encryption

B. Logging

C. Data residency restrictions

D. Access controls

7. Which of the following deployment models allows for a customer to have complete control over encryption key management when implemented in a provider’s cloud environment?

A. HSM/appliance-based key management

B. Virtual appliance/software key management

C. Provider-managed key management

D. Customer-managed key management

8. Which of the following security controls is listed by the payment card industry as a form of protecting credit card data?

A. Tokenization

B. Provider-managed keys

C. Dynamic data masking

D. Enterprise rights management

9. Which of the following is a main differentiator between URL filtering and CASB?

A. DLP

B. DRM

C. ERM

D. Ability to block access based on whitelists and blacklists

10. Which of the following is NOT a main component when considering data security controls in cloud environments?

A. Controlling data allowed to be sent to a cloud

B. Protecting and managing data security in the cloud

C. Performing risk assessment of prospective cloud providers

D. Enforcing information lifecycle management

Answers

1. C. SaaS providers are recommended to implement per-customer keys whenever possible to provide better multitenancy isolation enforcement.

2. C. Of the options listed, only not using the cloud meets the requirement. Encrypting data locally with your own keys before copying it to a cloud would also stop a provider from being able to decrypt the data if compelled by legal authorities to do so, but that is not among the choices. It is generally not recommended that you create your own encryption algorithms, and they likely wouldn’t work in a provider’s environment anyway.

3. B. Only dynamic data masking will transform data on the fly with a device such as a proxy that can be used to restrict presentation of actual data based on the user accessing the data. Test data generation exports and transforms the data once, so every user who accesses the copied database sees the same transformed data. None of the other answers is applicable.

4. A. If a customer encrypts data prior to sending it to the SaaS provider, it may impact functionality. SaaS providers should offer customer-managed keys to enhance multitenancy isolation.

5. A. Object storage is presented like a file system and is usually accessible via APIs or a front-end interface. The other answers are incorrect.

6. D. Access controls are always your number-one security control.

7. B. The only option for an encryption key-management system in a cloud environment is the implementation of a virtual machine or software run on a virtual machine that the customer manages.

8. A. Tokenization is a control the payment card industry lists as an option to protect credit card data.

9. A. The main difference between URL filtering and CASB is that, unlike traditional whitelisting or blacklisting of domain names, CASB can use DLP when it is performing inline inspection of SaaS connections.

10. C. Although risk assessment of cloud providers is critical, this activity is not a data security control.