CHAPTER 10

Application Security

This chapter covers the following topics from Domain 10 of the CSA Guidance:

• Opportunities and Challenges Surrounding Application Security in the Cloud

• Secure Software Development Lifecycle

• How the Cloud Impacts Application Design and Architectures

• The Rise and Role of DevOps

Quality is never an accident; it is always the result of high intention, sincere effort, intelligent direction, and skillful execution….

—John Ruskin

Security isn’t easy. Software development isn’t easy. What makes application security so challenging is that these two disciplines often work in isolated silos and, quite frankly, neither group really understands what the other does. Getting these two groups working together takes effort and requires a cultural change to allow for adoption of new technologies such as Platform as a Service (PaaS), serverless computing, and DevOps. As Mr. Ruskin said, quality is the result of sincere effort and intelligent direction. Bringing security and development together will ultimately improve the quality of the software produced by your company.

When you’re talking about application security, you’re talking about a wide body of knowledge. For the CCSK exam, you will not be tested on any programming whatsoever. You do, however, need to be prepared for security questions on design and development, deployment, and operational defenses of applications in production. Application development and security disciplines themselves are evolving at an incredibly fast pace. Application development teams continue to embrace new technologies, processes, and patterns to meet business requirements, while driving down costs. Meanwhile, security is continuously playing catch-up with advances in application development, new programming languages, and new ways of obtaining compute services.

In this chapter, we’ll look at software development and how to build and deploy secure applications in the cloud—specifically in PaaS and Infrastructure as a Service (IaaS) models. Organizations building Software as a Service (SaaS) applications can also use many of these techniques to assist in building secure applications that run in their own data centers or in those of IaaS providers.

Ultimately, because the cloud is a shared responsibility model, you will encounter changes regarding how you address application security. This chapter attempts to discuss the major changes associated with application security in a cloud environment. It covers the following major areas:

• Secure software development lifecycle (SSDLC) Use the SSDLC to determine how cloud computing affects application security from initial design through to deployment.

• Design and architecture Several new trends in designing applications in a cloud environment affect and can improve security.

• DevOps and continuous integration/continuous deployment (CI/CD) DevOps and CI/CD are frequently used in both development and deployment of cloud applications and are becoming a dominant approach to software development, both in the cloud and in traditional data centers. DevOps brings new security considerations and opportunities to improve security from what you do today.

The SSDLC and Cloud Computing

Many different approaches have been taken by multiple groups with regard to SSDLC. Essentially, an SSDLC describes a series of security activities that should be performed during all phases of application design and development, deployment, and operations. Here are some of the more common frameworks used in the industry:

• Microsoft Security Development Lifecycle

• NIST 800-64, “Security Considerations in the System Development Life Cycle”

• ISO/IEC 27034 Application Security Controls Project

• OWASP Secure Software Development Lifecycle Project (S-SDLC)

Although these frameworks all work toward a common goal of increasing security for applications, they all go about it just a little differently. This is why the Cloud Security Alliance breaks down the SSDLC into three larger phases:

• Secure design and development This phase includes activities ranging from training and developing organizational standards to gathering requirements, performing design review through threat modeling, and writing and testing code.

• Secure deployment This phase addresses security and testing activities that must be performed when you’re moving application code from a development environment into production.

• Secure operations This phase concerns the ongoing security of applications as they are in a production environment. It includes additional defenses such as web application firewalls, ongoing vulnerability assessments, penetration tests, and other activities that can be performed once an application is in a production environment.

Cloud computing will impact every phase of the SSDLC, regardless of which particular framework you use. This is a direct result of the abstraction and automation of the cloud, combined with a greater reliance on your cloud provider.

Remember that in the shared responsibility model, the division of responsibilities changes with the service model: IaaS, PaaS, or SaaS. If you are developing an application that will run in an IaaS service model, you will be responsible for more of the security than you would be if you built on features and services supplied by a PaaS provider. In addition, the service model affects the visibility and control that you have. For example, in a PaaS model, you may no longer have access to any network logs for troubleshooting or security investigation purposes.

Secure Design and Development

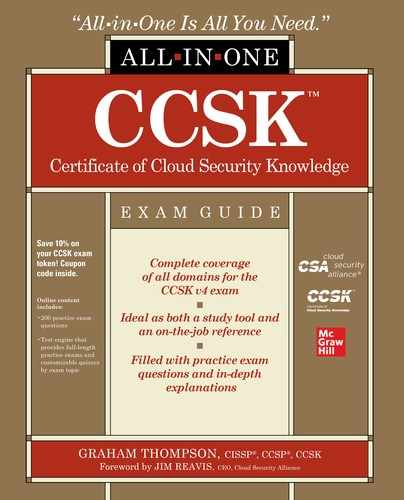

Figure 10-1 shows the CSA-defined five phases involved in secure application design and development, all of which are affected by cloud computing: training, define, design, develop, and test.

Figure 10-1 Five phases in secure application design and development. (Used with permission of Cloud Security Alliance.)

Training

Getting team members trained is the first phase. The CSA Guidance identifies three roles (developers, operations staff, and security teams) and three categories of training (vendor-neutral cloud security training, vendor-specific training, and development tool training). All three roles should receive vendor-neutral training on cloud security fundamentals (such as the CCSK). These same groups should also undertake vendor-specific training on the cloud providers and platforms that are being used by an organization. Additionally, developers and operations staff who are directly involved in architecting and managing the cloud infrastructure should receive specific training on any development tools that will be used.

One of the final training elements should deal with how to create security tests. As the old saying goes, the answers you get are only as good as the questions you ask. Some companies go further and tell developers in advance which security tests will be performed. Because the developers know what the security team will check before a system is accepted, this approach can lead to more secure applications being created in the first place. In effect, it sets developers up for success before they even begin writing code.

Define

In this phase, coding standards are determined (usually based on compliance requirements) and functional requirements are identified. In other words, you determine what this application must do from a security perspective. This is, of course, above and beyond any business requirements that the application needs to address.

Design

During the application design phase, you need to determine whether there are any security issues with the design of the application itself. (Note that this is about design, not actual development.) You need to establish an understanding between the security and software development teams as to how the software application is architected, any modules that are being consumed, and so on. The benefit of going through steps such as threat modeling is that you don’t have to take two steps forward and three steps back after the security team starts reviewing the application code itself. This can save you substantial amounts of time in development. Of course, you need to consider cloud providers and provider services as part of this application review. For example, you could ensure that your provider supports required logging capabilities as part of the design phase.

Threat Modeling Backgrounder

The goal of threat modeling as part of application design is to identify any potential threats to an application that may be successfully exploited by an attacker to compromise an application. This should be done during the design phase, before a single line of code is written.

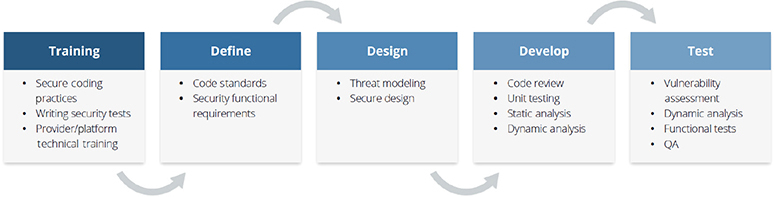

As with everything else, threat modeling comes in many variants. In this discussion, I address the STRIDE threat model (which stands for spoofing, tampering, repudiation, information disclosure, denial of service, and elevation of privilege). Table 10-1 gives the lowdown on each of these threats, supplies a high-level description, and offers a common countermeasure.

Table 10-1 STRIDE Threats and Countermeasures
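If you want to carry STRIDE into tooling such as a threat register or a review checklist, one option is to encode each category alongside the security property it violates. The following Python sketch uses the commonly cited STRIDE-to-property mapping. The countermeasures are illustrative examples and are not necessarily the exact entries in Table 10-1; the names `StrideEntry`, `STRIDE`, and `describe` are hypothetical.

```python
from typing import NamedTuple


class StrideEntry(NamedTuple):
    threat: str
    property_violated: str
    example_countermeasure: str  # illustrative, not taken from Table 10-1


# Commonly cited mapping of each STRIDE category to the property it violates.
STRIDE = {
    "S": StrideEntry("Spoofing", "Authentication", "strong authentication such as MFA"),
    "T": StrideEntry("Tampering", "Integrity", "hashing, signing, and integrity checks"),
    "R": StrideEntry("Repudiation", "Non-repudiation", "secure audit logging"),
    "I": StrideEntry("Information disclosure", "Confidentiality", "encryption in transit and at rest"),
    "D": StrideEntry("Denial of service", "Availability", "rate limiting and auto-scaling"),
    "E": StrideEntry("Elevation of privilege", "Authorization", "least privilege and authorization checks"),
}


def describe(letters: str) -> list[str]:
    """Expand a STRIDE letter string such as "TID" into readable lines."""
    lines = []
    for letter in letters.upper():
        entry = STRIDE[letter]
        lines.append(
            f"{entry.threat}: violates {entry.property_violated}; "
            f"consider {entry.example_countermeasure}"
        )
    return lines


for line in describe("se"):
    print(line)
```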

A threat-modeling exercise can be performed in many ways, ranging from the good-old meeting room with a whiteboard, to tools such as the OWASP Threat Dragon or Microsoft Threat Modeling Tool, to the Elevation of Privilege card game from Microsoft (play a card game using a scoring system!). No matter which method you use to perform threat modeling, at least two teams should always be involved: the security team and the developers themselves. Ideally, you can include business analysts, system owners, and others as part of the threat-modeling exercise. In a way, this is a great form of team-building, and it breaks traditional silos between the security and development teams.

In a threat-modeling exercise, the first step involves diagramming all application components (and where they are located), all users and any other systems that will interact with the application, and the direction of data flows. Once this is done, the security and development teams can work together to identify potential threats to the various components and required countermeasures that need to be in place to stop these threats from being realized. Figure 10-2 presents a high-level example diagram provided by the OWASP Threat Dragon tool.

Figure 10-2 OWASP Threat Dragon sample web application diagram. (Used under Apache License 2.0.)
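Once the diagram exists, a common way to make sure no threat category is skipped is the STRIDE-per-element technique associated with Microsoft's threat-modeling guidance, which lists the STRIDE categories that typically apply to each kind of diagram element. The following minimal Python sketch assumes a hypothetical web application diagram and simply enumerates the (element, threat) pairs that the security and development teams would then discuss. Deciding whether each threat is real, and which countermeasure applies, remains a human exercise.

```python
from dataclasses import dataclass

# STRIDE-per-element: threat categories that typically apply to each kind
# of data flow diagram element. Repudiation applies to a data store mainly
# when it holds logs, so log stores are modeled separately here.
APPLICABLE = {
    "external_entity": "SR",
    "process": "STRIDE",
    "data_store": "TID",
    "log_store": "TRID",
    "data_flow": "TID",
}


@dataclass
class Element:
    name: str
    kind: str  # must be one of the keys in APPLICABLE


def enumerate_threats(elements: list[Element]) -> list[tuple[str, str]]:
    """Return (element name, STRIDE letter) pairs for the team to review."""
    return [(e.name, letter) for e in elements for letter in APPLICABLE[e.kind]]


# Hypothetical diagram of a simple web application.
diagram = [
    Element("Browser user", "external_entity"),
    Element("Web application", "process"),
    Element("Customer database", "data_store"),
    Element("User to web app (HTTPS)", "data_flow"),
    Element("Web app to database", "data_flow"),
]

threats = enumerate_threats(diagram)
print(f"{len(threats)} element/threat pairs to discuss")
for name, letter in threats:
    print(f"  {name}: {letter}")
```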

Having been through the threat-modeling exercise myself, I can tell you firsthand that the benefits are worth the effort. In one particular exercise, threat modeling discovered the following highlights:

• Application was using unencrypted FTP with clear-text credentials

• HTTP without certificates

• No input validation

• No API authentication or rate throttling

• No tracking of successful or unsuccessful logins

• Planned to use an improper identity store

• No Network Time Protocol synchronization for logging

This exercise took approximately three hours. How long would it have taken to address all of these flaws had the application already been built? A whole lot longer than three hours. In fact, at the end of the exercise, the client CISO declared that every application from that point forward would undergo a STRIDE threat-modeling exercise. The CISO was so impressed with the results of the exercise that the organization changed its policies and processes. I think your company would do the same.

Develop

In the development phase, we finally get to build the application. As with every other system and application ever built in the history of mankind, the development environment should be an exact replica of the production environment, yet completely separate from it. In other words, developers should never create applications in a production environment or use actual production data during the development phase. Developers will also probably be using some form of CI/CD pipeline, which needs to be properly secured, with a particular focus on the code repository (such as GitHub). In addition, if you will be leveraging PaaS or serverless development, enhanced logging must be baked into the application to compensate for the limited logging that is usually available in such scenarios.
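As an illustration of baking logging into the application itself, the following Python sketch emits one structured JSON line per security-relevant event, including successful and failed logins (one of the gaps found in the threat-modeling exercise described earlier). The `handler(event, context)` shape loosely mirrors common function-as-a-service entry points, but the event fields (`user`, `authenticated`, `source_ip`) and the `log_event` helper are hypothetical; adapt them to your platform's actual event format and log pipeline.

```python
import json
import logging
import time
import uuid

logging.basicConfig(level=logging.INFO, format="%(message)s")
logger = logging.getLogger("app.security")


def log_event(action: str, outcome: str, **fields) -> dict:
    """Write one structured JSON line describing a security-relevant event."""
    record = {
        "timestamp": time.time(),  # only meaningful if clocks are synchronized
        "event_id": str(uuid.uuid4()),
        "action": action,
        "outcome": outcome,
        **fields,
    }
    logger.info(json.dumps(record, sort_keys=True))
    return record


def handler(event: dict, context=None) -> dict:
    """Hypothetical function entry point that logs its own login events."""
    user = event.get("user", "anonymous")
    source_ip = event.get("source_ip")
    if not event.get("authenticated"):
        log_event("login", "failure", user=user, source_ip=source_ip)
        return {"statusCode": 401}
    log_event("login", "success", user=user, source_ip=source_ip)
    return {"statusCode": 200}


handler({"user": "alice", "authenticated": True, "source_ip": "203.0.113.10"})
handler({"user": "mallory", "source_ip": "198.51.100.7"})
```

Emitting JSON rather than free-form text makes the events easy to ship to whatever log store or SIEM you use and to query later during an investigation.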

Test

As mentioned a few times already, testing should be performed while an application is being developed. These tests can include code review, unit testing, static analysis, and dynamic analysis. The next section of this chapter will discuss both static application security testing (SAST) and dynamic application security testing (DAST).

Secure Deployment

The deployment phase marks the transition or handover of code from developers to operations. Traditionally, this has been the point at which a final quality check occurs, including user acceptance testing. The cloud, DevOps, and continuous delivery are changing that, however, and are enabling tests to be automated and performed earlier in the lifecycle. Many types of application security tests can be integrated into both development and deployment phases. Here are some of the application security tests highlighted in the CSA Guidance:

• Code review This process does not change as a result of moving to the cloud. There are, however, specific cloud features and functions that may be leveraged as part of an application, and you need to ensure that least privilege is enforced at all times inside the application code and all dependencies. Not only should user permissions follow least privilege, but so should services and any roles used to access other services. About the worst thing you could do from an application-permission perspective is to tightly control which users can access the application while giving the application itself full control over every aspect of a cloud environment. In addition, ensure that everything related to authentication, including the credentials used by an application and any required encryption, is reviewed as part of code review.

• Unit testing, regression testing, and functional testing These standard tests are used by developers and should address any API calls being used to leverage the functionality provided by a cloud provider.

• Static application security testing SAST analyzes application code offline. SAST is generally a rules-based test that scans software code for issues such as credentials embedded in application code and missing or weak input validation, both of which are major concerns for application security.

• Dynamic application security testing While SAST looks at code offline, DAST looks at an application while it is running. An example of DAST is fuzz testing, in which you throw garbage at an application and try to generate an error on the server (such as an “error 500” on a web server, which is an internal server error). Because DAST is a live test against a running system, you may need to get approval from your provider before starting.
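To make the rules-based nature of SAST concrete, here is a deliberately tiny Python sketch that scans source text line by line for embedded credentials. The rule names and regular expressions are illustrative assumptions (the `AKIA` prefix reflects the common format of long-term AWS access key IDs). Real SAST tools use much larger, language-aware rule sets and also cover issues such as input validation, which this sketch does not attempt.

```python
import re

# Illustrative rules: a name plus a regular expression for each.
RULES = {
    # Long-term AWS access key IDs commonly start with "AKIA" (20 characters).
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # Assignments such as db_password = "..." with a quoted literal value.
    "hardcoded_password": re.compile(
        r"(?i)(password|passwd|pwd|secret)\w*\s*[:=]\s*['\"][^'\"]+['\"]"
    ),
    "private_key_block": re.compile(r"-----BEGIN (RSA |EC )?PRIVATE KEY-----"),
}


def scan_source(text: str) -> list[tuple[int, str]]:
    """Return (line number, rule name) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in RULES.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


sample = '''
db_host = "db.internal"
db_password = "Sup3rS3cret!"
aws_key = "AKIAABCDEFGHIJKLMNOP"
'''
for lineno, rule in scan_source(sample):
    print(f"line {lineno}: {rule}")
```

Because the rules are plain data, a security team can share and extend them with developers in advance, in keeping with the idea of telling developers which tests will be run.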

Cloud Impacts on Vulnerability Assessments

Vulnerability assessments (VAs) should always be performed on images before they are used to launch instances. VAs can be integrated into the CI/CD pipeline. Testing images should occur in a special locked-down test environment such as a virtual network or separate account. As with all security tests, however, this must be done after approval for any testing is given by the provider.

With an ongoing VA of running instances, you can use host-based VA tools to get complete visibility into any vulnerabilities on a system. VAs can also be used to test entire virtual infrastructures by leveraging infrastructure as code (IaC) to build a test environment. This enables you to generate an exact replica of a production environment in minutes, so you can properly assess an application and all infrastructure components without the risk of impacting the production environment.

When it comes to performing VAs, there are two schools of thought. One perspective is that a VA should be performed directly on a system itself to provide complete transparency and to allay fears that security controls in front of the system are hiding any exposures that actually exist. This is known as a “host-based” view. The other approach is to take the view of an outsider and perform the VA with all controls, such as virtual firewalls, taken into account. The CSA Guidance suggests that you use a host-based view by leveraging host-based agents. In this case, cloud provider permission will not be required, because the assessment is performed on the server, not across the provider’s network.

Cloud Impact on Penetration Testing

As with VAs, penetration tests can also be performed in a cloud environment, and you’ll need permission from the provider to perform them. The CSA recommends adapting your current penetration testing for the cloud by using the following guidelines:

• Use testing firms and individuals with experience working with the cloud provider where the application is deployed. Your applications will likely be using services supplied by the cloud service provider (CSP), and a penetration tester who does not understand the CSP’s services may miss critical findings.

• The penetration test should address the developers and cloud administrators themselves. This is because many cloud breaches attack those who maintain the cloud, not just the application running in the cloud. Testing should include the cloud management plane.

• If the application you are testing is a multitenant application, ensure that the penetration tests include attempts to break tenant isolation and gain access to another tenant.
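The tenant-isolation guideline can also be turned into a repeatable check. The following Python sketch assumes a hypothetical data layer (`TenantStore`) that scopes every read to the calling tenant, then plays the attacker: it tries to read another tenant's record IDs and reports anything that leaks. A real penetration test would exercise the deployed application's APIs rather than an in-memory class.

```python
class TenantStore:
    """Hypothetical multitenant data layer; every read is scoped to a tenant."""

    def __init__(self) -> None:
        self._rows: dict[str, tuple[str, str]] = {}  # record id -> (tenant, data)

    def put(self, tenant: str, record_id: str, data: str) -> None:
        self._rows[record_id] = (tenant, data)

    def get(self, tenant: str, record_id: str) -> str:
        row = self._rows.get(record_id)
        # Treat "belongs to another tenant" exactly like "does not exist" so the
        # response does not reveal that the ID is valid for someone else.
        if row is None or row[0] != tenant:
            raise KeyError(record_id)
        return row[1]


def cross_tenant_leaks(store: TenantStore, attacker: str, victim_ids: list[str]) -> list[str]:
    """Try to read another tenant's records; return any IDs that were readable."""
    leaked = []
    for record_id in victim_ids:
        try:
            store.get(attacker, record_id)
        except KeyError:
            continue  # access denied, as expected
        leaked.append(record_id)
    return leaked


store = TenantStore()
store.put("tenant-a", "inv-001", "Tenant A invoice")
store.put("tenant-b", "inv-002", "Tenant B invoice")
print("leaked:", cross_tenant_leaks(store, "tenant-b", ["inv-001", "inv-999"]))
```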

Deployment Pipeline Security

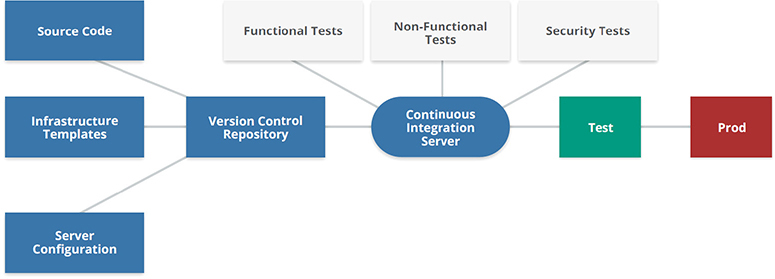

It may seem counterintuitive, but automated CI/CD pipelines can actually enhance security through supporting immutable infrastructures, automating security testing, and providing extensive logging of application and infrastructure changes when such changes are performed through the pipeline. The real power here is that there is no opportunity for human error to occur when it comes to testing—100 percent of the testing is performed 100 percent of the time. Test results can be directed toward a test result bucket so that, in the case of an audit, for example, all testing is easily demonstrated to an auditor. Logs can also be configured to state the person or system that submitted a change, and automated approval can also be implemented in a change management system if all tests were successfully passed.

As with anything else, the pipeline must be tightly secured. Pipelines should be hosted in a dedicated cloud environment with very limited access to production workloads or to the infrastructure hosting the pipeline components. Figure 10-3 shows the various components that make up a deployment pipeline.

Figure 10-3 Components of a continuous deployment pipeline. (Used with permission of Cloud Security Alliance.)

As you can see in Figure 10-3, there are two central components of the deployment pipeline: the version control repository (such as GitHub), where code is stored, and the continuous integration server (such as Jenkins), which can use plug-ins to perform any prebuild, build, and post-build activities. Activities here could include performing security tests or functional tests and sending results to a specified location. Additionally, the continuous integration server can connect to a change management system that will track any approved changes to an environment. You can also set thresholds on test results. For example, if there are any critical findings, the continuous integration server will not build the application or perform the requested action.

All of these tests are created in advance. From a security perspective, this means that although you still have separation of duties with a CI/CD pipeline, humans are not performing the tests at build time. With this in mind, you can see how some (and, more importantly, the CSA Guidance) consider an automated continuous deployment pipeline as being a more secure approach to deploying software to an environment.
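The threshold idea described above can be sketched as a small build gate. In this Python example, the findings list and its fields (`rule`, `severity`, `file`) are hypothetical stand-ins for whatever your SAST or VA tool reports. The function returns the exit code a CI job would use, since a non-zero exit status is how most CI servers mark a stage as failed.

```python
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def blocking_findings(findings: list[dict], fail_at: str = "critical") -> list[dict]:
    """Return findings at or above the configured severity threshold."""
    threshold = SEVERITY_RANK[fail_at]
    return [f for f in findings if SEVERITY_RANK[f["severity"]] >= threshold]


def gate_exit_code(findings: list[dict], fail_at: str = "critical") -> int:
    """0 lets the pipeline continue; 1 stops the build."""
    blocking = blocking_findings(findings, fail_at)
    for f in blocking:
        print(f"BLOCKING {f['severity']}: {f['rule']} in {f['file']}")
    return 1 if blocking else 0


# Hypothetical findings, as they might be parsed from a scanner report.
report = [
    {"rule": "hardcoded_password", "severity": "critical", "file": "app/db.py"},
    {"rule": "missing_security_header", "severity": "medium", "file": "app/web.py"},
]
print("exit code:", gate_exit_code(report))
```

In an actual CI step, you would pass the returned value to `sys.exit` so the server stops the build; the `fail_at` parameter is where the organization's agreed threshold lives.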

Impact of IaC and Immutable Workloads

You read about IaC in Chapter 6 and immutable workloads in Chapter 7. So you know that IaC uses templates to create everything, from configuration of a particular server instance to building the entire cloud virtual infrastructure. The depth of IaC capabilities is entirely provider-dependent. If the provider does not support API calls to create a new user account, for example, you must do this manually.

Because these environments are automatically built for us from a set of source file definitions (templates), they can also be immutable. This means that any manually implemented change will be overwritten the next time a template is run. When you use an immutable approach, you must monitor the environment for manual changes, and all legitimate changes must be made through the templates, potentially through the continuous deployment pipeline if you are using one. This enables you to lock down the entire infrastructure much more tightly than is normally possible in a non-cloud application deployment.

The bottom line here is that when security is properly engaged, the use of IaC and immutable deployments can significantly improve security.
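Checking for changes made outside the templates amounts to comparing the desired state defined in a template with what is actually deployed. The Python sketch below assumes both have already been flattened into simple key/value dictionaries (the setting names are hypothetical). In an immutable model, the response to any drift it reports is to redeploy from the template rather than to fix the running resource by hand.

```python
def detect_drift(desired: dict, actual: dict) -> dict:
    """Report every setting whose deployed value differs from the template."""
    drift = {}
    for key in sorted(desired.keys() | actual.keys()):
        expected, found = desired.get(key), actual.get(key)
        if expected != found:
            drift[key] = {"expected": expected, "found": found}
    return drift


# Hypothetical desired state rendered from an IaC template.
desired = {
    "instance_size": "small",
    "remote_login_enabled": False,
    "ingress_cidrs": ("10.0.0.0/16",),
}
# Hypothetical state actually observed in the running environment.
actual = {
    "instance_size": "small",
    "remote_login_enabled": True,                  # enabled by hand
    "ingress_cidrs": ("10.0.0.0/16", "0.0.0.0/0"),  # rule added outside the template
    "debug_port": 8080,                            # setting not in the template at all
}

for key, diff in detect_drift(desired, actual).items():
    print(f"DRIFT {key}: expected {diff['expected']!r}, found {diff['found']!r}")
```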

Secure Operations

Once the application is deployed, we can turn our attention to security in the operations phase. Other elements of security, such as infrastructure security (Chapter 7), container security (Chapter 8), data security (Chapter 11), and identity and access management (Chapter 12), are key components in a secure operations phase.

The following is additional guidance that directly applies to application security:

• Production and development environments should always be separated. Access to the management plane for production environments should be locked down much more tightly than access to the development environment. When assessing privileges assigned to an application, you must always be aware of the credentials the application uses to access other services. These must be assigned on a least-privilege basis, just as you would assign least privilege to user accounts. Multiple sets of credentials for each application service can be implemented to compartmentalize entitlements (permissions) further and support the least-privilege approach.

• Even when using an immutable infrastructure, you should actively monitor for changes and deviations from approved baselines. Again, depending on the particular cloud provider, this monitoring can and should be automated whenever possible. You can also use event-driven security (covered in the next section) to revert any changes to the production environment automatically.

• Application testing and assessment should be considered an ongoing process, even if you are using an immutable infrastructure. As always, if any testing and/or assessments will be performed across the provider’s network, they should be performed with the permission of the CSP to avoid violating any terms of service.

• Always remember that change management isn’t just about application changes. Any infrastructure and cloud management plane changes should be approved and tracked.
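Reviewing the credentials an application uses for least privilege can be partly automated. The following Python sketch flags `Allow` statements that grant wildcard actions or resources in a policy document shaped like an AWS IAM JSON policy (`Statement`, `Effect`, `Action`, `Resource`). The bucket name is hypothetical, and other providers express entitlements differently, so treat this as an illustration of the check rather than a complete policy analyzer.

```python
def overly_broad(policy: dict) -> list[str]:
    """Flag Allow statements that grant wildcard actions or resources."""
    problems = []
    for i, stmt in enumerate(policy.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        resources = stmt.get("Resource", [])
        # IAM-style policies accept either a single string or a list.
        actions = [actions] if isinstance(actions, str) else actions
        resources = [resources] if isinstance(resources, str) else resources
        if any(a == "*" or a.endswith(":*") for a in actions):
            problems.append(f"statement {i}: wildcard action {actions}")
        if "*" in resources:
            problems.append(f"statement {i}: wildcard resource")
    return problems


# Hypothetical policy attached to an application's role.
app_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "s3:GetObject",
         "Resource": "arn:aws:s3:::example-app-bucket/*"},
        {"Effect": "Allow", "Action": "*", "Resource": "*"},
    ],
}
for problem in overly_broad(app_policy):
    print(problem)
```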

How the Cloud Impacts Application Design and Architectures

Cloud services can offer new design and architecture options that can increase application security. Several traits of the cloud can be used to augment security of applications through application architecture itself.

Cloud services can offer segregation by default. Applications can be run in their own isolated environment. Depending on your provider, you can run applications in separate virtual networks or different accounts. Although operational overhead will be incurred when using a separate account for every application, using separate accounts offers the benefit of enabling management plane segregation, thus minimizing access to the application environment.

If you do have an immutable infrastructure, you can increase security by disabling remote logins to immutable servers and other workloads, adding file integrity monitoring, and integrating immutable techniques into your incident recovery plans, as discussed in Chapter 9.

PaaS and serverless technologies can reduce the scope of your direct security responsibilities, but this comes at the cost of increasing your due-diligence responsibilities. The scope shrinks because you are leveraging a service from the provider, and the provider is responsible for securing the underlying services and operating systems (assuming the provider has done a good job of securing the services it offers customers). Your due diligence grows because you must verify that the provider has, in fact, done that job.

I will cover serverless computing in greater detail in Chapter 14. For now, here are two major concepts that serverless computing can deliver to increase security of our cloud environments:

• Software-defined security This concept involves automating security operations and could include automating cloud incident response, changes to entitlements (permissions), and the remediation of unapproved infrastructure changes.

• Event-driven security This puts the concept of software-defined security into action. You can have a system monitoring for changes that will call a script to perform an automatic response in the event of a change being discovered. For example, if a security group is changed, a serverless script can be kicked off to undo the change. This interaction is usually performed through some form of notification messaging. Security can define the events to monitor and use event-driven capabilities to trigger automated notification and response.
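The security group example above can be sketched as an event handler. In this Python sketch, the event format, the approved baseline, and the group ID are all hypothetical, and the `revoke` and `notify` callables are injected so the logic can run without a cloud SDK. In a real deployment, they would wrap your provider's API calls and messaging service, and the function would be triggered by the provider's change notifications.

```python
from typing import Callable

# Hypothetical approved baseline: security group id -> allowed (port, cidr) rules.
APPROVED = {"sg-web": {(443, "0.0.0.0/0"), (22, "10.0.0.0/16")}}


def on_security_group_change(event: dict,
                             revoke: Callable[[str, int, str], None],
                             notify: Callable[[str], None]) -> list[tuple[int, str]]:
    """Revert any ingress rule that is not in the approved baseline."""
    group = event["group_id"]
    allowed = APPROVED.get(group, set())
    reverted = []
    for rule in event["ingress_rules"]:
        port, cidr = rule["port"], rule["cidr"]
        if (port, cidr) not in allowed:
            revoke(group, port, cidr)  # in practice, a provider API call
            reverted.append((port, cidr))
    if reverted:
        notify(f"Reverted unapproved rules on {group}: {reverted}")
    return reverted


# Simulated notification, as a change-monitoring service might deliver it.
event = {"group_id": "sg-web",
         "ingress_rules": [{"port": 443, "cidr": "0.0.0.0/0"},
                           {"port": 3389, "cidr": "0.0.0.0/0"}]}
calls = []
on_security_group_change(event, revoke=lambda g, p, c: calls.append((g, p, c)), notify=print)
```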

Finally, microservices are a growing trend in application development and are well-suited to cloud environments. Using microservices, you can break down an entire application into its individual components and run those components on separate virtual servers or containers. In this way, you can tightly control access and reduce the attack surface of the individual functions by eliminating all services that are not required for a particular function to operate. Leveraging auto-scaling can also assist with availability, as only functions that require additional compute capacity need to be scaled up. There is additional overhead from a security perspective with microservices, however, because communications between the various functions and components need to be tightly secured. This includes securing any service discovery, scheduling, and routing services.

Microservices Backgrounder

Back in the early 2000s, I worked with a project manager named Ralph. One of Ralph’s favorite sayings was, “A system is not a server and a server is not a system.” Ralph was pretty ahead of his time! He called microservices before they even existed.

A microservices architecture breaks out the various components of a system and runs them completely separately from one another. When services need to access one another, they do so using APIs (usually REST APIs) to get data. This data is then presented to the requesting system. The gist is that changes to one component don’t require a complete system update, which differs from what was required with a monolithic system in which multiple components operate on the same server.

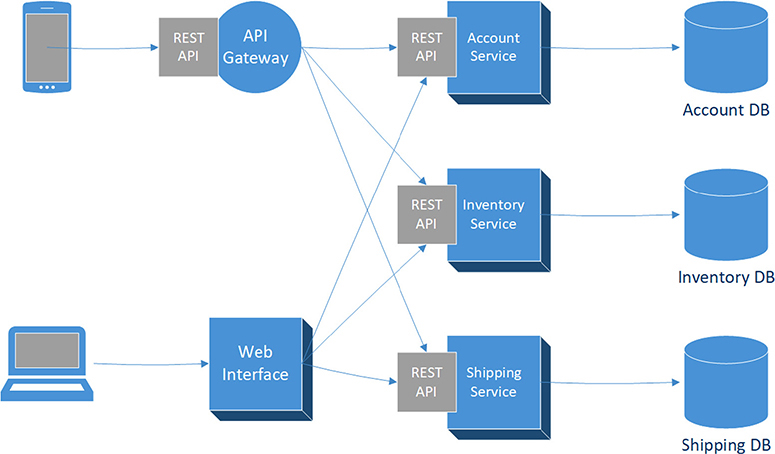

In Chapter 6, I discussed the “API Mandate” from Jeff Bezos at Amazon. The company basically created a microservices architecture, in which every component that was built exposed its APIs. Figure 10-4 shows how various components of an organization like Amazon.com can be broken out into microservices.

Figure 10-4 Microservices architecture diagram

As you can see, Figure 10-4 includes an account service, an inventory service, and a shipping service. Each of these different services can be written in a language that is appropriate for its particular purpose. For example, the account service could be written in Java, the inventory service in Ruby, and the shipping service in Python. When a change needs to be made to the inventory service, for example, neither the account service nor shipping service is impacted as a result. Also, access to changes can be restricted to the individual developers responsible for an individual service. This enforces a least-privilege approach to access.

In the figure’s architecture diagram, notice that an API gateway is being used by the mobile phone application. The API gateway acts as the entry point to all services behind it, so it can handle all aspects of the client accessing a system based on microservices. (I will discuss API gateways and authorizations in Chapter 12.)

The Rise and Role of DevOps

DevOps is a portmanteau (a fancy French word that refers to a combination of two words to create a new one) for “development” and “operations.” It is a culture, not a tool. Nor is it technology that you can just buy off the shelf and implement. The goal of DevOps is to have a deeper integration of development and operations teams that delivers automation of application deployments and infrastructure operations, resulting in higher-quality code in a faster time frame.

From a technical perspective, DevOps relies on the CI/CD pipeline, covered previously in the section “Deployment Pipeline Security.” It uses programmatic automation tools to improve management of an infrastructure. Although DevOps is a relatively new approach that is often seen in the cloud, it is not exclusively a cloud-based approach to software deployment.

DevOps Backgrounder

In DevOps, the development and operations teams are no longer siloed. Rather, these two teams can be merged into a single team, where software engineers (aka developers) work across the entire application lifecycle, from the development and testing to deployment and finally operations.

DevOps brings significant cultural changes with it, and this is why some companies may be hesitant to adopt it. DevOps can be used to change the way deployments occur, from queuing changes up for weeks and then deploying everything on one weekend, to performing multiple changes throughout the day. You may be wondering how on earth an organization could make multiple changes per day without performing QA testing. That’s an excellent question. To address this, many companies focus on automatic rollback capability and implement a blue-green deployment approach to minimize risk even further.

The blue-green deployment approach works much like the immutable deployment approach. Although blue-green deployment can be done with containers and load balancers, I’m going to use an example of immutable virtual machine workloads in an auto-scaling group for our discussion. Consider, then, an auto-scaling group of three web servers. To implement a blue-green deployment approach, you update the web server image with the updated application code. You then terminate one of the running instances. When the auto-scaling group notices that one server is missing, it will automatically deploy a server from the newly updated image as part of that group. Once that is done, about 33 percent of the incoming network traffic is directed to this newly updated server. That server is monitored, and if all is good, a second server in that auto-scaling group is terminated. That now leaves about 66 percent of network traffic being served by the newly updated application running on the web server pool. You rinse and repeat until all servers have been updated. This is how the so-called DevOps unicorns (such as Netflix, Pinterest, Facebook, Amazon, and, well, basically any other global 24×7 web operation) achieve effectively zero downtime.
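The traffic arithmetic in that walkthrough (one of three servers is about 33 percent, two of three is about two-thirds) can be sketched as a short simulation. This Python example assumes the load balancer spreads traffic evenly across servers and uses a hypothetical `healthy` callback to stand in for monitoring each newly updated server; a failed check stops the rollout so you can roll back. Note that the one-server-at-a-time replacement it models is often called a rolling update, whereas a classic blue-green deployment runs two complete environments side by side and switches traffic between them.

```python
from typing import Callable


def rolling_replace(pool_size: int,
                    healthy: Callable[[int], bool] = lambda step: True) -> tuple[list[int], bool]:
    """Replace servers one at a time, assuming equal load balancing.

    Returns the rounded percentage of traffic on the new version after each
    step and whether the rollout completed (False means stop and roll back).
    """
    new = 0
    shares = []
    while new < pool_size:
        new += 1  # terminate one old instance; auto-scaling launches a new one
        shares.append(round(100 * new / pool_size))
        if not healthy(new):  # monitor the newly updated server(s)
            return shares, False
    return shares, True


print(rolling_replace(3))
print(rolling_replace(3, healthy=lambda step: step < 2))
```

With three servers, the first call reports 33, 67, and 100 percent; the second simulates a problem detected on the second updated server, so the rollout halts with two-thirds of traffic on the new version and signals a rollback.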

Another item that concerns some business leaders about DevOps is the fact that developers in a DevOps world basically own what they code. This means that in many DevOps environments, the developers not only create the application, but they are also responsible for management of that application. This, of course, is quite different from the core security principle that separation of duties must be enforced. We want developers to be able to push code quickly, but we don’t want them to access production data or to undermine security checks. A modern CI/CD pipeline and its associated automatic security tests make all of this possible.

In closing this discussion about DevOps, I think it’s very important to realize that DevOps is not magic, and it can’t happen overnight. It will take some time for business leaders to be convinced, if they aren’t already, that performing small, incremental changes can actually improve the security and availability of applications, not to mention significantly reduce time to market.

Security Implications and Advantages of DevOps

To discuss the security implications and advantages of DevOps, look no further than the information presented in the section “Deployment Pipeline Security” earlier in the chapter. Pipelines allow only approved code to be deployed. Development, test, and production versions are all built from the exact same source files, which eliminates deviation from known good standards.
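Building every environment from the same source can be enforced by promoting one build artifact through dev, test, and production and checking its digest before each deployment. The following sketch assumes an in-memory `ApprovedBuilds` record (a hypothetical stand-in for an artifact repository) and uses SHA-256 from Python's standard `hashlib` module; any artifact whose bytes differ from the approved build is refused.

```python
import hashlib
from typing import Dict


def sha256_digest(artifact: bytes) -> str:
    return hashlib.sha256(artifact).hexdigest()


class ApprovedBuilds:
    """Hypothetical record of build artifacts that passed the pipeline's checks."""

    def __init__(self) -> None:
        self._digests: Dict[str, str] = {}  # version -> SHA-256 of the approved artifact

    def approve(self, version: str, artifact: bytes) -> None:
        self._digests[version] = sha256_digest(artifact)

    def is_approved(self, version: str, artifact: bytes) -> bool:
        expected = self._digests.get(version)
        return expected is not None and expected == sha256_digest(artifact)


def deploy(approved: ApprovedBuilds, environment: str, version: str, artifact: bytes) -> str:
    # Every environment receives the same approved bytes; anything else is refused.
    if not approved.is_approved(version, artifact):
        raise ValueError(f"{version} does not match the approved build; not deploying to {environment}")
    return f"{version} deployed to {environment}"


builds = ApprovedBuilds()
artifact = b"web-v2 build output"
builds.approve("v2", artifact)
for env in ("dev", "test", "prod"):
    print(deploy(builds, env, "v2", artifact))
```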

The CI/CD pipeline produces master images for virtual machines, containers, and infrastructure stacks quickly and consistently. This enables automated deployments and immutable infrastructure. How is code approved for deployment? You integrate security tests into the build process. Of course, manual testing is also possible as a final check before the application is launched in a production environment.
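Integrating security tests into the build usually means a step that fails the build when a scanner reports findings above an agreed severity. The sketch below assumes scanner output has already been parsed into `Finding` objects; the severity scale, the rule names, and the default `fail_at="high"` threshold are hypothetical policy choices, not those of any particular SAST product.

```python
from dataclasses import dataclass
from typing import List, Tuple

SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


@dataclass
class Finding:
    tool: str      # which scanner reported it (e.g., a SAST step)
    rule: str      # hypothetical rule name
    severity: str  # one of SEVERITY_RANK's keys


def security_gate(findings: List[Finding], fail_at: str = "high") -> Tuple[bool, List[Finding]]:
    """Pass the build only if no finding is at or above the fail_at severity."""
    threshold = SEVERITY_RANK[fail_at]
    blocking = [f for f in findings if SEVERITY_RANK[f.severity] >= threshold]
    return (not blocking, blocking)


results = [Finding("sast", "sql-injection", "high"), Finding("sast", "weak-hash", "low")]
passed, blocking = security_gate(results)
print(passed, [f.rule for f in blocking])  # False ['sql-injection']
```

In a CI job, this step would exit with a nonzero status when `passed` is false, which stops the pipeline before any image is produced. Where to set the threshold is a decision for the security team.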

Auditing can be supported (and in many cases improved) by this automated testing of application code as part of the build process, before the application is deployed. Every change can be traced to the individual modifications in source files and back to the person who made them, with the entire history of changes tracked in a single location. This offers considerable audit and change-tracking benefits.
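The traceability described above amounts to following links from a deployment to the build that produced it, and from that build back through the commit history. This minimal sketch uses hypothetical in-memory `Commit`, `Build`, and `Deployment` records; in practice the same links come from the version control system and the continuous integration server's build records.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Commit:
    sha: str
    author: str
    message: str
    parent: Optional[str]  # previous commit, or None for the first one


@dataclass(frozen=True)
class Build:
    build_id: str
    commit_sha: str
    tests_passed: bool


@dataclass(frozen=True)
class Deployment:
    deployment_id: str
    build_id: str
    environment: str


def audit_trail(deployment_id: str, deployments: Dict[str, Deployment],
                builds: Dict[str, Build], commits: Dict[str, Commit]) -> List[str]:
    """Walk from a deployment back through its build to every commit and author."""
    dep = deployments[deployment_id]
    build = builds[dep.build_id]
    lines = [f"{dep.deployment_id} -> {dep.environment} from {build.build_id} "
             f"(tests passed: {build.tests_passed})"]
    sha: Optional[str] = build.commit_sha
    while sha is not None:
        commit = commits[sha]
        lines.append(f"{commit.sha} by {commit.author}: {commit.message}")
        sha = commit.parent
    return lines


commit_records = {"a1": Commit("a1", "alice", "Add login form", None),
                  "b2": Commit("b2", "bob", "Validate login input", "a1")}
build_records = {"build-7": Build("build-7", "b2", True)}
deployment_records = {"dep-42": Deployment("dep-42", "build-7", "prod")}
for line in audit_trail("dep-42", deployment_records, build_records, commit_records):
    print(line)
```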

Speaking of change management, if the change management system you currently use exposes APIs, the CI/CD pipeline can automatically obtain approval for deployments. Simply put, when a deployment request comes in through the continuous integration server, the change management system can be configured to approve the change automatically because all of its tests have passed.
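Automatic approval can be sketched as the CI server calling the change management system's API and marking the change approved only when every test has run and passed. `InMemoryChangeSystem` and its `create_change` method are hypothetical stand-ins for whatever API your change management product actually exposes; failed or missing tests leave the change pending human review.

```python
import itertools
from dataclasses import dataclass
from typing import Dict


@dataclass
class ChangeRecord:
    change_id: int
    summary: str
    status: str  # "approved" or "pending-review"


class InMemoryChangeSystem:
    """Stand-in for a change management system's API; method names are hypothetical."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)
        self.records: Dict[int, ChangeRecord] = {}

    def create_change(self, summary: str, status: str) -> ChangeRecord:
        record = ChangeRecord(next(self._ids), summary, status)
        self.records[record.change_id] = record
        return record


def request_deployment(cms: InMemoryChangeSystem, summary: str,
                       test_results: Dict[str, bool]) -> ChangeRecord:
    """Called by the CI server: auto-approve only if every test ran and passed."""
    if test_results and all(test_results.values()):
        return cms.create_change(summary, status="approved")
    failed = sorted(name for name, ok in test_results.items() if not ok)
    note = ", ".join(failed) or "no tests ran"
    return cms.create_change(f"{summary} (needs review: {note})", status="pending-review")


cms = InMemoryChangeSystem()
print(request_deployment(cms, "Deploy web-v2", {"sast": True, "dast": True}).status)  # approved
print(request_deployment(cms, "Deploy web-v3", {"sast": True, "dast": False}).summary)
```

Treating an empty set of test results as a failure is a deliberate choice here: a pipeline that skipped its tests should not be able to approve itself.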

Chapter Review

This chapter discussed application security in a cloud environment. Application security itself does not change as a result of deploying to the cloud, but the cloud makes many new approaches to application architecture and design possible, and these need to be appropriately secured. In addition, although many different frameworks are associated with the secure software development life cycle (SSDLC), the CSA divides the SSDLC into three distinct phases: secure design and development, secure deployment, and secure operations. We also looked at some other technologies that are commonly related to (but not exclusive to) the cloud, such as microservices and DevOps.

As for your CCSK exam preparation, you should be comfortable with the following information:

• Understand the security capabilities of your cloud provider and know that every platform and service needs to be inspected prior to adoption.

• Build security into the initial design process. Security is commonly brought into applications when it is too late. Secure applications are a result of a secure design.

• Even if you don’t have a formal software development life cycle in your organization, consider moving toward a continuous deployment method and building automated security tests into the deployment pipeline. This still supports separation of duties, but a human is not performing the tests every single time. The security team creates the tests, and the automated system runs them 100 percent thoroughly, 100 percent of the time.

• Threat modeling (using a methodology such as STRIDE), static application security testing (SAST), and dynamic application security testing (DAST) should all be integrated into application development.

• Application tests must be designed and performed for the specific cloud environment in use. A major focus of application checks in a cloud environment is the API credentials the application uses. Simply stated, you want to ensure that no application accesses other services with excessive privileges.

• If you are going to leverage new architectural options and requirements, you will need to update security policies and standards. Don’t merely attempt to enforce existing policies and standards in a new environment, where things are created and run differently than in a traditional data center.

• Use software-defined security to automate security controls.

• Event-driven security is a game-changer. Using serverless technology, you can easily kick off a script that will automatically respond to a potential incident.

• Applications can be run in separate cloud environments, such as different accounts, to improve segregation of management plane access.
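To make the event-driven security point concrete, here is a minimal sketch of a serverless-style handler that automatically reverts a risky firewall change and notifies an administrator. The event fields (`action`, `group_id`, `actor`), the `firewall.rule.added` action name, the in-memory `SecurityGroup` model, and the restricted port list are all hypothetical; a real function would be triggered by the provider's API-monitoring service and would call the provider's SDK to change the rule.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

RESTRICTED_PORTS = {22, 3389}  # assumption: SSH and RDP must never be open to the internet


@dataclass
class FirewallRule:
    port: int
    source_cidr: str


@dataclass
class SecurityGroup:
    group_id: str
    rules: List[FirewallRule] = field(default_factory=list)


def handle_event(event: Dict[str, str], groups: Dict[str, SecurityGroup],
                 notify: Callable[[str], None]) -> List[FirewallRule]:
    """Serverless-style handler invoked once per management-plane API event."""
    if event.get("action") != "firewall.rule.added":
        return []
    group = groups[event["group_id"]]
    reverted = [r for r in group.rules
                if r.source_cidr == "0.0.0.0/0" and r.port in RESTRICTED_PORTS]
    for rule in reverted:
        group.rules.remove(rule)  # automated response: revert the risky change
    if reverted:
        notify(f"Reverted {len(reverted)} rule(s) on {group.group_id}; "
               f"change made by {event.get('actor', 'unknown')}")
    return reverted


sg = SecurityGroup("sg-web", [FirewallRule(443, "0.0.0.0/0"), FirewallRule(22, "0.0.0.0/0")])
handle_event({"action": "firewall.rule.added", "group_id": "sg-web", "actor": "bob"},
             {"sg-web": sg}, notify=print)
```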

Questions

1. Prior to developing applications in a cloud, which training(s) should be undertaken by security team members?

A. Vendor-neutral cloud training

B. Provider-specific training

C. Development tool training

D. A and B

2. Tristan has just been hired as CIO of a company. His first desired action is to implement DevOps. Which of the following is the first item that Tristan should focus on as part of DevOps?

A. Selecting an appropriate continuous integration server

B. Choosing a proof-of-concept project that will be the first use of DevOps

C. Understanding the existing corporate culture and getting leadership buy-in

D. Choosing the appropriate cloud service for DevOps

3. When you’re planning a vulnerability assessment, what is the first action you should take?

A. Determine the scope of the vulnerability assessment.

B. Determine the platform to be tested.

C. Determine whether the vendor needs to be given notification in advance of the assessment.

D. Determine whether the assessment will be performed as an outsider or on the server instance used by the running application.

4. Better segregation of the management plane can be performed by doing which of the following?

A. Run all applications in a PaaS.

B. Run applications in their own cloud account.

C. Leverage DevOps.

D. Use immutable workloads.

5. How can security be increased in an immutable environment?

A. By disabling remote logins

B. By implementing event-driven security

C. By leveraging serverless computing if offered by the provider

D. By increasing the frequency of vulnerability assessments

6. Which of the following CI/CD statements is false?

A. Security tests can be automated.

B. A CI/CD system can automatically generate audit logs.

C. A CI/CD system replaces the current change management processes.

D. A CI/CD pipeline leverages a continuous integration server.

7. How does penetration testing change as a result of the cloud?

A. The penetration tester must understand the various provider services that may be part of the application.

B. In most cases, server instances used to run applications will have customized kernels, which will not be understood by anyone except the provider.

C. Because of the nature of virtual networking, penetration tests must be performed by the cloud provider.

D. Penetration testing is not possible with containers, so many pentest results will be inconclusive.

8. During which phase of the SSDLC should threat modeling be performed by customers?

A. Design

B. Development

C. Deployment

D. Operations

9. During which phase of the SSDLC should penetration testing first be performed by customers?

A. Design

B. Development

C. Deployment

D. Operations

10. What is event-driven security?

A. When a provider will shut down a service for customers in the event of an attack being detected

B. Automating a response in the event of a notification, as established by the provider

C. Automating response in the event of a notification, as established by the customer

D. Automatic notification to a system administrator of an action being performed

Answers

1. D. Security team members should take both vendor-neutral training (such as CCSK) and provider-specific training (these are also recommended for developers and operations staff). Training on development and deployment tools is not listed as required for security team members, only for operations and development staff.

2. C. Remember that DevOps is a culture, not a tool or technology (although a continuous integration service is a key component of the CI/CD pipeline that will be leveraged by DevOps). Understanding the existing corporate culture and getting leadership buy-in should be Tristan’s first course of action in implementing DevOps in his new position. DevOps is not a cloud technology.

3. C. You should always determine whether a vendor must be advised of any assessment in advance. If the provider requires advance notice as part of the terms and conditions of your use, not advising them of an assessment may be considered a breach of contract. Answers A and B are standard procedures as part of an assessment and must be performed regardless of the cloud. Answer D is an interesting one, because you are not even guaranteed to have a server instance to log on to as part of your assessment. The application could be built using PaaS, serverless, or some other technology managed by the provider.

4. B. Running applications in their own cloud accounts can lead to tighter segregation of the management plane. None of the other answers is applicable for this question.

5. A. When leveraging immutable workloads, security can be increased by removing the ability to log in remotely. Any changes must be made centrally in immutable environments. File integrity monitoring can also be implemented to enhance security, as any change made to an immutable instance is likely evidence of a security incident.

6. C. The false statement is that a CI/CD system replaces the current change management processes. In fact, a CI/CD system can integrate with your current change management system. All the other statements are true.

7. A. There is a high probability that applications will leverage various cloud provider services. How communication between these services occurs is critical for the penetration tester, so only testers with experience in a particular platform should perform these tests.

8. A. Threat modeling should be performed as part of the application design phase, before a single line of code is actually written during the development phase.

9. C. Penetration testing should be initially performed as part of the deployment phase of the SSDLC. You need to have an actual application to perform penetration testing against, and this testing should be performed before the application runs in a production environment. Of course, periodic penetration testing is a good thing during the operations phase, but the question asked when it should first be performed.

10. C. Event-driven security is the implementation of automated responses to notifications. These responses are created by the customer, who often leverages some form of API monitoring. When a monitored API call occurs, it triggers a workflow that may include both sending a message to a system administrator and running a script that automatically addresses the affected instance (such as reverting a change, changing virtual firewall rulesets, and so on).