THE OBJECTIVE OF THIS CHAPTER IS TO ACQUAINT THE READER WITH THE FOLLOWING CONCEPTS:

Threats to security, perpetrators, and attack methods

Administrative management controls used to promote security

Implementing data classification schemes to specify appropriate handling of records

Physical security protection methods

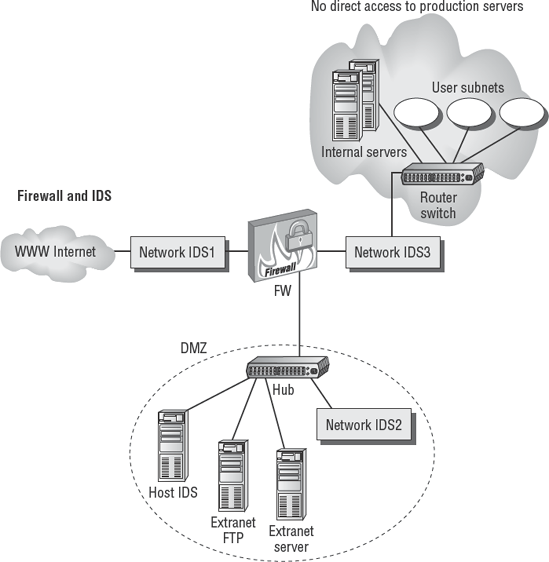

Perimeter security designs, firewalls, and intrusion detection

Logical access controls for identification, authentication, and restriction of users

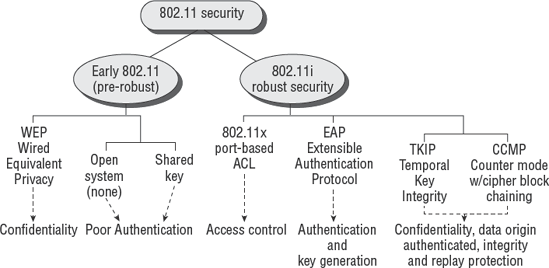

Changes in wireless security, including the robust security network

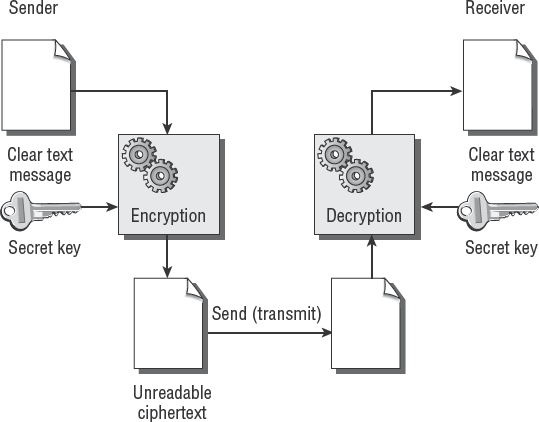

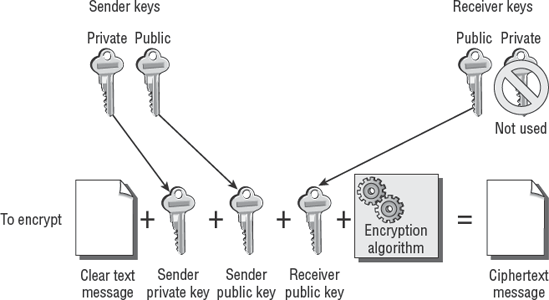

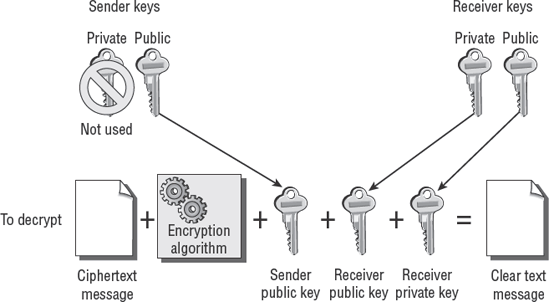

Encryption systems using symmetric and asymmetric public keys

Dealing with malicious software, viruses, worms, and other attacks

Storage, retrieval, transport, and disposition of confidential information

Controls and risks with the use of portable devices

Security testing, monitoring, and assessment tools

The goal of information asset protection is to ensure that adequate safeguards are in use to store, access, transport, and ultimately dispose of confidential information. The auditor must understand how controls promote confidentiality, integrity, and availability.

We will discuss a variety of technical topics related to network security, data encryption, design of physical protection, biometrics, and user authentication. This chapter represents the most significant area of the CISA exam.

Protecting information assets is a significant challenge. The very subject of security conjures up a myriad of responses. This chapter provides you with a solid overview of practical information about security. The unfortunate reality is that concepts of security have not evolved significantly over the last 2,000 years. Let us explain.

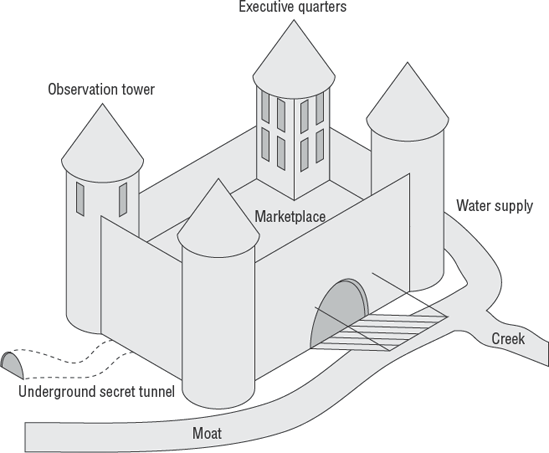

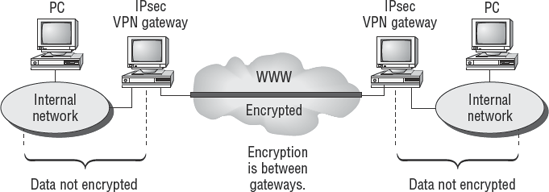

The medieval design of security is still pervasive. Most of your customers will view security as primarily a perimeter defense. History is riddled with failed monuments attesting to the folly of overreliance on perimeter defenses. Consider the castle walls to be equivalent to the office walls of the client's organization. Fresh water from the creek is analogous to our modern-day utilities. The castle observation towers provide visibility into internal affairs and awareness of outside threats; the observation tower is functionally equivalent to network management and intrusion detection. A fortress drawbridge serves the same function as the network firewall, allowing persons we trust to enter our organization. The castle courtyard serves as the marketplace or intranet. This is where our vendors, staff, and clients interact. During medieval times, it was necessary for our emissaries to enter and exit the castle fortress in secret. Confidential access was accomplished via a secret tunnel. Our modern-day equivalent to the secret tunnel is a virtual private network (VPN). Consider these thoughts for a moment while you look at Figure 7.1, concerning the medieval defensive design.

It is possible that security has actually regressed. In medieval times, royalty would use armed guards as an escort when visiting trading partners. In the modern world, the princess is given a notebook computer, PDA, cell phone, and airline ticket with instructions to check in later. Where is the security now?

Medieval castles fell as a result of infiltration, betrayal, loss of utilities such as fresh water, and brute force attacks against the fortress walls. This example should make it perfectly clear why internal controls need improvement. The only viable defensive strategy uses multiple layers of security combined with constant vigilance by management. Anything less is just another castle waiting to fall.

Let's take a quick look at some examples of computer crime and threats to the information assets.

There is nothing new about the threats facing organizations. History shows that these threats and crimes date back almost 4,000 years (over 130 generations). Therefore, none of these should be a surprise. Let's quickly review the threats and crimes that must be mitigated with administrative, physical, and technical controls:

- Theft

The theft of information, designs, plans, and customer lists could be catastrophic to an organization. Consider the controls in place to prevent theft of money or embezzlement. Have equivalent controls in place to prevent the theft of valuable intellectual property.

- Fraud

Misrepresentation to gain an advantage is the definition of fraud. Electronic records may be subject to remote manipulation for the purpose of deceit, suppression, or unfair profit. Fraud may occur with or without the computer. Variations of fraud include using false pretenses, also known as pretexting, for any purpose of deceit or misrepresentation.

- Sabotage

Sabotage is defined as willful and malicious destruction of an employer's property, often during a labor dispute or to cause malicious interference with normal operations.

- Blackmail

Blackmail is the unlawful demand of money or property under threat of harm. Examples include threats to injure property, to accuse the victim of a crime, or to expose disgraceful defects. Blackmail is commonly referred to as extortion.

- Industrial espionage

The world is full of competitors and spies. Espionage is the crime of spying, by individuals or governments, with the intent to gather, transmit, or release information to the advantage of any foreign organization. It's not uncommon for governments to eavesdrop on the communications of foreign companies. The purpose is to uncover business secrets to share with companies in their own country, with the intention of stealing any perceived advancements in position or technology. Telecommunications traveling through a country are subject to legal eavesdropping by that country's government. Additional care must be taken to keep secrets out of the hands of a competitor.

- Unauthorized disclosure

Unauthorized disclosure is the release of information without permission. The purpose may be fraud or sabotage. For example, unauthorized disclosure of trade secrets or product defects may cause substantial, irreversible damage. The unauthorized disclosure of client records would violate privacy laws, not to mention reveal details that would be valuable to a competitor.

- Loss of credibility

Loss of credibility is the damage to an organization's image, brand, or executive management. This can severely impact revenue and the organization's ability to continue. Fraud, sabotage, blackmail, and unauthorized disclosure may be used to destroy credibility.

- Loss of proprietary information

The mishandling of information can result in the loss of trade secrets. Valuable information concerning system designs, future marketing plans, and corporate formulas could be released without any method of recovering the data. Once a secret is out, there is no way to make the information secret again.

- Legal repercussions

The breach of control or loss of an asset can create a situation of undesirable attention. Privacy concerns have created new requirements for public disclosure following a breach. Without a doubt, the last thing an organization needs is increased interest from a government regulator. Stockholders and customers may have grounds for subsequent legal action in alleging negligence or misconduct, depending on the situation.

According to the U.S. Federal Bureau of Investigation (FBI), the top three sources of loss in 2005–2006 were virus attacks, unauthorized access, and theft of proprietary information. Unauthorized access and theft of proprietary information show a trend of dramatic increase. So, the auditor may ask, who is doing this?

There is one fundamental difference between a victim and a perpetrator: the victim did not act with malice. The perpetrators of crime may be casual or sophisticated. Their motive may be financial, political, thrill seeking, or a personal grudge against the organization. The damage is usually the same regardless of the perpetrator's background or motive. A common trait is that a perpetrator will have the time, access, or skills necessary to commit the offense.

Today's computer criminal does not require advanced skills, although such skills would help. A person with malicious intent needs little more than access to launch an attack. For this reason, strong access controls are mandatory. The FBI reported that since 2005 the number of internal attacks has been approximately equal to the number of external attacks. So, who is the attacker?

The term hacker has a double meaning. The honorable interpretation of hacker refers to a computer programmer who is able to create usable computer programs where none previously existed. In this Study Guide, we refer to the dishonorable interpretation of a hacker: an undesirable criminal. The criminal hacker focuses on a desire to break in, take over, and damage or discredit legitimate computer processing. The first goal of hacking is to exceed the authorized level of system privileges. This is why it is necessary to monitor systems and take swift action against any individual who attempts to gain a higher level of access. Hackers may be internal or external to the organization. Attempts to gain unauthorized access from within the organization should be treated with the highest level of severity, including immediate termination of employment.

The term cracker is a variation of hacker, by analogy to a safecracker. Some individuals use the term cracker in an attempt to distinguish criminals from the honorable computer programmer definition of hacker. In practice, the terms criminal cracker and criminal hacker are used interchangeably. Crackers attempt to illegally or unethically break into a system without authorization.

A number of specialized programs exist for the purpose of bypassing security controls. Many hacker tools began as well-intentioned tools for system administration. The argument would be the same if we were discussing a carpenter's hammer: used for the right purpose, it is a constructive tool, and the same tool is a weapon if used for a nefarious purpose. A script kiddie is an individual who executes computer scripts and programs written by others. The script kiddie's motive is to hack a computer by using someone else's software. Examples include password-cracking programs and automated access utilities. Several years ago, a login utility was created for Microsoft users to get push-button access into a Novell server. This nifty utility was released worldwide before anyone recognized that it bypassed Novell security. The utility was nicknamed Red Button and became immensely popular with script kiddies. Internal controls must be put in place to restrict the possession or use of administration utilities. Violations should be considered severe and dealt with in the same manner as hacker violations.

There is a reason why the FBI report cited the high volume of internal crimes. A person within the organization has more access and opportunity than anyone else. Few persons would have a better understanding of the security posture and weaknesses. In fact, an employee may be in a position of influence to socially engineer coworkers into ignoring safeguards and alert conditions. This is why it is important to monitor internal employee satisfaction. The great medieval fortresses fell by the betrayal of trusted allies.

The term ethical hacker, or white hat, is relatively new in computer security. An ethical hacker is one who is authorized to test computer hacks and attacks with the goal of identifying an organization's weaknesses. Some individuals participate in special training to learn about penetrating computer defenses. This will usually result in one of two outcomes.

In the first outcome, a few of the ethical white-hat technicians will exercise extraordinary restraint and control. The objective of ethical hacking is to exercise hacker techniques only in a highly regimented, totally supervised environment. The white-hat technician will operate from a prewritten test plan, reviewed by internal audit or management oversight. The slightest deviation is grounds for termination. This additional level of control is to protect the organization from error or personal agenda by the white-hat technician.

Tip

Separation of duties requires the white hat (ethical hacker) to operate under the management of internal audit or an equivalent audit department. Forced separation of duties provides evidence that protects both management and the technician. The ethical hacker must not have any operational duties or otherwise be involved in daily IT operations.

The second outcome is that a white-hat technician will direct their own efforts. Some individuals take great pride in their ability to circumvent required controls. These self-directed hacking techniques create an unacceptable level of risk for multiple reasons, including organizational liability. The series of movies about Jason Bourne, The Bourne Identity (2002), The Bourne Supremacy (2004), and The Bourne Ultimatum (2007), illustrates the risk of self-directed activity. After management loses control, there is no way back. These fictional movies are, in part, based on facts from real events.

Note

As professional auditors, we've been engaged on several occasions to determine whether the internal staff has been using hacker techniques and tools without explicit test plans and approval. Proper test documentation requires keystroke-level detail combined with specific steps to capture corresponding evidence. In each event except one, the technician was fired for violating internal controls. Additional controls are necessary when a white-hat technician is employed by the organization. Honest people may be kept honest with proper supervision.

External persons are referred to as third parties. Third parties include visitors, vendors, consultants, maintenance personnel, and the cleaning crew. These individuals may gain access and knowledge of the internal organization.

Note

You would be surprised by how many times auditors have been invited to join the client in a meeting room with internal plans still visible on the whiteboards. The client's careless disregard is obvious by the words important—do not erase emblazoned across the board. You can bet this same organization allows their vendors to work unsupervised. In the evening, the cleaning crew will unlock and open every door on the floor for several hours while vacuuming and emptying waste baskets. We seriously doubt the cleaning crew would challenge a stranger entering the office. In fact, a low-paid cleaning crew may be exercising their own agenda.

The term ignorance is simply defined as the lack of knowledge. An ignorant person may be a party to a crime and not even know it. Even worse, the individual may be committing an offense without realizing the impact of their actions. Management may be guilty of not understanding its current risks and the corresponding regulations. The statement "We/I did not know" is the fastest route to a conviction. Every judge will agree that ignorance of the law is not an excuse. Every manager is expected to research the regulations bearing upon their business practice. There is no legal excuse for ignorance or apathy. Fortunately, ignorance can be cured by training. This is the objective of user training for internal controls. By teaching the purpose of internal security controls, the organization can reduce its overall risk.

Your clients will expect you to have knowledge about the different methods of attacking computers. We will try to take the boredom out of the subject by injecting practical examples. Computer attacks can be implemented with the computer or against a computer. There are basically two types of attacks: passive and active. Let's start with passive attacks.

Passive attacks are characterized by techniques of observation. The intention of a passive attack is to gain additional information before launching an active attack. Three examples of passive attacks are network analysis, traffic analysis, and eavesdropping:

- Network analysis

The computer traffic across a network can be analyzed to create a map of the hosts and routers. Common tools such as HP OpenView or OpenNMS are useful for creating network maps. The objective of network analysis is to create a complete profile of the network infrastructure prior to launching an active attack. Computers transmit large numbers of requests that other computers on the network will observe. Simple maps can be created with no more than the observed traffic or the responses to a series of ping commands. The network ping command provides a simple communications test between two devices: it sends an ICMP echo request, also known as a ping, and waits for the echo reply. The concept of creating maps by using network analysis is commonly referred to as painting or footprinting.
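The mapping idea above can be illustrated with a small Python sketch. It assumes the observer has already recorded which pairs of addresses were seen talking to each other (the `build_network_map` helper and the sample addresses are hypothetical); it simply turns those passive observations into a host-to-peers map, which is the raw material of a network map.

```python
from collections import defaultdict

def build_network_map(observed_flows):
    """Build an undirected host adjacency map from passively observed
    (source, destination) address pairs."""
    network_map = defaultdict(set)
    for src, dst in observed_flows:
        # Record the link in both directions: seeing traffic either way
        # tells the observer the two hosts can reach each other.
        network_map[src].add(dst)
        network_map[dst].add(src)
    # Return plain, sorted lists so the map is easy to print or compare.
    return {host: sorted(peers) for host, peers in network_map.items()}

if __name__ == "__main__":
    # Hypothetical flows an observer might record on a shared segment.
    flows = [
        ("10.0.0.5", "10.0.0.1"),
        ("10.0.0.7", "10.0.0.1"),
        ("10.0.0.5", "10.0.0.20"),
    ]
    for host, peers in sorted(build_network_map(flows).items()):
        print(host, "->", ", ".join(peers))
```

A host that appears as a peer of nearly every other address (10.0.0.1 in the sample) is a natural candidate for a router or gateway, which is exactly the kind of clue an attacker gathers before an active attack.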

- Host traffic analysis

Traffic analysis is used to identify systems of particular interest. The communication between host computers can be monitored by the activity level and number of service requests. Host traffic analysis is an easy method used to identify servers on the network.
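As a rough sketch of host traffic analysis, assuming the observer has a list of (client, destination) service requests, the hosts contacted by the most distinct clients are the likely servers. The function name and sample data are hypothetical; note that no packet contents are needed, only who talks to whom.

```python
from collections import Counter

def rank_likely_servers(observed_requests, top_n=3):
    """Rank destination hosts by the number of distinct clients that sent
    them service requests; heavily contacted hosts are likely servers."""
    clients_per_host = {}
    for client, destination in observed_requests:
        clients_per_host.setdefault(destination, set()).add(client)
    counts = Counter({host: len(clients) for host, clients in clients_per_host.items()})
    # most_common returns (host, distinct_client_count) pairs, highest first.
    return counts.most_common(top_n)

if __name__ == "__main__":
    requests = [
        ("10.0.0.5", "10.0.0.40"),
        ("10.0.0.6", "10.0.0.40"),
        ("10.0.0.7", "10.0.0.40"),
        ("10.0.0.5", "10.0.0.41"),
    ]
    print(rank_likely_servers(requests))
```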

Specific details about the host computer can be determined by using a fingerprinting tool such as Nmap. Nmap is active software: it sends a series of specially crafted probes and compares the responses against a database of known operating system types and versions, because each operating system answers these probes in its own characteristic way. For example, a Unix system will normally not respond to a NetBIOS name service request on UDP port 137, whereas a computer running Microsoft Windows will answer. The exact operating system of the computer can usually be identified with only seven or eight simple service requests. Host traffic analysis will provide clues about a system even if all other communication traffic is encrypted. This is an excellent tool for tracking down a rogue IP address. The Nmap utility indicates whether the destination address is a Unix computer, Macintosh computer, computer running Windows, or something else, such as an HP printer. This fingerprinting technique is also popular with hackers for the same reason.
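Nmap's real OS detection is far more elaborate than anything shown here. As a much simpler illustration of the underlying idea, that different operating systems leave different traces in their traffic, the sketch below uses a well-known rough heuristic: many Unix-like systems start outgoing packets with a time-to-live (TTL) of 64, Windows with 128, and many network devices with 255, and each router hop decrements the value by one. The table and function name are assumptions for illustration only; defaults can be changed by an administrator, so the guess is easily fooled.

```python
# Commonly observed default initial TTL values (a rough heuristic, not a rule).
DEFAULT_INITIAL_TTLS = [
    (64, "Unix-like (for example, Linux or macOS)"),
    (128, "Microsoft Windows"),
    (255, "Network device or other"),
]

def guess_os_from_ttl(observed_ttl):
    """Guess an OS family and hop count from the TTL of a received packet.

    Assumes the sender used one of the common defaults above and that the
    smallest default >= the observed value is the one it started from.
    """
    if not 1 <= observed_ttl <= 255:
        raise ValueError("TTL must be between 1 and 255")
    for initial_ttl, family in DEFAULT_INITIAL_TTLS:
        if observed_ttl <= initial_ttl:
            hops = initial_ttl - observed_ttl  # each router subtracts one
            return family, hops
    return "Unknown", None  # not reached, since 255 is the largest TTL

if __name__ == "__main__":
    for ttl in (57, 118, 250):
        print(ttl, guess_os_from_ttl(ttl))
```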

- Eavesdropping

Eavesdropping is the traditional method of spying with the intent to gather information. The term originated from a person spying on others while listening under the roof eaves of a house. Computer network analysis is a type of eavesdropping. Other methods include capturing a hidden copy of files or copying messages as they traverse the network. Email messages and instant messaging are notoriously vulnerable to eavesdropping because of their insecure design. Computer login IDs, passwords, and user keystrokes can be captured by using eavesdropping tools. Encrypted messages can be captured by eavesdropping with the intention of breaking the encryption at a later date. The message can be read later, after the encryption is compromised. Eavesdropping helped the Allies crack the secret code of radio messages sent using the German Enigma machine in World War II. Network sniffers are excellent tools for capturing communications traveling across the network.
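To see why cleartext login traffic is so exposed, consider a captured FTP control session, in which the `USER` and `PASS` commands travel unencrypted. The sketch below assumes the capture has already been reduced to one protocol line per list element (the helper name and sample transcript are hypothetical) and shows how little effort it takes to lift credentials from it. An auditor can use the same reasoning to justify replacing such protocols with encrypted alternatives.

```python
def extract_ftp_credentials(captured_lines):
    """Return (user, password) pairs seen in a cleartext FTP control session."""
    credentials = []
    current_user = None
    for line in captured_lines:
        # FTP commands are "VERB argument"; server replies start with a number.
        command, _, argument = line.strip().partition(" ")
        if command.upper() == "USER":
            current_user = argument
        elif command.upper() == "PASS" and current_user is not None:
            credentials.append((current_user, argument))
            current_user = None
    return credentials

if __name__ == "__main__":
    session = [
        "220 Service ready",
        "USER alice",
        "331 Password required",
        "PASS s3cret",
        "230 Logged in",
    ]
    print(extract_ftp_credentials(session))
```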

Passive attacks tend to be relatively invisible, whereas active attacks are easier to detect. The attacker will proceed to execute an active attack after obtaining sufficient background information. The active attack is designed to execute an act of theft or to cause a disruption in normal computer processing. Following is a list of active attacks:

- Social engineering

Criminals can trick an individual into cooperating by using a technique known as social engineering. The social engineer will fraudulently present themselves as a person of authority or someone in need of assistance. The social engineer's story will be woven with tiny bits of truth. All social engineers are opportunists who gain access by asking for it. For example, the social engineer may pretend to be a contractor or employee sent to work on a problem. The social engineer will play upon the victim's natural desire to help.

- Phishing

A newer social engineering technique called phishing (pronounced fishing) is now in use. The scheme uses fake emails sent to unsuspecting victims, which contain a link to the criminal's counterfeit website. Anyone can copy the images and format of a legitimate website by using an Internet browser. A phishing criminal copies legitimate web pages into a fake email or onto a fake website. The message tells the unsuspecting victim that it is necessary to enter personal details such as a U.S. Social Security number, credit card number, bank account information, or online user ID and password. Phishing attacks can also be used to install spyware on unprotected computers. Many phishing attacks can be avoided through user education.
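One thing user education teaches is to compare the address a link displays with the address it actually opens. The Python sketch below automates that single check, assuming the visible link text and the underlying href have already been extracted from the message. The function name is hypothetical and the sample domains use reserved example names; real mail filters combine many more signals than this.

```python
from urllib.parse import urlparse

def looks_like_phishing_link(display_text, href):
    """Flag a link whose visible text names a different host than its target."""
    text = display_text.strip()
    if "." not in text or " " in text:
        return False  # visible text is not a URL or domain, nothing to compare
    if "://" not in text:
        text = "http://" + text  # let urlparse find the host in a bare domain
    shown_host = (urlparse(text).hostname or "").lower()
    actual_host = (urlparse(href).hostname or "").lower()
    # Accept the shown host itself or any of its subdomains.
    same_site = actual_host == shown_host or actual_host.endswith("." + shown_host)
    return not same_site

if __name__ == "__main__":
    print(looks_like_phishing_link("www.example-bank.com", "http://203.0.113.9/login"))
    print(looks_like_phishing_link("example.com", "https://secure.example.com/account"))
```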

- Dumpster diving

Attackers will frequently resort to rummaging through the trash for discarded information, a practice known as dumpster diving. Dumpster diving is generally legal as long as the individuals are not trespassing. This is the primary reason why proper destruction is mandatory. Most paper records and optical disks are destroyed by shredding.

- Virus

Computer viruses are a type of malicious program designed to self-replicate and spread across multiple computers. The purpose of a computer virus is to disrupt normal processing. A computer virus may commence damage immediately or lie dormant, awaiting a particular circumstance such as the date of April Fools' Day. Viruses attach themselves to other files, including outgoing files, so that they travel with them. The first malicious computer virus came about in the 1980s during prototype testing for self-replicating software. Antivirus software will stop known attacks by matching files against known patterns of virus code (signature detection) or by appending an antivirus flag to the end of a file (inoculation, or immunization). New virus attacks can be detected if any program tries to append data after the antivirus flag. Not all antivirus software works by signature scanning; it can also use heuristic scanning, integrity checking, or activity blocking. These are all valid virus detection methods.
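Two of the detection methods named above, signature scanning and integrity checking, can be sketched in a few lines of Python. The signature table is hypothetical and contains a made-up byte pattern, not a real virus signature. Integrity checking here uses SHA-256 from the standard `hashlib` module: record a digest while the file is known to be clean, then treat any later mismatch as a change worth investigating.

```python
import hashlib

# Hypothetical signature database: name -> byte pattern known to occur in a virus.
SIGNATURES = {
    "Example.TestPattern": b"\xde\xad\xbe\xef",
}

def signature_scan(data, signatures=SIGNATURES):
    """Signature detection: return the names of all known patterns found in data."""
    return [name for name, pattern in signatures.items() if pattern in data]

def fingerprint(data):
    """Integrity checking: compute a digest to store while the file is clean."""
    return hashlib.sha256(data).hexdigest()

def integrity_changed(baseline_digest, data):
    """Report whether the file no longer matches its recorded baseline."""
    return fingerprint(data) != baseline_digest

if __name__ == "__main__":
    program = b"original program bytes"
    baseline = fingerprint(program)
    infected = program + b"\xde\xad\xbe\xef"
    print(signature_scan(infected), integrity_changed(baseline, infected))
```

The trade-off is visible even at this scale: signature scanning names the threat but only finds patterns already in its database, while integrity checking can flag an unknown virus but cannot say what changed the file.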

- Worm

Computer worms are destructive and able to travel freely across the computer network by exploiting known system vulnerabilities. Worms are independent and will actively seek new systems on their own.

- Logic bomb

A logic bomb is dormant program code that waits for a trigger event to cause detonation. Unlike a virus or worm, logic bombs do not travel. The logic bomb remains in one location, awaiting detonation. Logic bombs are difficult to detect. Some logic bombs are intentional, and others are the unintentional result of poor programming. Intentional logic bombs can be set to detonate after the perpetrator is gone.

- Trapdoor

Computer programmers frequently install a shortcut, also known as a trapdoor, for use during software testing. The trapdoor is a hidden access point within the computer software. A competent programmer will remove the majority of trapdoors before releasing a production version of the program. However, several vendors routinely leave a trapdoor in a computer program to facilitate user support. The commercial version of PGP encryption software contained a trapdoor designed to recover lost encryption keys and to allow the government to read the encrypted files, if necessary. Trapdoors compromise access controls and are considered dangerous.

- Root kit

One of the most threatening attacks is the secret compromise of the operating system kernel. Attackers embed a root kit into downloadable software. This malicious software will subvert security settings by linking itself directly into the kernel processes, system memory, address registers, and swap space. Root kits operate in stealth to hide their presence. Hackers designed root kits to never display their execution as running applications. The system resource monitor does not show any activity related to the presence of the root kit. Once installed, the hacker has control over the system. The computer is completely compromised.

- Brute force attack

Brute force is the use of extreme effort to overcome an obstacle. For example, an amateur could discover the combination to a safe by dialing all of the 63,000 possible combinations. On average, the actual combination will be found after trying about half of the possible combinations, and there is roughly a one-in-three chance of finding it within the first third. Brute force attacks are frequently used against user logon IDs and passwords. In one particular attack, known as a dictionary attack, all of the encrypted (hashed) computer passwords are compared against every word in a language dictionary, encrypted the same way. After a match is identified, the attacker uses the unencrypted word that produced the matching password. This is why it is important to use passwords that do not appear in any language dictionary.
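Both ideas in this paragraph can be checked with a short Python sketch. `expected_tries` gives the average number of guesses for an exhaustive search when every combination is equally likely, (N + 1) / 2, so about 31,500 for the safe example. `dictionary_attack` hashes each word once and looks up stored password hashes, assuming (for brevity only) that the system stored unsalted SHA-256 hashes; the accounts and word list are hypothetical.

```python
import hashlib

def sha256_hex(word):
    return hashlib.sha256(word.encode("utf-8")).hexdigest()

def dictionary_attack(stored_hashes, wordlist):
    """Return {user: recovered_password} for every stored hash that equals
    the hash of some dictionary word."""
    # Hash every dictionary word once, then look each stored hash up.
    lookup = {sha256_hex(word): word for word in wordlist}
    return {user: lookup[h] for user, h in stored_hashes.items() if h in lookup}

def expected_tries(keyspace_size):
    """Average guesses to find one uniformly chosen secret by trying each
    possibility once, in any fixed order."""
    return (keyspace_size + 1) / 2

if __name__ == "__main__":
    stored = {"alice": sha256_hex("sunshine"), "bob": sha256_hex("x9$Lq!vT2")}
    print(dictionary_attack(stored, ["password", "sunshine", "dragon"]))
    print(expected_tries(63_000))
```

Only the dictionary word falls; the random-looking password survives. Production systems should store salted, deliberately slow password hashes, which makes this kind of precomputed lookup far more expensive; the unsalted hash here only keeps the example short.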

- Denial of service (DoS)

Attackers can disable the computer by rendering legitimate use impossible. The objective is to remotely shut down service by overloading the system and thereby prevent the normal user from processing anything on the computer.

- Distributed denial of service (DDoS)

Denial of service has evolved to use multiple systems for targeted attacks against another computer to force it to crash. One form of distributed attack is known as a reflector attack. Your own computer may be used by the hacker to launch remote attacks against someone else. Hackers start the attack from unrelated systems that they have already compromised. The attacking computers and the target are drawn into the battle, similar in concept to starting a vicious rumor between two strangers that leads them to fight each other. The hackers sit safely out of the way while this battle rages.

- IP fragmentation attack

One of the common Internet attack techniques is to send a series of fragmented service requests to a computer through a firewall. The technique is successful if the firewall fails to examine each packet. For this reason, firewalls are configured to discard IP fragments.
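The sketch below expresses the firewall policy described above in Python, plus the kind of check a firewall that reassembles fragments (instead of discarding them) would need. It assumes a simplified fragment record; the class and function names are hypothetical. As in the real IPv4 header, a packet is a fragment if its More Fragments (MF) flag is set or its fragment offset is non-zero, and the offset field counts 8-byte units.

```python
from dataclasses import dataclass

@dataclass
class Fragment:
    offset_units: int     # Fragment Offset field, counted in 8-byte units
    length: int           # bytes of payload carried in this fragment
    more_fragments: bool  # MF flag

def is_fragment(frag):
    """A packet is a fragment if MF is set or its offset is non-zero."""
    return frag.more_fragments or frag.offset_units > 0

def firewall_decision(frag, drop_fragments=True):
    """Discard fragments rather than let them pass unexamined."""
    if drop_fragments and is_fragment(frag):
        return "drop"
    return "inspect"

def fragments_overlap(fragments):
    """For firewalls that reassemble instead: detect overlapping fragments,
    a classic way to slip data past a filter or crash the receiver."""
    end_of_previous = 0
    for frag in sorted(fragments, key=lambda f: f.offset_units):
        start = frag.offset_units * 8
        if start < end_of_previous:
            return True
        end_of_previous = start + frag.length
    return False

if __name__ == "__main__":
    first = Fragment(offset_units=0, length=1480, more_fragments=True)
    second = Fragment(offset_units=185, length=1480, more_fragments=False)
    print(firewall_decision(first), fragments_overlap([first, second]))
```

Dropping every fragment is simple and safe but also blocks legitimate fragmented traffic; reassembling and validating is more permissive but requires the firewall to do this bookkeeping correctly.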

- Crash-restart

Crash-restart is another attack technique. An attacker loads malicious software onto a computer or reconfigures security settings to the attacker's advantage. Then the attacker crashes the system, allowing the computer to automatically restart (reboot). The attacker can take control of the system after it restarts with the new configuration. The purpose is to install a backdoor for the attacker.

- Maintenance accounts

Most computer systems are configured with special maintenance accounts. These maintenance accounts may be part of the default settings or created for system support. An example is the user account named DBA for database administrator, or tape for a tape backup device. All maintenance accounts should be carefully controlled. It is advisable to disable the default maintenance accounts on a system. The security manager may find an advantage in monitoring access attempts against the default accounts. Any attempted access may indicate the beginnings of an attack.
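Monitoring access attempts against default accounts can be as simple as filtering authentication logs against a watchlist. The Python sketch below assumes a hypothetical log format (`LOGIN_FAILURE user=DBA src=...`); real systems each have their own formats, so the regular expression and the account names in the watchlist are illustrative assumptions to adapt.

```python
import re

# Hypothetical watchlist of default or maintenance account names.
DEFAULT_ACCOUNTS = {"dba", "tape", "admin", "guest"}

# Hypothetical log format: "<timestamp> LOGIN_<RESULT> user=<name> src=<address>"
LOG_PATTERN = re.compile(
    r"LOGIN_(?P<result>SUCCESS|FAILURE) user=(?P<user>\S+) src=(?P<src>\S+)"
)

def default_account_alerts(log_lines, watchlist=DEFAULT_ACCOUNTS):
    """Return (user, source, result) for every login attempt on a watched account."""
    alerts = []
    for line in log_lines:
        match = LOG_PATTERN.search(line)
        if match and match["user"].lower() in watchlist:
            alerts.append((match["user"], match["src"], match["result"]))
    return alerts

if __name__ == "__main__":
    logs = [
        "2024-05-01T02:13:09 LOGIN_FAILURE user=DBA src=198.51.100.23",
        "2024-05-01T08:00:00 LOGIN_SUCCESS user=jsmith src=10.0.0.8",
        "2024-05-01T02:13:15 LOGIN_FAILURE user=tape src=198.51.100.23",
    ]
    for alert in default_account_alerts(logs):
        print(alert)
```

Because default accounts should be disabled, any hit at all is suspicious, and repeated hits from one source address, as in the sample, suggest the beginnings of an attack.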

- Remote access attacks

Most attackers will attempt to exploit remote access. The goal of the attack is often personal satisfaction or political gain. There is less personal risk involved in gaining remote access. The common types of remote access attacks are as follows:

- War dialing

The attacker uses an automated modem-dialing utility to launch a brute force attack against a list of phone numbers. The attack generates a list of telephone numbers that were answered by a computer modem. The next step of the attack is to break in through an unsecured modem. This is why it's necessary for modems to reject inbound calls or to be protected by a telephone firewall such as TeleWall by SecureLogix. Remote access servers (RASs) provide better authentication than a modem. RAS logging capability can be used to identify attacks, if properly configured. Using RAS modem pools combined with telephone-firewall products such as TeleWall will reduce the chance of an attacker making a successful penetration.

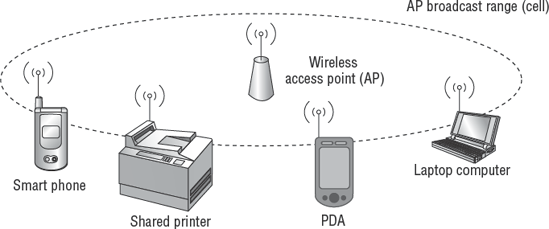

- War driving/walking

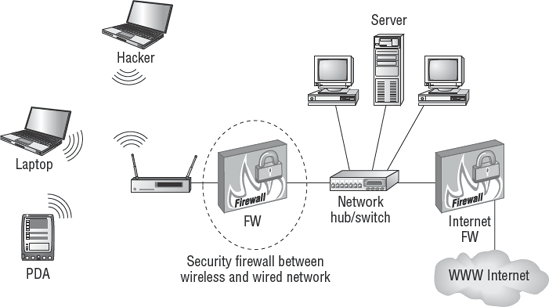

Wireless access is known to be insecure. Wireless manufacturers have seriously compromised security in an effort to improve Plug and Play capabilities for users. This trade-off, fewer user support issues in exchange for less security, gives casual attackers the opportunity to gain remote access by walking or driving past wireless network transmitters. Earlier attackers have used chalk symbols to mark the unsuspecting organization's property, showing other attackers that wireless access is available at that location. This marking technique is referred to as war chalking. War chalk maps of insecure access points are available for download on the Internet.

Note

We discuss the latest standards in wireless security later in this chapter. Wired Equivalent Privacy (WEP) and Wi-Fi Protected Access (WPA) have been officially classified as insecure since April 2005 because of implementation failures. Existing equipment using WEP/WPA is designated as insecure. The current standard is IEEE 802.11i, known as the Robust Security Network (RSN).

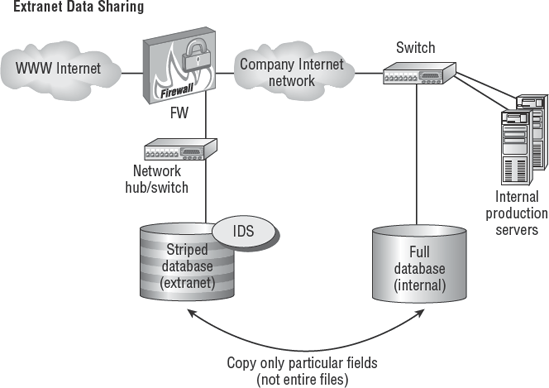

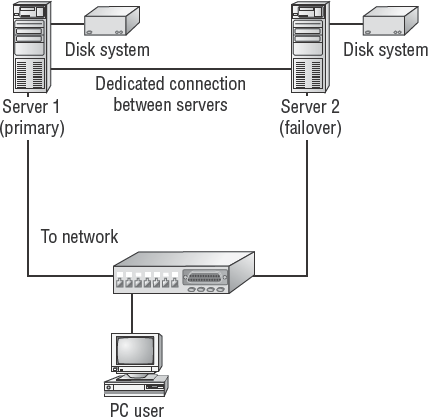

- Cross-network connectivity

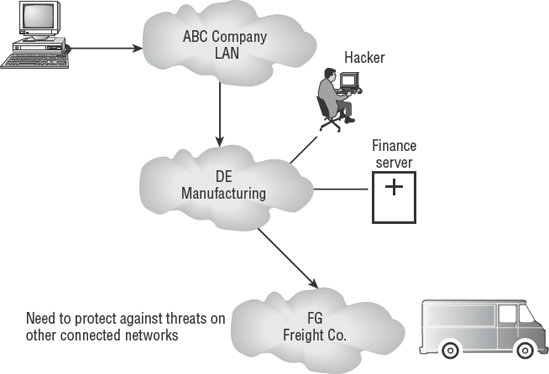

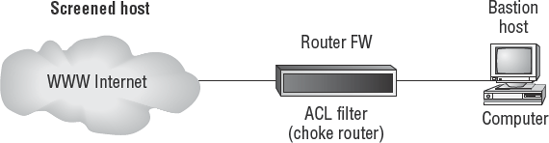

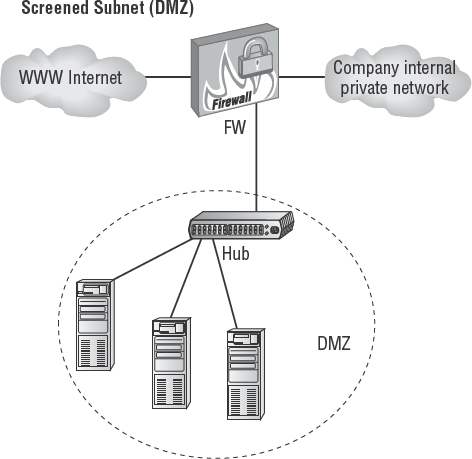

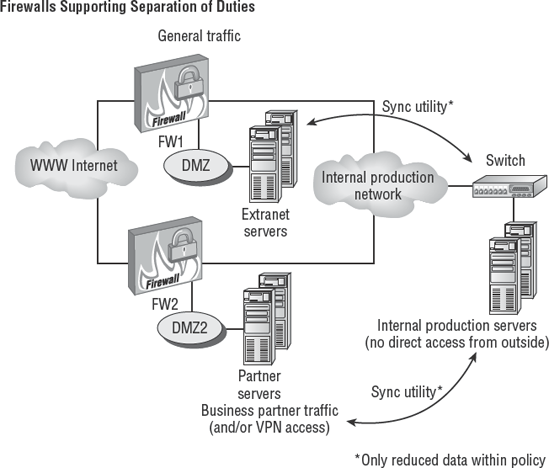

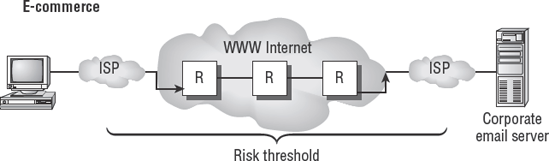

Interconnected networks are effective in business. The connectivity across networks provides an avenue for more-efficient processing by the user. Computer networks are cross-connected internally, and even across the Internet. It is not uncommon for a business partner to have special access. All of these connections can be exploited by an attacker. Business partner connections can provide an opportunity for the attacker to remotely compromise the systems of a partner organization with little chance of detection. The purpose of internal and external firewalls is to block attacks. The implementation of internal firewalls is an excellent practice that dates back to the Great Wall of China. Better-run organizations recognize the need. Since 2005, the deployment of internal firewalls has accelerated. Figure 7.2 shows the threat of attackers entering through business partner connections.
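A business partner connection is safest when the firewall between the organizations permits only what the partnership requires and denies everything else. The Python sketch below models that default-deny idea with the standard `ipaddress` module; the single rule, the partner network (a reserved documentation range), and the internal host are hypothetical.

```python
import ipaddress

# Hypothetical rule set: each entry permits one partner network to reach one
# internal host on one TCP port. Everything else is denied.
PARTNER_RULES = [
    (ipaddress.ip_network("203.0.113.0/24"), ipaddress.ip_address("10.1.2.10"), 443),
]

def partner_traffic_allowed(src, dst, port, rules=PARTNER_RULES):
    """Return True only if some rule explicitly permits this connection."""
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)
    for partner_net, internal_host, allowed_port in rules:
        if src_ip in partner_net and dst_ip == internal_host and port == allowed_port:
            return True
    return False  # default deny: no matching rule means the connection is blocked

if __name__ == "__main__":
    print(partner_traffic_allowed("203.0.113.50", "10.1.2.10", 443))  # the agreed service
    print(partner_traffic_allowed("203.0.113.50", "10.1.2.11", 445))  # anything else
```

With a default-deny rule set, an attacker who compromises the partner can reach only the one agreed service, not the rest of the internal network.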

- Source routing

As stated earlier, useful system administration tools can be turned into weapons. In the early days of networking, it was necessary to send data across a network without any reliance on the network configuration itself. Therefore, a special IP option known as source routing was developed. Source routing ignores the configuration of the network routers and follows the path designated by the sender (the source). Source routing is a magnificent diagnostic tool for reaching remote networks. As you can imagine, source routing is also popular with hackers, because it allows a hacker to bypass the routing configurations used for firewall security. For this reason, every firewall and most routers must be configured to disable source routing.
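In IPv4, source routing is carried in the header options as Loose Source and Record Route (option type 131) or Strict Source and Record Route (option type 137). The Python sketch below walks the options bytes of a packet and reports whether either is present, which is the test a firewall applies before discarding the packet. It assumes the options have already been sliced out of the header (the bytes after the fixed 20-byte portion); the function name and sample bytes are hypothetical.

```python
LOOSE_SOURCE_ROUTE = 131   # LSRR option type
STRICT_SOURCE_ROUTE = 137  # SSRR option type
END_OF_OPTIONS = 0
NO_OPERATION = 1

def has_source_route(options: bytes) -> bool:
    """Return True if the IPv4 options contain a source-routing option."""
    i = 0
    while i < len(options):
        option_type = options[i]
        if option_type == END_OF_OPTIONS:
            break
        if option_type == NO_OPERATION:  # single-byte padding option
            i += 1
            continue
        if option_type in (LOOSE_SOURCE_ROUTE, STRICT_SOURCE_ROUTE):
            return True
        # Other options carry a length byte covering type, length, and data.
        if i + 1 >= len(options) or options[i + 1] < 2:
            break  # malformed length; stop parsing (a firewall might drop it)
        i += options[i + 1]
    return False

if __name__ == "__main__":
    lsrr = bytes([1, 131, 7, 4, 192, 0, 2, 1])  # NOP, then LSRR via 192.0.2.1
    record_route = bytes([7, 7, 4, 0, 0, 0, 0])  # Record Route only
    print(has_source_route(lsrr), has_source_route(record_route))
```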

- Salami technique

The salami technique is used for the commission of financial crimes. The key is to make each alteration so insignificant that in any single case it would go completely unnoticed. For example, a bank employee inserts a program into the bank's servers that deducts a small amount of money from the account of every customer. No single account holder is likely to notice this unauthorized debit, but the bank employee will make a sizable amount of money every month.
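The arithmetic behind the salami technique is what makes it dangerous. Assuming, purely for illustration, that 200,000 accounts are each skimmed by $0.05, the employee collects $10,000 a month while no customer sees more than a nickel. The Python sketch below shows that calculation and one detective control an auditor might look for: reconciling each account's posted debits against what the published fee schedule allows. The function names, accounts, and figures are hypothetical; `Decimal` is used so that cents are exact.

```python
from decimal import Decimal

def monthly_skim(account_count, amount_per_account):
    """Total taken when every account is debited the same small amount."""
    return account_count * amount_per_account

def reconcile(expected_debits, posted_debits):
    """Return accounts whose posted debits exceed the fee schedule, plus the
    total unexplained amount across all accounts."""
    unexplained = {}
    for account, posted in posted_debits.items():
        expected = expected_debits.get(account, Decimal("0.00"))
        if posted > expected:
            unexplained[account] = posted - expected
    total = sum(unexplained.values(), Decimal("0.00"))
    return unexplained, total

if __name__ == "__main__":
    print(monthly_skim(200_000, Decimal("0.05")))
    expected = {"A-100": Decimal("2.00"), "A-101": Decimal("2.00")}
    posted = {"A-100": Decimal("2.05"), "A-101": Decimal("2.00")}
    print(reconcile(expected, posted))
```

A nickel per account is invisible to each customer, but the reconciliation total grows with every account, which is why the control works in aggregate where individual review fails.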

- Packet replay

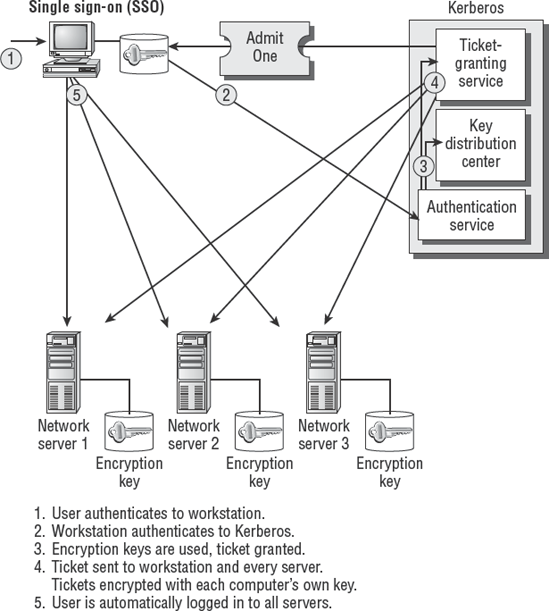

Network communications are sent by transmitting a series of small messages known as packets. The attacker captures a series of legitimate packets by using a capture tool similar to a network sniffer. The packets are retransmitted (replayed) within a short time window to trick a computer system into believing that the sender is a legitimate user. This technique can be combined with a denial of service technique to compromise the system. The legitimate user is knocked off the network by using denial of service, and the attacker attempts to take over communications. This can be effective for hijacking sessions in single sign-on systems such as Kerberos. We discuss Kerberos single sign-on later in this chapter.
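A common defense against packet replay is to make every message carry a timestamp and a one-time value (nonce), then reject anything too old or already seen. This is similar in spirit to Kerberos, which checks authenticator timestamps against an allowed clock skew and keeps a replay cache; the five-minute window below mirrors the commonly used default, but the class itself is a hypothetical sketch, not Kerberos code.

```python
import time

class ReplayGuard:
    """Reject messages whose timestamp falls outside an allowed clock skew, or
    whose (sender, nonce) pair was already accepted inside that window."""

    def __init__(self, max_skew_seconds=300):
        self.max_skew = max_skew_seconds
        self.seen = {}  # (sender, nonce) -> timestamp of the accepted message

    def accept(self, sender, nonce, timestamp, now=None):
        now = time.time() if now is None else now
        # Forget entries older than the window; stale messages fail the skew
        # test anyway, so the cache only needs to cover the window.
        self.seen = {k: t for k, t in self.seen.items() if now - t <= self.max_skew}
        if abs(now - timestamp) > self.max_skew:
            return False  # too old (or too far in the future) to trust
        if (sender, nonce) in self.seen:
            return False  # exact repeat inside the window: likely a replay
        self.seen[(sender, nonce)] = timestamp
        return True

if __name__ == "__main__":
    guard = ReplayGuard()
    print(guard.accept("alice", "n1", timestamp=1000, now=1000))  # original
    print(guard.accept("alice", "n1", timestamp=1000, now=1001))  # replayed copy
```

In practice the timestamp and nonce must also be protected by a signature or message authentication code; otherwise the attacker could simply change them before replaying.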

- Message modification

Message modification can be used to intercept and alter communications. The legitimate message is captured before receipt by the destination. The content of the message, address, or other information is modified. The modified message is then sent to the destination in a fraudulent attempt to appear genuine.

This technique is commonly used in a man-in-the-middle attack: A third party places themselves between the bona fide sender and receiver and pretends to each of them to be the other party. If encryption is used, the middle person tricks the sender into using an encryption key known to the middle person. After reading the message, the middle person re-encrypts it using the key of the true recipient and retransmits it to the intended recipient. The man-in-the-middle is able to eavesdrop without detection. Neither the sender nor the receiver is aware of the security compromise.
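One common control against undetected modification is a message authentication code. The sketch below uses Python's standard `hmac` module with SHA-256; the shared key and messages are hypothetical. The receiver recomputes the tag and rejects the message if it does not match. This does not help if the man-in-the-middle has tricked the sender into using a key the attacker knows, which is why the key exchange itself must be authenticated.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the message with a shared secret key."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare in constant time to detect modification."""
    return hmac.compare_digest(sign(key, message), tag)


key = b"hypothetical-shared-secret"
original = b"Pay $100 to account 1234"
tag = sign(key, original)

tampered = b"Pay $900 to account 9876"
print(verify(key, original, tag))  # True: message unchanged
print(verify(key, tampered, tag))  # False: modification detected
```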

- Email spamming and spoofing

You are probably aware of email spamming. Spamming refers to sending identical messages to a large number of users in a mass mailing. In the United States, the law governing commercial email (the CAN-SPAM Act) allows a business to send mass emails as long as the recipient is informed of the sender's legitimate address and is provided a mechanism to stop receiving future emails.

Email spamming is a common mechanism used in phishing attacks. The term spoofing refers to fraudulently altering the information concerning the sender of email. An example of email spoofing is when an attacker sends a fake notice concerning your eBay auction account. The spoofed email address appears as if it were sent by eBay. Email spamming is illegal in some countries, and email spoofing is prosecuted as criminal fraud and/or electronic wire fraud.
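Spoofed mail often gives itself away when the visible From address does not match where replies or bounces actually go. The following Python sketch uses only the standard `email` package to apply that one heuristic; it is an illustration, not a spoofing detector, and legitimate bulk mail can trip it too. Production mail systems rely on sender-authentication standards such as SPF, DKIM, and DMARC. The domains and function names are hypothetical.

```python
from email import message_from_string
from email.utils import parseaddr


def domain_of(header_value: str) -> str:
    """Extract the lowercase domain from an address header such as From or Reply-To."""
    _, address = parseaddr(header_value)
    return address.rpartition("@")[2].lower()


def sender_mismatch(raw_message: str) -> bool:
    """Heuristic: flag mail whose Reply-To or Return-Path domain differs from From."""
    msg = message_from_string(raw_message)
    from_domain = domain_of(msg.get("From", ""))
    for header in ("Reply-To", "Return-Path"):
        value = msg.get(header)
        if value and domain_of(value) != from_domain:
            return True
    return False


suspicious = (
    'From: "Auction Support" <support@auctions.example>\n'
    "Reply-To: collect@attacker.example\n"
    "Subject: Your account has been suspended\n"
    "\n"
    "Click here to restore access.\n"
)
print(sender_mismatch(suspicious))  # True
```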

A variety of other technical attacks may be launched against a computer. A common attack is to send the computer an impossible request, or series of requests, that cannot be serviced. These requests cause the system to overload its CPU, memory, or communication buffers, and the computer crashes. An example is the old Ping of Death (ping -l 65510), which sent a packet that, once reassembled, exceeded the 65,535-byte maximum size of an IP packet and overflowed the receiving computer's communication buffer.
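The arithmetic shows why this worked. The IPv4 Total Length field is 16 bits, so no packet may exceed 65,535 bytes. The Ping of Death relied on fragmentation: Each fragment is legal on its own, but together they reassemble into something larger. Below is a minimal Python sketch of the size check a patched network stack can apply to each fragment before reassembly, assuming a 20-byte IP header with no options and 1,480-byte fragments (a 1,500-byte Ethernet MTU minus the IP header); the function names are illustrative.

```python
MAX_IP_PACKET = 65_535  # largest value of the 16-bit IPv4 Total Length field
IP_HEADER = 20          # assumes an IPv4 header without options
ICMP_HEADER = 8


def reassembled_size(offset_units: int, fragment_data_len: int) -> int:
    """Size of the packet a fragment implies; Fragment Offset counts 8-byte units."""
    return IP_HEADER + offset_units * 8 + fragment_data_len


def is_oversized(offset_units: int, fragment_data_len: int) -> bool:
    """True if reassembling this fragment would exceed the IPv4 maximum."""
    return reassembled_size(offset_units, fragment_data_len) > MAX_IP_PACKET


# ping -l 65510: 65,510 data bytes + 8-byte ICMP header + 20-byte IP header
print(65_510 + ICMP_HEADER + IP_HEADER)  # 65538, three bytes over the limit

# Sent as 1,480-byte fragments, the last fragment starts at byte 65,120
# (offset 8140 in 8-byte units) and carries the remaining 398 bytes.
print(is_oversized(8140, 398))  # True: a safe stack drops it instead of reassembling
```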

The first step in preventing the loss of information assets is to establish administrative controls. Let's begin the discussion on implementing administrative safeguards.

Throughout this Study Guide, we have discussed the importance of IT governance over internal controls. The first step for a protection strategy is to establish administrative operating rules. Information security management is the foundation of information asset protection. Let's discuss some of the administrative methods used to protect data: information security management, IT security governance, data retention, documenting access paths, and other techniques. We will begin with information security management.

The objective of information security management is to ensure confidentiality, integrity, and availability of computing resources. To accomplish this goal, it is necessary to implement an organizational design that supports these objectives. Let's discuss some of the job roles in information security management:

- Chief security officer

The chief security officer (CSO) is a role developed to grant the highest level of authority to the senior information systems security officer. Unfortunately, this tends to be a position of title more than a position of real corporate influence. The purpose of the CSO position is to define and enforce security policies for the organization.

- Chief privacy officer

New demands for client privacy have created the requirement for a chief privacy officer (CPO). This position is equal to or directly below the chief security officer. The CPO is commonly a position of title rather than genuine corporate authority. The CPO is responsible for protecting confidential information of clients and employees.

- Information systems security manager

The information systems security manager (ISSM) is responsible for the day-to-day process of ensuring compliance for system security. The ISSM follows the directives of the CSO and CPO for policy compliance. The ISSM is supported by a staff of information systems security analysts (ISSA) who work on the individual projects and security problems. An ISSM supervises the information systems security analysts and sets the daily priorities.

The concept of IT governance for security is based on security policies, standards, and procedures. For these administrative controls to be effective, it is necessary to define specific roles, responsibilities, and requirements. Let's imagine that an information security policy and matching standard have been adopted. The next step would be to determine the specific level of controls necessary for each piece of data. Data can be classified into groups based on its value or sensitivity. The data classification process will define the information controls necessary to ensure appropriate confidentiality.

The U.S. federal government uses an information classification program to specify controls over the use of data. High-risk data is classified top secret, and the classifications cascade down to data available for public consumption. Every organization should use an information classification program for its data. International Standard 15489 (ISO 15489) sets forth the requirements to identify, retain, and protect records used in the course of business. Classification is the only method to ensure integrity through proper handling. Let's take a look at the typical classifications used in business:

- Public

Information approved for public consumption. It is important to understand that data classified as public needs to be reviewed and edited to ensure that the correct message is conveyed. Examples of public information include websites, sales brochures, marketing advertisements, press releases, and legal filings. Most information filed at the courthouse is a public record, viewable by anyone.

- Sensitive

There is a particular type of data that needs to be disclosed to certain parties but not to everyone. We refer to this data as sensitive. This data may be a matter of record or legal fact. However, the organization would not want to go about advertising the details. Examples of sensitive information include client lists, product pricing structure, contract terms, vendor lists, and details of outstanding litigation.

- Private, internal use only

The classification of data for internal use only is commonly applied to operating procedures and employment records. The details of operating procedures are usually provided on a need-to-know basis to prevent a person from designing a method for defeating the procedure. Examples of private records include salary data, health-care information, results of background checks, and employee performance reviews.

- Confidential

This is the highest category of general security classification outside the government. It may be subdivided into confidential and highly confidential trade secrets. Confidential data is anything that must not be shared outside of the organization. Examples include buyout negotiations, secret recipes, and specific details about the inner workings of the organization. Confidential data may be exempt from certain types of legal disclosure.

The overall purpose of using an information classification scheme is to ensure proper handling based on the information content and context. Context refers to the usage of information.
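A classification scheme is, at heart, an ordered set of labels with handling rules attached. The Python sketch below encodes the four business levels described above. The ordering (PRIVATE above SENSITIVE) follows the order in which the levels are listed and is an assumption, as are the rule wording and the simple "read at or below your clearance" check. In practice, the data owner specifies the level and the controls for each record.

```python
from enum import IntEnum


class Classification(IntEnum):
    """Business classification levels, ordered from least to most restricted."""
    PUBLIC = 0
    SENSITIVE = 1
    PRIVATE = 2       # internal use only
    CONFIDENTIAL = 3


# Hypothetical handling rules summarizing the descriptions above
HANDLING = {
    Classification.PUBLIC: "Review and approve content before release",
    Classification.SENSITIVE: "Disclose only to the specific parties who need it",
    Classification.PRIVATE: "Internal use on a need-to-know basis",
    Classification.CONFIDENTIAL: "Never share outside the organization",
}


def can_access(clearance: Classification, label: Classification) -> bool:
    """A user may read data labeled at or below the user's clearance."""
    return clearance >= label


print(HANDLING[Classification.CONFIDENTIAL])
print(can_access(Classification.PRIVATE, Classification.CONFIDENTIAL))  # False
```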

Two major risks are present in the absence of an information classification scheme. The first major risk is that information will be mishandled. The second major risk is that without an information classification scheme, all of the organization's data may be subject to scrutiny during legal proceedings. The information classification scheme safeguards knowledge. Failure to implement a records and data classification scheme leads back to our previous paragraph about ignorance and a speedy conviction.

To implement policies, standards, and procedures, it is necessary to identify persons by their authority. Three levels of authority exist in regard to computers and data. The three levels of authority are owner, custodian, and user.

The data owner refers to executives or managers responsible for the data content. The role of the data owner is to do the following:

Assume responsibility for the data content

Specify the information classification level

Specify appropriate controls

Specify acceptable use of the data

Identify users

Appoint the data custodian

As an IS auditor, you will review the decisions made by the data owner to evaluate whether the actions were appropriate.

The data user is the business person who benefits from the computerized data. Data users may be internal or external to the organization. For example, some data is delivered for use across the Internet. The role of the data user includes the following tasks:

Follow standards of acceptable use

Comply with the owner's controls

Maintain confidentiality of the data

Report unauthorized activity

You will evaluate how effectively management communicates its controls to users. The auditor investigates the effectiveness and integration of policies and procedures with the user community. In addition, the auditor determines whether user training has been effectively implemented.

The data custodian is responsible for implementing data storage safeguards and ensuring availability of the data. The custodian's role is to support the business user. If something goes wrong, it is the responsibility of the custodian to deal with this promptly. Sometimes the custodian's role is equivalent to a person holding the bag of snakes at a rattlesnake roundup or the role of a plumber when fixing a clogged drain. The duties of the data custodian include the following:

Implement controls matching information classification

Monitor data security for violations

Administer user access controls

Ensure data integrity through processing controls

Back up data to protect from loss

Be available to resolve any problems

Now we have identified the information classification and the job roles of owner, user, and custodian. The next step is to identify data retention requirements.

Data retention specifies the procedures for storing data, including how long to keep particular data and how the data will be disposed of. All records follow a life cycle similar to the SDLC model in Chapter 5, "Life Cycle Management." The requirements for data retention can be based on the value of the data, its useful life, and legal requirements. For example, financial records are commonly required to be accessible for seven years. Medical records are required to be available indefinitely or at least as long as the patient remains alive. Records regarding the sale or transfer of real property are to be maintained indefinitely, as are many government records.

The purpose of data retention is to specify how long a data record must be preserved. At the end of the preservation period, the data is archived or disposed of. The disposal process frequently involves destruction. We will discuss storage and destruction toward the end of this chapter.
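A retention schedule can be expressed as a lookup from record type to retention period, from which the date a record becomes eligible for archiving or disposal follows. In this hypothetical Python sketch, the seven-year period for financial records and the indefinite period for real-property records come from the examples above. Starting the clock at the record's creation date is an assumption; many schedules start it at a triggering event such as the end of a fiscal year or the closing of an account.

```python
from datetime import date
from typing import Optional

# Hypothetical schedule, in years; None means keep indefinitely
RETENTION_YEARS = {
    "financial": 7,
    "real_property": None,
}


def add_years(start: date, years: int) -> date:
    """Same calendar day N years later; Feb 29 falls back to Feb 28."""
    try:
        return start.replace(year=start.year + years)
    except ValueError:
        return start.replace(year=start.year + years, day=28)


def disposal_date(record_type: str, created: date) -> Optional[date]:
    """Earliest date the record may be archived or destroyed, or None if never."""
    years = RETENTION_YEARS[record_type]
    if years is None:
        return None
    return add_years(created, years)


print(disposal_date("financial", date(2020, 3, 15)))      # 2027-03-15
print(disposal_date("real_property", date(2020, 3, 15)))  # None
```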

Now the authority roles and data retention requirements have been identified. So, the next administrative step is to document the access routes (paths) to reach the data.

It would be extremely difficult to ensure system security without recognizing common access routes. One of the requirements of internal controls is to document all of the known access paths. A physical map is useful. The network administrator or security manager should have a floor plan of the building. The locations of computer systems, wiring closet, and computer room should be marked on the map. Map symbols should indicate the location of every network jack, telephone jack, and modem. The location of physical access doors and locking doors should also be marked on the map. This process would continue until all the access paths have been marked. Even the network firewall and its Internet communication line should appear on the map.

Next, a risk assessment should be performed by using the map of access paths. Hackers can attack the facility via the Internet or from within an unsupervised conference room. Special attention should be given to areas with modem access. Modems provide direct connections that bypass the majority of IT security. Computer firewalls are effective only if the data traffic passes directly through the firewall. A computer firewall cannot protect any system with an independent, direct Internet connection.
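Once the access paths are mapped, the map can be treated as a graph and searched for routes that avoid a control. The Python sketch below uses a hypothetical map in which a dial-in modem connects straight to a server. A breadth-first search lists every route from the Internet to the server that never passes through the firewall, which is exactly the kind of path the risk assessment should flag. Node names are illustrative.

```python
from collections import deque

# Hypothetical access-path map: each key lists the points reachable from it
ACCESS_PATHS = {
    "internet": ["firewall", "modem"],
    "firewall": ["lan"],
    "modem": ["server"],  # direct dial-in line that bypasses the firewall
    "lan": ["server"],
    "server": [],
}


def paths_bypassing(graph, start, target, control):
    """Return every route from start to target that never passes through control."""
    found = []
    queue = deque([[start]])
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            found.append(path)
            continue
        for nxt in graph.get(node, []):
            if nxt != control and nxt not in path:  # skip the control and avoid loops
                queue.append(path + [nxt])
    return found


print(paths_bypassing(ACCESS_PATHS, "internet", "server", "firewall"))
# [['internet', 'modem', 'server']]
```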

The purpose of documenting access paths and performing a risk assessment is to ensure accountability. Management is held responsible for the integrity of record keeping. Guaranteeing integrity of a computer system would be difficult if nobody could guarantee that access restrictions were in place.

The change control process should include oversight for changes affecting the access paths. For example, a change in physical access security may introduce another route to the computer room. Persons entering and leaving through the side door, for example, would have a better opportunity to reach the computer room without detection.

The next step to ensure security is to provide constant monitoring. Physical security systems can be monitored with a combination of video cameras, guards, and alarm systems. Badge access through locked doorways provides physical access control with an audit log. A badge access system can generate a list of every identification badge granted access or denied access through the doorway. Unfortunately, a badge access system will have difficulty ensuring that only one person passed through the doorway at a particular time. A mantrap system of two doorways may be used to prevent multiple persons from entering and exiting at the same time. A mantrap allows one person to enter and requires the door to be closed behind the person. After the first door is closed, a second door can be opened. The mantrap allows only one person to enter and exit at a time.
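The mantrap's interlock rule is simple enough to express as a small state machine. In this Python sketch, a door may open only while the other door is closed, and the inner door additionally stays locked unless a hypothetical occupancy sensor reports exactly one person in the vestibule. Real mantraps implement this in access-control hardware; the class and parameter names here are illustrative.

```python
class Mantrap:
    """Interlock for a two-door mantrap: a door opens only while the other is closed."""

    def __init__(self):
        self.open_door = None  # "outer", "inner", or None when both are closed

    def request_open(self, door: str, people_inside: int = 1) -> bool:
        if door not in ("outer", "inner"):
            raise ValueError("door must be 'outer' or 'inner'")
        if self.open_door not in (None, door):
            return False  # the other door has not been closed yet
        if door == "inner" and people_inside != 1:
            return False  # hypothetical occupancy sensor suspects tailgating
        self.open_door = door
        return True

    def close(self, door: str) -> None:
        if self.open_door == door:
            self.open_door = None


m = Mantrap()
print(m.request_open("outer"))  # True: person enters the vestibule
print(m.request_open("inner"))  # False: outer door still open
m.close("outer")
print(m.request_open("inner"))  # True: one person inside, outer door closed
```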

To support the increased security, it will be necessary to train the personnel.

Everyone in the organization should undergo a process of security awareness training. Education is the best defense. Computer training and job training are commonplace. The organization should introduce a training program promoting IT governance in security to generate awareness. Let's consider the possible training programs:

New-hire orientation, which should include IT security orientation

Physical security safeguards and asset protection

Reeducating existing staff about IT security requirements

Introducing new security and safety considerations

Email security mechanisms

Virus protection

Business continuity

Every organization should have a general IT security training program to communicate management's commitment for internal controls.

A good training program can run in 20 minutes or less. The intention is to improve awareness and understanding. This objective does not require a marathon event. Training can occur in combination with normal activities. A favorite technique of ours is to place a 20-minute video presentation on the back end of HR benefits and orientation sessions. This ensures that the audience will be present. HR will provide time and attendance reporting for the participants. Each person on staff will be tracked through a series of presentations, leading to cumulative awareness training. Other methods include a brown-bag lunch event, followed by a contest giveaway to promote attendance.

Physical access is a major concern to IT security. As an IS auditor, you need to investigate how access is granted for employees, visitors, vendors, and service personnel. Which of these individuals are escorted and which are left unattended? What is the nature of physical controls and locking doors? Are there any internal barriers to prevent unauthorized access?

The following is a list of the three top concerns regarding physical access:

- Sensitive areas

Every IS auditor is concerned about physical access to sensitive areas such as the computer room. The computer room and network wiring closets are an attractive target. Physical access to electronic equipment will permit the intruder to bypass a number of logical controls. Servers and network routers can be compromised through their keyboard or service ports. Every device can be disabled by physical damage. It is also possible for the intruder to install eavesdropping access by using wiretaps or special devices.

- Service ports

Network equipment, routers, and servers have communication ports that can be used by maintenance personnel. A serial port provides direct access for a skilled intruder. Shorting out the hardware can create a denial of service situation. Special commands issued through a serial port may bypass the system's password security. When security is bypassed, the contents in memory can be displayed to reveal the running configuration, user IDs, and passwords.

Note

As auditors, we have observed senior maintenance personnel from the two largest router manufacturers. During one particular crisis, the skilled technicians successfully bypassed security and made a major set of configuration changes to routers without halting network service and without knowing the actual administrator passwords.

- Computer consoles

The keyboard of the server is referred to as the console. Direct access to servers and the console should be tightly controlled. A person with direct access can start and stop the system. The processes stopping the system may be crude and cumbersome, but the outcome will be the same. Direct access also provides physical access to disk drives and special communication ports. It would be impossible to ensure server security without restricting physical access.

Administrative procedures are necessary to ensure that access is terminated when an employee leaves the organization. The access of existing employees should also be reviewed on a regular basis. In a poorly managed organization, the employee will be given access to one area and then to additional areas as their jobs change. Unfortunately, this results in a person with more access than their job requires. Access to sensitive areas should be limited to persons who perform a required job function in the same area. If the person is moved out of the area, that access should be terminated. The IS auditor should investigate how the organization terminates access and whether it reviews existing access levels. The concept of least privilege should be enforced. The minimum level of access is granted to perform the required job role.
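A periodic access review is essentially a comparison between the access each person holds and the access their current job requires. The Python sketch below, using hypothetical names and areas, flags both access accumulated across job changes and access that was never removed when someone left. A person with no current role requires nothing, so everything they still hold is flagged.

```python
from typing import Dict, Set


def excess_access(granted: Dict[str, Set[str]],
                  required: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Per person, list the areas they can enter that their current job does not require."""
    report = {}
    for person, areas in granted.items():
        extra = areas - required.get(person, set())
        if extra:
            report[person] = extra
    return report


# Hypothetical badge-system export and job-role requirements
granted = {
    "alice": {"lobby", "computer_room", "wiring_closet"},  # changed jobs, kept old access
    "bob": {"lobby"},
    "carol": {"lobby", "computer_room"},                   # has left the organization
}
required = {
    "alice": {"lobby"},
    "bob": {"lobby"},
}
print(excess_access(granted, required))
```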

Incident handling is an administrative process we discussed in Chapter 6, "IT Service Delivery." Physical damage or an unlocked door at the wrong time should initiate the incident-handling process. Auditors will need to investigate how the organization deals with incident handling in regard to security implications.

Auditors need to ask the following questions:

What are the events necessary to trigger incident response?

Are the user and the IT help desk trained to know when to call?

What is the process for activating the incident response team?

Does the incident response team have an established procedure to ensure a proper investigation and protect evidence?

Are members of the incident response team formally appointed and trained?

Policies and procedures in security plans are ineffective unless management is monitoring compliance. An effective process of monitoring will detect violations. Better control occurs when activity monitoring is separate from the person or activity performing the work. Self-monitoring is a violation of IT governance controls, because its built-in reporting conflict calls the integrity of the reporting process into serious question. Separation of duties applies to people, systems, and violation reporting. The IS auditor needs to investigate how violations are reported to management:

Does a formal process exist to report possible violations?

Will a violation report trigger the incident response team to investigate?

The role of the IS auditor in personnel management is to determine whether appropriate controls are in place to manage the activities of people inside the organization. Now we will move on to physical protection.

Physical barriers are frequently used to protect assets. A few pages ago, we discussed the creation of a map displaying access routes and locked doors. After risk assessment, the next step is to improve physical protection.

Let's review a few of the common techniques for increasing physical protection:

- Closed-circuit television

Closed-circuit television can provide real-time monitoring or audit logs of past activity. Access routes are frequently monitored by using closed-circuit television. The auditor may be interested in the image quality and retention capabilities of the equipment. Some intrusions may not be detected for several weeks. Does the organization have the ability to check for events that occurred days or weeks ago?
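The auditor can sanity-check a retention claim with simple arithmetic: storage capacity divided by the data written per day. The Python sketch below assumes continuous recording at a constant bit rate. The camera count, bit rate, and storage size in the example are hypothetical, and motion-triggered recording or variable bit rates would change the result.

```python
SECONDS_PER_DAY = 86_400


def retention_days(storage_tb: float, cameras: int, bitrate_mbps: float) -> float:
    """Days of continuous footage that fit in storage_tb terabytes (decimal units)."""
    bytes_available = storage_tb * 10**12
    bytes_per_day = cameras * bitrate_mbps * 10**6 / 8 * SECONDS_PER_DAY
    return bytes_available / bytes_per_day


# Hypothetical: 16 cameras at 4 Mbit/s each, recording to 20 TB
print(round(retention_days(20, 16, 4), 1))  # 28.9 days, roughly four weeks
```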

- Guards

Security guards are an excellent defensive tool. Guards can observe details that the computerized security system would ignore. Security guards can deal with exceptions and special events. In an emergency, security guards can provide crowd control and direction. Closed-circuit television can extend the effective area of the security guard. The monitoring of remote areas should reduce the potential for loss. The only drawback is that guards may be susceptible to bribery or collusion. It's a common practice for the guards to be monitored by a separate security staff in banks and casinos.

- Special locks

Physical locks come in a variety of shapes and sizes. Let's look at three of the more common types of locks:

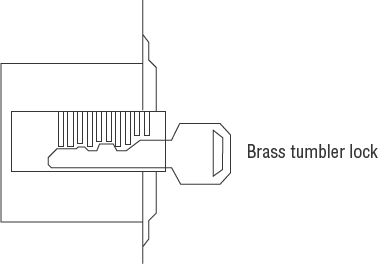

- Traditional tumbler lock

An inexpensive type of lock is the tumbler lock, which uses a standard key. This is the same type of brass key lock used for your home and automobile. The lock is relatively inexpensive and easy to install. It has one major drawback: Everyone uses a copy of the same key to open the lock, so it is practically impossible to identify who has opened it. Figure 7.3 shows a diagram of the tumbler lock.

- Electronic lock

Electronic locks can be used by security systems. The electronic lock is frequently coupled with a badge reader. Each user is given a unique ID badge, which will unlock the door. This provides an audit trail of who unlocked the door for each event. Electronic locks are usually managed by a centralized security system. Unfortunately, electronic locks will not tell us how many people went through the door while it was open. To solve that problem, it would be necessary to combine the electronic lock with closed-circuit television recording.

- Cipher lock

Cipher locks may be electronic or mechanical. The purpose of the cipher lock is to eliminate the brass key requirement. Access is granted by entering a particular combination on the keypad. Low-security cipher locks use a shared unlock code. Higher-security cipher locks issue a unique code for each individual. The FBI office in Dallas has a really slick electronic cipher lock that uses an LCD touchpad. The user touches a combination of keys in sequence on the LCD keypad. After each touch, the layout of the keys on the display changes to prevent an observer from detecting the actual code used. This is an example of a higher-security cipher lock.
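The idea behind the changing key display can be sketched in a few lines of Python. The layout is reshuffled with a cryptographically secure random generator before every touch, so the position a person presses reveals nothing reusable to an observer. This illustrates the concept only; it is not a description of any particular product, and the function names are hypothetical.

```python
import secrets

DIGITS = [str(d) for d in range(10)]


def scrambled_layout() -> list:
    """Return a new random arrangement of the ten digits for the touchpad display."""
    layout = DIGITS[:]
    secrets.SystemRandom().shuffle(layout)
    return layout


def enter_code(code: str) -> list:
    """Simulate entry: re-scramble before each touch and record the position pressed."""
    presses = []
    for digit in code:
        layout = scrambled_layout()
        position = layout.index(digit)  # where the user must touch for this digit
        presses.append((layout, position))
    return presses


for layout, position in enter_code("2580"):
    print(position, layout)  # an observer sees positions that change every time
```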

- Biometrics

The next level of access control for locked doors is biometrics. Biometrics uses a combination of human characteristics as the key to the door. We discuss this later in this chapter, in the section "Using Technical Protection."

- Burglar alarm

The oldest method of detecting a physical breach is a burglar alarm. Alarm systems are considered the absolute minimum for physical security. An alarm system may be installed for the purpose of signaling that a particular door has been opened. Remote or unmanned facilities frequently implement a burglar alarm to notify personnel of a potential breach. Burglar alarm systems should be monitored to ensure appropriate response in a timely manner.

- Environmental sensors

Technology is not tolerant of water or contaminants. Environmental controls are used to regulate the temperature, humidity, and airflow. The failure of air-conditioning or humidity control can damage sensitive computer equipment. All the servers in the computer room would overheat and crash without continuous air-conditioning. Environmental sensors should be monitored with the same interest and response as a burglar alarm.

We have discussed the need for security to restrict physical access. The data processing facility requires special consideration in its design. Data processing equipment is a valuable asset that needs to be protected from environmental contamination, malicious personnel, theft, and physical damage.

The data center location should not draw any attention to its true contents. This will alleviate malicious interest by persons motivated to commit theft or vandalism. The facility should be constructed according to national fire-protection codes with a 2-hour fire-protection rating for floors, ceilings, doors, and walls.

Some locations in the building are not suitable for the computer room. Basements are a poor choice because they are susceptible to flooding. In 1960, computers were placed behind glass windows to showcase them as a status symbol. A series of riots in the mid-1960s made it apparent that computers needed to be rapidly moved into fortified rooms. The most expedient location was an unused basement with no windows. The standard over the past 50 years has been to place the data center on a middle floor in the building—preferably located between the second floor and one floor below the top floor.

Note

Basements are a poor choice for data centers. Ground-level floors are not a good choice because they are easy to access by both thieves and attackers. A top floor is not recommended because of the likelihood of storm damage and roof leaks. A floor just below the top floor may be acceptable. The second layer of concrete between the floors provides additional protection from roof leaks. Opaque windows are considered acceptable in some environments if the windows are shatterproof and installed by using a sturdy mount equal to the window rating.

Access to the data center should be monitored and restricted. The same level of protection should be given to wiring closets because they contain related support equipment. Physical protection should be designed by using a 3D space consideration: Intruders should not be able to gain access from above, below, or through the side of the facility.

The physical space inside the data processing facility should be environmentally controlled. Let's move on to a discussion of environmental protection.

The first concern in the data center is electrical power. Electrical power is the lifeblood of computer systems. Unstable power is the number one threat to consistent operations. At a minimum, the data center should have power conditioners and an uninterruptible power supply.

Note

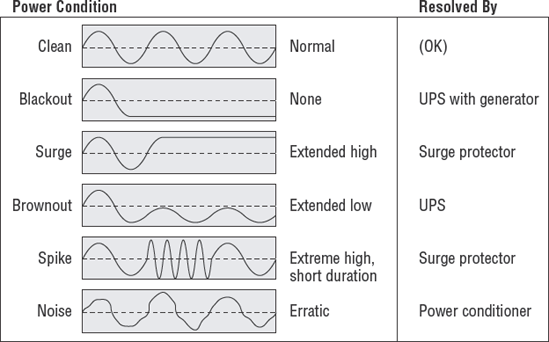

You are expected to understand a few of the terms used to describe conditions that create problems for electrical power.

Figure 7.4 illustrates the different types of electrical power conditions.

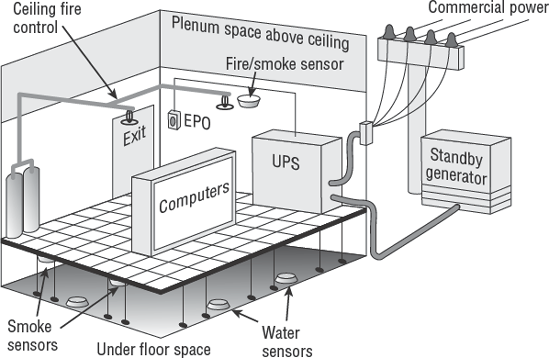

Electricity is both an advantage and a hazard. The national fire-protection code requires an emergency power off (EPO) switch to be located near the exit door. The purpose of this switch is to kill power to prevent an individual from being electrocuted. The EPO switch is a red button, which should have a plastic cover to prevent accidental activation. The switch can be wired into the fire-control system for automatic power shutoff if the fire-control system releases water or chemicals to disable a fire.

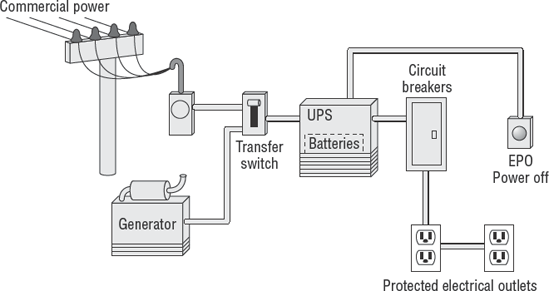

The uninterruptible power supply (UPS) is an intelligent power monitor coupled with a string of electrical batteries. The UPS constantly monitors electrical power. A UPS can supplement low-voltage conditions by using power stored in the batteries. During a power outage, the UPS will provide a limited amount of battery power to keep the systems running. The duration of this battery power depends on the electrical consumption of the attached equipment. Most UPS units are capable of signaling the computer to automatically shut down before the batteries are completely drained. Larger commercial UPS systems have the ability to signal the electrical standby generator to start.
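Because runtime depends on the attached load, a first-order estimate is usable stored energy divided by load. In the Python sketch below, the 90 percent inverter efficiency, the 80 percent usable battery fraction, and the 2,000 Wh battery string are assumptions for illustration. Real battery capacity falls faster at high discharge rates, so the manufacturer's runtime charts should be preferred when sizing a UPS.

```python
def ups_runtime_minutes(battery_wh: float, load_w: float,
                        inverter_efficiency: float = 0.9,
                        usable_fraction: float = 0.8) -> float:
    """Rough runtime: usable stored energy divided by the attached load."""
    usable_wh = battery_wh * usable_fraction * inverter_efficiency
    return usable_wh / load_w * 60


# Hypothetical 2,000 Wh battery string
print(round(ups_runtime_minutes(2000, 1200)))  # 72 minutes at 1,200 W
print(round(ups_runtime_minutes(2000, 2400)))  # 36 minutes when the load doubles
```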

The standby generator provides auxiliary power whenever commercial power is disrupted. The standby generator can be connected to the UPS for an automated start. The UPS will signal the generator that power is required, and the generator will start warming up. After the generator is warmed up, a transfer circuit will switch the electrical feed from commercial power to the generator power. The UPS will filter the generator power and begin recharging batteries. The standby generator can run for as long as it has fuel. Most standby generators run on diesel fuel or natural gas:

- Diesel generator

A diesel generator requires a large fuel-storage tank with at least 12 hours of fuel. Better-prepared organizations will store at least three days' worth of fuel and as much as thirty days' worth. Simple power failures will typically be resolved within three days. Power failures due to storm damage usually take more than one week to resolve. Smart executives would never trust the business to hollow promises from a fuel delivery service. When power stops, tempers will flare because the business has been shut down. Additional fuel storage is cheaper than the outage or the expense of a court battle with your supplier over late fuel delivery.
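Tank sizing for the 12-hour, three-day, and thirty-day targets follows directly from the generator's fuel consumption. The Python sketch below assumes a hypothetical burn rate of 10 gallons per hour at the expected load; the real figure comes from the manufacturer's specification and rises if air-conditioning runs on generator power.

```python
HOURS_PER_DAY = 24


def fuel_needed_gallons(burn_rate_gph: float, days: float) -> float:
    """Fuel required to run a generator continuously for the given number of days."""
    return burn_rate_gph * HOURS_PER_DAY * days


# Hypothetical generator burning 10 gallons per hour at the expected load
for label, days in [("12 hours", 0.5), ("3 days", 3), ("30 days", 30)]:
    print(label, fuel_needed_gallons(10, days))
```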

- Natural gas generator

Natural gas–powered generators have the advantage of tapping a gas utility pipeline directly or using a connection through a storage tank. The natural gas generator does not require a fuel truck to refill its fuel tank. The local natural gas pipeline provides a steady supply of fuel for an extended period of time. The natural gas supply is a good idea in areas that are geologically stable.

Note

Air-conditioning equipment is the Achilles heel of generators. An enormous amount of fuel is consumed when running AC using generator power. It also takes a very big generator to run AC compressors. Realistic alternatives to AC include cycling cooler subsurface water from deep wells for convection cooling.

Note

One alternate power-conserving method uses a stored tank of liquid nitrogen for temporary cooling over days or weeks. Liquid nitrogen is used by electronic manufacturers in environmental testing for component failure analysis. The same technique could be applied by venting small amounts of liquid nitrogen through a closed-loop condenser system. Air blowing across the condenser will keep the room cool without burdening the generator.

The best way to prevent power outages is to install power leads from two different power substations. It would be extremely expensive to just pay someone to run special power cables. Instead, the location of the building housing the computer room should be selected according to area power grids. Power grids are usually divided along highways. Careful location selection will place your building within a quarter-mile of two power grids. This makes the cost of the dual connection affordable. Dual power leads should approach the building from different directions without sharing the same underground trench. A construction backhoe is extremely effective in destroying underground connections.

The power transfer system, known as a transfer switch, provides the connection between commercial power, the UPS, and the generator. The transfer switch may be manual or computerized. It is not uncommon for the power transfer switch to fail during a power outage. Therefore, manual power-transfer procedures should be in place. Automated power-transfer switches may not be able to react to a pair of short power failures occurring within the same 30-minute window. After the first power failure, the generator will come online to produce electrical current. After commercial electrical power is restored, the transfer switch will transfer back to commercial electricity. At the same time, the generator will receive a signal to begin cooling down and finally shut off. If another power outage occurs during the generator cooling period, the transfer switch will cycle to generator power while the generator is not producing electrical current. This condition may be resolved by increasing the battery capacity of the UPS and adjusting generator start and stop times.
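The double-outage failure comes from transferring the load without regard to whether the generator is actually producing power. The Python sketch below expresses a safer selection rule, assuming the switch can sense whether the generator output is ready: The load stays on UPS batteries until it is. The function and state names are hypothetical. The UPS must then be sized to cover the worst-case generator restart and warm-up time.

```python
def select_source(utility_ok: bool, generator_ready: bool) -> str:
    """Choose the power source, never transferring to a generator that is not producing."""
    if utility_ok:
        return "utility"
    if generator_ready:
        return "generator"
    return "ups_battery"  # bridge the gap on batteries until the generator is ready


# Second outage during generator cool-down: the generator is not producing power yet
timeline = [
    (True, False),   # normal operation
    (False, False),  # first outage, generator starting
    (False, True),   # generator warmed up and producing
    (True, False),   # utility restored, generator cooling down
    (False, False),  # second outage during cool-down
    (False, True),   # generator restarted
]
print([select_source(u, g) for u, g in timeline])
```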

Figure 7.5 illustrates the basic electrical power system used for computer installations.

The computer installation requires heating, ventilation, and air-conditioning. Electronic equipment performs well in cold conditions; however, magnetic media should not be allowed to freeze. Ventilation is necessary to cool computer equipment. Physical damage occurs if computer circuitry sustains extended use at ambient temperatures of 104 degrees Fahrenheit (40 degrees Celsius) or higher. Physical damage will also occur if the internal electronic circuitry exceeds 115 degrees Fahrenheit (about 46 degrees Celsius) during operation.

Air-conditioning is also used to control humidity. Proper humidity reduces static electricity that could damage electrical circuits. The ideal humidity for a computer room is between 35 percent and 45 percent at 72 degrees Fahrenheit. Maintaining this range prevents the dry atmospheric conditions that would otherwise create high levels of static electricity.
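An environmental monitoring system applies thresholds like these to sensor readings. The following is a minimal sketch using the figures from the text; the function name and warning messages are hypothetical, and because it examines a single reading it cannot judge "extended use" at high temperature, which would require tracking readings over time.

```python
from typing import Optional


def check_environment(room_f: float, humidity_pct: float,
                      internal_f: Optional[float] = None) -> list[str]:
    """Return warnings for one set of computer-room sensor readings (degrees Fahrenheit).

    Thresholds come from the text; duration of exposure is not tracked here.
    """
    warnings = []
    if room_f <= 32:
        warnings.append("room at or below freezing: magnetic media at risk")
    if room_f >= 104:
        warnings.append("room at or above 104 F: circuitry damage if sustained")
    if internal_f is not None and internal_f > 115:
        warnings.append("internal circuitry above 115 F: physical damage likely")
    if humidity_pct < 35:
        warnings.append("humidity below 35%: high static electricity risk")
    elif humidity_pct > 45:
        warnings.append("humidity above 45%: outside the recommended 35-45% range")
    return warnings
```

A reading of 72 degrees at 40 percent humidity returns an empty list; dry air at 20 percent returns a static electricity warning.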

The data center and records storage area should be equipped for fire, smoke, and heat detection. Unheated areas may need to be monitored for freezing conditions. There are three basic types of fire detectors, using smoke detection, heat detection, or flame detection:

- Smoke detection

Uses optical (photoelectric) smoke detectors or ionization smoke detectors, which contain a small radioactive source

- Heat detection

Uses a fixed-temperature thermostat (which activates above 200 degrees Fahrenheit) or rate-of-rise detection (which activates the alarm if the temperature increases dramatically within a matter of minutes)

- Flame detection

Relies on ultraviolet radiation from a flame or the pulsation rate of a flame
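The two heat-detection methods complement each other: fixed-temperature detection catches a slow climb past a set point, while rate-of-rise detection catches a fast climb well below it. The following is a minimal sketch of that logic, assuming one reading per minute. The 200-degree set point comes from the text; the 15-degree-per-minute rise threshold is a hypothetical value, not a figure from the text or from any particular detector.

```python
from typing import Optional


def heat_alarm(readings_f: list[float], fixed_limit_f: float = 200.0,
               rise_per_min_f: float = 15.0) -> Optional[str]:
    """Evaluate heat-detector samples taken once per minute, oldest first.

    Returns the detection method that tripped, or None if neither did.
    rise_per_min_f is a hypothetical threshold chosen for illustration.
    """
    for i, temp in enumerate(readings_f):
        # Fixed-temperature detection: trips once the reading passes the set point.
        if temp > fixed_limit_f:
            return "fixed-temperature"
        # Rate-of-rise detection: trips on a sharp climb, even far below the set point.
        if i > 0 and temp - readings_f[i - 1] >= rise_per_min_f:
            return "rate-of-rise"
    return None
```

A jump from 75 to 95 degrees in one minute trips the rate-of-rise check, while a steady 10-degree-per-minute climb is caught only when it passes 200 degrees.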

A fire-detection system activates an alarm to initiate human response. A fire-detection system may also activate fire suppression with or without the discharge of water or chemicals.

Fire suppression is the next step after fire detection. A fire-suppression system may be fully automated or mechanical. There are three basic types of fire-suppression systems:

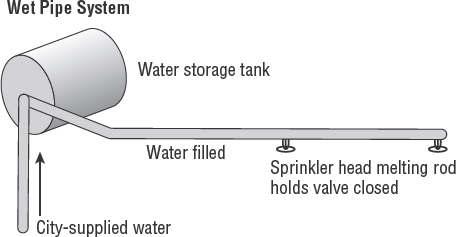

- Wet pipe system

The wet pipe system derives its name from the water that remains inside the pipe at all times. Most sprinkler heads in a ceiling-based system are mechanical. Each sprinkler head is an individual valve held closed by a meltable pin. A fire near the sprinkler head will melt the pin, and the valve will open to discharge whatever is in the pipe. A wet pipe can burst if it freezes or leak because of corrosion, either of which creates an unscheduled discharge. Figure 7.6 shows a wet pipe system.

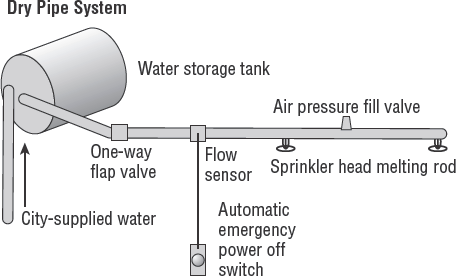

- Dry pipe system

The dry pipe system is an improvement over the wet pipe system for two reasons. First, the pipe is full of compressed air rather than water until the system activates, so the idle pipe cannot freeze and a leak releases air instead of water. When a valve opens, there is a delay of a few seconds as the air clears from the line, and the water discharges after the air is purged. This leads us to the second advantage: the flow of rushing air can trigger a flow switch that activates the emergency power off (EPO) switch to kill electrical power. Equipment shuts off during the few seconds before the water is discharged, which reduces the damage to computer equipment. Special computer cabinets are made to shed water away from electronic hardware mounted inside. Figure 7.7 shows a dry pipe system.

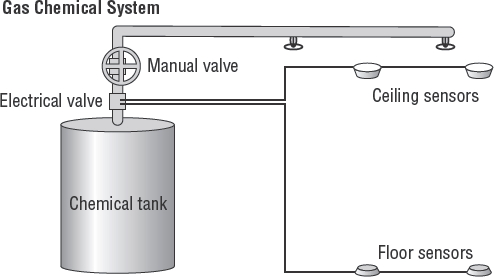

- Dry chemical system

Dry chemical systems are frequently used in computer installations because dry chemicals avoid the hazards created by water. The dry chemical system uses a gas such as FM-200 or NAF-S-3 to extinguish fire.

Note

Gaseous halon is no longer used because it is an ozone-depleting halocarbon, related to the chlorofluorocarbons (CFCs), that destroys the Earth's ozone layer; its production was banned in 1994 under an international environmental accord, the Montreal Protocol. The exceptions are aircraft and ships, where fires occurring in flight or at sea could be devastating, with tragic loss of life. All former halon installations in computer rooms should have been converted to FM-200 or an equivalent dry chemical.

When electronic sensors detect a fire condition, the dry chemical system will discharge into the room. Figure 7.8 shows the basic design of a dry chemical system.

Special administrative controls are necessary with dry chemical systems. Maintenance personnel must never lift floor tiles or move ceiling tiles while the dry chemical system is armed. Floating particles of dust can activate a discharge of dry chemicals. Humans should not inhale the gas used in dry chemical systems because it may be lethal. A dry chemical discharge introduces a great deal of air pressure into the room within seconds. Fragile glass windows may shatter during discharge, creating a temporary airborne glass hazard.

Note

You need to be aware that a water pipe system may be required even if a dry chemical system is installed. Fire safety codes can require wet pipe systems throughout the building, without exception. Some building owners will not allow the tenant to alter existing fire-control systems. The dry chemical system would then have to be installed in parallel to the existing water-based system.

Water is discharged from air-conditioning or cooling systems and usually runs to a drain located under the raised floor. Water-detection systems are necessary under the floor to alert personnel of a clogged drain or plumbing backup. Water-detection sensors also may be placed in the ceiling to detect leakage from pipes in the roof above. It is common for water pipes to run directly above the ceiling tiles of a computer room or on higher floors. Water can even cascade down inside the building from the roof.

Figure 7.9 provides a simple overview of a data processing center and the various environmental control systems.

To minimize risk, the organization should have a policy prohibiting food, liquids, and smoking in the computer facility. Now it is time to discuss safe storage of assets and media records.

Vital business records and computer media require protection from the environment, fire, theft, and malicious damage, so safe onsite storage is required. The best practice is to use fireproof file cabinets and a fireproof media safe. Standard business records are kept in the fireproof file cabinet. Computer tapes and disk media are stored in a special fire safe. The fire safe provides physical protection and ensures that the internal temperature of the safe will not exceed 130 degrees Fahrenheit. Copies of files and archived records are transferred to offsite storage.

The offsite storage facility provides storage for a second copy of vital records and data backup files. The offsite storage facility should be used for safe long-term retention of records. The standard practice is to send backup tapes offsite every day or every other day. The offsite storage location should be a well-designed, secure, bonded facility with 24-hour security. This site must be designed for protection from flood, fire, and theft. The offsite vendor should maintain a low profile without visible markings identifying the contents of the facility to a casual passerby. Most offsite storage facilities provide safe media transport.

Business records and magnetic media should be properly boxed for transit, and the contents of each box must be properly labeled. An inventory should be recorded prior to shipping media offsite for any reason. The tape librarian is usually responsible for tracking data media in transit. Backup tapes can contain an organization's most sensitive secrets, so all media leaving the primary facility must be kept in secure storage at all times and tracked during transit. New regulations are mandating the use of encryption to protect the data stored on backup tapes. The tape librarian should verify the safe arrival of media at the offsite storage facility, and random media audits at the offsite facility are a good idea. The custodian must track the location and status of all data files outside the primary facility.
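Verifying safe arrival amounts to reconciling the inventory recorded before shipping against what the offsite facility confirms it received. The following is a minimal sketch of that comparison; the function name and the tape labels in the example are hypothetical.

```python
from collections import Counter


def reconcile_shipment(manifest: list[str], received: list[str]) -> dict[str, list[str]]:
    """Compare media labels recorded before shipping with those confirmed on arrival."""
    shipped, arrived = set(manifest), set(received)
    return {
        # Left the primary facility but never confirmed at the offsite vault.
        "missing": sorted(shipped - arrived),
        # Confirmed offsite but not on the shipping inventory.
        "unexpected": sorted(arrived - shipped),
        # The same label recorded twice usually signals a labeling error.
        "duplicate_labels": sorted(label for label, n in Counter(manifest).items() if n > 1),
    }
```

For example, shipping `["T001", "T002", "T003"]` and receiving `["T001", "T003", "T009"]` reports `T002` as missing and `T009` as unexpected; either result should trigger an investigation by the tape librarian.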

Information and media will be disposed of at the end of their life cycle. This is the seventh phase of the SDLC model. A formal authorization process is required to dispose of physical and data assets. Improper controls can lead to the untimely loss of valuable assets. Let's review the common disposal procedures for media:

- Paper, plastic, and photographic data

Nondurable media may be disposed of by using physical destruction such as shredding or burning.

- Durable and magnetic media

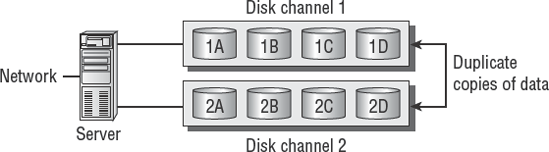

Durable media may be disposed of by using data destruction techniques of overwriting and degaussing:

- Overwriting

Data files are not actually erased by a Delete command. The file system only marks the file's directory entry as deleted; in the FAT file system, for example, the first character of the file name is replaced with a special marker value. The marker indicates that the file's space can be overwritten whenever the computer system needs more storage space. Undelete utilities operate by restoring the original first character, which makes the file contents readable again. To destroy files without recovery, it is necessary to overwrite the contents on the disk. A file overwrite utility replaces every byte of the file with random or other meaningless values.

- Degaussing

Degaussing is a bulk erasing process using a strong electromagnet. Degaussing equipment is relatively inexpensive. To operate, the degaussing unit is turned on and placed next to a box of magnetic media. The electromagnet erases magnetic media by scrambling its magnetic alignment. Erasure occurs within minutes or hours, depending on the strength of the device.
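The overwriting technique can be sketched in a few lines. The following is a minimal illustration, not a certified sanitization tool: the function name is hypothetical, and it overwrites a single file in place with random bytes before deleting it. On flash storage with wear leveling, or on journaling and copy-on-write file systems, the new bytes may be written to a different physical location, leaving the old data intact; that limitation is one reason degaussing or physical destruction is used for media holding sensitive data.

```python
import os
import secrets


def overwrite_and_delete(path: str, passes: int = 1, chunk_size: int = 1 << 20) -> None:
    """Overwrite a file's contents in place with random bytes, then delete it.

    Hypothetical helper for illustration; it cannot reach copies the file system
    or storage device may have placed elsewhere.
    """
    size = os.path.getsize(path)
    with open(path, "r+b") as f:
        for _ in range(passes):
            f.seek(0)
            remaining = size
            while remaining > 0:
                n = min(chunk_size, remaining)
                f.write(secrets.token_bytes(n))  # random values replace every original byte
                remaining -= n
            f.flush()
            os.fsync(f.fileno())  # push the new bytes to the device, not just the OS cache
    os.remove(path)  # only now remove the directory entry
```

Only after the contents have been overwritten is the directory entry removed, so an undelete utility would recover nothing but random bytes from the overwritten locations.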

Note

As a CISA, you are required to understand the fundamental issues of physical protection. Advanced study in physical security is available from ASIS International, for the Certified Protection Professional (CPP) credential.

You're now ready to begin the last major section of this chapter, which covers technical methods of protection.

Technical protection is also referred to as logical protection. A simple way to recognize technical protection is that technical controls typically require a hardware or software process to operate. Technical controls are also known as automated controls.

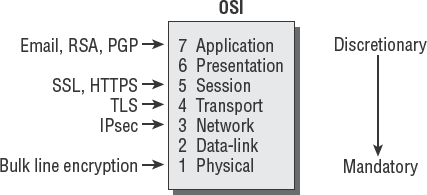

Technical protection may be implemented by using a combination of mandatory controls, discretionary controls, role-based controls, or task-based controls. Let's discuss each:

- Mandatory access controls

Mandatory access controls (MAC) use labels to identify the security classification of data. A set of rules determines which person (subject) will be allowed to access the data (object). The security label is compared to the user access level. The comparison process requires an absolute match to permit access. Here is an example:

(User label "subject" = Data label "object") = ALLOW

(User label "subject" ≠ Data label "object") = DENY

The process is explicit. Absolutely no exceptions are made when MAC methods are in use. Under MAC, control is centrally managed and all access is forbidden unless explicit permission is specified for that user. The only way to gain access is to change the user's formal authorization level. The military uses MAC.

- Discretionary access controls

Discretionary access controls (DAC) allow a designated individual to decide the level of user access. Authority over DAC access is usually distributed across the organization to provide flexibility for specific uses or to adjust to business needs. The data owner determines access control at their discretion. The IS auditor needs to investigate how the decisions concerning DAC access controls are authorized, managed, and regularly reviewed. Most businesses use discretionary access control.

- Role-based access controls

Certain jobs require a particular level of access to fulfill the job duties. Access that is granted on the basis of the job requirement is referred to as role-based access control (RBAC). A user is given the level of access necessary to complete work for their job. The access granted to the system administrator position is an example of role-based access control.

- Task-based access controls

Individual tasks may need to be performed for the business to operate. Whereas role-based access is used for job roles, task-based access control (TBAC) refers to the need to perform a specific task. Common examples include limited testing, maintenance, data entry, or access to a special report.
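The four models differ mainly in who decides and what the decision is attached to: a label, an owner, a job role, or a task. The following is a minimal sketch that contrasts them side by side. All role names, permissions, and task identifiers are hypothetical. The MAC check follows the exact-match rule described in this chapter; formal MAC models such as Bell-LaPadula instead compare labels by dominance (a clearance at or above the data's classification).

```python
from dataclasses import dataclass, field


# MAC, as described in the text: the subject label must exactly match the object label.
def mac_allows(subject_label: str, object_label: str) -> bool:
    return subject_label == object_label


# DAC: the data owner grants access at their discretion.
@dataclass
class OwnedObject:
    owner: str
    readers: set[str] = field(default_factory=set)

    def grant(self, granted_by: str, user: str) -> None:
        if granted_by != self.owner:
            raise PermissionError("only the data owner may grant access")
        self.readers.add(user)

    def can_read(self, user: str) -> bool:
        return user == self.owner or user in self.readers


# RBAC: permissions attach to job roles; users receive them through role membership.
ROLE_PERMISSIONS = {
    "sysadmin": {"create_account", "reset_password"},  # hypothetical roles and rights
    "clerk": {"enter_invoice"},
}


def rbac_allows(user_roles: set[str], permission: str) -> bool:
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)


# TBAC: a permission tied to one specific task, valid only while the task is active.
@dataclass
class TaskGrant:
    task_id: str
    permission: str
    active: bool = True


def tbac_allows(grants: list[TaskGrant], permission: str) -> bool:
    return any(g.active and g.permission == permission for g in grants)
```

Note how the TBAC grant stops working as soon as the task is closed (`active = False`), whereas an RBAC permission lasts as long as the user holds the role.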

The type of access control used is based on risk, data value, and available control mechanisms. Now we need to discuss application software control mechanisms.

Application software controls provide security by using a combination of user identity, authentication, authorization, and accountability. As you will recall, user identity is a claim that must be authenticated (verified). Authorization refers to the right to perform a particular function. Accountability refers to holding a person responsible for their actions. Most application software uses access control lists to assign rights or permissions. The access control list contains the user's identity and permissions assigned.
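The four elements fit together in a single request: the user claims an identity, the password authenticates the claim, the access control list authorizes the action, and an audit log provides accountability. The following is a minimal sketch under those assumptions; the class and method names are hypothetical, and the salted PBKDF2 password hash is one common choice rather than a requirement of any particular application.

```python
import hashlib
import hmac
import os
from datetime import datetime, timezone


def _hash_password(password: str, salt: bytes) -> bytes:
    # Store only a salted, deliberately slow hash of each password, never the password.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


class Application:
    def __init__(self) -> None:
        self.credentials: dict[str, tuple[bytes, bytes]] = {}  # user id -> (salt, hash)
        self.acl: dict[str, set[str]] = {}                      # access control list: user id -> permissions
        self.audit_log: list[tuple[str, str, str, str]] = []    # (UTC time, user id, action, outcome)

    def add_user(self, user_id: str, password: str, permissions: set[str]) -> None:
        salt = os.urandom(16)
        self.credentials[user_id] = (salt, _hash_password(password, salt))
        self.acl[user_id] = set(permissions)

    def authenticate(self, user_id: str, password: str) -> bool:
        # Identity is only a claim until it is verified against the stored credential.
        record = self.credentials.get(user_id)
        if record is None:
            return False
        salt, stored = record
        return hmac.compare_digest(stored, _hash_password(password, salt))

    def perform(self, user_id: str, password: str, action: str) -> bool:
        # Authorization: an authenticated user may act only if the ACL lists the right.
        allowed = self.authenticate(user_id, password) and action in self.acl.get(user_id, set())
        # Accountability: every attempt is recorded, whether allowed or denied.
        outcome = "ALLOW" if allowed else "DENY"
        self.audit_log.append((datetime.now(timezone.utc).isoformat(), user_id, action, outcome))
        return allowed
```

Recording denied attempts as well as successful ones matters to the auditor: a string of DENY entries for one user ID is often the first sign of password guessing or privilege probing.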

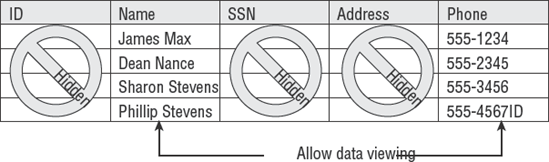

Data within the database can be protected by using database views. The database view is a read restriction placed on particular columns (attributes) in the database. For example, Figure 7.10 illustrates using a personnel file to create a telephone list. Data that is not to be read for the telephone list has been hidden by using the database view.
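The telephone-list example can be reproduced with SQL. The following sketch uses Python's built-in `sqlite3` module; the table and column names are hypothetical stand-ins, since the actual columns in Figure 7.10 may differ. In a multi-user database server, users would be granted SELECT on the view but not on the underlying personnel table. SQLite has no GRANT statement, so this sketch shows only the column restriction itself.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        -- Hypothetical personnel table; the real columns in Figure 7.10 may differ.
        CREATE TABLE personnel (
            emp_id     INTEGER PRIMARY KEY,
            name       TEXT,
            extension  TEXT,
            department TEXT,
            salary     INTEGER,
            home_phone TEXT
        );
        INSERT INTO personnel VALUES
            (1, 'A. Rivera', 'x2041', 'Finance', 85000, '555-0101'),
            (2, 'B. Chen',   'x2077', 'IT',      92000, '555-0102');
        -- The view exposes only the columns a telephone list needs;
        -- salary and home_phone stay hidden.
        CREATE VIEW telephone_list AS
            SELECT name, extension, department FROM personnel;
    """)
    return conn
```

Querying `SELECT * FROM telephone_list` returns only the name, extension, and department columns, even though the salary and home telephone data sit in the same table.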

Another method of limiting access is to use a restricted user interface. The restricted interface may be a menu with particular options grayed out or not displayed at all. Menu access is preferred because it denies the user the power of command-line arguments, and the command line is difficult to restrict.
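A restricted interface accepts only a fixed set of choices instead of free-form commands. The following is a minimal sketch with a hypothetical payroll menu and permission names. Options the user lacks are not displayed, and the selection is checked again so that a hidden option cannot be reached simply by typing its key.

```python
# Hypothetical menu: (key, label, permission required to see and use the option).
MENU = [
    ("1", "View my pay stub", "view_paystub"),
    ("2", "Enter timesheet", "enter_timesheet"),
    ("3", "Approve payroll", "approve_payroll"),
]


def visible_menu(permissions: set[str]) -> list[str]:
    """Options the user lacks are not displayed at all."""
    return [f"{key}. {label}" for key, label, perm in MENU if perm in permissions]


def select(choice: str, permissions: set[str]) -> str:
    """Accept only a listed menu key; there is no free-form command input."""
    for key, _label, perm in MENU:
        if key == choice and perm in permissions:
            return perm
    # Re-check on selection so a hidden option cannot be reached by typing its key.
    raise PermissionError(f"option {choice!r} is not available")
```

Hiding an option is a convenience; the check inside `select` is what actually enforces the restriction.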