THE OBJECTIVE OF THIS CHAPTER IS TO ACQUAINT THE READER WITH THE FOLLOWING CONCEPTS:

Implementing all seven phases of the System Development Life Cycle

Understanding how to evaluate the business case and feasibility of a proposed system to ensure alignment with business strategy

Impact of international standards relating to software development

Understanding the process of conducting the system design analysis

Understanding the process for developing systems and infrastructure requirements, acquisition, and development and testing to ensure it meets business objectives

Understanding how to evaluate the readiness of systems for production use

Knowledge of management's responsibility for accepting and maintaining the system

Understanding the purpose of postimplementation system reviews relating to anticipated return on investment and proper implementation of controls

Evaluating system retirement and disposal methods to ensure compliance with legal policies and procedures

Basic introduction to the different methodologies used in software development

When auditing software development, you will assess whether the prescribed project management, System Development Life Cycle, and change-management processes were followed. You are expected to evaluate the processes used in developing or acquiring software to ensure that the program deliverables meet organizational objectives.

We will discuss software design concepts and terminology that every CISA is expected to know for the CISA exam.

Every organization strives to balance expenditures against revenue. The objective is to increase revenue and reduce operating costs. One of the most effective methods for reducing operating costs is to improve software automation.

Computer programs may be custom-built or purchased in an effort to improve automation. All business applications undergo a common process of needs analysis, functional design, software development, implementation, production use, and ongoing maintenance. In the end, every program will be replaced by a newer version, and the old version will be retired. This is what is referred to as the life cycle.

It is said that 80 percent of a business's functions are related to common clerical office administration tasks. The clerical functions are usually automated with commercial off-the-shelf software. An organization does not need to custom-write software for word processing and spreadsheets, for example. These basic functions can be addressed through traditional software that is easily purchased on the open market. This type of commodity software requires little customization. The overall financial advantages will be small but useful. When purchasing prewritten software, an organization follows a slightly different model that focuses on selection rather than software design and development. Prewritten software follows a life cycle of needs analysis and selection, followed by implementation, which leads to production use and ongoing maintenance.

The remaining 20 percent of the business functions may be unique or require highly customized computer programs. This is the area of need addressed by custom-written computer software. The challenge is to ensure that the software actually fulfills the organization's strategic objectives.

Let's make sure that you understand the difference between a strategic system and a traditional system.

A strategic system fundamentally changes the way the organization conducts business or competes in the marketplace. A strategic system significantly improves overall business performance with results that can be measured by multiple indicators. These multiple indicators include measured performance increases and noticeable improvement on the organization's financial statement. An organization might, for example, successfully attain a dramatic increase in sales volume as a direct result of implementing a strategic system. The strategic system may create an entirely new sales channel to reach customers.

Auction software implemented and marketed by eBay is an example of a strategic system. The strategic software fundamentally changes the way an organization will be run. For a strategic system to be successfully implemented, management and users must be fully involved. Anything less than significant fundamental change with dramatic, measurable results would indicate that the software is a traditional system.

Note

You should be aware that some software vendors will use claims of strategic value with obscure results to try to sell lesser products at higher profit margins. Your job is to determine whether the organizational objectives have been properly identified and met. Claims of improvement should be verifiable.

Traditional systems provide support functions aligned to fulfill the needs of an individual or department. Examples of traditional systems include general office productivity and departmental databases. The traditional system might provide 18 percent return on investment, whereas a strategic system might have a return of more than 10 times the investment.

Controlling quality is an ongoing process of improving the yield of any effort. True quality is designed into a system from the beginning. In contrast, inspected quality is no more than a test after the fact. This section covers models designed to promote software quality:

Capability Maturity Model (CMM)

ISO Software Process Improvement and Capability dEtermination (SPICE)

Let's review the Capability Maturity Model and introduce the related international standards.

As you may recall from Chapter 3, "IT Governance," the Software Engineering Institute's Capability Maturity Model (CMM) was developed to provide a strategy for determining the maturity of current processes and to identify the next steps required for improvement. The CMM roots are based in lessons learned from assembly-line automation during the U.S. industrial age of the early 1900s. Several analogies exist between CMM and the manufacturing process quality concepts of Walter Shewhart, W.W. Royce, W. Edwards Deming, Joseph Juran, and Philip Crosby. Most of the people who understood the analogy relating manufacturing processes to business processes have long since retired. This promotes a false impression that CMM is new, when it's just new to them. Let's take a quick look at the levels contained in the CMM:

- Level 0

This level is implied but not always recognized. Zero indicates that nothing is getting done.

- Level 1 = Initial

Processes at this level are ad hoc and performed by individuals. Typical characteristics are ad hoc activities, firefighting, unpredictable results, and management activities that vary without consistency.

- Level 2 = Repeatable

These processes are documented and can be repeated. Characteristics are semiformal methods, tension between project managers and line managers, quality that is inspected rather than built in, and no formal priority system.

- Level 3 = Defined

These are lessons learned and integrated into institutional processes. Standardization begins to take place between departments with qualitative measurement (opinion of quality). Formal criteria are developed for use in selection processes.

- Level 4 = Managed

This level equates to quantitative measurement (numeric measurement of quality). Portfolio management is ingrained into all decisions. A formal project priority system is practiced with a Project Management Office (PMO) governing projects.

- Level 5 = Optimized

This is the highest level of control with continuous improvement using statistical process control. A culture of constant improvement is pervasive with a desire to fine-tune the last available percentages to squeak out every remaining penny of profit.

The Software Engineering Institute (SEI) estimates that it may take 13 to 25 months to move up to each successive level. It's just not possible to leapfrog over a level because of the magnitude of change required to convert the organization's attitude, experiences, and culture. SEI was one of the first organizations to define the evolution of software maturity. The CMM has been expanded to cover all types of processes used to run a business or a government office.
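The SEI estimate can be turned into a rough planning range. The following is a minimal sketch, assuming the 13-to-25-month figure applies uniformly to every transition and that levels are climbed one at a time; the function name `months_to_reach` is hypothetical and chosen only for illustration.

```python
from typing import Tuple

# Per-level estimate quoted in the text: 13 to 25 months per successive level.
MONTHS_PER_LEVEL = (13, 25)


def months_to_reach(current_level: int, target_level: int) -> Tuple[int, int]:
    """Return the (minimum, maximum) months to climb between CMM levels.

    Assumes every transition takes 13 to 25 months and no level is skipped.
    """
    if not 1 <= current_level <= target_level <= 5:
        raise ValueError("levels must satisfy 1 <= current <= target <= 5")
    transitions = target_level - current_level
    low, high = MONTHS_PER_LEVEL
    return transitions * low, transitions * high


# An organization at level 1 that wants to reach level 4 faces three transitions.
print(months_to_reach(1, 4))
```

Under these assumptions, an organization moving from level 1 to level 4 should plan for roughly three to six years of sustained effort, which is why claims of rapid maturity gains deserve scrutiny.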

A significant number of the best practices for quality in American manufacturing have been adopted by the International Organization for Standardization (ISO). ISO is a worldwide federation of national standards bodies operating under a charter to create international standards in order to promote commerce and reduce misrepresentation. One of the functions of the ISO is to identify regional best practices and promote acceptance worldwide. The original work of Shewhart and derivative works of Crosby, Deming, and Juran focused on reducing manufacturing defects. Their original concepts have been expanded over the last 50 years to include almost all business processes. The CMM represents the best-practice method of measuring process maturity. It makes no difference whether the process is administrative, manufacturing, or software development. ISO has modified the descriptive words used in the levels of the CMM for international acceptance.

As a CISA, you should be interested in three of the ISO standards relating to development and maturity.

The ISO 15504 standard is a modified version of the CMM. These changes were intended to clarify the different maturity levels across different languages and cultures. Notice that level 0 is relabeled as incomplete. Level 1 is renamed to indicate that the process has been successfully performed. Level 2 indicates that the process is managed. Level 3 shows that the process is well established in the organization. Level 4 indicates that the process output will be very predictable. Level 5 shows that the process is under a continuous improvement program using statistical process control. Table 5.1 illustrates the minor variations between the CMM and the ISO 15504 standard, also known as SPICE.

Table 5.1. CMM Compared to ISO 15504 (SPICE)

| CMM Levels | ISO 15504 Levels |
|---|---|
| CMM level 0 = Process did not occur yet | ISO level 0 = Incomplete |
| CMM level 1 = Initial | ISO level 1 = Performed |
| CMM level 2 = Repeatable | ISO level 2 = Managed |
| CMM level 3 = Defined | ISO level 3 = Established |
| CMM level 4 = Managed | ISO level 4 = Predictable |
| CMM level 5 = Optimized | ISO level 5 = Optimized |
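For study purposes, the level names in Table 5.1 can be kept in a small lookup structure. This is only an illustrative sketch; the dictionary and function names are hypothetical, and the labels are copied from the table rather than from the standards themselves.

```python
# Level names as listed in Table 5.1: (CMM label, ISO 15504 label).
CMM_TO_ISO_15504 = {
    0: ("Process did not occur yet", "Incomplete"),
    1: ("Initial", "Performed"),
    2: ("Repeatable", "Managed"),
    3: ("Defined", "Established"),
    4: ("Managed", "Predictable"),
    5: ("Optimized", "Optimized"),
}


def describe_level(level: int) -> str:
    """Return a one-line comparison of the CMM and ISO 15504 names for a level."""
    cmm_name, iso_name = CMM_TO_ISO_15504[level]
    return f"Level {level}: CMM '{cmm_name}' corresponds to ISO 15504 '{iso_name}'"


print(describe_level(2))
```

Note that "Managed" appears in both columns but at different levels (CMM level 4, ISO level 2), which is an easy point to confuse.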

The purpose of ISO 15504 is identical to that of the CMM. Variations in language forced the ISO version to use slightly different terminology to express its objectives. Let's move on to a quick overview of two ISO quality-management standards.

The ISO has promoted a series of quality practices that were previously known as ISO 9000, 9001, and 9002 for design, manufacturing, and service, respectively. These have now been combined into the single ISO 9001 reference. Many organizations have adopted this ISO standard to facilitate worldwide acceptance of their products in the marketplace. ISO compliance also brings the benefits of a better perception by investors. Compliance does not guarantee a better product, but it does provide additional assurances that an organization should be able to deliver a better product.

Within the ISO 9001 reference, you will find that a formally adopted quality manual is required by ISO 9001:2000. The ISO 9001:2000 quality manual specifies detailed procedures for quality management by an organization. The same quality manual provides procedures for strong internal controls when working with vendors, including a formal vendor evaluation and selection process. To ensure quality, ISO 9001:2000 mandates that personnel performing work shall be properly trained and managed to improve competency. Because an organization claiming ISO compliance is required to have a thoroughly written quality manual in place, an IS auditor may request evidence demonstrating that the quality processes are actively used.

Note

It's important to understand the naming convention of ISO standards. Names of ISO standards begin with the letters ISO, which are then followed by the standard's number, a colon (:), and the year of adoption. You would read ISO 9001:2000 as ISO standard 9001 adopted in year 2000 (or updated in year 2000).
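The naming convention can be illustrated with a small parser. This is a minimal sketch, assuming designations of the simple form used in this chapter (for example, ISO 9001:2000 or ISO 9126-2:2003, where the digit after the hyphen is a part number); the names `IsoDesignation` and `parse_iso_designation` are hypothetical, and other real-world prefixes are deliberately not handled.

```python
import re
from typing import NamedTuple, Optional


class IsoDesignation(NamedTuple):
    number: int           # the standard's number, e.g. 9001
    part: Optional[int]   # optional part number, e.g. 2 in 9126-2
    year: int             # year after the colon, e.g. 2000


# "ISO", the number, an optional "-part", a colon, and a four-digit year.
_PATTERN = re.compile(r"^ISO\s+(\d+)(?:-(\d+))?:(\d{4})$")


def parse_iso_designation(text: str) -> IsoDesignation:
    """Split a designation such as 'ISO 9001:2000' into its components."""
    match = _PATTERN.match(text.strip())
    if match is None:
        raise ValueError(f"not a recognized ISO designation: {text!r}")
    number, part, year = match.groups()
    return IsoDesignation(int(number), int(part) if part else None, int(year))


print(parse_iso_designation("ISO 9001:2000"))
print(parse_iso_designation("ISO 9126-2:2003"))
```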

ISO 9126 is a variation of ISO 9001. The ISO standard 9126-2:2003 explains how to apply international software-quality metrics. This standard also defines requirements for evaluating software products and measuring specific quality aspects.

The six quality attributes are as follows:

Functionality of the software processes

Ease of use

Reliability with consistent performance

Efficiency of resources

Portability between environments

Maintainability with regard to making modifications

Note

You need to know the six major attributes contained in the ISO 9126 standard.

Once again, organizations claiming ISO compliance should be able to demonstrate active use of software metrics and supporting evidence for ISO 9126-2 compliance. You need to remember that no evidence equals no credit.

As a CISA, you should be prepared to identify the terminology used by the CMM and various ISO quality standards. Now that we've reviewed these maturity standards, it is time to mention the matching ISO document-control requirements.

ISO records retention standard 15489:2001 was designed to ensure that adequate records are created, captured, and managed. This standard applies to managing all forms of records and record-keeping policies. It does not matter whether the record format is electronic, printed, or voice. It makes no difference whether the records are used by a public or private organization. The 15489 standard provides the guidance necessary for minimum compliance with ISO 9001 quality standards and records management under ISO 14001. Therefore, an organization must be 15489 compliant to be ISO 9001 or ISO 14001 compliant.

Does it apply to anyone else? The answer is definitely yes. Records management governs the record-keeping practices of any person who creates or uses records in the course of their business activities. It also applies to those activities in which a record is expected to exist. Examples include the following:

Financial bookkeeping records

Contracts and business transactions

Government filings

Setting policies and operating standards

Payroll and HR records

Establishing procedures and guidelines

Keeping records in the normal course of business

All organizations need to identify the regulations that have bearing on their activities. Record keeping is necessary to document their actions in order to provide adequate evidence of compliance. Remember that no evidence equals no proof, which demonstrates noncompliance. Business activities are defined broadly by ISO to include public administration, nonprofit activities, commercial use, and other activities expected to keep records. All fundraising campaigns fall under the ISO 15489 standard.

A record is expected to reflect the truth of the communications between the parties, the action taken, and the evidence of the event. Records are expected to be authentic with reliable information of high integrity. Auditors need to be aware of the legal challenges whenever records are introduced as evidence in a court of law. Every good defense lawyer will attempt to dispute the authenticity or integrity of each record by allegations of tampering, mishandling, incompetence, or computer system compromise. Without excellent record keeping, the value of the record as evidence may be diminished or completely lost. This is why the chain of custody actually starts with how records are created in the first place.

ISO 15489 is used by court judges and lawyers as the international standard for determining liability in addition to sentencing during prosecution. All organizations, including yours, should have already adopted a records classification scheme (data classification). The purpose is to convey to the staff how to properly protect assorted records. Consider the different requirements for each of the following types of records:

Trade secrets

Unfiled patent applications

Personal information and privacy data, such as health information protected under HIPAA or bank account numbers

Intellectual property rights

Commercial contracts (possibly a confidential record) versus government contracts (a public record)

Internal operating reports

Privileged information, including consultation with lawyers

Customer lists and transaction records (including professional certification)

Retirement and destruction of obsolete information

Record retention systems should be regularly reassessed to ensure compliance. The corresponding reports also need to be protected because they serve as evidence in support of compliance activities. Most of the fraud mentioned in the beginning of this book was discovered and prosecuted under ISO 15489 standards.

Note

CISA certification is recognized under the ISO 17024 requirements for all bodies issuing professional certification. ISACA complies with the ISO 15489 records management and ISO 17024 professional requirements governing all professional certifications. CISA certification simply meets the minimum standard under ISO 15489 and ISO 17024 for compliance. CISA is not an ISO standard, just ISO standards compliant.

Let's move on now. It is time to discuss the leadership role of management. We will begin with an overview of the steering committee.

The steering committee should be involved in software decisions to provide guidance toward fulfillment of the organizational objectives. We have already discussed the basic design of a steering committee in Chapter 3.

As you may recall, the steering committee comprises executives and business unit managers. Their goal is to provide direction for aligning IT functions with current business objectives. Steering committees provide the advantage of increasing the attention of top management on IT. The most effective committees hold regular meetings focusing on agenda items from the business objectives rather than IT objectives. Most effective decisions are obtained by mutual agreement of the committee rather than by directive. The steering committee increases awareness of IT functions, while providing an avenue for users to become more involved. In this chapter, we are focusing on the identification of business requirements as they relate to the choices made for computer software.

As a CISA, you should understand how the steering committee has developed the vision for software to fulfill the organization's business objectives. What was the thought process that led the steering committee to its decision? Two common methods are the use of critical success factors (CSFs) and a scenario approach to planning.

A critical success factor (CSF) is something that must go right every time. To fail a CSF would be a showstopper. The process for identifying CSFs begins with each manager focusing on their current information needs. This thought process by the managers will help develop a current list of CSFs.

Some of the factors may be found in the specific industry or chosen business market. External influences—such as customer perception, current economy, pressure on profit margin, and posturing of competitors—could be another source of factors. The organization's internal challenges can provide yet another useful source. These can include internal activities that require attention or are currently unacceptable.

As an IS auditor, you should remain aware that critical success factors are highly dependent on timing. Each CSF should be reexamined on a regular basis to determine whether it is still applicable or has changed.

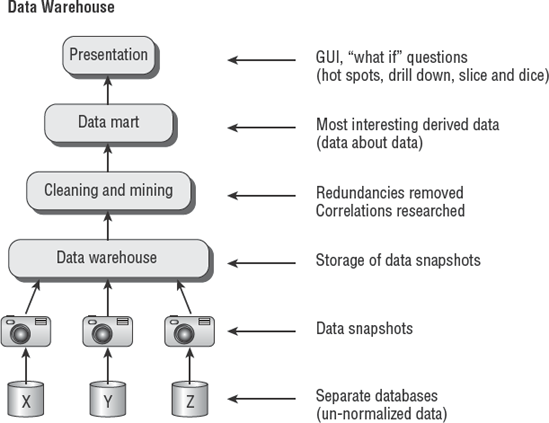

The scenario approach is driven by a series of "what if" questions. This technique challenges the planning assumptions by creating scenarios that combine events, trends, operational relationships, and environmental factors. A series of scenarios is created and discussed by the steering committee. The most likely scenario is selected for a planning exercise.

The major benefit of this approach is the discovery of assumptions that are no longer relevant. Rules based on old assumptions and past situations may no longer apply. The scenario approach also provides an opportunity to uncover the mindset of key decision-makers.

The role of the scenario is to identify the most important business objectives and CSFs. After some discussion, the scenario should reveal valuable information to be used in long-term plans. Remember, the goal is to align computer software with the strategic objectives of the organization, which we will look at next.

As a CISA, you should understand the alignment of computer software to business needs. Information systems provide benefits by alignment and by impact. Alignment is the support of ongoing business operations. Changes created in the work methods and cost structure are referred to as impact.

Each organizational project will undergo a justification planning exercise. Management will need to determine whether the project will generate a measurable return on investment. The purpose of this exercise is to ensure that the time, money, and resources are well spent. The basic business justification entails the following four items:

- Establish the need

Business needs can be determined from internal and external sources. Internal needs can be developed by the steering committee and by interviewing division managers. Internal performance metrics are an excellent source of information. External sources include regulations, business contracts, and competitors.

- Identify the work effort

The next step is to identify the people who can provide the desired results. Management's needs are explained to the different levels of personnel who perform the work. The end-to-end work process is diagrammed in a flowchart. Critical success factors are identified in the process flow. A project plan is created that estimates the scope of the work. This may use traditional project management techniques in combination with the System Development Life Cycle.

- Summarize the impact

The anticipated business impact can be presented by using quantitative and qualitative methods. It is more effective to convert qualitative statements into semiquantitative measurements. Semiquantitative measurements can be converted into a range scale of increased revenue by implementing the system or by cost savings. The CISA candidate should recall the discussion in Chapter 2, "Audit Process," regarding the use of semiquantitative measurement with a range scale similar to A, B, C, and F school report card grades.

- Present the benefits

Management will need to be sold on the value of the system. The benefits will typically entail promises of eliminating an existing problem, improving competitive position, reducing turnaround time, or improving customer relations.

In this chapter, we are focusing our discussions on computer software. The steering committee should be involved in decisions concerning software priorities and necessary functions. Each software objective should be tied to a specific business goal. The combined input will help facilitate a buy versus build decision about computer software. Should the organization buy commercial software or have a custom program written? Let's consider the questions to ask in regard to making this decision. The list presented here is for illustration purposes; however, it is similar to the standard line of questions an auditor will ask:

What are the specific business objectives to be attained by the software? Does a printed report exist?

Is there a defined list of objectives?

What are the quantitative and qualitative measurements to prove that the software actually fulfills the stated objectives?

What internal controls will be necessary in the software?

Is commercial software available to perform the desired function?

What level of customization would be required?

What mechanisms will be used to ensure the accuracy, confidentiality, timely processing, and proper authorization for transactions?

What is the time frame for implementation?

Should building the software be considered because of a high level of customization needed or the lack of available software?

Are the resources available to build custom software?

How will funding be obtained to pay for the proposed cost?

The steering committee should be prepared to answer each of these questions and use the information to select the best available option. Effective committees will participate in brainstorming workshops with representation from their respective functional areas. The goal is to solicit enough information to reach an intelligent decision. The final decision may be to buy software, build software, or create a hybrid of both.

Organizations may use the answers from the questions asked in conjunction with a written request to solicit offers from vendors. The process of inviting offers incorporates a statement of the current situation with a request for proposal (RFP). The term RFP is also related to an invitation to tender (ITT) or request for information (RFI).

The steering committee charters a project team to perform the administrative tasks necessary for a request for information (RFI) or request for proposal (RFP). The request is sent to a small number of prospective vendors or posted to the public, depending on the client's administrative operating procedure. Internal software development staff may provide their own proposal in accordance with the RFP or participate on the review team. A typical RFP will contain at least the following elements:

Cover letter explaining the specific interest and instructions for responding to the RFP

Overview of the objectives and time line for the review process

Background information about the organization

Detailed list of requirements, including the organization's desired service level

Questions to the vendor about their organization, expertise of specific individuals documented in a skills matrix, support services, implementation services, training, and current clients

Request of a cost estimate for the proposed configuration with details about the initial cost and all ongoing costs

Request for a schedule of demonstrations and visit to the installation site of existing customers

Note

All government agencies and many commercial organizations require separation of duties during the bid review process. A professional purchasing manager will become the vendor's contact point to prevent the vendor from having any direct contact with the buyer. The intention is to eliminate any claims of bias or inappropriate influence over the final decision to purchase.

The RFP project team works with the steering committee to formulate a fair and objective review process. The organization may consult ISO 9001:2000 and ISO 9126-2:2003 standards for guidance. The proposed software could be evaluated by using the CMM. In addition, ISACA's Control Objectives for Information and related Technology (CObIT) provides valuable information to be considered when reviewing a vendor and their products.

As an IS auditor, you should remember that your goal is to be thorough, fair, and objective. Care should be given to ensure that the requirements and review do not grant favor toward a particular vendor. The reviews are actually a form of audit and should include the services of an internal or external IS auditor. It is essential that vendor claims are investigated to ensure that the software will fulfill the desired business objectives.

The systematic process of reviewing vendor proposals is a project unto itself. Each proposal has to be scrutinized to ensure compliance with the requirements identified in the original RFP documents provided to the vendor. You need to ask the following questions:

Does the proposed system meet the organization's defined business requirements?

Does the proposed system provide an advantage that our competitors will not have, or does the proposed system provide a commodity function similar to that of our competitors?

What is the estimated implementation cost measured in total time and total resources?

How can the proposed benefits be financially calculated? The cost of the system and the revenue it generates should be noticeable in the organization's financial statement. To calculate return on investment, the net benefit (the cost savings or revenue generated, minus the total cost of the system including labor) is divided by the total cost of the system. The figures used should be identifiable as line items in the profit and loss statement.

What enhancements are required to meet the organization's objectives? Will major modifications be required?

What is the level of support available from the vendor? Support includes implementation assistance, training, software update, system upgrade, emergency support, and maintenance support.

Has a risk analysis been performed with consideration of the ability of the organization and/or vendor to achieve the intended goal?

Can the vendor provide evidence of financial stability?

Will the organization be able to obtain rights to the program source code if the vendor goes out of business? Software escrow refers to placing original software programs and design documentation into the trust of a third party (similar to financial escrow). The original software is expected to remain in confidential storage. If the vendor ceases operation, the client may obtain full rights to the software and receive it from the escrow agent. A small number of vendors may agree to escrow the source code, whereas most would regard the original programs as an intellectual asset that can be resold to another vendor.

Tip

Modern software licenses provide only for the right to benefit from the software's use, not software ownership.
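The return-on-investment question in the review list above can be made concrete with a short calculation. The sketch below uses the conventional definition of ROI (net benefit divided by total cost); the function name and the dollar figures are hypothetical and exist only to illustrate the arithmetic.

```python
def return_on_investment(total_cost: float, total_benefit: float) -> float:
    """Return ROI as a fraction: (benefit - cost) / cost.

    total_cost should include labor as well as purchase and ongoing costs.
    total_benefit is the cost savings or revenue attributed to the system.
    """
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


# Hypothetical figures: a system costing 200,000 that yields 236,000 in savings.
roi = return_on_investment(total_cost=200_000, total_benefit=236_000)
print(f"ROI: {roi:.0%}")
```

A result expressed this way lets you compare a vendor's claimed benefit against figures that can be traced to the financial statements, rather than accepting an unverifiable percentage.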

One of the major problems in reviewing a vendor is the inability to get a firm commitment in writing for all issues that have been raised. There are major vendors that will respond to the RFP with a lowball offer that undercuts the minimum requirements. Their motive is to win by low bid and then overcharge the customer with expensive change orders to bring the implementation up to the customer's stated objective.

The accepted method of controlling changes to software is to use a change control board (CCB). Members of the change control board include IT managers, quality control, user liaisons from the business units, and internal auditors. A vice president, director, or senior manager presides as the chairperson. The purpose of the board is to review all change requests before determining whether authorization should be granted. This fulfills the desired separation of duties. Change control review must include input from business users. Every request should be weighed to determine business need, required scope, level of risk, and preparations necessary to prevent failure.

You can refer to the client organization's policies concerning change control. You should be able to determine whether separation of duties is properly enforced. Every meeting should include a complete tracking of current activities and the minutes of the meetings. Approval should be a formal process. The ultimate goal is to prevent business interruption. This is performed by following the principles of version control, configuration management, and testing. We discuss separation of duties with additional detail in Chapter 6, "IT Service Delivery."
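The review points described for the change control board can be sketched as a simple checklist over a change request record. Everything here is an assumption made for illustration: the `ChangeRequest` fields, the `review_findings` function, and the wording of the findings are hypothetical and do not represent any particular organization's policy or tool.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class ChangeRequest:
    requester: str
    business_need: str
    scope: str
    risk_level: str              # e.g. "low", "medium", "high"
    failure_preparations: str    # e.g. test plan and back-out plan
    business_user_reviewers: Set[str] = field(default_factory=set)
    approvers: Set[str] = field(default_factory=set)


def review_findings(request: ChangeRequest) -> List[str]:
    """Return a list of audit findings; an empty list means no issue was found."""
    findings = []
    # Each request should document need, scope, risk, and preparations.
    for name in ("business_need", "scope", "risk_level", "failure_preparations"):
        if not getattr(request, name).strip():
            findings.append(f"missing {name}")
    # Change control review must include input from business users.
    if not request.business_user_reviewers:
        findings.append("no business user input")
    # Approval should be a formal, recorded step.
    if not request.approvers:
        findings.append("no approver recorded")
    # Separation of duties: the requester must not approve their own change.
    if request.requester in request.approvers:
        findings.append("requester approved own change")
    return findings
```

A request where the requester also appears in the approver set would be flagged, which mirrors the separation-of-duties test you would apply when reading the board's meeting minutes.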

Let's move on to a discussion of the challenges in managing a software development project. In this section, you'll learn about the two main viewpoints for managing software development. You'll then take a closer look at the role of traditional project management in software development.

There are two opposing viewpoints on managing software development: evolutionary and revolutionary.

The traditional viewpoint promotes evolutionary development. The evolutionary view is that the effort for writing software code and creating prototypes is only a small portion of software development. The most significant work effort occurs during the planning and design phase. The evolutionary approach works on the premise that the number one source of failures is errors in planning and design. Evolutionary software may be released in incremental stages beginning with a selected module used in the architecture of the first release. Subsequent modules will be added to expand features and improve functionality. The program is not finished until all the increments are completed and assembled. The evolutionary development approach is designed to be integrated into traditional software life cycle management.

The opposing view is that a revolution is required for software development. The invention of advanced fourth-generation programming languages (4GL) empowers business users to develop their own software without the aid of a trained programmer. This approach is in stark contrast to the traditional view of developing specific requirements with detailed specifications before writing software. The revolutionary development approach is based on the premise that business users should be allowed to experiment in an effort to generate software programs for their specific needs. The end user holds all the power of success or failure under this approach. The right person might produce useful results; however, the level of risk is substantially greater. The revolutionary approach is difficult to manage because it does not fit into traditional management techniques. Lack of internal controls and failure to obtain objectives are major concerns in the revolutionary development approach.

Note

The analogy to revolutionary development would be to tell a person to go write their own software. A tiny number of individuals would have the competence necessary to be successful.

Evolutionary software development is managed through a combination of the System Development Life Cycle (SDLC) and traditional project management. We covered the basics of project management using the Project Management Institute (PMI) methodology in Chapter 1, "Secrets of a Successful IS Auditor." The SDLC methodology—which is discussed in detail in the following section—addresses the specific needs of software development, but still requires project management for the nondevelopment business management functions.

The advantages of traditional project management include the Program Evaluation Review Technique (PERT) combined with the Critical Path Method (CPM). You will need to be aware of the two most common models used to illustrate a software development life cycle: the waterfall model and the spiral model.

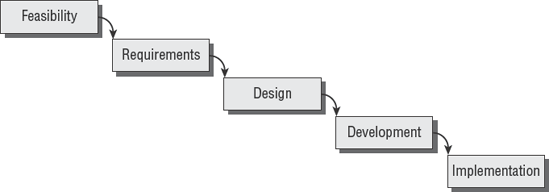

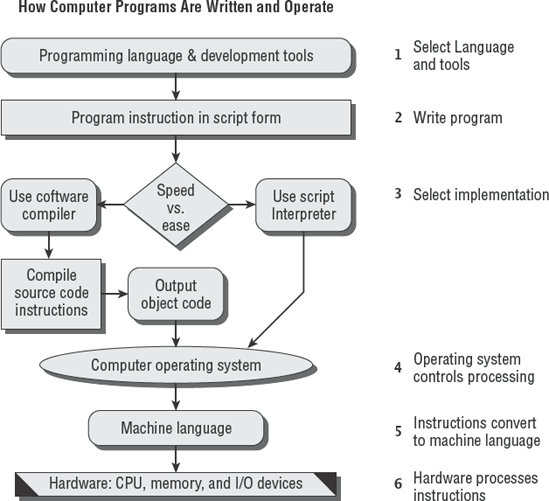

Evolutionary software development is an iterative process of requirements, prototypes, and improvement. In the 1970s, Barry Boehm used W.W. Royce's famous waterfall diagram to illustrate the software development life cycle. A simplified version of the waterfall model used by ISACA is shown in Figure 5.1.

Based on the SDLC phases, this simplified model assumes that development in each phase will be completed before moving into the next phase. That assumption is not very realistic in the real world. Changes are discovered that regularly require portions of software to undergo redevelopment.

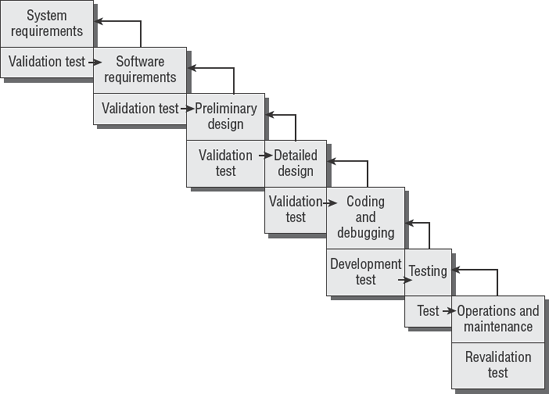

Boehm's version of the software life cycle model contained seven phases of development. Each of the original phases included validation testing with a backward loop returning to the previous phase. The backward loop provides for changes in requirements during development. Changes are cycled back to the appropriate phase and then regression-tested to ensure that the changes do not produce a negative consequence. Figure 5.2 shows Boehm's model as it appeared in 1975 from the Institute of Electrical and Electronics Engineers (IEEE).

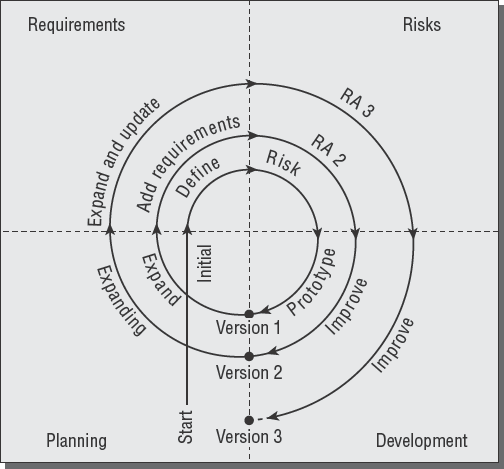

About 12 years later, Boehm developed the spiral model to demonstrate the software life cycle including evolutionary versions of software. The original waterfall model implied management of one version of software from start to finish. This new spiral model provided a simple illustration of the life cycle that software will take in the development of subsequent versions. Each version of software will repeat the cycle of the previous version while adding enhancements. Figure 5.3 shows the cycle of software versions in the spiral model.

Notice how the first version starts in the planning quadrant of the lower left and proceeds through requirements into risk analysis and then to software development. After the software is written, we have our first version of the program. The planning cycle then commences for the second version, following the same path through requirements, risk analysis, and development. The circular process will continue for as long as the program is maintained.

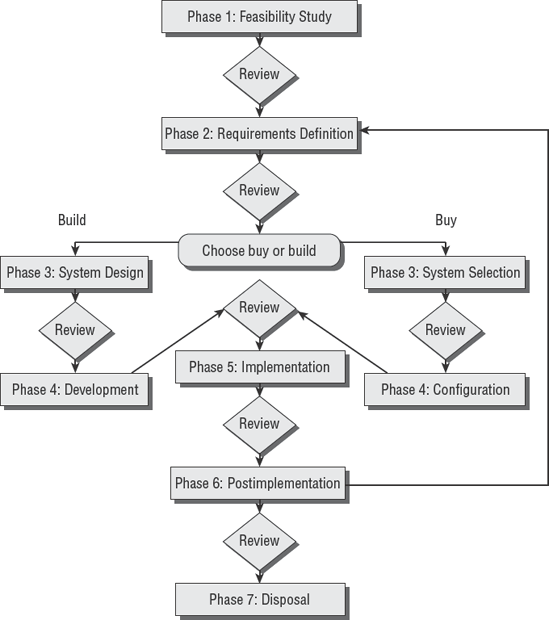

All computer software programs undergo a life cycle of transformation during the journey from inception to retirement. The System Development Life Cycle (SDLC) used by ISACA is designed as a general blueprint for the entire life cycle. A client organization may insert additional steps into its own methodology. This international SDLC model comprises seven unique phases with a formal review between each phase (see Figure 5.4).

Tip

Auditors will encounter SDLC models with only five or six phases. Upon investigation, it becomes obvious that someone took an inappropriate shortcut, skipping one of the seven phases. A smart auditor will pick up on this lack of understanding to investigate the organization further and discover any additional weakness created by this mistake. Failure to implement all seven phases indicates that a major control failure is present.

Let's start with a simple overview of SDLC:

- Phase 1: Feasibility Study

This phase focuses on determining the strategic benefits that the new system would generate. Benefits can be financial or operational. A cost estimate is compared to the anticipated payback schedule. Maturity of the business process and personnel capabilities should be factored into the decision. Three primary goals in phase 1 are as follows:

Define the objectives with supporting evidence. New policies might be created to demonstrate support for the stated objectives.

Perform preliminary risk assessment.

Agree upon an initial budget and expected return on investment (ROI).

- Phase 2: Requirements Definition

The steering committee creates a detailed definition of needs. We discussed this topic a few pages ago. The objective is to define inputs, outputs, current environment, and proposed interaction. The system user should participate in the discussion of requirements. In phase 2 the goals include the following:

- Phase 3: System Design or Selection

In phase 3, the objective is to plan a solution (strategy) using the objectives from phase 1 and specifications from phase 2. The decision to buy available software or build custom software is based on management's determination regarding fitness of use. The client moves in one of two possible directions based on whether the decision is to build or to buy:

- Build (Design)

It was decided that the best option is to build a custom software program. This decision is usually reached when a high degree of customization is required. Efforts focus on creating detailed specifications of internal system design. Program interfaces are identified. Database specifications are created by using entity-relationship diagrams (ERDs). Flowcharts are developed to document the business logic portion of design.

- Buy (Selection)

The decision is to buy a commercial software program. The RFP process is used to select the best vendor and product available based on the specification created in phase 2.

- Phase 4: Development or Configuration

The client continues down one of two possible directions based on the earlier decision of build versus buy:

- Build (Development)

The design specifications, ERD, and flowcharts from phase 3 will become the master plan for writing the software. Programmers are busy writing the individual lines of program code. Prototypes are built for functional testing. Software undergoes certification testing to ensure that everything will work as intended without any surprises or material defects. Component modules of software will be written, tested, and submitted for user approval. The first stages of user acceptance testing occur during this phase.

- Buy (Configuration)

Customization is typically limited to program configuration settings with a limited number of customized reports. The selection process for customization choices should be a formal project.

- Phase 5: Implementation

This phase is common to both buy and build decisions. The new software is installed using the proposed production configuration. Everyone from the support staff to the user is trained in the new system. Final user acceptance testing begins. The system undergoes a process of final certification and management accreditation prior to approval for production use:

Certification is a technical process of testing the finished design and the integrity of the chosen configuration.

Accreditation represents management's formal acceptance of the complete system as implemented.

Accreditation includes the environment, personnel, support documentation, configuration, and technology. With formal management accreditation, the approved implementation may now begin production use (go live).

- Phase 6: Postimplementation

After the system has been in production use, it is reviewed for its effectiveness to fulfill the original objectives. The implementation of internal controls is also reviewed. System deficiencies are identified. Goals in phase 6 include the following:

Compare performance metrics to the original objectives.

Analyze lessons learned.

Re-review the specifications and requirements annually.

Implement requests for new requirements, updates, or disposal.

The last step in phase 6 is to perform an ROI calculation comparing cost to the actual benefits received. Over time, the operating requirements will always change.

- Phase 7: Disposal

The final phase is the proper disposal of equipment and purging of data. Assets must undergo a formal review process to determine when the system can be shut down for dismantling. Legal requirements may prohibit the system from being completely shut down. In phase 7, the goals include the following:

Archive old data.

Mark retention requirements and specify destruction date (if any). Be aware that certain types of records may need to be retained forever.

Management signs a formal authorization for the disposal and formally accepts any resulting liability.

If approved for disposal, the system data must be archived, remnants purged from the hardware, and equipment assets disposed of in an acceptable manner. Nobody within the organization should profit from the system disposal.

Note

Be careful not to confuse the SDLC with the Capability Maturity Model (CMM). A system life cycle covers the aspects of selecting requirements, designing software, installation, operation, maintenance, and disposal. The CMM focuses on metrics of maturity. CMM can be used to describe the maturity of IT governance controls.

Now that you have a general understanding of the SDLC model, we will discuss the specific methods used in each phase. These methods are designed to accomplish the stated SDLC objectives.

The Feasibility Study phase begins with the initial concept of engineering. In this phase, an attempt is made to determine a clearly defined need and the strategic benefits of the proposed system. A business case is developed based on initial estimates of time, cost, and resources. To be successful, the feasibility study will combine traditional project management with software development cost estimates.

Let's start with the business side of feasibility. The following points should be discussed and debated, and the outcome agreed upon with appropriate documentation:

Perception of need. Describe the present situation while defining a specific need to be met.

Link the need to a specific mission objective within the long-term strategy.

State the desired outcome.

Identify specific indicators of success and indicators of failure.

Perform a preliminary risk assessment. The outcome should include a statement of the security classification necessary if the decision is to proceed. Will it be common knowledge, or will it involve business secrets, classified data, or the need for other special handling?

Make an assessment of alternatives (AoA). Determine formal and informal criteria in support of the decision for whichever option is selected as the best choice. Document all the answers.

Prepare a preliminary budget for investment review. Traditional techniques need to be combined with an expert estimation of software development costs.
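The comparison of cost against the anticipated payback schedule and expected ROI can be sketched with simple arithmetic. The figures below are invented for illustration, and `payback_period` and `simple_roi` are hypothetical helper names; a real investment review would normally also account for the time value of money.

```python
def payback_period(initial_cost, yearly_benefits):
    """Return the first year in which cumulative benefits cover the cost, or None."""
    cumulative = 0.0
    for year, benefit in enumerate(yearly_benefits, start=1):
        cumulative += benefit
        if cumulative >= initial_cost:
            return year
    return None  # the investment is never recovered within the forecast


def simple_roi(initial_cost, yearly_benefits):
    """Return net benefit divided by cost (undiscounted)."""
    return (sum(yearly_benefits) - initial_cost) / initial_cost


# Illustrative numbers only.
cost = 200_000
benefits = [50_000, 80_000, 90_000, 90_000]

print("Payback year:", payback_period(cost, benefits))
print("Simple ROI: {:.0%}".format(simple_roi(cost, benefits)))
```

With these sample figures the cost is recovered during the third year, and the four-year undiscounted return is 55 percent.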

The most common model for estimating software development cost is the Constructive Cost Model, which bases its estimate on either a count of source lines of program code or Function Point Analysis. Let's begin with the Constructive Cost Model.

The Constructive Cost Model (COCOMO) was developed by Boehm in 1981. This forecasting model provides a method for estimating the effort, schedule, and cost of developing a new software application. The original version is obsolete because of evolutionary changes in software development. COCOMO was replaced with COCOMO II in 1995.

The COCOMO II model provides a solid method for performing "what if" calculations that will show the effect of changes on the resources, schedule, staffing, and predicted cost. The COCOMO II model deals specifically with software programming activities but does not provide a definition of requirements. You must compile your requirements before you can use either COCOMO model. COCOMO II templates are available on the Internet to run in Microsoft Office Excel.

The COCOMO II model permits the use of three internal submodels for the estimations: Application Composition, Early Design, or Post Architecture. Within the three internal submodels, the estimator can base their forecast on a count of source lines of code or Function Point Analysis.
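To give a feel for how this family of models turns a size estimate into effort and schedule, here is a minimal sketch using the original 1981 Basic COCOMO equations. This is a deliberate simplification: COCOMO II adds scale factors and cost drivers that are not shown, and the function name and sample sizes are assumptions made for this example.

```python
# Basic COCOMO (1981) coefficients (a, b, c, d) for each project class.
# Effort   = a * KLOC ** b      (person-months)
# Schedule = c * Effort ** d    (calendar months)
BASIC_COCOMO = {
    "organic":       (2.4, 1.05, 2.5, 0.38),
    "semi-detached": (3.0, 1.12, 2.5, 0.35),
    "embedded":      (3.6, 1.20, 2.5, 0.32),
}


def basic_cocomo(kloc, mode="organic"):
    """Return (effort in person-months, schedule in months, average staff)."""
    a, b, c, d = BASIC_COCOMO[mode]
    effort = a * kloc ** b
    schedule = c * effort ** d
    return effort, schedule, effort / schedule


# "What if" comparison: how does the forecast move as the size estimate grows?
for size in (16, 32, 64):  # thousands of source lines of code (illustrative)
    effort, schedule, staff = basic_cocomo(size)
    print(f"{size} KLOC: {effort:.1f} person-months, "
          f"{schedule:.1f} months, about {staff:.1f} staff")
```

Because the exponent is greater than 1, doubling the size estimate more than doubles the forecast effort, which is the kind of effect a "what if" calculation is meant to expose.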

The Source Lines of Code (SLOC) method builds its estimate by counting the individual lines of program source code regardless of the embedded design quality. This method has been widely used for more than 40 years and is still used despite advances with 4GL programming tools. It is important for you to understand that counting lines of code will not measure efficiency. The most efficient program could have fewer lines of code, and less-efficient software could have more lines. A program with fewer lines of code will often run faster, although line count alone does not guarantee it. Smaller programs also have the advantage of being easier to debug.
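A minimal sketch of line counting shows why the measure says nothing about quality. The counting rule used here (skip blank lines and whole-line comments) is an assumption for this example; organizations define their own counting conventions, and `count_sloc` is a hypothetical helper.

```python
def count_sloc(source, comment_prefix="#"):
    """Count non-blank lines that are not whole-line comments."""
    count = 0
    for line in source.splitlines():
        stripped = line.strip()
        if stripped and not stripped.startswith(comment_prefix):
            count += 1
    return count


# Two programs that print the same result.
verbose = """
# add the numbers one at a time
total = 0
for n in [1, 2, 3]:
    total = total + n
print(total)
"""
compact = "print(sum([1, 2, 3]))\n"

print(count_sloc(verbose), count_sloc(compact))
```

Both programs do the same work, yet one counts four lines and the other counts one, so the count reflects size rather than design quality.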

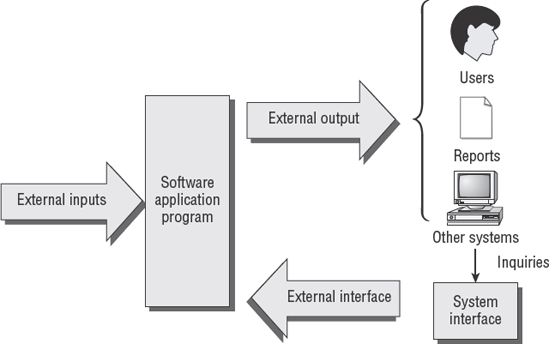

Function Point Analysis (FPA) is a structured method for classifying the required components of a software program. FPA was designed to overcome shortfalls in the SLOC method of counting lines in programs. The FPA method (see Figure 5.5) divides all program functions into five classes:

External input data from users and other applications

External output to users, reports, and other applications

External inquiries from users and other applications

Internal file structure defining where data is stored inside the database

External interface files defining how and where data can be logically accessed

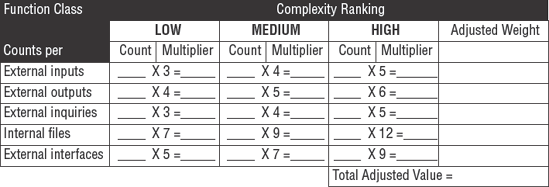

The five classes of data are assigned a complexity ranking of low, average, or high. The ranking is multiplied by a numerical factor and tallied to achieve an estimate of work required (see Figure 5.6).
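The tally described above can be sketched as follows. The weights are the commonly published IFPUG values for an unadjusted function point count; whether they match the factors printed in Figure 5.6 is an assumption, and the component counts are invented for illustration.

```python
# Commonly published IFPUG weights per function class and complexity ranking.
WEIGHTS = {
    "external_input":     {"low": 3, "average": 4,  "high": 6},
    "external_output":    {"low": 4, "average": 5,  "high": 7},
    "external_inquiry":   {"low": 3, "average": 4,  "high": 6},
    "internal_file":      {"low": 7, "average": 10, "high": 15},
    "external_interface": {"low": 5, "average": 7,  "high": 10},
}


def unadjusted_function_points(counts):
    """Multiply each component count by its weight and tally the results."""
    total = 0
    for (function_class, complexity), quantity in counts.items():
        total += quantity * WEIGHTS[function_class][complexity]
    return total


# Hypothetical component counts taken from an early design.
counts = {
    ("external_input", "low"): 6,
    ("external_input", "high"): 2,
    ("external_output", "average"): 5,
    ("external_inquiry", "low"): 4,
    ("internal_file", "average"): 3,
    ("external_interface", "high"): 1,
}

print(unadjusted_function_points(counts))
```

The hard part of FPA is classifying and ranking the components correctly; the arithmetic itself is simple once that judgment has been made.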

FPA is designed for an experienced and well-educated person who possesses a strong understanding of functional perspectives. Typically this is a senior-level programmer. An inexperienced person will get a false estimate. This model is intended for counting features that are specified in the early design. It will not create the initial definition of requirements. Progress can be monitored against the function point estimate to assess the level of completion. Changes can be recorded to monitor scope creep. Scope creep refers to the constant changes and additions that can occur during the project. Scope creep may indicate a lack of focus, poor communication, lack of discipline, or an attempt to distract the user from the project team's inability to deliver to the original project requirements.
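Monitoring progress and scope creep against the function point estimate might look like the sketch below. The baseline, the delivered total, and the change log are invented numbers, and `scope_report` is a hypothetical helper name.

```python
def scope_report(baseline_fp, delivered_fp, approved_changes):
    """Summarize completion and scope growth against the original estimate."""
    current_scope = baseline_fp + sum(approved_changes)
    return {
        "current_scope": current_scope,
        # Growth relative to the baseline indicates scope creep.
        "scope_growth_pct": 100.0 * (current_scope - baseline_fp) / baseline_fp,
        # Completion is measured against the current scope, not the baseline.
        "completion_pct": 100.0 * delivered_fp / current_scope,
    }


# Illustrative values: 107 function points estimated, 40 delivered so far,
# and three recorded changes (two additions and one removal).
report = scope_report(baseline_fp=107, delivered_fp=40, approved_changes=[8, 5, -3])
for name, value in report.items():
    print(f"{name}: {value:.1f}")
```

A steadily rising growth percentage is a signal to ask why the requirements keep changing.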

Note

You should acquire formal training and consult a Function Point Analysis training manual if you are ever asked to perform FPA.

The overall cost budget should include an analysis of the estimated personnel hours by function. The functions include clerical duties, administrative processes, analysis time, software development, equipment, testing, data conversion, training, implementation, and ongoing support.

Best practices in software development require a review meeting at the end of each phase to determine whether the project should continue to the next phase. The review is attended by an executive chairperson, project sponsor, project manager, and the suppliers of key deliverables.

The meeting is opened by the chairperson. The project manager provides an overview of the business case and presents the initial assessment reports. Presentations are made to convey the results of risk management analysis for the project. Project plans and the initial budget are presented for approval. Meeting attendees review the phase 1 plans to ensure that the skills and resource requirements are clearly understood.

At the end of the phase review meeting, the chairperson determines whether the review has passed or failed based on the evidence presented. In the real world, a third option may exist: deciding that the project should be placed on temporary hold and reassessed at a future date. All outstanding issues must be resolved before granting approval to pass the phase review.

Formal approval is evidenced by a signed project charter accompanied by a preliminary statement of work (SOW). The project manager is responsible for preparing the project plan documentation. The sponsor grants formal authority by physically signing the documents. If either of these documents is missing, chances are a dispute will evolve into a conflict that compromises the project. A signed charter and SOW are frequently used to force cooperation by other departments or to prevent interruptions by politically motivated outsiders.

In the Feasibility Study phase, you should review the documentation related to the initial needs analysis. As an auditor, you review the risk mitigation strategy. You ask whether an existing system could have provided an alternative solution. The organization's business case and cost justifications are verified to determine whether their chosen solution was a reasonable decision. You also verify that the project received formal management approval before proceeding into the next phase.

The Requirements Definition phase is a documentation process focused on discovering the proposed system's business requirements. Defining the requirements requires a broader approach than the initial feasibility study. It is necessary to develop a list of specific conditions in which the system is expected to operate. Criteria need to be developed to specify the input and output requirements along with the system boundaries. Let's review the basic steps that can help define the requirements:

Functional statement of need as described in phase 1.

Competitive market research. Has the auditee defined what the customer wants? What does the competition offer?

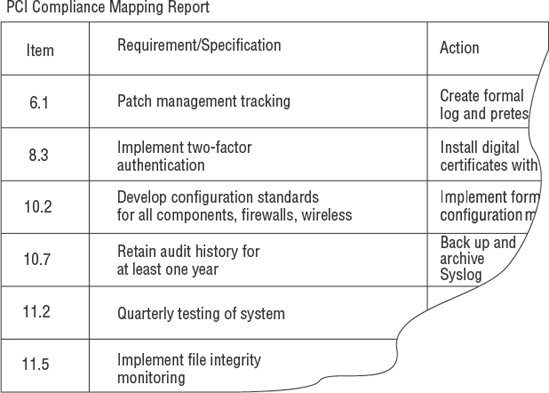

Identification of legal requirements for data security. Somebody needs to download each regulation and create a list of specific "shall" points referenced by page, paragraph, and line number. This will eliminate scope creep and quell attempts to subvert the project scope. Look at this tiny example relating to compliance under the Payment Card Industry (PCI) requirements.

Identification of the type of reports required for legal filings, both government and customer.

Formal selection of security controls. Ignorance of the law is a wonderful way to assure a speedy conviction. The same concept applies to apathy.

Software conversion study. How will the data be migrated to the new system? When will the switch to production occur?

Cost benefit analysis to justify selection of features or functionality. It's doubtful that the first version will have all the features that everyone imagined. However, security and controls should never be compromised.

Risk management plan. A trade-off always occurs in relation to cost, time, scope, and features. An example is to limit internal use to a physical area rather than to violate security by allowing remote access. Later versions may include the additional security controls necessary for safe remote access. The number of users may be initially restricted. Technical risks must be managed.

Analysis of impact with business cycles. How could the software be developed, tested, and later deployed without conflicting with the business cycle? Traditional project management plans are created to control the tasks.

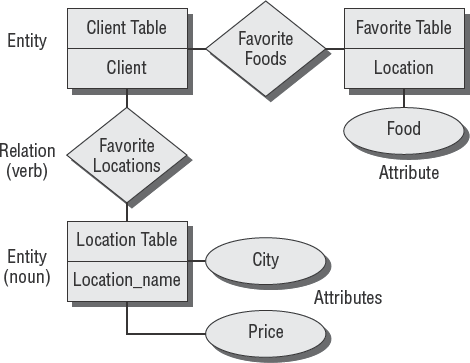

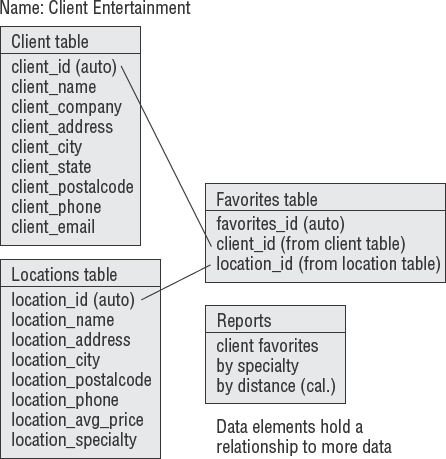

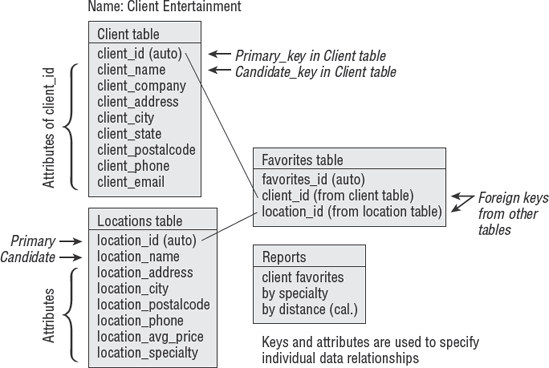

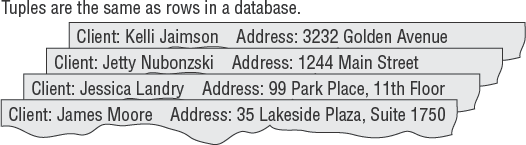

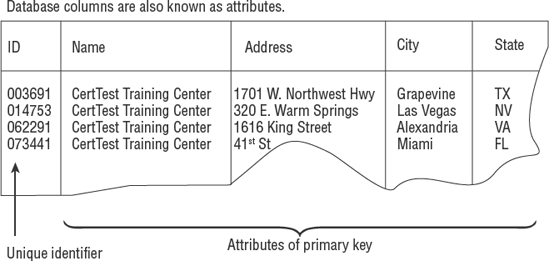

In this phase of gathering detailed requirements, the entity-relationship diagram (ERD) technique is often used. The ERD helps define high-level relationships corresponding to a person, data element, or concept that the organization is interested in implementing. ERDs contain two basic components: the entity and the relationship between entities.

An entity can be visualized as a database comprising reports, index cards, or anything that contains the data to be used in the design. Each entity has specific attributes that relate to another entity. Figure 5.7 shows the basic design of an ERD.

It is a common practice to focus first on defining the data that will be used in the program. This is because the data requirement is relatively stable. The purpose of the ERD exercise is to design the data dictionary. The data dictionary provides a standardized term of reference for each piece of data in the database. After the data dictionary is developed, it will be possible to design a database schema. The database schema represents an orderly structure of all data stored in the database.
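The progression from entities and relationships to a data dictionary and then a schema can be sketched with Python's built-in `sqlite3` module. The `customer` and `purchase_order` entities, their attributes, and the dictionary wording are hypothetical examples, not taken from Figure 5.7.

```python
import sqlite3

# Data dictionary: one standardized definition per piece of data.
data_dictionary = {
    "customer.customer_id": "Unique identifier assigned to each customer",
    "customer.name": "Legal name of the customer",
    "purchase_order.order_id": "Unique identifier assigned to each order",
    "purchase_order.customer_id": "Customer who placed the order",
    "purchase_order.order_date": "Date the order was received (ISO 8601 text)",
}

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # enforce the relationship between entities

# Schema derived from the entities: one customer places many purchase orders.
conn.executescript("""
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE purchase_order (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(customer_id),
    order_date  TEXT NOT NULL
);
""")

conn.execute("INSERT INTO customer VALUES (1, 'Acme Ltd')")
conn.execute("INSERT INTO purchase_order VALUES (10, 1, '2024-01-15')")

# An order that points at a nonexistent customer violates the relationship.
try:
    conn.execute("INSERT INTO purchase_order VALUES (11, 99, '2024-01-16')")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

print("Orphan order rejected:", orphan_rejected)
```

The foreign key is the schema-level expression of the relationship line drawn between two entities on the diagram.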

After the ERD is complete, it is time to begin construction of transformation procedures used to manipulate the data. The transformation procedures detail how data will be acquired and logically transformed by the application into usable information. Transformation procedures exceed the capability of fourth-generation (4GL) programming tools. It takes old-fashioned knowledge of the business process and the aid of a skilled software engineer (programmer) to refine an idea into usable logic. Business objectives should always win over the programmer's desire to show off the latest tools, or worse, to subvert a good idea that requires more effort.

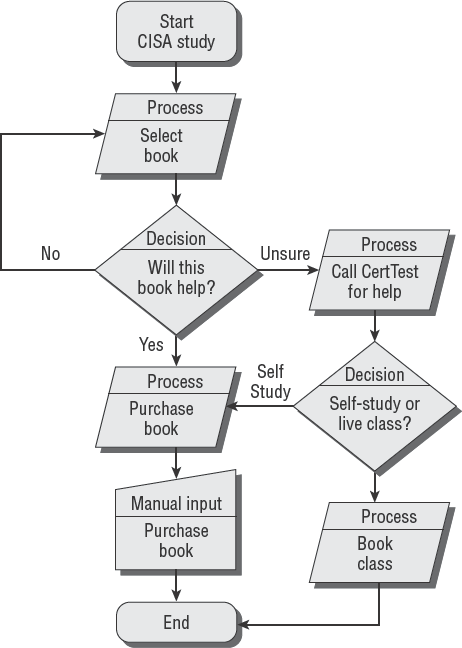

High-level flowcharts define portions of the required business logic. A low-level flowchart illustrates the details of the transformation process from beginning to end. The flowchart concept will map each program process, decision choice, and handling of the desired result. The flowchart is a true blueprint of the business logic used in the program. Figure 5.8 shows a simple program flowchart.

The ERD and flowcharts from phase 2 provide the foundation for the system design in SDLC phase 3. Security controls are added into the design requirement during phase 2. You should understand that internal controls are necessary in all software designs.

The internal controls for user account management functions are included in this phase to provide for separation of duties:

Preventative controls such as data encryption and unique user logins are specified.

Detective controls for audit trails and embedded audit modules are added.

Corrective controls for data integrity are included. Features that are not listed in the requirements phase will most likely be left out of the design.
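The three control types listed above can be illustrated with a very small sketch. It is not a security design: the function names, the in-memory storage, and the sample records are assumptions made for this example, and encryption is omitted entirely.

```python
import hashlib
from datetime import datetime, timezone

registered_logins = set()
audit_trail = []


def register_login(login):
    """Preventive control: refuse duplicate (shared) logins."""
    if login in registered_logins:
        raise ValueError(f"login already in use: {login}")
    registered_logins.add(login)


def record_event(login, action):
    """Detective control: record who did what, and when."""
    audit_trail.append({
        "when": datetime.now(timezone.utc).isoformat(),
        "user": login,
        "action": action,
    })


def checksum(data):
    """Return a SHA-256 digest used to detect altered data."""
    return hashlib.sha256(data).hexdigest()


def verified_copy(data, expected_checksum, backup):
    """Corrective control: fall back to the backup when integrity fails."""
    return data if checksum(data) == expected_checksum else backup


register_login("jsmith")
record_event("jsmith", "updated invoice 1001")

original = b"invoice 1001: 250.00"
expected = checksum(original)
tampered = b"invoice 1001: 950.00"
restored = verified_copy(tampered, expected, backup=original)
print(restored == original, len(audit_trail))
```

Each function corresponds to a requirement that must be written down in this phase; a control that is never specified is unlikely to appear in the design.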

It is important that the requirements are properly verified and supported by a genuine need. Each requirement should be traced back to a source document detailing the actions necessary for performance of work or legal compliance.
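Tracing each requirement to its source can be checked mechanically once the requirements are kept in a structured list. The requirement identifiers, wording, and source references below are hypothetical placeholders.

```python
# Each requirement records the document, page, and paragraph that justifies it.
requirements = [
    {"id": "REQ-001",
     "text": "The system shall log every change to customer records.",
     "source": "Internal records policy, p. 4, para. 2"},
    {"id": "REQ-002",
     "text": "The system shall produce a monthly sales report.",
     "source": "Finance department work procedure, p. 9, para. 1"},
    {"id": "REQ-003",
     "text": "The system shall support animated screen themes.",
     "source": ""},
]

# A requirement with no source document is not supported by a genuine need.
untraced = [r["id"] for r in requirements if not r["source"].strip()]
print("Requirements without a source document:", untraced)
```

Anything that appears in the untraced list should be either justified with a source document or removed from scope.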

A gap analysis is used to determine the difference between the current environment and the proposed system. Plans need to be created to address the deficiencies that are identified in the gap analysis. The deficiencies may include personnel, resources, equipment, or training.

At the end of phase 2, a phase 2 review meeting is held. This meeting is similar in purpose to the previous phase 1 review. This time, the review focuses on success criteria in the definition of software deliverables and includes a timeline forecast with date commitments. The proposed system users need to submit their final feedback assessment and comments before approval is granted to proceed into phase 3. The purpose of the phase 2 review meeting is to gain the authority to proceed with preliminary software design (phase 3). Once again, all outstanding issues need to be resolved before approval can be granted to proceed to the next phase.

You should obtain a list of detailed requirements. The accuracy of the requirements can be verified by a combination of desktop review of documentation and interviews with appropriate personnel. Conceptual ERD and flowchart diagrams should be reviewed to ensure that they address the needs of the user.

The Requirements Definition phase creates an output of detailed success factors to be incorporated into the acceptance test specifications. As an auditor, you will verify that the project plans and estimated costs have received proper management approval.

The System Design phase expands on the ERD and initial concept flowcharts. Users of the system provided a great deal of input during phase 2, which is then used in this phase for in-depth flowcharting of the logic for the entire system. The general system blueprint is decomposed into smaller program modules.

Internal software controls are included in the design to ensure a separation of duties within the application. The work breakdown structure is created for effective allocation of resources during development. Design and resource planning may be one of the longest phases in the planning cycle. Quality is designed into a system rather than inspected after the fact.

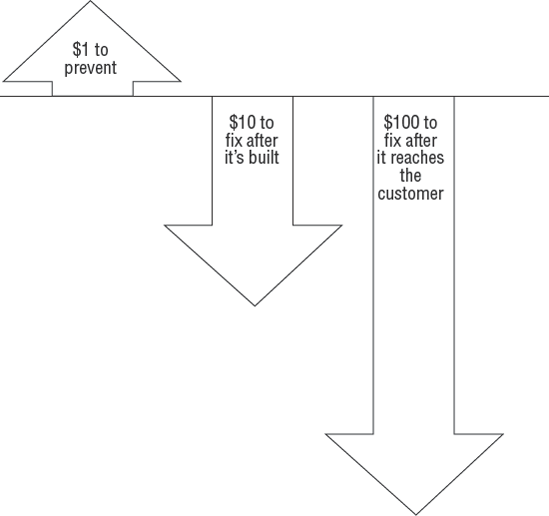

The 1-10-100 rule provides an excellent illustration of the costs of quality-related problems. Figure 5.9 shows that for every dollar spent preventing a design flaw in planning, design, and testing, the organization can avoid the additional cost of noncompliance failures:

$100 to correct a problem reaching the customer

$10 to correct a problem or mistake during production

$1 to prevent a problem
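The arithmetic behind the rule is easy to sketch. The defect counts are invented, and the multipliers are simply the 1, 10, and 100 figures from the list above applied as relative costs.

```python
# Relative cost of handling one problem, by the stage where it is dealt with.
COST_MULTIPLIER = {"prevention": 1, "production": 10, "customer": 100}


def cost_of_quality(problems_by_stage, unit_cost=1.0):
    """Total cost when each problem is handled at the given stage."""
    return sum(
        COST_MULTIPLIER[stage] * count * unit_cost
        for stage, count in problems_by_stage.items()
    )


# The same 20 problems, handled early versus handled late (illustrative counts).
handled_early = {"prevention": 20}
handled_late = {"production": 15, "customer": 5}

print(cost_of_quality(handled_early))  # 20 * 1
print(cost_of_quality(handled_late))   # 15 * 10 + 5 * 100
```

In this example the late path costs 650 units against 20 for prevention, which is the point the rule is meant to drive home.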

According to quality guru Philip Crosby, there are two primary components of quality—the extra expenses known as the price of nonconformance, and the savings in the price of conformance:

- Price of nonconformance (PONC)

This represents the added costs of not doing it right the first time. Think of this as the extra time and cost of rework or uncompensated warranty repair. It's not uncommon for the overall cost of the rework to exceed your original profits.

- Price of conformance (POC)

Avoiding the headache by doing it right the first time is known as the price of conformance (POC). Employee training and user training is a POC expense that conserves time and money by avoiding the added cost of nonconformance (PONC).

Quality failures will occur because of variation. Poor planning, flawed design, and poor management are the most frequent sources of failure. We can categorize quality failures as common or special in nature:

- Common quality failures

Common failures are the result of inherent variations inside the process, which are difficult to control. Let's consider writing the software programs for a robotic assembly line. Extreme heat affects the finish or adhesion of drying paint. New people may have been hired during production without enough training or experience. This type of common failure is inside the production process, which can affect the quality of a paint job. Management would be held responsible for fixing the problem because it was inside the process. It's management's responsibility to design a solution to prevent the problem.

- Special quality failures

Special failures occur when something changes outside the normal process. What if the weather was fine, but the paint finish came out wrong? Upon investigation, it was discovered that the problem resulted from using the wrong type of paint or an unapproved substitution. This special failure is something that the workers should be able to fix with their purchasing agent by working with their vendor. It's more of a supply issue than a process problem. Improvements in employee discipline to follow change control for substitution would prevent the defect.

The best way to create loyal customers is to exceed their expectations. It's important to deliver within the original scope to satisfy customer needs. Failing project managers may make the dangerous mistake of attempting to switch the deliverables by using a bait-and-switch technique referred to as gold plating. If you gold-plate doggy poop, it's nice and shiny, but still just fancy poop.

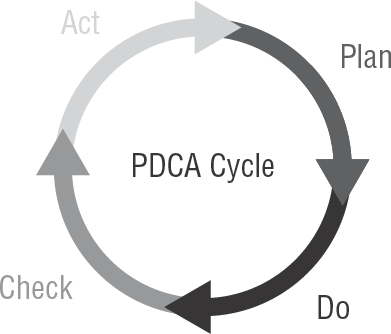

We discussed Deming's planning cycle in Chapter 2 as it related to audit planning. Figure 5.10 shows that the Plan-Do-Check-Act cycle also applies to software design.

Phase 3 is the best time for the software developer to work directly with the user. Most professional programmers encourage the progression of meetings necessary to refine the design before a single line of program code is written. A series of meetings is necessary to help convert user ideas and whims into a structured set of deliverables. Time should be spent on creating screen layouts, designing formats, and matching the users' desired workflow. Initial plans for developing a prototype in phase 4 are created during this System Design phase.

A significant output during the design phase is to identify how each of the software functions can be tested. Data derived during design provides the base criteria for behavior testing and inspection during phase 4 development testing. Data from user meetings provides a solid basis for user acceptance testing. The documentation created during the design phase initially serves as the road map for programmers during development. Later the phase 3 design documentation will provide a foundation for support manuals and training.

In certain situations, reverse engineering may be used to accelerate the creation of a working system design.

Note

The 2003 movie Paycheck starring Ben Affleck was themed around reverse engineering a competitor's product to jumpstart product development for Affleck's employer.

Reverse engineering is a touchy subject. A software decompiler will convert programs from machine language to a human-readable format. The majority of software license agreements prohibit the decompiling of software in an effort to protect the vendor's intellectual design secrets.

An existing system may loop back into phase 3 for the purpose of reengineering. The intention would be to update the software by reusing as many of the components as is feasible. Depending on the situation, reengineering may support major changes to upgrade the software for newer requirements.

At the end of the System Design phase, a software baseline is created from the design documents. The baseline incorporates all the agreed-upon features that will be implemented in the initial version of software (or next version in the case of reengineering). This baseline is used to gain approval for a design freeze. The design freeze is intended to lock out any additional changes that could lead to scope creep.

The phase 3 review meeting starts with a review of the detailed design for the proposed system. Engineering plans and project management plans are reviewed. Cost estimates are compared to the assumptions made in the business case. A comparison is made between the intended features and final design. Final system specifications, user interface, operational support plan, and test and verification plans are checked for completeness. Data from the risk analysis undergoes a review based on evidence. Approval is requested to proceed to the next phase. Once again, all outstanding issues must be resolved before proceeding to the next phase. Each of the stakeholders and sponsors should physically sign a formal approval of the design before allowing it to proceed into development. This administrative control enforces accountability for the final outcome.

You need to review the software baseline and design flowcharts. The design integrity of each data transaction should be verified. During the design review, you verify that processing and output controls are incorporated into the system. Input from the system's intended power users may provide insight into the effectiveness of the design.

It is important that the needs of the power users are implemented during the design phase. This may include special functions, screen layout, and report layout. You should have a particular interest in the logging of system transactions for traceability to a particular user. You look for evidence that a quality control process is in use during the software design activities. It is important to verify that formal management approval was granted to proceed to the next phase.
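To make the idea of traceability concrete, the following minimal sketch shows one way a transaction log could tie every entry to a user. The field names (`user_id`, `action`, `record_id`) and both functions are illustrative assumptions, not a prescribed design.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class TransactionLogEntry:
    """One audit-trail record. All field names are illustrative assumptions."""
    user_id: str
    action: str
    record_id: str
    timestamp: str


def log_transaction(log, user_id, action, record_id):
    """Append an entry; refuse any transaction that cannot be tied to a user."""
    if not user_id:
        raise ValueError("transaction must be traceable to a user")
    entry = TransactionLogEntry(
        user_id, action, record_id, datetime.now(timezone.utc).isoformat()
    )
    log.append(entry)
    return entry


def transactions_by_user(log, user_id):
    """Return every logged transaction performed by the given user."""
    return [entry for entry in log if entry.user_id == user_id]
```

During a design review, you would look for the equivalent of the `user_id` check: evidence that the design does not allow a transaction to be recorded anonymously.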

Warning

A smart auditor is wary of systems being allowed to proceed into development without formal approval. The purpose of IT governance is to enforce accountability and responsibility. Even the smallest, most insignificant system represents an investment of time, resources, and capital. None of these should be wasted, squandered, or misused.

Now the time has come to start writing actual software in the Development phase. This process is commonly referred to as coding a program. Design planning from previous phases serves as the blueprint for software coding. The systems analysts support programmers with ideas and observations. The bulk of the work is the responsibility of the programmer who is tasked with writing software code.

Standards and quality control are extremely important during the Development phase. A talented programmer can resolve minor discrepancies in the naming conventions, data dictionary, and program logic. Computer software programs will become highly convoluted unless the programmer imposes a well-organized structure during code writing. Unstructured software coding is referred to as spaghetti bowl programming, making reference to a disorganized tangle of instructions.

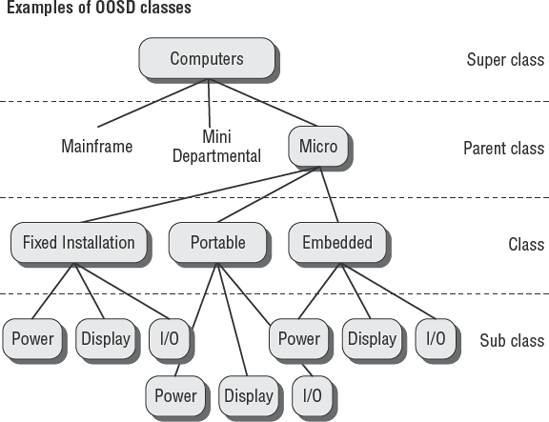

The preferred method of organizing software is to implement a top-down structure. Top-down structured programming divides the software design into distinct modules. If top-down program structures were diagrammed, the result would look like an inverted tree. Within the tree, individual program modules (or subroutines) each perform a unique function. Modules are logically chained together to form the finished software program. The modular design greatly improves maintainability of the finished program. Individual modules can be updated and replaced with relative ease. By comparison, an unstructured spaghetti bowl program would be a nightmare to modify. Modular design also permits the delegation of modules to different teams of programmers. Each module can be individually tested prior to final assembly of the finished program.
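The top-down structure described above can be sketched in a few lines. This is a toy payroll example under assumed requirements; the module names and the `name,hours,rate` input format are hypothetical.

```python
def read_timesheet(raw_lines):
    """Input module: parse 'name,hours,rate' lines into records."""
    records = []
    for line in raw_lines:
        name, hours, rate = line.split(",")
        records.append(
            {"name": name.strip(), "hours": float(hours), "rate": float(rate)}
        )
    return records


def compute_gross_pay(record):
    """Processing module: one unique function, testable on its own."""
    return round(record["hours"] * record["rate"], 2)


def format_report(records):
    """Output module: build one report line per employee."""
    return [f"{r['name']}: {compute_gross_pay(r):.2f}" for r in records]


def run_payroll(raw_lines):
    """Top of the inverted tree: chains the lower-level modules together."""
    return format_report(read_timesheet(raw_lines))
```

Because each module has a single job, `compute_gross_pay` could be rewritten or handed to a different team without touching the input or output modules.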

The software project needs to be managed to ensure adherence to the planned schedule. Scope creep with unforeseen changes can have a devastating impact on any project. It is common practice to allow up to a 10 percent variance in project cost and time estimates. In government projects, the variance is only 8 percent.

The development project will be required to undergo management oversight review if major changes occur in assumptions, requirements, or methodology. Management oversight review would also be warranted if the total program benefits or cost are anticipated to deviate by more than 8 percent for government or 10 percent in industry. The project schedule needs to be tightly managed to be successful. The change control process should be implemented to ensure that necessary changes are properly incorporated into the software development phase.
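The variance thresholds above reduce to simple arithmetic. The sketch below assumes variance is measured as the deviation from the planned figure divided by the planned figure; the function names and sample amounts are hypothetical.

```python
# Thresholds taken from the text: 8 percent for government, 10 percent for industry.
VARIANCE_LIMITS = {"government": 0.08, "industry": 0.10}


def variance(planned, actual):
    """Fractional deviation of the actual figure from the planned figure."""
    if planned <= 0:
        raise ValueError("planned value must be positive")
    return (actual - planned) / planned


def needs_oversight_review(planned, actual, sector):
    """True when the deviation, over or under, exceeds the sector's limit."""
    return abs(variance(planned, actual)) > VARIANCE_LIMITS[sector]


# A project planned at 100,000 that is now forecast at 109,000 (9 percent over)
print(needs_oversight_review(100_000, 109_000, "industry"))    # within 10 percent
print(needs_oversight_review(100_000, 109_000, "government"))  # exceeds 8 percent
```

The same 9 percent overrun is tolerated in an industry project but triggers a management oversight review in a government project.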

A version control system is required to track progress with all of the minor changes that naturally occur daily during development.

The effort to write program code depends on the programming language and development tool selected. Examples of languages include Common Business Oriented Language (COBOL), C language, Java, the Beginner's All-purpose Symbolic Instruction Code (BASIC), and Visual Basic. The choice of programming languages is often predetermined by the organization. If the last 20 years' worth of software was developed using COBOL, it might make sense to continue using COBOL.

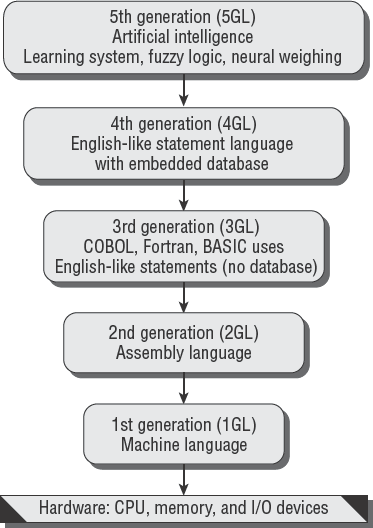

Computer programming languages have evolved dramatically over the past 50 years. The early programming languages were cryptic and cumbersome to write. This is where the term software coding originated. Each generation of software became easier for a human being to use. Let's walk through a quick overview of the five generations of computer programming languages:

- First-generation programming language

The first-generation computer programming language is machine language. Machine language is written as hardware instructions that are easily read by a computer but illegible to most human beings. First-generation programming is very time-consuming but was useful enough to give the computer industry a starting point. The first generation is also known as 1GL. In the early 1950s, 1GL programming was the standard.

- Second-generation programming language

The second generation of computer programming is known as assembly language, or 2GL. Programming in assembly language can be tedious but is a dramatic improvement over 1GL programming. In the late 1950s, 2GL programming was the standard.

- Third-generation programming language

During the 1960s, the third generation (3GL) of programming languages began to make an impact. The third generation uses English-like statements as commands within the program, for example, `if-then` and `goto`. Examples of third-generation programming languages include COBOL, Fortran, BASIC, and Visual Basic. Another example is the C programming language developed by Dennis Ritchie at Bell Labs. Most 3GL programs were used with manually written databases.

- Fourth-generation programming language

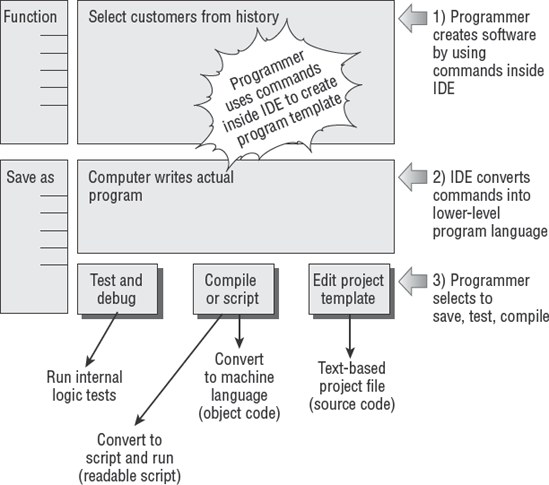

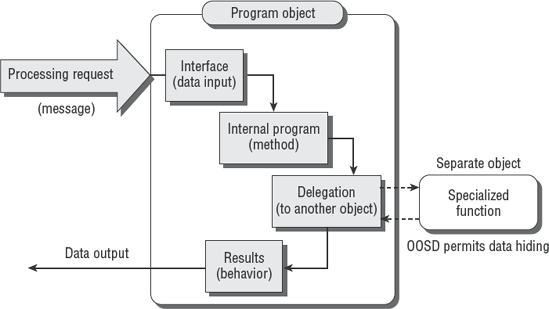

During the late 1970s, the fourth-generation programming languages (4GL) began to emerge. These include prewritten database utilities. This advancement allowed for rapid development due to an embedded database or database interface. The fourth-generation design is a true revolution in computer programming. The programmer creates a template of the software desired by selecting program actions within the development tool. This is referred to as pseudocoding or bytecoding. The development tool will convert bytecode into actual program code. An untrained user could lay out a program that merely formats reports on a screen and then let a software-generation utility write the software automatically. Figure 5.11 illustrates the general concept of pseudocoding inside a 4GL development tool.

A 4GL is designed to automate reports and the storage of data in a database. Unfortunately, it will not create the necessary business logic without the aid of a skilled programmer. An amateur using a 4GL can generate nice-looking form screens and databases, but the amateur's program will be no more than a series of buckets holding data files. The skilled programmer will be required to write transformation procedures (program logic) that turn those buckets of data into useful information. Examples of commercial 4GL development tools include Sybase's PowerBuilder, computer-aided software engineering (CASE) tools, and YesSoftware's CodeCharge Studio. 4GL is the current standard for software development.

- Fifth-generation programming language

The fifth-generation programming languages (5GLs) are designed for artificial-intelligence applications. The 5GL is characterized as a learning system that uses fuzzy logic or neural weighting algorithms to render a decision based on likelihood. Google searches on the Internet use a similar design to assess the relevance of search results.

Figure 5.12 shows the hierarchy of the different generations of programming languages.

After the programming language has been selected, the next step is to choose the development tool. There are still some programmers able to sit down and write code manually by using the knowledge contained in their heads. This type of old-school approach usually creates very efficient programs with the smallest number of program lines.

The majority of programmers use an advanced fourth-generation development tool to write the actual program instructions. This tool enables the programmer to focus on drawing higher-level logic while the tool creates the lower-level set of instructions similar to what a manual programmer would have written. Simply put, a computer program writes the computer program.

The better development tools provide an integrated environment of design, code creation, and debugging. This type of development tool is referred to as an integrated development environment (IDE).

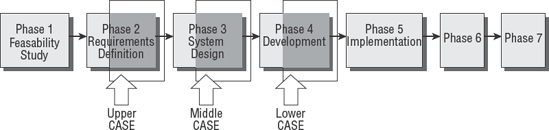

One of the best examples of an IDE is the commercial CASE tool software. You need to understand the basic principles behind CASE tools. CASE tools are divided into three functional categories that support SDLC phases 2, 3, and 4, respectively:

- Upper CASE tools

Business and application requirements can be documented by using upper CASE tools. This provides support for the SDLC phase 2 requirements definition. Upper CASE tools permit the creation of ERD relationships and logical flowcharts.

- Middle CASE tools

The middle CASE tools support detailed design from the SDLC phase 3. These tools aid the programmer in designing data objects, logical process flows, database structure, and screen and report layouts.

- Lower CASE tools

The lower CASE tools are software code generators that use information from upper and middle CASE to write the actual program code.

You can see the relationship of CASE tools to the SDLC phases in the following diagram.

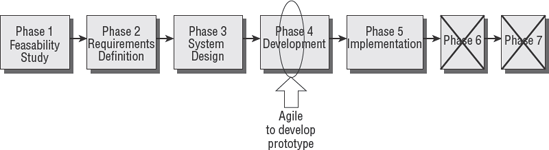
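The role of a lower CASE tool as a code generator can be illustrated with a toy sketch. It assumes the upper and middle CASE output is available as a simple entity description and that the target code is a SQL table definition; the `generate_create_table` function and the `customer` entity are hypothetical, and real CASE tools are far more elaborate.

```python
def generate_create_table(entity):
    """Turn a design-level entity description into SQL source text."""
    columns = ",\n".join(
        f"    {name} {sql_type}" for name, sql_type in entity["attributes"]
    )
    return f"CREATE TABLE {entity['name']} (\n{columns}\n);"


# Hypothetical entity, standing in for the output of earlier design work
customer_entity = {
    "name": "customer",
    "attributes": [
        ("customer_id", "INTEGER PRIMARY KEY"),
        ("full_name", "TEXT"),
    ],
}

print(generate_create_table(customer_entity))
```

The design information is entered once, and the generator writes the repetitive code from it. The business logic still has to come from a programmer.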

As a CISA, you should be aware of two alternative software development methods: Agile and Rapid Application Development (RAD). Each offers the opportunity to accelerate software creation during the Development phase. The client may want to use either of these methods in place of more-traditional development. Both offer distinct advantages for particular situations. Both also contain drawbacks that should be considered.

Agile uses a fourth-generation development environment to quickly develop prototypes within a specific time window. The Agile method uses time-box management techniques to force individual iterations of a prototype within a very short time span. Agile allows the programmer to just start writing a program without spending much time on preplanning documentation. The drawback of Agile is that it does not promote management of the requirements baseline. Agile does not enforce preplanning. Some programmers prefer Agile simply because they do not want to be involved in tedious planning exercises.

When properly combined with traditional planning techniques, Agile development can accelerate software creation. Agile is designed exclusively for use by small teams of talented programmers. Larger groups of programmers can be broken into smaller teams dedicated to individual program modules.

Note

The primary concept in Agile programming is to place greater reliance on the undocumented knowledge contained in a person's head. This is in direct opposition to capturing knowledge through project documentation.

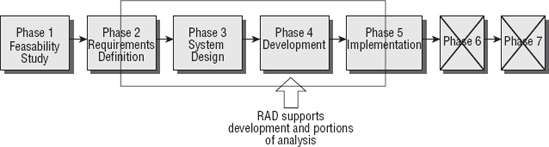

A newer integrated software development methodology is Rapid Application Development (RAD), which uses a fourth-generation programming language. RAD has been in existence for almost 20 years. It automates major portions of the software programmer's responsibilities within the SDLC.

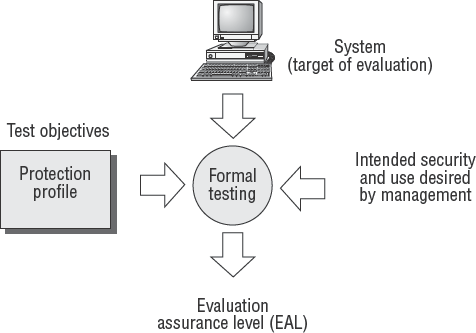

RAD supports the analysis portion of SDLC phase 2, phase 3, phase 4, and phase 5. Unfortunately, RAD does not support aspects of phase 1 or phase 2 that are necessary for the needs of a major enterprise business application. RAD is a powerful development tool when coupled with traditional project management in the SDLC.

During the Development phase, it is customary to create system prototypes. A prototype is a small-scale working system used to test assumptions. These assumptions may be about user requirements, program design, or the internal logic used in critical functions. Prototypes usually are inexpensive to build and are created over a few days or weeks. The principal advantage of a prototype is that it permits change to occur before the major development effort begins.

Prototypes seldom have any internal control mechanisms. Each prototype is created as an iterative process, and the lessons learned are used for the next version. A successful prototype will fulfill its mission objective and validate the program logic. All development efforts will focus on the production version of the program after the prototype has proven successful.

Warning

There is always a serious concern that a working prototype may be rushed into production before it is ready for a production environment. Internal controls are typically absent from prototypes or insufficient for production use.