Chapter 6. Essays about CMMI for Services

This chapter consists of essays written by invited contributing authors. All of these essays are related to CMMI for Services. Some of them are straightforward applications of CMMI-SVC to a particular field; for example, reporting a pilot use of the model in an IT organization. We also have an essay describing how CMMI-SVC could have been used and would have helped in a service environment that the author experienced in a prior job. Other essays are prospective applications to disciplines or domains that haven’t traditionally applied CMMI, such as education and legal services.

In addition, we sought some unusual treatments of CMMI-SVC: Could it be used to bolster corporate social responsibility? Can CMMI-SVC be used in development contexts? Can CMMI-SVC be used with Agile methods—or are services already agile? Finally, several essayists describe how to approach known challenges using CMMI-SVC: adding security concerns to an appraisal, using the practices of CMMI-SVC to capitalize on what existing research tells us about superior IT service, and reminding those who buy services to be responsible consumers, even when buying from users of CMMI-SVC.

In this way, we have sought to introduce ideas about using CMMI-SVC both for those who are new to CMMI and for those who are very experienced with prior CMMI models. These essays demonstrate the promise, applicability, and versatility of CMMI for Services.

Using CMMI-SVC in a DoD Organization

By Mike Phillips

Author Comments: Mike Phillips is the program manager for the entire CMMI Product Suite and an authorized CMMI instructor. Before his retirement from the U.S. Air Force, he managed large programs, including a large operation that could have benefited from CMMI-SVC, had it existed at the time. He looks back at this experience and describes how some of the practices in the Air Force unit he led would have met the goals in the CMMI-SVC model, and how other model content would have helped them had the model been available at that time.

In this essay, I highlight some of the ways in which CMMI for Services practices could have been used in a DoD organization and where their use might have solved challenges that we failed to handle successfully. After all, process improvement does not simply document what we do (although that documentation has value in helping new employees who must learn tasks quickly). It also illuminates ways to satisfy our customers and end users.

The organization that I describe in this essay is a U.S. Air Force unit based in Ohio that, at the time, provided a rich mix of services to its customers in a broad research and development domain. It was called a “Test Wing,” and it employed 1,800 military and civilian employees who supported customers across the entire Department of Defense. The goal of the Test Wing’s customers was to investigate the performance and usability of various potential military systems and subsystems. These systems and subsystems were typically avionic units such as radios and radar systems. They were being tested for potential use in aircraft in the Air Force’s operational fleet. However, other military services and agencies were also customers of this Test Wing.

While a wide range of examples could be included, this essay focuses on a segment of the Test Wing. This segment’s activities demonstrate the interaction between CMMI’s service-specific process areas and some of the process areas that are common to all three of the current CMMI constellations.

Organizational Elements and CMMI Terminology

A “Wing” in the Air Force is a large organization that often occupies an entire “mini city” and may employ thousands of people. The primary work of the Test Wing that I’m describing was delivering test services. The Wing, in turn, contained groups dedicated to the following:

• Operating the Test Wing’s collection of aircraft

• Maintaining those aircraft

• Planning and executing modifications to the aircraft to allow new systems to be installed and tested

• Planning the test missions and analyzing and reporting the resulting data to the customers who had requested the service

Each of these work units had a variety of organizational descriptors, but the term project was not used with any frequency. (As a test pilot in the Test Wing, I recall being told at times that I would be given a “special project,” which normally meant additional work to be conducted outside the normal flight rhythm. So, reactions of potential team members to “projects” were often cautious and somewhat negative.)

The CMMI-SVC model defines projects in relation to individual service agreements. For small organizations focused on delivering a single service successfully, that focus is vitally important to their success. However, as organizations grow larger in size and complexity, the term project often must be reinterpreted to make the best use of the concept. For example, in the Test Wing, each service agreement tended to be of relatively short duration. We called the activities associated with these agreements “missions.” These missions might involve a single aircraft and crew or a collection of aircraft and crews.

To support one memorable service agreement, we had to deploy three aircraft to three different countries to capture telemetry data. These data were captured from a launch vehicle with three successive boosting stages placing a satellite into orbit. To monitor the first stage of the launch from California, the aircraft had to take off in Mexico and fly out over the Pacific. The second stage boost required data collection in Polynesia. The final stage required that an aircraft take off from South Africa and fly toward the South Pole. This mission (or “project” using CMMI-SVC terminology) lasted only three months from the earliest plans to the completion of data gathering. Virtually every work unit of the Test Wing committed members to the successful fulfillment of the service agreement.

These missions had a familiar business rhythm. We had existing agreements to work from, but each new mission called for a specific approach to meet the customer’s needs (Service Delivery SP 2.1) and adequate preparation for the operation (Service Delivery SP 2.2). It was not uncommon for the “critical path” to include securing visas for the passports of a dozen crew members deploying to a distant country.

In this environment, a team spanning many work units needed to satisfy a particular service agreement. The value of fully understanding and executing the practices of Service Delivery cannot be overstated. These practices include the following:

• Analyzing existing agreements and service data

• Establishing the service agreement

• Establishing the service delivery approach

• Preparing for service system operations

• Establishing a request management system

• Receiving and processing service requests

• Operating the service system

• Maintaining the service system

Composing these service-agreement-specific teams for missions also involved the practices in CMMI-SVC that are found in Integrated Project Management (using the project’s defined process and coordinating and collaborating with relevant stakeholders). Each mission required careful analysis of the elements needed to satisfy the customer requesting our services, and then delivery of that service (in this case, around the world).

Making missions such as this happen required planning and managing the various work units involved. Organizational units (in our case, “squadrons”) needed an annual plan for how to successfully execute multiple service agreements with team members who needed to gain and maintain proficiency in unusual aircraft. So, at the squadron level, Project Planning, Project Monitoring and Control, and Capacity and Availability Management practices were used. This approach is similar to the approach used by other service provider organizations that need to describe services around a particular aspect of the service: sometimes a resource and sometimes a competence that helps to scope the types of services provided.

This approach leads to the definition of “project” in the CMMI-SVC glossary. The glossary entry states, “A project can be composed of projects.” The discussion of “project” in Chapter 3 illustrates this aspect of projects as well.

Although the CMMI-SVC model was not available to us at the time, every crew was expected to be adept at Incident Resolution and Prevention. The dynamic nature of test-related activities made for many opportunities to adjust mission plans to meet a changing situation. Aircraft would develop problems that required workarounds. Customer sites would find that support they expected to provide had encountered difficulties that drove changes in the execution of the service agreement. An anomaly on the test vehicle would require rapid change to the support structure as the test was under way. Because many of the test support types were similar, “lessons learned” led to changes in the configuration of our test data gathering and to the actual platforms used as part of the testing infrastructure for the collection of types of equipment being tested. Incident prevention was a strongly supported goal, much like we see in SG 3 of Incident Resolution and Prevention.

Two other process areas merit a brief discussion as well: Service Continuity and Service System Transition. If you have worked for the military, you may not be surprised that Service Continuity activities were regularly addressed. Various exercises tested our ability to quickly generate and practice the activities needed to recover from disasters, whether these were acts of war or acts of nature, such as hurricanes. We also had continuity plans and rehearsed how we would maintain critical operations with varying loss of equipment functionality.

Service System Transition, on the other hand, provided the Test Wing staff with a reflection of how technology influenced our service systems. We frequently found ourselves needing to add newer aircraft with differing capabilities and to retire older aircraft and other resources—all while supporting customer needs.

So, CMMI-SVC represents the kind of flexible model that maps well into a military research and test environment, as well as many of the other environments described. The practices often remind us of ways to further improve our methods to achieve the goal of effective, disciplined use of our critical processes.

What We Can Learn from High-Performing IT Organizations to Stop the Madness in IT Outsourcing

By Gene Kim and Kevin Behr

Author Comments: These two authors, who lead the IT Process Institute and work as C-level executives in commercial practice, have spent a decade researching the processes in IT that lead to high performance. Based on research in more than 1,500 IT organizations, they describe which processes make the difference between high performance and low or even medium performance. Their observations about the distinguishing characteristics of high performers are consistent with the goals and practices in CMMI for Services. They further note the potential downside of the pervasive trend of outsourcing IT services. Without adept and informed management of these outsourced IT contracts, both provider and client suffer harm. In response to this trend, they call on CMMI practitioners to use their experience and techniques to bring sanity to the world of IT outsourcing.

Introduction

Since 1999, a shared passion of the coauthors has been studying high-performing IT operations and information security organizations. To support our studies, in 2001 we cofounded the IT Process Institute, which was chartered to facilitate research, benchmarking, and development of prescriptive guidance.

In our journey, we studied high-performing IT organizations both qualitatively and quantitatively. We initially captured and codified the observed qualitative behaviors they had in common in the book The Visible Ops Handbook: Starting ITIL in Four Practical Steps.1

1. Behr, Kevin; Kim, Gene; and Spafford, George. The Visible Ops Handbook: Starting ITIL in Four Practical Steps. IT Process Institute, 2004. Introductory and ordering information is available at www.itpi.org. Since its publication, more than 120,000 copies have been sold.

Seeking a better understanding of the mechanics, practice, and measurements of the high performers, we used operations research techniques to understand what specific behaviors resulted in their remarkable performance. This work led to the largest empirical research project on how IT organizations work; we have benchmarked more than 1,500 IT organizations in six successive studies.

What we learned in that journey will likely be no surprise to CMMI-SVC practitioners. High-performing IT organizations invest in the right processes and controls, combine that investment with a management commitment to enforcing appropriate rigor in daily operations, and are rewarded with a four- to five-times advantage in productivity over their non-high-performing IT cohorts.

In the first section of this essay, we will briefly outline the key findings of our ten years of research, describing the differences between high- and low-performing IT organizations, both in their performance and in their controls.

In the second section, we will describe a disturbing problem that we have observed for nearly a decade around how outsourced IT services are acquired and managed, both by the client and by the outsourcer. We have observed a recurring cycle of problems that occur in many (if not most) IT outsourcing contracts, suggesting that an inherent flaw exists in how these agreements are solicited, bid upon, and then managed. We believe these problems are a root cause of why many IT outsourcing relationships fail and, when left unaddressed, will cause the next provider to fail as well.

We will conclude with a call to action to the IT process improvement, management, and vendor communities, which we believe can be both a vanguard and a vanquisher of many of these dysfunctions. We hope that you will take decisive action, either because you will benefit from fixing these problems or because it is already your job to fix them.

Our Ten-Year Study of High-Performing IT Organizations

From the outset, high-performing IT organizations were easy to spot. By 2001, we had identified 11 organizations that had similar outstanding performance characteristics. All of these organizations had the following attributes:

• High service levels, measured by high mean time between failures (MTBF) and low mean time to repair (MTTR)

• The earliest and most consistent integration of security controls into IT operational processes, measured by control location and security staff participation in the IT operations lifecycle

• The best posture of compliance, measured by the fewest repeat audit findings and the lowest staff count required to stay compliant

• High efficiencies, measured by high server-to-system-administrator ratios and low amounts of unplanned work (reactive work that is unexpectedly introduced during incidents, security breaches, audit preparation, etc.)
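The service-level attribute above is stated in terms of MTBF and MTTR. As a rough illustration, here is a minimal Python sketch that computes both for one service over a fixed observation window. It assumes non-overlapping outages that fall entirely inside the window and uses the common definitions MTBF = total uptime / number of failures and MTTR = total downtime / number of failures. The `Outage` type, the `mtbf_mttr` function, and the sample data are hypothetical, not taken from the ITPI studies.

```python
from dataclasses import dataclass


@dataclass
class Outage:
    """One service outage; times are hours from the start of the observation window."""
    start: float
    end: float


def mtbf_mttr(outages: list[Outage], window_hours: float) -> tuple[float, float]:
    """Return (MTBF, MTTR) in hours for a single service.

    Assumes outages do not overlap and lie entirely within the window.
    MTBF = total uptime / number of failures
    MTTR = total downtime / number of failures
    """
    if not outages:
        raise ValueError("no failures recorded; MTBF is undefined for this window")
    downtime = sum(o.end - o.start for o in outages)
    uptime = window_hours - downtime
    failures = len(outages)
    return uptime / failures, downtime / failures


# Hypothetical 30-day (720-hour) window with three outages of 2, 1, and 3 hours.
window = 720.0
outages = [Outage(100.0, 102.0), Outage(300.0, 301.0), Outage(650.0, 653.0)]
mtbf, mttr = mtbf_mttr(outages, window)
print(f"MTBF = {mtbf:.1f} h, MTTR = {mttr:.1f} h")
```

Raising MTBF means fewer failures per unit of operating time; lowering MTTR means each failure is resolved faster. The high performers described here move both numbers in the right direction at once.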

Common Culture among High Performers

As we studied these high performers, we found three common cultural characteristics.

A culture of change management: In each of the high-performing IT organizations, when the IT staff implements changes, the first step is not to log into the infrastructure. Instead, it is to go to a change advisory board and get authorization for the change. Surprisingly, this process is not viewed as bureaucratic, needlessly slowing things down, lowering productivity, and decreasing the quality of life. Instead, these organizations view change management as absolutely critical to maintaining their high performance.

A culture of causality: Each of the high-performing IT organizations has a common way to resolve service outages and impairments. They realize that 80 percent of their outages are due to changes and that 80 percent of their MTTR is spent trying to find what changed. Consequently, when working on problems, they look at changes first in the repair cycle. Evidence of this can be seen in the incident management systems of the high performers: Inside the incident record for an outage are all the scheduled and authorized changes for the affected assets, as well as the actual detected changes on the asset. By looking at this information, problem managers can recommend a fix to the problem more than 80 percent of the time, with a first fix rate exceeding 90 percent (i.e., 90 percent of the recommended fixes work the first time).

A culture of planned work and continuous improvement: In each of the high-performing IT organizations, there is a continual desire to find production variance early before it causes a production outage or an episode of unplanned work. The difference is analogous to paying attention to the low-fuel warning light on an automobile to avoid running out of gas on the highway. In the first case, the organization can fix the problem in a planned manner, without much urgency or disruption to other scheduled work. In the second case, the organization must fix the problem in a highly urgent way, often requiring an all-hands-on-deck situation (e.g., six staff members must drop everything they are doing and run down the highway with gas cans to refuel the stranded truck).

For longtime CMMI practitioners, these characteristics will sound familiar, and the supports for them available in the model will be obvious. For those IT practitioners new to CMMI, CMMI-SVC has not only the practices to support these cultural characteristics, but also the organizational supports and institutionalization practices that make it possible to embrace these characteristics and then make them stick.

The Performance Differences between High and Low Performers

In 2003, our goal was to confirm more systematically that there was an empirically observable link between certain IT procedures and controls and improvements in performance. In other words, we sought to show that an organization doesn’t need to implement all the processes and controls described in the various practice frameworks (ITIL for IT operations, CobiT or ISO 27001 for information security practitioners, etc.) to improve its performance.

The 2006 and 2007 ITPI IT Controls Performance Study was conducted to establish the link between controls and operational performance. The 2007 Change Configuration and Release Performance Study was conducted to determine which best practices in these areas drive performance improvement. The studies revealed that, in comparison with low-performing organizations, high-performing organizations enjoy the following effectiveness and efficiency advantages:

• Higher throughput of work

• Fourteen times more production changes

• One-half the change failure rate

• One-quarter the first fix failure rate

• Severity 1 (representing the highest level of urgency and impact) outages requiring one-tenth the time to fix

• One-half the amount of unplanned work and firefighting

• One-quarter of the frequency of emergency change requests

• Server-to-system-administrator ratios that are two to five times higher

• More projects completed with better performance to project due date

• Eight times more projects completed

• Six times more applications and IT services managed

These differences validate the Visible Ops hypothesis that IT controls and basic change and configuration practices improve IT operations effectiveness and efficiency. But the studies also determined that the same high performers have superior information security effectiveness as well. The 2007 IT controls study found that when high performers had security breaches, the following conditions were true:

The security breaches are far less likely to result in loss events (e.g., financial, reputational, and customer). High performers are half as likely as medium performers and one-fifth as likely as low performers to experience security breaches that result in loss.

The security breaches are far more likely to be detected using automated controls (as opposed to an external source, such as newspaper headlines or a customer). High performers automatically detect security breaches 15 percent more often than medium performers and twice as often as low performers.

Security access breaches are detected far more quickly. High performers have a mean time to detect measured in minutes, compared with hours for medium performers and days for low performers.

These organizations also had one-quarter the frequency of repeat audit findings.

Which Controls Really Matter

By 2006, by analyzing the link between controls and performance, we had established that not all controls are created equal. By that time, we had benchmarked about one thousand IT organizations and had concluded that, of all the practices outlined in the ITIL process and CobiT control frameworks, three questions could predict 60 percent of an organization’s performance: To what extent does the IT organization define, monitor, and enforce the following three types of behaviors?

• A standardized configuration strategy

• A culture of process discipline

• A systematic way of restricting privileged access to production systems

In ITIL, these three behaviors correspond to the release, controls, and resolution process areas, as we had posited early in our journey. In CMMI-SVC, these correspond to the Service System Transition, Service System Development, and Incident Resolution and Prevention process areas.

Throughout our journey, culminating in having benchmarked more than 1,500 IT organizations, we find that culture matters, and that certain processes and controls are required to ensure that those cultural values exist in daily operations.

Furthermore, ensuring that these controls are defined, monitored, and enforced can predict with astonishing accuracy IT operational, information security, and compliance performance.

Although behaviors prescribed by this guidance may be common sense, they are far from common practice.

What Goes Wrong in Too Many IT Outsourcing Programs

When organizations decide to outsource the management and ongoing operations of IT services, they should expect not only that the IT outsourcers will “manage their mess for less,” but also that those IT outsourcers are very effective and efficient. After all, as the logical argument goes, managing IT is their competitive core competency.

However, what we have found in our journey spanning more than ten years is that the opposite is often true. Often the organizations that have the greatest pressure to outsource services are also the organizations with the weakest management capabilities and the lowest amount of process and control maturity.

We postulate two distinct predictors of chronic low performance in IT.

IT operational failures: Technology in general provides business value only when it removes some sort of business obstacle. When business processes are automated, IT failures and outages cause business operations to halt, slowing or stopping the extraction of value from assets (e.g., revenue generation, sales order entry, bill of materials generation, etc.).

When these failures are unpredictable both in occurrence and in duration (as they often are), the business not only is significantly affected, but also loses trust in IT. This is evidenced by many business executives using IT as a two-letter word with four-letter connotations.

IT capital project failures: When IT staff members are consumed with unpredictable outages and firefighting, by definition this is often at the expense of planned activity (i.e., projects). Unplanned work and technical escalations due to outages often cause top management to “take the best and brightest staff members and put them on the problem, regardless of what they’re working on.” So, critical project resources are pulled into firefighting, instead of working on high-value projects and process improvement initiatives.

Managers will recognize that these critical resources are often unavailable, with little visibility into the many sources of urgent work. Dates are often missed for critical path tasks with devastating effects on project due dates.

From the business perspective, these two factors lead to the conclusion that IT can neither keep the existing lights on nor install the new lighting that the business needs (i.e., operate or maintain IT and complete IT projects). This conclusion is often the driver to outsource IT management.

However, there is an unstated risk: An IT management organization that cannot manage IT operations in-house may not be able to manage the outsourcing arrangement and governance when the moving parts are outsourced.

A Hypothetical Case Study

This case study reflects a commonly experienced syndrome while protecting the identities of the innocent. The cycle starts when the IT management function is put out to bid. These are often long-term and expensive contracts, frequently worth billions of dollars and extending over many years. And because IT outsourcing providers operate in a competitive and concentrated industry segment, cost is a significant factor.

Unfortunately, the structure of the cost model for many of the outsourcing bids is often fundamentally flawed. For instance, in a hypothetical five-year contract bid, positive cash flow for the outsourcer is jeopardized by year 2. Year 1 cost reduction goals are often accomplished by pay reductions and consolidating software licenses. After that, the outsourcer becomes very reliant on change fees and offering new services to cover up a growing gap between projected and actual expenditures.

By year 3, the outsourcer frequently has to reduce its head count, often letting go of its most expensive and experienced people. We know this because service levels start to decline: There is an ever-increasing number of unplanned outages, more Severity 1 outages become protracted multiday outages, and often the provider never successfully resolves the underlying or root cause.

This leads to more and more service level agreement (SLA) penalties, with money now being paid from the outsourcer to the client (a disturbing enough trend), but then something far more disturbing occurs: The backlog of client service requests continues to grow. If these projects could be completed by the outsourcer, some of the cash flow problems could be solved, but instead, the outsourcer is mired in reactive and unplanned work.

So, client projects never get completed, project dollars are never billed, and client satisfaction continues to drop. Furthermore, sufficient cycles for internal process improvement projects cannot be allocated, and service levels also keep dropping. Thus continues the downward spiral for the outsourcer. By year 4 and year 5, customer satisfaction is so low that it becomes almost inevitable that the client puts the contract out for rebid by other providers.

And so the cycle begins again. The cumulative cost to the client and outsourcer, as measured by human cost, harm to stakeholders, damage to competitive ability, and loss to shareholders, is immense.

An Effective System of IT Operations

We believe that it doesn’t really matter who is doing the work if an appropriate system for “doing IT operations” is not in place. The system starts with how IT contributes to the company’s strategy (What must we do to have success?). A clear understanding of what is necessary, the definition of the work to be done, and a detailed specification of quantity, quality, and time are critical to creating accountability and defect prevention. Only then can a system of controls be designed to protect the goals of the company and the output of those controls used to illuminate success or failure.

The lack of such a system is revealed by IT management’s focus on SLAs, which is classic after-the-fact management, rather than on a broader systemic approach that prevents issues by using leading indicator measurements. The cost of defects in this scenario follows the same pattern as in manufacturing, where building in quality early reduces expenses by orders of magnitude compared with picking up the wreckage, finding the flight recorders, and reassembling a crashed airplane to figure out what happened and who is at fault.

Call to Action

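In our research, we find a four- to five-times productivity difference between high and low performers. The following questions help reveal which of these groups an organization, or a prospective outsourcer, resembles.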

IT operations

• Are Severity 1 outages measured in minutes or hours versus days or weeks?

• What percentage of the organization’s fixes work the first time? Because they have a culture of causality, high performers average around 90 percent versus 50 percent for low performers.

• What percentage of changes fail, causing some sort of episode of unplanned work? Because they have a culture of change management, high performers average a change success rate of around 95 percent to 99 percent, versus around 80 percent for low performers.

Compliance

• What percentage of audit findings are repeat findings? In high performers, typically fewer than 5 percent of audit findings are not fixed within one year.

Security

• What percentage of security breaches are detected by an automated internal control? In high performers, security breaches are so quickly detected and corrected that they rarely impact customers.

Many of these measures can be gathered by observation rather than through substantive audits, and they are very accurate predictors of daily operations. Formulating a profile of an outsourcer’s daily operations can help guide the selection of an effective outsourcer, as well as ensure that the selected outsourcer remains effective.
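The questions in this call to action reduce to a handful of ratios. The following Python sketch shows one way to compute them from counts gathered over a review period. The `OperationsSnapshot` record, the `pct` and `indicators` functions, and the sample counts are all hypothetical (they are not data from the ITPI studies), and treating a change as successful when it did not trigger unplanned work is an assumption about how an organization would classify its changes.

```python
from dataclasses import dataclass


@dataclass
class OperationsSnapshot:
    """Hypothetical counts gathered over one review period."""
    fixes_attempted: int
    fixes_worked_first_time: int
    changes_made: int
    changes_causing_unplanned_work: int
    audit_findings: int
    repeat_audit_findings: int
    security_breaches: int
    breaches_detected_automatically: int


def pct(part: int, whole: int) -> float:
    """Percentage of part in whole; 0.0 when nothing was counted."""
    return 100.0 * part / whole if whole else 0.0


def indicators(s: OperationsSnapshot) -> dict[str, float]:
    """Compute the call-to-action ratios as percentages."""
    return {
        "first_fix_rate": pct(s.fixes_worked_first_time, s.fixes_attempted),
        # Assumption: a change "succeeds" if it caused no unplanned work.
        "change_success_rate": pct(
            s.changes_made - s.changes_causing_unplanned_work, s.changes_made
        ),
        "repeat_finding_rate": pct(s.repeat_audit_findings, s.audit_findings),
        "automated_detection_rate": pct(
            s.breaches_detected_automatically, s.security_breaches
        ),
    }


# Made-up counts for one quarter of operations.
snapshot = OperationsSnapshot(
    fixes_attempted=200, fixes_worked_first_time=180,
    changes_made=1000, changes_causing_unplanned_work=30,
    audit_findings=40, repeat_audit_findings=2,
    security_breaches=4, breaches_detected_automatically=3,
)
for name, value in indicators(snapshot).items():
    print(f"{name}: {value:.1f}%")
```

Returning 0.0 for an empty denominator keeps the sketch simple; a real profile might instead flag such a period as having no data.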

We can verify that an effective system of operations exists by finding evidence of the following:

• The company has stated its goals.

• IT has defined what it must do to help the company reach its goals.

• IT understands and has documented the work that needs to be done (e.g., projects and IT operations).

• IT has created detailed specifications with respect to the quantity of work, the quality required to meet the company’s goals, and the time needed to do this work.

• IT understands the capabilities needed to deliver the aforementioned work, including time horizons and other key management skills, and how the organization must be constructed to do the work.

• IT has created a process infrastructure to accomplish the work consistently in tandem with the organizational design.

• IT has created an appropriate system of controls to instrument the effectiveness of the execution of the system and its key components.

CMMI for Services includes practices for all of these and, with its associated appraisal method, the means to gather the evidence of these practices. Without an understanding of the preceding profile (and there is much more to consider), outsourcing success would be more akin to winning the lottery than to picking up a telephone in your office and getting a dial tone.

Plans Are Worthless

By Brad Nelson

Author Comments: From early in the development of the CMMI-SVC model, we began to hear concerns from users about the guidance or policy that might be imposed by government acquirers on providers bidding on service contracts. We sought the participation of experts such as Brad Nelson on our Advisory Group to ensure that we were considering these issues. In this essay, the author, who works on industrial policy for the Office of the Secretary of Defense, makes clear that it is appropriate capability that is sought, not the single digits of a maturity level rating. Further, he describes the ongoing responsibility of the government acquirer, rather than just the responsibility of the provider.

The Limits of the Maturity Level Number

The CMMI model developed by the CMMI Product Development Team (involving representatives from industry, government, and the Software Engineering Institute [SEI] of Carnegie Mellon) can be thought of as an advanced process planning tool. CMMI defines maturity levels ranging from level 1—ad hoc performance—through level 5—process and subprocess optimization.

Stated colloquially, a level 1 organization accomplishes goals without a well-developed organizational memory to ensure that good decisions leading to work accomplishment will be repeated. It’s sometimes said that a level 1 organization is dependent on individual heroes who react well to events and other people. On the other end of the spectrum, a level 5 organization has measurable processes that repeatedly guide good decisions, and those processes are continuously improved.

A level 5 organization has a breadth and depth of institutional capability and culture to reliably optimize workflows and isn’t dependent on any one person. It’s certainly reasonable to expect a much higher probability of project success from a level 5 organization than from a level 1 organization. The SEI certifies individuals to provide CMMI maturity level appraisals using the Standard CMMI Appraisal Method for Process Improvement (SCAMPI). Given this well-organized CMMI infrastructure, wouldn’t it make sense for a buyer to require minimum maturity level ratings for potential suppliers?

What’s wrong with Department of Defense (DoD) staff members who provide opinions that “DoD does not place significant emphasis on capability level or maturity level ratings...”?2 Don’t they get it?

2. CMMI Guidebook for Acquirers Team. Understanding and Leveraging a Supplier’s CMMI Efforts: A Guidebook for Acquirers (CMU/SEI-2007-TR-004). Pittsburgh: Software Engineering Institute, Carnegie Mellon University, March 2007; www.sei.cmu.edu/publications/documents/07.reports/07tr004.html.

Some understanding of this opinion might be gained through the examination of a quote from General Dwight D. Eisenhower, who said that “plans are worthless but planning is everything.” It’s quite a pithy saying, and a couple of things can be quickly inferred. The first is that given complex endeavors with a large number of variables, plans are at high risk of obsolescence. The second is that the familiarization and insights gained from the planning process are invaluable to making informed adaptations to changing conditions.

Perhaps oversimplifying a bit, a plan is a static artifact, and those who rely on static artifacts do so at their own peril. The real value of a plan is that its completion facilitates the detailed situational awareness of the planner and his or her ability to perform well in a changing environment. But don’t continuously improving level 5 organizations avoid the trap of static process planning?

While it may seem like “hairsplitting” to some, it’s critical to observe that the preceding discussion of the value of a plan applies to the CMMI rating, not to process plans. In fact, it’s the CMMI rating that’s the static artifact.

Viewed from a different angle, even though a high-maturity organization may have process plans that are adapted and optimized over time, the appraiser’s observation of that organization is static. Organizations and the people in them change over time. Furthermore, careful consideration must be given to the relationship between a SCAMPI appraiser’s observations of prior work by one group of people and the new work, possibly performed by different people. What was relevant yesterday may not be relevant today.

Considerations for the Responsible Buyer

When committing hard-earned money to a purchase, a buyer hopes for the best results. Going beyond hope, a smart buyer looks for indicators that a seller actually has the capability to achieve expected results. An experienced smart buyer establishes requirements that a capable supplier must meet to accomplish the desired results. Understanding what a CMMI rating is and isn’t helps the smart and experienced buyer evaluate CMMI ratings appropriately.

To estimate a supplier’s probability of success, a good buyer must first understand what is being purchased. A buyer who expects a supplier’s mature processes to improve the project’s probability of success must do more than hope that a past CMMI rating is applicable to the current project. Due diligence requires the buyer to analyze the relevance of a potential supplier’s processes to the buyer’s particular project.

When this analysis is done, it should carry substantially more weight than a CMMI rating. When it’s not done and significant weight is given to a rating, the buyer is effectively placing their due diligence in the hands of the appraiser. This is an obvious misuse of the rating. A CMMI rating can be one of many indicators of past performance, but a rating is a “rating” and not a “qualification.” CMMI appraisers do not qualify suppliers.

Remaining Engaged after Buying

Once a savvy buyer chooses a qualified supplier, the buyer’s real work begins. In the words of Admiral Hyman Rickover, father of the nuclear navy, “You get what you inspect, not what you expect.” This principle is applied every day by smart, experienced buyers when they plan and perform contract monitoring. It’s an axiom that performance monitoring should focus on results rather than process.

Nevertheless, intermediate results of many endeavors are ambiguous, and forward-looking process monitoring can reduce the ambiguity and point to future results. CMMI can provide valuable goals and benchmarks that help a supplier to develop mature processes leading to successful results, and SCAMPI ratings can provide constructive independent feedback to the process owner.

A rating, though, carries with it no binding obligation to apply processes at appraised levels to particular projects or endeavors. A rating is not a qualification, and it’s also not a license. Qualifications and licenses imply mutual responsibilities and oversight by the granting authority. Once a CMMI rating is granted, neither responsibilities nor oversight is associated with it.

A failure or inability to maintain process performance at a rated level carries no penalty. There is no mechanism for the SEI or a SCAMPI appraiser to reduce or revoke a rating for inadequate performance. After providing an appraisal rating at a point in time, the appraiser performs no further monitoring and certainly has no responsibility to a buyer.

The obligation to perform to any particular standard is between the buyer and the supplier. Essentially, if a buyer depends on a supplier’s CMMI rating for project performance and uses it as justification for reducing project oversight, it would be a misunderstanding and misuse of the CMMI rating as well as an abdication of their own responsibility.

Seeking Accomplishment as Well as Capability

It is intuitive that mature processes enable high-quality completion of complex tasks. CMMI provides an advanced framework for the self-examination necessary to develop those processes. The satisfaction of reaching a high level of capability represented by a CMMI rating is well justified. It is possible, though, to lose perspective and confuse capability with accomplishments.

It’s been said that astute hiring officials can detect job applicants’ resumes that are overweighted with documented capabilities and underweighted with documented accomplishments. This may be a good analogy for proposal evaluation teams. The familiar phrase “ticket punching” cynically captures some of this imbalance. Herein lies another reason why CMMI can be quite valuable, yet entirely inappropriate, as a contract requirement.

In the cold, hard, literal world of contracts and acquisition, buyers must be careful what they ask for. Buyers want suppliers that embrace minimum levels of process maturity, but “embrace” just doesn’t make for good contract language. Requiring CMMI ratings instead might at first seem a good substitute, but it can inadvertently encourage a cynical “ticket punching” approach to the qualification of potential suppliers. Because ratings themselves engender no accountability, there should be no expectation that ratings will improve project outcomes.

Pulling this thread a bit more, it’s not uncommon to hear requests for templates to develop the CMMI artifacts necessary for an appraisal. While templates could be useful to an organization embracing process maturity, they could also be misused by a more cynical organization to shortcut process maturation and produce only the artifacts necessary for a “ticket punching” rating. Appraisers are aware of this trap and do more than merely examine the standard artifacts. But as a practical matter, it can be difficult to distinguish between the minimum necessary to get the “ticket punch” and a more sincere effort to develop mature processes.

If an influential buyer such as the Department of Defense were to require CMMI ratings, it would likely lead to more CMMI ticket punching rather than CMMI embracing. The best that the DoD can do to accomplish the positive and avoid the negative has already been stated as “not placing significant emphasis on capability level or maturity level ratings, but rather promot[ing] CMMI as a tool for internal process improvement.”3

3. Ibid.

Summary

To summarize, it certainly appears reasonable to expect more mature organizations to have a higher probability of consistently achieving positive results than less mature organizations. CMMI ratings are external indicators that provide information, but they are only indicators. A savvy buyer must know what the indicators mean and what they don’t mean. Ultimately, accountability for project success is between the buyer and the seller. CMMI and SCAMPI ratings are well structured to provide important guidance and feedback to an organization on its process maturity, but responsible buyers must perform their own due diligence, appraisal of supplier capabilities, and monitoring of work in progress.

How to Appraise Security Using CMMI for Services

By Kieran Doyle

Author Comments: CMMI teams have regularly considered how to include security (and how much of it to include) in the various CMMI models. For the most part, coverage of security is modest and is treated as a class of requirement or risk. However, service organizations using CMMI-SVC, especially IT service organizations, would like to handle security concerns more fully. In this essay, Kieran Doyle, an instructor and certified high-maturity appraiser with Lamri, explains how security content from ISO 27001 can be included in a CMMI-SVC appraisal. His advice, based on a real case, is also useful for anyone using CMMI-SVC alongside another framework, such as ITIL or ISO standards.

“We would like to include security in the scope of our CMMI for Services appraisal.” With these words, the client lays down the gauntlet. The subtext is, “We are already using something that includes security. I like CMMI, but I want to continue covering everything that I am currently doing.” These were the instructions received from a recent change sponsor within a Scandinavian government organization. So, from where does the challenge emerge to include security in the appraisal scope? More importantly, is there a way to use the power of CMMI and SCAMPI to address all of the client’s needs?

Information security is already an intrinsic part of both the Information Technology Infrastructure Library (ITIL) and the international standard for IT service management, ISO 20000. Both are in common use in the IT industry. The ISO 20000 standard provides good guidance on what is needed to implement an appropriate IT service management system. ITIL provides guidance on how IT service management may be implemented.

So, there is at least an implied requirement with many organizations that CMMI-SVC should be able to deal with most if not all of the topics that ISO 20000 and ITIL already address; and by and large it does! Indeed, there are probably advantages to using all three frameworks as useful tools in your process and business improvement toolbox.

As I’ve mentioned, ISO 20000 provides guidance on the requirements for an IT service management system. But it does not have the evolutionary structure that CMMI contains. In other words, CMMI-SVC can provide a roadmap along which the process capability of the organization can evolve.

Similarly, ITIL is a useful library of how to go about implementing IT service processes. In ITIL Version 3, this sense of it being a library of good ideas has come even more to the fore. But it needs something like CMMI-SVC to structure why we are doing it, and to help select the most important elements in the library for the individual implementation.

Thus, ISO 20000, ITIL, and CMMI-SVC work extremely well together. But CMMI-SVC doesn’t cover IT security, and it is not unreasonable for organizations already using ISO 20000 or ITIL to ask a lead appraiser if they can include their security practices in the appraisal scope, particularly when conducting baseline and diagnostic appraisals. So, how can we realistically answer this question?

One answer is just to say, sorry, CMMI-SVC is not designed to cover this area, at least not yet. But there is a different tack we can use.

The SCAMPI approach is probably one of the most rigorous appraisal methods available. Although it is closely linked with CMMI, it can potentially be used with any reference framework to evaluate the processes of an organization. So, if we had a suitable reference framework, SCAMPI could readily cope with IT security.

What might such a reference framework look like? Well, we could look to ISO 27001 for ideas. This standard provides the requirements for setting up, then running and maintaining, the system that an organization needs for effective IT information security. How could we use this standard with the principles of both CMMI and SCAMPI?

One thing that CMMI, in all its shapes, is very good at helping organizations do is to institutionalize their processes. As longtime CMMI users know, the generic goals and practices are extremely effective at getting the right kind of management attention for setting up and keeping an infrastructure that supports the continued, effective operation of an organization’s processes. No matter what discipline we need to institutionalize, CMMI’s generic goals and practices would need to be in the mix somewhere.

So, in our appraisal of an IT security system, we would need to look for evidence of its institutionalization. The generic practices as they currently stand in CMMI-SVC can be used to look for evidence of planning the IT security processes, providing adequate resources and training for the support of the IT security system, and so on. But it turns out that ISO 27001 has some useful content in this respect as well.

Certain clauses in the ISO 27001 standard map very neatly to the CMMI generic practices. For example, consider the following:

• Clause 4.3, Documentation Requirement: contains aspects of policy (GP 2.1) and configuration control (GP 2.6).

• Clause 5, Management Responsibility: details further aspects of policy (GP 2.1) plus the provision of resources (GP 2.3), training (GP 2.5), and assigning responsibility (GP 2.4).

• Clause 6, Internal ISMS Audits: requires that the control activities, processes, and procedures of the IT security management system are checked for conformance to the standard and that they perform as expected (GP 2.9).

• Clause 7, Management Review of the IT Security Management System: necessitates that managers make sure that the system continues to operate suitably (GP 2.10). But additionally, this management check may take input from measurement and monitoring type activities (GP 2.8).

• Clause 8, IT Security Management System Improvement: looks to ensure continuous improvement of the system. Some of this section looks similar to GP 2.9, but there is also a flavor of GP 3.1 and GP 3.2.
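An appraisal team building the generic-practice part of a PII might want the clause-to-GP mapping above in a form it can query. The following is a minimal sketch, assuming Python and a plain dictionary; the clause labels are shortened, and the names `CLAUSE_TO_GPS` and `clauses_for_gp` are hypothetical, not part of SCAMPI, CMMI, or ISO 27001. It simply reproduces the mappings listed above and inverts them, so that each GP points to the clauses that may supply evidence for it.

```python
from collections import defaultdict

# Hypothetical encoding of the ISO 27001 clause -> CMMI GP mapping listed above.
# Illustrative only; not an official SEI or ISO artifact.
CLAUSE_TO_GPS = {
    "4.3 Documentation Requirement": ["GP 2.1", "GP 2.6"],
    "5 Management Responsibility": ["GP 2.1", "GP 2.3", "GP 2.4", "GP 2.5"],
    "6 Internal ISMS Audits": ["GP 2.9"],
    "7 Management Review": ["GP 2.8", "GP 2.10"],
    "8 ISMS Improvement": ["GP 2.9", "GP 3.1", "GP 3.2"],
}


def clauses_for_gp(mapping):
    """Invert the mapping so each GP lists the clauses that may supply evidence for it."""
    inverted = defaultdict(list)
    for clause, gps in mapping.items():
        for gp in gps:
            inverted[gp].append(clause)
    return dict(inverted)


def gp_sort_key(gp):
    """Sort GPs numerically, so that GP 2.10 follows GP 2.9."""
    return tuple(int(part) for part in gp.split()[1].split("."))


if __name__ == "__main__":
    by_gp = clauses_for_gp(CLAUSE_TO_GPS)
    for gp in sorted(by_gp, key=gp_sort_key):
        print(f"{gp}: {', '.join(by_gp[gp])}")
```

Running the script prints each GP with the clauses that point to it. GPs absent from the output, such as GP 2.2 and GP 2.7 with this particular list, are the ones the listed clauses do not cover, so an appraisal team would need to look for that evidence elsewhere.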

So, when collecting evidence in a Practice Implementation Indicator (PII) for IT security, as we do in SCAMPI, we could use these sections of ISO 27001 like GPs to guide our examination. But what about the material that is unique to setting up and running an IT security management system? In CMMI, this material would be contained in the specific goals and practices.

Looking once more to ISO 27001, we find material that is a suitable template for this type of content. The following clauses of the standard appear appropriate.

• Clause 4.2.1, Establish the Information Security Management System: This deals with scoping the security system; defining policies for it; defining an approach to identifying and evaluating security threats and how to deal with them; and obtaining management approval for the plans and mechanisms defined.

• Clause 4.2.2, Implement and Operate the Information Security Management System: This deals with formulating a plan to operate the security system to manage the level of threat and then implementing that plan.

• Clause 4.2.3, Monitor and Review the Information Security Management System: This uses the mechanisms of the system to monitor threats to information security. Where action is required to address a threat (e.g., a security breach), it is implemented and tracked to a satisfactory conclusion.

• Clause 4.2.4, Maintain and Improve the Information Security Management System: This uses the data from measuring and monitoring the system to implement corrections or improvements of the system.

Incorporating this content into the typical structure of a CMMI process area could provide a suitable framework for organizing the evidence in a SCAMPI-type appraisal of IT security management. CMMI process areas are often structured with one or more specific goals concerned with “Preparing for operating a process or system” and one or more specific goals dealing with “Implementing or providing the resultant system.” This structure maps closely onto the relevant ISO 27001 clauses.

We could structure our specific component of the PII to look for evidence in two main blocks.

1. Establishing and Maintaining an Information Security Management System: This involves activities guided by the principles in section 4.2.1 of the standard and would look something like this.

• Identify the scope and objectives for the information security management system.

• Identify the approach to identifying and assessing information security threats.

• Identify, analyze, and evaluate information security threats.

• Select options for treating information security threats relevant to the threat control objectives.

• Obtain commitment to the information security management system from all relevant stakeholders.

2. Providing Information Security Using the Agreed Information Security Management System: This would then involve implementing the system devised in block 1 and would look something like this.

• Implement and operate the agreed information security management system.

• Monitor and review the information security management system.

• Maintain and improve the information security management system.
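The two blocks above can also serve as a checklist during the prior data collection that SCAMPI relies on. The following is a minimal sketch, assuming Python and a simple dictionary-based PII; the practice names are abbreviated from the list above, and `PII_SPECIFIC`, `missing_evidence`, and the sample artifacts are hypothetical illustrations, not real appraisal data or an SEI tool. It reports, block by block, which practices still lack recorded evidence.

```python
# Hypothetical PII skeleton for the specific component of an IT security
# "process area", following the two blocks described above (names abbreviated).
PII_SPECIFIC = {
    "1. Establishing and Maintaining an ISMS": [
        "Identify the scope and objectives for the ISMS",
        "Identify the approach to identifying and assessing threats",
        "Identify, analyze, and evaluate threats",
        "Select options for treating threats",
        "Obtain commitment from relevant stakeholders",
    ],
    "2. Providing Information Security Using the Agreed ISMS": [
        "Implement and operate the agreed ISMS",
        "Monitor and review the ISMS",
        "Maintain and improve the ISMS",
    ],
}


def missing_evidence(pii, evidence):
    """Return, per block, the practices that have no recorded evidence.

    `evidence` maps a practice name to a list of artifact descriptions;
    an absent or empty list means the practice still needs evidence.
    Blocks with no gaps are omitted from the result.
    """
    gaps = {}
    for block, practices in pii.items():
        missing = [p for p in practices if not evidence.get(p)]
        if missing:
            gaps[block] = missing
    return gaps


if __name__ == "__main__":
    # Illustrative placeholder evidence only; real artifacts come from the organization.
    collected = {
        "Identify the scope and objectives for the ISMS": ["scope statement"],
        "Implement and operate the agreed ISMS": ["operating procedures"],
    }
    for block, practices in missing_evidence(PII_SPECIFIC, collected).items():
        print(block)
        for practice in practices:
            print(f"  - {practice}")
```

Keeping the blocks as plain data means the same gap check could, in principle, run over CMMI process areas and this non-CMMI area alike.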

Such an approach makes it easier to include this discipline in the scope of a SCAMPI appraisal and enables the prior data collection and subsequent verification that are the signature of SCAMPI appraisals. It means that a non-CMMI area can be included alongside CMMI process areas with ease.

The intention has been to give organizations a tool that they can use to address IT security within their CMMI-SVC improvement initiatives. Such a pragmatic inclusion will make it easier for organizations to take advantage of the evolutionary and inclusive approach to service management improvement offered by CMMI-SVC. This makes CMMI-SVC adoption a case of building on the good work done to date.

Public Education in an Age of Accountability

By Betsey Cox-Buteau

Author Comments: The field of education is among the service types in which we frequently hear people say that they hope to see application of CMMI for Services. (The other two areas most commonly mentioned are health care and finance.) Because good results in education are important to society, process champions are eager to see the benefits of process improvement that have been realized in other fields. Betsey Cox-Buteau is a school administrator and consultant, who works with struggling schools to improve test scores and professional development. Here she makes the case for how CMMI-SVC could make a difference in U.S. schools.

Orienting Education to Delivering Services

For generations, schools have been more about “teaching” than about “student learning.” It has often been said that the job of the teacher is to teach and the job of the student is to learn. Over time, some teachers have adopted this as an excuse to shrug off the need to change their pedagogy to produce higher levels of learning among all students. “I teach the curriculum—if the students don’t learn it, then it’s their fault.” This attitude can no longer be tolerated. The school’s professional community is responsible for seeing that all students learn and that they make adequate progress toward specific curriculum goals. It has now become the teacher’s responsibility not just to deliver curriculum instruction, but also to ensure that each student actually learns the curriculum and is able to apply it. Teachers must adjust their pedagogy to meet the needs of all of their students so that all can learn.

In other words, the staffs of our schools have been adjusting to the notion that their profession is becoming defined as a service industry. Hence, the CMMI for Services model comes to us at an opportune moment in the history of public education.

Along with curriculum accountability, schools are always experiencing pressure to hold down the cost of educating their students. Public schools are expected to provide the best education at the lowest cost per student. Even school districts in wealthier communities face this pressure, especially during difficult economic times. This dual expectation is a call for the most efficient education system possible, a system that provides society with well-prepared young workers and invested members of a democratic society, and a system that streamlines processes and delivers the greatest level of student learning for the investment. Where do these expectations leave school administration?

School administrators have very little formal training in business practices or process improvement methods, and they tend to inherit a predetermined system of service delivery practices when they walk into a new position. These inherited practices are often unclear; in most cases they are documented poorly or not at all. Incoming administrators rarely have the opportunity to learn from their predecessors before they leave the job. Often, new administrators find that they must learn the old system from the office secretary, if the secretary stayed when the old boss left. This practice leads to a fair amount of “reinventing the wheel” each time a new person comes into a school system to lead it. Nationwide, school superintendents turn over on average every five to six years.4 The figures for school principals are similar.

4. Glass, Thomas E.; Bjork, Lars; and Brunner, C. Cryss. “The Study of the American School Superintendency, 2000. A Look at the Superintendent of Education in the New Millennium.” American Association of School Administrators (Arlington, VA: 2000); www.eric.ed.gov:80/ERICWebPortal/custom/portlets/recordDetails/detailmini.jsp?_nfpb=true&_&ERICExtSearch_SearchValue_0=ED440475&ERICExtSearch_SearchType_0=no&accno=ED440475.

Federal Legislation Drives Change

On January 8, 2002, then-President George W. Bush signed into law the No Child Left Behind (NCLB) Act of 2001. This legislation was unprecedented in its federal reach into every public school in the nation. That reach shook the foundation of a very old system that has remained largely an ad hoc operation in the majority of America’s school districts for more than 100 years. For the first time, if public schools wished to continue to receive federal funds, they had to demonstrate progress toward a goal of 100 percent of children reading and performing math at their grade level by the year 2014.

To measure progress toward that goal, each state was required to formulate an annual assessment process. Under these new requirements, if schools did not show adequate yearly progress (AYP), they would fall subject to varying levels of consequences to be imposed by their state. The consequences included allowing students to attend other schools in their district, creating written school improvement plans, and replacing teachers and administrators. School boards and administrations had to reconsider the frequently used excuse that public schools were different from businesses because their products were human beings; therefore, business standards and processes did not apply to them. The NCLB Act forced all stakeholders to revisit the real product of a public school because of this new accountability. The product of the public school is now being defined as “student learning,” and it is measurable.

As the curriculum accountability required by the NCLB Act has become institutionalized over time through state testing, and the focus tightens on data analysis regarding levels of student learning, the concept that schools provide a service to students, parents, and society has become clearer to those who work in our schools and those who create educational policy.

A Service Agreement for Education

So, how can public education begin to take advantage of the CMMI for Services model? The education service system is already in place, and students have been graduating for a long, long time, so the system must be working, right? It may be “working” in some sense, but the same questions present themselves to each new administrator when he or she comes into a building or a central office. Is the present system working well? Is it efficient? Are the processes institutionalized, or will they fade away over time? It is time for school administrators, from the central office to the individual buildings, to examine the processes involved in their service system and determine their efficacy against their “service agreement” with taxpayers. The CMMI for Services model provides many process areas within which to accomplish these tasks.

For example, look at any school’s mission statement. It often begins something like this: “Our school is dedicated to serving the individual academic, social-emotional, and physical needs of each student, to create lifelong learners....” Are these goals real? Are they measurable? If so, do our public schools have in place a reliable method to measure the achievement of these goals? If schools begin to look at themselves as service providers, then their services must be defined in a measurable manner. When the goals are measurable, the processes to deliver those services can be measured and analyzed. Using the resulting data, the services can be redesigned, refined, and institutionalized.

Although the nomenclature is different, the “mission statement” is in essence a school’s “service agreement” with its customers. The CMMI for Services model offers guidance in the Service Delivery process area (SP 1.1 and 1.2) to address the process for developing a measurable service agreement. Once a measurable service agreement is in place, all the stakeholders will have a firm foundation on which to build the processes necessary to successfully meet the requirements of that agreement.

A Process for Consistent Curriculum Delivery

One of those requirements critical to the measurable service agreement is the delivery of curriculum. This is another area in which the CMMI for Services model could move a school system toward a streamlined, dynamic curriculum delivery system. Because of the accountability requirements of the NCLB Act, public schools are ripe for a well-documented structure for delivering their curriculum. Curriculum delivery is tied directly to student learning and ultimately to test scores. The challenge here is to be consistent in how we assess student learning so that progress (or the lack of it) can be recognized and understood.

Standardized processes should be in place for the review of learning assessment data, which in turn refocuses and improves the delivery of the curriculum. Ideally, curriculum review against assessment data should remain in place no matter who is in the front or central office. All too often, the superintendent or building principal leaves, and the curriculum review and improvement process breaks down. There are many reasons for this breakdown, not the least of which is personnel turnover. One of the many possible applications of the Process and Product Quality Assurance process area of the CMMI for Services model is curriculum development. Applied there, it would support a curriculum delivery review system and measure employee compliance with the agreed delivery.

A Process for Efficient Decision Making

Beyond the more obvious areas of application, such as curriculum delivery, other education practices can benefit from the discipline of the CMMI for Services model. For example, the decision making in school buildings can be as simple as a librarian choosing a book or as involved as a large committee choosing a curriculum program. Decisions can be made by a harried teacher attempting to avoid internal conflict or by a principal who wants to defuse the anger of a parent. Many decisions are made. Some decisions affect few, and some affect many. Some decisions may have long-lasting implications for a child’s life or for a parent’s or taxpayer’s trust in the system; and that trust (or lack of it) shows up in the voting booth each year. If the processes of each service delivery subsystem are mature and transparent, the provider and the customers will be satisfied and will trust each other. When applied to refine the decision-making process in a school district, the Decision Analysis and Resolution process area of the model can be instrumental in ensuring that personnel make the best decisions possible using a standard, approved, and institutionalized process; the result is the establishment of greater trust with the customer.

Providing for Sustained Improvement

In this era of rapid turnover in school administration, the institutionalization of effective processes is paramount to the continuity of providing high-quality service to all stakeholders. As superintendents and school principals move to other administrative positions and school districts, the use of the model’s generic goals and practices can provide a means of ensuring that these effective system processes will have lasting continuity. Each time a process area is established and refined, the generic goals and practices put a supporting framework behind it that helps keep the improvements “locked in” even after later personnel changes. Policies documenting the adopted processes, positions of responsibility named for the implementation and follow-through of these new procedures, and other generic practices will remain intact and in effect long after any one person moves through the organization.

Other Applications for the Model

These process areas are just a few of the many process areas of the CMMI for Services model that would be beneficial when applied to the public education system. Others would include

• Integrated Project Management, for the inclusion of stakeholders (i.e., parents and the community) in the education of their children

• Measurement and Analysis, to ensure the correct and continuous use of data to inform all aspects of the educational process

• Organizational Innovation and Deployment, to ensure an orderly and organized process for the piloting and adoption of new curricula, educational programs, delivery methods, and technologies

• Organizational Process Definition, to organize the standard processes in a school district ranging from purchasing supplies to curriculum review cycles

• Organizational Process Performance, to establish the use of data to measure the performance of the processes used in the district so that they can be continually improved

• Organizational Training, to establish the ongoing training of teachers and other staff members so that as they transition into and out of a building or position, continuity of the delivery of curriculum and other services is maintained

• Service System Transition, to establish a smooth transition from one way of doing things to another, while minimizing disruption to student learning

A Better Future for American Education

The present assessment and accountability measures placed on public schools by society’s expectations and by drivers such as the No Child Left Behind Act are not expected to be lifted or significantly eased, so these institutions of student learning can benefit from applying this model. If our schools are to deliver the highest rate of student learning using the least amount of taxpayer dollars, the CMMI for Services model is a natural and essential tool for accomplishing this goal.

National Government Services Uses CMMI-SVC and Builds on a History with CMMI-DEV

By Angela Marks and Bob Green

Author Comments: National Government Services works in the health care domain and is among the earliest adopters of CMMI-SVC. The organization has a long history of successful use of CMMI-DEV. Like many users of CMMI, the organization does some development and some service. In its case, the predominant mission is service, and the CMMI-SVC model is a good fit for its work. However, its service system is large and complex enough that the Service System Development (SSD) process area in CMMI-SVC is not sufficient for its purposes, so the organization uses the Engineering process areas in CMMI-DEV instead. This is one of the tailorings that the builders of CMMI-SVC envisioned to accommodate the full range of CMMI-SVC users, and it is the reason that SSD is an “addition” (that is, optional). Working with SEI Partner Gary Norausky, National Government Services has field-tested the use of CMMI-SVC with added Engineering process areas from CMMI-DEV, with good results.

Introduction

How does a mid-size CMMI-DEV maturity level 3 organization leverage its experience, knowledge, extant library of processes, and existing structures in a successful implementation of CMMI-SVC? This was the question that the IT Governance Team at National Government Services, the nation’s largest Medicare contractor, faced as it created and evaluated a strategic plan for its participation in the January 2009 four-day CMMI-SVC pilot evaluation.

Taking advantage of the nonprescriptive nature of the CMMI models, National Government Services used a unique approach that paid off, especially in terms of a soft return on investment: it carefully integrated CMMI-DEV and CMMI-SVC. That strategy forms the context of this case study.

Overview and Strategy

During its strategy development sessions, the IT Governance Team at National Government Services, working with Norausky Process Solutions, created a plan to leverage its strong development background in implementing CMMI-SVC, complementing what was already in place and refining existing processes.

Rather than viewing CMMI-SVC as another separate and distinct model, National Government Services set out to merge CMMI-DEV and CMMI-SVC, with the goal of augmenting its current library of process assets and using this blended approach to further institutionalize its self-mandate to strive for continuous process improvement in the way it does business. In short, National Government Services was successful in getting the best out of both constellations. A reasonable question, then, is how National Government Services could be confident its strategic approach would work. Tailoring best sums up the answer.

Model Tailoring

A critical element of the IT Governance Team’s strategy was incorporating the best of both constellations, CMMI-DEV and CMMI-SVC. National Government Services posits that tailoring is the best strategic approach for augmenting IT service delivery without forcing an either/or choice between the two models. For example, using a tailoring matrix approach, the IT Governance Team easily established Incident Resolution and Prevention (IRP) and Service Continuity (SCON) practices from existing practices that were already deeply embedded in the company’s disaster recovery processes and culture.

National Government Services’ study of the CMMI-SVC model made evident that tailoring is a critical component of Strategic Service Management (STSM), whose purpose is to establish and maintain standard services in concert with strategic needs and plans. Because National Government Services is a CMMI-DEV maturity level 3 organization, it focused on tailoring as a way to fit pieces of the CMMI-DEV and CMMI-SVC models together.

Pieces of Two Puzzles

By way of analogy, National Government Services initially viewed CMMI-DEV and CMMI-SVC as two boxes of puzzle pieces that, in the end, assemble into a single picture. National Government Services had already assembled most of the pieces from the CMMI-DEV box and was reaching deep into the CMMI-SVC box to find the pieces that would complete the picture.

As National Government Services explored CMMI-SVC, it began to recognize that CMMI-SVC adds dimension to, and completes, the delivery methods of its suite of processes and products. By tailoring existing processes, National Government Services was able to achieve a full-spectrum means of doing business and meeting customer needs.

Results of the Strategy

Critical to National Government Services’ strategic approach was the IT Governance Team, which serves as the Engineering Process Group. The IT Governance Team realized that CMMI-DEV covers only certain areas of IT; augmenting CMMI-DEV with CMMI-SVC enabled the team to introduce standardized process improvement opportunities into nondevelopment areas of IT such as Systems Security, Networking/Infrastructure, and Systems Service Desks. Additionally, National Government Services has been implementing ITIL and has found that CMMI-SVC complements ITIL.

A key aspect of National Government Services’ model-blending strategy was its discovery of the CMMI-DEV practices that were used to form the Service System Development (SSD) process area. The purpose of SSD is to analyze, design, develop, integrate, verify, and validate service systems, including service system components, to satisfy existing or anticipated service agreements. That purpose is satisfied by strongly developed practices in CMMI-DEV process areas such as Requirements Development (RD), Technical Solution (TS), Product Integration (PI), Verification (VER), and Validation (VAL).

Because SSD provides an alternative means of achieving the practices of the “Engineering” process areas of the CMMI-DEV constellation, and because its robust development processes already provided full coverage of this area, National Government Services determined that SSD was not necessary. Using Decision Analysis and Resolution (DAR), a process area in both models, National Government Services determined that, because its projects tend to be large, it was easier to establish the necessary behaviors under the CMMI-DEV process areas. For its portfolio of projects, National Government Services instead uses the SSD process area as guidance for tailoring its CMMI-DEV process areas to augment service delivery of its large-scale, complex service systems.

In this way, National Government Services builds on its success with CMMI-DEV while applying CMMI-SVC to the service delivery work that is its core mission. Such an approach illustrates why CMMI was created and how it is designed to work. The models are part of an integrated framework that enables organizations such as National Government Services to gain an advantage from what they have learned using one CMMI model when beginning implementation of another.

Treating Systems Engineering as a Service

By Suzanne Garcia-Miller

Author Comments: Somewhat to the surprise of the authors of CMMI for Services, we have seen keen interest from developers in applying the model to their work. In this essay, Suzanne Garcia-Miller, a noted CMMI author and an applied researcher working on systems of systems and other dynamic system types, writes about the value to be gained by treating development, and systems engineering in particular, as a service.

Many of us who have worked in and around CMMs for a long time are accustomed to applying them to a system development lifecycle. Sometimes, when applying the practices to settings that provide valuable services to others (e.g., independent verification and validation agents for U.S. government contracts, or providers of data-mining services for market research firms in the commercial sector), we provided an ad hoc service to help an organization in that setting to tailor its implementation of the CMM in use (usually CMMI-DEV in recent years). We did this tailoring by

• Interpreting definitions in different ways (e.g., looking at a project as the time span associated with a service level agreement)

• Providing ideas for different classes of work products not typically discussed in development-focused models (e.g., the service level agreement as a key element of a project plan)

• Interpreting the scope of one or more process areas differently than originally described (e.g., the scope of the Requirements Management process area being interpreted as the requirements associated with a service agreement [usually a contract])

With the publication of CMMI for Services, V1.2, organizations whose primary business focus is service delivery now have an opportunity to use good practices from their business context to improve the capability of their delivery and support processes. And an emerging discipline called “service systems engineering” explicitly focuses on applying the tools, techniques, and thinking style of systems engineering to services of many types. (A Google search in May 2009 on “service systems engineering” as a phrase yielded more than 7,000 hits.)

When we were preparing to teach this new CMMI-SVC constellation, especially in the early days of its adoption, many of the students attending pilot course offerings were much more experienced with product development using CMMI-DEV. Several of us were struck by the difficulty more than a few experienced people had when trying to “shift gears” into a service mindset. To help them (and myself, since I sometimes inadvertently slipped into “tangible product mode”), I started thinking about the service aspects of pretty much everything I came into contact with: service aspects of research, service aspects of teaching CMMI courses, service aspects of systems-of-systems governance, and, inevitably, service aspects of systems engineering.

Thinking back to conversations as early as the development of the initial Systems Engineering CMM (1993–1995), I remember our struggles to capture what was different about systems engineering as a discipline. In trying to capture important practices, we kept running into the problem of expressing “practices” associated with

• The holistic viewpoint that a good systems engineer brings to a project

• The conflict resolution activities (among both technical and organizational elements) that are a constant element of a systems engineer’s role

• The myriad coordination aspects of a systems engineer’s daily existence

• Being the ongoing keeper of the connection between the technical and the business or mission vision that was the source of the system being developed

• Switching advocacy roles depending on who the systems engineer is talking to—being the user’s advocate when the user isn’t in the room; being the project manager’s advocate when his or her viewpoint is under-represented in a tradeoff study

In hindsight, I can easily see these as difficulties in trying to fit the service of systems engineering into a model focused on the product development to which systems engineering is applied. I also remember a paper that Sarah Sheard wrote about the 12 roles of systems engineering [Sheard 1996]. Of those 12 roles, at least three (Customer Interface, Glue among Subsystems, and Coordinator) were heavily focused on services, that is, intangible and nonstorable products (CMMI-SVC 1.2). When I searched the Web for references supporting this view of systems engineering as a service, I found little that addressed the service aspect of systems engineering within the typical context of complex system development.

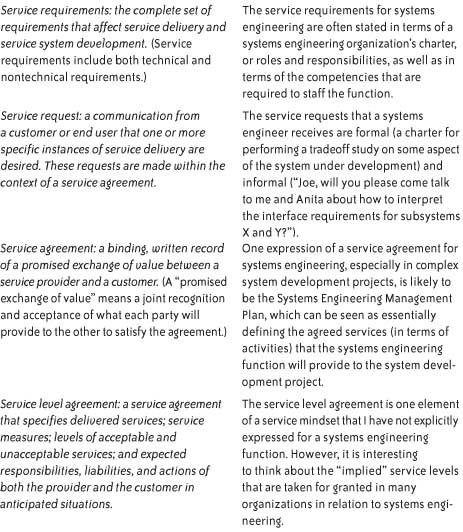

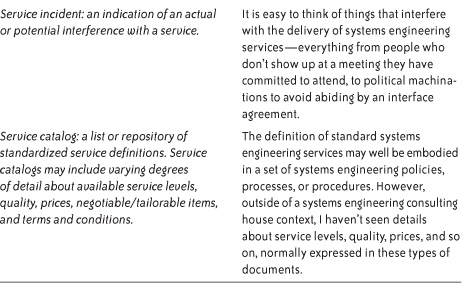

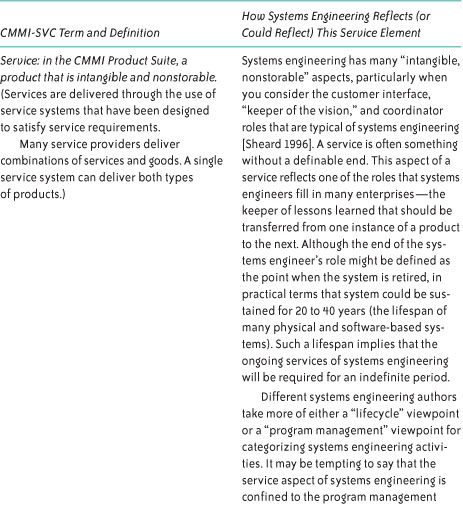

To satisfy myself that I wasn’t taking my services obsession too far, I pulled out some key definitions related to service delivery from CMMI-SVC, V1.2, and thought about how systems engineering being performed in the context of complex system development could be viewed as a service. Table 6.1 reflects my initial thoughts on this subject, and I hope they will engender a dialogue that is productive for the systems engineering community.

Table 6.1 Nominal Mapping of Systems Engineering Aspects to CMMI-SVC Service Terms

Having completed the mapping and, with some exceptions, found it easy to interpret systems engineering from a services mindset, I am left with this question: What value could systems engineering practitioners derive from looking at their roles and activities in this way? I’ll close this essay with a few of my ideas framed as questions, in the hope that the systems engineering community will go beyond my initial answers.

• How does viewing systems engineering as a service change the emphasis of systems engineering competencies (knowledge, skills, and process abilities)?

Making the service aspect of systems engineering explicit also makes explicit the need for competencies related to that aspect (coordination, conflict resolution, etc.), perhaps resulting in more value being placed on systems engineers who do more than translate one development work product into another.