Chapter 6. Essays on CMMI-ACQ in Government and Industry

This chapter provides insights, guidance, and recommendations useful for organizations looking to improve their acquisition processes by using the CMMI for Acquisition (CMMI-ACQ) model.

The first set of essays describes successes and challenges experienced by organizations that have adopted CMMI-ACQ within the public sector, primarily government defense and civil agencies in the United States and in France. Included is analysis from the organization that achieved the first-ever CMMI-ACQ maturity level 5 rating.

The second set of essays discusses private-sector adoption and the unique challenges faced by industry when outsourcing IT products and services.

The third set of essays highlights some important lifecycle aspects of CMMI-ACQ that help reduce program risk. These risks can arise anywhere in the lifecycle—from planning the acquisition strategy to transitioning products and services into use.

The next set of essays covers special topics that include acquiring interoperable systems, Agile acquisition, and process improvement.

The chapter closes with a view toward how the future of CMMI might evolve and how the CMMI constellations can be used to enable enterprise-wide improvement.

Critical Issues in Government Acquisition

Mike Phillips, Brian Gallagher, and Karen Richter

Since the first edition of this book was published, even more activity has taken place to reform acquisition in the Department of Defense (DoD). Before discussing this activity, we revisit our discussion of the earlier “Defense Acquisition Performance Assessment Report” (the DAPA report) [Kadish 2006], updated to reflect this new version of the model.

“Big A” Versus “Little a” Acquisition

In his letter delivering the report to Deputy Secretary of Defense Gordon England, the panel chair, Gen Kadish, noted:

Although our Acquisition System has produced the most effective weapon systems in the world, leadership periodically loses confidence in its efficiency. Multiple studies and improvements to the Acquisition System have been proposed—all with varying degrees of success. Our approach was broader than most of these studies. We addressed the “big A” Acquisition System because it includes all the management systems that [the] DoD uses, not [just] the narrow processes traditionally thought of as acquisition. The problems [the] DoD faces are deeply [e]mbedded in the “big A” management systems, not just the “little a” processes. We concluded that these processes must be stable for incremental change to be effective—they are not.

In developing the CMMI-ACQ model—a model we wanted to apply to both commercial and government organizations—we considered tackling the issues raised in the DAPA report. However, successful model adoption requires an organization to embrace the ideas (i.e., best practices) of the model for effective improvement of the processes involved.

As the preceding quote illustrates, in addition to organizations traditionally thought of as the “acquisition system” (“little a”) in the DoD, there are also organizations that are associated with the “big A,” including the Joint Capabilities Integration and Development System (JCIDS) and the Planning, Programming, Budgeting, and Execution (PPBE) system. These systems are governed by different stakeholders, directives, and instructions. To be effective, the model would need to be adopted at an extremely high level—by the DoD itself. Because of this situation, we resolved with our sponsor in the Office of the Secretary of Defense to focus on the kinds of “little a” organizations that are able to address CMMI best practices once the decision for a materiel solution has been reached.

In reality, we often find that models can have an impact beyond what might be perceived to be the “limits” of process control. The remainder of this essay highlights some of the clear leverage points at which the model supports DAPA recommendations for improvement.

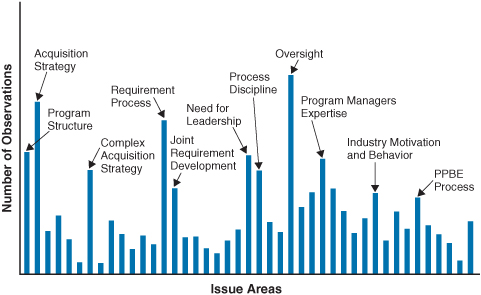

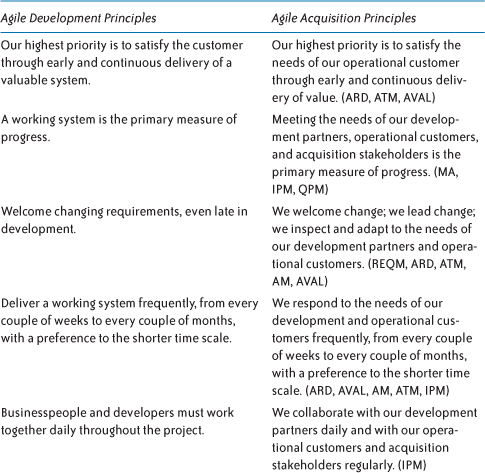

Figure 6.1 illustrates some of the issues and their relative importance as observed by the DAPA project team in reviewing previous recommendations and speaking to experts [Kadish 2006]. The issue areas in Figure 6.1 are discussed next. Although we would never claim that the CMMI-ACQ model offers a complete solution to all of the key issues addressed in the DAPA report, building the capabilities covered in the CMMI-ACQ model offers opportunities to address many of the risks in these issue areas.

Figure 6.1 Observations by Issue Area

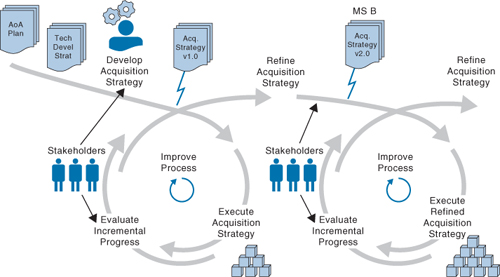

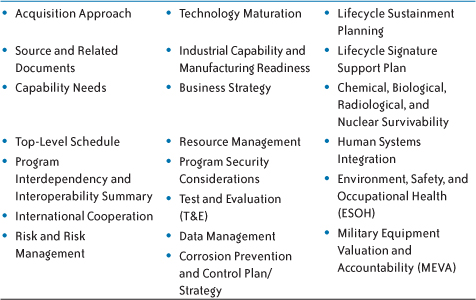

Acquisition Strategy

Acquisition Strategy is one of the more significant additions to the CMMI Model Foundation (CMF) to support the acquisition area of interest. Multiple process area locations (including Acquisition Requirements Development and Solicitation and Supplier Agreement Development) were considered before placing the acquisition strategy practice into Project Planning. Feedback from various acquisition organizations noted that many different organizational elements are stakeholders in strategy development, but its criticality to the overall plan suggested an effective fit as part of this process area. We also found that some projects might develop the initial strategy before the formal establishment of full acquisition offices, which would then accept the long-term management responsibility for the strategy. Note that the practice does not demand a specific strategy, but does expect the strategy to be a planning artifact. The full practice, “Establish and maintain the acquisition strategy,” in Project Planning specific practice 1.1, recognizes that maintenance may require strategy updates if the changing environment reflects that need. The DAPA report (p. 14) calls out some recommended strategies in the DoD environment [Kadish 2006].

Additional discussion is contained in the Acquisition Strategy: Planning for Success essay, found later in this chapter.

Program Structure

Although a model such as CMMI-ACQ should not direct specific organizational structures, the CMMI Product Team looked for ways to encourage improved operations on programs. Most significant might be practices for creating and managing teams with effective rules and guidelines such as those suggested in the Organizational Process Definition process area. The DAPA report (p. 76) noted deficiencies in operation. Organizations following model guidance may be better able to address these shortfalls.

Requirement Process

The CMMI-ACQ Product Team, recognizing the vital role played by effective requirements development in acquisition, assigned Acquisition Requirements Development to maturity level 2. Key to this effectiveness is specific goal 2, “Customer requirements are refined and elaborated into contractual requirements.” The importance of this activity cannot be overemphasized, as poor requirements are likely to be the most troubling type of “defect” that the acquisition organization injects into the system. The DAPA report notes that requirement errors are often injected late into the acquisition system. Examples in the report included operational test requirements unspecified by the user. Although the model cannot address such issues directly, it does provide a framework that aids in the traceability of requirements to the source, which in turn facilitates the successful resolution of issues. (The Requirements Management process area also aids in this traceability.)
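As an illustration of what traceability to the source can look like in practice, the following is a minimal sketch, not something prescribed by CMMI-ACQ. It assumes each contractual requirement records the identifier of the requirement it was refined from; the `Requirement` class, the `trace_to_source` function, and the identifiers `CUST-2`, `CON-7`, and `CON-7a` are all hypothetical names introduced for this example.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Requirement:
    """One requirement; parent_id is None for an original customer requirement."""
    req_id: str
    text: str
    parent_id: Optional[str] = None


def trace_to_source(req_id: str, requirements: Dict[str, Requirement]) -> List[str]:
    """Follow parent links from a requirement back to its originating source."""
    chain: List[str] = []
    seen = set()
    current: Optional[str] = req_id
    while current is not None:
        if current in seen:
            # A loop in the trace links means the records themselves are defective.
            raise ValueError(f"cycle in trace links at {current}")
        seen.add(current)
        chain.append(current)
        current = requirements[current].parent_id
    return chain


# Hypothetical example data: one customer need refined into contractual requirements.
reqs = {
    "CUST-2": Requirement("CUST-2", "Operators need status within one minute"),
    "CON-7": Requirement("CON-7", "System shall report status every 60 s", "CUST-2"),
    "CON-7a": Requirement("CON-7a", "Status message shall include a timestamp", "CON-7"),
}

print(trace_to_source("CON-7a", reqs))
```

With links of this kind in place, a late-arriving question about a contractual requirement can be walked back to the customer need that motivated it, which is the kind of issue resolution the paragraph above describes.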

Oversight

Several features in CMMI-ACQ should help users address the oversight issue. Probably the most significant help is found in the Acquisition Technical Management process area, which recognizes the need for the effective technical oversight of development activities. Acquisition Technical Management is coupled with the companion process area of Agreement Management, which addresses business issues with the supplier via contractual mechanisms. For those individuals familiar with CMMI model construction, the generic practice associated with reviewing the activities, status, and results of all the processes with higher level management and resolving issues offers yet another opportunity to resolve issues.

Need for Leadership, Program Manager Expertise, and Process Discipline

We have grouped leadership, program manager expertise, and process discipline issues together because they reflect the specific purpose of creating CMMI-ACQ: to provide best practices that enable leadership to develop capabilities within acquisition organizations, and to instill process discipline where clarity might previously have been lacking. Here the linkage between DAPA report issues and CMMI-ACQ is general rather than specific.

Complex Acquisition System and PPBE Process

Alas, for complex acquisition system and PPBE process issues, the model does not have any specific assistance to offer. Nevertheless, the effective development of the high maturity elements of process-performance modeling, particularly if shared by both the acquirer and the system development team, may help address the challenges of “big A” budgetary exercises with more accurate analyses than otherwise would be produced.

Continuing Acquisition Reform in the Department of Defense

In 2009, Congress passed the Weapon Systems Acquisition Reform Act of 2009 (WSARA). This act was of interest to the CMMI-ACQ community because of its emphasis on systems engineering within DoD acquisition. The CMMI-ACQ Product Team recognized the importance of systems engineering in acquisition by giving the model a solid foundation in the Acquisition Engineering process areas: Acquisition Requirements Development, Acquisition Technical Management, Acquisition Verification, and Acquisition Validation. Acquisition Engineering is designated as a new category in CMMI-ACQ V1.3 to stress its importance.

The National Defense Authorization Act (NDAA) for fiscal year 2010 required a new acquisition process for information technology (IT) systems. This new process must be designed to include the following elements:

• Early and continual involvement of the user

• Multiple, rapidly executed increments or releases of capability

• Early, successive prototyping to support an evolutionary approach

• A modular, open-systems approach

Of course, the CMMI-ACQ model is designed with sufficient flexibility so that it may be applied to all kinds of systems and services, including IT systems. For example, the last three elements identified above are all requirements that would need to be included in the acquisition strategy developed at the very beginning of the Project Planning process area, as discussed earlier in this essay and in the Acquisition Strategy: Planning for Success essay later in this chapter.

The first new requirement for IT system acquisition—early and continual involvement of the user—is addressed pervasively throughout the CMMI-ACQ model. Clearly, in the new IT acquisition process, the “relevant stakeholder” group would always include the user.

Involvement of all relevant stakeholders in the execution of the acquisition processes is discussed throughout all process areas in various activities. Specifically, institutionalizing a process at capability or maturity level 2 requires the generic practice, “Identify and involve the relevant stakeholders of the process as planned.”

Involvement of stakeholders is an important consideration in activities including, but not limited to, the following:

• Planning

• Decision making

• Making commitments

• Communicating

• Coordinating

• Reviewing

• Conducting appraisals

• Defining requirements

• Resolving problems and issues

A specific practice in Project Planning, “Plan the involvement of identified stakeholders,” sets up the involvement early in the project. Another specific practice, “Monitor stakeholder involvement against the project plan,” in Project Monitoring and Control ensures that the project carries out the plan for the stakeholder involvement. Finally, a specific goal, “Coordination and collaboration between the project and relevant stakeholders are conducted,” in Integrated Project Management ensures this behavior is carried throughout the project.

In 2010, Secretary of Defense Robert Gates began an initiative to deliver better value to the warfighter and the taxpayer by improving the way the DoD does business. Under Secretary of Defense (Acquisition, Technology and Logistics) Ashton Carter’s implementation of this initiative included measures focused on improving the tradecraft in the acquisition of services. When planning the CMMI-ACQ model, we made a concerted effort to cover the acquisition of both products and services. Best practices that may help the DoD in its efforts in improving the acquisition of services are found throughout the model.

Although the CMMI-ACQ model cannot address all of the problems in the complex DoD acquisition process, it can provide a firm foundation for improvement in acquisition offices in line with the intent of further improvement initiatives.

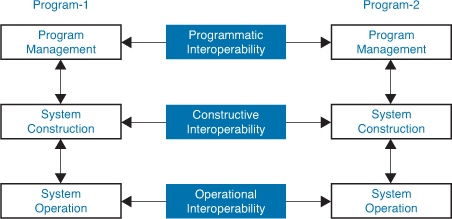

Systems-of-Systems Acquisition Challenges

Although CMMI-ACQ is aimed primarily at the acquisition of products and services, it also outlines some practices that would be especially important in addressing systems-of-systems issues. Because of the importance of this topic in government acquisition, we decided to add this discussion and the Interoperable Acquisition section later in this chapter to assist readers who are facing the challenges associated with systems of systems.

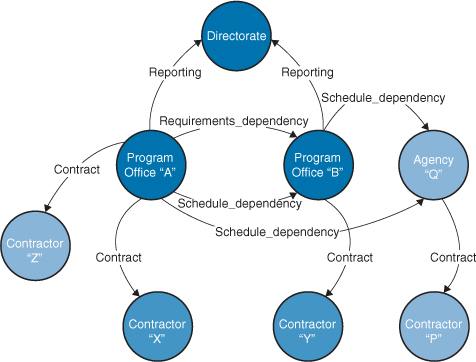

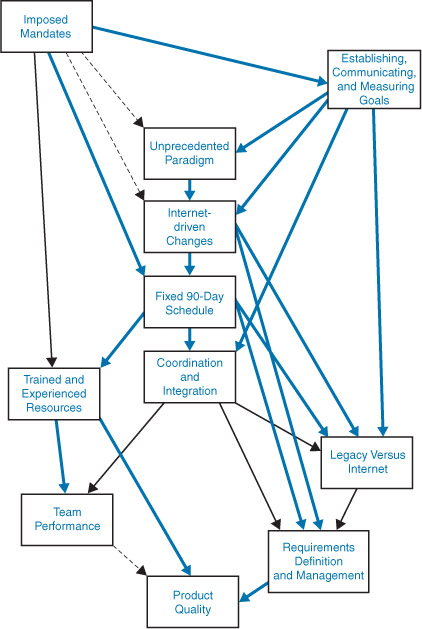

A source document for this discussion is Interoperable Acquisition for Systems of Systems: The Challenges [Smith 2006], which describes the ever-increasing interdependence of systems necessary to provide needed capabilities to the user. As an example cited in the document, in one key satellite communications program, at least five separate and independent acquisition programs needed to be completed successfully before the actual capability could be delivered to the various military services. The increasing emphasis on net-centric operations and service-oriented architectures added to the challenge. Figure 6.2 provides a visual depiction of some of the complexity in this program.

Figure 6.2 Systems-of-Systems Acquisition Challenges

Figure 6.2 shows the many ways that complexity grows quickly as the number of critical programmatic interfaces increases. Each dependency creates a risk for at least one of the organizations. The figure suggests that for two of the programs, a shared reporting directorate can aid in mitigating risks. This kind of challenge can be described as one in which applying recursion is sufficient to meet the demands. The system is composed of subsystems, and a single overarching management structure controls the subordinate elements. For some large program offices, for example, the system might be an aircraft with its supporting maintenance systems and required training systems. All elements may be separate programs, but coordination occurs within a single management structure. Figure 6.2, however, shows the additional challenges evident when no single management structure has been established. Critical parts of the capability are delivered by separate management structures, often with widely different motivators and priorities.
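A short calculation makes this growth concrete. The sketch below is an illustration only and assumes the worst case, in which any two programs may share a programmatic interface; under that assumption, n programs have at most n(n − 1)/2 pairwise interfaces. The function name and the worst-case assumption are ours and do not come from [Smith 2006].

```python
def max_pairwise_interfaces(program_count: int) -> int:
    """Upper bound on pairwise interfaces, assuming any two programs may interact."""
    if program_count < 0:
        raise ValueError("program_count must be non-negative")
    return program_count * (program_count - 1) // 2


# Show how the bound grows as independent programs are added.
for n in (2, 5, 10):
    print(f"{n} programs -> up to {max_pairwise_interfaces(n)} interfaces")
```

For the five independent programs in the satellite communications example, the bound is 10 potential interfaces; doubling the number of programs to 10 raises it to 45. Actual programs will have fewer interfaces than this bound, but each one that does exist is a dependency that some organization must manage.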

Although the CMMI-ACQ model was not constructed to specifically solve these challenges, especially the existence of separate management structures, the complexity was familiar to CMMI-ACQ authors. Those individuals who have used the CMM or CMMI model in the past will recognize the Risk Management process area in this model. In addition to noting the existence of significant risks in managing the delivery of a system through any outside agent, such as a supplier, the model emphasizes the value of recognizing both internal and external sources of risk. The following note is part of Risk Management specific practice 1.1:

Identifying risk sources provides a basis for systematically examining changing situations over time to uncover circumstances that impact the ability of the project to meet its objectives. Risk sources are both internal and external to the project. As the project progresses, additional sources of risk may be identified. Establishing categories for risks provides a mechanism for collecting and organizing risks as well as ensuring appropriate scrutiny and management attention to risks that can have serious consequences on meeting project objectives.

Varying approaches to addressing risks across multiple programs and organizations have been taken. One of these, Team Risk Management, has been documented by the SEI [Higuera 2005]. CMMI-ACQ anticipated the need for flexible organizational structures to address these kinds of issues and chose to expect that organizations would establish and maintain teams crossing organizational boundaries. The following is part of Organizational Process Definition specific practice 1.7:

In an acquisition organization, teams are useful not just in the acquirer’s organization but between the acquirer and supplier and among the acquirer, supplier, and other relevant stakeholders, as appropriate. Teams may be especially important in a systems of systems environment.

Perhaps the most flexible and powerful model feature used to address the complexities of systems of systems is the effective employment of the specific practices in the second specific goal of Acquisition Technical Management: Perform Interface Management. The practices under this specific goal require successfully managing needed interfaces among the system being focused on and other systems not under the project’s direct control. The foundation for these practices is established in Acquisition Requirements Development:

Develop requirements for the interfaces between the acquired product and other products in the intended environment. (ARD SP 2.1.2)

The installation of the specific goal in Acquisition Technical Management as part of overseeing effective system development from the acquirer’s perspective is a powerful means of addressing systems-of-systems problems. The following note is part of Acquisition Technical Management specific goal 2:

Many integration and transition problems arise from unknown or uncontrolled aspects of both internal and external interfaces. Effective management of interface requirements, specifications, and designs helps to ensure implemented interfaces are complete and compatible.

The supplier is responsible for managing the interfaces of the product or service it is developing. At the same time, the acquirer identifies those interfaces, particularly external interfaces, that it will manage.

Although these model features provide a basis for addressing some of the challenges of delivering the capabilities desired in a systems-of-systems environment, the methods best suited for various acquisition environments await further development in future documents. CMMI-ACQ is positioned to facilitate that development and mitigate the risks inherent in these acquisitions.

The IPIC Experience

Daniel J. Luttrell and Steven Kelley

A Brief History

The Minuteman III system now deployed is the pinnacle of more than 50 years of rocket science applied to Intercontinental Ballistic Missiles (ICBM). In 1954, the U.S. Air Force awarded the Systems Engineering and Technical Direction (SETD) contract to the Ramo-Wooldridge Corporation (now Northrop Grumman) for Ballistic Missile support. That contract award was the seed that eventually grew to become the ICBM Prime Integration Contract (IPIC).

As ICBMs were developed, from the early Atlas, Thor, and Titan missiles into the Minuteman and Peacekeeper systems and then continuing with the Minuteman III missiles today, Northrop Grumman has built and maintained detailed mathematical models to predict missile flight path, accuracy, and probability of success. In the beginning, these models consisted of technical papers and reports on the current system’s abilities and predictions for future systems being proposed. After the systems were fielded, the predictive models were sustained and continually upgraded with each new data set. Modeling and statistical analysis served as the basis of the original contract and remains the heart of today’s IPIC program.

Due to Minuteman’s success, this system will maintain operational readiness for the foreseeable future. Its ability to continue its mission well beyond its original service life is evidence of its robust design and sustainment activities. Over the years, many of the components and subsystems have been replaced or upgraded, while many other elements of the system remain with limited or no refurbishment.

Transition from Advisor to Integrator and Sustainer

The Northrop Grumman Missile Systems organization, based primarily in Clearfield, Utah, has used statistical analysis to build, sustain, and improve the ICBM weapon system and the organization’s engineering and business processes since ICBMs were first developed. In 1997, the Air Force moved away from managing all of the separate pieces of the ICBM system to using a single prime integrator. Northrop Grumman was awarded the contract, and the IPIC Team (consisting of Northrop Grumman plus more than a dozen subcontractors, including Boeing, Lockheed-Martin, ATK, and Aerojet) assumed responsibility for the integration and sustainment of the end-to-end ICBM system. As the prime integrator, Northrop Grumman manages the contract and the IPIC Team shares responsibility for fulfillment of the ICBM mission with the Air Force. This contract is unique because it is based on system performance with a focus on weapon system health. This contract structure for prime integration has worked effectively over a long period of performance.

The Minuteman III missile is a three-stage, solid-propellant rocket with a liquid-propellant fourth stage; it is housed in an underground silo. The service life for the IPIC Minuteman system is being extended through 2030, which presents a technical challenge: The system was originally fielded in the 1970s with an expected service life of 10 years.

The IPIC Team has been the sole integrator of the complete ICBM weapon system, including all subsystems, since program inception. IPIC efforts include sustainment engineering, systems engineering, research and development, modifications, and repair programs.

Through joint technical integration, the IPIC Team advises the Air Force, which makes all decisions on proposed technical approaches. This separation of responsibility allows industry, including the prime integrator and subcontractors, the freedom to deliver a best-value product that meets the customer’s weapon system performance requirements and ensures mission success. The IPIC contract has established a weapon system management approach that empowers both the government and the contractor team, holding them jointly accountable to deliver warfighting capability to the end user.

The Air Force provides incentives for the IPIC Team to maintain system performance as measured by key readiness parameters. The focus is on weapon system capability, with government direction addressing what to do, but not specifying how to do the job. Northrop Grumman has been given the flexibility to manage weapon system risk and streamline processes to achieve the desired outcome.

The IPIC contract was part of the Air Force “Lightning Bolt” acquisition initiative and delivers significant cost savings to the Air Force. While other prime integration contracts have not performed as well as expected on development efforts, Northrop Grumman’s prime integration approach on IPIC has been very successfully applied to Minuteman sustainment and life extension. This contract vehicle establishes a framework that allows for all analysis, advisory, and solution development efforts to be tied to one contract, thereby providing a unified industry position for the decisions the Air Force must make for system sustainment. To a large degree, the same industry base benefits from the ongoing sustainment and upgrade work identified by analysis under the contract. This framework allows the contractors to retain engineering talent and data developed with the original system and keep them up-to-date on system improvements as they happen. It also allows industry to bring in new personnel and train them, alongside seasoned veterans, in the systems and processes used to maintain and enhance the system.

The advantage to the Air Force of this arrangement is that it can rely on a stable team that performs well together and has done so for more than a decade. Today, the IPIC Team continues to evolve, improve teaming relationships, and deliver performance in support of the Air Force customer.

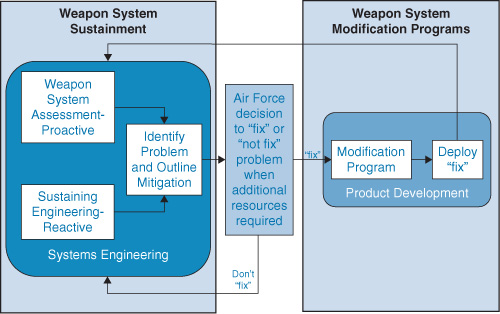

IPIC consists of two major types of efforts, as illustrated in Figure 6.3: (1) sustainment to analyze the aging Minuteman system and (2) development of modification programs to modernize the system as required to extend its service life through the year 2030. Modification programs are integrated into the IPIC contract individually as they are approved and negotiated.

Figure 6.3 Two Major Types of Efforts Handled by IPIC

Sustainment consists of sustaining engineering and weapon system assessment. The sustaining engineering function responds to issues with the operational system as they arise. The weapon system assessment function analyzes system subelement data proactively gathered from testing. Data from all sources are fed into performance models that enable the team to identify weapon system risks far enough in advance of system impact that team members can fully characterize the extent of the risk, determine appropriate short-term mitigation actions, create a plan, and ultimately mitigate the risk entirely. System risk assessment is driven by statistical models for missile performance to support the key readiness parameters: availability, reliability, accuracy, and hardness. Analyzing these performance models in conjunction with aging models enables the IPIC Team to determine whether all subsystems and the system as a whole will function correctly through the target dates (2030 and beyond).

How IPIC Works

Effective sustainment of the Minuteman force requires the government and Northrop Grumman to continually balance dual priorities: keeping the missiles on alert, while fielding vital new hardware and software upgrades that extend the service life of the system. All elements of the system are involved: millions of custom software lines, thousands of computers, all types of communications, and thousands of mission hardware items. All must be maintained and enhanced both safely and cost-effectively. Engineering productivity has been increased by consolidating contractor statements of work, eliminating redundant tasking, and employing commercial best practices. When combined with innovative procurement approaches for major subsystem upgrades, Northrop Grumman’s approach significantly reduces the overall cost of system sustainment.

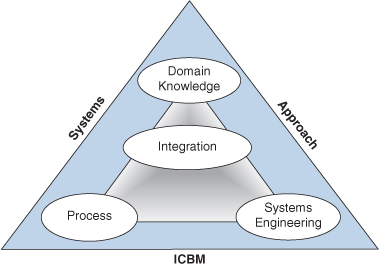

Applying a systems approach has been the key factor in ICBM success for more than a half century, since the first strategic land-based deterrent was introduced in the 1950s. The ICBM Systems Approach is pictured in Figure 6.4.

Applying systems engineering requires a complete spectrum of engineering and science disciplines to understand and manage complex interdependencies. Systems engineering also interacts with the goal of achieving overall weapon system optimization. A full review of all considerations is completed prior to every relevant action on the system. This review employs a comprehensive weapon system risk management process. As experience has shown, the Northrop Grumman systems approach is the best way to identify and mitigate unintended consequences resulting from subsystem changes.

To further enhance the systems approach, Northrop Grumman has developed mature and disciplined processes that have improved with time to provide consistent and reliable results. Documented in an easily accessible organizational command media and program data site, these processes are essential for maintaining the quality demanded of such a mission-critical system. These processes have been tested by time and improved through measurement against industry best practices such as ISO standards, AS9100, CMMI, and Lean Six Sigma.

Domain knowledge consists of extensive and sustained expertise about the system—from the beginning of concept development through deployment and decommissioning. Northrop Grumman maintains a full history of data for all aspects of the system (i.e., ICBM performance data, system configurations, and upgrades) along with long-term human expertise in the concept, design, development, deployment, modification, infrastructure, operations, and support functions used to maintain system viability.

Engineers employed at the beginning of the ICBM program are still on the job today. Northrop Grumman systematically hires new graduates and assigns them to work alongside these experienced engineers, from whom they absorb domain knowledge; this practice ensures that the new-generation engineers will be ready to carry on when older generations retire. This domain knowledge enables thorough analysis when issues arise.

The final ingredient of ICBM’s success is total weapon system integration—the control and coordination of separate complex elements and activities (e.g., interfaces, resources, milestones) to achieve required system performance. Integration is the activity that enables IPIC to avoid gaps, overlaps, and system suboptimization. Complete integration of all related subsystem activities is vital to avoid disconnects or unintended consequences when implementing changes and upgrades.

When so many changes are taking place across the system concurrently, the system engineering, integration, and process functions become critical as means to avoid adverse impacts. Management of individual efforts is also critical to performance and reporting on cost and schedule, as each effort is tracked and rolled up to the system level for top-level customer review. The complex system and contract include parallel activities both in the execution of modifications and in deployment. Integration and optimization of deployment activity minimizes resource expenditure while ensuring that operational readiness is maintained at acceptable levels.

Industry Process Standards and Models

The IPIC mission is to maintain the total ICBM weapon system and modernize the Minuteman III to extend its useful life to 2030. The expected product of this mission is an ICBM weapon system that maintains its readiness to launch 24/7/365 while at the same time undergoing testing, evaluation, and upgrades. Success, in this case, is measured by comparing predicted results with observed results and getting no surprises from year to year. Process discipline, predictability, and improvement are vital across the enterprise to enable mission success.

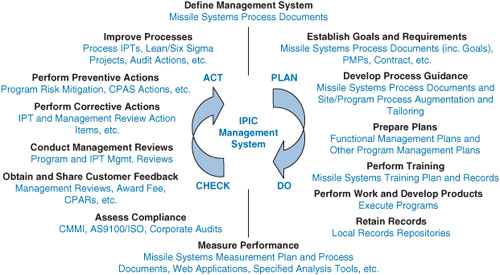

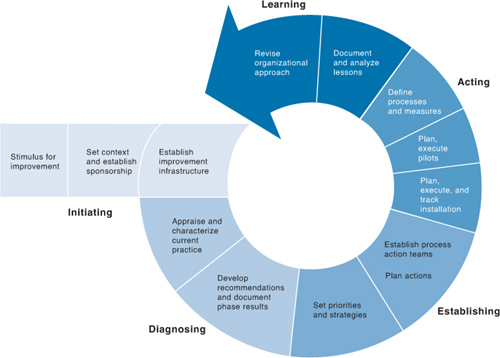

Over its lifetime, the IPIC program has examined process standards and models from DoD, IEEE, ISO/AS9100, CMMI-DEV, CMMI-SVC, and CMMI-ACQ to help frame its internal process development and improvement activities. As with all programs, some standards have proved more useful than others. Nearly all share the same fundamental “Plan, Do, Check, Act” construct. Northrop Grumman arranged the AS9100 framework (a contractual compliance requirement) around the simple “Plan, Do, Check, Act” core to codify a broad process framework for management. Illustrated in Figure 6.5, this operating philosophy is the essence of what Northrop Grumman and its subcontract team must do to succeed.

Figure 6.5 Northrop Grumman Operating Philosophy

This operating philosophy includes the following elements:

Define Management System: Documented on a website accessible by all Program Team members (including teammates, subcontractors, and the Air Force) are assets that include program requirements establishing local policy for performing work, implementation plans, additional clarification on how processes are to be implemented, and other plans to establish a framework for operations. The site also contains handbooks, guides, templates, and tools to support the processes.

Establish Goals and Requirements: In the Management System diagram, IPIC’s primary goal is to meet or exceed all customer expectations and achieve excellent award fees. All other goals flow from the primary goal and from government contract direction.

Develop Process Guidance: Accessed via the website, the process guidance documentation includes mandatory items plus handbooks, guides, forms, tools, and templates. Each mandatory document has a process leader, a subject matter expert, and a process reviewer who has the authority to approve or reject changes after review by the appropriate change control board.

Prepare Plans: IPIC plans are a subset of process guidance, also accessed via the web page, that is mandatory for compliance. Each program element and key function has an approved plan for execution (e.g., Measurement, Program Management, Data Management).

Perform Training: Mandatory and optional classes and online computer-based training are available, along with informal mentoring and attendance at seminars and conferences.

Perform Work and Develop Products: The primary product of assessment engineering is ongoing weapon system modeling and risk analysis. As subsystems are sustained and upgraded, new issues may arise that also require mitigation. Some mitigations may consist of small corrective efforts or simple updates to technical orders. Others may require significant product development such as the design and manufacture of one or more system components.

Retain Records: Records are retained in a variety of databases and web tools with appropriate levels of configuration control.

Measure Performance: IPIC maintains a plan describing what to measure, how to measure it, how to analyze the data, and where the data and analysis are to be reported and stored. A strong measurement and statistical analysis program is in place to measure and predict future costs and schedule performance as well as weapon system health and readiness.

Assess Compliance: Northrop Grumman has an active internal assessment program. If a recurring lack of compliance is identified, the team first evaluates the underlying process documentation to determine if the process should be updated or improved before assuming that poor discipline is the source of the problem. Northrop Grumman measures IPIC against the ISO/AS9100 standard and the CMMI-ACQ model with both internal and external audits, and has completed most audits without negative findings. Northrop Grumman Missile Systems/IPIC was the first organization to be externally appraised at maturity level 5 for CMMI-ACQ; the SEI published the result on its Published Appraisal Results (PAR) website in 2009.

Obtain and Share Customer Feedback: Customer feedback comes in the form of both praise and constructive criticism on a daily basis. Two formal feedback mechanisms are Contractor Performance Assessment Reports (CPARs) and Award Fee Letters. These reports require formal response and action items to address negative remarks. Award fee results are also analyzed for recurring themes and shared with the IPIC employees.

Conduct Management Reviews: Northrop Grumman conducts internal and external reviews at various program levels. Staff meetings, contractor Integrated Product Team (IPT) meetings, joint IPT meetings, and program-level management reviews are conducted on a recurring basis.

Perform Corrective and Preventive Actions: Corrective actions are required for cost, schedule, or technical variances, and are also created at all levels of the program and the organization to address internal or external audit findings, negative customer feedback, and other issues that arise. Preventive actions may also be required depending on the issue or in instances where the issue appears in more than one place.

Improve Processes: An ongoing improvement activity serves to enhance program performance and customer/employee satisfaction with the process architecture. IPIC maintains a database that can be used by any employee, teammate, or customer to submit a problem discovered or a suggestion for improvement. The process integration team reviews and prioritizes suggestions for action. Northrop Grumman uses the Lean and Six Sigma tool sets along with Theory of Constraints thinking processes to guide data collection and process improvement planning and execution.

IPIC and CMMI Models

Starting with the “Plan, Do, Check, Act” core with an encircling AS9100 framework, which provides the broad basis for the IPIC management system (as outlined in the preceding section), CMMI models—with their narrower focus—add a much-needed depth of guidance for management activities.

IPIC was successfully appraised both internally and externally against CMMI-DEV for applicable process areas (excluding Technical Solution and Product Integration). Because Northrop Grumman does not develop products on IPIC, but rather acquires them from subcontractors that develop those products from system-directed requirements, other CMMI models were investigated as they were released. After examining and ruling out the CMMI-SVC model, Missile Systems evaluated the CMMI-ACQ model. The Acquisition model is a more suitable evaluation structure for the pure acquisition work performed by Northrop Grumman on this contract.

For decades, mathematical models and statistical analysis have been developed as technical solutions to support the customer (i.e., the Air Force), with a proven track record of success. The voice of the process on the technical side supplied good historical data to support and update technical baselines. Modeling future expectations from these baselines was a logical extension that provided solid information for technical decisions.

When Northrop Grumman began to investigate CMMI models for use on IPIC, it became evident that these same data analysis, baseline development, and mathematical modeling techniques could be applied successfully in the nontechnical arena to manage the program, the business, and the underlying processes. Significant process improvements were derived from high maturity elements of CMMI models using baselines discovered in existing program data as a source for new model development.

As an example, applying moving range analysis to program management data produced a predictive model that could accurately forecast when program work accomplishment trends were eroding compared to plan, even though the standard program metrics might show no cause for concern. Analysis of payment cycle time data, stratified by contract type, yielded stable baselines to provide the basis for another new model to analyze payment trends—a key business process output.
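The essay does not give the details of the moving range analysis, so the following is only a minimal sketch of the general technique, an individuals and moving range (XmR) chart, under stated assumptions. The function names, the monthly "percent of planned work accomplished" series, and the single detection rule (a point outside the natural process limits) are all hypothetical and are not taken from the IPIC program. The factors 2.66 and 3.267 are the standard XmR constants for a moving range of span two.

```python
from statistics import mean

# Standard constants for an XmR chart with a moving range of span 2:
# 2.66 = 3 / d2 (d2 = 1.128) and 3.267 = D4.
X_LIMIT_FACTOR = 2.66
MR_LIMIT_FACTOR = 3.267


def xmr_limits(values):
    """Return center lines and natural process limits for an XmR chart."""
    if len(values) < 2:
        raise ValueError("need at least two observations")
    # Moving range: absolute difference between consecutive observations.
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    x_bar = mean(values)
    mr_bar = mean(moving_ranges)
    return {
        "x_center": x_bar,
        "x_upper": x_bar + X_LIMIT_FACTOR * mr_bar,
        "x_lower": x_bar - X_LIMIT_FACTOR * mr_bar,
        "mr_center": mr_bar,
        "mr_upper": MR_LIMIT_FACTOR * mr_bar,
    }


def out_of_limits(values, limits):
    """Return the indexes of observations outside the natural process limits."""
    return [
        i for i, v in enumerate(values)
        if v > limits["x_upper"] or v < limits["x_lower"]
    ]


# Hypothetical baseline: percent of planned work accomplished per month.
baseline = [98.0, 101.0, 99.0, 100.0, 102.0, 100.0]
limits = xmr_limits(baseline)

# Hypothetical new observations checked against the established baseline.
new_points = [99.0, 97.0, 91.0]
signals = out_of_limits(new_points, limits)
```

With this illustrative data, the third new observation falls below the lower limit and would be flagged for investigation. A real program would likely stratify the data first (the essay mentions stratifying payment cycle times by contract type) and apply additional run rules.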

For acquisition, Northrop Grumman “discovered” another baseline in readily available data for mitigation plan development. Combining these data with an existing technical model yielded another new model around the key function of what to acquire and when. Baselines for first-pass acceptance and internal assessment processes led to further acquisition related models that produced actionable information enabling the organization to improve acquired product acceptance to an essentially error-free state and to gain an improved understanding of enterprise-wide process performance.

Generic practices provided another opportunity to expand process maturity. Rather than institutionalizing only CMMI processes, Northrop Grumman chose to apply generic practices to all of its process areas (e.g., Human Resources, Business Development). Beyond expanding the application of CMMI within Northrop Grumman, generic practices such as Process and Product Quality Assurance (PPQA) facilitated even more improvements by helping the organization to develop a more objective and useful QA process.

Prior to adopting CMMI, audits were focused merely on outputs, with only the government Data Item Description (DID) serving as the standard. Process audits did not “feel” objective to those being audited. With CMMI, Northrop Grumman separated product from process audits and developed standards for each. Key steps were identified for each process, and the audits became much less confrontational and easier to complete. Process audit findings are never directed toward the performer, but are focused instead on the key steps. The organization cross-trains audit volunteers who want to understand other parts of the business, thereby helping engineers learn about business processes, and business process employees learn about engineering. Audits are no longer viewed as contentious events to be avoided, but rather as a chance to discuss work and ideas with a fellow employee who wants to learn and ensure the success of the program.

The focus of Acquisition Requirements Development (ARD) and Acquisition Requirements Management (ARM) in the CMMI-ACQ model versus Requirements Development (RD) and Requirements Management (REQM) in the CMMI-DEV model is a good example of how the change in focus provided a much better fit for IPIC at Northrop Grumman. ARD and ARM specific practices dive deep into the way in which requirements flow down the supply chain and suppliers are held accountable for meeting them, while RD and REQM focus on deriving lower level requirements from user needs.

Managing supplier development and delivery to requirements is a substantial process area that Northrop Grumman executes on the contract, for which no credit could be taken using the CMMI-DEV model. Solicitation and Supplier Agreement Development (SSAD) was also a good fit. Northrop Grumman (on IPIC) does not directly develop any product components, but does frequently initiate new supplier agreements. Having a process area focused on the critical aspects of the solicitation and agreement development process from an acquirer’s viewpoint is a new and useful tool for both government and industry acquirers. From a contract value perspective, acquisition accounts for 81 percent of the total contract value, and “getting it done right” in the beginning pays dividends later in the acquisition.

Coming up to speed on CMMI-ACQ has been a rewarding experience. Northrop Grumman began a pilot of the model on IPIC in the summer of 2008. In the same time frame, the Air Force independently requested a SCAMPI B appraisal. Only the ACQ processes were evaluated, and even with little time to prepare and no experience with this new model, the outcome was generally positive. In May 2009, with an improved understanding of the model, Northrop Grumman conducted an internal SCAMPI C appraisal covering all process areas. Again, the results were positive, leading in December 2009 to the first-ever recorded SCAMPI A appraisal at maturity level 5 against the CMMI-ACQ model. The Lead Appraiser was also one of the model developers.

Given this resounding success, is there anything about the CMMI-ACQ model that could be improved? It has been our experience that contract organizations such as Northrop Grumman (on IPIC) and military organizations that perform acquisition have very small staffs who are assigned to any single acquisition. Staff numbers typically range from one or two to four or five people, supported by larger organizations that supply staff augmentation for short periods such as Solicitation and Supplier Agreement Development or Validation. The day-to-day work is carried out by a small team whose size is not equivalent to that of the project teams in an average development organization. Therefore, some of the process areas normally handled within a typical development project would more naturally be performed outside the acquisition project team.

Some of these process areas are obvious (CM and PPQA), but others are ambiguous (AVAL and QPM). Goals for quantitative management are driven by the larger organization, and not by the small acquisition teams. For example, Northrop Grumman’s acquisition project data for IPIC are aggregated across multiple acquisitions and not analyzed by each project. This approach is intentional because of insufficient data inside each acquisition project and because many process improvements are intended for the larger organization and not just for the specific project. Perhaps, then, QPM in the CMMI-ACQ model could be described as Quantitative Process Management (rather than Project Management), and the decision of where the practices are performed might be left to the organization.

Conclusion

Northrop Grumman’s unique approach to Minuteman III sustainment and modernization has provided the following objective, tangible results:

• Consistently high award fee scores—averaging 95.5 percent

• High Contractor Performance Assessment Reports (CPARs)—average score of purple or better

• Favorable cost performance—a 3 percent favorable cost variance on more than $6 billion worth of executed program

• Technical performance resulting in weapon system readiness far above threshold specifications

• Consistently reliable flight test demonstrations since program inception

This contract structure, with its integration of prime integrator and customer decision makers, enhanced by management systems and implemented models for organizational management in addition to technical and programmatic variables, is an excellent approach for any mature, fielded weapon system.

CMMI: The Heart of the Air Force’s Systems Engineering Assessment Model and Enabler to Integrated Systems Engineering—Beyond the Traditional Realm

George Richard Freeman, Technical Director, U.S. Air Force Center for Systems Engineering³

When the U.S. Air Force (AF) consolidated various systems engineering (SE) assessment models into a single model for use across the entire AF, the effort was made significantly easier by the fact that every model used to build the expanded AF model was based on Capability Maturity Model Integration (CMMI) content and concepts. This consolidation also set into motion an approach toward addressing information used across the enterprise in ways that were previously unimagined.

In the mid-1990s, pressure was mounting for the Department of Defense (DoD) to dramatically change the way it acquired, fielded, and sustained weapons systems. Faced with increasingly critical General Accounting Office (GAO) reports, and with a barrage of media headlines reporting on multi-billion-dollar program cost overruns, late deliveries, and systems that failed to perform at desired levels, the DoD began implementing what was called acquisition reform.

Many believed that the root of the problem originated with the government’s hands-on approach, which called for it to be intimately involved in requirements generation, design, and production processes. The DoD exerted control primarily through government-published specifications, standards, methods, and rules, which were then codified into contractual documents. These documents directed—in often excruciating detail—how contractors were required to design and build systems.

This approach resulted in the government unnecessarily bridling contractors. In response, the contractors vehemently insisted that if they were free of these inhibitors, they could deliver better products at lower costs. This dual onslaught from the media and contractors resulted in sweeping changes that included the rescinding of a large number of the military specifications and standards, slashing of program documentation requirements, and a major reduction in the size of government acquisition program offices.

The language of contracts was changed to specify the desired “capability,” with contractors being given the freedom to determine “how” to best deliver this capability. This action effectively transferred systems engineering to the sole purview of the contractors. The contractors were being relied upon to deliver the desired products and services, while the responsibility for the delivery of viable solutions remained squarely with the government.

What resulted was a vacuum in which neither the government nor the contractors accomplished the necessary systems engineering. Over the following decade, the government’s organic SE capabilities virtually disappeared. This absence of SE capability became increasingly apparent in multiple-system (systems-of-systems) programs in which more than one contractor was involved.

Overall acquisition performance did not improve; indeed, in many instances, it became even worse. While many acquisition reform initiatives were beneficial, it became increasingly clear that the loss of integrated SE was a principal driver behind continued cost overruns, schedule slips, and performance failures.

In response to these problems, on February 14, 2003, the AF announced the establishment of the Air Force Center for Systems Engineering (AF CSE). The CSE was chartered to “re-vitalize SE across the AF.” Simultaneously, major AF Acquisition Centers initiated efforts to swing the SE pendulum back the other way.

To regain some level of SE capability, many centers used a process-based approach to address the challenge. Independently, three of these centers turned to the CMMI construct and began tailoring it to create a model to be used within their various program offices, thereby molding CMMI to meet the specific needs of these separate acquisition organizations.

Recognizing the potential of this approach and with an eye toward standardizing SE processes across the entire AF enterprise, in 2006 the AF CSE was tasked to do the following:

• Develop and field a single Air Force Systems Engineering Assessment Model (AF SEAM)

• Involve all major AF centers (acquisition, test, and sustainment)

• Leverage current SE CMMI-based assessment models in various stages of development or use at AF centers

Following initial research and data gathering, in the summer of 2007 the AF CSE established a working group whose members came from the eight major centers across the AF (four Acquisition Centers, one Test Center, and three Sustainment Centers). Assembled members included those who had either built their individual center models or would be responsible for the AF SEAM going forward.

The team’s first objective was to develop a consistent understanding of SE through mutual agreement of process categories and associated definitions. Once this understanding was established, the team members used these process areas and, building on the best practices of existing models, together developed a single AF SEAM. Desired characteristics included the following:

• Be viewed as adding value by Program Managers and therefore “pulled” for use to aid in the following:

  ◦ Ensuring standardized core SE processes are in place and being followed

  ◦ Reducing technical risk

  ◦ Improving program performance

• Scalable for use by all programs and projects across the entire AF

• Self-assessment based

• Independent verification capable

• A vehicle to share SE lessons learned and best practices

• Easily maintained

To ensure a consistent understanding of SE across the AF and provide the foundation on which to build AF SEAM, the working group clearly defined ten SE process areas (presented in alphabetical order here):

The model’s structure is based on the CMMI construct of process areas (PAs), specific goals (SGs), specific practices (SPs), and generic practices (GPs). The AF SEAM development team subsequently amplified the 10 PAs listed above with 33 SGs, 119 SPs, and 7 GPs.

Each practice includes a title, description, typical work products, references to source requirements and/or guidance, and additional considerations to provide context. While some PAs are largely an extract from CMMI, others are combinations of SPs from multiple CMMI PAs and other sources. Additionally, two PAs not explicitly covered in the CMMI model were added—namely, Manufacturing and Sustainment.
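The structure described above can be pictured as a small hierarchy of records. The sketch below is illustrative only: the class and field names are assumptions chosen to mirror the wording of the text, and the goal and practice titles are placeholders rather than actual AF SEAM content. Only the process area name, Manufacturing, comes from the essay.

```python
from dataclasses import dataclass, field


@dataclass
class Practice:
    """A specific or generic practice; fields follow the description in the text."""
    title: str
    description: str
    typical_work_products: list = field(default_factory=list)
    references: list = field(default_factory=list)  # source requirements and/or guidance
    additional_considerations: str = ""


@dataclass
class SpecificGoal:
    title: str
    specific_practices: list = field(default_factory=list)


@dataclass
class ProcessArea:
    name: str
    specific_goals: list = field(default_factory=list)


def count_elements(process_areas, generic_practices):
    """Return the (PA, SG, SP, GP) counts for a model."""
    goals = [g for pa in process_areas for g in pa.specific_goals]
    practices = [p for g in goals for p in g.specific_practices]
    return (len(process_areas), len(goals), len(practices), len(generic_practices))


# Hypothetical content: goal and practice titles are placeholders only.
manufacturing = ProcessArea(
    name="Manufacturing",
    specific_goals=[
        SpecificGoal("Placeholder goal 1", [
            Practice("Placeholder practice 1.1", "Illustrative description"),
            Practice("Placeholder practice 1.2", "Illustrative description"),
        ]),
        SpecificGoal("Placeholder goal 2", [
            Practice("Placeholder practice 2.1", "Illustrative description"),
        ]),
    ],
)
generic_practices = [Practice("Placeholder generic practice", "Illustrative description")]
counts = count_elements([manufacturing], generic_practices)
```

Applied to the full model, a count of this kind would be expected to return the totals quoted in the text: 10 PAs, 33 SGs, 119 SPs, and 7 GPs.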

It is equally important to understand where AF SEAM fits within the assessment continuum (depicted in Figure 6.6). The “low” end of this continuum is best defined as an environment where policies are published and “handed off” to the field, with the expectation that they will be followed and in turn yield the desired outcomes. In contrast, the “high” end of the assessment continuum is best defined as an environment replete with a high degree of independent engagements, including highly comprehensive CMMI assessments. AF SEAM was designed to target the “space” between the two (“CMMI Light”) and provides one more potential tool for use, when and where appropriate.

Figure 6.6 The Assessment Continuum

To aid in the establishment of a systems engineering process baseline and, in turn, achieve the various aims stated above, programs and projects are undergoing one or more assessments followed by continuous process improvement. An assessment focuses on process existence and use; thus it serves as a leading indicator of potential future performance.

The assessment is not a determination of product quality or a report card on the people or the organization (i.e., lagging indicators). To allow for full AF-wide scalability, the assessment method is intentionally designed to support either a one- or two-step process.

In the first step, each program or project performs a self-assessment using the model to verify process existence, identify local references, and identify work products for each SP and GP—in other words, the program or project “grades” itself. If leadership determines that verification is beneficial, an independent assessment is performed.

This verification is conducted by an external team that confirms the self-assessment results, adds other applicable information, and rates each SP and GP as either “satisfied” or “not satisfied.” The external assessment is conducted in a coaching style, which facilitates and promotes continuous process improvement.
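As a rough illustration of the one- or two-step method, the sketch below records a self-assessment for each practice and, optionally, an independent rating. It is not the AF SEAM tool: the record layout, the practice identifiers, and the "self-assessed only" label for practices that were never independently verified are assumptions made for this example. The two rating values come from the text.

```python
from dataclasses import dataclass
from typing import Optional

# The two ratings an independent assessment team can assign, per the text.
VALID_RATINGS = {"satisfied", "not satisfied"}


@dataclass
class PracticeAssessment:
    practice_id: str       # hypothetical identifier for an SP or GP
    process_exists: bool   # step 1: the program or project grades itself
    local_references: list  # step 1: local documents that define the process
    work_products: list     # step 1: evidence that the process is used
    independent_rating: Optional[str] = None  # step 2: set only if verification occurs


def record_independent_rating(assessment, rating):
    """Step 2: an external team confirms the self-assessment and rates the practice."""
    if rating not in VALID_RATINGS:
        raise ValueError(f"rating must be one of {sorted(VALID_RATINGS)}")
    assessment.independent_rating = rating


def summarize(assessments):
    """Count practices by rating; unverified practices are reported separately."""
    summary = {"satisfied": 0, "not satisfied": 0, "self-assessed only": 0}
    for a in assessments:
        summary[a.independent_rating or "self-assessed only"] += 1
    return summary


# Hypothetical self-assessment results for three practices.
assessments = [
    PracticeAssessment("SP-A", True, ["local guide"], ["plan"]),
    PracticeAssessment("SP-B", True, ["local guide"], ["report"]),
    PracticeAssessment("GP-1", False, [], []),
]

# Leadership requests verification; the external team rates two of the practices.
record_independent_rating(assessments[0], "satisfied")
record_independent_rating(assessments[2], "not satisfied")
summary = summarize(assessments)
```

Keeping the independent rating optional reflects the scalability point made in the text: a program can stop after the self-assessment, and the second step is added only when leadership decides that verification is worthwhile.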

Results are provided to the program or project as an output of the independent assessment. The information is presented in a manner that promotes the sharing of best practices and facilitates reallocation of resources to underperforming processes. The AF SEAM development working group has also developed training for leaders, self-assessors, and independent assessors, who undergo this training on a “just-in-time” basis.

Since it was originally fielded, the use of AF SEAM continues to grow across the AF. With a long history of using multiple CMMI-based models across the AF, significant cultural inroads have been made to secure the acceptance of this process-based approach. The creation and use of a single AF-wide Systems Engineering Assessment Model is playing a significant role in the revitalization of sound SE practices and the provision of mission-capable systems and systems-of-systems on time and within budget.

Additionally, this process-based methodology has opened up an entirely new frontier—specifically, the advancement of a disciplined systems engineering approach to other areas across the enterprise, otherwise known as integrated systems engineering: looking beyond the traditional realms.

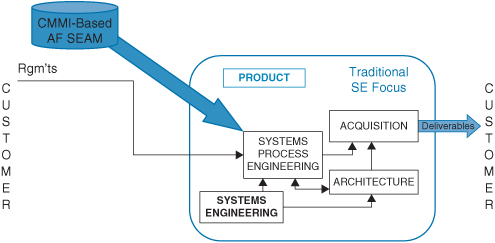

Figure 6.7 illustrates the traditional “product” focus of systems engineering, to which AF SEAM applies. The process begins with the identification of requirements by the customer. Using the various SE processes applied to Systems Process Engineering, these requirements are broken down and allocated to various subelements that are then processed through the Acquisition process. Both areas are supported by Architecture. The end result is a Deliverable to the customer. This view, which is commonly referred to as the “product view,” illuminates the application of AF SEAM in the context of other associated activities.

Figure 6.7 Traditional “Product” Focus of Systems Engineering

An enterprise must continually make resource allocation decisions in its never-ending drive to deliver the highest “value” to the customer at the lowest possible total ownership cost (TOC), within acceptable levels of risk.⁴ To achieve this goal, the data and information that are produced within the product view must be fed back to leadership.

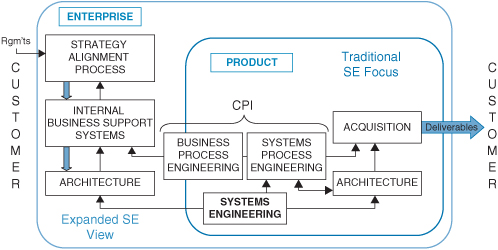

This provision of information to leadership is achieved through the addition of the “enterprise view” depicted in Figure 6.8. Note the addition of the Business Process Engineering activity adjacent to the Systems Process Engineering activity. Also note that although systems process engineering deals with customer requirements, more often than not these requirements are communicated through enterprise business processes.

Figure 6.8 Continuous Process Improvement

Gaining an increased understanding of how process-based assessments are executed in the context of other influencing activities is vitally important. Understanding the context of assessments also points one toward potential avenues for expansion of process-based analysis and improvement—hence the designation of continuous process improvement (CPI) and its applicability to both Business and Systems Process Engineering.

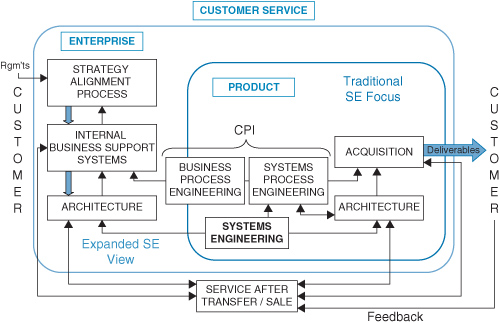

Data and information that are created within an enterprise are used to support resource allocation and other business decisions. However, this information is incomplete without inclusion of the customer’s voice. In Figure 6.9, the customer’s voice is depicted through the addition of the “service view.” Note the feedback loops, including where they traditionally enter into the various processes. Also note the inherent challenges in transmitting this feedback to the activities that would truly impart change upon the processes supporting each of the views.

Appropriately visualizing and addressing process activities as the heartbeat of the enterprise is another aid to the AF in its goal to deliver needed capabilities on time and within budgetary constraints. Beginning in 2007 with the CMMI-based AF SEAM development effort that focused on the SE processes primarily supporting the product view, this approach set into motion an entirely new way of examining this complex enterprise.

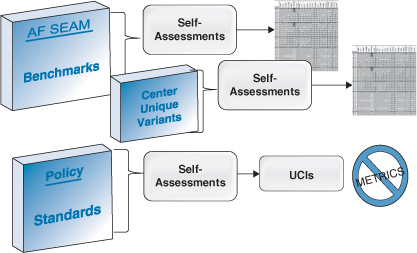

The path ahead for AF SEAM continues to look promising. Individual users are finding value in using AF SEAM, and even in an environment of austere resources it has taken hold as the SE process analysis tool of choice at the practitioner level. This “grassroots” support is directly attributable to the firm foundation upon which the model was constructed combined with the collaborative way in which it was built. Policy makers and leaders are also looking for ways to capitalize further on this process-based leading indicator approach. Figure 6.10 depicts the current state of AF SEAM in many locations as its use continues to mature. AF SEAM is being augmented by various center variants, while a separate path takes an office through its preparations for a Unit Compliance Inspection (UCI). A UCI measures various artifacts and, therefore, is an assessment of lagging indicators.

Figure 6.10 Current State of AF SEAM in Many Locations

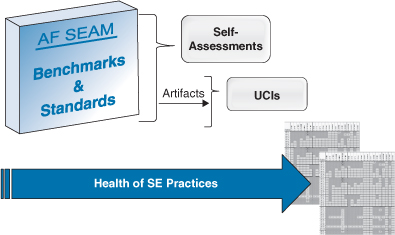

As AF SEAM continues to mature and its use proliferates across the AF centers and individual program offices, the AF is moving toward the merging of independent leading and lagging indicator assessments. Figure 6.11 suggests how further evolution of standard processes (leading) might merge desired outcomes (lagging) into a single coordinated effort. The combination of these two assessments into a single holistic approach is intended to lessen the workload on individual offices while simultaneously providing a clearer picture of processes to leaders.

Figure 6.11 Evolution of Standard Processes

Armed with the continually improving process-based approach described in this essay, leaders are more likely to take advantage of these combined tools, which promise to yield improved information with less overall effort. This evolution will, in turn, facilitate improved allocation of resources. Finally, as the challenge of working within highly complex systems-of-systems environments becomes the norm, it is increasingly important that the associated supporting processes have the ability to integrate with one another in ways previously unimagined. The standardization of AF SEAM and the associated processes it describes across the AF enterprise represents a positive step toward achieving these aims.

Lessons Learned by DGA on CMMI-ACQ

Dominique Luzeaux, Eric Million-Picallion, and Jean-René Ruault

General Context and Motivations for CMMI-ACQ

The Direction Générale de l’Armement (DGA) is part of the French Ministry of Defense and is responsible for the design, acquisition, and evaluation of the systems that equip the armed forces, the export of these systems, and the preparation of future weapon systems. Its action encompasses the whole lifecycle of these systems.

The DGA is organized into seven directorates: strategy, operations, technique, international development, planning and budget, quality and modernization, and human resources. The Operations directorate is directly responsible for the design and acquisition of the equipment used by the armed forces and the corresponding budget. Operations is divided into ten divisions: land systems, helicopters, missiles, aircraft systems, naval systems, space and C4ISR systems, deterrence, nuclear and bacteriological protection, the “Coelacanthe” weapon system, and the “Rafale” weapon system. The Land Systems division, which is the focus of this essay, is in charge of all land systems (i.e., combat, transportation, and bridging vehicles; direct and indirect fire systems; ammunitions; infantry and paratrooper equipment; various equipment for troop sustainment; and training and simulation).

The DGA passed ISO 9001 certification four years ago at the enterprise level and is engaged in continuously improving its main processes. The quality process implemented by the DGA relies on a long-term vision aimed at improving the value of acquired systems. Therefore, the decision was made in 2007 to obtain a CMMI level rating, starting with level 2 and then addressing higher levels.

The expected benefits of CMMI-ACQ for the DGA included the following:

• Improved efficiency, achieved by measuring tried and tested practices, capturing them in documented process references, and increasing professionalism

• Organizational improvement through better estimation, planning, and monitoring, as well as skills development and resource allocation

• Improved operational results through better adherence to planned budgets and schedules, along with productivity gains

• Recognition by partners, through objective proof of acquisition efficiency

To reach level 2 of the CMMI-ACQ model, an enterprise should implement project and acquisition management processes, such as Requirements Management (REQM), Configuration Management (CM), and Acquisition Requirements Development (ARD). These process areas implement DGA’s Stakeholder Requirements Definition Process and the Requirements Analysis Process.

These process areas are very important topics for DGA because they concern its core acquisition activities. Indeed, DGA’s architects take as input the needs expressed by the Joint Staff, as well as other stakeholder requirements, such as regulations and laws. They translate these needs and requirements into technical requirements to elaborate technical specifications. These technical specifications form the core of the acquisition agreement between the DGA and its suppliers. They allow the DGA to acquire solutions that satisfy stakeholder needs within the allotted budget and schedule constraints. Furthermore, the DGA’s architects must maintain the systems’ definition throughout the whole lifecycle.

It is the systems architect’s job to account for the flow of evolving needs and to assess their impacts on the definition of the current system architecture. The architect must upgrade the current systems’ architectures, maintain operational capabilities, and manage the systems within budget. These tasks require the architect to analyze the impact on all critical dimensions (i.e., operational, financial, schedule, security, and safety), decide how to implement these requirements, and elaborate adequate technical specifications. To achieve these goals, the architect must have an up-to-date architecture definition of the baseline system, a clear set of baseline requirements with their rationale, and appropriate system engineering methods and tools.

In this context, systems engineering processes and activities must be defined and applied. Moreover, these activities must become increasingly agile and efficient, so that the systems architect can master both the architecture definition of the system and its evolving requirements, assess the effects of change requests, and make the best decision.

The CMMI-ACQ model gives the DGA an opportunity to improve its systems engineering practices and thereby achieve this agility and efficiency. Current guidelines and procedures are mapped to CMMI-ACQ specific goals and practices. Where relevant documents are missing, the gap is identified and corrected, and appropriate sets of measurements are defined and applied to activities so that they can reach a higher level of efficiency.
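The mapping exercise described above can be pictured as a simple gap analysis. The following Python sketch is illustrative only and does not represent the DGA's actual tooling: the practice names are the Requirements Management specific practices as listed in this essay, while the document names and the function find_gaps are hypothetical.

```python
# Hypothetical mapping from REQM specific practices to existing guidance.
# The practice names follow this essay; the document names are invented.
practice_to_documents = {
    "Understand Requirements": ["requirements review procedure"],
    "Obtain Commitment to Requirements": ["stakeholder sign-off template"],
    "Manage Requirements Change": [],
    "Maintain Bidirectional Traceability of Requirements": ["traceability matrix guide"],
    "Identify Inconsistencies Between Project Work and Requirements": [],
}


def find_gaps(mapping):
    """Return the practices that no existing document covers, sorted by name."""
    return sorted(practice for practice, docs in mapping.items() if not docs)


gaps = find_gaps(practice_to_documents)
for practice in gaps:
    print(f"No supporting document for: {practice}")
```

Each practice that comes back without a supporting document marks a place where a guideline would have to be written or an existing one extended before measurements could be attached to the activity.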

CMMI Level 2 Certification Project

The CMMI certification process was launched in 2007. The first assessment focused on management practices and highlighted the differences between the practices implemented by the DGA and the recommendations of the CMMI-ACQ model. In the first stage, three of the DGA’s ten divisions were chosen, and all three underwent a Standard CMMI Appraisal Method for Process Improvement (SCAMPI) B evaluation. The goal was a level 2 certification, with evaluation of activities such as process management, project management, and contract and supplier management. This evaluation process ended with a successful SCAMPI A evaluation at the end of 2009 for two divisions; evaluation of the third division was scheduled to be completed at the end of 2010.

For the Land Systems division, several major projects in different phases of their lifecycle have been evaluated (i.e., infantry combat vehicle, soldier combat system, large-caliber indirect fire system, armored light vehicle, light battle tank, main battle tank, future crossing bridge system). Half of the projects have been evaluated on all nine process areas required to achieve level 2 certification. Objective evidence has been submitted to the evaluators, and interviews with the integrated project teams have been conducted.

For each project, all the available documents were analyzed before the interviews were conducted. Both divisions were evaluated on very different projects, but the evaluation yielded similar results—which is not especially surprising given that the DGA has been focusing on sharing common methods for quite some time and relies on a matrix organization that fosters such a fruitful exchange. Besides, both divisions have taken advantage of DGA’s CMMI project to share and harmonize their practices and to apply common improvements on a short-term basis in a true continuous improvement process.

Lessons Learned

The bottom-up approach underlying CMMI, which consists of identifying and sharing good practices as observed in everyday life, has been widely appreciated by DGA’s various teams. These teams are convinced that the CMMI approach supports a quality approach, an internal improvement process, and a standardization of identified best practices. For instance, the reporting spreadsheets developed for some of the projects have been generalized to the various projects.

Several DGA team members have dedicated a large part of their time to the CMMI project. (The word “project” is not misused here: The management processes have been applied in a recursive manner to the CMMI-based improvement effort with planning, resource estimation, project meetings, resource management, and so on.) Working groups have been established to find solutions when gaps between performance and the model are identified.

A special effort is dedicated to the collection of meaningful artifacts in each project. As the DGA’s experience demonstrates, the resource investment involved in CMMI-based improvement is not a negligible one. The amount of work on average for both of the DGA’s SCAMPI evaluations can be estimated at one person-day per sector per project per evaluation, if the project is already mature in its management. Because the DGA’s integrated project teams encompass various skills (e.g., project manager, technical experts, quality experts, finance experts, procurement specialists), the workload differs from one member of the team to the other; for this reason, most preparation work for the evaluations was performed by the quality experts.

Several opportunities for process improvement have been found, for example, in documentation management, resource allocation, and objective evaluations. Some of them were not a real surprise, and the CMMI project has helped to implement solutions to these issues. A side effect of the whole approach has been the forging of a much closer relationship between the evaluated divisions. Usually they deal with completely different customers and systems, but within the CMMI project they had to share their practices to achieve success within the given schedule.

The main criticism that could be raised about the effectiveness of this evaluation relates to the proof collection process, in that the definition of “proof” seemed rather subjective depending on the experts involved. The proof required from the divisions differed between the experts whom the teams relied on at the beginning of the project and the lead appraiser at the end of the project. This problem was exacerbated because the model (CMMI-ACQ V1.2) and the context of its implementation (a state-owned procurement agency) were new. We sometimes had the feeling that we were required to submit a “proof of a proof.” A lot of time was spent on that issue, and the return on investment for that first stage was rather low because most of the time the proofs already existed but were recorded differently.

CMMI-ACQ As the Driver for an Integrated Systems Engineering Process Applied to “Systems of Systems”

For “systems of systems,” DGA experts based the systems engineering processes on the ISO/IEC 15288:2008 standard. However, this standard describes high-level processes and does not describe in an explicit manner the means to measure and improve them. In this context, CMMI-ACQ complements ISO/IEC 15288:2008 by defining key systems engineering processes and enabling their measurement and improvement.

Requirements Management (REQM), Acquisition Requirements Development (ARD), and Configuration Management (CM), as defined by the CMMI-ACQ model, are the keystones of DGA systems engineering processes and activities.

The Requirements Management process area details the following specific practices: Understand Requirements, Obtain Commitment to Requirements, Manage Requirements Change, Maintain Bidirectional Traceability of Requirements, and Identify Inconsistencies Between Project Work and Requirements. This process area is of great importance to DGA because it deals with understanding input requirements, obtaining commitments from stakeholders, and managing requirements changes. These activities are based on bidirectional traceability and on consistency between requirements and project work, and they help the architect of the DGA complete his or her work.

Although this process area is necessary, it is not sufficient by itself. There is a huge gap between the customer requirements from the Joint Staff, which expresses its needs from an operational capability point of view, and the contractual requirements. Integrated teams must translate these operational customer requirements into functional requirements. To achieve this feat, they use functional analysis to elaborate a functional architecture that supports the expected operational capabilities.

After verifying the functional architecture and functional requirements with the Joint Staff using simulations and battle labs, the team translates them into technical requirements, which will be necessary for the acquisition process (e.g., tender, selection of a contractor) to follow.

The acquisition process can be modeled as the following activities:

• Operational requirements are translated into functional requirements.

• Functional requirements are translated into technical requirements.

• Technical requirements are used as the basis of the technical specification.

Every requirement is traceable from any one level to the one above and the one below. Likewise, each requirement, at each level, is traceable between engineering activities and integration activities, and follows a complete validation process.
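The three-level translation and the traceability rule above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of the DGA's tools: the class names, the requirement identifiers, and the sample requirement texts are all hypothetical, and the model covers only the link to the level above and the level below, not the engineering-to-integration links or the validation process.

```python
from dataclasses import dataclass, field

# The three levels named in the text, ordered from top to bottom.
LEVELS = ["operational", "functional", "technical"]


@dataclass
class Requirement:
    req_id: str
    level: str
    text: str
    parents: list = field(default_factory=list)   # IDs one level above
    children: list = field(default_factory=list)  # IDs one level below


class TraceabilityModel:
    def __init__(self):
        self.requirements = {}

    def add(self, req_id, level, text, parents=()):
        """Register a requirement and record its links in both directions."""
        # Validate every link first so a failure leaves the model unchanged.
        for parent_id in parents:
            parent = self.requirements[parent_id]
            if LEVELS.index(parent.level) + 1 != LEVELS.index(level):
                raise ValueError(f"{req_id} cannot derive from {parent_id}")
        for parent_id in parents:
            self.requirements[parent_id].children.append(req_id)
        req = Requirement(req_id, level, text, list(parents))
        self.requirements[req_id] = req
        return req

    def untraced(self):
        """List the IDs of requirements missing an upward or downward link."""
        missing = []
        for req in self.requirements.values():
            needs_parent = req.level != LEVELS[0]
            needs_child = req.level != LEVELS[-1]
            if (needs_parent and not req.parents) or (needs_child and not req.children):
                missing.append(req.req_id)
        return missing


# Hypothetical sample data, invented purely for illustration.
model = TraceabilityModel()
model.add("OP-1", "operational", "Cross a water obstacle")
model.add("FN-1", "functional", "Deploy a crossing bridge", parents=["OP-1"])
model.add("TE-1", "technical", "Bridge deployable from the carrier vehicle", parents=["FN-1"])
model.add("FN-2", "functional", "Support heavy vehicle crossing", parents=["OP-1"])

print(model.untraced())  # FN-2 has no technical requirement below it yet
```

In this sketch, FN-2 is reported because no technical requirement has been derived from it. That is the kind of break in the chain that bidirectional traceability is meant to expose before the technical specification is issued.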

Thus the Acquisition Requirements Development process area complements the REQM process area by specifying activities in three specific goals: Develop Customer Requirements, Develop Contractual Requirements, and Analyze and Validate Requirements.

During these activities, changes happen both as a result of evolving customer requirements and from compliance with suppliers’ requirements (e.g., unavailability of a technology, changing regulation). These changes have many effects. To avoid loss of the consistency of the architecture definition, each change triggers a change request procedure. An impact assessment is made based on the architecture definition of the current baseline. A review translates this information into a change request. If the change request is accepted, it implies an evolution of the architecture definition. These activities are controlled by the Configuration Management process area. The configuration control board is very important for tracking change and controlling consistency.
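The change procedure just described can be sketched as a small workflow in Python. This is a simplified illustration resting on several assumptions: the names Baseline, ChangeRequest, assess_impact, and board_decision are hypothetical, the sample data is invented, and the sketch folds the review step into a single change request object rather than modeling it separately. A real configuration control board would also record rationale and update the affected architecture elements.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    SUBMITTED = "submitted"
    ASSESSED = "assessed"
    ACCEPTED = "accepted"
    REJECTED = "rejected"


@dataclass
class Baseline:
    version: int
    # Maps each architecture element to the requirement IDs it satisfies.
    elements: dict


@dataclass
class ChangeRequest:
    cr_id: str
    changed_requirements: set
    status: Status = Status.SUBMITTED
    impacted_elements: list = field(default_factory=list)


def assess_impact(cr, baseline):
    """Find the baseline elements that touch any changed requirement."""
    cr.impacted_elements = sorted(
        name for name, reqs in baseline.elements.items()
        if cr.changed_requirements & set(reqs)
    )
    cr.status = Status.ASSESSED
    return cr.impacted_elements


def board_decision(cr, baseline, accept):
    """Record the board's decision; acceptance yields a new baseline version."""
    if cr.status is not Status.ASSESSED:
        raise RuntimeError("impact assessment must precede the board decision")
    if not accept:
        cr.status = Status.REJECTED
        return baseline
    cr.status = Status.ACCEPTED
    # Copy the elements so the earlier baseline stays intact for comparison.
    return Baseline(baseline.version + 1, dict(baseline.elements))


# Hypothetical sample data, invented purely for illustration.
baseline = Baseline(1, {"hull": ["TE-1", "TE-2"], "turret": ["TE-3"]})
cr = ChangeRequest("CR-7", {"TE-3"})
impacted = assess_impact(cr, baseline)
new_baseline = board_decision(cr, baseline, accept=True)
print(impacted, new_baseline.version)
```

Refusing a decision on a request that has not been assessed mirrors the order given in the text: the impact is evaluated against the current baseline first, and only an accepted request leads to an evolution of the architecture definition.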

Once the mapping between the various activities of the systems engineering process is complete, improving the CMMI level at the enterprise level provides a mechanism for improving systems engineering. The mapping takes advantage of the measurement and continuous improvement possibilities provided by the CMMI-ACQ model, while sticking to a standardized engineering process. The various tools and technical standards developed for systems engineering can be related to the best practices promoted by CMMI-ACQ.

CMMI-ACQ and the “Three R’s” of DoD Acquisition

Claude Bolton

Much has been written about the state of program management, often called “acquisition” in the U.S. Department of Defense (DoD), and much of this discussion has not been flattering or positive. Acquisition is described as constantly late to schedule. It does not acquire what was needed. Its performance is less than expected. It spends grossly over original cost estimates.

As a result, a number of laws, rules, policies, and regulations have been produced over the years in an attempt to fix—or at least improve—the DoD acquisition process. In addition, a number of studies, commissions, think tank reviews, and other analyses have been chartered to determine the cause of the poor state of the DoD acquisition process. At last count, more than 100 such studies had been conducted, out-briefed, and “shelved,” with no apparent actual improvement in sight.

One has to ask, “Why hasn’t there been any significant progress? Why does the DoD acquisition process continue to struggle and underperform? Why is it, according to a 2008 GAO report, that of the nearly 100 major DoD programs reviewed, the majority of them experienced cost overruns of 27 percent on average and were 18 to 24 months behind schedule?”

While the GAO report’s methodology has been challenged with no rebuttal from the GAO, the fact remains that many programs are not performing adequately despite years of focus on improving the DoD acquisition process. Again, the question is “Why?” Are we not asking the right questions when it comes to DoD acquisition improvement? Are we not addressing the real root problem, but rather focusing on its symptoms? Are we collectively personifying Einstein’s definition of insanity—that is, are we continuing to do the same thing, yet expecting a different outcome? When it comes to the DoD acquisition process, Einstein may be on to something: From my vantage point, with rare exception, virtually all of the studies to date have not addressed the real problem.

“And what is the real problem?” you ask. If I were to tell you now, you might not bother to read the rest of this essay. And given that I may have to “prepare the battlefield” so that you will better understand the answer, let me give you my thoughts first and then explain the answer.

Would you be surprised if I were to tell you that all this fuss over the DoD acquisition process could end in a heartbeat and the process improve almost overnight by just following the “three R’s”? Or that actually executing acquisition in the DoD is as simple as the “three R’s”? It’s true. Don’t believe me? Let me tell you about the “three R’s” and how they, combined with CMMI-ACQ, could change DoD acquisition forever.

Most of us heard about and studied the “three R’s” when we attended grammar school: reading, writing, and arithmetic. If you could master those skills, you were well on your way to a great education and much success in the U.S. school system. Everything we learned involved the basics of the “three R’s.” Just look at what we are doing at this very moment. I am writing an essay that you are reading, so we are collectively using two of the “three R’s.” The idea is very basic, but without a good understanding of the basics early in our formative years, we would have a tough time communicating today. The same is true with arithmetic. Earlier in this essay, I shared some simple arithmetic regarding the GAO. Again, without learning the basics early in our lives, you and I would be lost by now.

What does all of this have to do with DoD acquisition? My view is that DoD acquisition, when reduced to its basics and practiced accordingly, is as simple as the “three R’s.” I know, I know. You are probably saying, “Bolton is crazy and must have lost a few brain cells once he retired.” Well, I may have lost a few brain cells, but not when it comes to acquisition and doing it right.

Let’s take a look at DoD acquisition and let’s see how the “three R’s” apply. First, I will admit that the “three R’s” for acquisition are different from those for grammar school. The “three R’s” in DoD acquisition have different meanings but are still quite simple. The following is a brief description of what I mean by the acquisition “three R’s”: