10 Phase 4: Maintaining Access: Trojans, Backdoors, and Rootkits ... Oh My!

After completing Phase 3, the attacker has gained access to the target systems. So, the camel’s nose is under the tent. Now what? After gaining their much-coveted access, attackers want to maintain that access. This chapter discusses the tools and techniques they use to keep access and control systems. To achieve these goals, attackers utilize techniques based on malicious software such as Trojan horses, backdoors, bots, and rootkits. To understand how attacks occur and especially how to defend our networks, a sound understanding of these tools is essential.

Trojan Horses

You remember your ancient Greek history, right? The Greeks were attacking the city of Troy, which was well protected against external attacks. After numerous unsuccessful battles, the Greeks hatched an ingenious scheme to take the city. They built an immense wooden horse, which they left at the gates of Troy. The unsuspecting Trojans thought the horse was a gift from the retreating army (why anyone would think a retreating army would leave a gift is beyond me!). They brought the horse inside the gates, and, as the Trojans slept that night, the Greek warriors crept out of the horse and took the city.

Fast-forward a few millennia. Trojan horse software programs are among the most widely used classes of computer attack tools. Like their counterparts in ancient Greece, Trojan horse software consists of programs that appear to have a benign and possibly even useful purpose, but hide a malicious capability. An attacker can trick a user or administrator into running a Trojan horse program by making it appear attractive and disguising its true nature. Alternatively, bad guys can install a Trojan horse on a victim machine themselves, disguising the malicious code as some useful or expected program so that unsuspecting users and administrators cannot detect the attackers’ presence. Essentially, at some level, a Trojan horse is an exercise in social engineering: Can the attacker dupe the user into believing that the program is beneficial or con the user into running it? The moral of the story: Beware of geeks bearing gifts!

Some Trojan horse programs are merely destructive; they are designed to crash systems or destroy data. One such example of a purely destructive Trojan horse program was a DVD writer software package available for download on the Internet. This amazing gem had great functionality claims. It would convert a standard read-only DVD drive (used to install software or play movies) into a drive that could write DVDs—all through just installing this free software upgrade! According to the README file distributed with this apparently fantastic tool, you could create your own movie DVDs or back up your system with just a free software upgrade. There were only two catches to this astounding deal. First, it is simply physically impossible to do this in software when the underlying hardware is incapable of this function. Second, and tragically, the tool was a Trojan horse that deleted all contents of the poor users’ hard drives. Unfortunately, some unwitting users downloaded the tool and lost all of their data.

Whereas some Trojan horse tools are merely destructive, other Trojan horse programs are even more powerful, allowing an attacker to steal data or even remotely control systems. But let’s not get ahead of ourselves; to understand these capabilities, it’s important to explore the nature of another category of attack tools: backdoors.

Backdoors

As their name implies, backdoor software allows an attacker to access a machine using an alternative entry method. Normal users log in through front doors, such as login screens with user IDs and passwords, token-based authentication (using a physical token such as a smart card), or cryptographic authentication (such as the logon process for Windows or SSH). Attackers use backdoors to bypass these normal system security controls that act as the front door and its associated locks. Once attackers install a backdoor on a machine, they can access the system without using the passwords, encryption, and account structure associated with normal users of the machine.

The system administrator might add new-fangled, ultra-strong security controls for access to a machine, requiring super encryption and multiple passwords for any user on the box. However, with a backdoor in place, an attacker can access the system on the attacker’s terms, not the system administrator’s terms. The attacker might set up a backdoor requiring only a single backdoor password for access, or no password at all. The classic movie WarGames illustrates the backdoor concept quite well. In that movie, the attacker types in the password Joshua. For the main computer in WarGames, typing that password activated a backdoor that allowed the attacker, as well as the original system designer, to have complete access to the entire system.

Netcat as a Backdoor on UNIX Systems

As we discussed in Chapter 8, Phase 3: Gaining Access Using Network Attacks, a simple yet powerful example of a backdoor can be created using Netcat to listen on a specific port. You remember our good friend Netcat, the tool that is designed to simply and transparently move data around the network from any port on any machine to any other port on any other machine. Suppose an attacker has gained access to a system (perhaps using one of the techniques discussed in Chapter 7, Phase 3: Gaining Access Using Application and Operating System Attacks, or Chapter 8 such as buffer overflows or session hijacking), has broken into a user account with a login name of fred, and wants to set up a command-shell backdoor.

To use Netcat as a backdoor, the attacker must compile it with its GAPING_SECURITY_HOLE option, so that Netcat can be used to start running another program on the victim machine, attaching standard input and output of that program to the network. This option can be easily configured into Netcat while the attacker is compiling it. With a version of Netcat that includes the GAPING_SECURITY_HOLE option, the attacker can run the program with the -e flag to force Netcat to execute any other program, such as a command shell, to handle traffic received from the network. After loading the Netcat executable onto the victim machine, an attacker who has broken into the fred account on a system can type this:

This command will run Netcat as a backdoor listening on local TCP port 12345. Remember, nc is the program name for Netcat. However, an attacker can call the Netcat program any other name desired. When the attacker (or anyone else, for that matter) connects to TCP port 12345 using Netcat as a client, the Netcat backdoor will execute a command shell. As we saw in Chapter 8, a Netcat client runs on the attacker’s machine to connect to a backdoor implemented as a Netcat listener on the victim machine. The attacker then has an interactive shell session across the network to execute any commands of the attacker’s choosing on the victim machine. The context of the command shell session (i.e., the account name, privileges, and the current working directory) will be the same as the attacker who executed the Netcat listener in the first place. In our example, the command was executed from an account belonging to the user fred, so the attacker using the backdoor will have fred’s privileges. Table 10.1 provides commands and explanations to show what an attacker sees on the screen when interacting with this backdoor listener. (The attacker’s keystrokes are in bold.)

Table 10.1 Attacker’s Netcat Commands and Responses for a Backdoor Listener with Explanations

There are several items of interest to note in this interactive session. First, notice that no user ID and password are required when going through this particular backdoor. The attacker simply connects to port 12345 and starts typing commands, which our Netcat listener dutifully feeds into the command line for execution. Of course, an attacker could have used a specialized login routine, requiring a password to access the backdoor. Sometimes, attackers write a simple authentication script around Netcat to check a user ID and password before running the command shell. Also, note that there is no command prompt displayed for these commands. The Netcat listener running /bin/sh on Linux or UNIX does not return a command prompt, requiring the attacker to type commands without the prompt character. When using the Windows version of Netcat, the familiar C:\> command prompt is displayed. Finally, notice how the commands are executed in the context of the user that started the backdoor listener. The ls command showed the contents of the working directory of the attacker when the Netcat listener was started. The whoami command showed the effective user ID to be fred, the account used by the attacker when the backdoor listener was run.

An attacker can also create a very similar backdoor on a Windows system using the Windows version of Netcat with the Windows command shell, cmd.exe. The command to execute to create such a listener is:

You might wonder, “Yes, but why? If the attacker has access to the system with account fred, why set up a backdoor listener for access? Why implement a backdoor when you’ve already got access through the front door?” Good question. Attackers often establish a backdoor as a hedge against the possibility that their normal front-door access might be shut down. A backdoor, ideally, will continue to provide access for the attacker even as the system configuration changes, with users being added and deleted and services being turned off and on. What if normal SSH access goes away because a new system administrator decides to disable SSH and uses a fancy Web-based administrator console for the box? The attacker can still use a backdoor to gain access even if the original entry point is closed by a more diligent system administrator. Once attackers gain access, they want to keep it. Backdoors provide just what the attackers need: reliable, consistent access on their own terms.
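From the defender's side of the fence, a listener like the one described above shows up as an open TCP port that nobody can account for. The following is a minimal sketch of that idea in Python, not a replacement for a real port scanner or host audit tool. The function names and the notion of an "expected" baseline set are assumptions made for illustration; it should only be pointed at machines you are authorized to test.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 0.5) -> bool:
    """Return True if a TCP connection to host:port is accepted."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise,
        # so a closed or filtered port does not raise an exception.
        return s.connect_ex((host, port)) == 0


def unexpected_listeners(host, ports, expected):
    """Return the ports that accept connections but are not in `expected`.

    `expected` is a hypothetical baseline of ports the administrator
    knows should be open on this machine.
    """
    return [p for p in ports if p not in expected and is_listening(host, p)]
```

An administrator might call unexpected_listeners("127.0.0.1", range(1, 1025), {22, 80, 443}) on a server and investigate anything it returns. A listener on a high port such as 12345 would only be caught if that port is included in the range checked, so the range and the baseline both need to reflect the system in question.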

The Devious Duo: Backdoors Melded into Trojan Horses

We’ve seen pure Trojan horses (the evil DVD writer example) and pure backdoors (the example with the Netcat listener executing a shell). Things get far more interesting when the two classes of tools are melded together into Trojan horse backdoors. These programs appear to have a useful function, but in reality, allow an attacker to access a system and bypass security controls—a deadly combination of Trojan horse and backdoor characteristics. Although not every Trojan horse is a backdoor, and not every backdoor is a Trojan horse, those tools that fall into both categories are particularly powerful weapons in the attacker’s arsenal.

Roadmap for the Rest of the Chapter

Throughout the rest of this chapter, we discuss several tools that fall into the Trojan horse backdoor genre, all operating at different layers of our systems: application-level Trojan horse backdoors, user-mode rootkits (which modify or replace critical operating system executable programs or libraries), and kernel-mode rootkits (which modify the kernel of the operating system). Section by section through the rest of the chapter, we dissect each of these layers one by one, examining the capabilities of malicious code at each layer and offering defenses for each. As we progress through these layers, the attacker’s ability to hide increases significantly. Table 10.2 highlights each of these classes of Trojan horse backdoors. In the table, an analogy is included to illustrate how the particular tool works. For the analogy, consider a scenario where you are trying to eat soup and an attacker is trying to poison you.

Table 10.2 Categories of Trojan Horse Backdoors

As you can see, all of the tools in this class are quite powerful in the hands of attackers, with each category providing a deeper level of infiltration and control of a system. Given their power and widespread use, it is critical to understand how these tools are used and how to defend against them. As we look at each level of malicious code in more detail, we’ll return to that “Analogy” column from Table 10.2 to get a feel for how each specimen of Trojan horse backdoor impacts your system, as though you were eating a bowl of poisoned soup. We analyze each category of Trojan horse backdoor, starting our detailed analysis by looking at the very popular application-level Trojan horse backdoors.

Nasty: Application-Level Trojan Horse Backdoor Tools

As described in Table 10.2, application-level Trojan horse backdoors are tools that add a separate application to a system to give the attacker a presence on the victim machine. This software could provide the attacker with backdoor command-shell access to the machine, give the attacker the ability to control the system remotely, or even harvest sensitive information from the victim. The application-level Trojan horse backdoor analogy of Table 10.2 involves an attacker adding poison to your bowl of soup. A foreign entity has been introduced into your meal, allowing an attacker access to your tummy.

An enormous number of application-level Trojan horse backdoors have been developed for Windows platforms of all types. Because of the use of Windows on millions of computers worldwide, attackers want to exercise control over these machines. Although the techniques discussed in this section could also be applied to Linux or UNIX machines (or any type of general-purpose operating system for that matter), they are most widely used on Windows systems, due to the prevalence of Windows on the desktop. Application-level Trojan horse backdoors come in a variety of flavors, each with a separate focus in allowing the bad guy to achieve some goal. Let’s zoom in on three different types of application-level Trojan horse backdoors that support different attacker goals: remote-control backdoors, bots, and spyware.

Remote-Control Backdoors

What can the poison in your belly allow the attacker to do on your machine? First, application-level Trojan horse backdoors can give an attacker the ability to control a system across the network. If an attacker can get one of these beasts installed on your laptop, desktop, or server, the attacker will “0wn” your machine, having complete control over the system’s configuration and use. With a remote-control backdoor, the attacker can read, modify, or destroy all information on the system, from financial records to other sensitive documents, or whatever else is stored on the machine. Critical system applications can be stopped, impacting Internet services or Windows-controlled machinery and equipment.

Demonstrating the power of remote-control backdoors in the hands of skilled attackers, Microsoft itself appears to have been attacked with this type of tool in October 2000. Based on reports in the media, it appears that a Microsoft employee working from home was the victim of an application-level Trojan horse backdoor called QAZ. Once installed on the telecommuter’s computer, the Trojan horse spread itself around Microsoft’s corporate network, gathering passwords and allowing the attackers to snoop around, even viewing source code from Microsoft products.

Figure 10.1 shows the simple architecture of these tools. The attacker installs or tricks the user into installing the remote-control backdoor server on the target machine. Once installed, the backdoor server waits for connections from the attacker, or polls the attacker asking for commands to execute. The attacker uses a specialized remote-control client tool to generate commands for the remote-control backdoor server. When it receives a command, the remote-control backdoor executes it and sends a response back to the client. The attacker installs the client on a separate machine, and uses it to control the server across a network, such as an organization’s intranet or the Internet itself.

Figure 10.1 An attacker uses a remote-control backdoor to access and control a victim across the network.

Software developers in the computer underground have released thousands of tools with the exact same architecture shown in Figure 10.1. Sadly, it almost seems like a rite of passage for some in the computer underground to create a remote-control backdoor and release it publicly. To demonstrate their coding skills, numerous attackers craft a remote-control tool for Windows, release it to the world, and then move on to bigger and better attacks, including the rootkit tools we discuss later in this chapter. When these remote-control backdoor tools are initially released, the antivirus vendors scramble to devise new signatures to detect each one. For a short time after release, however, signatures don’t yet exist, making the bad guy’s job easier.

The Megasecurity Web site at www.megasecurity.org lists thousands of remote-control backdoor tools. This very comprehensive site is maintained by Aphex, Da_Doc, Magus, and MasterRat. This team provides a detailed inventory, listing each tool’s name, author, country of origin, and a screenshot showing the user interface. They also include a list of TCP and UDP port numbers used by each remote-control backdoor, the registry keys it modifies or adds, and a brief summary of the tool’s functionality. Although Megasecurity offered the code of each tool for download in the past, they no longer distribute the software itself. Now, the site is focused on cataloging these tools, with a list sorted by month of release from March 2000 through today. Some months have a relatively small number of tools released (a dozen), but many months have more than 50 of these darn things! Figure 10.2 shows a small sample of the user interfaces of some of the items inventoried at Megasecurity.

Figure 10.2 A small sampling of remote-control backdoors at Megasecurity. Note the different languages and styles, yet all use the same remote-control client–server architecture.

Whenever I’m investigating an attack associated with a remote-control backdoor, I typically search the Megasecurity site based on the Registry keys, port numbers, or file artifacts I’ve found associated with the attacker’s tool. Although the Megasecurity site offers its own built-in search capability, I prefer using Google’s handy “site:” directive that we discussed in Chapter 5, Phase 1: Reconnaissance, to scour through Megasecurity’s records. I frequently perform Google searches for site:megasecurity.org followed by the port number, Registry key name, and file name that I’ve discovered in the wild during an investigation. Note that this technique of looking for file names and related artifacts via search engines is just the starting point of an investigation. I also often move the evil specimen to an isolated laboratory system without any sensitive data loaded on it. There, I run the evil program to observe its capabilities before completely restoring the deliberately infected system to its original state.
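The search described above is easy to assemble by hand, but when an investigation turns up many artifacts it can help to build the query string consistently. Here is a minimal sketch, assuming a hypothetical helper named site_query; the artifact values used with it are placeholders, not findings from a real case.

```python
def site_query(site: str, artifacts: list[str]) -> str:
    """Build a search query restricted to `site` for the given artifacts."""
    terms = []
    for artifact in artifacts:
        artifact = artifact.strip()
        if not artifact:
            continue  # skip empty entries
        # Quote artifacts containing spaces or backslashes (such as
        # Registry key paths) so they are searched as a single phrase.
        if "\\" in artifact or any(c.isspace() for c in artifact):
            terms.append(f'"{artifact}"')
        else:
            terms.append(artifact)
    return " ".join([f"site:{site}", *terms])
```

For example, site_query("megasecurity.org", ["12345", "server.exe"]) produces a query string that can be pasted into the search engine's query box.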

Another huge list of remote-control backdoor tools (running on a variety of Windows and non-Windows platforms) is maintained by Joakim von Braun (of von Braun Consultants) at www.simovits.com/nyheter9902.html. The von Braun list shows the names and default ports used by each Trojan horse backdoor tool. Although hundreds of varieties of these backdoor Windows tools exist, the script kiddie masses focus on a small number of these tools. Based on my observations of these tools in the wild, the most popular Windows remote-control tools are the following (in decreasing order of popularity):

- The Virtual Network Computing (VNC) tool, a free, cross-platform (UNIX and Windows) tool used for legitimate remote administration but often abused as a backdoor, available at www.realvnc.com.

- Dameware, a legitimate commercial remote-control tool available for a fee, but also with a free demo version, at www.dameware.com. Like VNC, this normally legitimate tool is sometimes abused by attackers as a backdoor.

- Back Orifice 2000, at www.bo2k.com, one of the first and most powerful tools in this category.

- SubSeven, a very popular remote-control backdoor suite, with several competing versions available on the Internet.

What Can a Remote-Control Backdoor Do?

Although the functionality of various remote-control backdoors varies, most of them draw from a basic set of similar underlying functions. One particular tool might offer better control of the GUI (such as VNC), whereas others might include more control over local resources, including the hard drive, memory, and file system (such as BO2K). Still others excel at acting as a relay in moving traffic across the network to obscure the location of the attacker (such as SubSeven). Although particular tool functionality varies, Table 10.3 provides a round-up of various capabilities included in a majority of the tools listed at the Megasecurity Web site.

Table 10.3 A Sampling of Remote-Control Backdoor Functionality

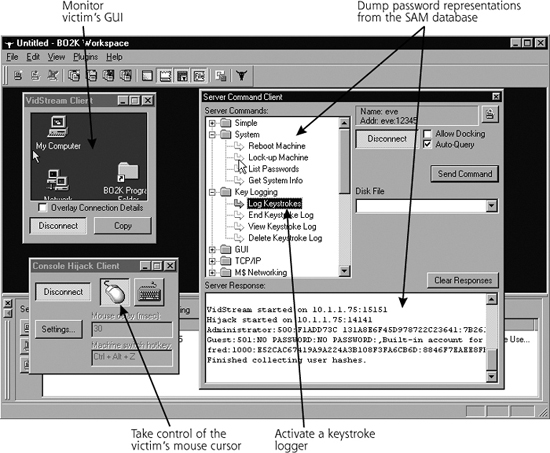

As an example of these capabilities implemented in one venerable remote-control backdoor, consider Figure 10.3, which shows an image of the BO2K screen. The attacker has configured BO2K to watch the GUI of the victim, dump the encrypted password representations from the target machine, and activate a keystroke logger. The attacker is now about to take over mouse control of the victim system.

What Is So Evil About That?

With these capabilities, most remote-control backdoors look remarkably like legitimate remote-control programs designed for system administrators and remote users, such as the commercial tools Symantec’s pcAnywhere, Altiris Carbon Copy, VNC, Dameware, Laplink, or even Microsoft’s own built-in Windows Remote Desktop utility. Indeed, many remote-control backdoor tools do the same thing as these useful remote-control programs, and in some cases, have added capabilities, together with source code. In fact, as we discussed earlier, attackers abuse some of the legitimate commercial tools such as VNC and Dameware, using them for illicit remote control.

In a sense, remote-control tools, whether created by commercial companies, open source developers, or the computer underground, are like a hammer. You can use a hammer to build a house, or you can hit someone in the head with it. It’s the user’s motivation, not anything in the tool itself, that determines whether the tool is used for evil. The tool can be used by the white hats (i.e., legitimate system administrators and security personnel) or the black hats (i.e., the attackers).

Build Your Own Trojans Without Any Programming Skill!

How does an attacker get a remote-control backdoor installed on the victim machine? Most often, the attackers trick the victim user into installing it. But there’s a catch: If I e-mail you a program titled Evil Backdoor or even VNC, you probably won’t run it (although, lamentably, some users will run anything you send them). One of the most popular methods for distribution of malicious code today remains mass e-mailing. Every day, millions of spoofed e-mails are sent from infected machines to everyone in the e-mail contact list of the infected machine, containing an attachment that implements an application-level Trojan horse backdoor. Because they use the harvested e-mail addresses from one victim’s e-mail contact list, these spoofed e-mail messages might appear to be legitimate, because they appear to be sent by an acquaintance. Increasingly, we are seeing highly skilled attackers sending targeted e-mail with Trojan horse backdoor attachments into specific companies and government organizations, designed to infiltrate those targets on behalf of an attacker. With a spoofed source e-mail address making the message appear to come from an important contact in the target, such as a CEO or other high-ranking person, the odds that the e-mail attachment will be executed increase massively.

To further increase the likelihood that a user will install the backdoor, the computer underground has released programs called wrappers or binders. These tools are useful in creating Trojan horses that install a remote-control backdoor. A wrapper attaches a given .EXE application (such as a simple game, an office application, or any other executable program) to the remote-control backdoor server executable (or any other executable, for that matter). The two separate programs are wrapped together in one resulting executable file that the attackers can name anything they want. Two executables enter the wrapper, and one executable leaves with the blended functionality of both input programs.

When the user runs the resulting wrapped executable file, the system first installs the remote-control backdoor, and then runs the benign application. The user only sees the latter action (which will likely be running a simple game or other program), and is duped into installing the remote-control backdoor. By wrapping a remote-control backdoor server around an electronic greeting card, I can send a birthday greeting that will install the backdoor as the user watches a birthday cake dancing across the screen. These wrapping programs are essentially do-it-yourself Trojan horse creation programs, allowing anyone to create a Trojan horse without doing any programming.

Numerous wrapper programs have been released, including Silk Rope, Saran-Wrap, EliteWrap, AFX File Lace, and Trojan Man. The AFX File Lace and Trojan Man programs even encrypt the malicious code before the wrapping process occurs, a process illustrated in Figure 10.4. That way, antivirus programs with signatures for the malicious code will not be able to detect the encrypted, wrapped malicious code, because the encrypted code no longer matches the signatures. To make this encrypted code functional, however, these wrappers include additional embedded software in the resulting output program that decrypts the malicious code when the combined package is executed on the victim machine. Of course, the antivirus vendors have created signatures to detect the decryption software employed by AFX File Lace and Trojan Man. Still, in future versions of these types of wrappers, we might see decryptors that dynamically alter their code to evade antivirus signatures. By recoding itself on the fly, such software would morph itself as it runs, altering its code, but not its functionality, by choosing from functionally equivalent machine-language instructions. Software implementing this technique is known as polymorphic code. This fancy term applies to pieces of code that have the exact same functionality, but a different set of instructions. With polymorphic code, an antivirus signature that detects one version of the code will not be able to detect the other, functionally equivalent, code. Yet, although the signature doesn’t match, the functionality does. Using a sophisticated wrapper with polymorphic capabilities, an attacker could create morphed decryptors that evade detection.
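To see why an exact-byte signature stops matching once the content is encoded, consider the following toy sketch. It uses a harmless placeholder string in place of any real code, a single-byte XOR standing in for whatever encryption a wrapper might use, and a naive substring check standing in for a scanner. All of the names are assumptions for illustration, and real antivirus engines are far more sophisticated.

```python
def xor_bytes(data: bytes, key: int) -> bytes:
    """Apply a single-byte XOR; applying it twice restores the input."""
    return bytes(b ^ key for b in data)


def matches_signature(blob: bytes, signature: bytes) -> bool:
    """Naive signature check: is the exact byte sequence present?"""
    return signature in blob


# A harmless placeholder playing the role of a known byte signature.
SIGNATURE = b"HARMLESS-DEMO-MARKER"

original = b"header" + SIGNATURE + b"trailer"
encoded = xor_bytes(original, 0x5A)   # same content, different bytes
decoded = xor_bytes(encoded, 0x5A)    # what exists after decoding at run time
```

Here the signature matches original and decoded but not encoded, which is why, as described above, the vendors write signatures for the decryption software instead: the decryptor has to be present in the clear for the package to work.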

Figure 10.4 Wrapping two executables into a single package, and using encryption to evade antivirus tools.

But Where Are My Victims?

One of the fundamental problems with these application-level Trojan horse backdoor tools, from an attacker’s perspective, involves knowing where the ultimate victims are. Consider a scenario where an attacker uses a wrapper program to create a holiday greeting card with a remote-control backdoor wrapped up inside. The bad guy sends the resulting package via e-mail to one victim. This victim runs the program and loves the dancing ornaments and jamming holiday tunes. The unsuspecting victim wants to spread this holiday cheer with other people, forwarding the pretty but poisonous e-mail to two friends. These two friends like the holiday greeting as well, and forward it to two friends, and so on, and so on, infesting hundreds or even thousands of computers with the remote-control backdoor. Ultimately, the attacker doesn’t know who all the victims are, and cannot remotely control them without knowing their IP addresses. After all, the remote-control client requires the attacker to enter the IP address of the victim to be controlled. How can an enterprising attacker solve this dilemma?

To solve this problem, some of the remote-control backdoor programs, including BO2K and SubSeven, include notification functionality to alert the bad guys when a new victim falls under their control. Some of these tools advertise the fact that a system with a remote-control backdoor on it has started up by sending an e-mail to the attacker in effect saying, “Come and get me!” Now, e-mail can take several minutes to propagate across the Internet. Attackers in a hurry might want real-time notification about a new victim, rather than waiting for e-mail to arrive. Impatient attackers sometimes rely on notification via an Internet Relay Chat (IRC) channel to announce a new remote-control backdoor server in real time. Beyond this announcement capability for newly infected systems, we’ll look at additional uses of IRC for application-level Trojan horse backdoors later in this chapter, when we cover bots.

Shipping Remote-Control Backdoors via the Web: ActiveX Controls

Remote-control backdoors get even more powerful when melded with some of the active content mechanisms on the World Wide Web. ActiveX is a Microsoft-developed technology for distributing executable content via the Web. Like Sun’s Java, ActiveX sends code from a Web server to a browser, where it is executed.

These individual applications are referred to as ActiveX controls. Unlike Java applets, which are confined to a sandbox that limits their ability to attack the host machine, an ActiveX control can do anything on users’ machines that the users themselves can do: alter the configuration, delete files, send data anywhere on the network, and so on. You simply surf to my Web site with a browser configured to run ActiveX controls, and my Web server pumps an ActiveX control including a remote-control backdoor server to your browser, which runs the program and installs my evil code without your noticing.

Microsoft has engineered ActiveX controls to run only if they have a proper digital signature, using Microsoft’s Authenticode technology. Unfortunately, users can disable this signature check in their browsers, allowing some very nasty code to run on their systems. Alternatively, an improperly signed or unsigned ActiveX control forces most browsers to prompt a user asking whether the untrusted code should be executed. Most users unwittingly click OK without realizing that they’ve just given control of their machines over to an attacker.

Trojan Horses of a Different Color: Phishing Attacks and URL Obfuscation

As we have seen, attackers frequently distribute backdoor software as e-mail attachments. However, another Trojan horse activity associated with e-mail has no attachment at all, but instead a link to a Web site that appears to belong to a legitimate online enterprise. In these so-called phishing attacks, the bad guys spew thousands or millions of e-mail messages to a target list of addresses harvested from victim machines. These e-mails are spoofed to appear to come from a trusted source such as a bank, e-commerce company, or other financial services organization dealing with sensitive data. Some of these phishing e-mails are quite convincing, exhorting users to click on the link to reset their password, review recent purchase activity, or otherwise log in to their account to handle an urgent situation. But, of course, the link in the e-mail points not to the legitimate Web site, but instead to a cleverly disguised Web site controlled by the attacker. When an unsuspecting user clicks the link and sees what appears to be the e-commerce site, he or she might fill in critical account information, including credit card numbers, Social Security numbers, or banking account numbers. The bogus Web site, operated by the attacker, then dutifully harvests this sensitive information on behalf of the bad guys, who will later use it for fraudulent transactions or full-scale identity theft.

With phishing, instead of distributing a Trojan horse backdoor as an e-mail attachment, the e-mail simply points to a Web site that is itself the Trojan horse. It sure looks like the user’s bank, but it is, in fact, an evil duplicate.

The links included in phishing e-mails actually lead to the attacker’s site, but they trick the user in any of a variety of ways. The attackers want their links in the e-mail to appear to point to the legitimate site, but to access their own evil site when clicked. Often, the attackers use the HREF attribute of an <A> (anchor) tag to display certain text for the link on an HTML-enabled e-mail client screen, with the link actually pointing somewhere else.

First, and perhaps most simply, the attacker could dupe the user by creating a link that displays the text www.goodwebsite.org on the screen but really links to an evil site. To achieve this, the attacker could compose a link like the following and embed it in an e-mail message or on a Web site:

<A HREF="http://www.evilwebsite.org">www.goodwebsite.org</A><p>

Most HTML-rendering mail clients merely show a hot-link labeled www.goodwebsite.org. When a user clicks it, however, he or she will be directed to www.evilwebsite.org. Browser history files, proxy logs, and filters will not be tricked by this mechanism at all, because the full evil URL is still sent in the HTTP request, without any obscurity. This technique is designed to fool human users only. Of course, although this form of obfuscation can be readily detected by viewing the source HTML of the e-mail message, it will still trick many victims and is commonly utilized in phishing schemes.
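Because the mismatch between the displayed text and the real target is sitting right there in the HTML, a mail filter or a curious analyst can check for it mechanically. The following is a minimal sketch, not a production filter: it uses Python’s standard html.parser module, and the names LinkAuditor, host_of, and suspicious_links are made up for this illustration. It flags only links whose visible text looks like a host name that differs from the host in the HREF.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkAuditor(HTMLParser):
    """Collects (href, visible text) pairs for every <a> element."""

    def __init__(self):
        super().__init__()
        self.links = []      # finished (href, text) pairs
        self._href = None    # href of the <a> currently open, if any
        self._text = []      # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names for us.
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def host_of(text):
    """Return the lowercase host if text looks like a URL or a bare host name."""
    candidate = text if "://" in text else "http://" + text
    return (urlparse(candidate).hostname or "").lower()


def suspicious_links(html):
    """Return (visible text, href) pairs where the text names a different host."""
    auditor = LinkAuditor()
    auditor.feed(html)
    flagged = []
    for href, text in auditor.links:
        shown = host_of(text)
        actual = host_of(href)
        # Only compare when the visible text looks like a host name at all.
        if "." in shown and " " not in text and shown != actual:
            flagged.append((text, href))
    return flagged


if __name__ == "__main__":
    sample = '<A HREF="http://www.evilwebsite.org">www.goodwebsite.org</A><p>'
    print(suspicious_links(sample))
```

A check like this catches only the simplest trick. Link text such as “Click here to log in” gives it nothing to compare, so it complements user awareness rather than replacing it.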

More subtle methods of disguising URLs can be achieved by combining this tactic with a different encoding scheme for the evil Web site URL. The vast majority of browsers and e-mail clients today support encoding URLs in a hex representation of ASCII or in Unicode (a 16-bit character set designed to represent more characters than plain old 8-bit ASCII). Using any ASCII-to-Hex-to-Unicode calculator, such as the handy free online tool at http://www.mikezilla.com/exp0012.html, an attacker could convert www.evilwebsite.org into the following hex or Unicode representations and include them in an HREF attribute:

- <A HREF="http://%77%77%77%2E%65%76%69%6C%77%65%62%73%69%74%65%2E%6F%72%67">www.goodwebsite.org</A><p>

- <A HREF="http://&#119;&#119;&#119;&#46;&#101;&#118;&#105;&#108;&#119;&#101;&#98;&#115;&#105;&#116;&#101;&#46;&#111;&#114;&#103;">www.goodwebsite.org</A><p>
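Encodings like these are trivial to reverse, which is why they fool people but not tools. As a rough sketch, assuming the link has already been pulled out of the message, the Python standard library can undo both the numeric character references (html.unescape) and the %XX hex escapes (urllib.parse.unquote). The helper name reveal_host is invented for this example.

```python
from html import unescape
from urllib.parse import unquote, urlsplit


def reveal_host(href):
    """Undo HTML character references and %XX escapes, then return the host."""
    decoded = unquote(unescape(href))
    return (urlsplit(decoded).hostname or "").lower()


if __name__ == "__main__":
    hex_form = ("http://%77%77%77%2E%65%76%69%6C%77%65%62"
                "%73%69%74%65%2E%6F%72%67")
    # Build the numeric-character-reference form of the same host name.
    ref_form = "http://" + "".join("&#%d;" % ord(c) for c in "www.evilwebsite.org")
    print(reveal_host(hex_form))
    print(reveal_host(ref_form))
```

Both calls recover the same plain host name, so an analyst reviewing a suspicious message can normalize every link before comparing it with the text shown to the user.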

These tactics just scratch the surface of the several dozen mechanisms bad guys use to obscure their URLs. Other tactics include sending JavaScript in the message that encrypts the e-mail content, including the URLs, and decrypts it only when the message is rendered and the script runs in a mail reader’s or browser’s HTML rendering engine. If a user views the source of the message, only the decrypting script will be displayed, along with a bunch of cryptographic gibberish. Other URL-obscuring tactics involve including special characters in the URL that prevent browsers from displaying the full URL, such as the %01 character, which made old versions of Internet Explorer stop displaying all parts of the URL after that character.

These phishing and URL obscuring attacks get even more insidious when combined with the evil SSL manipulation techniques we discussed in Chapter 8. A bad guy could generate an SSL certificate that appears to be from a bank or e-commerce company. When a user clicks the link in a phishing e-mail, an SSL connection is established with the attacker’s own Web server. At this point, the browser might alert the user that the certificate does not appear to be signed by a legitimate Certificate Authority. The security of the situation is then all left in the user’s hands. Will the user allow this unsecured connection and then supply the attacker’s Web site with sensitive information? Sadly, many users will, completely overriding any security that might have been offered by SSL. Phishing, URL obfuscation, and SSL trickery are a truly devious combination that we face on a regular basis today, making it very difficult for users to keep their information secure.

Also Nasty: The Rise of the Bots

The remote-control backdoors we’ve been discussing are designed so that the bad guy can have complete control over a machine, one victim at a time. The attacker can log in to his new prey, control it, log out, and then move on to control a different victim. However, another class of application-level Trojan horse backdoor raises the ante significantly: bots. Bots are simply software programs that perform some action on behalf of a human on large numbers of infected machines. Unlike the one-at-a-time architecture of remote-control backdoors, bots are designed for economies of scale. Using bot software, a single attacker could have dozens, hundreds, thousands, or even more systems under control simultaneously, each with bot software installed to maintain and coordinate that control, as illustrated in Figure 10.5. An attacker installs bots or tricks users into installing them on as many machines as possible, the more the merrier (for the attacker, that is).

Figure 10.5 Bots are designed to be used en masse, increasing the economies of scale of the bad guy’s attack.

Collections of bots under the control of a single attacker are called bot-nets, and the people controlling such systems are sometimes called bot-herders, a name that conjures images of a cowboy sitting at a laptop corralling digital “cattle.” With thousands or hundreds of thousands of bots, a bot-herder can cause significant damage. Indeed, the largest bot-net our team has handled involved 171,000 systems under the control of a single attacker! The attacker could have collectively utilized the resources of all of those victim machines, which included home user systems connected to DSL and cable-modem lines, university machines in computer centers and dorm rooms, corporate computers on vast intranets, and government machines scattered all over the Internet.

Bots originated in the early 1990s as a tool to maintain control of an IRC channel. Some owners and users of various IRC channels noticed that when they logged out of a channel, an attacker would grab control of the channel or take over their chat username with a bot. Once in control of the channel, the attacker would kick his or her enemies out of the channel and allow in only those who curried favor with the intruder. The bot would monitor the channel and grab control when the channel owner or user left. To help minimize this kind of attack, the channel owners themselves turned to bots, making sure they never gave up control of the channel in the first place by employing a bot to periodically send keep-alive traffic to the IRC channel. Of course, an arms race quickly erupted, with the bad guys deploying more and more bots to gang up on the channel owners’ own bots, trying to force them out. Although these little bot skirmishes of yesteryear fighting over IRC turf were certainly entertaining, newer bots have gone mainstream with far more functionality.

Dozens of bot variations are available today, with source code available freely for download and customization. Some of the most popular and prolific are the phatbot family (which includes more than 500 variations based on tweaks of the same original code, with names like phatbot, gaobot, agobot, and forbot), the sdbot family (which includes sdbot, rbot, and others), and the mIRC bot family. Each of these specimens includes very modular code, which is rapidly being updated by the attacker community. Because the code is so modular and available in its original source code format, new mutant strains of bots arise almost every day on the Internet. Whereas some bots are cobbled together out of poorly written code (such as the sdbot family), others are very elegantly written, finely tuned for their malicious purposes (such as the phatbot family). In fact, one bot researcher commented on the high quality of the phatbot code by saying, “The code reads like a charm; it’s like dating the devil.”

From a functionality perspective, most bots include numerous actions that the bot can take when it receives commands from the attacker across the network. The phatbot family includes more than 100 different functions, each in a modular block of code the attacker can choose to embed in the bot or leave out if the given function is not desired. Variations of phatbot include all of the functionality we analyzed for remote-control backdoors, including all of the features of Table 10.3, such as a remote command shell, remote registry alterations, and streaming video and audio of a victim machine. However, bot functionality has evolved even further than the Table 10.3 backdoor capabilities, including special features that take advantage of a large number of infected systems in a bot-net. Table 10.4 includes some bot-specific features.

Table 10.4 A Sampling of Bot Functionality

Most bot-nets, including variations of phatbot, sdbot, and mIRC bots, are controlled via IRC, a protocol that gives the attackers numerous advantages. First, many networks, especially those rife with poorly secured systems like home user machines and university student systems, allow outbound IRC communication. But even more important, IRC offers the attackers a built-in one-to-many communications path, in effect implementing a multicast channel.

Think about it. If an attacker wants to send a single command to 171,000 bot-infected machines, the bad guy could write code that creates this message once and then sends it to each of the 171,000 machines, one at a time. That’s a time-consuming process, even for software on a relatively fast machine. IRC is a much more efficient bot communication channel. The various bots in the bot-net are all configured to log into a single IRC channel. The attacker then logs into this channel and sends commands across the channel to all of the bots, which then execute the commands. The attacker doesn’t even need to use a specialized client to control the bots. Instead, the bad guy can log into the channel using any IRC client, and type special bot-control commands into the channel to make the bots do his or her bidding. There’s no need to replicate the message 171,000 times, because IRC does that automatically.

This use of IRC also lets the bots poll the attacker for commands, initiating an outbound connection from the bot-infected system to an IRC server. If the victim machine’s personal firewall blocks inbound connections, that’s okay for the attacker, whose commands are riding into the victim on an outbound IRC session. By default, IRC servers listen on TCP port 6667. Most bots today still use this default IRC port, although attackers are increasingly using the same IRC protocol, but configuring their IRC servers to listen on a different TCP port. That way, their actions are a bit stealthier, without the telltale TCP port 6667 instantly tipping off investigators.
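Since a port number alone is an unreliable tip-off, defenders can look at what the traffic says rather than where it goes. The sketch below is a deliberately simple heuristic and not a real intrusion detection signature: it assumes you already have the destination port and the first bytes of each outbound TCP session (from a sniffer or proxy log), and it checks whether the payload begins with a common IRC command such as NICK, USER, JOIN, or PRIVMSG. The function names and the session tuple layout are assumptions made for this example.

```python
IRC_COMMANDS = {"NICK", "USER", "JOIN", "PRIVMSG", "PING", "PONG", "MODE"}
DEFAULT_IRC_PORT = 6667


def looks_like_irc(payload):
    """Heuristic: does the first line of a TCP payload start with an IRC command?"""
    first_line = payload.split(b"\r\n", 1)[0].decode("ascii", errors="replace")
    words = first_line.split()
    if not words:
        return False
    # Lines sent by a server may begin with a ":prefix" before the command.
    if words[0].startswith(":") and len(words) > 1:
        command = words[1]
    else:
        command = words[0]
    return command.upper() in IRC_COMMANDS


def flag_sessions(sessions):
    """sessions: iterable of (dest_port, first_payload_bytes) tuples.

    Returns (port, reason) for each session worth a closer look.
    """
    flagged = []
    for port, payload in sessions:
        if looks_like_irc(payload):
            if port == DEFAULT_IRC_PORT:
                flagged.append((port, "IRC on default port"))
            else:
                flagged.append((port, "IRC on nonstandard port"))
        elif port == DEFAULT_IRC_PORT:
            flagged.append((port, "default IRC port, unrecognized payload"))
    return flagged


if __name__ == "__main__":
    observed = [
        (6667, b"NICK bot123\r\nUSER a b c :d\r\n"),
        (8080, b"JOIN #channel\r\n"),
        (80, b"GET / HTTP/1.1\r\n"),
    ]
    for port, reason in flag_sessions(observed):
        print(port, reason)
```

Matching on protocol content catches the relocated server in the second sample session, which a rule keyed only to port 6667 would miss. Encrypted sessions would defeat this simple check.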

Although most bots use IRC today, a small number of them are employing other even more powerful protocols for communication with the attacker. IRC has numerous benefits for the bad guys, but it has one significant problem: its reliance on one or a small number of IRC servers to carry the message to all of the bots. If an investigator shuts down the IRC server or removes the particular channel used by the bot-net, the attacker is out of business, left with a headless bot-net that he or she cannot control. To alleviate this problem, some variations of phatbot employ another very pernicious method of communication, a peer-to-peer protocol called Waste. Originally created at Nullsoft, a subsidiary of America Online, for file sharing among users, Waste is a highly distributed communication mechanism, without a centralized server to coordinate communications. Using the Waste protocol, various bot-infected machines automatically discover each other by scanning for a certain attacker-chosen TCP port. Once they discover each other, each bot keeps the other bots up to date regarding commands received from the attacker by shipping the commands across the network to all other bot-infected systems that were discovered.

So, suppose an attacker has a bot-net of 171,000 systems, controlled via Waste. The attacker can inject commands into any one or more of these machines, which will dutifully relay that command to other systems on the bot-net, which will carry the command further to other systems in the bot-net, and so on and so forth until all of the massed hordes receive the attacker’s information. Now, suppose an investigator discovers some systems on the bot-net and shuts them down. Let’s assume that we’ve got an amazing investigator who is able to prune 30,000 bots off of this bot-net, removing the bot software from each of those machines. Is the attacker out of business now? Hardly! Using Waste, the remaining systems will continue to communicate the attacker’s wishes. With Waste, the bad guys have a much more resilient protocol than IRC. Expect to see much more of this kind of bot communication in the future.
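To see why pruning machines hurts a peer-to-peer design so much less than losing a central server, it helps to model both as graphs. The simulation below is a toy model, not the Waste protocol itself: it assumes each node keeps links to a handful of randomly chosen peers, scales the numbers down to 1,000 nodes with 175 removed (the same ratio as 30,000 out of 171,000), and measures what fraction of the survivors a relayed message still reaches. All function names and parameters are illustrative assumptions.

```python
import random
from collections import deque


def build_peer_mesh(node_count, peers_per_node, rng):
    """Give every node at least a few randomly chosen peers (links work both ways)."""
    links = {node: set() for node in range(node_count)}
    for node in range(node_count):
        while len(links[node]) < peers_per_node:
            peer = rng.randrange(node_count)
            if peer != node:
                links[node].add(peer)
                links[peer].add(node)
    return links


def star_topology(node_count):
    """Centralized model: node 0 is the server, every other node links only to it."""
    links = {0: set(range(1, node_count))}
    for node in range(1, node_count):
        links[node] = {0}
    return links


def reachable(links, start, removed):
    """Breadth-first relay: which surviving nodes receive a message from start?"""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for peer in links[node]:
            if peer not in removed and peer not in seen:
                seen.add(peer)
                queue.append(peer)
    return seen


def surviving_fraction(links, removed):
    """Fraction of surviving nodes still reached after some nodes are removed."""
    survivors = [node for node in links if node not in removed]
    if not survivors:
        return 0.0
    return len(reachable(links, survivors[0], removed)) / len(survivors)


if __name__ == "__main__":
    rng = random.Random(1)
    mesh = build_peer_mesh(1000, 6, rng)
    pruned = set(rng.sample(range(1000), 175))
    print("mesh, 175 nodes removed:", surviving_fraction(mesh, pruned))
    print("star, server removed:   ", surviving_fraction(star_topology(1000), {0}))
```

In the star model, removing the single server leaves every remaining node cut off, whereas in the mesh model the message still floods through nearly all of the survivors. That asymmetry is the resilience the attackers are after.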

One additional bot feature included in some variants of the phatbot family is worth noting: the ability to detect a virtual machine environment. Some bot authors recognized that the good guys are researching the latest bots by running them in a virtual machine environment, such as VMware or VirtualPC, to perform dynamic analysis of the bot’s behavior. These virtual machine tools let a user run one or more guest operating systems on top of a host operating system. With these tools, you could run three or four Windows machines on a single Linux box, or vice versa. Whenever I’m looking at the latest bot myself to see how it functions, I instinctively run the tool in VMware. If the bot under analysis hoses up my virtual machine, VMware lets me revert to the last good virtual machine image, quickly and easily removing all traces of the bot and any damage it caused without having to reinstall my operating system.

Yet, because so many researchers rely on virtual machine environments to analyze malicious code such as bots, the bad guys are trying to foil our analysis. Some phatbot specimens check to see if they are running in a virtual machine. If so, they shut off some of their more dastardly functionality so that researchers cannot observe it. This capability reminds me of some of the actions of my own children. My son sometimes gets into fights with my daughter while I’m in the other room. I hear a huge commotion and the upset shouts of my daughter, a sure sign that the boy has done something wrong. Yet, when I walk into the room to scope out the situation, my son almost always smiles at me with a look of pure innocence on his face, as if to say, “I’ve done nothing wrong, Daddy. Please move on.” Malicious code, in the form of virtual-machine-detecting bots, sometimes operates in the same manner when a researcher is investigating its capabilities.

Most of today’s bots detect virtual machines in a very lame fashion by looking for virtual machine environment artifacts in the file system, Registry, and running processes of the machine. If the bot finds any of the files, Registry keys, or processes associated with VMware or VirtualPC, it alters its functionality. However, these types of artifacts are typically created in the host operating system, and are often left out of the guest operating system itself, where the researcher typically executes the bot. Thus, most of today’s virtual-environment-detecting capabilities can be trivially fooled. But that won’t always be the case.
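Researchers can turn this weakness to their advantage by auditing an analysis guest for the very artifacts a bot would look for, and cleaning up anything that gives the game away. Here is a small sketch of that audit. It works on an inventory you supply (lists of file paths, Registry key names, and process names gathered however you like) rather than probing the system itself, and the indicator substrings are assumptions chosen for illustration, not a complete or authoritative list.

```python
# Illustrative indicator substrings; assumptions for this sketch only.
VM_INDICATORS = ("vmware", "virtualpc", "virtual pc")


def visible_artifacts(inventory, indicators=VM_INDICATORS):
    """inventory maps a category name to a list of artifact names.

    Returns {category: [names containing any indicator substring]},
    omitting categories with no matches.
    """
    hits = {}
    for category, names in inventory.items():
        matched = [name for name in names
                   if any(marker in name.lower() for marker in indicators)]
        if matched:
            hits[category] = matched
    return hits


if __name__ == "__main__":
    guest = {
        "files": [r"C:\Program Files\VMware\VMware Tools\vmtoolsd.exe",
                  r"C:\Windows\notepad.exe"],
        "processes": ["explorer.exe"],
    }
    print(visible_artifacts(guest))
```

An empty result suggests that this naive style of check would find nothing in the guest. It says nothing about the more fundamental detection techniques.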

A brilliant researcher named Joanna Rutkowska has introduced a tool at www.invisiblethings.org that detects a virtual machine environment in a much more subtle and fundamental way. Her tool is called the Red Pill, in homage to the Matrix movie where Keanu Reeves’ character Neo takes a Red Pill to leave the Matrix and enter the real world. The Red Pill program runs a single machine-language instruction for x86 processors, called SIDT. This instruction stores the contents of the Interrupt Descriptor Table Register (IDTR) in a given memory location.

You see, the IDTR points to a table in memory that tells the operating system where it should go to get code to handle various types of interrupts. Under normal circumstances, this interrupt table (pointed to by the IDTR) is typically located very near the start of system memory. Yet, when two machines are running on a single piece of hardware (which they are in the case of a host and guest operating system of a virtual machine environment), they cannot use the same IDTR, because that would make them pretty much the same operating system. Therefore, virtual machines typically have their own interrupt table located at a higher memory location than a real system’s interrupt table.

The Red Pill simply looks at the IDTR (via the SIDT instruction). Specifically, it examines the most significant byte of the interrupt table’s base address. If that byte is small (0xd0 or less), the Red Pill prints out a message saying that we are running on a real operating system. If it is greater than this value, the Red Pill says we’re on a virtual machine. It works amazingly well on both Linux and Windows, with both VMware and VirtualPC, and is extremely hard to dodge. I expect to see the technique used in future iterations of bots very soon.
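Python cannot execute SIDT directly, but the decision the Red Pill makes afterward is easy to illustrate. The sketch below assumes a 32-bit x86 system, where SIDT writes six bytes (a 2-byte table limit followed by a 4-byte base address, little-endian), and assumes the comparison is made against the most significant byte of that base address with a threshold of 0xd0. The sample values in the usage section are illustrative, not measurements.

```python
import struct

THRESHOLD = 0xD0


def idt_base(idtr_bytes):
    """Split the 6 bytes stored by SIDT into limit and base; return the base."""
    limit, base = struct.unpack("<HI", idtr_bytes)
    return base


def looks_virtual(idtr_bytes):
    """Red Pill style test on the top byte of the IDT base address."""
    top_byte = idt_base(idtr_bytes) >> 24
    return top_byte > THRESHOLD


if __name__ == "__main__":
    # Illustrative values only: a low base address versus a high one.
    native_like = struct.pack("<HI", 0x07FF, 0x80FFFFFF)
    guest_like = struct.pack("<HI", 0x07FF, 0xFFC18000)
    print(looks_virtual(native_like), looks_virtual(guest_like))
```

The entire check boils down to one comparison on one byte, which is what makes the technique so compact and so easy to fold into malicious code.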

Distributing Bots: The Worm-Bot Feedback Loop

We’ve analyzed bot functionality and bot communications, but how do these bots get installed on a victim machine in the first place? Attackers sometimes rely on the same vectors for bot propagation they’ve historically used to deploy remote-control backdoors, namely, installing bots themselves or tricking users into installing them. Although such techniques certainly work, they can be difficult avenues by which to achieve a truly enormous bot-net. To improve their chances of conquering hundreds of thousands of victims with a bot, attackers have turned to worms.

Worms are self-replicating code that propagates across a network in an automated fashion. A worm conquers one machine using a given exploit, such as a buffer overflow vulnerability. Then, once lodged into that victim system, the worm uses it to scan for and compromise other machines. This new set of victims is likewise used to scan for and take over even more systems, resulting in an exponential rise in the number of systems with the worm installed.

Historically, worms focused on spreading copies of themselves. Worms begat worms, which begat more worms. But today, attackers are using worms and bots together. Suppose an attacker has compromised only ten measly machines with a bot. That bad guy could write a worm to infect new machines, and use those ten bot-infected boxes as a nice running start for worm distribution. Let’s suppose that those ten bots spread the worm to 100 systems each, resulting in 1,000 newly compromised machines. The attacker can include that very same bot as a payload in the worm. When the worm takes over a new victim, it carries the bot (and with it, the attacker’s control) to that new system. Now, the bad guy is up to more than 1,000 bot-infected systems, a 100-fold increase in the bot-net size. The attacker can then craft a new worm that exploits another flaw, using the more than 1,000 bot-infected machines to compromise, let’s say, another 100,000 machines, installing a bot on them as well. So, we’ve entered a vicious feedback loop, as illustrated in Figure 10.6. Bots are spreading worms, which are spreading bots, which are spreading even more worms. No wonder the bad guys are establishing vast bot-nets around the world!
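The arithmetic behind this feedback loop is worth spelling out. The short calculation below assumes, as in the example above, that every bot in the current bot-net delivers the worm to 100 new machines in each round. The function name and its parameters are made up for this illustration.

```python
def botnet_after_rounds(initial_bots, new_victims_per_bot, rounds):
    """Return the bot-net size before the first worm and after each round."""
    totals = [initial_bots]
    for _ in range(rounds):
        current = totals[-1]
        # Every existing bot adds new_victims_per_bot freshly infected machines.
        totals.append(current + current * new_victims_per_bot)
    return totals


if __name__ == "__main__":
    print(botnet_after_rounds(10, 100, 2))  # [10, 1010, 102010]
```

Two rounds take the attacker from ten machines to just over 100,000, which matches the rough figures in the scenario and shows why each round multiplies, rather than adds to, the attacker’s reach.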

Figure 10.6 Bots spread worms, which spread bots, which spread worms, which...

One of the most popular forms of bot-worm combos is a mass-mailing worm that carries a bot. The attacker sends e-mail spam with an attachment claiming to be an important document or a critical system patch the user must install.

Some unsuspecting users run the attachment, which installs a worm–bot combo on their machines. The bot gives the attacker control. The worm component then harvests e-mail addresses from the users’ e-mail program, and forwards the same message to a new set of victims. Interestingly, many of these worms spoof the source address of the e-mail. So, suppose Victim A gets infected and has e-mail messages from Victim B and Victim C in his e-mail client. The nasty worm then sends an e-mail from Victim A’s machine, with a source e-mail address of Victim B and a destination address of Victim C. Victim C will not even realize that Victim A is infected, and might trust the e-mail appearing to come from Victim B. With thousands of e-mail addresses harvested from Victim A, this tactic can spread the worm and bot to a large number of new victims, where the cycle repeats itself. We’ve seen such tactics applied to many worms that carry bots, including variations of the widespread Sobig, Bagle, Netsky, and MyDoom malicious code. Such techniques are likewise applied in phishing attacks.

Additional Nastiness: Spyware Everywhere!

In addition to remote-control backdoors and bots, another frustratingly common form of application-level Trojan horse backdoor is spyware. The Internet today is a cesspool of spyware, with the threat growing all the time as unscrupulous advertisers and scam artists aggressively foist their spyware on huge numbers of users around the world. Innocent Web surfers are often shocked to discover dozens or even hundreds of spyware specimens installed on their systems. Spyware, as its name implies, spies on users to watch their activities on their machine on behalf of the spyware’s author or controller. This spying ranges from fairly innocuous activities to major invasions of users’ privacy, possibly even leading to identity theft. Some of the most popular spyware capabilities are summarized in Table 10.5. It is important to note a distinction between spyware and the backdoors and bots we’ve been analyzing. The remote-control backdoors of Table 10.3 and the bots of Table 10.4 typically include huge amalgamations of different functional doo-dads, bundling together many different rows from those tables into a single package. Individual spyware specimens, however, tend to be pretty focused, with each spyware package typically offering only one or two functions listed in Table 10.5. Some would consider this a major limitation, but, as someone who values privacy, I’m happy we haven’t seen all of these capabilities bundled together in a single package ... yet!

Table 10.5 A Sampling of Spyware Functionality

So this spyware is capable of some pretty invasive stuff, but how does it get installed on a victim machine in the first place? In some instances, spyware rides along inside a bot, installed by an attacker or a worm. However, by far the most common method of spyware propagation is users themselves, who are tricked into installing spyware that is bundled with other programs. Some of the add-on search bars for popular browsers include spyware that aggregates user surfing habits or even tailors search results based on advertisers’ wishes. Some computer games available for free or even on a commercial basis include spyware capabilities. A few other unique system add-ons, such as those annoying little animated mouse cursors, special screen backgrounds, and screen savers carry an undocumented extra spyware bonus packaged with their main functionality. A few pornographic Web sites require users to install special video player software or other tools to optimize those sites’ images on users’ machines. Such tools quite often include specialized spyware devoted to the porn industry.

Sometimes, spyware itself is disguised as an antispyware program, designed to trick users into installing it on their systems, thinking that they’ve gotten some level of protection. In particular, the wonderful Ad-Aware program by Lavasoft is a really good antispyware program, detecting many forms of spyware on a machine. Ad-Aware is available for free as a tool that you run on demand, or on a commercial basis with extra features like real-time spyware installation detection. I use Ad-Aware on my own machine on a regular basis and have been very pleased with its results in fighting nasty spyware. However, there are some evil imposters out there, with tools sometimes named A-daware and even Ada-ware that pretend to be the normal, wholesome Ad-Aware. Sadly, the imposters actually install spyware on users’ machines. Because of this concern, make sure you use Ad-Aware downloaded only from www.lavasoft.com and those mirrors that the main site directly links to. Otherwise, you never know what you’re going to get!

So many programs available for free download on the Internet today include spyware because the companies behind the spyware have made it economically beneficial for these programs’ authors to bundle in a little bit of spyware. I recently received a message from a software developer who had written a rather popular computer game, downloaded by 200,000 people over the last year. The game is available for free, and the author created it as a labor of love and to have some fun. This game author had received e-mail from a spyware purveyor containing a pretty lucrative offer. By adding a couple of small additional programs to his game installation package, this developer would reap significant financial rewards. For each installation of a tool that aggregates user surfing habits, the developer would receive a nickel. With every install of a search bar that would filter and inject ads into a user’s browser, the developer would get a dime. For a pop-up ad generator, the developer would get a quarter. And there were several other options offered on this spyware purveyor’s menu. With the whole menu in view, the developer realized that by bundling all of these spyware options into his game program, he could make approximately 95 cents for each installation. With over 200,000 people installing his game every year, the developer could make some serious cash on the side, almost $200,000 per year in extra income! Happily, the game author who e-mailed me was horrified at even receiving the offer, and never included these functions in the game. Sadly, however, not all software developers are so scrupulous. Many of them succumb to these scary offers, lacing their programs with an unadvertised spyware bonus. In effect, their programs actually become Trojan horse backdoors. They tease users with one useful or benign function, while surreptitiously installing another function that gives the attacker some level of access to or control over the victim machine and user.
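The economics are easy to check. The calculation below uses the figures from this story: a nickel, a dime, and a quarter for the three named components, with the remainder of the roughly 95-cent total coming from the other menu options, whose individual prices are not given. Amounts are kept in whole cents to avoid rounding surprises, and the names used are invented for this sketch.

```python
# Per-install payouts named in the offer, in cents.
NAMED_PAYOUTS = {
    "surfing-habit aggregator": 5,
    "ad-injecting search bar": 10,
    "pop-up ad generator": 25,
}
FULL_MENU_CENTS = 95          # approximate total for the whole menu
INSTALLS_PER_YEAR = 200_000


def yearly_income_dollars(cents_per_install, installs):
    """Yearly income in whole dollars for a given per-install payout."""
    return cents_per_install * installs // 100


if __name__ == "__main__":
    named_total = sum(NAMED_PAYOUTS.values())
    print("named components:", named_total, "cents per install")
    print("other options:   ", FULL_MENU_CENTS - named_total, "cents per install")
    print("full menu, yearly: $%d" % yearly_income_dollars(FULL_MENU_CENTS, INSTALLS_PER_YEAR))
```

At 95 cents across 200,000 installs, the total comes to $190,000 per year, which is the “almost $200,000” figure that makes such offers so tempting.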

Besides bundling with other programs, spyware (along with other forms of malicious code) is increasingly propagating via Web browser vulnerabilities. As we discussed in Chapter 7, attackers have exploited otherwise-innocent Web sites and placed malicious code designed to infect machines that browse these now-toxic sites. By simply surfing to the wrong site with a vulnerable browser, a victim machine becomes infected with spyware.

Defenses Against Application-Level Trojan Horse Backdoors, Bots, and Spyware

Bare Minimum: Use Antivirus and Antispyware Tools

The vast majority of the remote-control backdoors and bots described in this chapter have a well-known way of altering the system, adding particular Registry keys, creating specific files, and starting certain services. Antivirus programs include signatures to detect these artifacts created by each tool on a hard drive and in system memory. Although remote-control backdoors and bots are not computer viruses (because they do not automatically infect other applications or documents), they can be detected by antivirus tools. All of the major antivirus program vendors have released versions of their software that can detect and remove the most popular evil backdoors and bots. It’s important to note, however, that most antivirus tools do not have signatures for Netcat and VNC, two programs sometimes used legitimately, but often abused by attackers as remote-control backdoors.

Beyond the backdoors and bots, which can be controlled by antivirus tools, we also need to deploy antispyware tools diligently. These tools include signatures to look for the most common forms of spyware on the Internet. Some antivirus tools even include antispyware capabilities. Unfortunately, the antispyware capabilities of some of the antivirus tools are watered down, due to economic and legal factors. From an economic perspective, some antivirus vendors limit the comprehensiveness of the signature base of their bundled antispyware capabilities to help encourage customers to buy a separate add-on antispyware tool. Rather than selling one program to a user, the vendor can now sell two.

From a legal perspective, some spyware purveyors have sued antivirus companies, claiming that their so-called spyware programs aren’t, in fact, malicious. They point out that their programs are merely helping to customize the user’s Web experience based on that user’s particular needs and habits. Underscoring their position, these spyware people point out that their licensing agreements specifically tell users how their information will be gathered and used, and that users must agree to these actions before the program is installed. Of course, this licensing agreement is typically several pages long, written in indecipherable legalese, and flashed quickly on the user’s screen in small text with a big OK button that many users reflexively click. Thus, argue these spyware vendors, they’ve gotten the user’s permission, and therefore their tools aren’t evil. One person’s spyware is another person’s meal ticket, I suppose. When an antivirus company labels spyware as malicious, that costs the spyware authors money, so they sometimes respond with lawsuits. Many antispyware programs get around this legal imbroglio by not calling discovered spyware specimens “malicious code.” Instead, any discovered spyware is labeled Potentially Unwanted Programs (PUPs). It’s up to the user to evaluate whether a given PUP should be there or should be deleted, so the antispyware vendor has thus dodged some significant legal problems.

To deal with these issues, I prefer to run both an antivirus tool and a separate antispyware tool on each of my machines to get two layers of protection, one against each type of threat. That way, I don’t have to worry about watered-down antispyware capabilities impacted by economic or legal wrangling. I can also carefully manage my PUPs based on my own needs. And, best of all, some of the antispyware tools label Netcat and VNC as PUPs, letting me decide whether they are my own copies of these tools installed for administration, or some evildoer’s copies that I want to eradicate.

Because attackers are constantly developing new remote-control backdoors, bots, and spyware, it is critical for organizations to load the latest antivirus and antispyware definitions into antivirus and antispyware software. These definition files should be updated daily or as new signatures are released. The antivirus and antispyware vendors have all developed capabilities to download definitions across the Internet, and have included automatic installation of the latest checks. By taking time to implement an effective antivirus and antispyware program, users and organizations can minimize the threat posed by application-level Trojan horses and greatly improve the security of their critical information resources.

Looking for Unusual TCP and UDP Ports

Many of the remote-control backdoors and bots we’ve discussed listen on a given TCP or UDP port. These ports can be discovered using a variety of mechanisms that we discussed in Chapter 6, Phase 2: Scanning. Remember, the built-in Windows netstat command, as well as third-party tools like TCPView, Fport, and ActivePorts, can help you find strange listening ports on a Windows machine. On Linux and UNIX, the netstat command comes in handy as well, along with the lsof -i command.
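Reading netstat output by eye works for one machine, but comparing it against a list of ports you expect to be open is easy to automate. The sketch below parses text you have captured from netstat -an and reports any listening TCP ports that are not on your expected list. It assumes the address:port layout printed by Windows and Linux versions of netstat (some UNIX variants print address.port instead), and it ignores UDP, which has no LISTEN state to key on. The function names are hypothetical.

```python
def listening_ports(netstat_output):
    """Extract TCP ports in the LISTEN or LISTENING state from netstat -an text."""
    ports = set()
    for line in netstat_output.splitlines():
        fields = line.split()
        if not fields or not fields[0].lower().startswith("tcp"):
            continue
        if not any(field.upper().startswith("LISTEN") for field in fields):
            continue
        # The local address is the first field after the protocol with a colon.
        local = next((field for field in fields[1:] if ":" in field), None)
        if local is None:
            continue
        port = local.rsplit(":", 1)[1]
        if port.isdigit():
            ports.add(int(port))
    return ports


def unexpected_ports(netstat_output, expected):
    """Return listening TCP ports that are not in the expected collection."""
    return sorted(listening_ports(netstat_output) - set(expected))


if __name__ == "__main__":
    captured = """
Proto Recv-Q Send-Q Local Address    Foreign Address   State
tcp        0      0 0.0.0.0:22       0.0.0.0:*         LISTEN
tcp        0      0 10.0.0.5:22      10.0.0.9:51514    ESTABLISHED
tcp6       0      0 :::31337         :::*              LISTEN
"""
    print(unexpected_ports(captured, expected=[22]))
```

Any port the script reports deserves a follow-up with a tool that maps the port to the process holding it open, such as the ones mentioned above.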

Knowing Your Software

Although antivirus and antispyware tools provide a good deal of protection, in the end, you have to be wary of what you run on your systems. Understand who wrote your software and what it is supposed to do. When you troll the Internet and find some apparently new, useful tool, be very careful with it! Can you trust it? Antivirus and antispyware tools can help here by checking to see if the executable has any detectable signatures of malicious software. However, antivirus and antispyware tools are not a panacea. They only know certain characteristics of malicious software, and cannot predict the maliciousness of all programs.

Therefore, beyond virus and spyware checking, you should consider the developer of the program you are downloading. Is the developer trustworthy? Do you really want to run a program you downloaded from www.thisevilprogramwillannihilateyourcomputer.com, even if your antivirus and antispyware scanners give it an apparent clean bill of health? To avoid problems with application-level Trojan horse backdoor tools, only run software from trusted developers. Of course, many of the tools discussed in this book come from developers you might not trust. That is why you should use them with such care, on nonproduction systems for evaluation purposes.

So, who is a trusted developer, and how do you make sure software came from a trusted source? The software development community has developed a variety of techniques to determine the trustworthiness of software. Many software programs distributed on the Internet include a digital fingerprint so a user can verify that the program has not been altered. Other developers go further and include a digital signature to identify the developer of the program and verify its integrity. By recalculating the fingerprint or verifying the signature of a downloaded program, a user can be more certain that the program was written by the developer and was not altered by an attacker.

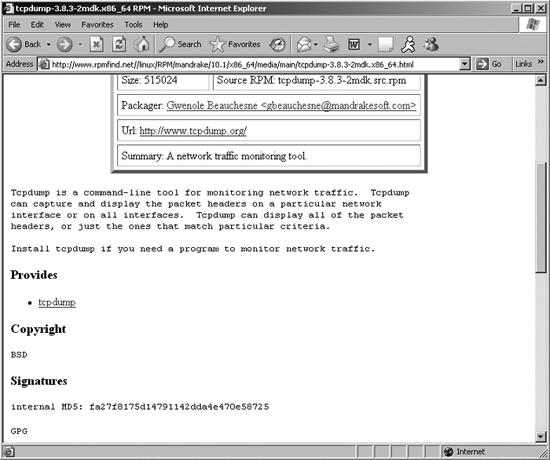

Digital fingerprints are typically implemented using a hash algorithm. The Message Digest 5 (MD5) algorithm and the Secure Hash Algorithm 1 (SHA-1) are common routines used by software developers to create a digital fingerprint. By running a program such as md5sum or sha1sum, both of which are distributed with many Linux operating systems, the developer creates a digital fingerprint. This fingerprint is stored in a safe place, such as the developer’s own Web site or a high-profile public Web site. After downloading a program from the developer, users can calculate the fingerprint of the program on their own system using md5sum or sha1sum on Linux. Alternatively, you could rely on the md5deep and sha1deep programs for Linux, UNIX, and Windows, written by Jesse Kornblum and distributed for free. The public fingerprint can be compared with the just-calculated fingerprint of the downloaded program to verify the program hasn’t been altered. In this way, fingerprints give users assurance of the integrity of a program. Figure 10.7 shows an MD5 fingerprint at the very useful www.rpmfind.net Web site for the sniffer program, tcpdump. Still, you need to be careful. If attackers break into a software distribution site, they might load a Trojan horse backdoor version of the software and alter the MD5 sum or SHA-1 hash on the site to match their own malicious code. For this reason, I always download a new program from a couple of different mirrors and compare the hashes between the different sites to minimize the chance of an attacker substituting evil code in place of the program I want to use.

Figure 10.7 MD5 hash of tcpdump helps ensure it hasn’t been Trojanized.
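The fingerprint check described above can be sketched in a few lines of Python using the standard hashlib module, which does roughly what md5sum and sha1sum do. The function names and the idea of passing in a list of values copied from several mirrors are my own illustrative assumptions, not part of any particular tool.

```python
import hashlib


def file_fingerprint(path, algorithm="sha1", chunk_size=65536):
    """Compute the hex fingerprint of a file, reading it in chunks."""
    digest = hashlib.new(algorithm)  # e.g. "md5" or "sha1"
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published(path, published_hex, algorithm="sha1"):
    """Compare a file's fingerprint with the value published by the developer."""
    return file_fingerprint(path, algorithm) == published_hex.strip().lower()


def mirrors_agree(published_values):
    """Return True only if every mirror lists the same fingerprint."""
    normalized = {value.strip().lower() for value in published_values}
    return len(normalized) == 1
```

A typical use would be to call `matches_published` with the downloaded file and the fingerprint shown on the developer’s site, and `mirrors_agree` with the fingerprints copied from two or three mirrors. A match tells you only that the file is the one the site describes. It says nothing about whether the site itself was compromised, which is why comparing several sites is worth the extra minute.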

Going further, other programs carry a digital signature created by the program’s developer. These digital signatures provide integrity assurances and authentication of the tool’s developer. For example, a developer could use the PGP or Gnu Privacy Guard (GnuPG) programs to digitally sign the code. Alternatively, Microsoft has created its Authenticode initiative for digitally signing software developed for Microsoft platforms. By using a PGP- or GnuPG-compatible program or Internet Explorer’s built-in Authenticode signature capabilities, a user can check the signature of a program to verify that the program came from a given developer and hasn’t been altered.
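As a rough illustration of the GnuPG case, the sketch below wraps the `gpg --verify` command from Python. It assumes that GnuPG is installed, that the developer published a detached signature file alongside the download, and that you have already imported the developer’s public key. The function names are hypothetical.

```python
import subprocess


def build_verify_command(signature_path, file_path):
    """Build the GnuPG command line for checking a detached signature."""
    return ["gpg", "--verify", signature_path, file_path]


def signature_is_valid(signature_path, file_path):
    """Return True if gpg reports a good signature (exit status 0)."""
    try:
        result = subprocess.run(
            build_verify_command(signature_path, file_path),
            capture_output=True,
            text=True,
        )
    except FileNotFoundError as error:
        # gpg itself is missing; that is different from a bad signature.
        raise RuntimeError("gpg does not appear to be installed") from error
    return result.returncode == 0
```

A zero exit status means only that the file was signed by a key in your keyring. Whether that key really belongs to the developer is something you have to establish separately, for example by checking the key’s fingerprint through another channel.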

So with these technologies, you can verify that a program was not altered and that it was written by a given developer. That still leaves open the issue of whether you can trust that developer. Who can you trust, after all? Can you trust the software from a major software company? Perhaps. Can you trust the software from a small developer on the Internet you’ve never heard of until you stumbled on their latest cool game? That is purely a policy issue, and a decision you have to make for yourself and your organization.

User Education Is Also Critical

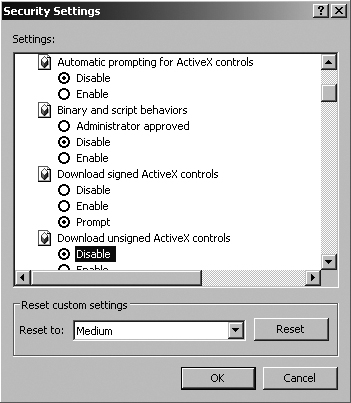

To prevent application-level Trojan horse backdoor attacks, you must configure your browsers conservatively so they don’t automatically run ActiveX controls downloaded from the network. All of your Web users should be educated to avoid alteration of the security settings of their browsers. In particular, the browser should be configured to execute only signed ActiveX controls from trusted software houses. Better yet, just disable all ActiveX. Now there’s an idea! Of course, if you turn off all ActiveX, some applications on the Internet might not work. Figure 10.8 shows the security settings of Internet Explorer that cover downloading and running ActiveX controls, located in your browser under Tools → Internet Options → Security → Custom Level. If users alter these settings, they could cause major trouble, allowing malicious software to seep in from the Web to be executed on a protected network.

Figure 10.8 Internet Explorer’s ActiveX control settings.

Because of these concerns, you might want to block ActiveX controls without proper digital signatures from trusted sources at your firewalls to prevent them from coming into your network. Several firewall vendors have the ability to drop all improperly signed ActiveX controls. By blocking bad ActiveX controls at the perimeter of your network, you won’t have to worry about these beasts getting through your barriers.

Finally, educate your user base about phishing attacks, and make sure they don’t respond to unsolicited e-mail that appears to come from e-commerce sites or banks. Whenever they surf to a Web site that requests sensitive information, users should check to make sure that any certificates associated with the site appear to come from a legitimate site and a legitimate Certificate Authority. If you find some nefarious phishing e-mail, report it to the Anti-Phishing Working Group, at www.antiphishing.org, a great team that works to stomp out phishing by shutting down phishers’ Web sites and improving user awareness.

Even Nastier: User-Mode Rootkits

The application-level Trojan horse backdoors we’ve discussed so far (Netcat listeners, remote-control backdoors, bots, and spyware) are separate applications that an attacker adds to a system to act as a backdoor. Although these application-level Trojan horse backdoors are very powerful, they are often detectable because they are separate application-level programs running on a machine. Going back to our soup analogy from Table 10.2, you could use a poison detector to determine if someone has added poison to your soup. Similarly, by detecting the additional software running on a machine (using antivirus and antispyware programs, for example), a system administrator can investigate and detect the application-level Trojan horse backdoor.

User-mode rootkits are a more insidious form of Trojan horse backdoor than their application-level counterparts. User-mode rootkits raise the ante by altering or replacing existing operating system software, as shown in Figure 10.9. Rather than running as a foreign application (such as Netcat or a bot), user-mode rootkits modify critical operating system executables or libraries to let an attacker have backdoor access and hide on the system. They are called user-mode rootkits because these tools alter the programs and libraries that users and administrators can invoke on a system, as opposed to the kernel-mode rootkits that change the heart of the operating system, the kernel, which we discuss later in this chapter. Back to our analogy, rather than adding poison to the soup, user-mode rootkits genetically alter your existing potatoes so that they become poisonous, making detection even more difficult. There is no foreign additive to the soup; instead, parts of the soup itself have been replaced with malicious alternatives. By replacing or tweaking operating system components, rootkits can be far more powerful than application-level Trojan horse backdoors.

Figure 10.9 Comparing application-level Trojan horse backdoors with user-mode rootkits (for Linux and UNIX systems in this example).

User-mode rootkits have been around for well over a decade, with the first very powerful rootkits detected in the early 1990s on UNIX systems. Many of the early rootkits were kept within the underground hacker community and distributed via IRC for a few years. Throughout the 1990s and into the new millennium, user-mode rootkits have become more and more powerful and radically easier to use. Now, user-mode rootkit variants are available that practically install themselves, allowing an attacker to “rootkit” a machine in less than ten seconds.

What Do User-Mode Rootkits Do?

Contrary to what their name implies, rootkits do not allow an attacker to gain root access to a system initially. Rootkits depend on the attackers’ having already obtained super-user access (that is, root on Linux and UNIX machines, or administrator or SYSTEM privileges on Windows machines). In a rootkit attack, this super-user access is likely obtained using the techniques described in Chapters 7 and 8, including buffer overflows, password cracking, session hijacking, and other means. A rootkit is a suite of tools that, once an attacker has conquered root, administrator, or SYSTEM privileges on a machine, lets the attacker maintain that super-user access by implementing a backdoor and hiding evidence of the system compromise. User-mode rootkits are available for a variety of platforms, including Linux, BSD, Solaris, HP-UX, AIX, and other UNIX variations. Several user-mode rootkits have also been released for Windows platforms. We’ll look at Linux/UNIX and Windows user-mode rootkits separately in this chapter.

Linux/UNIX User-Mode Rootkits

Most Linux and UNIX user-mode rootkits replace critical operating system files with new versions that let an attacker get backdoor access to the machine and hide the attacker’s presence on the box. Each rootkit might alter a half-dozen or more critical executables to achieve these goals. Most Linux/UNIX rootkits include several elements, including backdoors, sniffers, and various hiding tools, each of which we explore next.

Linux/UNIX User-Mode Rootkit Backdoors

Among the most fundamental components of many user-mode rootkits for Linux and UNIX is a full complement of backdoor executables that replace existing operating system programs on the victim machine with new rootkit versions. But how do these rootkits implement their backdoors? To understand rootkit backdoors, it’s important to know what happens when you log in to a Linux or UNIX machine. When you log in to a system, whether by typing at the local keyboard or accessing the system across a network using telnet, the /bin/login program runs. Alternatively, if you log in using SSH, the ssh daemon runs, typically located in /usr/sbin/sshd. The system uses the login or sshd executable to gather and check the user’s authentication credentials, such as the user’s ID and password for /bin/login and the user’s public key for specific configurations of sshd. Once the user provides authentication credentials, the login or sshd program checks the system’s password file or the user’s SSH credentials to determine whether the authentication credentials are accurate. If they are okay, the user’s identity has been verified, so the login or sshd routine allows the user into the system.

Many user-mode rootkits replace the login and sshd programs with modified versions that include a backdoor password for root access hard-coded into the login and sshd executables themselves. If the attacker enters the backdoor root password, the modified login and sshd programs give access to the system, instantly as root. Even if the system administrator alters the legitimate root password for the system (or wipes the password file clean), the attacker can still log in as root using the backdoor password embedded in the login and sshd executables. So, a rootkit’s login and sshd routines are really backdoors, because they can be used to bypass normal system security controls. Furthermore, they are Trojan horses, because although they look like normal, happy programs, they are really evil backdoors.

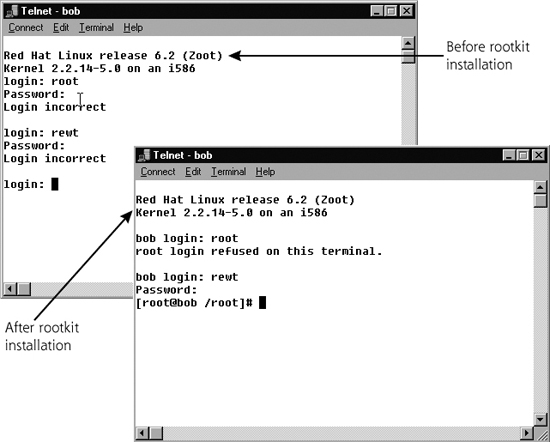

Figure 10.10 shows a user logging onto a system before and after a user-mode rootkit is installed. In this example, the login routine is replaced with a backdoor version from the widely used Linux RootKit, lrk6. Note the subtle differences in behavior of the original login routine and the new backdoor version.

Figure 10.10 Behavior of a login executable before and after installation of a Linux rootkit.

In Figure 10.10, the first difference we notice in the before and after pictures is the inclusion of the system name before the login prompt on the rootkitted system, which says “bob login:” instead of simply “login:”. Additionally, when we tried to log in as root, the original login routine requested our password. The system is configured to disallow incoming telnet as root, a common configuration on Linux and UNIX systems, so it gathered the password but wouldn’t allow the login. The original login executable just displayed the “login:” prompt again. The rootkitted login program, however, displayed a message saying, “root login refused on this terminal.”

Of course, a more sophisticated attacker would first observe the behavior of the login routine, and very carefully select (or even construct) a rootkit login routine to make sure that it properly mimics the behavior of the original login routine. However, if the behavior of your login routine (or sshd executable) ever changes, as shown in Figure 10.10, this could be a tip-off that something is awry with your system. You should investigate immediately. The difference could be due to a patch or system configuration change, or it could be a sign of something sinister.