Chapter 9. Virtual Worlds and Fraud

Jeff Bardzell, Shaowen Bardzell, and Tyler Pace

9.1 Introduction

The gaming industry is seeing a rapid increase in massively multiplayer online games (MMOGs), in terms of both the number of players and the amount of revenue generated. Online gaming, an isolated phenomenon only 10 years ago, is now a household staple. The significance of gaming now extends beyond entertainment systems, such as the Sony PlayStation, as non-entertainment uses of game technologies become prevalent. Health, education, military, and marketing uses of game technologies are leading a wave of “serious games,” backed by both government and private funding.

At the same time, and independently of the progress of the gaming industry, online fraud has grown at a remarkable pace. According to the research firm Javelin, identity theft cost U.S. businesses $50 billion in 2004 and $57 billion in 2005; more recent numbers have not yet been publicly released, but all indications point to a further increase. The costs of click fraud are in many ways more difficult to estimate, in part because it leaves no postmortem traces of having occurred. As early as 2004, click fraud was recognized as one of the emerging threats faced by online businesses [69].

An increasing number of researchers and practitioners are now starting to worry about a merger of these two patterns, in which MMOGs are exposed to online fraud and provide new avenues for online fraud [401]. The former is largely attributable to the potential to monetize virtual possessions, but will, with the introduction of product placements, also have a component associated with click fraud and impression fraud. The latter is due to the fact that scripts used by gamers may also host functionality that facilitates fraudulent activities beyond the worlds associated with the game. More concretely, gamer-created/executed scripts may carry out phishing attacks on players, may be used to distribute spam and phishing emails, and may cause false clicks and ad impressions.

9.1.1 Fraud and Games

While research on both fraud and, increasingly, video games is available, comparatively little has been written on intersections between the two. Identity theft is one domain of electronic fraud that has been explored in the context of games. Chen et al. [52] provide a useful analysis of identity theft in online games, with a focus on the East Asian context. In addition to providing a general introduction to online gaming, they explore several types of online gaming crime, especially virtual property and identity theft, relying in part on statistics collected by the National Police Administration of Taiwan. They consider identity theft the most dangerous vulnerability and argue that static username–password authentication mechanisms are insufficient, before exploring alternative authentication approaches.

It is beyond the scope of Chen et al.’s work to consider fraud more generally than identity theft and authentication. Likewise, in their pragmatic focus on designing better authentication systems, they do little conceptualizing or theoretical development in this space. As we approach that topic in this chapter, we will summarize current research on cheating and games, provide a functional and architectural overview of MMOGs, summarize types of electronic fraud, and introduce an updated model of security for online games.

9.1.2 Cheating and Games

An area of direct relevance for game security pertains to cheating in games. Cheating and fraud in games are similar but nonidentical phenomena. Yan and Randell [485] define cheating as follows:

Any behavior that a player uses to gain an advantage over his peer players or achieve a target in an online game is cheating if, according to the game rules or at the discretion of the game operator (i.e., the game service provider, who is not necessarily the developer of the game), the advantage or target is one that he is not supposed to have achieved. (p.1)

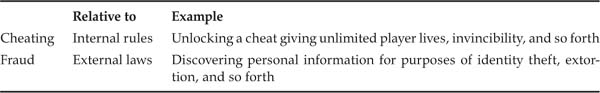

Both cheating and fraud involve players misusing game environments and rule systems to achieve ends that are unfair. One primary difference between the two is that, whereas cheating is defined relative to the rules of the game as set by the game service provider, fraud involves violations of national and international law (Table 9.1). In addition, whereas cheating is limited to the games themselves, game-related fraud may extend to external media, such as web forums and email. For example, an attacker may exploit trust built in a game environment to trick a user into installing malware, such as keylogging software, which in turn is used to gain access to real-life bank accounts.

Table 9.1. Fraud versus cheating in MMOGs.

In extreme cases, the actions of virtual identities have resulted in real-life murder. In 2005, the China Daily reported that two players found a rare item in the popular game Legend of Mir 3. The players sold the item for 7200 yuan ($939). When one player felt the other cheated him of his fair share of the profits, he murdered his former comrade [292].

The most systematic overview of online cheating can be found in Yan and Randell [485], which offers what is intended to be a complete taxonomy of cheating in games. The authors identify three axes with which to classify all forms of cheating in games:

• Vulnerability. This axis focuses on the weak point of the game system that is attacked. It includes people, such as game operators and players, as well as system design inadequacies, such as those in the game itself or in underlying systems (e.g., network latency glitches).

• Possible failures. This axis refers to the consequences of cheating across the foundational aspects of information security: loss of data (confidentiality), alteration of game characteristics (integrity), service denial (availability), and misrepresentation (authenticity).

• Exploiters. On this axis are the perpetrators of the cheating. It includes cooperative cheating (game operator and player, or multiple players) as well as individual cheating (game operator, single player).

Yan and Randell’s framework can be extended more generally to game-related fraud by expanding the scope of each category beyond games as information systems. Vulnerabilities, for example, include technologies that enable the proliferation of trojan horses. Possible failures extend to attacks that access banking information via game subscription payment systems. Exploiters go beyond developers and players to any criminals who use games as part of their portfolio for attacks, without regard for whether they are themselves players or service providers.

9.2 MMOGs as a Domain for Fraud

Massively multiplayer online games are information systems, as are web-based banking applications and auction sites. But MMOGs are also simulated worlds, with embodied avatars, sophisticated communication and social presence tools, and participant-created content. As a result, MMOGs have both commonalities and differences compared to two-dimensional, web-based applications.

9.2.1 Functional Overview of MMOGs

Massively multiplayer online games are a subset of video games uniquely categorized by a persistent, interactive, and social virtual world populated by the avatars of players. Persistence in MMOGs permits the game to run, or retain state, whether or not players are currently engaged with the game. Their interactivity means that the virtual world of an MMOG exists on a server that can be accessed remotely and simultaneously by many players [49]. The social nature of virtual worlds is a result of combining human players; methods for communication, such as chat or voice systems; and different mechanisms for persistent grouping.

MMOGs share the following traits with all games [378]:

• Players, who are willing to participate in the game

• Rules, which define the limits of the game

• Goals, which give rise to conflicts and rivalry among the players

To these traits, MMOGs add network communications, economies with real-world value, and complex social interaction inside a shared narrative/simulation space. Both the rule-bound nature of games and their sociocultural context (including, for example, persistent groups and social interaction) create possibilities for fraudulent behavior that do not have analogs in two-dimensional, web-based banking or auction sites.

Rules govern the development of a game and establish the basic interactions that can take place within the game world. Two types of rules exist in computer games: game world rules and game play rules (Table 9.2). Game world rules place constraints on the game’s virtual world (e.g., gravity). Game play rules identify the methods by which players interact with the game (e.g., number of lives). Differences in games are primarily derived from differences in game play rules [489]. Working around or manipulating these rules creates opportunities for cheating and fraud. Violations of game world and game play rules are not mutually exclusive; thus the same violation may involve the infraction of both types of rules.

Table 9.2. Game world and game play rules.

One may also consider a higher level of rules, describing the hardware and software limitations associated with the devices used to play games. This is a meaningful angle to consider, given that these “meta-rules” govern which types of abuses of the states of the games are possible—for example, by malware affecting the computers used to execute a given game and, thereby, the game itself.

MMOGs as social spaces require further breakdown of game play rules into intra-mechanic and extra-mechanic categories [379] (see Table 9.3). Intra-mechanic rules are the set of game play rules created by the designers of the game. The graphics card bug mentioned in Table 9.2 is an example of an intra-mechanic cheat. Extra-mechanic rules represent the norms created by players as a form of self-governance of their social space. Rules implemented by persistent user groups (often called guilds) that subject new recruits to exploitative hazing rituals would fall into this category. The interplay between intra- and extra-mechanic rules may change over time, with some game developers integrating player-created extra-mechanic rules into the game to form new intra-mechanic rules. The key insight here is that the concept of “rules” goes far beyond the rules of the game as intended by the game designer; it also encompasses everything from enabling technologies to social norms. All can be manipulated for undesirable ends.

Table 9.3. Intra- versus extra-mechanic rules.

As game worlds mature and the complexity of player interactions increases, the number and scope of the rules tend to increase dramatically. They certainly far outstrip the complexity of the rule systems involved in online banking sites and auctions, making rule complexity one of the distinguishing characteristics of MMOGs, from a security standpoint.

The complexity of game rules provides ever-increasing opportunities for misbehavior. Clever players may knowingly use a flaw in the game rules to realize an unfair advantage over fellow players. Players using a game flaw for personal gain are performing an “exploit” of the game [111]. Commonly, exploits are used to provide players with high-powered abilities that allow them to dominate against their peers in the competitive milieu of the game. Given the competitive nature of gaming, and the pervasiveness of “cheat codes” built into most large-budget games and their wide availability on the Internet and in gaming magazines, the discovery and deployment of exploits are often tolerated—even celebrated. However, the monetization of virtual economies creates growing incentives for players to exploit the game world as a means for cold profit, rather than for the pleasure of achieving game-prescribed goals. The ambiguous nature of the exploit as somewhat undesirable and yet a part of game culture, and as an act that is usually legal, creates a new conceptual and technical space for attacks.

9.2.2 Architectural Overview of MMOGs

Massively multiplayer online games are complex pieces of software, whose code is literally executed around the globe. Understanding the basics of their architecture provides the basis for understanding certain kinds of attacks.

Network Architecture

The network architectures for MMOGs are client/server, peer-to-peer (P2P), or a combination of the two models. Client/server architecture is the most common owing to its ease of implementation and its ability to retain complete control over game state on the server. On the downside, the client/server model is prone to bottlenecks when large numbers of players connect to a small set of servers. A P2P architecture reduces the likelihood of bottlenecks but requires complex synchronization and consistency mechanisms to maintain a valid game state for all players [38]. Many MMOGs, such as World of Warcraft, use the client/server model for game play and the P2P model for file distribution. For example, MMOGs require frequent updates, as game publishers enhance security and game play over the life of the game. Major updates, or patches, come out every few months and are often hundreds of megabytes in size; all players are required to install them as soon as they are released. The bandwidth issues associated with a large percentage of World of Warcraft’s 8 million subscribers needing a 250 MB file over a period of hours are far more practically resolved through P2P distribution (Figure 9.1) than through central server distribution.

Figure 9.1. MMOG patches are often distributed via both public and private peer-to-peer infrastructures. This screenshot shows the official World of Warcraft patch service.
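To give a rough sense of the scale behind the patch-distribution argument, the following back-of-the-envelope sketch estimates the average outbound rate a single central origin would need. The subscriber count and patch size come from the text; the fraction of subscribers updating, the six-hour window, and the share of bytes served by peers are assumptions chosen purely for illustration.

```python
# Back-of-the-envelope load estimate for a centrally served patch.
SUBSCRIBERS = 8_000_000        # from the text
PATCH_BYTES = 250 * 10**6      # 250 MB from the text (decimal megabytes)
FRACTION_UPDATING = 0.5        # assumption: half of all subscribers update
WINDOW_SECONDS = 6 * 3600      # assumption: updates spread over six hours


def central_load_gbps(subscribers, patch_bytes, fraction, window_seconds):
    """Average outbound rate (Gbit/s) if one origin serves every download."""
    total_bits = subscribers * fraction * patch_bytes * 8
    return total_bits / window_seconds / 10**9


def origin_load_with_p2p(central_gbps, peer_share):
    """Origin rate when peers supply `peer_share` (0..1) of all bytes."""
    return central_gbps * (1.0 - peer_share)


central = central_load_gbps(
    SUBSCRIBERS, PATCH_BYTES, FRACTION_UPDATING, WINDOW_SECONDS
)
print(f"central distribution: {central:.0f} Gbit/s")
print(f"90% served by peers:  {origin_load_with_p2p(central, 0.9):.0f} Gbit/s")
```

Under these assumptions the origin would have to sustain roughly 370 Gbit/s on average, and about a tenth of that if peers carried 90 percent of the traffic. Peak demand just after release would be higher than the average.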

Interfaces and Scripts

The client-side executable interfaces with the player through the screen and sound card as well as through input devices. Typical input devices include keyboards, mice and trackballs, joysticks, and microphones. Technically, each device writes to registers associated with that device, which the CPU reads after it detects an interrupt generated by the device. This causes the contents of the registers to be transferred to the executable. Any process that can write to these registers, or otherwise inject or modify data during the transfer, can impersonate the player to the client-side executable.

Alternatively, if the entire game is run in a virtual machine, the process may impersonate the player by writing data to the cells corresponding to the input device registers of the virtual machine. These processes are commonly referred to as scripts. Scripts present a major threat to game publishers, as they are the chief tools used by game hackers for activities ranging from simple augmentation of player abilities to large-scale attacks on game servers. A major outlet for scripts, botting, is discussed later in this chapter.

The detection of virtual machines is a growing concern for online game publishers. Game hackers may run virtual machines that host private game servers that grant the hackers unlimited access to modify the game world, avoid or disable DRM and copyright protection systems, and allow willing players to bypass the game publisher’s subscription services and play games for free on “black market” servers. Improper virtual machine detection techniques have led publishers to inadvertently cancel accounts for thousands of paying customers who legitimately run their games in virtualized or compatibility environments such as Wine to play the game on Linux [240].

Detecting Scripts

Misuse detection schemes represent attacks in the form of signatures to detect scripts and their subsequent variations designed to avoid known detection measures [399]. Misuse techniques are closely tied to virus detection systems, offering effective methods to protect against known attack patterns, while providing little resistance to unknown attacks. Pattern matching and state transition analysis are two of the numerous misuse detection system techniques available. Pattern matching encodes known attack signatures as patterns and cross-references these patterns against audit data. State transition analysis tracks the pre- and post-action states of the system, precisely identifying the critical events that must occur for the system to be compromised.
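The pattern-matching technique described above can be sketched in a few lines. This is a minimal illustration, not a production detector: the audit-record format (a semicolon-separated action string) and both signatures are hypothetical and were invented for this example.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Signature:
    name: str
    pattern: re.Pattern


# Hypothetical signatures; real ones would be derived from known scripts.
SIGNATURES = [
    # Five or more back-to-back cast/loot cycles with nothing in between.
    Signature("macro-loop", re.compile(r"(?:CAST;LOOT;){5,}")),
    # A small move immediately followed by a jump to huge coordinates.
    Signature("teleport-hack",
              re.compile(r"MOVE\(\d+,\d+\);MOVE\(\d{5,},\d{5,}\)")),
]


def match_signatures(audit_record: str, signatures=SIGNATURES) -> list[str]:
    """Return the names of all known signatures found in one audit record."""
    return [s.name for s in signatures if s.pattern.search(audit_record)]


print(match_signatures("CAST;LOOT;" * 6))
print(match_signatures("CAST;WAIT;LOOT;" * 6))
```

The second call returns an empty list: a script that inserts a single extra action into each cycle no longer matches the stored pattern, which illustrates why misuse detection offers little resistance to attack variants it has not seen.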

Anomaly detection techniques assume that all activities intrusive to the system are essentially anomalous [399]. In theory, cross-referencing a known, normal activity profile with current system states can alert the system to abnormal variances from the established profile and to unauthorized or unwarranted system activity. The statistical approach and predictive pattern generation are the two approaches used in anomaly detection systems. The statistical approach constantly assesses state variance and compares the computed profiles with the initial activity profile. Predictive pattern generation attempts to predict future events based on prior documented actions, using sequential patterns to detect anomalous actions.
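As a rough illustration of the statistical approach, the sketch below builds an activity profile from baseline measurements and flags observations that deviate too far from it. The choice of feature (actions per minute), the z-score test, and the threshold of three standard deviations are all assumptions made for this example; the text does not prescribe a particular statistic.

```python
from statistics import mean, stdev


def build_profile(baseline: list[float]) -> tuple[float, float]:
    """Summarise normal activity as (mean, sample standard deviation)."""
    return mean(baseline), stdev(baseline)


def is_anomalous(observation: float,
                 profile: tuple[float, float],
                 threshold: float = 3.0) -> bool:
    """Flag an observation whose z-score against the profile exceeds
    `threshold` standard deviations."""
    mu, sigma = profile
    if sigma == 0:
        # A perfectly constant baseline: any deviation counts as anomalous.
        return observation != mu
    return abs(observation - mu) / sigma > threshold


# Hypothetical baseline: a player's actions per minute over eight sessions.
profile = build_profile([58, 61, 60, 63, 59, 62, 60, 57])
print(is_anomalous(64, profile))    # within normal variation
print(is_anomalous(240, profile))   # far outside the profile
```

A fixed profile like this one also shows the weakness discussed next: an attacker who keeps a script's behavior inside the accepted range is never flagged.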

A variety of techniques exist for detection avoidance. According to Tan, Killourhy, and Maxion [406], methods for avoiding detection may be classified into two broad categories. First, the behavior of normal scripts is modified to look like an attack, with the goal of creating false positives that degrade anomaly detection algorithms. Second, the behavior of attacks is modified to appear as normal scripts, with the goal of generating false negatives that go unnoticed by both anomaly-based and signature-based detection systems.

An important subcategory of the latter detection avoidance method is facilitated by rootkits, software that allows malicious applications to operate stealthily. Rootkits embed themselves into operating systems and mask an attacker’s scripts from both the OS and any detection programs running within the OS.

9.3 Electronic Fraud

Electronic fraud is an umbrella term that encompasses many types of attacks made in any electronic domain, from web banking systems to games. In the preceding section, we explored the MMOG as a specific domain of electronic fraud. In this section, we summarize major forms of fraud in any electronic domain. Later, we engage in more specific exploration of fraud in MMOGs.

9.3.1 Phishing and Pharming

Phishing attacks are typically deception based and involve emailing victims, where the identity of the sender is spoofed. The recipient typically is requested to visit a web site and enter his or her credentials for a given service provider, whose appearance the web site mimics. This web site collects credentials on behalf of the attacker. It may connect to the impersonated service provider and establish a session between this entity and the victim, using the captured credentials, a tactic known as a man-in-the-middle attack.

A majority of phishing attempts spoof financial service providers. Nevertheless, the same techniques can be applied to gain access to any type of credentials, whether the goal is to monetize resources available to the owner of the credentials or to obtain private information associated with the account in question. The deceptive techniques used in phishing attacks can also be used to convince victims to download malware, disable security settings on their machines, and so on.

A technique that has become increasingly common among phishers is spear-phishing or context-aware phishing [197]. Such an attack is performed in two steps. First, the attacker collects personal information relating to the intended victim, such as by data mining. Second, the attacker uses this information to personalize the information communicated to the intended victim, with the intention of appearing more legitimate than if the information had not been used.

Pharming is a type of attack often used by phishers that compromises the integrity of the Domain Name System (DNS) on the Internet, the system that translates domain names such as www.paypal.com to the IP addresses used by computers to network with one another. An effective pharming attack causes an incorrect translation, which in turn causes a connection to the wrong network computer. This scheme is typically used to divert traffic and to capture credentials. Given that pharming can be performed simply by corrupting a user’s computer or its connected access point [389], and that this, in turn, can be achieved from any executable file on a computer, we see that games and mods (programs that run inside the game executable) pose real threats to user security.
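One way to picture the incorrect translation at the heart of pharming is a client-side sanity check that compares what the resolver returns against a list of addresses shipped with the client. The sketch below is an illustration under stated assumptions only: the host name, the pinned addresses (taken from documentation-only ranges), and the function names are hypothetical, and the resolver is passed in as a parameter so the check can be exercised without network access.

```python
import socket
from typing import Callable

# Hypothetical pinned addresses, using documentation-only IP ranges.
PINNED = {"login.example.com": {"192.0.2.10", "192.0.2.11"}}


def system_resolve(host: str) -> set[str]:
    """Resolve `host` through the operating system's resolver."""
    infos = socket.getaddrinfo(host, 443, proto=socket.IPPROTO_TCP)
    return {info[4][0] for info in infos}


def resolution_looks_tampered(
    host: str,
    resolve: Callable[[str], set[str]] = system_resolve,
    pinned: dict[str, set[str]] = PINNED,
) -> bool:
    """True if the resolver returns nothing, or returns any address
    outside the pinned set for `host`."""
    expected = pinned.get(host)
    if expected is None:
        raise KeyError(f"no pinned addresses for {host}")
    resolved = resolve(host)
    return not resolved or not resolved <= expected


# Simulated resolvers standing in for a clean and a corrupted lookup.
clean = lambda host: {"192.0.2.10"}
poisoned = lambda host: {"203.0.113.66"}
print(resolution_looks_tampered("login.example.com", resolve=clean))
print(resolution_looks_tampered("login.example.com", resolve=poisoned))
```

Pinning addresses in this way is brittle, because legitimate addresses change; it is shown here only to make the failure mode concrete. A check running on an already compromised machine can also be subverted by the same malware that corrupted the lookup.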

9.3.2 Misleading Applications

Misleading applications masquerade as programs that do something helpful (e.g., scan for spyware) to persuade the user to install the misleading application, which then installs various types of crimeware on the user’s system. The pervasiveness of user downloads in online gaming leaves great room for attackers to transmit their crimeware via misleading applications. Since the release of World of Warcraft, for example, attackers have used game mod downloads to transmit trojan horses to unsuspecting players [497]. The practice has since grown in frequency and is cited by the developers of World of Warcraft as a chief customer service concern.

Keyloggers and screenscrapers are common types of crimeware installed by misleading applications. A keylogger records the keystrokes of a user, whereas a screenscraper captures the contents of the screen. Both techniques can be used to steal credentials or other sensitive information, and then to export associated data to a computer controlled by an attacker.

Another type of crimeware searches the file system of the user for files with particular names or contents, and yet another makes changes to settings (e.g., of antivirus products running on the affected machine). Some crimeware uses the host machine to target other users or organizations, whether as a spam bot or to perform click fraud, impression fraud, or related attacks. This general class of attacks causes the transfer of funds between two entities typically not related to the party whose machine executes the crimeware (although the distributor of the crimeware typically is the beneficiary of the transfer). The funds transfer is performed as a result of an action that mimics the actual distribution of an advertisement or product placement.

9.4 Fraud in MMOGs

As previously discussed in this chapter, cheating and fraud are separate but related activities. Cheating is a means to obtain an unfair and unearned advantage over another person or group of people relative to game-specified goals. Fraud is an intentional deception intended to mislead someone into parting with something of value, often in violation of laws. In online games, cheating is a prominent facilitator of fraud. Any unfair advantage that can be earned via cheating may be put to use by a defrauder to supplement or enhance his or her fraudulent activity.

For example, a cheat may allow players to replicate valuable items to gain in-game wealth, whereas an attacker may use the opportunity to create in-game wealth via the cheat and then monetize the illicitly replicated items for real-world profit, thereby committing a form of fraud. The same valuable items can be obtained over time with a great investment of time in the game and can still be monetized, but a cheat makes the fraud not only technically feasible, but also economically viable. Thus the game account itself is also a source of value. In 2007, Symantec reported that World of Warcraft account credentials sold for an average of $10, whereas U.S. credit card numbers with card verification values sold for $1 to $6 on the Internet underground market [400].

The following sections recognize cheating as a component of fraud and outline an extended model of security for online games that integrates the potential effect of cheaters into the popular confidentiality, integrity, availability, and authenticity model of security.

9.4.1 An Extended Model of Security for MMOGs

The four foundational aspects of information security—confidentiality, integrity, availability, authenticity—can be used to discuss and model threats against online games [485]. Games, like all information technology platforms, may suffer from data loss (confidentiality), modification (integrity), denial-of-service (availability) and misrepresentation (authenticity) attacks. In the context of a game, all of these attacks result in one player or group of players receiving an unfair or unearned advantage over others. Given the competitive, goal-oriented foundation of most behavior in online games—a foundation that does not exist in the same way with a web-based bank application (where one user is not trying to “beat” another user while banking online)—incentives to cheat are built internally into the game application itself. This unique implication of security in online games extends the traditional model of security to include an evaluation of fairness as a principle of security in online games.

The incentive to cheat and defraud in online games is high. As large virtual worlds, games provide a great deal of anonymity for their users. Few players know each other, and the participants are often scattered across vast geographic locations. Additionally, players’ relationships are typically mediated by in-game, seemingly fictional goals, such as leveling up a character or defeating a powerful, computer-controlled enemy; thus people may feel that they are interacting with one another in much more limited ways than in real-world relationships, which are often based on friendship, intimacy, professional aspirations, and so on. As a result, the social structures that prevent cheating in the real world wither away in online games. Security, with a new emphasis on fairness, can serve as a new mechanism for maintaining fair play in MMOGs [485].

9.4.2 Guidelines for MMOG Security

The following MMOG security guidelines are derived from the extended model of security in online games. These guidelines are intended to serve as a set of high-level considerations that can direct and inform game design and implementation decisions in regard to information security in MMOGs:

• Protect sensitive player data.

• Maintain an equitable game environment.

Protecting sensitive player data involves a diverse set of concerns. Traditional notions of protecting personally identifiable information such as credit card numbers, billing information, and email addresses are as applicable to online worlds as they are to e-commerce web sites. However, the complexity of player information found in online games complicates the meaning of “sensitive.” In-game spending habits, frequented locations, times played, total time played, communication logs, and customer service history are all potentially sensitive pieces of information present in virtual worlds. Determining a player’s habits, without his or her knowledge or consent, can open up that player to exploitation and social engineering. Such attacks could include attempts to manipulate players via player–player trade in-game. Alternatively, some locations in virtual worlds that people participate in under the expectation of anonymity are socially taboo, such as fetish sex–based role-playing communities [32]; knowing a player’s location in-world can expose participation in such communities, which can be exploited in a number of unsavory ways. Additionally, knowing when someone typically plays can help an attacker predict a player’s real-life location. Unlike a web-based banking application, an MMOG often requires a special software client and a powerful computer, so play sessions indicate when someone is likely to be at home or away. A careful evaluation and prioritization of all stored data is critical to protecting “sensitive” player data.

For example, Linden Lab, the creator of Second Life, recently implemented a measure whereby players (called “residents” in Second Life) are no longer able to see the online status of most other residents (previously they could). A notable, and appropriate, exception to this is one’s friends list, which includes all individuals whom one has explicitly designated as a friend. A murkier area is Second Life’s “groups,” which are somewhat akin to guilds in other MMOGs. Residents are able to view the online status of fellow group members. Every resident can belong to a maximum of 25 groups, and most groups are open to the public and free to join. All residents have profiles, and included in their profiles is a list of all groups to which they belong. Through a combination of simply joining a large number of large groups, looking up a given resident’s group list, and temporarily joining one of those groups, it is still possible to discover the online status of most residents in Second Life, although Linden Lab clearly meant to disable this capability. As this example shows, sensitive player data includes more than online status; it includes group membership as well, because the latter can lead to the former.

Maintaining an equitable game environment requires treating fairness as a component of the extended model of security for MMOGs. However, providing a level playing field for all players is a great challenge. The competitive nature of many online games, combined with the anonymity of virtual worlds, creates a strong incentive for players to abuse the game world to gain advantages over their peers.

A fair game world is maintained through careful balancing and protection of three facets of the game:

Player Ability

Player ability refers to the preservation of the naturally bounded abilities provided to players by the game mechanics. Amount of player health, power (often measured by the amount of damage players can inflict in combat), and the virtual currency players have are all forms of player ability. Because computers and game rules are used to simulate familiar sensibilities about player ability, participants are vulnerable to people who manipulate the rules in ways that are not possible in real life. It does not occur to most players that such cheating is possible in a virtual world, and so they do not protect themselves against it.

A couple of examples demonstrate how these attacks can occur. Perhaps the simplest ruse is a single player who masquerades as multiple players in an online casino. By pretending to be multiple players, the player gains access to far more information than is available to another single player [484]. Yet it may not even occur to the victim that this situation is possible, because in real life no one can simultaneously be three out of four players at a poker table. Likewise, many games involve quick reflexes, such as the pressing of a key on a keyboard to fire a gun. Reflex augmentation technologies, which simulate keypresses at inhuman speeds, make it possible for a player to fire a gun much faster than other players can. The main issue at stake is that real life, in combination with game rules, produces certain mental models about player abilities, and attackers can manipulate systems in blind spots created by these mental models.
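The poker example above suggests one simple check an operator might run: look for several seats at the same table that are occupied from the same client address. The sketch below is a heuristic illustration only. The seat record format (account name mapped to client address) is hypothetical, and a shared address is weak evidence, since household members or users behind the same gateway will also share one.

```python
from collections import defaultdict


def shared_origin_seats(seats: dict[str, str]) -> dict[str, list[str]]:
    """Group the accounts at one table by client address and keep only
    the addresses that occupy more than one seat.

    `seats` maps account name -> client address (hypothetical fields).
    """
    by_address = defaultdict(list)
    for account, address in seats.items():
        by_address[address].append(account)
    return {
        address: sorted(accounts)
        for address, accounts in by_address.items()
        if len(accounts) > 1
    }


# Hypothetical four-seat table in which one address holds three seats.
table = {
    "alice": "198.51.100.7",
    "bob": "203.0.113.4",
    "carol": "198.51.100.7",
    "dave": "198.51.100.7",
}
print(shared_origin_seats(table))
```

A result like this would justify closer review rather than automatic action, and a determined attacker can defeat the check by connecting each account through a different address.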

Player Treatment

Player treatment is a twofold category encompassing both how players treat other players and how players are treated by the environment.

Community is critical to the success of online games, and negative interactions between players can erode the quality of a player base. Griefing, as online harassment is commonly known, is the chief violation of player treatment and is often enabled by a breach in player ability. A simple example of griefing, which recent games have disabled, occurs when a high-level player spends time in an area set aside for new, weaker players and kills the lower-level players over and over for malicious pleasure. A more recent, and more difficult to defeat, form of griefing includes activities such as Alt+F4 scams, in which experienced players tell new players that pressing Alt+F4 enables a powerful spell; in reality, it quits the game, forcing victims to log back in. Both examples are forms of player–player treatment violations.

The other major type of player treatment is world–player treatment, which refers to how the game world itself treats players. The world should treat all players equally, providing a conducive environment for game play and enjoyment. Unfortunately, certain cheats create global inequities. For example, in Shadowbane, attackers compromised game servers to create ultra-powerful monsters that killed all players. This, in turn, forced the publisher to shut down the game, roll back to a previous state, and patch the game files to remove the exploit [479].

Both player–player and world–player treatment rest on the basic principle that no player’s achievements or pleasure should be subjected, without consent, to the control of another player. Violations of this principle may lead to griefing, cheating, or fraud, depending on the circumstances.

Bots are a common means of influencing the game world’s treatment of fellow players. A bot is a computer-controlled automated entity that simulates a human player, potentially through the use of artificial intelligence. Bots are used to give players unfair advantages, such as automating complex processes at inhuman speeds to level characters (i.e., raise their abilities through extensive play), accumulate virtual money, and so on. Some bots in World of Warcraft, for example, would walk in circles in hostile areas, automatically killing all the monsters (commonly known as mobs) to gain experience and gold. This form of gold harvesting threatens the entire virtual economy, cheapening the value of gold for all other players and thereby diminishing their in-game accomplishments. In Second Life, some residents developed a bot that could automatically replicate proprietary in-world three-dimensional shapes and textures (e.g., virtual homes and clothing) that other residents had made and were selling for real-world income, thereby devaluing that work and threatening Second Life’s entrepreneurial class.

Golle and Ducheneaut [147] propose two approaches to eliminating bots from online games, both of which require players to interact in specific ways. CAPTCHA tests (Completely Automated Public Turing Test to Tell Computers and Humans Apart) are the cornerstone of these approaches. A CAPTCHA is a program that generates and grades a test that the average human can pass but current computer programs cannot, such as reading a string of characters in a grainy image (Figure 9.2). The second approach abandons software tests and instead turns existing game-input controllers into physical CAPTCHA devices. Like a keyboard or joystick, a CAPTCHA device recognizes physical inputs and generates digital outputs, thereby authenticating that a human is using the device. Current research into physical CAPTCHAs suggests that the costs of developing tamper-proof devices and forcing hardware upgrades onto users are quite high and may deter future efforts in this area [147].

Figure 9.2. A CAPTCHA on an MMOG-related web site that allows players to comment on items, monsters, and locations found in the game. The CAPTCHA, represented by the code c2646, ensures that actual people are posting responses.
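The generate-and-grade cycle of a text CAPTCHA like the one in Figure 9.2 can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used by any particular site: the function names and the five-character lowercase alphanumeric format are hypothetical. It also omits the step that makes the test hard for programs, namely rendering the code as a distorted image.

```python
import hmac
import secrets
import string

# Assumed code format: lowercase letters and digits, as in "c2646".
ALPHABET = string.ascii_lowercase + string.digits


def generate_challenge(length: int = 5) -> str:
    """Generate an unpredictable code. A real CAPTCHA would render this
    as a grainy or distorted image and keep the plain text server-side."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def evaluate_response(expected: str, response: str) -> bool:
    """Grade the user's answer, ignoring case and surrounding whitespace.
    compare_digest avoids leaking information through timing."""
    normalized = response.strip().lower()
    return hmac.compare_digest(expected.encode(), normalized.encode())


if __name__ == "__main__":
    code = generate_challenge()
    print(len(code), evaluate_response(code, code.upper()))
```

In practice the server would also expire each challenge after one attempt or a short timeout, so that a bot cannot guess repeatedly against the same code.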

Environmental Stability

Environmental stability encompasses the proper operation and maintenance of the mechanisms that support the game world, such as the game servers, databases, and network channels. Low network latency, fast command execution, and fast data retrieval are the backbone of any online application, especially graphically intensive online worlds. Game world downtime is costly in terms of emergency maintenance and customer service response to upset players who demand compensation for the loss of the use of their subscription [276].

Lag, or network latency, also affects the stability of the game world. A player has low lag when communication between his or her client and the server is quick and uninterrupted. Players with higher lag are at a clear disadvantage to those with low lag, because the server is much less responsive to their commands. Lag can be created by a player’s geographic location (e.g., propagation delay), access technology (e.g., ADSL, cable, dial-up), and transient network conditions (e.g., congestion).

Zander, Leeder, and Armitage [491] have shown that game-play scenarios with high lag lead to a decrease in performance, and they suggest that nefarious players can use lag to gain an unfair advantage over other players. To combat lag, Zander et al. implemented a program to normalize network latency by artificially increasing the delay between players and the server for players with faster connections. They tested their system using bots with equal abilities, so that if the system is fair, all players should have equal kill counts. Prior to the normalization, bots with low lag had higher kill counts than bots with higher lag. After the normalization, all of the bots had roughly equal kill counts.
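The normalization idea can be sketched in a few lines: measure each player's latency, choose a common target, and add artificial delay to every connection that is faster than the target. This is a minimal sketch, not Zander et al.'s implementation. The function name is hypothetical, and the choice of the slowest player's latency as the default target is an assumption made for illustration.

```python
from typing import Dict, Optional


def added_delays(measured_ms: Dict[str, float],
                 target_ms: Optional[float] = None) -> Dict[str, float]:
    """Return the artificial delay (in ms) to add for each player so that
    everyone's effective latency is at least `target_ms`.

    If no target is given, the highest measured latency is used
    (an assumption made for this sketch)."""
    if not measured_ms:
        return {}
    if target_ms is None:
        target_ms = max(measured_ms.values())
    # Players already at or above the target get no extra delay.
    return {player: max(0.0, target_ms - latency)
            for player, latency in measured_ms.items()}


if __name__ == "__main__":
    latencies = {"adsl": 20.0, "cable": 80.0, "dialup": 150.0}
    for player, delay in added_delays(latencies).items():
        print(f"{player}: +{delay:.0f} ms")
```

Equalizing to the slowest connection makes play fair at the cost of responsiveness for everyone else, so a deployed system would likely cap the target rather than follow the worst connection without limit.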

9.4.3 Countermeasures

Countermeasures to online game attacks conveniently map to the previously established guidelines for MMOG security: protecting sensitive player data and maintaining an equitable game environment.

Countermeasures for protecting sensitive player data are well understood and researched in the security community, but may be new to some game developers. Popular games such as Second Life and World of Warcraft have a mixed history with proper authentication, storage, and encryption of sensitive information. A security breach at Second Life in 2006 compromised 650,000 user accounts [418]. World of Warcraft is considering two-factor authentication via USB dongles that must be plugged into the player’s computer to run the game [139].

The inclusion of participant-created content, particularly with the use of open-source software in some game clients (such as Second Life’s clients) and the release of application programming interfaces (APIs—scripting languages with limited access to the main program), means that players are increasingly installing software written by other players. The potential for crimeware in such circumstances is significant. Although game publishers, such as Blizzard, are doing their part to ensure that game modifications (mods) built with their APIs are safe, they cannot monitor or control all of the downloadable files that claim to be made with their APIs. Some of these files may include additional code that installs crimeware.

The concept of countermeasures must be carefully applied when the focus is maintaining an equitable game world. Fairness in the game world is constantly changing and depends on emergent player behaviors, practices, and beliefs. Maintaining fairness in games requires a highly proactive, rather than reactive, approach to security. The combination of emergent behavior and the variety of attacks available through player ability, player treatment, and environmental stability makes mapping and anticipating the attack space very difficult. For example, denial-of-service attacks achieved through an overload in network traffic are common and well understood. However, World of Warcraft experienced a denial-of-service attack through a compromised player ability. Griefing players intentionally contracted a deadly virus from a high-level monster and then teleported to major cities, which are supposed to be safe havens for characters of all levels. The disease spread like a virtual plague, shutting down all highly populated areas for several days, and killing not only low- and medium-level players, but even computer-controlled nonplayer characters placed in the cities [124].

Conclusion

Massively multiplayer online games produce value for their publishers and their players alike. Comprising highly complex rule systems; being deployed via computer networks, protocols, and systems to millions of users; and symbiotically evolving alongside complex subcultures and microcommunities, online games offer a surprisingly diverse array of opportunities for attacks. The security community, having focused much of its attention on two-dimensional web applications, such as banking systems and auction sites, lacks conceptual frameworks for dealing with those aspects of MMOG fraud that are unique to games, including game architecture and game culture. The three-dimensional embodied simulations combined with massive social presence create new opportunities for fraud that merit more serious consideration than they have received to date.