Appendix F. BSIMM Assessment Final Report

April 1, 2016

Prepared for:

FakeFirm

123 Fake Street

Anytown, USA 12345

Prepared by:

Cigital, Inc.

21351 Ridgetop Circle

Suite 400

Dulles, VA 20166

Copyright © 2008-2016 by Cigital, Inc. ® All rights reserved. No part or parts of this Cigital, Inc. documentation may be reproduced, translated, stored in any electronic retrieval system, transmitted in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without prior written permission of the copyright owner. Cigital, Inc. retains the exclusive title to all intellectual property rights relating to this documentation.

The information in this Cigital, Inc. documentation is subject to change without notice and should not be construed as a commitment by Cigital, Inc. Cigital, Inc. makes no representations or warranties, express or implied, with respect to the documentation and shall not be liable for any damages, including any indirect, incidental, consequential damages (such as loss of profit, loss of use of assets, loss of business opportunity, loss of data or claims for or on behalf of user’s customers), that may be suffered by the user.

Cigital, Inc. and the Cigital, Inc. logo are trademarks of Cigital, Inc. Other brands and products are trademarks of their respective owner(s).

Cigital, Inc.

21351 Ridgetop Circle

Suite 400

Dulles, VA 20166

Phone: + 1 (703) 404-9293

Figure F.2: Normalized Percentage of Activities Observed in each BSIMM Practice (Spider)

Figure F.3: Normalized Percentage of Activities Observed in each BSIMM Practice (Bar)

Figure F.4: BSIMM6 Assessment Score Distribution

Figure F.5: High-Water Mark per Practice Compared to Average of 78 BSIMM6 Firms

Figure F.6: Activities Observed per Practice

Figure F.7: Activities Observed per Level

Figure F.8: BSIMM Scorecard with Earth Data

Figure F.9: High-Water Mark per Practice Compared to Participants in a Vertical

Figure F.10: BSIMM Scorecard with Vertical Data

Audience

This document is intended for FakeFirm employees who will lead the software security group (SSG) and related software security initiative (SSI) activities.

Contacts

The following are the primary Cigital staff to contact with questions regarding this assessment.

1 Executive Summary

The Building Security In Maturity Model (BSIMM) is a unique tool built from our observation-based approach to capturing the collective activities in diverse software security initiatives (SSIs). An SSI is an executive-sponsored, proactive effort comprising all activities aimed at building, acquiring, running, and maintaining secure software. It establishes a formal ability to balance the risk and cost associated with software engineering processes to ensure the firm meets business objectives safely. In addition, an SSI ensures a firm’s software routinely meets applicable regulatory, statutory, and audit requirements while also ensuring clients can meet theirs when using the firm’s software.

To build BSIMM, we initiated data research and analysis in 2008 by assessing software security efforts in nine firms and using those results to create BSIMM1. As of September 2015, we’ve performed BSIMM assessments for 104 firms of various sizes in diverse vertical markets. In that time, we continually adjusted the model to reflect our real-world observations, adding or moving activities to reflect current reality. Over time, we also drop old data to keep the model fresh. Therefore, the BSIMM stands as the only useful and current reflection of actual practices in software security.

There are 78 firms represented in BSIMM6. As a confirmation of BSIMM’s usefulness, 26 of those firms have had two BSIMM assessments and 10 have had three or more assessments. Though initiatives differ in some details, all share common ground that the BSIMM captures and describes. It therefore functions as a universal yardstick, capable of measuring any SSI and facilitating strategic planning by the SSI leadership. As a general term, we call these SSI leaders the software security group (SSG).

Figure F.1 below shows the Software Security Framework (SSF) we use as the BSIMM foundation. It includes four broad domains of Governance, Intelligence, SSDL Touchpoints, and Deployment. See Appendix A: BSIMM Background for additional detail on BSIMM history and the SSF domains.

Within the four SSF domains are 12 practices (e.g., Strategy & Metrics) that collectively contain the 112 software security activities in BSIMM6. Within each practice, we divide these activities into three levels based primarily on observation frequency across all participants. See Appendix B: BSIMM Activities for summaries of each practice, including the activities and their assigned levels. We provide comprehensive activity descriptions in the BSIMM report at http://bsimm.com. Because BSIMM is an observational model and records our research, the activity set it contains changes over time.

Any attestation-based, time-limited engagement such as this one precludes detailed business process analysis. However, it is important to understand that a BSIMM scorecard is neither an audit finding nor a report card. It is simply a snapshot of current software security effort through the BSIMM lens. Part of its value lies in the fact that, as of BSIMM6, we have conducted 235 assessments in the same manner, making all the results over time directly comparable to each other.

In this assessment for FakeFirm, Cigital interviewed individuals representing various software security roles. In some cases, we also reviewed artifacts that clarified a given topic. Our team then analyzed the resulting data until reaching unanimous agreement on whether we observed in FakeFirm’s environment each of the 112 BSIMM6 activities. Given a final list of observations, we were able to produce the data representations provided in this report. This knowledge helps SSG owners understand where their initiative stands with respect to other real-world SSIs and clarifies possible strategic steps to mature the firm’s efforts.

For this April 2016 BSIMM assessment, Cigital interviewed 11 individuals. At this time, the software security group (SSG), known in FakeFirm as the Application Security Team (AST), has been active for about two years and includes five full-time people. The AST leader has the following reporting chain to FakeFirm’s CEO: Director (AST Lead) → CISO → CIO → CEO. There are currently six people in the AST’s satellite, referred to as “S-SDLC Risk Managers.” The AST and Risk Managers support 850 developers in creating, acquiring, and maintaining a portfolio of 250 applications. FakeFirm uses a spreadsheet to track the software portfolio and the inventory is currently incomplete.

FakeFirm has centralized the AST in a corporate group outside the various business units. The AST embodies its high-level approach to software security governance in a documented secure SDLC with two software security gates, one at the beginning of the SDLC to establish testing expectations and one at the end of the SDLC to do testing. However, adherence to the gates is voluntary at this time. The AST supplements the secure SDLC with policies and standards, including some secure coding standards. It works directly with Legal, Risk, and Compliance groups to ensure FakeFirm also meets privacy and compliance objectives related to software. It also works with IT to specify and maintain operating system, server, and device security controls. New developers, testers, and architects receive software security training via a brief in-person session during onboarding. The AST does outreach to executives and other groups, but only through informal meetings and data sharing.

The AST works directly with engineering teams to discover software security defects through architecture analysis, penetration testing, and static analysis. While the AST manages these efforts centrally, much of the labor is out-sourced. The AST usually enhances such testing by taking advantage of attack intelligence. Processes ensure most security defects receive an assigned severity level and the AST tracks those security defects scheduled for remediation.

Other important SSI characteristics include:

• A relatively mature static source code analysis process that uses customized rules

• A mature process to capture information about attacks against FakeFirm software and create developer training

• Black-box security testing for Web applications embedded in the quality assurance process, helping to ensure security defects are caught during the development cycle

• Service-level agreement boilerplate for software security responsibilities, but limited use in vendor contracts

• Data classification that is informal and effectively equates to “everything is important”

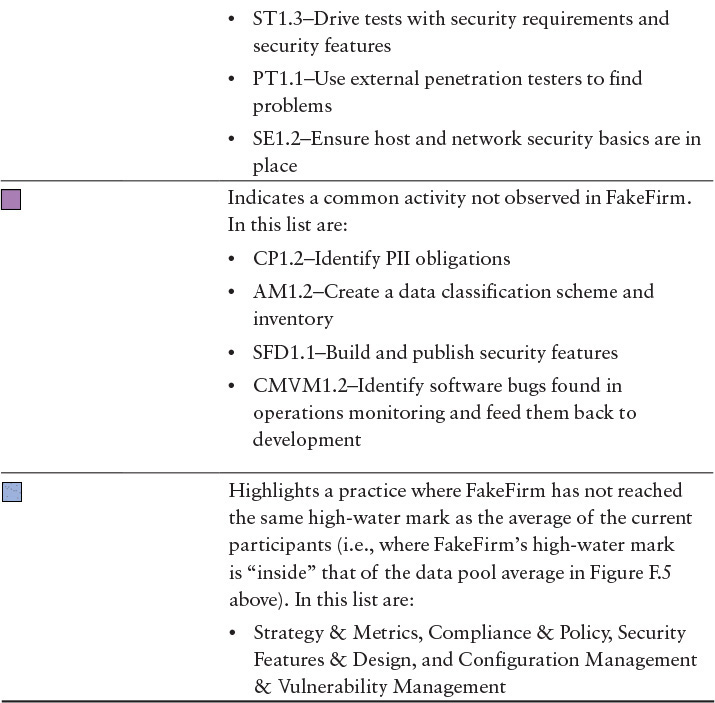

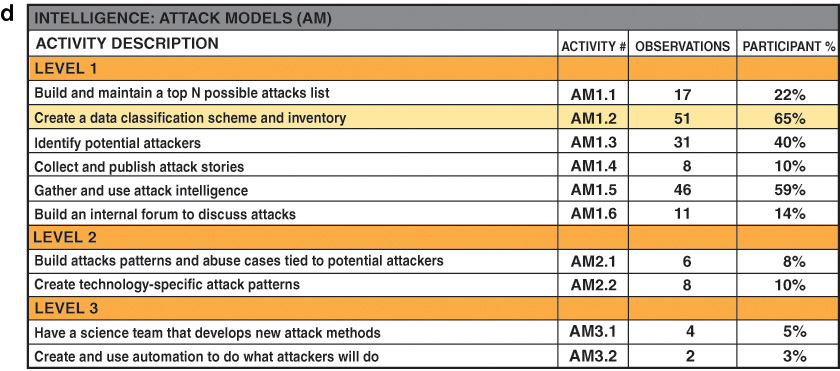

From FakeFirm’s software security efforts summarized above, Cigital observed 37 BSIMM activities in this assessment. Figure F.2 shows in dark gray (blue in eBook) the distribution of the observed activities across the 12 SSF practices, normalized to a 100% scale. For example, if we observed half of the activities in a given practice, the chart would display that as 50%. To allow for comparison, the light gray (orange in eBook) area shows the normalized averages for the entire BSIMM6 participant pool.
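The normalization described above is a simple ratio of observed activities to total activities in a practice. The following is a minimal sketch, assuming placeholder practice names and made-up counts (the real per-practice totals come from the activity list in Appendix B); the function name `normalized_percentages` is hypothetical.

```python
def normalized_percentages(observed, totals):
    """Map each practice to the percentage of its activities that were observed."""
    return {
        practice: round(100.0 * observed.get(practice, 0) / total, 1)
        for practice, total in totals.items()
    }


# Hypothetical counts for illustration only; not FakeFirm's actual data.
totals = {"Practice A": 10, "Practice B": 8}
observed = {"Practice A": 5, "Practice B": 2}

print(normalized_percentages(observed, totals))
# {'Practice A': 50.0, 'Practice B': 25.0}
```

Observing half of the activities in "Practice A" yields 50%, matching the example in the text.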

To aid in visualization, Figure F.3 below shows the same data, but as a bar chart.

Recall that the SSF shown in Figure F.1 above forms the BSIMM foundation. The SSF comprises 12 practices, each of which contains several BSIMM activities, for a total of 112. After a BSIMM assessment, we create a scorecard showing the number of activities observed out of 112 (37 for this assessment). To allow comparisons, Figure F.4 shows the distribution of scores for the 78 firms in the BSIMM6 data pool along with the average age of the SSIs in each group.

From a planning perspective, our experience shows that it is better to have a well-rounded effort distributed across the SSF practices. It is also important to remember that we have never observed all 112 activities in a single firm and such a feat is probably not a reasonable goal. A firm should always base activity selection—resource allocation in the SSI—on actual need.

While a BSIMM assessment provides an unbiased inventory of the software security activities underway, this targeted review alone cannot provide a complete measurement of SSI sufficiency or effectiveness. That requires additional data and analysis.

In the following sections, Cigital provides additional representations of FakeFirm’s BSIMM assessment results. FakeFirm can use these results and accompanying recommendations to guide SSI improvements.

2 Data Gathering

A BSIMM assessment objectively creates a scorecard depicting current software security activity, thereby facilitating internal analysis, decision support, and budgeting. To gather this information, Cigital conducts interviews to get a detailed understanding of FakeFirm’s approach to and execution of its SSI. Cigital may also review artifacts that explain important software security processes. Cigital then analyzes the resulting data to give credit in a BSIMM scorecard for each software security activity observed out of 112.

Typically, we conduct our primary interviews with the SSG owner and one or more of his or her direct reports. We usually follow this with interviews of others directly involved in planning, instantiating, or executing the SSI. These individuals may be in any of several roles, including SSG executive sponsor, business analysis, architecture, development, testing, operations, audit, risk, and compliance.

For this BSIMM assessment, Cigital interviewed the following individuals:

• Person, CIO

• Person, CISO and SSG Leader

• Person, SSG member

• Person, SSG member

• Person, SSG member

• Person, Security Architecture Head

• Person, Security Operations Head

• Person, Quality Assurance Head

• Person, Mobile Development Head

• Person, Web Development Head

• Person, Risk and Compliance

3 High-Water Mark

As seen in Appendix B: BSIMM Activities, each of the 112 BSIMM6 activities is assigned a level of 1, 2, or 3. Cigital used interview data to create a scorecard and then chart the highest level activity—the “high-water mark”—observed in each of the 12 BSIMM practices. We assign the high-water mark with a very simple algorithm. If we observed a level 3 activity in a given practice, we assign a “3” without regard for whether level 2 or 1 activities were also observed. We assign a high-water mark of 2, 1, or 0 similarly.
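The high-water mark rule above can be sketched in a few lines. This is an illustration under stated assumptions, not Cigital's tooling: it assumes activity identifiers follow the pattern of the SM1.3 example used in this report (a practice prefix, a one-digit level, a dot, and a sequence number), and the function name and sample observations are hypothetical.

```python
import re

# Assumed ID shape: practice prefix, one-digit level, dot, sequence number.
ACTIVITY_ID = re.compile(r"^([A-Z]+)(\d)\.(\d+)$")


def high_water_marks(observed_ids, practices):
    """Return the highest observed level per practice (0 if nothing observed)."""
    marks = {practice: 0 for practice in practices}
    for activity_id in observed_ids:
        match = ACTIVITY_ID.match(activity_id)
        if match is None:
            raise ValueError(f"unrecognized activity id: {activity_id!r}")
        practice, level = match.group(1), int(match.group(2))
        if practice not in marks:
            raise ValueError(f"unknown practice prefix: {practice!r}")
        # A higher-level observation wins regardless of lower-level gaps.
        marks[practice] = max(marks[practice], level)
    return marks


# Illustrative observations only; not FakeFirm's scorecard.
sample = ["SM1.3", "CR1.2", "CR3.1", "PT2.2"]
print(high_water_marks(sample, ["SM", "CR", "PT", "T"]))
# {'SM': 1, 'CR': 3, 'PT': 2, 'T': 0}
```

Note that "CR" receives a 3 even though no level 2 activity appears in the sample, which is exactly why the high-water mark is a low-resolution view.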

Figure F.5 below compares FakeFirm high-water marks with the average high-water marks for the BSIMM6 participant pool. Because the spider diagram shows only a single data point per practice, it is a very low-resolution view of software security effort. However, this view provides useful comparisons between firms, between business units, and within the same firm over time.

Compared to the average high-water marks of all BSIMM6 participants, FakeFirm marks appear above the average in Training, Attack Models, Architecture Analysis, Code Review, and Penetration Testing. FakeFirm marks appear near the average in Compliance & Policy, Standards & Requirements, Security Testing, Software Environment, and Configuration Management & Vulnerability Management. FakeFirm marks appear below the average in Strategy & Metrics and in Security Features & Design.

4 BSIMM Practices

Recall that the spider diagram in Figure F.5 above shows only the high-water mark level reached in each BSIMM practice. While useful for comparing groups or visualizing change over time, performing a single high-level activity in a practice—and few or no other activities—skews the spider diagram such that it may not accurately reflect true effort within a given practice.

To overcome this and facilitate additional analysis, the two diagrams below provide a higher resolution view of the 37 BSIMM6 activities observed at FakeFirm. This type of diagram makes evident the “activity density” by showing the observed activities segregated vertically into their respective practices and then horizontally into their respective levels.

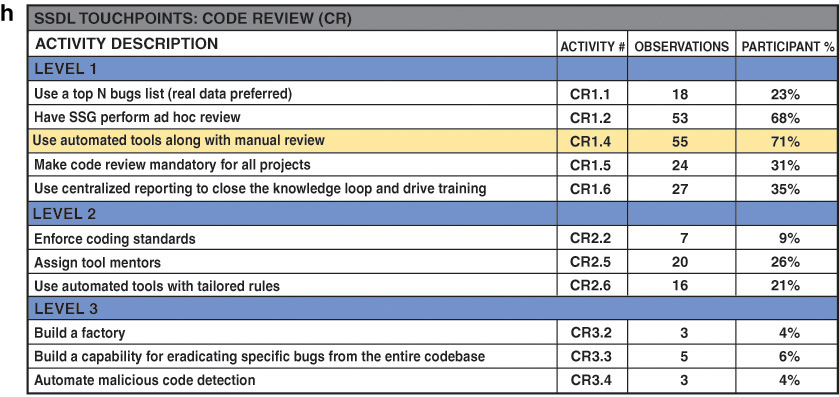

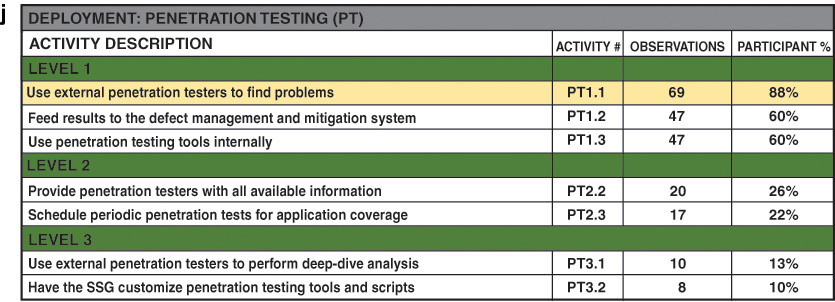

Figure F.6 below facilitates analysis by practice to clarify where Cigital may have observed higher-level activities with few or no associated lower-level activities. From a vertical practice perspective, FakeFirm achieved a high-water mark of “3” in two practices: Code Review and Penetration Testing. In Penetration Testing, we also observed a majority of lower level activities, indicating practice maturity, but we did not observe such a majority of lower level activities in Code Review. Similarly, FakeFirm achieved a high-water mark of “2” in eight practices and here we observed a majority of lower level activities in four practices, with Training, Attack Models, Software Environment, and Configuration Management & Vulnerability Management being the exceptions. We observed a majority of activities in one of the two practices where FakeFirm achieved a high-water mark of “1,” with Security Features & Design being the exception.
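The by-practice check described above (a high-water mark with or without a majority of lower-level activities) could be expressed roughly as follows. This sketch assumes "majority" means more than half and "lower level" means levels strictly below the high-water mark; the function name and all counts are hypothetical.

```python
def lower_level_majority(observed, totals):
    """Return (high_water_mark, majority_flag) for one practice.

    observed and totals map a level (1, 2, 3) to an activity count.
    majority_flag is True when more than half of the activities below
    the high-water mark were observed. With a mark of 0 or 1 there are
    no lower levels, so the flag is False.
    """
    mark = max((level for level, n in observed.items() if n > 0), default=0)
    lower_total = sum(n for level, n in totals.items() if level < mark)
    lower_seen = sum(n for level, n in observed.items() if level < mark)
    return mark, lower_total > 0 and 2 * lower_seen > lower_total


# Hypothetical practice with 4 level 1, 3 level 2, and 2 level 3 activities.
totals = {1: 4, 2: 3, 3: 2}
print(lower_level_majority({1: 3, 2: 1, 3: 1}, totals))  # (3, True)
print(lower_level_majority({1: 1, 2: 0, 3: 1}, totals))  # (3, False)
```

Both sample practices reach a high-water mark of 3, but only the first is backed by most of its lower-level activities.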

Figure F.7 below facilitates analysis by level to highlight where the current SSI does not include foundational level 1 activities. From a horizontal software security foundation perspective, we observed activities in all practices at level 1, but we did not observe a majority of level 1 activities in Training, Attack Models, Security Features & Design, Software Environment, and Configuration Management & Vulnerability Management. We observed level 2 activities in nine of 12 practices; however, we observed only a single level 2 activity in nearly every practice, with Standards & Requirements being the exception. For the two practices where we observed a level 3 activity, each has a single observation.

To facilitate an additional level of analysis, Cigital provides below the assessment scorecard containing data on each of the activities observed as well as comparable observation data from other firms.

5 BSIMM Scorecard

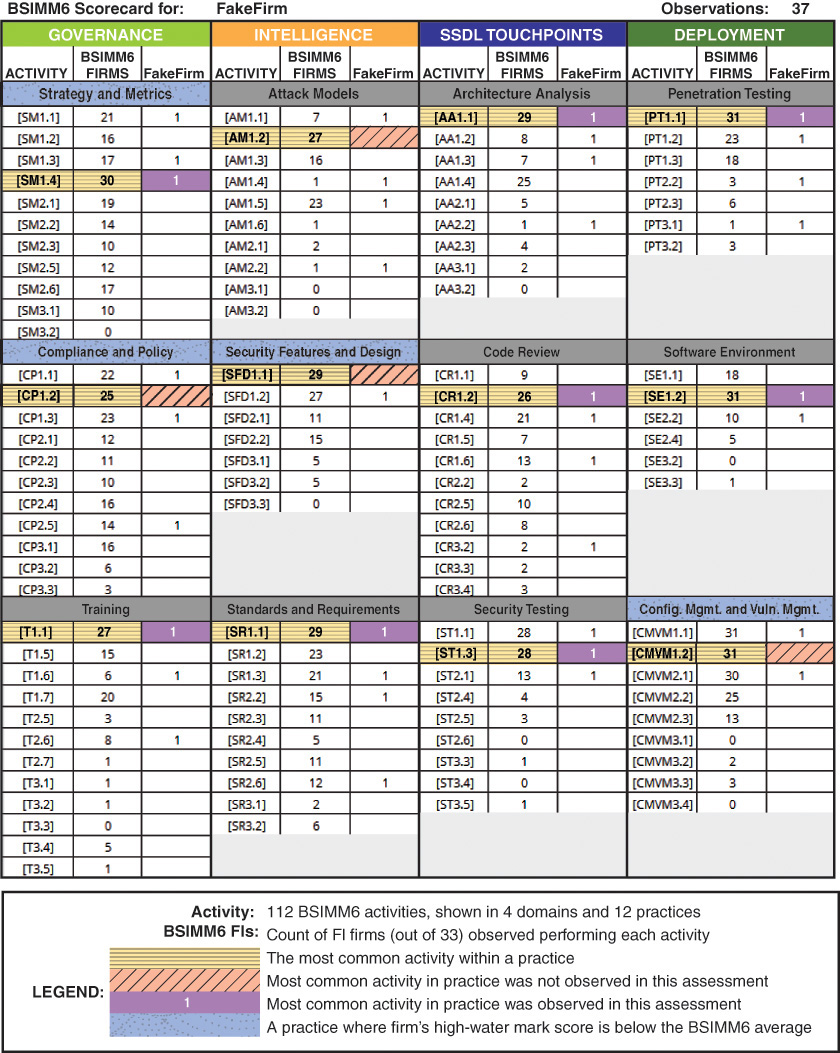

Figure F.8 below provides detailed information about FakeFirm’s SSI. Primarily, it lists in the four columns marked “FakeFirm” the 37 activities Cigital observed during this assessment. In the “BSIMM6 Firms” columns, the scorecard provides the count of firms (out of 78) in which Cigital observed each activity. See the table on the following page for more explanation. In addition, see Appendix B: BSIMM Activities for the short name associated with each BSIMM activity (e.g., SM1.3 is “Educate executives”).

The following is an explanation of the scorecard shown in Figure F.8 above:

It is important to remember that this scorecard represents Cigital’s observations specific to software security activity as measured by the BSIMM. Observation—or the lack of observation—of a given activity is inherently neither good nor bad. Judging sufficiency and effectiveness for the activities observed requires a deeper analysis of FakeFirm’s business objectives, processes, and software. Results of such an analysis can form a cornerstone for strategic broadening and deepening of the current SSI.
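The firm counts in the scorecard can be read as a share of the 78-firm BSIMM6 pool. The following minimal sketch pairs each activity with that share and with whether it was observed in the assessed firm. The firm counts and observations shown are invented for illustration, and `scorecard_rows` is a hypothetical helper; consistent with the caution above, it reports observations without judging them.

```python
POOL_SIZE = 78  # firms in the BSIMM6 data pool, per this report


def scorecard_rows(firm_counts, observed, pool_size=POOL_SIZE):
    """Return (activity, firm_count, percent_of_pool, observed_here) tuples."""
    return [
        (activity, count, round(100.0 * count / pool_size, 1), activity in observed)
        for activity, count in sorted(firm_counts.items())
    ]


# Invented counts for illustration; not taken from the BSIMM6 scorecard.
firm_counts = {"SM1.3": 39, "CR1.2": 26}
observed = {"CR1.2"}

for row in scorecard_rows(firm_counts, observed):
    print(row)
# ('CR1.2', 26, 33.3, True)
# ('SM1.3', 39, 50.0, False)
```

For the vertical comparison in Section 6, the same helper would apply with `pool_size=33`.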

6 Comparison within Vertical

Figure F.9 below summarizes the level reached by FakeFirm in each practice and compares it to the subset of BSIMM6 participants in the financial industry (FI) vertical.

Compared to the average high-water marks of all current financial industry BSIMM6 participants, FakeFirm marks appear above the average in Training, Attack Models, Architecture Analysis, Code Review, Security Testing, Penetration Testing, and Software Environment. FakeFirm marks appear near the average in Compliance & Policy, Standards & Requirements, and Configuration Management & Vulnerability Management. FakeFirm marks appear below the average in Strategy & Metrics and Security Features & Design.

Compared to the averages shown in Figure F.5 above for the entire BSIMM data pool, the most significant changes are in Strategy & Metrics, Compliance & Policy, Training, and Standards & Requirements, where the high-water mark average is higher amongst FIs than for the entire data pool (BSIMM Earth), and Software Environment, where the average is lower.

Figure F.10 below provides detailed information about FakeFirm’s SSI. Primarily, it lists in the four columns marked “FakeFirm” the 37 activities Cigital observed during this assessment. In the “BSIMM6 FI” columns, the scorecard provides the count of financial firms (out of 33) in which Cigital observed each activity.

The following is an explanation of the scorecard shown in Figure F.10 above:

7 Conclusion

FakeFirm is performing the single most important activity related to improving software security: it has a dedicated software security group that can get resources and drive organizational change.

Compared to the average high-water marks of all BSIMM6 participants, FakeFirm marks appear above the average in Training, Attack Models, Architecture Analysis, Code Review, and Penetration Testing. FakeFirm marks appear near the average in Compliance & Policy, Standards & Requirements, Security Testing, Software Environment, and Configuration Management & Vulnerability Management. FakeFirm marks appear below the average in Strategy & Metrics and in Security Features & Design.

From a vertical practice perspective, FakeFirm achieved a high-water mark of “3” in two practices: Code Review and Penetration Testing. In Penetration Testing, we also observed a majority of lower level activities, indicating true practice maturity, but we did not observe such a majority of lower level activities in Code Review. Similarly, FakeFirm achieved a high-water mark of “2” in eight practices and here we observed a majority of lower level activities in four practices, with Training, Attack Models, Software Environment, and Configuration Management & Vulnerability Management being the exceptions. We observed a majority of activities in one of the two practices where FakeFirm achieved a high-water mark of “1,” with Security Features & Design being the exception.

From a horizontal software security foundation perspective, we observed activities in all practices at level 1, but we did not observe a majority of level 1 activities in Training, Attack Models, Security Features & Design, Software Environment, and Configuration Management & Vulnerability Management. We observed level 2 activities in nine of 12 practices; however, we observed only a single level 2 activity in nearly every practice, with Standards & Requirements being the exception. For the two practices where we observed a level 3 activity, each has a single observation.

Using the BSIMM assessment data, FakeFirm might choose one or more of the following to broaden and deepen its SSI:

• Determine whether it is appropriate to begin doing the remaining four activities (those marked with slashes in Figure F.8) from the 12 common activities.

• Perform a more complete risk, compliance, and needs analysis for the SSI. The results of such an analysis can drive the larger strategy for comprehensive top-down enhancements.

• Perform a software security business process analysis focusing on sufficiency, efficiency, and maturity. The results of such an analysis can drive tactical changes that increase effectiveness and reduce cost.

• Commission a detailed analysis of a set of SDLC artifacts, such as the requirements, design, code, and deployed module for one or more critical applications. Determining the root causes (e.g., lack of a given BSIMM activity) for the software security defects discovered can drive targeted bottom-up SSI enhancements.

Independent of the general choices above and based on our experience in similar environments, we recommend FakeFirm consider the following when choosing its next set of SSI improvements.

• Secure SDLC—FakeFirm has created an SDLC overlay that includes two security gates, one for “Permit to Build” and one for “Permit to Deploy.” However, the SSG is not involved in all development projects. In addition, the software security gates are voluntary even in large, critical projects. Over the next 12 months, FakeFirm should institute process improvements that ensure the SSG is aware of all development and software acquisition projects worldwide. At the same time, FakeFirm should phase in mandatory compliance with various aspects of the SDLC security gates. For example, mandatory remediation of critical security defects within a given timeframe could be required immediately, while phasing in remediation of high and medium security defects over a period of months. Similarly, static analysis and penetration testing should quickly become mandatory for all critical applications, and should become mandatory for all applications over the next 12-18 months.

• Inventory—FakeFirm does not have a robust inventory of applications, PII, or open source software. Ensuring all software flows appropriately through various SDLC gates becomes complicated when the inventory is unknown. Without a data classification scheme, prioritizing projects and making a PII inventory is effectively impossible. FakeFirm should immediately begin an inventory initiative that accounts for all applications in the SSG’s purview, ensures each application receives a criticality rating, and associates each application with the classification levels of the data it handles. Over the next 12 months, FakeFirm should expand the inventory to include the open source used and the current security status for each application. In addition, it should begin including software security waiver information for each application.

• Training—FakeFirm has a small amount of software security training that it uses to improve awareness. However, FakeFirm provides the training only in person, only to developers, and only at onboarding time. Over the next six months, FakeFirm should begin providing on-demand, role-based software security training to all roles involved in the SDLC. This will increase global awareness and increase technical skill in the major engineering roles such as requirements analysis, architecture, development, and testing. FakeFirm should also investigate the opportunity to provide training in the developer environment using IDE-based tools.

In our experience, it is better to have a well-rounded effort distributed across the SSF practices than to focus on a small number of practices. It is also important to remember that we have never observed all 112 activities in a single firm and such a feat is probably not a reasonable goal. A firm should always base activity selection—that is, resource allocation in the SSI—on actual need.

Appendix A: BSIMM Background

Where did BSIMM come from? The Building Security In Maturity Model (BSIMM) is the result of a multi-year study of real-world software security initiatives. We present the model as built directly out of data observed in 78 software security initiatives from firms including: Adobe, Aetna, ANDA, Autodesk, Bank of America, Black Knight Financial Services, BMO Financial Group, Box, Capital One, Cisco, Citigroup, Comerica, Cryptography Research, Depository Trust and Clearing Corporation, Elavon, EMC, Epsilon, Experian, Fannie Mae, Fidelity, F-Secure, HP Fortify, HSBC, Intel Security, JPMorgan Chase & Co., Lenovo, LinkedIn, Marks & Spencer, McKesson, NetApp, NetSuite, Neustar, Nokia, NVIDIA, PayPal, Pearson Learning Technologies, Qualcomm, Rackspace, Salesforce, Siemens, Sony Mobile, Symantec, The Advisory Board, The Home Depot, Trainline, TomTom, U.S. Bank, Vanguard, Visa, VMware, Wells Fargo, and Zephyr Health.

By quantifying the practices of many different organizations, we can describe the common ground they share as well as the variation that makes each unique. Our aim is to help the wider software security community plan, carry out, and measure initiatives of their own. The BSIMM is not a “how to” guide, nor is it a one-size-fits-all prescription. Instead, the BSIMM is a reflection of the software security state of the art.

We recorded observations from these firms using our Software Security Framework (SSF, see Figure F.1) as the basis for our interviews. The SSF comprises four domains and 12 practices.

• In the governance domain, the strategy and metrics practice encompasses planning, assigning roles and responsibilities, identifying software security goals, determining budgets, and identifying metrics and gates. The compliance and policy practice focuses on identifying controls for compliance regimens such as PCI DSS and HIPAA, developing contractual controls such as service level agreements to help control COTS and out-sourced software risk, setting organizational software security policy, and auditing against that policy. Training has always played a critical role in software security because software developers and architects often start with very little security knowledge.

• The intelligence domain creates organization-wide resources. Those resources are divided into three practices. Attack models capture information used to think like an attacker: threat modeling, abuse case development and refinement, data classification, and technology-specific attack patterns. The security features and design practice is charged with creating usable security patterns for major security controls (meeting the standards defined in the next practice), building middleware frameworks for those controls, and creating and publishing other proactive security guidance. The standards and requirements practice involves eliciting explicit security requirements from the organization, determining which COTS to recommend, building standards for major security controls (such as authentication, input validation, and so on), creating security standards for technologies in use, and creating a standards review board.

• The SSDL Touchpoints domain is probably the most familiar of the four. This domain includes the essential software security best practices integrated into the SDLC. Two important software security capabilities are architecture analysis and code review. Architecture analysis encompasses capturing software architecture in concise diagrams, applying lists of risks and threats, adopting a process for review (such as STRIDE or Architectural Risk Analysis), and building an assessment and remediation plan for the organization. The code review practice includes use of code review tools, development of tailored rules, customized profiles for tool use by different roles (for example, developers versus auditors), manual analysis, and tracking/measuring results. The security testing practice is concerned with pre-release testing including integrating security into standard quality assurance processes. The practice includes use of black box security tools (including fuzz testing) as a smoke test in QA, risk-driven white box testing, application of the attack model, and code coverage analysis. Security testing focuses on vulnerabilities in construction.

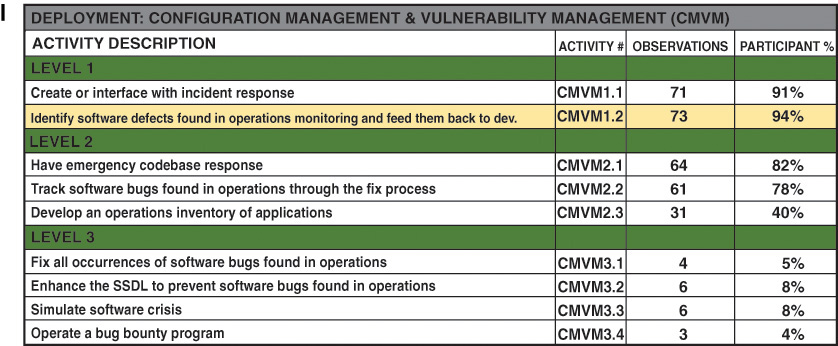

• By contrast, in the deployment domain, the penetration testing practice involves more standard outside-in testing of the sort carried out by security specialists. Penetration testing focuses on vulnerabilities in final configuration, and provides direct feeds to defect management and mitigation. The software environment practice concerns itself with OS and platform patching, web application firewalls, installation and configuration documentation, application monitoring, change management, and ultimately code signing. Finally, the configuration management and vulnerability management practice concerns itself with patching and updating applications, version control, defect tracking and remediation, and incident handling.

What is the BSIMM’s purpose? The BSIMM quantifies the activities carried out by real software security initiatives. Because these initiatives make use of different methodologies and different terminology, the BSIMM requires a framework that allows us to describe all of the initiatives in a uniform way. Our Software Security Framework (SSF) and activity descriptions provide a common vocabulary for explaining the salient elements of a software security initiative, thereby allowing us to compare initiatives that use different terms, operate at different scales, exist in different vertical markets, or create different work products.

We classify our work as a maturity model because improving software security almost always means changing the way an organization works—something that doesn’t happen overnight. We understand that not all organizations need to achieve the same security goals, but we believe all organizations can benefit from using the same measuring stick.

We created the BSIMM to learn how software security initiatives work and to provide a resource for people looking to create or improve their own software security initiative. In general, every firm creates its software security initiative with some high-level goals in mind. The BSIMM is appropriate if your business goals for software security include:

• Informed risk management decisions

• Clarity on what is “the right thing to do” for everyone involved in software security

• Cost reduction through standard, repeatable processes

• Improved code quality

By clearly noting objectives and by tracking practices with metrics tailored to your own initiative, you can use the BSIMM as a measurement tool to guide your own software security initiative.

Why do you call it BSIMM6? BSIMM is an “observational” model for which we started gathering data in 2008. That is, it is a descriptive model rather than a prescriptive model. BSIMM does not tell you what you should do; rather, it tells you what the BSIMM community is doing. Put another way, BSIMM is not a set of “best practices” as defined by some committee for some generic problem. Rather, BSIMM is a set of “actual practices” being performed on a daily basis by forward-thinking firms. We update the model approximately every year and BSIMM6 is the sixth such update.

What new terminology have you introduced? Nomenclature has always been a problem in computer security, and software security is no exception. A number of BSIMM terms have particular meanings for us and here are some of the most important:

• Activity—Actions carried out or facilitated by the SSG as part of a practice. We divide activities into three levels. Each activity is directly associated with an objective.

• Domain—One of the four major groupings in the Software Security Framework. The domains are governance, intelligence, SSDL touchpoints, and deployment.

• Practice—One of the twelve categories of BSIMM activities. Each domain in the Software Security Framework has three practices. Activities in each practice are divided into three levels corresponding to maturity.

• Satellite—A group of interested and engaged developers, architects, software managers, and testers who have a natural affinity for software security and are organized and leveraged by a software security initiative.

• Secure Software Development Lifecycle (SSDL)—Any SDLC with integrated software security checkpoints and activities.

• Security Development Lifecycle (SDL)—A term used by Microsoft to describe their Secure Software Development Lifecycle.

• Software Security Framework (SSF)—The basic structure underlying the BSIMM, comprising twelve practices divided into four domains.

• Software Security Group (SSG)—The internal group charged with carrying out and facilitating software security. We have observed that step one of a software security initiative is forming an SSG.

• Software Security Initiative—An organization-wide program to instill, measure, manage, and evolve software security activities in a coordinated fashion. Also known in the literature as an Enterprise Software Security Program (see Chapter 10 of the book, Software Security).
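The relationship between domains and practices defined above can be sketched as a small lookup table. This is an illustration only: the four domain names come from the definitions above, the twelve practice names follow the public BSIMM6 document rather than this appendix, and the dictionary layout and the `domain_of` helper are hypothetical.

```python
# Illustrative layout of the Software Security Framework (SSF).
# Practice names follow the public BSIMM6 document; the dict structure
# and the helper below are assumptions made for this sketch.
SSF = {
    "Governance": [
        "Strategy & Metrics",
        "Compliance & Policy",
        "Training",
    ],
    "Intelligence": [
        "Attack Models",
        "Security Features & Design",
        "Standards & Requirements",
    ],
    "SSDL Touchpoints": [
        "Architecture Analysis",
        "Code Review",
        "Security Testing",
    ],
    "Deployment": [
        "Penetration Testing",
        "Software Environment",
        "Configuration Management & Vulnerability Management",
    ],
}


def domain_of(practice: str) -> str:
    """Return the domain that contains the given practice."""
    for domain, practices in SSF.items():
        if practice in practices:
            return domain
    raise KeyError(practice)
```

For example, `domain_of("Security Testing")` returns `"SSDL Touchpoints"`.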

How should I use the BSIMM? The BSIMM is a measuring stick for software security. The best way to use the BSIMM is to compare and contrast your own initiative with the data we present. You can then identify goals and objectives of your own and look to the BSIMM to determine which further activities make sense for you.

The BSIMM data show that high maturity initiatives are well rounded—carrying out numerous activities in all twelve of the practices described by the model. The model also describes how mature software security initiatives evolve, change, and improve over time.

Instilling software security into an organization takes careful planning and always involves broad organizational change. By using the BSIMM as a guide for your own software security initiative, you can leverage the many years of experience captured in the model. You should tailor the implementation of the activities the BSIMM describes to your own organization (carefully considering your objectives). Note that no organization carries out all of the activities described in the BSIMM.

The following are the most common uses for the BSIMM:

• As a measuring stick to facilitate apples-to-apples comparisons between firms, business units, vertical markets, and so on

• As a way to measure an initiative’s improvement over time

• As a way to objectively gather data on current software security activity and use it to drive budgets and change

• As a way to understand software security maturity in vendors, business partners, acquisitions, and so on

• As a way to understand how the software security discipline is evolving worldwide

• As a way to become part of a private community that discusses issues and solutions

Who should use the BSIMM? The BSIMM is appropriate for anyone responsible for creating and executing a software security initiative. We have observed that successful software security initiatives are usually run by senior executives who report to the highest levels in an organization. These executives lead an internal group that we call the Software Security Group (SSG), charged with directly executing or facilitating the activities described in the BSIMM. We wrote the BSIMM with the leadership of the software security initiative (SSI) and the SSG in mind.

How do I construct a software security initiative? Of primary interest is identifying and empowering a senior executive to manage operations, garner resources, and provide political cover for a software security initiative. Grassroots approaches to software security sparked and led solely by developers and their direct managers have a poor record of accomplishment in the real world. Likewise, initiatives spearheaded by resources from an existing network security group often run into serious trouble when it comes time to interface with development groups. By identifying a senior executive and putting him or her in charge of software security directly, you address two management 101 concerns—accountability and empowerment. You also create a place in the organization where software security can take root and begin to thrive.

The second most important role in a software security initiative after the senior executive is that of the Software Security Group. Every single one of the 78 programs we describe in the BSIMM has an SSG. Successfully carrying out the activities in the BSIMM without an SSG is very unlikely (and we haven’t observed this in the field), so create an SSG as you start working to adopt the BSIMM activities. The best SSG members are software security people, but software security people are often impossible to find. If you must create software security types from scratch, start with developers and teach them about security.

Though no two of the 78 firms we examined had exactly the same SSG structure (suggesting that there is no one set way to structure an SSG), we did observe some commonalities that are worth mentioning. At the highest level of organization, SSGs come in three major flavors: those organized according to technical SDLC duties, those organized by operational duties, and those organized according to internal business units. Some SSGs are highly distributed across a firm, and others are very centralized and policy-oriented. Across all of the SSGs in our study, we often observe several common “subgroups”: people dedicated to policy, strategy, and metrics; internal “services” groups that (often separately) cover tools, penetration testing, and middleware development plus shepherding; incident response groups; groups responsible for training development and delivery; externally facing marketing and communications groups; and vendor-control groups.

Of course, all other stakeholders also play important roles. These include:

• Builders, including developers, architects, and their managers, must practice security engineering, ensuring that the systems we build are defensible and not riddled with holes. The SSG will interact directly with builders when they carry out the activities described in the BSIMM. As an organization matures, the SSG usually attempts to empower builders so that they can carry out most of the BSIMM activities themselves, with the SSG helping in special cases and providing oversight. In this version of the BSIMM, we often don’t explicitly point out whether a given activity is to be carried out by the SSG, by developers, or by testers, although in some cases we do attempt to clarify responsibilities in the goals associated with activity levels within practices. You should come up with an approach that makes sense for your organization and takes into account workload and your software lifecycle.

• Testers concerned with routine testing and verification should do what they can to keep a weather eye out for security problems. Some of the BSIMM activities in the Security Testing practice can be carried out directly by QA.

• Operations people must continue to design reasonable networks, defend them, and keep them up. As you will see in the Deployment domain of the SSF, software security does not end when software is shipped, deployed, or otherwise made available to clients and partners.

• Administrators must understand the distributed nature of modern systems and begin to practice the principle of least privilege, especially when it comes to applications they host or attach to as services in the cloud.

• Executives and middle management, including Line of Business owners and Product Managers, must understand how early investment in security design and security analysis affects the degree to which users will trust their products. Business requirements should explicitly address security needs. Any sizeable business today depends on software to work. Software security is a business necessity.

• Vendors, including those who supply COTS, custom software, and software-as-a-service, are increasingly subjected to SLAs and reviews (such as vBSIMM) that help ensure products are the result of a secure SDLC.

Am I now part of a BSIMM group? The firms participating in the BSIMM Project make up the BSIMM Community. A moderated private mailing list allows participating SSG leaders to discuss solutions with those who face the same issues, discuss strategy with someone who has already addressed an issue, seek out mentors from those who are farther along a career path, and band together to solve hard problems.

The BSIMM Community also hosts annual private conferences where representatives from each firm gather in an off-the-record forum to discuss software security initiatives.

The BSIMM website (http://bsimm.com) includes a credentialed BSIMM Community section where we post some information from the conferences, working groups, and mailing list-initiated studies.

Appendix B: BSIMM Activities

This appendix contains a summary table of activities for each of the 12 BSIMM practices.

The assigned levels are also embedded in the activity numeric identifiers (e.g., SM“2”.1 is a Strategy & Metrics activity at level 2). For more detail on each activity, read the BSIMM document at https://www.bsimm.com/download/ (registration not required). Level assignment for each activity stems from its frequency of occurrence in the BSIMM data pool. The most frequently observed activities are generally in level 1, while those activities observed infrequently are in level 3. Changes in the BSIMM data pool over time may result in promoting or demoting activities to other levels, such as promoting from level 2 to level 3 or demoting from level 2 to level 1.

When an activity moves to a new level, it receives a new numeric identifier and its previous identifier is retired. For example, if XX2.2 is promoted to level 3, XX2.2 is not reused later.
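Because the level is embedded in the identifier, it can be read back mechanically. The following is a minimal sketch, assuming identifiers always take the form of an uppercase practice abbreviation, a level digit from 1 to 3, a period, and a sequence number. The `ActivityId` type and `parse_activity_id` function are hypothetical names introduced here and are not part of the BSIMM.

```python
import re
from typing import NamedTuple


class ActivityId(NamedTuple):
    practice: str  # practice abbreviation, e.g. "SM" or "CMVM"
    level: int     # level 1, 2, or 3
    index: int     # sequence number within that level


# Assumed format: uppercase abbreviation, one level digit, ".", sequence number.
_PATTERN = re.compile(r"^([A-Z]+)([1-3])\.(\d+)$")


def parse_activity_id(text: str) -> ActivityId:
    """Split an identifier such as 'SM2.1' into practice, level, and index."""
    match = _PATTERN.match(text)
    if match is None:
        raise ValueError(f"not a BSIMM activity identifier: {text!r}")
    practice, level, index = match.groups()
    return ActivityId(practice, int(level), int(index))
```

Under these assumptions, `parse_activity_id("SM2.1").level` evaluates to `2`, which matches the Strategy & Metrics example above.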

Each BSIMM activity is unique. There are no cases where, for example, one activity requires the SSG to do something to 80% of the portfolio and another activity requires the SSG to do the same thing to a larger percentage of the portfolio. Therefore, regardless of the total effort expended, focusing on only one or two activities in a practice will not improve the total BSIMM score because we aren’t observing any additional activities.
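One way to see why extra effort on a single activity leaves the score unchanged is to model the score as a count of distinct activities observed. That scoring rule is an assumption drawn from the paragraph above, not a formula stated in this appendix, and the `scorecard` function and the sample observations are hypothetical.

```python
from collections import Counter


def scorecard(observations):
    """Count distinct observed activities, in total and per practice.

    Assumes the score is simply the number of distinct activities
    observed; repeated observations of one activity add nothing.
    """
    distinct = set(observations)
    # Strip the trailing level and sequence number ("2.1") to get the
    # practice abbreviation ("SM").
    per_practice = Counter(a.rstrip("0123456789.") for a in distinct)
    return len(distinct), dict(per_practice)


# Hypothetical data: CR1.4 is observed three times but counts once.
total, by_practice = scorecard(["CR1.4", "CR1.4", "CR1.4", "SM2.1"])
```

Here `total` is 2 and `by_practice` is `{"CR": 1, "SM": 1}`, regardless of how often CR1.4 was observed.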

As we observe new software security activities in the field, they become candidates for inclusion in the model. If we observe a candidate activity not yet in the model, we determine based on previously captured data and BSIMM mailing list queries how many firms probably carry out that activity. If the answer is multiple firms, we take a closer look at the proposed activity and figure out how it fits with the existing model. If the answer is only one firm, we table the candidate activity as too specialized. Furthermore, if the candidate activity replicates an existing activity or simply refines an existing activity, we drop it from consideration. If several firms carry out the activity today and the activity is not simply a refinement of an existing activity, we will consider it for inclusion in the next BSIMM release.
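The screening steps above can be summarized as a short decision function. This is a sketch under stated assumptions: the function name, parameter names, and return labels are hypothetical, and checking the firm count before the overlap test is our reading of the order in which the paragraph presents the steps.

```python
def triage_candidate(firm_count: int, overlaps_existing: bool) -> str:
    """Classify a candidate activity that is not yet in the model.

    firm_count: estimated number of firms carrying out the activity.
    overlaps_existing: True if the candidate replicates or merely
        refines an activity already in the model.
    """
    if firm_count < 2:
        # Observed at only one firm: tabled as too specialized.
        return "table"
    if overlaps_existing:
        # Replicates or refines an existing activity: dropped.
        return "drop"
    # Several firms and genuinely new: considered for the next release.
    return "consider"
```

For instance, `triage_candidate(5, overlaps_existing=False)` returns `"consider"`, while `triage_candidate(1, overlaps_existing=False)` returns `"table"`.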

The list below provides all changes to the BSIMM model since inception:

From BSIMM to BSIMM2, we made the following changes:

• T2.3 Require annual refresher became T3.4

• CR1.3 was removed from the model

• CR2.1 Use automated tools along with manual review became CR1.4

• SE2.1 Use code protection became SE3.2

• SE3.1 Use code signing became SE2.4

From BSIMM2 to BSIMM3, we made the following changes:

• SM1.5 Identify metrics and drive initiative budgets with them became SM2.5

• SM2.4 Require security sign-off became SM1.6

• AM2.3 Gather attack intelligence became AM1.5

• ST2.2 Allow declarative security/security features to drive tests became ST1.3

• PT2.1 Use pen testing tools internally became PT1.3

From BSIMM3 to BSIMM4, we made the following changes:

• T2.1 Role-based curriculum became T1.5

• T2.2 Company history in training became T1.6

• T2.4 On-demand CBT became T1.7

• T1.2 Security resources in onboarding became T2.6

• T1.4 Identify satellite with training became T2.7

• T1.3 Office hours became T3.5

• AM2.4 Build internal forum to discuss attacks became AM1.6

• CR2.3 Make code review mandatory became CR1.5

• CR2.4 Use centralized reporting became CR1.6

• ST1.2 Share security results with QA became ST2.4

• SE2.3 Use application behavior monitoring and diagnostics became SE3.3

• CR3.4 Automate malicious code detection added to model

• CMVM3.3 Simulate software crisis added to model

From BSIMM4 to BSIMM-V, we made the following changes:

• SFD2.3 Find and publish mature design patterns from the organization became SFD3.3

• SR2.1 Communicate standards to vendors became SR3.2

• CR3.1 Use automated tools with tailored rules became CR2.6

• ST2.3 Begin to build and apply adversarial security tests (abuse cases) became ST3.5

• CMVM3.4 Operate a bug bounty program added to model

From BSIMM-V to BSIMM6, we made the following changes:

• SM1.6 Require security sign-off became SM2.6

• SR1.4 Create secure coding standards became SR2.6

• ST3.1 Include security tests in QA automation became ST2.5

• ST3.2 Perform fuzz testing customized to application APIs became ST2.6
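Because a retired identifier is never reused, an activity can be followed across releases by chaining these renames. The sketch below encodes only the BSIMM2, BSIMM3, and BSIMM6 renames listed above (the BSIMM4 and BSIMM-V renames are omitted for brevity), and the `RELEASES`, `RENAMES`, and `trace` names are hypothetical.

```python
# Release order, oldest first.
RELEASES = ["BSIMM", "BSIMM2", "BSIMM3", "BSIMM4", "BSIMM-V", "BSIMM6"]

# Renames keyed by the release in which they took effect.
# Subset only: BSIMM4 and BSIMM-V renames are left out of this sketch.
RENAMES = {
    "BSIMM2": {"T2.3": "T3.4", "CR2.1": "CR1.4",
               "SE2.1": "SE3.2", "SE3.1": "SE2.4"},
    "BSIMM3": {"SM1.5": "SM2.5", "SM2.4": "SM1.6", "AM2.3": "AM1.5",
               "ST2.2": "ST1.3", "PT2.1": "PT1.3"},
    "BSIMM6": {"SM1.6": "SM2.6", "SR1.4": "SR2.6",
               "ST3.1": "ST2.5", "ST3.2": "ST2.6"},
}


def trace(activity_id, as_of):
    """Follow an identifier forward from release `as_of` to the latest release.

    Returns a list of (release, identifier) pairs, starting with the
    identifier as it stood in `as_of` and adding one pair per rename.
    """
    history = [(as_of, activity_id)]
    start = RELEASES.index(as_of)
    for release in RELEASES[start + 1:]:
        new_id = RENAMES.get(release, {}).get(activity_id)
        if new_id is not None:
            activity_id = new_id
            history.append((release, activity_id))
    return history
```

Tracing "Require security sign-off" with `trace("SM2.4", "BSIMM2")` yields SM2.4 in BSIMM2, SM1.6 in BSIMM3, and SM2.6 in BSIMM6, matching the entries in the lists above.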

On the following pages are tables showing the activities included in each BSIMM6 practice.

About Cigital

Cigital is one of the world’s largest application security firms. We go beyond traditional testing services to help organizations find, fix, and prevent vulnerabilities in the applications that power their business. Our holistic approach to application security offers a balance of managed services, professional services, and products tailored to fit your specific needs. We don’t stop when the test is over. Our experts also provide remediation guidance, program design services, and training that empower you to build and maintain secure applications.

Our proactive methods help clients reduce costs, speed time to market, improve agility to respond to changing business pressures and threats, and focus resources where they are needed most. Cigital’s managed services maximize client flexibility, while reducing operational friction and cost. Cigital gives organizations of any size access to the scale, security expertise, and practices needed to build a successful software security initiative.

Cigital is headquartered near Washington, D.C. with regional offices in the U.S., London, and India.

For more information, visit us at www.Cigital.com