Chapter 6. Metrics

In This Chapter

• 6.1 How to Define and Structure Metrics to Manage Cyber Security Engineering

• 6.2 Ways to Gather Evidence for Cyber Security Evaluation

6.1 How to Define and Structure Metrics to Manage Cyber Security Engineering

A measure is defined as “an amount or degree of something”; it is an operation for assigning a value to something.1 A metric is defined as “a standard of measurement”; it is the interpretation of assigned values.2 Scientist Lord Kelvin said, “When you can measure what you are speaking about, and express it in numbers, you know something about it; but when you cannot measure it, when you cannot express it in numbers, your knowledge is of a meager and unsatisfactory kind; it may be the beginning of knowledge, but you have scarcely in your thoughts advanced to the stage of science.”3

1. www.merriam-webster.com/dictionary/measure

2. www.merriam-webster.com/dictionary/metric

3. https://en.wikiquote.org/wiki/William_Thomson

Software assurance measurement assesses the extent to which a system or software item possesses desirable characteristics [NDIA 1999]. The desires could be expressed as one or all of the following: requirements, standards, compliance mandates, operational qualities, threats to be addressed, and risks to be avoided. The purpose of software assurance measurement is to “establish a basis for gaining justifiable confidence (trust, if you will) that software will consistently demonstrate one or more desirable properties” [Bartol 2009]. This basis includes properties such as quality, reliability, correctness, dependability, usability, interoperability, safety, fault tolerance, and security [Bartol 2009].

6.1.1 What Constitutes a Good Metric?

Jaquith posits that a good metric has three characteristics [Jaquith 2007]:

• Simple to explain and straightforward to determine so the meaning can be widely understood

• Expressible in time, money, or something that can be converted into these readily accepted parameters

• Readily structured for benchmarking so that change can be quickly identified and evaluated

Further, a good metric must be consistently measurable, able to be gathered at a low cost (preferably automated), preferably quantitative (expressed as a number or percentage), and contextually specific to be relevant for decision makers to take action.

Metrics such as the age of an automobile driver, which an insurance company uses to determine an annual cost for automobile insurance, are backed by decades of statistically analyzed data of actual accident rates for drivers of every age. Likewise, a doctor estimates a patient’s risk of a heart attack by looking at metrics such as age, deviation of weight from the norm, and whether the patient engages in behaviors such as smoking—health information that has been assembled, along with data on actual heart attacks, for decades across major sectors of the population.

Cyber security does not have this kind of historical data from which to structure standardized metrics, but that does not mean we cannot establish good metrics for decision making. We suggest following a well-established methodology called Goal-Question-Metric (GQM), introduced and described by Basili and Rombach [Basili 1984, 1988]. GQM derives meaningful metrics in five steps: define the objectives or goals, formulate questions relevant to each goal, collect data and indicators that inform the answers to those questions, define metrics, and report metrics (customized to target audiences).
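The goal-to-question-to-metric chain can be sketched as a small data structure. This is only an illustration of the idea, not something taken from the GQM literature: the class names, the example goal, the questions, and the metric names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Metric:
    name: str  # what is reported
    unit: str  # unit of measure, so values stay comparable over time


@dataclass
class Question:
    text: str
    metrics: List[Metric] = field(default_factory=list)


@dataclass
class Goal:
    statement: str
    questions: List[Question] = field(default_factory=list)

    def metrics_to_collect(self) -> List[str]:
        """Return the distinct metric names needed to answer every question."""
        seen: List[str] = []
        for question in self.questions:
            for metric in question.metrics:
                if metric.name not in seen:
                    seen.append(metric.name)
        return seen


# Hypothetical example: one goal, two questions, three metrics (one shared).
goal = Goal(
    statement="Reduce security defects escaping to release",
    questions=[
        Question(
            "How many security defects are found before release?",
            [Metric("security_defects_found_in_review", "count"),
             Metric("security_defects_found_in_test", "count")],
        ),
        Question(
            "How many security defects are found after release?",
            [Metric("security_defects_post_release", "count"),
             Metric("security_defects_found_in_test", "count")],
        ),
    ],
)

print(goal.metrics_to_collect())
```

Deriving the collection list from the questions, rather than listing metrics first, keeps every collected metric traceable to a goal it supports.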

To be useful, software assurance metric data must be consistently valid, retrievable, understandable, relevant, and cost-effective. Data must provide concrete values. Consequently, data collection must be based on consistent units of measure that can be normalized. Moreover, once data have been collected, they must be stored so that they retain their usefulness. These are the generic requirements for metrics-based assurance:

• Agreed-on metrics to define the assurance

• Scientifically derived data for each metric

• Sufficient justification for collecting data to account for the added costs of collection

Organizations must define and adopt a frame of reference before assessors can select the particular metrics to use for data collection. The frame of reference provides the practical justification for the metric selection process. A frame of reference is nothing more than the logic (or justification) for choosing the metrics that are eventually used. For instance, there are no commonly agreed-on measures to assess security; however, a wide range of standard metrics, such as defect counts or cyclomatic complexity, could be used to measure that characteristic. The metric that is selected depends on the context of its intended use.

The only rule that guides the selection and adoption process is that the metrics must be objectively measurable, produce meaningful data, and fit the frame of reference that has been adopted [Shoemaker 2013].

6.1.2 Metrics for Cyber Security Engineering

Cyber security is not something for which we can assemble a standard set of metrics, such as height, width, length, and weight. A seemingly endless list of metrics could be collected for cyber security, such as the number of security requirements, lines of validated code, vulnerabilities found by code checkers, process steps that include security considerations, hours needed to fix a security bug, and data validation tests passed and failed. Collecting each of these metrics involves time and effort, so the benefit to organizations, projects, and even individuals must be clear. We may already be collecting related metrics (e.g., for quality, safety, reliability, usability, and a whole host of other system and software qualities) that could tell us something about cyber security.

System and software engineering is composed of many aspects that can be evaluated independently but need to be considered collectively. We need security measures for the product, the processes used to create and maintain that product, the capabilities of the engineers performing the construction or those of the vendor for an acquisition, the trust relationships from the product to other products and the controls on those connections, and the operational environment in which the product executes. In addition, we need to establish that the engineering steps we are performing are moving toward the desired cyber security results.

Why is this so difficult? The responsibilities for these various segments can be scattered across teams, divisions, or even separate organizations, depending on how an engineering effort is structured. The control we have over each segment and access to information about it can vary widely and may impact measurement options. The needs for security and available solutions can also vary widely, depending on the operational mission, languages and frameworks used in development of the product, and operational infrastructure choices. There are currently no established standards for assignment of these responsibilities.

Let’s consider software measurement in general. Although it is possible for software to be both incorrect and secure, it is generally the case that incorrect software is also more likely to contain security vulnerabilities. Organizations should put a broad software measurement program in place and add assurance considerations to it rather than just consider software assurance measures in a vacuum. Software product measurement assesses two related but distinctly different attributes: functional correctness and structural correctness. Functional correctness measures how the software performs in its environment. Structural correctness assesses the actual product and process implementation.

Software functional correctness describes how closely the behavior of the software complies with or conforms to its functional design requirements or specifications. In effect, functional correctness characterizes how completely the piece of software achieves its contractual purpose. Functional correctness is typically implemented, enforced, and measured through software testing. The testing for correctness is done by evaluating the existing behavior of the software against a logical point of comparison. In essence, the logical point of comparison is the basis that a particular decision maker adopts to form a conclusion [IEEE 2000]. Logical points of comparison include diverse things such as “specifications, contracts, comparable products, past versions of the same product, inferences about intended or expected purpose, user or customer expectations, relevant standards, applicable laws, or other criteria” [Shoemaker 2013].

Measurements of structural correctness assess how well the software satisfies environmental or business requirements, which support the actual delivery of the functional requirements. For instance, structural correctness characterizes qualities such as the robustness or maintainability of the software, or whether the software was produced properly. Structural correctness is evaluated through the analysis of the software’s fundamental infrastructure and through the validation of its code against defined acceptability requirements at the unit, integration, and system levels. Structural measurement also assesses how well the architecture adheres to sound principles of software architectural design [OMG 2013].

Consider an example of how metrics were selected and applied.4 The SEI maintains detailed size, defect, and process data for more than 100 software development projects. The projects include a wide range of application domains and project sizes. Five of these projects focus on specific security- and safety-critical outcomes. Let’s take a look at the security results for these projects. Four of these projects reported no post-release safety-critical or security defects in the first year of operation, but the remaining project had 20 such defects. On one of the projects with no safety-critical or security defects, further study revealed that staff members had been trained to recognize common security issues in development and had been required to build this understanding into their development process. Metrics were collected using review and inspection checklists along with productivity data. These metrics enabled staff to accurately predict the effort and quality required for future components using actual historical data.

4. This example is drawn from Predicting Software Assurance Using Quality and Reliability Measures [Woody 2014].

The teams performed detailed planning for each upcoming code-release cycle and confirmation planning for the overall schedule. For the project with the largest code base, teams conducted a Monte Carlo simulation to identify a completion date at the 84th percentile (i.e., 84% of the simulated project runs finished earlier than this completion date). The team included estimates for the following:

• Incoming software change requests (SCRs) per week

• Triage rate of SCRs

• Percentage of SCRs closed

• Development work (SCR assigned) for a cycle

• SCR per developer (SCR/Dev) per week

• Number of developers

• Time to develop test protocols

• Software change requests per security verifier and validator (SCR/SVV) per week

• Number of verification persons

The team then committed to complete the agreed work for the cycle, planned what work was being deferred into future cycles, and projected that all remaining work would still fit the overall delivery schedule. The team tracked all defects throughout the projects in all phases (injection, discovery, and fix data). Developers used their actual data to plan subsequent work and reach agreement with management on the schedule, content, process used, and resources required so that the plan could proceed without compromising the delivery schedule.
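The source does not describe the simulation model the teams used, so the following is only a minimal sketch of how an 84th-percentile completion estimate could be derived from a Monte Carlo simulation. The triangular distributions, the backlog size, the arrival and closure rates, and the function names are all hypothetical, and only a few of the estimates listed above are represented.

```python
import math
import random


def simulate_weeks_to_complete(rng, backlog_scrs, developers):
    """Simulate one possible outcome; return the weeks needed to clear the backlog."""
    remaining = float(backlog_scrs)
    weeks = 0
    while remaining > 0:
        # Hypothetical weekly inputs, drawn as triangular(low, high, mode).
        # The team must be able to close more SCRs than arrive, or this never ends.
        incoming = rng.triangular(2, 8, 4)           # new SCRs arriving this week
        scr_per_dev = rng.triangular(0.5, 1.5, 1.0)  # SCRs closed per developer
        remaining += incoming - scr_per_dev * developers
        weeks += 1
    return weeks


def completion_percentile(weeks_per_run, percentile):
    """Return the smallest duration met by at least `percentile` percent of runs."""
    ordered = sorted(weeks_per_run)
    index = max(0, math.ceil(percentile * len(ordered) / 100) - 1)
    return ordered[index]


rng = random.Random(42)  # fixed seed so the sketch is repeatable
runs = [simulate_weeks_to_complete(rng, backlog_scrs=200, developers=20)
        for _ in range(5000)]
p84 = completion_percentile(runs, 84)
print(f"84% of simulated runs finished within {p84} weeks")
```

Committing to the 84th-percentile date rather than the median trades a later promised date for a lower chance of missing it.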

This example uses several measures collected from various aspects of engineering to assemble a basis of confidence constructed from historical information that can be used in making a decision. Each measure by itself is insufficient, but the collection can be useful. The metrics collected were selected based on information needs to support a specific goal (workload planning) and addressed a range of questions related to the goal, such as productivity of the resources performing the work and level of expected churn in the workload. This follows the GQM methodology [Basili 1984, 1988].

Another important element that can be measured is organizational capability to address cyber security. The National Institute of Standards and Technology (NIST) has developed a framework for improving critical infrastructure cyber security [NIST 2014]. The NIST framework focuses on the operational environment that influences the level of cyber security responsibility, enabling organizations to identify features or characteristics of the infrastructure that support cyber security as opposed to what needs to be engineered directly into the system and software. The framework provides an assessment mechanism that enables organizations to determine their current cyber security capabilities, set individual goals for a target state, and establish a plan for improving and maintaining cyber security programs. After using the assessment mechanism to determine goals and plans for cyber security, organizations can use metrics to monitor and evaluate results. Metrics are collected and analyzed to support a decision, and that decision in turn determines an action [Axelrod 2012].

Measurement frameworks for security are not new, but broad use of available standards in the lifecycle has been limited by the lack of system and software engineering involvement in security beyond authentication and authorization requirements. With the growing recognition of the ways in which engineering decisions impact security, metrics that support the monitoring and managing of these concerns should be incorporated into the lifecycle.

Standards such as NIST’s Performance Measurement Guide for Information Security [Chew 2008] propose a wide range of possible metrics that can support an information security measurement effort. These metrics are proposed to verify that selected security controls are appropriately implemented and that appropriate federal legislative mandates have been addressed. An international standard, ISO/IEC 27004, “provides guidance on the development and use of measures and measurement in order to assess the effectiveness of an implemented information security management system (ISMS).”5

5. www.iso.org/iso/catalogue_detail?csnumber=42106

6.1.3 Models for Measurement6

6. This section is drawn from Software Assurance Measurement—State of the Practice [Shoemaker 2013].

An organization might already have a measurement model that structures metrics to address selected goals. There are three major categories of measurement models: descriptive, analytic, and predictive.

Measurement models of any type can be created based on equations, or analysis of sets of variables, that characterize practical concerns about the software. A good measurement model allows users to fully understand the influence of all factors that affect the outcome of a product or process, not just primary factors. A good measurement model also has predictive capabilities; that is, given current known values, it predicts future values of those attributes with an acceptable degree of certainty.

Assurance models measure and predict the level of assurance of a given product or process. In that respect, assurance models characterize the state of assurance for any given piece of software. They provide a reasonable answer to questions such as “How much security is good enough?” and “How do you determine when you are secure enough?” In addition, assurance measurement models can validate the correctness of the software assurance process itself. Individual assurance models are built to evaluate everything from error detection efficiency to internal program failures, software reliability estimates, and degree of availability testing required. Assurance models can also be used to assess the effectiveness and efficiency of the software management, processes, and infrastructure of an organization.

Assurance models rely on maximum likelihood estimates, numerical methods, and confidence intervals to make their assumptions. Therefore, it is essential to validate the correctness of a model. Validation involves applying the model to a set of historical data and comparing the model’s predicted outcomes to the actual results. The output from a model should be a metric, and that output should be usable as input to another model.
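The validation step can be sketched as follows: apply the model to historical records and compare predicted outcomes to actual results. The model here (a fixed defect density multiplied by size), the historical records, and the function names are hypothetical and chosen only to make the comparison concrete.

```python
def predict_defects(size_kloc, defects_per_kloc=5.0):
    """Hypothetical model: predicted defects grow linearly with code size."""
    return size_kloc * defects_per_kloc


def mean_absolute_percentage_error(model, records):
    """Average relative gap between predicted and actual values, in percent."""
    errors = [abs(model(size) - actual) / actual for size, actual in records]
    return 100 * sum(errors) / len(errors)


# Illustrative records only: (size in KLOC, defects actually reported).
historical = [(10.0, 48), (25.0, 130), (40.0, 190)]

error_pct = mean_absolute_percentage_error(predict_defects, historical)
print(f"Mean absolute percentage error: {error_pct:.1f}%")
```

Whether a given error level is acceptable depends on the decision the model is meant to support; the threshold is an organizational choice, not a property of the model.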

Assurance modeling provides a quantitative estimate of the level of trust that can be assumed for a system. Models that predict the availability and reliability of a system include estimates such as initial error counts and error models incorporating error generation, uptime, and the time to close software error reports.

Software error detection models characterize the state of debugging the system, which encompasses concerns such as the probable number of software errors that are corrected at a given time in system operation, plus methods for developing programs with low error content and for developing measures of program complexity.

Models of internal program structure include metrics such as the number of paths (modules) traversed, the number of times a path has been traversed, and the probability of failure, as well as advice about any automated testing that might be required to execute every program path.

Testing effectiveness models and techniques provide an estimate of the number of tests necessary to execute all program paths and statistical test models. Software management and organizational structure models contain statistical measures for process performance. These models can mathematically relate error probability to the program testing process and the economics of debugging due to error growth.

Because assurance is normally judged against failure, the use of a measurement model for software assurance requires a generic and comprehensive definition of what constitutes “failure” in a particular measurement setting. That definition is necessary to incorporate considerations of every type of failure at every severity level. Unfortunately, up to this point, a large part of software assurance research has been devoted to defect identification, despite the fact that defects are not the only type of failure. This narrow definition is due partly to the fact that defect data is more readily available than other types of data, but it is mostly due to the lack of popular alternatives for modeling the causes and consequences of failure for a software item. With the advent of exploits against software products that both run correctly and fulfill a given purpose, the definition of failure must be expanded. That expansion would include incorporating into the definition of failure intentional (backdoors) or unintentional (defects) vulnerabilities that can be exploited by an adversary as well as the mere presence of malicious objects in the code.

Existing security standards can be leveraged to fill this measurement need [ISO/IEC 2007]. Practical Measurement Framework for Software Assurance and Information Security [Bartol 2008] identifies similarities and differences among five different models for measurement and would be a useful resource to assist in selecting measurements appropriate to an organization’s cyber security engineering needs.

What Decisions About Cyber Security Need to Be Supported by Metrics?

In this chapter we have focused on metrics for development planning with expected cyber security results. Let’s now take a look at software that is being reused, as opposed to software that is being newly developed. If we plan to reuse software such as a commercial product, open source, or code developed for another purpose, is there something inherent in product cyber security that would motivate us to choose one software product over another? If we can scan the code, we can use software tools to find out what known vulnerabilities the code contains, as cataloged in the Common Vulnerabilities and Exposures (CVE) list, as well as the severity of each vulnerability, scored according to the Common Vulnerability Scoring System (CVSS). We could use other tools to evaluate the binaries for malware. Each piece of information becomes evidence that supports or refutes a claim about an option.

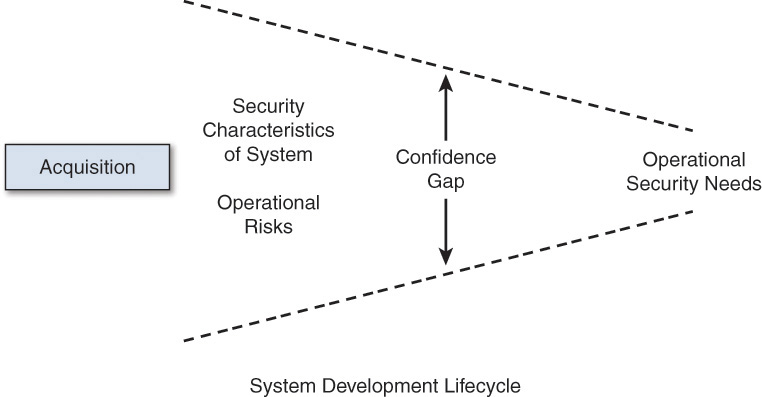

At the start of a development cycle, we have a limited basis for determining our confidence in the behavior of the delivered system; that is, we have a large gap between our initial level of confidence and the desired level of confidence. Over the development lifecycle, we need to reduce that confidence gap, as shown in Figure 6.1, to reach the desired level of confidence for the delivered system [Woody 2014].7

7. This section is drawn from Predicting Software Assurance Using Quality and Reliability Measures [Woody 2014].

In Chapter 1, “Cyber Security Engineering: Lifecycle Assurance of Systems and Software,” we discussed confidence in the engineering of software. To review, we must have evidence to support claims that a system is secure. An assurance case is a documented body of evidence that provides a convincing and valid argument that a specified set of critical claims about a system’s properties are adequately justified for a given application in a given environment. Quality and reliability can be incorporated as evidence into an argument about predicted software security. Details about an assurance case structure and how to build an assurance case can be found in Predicting Software Assurance Using Quality and Reliability Measures [Woody 2014].

6.2 Ways to Gather Evidence for Cyber Security Evaluation

6.2.1 Process Evidence

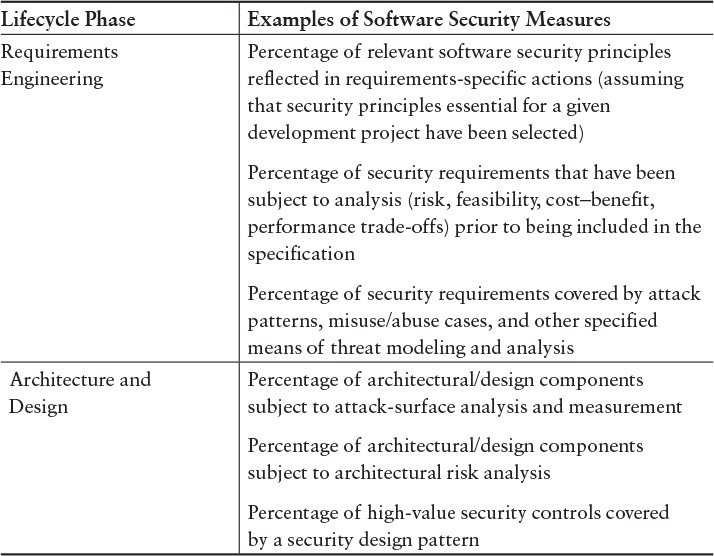

Table 6.1 presents a few examples of lifecycle-phase measures that can aid in demonstrating required levels of software security. Increases in percentages for these measures over time can indicate an expanded focus on security and process improvement for performing security analysis, but they provide no evidence about the actual product. A more extensive list can be found in Appendix G, “Examples of Lifecycle-Phase Measures.” Because of the volume of data that needs to be handled to produce effective measures across an organization, consideration of automation for consistency in collection and effectiveness in monitoring and management is critical. Without automation, labor costs can be expected to be very high and the ability to achieve timely input will be greatly reduced.

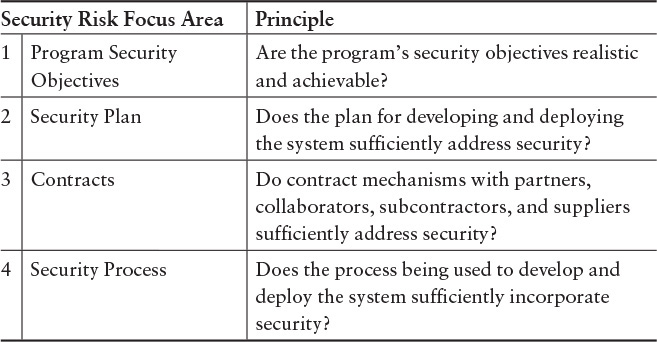

Alberts, Allen, and Stoddard [Alberts 2010] suggest a set of questions to use to assess key lifecycle areas of security risk. Security experts identified 17 process-related areas from across the lifecycle as being important for security. The associated security questions provide a means of evaluating security risk. Many of the terms within the questions, such as “sufficient,” are not absolute and must be defined based on organization-specific criteria. For example, addressing a frequently occurring security problem may mean once a year for one organization and once a minute for another, depending on what the organization is doing. Table 6.2 shows an extract of a full table provided in Appendix G.

Table 6.2 Examples of Questions for Software Security [Alberts 2010]

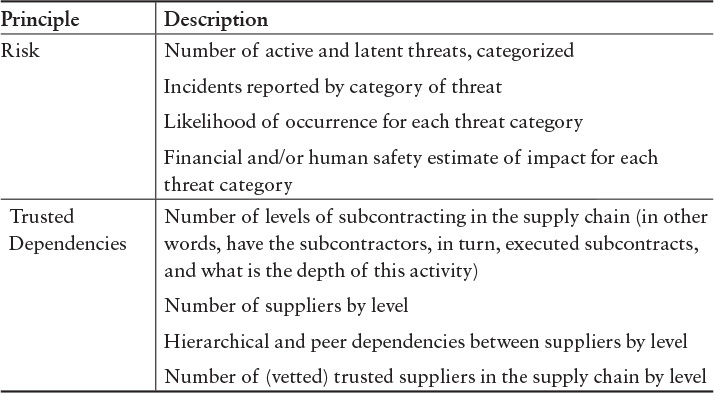

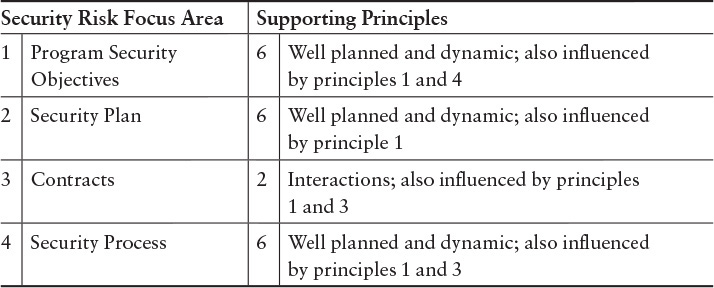

Process-related areas can map to the updated security principles described earlier. Table 6.3 is an extract of such a mapping, from the full table presented in Appendix G.

Table 6.3 Mapping Between Security Risk Focus Areas and Principles for Software Security [Mead 2013b]

A framework of measures linked to the seven principles of evidence defined in Common Weakness Enumeration [MITRE 2014] can provide evidence that organizations are effectively addressing the security risk focus areas [Mead 2013b]. Table 6.4 shows an extract of a full table from Appendix G.

Table 6.4 Example Measures Based on the Seven Principles of Evidence [MITRE 2014]

If the subcontractor has a unique needed skill set, appropriate nondisclosure agreements may provide the needed level of confidence. On the other hand, if the subcontractor is known to have engaged in questionable practices (an extreme example would be accepting bribes to provide inside information about competitive products to its customers), it might be best to replace that subcontractor with another company, even if the replacement company is less experienced in these types of products. Another option would be to decide to do that part of the development in-house, thus reducing the risk of industrial espionage. Of course, this last option assumes that GoFast’s employees have been vetted and deemed trustworthy.

6.2.2 Evidence from Standards

Evidence of effective cyber security engineering can be assembled by leveraging security standards that the organization has chosen to follow. NIST Special Publication 800-53 [NIST 2013] provides a wide range of security and privacy controls. These controls are widely used as implementation mechanisms to meet security requirements. NIST 800-53A [NIST 2014a] is a companion document to NIST 800-53 that describes how an audit could be conducted to collect evidence about the controls. The audit focuses on determining the extent to which the organization has chosen to implement the controls. Information is not provided for determining how well the implementation is performing. NIST 800-55 [NIST 2008], as noted earlier, has a range of specific security measures that can be applied across the lifecycle.

Alberts, Allen, and Stoddard [Alberts 2012b] provide a description of measures derived from the security controls described in NIST 800-53 [NIST 2013] that could be assembled to evaluate the effectiveness of a control. Another useful standard, detailed in Practical Measurement Framework for Software Assurance and Information Security [Bartol 2008], is ISO/IEC 27002, which contains a controls catalog, an ISO equivalent of NIST 800-53.8

8. www.iso27001security.com/html/27004.html

Product Evidence

Combining the process perspective with a product focus would assemble stronger evidence to support confidence that cyber security requirements are met. Practical Measurement Framework for Software Assurance and Information Security [Bartol 2008] provides an approach for measuring how effectively an organization can achieve software assurance goals and objectives at an organizational, program, or project level. This framework incorporates existing measurement methodologies and is intended to help organizations and projects integrate SwA measurement into their existing programs. (Refer to Chapter 3, “Secure Software Development Management and Organizational Models.”)

More recent engineering projects described by Woody, Ellison, and Nichols [Woody 2014] demonstrate that a disciplined lifecycle approach with quality defect identification and removal practices combined with code analysis tooling provides the strongest results for building security into software and systems. Defect prediction models are typically informed by measures of the software product at a specific time, longitudinal measures of the product, or measures of the development process used to build the product. Metrics typically used to analyze quality problems can include the following:

• Static software metrics, such as new and changed LOC, cyclomatic complexity,9 counts of attributes, parameters, methods and classes, and interactions among modules

9. http://en.wikipedia.org/wiki/Cyclomatic_complexity

• Defect counts, usually found during testing or in production, often normalized to size, effort, or time in operation

• Software change metrics, including frequency of changes, LOC changed, or longitudinal product change data, such as number of revisions, frequency of revisions, numbers of modules changed, or counts of bug fixes over time

• Process data, such as activities performed or effort applied to activities or development phases
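Normalizing defect counts to size, as mentioned in the list above, can change which component looks most troublesome. The sketch below illustrates this with hypothetical module names and numbers; the function name is also an assumption.

```python
def defect_density(defects, kloc):
    """Defects per thousand lines of code (KLOC)."""
    if kloc <= 0:
        raise ValueError("size must be positive")
    return defects / kloc


# Hypothetical data: module name -> (defects found, size in KLOC).
modules = {"parser": (30, 60.0), "auth": (12, 4.0)}

densities = {name: defect_density(defects, kloc)
             for name, (defects, kloc) in modules.items()}

most_defects = max(modules, key=lambda name: modules[name][0])
highest_density = max(densities, key=densities.get)
print(most_defects, highest_density)
```

In this made-up data the larger module has more defects in absolute terms, but the smaller one has the higher density, which is why raw counts alone can mislead.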

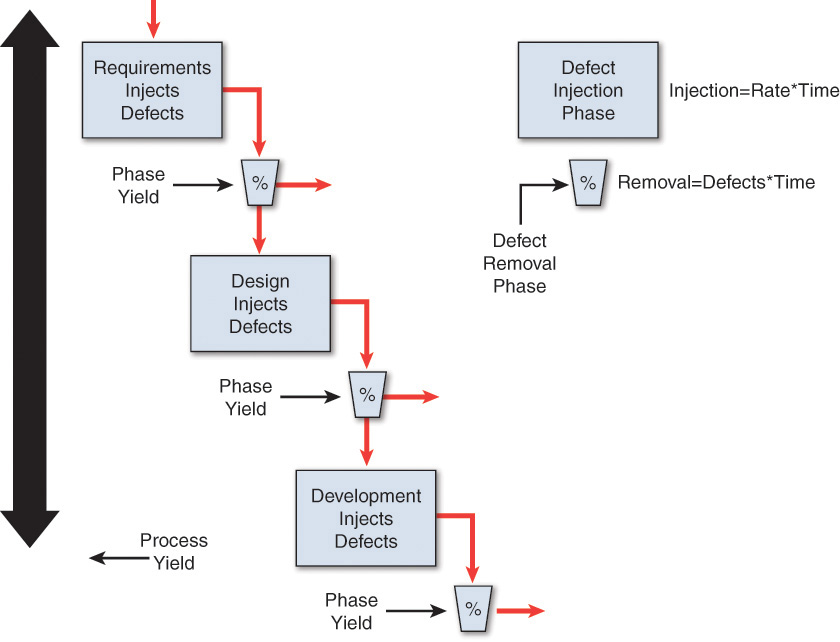

Many models currently in use rely on static or longitudinal product measures, such as code churn. Other approaches use historic performance or experience based on defect injection and removal (generally described using a “tank and filter” metaphor), as shown in Figure 6.2, to monitor and model the defect levels during the development process. The connection between defects, which are typically related to quality, and security vulnerabilities is still an active area of research. Many of the common weakness enumerations (CWEs) cited as the primary reasons for security failures are closely tied to recognized quality issues. Data shows vulnerabilities to be 1% to 5% of reported defects [Woody 2014]. There is wide agreement that quality problems are strong evidence for security problems.
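The “tank and filter” idea can be sketched in a few lines: each phase adds injected defects to the tank, and each removal activity filters out a fraction of what the tank holds. The phase names, injection counts, and removal yields below are hypothetical. Applying the 1% to 5% ratio to the defects that escape is also an assumption made for illustration, since the ratio cited above is stated for reported defects.

```python
def run_tank_and_filter(phases):
    """Track defects through phases given as (name, injected, removal_yield)."""
    in_tank = 0.0
    history = []
    for name, injected, removal_yield in phases:
        in_tank += injected                 # defects injected during this phase
        removed = in_tank * removal_yield   # fraction caught by this phase's filter
        in_tank -= removed
        history.append((name, removed, in_tank))
    return in_tank, history


# Hypothetical phases: (name, defects injected, fraction removed).
phases = [
    ("design", 40, 0.50),
    ("code", 60, 0.50),
    ("test", 0, 0.75),
]

escaped, history = run_tank_and_filter(phases)

# Assumption: 1% to 5% of the remaining defects are vulnerabilities.
low_estimate = escaped * 0.01
high_estimate = escaped * 0.05
print(f"{escaped:.0f} defects escape; "
      f"{low_estimate:.1f} to {high_estimate:.1f} may be vulnerabilities")
```

The sketch makes the dependence on removal yield visible: lowering any filter's yield raises the count of escaped defects, and with it the estimated vulnerabilities.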

However, focusing just on a product to assure that it has few defects is also insufficient. Removal of defects depends on assuring our capability to find them. Positive results from security testing and static code analysis are often provided as evidence that security vulnerabilities have been reduced, but it is a mistake to rely on them as the primary means for identifying defects. The omission of quality practices, such as inspections, can lead to defects exceeding the capabilities of existing code analysis tools [Woody 2014].

Organizations must establish product relevance before applying the metrics to cyber security. In the research described above, the product was a medical device, and the security requirements being evaluated were assigned to the whole device. These same measures could be performed on a single software application running on this device. The relationship of the component to the whole must be determined before it is possible to observe the utility of applying metrics collected on a component to the composition. For example, a component that handles the data input and output for the device represents a critical part of the cyber security concern for the device. Measures collected on a USB device driver on the device are not representative of the composition.

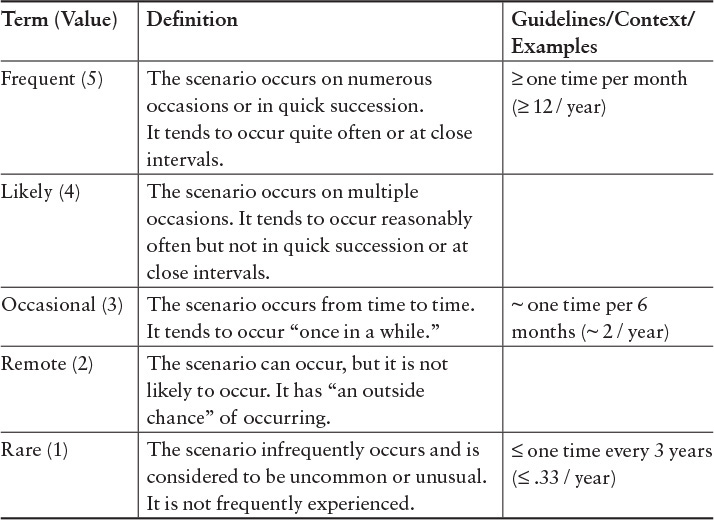

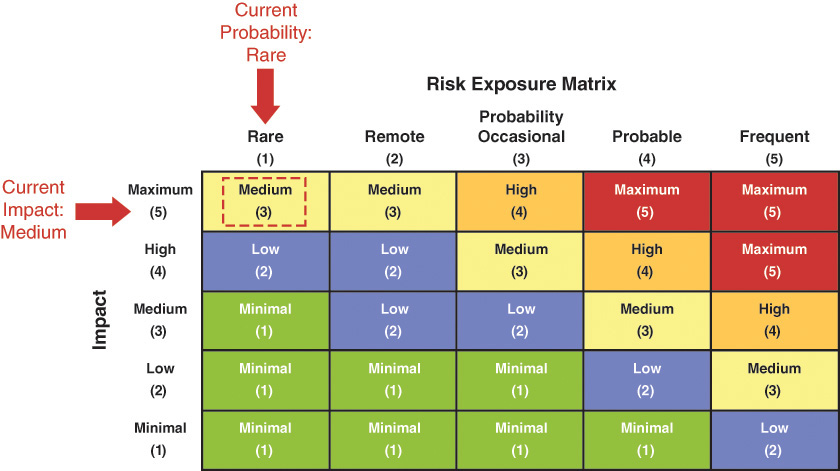

Evaluating the Evidence

Next, we need to be sufficiently specific about the definitions of the words we use to provide consistent and repeatable usage. As an example, consider the word often. In some contexts, this word may mean daily or weekly and in others it may mean multiple times per second. Table 6.5 provides an example of structuring terms related to frequency to establish consistency.

Measures can be qualitative or quantitative; both are useful, but the assigned value (shown in parentheses in Table 6.5) depends on the question being asked and how well the measures support the decision.10 Is it sufficient to have a relative frequency of occurrence, or does the decision demand a specific count? There are trade-offs to consider in making this determination: The more exact the information, the more costly it is to gather.11

10. NIST 800-55 [NIST 2008] also provides a terminology structure to help clarify terms like sufficient that can be easily interpreted many ways.

11. In his book Engineering Safe and Secure Software Systems, Axelrod provides an interesting comparison of qualitative and quantitative measurements for an automobile in Table 7.1 [Axelrod 2012].
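One way to make a term such as often repeatable is to bind each term to an ordered value and an explicit numeric range. The sketch below is hypothetical: the term names, the assigned values, and the thresholds are assumptions for illustration and are not the contents of Table 6.5.

```python
from enum import IntEnum


class Frequency(IntEnum):
    """Hypothetical ordered frequency terms with assigned values."""
    RARE = 1
    OCCASIONAL = 2
    FREQUENT = 3


def classify_frequency(events_per_year):
    """Map an observed rate onto a term using organization-defined thresholds."""
    if events_per_year < 1:
        return Frequency.RARE
    if events_per_year < 12:
        return Frequency.OCCASIONAL
    return Frequency.FREQUENT


for rate in (0.5, 4, 365):
    print(rate, classify_frequency(rate).name)
```

An organization whose events occur many times per second would choose very different thresholds; the point is only that the thresholds are written down once and applied consistently.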

6.2.3 Measurement Management12

12. This section is drawn from Measures for Managing Operational Resilience [Allen 2011].

Because software is not created once and left unchanged, we cannot make measurements once and assume that they are sufficient for all ongoing needs. The same is true of cyber security information for the software. If we want to show change over time, we must collect and assemble measurements and structure them for future use. Measurement management ensures that suitable measures are created and applied as needed in the lifecycle of the software or system. Measurement management requires the formulation of coherent baselines of measures that accurately reflect product characteristics and lifecycle management needs. Measurement baselines must be capable of being revised as needed to track the evolving product throughout its lifecycle and evaluate relationships with similar products. Using the appropriate set of baselines, the measurement management process ensures that the right set of metrics is always in place to produce the desired measurement outcomes. This approach is consistent with existing international security measurement standards, such as ISO/IEC 27004 and ISO/IEC 15939, and security measurement guidance, such as Practical Measurement Framework for Software Assurance and Information Security [Bartol 2008].

The measurement management process collects data about discrete aspects of how a product, a process, or both are functioning to support operational and strategic decision making about the product. The measurement management process should provide data-driven feedback in real time or near real time to project managers, development managers, and technical staff. The National Vulnerability Database13 is one of the resource baselines publicly available for cyber security. This database contains standards-based vulnerability data that can be used by automated tools to verify compliance of software product implementations.

13. www.nist.gov/itl/csd/stvm/nvd.cfm

Managing Through Measurement Baselines

Management control is typically enforced through measurement baselines. A measurement baseline is a collection of discrete metrics that characterize an item of interest for a target of measurement. Using a measurement baseline, it is possible to make meaningful comparisons of product and process performance to support management and operational decision making. Such comparisons can be vital to improving products and processes over time through the use of predictive and stochastic assurance models for decision making. Potential metrics for supporting such an effort might include basic measures such as defect data, productivity data, and threat/vulnerability data. An evolving baseline model provides managers with the necessary assurance insight, cost controls, and business information for any process or product, and it allows value tracking for the assurance process.

Formulating a measurement baseline involves four steps:

1. The organization identifies and defines the target of measurement (the goal aspect of the GQM model, as noted earlier).

2. The organization establishes the requisite questions that define needed metrics to assure the desired measurement objectives; the organization assembles the relevant measures in a formally structured and controlled baseline.

3. The organization establishes the comparative criteria for tracking performance. Those criteria establish what will be learned from analyzing the data from each metric over time.

4. The organization carries out the routine measurement data collection and analysis activities, using the baseline metrics.
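The four steps above can be sketched in code. The following Python example is a minimal illustration, not a prescribed implementation: the class names (Metric, MeasurementBaseline), the sample metric open_vulnerabilities, and the use of a simple upper threshold as the comparative criterion are all assumptions made for this sketch.

```python
from dataclasses import dataclass, field


@dataclass
class Metric:
    """One metric in a baseline (hypothetical structure for illustration)."""
    name: str
    question: str        # step 2: the question this metric helps answer
    threshold: float     # step 3: assumed criterion, value should not exceed this
    observations: list = field(default_factory=list)

    def record(self, value):
        # Step 4: routine data collection appends one observation.
        self.observations.append(value)

    def within_criterion(self):
        # True when the latest observation satisfies the comparative criterion.
        return bool(self.observations) and self.observations[-1] <= self.threshold

    def change_since_first(self):
        # Difference between the latest and the first observation.
        if len(self.observations) < 2:
            return 0
        return self.observations[-1] - self.observations[0]


@dataclass
class MeasurementBaseline:
    """A controlled collection of metrics for one target of measurement."""
    target: str          # step 1: the target (goal) of measurement
    metrics: dict = field(default_factory=dict)

    def add_metric(self, metric):
        self.metrics[metric.name] = metric

    def record(self, name, value):
        self.metrics[name].record(value)

    def report(self):
        # Map each metric name to (criterion met?, change over time).
        return {
            name: (m.within_criterion(), m.change_since_first())
            for name, m in self.metrics.items()
        }


# Example usage with made-up data: three successive collection cycles.
baseline = MeasurementBaseline(target="Payment service release")
baseline.add_metric(Metric(
    name="open_vulnerabilities",
    question="How many known vulnerabilities remain unresolved?",
    threshold=5,
))
for count in (12, 8, 4):
    baseline.record("open_vulnerabilities", count)

report = baseline.report()
print(report)
```

Keeping the full observation history, rather than only the latest value, is what allows the baseline to show change over time and to be revised as the product evolves.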

Examples showing the results of this approach for operational resilience can be found in Measures for Managing Operational Resilience [Allen 2011],14 which defines 10 strategic measures to support organizational strategic objectives. The following is an example of an organizational objective along with the measures that support it:

14. This report and others in the SEI’s Resilience Measurement and Analysis collection can be found at http://resources.sei.cmu.edu/library/asset-view.cfm?assetid=434555.

Objective: In the face of realized risk, the operational resilience management (ORM) system ensures the continuity of essential operations of high-value services and their associated assets. Realized risk may include an incident, a break in service continuity, or a human-made or natural disaster or crisis.

Measure 9: Probability of delivered service through a disruptive event

Measure 10: For disrupted, high-value services with a service continuity plan, percentage of services that did not deliver service as intended throughout the disruptive event

Consider using “near misses” and “incidents avoided” as predictors of successful disruptions in the future.
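To make Measures 9 and 10 concrete, the following Python sketch computes both from a small set of made-up records. It rests on assumptions that do not come from [Allen 2011]: the record structure (ServiceEventRecord) is hypothetical, and Measure 9 is approximated as the observed relative frequency of services that delivered as intended, which is only one possible way to estimate a probability.

```python
from dataclasses import dataclass


@dataclass
class ServiceEventRecord:
    """Outcome for one service during one disruptive event (hypothetical)."""
    service: str
    high_value: bool
    has_continuity_plan: bool
    delivered_as_intended: bool


def probability_of_delivered_service(records):
    """Measure 9, estimated here as an observed relative frequency."""
    if not records:
        return None  # no data, so no estimate
    delivered = sum(r.delivered_as_intended for r in records)
    return delivered / len(records)


def pct_planned_services_not_delivered(records):
    """Measure 10: among disrupted high-value services that have a service
    continuity plan, the percentage that did not deliver as intended."""
    eligible = [r for r in records if r.high_value and r.has_continuity_plan]
    if not eligible:
        return None
    failed = sum(not r.delivered_as_intended for r in eligible)
    return 100.0 * failed / len(eligible)


# Made-up records for a single disruptive event.
records = [
    ServiceEventRecord("payments", True, True, True),
    ServiceEventRecord("email", True, True, False),
    ServiceEventRecord("wiki", False, False, False),
    ServiceEventRecord("dns", True, True, True),
]

measure_9 = probability_of_delivered_service(records)
measure_10 = pct_planned_services_not_delivered(records)
print(f"Measure 9: {measure_9:.2f}")
print(f"Measure 10: {measure_10:.1f}%")
```

Returning None when no records qualify avoids reporting a misleading 0 percent for a period in which nothing was measured.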