Chapter 4. Engineering Competencies

with Tom Hilburn and Dan Shoemaker

In This Chapter

• 4.1 Security Competency and the Software Engineering Profession

• 4.2 Software Assurance Competency Models

• 4.3 The DHS Competency Model

• 4.4 The SEI Software Assurance Competency Model

4.1 Security Competency and the Software Engineering Profession1

1. This section is drawn from The Software Assurance Competency Model: A Roadmap to Enhance Individual Professional Capability [Mead 2013a].

Modern society increasingly relies on software systems that put a premium on quality and dependability. The extensive use of the Internet and distributed computing has made software security an ever more prominent and serious problem. So, the interest in and demand for software security specialists have grown dramatically in recent years. The Department of Homeland Security (DHS), the Department of Defense (DoD), the Software Engineering Institute (SEI), and other government, commercial, and educational organizations have expressed a critical need for the education and development of software security specialists.

However, to support this need, we must address some key questions. What background and capability does a software security specialist need? How do individuals assess their capability and preparation for software security work? What’s the career path to increased capability and advancement in this area of software development? In this section, we hope to answer these questions and provide guidance to career seekers in software security engineering. These answers might also help employers determine their software security needs and assess and improve their employees’ software security capability.

Software was with us long before the creation of FORTRAN [Backus 1957]. The roots of software engineering as a profession go back to the late 1960s and early 1970s, with the emergence of structured programming, structured design, and process models such as the Waterfall model [Royce 1970]. This means that software engineering has been a regular profession for at least 42 years.

In the past four decades, there have been numerous general attempts to define what a competent software professional should look like. Examples range from Humphrey’s first published work on capability [Humphrey 1989], through the effort to define software engineering as a profession and its accompanying Software Engineering Body of Knowledge [Abran 2004], to the People Capability Maturity Model [Curtis 2002].

The success of these efforts is still debatable, but one thing is certain: Up to this point, there have been only a few narrowly focused attempts to define the professional qualities needed to develop a secure software product. The Software Assurance (SwA) Competency Model was developed to address this missing element of the profession.

The obvious question, given all of this prior work, is “Why do we need one more professional competency model?” The answer lies in the significant difference between the competencies required to produce working code and those that are needed to produce software free from exploitable weaknesses. That difference is underscored by the presence of the adversary.

In the 1990s, it was generally acceptable for software to have flaws, as long as those flaws did not impact program efficiency or the ability to satisfy user requirements. So development and assurance techniques focused on proper execution with no requirements errors. Now, bad actors can exploit an unintentional defect in a program to cause all kinds of trouble. So although they are related in some ways, the professional competencies that are associated with the assurance of secure software merit their own specific framework.

A specific model for software assurance competency provides two advantages for the profession as a whole. First and most importantly, a standard model allows prospective employers to define the fundamental capabilities needed by their workforce. At the same time, it allows organizations to establish a general, minimum set of competency requirements for their employees and, beyond that baseline, to tailor an exact set of competency requirements for any given project.

Second, from the standpoint of the individual worker, a competency model provides software assurance professionals with a standard roadmap that they can use to improve performance by adding the specific skills needed to obtain a position and climb the competency ladder for their profession. For example, a new graduate starting in an entry-level position can map out a path for enhancing their skills and planning their career advances as a software assurance professional. In many respects, this roadmap feature makes a professional competency model a significant player in the development of the workforce of the future, which, of course, is of interest to software engineering educators and trainers.

4.2 Software Assurance Competency Models2

2. This section is drawn from Software Assurance Competency Model [Hilburn 2013a].

This section surveys the competency models that informed the SEI’s Software Assurance Competency Model, which Section 4.4 discusses in detail. The following influential and useful sources are some of the competency models and supporting materials that were studied and analyzed in the development of the SEI’s SwA Competency Model:

• Software Assurance Professional Competency Model (DHS)—Focuses on 10 SwA specialty areas (e.g., Software Assurance and Security Engineering and Information Assurance Compliance) and describes four levels of behavior indicators for each specialty area [DHS 2012].

• Information Technology Competency Model (Department of Labor)—Uses a pyramid model to focus on a tiered set of generic non-technical and technical competency areas (e.g., Personal Effectiveness Competencies for Tier 1 and Industry-Wide Technical Competencies for Tier 4). Specific jobs or roles are not designated [DoLETA 2012].

• A Framework for PAB Competency Models (Professional Activities Board [PAB], IEEE Computer Society)—Provides an introduction to competency models and presents guidelines for achieving consistency among competency models developed by the PAB. Provides a generic framework for a professional that can be instantiated with specific knowledge, skills, and effectiveness levels for a particular computing profession (e.g., Software Engineering practitioner) [IEEE-CS 2014].

• “Balancing Software Engineering Education and Industrial Needs”—Describes a study conducted to help both academia and the software industry form a picture of the relationship between the competencies of recent graduates of undergraduate and graduate software engineering programs and the competencies needed to perform as a software engineering professional [Moreno 2012].

• Competency Lifecycle Roadmap: Toward Performance Readiness (Software Engineering Institute)—Provides an early look at the roadmap for understanding and building workforce readiness. This roadmap includes activities to reach a state of readiness: Assess, Plan, Acquire, Validate, and Test Readiness [Behrens 2012].

Other work on competency models includes works from academia and government [Khajenoori 1998; NASA 2016]. Other related work, although it was not reviewed prior to development of the SEI’s Software Assurance Competency Model, includes the Skills Framework for the Information Age (SFIA), an international effort related to competencies in information technology.3

4.3 The DHS Competency Model4

4. This section is drawn from Software Assurance Competency Model [Hilburn 2013a].

The DHS competency model was developed independently from the SEI’s competency model. The structure of the DHS model provides insight into how competency models can differ from one another, depending on the model developers, the intended audience, and so on. There is no single “correct” software assurance competency model; however, use of a competency model benefits organizations, projects, and individuals.

4.3.1 Purpose

The DHS model [DHS 2012] is designed to serve the following needs:

• Promote and enable the security and resilience of software throughout the lifecycle through interagency and public–private collaboration.

• Provide a means to reduce exploitable software weaknesses and improve capabilities that routinely develop, acquire, and deploy resilient software products.

• Focus the development and publishing of software security content and SwA curriculum courseware on integrating software security content into relevant education and training programs.

• Enable software security automation and measurement capabilities.

4.3.2 Organization of Competency Areas

The DHS organizes its model around a set of “specialty areas” aligned with the National Initiative for Cybersecurity Education (NICE), corresponding to the range of areas in which the DHS has interest and responsibility:

• Software Assurance and Security Engineering

• Information Assurance Compliance

• Enterprise Architecture

• Technology Demonstration

• Education and Training

• Strategic Planning and Policy Development

• Knowledge Management

• Cyber Threat Analysis

• Vulnerability Assessment and Management

• Systems Requirements Planning

4.3.3 SwA Competency Levels

The DHS model designates four “proficiency” levels for which competencies are specified for each specialty area:

• Level 1—Basic—Understands the subject matter and is seen as someone who can perform basic or developmental-level work in activities requiring this specialty.

• Level 2—Intermediate—Can apply the subject matter and is considered someone who has the capability to fully perform work that requires application of this specialty.

• Level 3—Advanced—Can analyze the subject matter and is seen as someone who can immediately contribute to the success of work requiring this specialty.

• Level 4—Expert—Can synthesize/evaluate the subject matter and is looked to as an expert in this specialty.

4.3.4 Behavioral Indicators

For each specialty area, the DHS describes, for each level, how the competency manifests itself in observable on-the-job behavior; these descriptions are called behavioral indicators.

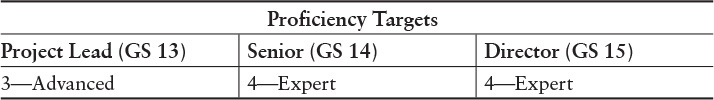

The description of each specialty area also designates proficiency targets (which identify the proficiency at which a person in a specific career level should be performing) and aligns with the behavioral indicator descriptions for the specialty area. For example, the Software Assurance and Security Engineering specialty area designates the targets depicted in Table 4.1.
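One way to picture how proficiency levels and proficiency targets fit together is a small sketch like the one below. It assumes the four DHS levels can be treated as an ordered scale. The career-level names and target values are hypothetical placeholders, not the contents of Table 4.1.

```python
from enum import IntEnum


class Proficiency(IntEnum):
    """The four DHS proficiency levels, ordered so they can be compared."""
    BASIC = 1
    INTERMEDIATE = 2
    ADVANCED = 3
    EXPERT = 4


# Hypothetical targets for illustration only. The career-level names and
# the values assigned to them are assumptions, NOT the data in Table 4.1.
HYPOTHETICAL_TARGETS = {
    "entry": Proficiency.BASIC,
    "mid": Proficiency.INTERMEDIATE,
    "senior": Proficiency.ADVANCED,
}


def meets_target(observed: Proficiency, career_level: str) -> bool:
    """Return True if the observed proficiency is at or above the target."""
    return observed >= HYPOTHETICAL_TARGETS[career_level]


if __name__ == "__main__":
    print(meets_target(Proficiency.INTERMEDIATE, "senior"))  # False
    print(meets_target(Proficiency.EXPERT, "mid"))           # True
```

Using an ordered enumeration keeps the comparison explicit: a person performing above the target for a career level still satisfies it.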

4.3.5 National Initiative for Cybersecurity Education (NICE)

As noted above, the DHS organizes its model around a set of “specialty areas” aligned with the National Initiative for Cybersecurity Education (NICE). A major government initiative in cyber security work descriptions and training, the National Initiative for Cybersecurity Careers and Studies (NICCS) supporting NICE provides the following insight:5

5. https://niccs.us-cert.gov/home/about-niccs

An important element of the initiative is the National Cybersecurity Workforce Framework:6

6. https://niccs.us-cert.gov/training/tc/framework

The Workforce Framework organizes cyber security into seven high-level categories, each comprising several specialty areas. The seven categories are Securely Provision, Operate and Maintain, Protect and Defend, Investigate, Collect and Operate, Analyze, and Oversight and Development.

The SEI’s Software Assurance Curriculum Model heavily influenced the Securely Provision section of the NICE framework. Securely Provision includes the following specialty areas: Information Assurance Compliance, Software Assurance and Security Engineering, Systems Development, Systems Requirements Planning, Systems Security Architecture, Technology Research and Development, and Test and Evaluation.

The framework additionally provides a discussion of knowledge, skills, and abilities; competencies; and tasks in a searchable database. NICE hosts periodic workshops and maintains a training catalog.7

7. https://niccs.us-cert.gov/training/tc/search

4.4 The SEI Software Assurance Competency Model8

8. This section is drawn from Software Assurance Competency Model [Hilburn 2013a].

In the SEI Software Assurance Competency Model, the term competency represents the set of knowledge, skills, and effectiveness needed to carry out the job activities associated with one or more roles in an employment position [IEEE-CS 2014]:

• Knowledge is what an individual knows and can describe (e.g., can name and define various classes of risks).

• Skills are what an individual can do that involves application of knowledge to carry out a task (e.g., can identify and classify the risks associated with a project).

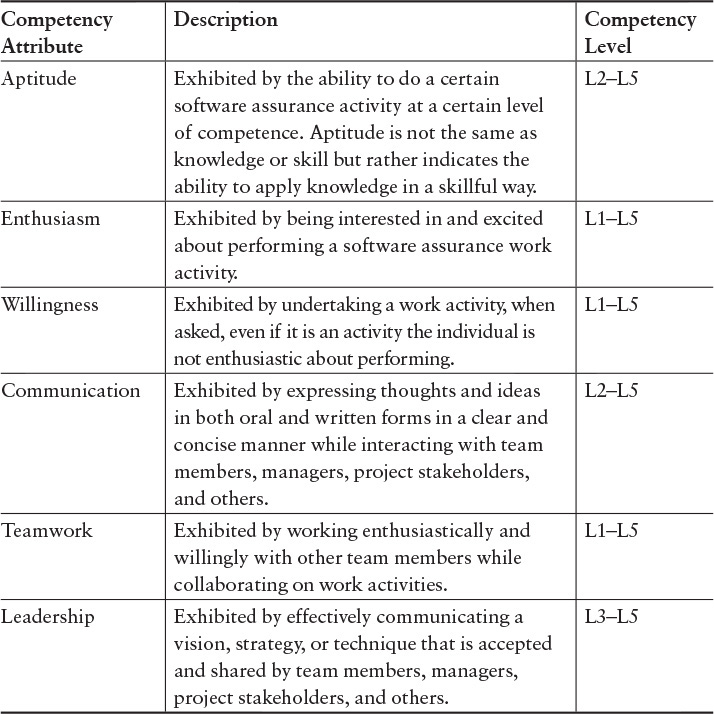

• Effectiveness is concerned with the ability to apply knowledge and skills in a productive manner, characterized by attributes of behavior such as aptitude, initiative, enthusiasm, willingness, communication skills, team participation, and leadership.

As noted above, in the process of developing the SEI’s Software Assurance Competency Model, the authors studied and analyzed a number of other competency models and supporting material. A key reference for the SwA Competency Model is the Master of Software Assurance (MSwA) Reference Curriculum [Mead 2010a]. The curriculum underwent both internal and public review and was endorsed by both the ACM and the IEEE Computer Society as being appropriate for a master’s degree in software assurance. The curriculum document includes a mapping of the software assurance topic areas to GSwE2009 [Stevens Institute of Technology 2009], thus providing a comparison to software engineering knowledge areas. Since then, elements of the curriculum have been adopted by various universities, including the Air Force Academy [Hadfield 2011, 2012], Carnegie Mellon University, and Stevens Institute of Technology, and notably by (ISC)², a training and certification organization. As noted below, the MSwA curriculum was the primary source for the knowledge and skills used in the competency model for various levels of professional competency.

The Software Assurance Competency Model provides employers of software assurance personnel with a means to assess the software assurance capabilities of current and potential employees. In addition, along with the MSwA reference curriculum, this model is intended to guide academic or training organizations in the development of education and training courses to support the needs of organizations that are hiring and developing software assurance professionals.

The SwA Competency Model enhances the guidance of software engineering curricula by providing information about industry needs and expectations for competent security professionals [Mead 2010a, 2010c, 2011b]; the model also provides software assurance professionals with direction and a progression for development and career planning. Finally, a standard competency model provides support for professional certification activities.

4.4.1 Model Features

Professional competency models typically feature competency levels, which distinguish between what’s expected in an entry-level position and what’s required in more senior positions.

In the software assurance competency model, five levels (L1–L5) of competency distinguish different levels of professional capability relative to knowledge, skills, and effectiveness [IEEE-CS 2014]. Individuals can use the competency levels to assess the extent and level of their capability and to guide their preparation for software security work:

• L1—Technician

• Possesses technical knowledge and skills, typically gained through a certificate or an associate degree program or equivalent knowledge and experience.

• May be employed in system operator, implementer, tester, and maintenance positions with specific individual tasks assigned by someone at a higher hierarchy level.

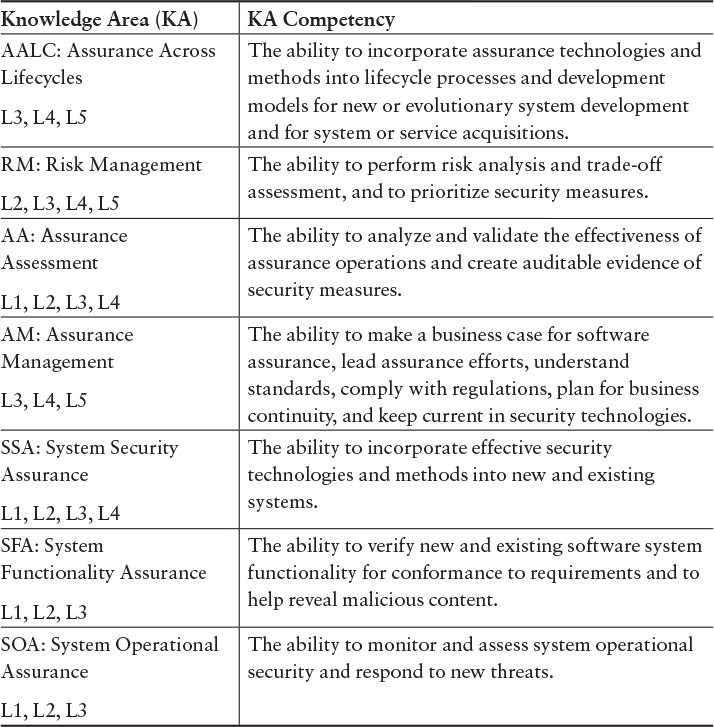

• Main areas of competency are System Operational Assurance (SOA), System Functional Assurance (SFA), and System Security Assurance (SSA) (see Table 4.2).

• Major tasks: low-level implementation, testing, and maintenance.

• L2—Professional Entry Level

• Possesses “application-based” knowledge and skills and entry-level professional effectiveness, typically gained through a bachelor’s degree in computing or through equivalent professional experience.

• May perform all tasks of L1 and, additionally, manage a small internal project, supervise and assign subtasks for L1 personnel, supervise and assess system operations, and implement commonly accepted assurance practices.

• Main areas of competency are SFA, SSA, and Assurance Assessment (AA) (see Table 4.2).

• Major tasks: requirements fundamentals, module design, and implementation.

• L3—Practitioner

• Possesses breadth and depth of knowledge, skills, and effectiveness beyond the L2 level and typically has 2 to 5 years of professional experience.

• May perform all tasks of L2 personnel and, additionally, set plans, tasks, and schedules for in-house projects; define and manage such projects; supervise teams on the enterprise level; report to management; assess the assurance quality of a system; and implement and promote commonly accepted software assurance practices.

• Main areas of competency are Risk Management (RM), AA, and Assurance Management (AM) (see Table 4.2).

• Major tasks: requirements analysis, architectural design, tradeoff analysis, and risk assessment.

• L4—Senior Practitioner

• Possesses breadth and depth of knowledge, skills, and effectiveness and a variety of work experiences beyond L3, with 5 to 10 years of professional experience and advanced professional development at the master’s level or with equivalent education/training.

• May perform all tasks of L3 personnel and identify and explore effective software assurance practices for implementation, manage large projects, interact with external agencies, etc.

• Main areas of competency are RM, AA, AM, and Assurance Across Lifecycles (AALC) (see Table 4.2).

• Major tasks: assurance assessment, assurance management, and risk management across the lifecycle.

• L5—Expert

• Possesses competency beyond L4; advances the field by developing, modifying, and creating methods, practices, and principles at the organizational level or higher; has peer/industry recognition.

• Typically includes a low percentage of an organization’s workforce within the SwA profession (e.g., 2% or less).
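The level descriptions above can be captured in a small lookup structure, which may help when comparing levels side by side. The following is a minimal sketch, not part of the model itself: the class and function names are illustrative assumptions, and only the level titles, main areas of competency, and experience ranges stated in the list above are encoded.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class CompetencyLevel:
    code: str
    title: str
    main_areas: Tuple[str, ...]                     # knowledge-area abbreviations
    experience_years: Optional[Tuple[int, int]] = None  # typical range, if stated


# Values transcribed from the level descriptions above; the text lists no
# main areas for L5 and no experience range for L1, L2, or L5.
LEVELS = (
    CompetencyLevel("L1", "Technician", ("SOA", "SFA", "SSA")),
    CompetencyLevel("L2", "Professional Entry Level", ("SFA", "SSA", "AA")),
    CompetencyLevel("L3", "Practitioner", ("RM", "AA", "AM"), (2, 5)),
    CompetencyLevel("L4", "Senior Practitioner", ("RM", "AA", "AM", "AALC"), (5, 10)),
    CompetencyLevel("L5", "Expert", ()),
)


def levels_emphasizing(area: str) -> List[str]:
    """Return the codes of levels that list `area` as a main area of competency."""
    return [level.code for level in LEVELS if area in level.main_areas]


if __name__ == "__main__":
    print(levels_emphasizing("AA"))    # ['L2', 'L3', 'L4']
    print(levels_emphasizing("AALC"))  # ['L4']
```

A query such as `levels_emphasizing("SSA")` shows at a glance that System Security Assurance is a main area for the two lowest levels, while risk and assurance management become the focus from L3 upward.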

4.4.2 SwA Knowledge, Skills, and Effectiveness

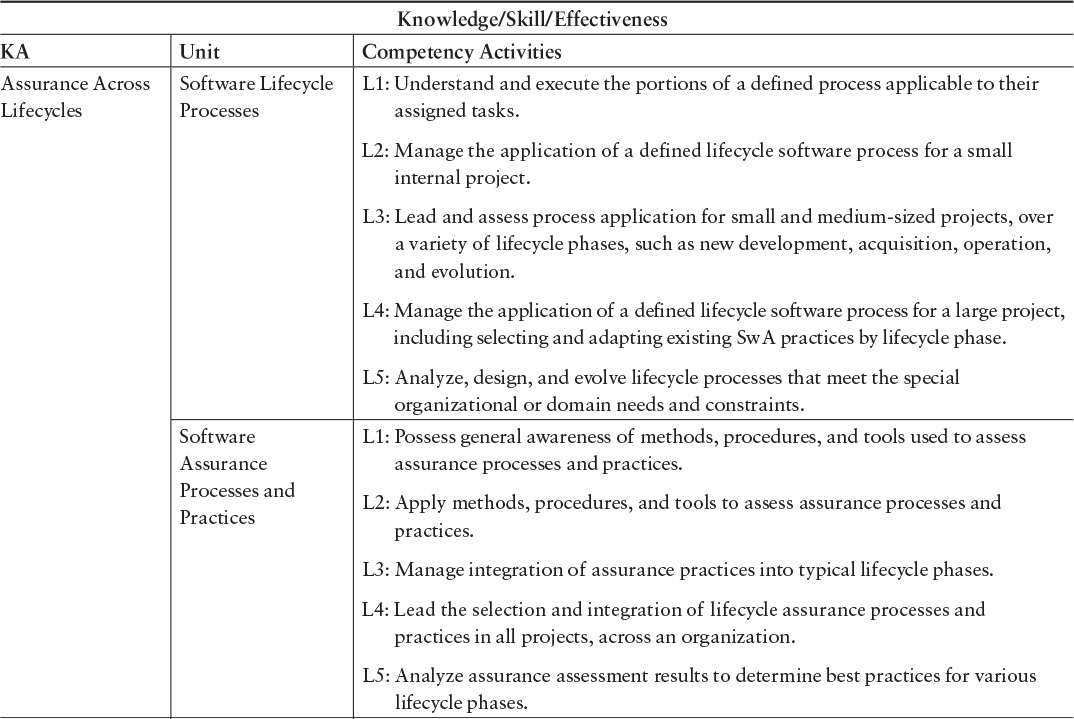

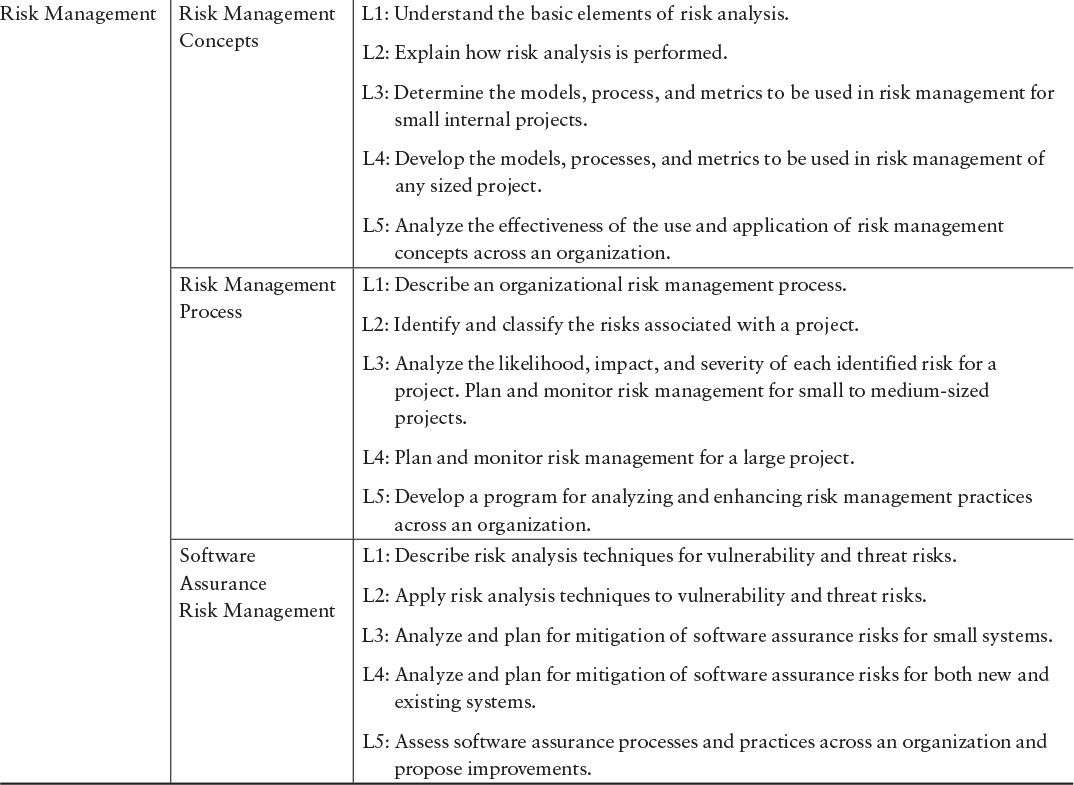

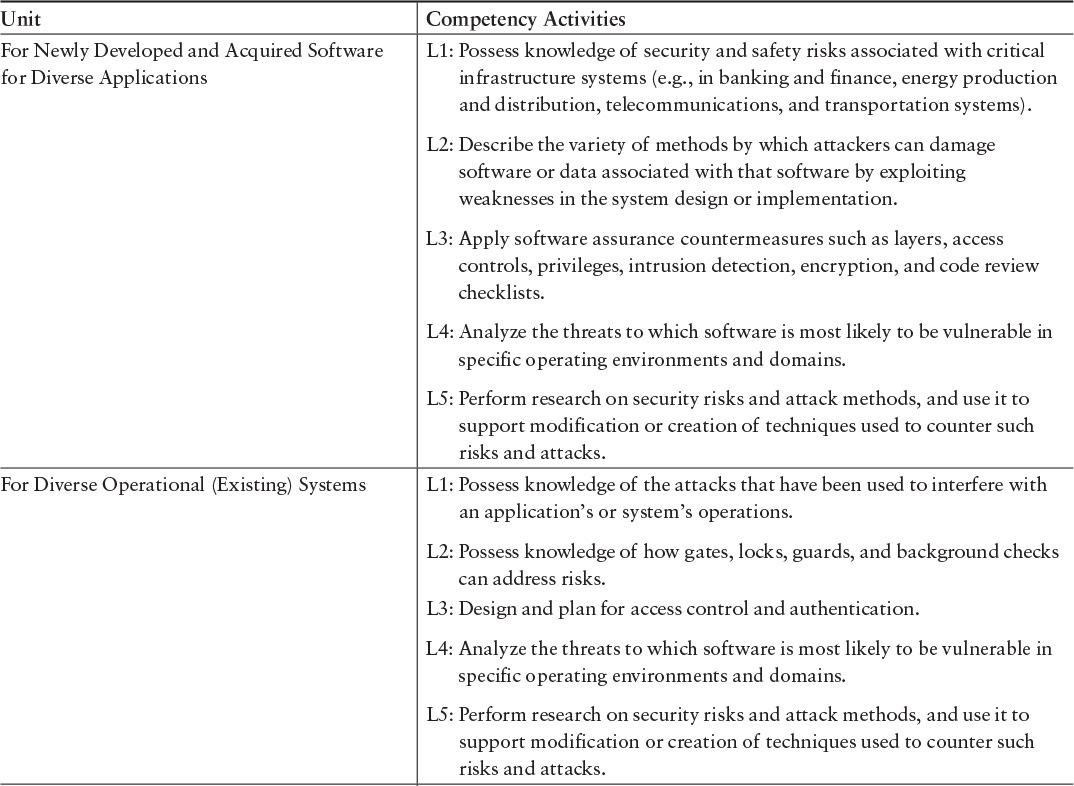

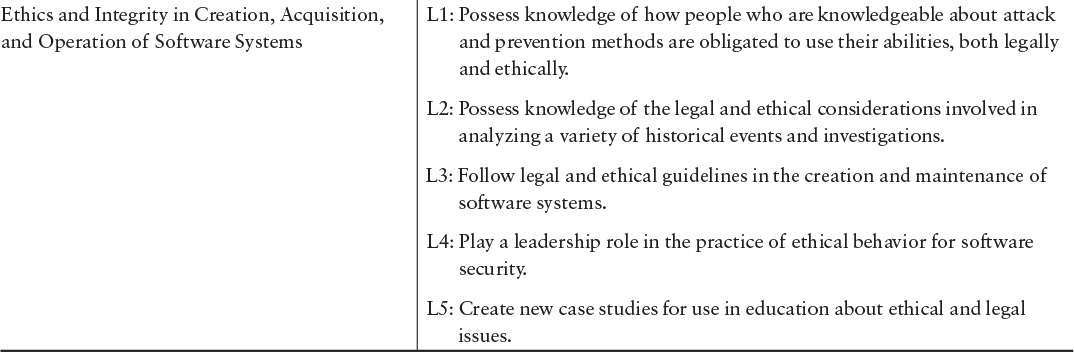

The primary source for SwA Competency Model knowledge and skills is the Core Body of Knowledge (CorBoK), contained in Software Assurance Curriculum Project, Volume I: Master of Software Assurance Reference Curriculum [Mead 2010a]. The CorBoK consists of the knowledge areas listed in Table 4.2. Each knowledge area is further divided into second-level units, as shown later in this chapter, in Table 4.5. For each unit, competency activities are described for each of the levels L1–L5.

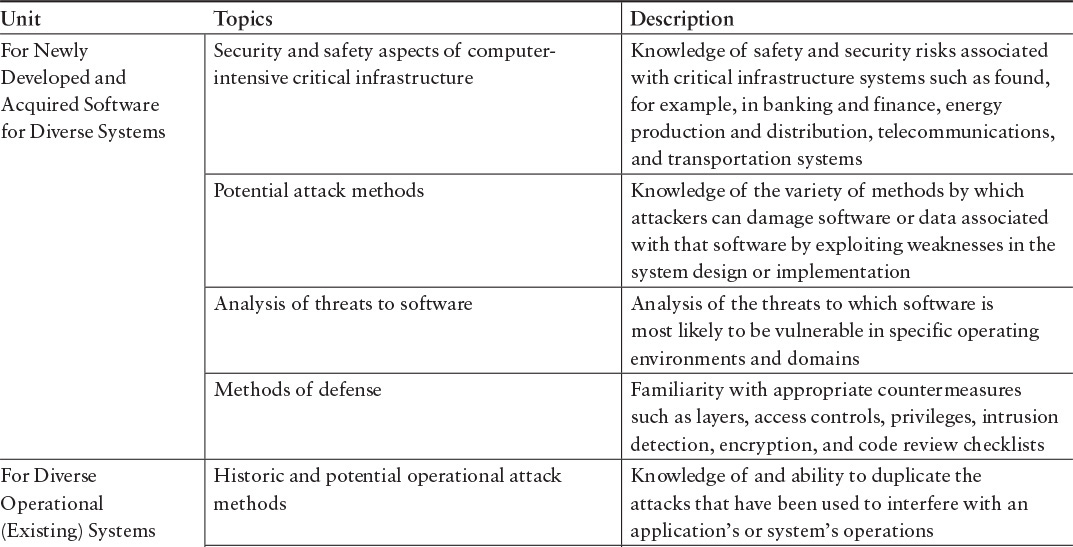

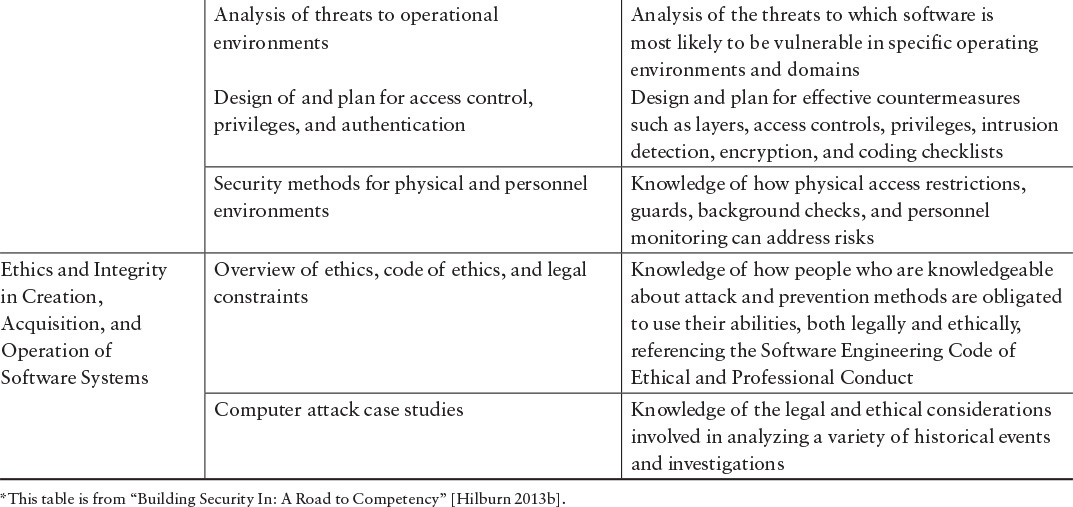

The CorBoK specifies the knowledge areas (KAs) in greater detail, as illustrated by the specification of the System Security Assurance KA in Table 4.3.

Other than the unit “Ethics and Integrity” in the System Security Assurance Knowledge Area shown in Table 4.3, the CorBoK does not contain topics on competency associated with effectiveness; the effectiveness attributes are listed in Table 4.4 (adapted from [IEEE-CS 2014]). In Table 4.4, for a given attribute, there is no differentiation in effectiveness for the different competency levels; however, professionals would be expected to show an increase in the breadth and depth of capability in these areas of effectiveness as they proceed through their careers and move to higher competency levels.

*This table is from “Building Security In: A Road to Competency” [Hilburn 2013b].

4.4.3 Competency Designations

Table 4.5 presents a portion of the CorBoK knowledge areas and second-level units, along with a description of the appropriate knowledge and skills for each competency level and the effectiveness attributes. The complete table can be found in the competency report [Hilburn 2013a]. A designation of L1 applies to levels L1 through L5; a designation of L2 applies to L2 through L5; and so on. The level descriptions indicate the competency activities that are demonstrated at each level.
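The designation rule described above (a designation of L1 applies to L1 through L5, L2 to L2 through L5, and so on) can be sketched in a few lines. The function names and the sample activity labels below are hypothetical; the real activities and their designations are those in Table 4.5 and the competency report.

```python
from typing import Dict, List

LEVEL_ORDER = ["L1", "L2", "L3", "L4", "L5"]


def applicable_levels(designation: str) -> List[str]:
    """A designation of Lk applies to Lk and every higher level."""
    start = LEVEL_ORDER.index(designation)
    return LEVEL_ORDER[start:]


def activities_expected_at(level: str, designations: Dict[str, str]) -> List[str]:
    """Return the activities whose designation is at or below `level`."""
    rank = LEVEL_ORDER.index(level)
    return sorted(
        activity
        for activity, designation in designations.items()
        if LEVEL_ORDER.index(designation) <= rank
    )


if __name__ == "__main__":
    # Hypothetical activity labels, for illustration only.
    sample = {"activity A": "L1", "activity B": "L3", "activity C": "L4"}
    print(applicable_levels("L2"))                # ['L2', 'L3', 'L4', 'L5']
    print(activities_expected_at("L3", sample))   # ['activity A', 'activity B']
```

Read this way, the designations are cumulative: a professional at a given level is expected to demonstrate every activity designated at that level or below.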

4.4.4 A Path to Increased Capability and Advancement9

9. This section is drawn from “Building Security In: A Road to Competency” [Hilburn 2013b].

The SwA Competency Model can provide direction on professional growth and career advancement. Each competency level assumes competency at the lower levels. The model also provides a comprehensive mapping between the CorBoK (KAs and units) and the competency levels. The complete mapping can be found in Appendix D, “The Software Assurance Competency Model Designations.” Table 4.6 illustrates this mapping for the System Security Assurance KA.

4.4.5 Examples of the Model in Practice10

10. This section is drawn from The Software Assurance Competency Model: A Roadmap to Enhance Individual Professional Capability [Mead 2013a].

There are a number of ways the Software Assurance Competency Model can be applied in practice. An organization in which software assurance is critical could use the type of information in Table 4.6 to do all of the following:

• Structure its software assurance needs and expectations

• Assess its software assurance personnel’s capability

• Provide a roadmap for employee advancement

• Use as a basis for software assurance professional development plans

For example, an organization intending to hire an entry-level software assurance professional could examine the L1–L2 levels and incorporate elements of them into job descriptions. These levels could also be used during the interview process by both the employer and the prospective employee to assess the actual expertise of the candidate.

Another application is by faculty members who are developing courses in software assurance or adding software assurance elements to their software engineering courses. The levels allow faculty to easily see the depth of content that is suitable for courses at the community college, undergraduate, and graduate levels. For example, undergraduate student outcomes might be linked to the L1 and L2 levels, whereas graduate courses aimed at practitioners with more experience might target higher levels. In industry, the model could be used to determine if specific competency areas were being overlooked. These areas could point toward corresponding training needs. With a bit of effort, trainers can tailor their course offerings to the target audience. The model eliminates some of the guesswork involved in deciding what level of material is appropriate for a given course.

It can also be used by faculty who are already teaching such courses to assess whether the course material is a good fit for the target audience. The authors of this chapter are currently teaching software assurance courses and use the model to revisit and tailor their syllabi accordingly.
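The industry use mentioned above, checking whether specific competency areas are being overlooked, amounts to a simple gap analysis. The sketch below is one possible way to express it, under the assumption that each team member records a level (L1 to L5) per knowledge area. The function names, the team data, and the required levels are all hypothetical.

```python
from typing import Dict, Optional, Tuple


def rank(level: str) -> int:
    """Convert a level code such as 'L3' to its numeric rank (3)."""
    return int(level[1:])


def competency_gaps(
    required: Dict[str, str],
    team: Dict[str, Dict[str, str]],
) -> Dict[str, Tuple[str, Optional[str]]]:
    """Map each under-covered knowledge area to (required level, best level held).

    An area counts as covered when at least one team member holds the
    required level or higher. The best level held is None when nobody on
    the team has any recorded level in that area.
    """
    gaps = {}
    for area, needed in required.items():
        held = [skills[area] for skills in team.values() if area in skills]
        best = max(held, key=rank, default=None)
        if best is None or rank(best) < rank(needed):
            gaps[area] = (needed, best)
    return gaps


if __name__ == "__main__":
    # Hypothetical project requirements and team assessments.
    required = {"RM": "L3", "SSA": "L2", "AA": "L3", "AM": "L4"}
    team = {
        "engineer_a": {"RM": "L3", "SSA": "L1", "AM": "L3"},
        "engineer_b": {"SSA": "L2"},
    }
    print(competency_gaps(required, team))
    # {'AA': ('L3', None), 'AM': ('L4', 'L3')}
```

The areas returned point toward the corresponding training or hiring needs. Treating an area as covered when a single person meets the level is a simplifying assumption; an organization might instead require a minimum head count per area.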

4.4.6 Highlights of the SEI Software Assurance Competency Model11

11. This section is drawn from The Software Assurance Competency Model: A Roadmap to Enhance Individual Professional Capability [Mead 2013a].

The Software Assurance Competency Model was developed to create a foundation for assessing and advancing the capability of software assurance professionals. The span of competency levels L1 through L5 and the decomposition into individual competencies based on the knowledge and skills described in the SwA CorBoK [Mead 2010a] provide the detail necessary for an organization or individual to determine SwA competency across the range of knowledge areas and units. The model also provides a framework for an organization to adapt its features to the organization’s particular domain, culture, or structure.

The model was reviewed by invited industry reviewers and mapped to actual industry positions. These mappings are included in the SEI’s report; the model also underwent public review prior to publication. Dick Fairley, chair of the Software and Systems Engineering Committee of the IEEE Computer Society (IEEE-CS) Professional Activities Board (PAB), endorsed the SEI Software Assurance Competency Model “as appropriate for software assurance roles and consistent with A Framework for PAB Competency Models.”12 In presentations and webinars delivered by the author on software assurance, only about half of the participants had a plan for their own SwA competency development. However, more than 80% said they could use the SwA Competency Model in staffing a project.

12. http://www.cert.org/news/article.cfm?assetid=91675&article=156&year=2014.

The most important outcome of this model is a better trained and educated workforce. As the needs of the software industry for more secure applications continue, the recommendations of this model can be used to ensure better and more trustworthy practice in the process of developing and sustaining an organization’s software assets. That guidance going forward is a linchpin in the overall effort to create trusted systems and provides the necessary reference to allow organizations and individuals to help achieve cyber security.

4.5 Summary

This chapter discussed the SEI’s Software Assurance Competency Model in detail. It also discussed the DHS Competency Model and the related NICE framework, and it provided pointers to a number of other competency models in the literature. Competency models can be useful in many fields. In software assurance, these models are particularly useful for organizations and individuals trying to assess and improve their own software assurance skills. We recommend that you peruse these models and select one or more for your individual and organizational use. Competency models are essential for gap analysis and development of an overall software assurance improvement plan.

Competency models need to evolve as new methods are developed. Some newer technical topics are discussed in more depth in Chapter 7, “Special Topics in Cyber Security Engineering.” These topics include DevOps, a convergence of concerns from both the development and operations communities, and MORE, a research project that uses malware analysis—an operational technique—to help identify overlooked security requirements to include in future systems.