Operationalizing Decision Equity: The Importance of Flowprinting

One of the most common challenges in operationalizing decision equity is what statisticians call “model misspecification.” A simple example illustrates the point. Consider two cardiologists, each of whom has very favorable perceptions of drug A, a statin used to lower patients’ cholesterol. When the pharmaceutical company surveyed these physicians, both reported very favorable perceptions of drug A, of the company’s sales force, and of the company itself. However, the scripting data revealed very different scripting levels: one of them wrote twice as many scripts (prescriptions) for drug A as the other. Could the model be missing something? After all, it seems unlikely that two physicians with similar perceptions of a drug would exhibit such different scripting behavior. If the observed relationship between perceptions and behavior omits some other critical source of information that could explain the discrepancy, then the current model is perhaps misspecified. Such misspecification can have serious implications for estimates of decision equity. For instance, if the firm were to invest in an advertising strategy to better communicate the drug’s benefits to cardiologists, should it use the scripting behavior of the first or the second physician as the baseline, or should it average the two?

We recently encountered a very similar situation. The decision makers wanted to launch a promotional campaign to better communicate the efficacy and minimal side effects of their drug, and to measure the decision equity associated with this promotional investment. However, they saw evidence similar to that described above: there seemed to be only a weak relationship between physicians’ perceptions of the drug, which the campaign was meant to enhance, and their scripting behavior. As we worked through the issue during the flowprinting sessions, four very important considerations emerged.

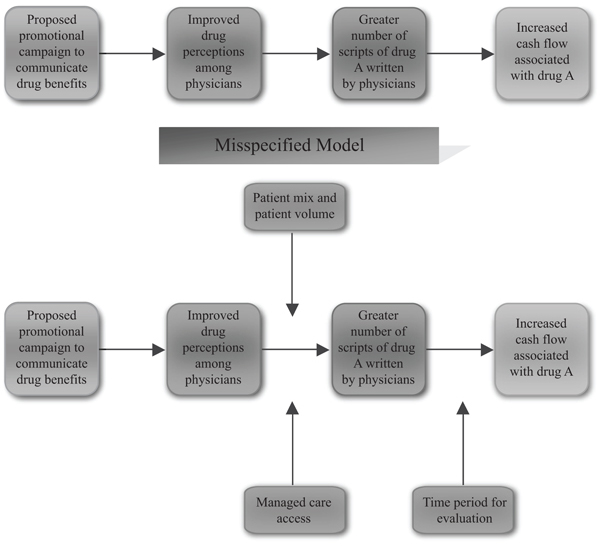

First, there was internal evidence in the organization that a cardiologist’s years of experience with drug A can influence the volume of scripts he or she writes. Earlier research had shown that as doctors gain more experience with the drug, their propensity to prescribe it increases. It was therefore possible that differences in scripting behavior across physicians were driven by their experience with the drug. Although the manufacturer could do little to influence this driver of scripting, it still had an important implication for the firm: experience had to be accounted for before the effect of perceptions could be isolated. Second, it was also hypothesized, with good empirical support, that each cardiologist’s patient mix can influence his or her scripting of the drug, because clinical trials had shown drug A to work better in certain patient demographics. Therefore, even two cardiologists with identical perceptions of the drug and similar years of experience with it could exhibit very different scripting behaviors because of their patient mix, which in turn might depend on the neighborhood where they practiced. A third consideration was simply the number of patients each cardiologist sees. Cardiologists who spend more time in research and less in clinical practice might write fewer scripts for the entire category of drugs than cardiologists who spend more time with patients. Thus two physicians with similar perceptions of, and experience with, the drug and similar patient mixes could still differ in the number of scripts they write for drug A, simply because they handle different volumes of patients. Last but not least, managed care (that is, the role of health insurance companies) also emerged as a very important consideration. Even if the two hypothetical physicians in our case study were identical on all three criteria listed earlier, they might be dealing with different managed care providers and therefore have different degrees of access to drug A. This would have a substantial impact on the number of scripts they write for drug A (see Figure 8.1).

Figure 8.1. Model misspecification.

In our example, we could have computed decision equity relative to a baseline either with or without model misspecification. A misspecified estimate would link investments in drug promotion to improvements in physicians’ perceptions of the drug, then link those perceptual changes to scripting behavior, and finally link scripting to the cash flows these scripts generate over time. This would be a classic case of “model misspecification”: we would have estimated these relationships while ignoring many other important influencers that affect the computation of decision equity. Misspecified models can produce estimates of decision equity that are inflated or deflated, and there is no way to determine the degree of error unless the other relevant influencers are included in the estimation. A more complete method includes measures of each relevant influencer in the model used to compute the decision equity of the proposed investment. In our case, this meant obtaining data on how long each cardiologist had been practicing and prescribing drug A, on their patient mix and patient volume, and on managed care access for drug A and competing drugs. With all these factors included, the estimated returns on investing in a drug promotional campaign were more accurate and gained greater acceptance among stakeholders.
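The inflation described above is what econometricians call omitted-variable bias, and a small simulation can make it concrete. The Python sketch below uses invented data and is not the analysis from this engagement: it assumes, purely for illustration, that scripts depend on perception and on patient volume with made-up coefficients of 2.0 and 3.0, and that physicians with higher patient volume also tend to hold more favorable perceptions. The function names (`simulate_physicians`, `simple_slope`, `two_regressor_slopes`) are hypothetical helpers written for this example.

```python
import random
from typing import List, Tuple


def simulate_physicians(n: int, seed: int = 0) -> List[Tuple[float, float, float]]:
    """Generate hypothetical (perception, patient_volume, scripts) rows.

    All coefficients are illustrative assumptions, not estimates from real data.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        volume = rng.gauss(0.0, 1.0)                     # standardized patient volume
        perception = 0.6 * volume + rng.gauss(0.0, 0.8)  # perceptions correlated with volume
        scripts = 2.0 * perception + 3.0 * volume + rng.gauss(0.0, 1.0)
        rows.append((perception, volume, scripts))
    return rows


def mean(xs: List[float]) -> float:
    return sum(xs) / len(xs)


def simple_slope(x: List[float], y: List[float]) -> float:
    """OLS slope of y on x alone: the misspecified, perception-only model."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def two_regressor_slopes(x1: List[float], x2: List[float],
                         y: List[float]) -> Tuple[float, float]:
    """OLS slopes of y on x1 and x2 jointly, solving the centered 2x2 normal equations."""
    m1, m2, my = mean(x1), mean(x2), mean(y)
    d1 = [a - m1 for a in x1]
    d2 = [b - m2 for b in x2]
    dy = [c - my for c in y]
    s11 = sum(a * a for a in d1)
    s22 = sum(b * b for b in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s1y = sum(a * c for a, c in zip(d1, dy))
    s2y = sum(b * c for b, c in zip(d2, dy))
    det = s11 * s22 - s12 * s12
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


if __name__ == "__main__":
    data = simulate_physicians(5000)
    perception = [row[0] for row in data]
    volume = [row[1] for row in data]
    scripts = [row[2] for row in data]
    print("perception-only slope:", round(simple_slope(perception, scripts), 2))
    print("slope controlling for volume:",
          round(two_regressor_slopes(perception, volume, scripts)[0], 2))
```

Because perception and volume are correlated in the simulated data, the perception-only slope absorbs part of the volume effect and should land near 3.8 rather than the assumed 2.0 (under these assumptions the bias is 3.0 × 0.6 / 1.0 = 1.8). Were the correlation negative, the same mechanism would deflate the estimate instead.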

The main thesis of this discussion is that operationalizing the decision equity metric requires a deep understanding of the linkages among the many moving parts that can influence, or be influenced by, a proposed decision. Organizations and decision makers need to avoid model misspecification; otherwise the estimated strength of the relationships in the flowprints will be biased, and the exercise will not generate buy-in from internal champions. We find that misspecification can largely be avoided through careful thought and investment in the up-front flowprinting session. As discussed earlier, two key benefits of flowprinting are (a) recognition of the relationships among the various interconnected and nonstatic organizational silos and (b) buy-in from the relevant decision makers in these silos. Drawing on the experience of these decision makers in a flowprint session, and thereby minimizing the odds of excluding important measures, helps achieve both objectives. In all our decision equity engagements, we increasingly insist on having the right audience for the flowprint session. We also insist that the firm invest serious energy in designing a model that has face validity among executives and does not exclude any important variable from the estimation of the decision equity metric. The analyst’s job is then to collate the relevant data on these connected parts and explore the linkages among them. When the results are presented to the decision makers, the research team essentially retells the story line articulated in the flowprinting session, but with validation from real data. The flowprinting process also adds confidence to the estimates of decision equity, because they rest on a more thorough evaluation of the measures that need to be incorporated in the models.

Minimizing the exclusion of critical factors from the models requires careful discussion of at least three points. We have observed that these points apply across industries and that, while their individual importance may vary across contexts, their relevance does not.

Coverage of Multiple Domains

As we have noted earlier, the flowprints associated with a single strategic choice can be complex and run through multiple functional domains. Computing decision equity requires assessing the cumulative changes in costs, revenues, and cash flows associated with each domain and integrating them into a single decision equity measure. For example, a large utility provider was exploring the concept of integrated customer centricity. Previously, the firm’s senior management had received multiple resource allocation requests that were in line with the concept but were scattered and unrelated to one another. These included requests for more feet on the street for repairs, greater investment in weather forecasting technology, and investments for more power and a more uniform load. The president of the company, however, asked everyone to step back and explore customer centricity from an integrated perspective, an approach that would give him an overarching equity value for implementing the concept. The subsequent evaluation of both residential and business customers produced a comprehensive list of touch points, ranging from tree trimming to the move-in experience and from call center interactions to billing perceptions. Estimating the equity of the decision to be customer centric therefore required evaluating costs and revenues across multiple marketing, operations, and customer service domains, and ultimately led to an integrated, unified approach to selecting a portfolio of actions.
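Integrating domain-level changes into one number amounts to netting incremental revenues against incremental costs in every domain, period by period, and discounting the result to a present value. The sketch below assumes annual periods, end-of-year cash flows, and a single discount rate; the domain names, the figures in `DOMAINS`, and the helper functions are hypothetical and do not come from the utility engagement.

```python
from typing import Dict, List

# Hypothetical incremental cash flows (e.g., in $ millions) for years 1-3, by domain.
# Neither the domains nor the numbers come from the engagement described in the text.
DOMAINS: Dict[str, Dict[str, List[float]]] = {
    "marketing": {"revenue": [50.0, 80.0, 100.0], "cost": [40.0, 20.0, 20.0]},
    "operations": {"revenue": [0.0, 30.0, 40.0], "cost": [60.0, 10.0, 10.0]},
    "customer_service": {"revenue": [20.0, 40.0, 50.0], "cost": [30.0, 30.0, 30.0]},
}


def present_value(cash_flows: List[float], rate: float) -> float:
    """Discount end-of-period cash flows for periods 1, 2, ... back to today."""
    return sum(cf / (1.0 + rate) ** t for t, cf in enumerate(cash_flows, start=1))


def net_by_period(domains: Dict[str, Dict[str, List[float]]]) -> List[float]:
    """Sum incremental revenue minus incremental cost across all domains, period by period."""
    horizon = max(len(series) for flows in domains.values() for series in flows.values())
    net = [0.0] * horizon
    for flows in domains.values():
        for t, revenue in enumerate(flows.get("revenue", [])):
            net[t] += revenue
        for t, cost in enumerate(flows.get("cost", [])):
            net[t] -= cost
    return net


def decision_equity(domains: Dict[str, Dict[str, List[float]]], rate: float) -> float:
    """Single decision equity figure: present value of the integrated net cash flows."""
    return present_value(net_by_period(domains), rate)


if __name__ == "__main__":
    print("net cash flow by year:", net_by_period(DOMAINS))
    print("decision equity at 10%:", round(decision_equity(DOMAINS, 0.10), 2))
```

With these hypothetical figures, year 1 is a net outflow of 60 that is more than recovered in years 2 and 3. Keeping the per-domain detail separate until the final netting step makes it easy to see which domain drives the total.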

Importance of Time

Because changes in future cash flows form the basis for computing decision equity, it is important to define what “future” really means. The time frame chosen to represent the future often determines whether equity turns out to be favorable and therefore whether a decision appears worth making. For example, a financial institution we worked with undertook serious cost-cutting measures to boost its profits. One of these measures, in its retail operations, reduced the number of tellers at each branch. The bank also started charging fees for certain services that had previously been free.

Not long afterward, customers began noticing the reduced number of tellers, the longer waiting lines, and the higher fees. Because competing banks had not taken similar measures, customers began defecting in search of lower fees and better service. The customer satisfaction tracking system alerted management to the drop in satisfaction scores. However, many managers scoffed at the new numbers and pointed to the inverse relationship between rising profits and falling satisfaction scores; some even argued that the satisfaction numbers were perhaps wrong. In any case, had the equity associated with the decision to reduce tellers and raise fees been computed over a relatively short time frame, it would have looked positive and would have supported the decision. The cost savings were virtually instantaneous, whereas the revenue impact of customer defections unfolded over a longer time horizon. Over the next few months, however, the benefit of the cost savings dried up and the revenue lost to customer defections overwhelmed the bank. As expected, over this longer time horizon the equity associated with the original decision did not look positive.

We observe similar time dependencies in many markets. Some consequences of a decision have a financial impact within very short periods, while others unfold over a longer time horizon. At a computer hardware products company, for example, we found that the timing of the effects of similar actions differed even across customer segments. In the household segment, the benefit of a positive action typically appeared 3 to 4 years after the initial purchase, when the household made a repurchase. For small businesses, however, the effects showed up within weeks. Finally, for large businesses, some benefits from service appeared on a continuous basis, in addition to large benefits that materialized only at contract renewal. It is therefore important for firms to be sensitive to time frames when computing decision equity or acting on equity-driven choices. One way to address this problem is to conduct scenario analyses with varying time frames. Managers can then evaluate the sensitivity of decision equity to the choice of time frame and assess the risk inherent in their decision.
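A scenario analysis over time frames can be sketched by computing the same present value over several horizons. The example below is loosely modeled on the bank case but uses invented numbers: it assumes a constant cost saving of 10 per quarter, a revenue loss that grows by 4 each quarter as customers defect, and a 2% quarterly discount rate. The helper names `net_flows` and `equity_by_horizon` are hypothetical.

```python
from typing import Dict, Iterable, List


def net_flows(periods: int, savings: float, loss_step: float) -> List[float]:
    """Hypothetical net cash flow per period: a constant cost saving minus a revenue
    loss that grows by `loss_step` each period as more customers defect."""
    return [savings - loss_step * t for t in range(1, periods + 1)]


def equity_by_horizon(flows: List[float], rate: float,
                      horizons: Iterable[int]) -> Dict[int, float]:
    """Present value of the first h periods of `flows`, for each horizon h."""
    return {
        h: sum(cf / (1.0 + rate) ** t for t, cf in enumerate(flows[:h], start=1))
        for h in horizons
    }


if __name__ == "__main__":
    flows = net_flows(periods=12, savings=10.0, loss_step=4.0)
    for h, value in equity_by_horizon(flows, rate=0.02, horizons=[2, 4, 8, 12]).items():
        print(f"horizon {h:>2} quarters: decision equity {value:8.2f}")
```

Under these assumptions the decision looks positive at a 2-quarter horizon and clearly negative at 8 quarters, which is exactly the sign flip a short evaluation window would hide. Varying `rate`, `savings`, and `loss_step` alongside the horizon extends the same loop into a fuller sensitivity grid.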

Multiple Terminal Measures

While we have conceptualized decision equity as the present value of the incremental future cash flows associated with a decision, we find that firms are often more comfortable working with the traditional metrics they are used to. For example, managers at the insurance firm we alluded to earlier were more comfortable with their traditional “top line” and “bottom line” measures of revenue and margins than with an integrated decision equity measure. Very often, a firm’s ability to compute decision equity as a cash flow or bottom-line metric is also constrained by the unavailability of such financial metrics at the individual customer level. Other organizations prefer metrics such as economic growth, profit growth, or return on investment. Yet others use industry-specific metrics, such as revenue per available room in the hospitality sector or sales per square foot in the retail sector. Although migrating to the most appropriate metric may take multiple steps, these firms nevertheless benefit enormously from linkage analysis and from discovering the relationships between alternative actions and their downstream consequences. The concept of decision equity remains highly applicable, and it is critical to remember that, irrespective of the choice of downstream metric, decision makers are ultimately interested in comparing noncomparable actions using a common set of measures.