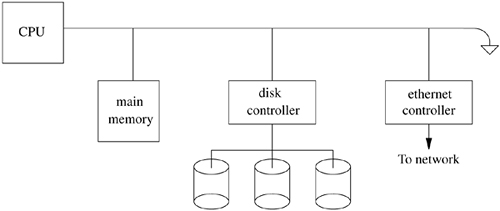

A central component of any operating system is the memory-management system. As the name implies, memory-management facilities are responsible for the management of memory resources available on a machine. These resources are typically layered in a hierarchical fashion, with memory-access times inversely related to their proximity to the CPU (see Figure 5.1). The primary memory system is main memory; the next level of storage is secondary storage or backing storage. Main-memory systems usually are constructed from random-access memories, whereas secondary stores are placed on moving-head disk drives. In certain workstation environments, the common two-level hierarchy is becoming a three-level hierarchy, with the addition of file-server machines or network-attached storage connected to a workstation via a local-area network [Gingell, Moran, & Shannon, 1987].

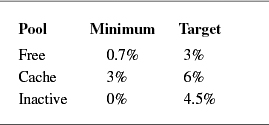

In a multiprogrammed environment, it is critical for the operating system to share available memory resources effectively among the processes. The operation of any memory-management policy is directly related to the memory required for a process to execute. That is, if a process must reside entirely in main memory for it to execute, then a memory-management system must be oriented toward allocating large units of memory. On the other hand, if a process can execute when it is only partially resident in main memory, then memory-management policies are likely to be substantially different. Memory-management facilities usually try to optimize the number of runnable processes that are resident in main memory. This goal must be considered with the goals of the process scheduler (Chapter 4) so that conflicts that can adversely affect overall system performance are avoided.

Although the availability of secondary storage permits more processes to exist than can be resident in main memory, it also requires additional algorithms that can be complicated. Space management typically requires algorithms and policies different from those used for main memory, and a policy must be devised for deciding when to move processes between main memory and secondary storage.

Each process operates on a virtual machine that is defined by the architecture of the underlying hardware on which it executes. We are interested in only those machines that include the notion of a virtual address space. A virtual address space is a range of memory locations that a process references independently of the physical memory present in the system. In other words, the virtual address space of a process is independent of the physical address space of the CPU. For a machine to support virtual memory, we also require that the whole of a process's virtual address space does not need to be resident in main memory for that process to execute.

References to the virtual address space—virtual addresses—are translated by hardware into references to physical memory. This operation, termed address translation, permits programs to be loaded into memory at any location without requiring position-dependent addresses in the program to be changed. This relocation of position-dependent addressing is possible because the addresses known to the program do not change. Address translation and virtual addressing are also important in efficient sharing of a CPU, because position independence usually permits context switching to be done quickly.

Most machine architectures provide a contiguous virtual address space for processes. Some machine architectures, however, choose to partition visibly a process's virtual address space into regions termed segments [Intel, 1984]. Such segments usually must be physically contiguous in main memory and must begin at fixed addresses. We shall be concerned with only those systems that do not visibly segment their virtual address space. This use of the word segment is not the same as its earlier use in Section 3.5, when we were describing FreeBSD process segments, such as text and data segments.

When multiple processes are coresident in main memory, we must protect the physical memory associated with each process's virtual address space to ensure that one process cannot alter the contents of another process's virtual address space. This protection is implemented in hardware and is usually tightly coupled with the implementation of address translation. Consequently, the two operations usually are defined and implemented together as hardware termed the memory-management unit.

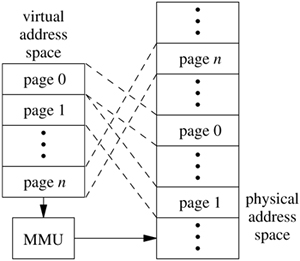

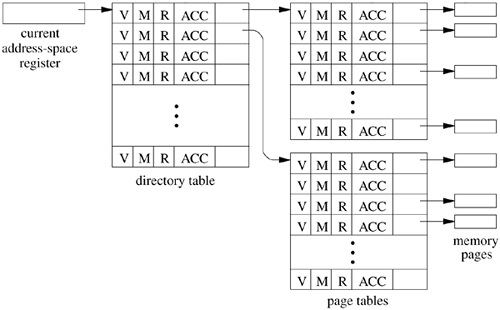

Virtual memory can be implemented in many ways, some of which are software based, such as overlays. Most effective virtual-memory schemes are, however, hardware based. In these schemes, the virtual address space is divided into fixed-sized units, termed pages, as shown in Figure 5.2. Virtual-memory references are resolved by the address-translation unit to a page in main memory and an offset within that page. Hardware protection is applied by the memory-management unit on a page-by-page basis.
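The split of a virtual address into a page and an offset can be sketched as follows. This is a minimal illustration, not a description of any particular memory-management unit: the 4-Kbyte page size, the dictionary used as a page table, and the names `split_virtual_address` and `translate` are all assumptions made for the example.

```python
PAGE_SIZE = 4096                          # assumed page size; hardware defines the real value
PAGE_SHIFT = PAGE_SIZE.bit_length() - 1   # number of offset bits (12 for 4-Kbyte pages)


def split_virtual_address(vaddr):
    """Return (virtual page number, offset within the page)."""
    return vaddr >> PAGE_SHIFT, vaddr & (PAGE_SIZE - 1)


def translate(page_table, vaddr):
    """Map a virtual address to a physical address.

    page_table is a hypothetical mapping from virtual page number
    to physical page-frame number.
    """
    vpn, offset = split_virtual_address(vaddr)
    frame = page_table[vpn]
    return (frame << PAGE_SHIFT) | offset


if __name__ == "__main__":
    table = {0: 7, 1: 3}                  # virtual page 1 lives in physical frame 3
    print(hex(translate(table, 0x1234)))  # page 1, offset 0x234
```

Because only the page number is remapped, the offset passes through translation unchanged, which is why protection and residency can be tracked one page at a time.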

Some systems provide a two-tiered virtual-memory system in which pages are grouped into segments [Organick, 1975]. In these systems, protection is usually at the segment level. In the remainder of this chapter, we shall be concerned with only those virtual-memory systems that are page based.

Address translation provides the implementation of virtual memory by decoupling the virtual address space of a process from the physical address space of the CPU. Each page of virtual memory is marked as resident or nonresident in main memory. If a process references a location in virtual memory that is not resident, a hardware trap termed a page fault is generated. The servicing of page faults, or paging, permits processes to execute even if they are only partially resident in main memory.
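The resident and nonresident marking and the resulting page fault can be modeled in a few lines. The sketch below is a simplification under stated assumptions: the hardware trap is represented by a Python exception, the page table is a dictionary, and free frames come from a plain list; the names `PageFault`, `lookup`, and `access` are hypothetical.

```python
class PageFault(Exception):
    """Stands in for the hardware trap raised on a nonresident reference."""

    def __init__(self, vpn):
        super().__init__(f"page fault on virtual page {vpn}")
        self.vpn = vpn


def lookup(page_table, vpn):
    """Return the frame for a resident page, or trap if it is nonresident."""
    entry = page_table.get(vpn)
    if entry is None or not entry["resident"]:
        raise PageFault(vpn)
    return entry["frame"]


def access(page_table, vpn, free_frames):
    """Reference a page, servicing a page fault if one occurs."""
    try:
        return lookup(page_table, vpn)
    except PageFault as fault:
        # Paging: bring the missing page into a free frame, mark it
        # resident, and retry the reference.
        frame = free_frames.pop()
        page_table[fault.vpn] = {"resident": True, "frame": frame}
        return lookup(page_table, vpn)
```

The process needs only the pages it actually touches to be resident; every other page stays out of main memory until a fault brings it in.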

Coffman & Denning [1973] characterize paging systems by three important policies:

- When the system loads pages into memory—the fetch policy

- Where the system places pages in memory—the placement policy

- How the system selects pages to be removed from main memory when pages are unavailable for a placement request—the replacement policy

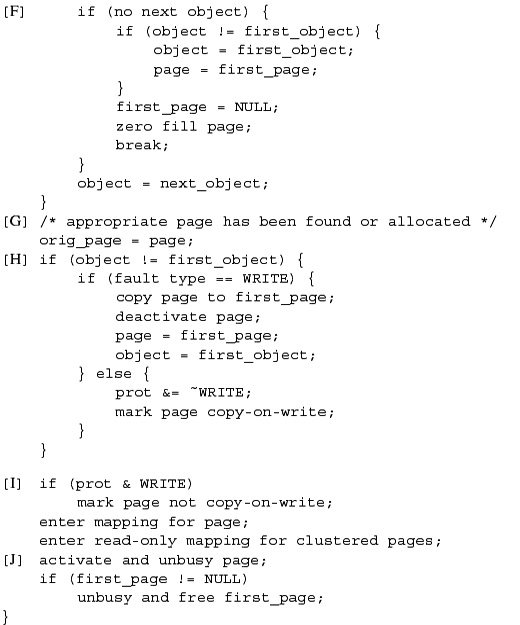

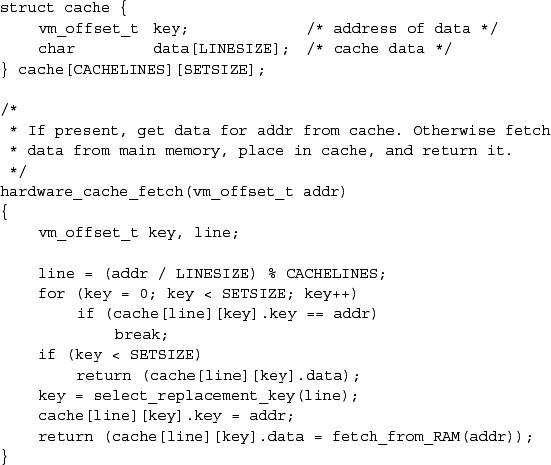

The performance of modern computers is heavily dependent on one or more high-speed caches to reduce the need to access the much slower main memory. The placement policy should ensure that contiguous pages in virtual memory make the best use of the processor cache. FreeBSD uses a coloring algorithm to ensure good placement. Under a pure demand-paging system, a demand-fetch policy is used, in which only the missing page is fetched, and replacements occur only when main memory is full. Consequently, the performance of a pure demand-paging system depends on only the system's replacement policy. In practice, paging systems do not implement a pure demand-paging algorithm. Instead, the fetch policy often is altered to do prepaging (fetching pages of memory other than the one that caused the page fault), and the replacement policy is invoked before main memory is full.

The replacement policy is the most critical aspect of any paging system. There is a wide range of algorithms from which we can select in designing a replacement strategy for a paging system. Much research has been carried out in evaluating the performance of different page-replacement algorithms [Bansal & Modha, 2004; Belady, 1966; King, 1971; Marshall, 1979].

A process's paging behavior for a given input is described in terms of the pages referenced over the time of the process's execution. This sequence of pages, termed a reference string, represents the behavior of the process at discrete times during the process's lifetime. Corresponding to the sampled references that constitute a process's reference string are real-time values that reflect whether the associated references resulted in a page fault. A useful measure of a process's behavior is the fault rate, which is the number of page faults encountered during processing of a reference string, normalized by the length of the reference string.
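To make the fault rate concrete, the sketch below counts the page faults that a reference string produces and normalizes the count by the length of the string. First-in, first-out replacement is used only because it is short to write; it is an assumption of the example, not a policy attributed to any system discussed here. The function names are hypothetical.

```python
from collections import deque


def fifo_faults(reference_string, frames):
    """Count page faults for a reference string under FIFO replacement."""
    resident = deque()              # oldest resident page is at the left
    faults = 0
    for page in reference_string:
        if page in resident:
            continue                # reference satisfied from main memory
        faults += 1
        if len(resident) == frames:
            resident.popleft()      # evict the page that was loaded first
        resident.append(page)
    return faults


def fault_rate(reference_string, frames):
    """Page faults normalized by the length of the reference string."""
    return fifo_faults(reference_string, frames) / len(reference_string)


if __name__ == "__main__":
    refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
    print(fault_rate(refs, 3))      # 9 faults over 12 references: 0.75
```

Running the same reference string with four frames yields ten faults rather than nine, a reminder that giving a process more memory does not lower the fault rate under every replacement policy.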

Page-replacement algorithms typically are evaluated in terms of their effectiveness on reference strings that have been collected from execution of real programs. Formal analysis can also be used, although it is difficult to do unless many restrictions are applied to the execution environment. The most common metric used in measuring the effectiveness of a page-replacement algorithm is the fault rate.

Page-replacement algorithms are defined by the criteria that they use for selecting pages to be reclaimed. For example, the optimal replacement policy [Denning, 1970] states that the "best" choice of a page to replace is the one with the longest expected time until its next reference. Clearly, this policy is not applicable to dynamic systems, as it requires a priori knowledge of the paging characteristics of a process. The policy is useful for evaluation purposes, however, as it provides a yardstick for comparing the performance of other page-replacement algorithms.
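The optimal policy is easy to simulate once the whole reference string is known in advance, which is exactly the a priori knowledge that a running system lacks. The following sketch assumes the reference string is a Python list; the name `optimal_faults` is hypothetical.

```python
def optimal_faults(reference_string, frames):
    """Count page faults under the optimal (longest-time-to-next-use) policy."""
    resident = set()
    faults = 0
    for i, page in enumerate(reference_string):
        if page in resident:
            continue
        faults += 1
        if len(resident) == frames:
            future = reference_string[i + 1:]

            def next_use(candidate):
                # Distance to the next reference; pages never used
                # again are the best victims.
                if candidate in future:
                    return future.index(candidate)
                return float("inf")

            resident.remove(max(resident, key=next_use))
        resident.add(page)
    return faults


if __name__ == "__main__":
    refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
    print(optimal_faults(refs, 3))
```

The fault count that this function returns is the yardstick: no practical algorithm can incur fewer faults on the same reference string with the same number of frames.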

Practical page-replacement algorithms require a certain amount of state information that the system uses in selecting replacement pages. This state typically includes the reference pattern of a process, sampled at discrete time intervals. On some systems, this information can be expensive to collect [Babaoglu & Joy, 1981]. As a result, the "best" page-replacement algorithm may not be the most efficient.

The working-set model assumes that processes exhibit a slowly changing locality of reference. For a period of time, a process operates in a set of subroutines or loops, causing all its memory references to refer to a fixed subset of its address space, termed the working set. The process periodically changes its working set, abandoning certain areas of memory and beginning to access new ones. After a period of transition, the process defines a new set of pages as its working set. In general, if the system can provide the process with enough pages to hold that process's working set, the process will experience a low page-fault rate. If the system cannot provide the process with enough pages for the working set, the process will run slowly and will have a high page-fault rate.

Precise calculation of the working set of a process is impossible without a priori knowledge of that process's memory-reference pattern. However, the working set can be approximated by various means. One method of approximation is to track the number of pages held by a process and that process's page-fault rate. If the page-fault rate increases above a high watermark, the working set is assumed to have increased, and the number of pages held by the process is allowed to grow. Conversely, if the page-fault rate drops below a low watermark, the working set is assumed to have decreased, and the number of pages held by the process is reduced.
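The watermark method can be sketched as a single adjustment step. The threshold values, the step size, and the name `adjust_page_allotment` are assumptions chosen for illustration; a real system would tune them and would sample the fault rate over some interval.

```python
def adjust_page_allotment(pages_held, fault_rate,
                          low_watermark=0.01, high_watermark=0.10,
                          step=8, minimum=1):
    """Return the new number of pages a process may hold.

    All numeric defaults are illustrative assumptions.
    """
    if fault_rate > high_watermark:
        # Working set is assumed to have grown: allow more pages.
        return pages_held + step
    if fault_rate < low_watermark:
        # Working set is assumed to have shrunk: reclaim pages.
        return max(minimum, pages_held - step)
    return pages_held               # fault rate is within the target band


if __name__ == "__main__":
    print(adjust_page_allotment(64, 0.20))   # grows to 72
    print(adjust_page_allotment(64, 0.001))  # shrinks to 56
```

Keeping a gap between the two watermarks avoids oscillation when the fault rate hovers near a single threshold.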

Swapping is the term used to describe a memory-management policy in which entire processes are moved to and from secondary storage when main memory is in short supply. Swap-based memory-management systems usually are less complicated than are demand-paged systems, since there is less bookkeeping to do. However, pure swapping systems typically are less effective than are paging systems, since the degree of multiprogramming is lowered by the requirement that processes be fully resident to execute. Swapping is sometimes combined with paging in a two-tiered scheme, whereby paging satisfies memory demands until a severe memory shortfall requires drastic action, in which case swapping is used.

In this chapter, a portion of secondary storage that is used for paging or swapping is termed a swap area or swap space. The hardware devices on which these areas reside are termed swap devices.

There are several advantages to the use of virtual memory on computers capable of supporting this facility properly. Virtual memory allows large programs to be run on machines with main-memory configurations that are smaller than the program size. On machines with a moderate amount of memory, it allows more programs to be resident in main memory to compete for CPU time, as the programs do not need to be completely resident. When programs use sections of their program or data space for some time, leaving other sections unused, the unused sections do not need to be present. Also, the use of virtual memory allows programs to start up faster, since they generally require only a small section to be loaded before they begin processing arguments and determining what actions to take. Other parts of a program may not be needed at all during individual runs. As a program runs, additional sections of its program and data spaces are paged in on demand (demand paging). Finally, there are many algorithms that are more easily programmed by sparse use of a large address space than by careful packing of data structures into a small area. Such techniques are too expensive for use without virtual memory, but they may run much faster when that facility is available, without using an inordinate amount of physical memory.

On the other hand, the use of virtual memory can degrade performance. It is more efficient to load a program all at one time than to load it entirely in small sections on demand. There is a finite cost for each operation, including saving and restoring state and determining which page must be loaded, so some systems use demand paging for only those programs that are larger than some minimum size.

Nearly all versions of UNIX have required some form of memory-management hardware to support transparent multiprogramming. To protect processes from modification by other processes, the memory-management hardware must prevent programs from changing their own address mapping. The FreeBSD kernel runs in a privileged mode (kernel mode or system mode) in which memory mapping can be controlled, whereas processes run in an unprivileged mode (user mode). There are several additional architectural requirements for support of virtual memory. The CPU must distinguish between resident and nonresident portions of the address space, must suspend programs when they refer to nonresident addresses, and must resume programs' operation once the operating system has placed the required section in memory. Because the CPU may discover missing data at various times during the execution of an instruction, it must provide a mechanism to save the machine state so that the instruction can be continued or restarted later. The CPU may implement restarting by saving enough state when an instruction begins that the state can be restored when a fault is discovered. Alternatively, instructions could delay any modifications or side effects until after any faults would be discovered so that the instruction execution does not need to back up before restarting. On some computers, instruction backup requires the assistance of the operating system.

Most machines designed to support demand-paged virtual memory include hardware support for the collection of information on program references to memory. When the system selects a page for replacement, it must save the contents of that page if they have been modified since the page was brought into memory. The hardware usually maintains a per-page flag showing whether the page has been modified. Many machines also include a flag recording any access to a page for use by the replacement algorithm.

The FreeBSD virtual-memory system is based on the Mach 2.0 virtual-memory system [Tevanian, 1987], with updates from Mach 2.5 and Mach 3.0. The Mach virtual-memory system was adopted because it features efficient support for sharing and a clean separation of machine-independent and machine-dependent features, as well as multiprocessor support. None of the original Mach system-call interface remains. It has been replaced with the interface first proposed for 4.2BSD that has been widely adopted by the UNIX industry; the FreeBSD interface is described in Section 5.5.

The virtual-memory system implements protected address spaces into which can be mapped data sources (objects) such as files or private, anonymous pieces of swap space. Physical memory is used as a cache of recently used pages from these objects and is managed by a global page-replacement algorithm.

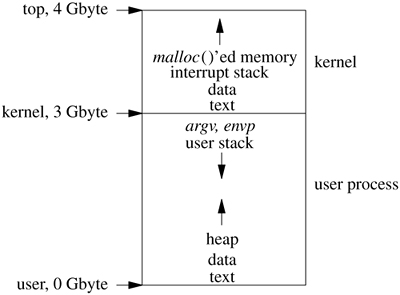

The virtual address space of most architectures is divided into two parts. For FreeBSD running on 32-bit architectures, the top 1 Gbyte of the address space is reserved for use by the kernel. Systems with many small processes making heavy use of kernel facilities such as networking can be configured to use the top 2 Gbyte for the kernel. The remaining address space is available for use by processes. A traditional UNIX layout is shown in Figure 5.3. Here, the kernel and its associated data structures reside at the top of the address space. The initial text and data areas of the user process start at or near the beginning of memory. Typically, the first 4 or 8 Kbyte of memory are kept off-limits to the process. The reason for this restriction is to ease program debugging; indirecting through a null pointer will cause an invalid address fault instead of reading or writing the program text. Memory allocations made by the running process using the malloc() library routine (or the sbrk system call) are done on the heap that starts immediately following the data area and grows to higher addresses. The argument vector and environment vectors are at the top of the user portion of the address space. The user's stack starts just below these vectors and grows to lower addresses. Subject to only administrative limits, the stack and heap can each grow until they meet. At that point, a process running on a 32-bit machine will be using nearly 3 Gbyte of address space.

In FreeBSD and other modern UNIX systems that support the mmap system call, address-space usage is less structured. Shared library implementations may place text or data arbitrarily, rendering the notion of predefined regions obsolete. For compatibility, FreeBSD still supports the sbrk call that malloc() uses to provide a contiguous heap region, and the stack region is automatically grown to its size limit. By default, shared libraries are placed just below the run-time configured maximum stack area.

At any time, the currently executing process is mapped into the virtual address space. When the system decides to context switch to another process, it must save the information about the current-process address mapping, then load the address mapping for the new process to be run. The details of this address-map switching are architecture dependent. Some architectures need to change only a few memory-mapping registers that point to the base of, and give the length of, memory-resident page tables. Other architectures store the page-table descriptors in special high-speed static RAM. Switching these maps may require dumping and reloading hundreds of map entries.

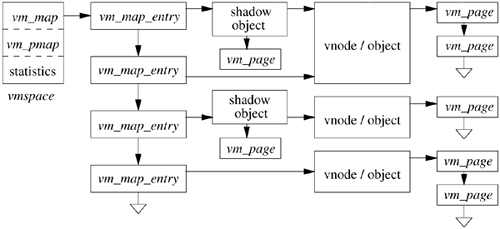

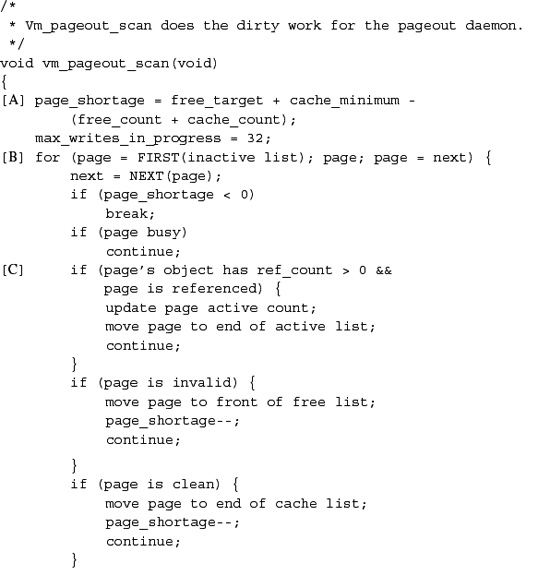

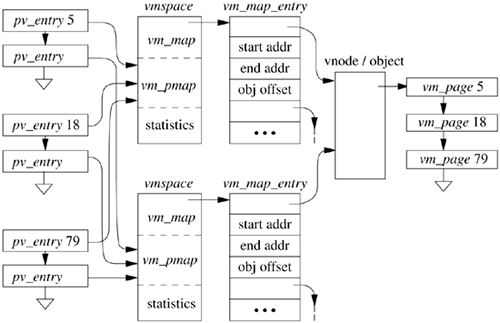

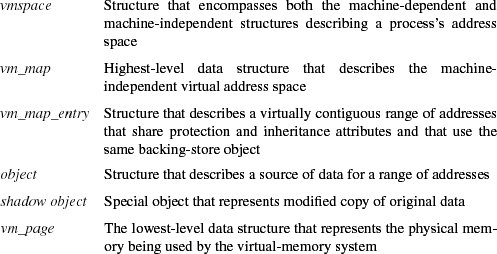

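Both the kernel and user processes use the same basic data structures for the management of their virtual memory. The data structures used to manage virtual memory are as follows:

- vmspace: the structure that encapsulates the virtual-memory state of a process, including both the machine-dependent and machine-independent structures

- vm_map: the structure that represents the machine-independent virtual address space

- vm_map_entry: the structure that describes a virtually contiguous range of addresses that have the same protection and inheritance attributes

- vm_object: the structure that describes a source of data (an object) mapped at a range of addresses

- shadow object: a transient object that represents modified copies of the original data

- vm_page: the structure that represents a page of physical memory managed by the virtual-memory system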

In the remainder of this section, we describe briefly how all these data structures fit together. The remainder of this chapter describes the details of the structures and how the structures are used.

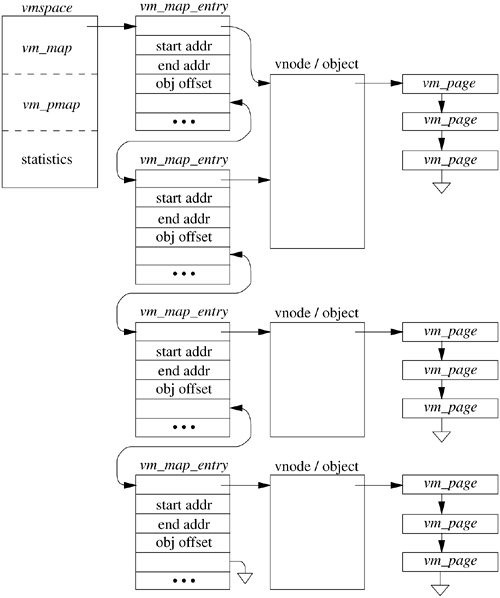

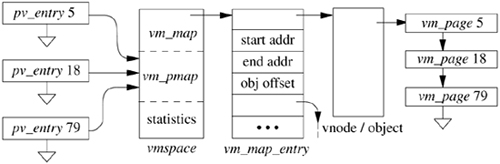

Figure 5.4 shows a typical process address space and associated data structures. The vmspace structure encapsulates the virtual-memory state of a particular process, including the machine-dependent and machine-independent data structures, as well as statistics. The machine-dependent pmap structure is opaque to all but the lowest level of the system and contains all information necessary to manage the memory-management hardware. This pmap layer is the subject of Section 5.13 and is ignored for the remainder of the current discussion. The machine-independent data structures include the address space that is represented by a vm_map structure. The vm_map contains a linked list of vm_map_entry structures, hints for speeding up lookups during memory allocation and page-fault handling, and a pointer to the associated machine-dependent pmap structure contained in the vmspace. A vm_map_entry structure describes a virtually contiguous range of addresses that have the same protection and inheritance attributes. Every vm_map_entry points to a chain of vm_object structures that describes sources of data (objects) that are mapped at the indicated address range. At the tail of the chain is the original mapped data object, usually representing a persistent data source, such as a file. Interposed between that object and the map entry are one or more transient shadow objects that represent modified copies of the original data. These shadow objects are discussed in detail in Section 5.5.

Each vm_object structure contains a linked list of vm_page structures representing the physical-memory cache of the object, as well as the type of and a pointer to the pager structure that contains information on how to page in or page out data from its backing store. There is a vm_page structure allocated for every page of physical memory managed by the virtual-memory system, where a page here may be a collection of multiple, contiguous hardware pages that will be treated by the machine-dependent layer as though they were a single unit. The structure also contains the status of the page (e.g., modified or referenced) and links for various paging queues. A vm_page structure is most commonly and quickly found through a global hash list that is keyed by the object and logical page within that object whose contents are held by the page.
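The relationships among these structures can be sketched with a few small classes. This is a deliberately reduced model, not the kernel's definitions: the field names, the linear search through the map entries, the dictionary that stands in for an object's list of pages, and the function `find_page` are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class VmObject:
    """A source of data; pages maps a logical page index to cached contents."""
    pages: dict = field(default_factory=dict)
    backing: Optional["VmObject"] = None   # next object in the chain, if any


@dataclass
class VmMapEntry:
    """A virtually contiguous range with uniform protection."""
    start: int
    end: int                               # exclusive upper bound
    protection: str
    obj: VmObject                          # head of the object chain
    offset: int = 0                        # offset of the range within the object


@dataclass
class VmMap:
    entries: list = field(default_factory=list)

    def lookup(self, addr):
        """Return the entry covering addr, or None if addr is unmapped."""
        for entry in self.entries:
            if entry.start <= addr < entry.end:
                return entry
        return None


def find_page(entry, addr, page_size=4096):
    """Walk the object chain from the shadow objects toward the original object."""
    index = (addr - entry.start + entry.offset) // page_size
    obj = entry.obj
    while obj is not None:
        if index in obj.pages:
            return obj.pages[index]
        obj = obj.backing
    return None                            # not cached; a pager would be consulted
```

A lookup first finds the map entry for the address and then searches the chain from the front, so a modified copy held by a shadow object hides the corresponding page of the original object behind it.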

All structures contain the necessary interlocks for multithreading in a multiprocessor environment. The locking is fine grained, with at least one lock per instance of a data structure. Many of the structures contain multiple locks to protect individual fields.

There are two ways in which the kernel's memory can be organized. The most common is for the kernel to be permanently mapped into the high part of every process address space. In this model, switching from one process to another does not affect the kernel portion of the address space. The alternative organization is to switch between having the kernel occupy the whole address space and mapping the currently running process into the address space. Having the kernel permanently mapped does reduce the amount of address space available to a large process (and the kernel), but it also reduces the cost of data copying. Many system calls require data to be transferred between the currently running user process and the kernel. With the kernel permanently mapped, the data can be copied via the efficient block-copy instructions. If the kernel is alternately mapped with the process, data copying requires the use of special instructions that copy to and from the previously mapped address space. These instructions are usually a factor of 2 slower than the standard block-copy instructions. Since up to one-third of the kernel time is spent in copying between the kernel and user processes, slowing this operation by a factor of 2 significantly slows system throughput.

Although the kernel is able to freely read and write the address space of the user process, the converse is not true. The kernel's range of virtual address space is marked inaccessible to all user processes. Writing is restricted so user processes cannot tamper with the kernel's data structures. Reading is restricted so user processes cannot watch sensitive kernel data structures, such as the terminal input queues, that include such things as users typing their passwords.

Usually, the hardware dictates which organization can be used. All the architectures supported by FreeBSD map the kernel into the top of the address space.

When the system boots, the first task that the kernel must do is to set up data structures to describe and manage its address space. Like any process, the kernel has a vm_map with a corresponding set of vm_map_entry structures that describe the use of a range of addresses. Submaps are a special kernel-only construct used to isolate and constrain address-space allocation for kernel subsystems. One use is in subsystems that require contiguous pieces of the kernel address space. To avoid intermixing of unrelated allocations within an address range, that range is covered by a submap, and only the appropriate subsystem can allocate from that map. For example, several network buffer (mbuf) manipulation macros use address arithmetic to generate unique indices, thus requiring the network buffer region to be contiguous. Parts of the kernel may also require addresses with particular alignments or even specific addresses. Both can be ensured by use of submaps. Finally, submaps can be used to limit statically the amount of address space and hence the physical memory consumed by a subsystem.

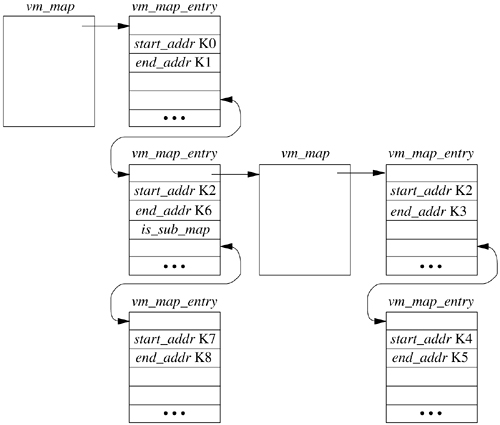

A typical layout of the kernel map is shown in Figure 5.5. The kernel's address space is described by the vm_map structure shown in the upper-left corner of the figure. Pieces of the address space are described by the vm_map_entry structures that are linked in ascending address order from K0 to K8 on the vm_map structure. Here, the kernel text, initialized data, uninitialized data, and initially allocated data structures reside in the range K0 to K1 and are represented by the first vm_map_entry. The next vm_map_entry is associated with the address range from K2 to K6. This piece of the kernel address space is being managed via a submap headed by the referenced vm_map structure. This submap currently has two parts of its address space used: the address range K2 to K3, and the address range K4 to K5. These two address ranges represent the kernel malloc arena and the network buffer arena, respectively. The final part of the kernel address space is being managed in the kernel's main map, the address range K7 to K8 representing the kernel I/O staging area.

The virtual-memory system implements a set of primitive functions for allocating and freeing the page-aligned, page-rounded virtual-memory ranges that the kernel uses. These ranges may be allocated either from the main kernel-address map or from a submap. The allocation routines take a map and size as parameters but do not take an address. Thus, specific addresses within a map cannot be selected. There are different allocation routines for obtaining nonpageable and pageable memory ranges.
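The shape of such an interface, in which the caller supplies a map and a size and the map chooses the address, can be sketched with a toy first-fit allocator. The class `AddressMap` and its methods are hypothetical and bear no relation to the kernel's actual routines; a first-fit search and a 4-Kbyte page size are assumptions of the example.

```python
class AddressMap:
    """A toy map (or submap) that hands out page-rounded address ranges."""

    def __init__(self, start, end, page_size=4096):
        self.start, self.end, self.page_size = start, end, page_size
        self.ranges = []                    # allocated (start, end) pairs

    def alloc(self, size):
        """Return the address of a free range, or None if none fits."""
        # Round the request up to a whole number of pages.
        size = -(-size // self.page_size) * self.page_size
        addr = self.start
        for used_start, used_end in sorted(self.ranges):
            if used_start - addr >= size:
                break                       # the gap before this range fits
            addr = used_end
        if addr + size > self.end:
            return None                     # no address space left in this map
        self.ranges.append((addr, addr + size))
        return addr

    def free(self, addr):
        """Release the range that begins at addr."""
        self.ranges = [r for r in self.ranges if r[0] != addr]
```

Because every allocation is confined to the bounds given when the map was created, a subsystem that allocates only from its own submap cannot consume address space beyond that range.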

A nonpageable, or wired, range has physical memory assigned at the time of the call, and this memory is not subject to replacement by the pageout daemon. Wired pages must never cause a page fault that might result in a blocking operation. Wired memory is allocated with kmem_alloc() and kmem_malloc(). Kmem_alloc() returns zero-filled memory and may block if insufficient physical memory is available to honor the request. It will return a failure only if no address space is available in the indicated map. Kmem_malloc() is a variant of kmem_alloc() used by only the general allocator, malloc(), described in the next subsection. This routine has a nonblocking option that protects callers against inadvertently blocking on kernel data structures; it will fail if insufficient physical memory is available to fill the requested range. The nonblocking option is used when allocating memory at interrupt time and during other critical sections of code. In general, wired memory should be allocated via the general-purpose kernel allocator. Kmem_alloc() should be used only to allocate memory from specific kernel submaps.

Pageable kernel virtual memory can be allocated with kmem_alloc_pageable() and kmem_alloc_wait(). A pageable range has physical memory allocated on demand, and this memory can be written out to backing store by the pageout daemon as part of the latter's normal replacement policy. Kmem_alloc_pageable() will return an error if insufficient address space is available for the desired allocation; kmem_alloc_wait() will block until space is available. Currently, pageable kernel memory is used only for temporary storage of exec arguments.

Kmem_free() deallocates kernel wired memory and pageable memory allocated with kmem_alloc_pageable(). Kmem_free_wakeup() should be used with kmem_alloc_wait() because it wakes up any processes waiting for address space in the specified map.

The kernel also provides a generalized nonpageable memory-allocation and freeing mechanism that can handle requests with arbitrary alignment or size, as well as allocate memory at interrupt time. It is therefore the preferred way to allocate kernel memory other than large, fixed-size structures that are better handled by the zone allocator described in the next subsection. This mechanism has an interface similar to that of the well-known memory allocator provided for applications programmers through the C library routines malloc() and free(). Like the C library interface, the allocation routine takes a parameter specifying the size of memory that is needed. The range of sizes for memory requests is not constrained. The free routine takes a pointer to the storage being freed, but it does not require the size of the piece of memory being freed.

Often, the kernel needs a memory allocation for the duration of a single system call. In a user process, such short-term memory would be allocated on the run-time stack. Because the kernel has a limited run-time stack, it is not feasible to allocate even moderate blocks of memory on it. Consequently, such memory must be allocated dynamically. For example, when the system must translate a pathname, it must allocate a 1-Kbyte buffer to hold the name. Other blocks of memory must be more persistent than a single system call and have to be allocated from dynamic memory. Examples include protocol control blocks that remain throughout the duration of a network connection.

The design specification for a kernel memory allocator is similar, but not identical, to the design criteria for a user-level memory allocator. One criterion for a memory allocator is that it make good use of the physical memory. Use of memory is measured by the amount of memory needed to hold a set of allocations at any point in time. Percentage utilization is expressed as

$$\text{utilization} = \frac{\text{requested}}{\text{required}}$$

Here, requested is the sum of the memory that has been requested and not yet freed; required is the amount of memory that has been allocated for the pool from which the requests are filled. An allocator requires more memory than requested because of fragmentation and a need to have a ready supply of free memory for future requests. A perfect memory allocator would have a utilization of 100 percent. In practice, a 50 percent utilization is considered good [Korn & Vo, 1985].
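As a small worked example of this measure, the sketch below divides the memory currently requested by the memory held in the allocator's pool. The function name and the sample figures are assumptions chosen so that the result matches the 50 percent figure cited above.

```python
def utilization(requested_sizes, required_bytes):
    """Fraction of the allocator's pool occupied by live requests."""
    return sum(requested_sizes) / required_bytes


if __name__ == "__main__":
    live_requests = [100, 200, 212]   # bytes requested and not yet freed
    pool = 1024                       # bytes the allocator holds to serve them
    print(f"{utilization(live_requests, pool):.0%}")   # 512 / 1024
```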

Good memory utilization in the kernel is more important than in user processes. Because user processes run in virtual memory, unused parts of their address space can be paged out. Thus, pages in the process address space that are part of the required pool that are not being requested do not need to tie up physical memory. Since the kernel malloc arena is not paged, all pages in the required pool are held by the kernel and cannot be used for other purposes. To keep the kernel-utilization percentage as high as possible, the kernel should release unused memory in the required pool rather than hold it, as is typically done with user processes. Because the kernel can manipulate its own page maps directly, freeing unused memory is fast; a user process must do a system call to free memory.

The most important criterion for a kernel memory allocator is that it be fast. A slow memory allocator will degrade the system performance because memory allocation is done frequently. Speed of allocation is more critical when executing in the kernel than it is in user code because the kernel must allocate many data structures that user processes can allocate cheaply on their run-time stack. In addition, the kernel represents the platform on which all user processes run, and if it is slow, it will degrade the performance of every process that is running.

Another problem with a slow memory allocator is that programmers of frequently used kernel interfaces will think that they cannot afford to use the memory allocator as their primary one. Instead, they will build their own memory allocator on top of the original by maintaining their own pool of memory blocks. Multiple allocators reduce the efficiency with which memory is used. The kernel ends up with many different free lists of memory instead of a single free list from which all allocations can be drawn. For example, consider the case of two subsystems that need memory. If they have their own free lists, the amount of memory tied up in the two lists will be the sum of the greatest amount of memory that each of the two subsystems has ever used. If they share a free list, the amount of memory tied up in the free list may be as low as the greatest amount of memory that either subsystem used. As the number of subsystems grows, the savings from having a single free list grow.
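The two-subsystem argument can be checked with a small calculation. The sketch below assumes that each subsystem's memory use is sampled at the same instants and stored in an array; the function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Separate free lists: each list grows to its own subsystem's peak,
 * so the memory tied up is the sum of the two individual peaks. */
size_t separate_lists_peak(const size_t *a, const size_t *b, size_t n)
{
    size_t max_a = 0, max_b = 0;

    assert(a != NULL && b != NULL);
    for (size_t i = 0; i < n; i++) {
        if (a[i] > max_a)
            max_a = a[i];
        if (b[i] > max_b)
            max_b = b[i];
    }
    return max_a + max_b;
}

/* Shared free list: the list grows only to the peak of the combined use. */
size_t shared_list_peak(const size_t *a, const size_t *b, size_t n)
{
    size_t peak = 0;

    assert(a != NULL && b != NULL);
    for (size_t i = 0; i < n; i++)
        if (a[i] + b[i] > peak)
            peak = a[i] + b[i];
    return peak;
}
```

With usage samples {8, 4, 0} and {0, 4, 8}, separate lists tie up 16 units, whereas a shared list ties up 8, which is the greatest amount that either subsystem used.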

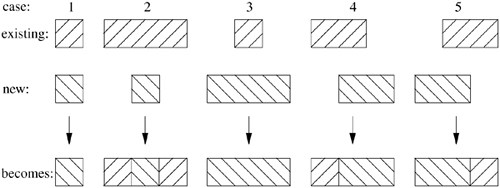

The kernel memory allocator uses a hybrid strategy. Small allocations are done using a power-of-2 list strategy. Using the zone allocator (described in the next subsection), the kernel creates a set of zones with one for each power-of-two between 16 and the page size. The allocation simply requests a block of memory from the appropriate zone. Usually, the zone will have an available piece of memory that it can return. Only if every piece of memory in the zone is in use will the zone allocator have to do a full allocation. When forced to do an additional allocation, it allocates an entire page and carves it up into the appropriate-sized pieces. This strategy speeds future allocations because several pieces of memory become available as a result of the call into the allocator.

Freeing a small block also is fast. The memory is simply returned to the zone from which it came.
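The choice of zone for a small request amounts to rounding the request up to a power of 2. The following is a sketch of that rounding only, not of the kernel code; the 4096-byte page size and the names `ASSUMED_PAGE_SIZE` and `zone_size_for` are assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>

#define SMALLEST_ZONE      16u    /* smallest power-of-2 zone, per the text */
#define ASSUMED_PAGE_SIZE  4096u  /* assumed page size for this sketch */

/* Return the item size of the power-of-2 zone that serves a small request. */
size_t zone_size_for(size_t request)
{
    size_t size = SMALLEST_ZONE;

    assert(request > 0 && request <= ASSUMED_PAGE_SIZE);
    while (size < request)
        size <<= 1;
    return size;
}
```

A 17-byte request is served from the 32-byte zone, and a request of exactly one page is served from the page-sized zone.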

Because of the inefficiency of power-of-2 allocation strategies for large allocations, the allocation method for large blocks is based on allocating pieces of memory in multiples of pages. The algorithm switches to the slower but more memory-efficient strategy for allocation sizes larger than a page. This value is chosen because the power-of-2 algorithm yields sizes of 1, 2, 4, 8, ..., n pages, whereas the large-block algorithm that allocates in multiples of pages yields sizes of 1, 2, 3, 4, ..., n pages. Thus, for allocations of greater than one page, the large-block algorithm never uses more pages than the power-of-2 algorithm, so the threshold between the large and small allocators is set at one page.

Large allocations are first rounded up to be a multiple of the page size. The allocator then uses a "first-fit" algorithm to find space in the kernel address arena set aside for dynamic allocations. On a machine with a 4-Kbyte page size, a request for a 20-Kbyte piece of memory will use exactly five pages of memory, rather than the eight pages used with the power-of-2 allocation strategy. When a large piece of memory is freed, the memory pages are returned to the free-memory pool and the vm_map_entry structure is deleted from the submap, effectively coalescing the freed piece with any adjacent free space.
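The 20-Kbyte example can be reproduced with two rounding functions. This is a sketch of the arithmetic only; the function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Pages used when a request is rounded up to a multiple of the page size. */
size_t pages_multiple_of_page(size_t bytes, size_t page_size)
{
    assert(bytes > 0 && page_size > 0);
    return (bytes + page_size - 1) / page_size;
}

/* Pages used when a request is rounded up to a power-of-2 number of pages. */
size_t pages_power_of_2(size_t bytes, size_t page_size)
{
    size_t pages = 1;

    assert(bytes > 0 && page_size > 0);
    while (pages * page_size < bytes)
        pages <<= 1;
    return pages;
}
```

With a 4-Kbyte page, a 20-Kbyte request needs five pages under the first function and eight under the second.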

Because the size is not specified when a block of memory is freed, the allocator must keep track of the sizes of the pieces that it has handed out. Many allocators increase the allocation request by a few bytes to create space to store the size of the block in a header just before the allocation. However, this strategy doubles the memory requirement for allocations that request a power-of-2-sized block. Therefore, instead of storing the size of each piece of memory with the piece itself, the zone allocator associates the size information with the memory page. Locating the allocation size outside of the allocated block improved utilization far more than expected. The reason is that many allocations in the kernel are for blocks of memory whose size is exactly a power of 2. The size of these requests would be nearly doubled if the more typical strategy were used. Now they can be accommodated with no wasted memory.
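The cost of an in-block size header for power-of-2-sized requests is easy to see numerically. The sketch below assumes a 16-byte minimum block and takes the header size as a parameter; all names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Round n up to the next power of 2, with an assumed 16-byte minimum. */
size_t round_up_pow2(size_t n)
{
    size_t size = 16;

    assert(n > 0);
    while (size < n)
        size <<= 1;
    return size;
}

/* Block consumed when the size is stored in a header inside the block. */
size_t block_with_inline_header(size_t request, size_t header)
{
    return round_up_pow2(request + header);
}

/* Block consumed when the size is recorded per page, outside the block. */
size_t block_with_external_size(size_t request)
{
    return round_up_pow2(request);
}
```

A 512-byte request with an 8-byte header consumes a 1024-byte block, whereas the same request consumes exactly 512 bytes when the size is kept outside the block.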

The allocator can be called both from the top half of the kernel that is willing to wait for memory to become available and from the interrupt routines in the bottom half of the kernel that cannot wait for memory to become available. Clients show their willingness (and ability) to wait with a flag to the allocation routine. For clients that are willing to wait, the allocator guarantees that their request will succeed. Thus, these clients do not need to check the return value from the allocator. If memory is unavailable and the client cannot wait, the allocator returns a null pointer. These clients must be prepared to cope with this (typically infrequent) condition (usually by giving up and hoping to succeed later). The details of the kernel memory allocator are further described in McKusick & Karels [1988].

Some commonly allocated items in the kernel such as process, thread, vnode, and control-block structures are not well handled by the general purpose malloc interface. These structures share several characteristics:

• They tend to be large and hence wasteful of space. For example, the process structure is about 550 bytes, which when rounded up to a power-of-2 size requires 1024 bytes of memory.

• They tend to be common. Because they are individually wasteful of space, collectively they waste too much space compared to a denser representation.

• They are often linked together in long lists. If the allocation of each structure begins on a page boundary, then the list pointers will all be at the same offset from the beginning of the page. When traversing these structures, the linkage pointers will all be located on the same hardware cache line, causing each step along the list to produce a cache miss, making the list-traversal slow.

• These structures often contain many lists and locks that must be initialized before use. If there is a dedicated pool of memory for each structure, then these substructures need to be initialized only when the pool is first created rather than after every allocation.

For these reasons, FreeBSD provides a zone allocator (sometimes referred to as a slab allocator). The zone allocator provides an efficient interface for managing a collection of items of the same size and type [Bonwick, 1994; Bonwick & Adams, 2001]. The creation of separate zones runs counter to the desire to keep all memory in a single pool to maximize utilization efficiency. However, the benefits from segregating memory for the small set of structures for which the zone allocator is appropriate outweigh the efficiency gains from keeping them in the general pool. The zone allocator minimizes the impact of the separate pools by freeing memory from a zone both when demand for objects from the zone falls and when the pageout daemon notifies it of a memory shortage.

A zone is an extensible collection of items of identical size. The zone allocator keeps track of the active and free items and provides functions for allocating items from the zone and for releasing them back to make them available for later use. Memory is allocated to the zone as necessary.

Items in the pool are type stable. The memory in the pool will not be used for any other purpose. A structure in the pool need only be initialized on its first use. Later uses may assume that the initialized values will retain their contents as of the previous free. A callback is provided before memory is freed from the zone to allow any persistent state to be cleaned up.

A new zone is created with the uma_zcreate() function. The caller must specify the size of the items to be allocated and register two sets of functions. The first set is called whenever an item is allocated from or freed to the zone; these functions typically track the number of allocated items. The second set is called whenever memory is allocated to or freed from the zone; these functions provide hooks for initializing or destroying substructures within the items, such as locks or mutexes, when the items are first allocated or are about to be freed.

Items are allocated with uma_zalloc(), which takes a zone identifier returned by uma_zcreate(). Items are freed with uma_zfree(), which takes a zone identifier and a pointer to the item to be freed. No size is needed when allocating or freeing, since the item size was set when the zone was created.
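The zone interface cannot be exercised outside the kernel, so the following is a user-level toy that only mimics its shape. It is not the UMA implementation: every `toy_` name is hypothetical, memory comes from the C library, the free-side callbacks are omitted, and slabs are never returned. It does show the two kinds of hooks: `init` runs once when memory enters the zone, and `ctor` runs on every allocation. The free list is kept outside the items so that initialized state survives a free, which is the type-stability property described above.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

#define TOY_SLAB_ITEMS 8             /* items added per growth step (arbitrary) */

typedef void (*toy_hook)(void *item);

struct toy_zone {
    size_t    size;        /* item size, fixed when the zone is created */
    toy_hook  init;        /* runs once, when memory first enters the zone */
    toy_hook  ctor;        /* runs on every allocation */
    void    **free_items;  /* stack of free items, kept outside the items */
    size_t    nfree;       /* entries currently on the free stack */
    size_t    total;       /* items owned by the zone, free or in use */
};

struct toy_zone *toy_zcreate(size_t size, toy_hook init, toy_hook ctor)
{
    struct toy_zone *z = calloc(1, sizeof(*z));

    assert(size > 0);
    if (z != NULL) {
        z->size = size;
        z->init = init;
        z->ctor = ctor;
    }
    return z;
}

/* Add one slab of items to the zone; returns 0 on success, -1 on failure. */
static int toy_zone_grow(struct toy_zone *z)
{
    size_t total = z->total + TOY_SLAB_ITEMS;
    void **stack = realloc(z->free_items, total * sizeof(*stack));

    if (stack == NULL)
        return -1;
    z->free_items = stack;
    char *slab = malloc(TOY_SLAB_ITEMS * z->size);
    if (slab == NULL)
        return -1;
    for (size_t i = 0; i < TOY_SLAB_ITEMS; i++) {
        void *item = slab + i * z->size;
        if (z->init != NULL)
            z->init(item);           /* one-time initialization */
        z->free_items[z->nfree++] = item;
    }
    z->total = total;
    return 0;
}

/* Allocate an item; no size argument is needed. */
void *toy_zalloc(struct toy_zone *z)
{
    if (z->nfree == 0 && toy_zone_grow(z) != 0)
        return NULL;
    void *item = z->free_items[--z->nfree];
    if (z->ctor != NULL)
        z->ctor(item);
    return item;
}

/* Return an item to the zone; its contents are left untouched. */
void toy_zfree(struct toy_zone *z, void *item)
{
    assert(z->nfree < z->total);
    z->free_items[z->nfree++] = item;
}

/* Example item type and hooks. */
struct toy_conn { int lock_ready; int uses; };

void toy_conn_init(void *item)
{
    struct toy_conn *c = item;
    c->lock_ready = 1;               /* stands in for initializing a lock */
    c->uses = 0;
}

void toy_conn_ctor(void *item)
{
    ((struct toy_conn *)item)->uses++;
}
```

Allocating a `toy_conn`, freeing it, and allocating again returns the same item with `lock_ready` still set and `uses` equal to 2, because `init` ran only once while `ctor` ran twice.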

To aid performance on multiprocessor systems, a zone may be backed by pages from the per-CPU caches of each of the processors for objects that are likely to have processor affinity. For example, if a particular process is likely to be run on the same CPU, then it would be sensible to allocate its structure from the cache for that CPU.

The zone allocator provides the uma_zone_set_max() function to set the upper limit of items in the zone. The limit on the total number of items in the zone includes the allocated and free items, including the items in the per-CPU caches. On multiprocessor systems, it may not be possible to allocate a new item for a particular CPU because the limit has been hit and all the free items are in the caches of the other CPUs.

As we have already seen, a process requires a process entry and a kernel stack. The next major resource that must be allocated is its virtual memory. The initial virtual-memory requirements are defined by the header in the process's executable. These requirements include the space needed for the program text, the initialized data, the uninitialized data, and the run-time stack. During the initial startup of the program, the kernel will build the data structures necessary to describe these four areas. Most programs need to allocate additional memory. The kernel typically provides this additional memory by expanding the uninitialized data area.

Most FreeBSD programs use shared libraries. The header for the executable will describe the libraries that it needs (usually the C library, and possibly others). The kernel is not responsible for locating and mapping these libraries during the initial execution of the program. Finding, mapping, and creating the dynamic linkages to these libraries is handled by the user-level startup code prepended to the file being executed. This startup code usually runs before control is passed to the main entry point of the program [Gingell et al., 1987].

The initial layout of the address space for a process is shown in Figure 5.6 (on page 152). As discussed in Section 5.2, the address space for a process is described by that process's vmspace structure. The contents of the address space are defined by a list of vm_map_entry structures, each structure describing a region of virtual address space that resides between a start and an end address. A region describes a range of memory that is being treated in the same way. For example, the text of a program is a region that is read-only and is demand paged from the file on disk that contains it. Thus, the vm_map_entry also contains the protection mode to be applied to the region that it describes. Each vm_map_entry structure also has a pointer to the object that provides the initial data for the region. The object also stores the modified contents either transiently, when memory is being reclaimed, or more permanently, when the region is no longer needed. Finally, each vm_map_entry structure has an offset that describes where within the object the mapping begins.

The example shown in Figure 5.6 represents a process just after it has started execution. The first two map entries both point to the same object; here, that object is the executable. The executable consists of two parts: the text of the program that resides at the beginning of the file and the initialized data area that follows at the end of the text. Thus, the first vm_map_entry describes a read-only region that maps the text of the program. The second vm_map_entry describes the copy-on-write region that maps the initialized data of the program that follows the program text in the file (copy-on-write is described in Section 5.6). The offset field in the entry reflects this different starting location. The third and fourth vm_map_entry structures describe the uninitialized data and stack areas, respectively. Both of these areas are represented by anonymous objects. An anonymous object provides a zero-filled page on first use and arranges to store modified pages in the swap area if memory becomes tight. Anonymous objects are described in more detail later in this section.

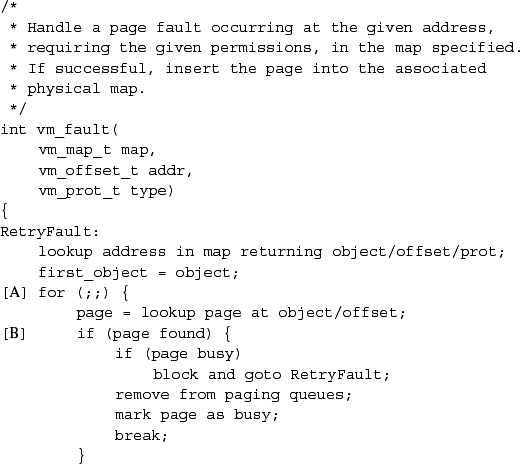

When a process attempts to access a piece of its address space that is not currently resident, a page fault occurs. The page-fault handler in the kernel is presented with the virtual address that caused the fault and the type of access that was attempted (read or write). The fault is handled with the following four steps:

- Find the vmspace structure for the faulting process; from that structure, find the head of the vm_map_entry list.

- Traverse the vm_map_entry list, starting at the entry indicated by the map hint; for each entry, check whether the faulting address falls within its start and end address range. If the kernel reaches the end of the list without finding any valid region, the faulting address is not within any valid part of the address space for the process, so send the process a segmentation-fault signal.

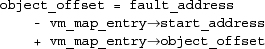

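- Having found a vm_map_entry that contains the faulting address, convert that address to an offset within the underlying object. Calculate the offset within the object as

$$\text{object\_offset} = \text{fault\_address} - \text{vm\_map\_entry} \rightarrow \text{start\_address} + \text{vm\_map\_entry} \rightarrow \text{object\_offset}$$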

Subtract off the start address to give the offset into the region mapped by the vm_map_entry. Add in the object offset to give the absolute offset of the page within the object.

- Present the absolute object offset to the underlying object, which allocates a vm_page structure and uses its pager to fill the page. The object then returns a pointer to the vm_page structure, which is mapped into the faulting location in the process address space.

Once the appropriate page has been mapped into the faulting location, the page-fault handler returns and reexecutes the faulting instruction.
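The lookup and offset calculation in the steps above can be sketched in a few lines of C. The structure below is a simplified stand-in, not the kernel's vm_map_entry; its field names and the `toy_` function names are hypothetical, the search starts at the head of the list rather than at a hint, and the backing object is reduced to an integer identifier.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

struct toy_map_entry {
    uintptr_t start;       /* first address of the region */
    uintptr_t end;         /* first address past the region */
    uint64_t  offset;      /* where within the object the mapping begins */
    int       object_id;   /* stand-in for a pointer to the backing object */
    struct toy_map_entry *next;
};

/* Walk the list for the entry that contains addr.
 * NULL means that the address is not in any valid region. */
const struct toy_map_entry *
toy_lookup(const struct toy_map_entry *head, uintptr_t addr)
{
    for (const struct toy_map_entry *e = head; e != NULL; e = e->next)
        if (addr >= e->start && addr < e->end)
            return e;
    return NULL;
}

/* Convert a faulting address to an absolute offset within the object. */
uint64_t toy_object_offset(const struct toy_map_entry *e, uintptr_t addr)
{
    assert(addr >= e->start && addr < e->end);
    return (uint64_t)(addr - e->start) + e->offset;
}
```

For a data region that starts at address 0x5000 and maps its object from offset 0x4000, a fault at 0x5123 resolves to object offset 0x4123. A fault at an address covered by no entry returns NULL, which corresponds to the case in which the process is sent a signal.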

Objects are used to hold information about either a file or an area of anonymous memory. Whether a file is mapped by a single process in the system or by many processes in the system, it will always be represented by a single object. Thus, the object is responsible for maintaining all the state about those pages of a file that are resident. All references to that file will be described by vm_map_entry structures that reference the same object. An object never stores the same page of a file in more than one physical-memory page, so all mappings will get a consistent view of the file.

An object stores the following information:

• A list of the pages for that object that are currently resident in main memory; a page may be mapped into multiple address spaces, but it is always claimed by exactly one object

• A count of the number of vm_map_entry structures or other objects that reference the object

• The size of the file or anonymous area described by the object

• The number of memory-resident pages held by the object

• Pointer to shadow objects (described in Section 5.5)

• The type of pager for the object; the pager is responsible for providing the data to fill a page and for providing a place to store the page when it has been modified (pagers are covered in Section 5.10)

There are three types of objects in the system:

• Named objects represent files; they may also represent hardware devices that are able to provide mapped memory such as frame buffers.

• Anonymous objects represent areas of memory that are zero filled on first use; they are abandoned when they are no longer needed.

• Shadow objects hold private copies of pages that have been modified; they are abandoned when they are no longer referenced.

Anonymous and shadow objects are often referred to as "internal" objects in the source code. The type of an object is defined by the type of pager that the object uses to fulfill page-fault requests.

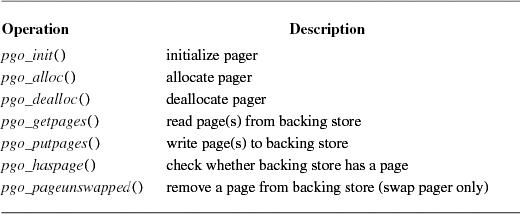

A named object uses either the device pager, if it maps a hardware device, or the vnode pager, if it is backed by a file in the filesystem. The device pager services a page fault by returning the appropriate address for the device being mapped. Since the device memory is separate from the main memory on the machine, it will never be selected by the pageout daemon. Thus, the device pager never has to handle a pageout request.

The vnode pager provides an interface to objects that represent files in the filesystem. The vnode pager keeps a reference to a vnode that represents the file being mapped in the object. The vnode pager services a pagein request by doing a read on the vnode; it services a pageout request by doing a write to the vnode. Thus, the file itself stores the modified pages. In cases where it is not appropriate to modify the file directly, such as an executable that does not want to modify its initialized data pages, the kernel must interpose an anonymous shadow object between the vm_map_entry and the object representing the file; see Section 5.5.

Anonymous objects use the swap pager. An anonymous object services pagein requests by getting a page of memory from the free list and zeroing that page. When a pageout request is made for a page for the first time, the swap pager is responsible for finding an unused page in the swap area, writing the contents of the page to that space, and recording where that page is stored. If a pagein request comes for a page that had been previously paged out, the swap pager is responsible for finding where it stored that page and reading back the contents into a free page in memory. A later pageout request for that page will cause the page to be written out to the previously allocated location.

Shadow objects also use the swap pager. They work just like anonymous objects, except that the swap pager provides their initial pages by copying existing pages in response to copy-on-write faults instead of by zero-filling pages.

Further details on the pagers are given in Section 5.10.

Each virtual-memory object has a pager type, pager handle, and pager private data associated with it. Objects that map files have a vnode-pager type associated with them. The handle for the vnode-pager type is a pointer to the vnode on which to do the I/O, and the private data is the size of the vnode at the time that the mapping was done. Every vnode that maps a file has an object associated with it. When a fault occurs for a file that is mapped into memory, the object associated with the file can be checked to see whether the faulted page is resident. If the page is resident, it can be used. If the page is not resident, a new page is allocated, and the vnode pager is requested to fill the new page.

Caching in the virtual-memory system is identified by an object that is associated with a file or region that it represents. Each object contains pages that are the cached contents of its associated file or region. Objects are reclaimed as soon as their reference count drops to zero. Pages associated with reclaimed objects are moved to the free list. Objects that represent anonymous memory are reclaimed as part of cleaning up a process as it exits. However, objects that refer to files are persistent. When the reference count on a vnode drops to zero, it is stored on a least-recently used (LRU) list known as the vnode cache; see Section 6.6. The vnode does not release its object until the vnode is reclaimed and reused for another file. Unless there is pressure on the memory, the object associated with the vnode will retain its pages. If the vnode is reactivated and a page fault occurs before the associated page is freed, that page can be reattached rather than being reread from disk.

This cache is similar to the text cache found in earlier versions of BSD in that it provides performance improvements for short-running but frequently executed programs. Frequently executed programs include those used to list the contents of directories, show system status, or perform the intermediate steps involved in compiling a program. For example, consider a typical application that is made up of multiple source files. Each of several compiler steps must be run on each file in turn. The first time that the compiler is run, the executable files associated with its various components are read in from the disk. For each file compiled thereafter, the previously created executable files are found, as well as any previously read header files, alleviating the need to reload them from disk each time.

When the system is first booted, the kernel looks through the physical memory on the machine to find out how many pages are available. After the physical memory that will be dedicated to the kernel itself has been deducted, all the remaining pages of physical memory are described by vm_page structures. These vm_page structures are all initially placed on the memory free list. As the system starts running and processes begin to execute, they generate page faults. Each page fault is matched to the object that covers the faulting piece of address space. The first time that a piece of an object is faulted, it must allocate a page from the free list and must initialize that page either by zero-filling it or by reading its contents from the filesystem. That page then becomes associated with the object. Thus, each object has its current set of vm_page structures linked to it. A page can be associated with at most one object at a time. Although a file may be mapped into several processes at once, all those mappings reference the same object. Having a single object for each file ensures that all processes will reference the same physical pages.

If memory becomes scarce, the paging daemon will search for pages that have not been used actively. Before these pages can be used by a new object, they must be removed from all the processes that currently have them mapped, and any modified contents must be saved by the object that owns them. Once cleaned, the pages can be removed from the object that owns them and can be placed on the free list for reuse. The details of the paging system are described in Section 5.12.

In Section 5.4, we explained how the address space of a process is organized. This section shows the additional data structures needed to support shared address space between processes. Traditionally, the address space of each process was completely isolated from the address space of all other processes running on the system. The only exception was read-only sharing of program text. All interprocess communication was done through well-defined channels that passed through the kernel: pipes, sockets, files, and special devices. The benefit of this isolated approach is that, no matter how badly a process destroys its own address space, it cannot affect the address space of any other process running on the system. Each process can precisely control when data are sent or received; it can also precisely identify the locations within its address space that are read or written. The drawback of this approach is that all interprocess communication requires at least two system calls: one from the sending process and one from the receiving process. For high volumes of interprocess communication, especially when small packets of data are being exchanged, the overhead of the system calls dominates the communications cost.

Shared memory provides a way to reduce interprocess-communication costs dramatically. Two or more processes that wish to communicate map the same piece of read-write memory into their address space. Once all the processes have mapped the memory into their address space, any changes to that piece of memory are visible to all the other processes, without any intervention by the kernel. Thus, interprocess communication can be achieved without any system-call overhead other than the cost of the initial mapping. The drawback to this approach is that, if a process that has the memory mapped corrupts the data structures in that memory, all the other processes mapping that memory also are corrupted. In addition, there is the complexity faced by the application developer who must develop data structures to control access to the shared memory and must cope with the race conditions inherent in manipulating and controlling such data structures that are being accessed concurrently.

Some UNIX variants have a kernel-based semaphore mechanism to provide the needed serialization of access to the shared memory. However, both getting and setting such semaphores require system calls. The overhead of using such semaphores is comparable to that of using the traditional interprocess-communication methods. Unfortunately, these semaphores have all the complexity of shared memory, yet confer little of its speed advantage. The primary reason to introduce the complexity of shared memory is for the commensurate speed gain. If this gain is to be obtained, most of the data-structure locking needs to be done in the shared memory segment itself. The kernel-based semaphores should be used for only those rare cases where there is contention for a lock and one process must wait. Consequently, modern interfaces, such as POSIX Pthreads, are designed such that the semaphores can be located in the shared memory region. The common case of setting or clearing an uncontested semaphore can be done by the user process, without calling the kernel. There are two cases where a process must do a system call. If a process tries to set an already locked semaphore, it must call the kernel to block until the semaphore is available. This system call has little effect on performance because the lock is contested, so it is impossible to proceed, and the kernel must be invoked to do a context switch anyway. If a process clears a semaphore that is wanted by another process, it must call the kernel to awaken that process. Since most locks are uncontested, the applications can run at full speed without kernel intervention.

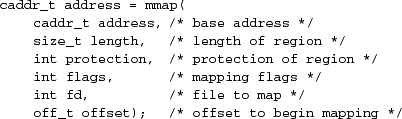

When two processes wish to create an area of shared memory, they must have some way to name the piece of memory that they wish to share, and they must be able to describe its size and initial contents. The system interface describing an area of shared memory accomplishes all these goals by using files as the basis for describing a shared memory segment. A process creates a shared memory segment by using

caddr_t address = mmap(caddr_t address, size_t length, int protection, int flags, int fd, off_t offset);

to map the file referenced by descriptor fd starting at file offset offset into its address space starting at address and continuing for length bytes with access permission protection. The flags parameter allows a process to specify whether it wants to make a shared or private mapping. Changes made to a shared mapping are written back to the file and are visible to other processes. Changes made to a private mapping are not written back to the file and are not visible to other processes. Two processes that wish to share a piece of memory request a shared mapping of the same file into their address space. Thus, the existing and well-understood filesystem name space identifies shared objects. The contents of the file are used as the initial value of the memory segment. All changes made to the mapping are reflected back into the contents of the file, so long-term state can be maintained in the shared memory region, even across invocations of the sharing processes.
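The write-back behavior of a shared mapping can be observed from a single process. The sketch below uses the POSIX spellings of the interface (`void *` addresses, `PROT_READ`, `PROT_WRITE`, `MAP_SHARED`); the function name and the temporary-file template are hypothetical. It stores a string through a shared mapping and then reads the file back with pread().

```c
#define _XOPEN_SOURCE 700   /* expose mkstemp(), pread(), and ftruncate() */
#include <assert.h>
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

/* Returns 0 if data stored through a shared mapping appears in the file,
 * -1 on any failure. */
int shared_mapping_roundtrip(const char *msg)
{
    char path[] = "/tmp/shmdemoXXXXXX";   /* hypothetical file name */
    char readback[256];
    int result = -1;

    assert(msg != NULL);
    size_t len = strlen(msg) + 1;
    if (len > sizeof(readback))
        return -1;
    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    unlink(path);                 /* the file lives on until fd is closed */
    if (ftruncate(fd, (off_t)len) == 0) {
        char *p = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
        if (p != MAP_FAILED) {
            memcpy(p, msg, len);          /* ordinary stores, no write() */
            msync(p, len, MS_SYNC);       /* push the change to the file */
            munmap(p, len);
            if (pread(fd, readback, len, 0) == (ssize_t)len &&
                memcmp(readback, msg, len) == 0)
                result = 0;
        }
    }
    close(fd);
    return result;
}
```

A second process that mapped the same file with `MAP_SHARED` would see the stored bytes directly in its own address space, without reading the file.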

Some applications want to use shared memory purely as a short-term interprocess-communication mechanism. They need an area of memory that is initially zeroed and whose contents are abandoned when they are done using it. Such processes neither want to pay the relatively high startup cost associated with paging in the contents of a file to initialize a shared memory segment nor to pay the shutdown costs of writing modified pages back to the file when they are done with the memory. Although FreeBSD does provide the limited and quirky naming scheme of the System V shmem interface as a rendezvous mechanism for such short-term shared memory, the designers ultimately decided that all naming of memory objects for mmap should use the filesystem name space. To provide an efficient mechanism for short-term shared memory, they created a virtual-memory-resident filesystem for transient objects. Unless memory is in high demand, files created in the virtual-memory-resident filesystem reside entirely in memory. Thus, both the initial paging and later write-back costs are eliminated. Typically, a virtual-memory-resident filesystem is mounted on /tmp. Two processes wishing to create a transient area of shared memory create a file in /tmp that they can then both map into their address space.

When a mapping is no longer needed, it can be removed using

munmap(caddr_t address, size_t length);

The munmap system call removes any mappings that exist in the address space, starting at address and continuing for length bytes. There are no constraints between previous mappings and a later munmap. The specified range may be a subset of a previous mmap, or it may encompass an area that contains many mmap'ed files. When a process exits, the system does an implied munmap over its entire address space.

During its initial mapping, a process can set the protections on a page to allow reading, writing, and/or execution. The process can change these protections later by using

mprotect(caddr_t address, int length, int protection);

This feature can be used by debuggers when they are trying to track down a memory-corruption bug. By disabling writing on the page containing the data structure that is being corrupted, the debugger can trap all writes to the page and verify that they are correct before allowing them to occur.

Traditionally, programming for real-time systems has been done with specially written operating systems. In the interests of reducing the costs of real-time applications and of using the skills of the large body of UNIX programmers, companies developing real-time applications have expressed increased interest in using UNIX-based systems for writing these applications. Two fundamental requirements of a real-time system are guaranteed maximum latencies and predictable execution times. Predictable execution time is difficult to provide in a virtual-memory-based system, since a page fault may occur at any point in the execution of a program, resulting in a potentially large delay while the faulting page is retrieved from the disk or network. To avoid paging delays, the system allows a process to force its pages to be resident, and not paged out, by using

mlock(caddr_t address, size_t length);

As long as the process limits its accesses to the locked area of its address space, it can be sure that it will not be delayed by page faults. To prevent a single process from acquiring all the physical memory on the machine to the detriment of all other processes, the system imposes a resource limit to control the amount of memory that may be locked. Typically, this limit is set to no more than one-third of the physical memory, and it may be set to zero by a system administrator who does not want random processes to be able to monopolize system resources.

When a process has finished with its time-critical use of an mlock'ed region, it can release the pages using

munlock(caddr_t address, size_t length);

After the munlock call, the pages in the specified address range are still accessible, but they may be paged out if memory is needed and they are not accessed.

An application may need to ensure that certain records are committed to disk without forcing the writing of all the dirty pages of a file, as the fsync system call does. For example, a database program may want to commit a single piece of metadata without writing back all the dirty blocks in its database file. A process does this selective synchronization using

msync(caddr_t address, int length);

Only those modified pages within the specified address range are written back to the filesystem. The msync system call has no effect on anonymous regions.
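As a hedged illustration of selective synchronization, the sketch below dirties every page of a shared file mapping but asks for only one page to be written back. The helper name commit_one_record and the temporary-file path are hypothetical. The sketch also assumes the current POSIX form of msync, which takes a third flags argument (MS_SYNC here) that the two-argument form shown in the text does not have.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/*
 * Hypothetical helper: map a four-page scratch file, dirty all of it,
 * and synchronously commit only the page that holds the "metadata".
 * Returns 0 on success and -1 on any failure.
 */
int commit_one_record(void)
{
    long pagesize = sysconf(_SC_PAGESIZE);
    if (pagesize <= 0)
        return -1;
    size_t len = 4 * (size_t)pagesize;

    char path[] = "/tmp/msync-demo-XXXXXX";   /* assumed scratch location */
    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    unlink(path);                              /* file vanishes on close */

    int rc = -1;
    if (ftruncate(fd, (off_t)len) == 0) {
        char *base = mmap(NULL, len, PROT_READ | PROT_WRITE,
                          MAP_SHARED, fd, 0);
        if (base != MAP_FAILED) {
            assert(len >= 2 * (size_t)pagesize);
            memset(base, 'x', len);                 /* dirty every page   */
            memcpy(base + pagesize, "meta", 4);     /* record in page 1   */

            /* Write back page 1 only; the other dirty pages may wait. */
            rc = msync(base + pagesize, (size_t)pagesize, MS_SYNC);
            munmap(base, len);
        }
    }
    close(fd);
    return rc;
}
```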

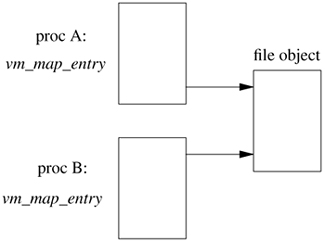

When multiple processes map the same file into their address space, the system must ensure that all the processes view the same set of memory pages. As shown in Section 5.4, each file that is being used actively by a client of the virtual-memory system is represented by an object. Each mapping that a process has to a piece of a file is described by a vm_map_entry structure. An example of two processes mapping the same file into their address space is shown in Figure 5.7. When a page fault occurs in one of these processes, the process's vm_map_entry references the object to find the appropriate page. Since all mappings reference the same object, the processes will all get references to the same set of physical memory, thus ensuring that changes made by one process will be visible in the address spaces of the other processes as well.

A process may request a private mapping of a file. A private mapping has two main effects:

- Changes made to the memory mapping the file are not reflected back into the mapped file.

- Changes made to the memory mapping the file are not visible to other processes mapping the file.

An example of the use of a private mapping would be during program debugging. The debugger will request a private mapping of the program text so that, when it sets a breakpoint, the modification is not written back into the executable stored on the disk and is not visible to the other (presumably nondebugging) processes executing the program.
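The first effect of a private mapping can be observed from user level. The sketch below is an illustration under stated assumptions, not the debugger's actual code: the helper name and the scratch-file path are hypothetical. It maps a small file with MAP_PRIVATE, overwrites the mapped bytes, and then rereads the file through the filesystem to confirm that the stored contents are unchanged.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/*
 * Hypothetical helper. Returns 1 if a write through a private mapping
 * changed the mapping but left the file untouched, 0 if not, and -1 if
 * the experiment could not be set up.
 */
int private_write_leaves_file_unchanged(void)
{
    const char original[] = "original";        /* 8 bytes plus NUL */
    char readback[sizeof(original)] = {0};
    char path[] = "/tmp/private-demo-XXXXXX";  /* assumed scratch location */
    int result = -1;

    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    unlink(path);

    if (write(fd, original, sizeof(original)) == (ssize_t)sizeof(original)) {
        char *map = mmap(NULL, sizeof(original), PROT_READ | PROT_WRITE,
                         MAP_PRIVATE, fd, 0);
        if (map != MAP_FAILED) {
            assert(memcmp(map, original, sizeof(original)) == 0);

            /* This store takes a copy-on-write fault. */
            memcpy(map, "patched!", 8);

            /* Reread the file itself, bypassing the mapping. */
            if (pread(fd, readback, sizeof(readback), 0) ==
                (ssize_t)sizeof(readback)) {
                result = memcmp(readback, original, sizeof(original)) == 0 &&
                         memcmp(map, "patched!", 8) == 0;
            }
            munmap(map, sizeof(original));
        }
    }
    close(fd);
    return result;
}
```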

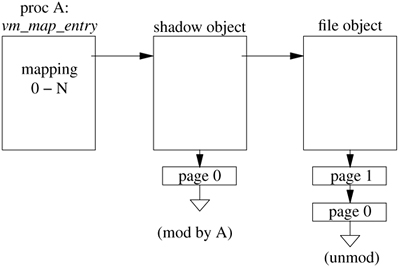

The kernel uses shadow objects to prevent changes made by a process from being reflected back to the underlying object. The use of a shadow object is shown in Figure 5.8. When the initial private mapping is requested, the file object is mapped into the requesting-process address space, with copy-on-write semantics. If the process attempts to write a page of the object, a page fault occurs and traps into the kernel. The kernel makes a copy of the page to be modified and hangs it from the shadow object. In this example, process A has modified page 0 of the file object. The kernel has copied page 0 to the shadow object that is being used to provide the private mapping for process A.

If free memory is limited, it would be better simply to move the modified page from the file object to the shadow object. The move would reduce the immediate demand on the free memory, because a new page would not have to be allocated. The drawback to this optimization is that, if there is a later access to the file object by some other process, the kernel will have to allocate a new page. The kernel will also have to pay the cost of doing an I/O operation to reload the page contents. In FreeBSD, the virtual-memory system always copies the page; it never moves it.

When a page fault for the private mapping occurs, the kernel traverses the list of objects headed by the vm_map_entry, looking for the faulted page. The first object in the chain that has the desired page is the one that is used. If the search gets to the final object on the chain without finding the desired page, then the page is requested from that final object. Thus, pages on a shadow object will be used in preference to the same pages in the file object itself. The details of page-fault handling are given in Section 5.11.
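The chain traversal can be modeled in a few lines of C. The following is a simplified sketch rather than the kernel's data structures: struct model_object, MODEL_NPAGES, and model_lookup are hypothetical names, and each object is reduced to an array of page slots plus a pointer to the object beneath it.

```c
#include <assert.h>
#include <stddef.h>

#define MODEL_NPAGES 4

/* Simplified stand-in for an object in a shadow chain. */
struct model_object {
    const char *pages[MODEL_NPAGES];  /* NULL means "not held here"      */
    struct model_object *backing;     /* next object down, NULL at end   */
};

/*
 * Walk the chain from the top object. The first object that holds the
 * page is used; if none holds it, the final object in the chain is
 * returned, because the page must be requested from that object.
 */
struct model_object *model_lookup(struct model_object *top, size_t idx)
{
    assert(top != NULL && idx < MODEL_NPAGES);

    struct model_object *obj = top;
    while (obj->pages[idx] == NULL && obj->backing != NULL)
        obj = obj->backing;
    return obj;
}
```

With a shadow object holding a private copy of page 0 on top of a file object, a lookup of page 0 stops at the shadow object, whereas a lookup of any other page falls through to the file object.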

When a process removes a mapping from its address space (either explicitly from an munmap request or implicitly when the address space is freed on process exit), pages held by its shadow object are not written back to the file object. The shadow-object pages are simply placed back on the memory free list for immediate reuse.

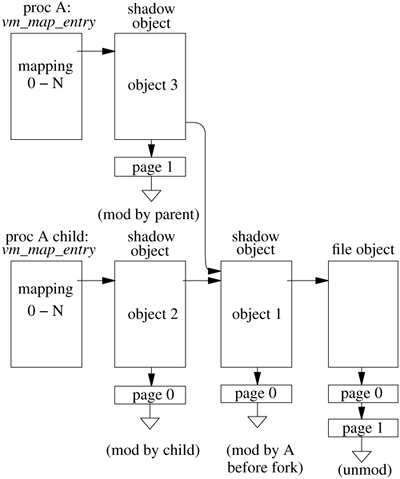

When a process forks, it does not want changes to its private mappings made after it forked to be visible in its child; similarly, the child does not want its changes to be visible in its parent. The result is that each process needs to create a shadow object if it continues to make changes in a private mapping. When process A in Figure 5.8 forks, a set of shadow-object chains is created, as shown in Figure 5.9 (on page 162). In this example, process A modified page 0 before it forked and then later modified page 1. Its modified version of page 1 hangs off its new shadow object, so those modifications will not be visible to its child. Similarly, its child has modified page 0. If the child were to modify page 0 in the original shadow object, that change would be visible in its parent. Thus, the child process must make a new copy of page 0 in its own shadow object.

If the system runs short of memory, the kernel may need to reclaim inactive memory held in a shadow object. The kernel assigns to the swap pager the task of backing the shadow object. The swap pager sets up data structures (described in Section 5.10) that can describe the entire contents of the shadow object. It then allocates enough swap space to hold the requested shadow pages and writes them to that area. These pages can then be freed for other uses. If a later page fault requests a swapped-out page, then a new page of memory is allocated and its contents are reloaded with an I/O from the swap area.

When a process with a private mapping removes that mapping either explicitly with an munmap system call or implicitly by exiting, its parent or child process may be left with a chain of shadow objects. Usually, these chains of shadow objects can be collapsed into a single shadow object, often freeing up memory as part of the collapse. Consider what happens when process A exits in Figure 5.9. First, shadow object 3 can be freed along with its associated page of memory. This deallocation leaves shadow objects 1 and 2 in a chain with no intervening references. Thus, these two objects can be collapsed into a single shadow object. Since they both contain a copy of page 0, and since only the page 0 in shadow object 2 can be accessed by the remaining child process, the page 0 in shadow object 1 can be freed along with shadow object 1 itself.

If the child of process A were to exit, then shadow object 2 and the associated page of memory could be freed. Shadow objects 1 and 3 would then be in a chain that would be eligible for collapse. Here, there are no common pages, so object 3 would retain its own page 1 and acquire page 0 from shadow object 1. Object 1 would then be freed. In addition to merging the pages from the two objects, the collapse operation requires a similar merger of any swap space that has been allocated by the two objects. If page 2 had been copied to object 3 and page 4 had been copied to object 1, but these pages were later reclaimed, the pager for object 3 would hold a swap block for page 2, and the pager for object 1 would hold a swap block for page 4. Before freeing object 1, its swap block for page 4 would have to be moved over to object 3.
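The page-merging part of a collapse can be sketched with a small model. This is an illustration under simplifying assumptions, not the kernel's collapse routine: the names are hypothetical, pages are represented by nonzero integer identifiers, and the corresponding merger of swap blocks is omitted.

```c
#include <assert.h>
#include <stddef.h>

#define MODEL_NPAGES 4

/* Simplified stand-in for an object; a page slot of 0 means "absent". */
struct model_object {
    int pages[MODEL_NPAGES];
    struct model_object *backing;
};

/*
 * Collapse the object directly beneath shadow into shadow. A page that
 * shadow already holds hides the lower copy, so the lower copy is freed;
 * any other page is moved up. Returns the number of pages freed.
 */
int model_collapse(struct model_object *shadow)
{
    assert(shadow != NULL && shadow->backing != NULL);

    struct model_object *victim = shadow->backing;
    int freed = 0;

    for (size_t i = 0; i < MODEL_NPAGES; i++) {
        if (victim->pages[i] == 0)
            continue;
        if (shadow->pages[i] != 0)
            freed++;                              /* hidden copy      */
        else
            shadow->pages[i] = victim->pages[i];  /* move page upward */
        victim->pages[i] = 0;
    }

    /* Splice the emptied object out of the chain. */
    shadow->backing = victim->backing;
    victim->backing = NULL;
    return freed;
}
```

Applied to the two cases in the text, collapsing object 1 into object 2 frees the hidden page 0, whereas collapsing object 1 into object 3 frees nothing and leaves object 3 holding both page 0 and page 1.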

A performance problem can arise if either a process or its children repeatedly fork. Without some intervention, they can create long chains of shadow objects. If the processes are long-lived, the system does not get an opportunity to collapse these shadow-object chains. Traversing these long chains of shadow objects to resolve page faults is time consuming, and many inaccessible pages can build up, forcing the system to needlessly page them out to reclaim them.

One alternative would be to calculate the number of live references to a page after each copy-on-write fault. When only one live reference remained, the page could be moved to the shadow object that still referenced it. When all the pages had been moved out of a shadow object, it could be removed from the chain. For example, in Figure 5.9, when the child of process A wrote to page 0, a copy of page 0 was made in shadow object 2. At that point, the only live reference to page 0 in object 1 was from process A. Thus, the page 0 in object 1 could be moved to object 3. That would leave object 1 with no pages, so it could be reclaimed, leaving objects 2 and 3 pointing at the file object directly. Unfortunately, this strategy would add considerable overhead to the page-fault handling routine, which would noticeably slow the overall performance of the system. So FreeBSD does not make this optimization.

FreeBSD uses a lower-cost heuristic to reduce the length of shadow chains. When taking a copy-on-write fault, it checks to see whether the object taking the fault completely shadows the object below it in the chain. When it achieves complete coverage, it can bypass that object and reference the next object down the chain. The removal of its reference has the same effect as if it had exited and allowed the normal shadow chain collapse to be done with the sole remaining reference to the object. For example, in Figure 5.9, when the child of process A wrote to page 0, a copy of page 0 was made in shadow object 2. At that point object 2 completely shadows object 1, so object 2 can drop its reference to object 1 and point to the file object directly. With only one reference to object 1, the collapse of object 3 and object 1 will happen as already described. Determining whether one object completely covers the object below it is much cheaper than tracking live references. Thus, this approach is used by FreeBSD.
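The coverage check and bypass can be modeled as follows. This is a hedged sketch, not the FreeBSD implementation: the structure and function names are hypothetical, coverage is judged only by resident pages (pages held in swap are ignored), and reference counts are plain integers.

```c
#include <assert.h>
#include <stddef.h>

#define MODEL_NPAGES 4

/* Simplified stand-in for an object; a page slot of 0 means "absent". */
struct model_object {
    int pages[MODEL_NPAGES];
    int refs;                        /* references from above */
    struct model_object *backing;
};

/* Return 1 if shadow holds every page that its backing object holds. */
int model_covers(const struct model_object *shadow)
{
    assert(shadow != NULL && shadow->backing != NULL);
    for (size_t i = 0; i < MODEL_NPAGES; i++) {
        if (shadow->backing->pages[i] != 0 && shadow->pages[i] == 0)
            return 0;
    }
    return 1;
}

/*
 * If shadow completely covers the object beneath it, drop the reference
 * to that object and point at the next object down the chain instead.
 * Returns 1 if the bypass was done and 0 otherwise.
 */
int model_try_bypass(struct model_object *shadow)
{
    assert(shadow != NULL);
    struct model_object *below = shadow->backing;
    if (below == NULL || !model_covers(shadow))
        return 0;

    shadow->backing = below->backing;
    if (shadow->backing != NULL)
        shadow->backing->refs++;
    below->refs--;
    return 1;
}
```

In the Figure 5.9 scenario, object 2 holds page 0 and so covers object 1, which lets it bypass object 1; object 3 holds only page 1 and must keep its reference.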

This heuristic works well in practice. Even with the heavily forking parent or child processes found in many network servers, shadow-chain depth generally stabilizes at about three to four levels.

When a process makes read accesses to a private mapping of an object, it continues to see changes made to that object by other processes that are writing to the object through the filesystem or that have a shared mapping to the object. When a process makes a write access to a private mapping of an object, a snapshot of the corresponding page of the object is made and is stored in the shadow object, and the modification is made to that snapshot. Thus, further changes to that page made by other processes that are writing to the page through the filesystem or that have a shared mapping to the object are no longer visible. However, changes to unmodified pages of the object continue to be visible. This mix of changing and unchanging parts of the file can be confusing.

To provide a more consistent view of a file, a process may want to take a snapshot of the file at the time that it is initially privately mapped. Historically, both Mach and 4.4BSD provided a copy object whose effect was to take a snapshot of an object at the time that the private mapping was set up. The copy object tracked changes to an object by other processes and kept original copies of any pages that changed. None of the other UNIX vendors implemented copy objects, and there are no major applications that depend on them. The copy-object code in the virtual-memory system was large and complex, and it noticeably slowed virtual-memory performance. Consequently, copy objects were deemed unnecessary and were removed from FreeBSD as part of the early cleanup and performance work done on the virtual-memory system. Applications that want to get a snapshot of a file can do so by reading it into their address space or by making a copy of it in the filesystem and then referring to the copy.

Processes are created with a fork system call. The fork is usually followed shortly thereafter by an exec system call that overlays the virtual address space of the child process with the contents of an executable image that resides in the filesystem. The process then executes until it terminates by exiting, either voluntarily or involuntarily by receiving a signal. In Sections 5.6 to 5.9, we trace the management of the memory resources used at each step in this cycle.

A fork system call duplicates the address space of an existing process, creating an identical child process. The fork set of system calls is the only way that new processes are created in FreeBSD. Fork duplicates all the resources of the original process and copies that process's address space.

The virtual-memory resources of the process that must be allocated for the child include the process structure and its associated substructures, and the user structure and kernel stack. In addition, the kernel must reserve storage (either memory, filesystem space, or swap space) used to back the process. The general outline of the implementation of a fork is as follows:

- Reserve virtual address space for the child process.

- Allocate a process entry and thread structure for the child process, and fill it in.

- Copy to the child the parent's process group, credentials, file descriptors, limits, and signal actions.

- Allocate a new user structure and kernel stack, copying the current ones to initialize them.

- Allocate a vmspace structure.

- Duplicate the address space, by creating copies of the parent vm_map_entry structures marked copy-on-write.

- Arrange for the child process to return 0, to distinguish its return value from the new PID that is returned to the parent process.
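The last step in the outline is what lets a program tell the two processes apart after the call. A minimal user-level sketch, with a hypothetical helper name, might look like this:

```c
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/*
 * Hypothetical helper: fork a child that exits at once with child_code,
 * then wait for it in the parent.
 * Returns the child's exit status, or -1 on failure.
 */
int fork_and_collect(int child_code)
{
    assert(child_code >= 0 && child_code <= 255);

    pid_t pid = fork();
    if (pid < 0)
        return -1;                 /* fork failed; no child exists */

    if (pid == 0)
        _exit(child_code);         /* child: fork returned 0 */

    /* Parent: fork returned the PID of the new child. */
    int status = 0;
    if (waitpid(pid, &status, 0) != pid || !WIFEXITED(status))
        return -1;
    return WEXITSTATUS(status);
}
```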

The allocation and initialization of the process structure, and the arrangement of the return value, were covered in Chapter 4. The remainder of this section discusses the other steps involved in duplicating a process.

The first resource to be reserved when an address space is duplicated is the required virtual address space. To avoid running out of memory resources, the kernel must ensure that it does not promise to provide more virtual memory than it is able to deliver. The total virtual memory that can be provided by the system is limited to the amount of physical memory available for paging plus the amount of swap space that is provided. A few pages are held in reserve to stage I/O between the swap area and main memory.

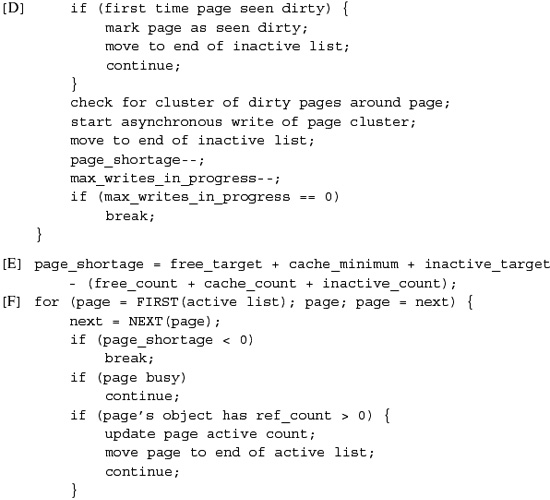

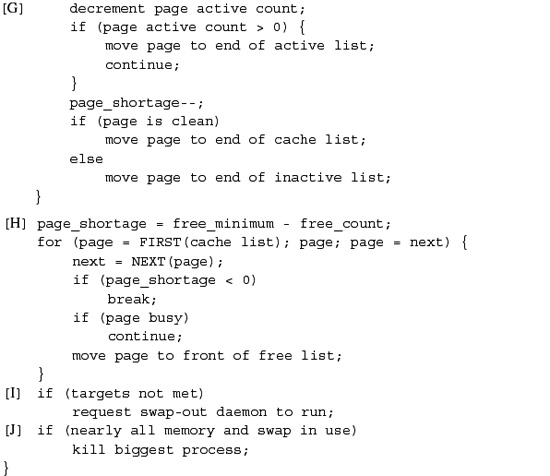

The reason for this restriction is to ensure that processes get synchronous notification of memory limitations. Specifically, a process should get an error back from a system call (such as sbrk, fork, or mmap) if there are insufficient resources to allocate the needed virtual memory. If the kernel promises more virtual memory than it can support, it can deadlock trying to service a page fault. Trouble arises when it has no free pages to service the fault and no available swap space to save an active page. Here, the kernel has no choice but to send a kill signal to the process unfortunate enough to be page faulting. Such asynchronous notification of insufficient memory resources is unacceptable.
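The accounting implied by this restriction can be sketched as a simple budget. The model below is an assumption-laden illustration, not the kernel's bookkeeping: struct vm_budget and its functions are hypothetical names, and all quantities are counted in pages. The point it shows is that a request that would exceed the limit fails at once with ENOMEM, at the time of the system call, instead of being discovered later during a page fault.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Hypothetical model of the system-wide virtual-memory budget. */
struct vm_budget {
    size_t pageable_pages;    /* physical memory available for paging */
    size_t swap_pages;        /* configured swap space                 */
    size_t io_reserve_pages;  /* held back to stage swap I/O           */
    size_t committed_pages;   /* already promised to processes         */
};

/* Total virtual memory, in pages, that may be promised. */
size_t vm_budget_limit(const struct vm_budget *b)
{
    assert(b != NULL);
    size_t total = b->pageable_pages + b->swap_pages;
    return total > b->io_reserve_pages ? total - b->io_reserve_pages : 0;
}

/*
 * Reserve npages for a caller such as sbrk, fork, or mmap.
 * Returns 0 on success or ENOMEM if the promise cannot be kept.
 */
int vm_budget_reserve(struct vm_budget *b, size_t npages)
{
    assert(b != NULL);
    size_t limit = vm_budget_limit(b);

    if (b->committed_pages > limit ||
        npages > limit - b->committed_pages)
        return ENOMEM;             /* synchronous failure */

    b->committed_pages += npages;
    return 0;
}
```

With 1000 pageable pages, 500 pages of swap, and 10 pages held in reserve, the budget allows 1490 pages to be promised; the request that would bring the total to 1491 is refused.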