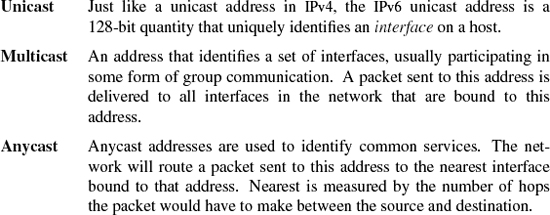

Chapter 12 presented the network-communications architecture of FreeBSD. In this chapter, we examine the network protocols implemented within this framework. The FreeBSD system supports several major communication domains including IPv4, IPv6, Xerox Network Systems (NS), ISO/OSI, and the local domain (formerly known as the UNIX domain). The local domain does not include network protocols because it operates entirely within a single system. The IPv4 protocol suite was the first set of protocols implemented within the network architecture of 4.2BSD. Following the release of 4.2BSD, several proprietary protocol families were implemented by vendors within the network architecture. However, it was not until the addition of the Xerox NS protocols in 4.3BSD that the system's ability to support multiple network-protocol families was visibly demonstrated. Although some parts of the protocol interface were previously unused and thus unimplemented, the changes required to add a second network-protocol family did not substantially modify the network architecture. The implementation of the ISO/OSI networking protocols, as well as other changing requirements, led to a further refinement of the network architecture in 4.4BSD. Two new protocols that were added to the system, IPv6 and IPSec, required several changes because of their need to coexist with IPv4. Those changes, as well as IPv6 and IPSec, are presented at the end of this chapter.

In this chapter, we concentrate on the organization and implementation of the IPv4 protocols. This protocol implementation is the standard on which the current Internet is built because it was publicly available when many vendors were looking for tuned and reliable communication protocols. Throughout this chapter we use Internet and IPv4 interchangeably. Specific mention will be made when talking about the new IPv6 protocols, which are meant to eventually supplant IPv4. After describing the overall architecture of the IPv4 protocols, we shall examine their operation according to the structure defined in Chapter 12. We shall also describe the significant algorithms used by the protocols within IPv4. We then shall discuss changes that the developers made in the system motivated by aspects of the IPv6 protocols and their implementation.

IPv4 was developed under the sponsorship of DARPA, for use on the ARPANET [DARPA, 1983; McQuillan & Walden, 1977]. The protocols are commonly known as TCP/IP, although TCP and IP are only two of the many protocols in the family. These protocols do not assume a reliable subnetwork that ensures delivery of data. Instead, IPv4 was devised for a model in which hosts were connected to networks with varying characteristics and the networks were interconnected by routers. The Internet protocols were designed for packet-switching networks using datagrams sent over links such as Ethernet that provide no indication of delivery.

This model leads to the use of at least two protocol layers. One layer operates end to end between two hosts involved in a conversation. It is based on a lower-level protocol that operates on a hop-by-hop basis, forwarding each message through intermediate routers to the destination host. In general, there exists at least one protocol layer above the other two: It is the application layer. The three layers correspond roughly to levels 3 (network), 4 (transport), and 7 (application) in the ISO Open Systems Interconnection reference model [ISO, 1984].

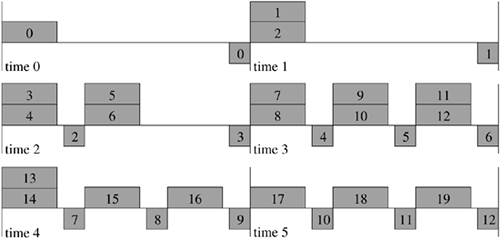

The protocols that support this model have the layering illustrated in Figure 13.1. The Internet Protocol (IP) is the lowest-level protocol in the model; this level corresponds to the ISO network layer. IP operates hop-by-hop as a datagram is sent from the originating host to the destination via any intermediate routers. It provides the network-level services of host addressing, routing, and, if intervening networks cannot send an entire packet in one piece, packet fragmentation and reassembly. All the other protocols use the services of IP. The Transmission Control Protocol (TCP) and User Datagram Protocol (UDP) are transport-level protocols that provide additional facilities to applications that use IP. Each protocol adds a port identifier to IP's host address so that local and remote sockets can be identified. TCP provides connection-oriented, reliable, unduplicated, and flow-controlled transmission of data; it supports the stream socket type in the Internet domain. UDP provides a data checksum for checking integrity in addition to a port identifier, but otherwise adds little to the services provided by IP. UDP is the protocol used by datagram sockets in the Internet domain. The Internet Control Message Protocol (ICMP) is used for error reporting and for other, simple network-management tasks; it is logically a part of IP but, like the transport protocols, is layered above IP. It is usually not accessed by users. Raw access to the IP and ICMP protocols is possible through raw sockets (see Section 12.7 for information on this facility).

Figure 13.1. IPv4 protocol layering. Key: TCP—Transmission Control Protocol; UDP—User Datagram Protocol; IP—Internet Protocol; ICMP—Internet Control Message Protocol.

The Internet protocols were designed to support heterogeneous host systems and architectures that use a wide variety of internal data representations. Even the basic unit of data, the byte, was not the same on all host systems; one common type of host supported variable-sized bytes. The network protocols, however, require a standard representation. This representation is expressed using the octet—an 8-bit byte. We shall use this term as it is used in the protocol specifications to describe network data, although we continue to use the term byte to refer to data or storage within the system. All fields in the Internet protocols that are larger than an octet are expressed in network byte order, with the most significant octet first. The FreeBSD network implementation uses a set of routines or macros to convert 16-bit and 32-bit integer fields between host and network byte order on hosts (such as PC systems) that have a different native ordering.
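The byte-order conversion described above can be illustrated with the standard `htonl()`, `htons()`, `ntohl()`, and `ntohs()` routines. The following is a minimal user-level sketch, not kernel code; the helper names `pack_endpoint` and `unpack_endpoint` and the 6-octet layout are hypothetical and chosen only for illustration.

```c
#include <arpa/inet.h>
#include <stdint.h>
#include <string.h>

/* Store a 32-bit address and a 16-bit port in network byte order. */
void
pack_endpoint(uint8_t buf[6], uint32_t host_addr, uint16_t host_port)
{
    uint32_t net_addr = htonl(host_addr);   /* most significant octet first */
    uint16_t net_port = htons(host_port);

    memcpy(buf, &net_addr, sizeof(net_addr));
    memcpy(buf + 4, &net_port, sizeof(net_port));
}

/* Recover the host-order values from the wire representation. */
void
unpack_endpoint(const uint8_t buf[6], uint32_t *host_addr, uint16_t *host_port)
{
    uint32_t net_addr;
    uint16_t net_port;

    memcpy(&net_addr, buf, sizeof(net_addr));
    memcpy(&net_port, buf + 4, sizeof(net_port));
    *host_addr = ntohl(net_addr);
    *host_port = ntohs(net_port);
}
```

On a host whose native order already matches network order, the conversion routines leave the value unchanged, so the same source works on either kind of machine.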

An IPv4 address is a 32-bit number that identifies the network on which a host resides as well as uniquely identifying a network interface on that host. It follows that a host with network interfaces attached to multiple networks has multiple addresses. Network addresses are assigned in blocks by Regional Internet Registries (RIRs) to Internet Service Providers (ISPs), which then dole out addresses to companies or individual users. If address assignment were not done in this centralized way, conflicting addresses could arise in the network, and it would be impossible to route packets correctly.

Historically IPv4 addresses were rigidly divided into three classes (A, B, and C) to address the needs of large, medium, and small networks [Postel, 1981a]. Three classes proved to be too restrictive and also too wasteful of address space. The current IPv4 addressing scheme is called Classless Inter-Domain Routing (CIDR) [Fuller et al., 1993]. In the CIDR scheme each organization is given a contiguous group of addresses described by a single value and a netmask. For example, an ISP might have a group of addresses defined by an 18-bit netmask. This means that the network is defined by the first 18 bits, and the remaining 14 bits can potentially be used to identify hosts in the network. In practice, the number of hosts is less because the ISP will further break up this space into smaller networks, which will reduce the number of bits that can effectively be used. It is because of this scheme that routing entries store arbitrary netmasks with routes.
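The arithmetic behind the 18-bit example can be sketched as follows. The function names are hypothetical, addresses are held in host byte order, and the prefix length is assumed to lie between 0 and 32.

```c
#include <stdint.h>

/* Build a host-order netmask from a prefix length (assumed 0..32). */
uint32_t
prefix_to_mask(unsigned prefix_len)
{
    if (prefix_len == 0)
        return 0;                       /* avoid an undefined 32-bit shift */
    return 0xFFFFFFFFu << (32 - prefix_len);
}

/* Number of addresses covered by a block with the given prefix length. */
uint64_t
block_size(unsigned prefix_len)
{
    return (uint64_t)1 << (32 - prefix_len);
}
```

For an 18-bit netmask this gives the mask 255.255.192.0 and 2^14 = 16384 addresses, before any further division into smaller networks.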

Each Internet address assigned to a network interface is maintained in an in_ifaddr structure that contains a protocol-independent interface-address structure and additional information for use in the Internet domain (see Figure 13.2). When an interface's network mask is specified, it is recorded in the ia_subnetmask field of the address structure. The network mask, ia_netmask, is still calculated based on the type of the network number (class A, B, or C) when the interface's address is assigned, but this is no longer used to determine whether a destination is on or off the local subnet. The system interprets local Internet addresses using the ia_subnetmask value. An address is considered to be local to the subnet if the field under the subnetwork mask matches the subnetwork field of an interface address.
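The local-subnet test amounts to a masked comparison. The sketch below uses a hypothetical, much-reduced stand-in for the in_ifaddr structure (only an address and a subnet mask, both in host byte order); the real structure carries considerably more state.

```c
#include <stdint.h>

/* Hypothetical reduced interface-address record, for illustration only. */
struct ifaddr_sketch {
    uint32_t addr;          /* interface address */
    uint32_t subnetmask;    /* plays the role of ia_subnetmask */
};

/* Nonzero if dst lies on the same subnet as the interface address. */
int
addr_is_local(const struct ifaddr_sketch *ia, uint32_t dst)
{
    return (dst & ia->subnetmask) == (ia->addr & ia->subnetmask);
}
```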

On networks capable of supporting broadcast datagrams, 4.2BSD used the address with a host part of zero for broadcasts. After 4.2BSD was released, the Internet broadcast address was defined as the address with a host part of all 1s [Mogul, 1984]. This change and the introduction of subnets both complicated the recognition of broadcast addresses. Hosts may use a host part of 0s or 1s to signify broadcast, and some may understand the presence of subnets, whereas others may not. For these reasons, 4.3BSD and later BSD systems set the broadcast address for each interface to be the host value of all 1s but allow the alternate address to be set for backward compatibility. If the network is subnetted, the subnet field of the broadcast address contains the normal subnet number. The logical broadcast address for the network also is calculated when the address is set; this address would be the standard broadcast address if subnets were not in use. This address is needed by the IP input routine to filter input packets. On input, FreeBSD recognizes and accepts subnet and network broadcast addresses with host parts of 0s or 1s, as well as the address with 32 bits of 1 ("broadcast on this physical link").
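The input-side recognition rules described above can be sketched as a single predicate. This is a simplified illustration rather than the kernel's actual test: the function name and parameter list are hypothetical, and all values are in host byte order.

```c
#include <stdint.h>

/*
 * Nonzero if dst is a broadcast address relative to one interface:
 * the all-1s address, or a subnet or network address whose host part
 * is all 1s (current form) or all 0s (old 4.2BSD form).
 */
int
is_broadcast(uint32_t dst, uint32_t ifaddr, uint32_t netmask,
    uint32_t subnetmask)
{
    uint32_t net = ifaddr & netmask;
    uint32_t subnet = ifaddr & subnetmask;

    if (dst == 0xFFFFFFFFu)             /* broadcast on this physical link */
        return 1;
    if (dst == (subnet | ~subnetmask) || dst == subnet)
        return 1;                       /* subnet broadcast, 1s or 0s */
    if (dst == (net | ~netmask) || dst == net)
        return 1;                       /* network broadcast, 1s or 0s */
    return 0;
}
```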

Many link-layer networks, such as Ethernet, provide a multicast capability that can address groups of hosts but is more selective than broadcast because it provides several different multicast group addresses. IP provides a similar facility at the network-protocol level, using link-layer multicast where available [Deering, 1989]. IP multicasts are sent using destination addresses with high-order bits set to 1110. Unlike host addresses, multicast addresses do not contain network and host portions; instead, the entire address names a group, such as a group of hosts using a particular service. These groups can be created dynamically, and the members of the group can change over time. IP multicast addresses map directly to physical multicast addresses on networks such as the Ethernet, using the low 23 bits of the IP address along with a constant 25-bit prefix to form a 48-bit link-layer address.
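A short sketch of the two operations just described: recognizing a multicast destination by its high-order bits and deriving the Ethernet group address. The mapping follows the rule in RFC 1112, which places the low-order 23 bits of the group address under the fixed prefix 01:00:5e; the function names are hypothetical and addresses are in host byte order.

```c
#include <stdint.h>

/* Nonzero if the high-order four bits of addr are 1110. */
int
is_multicast(uint32_t addr)
{
    return (addr & 0xF0000000u) == 0xE0000000u;
}

/* Map an IPv4 group address to its Ethernet multicast address. */
void
multicast_to_ether(uint32_t group, uint8_t mac[6])
{
    mac[0] = 0x01;                          /* constant prefix 01:00:5e */
    mac[1] = 0x00;
    mac[2] = 0x5E;
    mac[3] = (uint8_t)((group >> 16) & 0x7F);   /* top bit stays zero */
    mac[4] = (uint8_t)((group >> 8) & 0xFF);
    mac[5] = (uint8_t)(group & 0xFF);
}
```

Because only part of the group address survives the mapping, several IP groups can share one link-layer address, so the IP layer still has to filter on the full destination address.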

For a socket to use multicast, it must join a multicast group using the setsockopt system call. This call informs the link layer that it should receive multicasts for the corresponding link-layer address, and it also sends a multicast membership report using the Internet Group Management Protocol [Cain et al., 2002]. Multicast agents on the network can thus keep track of the members of each group. Multicast agents receive all multicast packets from directly attached networks and forward multicast datagrams as needed to group members on other networks. This function is similar to the role of routers that forward normal (unicast) packets, but the criteria for packet forwarding are different, and a packet can be forwarded to multiple neighboring networks.

At the IP level, packets are addressed to a host rather than to a process or communications port. However, each packet contains an 8-bit protocol number that identifies the next protocol that should receive the packet. Internet transport protocols use an additional identifier to designate the connection or communications port on the host. Most protocols (including TCP and UDP) use a 16-bit port number for this purpose. Each transport protocol maintains its own mapping of port numbers to processes or descriptors. Thus, an association, such as a connection, is fully specified by the tuple <source address, destination address, protocol number, source port, destination port>. Connection-oriented protocols, such as TCP, must enforce the uniqueness of associations; other protocols generally do so as well. When the local part of the address is set before the remote part, it is necessary to choose a unique port number to prevent collisions when the remote part is specified.
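The association tuple and the uniqueness check can be written down directly. The structure and function names below are hypothetical and exist only to make the five-part tuple concrete; the kernel keeps this information in its protocol control blocks rather than in a flat table.

```c
#include <stdint.h>

/* The five-part association described in the text. */
struct association {
    uint32_t src_addr;
    uint32_t dst_addr;
    uint8_t  proto;         /* 8-bit IP protocol number */
    uint16_t src_port;
    uint16_t dst_port;
};

/* Nonzero if both associations name the same tuple. */
int
assoc_equal(const struct association *a, const struct association *b)
{
    return a->src_addr == b->src_addr && a->dst_addr == b->dst_addr &&
        a->proto == b->proto &&
        a->src_port == b->src_port && a->dst_port == b->dst_port;
}

/* Nonzero if cand would collide with an existing association. */
int
assoc_in_use(const struct association *tab, int n,
    const struct association *cand)
{
    int i;

    for (i = 0; i < n; i++)
        if (assoc_equal(&tab[i], cand))
            return 1;
    return 0;
}
```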

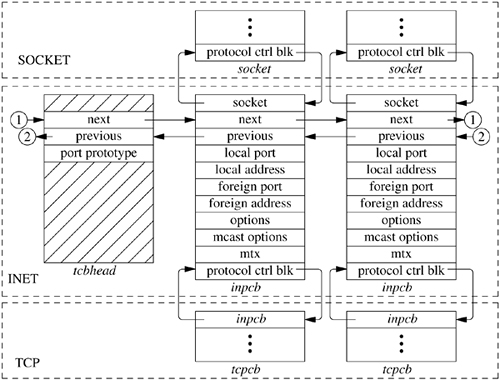

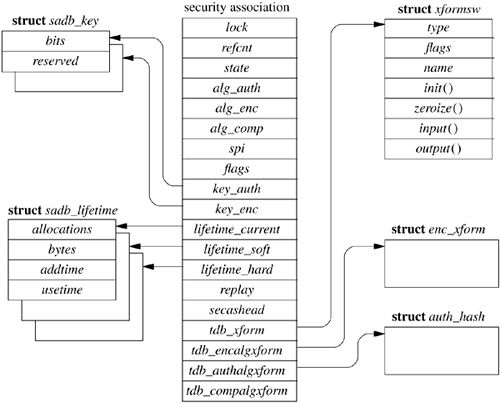

For each TCP- or UDP-based socket, an Internet protocol control block (an inpcb structure) is created to hold Internet network addresses, port numbers, routing information, and pointers to any auxiliary data structures. TCP, in addition, creates a TCP control block (a tcpcb structure) to hold the wealth of protocol state information necessary for its implementation. Internet control blocks for use with TCP are maintained on a doubly linked list private to the TCP protocol module. Internet control blocks for use with UDP are kept on a similar list private to the UDP protocol module. Two separate lists are needed because each protocol in the Internet domain has a distinct space of port identifiers. Common routines are used by the individual protocols to add new control blocks to a list, fix the local and remote parts of an association, locate a control block by association, and delete control blocks. IP demultiplexes message traffic based on the protocol identifier specified in its protocol header, and each higher-level protocol is then responsible for checking its list of Internet control blocks to direct a message to the appropriate socket. Figure 13.3 shows the linkage between the socket data structure and these protocol-specific data structures.

The implementation of the Internet protocols is tightly coupled, as befits the strong intertwining of the protocols. For example, the transport protocols send and receive packets including not only their own header, but also an IP pseudo-header containing the source and destination address, the protocol identifier, and a packet length. This pseudo-header is included in the transport-level packet checksum.

We are now ready to examine the operation of the Internet protocols. We begin with UDP, because it is far simpler than TCP.

The User Datagram Protocol (UDP) [Postel, 1980] is a simple, unreliable datagram protocol that provides only peer-to-peer addressing and optional data checksums. In FreeBSD, checksums are enabled or disabled on a systemwide basis and cannot be enabled or disabled on individual sockets. UDP protocol headers are extremely simple, containing only the source and destination port numbers, the datagram length, and the data checksum. The host addresses for a datagram are provided by the IP pseudo-header.
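To make the role of the pseudo-header concrete, the following sketch computes a UDP checksum in user space with the standard Internet one's-complement sum. It is an illustration under stated assumptions, not the kernel's optimized routine: the function names are hypothetical, addresses are in host byte order, and `udp` points at the UDP header (with its checksum field set to zero) followed by the data.

```c
#include <stddef.h>
#include <stdint.h>

/* Add len octets, taken as big-endian 16-bit words, to a running sum. */
uint32_t
cksum_add(uint32_t sum, const uint8_t *p, size_t len)
{
    while (len > 1) {
        sum += ((uint32_t)p[0] << 8) | p[1];
        p += 2;
        len -= 2;
    }
    if (len == 1)
        sum += (uint32_t)p[0] << 8;     /* pad a trailing odd octet */
    return sum;
}

/* Fold the carries back in and take the one's complement. */
uint16_t
cksum_finish(uint32_t sum)
{
    while (sum >> 16)
        sum = (sum & 0xFFFF) + (sum >> 16);
    return (uint16_t)~sum;
}

/* Checksum over pseudo-header, UDP header, and data. */
uint16_t
udp_checksum(uint32_t src, uint32_t dst, const uint8_t *udp,
    uint16_t udp_len)
{
    uint8_t pseudo[12] = {
        (uint8_t)(src >> 24), (uint8_t)(src >> 16),
        (uint8_t)(src >> 8), (uint8_t)src,
        (uint8_t)(dst >> 24), (uint8_t)(dst >> 16),
        (uint8_t)(dst >> 8), (uint8_t)dst,
        0, 17,                          /* zero octet, protocol 17 (UDP) */
        (uint8_t)(udp_len >> 8), (uint8_t)udp_len
    };
    uint16_t c;

    c = cksum_finish(cksum_add(cksum_add(0, pseudo, sizeof(pseudo)),
        udp, udp_len));
    return c == 0 ? 0xFFFF : c;         /* zero means "no checksum" in UDP */
}
```

Because the addresses are covered by the sum, a datagram delivered to the wrong host fails the check even though the UDP header itself carries no addresses.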

When a new datagram socket is created in the Internet domain, the socket layer locates the protocol-switch entry for UDP and calls the udp_attach() routine with the socket as a parameter. UDP uses in_pcballoc() to create a new protocol control block on its list of current sockets. It also sets the default limits for the socket send and receive buffers. Although datagrams are never placed in the send buffer, the limit is set as an upper limit on datagram size; the UDP protocol-switch entry contains the flag PR_ATOMIC, requiring that all data in a send operation be presented to the protocol at one time.

If the application program wishes to bind a port number—for example, the well-known port for some datagram service—it calls the bind system call. This request reaches UDP as a call to the udp_bind() routine. The binding may also specify a specific host address, which must be an address of an interface on this host. Otherwise, the address will be left unspecified, matching any local address on input, and with an address chosen as appropriate on each output operation. The binding is done by in_pcbbind(), which verifies that the chosen port number (or address and port) is not in use and then records the local part of the association.

To send datagrams, the system must know the remote part of an association. A program can specify this address and port with each send operation using sendto or sendmsg, or it can do the specification ahead of time with the connect system call. In either case, UDP uses the in_pcbconnect() function to record the destination address and port. If the local address was not bound, and if a route for the destination is found, the address of the outgoing interface is used as the local address. If no local port number was bound, one is chosen at this time.

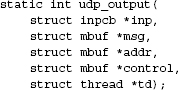

A system call that sends data reaches UDP as a call to the udp_send() routine that takes a chain of mbufs containing the data for the datagram. If the call provided a destination address, the address is passed as well; otherwise, the address from a prior connect call is used. The actual output operation is done by udp_output(),

where inp is an IPv4 protocol control block, msg is a chain of mbufs that contain the data to be sent, addr is an optional mbuf containing the destination address, and td is a pointer to a thread structure. Thread structures were discussed in Section 4.2 and are used within the network stack to identify the sender of a packet, which is why they are only used with output routines. Any ancillary data in control are discarded. The destination address could have been prespecified with a connect call; otherwise, it must be provided in the send call. UDP simply prepends its own header, fills in the UDP header fields and those of a prototype IP header, and calculates a checksum before passing the packet on to the IP module for output:

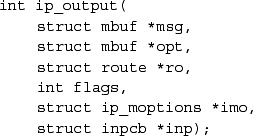

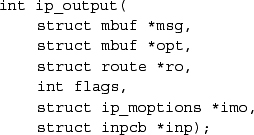

The call to IP's output routine is more complicated than UDP's because the IP routine needs to have more information specified about the endpoint to which it is communicating. The msg parameter indicates the message to be sent, and the opt parameter may specify a list of IP options that should be placed in the IP packet header. For multicast destinations, the imo parameter may reference multicast options, such as the choice of interface and hop count for multicast packets. IP options may be set for a socket with the setsockopt system call specifying the IP protocol level and option IP_OPTIONS. These options are stored in a separate mbuf, and a pointer to this mbuf is stored in the protocol control block for a socket. The pointer to the options is passed to ip_output() with each packet sent. The ro parameter is optional and is passed as NULL by the udp_output() routine so that IP will determine a route for the packet. The flags parameter indicates whether the user is allowed to transmit a broadcast message and whether routing is to be bypassed for the message being sent (see Section 13.3). The broadcast flag may be inconsequential if the underlying hardware does not support broadcast transmissions. The flags also indicate whether the packet includes an IP pseudo-header or a completely initialized IP header, as when IP forwards packets.

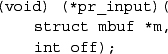

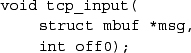

All Internet transport protocols that are layered directly on top of IP use the following calling convention when receiving packets from IP:

Each mbuf chain passed is a single packet to be processed by the protocol module. The packet includes the IP header in lieu of a pseudo-header, and the IP header length is passed as an offset: the second parameter. The UDP input routine udp_input() is typical of protocol input routines. It first verifies that the length of the packet is at least as long as the IP plus UDP headers, and it uses m_pullup() to make the header contiguous. The udp_input() routine then checks that the packet is the correct length and calculates a checksum for the data in the packet. If any of these tests fail, the packet is discarded, and error counts are increased. Any multicasting issues are handled next. Finally, the protocol control block for the socket that is to receive the data is located by in_pcblookup() using the addresses and port numbers in the packet. There might be multiple control blocks with the same local port number but different local or remote addresses; if so, the control block with the best match is selected. An exact association matches best, but if none exists, a socket with the correct local port number but unspecified local address, remote port number, or remote address will match. A control block with unspecified local or remote addresses thus acts as a wildcard that receives packets for its port if no exact match is found. If a control block is located, the data and the address from which the packet was received are placed in the receive buffer of the indicated socket with udp_append(). If the destination address is a multicast address, copies of the packet are delivered to each socket with matching addresses. Otherwise, if no receiver is found and if the packet was not addressed to a broadcast or multicast address, an ICMP port unreachable error message is sent to the originator of the datagram. 
This error message normally has no effect, as the sender typically connects to this destination only temporarily, and destroys the association before new input is processed. However, if the sender still has a fully specified association, it may receive notification of the error.
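The best-match rule used when locating a control block can be sketched as a search that counts wildcard fields and prefers the entry with the fewest. This is a simplified linear scan over a hypothetical reduced structure, with zero standing for "unspecified"; it is meant to show the matching rule, not how the kernel organizes or searches its control blocks.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical reduced control block; zero means "unspecified". */
struct pcb_sketch {
    uint32_t laddr;
    uint16_t lport;
    uint32_t faddr;
    uint16_t fport;
};

/* Return the best-matching control block for a packet, or NULL. */
const struct pcb_sketch *
pcb_lookup(const struct pcb_sketch *tab, size_t n,
    uint32_t faddr, uint16_t fport, uint32_t laddr, uint16_t lport)
{
    const struct pcb_sketch *best = NULL;
    int best_wild = 3;                  /* more than any entry can score */
    size_t i;

    for (i = 0; i < n; i++) {
        const struct pcb_sketch *p = &tab[i];
        int wild = 0;

        if (p->lport != lport)
            continue;                   /* local port must always match */
        if (p->laddr == 0)
            wild++;
        else if (p->laddr != laddr)
            continue;
        if (p->faddr == 0)
            wild++;
        else if (p->faddr != faddr || p->fport != fport)
            continue;
        if (wild < best_wild) {
            best = p;
            best_wild = wild;
            if (wild == 0)
                break;                  /* exact association: stop early */
        }
    }
    return best;
}
```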

UDP supports no control operations and passes calls to its pr_ctloutput() entry directly to IP. It has a simple pr_ctlinput() routine that receives notification of any asynchronous errors. Errors are passed to any datagram socket with the indicated destination; only sockets with a destination fixed by a connect call may be notified of errors asynchronously. Such errors are simply noted in the appropriate socket, and socket wakeups are issued if the process is selecting or sleeping while waiting for input.

When a UDP datagram socket is closed, the udp_detach() routine is called. The protocol control block and its contents are simply deleted with in_pcbdetach(); no other processing is required.

Having examined the operation of a simple transport protocol, we continue with a discussion of the network-layer protocol [Postel, 1981a; Postel et al., 1981]. The Internet Protocol (IP) is the level responsible for host-to-host addressing and routing, packet forwarding, and packet fragmentation and reassembly. Unlike the transport protocols, it does not always operate for a socket on the local host; it may forward packets, receive packets for which there is no local socket, or generate error packets in response to these situations.

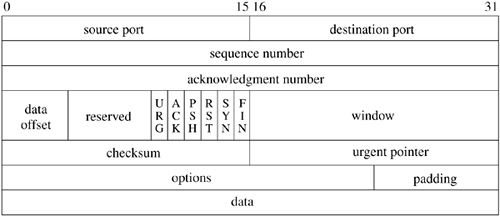

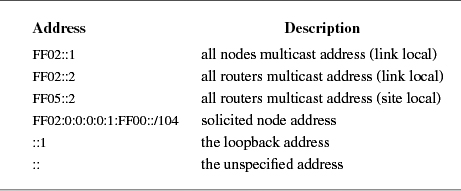

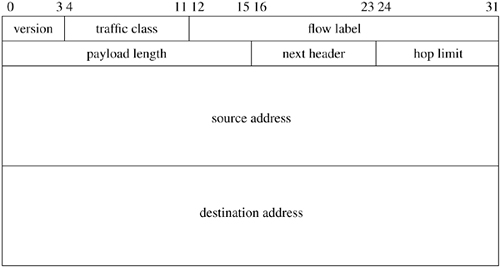

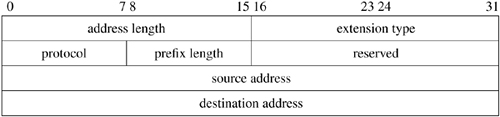

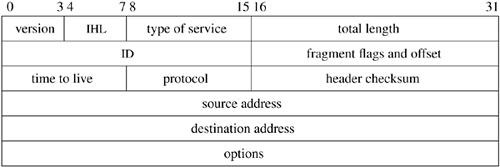

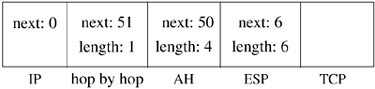

The functions done by IP are illustrated by the contents of its packet header, shown in Figure 13.4. The header identifies source and destination hosts and the destination protocol, and it contains header and packet lengths. The identification and fragment fields are used when a packet or fragment must be broken into smaller sections for transmission on its next hop and to reassemble the fragments when they arrive at the destination. The fragmentation flags are Don't Fragment and More Fragments; the latter flag plus the offset are enough information to assemble the fragments of the original packet at the destination.

Figure 13.4. IPv4 header. IHL is the Internet header length specified in units of four octets. Options are delimited by IHL. All field lengths are given in bits.

IP options are present in an IP packet if the header length field has a value larger than the minimum, which is 20 bytes. The no-operation option and the end-of-option-list option are each one octet in length. All other options are self-encoding, with a type and length preceding any additional data. Hosts and routers are thus able to skip over options that they do not implement. Examples of existing options are the timestamp and record-route options, which are updated by each router that forwards a packet, and the source-route options, which supply a complete or partial route to the destination.

In practice, these options are rarely used, and most network operators silently drop packets that carry the source-route option because it makes traffic on the network difficult to manage.
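The self-encoding layout is what allows a host to step over options it does not implement. The sketch below walks an option area and counts the options it finds; the function name is hypothetical, and `opts` is assumed to point just past the fixed 20-octet header, with `len` equal to the header length minus 20.

```c
#include <stddef.h>
#include <stdint.h>

#define OPT_EOL 0       /* end of option list, one octet */
#define OPT_NOP 1       /* no operation, one octet */

/* Count the options in an option area; returns -1 if it is malformed. */
int
count_ip_options(const uint8_t *opts, size_t len)
{
    size_t i = 0;
    int n = 0;

    while (i < len) {
        uint8_t type = opts[i];
        uint8_t optlen;

        if (type == OPT_EOL)
            break;
        if (type == OPT_NOP) {
            i++;
            continue;
        }
        if (i + 1 >= len)
            return -1;                  /* no room for the length octet */
        optlen = opts[i + 1];           /* length covers type and length */
        if (optlen < 2 || i + optlen > len)
            return -1;
        n++;
        i += optlen;                    /* skip without interpreting */
    }
    return n;
}
```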

We have already seen the calling convention for the IP output routine, which is

As described in the subsection on output in Section 13.2, the parameter msg is an mbuf chain containing the packet to be sent, including a skeletal IP header; opt is an optional mbuf containing IP options to be inserted after the header. If the route ro is given, it may contain a reference to a routing entry (rtentry structure), which specifies a route to the destination from a previous call, and in which any new route will be left for future use. Since cached routes were removed from the inpcb structure in FreeBSD 5.2, this cached route is seldom used. The flags may allow the use of broadcast or may indicate that the routing tables should be bypassed. If present, imo includes options for multicast transmissions. The protocol control block, inp, is used by the IPSec subsystem (see Section 13.10) to hold data about security associations for the packet.

The outline of the work done by ip_output() is as follows:

- Insert any IP options.

- Fill in the remaining header fields (IP version, zero offset, header length, and a new packet identification) if the packet contains an IP pseudo-header.

- Determine the route (i.e., outgoing interface and next-hop destination).

- Check whether the destination is a multicast address. If it is, determine the outgoing interface and hop count.

- Check whether the destination is a broadcast address; if it is, check whether broadcast is permitted.

- Do any IPSec manipulations that are necessary on the packet such as encryption.

- See if there are any filtering rules that would modify the packet or prevent us from sending it.

- If the packet size is no larger than the maximum packet size for the outgoing interface, compute the checksum and call the interface output routine.

- If the packet size is larger than the maximum packet size for the outgoing interface, break the packet into fragments and send each in turn.
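The last two steps in the outline turn on simple arithmetic: every fragment except the last must carry a multiple of eight octets of data, because the fragment offset field counts in 8-octet units. The following sketch plans the fragments for a packet; the structure and function names are hypothetical, and it assumes that every fragment carries a header of the same length, which ignores the handling of options that are not copied into later fragments.

```c
#include <stddef.h>
#include <stdint.h>

/* One planned fragment: offset in 8-octet units, data length, MF flag. */
struct frag {
    uint16_t off8;
    uint16_t len;
    int      more;
};

/* Fill out[] with the fragment plan; returns the count, or -1 on error. */
int
plan_fragments(size_t hlen, size_t payload, size_t mtu,
    struct frag *out, int max)
{
    size_t chunk, off = 0;
    int n = 0;

    if (mtu < hlen + 8)
        return -1;                      /* cannot carry even 8 octets */
    chunk = (mtu - hlen) & ~(size_t)7;  /* round down to multiple of 8 */
    while (off < payload) {
        size_t len = payload - off;

        if (len > chunk)
            len = chunk;
        if (n == max)
            return -1;                  /* caller's array is too small */
        out[n].off8 = (uint16_t)(off / 8);
        out[n].len = (uint16_t)len;
        out[n].more = (off + len < payload);    /* More Fragments */
        n++;
        off += len;
    }
    return n;
}
```

For a 20-octet header, 3980 octets of data, and a 1500-octet maximum packet size, the plan is three fragments of 1480, 1480, and 1020 data octets at offsets 0, 185, and 370.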

We shall examine the routing step in more detail. First, if no route reference is passed as a parameter, an internal routing reference structure is used temporarily. A route structure that is passed from the caller is checked to see that it is a route to the same destination and that it is still valid. If either test fails, the old route is freed. After these checks, if there is no route, rtalloc_ign() is called to allocate a route. The route returned includes a pointer to the outgoing interface. The interface information includes the maximum packet size, flags including broadcast and multicast capability, and the output routine. If the route is marked with the RTF_GATEWAY flag, the address of the next-hop router is given by the route; otherwise, the packet's destination is the next-hop destination. If routing is to be bypassed because of a MSG_DONTROUTE option (see Section 11.1) or a SO_DONTROUTE option, a directly attached network shared with the destination is sought; if there is no directly attached network, an error is returned. Once the outgoing interface and next-hop destination are found, enough information is available to send the packet.

As described in Chapter 12, the interface output routine normally validates the destination address and places the packet on its output queue, returning errors only if the interface is down, the output queue is full, or the destination address is not understood.

In Chapter 12, we described the reception of a packet by a network interface and the packet's placement on the input queue for the appropriate protocol. The network-interface handler then schedules the protocol to run by setting a corresponding bit in the network status word and scheduling the network thread. The IPv4 input routine is invoked via this software interrupt when network interfaces receive messages for the IPv4 protocol. The input routine, ip_input(), is called with an mbuf that contains the packet it is to process. The dequeueing of packets and the calls into the input routine are handled by the network thread calling netisr_dispatch(). A packet is processed in one of four ways: it is passed as input to a higher-level protocol, it encounters an error that is reported back to the source, it is dropped because of an error, or it is forwarded to the next hop on its path to its destination. In outline form, the steps in the processing of a packet on input are as follows:

- Verify that the packet is at least as long as an IPv4 header and ensure that the header is contiguous.

- Checksum the header of the packet and discard the packet if there is an error.

- Verify that the packet is at least as long as the header indicates and drop the packet if it is not. Trim any padding from the end of the packet.

- Do any filtering or security functions required by ipfw or IPSec.

- Process any options in the header.

- Check whether the packet is for this host. If it is, continue processing the packet. If it is not, and if acting as a router, try to forward the packet. Otherwise, drop the packet.

- If the packet has been fragmented, keep it until all its fragments are received and reassembled, or until it is too old to keep.

- Pass the packet to the input routine of the next-higher-level protocol.
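The first three steps of this outline are plain sanity checks on the header, which can be sketched over a flat buffer as follows. The function name is hypothetical, and the sketch works on a contiguous array of octets rather than an mbuf chain, so the step that makes the header contiguous does not appear.

```c
#include <stddef.h>
#include <stdint.h>

/* Returns the header length in octets if the header is sane, else -1. */
int
ip_header_check(const uint8_t *pkt, size_t len)
{
    size_t hlen, total, i;
    uint32_t sum = 0;

    if (len < 20)
        return -1;                      /* shorter than a minimal header */
    if ((pkt[0] >> 4) != 4)
        return -1;                      /* not IPv4 */
    hlen = (size_t)(pkt[0] & 0x0F) * 4; /* IHL is in units of four octets */
    if (hlen < 20 || hlen > len)
        return -1;
    for (i = 0; i < hlen; i += 2)
        sum += ((uint32_t)pkt[i] << 8) | pkt[i + 1];
    while (sum >> 16)
        sum = (sum & 0xFFFF) + (sum >> 16);
    if (sum != 0xFFFF)
        return -1;                      /* header checksum failed */
    total = ((size_t)pkt[2] << 8) | pkt[3];
    if (total < hlen || total > len)
        return -1;                      /* packet shorter than it claims */
    return (int)hlen;
}
```

When the received buffer is longer than the total length in the header, the excess is link-layer padding and can be trimmed before the packet is passed on.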

When the incoming packet is passed into the input routine, it is accompanied by a pointer to the interface on which the packet was received. This information is passed to the next protocol, to the forwarding function, or to the error-reporting function. If any error is detected and is reported to the packet's originator, the source address of the error message will be set according to the packet's destination and the incoming interface.

The decision whether to accept a received packet for local processing by a higher-level protocol is not as simple as one might think. If a host has multiple addresses, the packet is accepted if its destination matches any one of those addresses. If any of the attached networks support broadcast and the destination is a broadcast address, the packet is also accepted.

The IPv4 input routine uses a simple and efficient scheme for locating the input routine for the receiving protocol of an incoming packet. The protocol field in the packet is 8 bits long; thus, there are 256 possible protocols. Fewer than 256 protocols are defined or implemented, and the Internet protocol switch has far fewer than 256 entries. Therefore, ip_input() uses a 256-element mapping array to map from the protocol number to the protocol-switch entry of the receiving protocol. Each entry in the array is initially set to the index of a raw IP entry in the protocol switch. Then, for each protocol with a separate implementation in the system, the corresponding map entry is set to the index of the protocol in the IP protocol switch. When a packet is received, IP simply uses the protocol field to index into the mapping array and calls the input routine of the appropriate protocol.
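The mapping-array scheme is easy to show in miniature. In the sketch below every name is hypothetical: the four-entry switch table, the `protox_sketch` array, and the stub input routines (which simply return a number identifying themselves) stand in for the kernel's protocol switch and its real input routines.

```c
#include <stddef.h>
#include <stdint.h>

typedef int (*input_fn)(const uint8_t *pkt, size_t len);

/* Stub input routines; each returns a value identifying itself. */
int raw_input_sketch(const uint8_t *p, size_t n)  { (void)p; (void)n; return 255; }
int icmp_input_sketch(const uint8_t *p, size_t n) { (void)p; (void)n; return 1; }
int tcp_input_sketch(const uint8_t *p, size_t n)  { (void)p; (void)n; return 6; }
int udp_input_sketch(const uint8_t *p, size_t n)  { (void)p; (void)n; return 17; }

struct protosw_sketch {
    uint8_t  proto;
    input_fn input;
};

/* A tiny protocol switch; entry 0 is the raw IP entry. */
const struct protosw_sketch inetsw_sketch[] = {
    { 255, raw_input_sketch },
    { 1,   icmp_input_sketch },
    { 6,   tcp_input_sketch },
    { 17,  udp_input_sketch },
};
#define NPROTO (sizeof(inetsw_sketch) / sizeof(inetsw_sketch[0]))

uint8_t protox_sketch[256];     /* protocol number -> switch index */

void
protox_init(void)
{
    size_t i;

    for (i = 0; i < 256; i++)
        protox_sketch[i] = 0;   /* default: the raw IP entry */
    for (i = 1; i < NPROTO; i++)
        protox_sketch[inetsw_sketch[i].proto] = (uint8_t)i;
}

/* Dispatch a packet to the input routine for its protocol field. */
int
ip_demux(uint8_t proto, const uint8_t *pkt, size_t len)
{
    return inetsw_sketch[protox_sketch[proto]].input(pkt, len);
}
```

The lookup costs one array index per packet regardless of how many protocols are configured, and unknown protocol numbers fall through to the raw entry without any special case.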

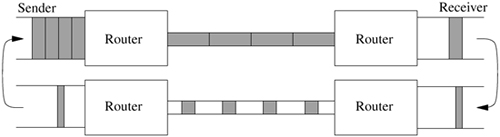

Implementations of IPv4 traditionally have been designed for use by either hosts or routers, rather than by both. That is, a system was either an endpoint for packets (as source or destination) or a router (which forwards packets between hosts on different networks but only uses upper-level protocols for maintenance functions). Traditional host systems do not incorporate packet-forwarding functions; instead, if they receive packets not addressed to them, they simply drop the packets. 4.2BSD was the first common implementation that attempted to provide both host and router services in normal operation. This approach had advantages and disadvantages. It meant that 4.2BSD hosts connected to multiple networks could serve as routers as well as hosts, reducing the requirement for dedicated router hardware. Early routers were expensive and not especially powerful. Alternatively, the existence of router-function support in ordinary hosts made it more likely for misconfiguration errors to result in problems on the attached networks. The most serious problem had to do with forwarding of a broadcast packet because of a misunderstanding by either the sender or the receiver of the packet's destination. The packet-forwarding router functions are disabled by default in FreeBSD. They may be enabled at run time with the sysctl call. Hosts not configured as routers never attempt to forward packets or to return error messages in response to misdirected packets. As a result, far fewer misconfiguration problems are capable of causing synchronized or repetitive broadcasts on a local network, called broadcast storms.

The procedure for forwarding IP packets received at a router but destined for another host is the following:

- Check that forwarding is enabled. If it is not, drop the packet.

- Check that the destination address is one that allows forwarding. Packets destined for network 0, network 127 (the official loopback network), or illegal network addresses cannot be forwarded.

- Save at most 64 octets of the received message in case an error message must be generated in response.

- Determine the route to be used in forwarding the packet.

- If the outgoing route uses the same interface as that on which the packet was received, and if the originating host is on that network, send an ICMP redirect message to the originating host. (ICMP is described in Section 13.8.)

- Handle any IPSec updates that must be made to the packet header.

- Call ip_output() to send the packet to its destination or to the next-hop gateway.

- If an error is detected, send an ICMP error message to the source host.
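The early checks in this procedure can be sketched as follows. The sketch is illustrative only: the function names, the `fwd_action` enumeration, and the interface-index parameters are hypothetical, addresses are assumed to be in host byte order, and the reserved range starting at network 240 stands in for "illegal network addresses" as an assumption. Route lookup, IPSec processing, and the actual call to ip_output() are left out.

```c
#include <stddef.h>
#include <stdint.h>

#define FWD_SAVE_MAX 64          /* octets kept for a possible ICMP error */

enum fwd_action { FWD_DROP, FWD_SEND, FWD_SEND_AND_REDIRECT };

/* Does the destination address (host byte order) permit forwarding? */
static int
dst_allows_forwarding(uint32_t dst)
{
    uint32_t net = dst >> 24;    /* leading octet of the address */

    if (net == 0 || net == 127)  /* network 0 and the loopback network */
        return 0;
    if (net >= 240)              /* assumption: reserved range is "illegal" */
        return 0;
    return 1;
}

/* How much of the received message to save for error reporting. */
static size_t
fwd_save_len(size_t pktlen)
{
    return pktlen < FWD_SAVE_MAX ? pktlen : FWD_SAVE_MAX;
}

/* Decide what to do with a packet that is not addressed to this system. */
static enum fwd_action
forward_decision(int forwarding_enabled, uint32_t dst,
    int in_ifindex, int out_ifindex, int src_on_out_net)
{
    if (!forwarding_enabled)
        return FWD_DROP;
    if (!dst_allows_forwarding(dst))
        return FWD_DROP;
    /* Same interface in and out, and the sender is on that network. */
    if (in_ifindex == out_ifindex && src_on_out_net)
        return FWD_SEND_AND_REDIRECT;
    return FWD_SEND;
}
```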

Multicast transmissions are handled separately from other packets. Systems may be configured as multicast routers independently from other routing functions. Multicast routers receive all incoming multicast packets, and forward those packets to local receivers and group members on other networks according to group memberships and the remaining hop count of incoming packets.

The most used protocol of the Internet protocol suite is the Transmission Control Protocol (TCP) [Cerf & Kahn, 1974; Postel, 1981b]. TCP is the reliable connection-oriented stream transport protocol on top of which most application protocols are layered. It includes several features not found in the other transport and network protocols described so far:

• Explicit and acknowledged connection initiation and termination

• Reliable, in-order, unduplicated delivery of data

• Flow control

• Out-of-band indication of urgent data

• Congestion avoidance

Because of these features, the TCP implementation is much more complicated than are those of UDP and IP. These complications, along with the prevalence of the use of TCP, make the details of TCP's implementation both more critical and more interesting than are the implementations of the simpler protocols. Figure 13.5 shows the flow of data through a TCP connection. We shall begin with an examination of the TCP itself and then continue with a description of its implementation in FreeBSD.

Figure 13.5. Data flow through a TCP/IP connection over an Ethernet. Key: ETHER—Ethernet header; PIP—pseudo-IP header; IP—IP header; TCP—TCP header; IF—interface.

A TCP connection may be viewed as a bidirectional, sequenced stream of data transferred between two peers. The data may be sent in packets of varying sizes and at varying intervals, for example, when they are used to support a login session over the network. The stream initiation and termination are explicit events at the start and end of the stream, and they occupy positions in the sequence space of the stream so that they can be acknowledged in the same way as data are. Sequence numbers are 32-bit numbers from a circular space; that is, comparisons are made modulo $2^{32}$, so zero is the next sequence number after $2^{32} - 1$. The sequence numbers for each direction start with an arbitrary value, called the initial sequence number, sent in the initial packet for a connection. Following Bellovin [1996], the TCP implementation selects the initial sequence number by computing a function over the 4-tuple (local port, foreign port, local address, foreign address) that uniquely identifies the connection, and then adding a small offset based on the current time. This algorithm prevents the spoofing of TCP connections by an attacker guessing the next initial sequence number for a connection. This must be done while also guaranteeing that an old duplicate packet will not match the sequence space of a current connection.
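Comparisons in a circular 32-bit space are commonly written by subtracting the two values and examining the sign of the result. The helpers below are a minimal sketch of that idea; the type name `tcp_seq_t` and the function names are chosen here for illustration. The conversion of an out-of-range unsigned value to `int32_t` is implementation-defined in C, but it behaves as two's-complement wraparound on the platforms in common use.

```c
#include <stdint.h>

typedef uint32_t tcp_seq_t;      /* hypothetical name for a sequence number */

/*
 * a precedes b if the wrapped difference (a - b), viewed as a signed
 * 32-bit value, is negative. The result is meaningful only when the two
 * values are less than half the sequence space apart.
 */
static int seq_lt(tcp_seq_t a, tcp_seq_t b)  { return (int32_t)(a - b) <  0; }
static int seq_leq(tcp_seq_t a, tcp_seq_t b) { return (int32_t)(a - b) <= 0; }
static int seq_gt(tcp_seq_t a, tcp_seq_t b)  { return (int32_t)(a - b) >  0; }
static int seq_geq(tcp_seq_t a, tcp_seq_t b) { return (int32_t)(a - b) >= 0; }

/* Advance a sequence number by len octets; unsigned overflow wraps. */
static tcp_seq_t
seq_advance(tcp_seq_t seq, uint32_t len)
{
    return seq + len;
}
```

With these helpers, the largest 32-bit value compares as less than zero, because zero is its successor in the circular space.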

Each packet of a TCP connection carries the sequence number of its first datum and (except during connection establishment) an acknowledgment of all contiguous data received. A TCP packet is known as a segment because it begins at a specific location in the sequence space and has a specific length. Acknowledgments are specified as the sequence number of the next octet not yet received. Acknowledgments are cumulative and thus may acknowledge data received in more than one (or part of one) packet. A packet may or may not contain data, but it always contains the sequence number of the next datum to be sent.

Flow control in TCP is done with a sliding-window scheme. Each packet with an acknowledgment contains a window, which is the number of octets of data that the receiver is prepared to accept, beginning with the sequence number in the acknowledgment. The window is a 16-bit field, limiting the window to 65535 octets by default; however, the use of a larger window may be negotiated. Urgent data are handled similarly; if the flag indicating urgent data is set, the urgent-data pointer is used as a positive offset from the sequence number of the packet to indicate the extent of urgent data. Thus, TCP can send notification of urgent data without sending all intervening data, even if the flow-control window would not allow the intervening data to be sent.

The complete header for a TCP packet is shown in Figure 13.6. The flags include SYN and FIN, denoting the initiation (synchronization) and completion of a connection. Each of these flags occupies a sequence space of one. A complete connection thus consists of a SYN, zero or more octets of data, and a FIN sent from each peer and acknowledged by the other peer. Additional flags indicate whether the acknowledgment field (ACK) and urgent fields (URG) are valid, and include a connection-abort signal (RST). Options are encoded in the same way as are IP options: The no-operation and end-of-options options are single octets, and all other options include a type and a length. The only option in the initial specification of TCP indicates the maximum segment (packet) size that a correspondent is willing to accept; this option is used only during initial connection establishment. Several other options have been defined. To avoid confusion, the protocol standard allows these options to be used in data packets only if both end-points include them during establishment of the connection.

The connection-establishment and connection-completion mechanisms of TCP are designed for robustness. They serve to frame the data that are transferred during a connection so that not only the data but also their extent are communicated reliably. In addition, the procedure is designed to discover old connections that have not terminated correctly because of a crash of one peer or loss of network connectivity. If such a half-open connection is discovered, it is aborted. Hosts choose new initial sequence numbers for each connection to lessen the chances that an old packet may be confused with a current connection.

The normal connection-establishment procedure is known as a three-way handshake. Each peer sends a SYN to the other, and each in turn acknowledges the other's SYN with an ACK. In practice, a connection is normally initiated by a client attempting to connect to a server listening on a well-known port. The client chooses a port number and initial sequence number and uses these selections in the initial packet with a SYN. The server creates a protocol control block for the pending connection and sends a packet with its initial sequence number, a SYN, and an ACK of the client's SYN. The client responds with an ACK of the server's SYN, completing connection establishment. As the ACK of the first SYN is piggybacked on the second SYN, this procedure requires three packets, leading to the term three-way handshake. The protocol still operates correctly if both peers attempt to start a connection simultaneously, although the connection setup requires four packets.

FreeBSD includes three options along with the SYN when initiating a connection. One contains the maximum segment size that the system is willing to accept [Jacobson et al., 1992]. The second of these options specifies a window-scaling value expressed as a binary shift value, allowing the window to exceed 65535 octets. If both peers include this option during the three-way handshake, both scaling values take effect; otherwise, the window value remains in octets. The third option is a timestamp option. If this option is sent in both directions during connection establishment, it will also be sent in each packet during data transfer. The data field of the timestamp option includes a timestamp associated with the current sequence number and also echoes a timestamp associated with the current acknowledgment. Like the sequence space, the timestamp uses a 32-bit field and modular arithmetic. The unit of the timestamp field is not defined, although it must fall between 1 millisecond and 1 second. The value sent by each system must be monotonically nondecreasing during a connection. FreeBSD uses the value of ticks, which is incremented at the system clock rate, HZ. These timestamps can be used to implement round-trip timing. They also serve as an extension of the sequence space to prevent old duplicate packets from being accepted; this extension is valuable when a large window or a fast path, such as an Ethernet, is used.
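The arithmetic behind the window-scaling option is small enough to sketch. The code below is an illustration, not the kernel's code: the macro and function names are hypothetical, and the upper bound of 14 on the shift is taken from the window-scale specification rather than from the text above.

```c
#include <stdint.h>

#define WIN_FIELD_MAX 65535u     /* largest value of the 16-bit window field */
#define WIN_SHIFT_MAX 14u        /* assumption: limit from the specification */

/* Smallest shift that lets the 16-bit field describe a buffer of bufsize. */
static unsigned
win_shift_for(uint32_t bufsize)
{
    unsigned shift = 0;

    while (shift < WIN_SHIFT_MAX && (WIN_FIELD_MAX << shift) < bufsize)
        shift++;
    return shift;
}

/* Value placed in the header's window field for a given real window. */
static uint16_t
win_to_field(uint32_t window, unsigned shift)
{
    uint32_t w = window >> shift;

    return (uint16_t)(w > WIN_FIELD_MAX ? WIN_FIELD_MAX : w);
}

/* Real window, in octets, described by a received window field. */
static uint32_t
field_to_win(uint16_t field, unsigned shift)
{
    return (uint32_t)field << shift;
}
```

A receiver with a 1-Mbyte buffer would need a shift of 5 under this rule, since 65535 shifted left by 4 is still slightly smaller than 1048576.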

After a connection is established, each peer includes an acknowledgment and window information in each packet. Each may send data according to the window that it receives from its peer. As data are sent by one end, the window becomes filled. As data are received by the peer, acknowledgments may be sent so that the sender can discard the data from its send queue. If the receiver is prepared to accept additional data, perhaps because the receiving process has consumed the previous data, it will also advance the flow-control window. Data, acknowledgments, and window updates may all be combined in a single message.

If a sender does not receive an acknowledgment within some reasonable time, it retransmits data that it presumes were lost. Duplicate data are discarded by the receiver but are acknowledged again in case the retransmission was caused by loss of the acknowledgment. If the data are received out of order, the receiver generally retains the out-of-order data for use when the missing segment is received. Out-of-order data cannot be acknowledged because acknowledgments are cumulative. A selective acknowledgment mechanism was introduced in Jacobson et al. [1992] but is not implemented in FreeBSD.

Each peer may terminate data transmission at any time by sending a packet with the FIN bit. A FIN represents the end of the data (like an end-of-file indication). The FIN is acknowledged, advancing the sequence number by 1. The connection may continue to carry data in the other direction until a FIN is sent in that direction. The acknowledgment of the FIN terminates the connection. To guarantee synchronization at the conclusion of the connection, the peer sending the last ACK of a FIN must retain state long enough that any retransmitted FIN packets would have reached it or have been discarded; otherwise, if the ACK were lost and a retransmitted FIN were received, the receiver would be unable to repeat the acknowledgment. This interval is arbitrarily set to twice the maximum expected segment lifetime (known as 2MSL).

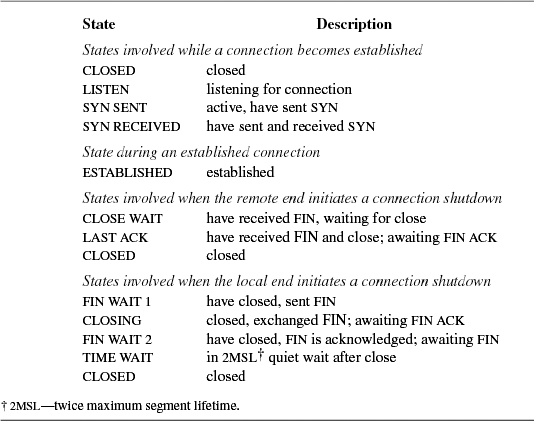

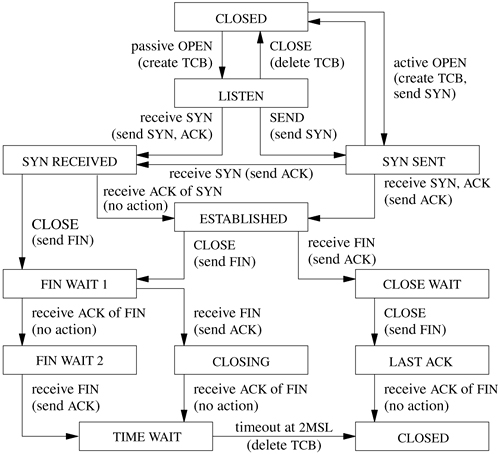

The TCP input-processing module and timer modules must maintain the state of a connection throughout that connection's lifetime. Thus, in addition to processing data received on the connection, the input module must process SYN and FIN flags and other state transitions. The list of states for one end of a TCP connection is given in Table 13.1. Figure 13.7 shows the finite-state machine made up by these states, the events that cause transitions, and the actions during the transitions.

If a connection is lost because of a crash or timeout on one peer but is still considered established by the other, then any data sent on the connection and received at the other end will cause the half-open connection to be discovered. When a half-open connection is detected, the receiving peer sends a packet with the RST flag and a sequence number derived from the incoming packet to signify that the connection is no longer in existence.

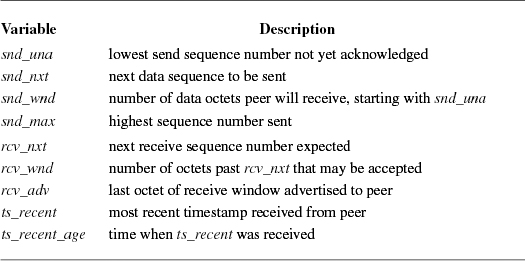

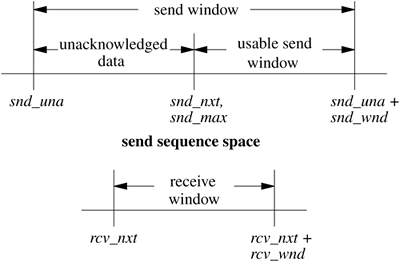

Each TCP connection maintains a large set of state variables in the TCP control block. This information includes the connection state, timers, options and state flags, a queue that holds data received out of order, and several sequence number variables. The sequence variables are used to define the send and receive sequence space, including the current window for each. The window is the range of data sequence numbers that are currently allowed to be sent, from the first octet of data not yet acknowledged up to the end of the range that has been offered in the window field of a header. The variables used to define the windows in FreeBSD are a superset of those used in the protocol specification [Postel, 1981b]. The send and receive windows are shown in Figure 13.8. The meanings of the sequence variables are listed in Table 13.2.

The area between snd_una and snd_una + snd_wnd is known as the send window. Data for the range snd_una to snd_max have been sent but not yet acknowledged and are kept in the socket send buffer along with data not yet transmitted. The snd_nxt field indicates the next sequence number to be sent and is incremented as data are transmitted. The area from snd_nxt to snd_una + snd_wnd is the remaining usable portion of the window, and its size determines whether additional data may be sent. The snd_nxt and snd_max values are normally maintained together except when TCP is retransmitting. The area between rcv_nxt and rcv_nxt + rcv_wnd is known as the receive window.
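The relationships among these variables reduce to a few lines of modular arithmetic. The following sketch borrows the variable names from the text as parameter names, but the functions themselves are hypothetical and ignore everything else that the output and input modules consider.

```c
#include <stdint.h>

/*
 * Octets that may still be sent: the part of the send window
 * [snd_una, snd_una + snd_wnd) that lies at or beyond snd_nxt.
 * Unsigned subtraction keeps the result correct across wraparound.
 */
static uint32_t
send_window_usable(uint32_t snd_una, uint32_t snd_nxt, uint32_t snd_wnd)
{
    uint32_t in_flight = snd_nxt - snd_una;   /* sent but unacknowledged */

    return in_flight >= snd_wnd ? 0 : snd_wnd - in_flight;
}

/* Is sequence number seq inside [rcv_nxt, rcv_nxt + rcv_wnd)? */
static int
in_receive_window(uint32_t seq, uint32_t rcv_nxt, uint32_t rcv_wnd)
{
    return (uint32_t)(seq - rcv_nxt) < rcv_wnd;
}
```

For example, with snd_una at 1000, snd_nxt at 1500, and a window of 4096 octets, 500 octets are outstanding and 3596 more may be sent.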

These variables are used in the output module to decide whether data can be sent, and in the input module to decide whether data that are received can be accepted. When the receiver detects that a packet is not acceptable because the data are all outside the window, it drops the packet but sends a copy of its most recent acknowledgment. If the packet contained old data, the first acknowledgment may have been lost, and thus it must be repeated. The acknowledgment also includes a window update, synchronizing the sender's state with the receiver's state.

If the TCP timestamp option is in use for the connection, the tests to see whether an incoming packet is acceptable are augmented with checks on the timestamp. Each time that an incoming packet is accepted as the next expected packet, its timestamp is recorded in the ts_recent field in the TCP protocol control block. If an incoming packet includes a timestamp, the timestamp is compared to the most recently received timestamp. If the timestamp is less than the previous value, the packet is discarded as being an old duplicate and a current acknowledgment is sent in response. In this way, the timestamp serves as an extension to the sequence number, avoiding accidental acceptance of an old duplicate when the window is large or sequence numbers can be reused quickly. However, because of the granularity of the timestamp value, a timestamp received more than 24 days ago cannot be compared to a new value, and this test is bypassed. The current time is recorded when ts_recent is updated from an incoming timestamp to make this test. Of course, connections are seldom idle for longer than 24 days.
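The timestamp test can be sketched as below. This is a simplified illustration under stated assumptions: the structure `ts_state`, its `valid` flag, and the function `paws_reject()` are hypothetical, and the age of ts_recent is kept in seconds here for readability, whereas the text notes that FreeBSD's timestamps are based on ticks.

```c
#include <stdint.h>

#define TS_MAX_IDLE_SEC (24u * 24u * 60u * 60u)   /* 24 days, in seconds */

/* Hypothetical per-connection timestamp state. */
struct ts_state {
    int      valid;          /* has any timestamp been recorded yet? */
    uint32_t ts_recent;      /* most recently accepted peer timestamp */
    uint32_t ts_recent_age;  /* local time (seconds) when it was recorded */
};

/* Modular comparison, as for sequence numbers. */
static int
ts_lt(uint32_t a, uint32_t b)
{
    return (int32_t)(a - b) < 0;
}

/* Returns 1 if a segment carrying ts_val should be dropped as an old duplicate. */
static int
paws_reject(const struct ts_state *st, uint32_t ts_val, uint32_t now_sec)
{
    if (!st->valid)
        return 0;                        /* nothing to compare against */
    if (now_sec - st->ts_recent_age > TS_MAX_IDLE_SEC)
        return 0;                        /* ts_recent too old: bypass test */
    return ts_lt(ts_val, st->ts_recent);
}

/* Record the timestamp of a segment accepted as the next expected one. */
static void
ts_record(struct ts_state *st, uint32_t ts_val, uint32_t now_sec)
{
    st->valid = 1;
    st->ts_recent = ts_val;
    st->ts_recent_age = now_sec;
}
```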

Now that we have introduced TCP, its state machine, and its sequence space, we can begin to examine the implementation of the protocol in FreeBSD. Several aspects of the protocol implementation depend on the overall state of a connection. The TCP connection state, output state, and state changes depend on external events and timers. TCP processing occurs in response to one of three events:

- A request from the user, such as sending data, removing data from the socket receive buffer, or opening or closing a connection

- The receipt of a packet for the connection

- The expiration of a timer

These events are handled in the routines tcp_usr_send(), tcp_input(), and a set of timer routines. Each routine processes the current event and makes any required changes in the connection state. Then, for any transition that may require sending a packet, the tcp_output() routine is called to do any output that is necessary.

The criteria for sending a packet with data or control information are complicated, and therefore the TCP send policy is the most interesting and important part of the protocol implementation. For example, depending on the state- and flow-control parameters for a connection, any of the following may allow data to be sent that could not be sent previously:

• A user send call that places new data in the send queue

• The receipt of a window update from the peer

• The expiration of the retransmission timer

• The expiration of the window-update (persist) timer

In addition, the tcp_output() routine may decide to send a packet with control information, even if no data may be sent, for any of these reasons:

• A change in connection state (e.g., open request, close request)

• Receipt of data that must be acknowledged

• A change in the receive window because of removal of data from the receive queue

• A send request with urgent data

• A connection abort

We shall consider most of these decisions in greater detail after we have described the states and timers involved. We begin with algorithms used for timing, connection setup, and shutdown; they are distributed through several parts of the code. We continue with the processing of new input and an overview of output processing and algorithms.

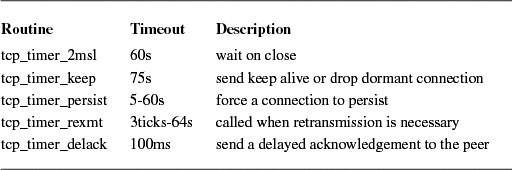

Unlike a UDP socket, a TCP connection maintains a significant amount of state information, and, because of that state, some operations must be done asynchronously. For example, data might not be sent immediately when a process presents them because of flow control. The requirement for reliable delivery implies that data must be retained after they are first transmitted so that they can be retransmitted if necessary. To prevent the protocol from hanging if packets are lost, each connection maintains a set of timers used to recover from losses or failures of the peer. These timers are stored in the protocol control block for a connection. The kernel provides a timer service via a set of callout() routines. The TCP module can register up to five timeout routines with the callout service, as shown in Table 13.3. Each routine has its own associated time at which it will be called. In earlier versions of BSD, timeouts were handled by the tcp_slowtimo() routine that was called every 500 milliseconds and would then do timer processing when necessary. Using the kernel's timer service is both more accurate, since each timer can be handled independently, and has less overhead, because no routine is called unless absolutely necessary.

Two timers are used for output processing. Whenever data are sent on a connection, the retransmit timer (tcp_timer_rexmt()) is started by a call to callout_reset(), unless it is already running. When all outstanding data are acknowledged, the timer is stopped. If the timer expires, the oldest unacknowledged data are resent (at most one full-sized packet), and the timer is restarted with a longer value. The rate at which the timer value is increased (the timer backoff) is determined by a table of multipliers that provides an exponential increase in timeout values up to a ceiling.
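A table-driven backoff of this kind might look like the following sketch. The multipliers, the 64-second ceiling, and all names are illustrative assumptions chosen to show the shape of the technique; they are not quoted from the FreeBSD source.

```c
#include <stdint.h>

/* Illustrative multipliers: exponential growth that levels off. */
static const uint32_t rexmt_backoff[] = {
    1, 2, 4, 8, 16, 32, 64, 64, 64, 64, 64, 64, 64
};
#define REXMT_MAXSHIFT \
    (sizeof(rexmt_backoff) / sizeof(rexmt_backoff[0]) - 1)
#define REXMT_MAX_MS 64000u      /* hypothetical ceiling on the timeout */

/*
 * Timeout for the retransmission numbered `shift` (0 = first transmission),
 * given the base timeout computed from the round-trip estimate.
 */
static uint32_t
rexmt_timeout_ms(uint32_t base_ms, unsigned shift)
{
    uint64_t t;

    if (shift > REXMT_MAXSHIFT)
        shift = REXMT_MAXSHIFT;
    t = (uint64_t)base_ms * rexmt_backoff[shift];   /* 64-bit: no overflow */
    return (uint32_t)(t > REXMT_MAX_MS ? REXMT_MAX_MS : t);
}
```

Keeping the multipliers in a table rather than computing a power of two makes the ceiling and any irregular steps explicit and easy to tune.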

The other timer used for maintaining output flow is the persist timer (tcp_timer_persist()). This timer protects against the other type of packet loss that could cause a connection to constipate: the loss of a window update that would allow more data to be sent. Whenever data are ready to be sent but the send window is too small to bother sending (zero, or less than a reasonable amount), and no data are already outstanding (the retransmit timer is not set), the persist timer is started. If no window update is received before the timer expires, the routine sends as large a segment as the window allows. If that size is zero, it sends a window probe (a single octet of data) and restarts the persist timer. If a window update was lost in the network, or if the receiver neglected to send a window update, the acknowledgment will contain current window information. On the other hand, if the receiver is still unable to accept additional data, it should send an acknowledgment for previous data with a still-closed window. The closed window might persist indefinitely; for example, the receiver might be a network-login client, and the user might stop terminal output and leave for lunch (or vacation).

The third timer used by TCP is a keepalive timer (tcp_timer_keep()). The keepalive timer has two different purposes at different phases of a connection. During connection establishment, this timer limits the time for the three-way handshake to complete. If the timer expires during connection setup, then the connection is closed. Once the connection completes, the keepalive timer monitors idle connections that might no longer exist on the peer because of a network partition or a crash. If a socket-level option is set and the connection has been idle since the most recent keepalive timeout, the timer routine will send a keepalive packet designed to produce either an acknowledgment or a reset (RST) from the peer TCP. If a reset is received, the connection will be closed; if no response is received after several attempts, the connection will be dropped. This facility is designed so that network servers can avoid languishing forever if the client disappears without closing. Keepalive packets are not an explicit feature of the TCP protocol. The packets used for this purpose by FreeBSD set the sequence number to 1 less than snd_una, which should elicit an acknowledgment from the peer if the connection still exists.

The fourth TCP timer is known as the 2MSL timer ("twice the maximum segment lifetime"). TCP starts this timer when a connection is completed by sending an acknowledgment for a FIN (from FIN_WAIT_2) or by receiving an ACK for a FIN (from CLOSING state, where the send side is already closed). Under these circumstances, the sender does not know whether the acknowledgment was received. If the FIN is retransmitted, it is desirable that enough state remain that the acknowledgment can be repeated. Therefore, when a TCP connection enters the TIME_WAIT state, the 2MSL timer is started; when the timer expires, the control block is deleted. If a retransmitted FIN is received, another ACK is sent, and the timer is restarted. To prevent this delay from blocking a process closing the connection, any process close request is returned successfully without the process waiting for the timer. Thus, a protocol control block may continue its existence even after the socket descriptor has been closed. In addition, FreeBSD starts the 2MSL timer when FIN_WAIT_2 state is entered after the user has closed; if the connection is idle until the timer expires, it will be closed. Because the user has already closed, new data cannot be accepted on such a connection in any case. This timer is set because certain other TCP implementations (incorrectly) fail to send a FIN on a receive-only connection. Connections to such hosts would remain in FIN_WAIT_2 state forever if the system did not have a timeout.

The final timer, tcp_timer_delack(), processes delayed acknowledgments. It will be described in Section 13.6.

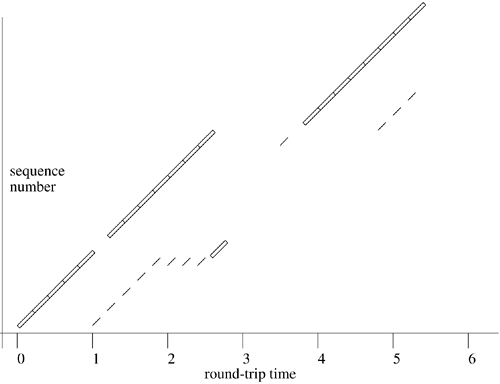

When connections must traverse slow networks that lose packets, an important decision determining connection throughput is the value to be used when the retransmission timer is set. If this value is too large, data flow will stop on the connection for an unnecessarily long time before the dropped packet is resent. Another round-trip time interval is required for the sender to receive an acknowledgment of the resent segment and a window update, allowing it to send new data. (With luck, only one segment will have been lost, and the acknowledgment will include the other segments that had been sent.) If the timeout value is too small, however, packets will be retransmitted needlessly. If the cause of the network slowness or packet loss is congestion, then unnecessary retransmission only exacerbates the problem. The traditional solution to this problem in TCP is for the sender to estimate the round-trip time (rtt) for the connection path by measuring the time required to receive acknowledgments for individual segments. The system maintains an estimate of the round-trip time as a smoothed moving average, srtt [Postel, 1981b], using

$$srtt = (\alpha \times srtt) + ((1 - \alpha) \times rtt)$$

In addition to a smoothed estimate of the round-trip time, TCP keeps a smoothed variance (estimated as mean difference, to avoid square-root calculations in the kernel). It employs a smoothing factor α of 0.875 for the round-trip time and a corresponding smoothing factor of 0.75 for the variance. These values were chosen in part so that the system could compute the smoothed averages using shift operations on fixed-point values instead of floating-point values because on many hardware architectures it is expensive to use floating-point arithmetic. The initial retransmission timeout is then set to the current smoothed round-trip time plus four times the smoothed variance. This algorithm is substantially more efficient on long-delay paths with little variance in delay, such as satellite links, because it effectively computes the BETA factor (the multiplier applied to srtt in the original specification) dynamically rather than fixing it in advance [Jacobson, 1988].
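The shift-based arithmetic can be illustrated with a short fixed-point sketch in the style of Jacobson [1988]. The scale factors (srtt stored multiplied by 8, the variance stored multiplied by 4), the structure and function names, and the choice to start the variance at half the first sample are assumptions made for this example; the kernel's own scaling may differ. Samples are assumed to be positive and in arbitrary time units.

```c
#include <stdint.h>

#define SRTT_SHIFT   3   /* srtt is stored times 8, giving a factor of 7/8 */
#define RTTVAR_SHIFT 2   /* rttvar is stored times 4, giving a factor of 3/4 */

struct rtt_est {
    int32_t srtt;        /* smoothed rtt, scaled by 8; 0 means no sample yet */
    int32_t rttvar;      /* smoothed mean deviation, scaled by 4 */
};

/* Fold one round-trip measurement into the smoothed estimates. */
static void
rtt_update(struct rtt_est *e, int32_t rtt)
{
    int32_t delta;

    if (e->srtt == 0) {                      /* first sample */
        e->srtt = rtt << SRTT_SHIFT;
        e->rttvar = rtt << (RTTVAR_SHIFT - 1);   /* assumption: rtt / 2 */
        return;
    }
    delta = rtt - (e->srtt >> SRTT_SHIFT);   /* error in the old estimate */
    e->srtt += delta;                        /* srtt = 7/8 srtt + 1/8 rtt */
    if (delta < 0)
        delta = -delta;
    delta -= e->rttvar >> RTTVAR_SHIFT;
    e->rttvar += delta;                      /* var = 3/4 var + 1/4 |error| */
}

/* Retransmission timeout: srtt plus four times the smoothed variance. */
static int32_t
rtt_rto(const struct rtt_est *e)
{
    /* rttvar is already scaled by 4, so it can be added directly. */
    return (e->srtt >> SRTT_SHIFT) + e->rttvar;
}
```

Because the stored values carry their scale factors, each update needs only additions, subtractions, and shifts, and no multiplication or division.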

For simplicity, the variables in the TCP protocol control block allow measurement of the round-trip time for only one sequence value at a time. This restriction prevents accurate time estimation when the window is large; only one packet per window can be timed. However, if the TCP timestamps option is supported by both peers, a timestamp is sent with each data packet and is returned with each acknowledgment. Here, estimates of round-trip time can be obtained with each new acknowledgment; the quality of the smoothed average and variance is thus improved, and the system can respond more quickly to changes in network conditions.

There are two ways in which a new TCP connection can be established. An active connection is initiated by a connect call, whereas a passive connection is created when a listening socket receives a connection request. We consider each in turn.

The initial steps of an active connection attempt are similar to the actions taken during the creation of a UDP socket. The process creates a new socket, resulting in a call to the tcp_attach() routine. TCP creates an inpcb protocol control block and then creates an additional control block (a tcpcb structure), as described in Section 13.1. Some of the flow-control parameters in the tcpcb are initialized at this time. If the process explicitly binds an address or port number to the connection, the actions are identical to those for a UDP socket. Then a tcp_connect() call initiates the actual connection. The first step is to set up the association with in_pcbconnect(), again identically to this step in UDP. A packet-header template is created for use in construction of each output packet. An initial sequence number is chosen from a sequence-number prototype, which is then advanced by a substantial amount. The socket is then marked with soisconnecting(), the TCP connection state is set to TCPS_SYN_SENT, the keepalive timer is set (to 75 seconds) to limit the duration of the connection attempt, and tcp_output() is called for the first time.

The output-processing module tcp_output() uses an array of packet control flags indexed by the connection state to determine which control flags should be sent in each state. In the TCPS_SYN_SENT state, the SYN flag is sent. Because it has a control flag to send, the system sends a packet immediately using the prototype just constructed and including the current flow-control parameters. The packet normally contains three option fields: a maximum-segment-size option, a window-scale option, and a timestamps option (see Section 13.4). The maximum-segment-size option communicates the largest segment size that TCP is willing to accept. To compute this value, the system locates a route to the destination. If the route specifies a maximum transmission unit (MTU), the system uses that value after allowing for packet headers. If the connection is to a destination on a local network, the maximum transmission unit of the outgoing network interface is used, possibly rounding down to a multiple of the mbuf cluster size for efficiency of buffering. If the destination is not local and nothing is known about the intervening path, the default segment size (512 octets) is used.
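The selection of the maximum segment size described above can be sketched as a small decision function. The names are hypothetical, the 40-octet allowance assumes IPv4 and TCP headers without options, and the 2048-octet cluster size and the rule of rounding only when the result exceeds one cluster are assumptions made for illustration.

```c
#include <stdint.h>

#define MSS_DEFAULT   512u   /* default segment size from the text */
#define HDR_ALLOWANCE 40u    /* assumption: 20-octet IP + 20-octet TCP headers */
#define CLUSTER_SIZE  2048u  /* assumption: size of an mbuf cluster */

/*
 * route_mtu:    MTU recorded for the route, or 0 if none is specified
 * dst_is_local: nonzero if the destination is on a directly attached network
 * if_mtu:       MTU of the outgoing interface
 */
static uint32_t
mss_select(uint32_t route_mtu, int dst_is_local, uint32_t if_mtu)
{
    uint32_t mss;

    if (route_mtu > HDR_ALLOWANCE)
        return route_mtu - HDR_ALLOWANCE;    /* the route knows the path MTU */
    if (dst_is_local && if_mtu > HDR_ALLOWANCE) {
        mss = if_mtu - HDR_ALLOWANCE;
        if (mss > CLUSTER_SIZE)              /* round down to whole clusters */
            mss -= mss % CLUSTER_SIZE;
        return mss;
    }
    return MSS_DEFAULT;                      /* nothing known about the path */
}
```

On an Ethernet with a 1500-octet MTU, the local case yields 1460 octets, which is smaller than one cluster and is therefore left unrounded in this sketch.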

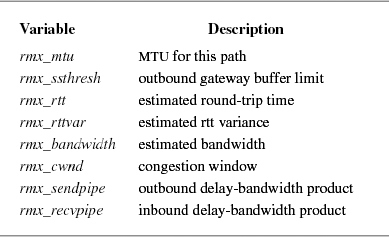

In earlier versions of FreeBSD, many of the important variables relating to TCP connections, such as the MTU of the path between the two endpoints and the data used to manage the connection, were stored as a set of route metrics in the routing entry that described the connection. The TCP host cache was developed to centralize all this information in one easy-to-find place so that information that was gathered on one connection could be reused when a new connection was opened to the same endpoint. The data that are recorded on a connection are shown in Table 13.4. All the variables stored in a host cache entry are described in various parts of later sections of this chapter when they become relevant to our discussion of how TCP manages a connection.

Whenever a new connection is opened, a call is made to tcp_hc_get() to find any information on past connections. If an entry exists in the cache for the target endpoint, TCP uses the cached information to make better-informed decisions about managing the connection. When a connection is closed, the host cache is updated with all the relevant information that was discovered during the connection between the two hosts. Each host cache entry has a default lifetime of one hour. Anytime that the entry is accessed or updated, its lifetime is reset to one hour. Every five minutes the tcp_hc_purge() routine is called to clean out any entries that have passed their expiration time. Cleaning out old entries ensures that the host cache does not grow too large and that it always has reasonably fresh data.
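The lifetime rules for cache entries can be modeled with a small fixed-size table. The sketch below is not the kernel's data structure: the names `hc_entry`, `hostcache`, `hc_lookup()`, `hc_update()`, and `hc_purge()` are hypothetical, only one cached value (the MTU) is kept, and a linear scan replaces whatever lookup structure the real cache uses. Each call to `hc_purge()` represents one five-minute pass.

```c
#include <stddef.h>
#include <stdint.h>

#define HC_SIZE   8       /* hypothetical, tiny table for illustration */
#define HC_EXPIRE 3600    /* entry lifetime: one hour, in seconds */
#define HC_PRUNE  300     /* purge interval: five minutes, in seconds */

struct hc_entry {
    int      in_use;
    uint32_t addr;        /* remote endpoint */
    uint32_t mtu;         /* one example of cached per-host data */
    int      expire;      /* seconds of lifetime remaining */
};

struct hostcache {
    struct hc_entry e[HC_SIZE];
};

/* Find an entry; any access resets its lifetime to one hour. */
static struct hc_entry *
hc_lookup(struct hostcache *hc, uint32_t addr)
{
    for (size_t i = 0; i < HC_SIZE; i++)
        if (hc->e[i].in_use && hc->e[i].addr == addr) {
            hc->e[i].expire = HC_EXPIRE;
            return &hc->e[i];
        }
    return NULL;
}

/* Record data learned on a connection; returns -1 if the table is full. */
static int
hc_update(struct hostcache *hc, uint32_t addr, uint32_t mtu)
{
    struct hc_entry *ent = hc_lookup(hc, addr);

    for (size_t i = 0; ent == NULL && i < HC_SIZE; i++)
        if (!hc->e[i].in_use)
            ent = &hc->e[i];                 /* first free slot */
    if (ent == NULL)
        return -1;
    ent->in_use = 1;
    ent->addr = addr;
    ent->mtu = mtu;
    ent->expire = HC_EXPIRE;
    return 0;
}

/* One periodic pass: age every entry and discard the expired ones. */
static void
hc_purge(struct hostcache *hc)
{
    for (size_t i = 0; i < HC_SIZE; i++) {
        if (!hc->e[i].in_use)
            continue;
        hc->e[i].expire -= HC_PRUNE;
        if (hc->e[i].expire <= 0)
            hc->e[i].in_use = 0;
    }
}
```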

TCP can use Path MTU Discovery as described in Mogul & Deering [1990]. Path MTU discovery is a process whereby the system probes the network to see what the maximum transmission unit is on a particular route between two nodes. It does this by sending packets with the IP don't-fragment flag set on each packet. If the packet encounters a segment on its path to its destination on which it would have to be fragmented, then it is dropped by the intervening router, and an error is returned to the sender. The error message contains the maximum size packet that the segment will accept. This information is recorded in the TCP host cache for the appropriate endpoint and transmission is attempted with the smaller MTU. Once the connection is complete, because enough packets have made it through the network to establish a TCP connection, the revised MTU recorded in the host cache is confirmed. Packets will continue to be transmitted with the don't-fragment flag set so that if the path to the node changes, and that path has an even smaller MTU, this new smaller MTU will be recorded. FreeBSD currently has no way of upgrading the MTU to a larger size when a route changes.

When a connection is first being opened, the retransmit timer is set to the default value (6 seconds) because no round-trip time information is available yet. With a bit of luck, a responding packet will be received from the target of the connection before the retransmit timer expires. If not, the packet is retransmitted and the retransmit timer is restarted with a greater value. If no response is received before the keepalive timer expires, the connection attempt is aborted with a "Connection timed out" error. If a response is received, however, it is checked for agreement with the outgoing request. It should acknowledge the SYN that was sent and should include a SYN. If it does both, the receive sequence variables are initialized, and the connection state is advanced to TCPS_ESTABLISHED. If a maximum-segment-size option is present in the response, the maximum segment size for the connection is set to the minimum of the offered size and the maximum transmission unit of the outgoing interface; if the option is not present, the default size (512 data bytes) is recorded. The flag TF_ACKNOW is set in the TCP control block before the output routine is called so that the SYN will be acknowledged immediately. The connection is now ready to transfer data.

The events that occur when a connection is created by a passive open are different. A socket is created and its address is bound as before. The socket is then marked by the listen call as willing to accept connections. When a packet arrives for a TCP socket in TCPS_LISTEN state, a new socket is created with sonewconn(), which calls the TCP tcp_usr_attach() routine to create the protocol control blocks for the new socket. The new socket is placed on the queue of partial connections headed by the listening socket. If the packet contains a SYN and is otherwise acceptable, the association of the new socket is bound, both the send and the receive sequence numbers are initialized, and the connection state is advanced to TCPS_SYN_RECEIVED. The keepalive timer is set as before, and the output routine is called after TF_ACKNOW has been set to force the SYN to be acknowledged; an outgoing SYN is sent as well. If this SYN is acknowledged properly, the new socket is moved from the queue of partial connections to the queue of completed connections. If the owner of the listening socket is sleeping in an accept call or does a select, the socket will indicate that a new connection is available. Again, the socket is finally ready to send data. Up to one window of data may have already been received and acknowledged by the time that the accept call completes.

One problem in previous implementations of TCP was that it was possible for a malicious program to flood a system with SYN packets, thereby preventing it from doing any useful work or servicing any real connections. This type of denial-of-service attack became common during the commercialization of the Internet in the late 1990s. To combat this attack, a syncache was introduced to efficiently store, and possibly discard, SYN packets that do not lead to real connections. The syncache handles the three-way handshake between a local server and connecting peers.

When a SYN packet is received for a socket that is in the LISTEN state, the TCP module attempts to add a new syncache entry for the packet using the syncache_add() routine. If there are any data in the received packet, they are not acknowledged at this time. Acknowledging the data would use up system resources, and an attacker could exhaust these resources by flooding the system with SYN packets that included data. If this SYN has not been seen before, a new entry is created in the hash table based on the packet's foreign address, foreign port, the local port of the socket, and a mask. The syncache module responds to the SYN with a SYN/ACK and sets a timer on the new entry. If the syncache contains an entry that matches the received packet, then it is assumed that the original SYN/ACK was not received by the peer initiating the connection, and another SYN/ACK is sent and the timer on the syncache entry is reset. There is no limit set on the number of SYN packets that can be sent by a connecting peer. Any limit would not follow the TCP RFCs and might impede connections over lossy networks.
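The lookup-or-insert behavior just described can be sketched with a small fixed-size hash table. Everything in this sketch is an assumption made for illustration: the names (`sc_table`, `sc_add`, `sc_hash`), the table dimensions, the hash-mixing constant, and the timer value are all hypothetical and do not reproduce the layout used by syncache_add(). When a bucket is full the sketch simply reports that fact, whereas a real implementation might instead discard an older entry.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define SC_BUCKETS	64u		/* power of two, so a mask picks a bucket */
#define SC_MASK		(SC_BUCKETS - 1u)
#define SC_DEPTH	4		/* entries per bucket (illustrative) */
#define SC_TIMEOUT	3		/* timer ticks (illustrative) */

struct sc_entry {
	int	 in_use;
	uint32_t faddr;			/* foreign address */
	uint16_t fport;			/* foreign port */
	uint16_t lport;			/* local port */
	int	 timer;			/* restarted whenever a SYN/ACK is sent */
};

struct sc_table {
	struct sc_entry bucket[SC_BUCKETS][SC_DEPTH];
};

enum sc_result { SC_NEW, SC_DUPLICATE, SC_FULL };

static void
sc_init(struct sc_table *t)
{
	memset(t, 0, sizeof(*t));
}

/* Mix the foreign address and both ports, then apply the mask. */
static uint32_t
sc_hash(uint32_t faddr, uint16_t fport, uint16_t lport)
{
	uint32_t h = faddr ^ ((uint32_t)fport << 16) ^ lport;

	h ^= h >> 16;
	h *= 0x45d9f3bu;
	h ^= h >> 16;
	return (h & SC_MASK);
}

/*
 * Record an incoming SYN.  SC_NEW means an entry was created and a
 * SYN/ACK should be sent; SC_DUPLICATE means the SYN was seen before,
 * so the SYN/ACK is sent again and the timer is reset.
 */
static enum sc_result
sc_add(struct sc_table *t, uint32_t faddr, uint16_t fport, uint16_t lport)
{
	struct sc_entry *row = t->bucket[sc_hash(faddr, fport, lport)];
	struct sc_entry *slot = NULL;
	int i;

	for (i = 0; i < SC_DEPTH; i++) {
		struct sc_entry *e = &row[i];

		if (e->in_use && e->faddr == faddr &&
		    e->fport == fport && e->lport == lport) {
			e->timer = SC_TIMEOUT;
			return (SC_DUPLICATE);
		}
		if (!e->in_use && slot == NULL)
			slot = e;
	}
	if (slot == NULL)
		return (SC_FULL);
	slot->in_use = 1;
	slot->faddr = faddr;
	slot->fport = fport;
	slot->lport = lport;
	slot->timer = SC_TIMEOUT;
	return (SC_NEW);
}
```

Note that a repeated SYN never fails in this sketch; it only refreshes the existing entry, which mirrors the absence of any limit on the number of SYN packets a peer may send.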

A TCP connection is symmetrical and full-duplex, so either side may initiate disconnection independently. As long as one direction of the connection can carry data, the connection remains open. A socket may indicate that it has completed sending data with the shutdown system call, which results in a call to the tcp_usr_shutdown() routine. The response to this request is that the state of the connection is advanced; from the ESTABLISHED state, the state becomes FIN_WAIT_1. The ensuing output call will send a FIN, indicating an end-of-file. The receiving socket will advance to CLOSE_WAIT but may continue to send. The procedure may be different if the process simply closes the socket. In that case, a FIN is sent immediately, but if new data are received, they cannot be delivered. Normally, higher-level protocols conclude their own transactions such that both sides know when to close. If they do not, however, TCP must refuse new data. It does so by sending a packet with the RST flag set if new data are received after the user has closed. If data remain in the send buffer of the socket when the close is done, TCP will normally attempt to deliver them. If the socket option SO_LINGER was set with a linger time of zero, the send buffer is simply flushed; otherwise, the user process is allowed to continue, and the protocol waits for delivery to conclude. Under these circumstances, the socket is marked with the state bit SS_NOFDREF (no file-descriptor reference). The completion of data transfer and the final close can take place an arbitrary amount of time later. When TCP finally completes the connection (or gives up because of timeout or other failure), it calls tcp_close(). The protocol control blocks and other dynamically allocated structures are freed at this time. The socket also is freed if the SS_NOFDREF flag has been set. Thus, the socket remains in existence as long as either a file descriptor or a protocol control block refers to it.
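The lifetime rule in the last sentence can be illustrated with a small model. The structure and functions below are hypothetical stand-ins, not the kernel's socket code, and the numeric value given to SS_NOFDREF is illustrative; the sketch shows only that the socket is released by whichever of the two events, the user's close or the protocol's close, happens last.

```c
#define SS_NOFDREF	0x0001	/* no file-descriptor reference (value illustrative) */

/* Hypothetical model of the two references that keep a socket alive. */
struct sketch_socket {
	int so_state;	/* holds SS_NOFDREF once the descriptor is closed */
	int has_pcb;	/* nonzero while a protocol control block refers to us */
	int freed;	/* set when the socket would be released */
};

/* Release the socket only when neither reference remains. */
static void
sketch_sofree(struct sketch_socket *so)
{
	if ((so->so_state & SS_NOFDREF) != 0 && !so->has_pcb)
		so->freed = 1;
}

/* The process closes its descriptor; TCP may still be delivering data. */
static void
sketch_user_close(struct sketch_socket *so)
{
	so->so_state |= SS_NOFDREF;
	sketch_sofree(so);
}

/* TCP completes (or abandons) the connection and drops its reference. */
static void
sketch_tcp_close(struct sketch_socket *so)
{
	so->has_pcb = 0;
	sketch_sofree(so);
}
```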

Although TCP input processing is considerably more complicated than UDP input handling, the preceding sections have provided the background that we need to examine the actual operation. As always, the input routine is called with parameters

The first few steps probably are beginning to sound familiar:

- Locate the TCP header in the received IP datagram. Make sure that the packet is at least as long as a TCP header, and use m_pullup() if necessary to make it contiguous.

- Compute the packet length, set up the IP pseudo-header, and checksum the TCP header and data. Discard the packet if the checksum is bad.

- Check the TCP header length; if it is larger than a minimal header, make sure that the whole header is contiguous.

- Locate the protocol control block for the connection with the port number specified. If none exists, send a packet containing the reset flag RST and drop the packet.

- Check whether the socket is listening for connections; if it is, follow the procedure described for passive connection establishment.

- Process any TCP options from the packet header.

- Clear the idle time for the connection, and set the keepalive timer to its normal value.
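As an illustration of the header-length checks among the steps above, the following sketch validates the data-offset field of a TCP header held in a contiguous buffer. The function name `tcp_hdr_len()` is hypothetical, and the sketch works on a plain byte array rather than on mbufs, so it does not show the call to m_pullup(). It relies only on the standard header layout, in which the upper four bits of byte 12 give the header length in 32-bit words.

```c
#include <stdint.h>

#define TCP_MIN_HDR	20u	/* size of a TCP header without options */

/*
 * Return the TCP header length in bytes, or 0 if the packet is too
 * short to hold a minimal header or if the header length that it
 * claims is impossible.
 */
static uint32_t
tcp_hdr_len(const uint8_t *pkt, uint32_t pktlen)
{
	uint32_t off;

	if (pktlen < TCP_MIN_HDR)
		return (0);
	off = (uint32_t)(pkt[12] >> 4) * 4u;	/* data offset, in bytes */
	if (off < TCP_MIN_HDR || off > pktlen)
		return (0);
	return (off);
}
```

Any value larger than 20 indicates that options are present, which is the case in which the input routine must ensure that the whole header is contiguous.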

At this point, the normal checks have been made, and we are prepared to deal with data and control flags in the received packet. There are still many consistency checks that must be made during normal processing; for example, the SYN flag must be present if we are still establishing a connection and must not be present if the connection has been established. We shall omit most of these checks from our discussion, but the tests are important to prevent wayward packets from causing confusion and possible data corruption.

The next step in checking a TCP packet is to see whether the packet is acceptable according to the receive window. It is important that this step be done before control flags, in particular RST, are examined because old or extraneous packets should not affect the current connection unless they are clearly relevant in the current context. A segment is acceptable if the receive window has nonzero size and if at least some of the sequence space occupied by the packet falls within the receive window. If the packet contains data, some of the data must fall within the window. Portions of the data that precede the window are trimmed, since they have already been received, and portions that exceed the window also are discarded, since they have been sent prematurely. If the receive window is closed (rcv_wnd is zero), then only segments with no data and with a sequence number equal to rcv_nxt are acceptable. If an incoming segment is not acceptable, it is dropped after an acknowledgment is sent.
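The acceptability test can be written compactly once sequence numbers are compared with modular arithmetic, so that the test remains correct when the 32-bit sequence space wraps. The following is a minimal sketch of the rule as stated in this section, not the code from tcp_input(); the function name `seg_acceptable()` is hypothetical, and the comparison macros are defined locally in the customary style.

```c
#include <stdint.h>

/* Sequence-space comparisons that tolerate 32-bit wraparound. */
#define SEQ_LT(a, b)	((int32_t)((a) - (b)) < 0)
#define SEQ_GT(a, b)	((int32_t)((a) - (b)) > 0)
#define SEQ_GEQ(a, b)	((int32_t)((a) - (b)) >= 0)

/*
 * Return 1 if a segment occupying [seq, seq + len) is acceptable
 * given the next expected sequence number and the receive window.
 */
static int
seg_acceptable(uint32_t seq, uint32_t len, uint32_t rcv_nxt, uint32_t rcv_wnd)
{
	/* Closed window: only an empty segment exactly at rcv_nxt. */
	if (rcv_wnd == 0)
		return (len == 0 && seq == rcv_nxt);
	/* Empty segment: its sequence number must lie in the window. */
	if (len == 0)
		return (SEQ_GEQ(seq, rcv_nxt) &&
		    SEQ_LT(seq, rcv_nxt + rcv_wnd));
	/* Data: at least one byte must overlap the window. */
	return (SEQ_LT(seq, rcv_nxt + rcv_wnd) &&
	    SEQ_GT(seq + len, rcv_nxt));
}
```

A segment that begins before the window but extends into it is therefore accepted, and the duplicate leading portion is trimmed afterward.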

The processing of incoming TCP packets must be fully general, taking into account all the possible incoming packets and possible states of receiving end-points. However, the bulk of the packets processed falls into two general categories. Typical packets contain either the next expected data segment for an existing connection or an acknowledgment plus a window update for one or more data segments, with no additional flags or state indications. Rather than considering each incoming segment based on first principles, tcp_input() checks first for these common cases. This algorithm is known as header prediction. If the incoming segment matches a connection in the ESTABLISHED state, if it contains the ACK flag but no other flags, if the sequence number is the next value expected (and the timestamp, if any, is nondecreasing), if the window field is the same as in the previous segment, and if the connection is not in a retransmission state, then the incoming segment is one of the two common types. The system processes any timestamp option that the segment contains, recording the value received to be included in the next acknowledgment. If the segment contains no data, it is a pure acknowledgment with a window update. In the usual case, round-trip-timing information is sampled if it is available, acknowledged data are dropped from the socket send buffer, and the sequence values are updated. The packet is discarded once the header values have been checked. The retransmit timer is canceled if all pending data have been acknowledged; otherwise, it is restarted. The socket layer is notified if any process might be waiting to do output. Finally, tcp_output() is called because the window has moved forward, and that operation completes the handling of a pure acknowledgment.

If a packet meeting the tests for header prediction contains the next expected data, if no out-of-order data are queued for the connection, and if the socket receive buffer has space for the incoming data, then this packet is a pure in-sequence data segment. The sequencing variables are updated, the packet headers are removed from the packet, and the remaining data are appended to the socket receive buffer. The socket layer is notified so that it can notify any interested thread, and the control block is marked with a flag, indicating that an acknowledgment is needed. No additional processing is required for a pure data packet.
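The header-prediction tests can be summarized in a short classification routine. This sketch is a simplification built on assumptions: the structures `hp_conn` and `hp_seg` and the function `hp_classify()` are hypothetical, the timestamp comparison is omitted, and "not in a retransmission state" is modeled as `snd_nxt == snd_max`. The further checks that the real fast path makes on the acknowledgment field are also left out.

```c
#include <stdint.h>

#define TH_FIN	0x01	/* TCP header flag bits */
#define TH_SYN	0x02
#define TH_RST	0x04
#define TH_ACK	0x10
#define TH_URG	0x20

enum hp_result { HP_SLOW_PATH, HP_PURE_ACK, HP_PURE_DATA };

/* Hypothetical subset of the connection state used by the tests. */
struct hp_conn {
	int	 established;	/* connection is in the ESTABLISHED state */
	uint32_t rcv_nxt;	/* next sequence number expected */
	uint32_t snd_wnd;	/* window from the previous segment */
	uint32_t snd_nxt;	/* next sequence number to send */
	uint32_t snd_max;	/* highest sequence number sent */
	int	 reass_empty;	/* no out-of-order data are queued */
	uint32_t rcv_space;	/* free space in the socket receive buffer */
};

struct hp_seg {
	uint8_t	 flags;
	uint32_t seq;
	uint32_t win;
	uint32_t len;		/* bytes of data carried */
};

static enum hp_result
hp_classify(const struct hp_conn *c, const struct hp_seg *s)
{
	/* ACK must be set, and SYN, FIN, RST, and URG must be clear. */
	if (!c->established ||
	    (s->flags & (TH_SYN | TH_FIN | TH_RST | TH_URG | TH_ACK)) != TH_ACK ||
	    s->seq != c->rcv_nxt ||
	    s->win != c->snd_wnd ||
	    c->snd_nxt != c->snd_max)
		return (HP_SLOW_PATH);
	if (s->len == 0)
		return (HP_PURE_ACK);
	if (c->reass_empty && s->len <= c->rcv_space)
		return (HP_PURE_DATA);
	return (HP_SLOW_PATH);
}
```

Any segment classified as `HP_SLOW_PATH` falls through to the general processing described next.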

For packets that are not handled by the header-prediction algorithm, the processing steps are as follows:

- Process the timestamp option if it is present, rejecting any packets for which the timestamp has decreased, first sending a current acknowledgment.

- Check whether the packet begins before rcv_nxt. If it does, ignore any SYN in the packet, and trim any data that fall before rcv_nxt. If no data remain, send a current acknowledgment and drop the packet. (The packet is presumed to be a duplicate transmission.)

- If the packet still contains data after trimming, and the process that created the socket has already closed the socket, send a reset (RST) and drop the connection. This reset is necessary to abort connections that cannot complete; it typically is sent when a remote-login client disconnects while data are being received.

- If the end of the segment falls after the window, trim any data beyond the window. If the window was closed and the packet sequence number is rcv_nxt, the packet is treated as a window probe; TF_ACKNOW is set to send a current acknowledgment and window update, and the remainder of the packet is processed. If SYN is set and the connection was in TIME_WAIT state, this packet is really a new connection request, and the old connection is dropped; this procedure is called rapid connection reuse. Otherwise, if no data remain, send an acknowledgment and drop the packet.
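The two trimming steps in the list above can be sketched as a single routine that adjusts a segment's starting sequence number and length. The names `seg` and `seg_trim()` are hypothetical, and the sketch deliberately ignores the SYN and FIN flags, window probes, and the TIME_WAIT case; it shows only the arithmetic for discarding bytes that precede rcv_nxt and bytes that lie beyond the end of the window.

```c
#include <stdint.h>

/* Sequence-space comparisons that tolerate 32-bit wraparound. */
#define SEQ_LT(a, b)	((int32_t)((a) - (b)) < 0)
#define SEQ_GT(a, b)	((int32_t)((a) - (b)) > 0)

/* Hypothetical description of the data carried by a segment. */
struct seg {
	uint32_t seq;	/* sequence number of the first data byte */
	uint32_t len;	/* number of data bytes */
};

/*
 * Trim duplicate data from the front of the segment and premature
 * data from the end.  A resulting length of zero means that no
 * useful data remain.
 */
static void
seg_trim(struct seg *s, uint32_t rcv_nxt, uint32_t rcv_wnd)
{
	uint32_t win_end = rcv_nxt + rcv_wnd;
	uint32_t n;

	if (SEQ_LT(s->seq, rcv_nxt)) {
		n = rcv_nxt - s->seq;		/* bytes already received */
		if (n > s->len)
			n = s->len;
		s->seq += n;
		s->len -= n;
	}
	if (SEQ_GT(s->seq + s->len, win_end)) {
		n = (s->seq + s->len) - win_end;	/* bytes past the window */
		if (n > s->len)
			n = s->len;
		s->len -= n;
	}
}
```

For example, a 30-byte segment starting at sequence number 90, arriving when rcv_nxt is 100 and the window is 10 bytes, is reduced to the 10 bytes starting at 100.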

The remaining steps of TCP input processing check the following flags and fields and take the appropriate actions: RST, ACK, window, URG, data, and FIN. Because the packet has already been confirmed to be acceptable, these actions can be done in a straightforward way:

- If a timestamp option is present, and the packet includes the next sequence number expected, record the value received to be included in the next acknowledgment.

- If RST is set, close the connection and drop the packet.

- If ACK is not set, drop the packet.

- If the acknowledgment-field value is higher than that of previous acknowledgments, new data have been acknowledged. If the connection was in SYN_RECEIVED state and the packet acknowledges the SYN sent for this connection, enter ESTABLISHED state. If the packet includes a timestamp option, use it to compute a round-trip time sample; otherwise, if the sequence range that was newly acknowledged includes the sequence number for which the round-trip time was being measured, this packet provides a sample. Average the time sample into the smoothed round-trip time estimate for the connection. If all outstanding data have been acknowledged, stop the retransmission timer; otherwise, set it back to the current timeout value. Finally, drop from the send queue in the socket the data that were acknowledged. If a FIN has been sent and was acknowledged, advance the state machine.

- Check the window field to see whether it advances the known send window. First, check whether this packet is a new window update. If the sequence number of the packet is greater than that of the previous window update, or the sequence number is the same but the acknowledgment-field value is higher, or if both sequence and acknowledgment are the same but the window is larger, record the new window.