12

Kernel Feature Extraction in Signal Processing

Kernel‐based feature extraction and dimensionality reduction are becoming increasingly important in advanced signal processing. This is particularly relevant in applications dealing with very high‐dimensional data. Current methods tackle important problems in signal processing: from signal subspace identification to nonlinear blind source separation, as well as nonlinear transformations that maximize particular criteria, such as variance (KPCA), covariance (kernel PLS (KPLS)), MSE (kernel orthonormalized PLS (KOPLS)), correlation (kernel canonical correlation analysis (KCCA)), mutual information (kernel generalized variance) or SNR (kernel SNR), just to name a few. Kernel multivariate analysis (KMVA) has closed links to KFD analysis as well as interesting relations to information theoretic learning. The application of the methods is hampered in two extreme cases: when few examples are available the extracted features are either overfitted or meaningless, while in large‐scale settings the computational cost is prohibitive. Semi‐supervised and sparse learning have entered the field to alleviate these problems. Another field of intense activity is that of domain adaptation and manifold alignment, for which kernel method feature extraction is currently being used. All these topics are the subject of this chapter. We will review the main kernel feature extraction and dimensionality reduction methods, dealing with supervised, unsupervised and semi‐supervised settings. Methods will be illustrated in toy examples, as well as real datasets.

12.1 Introduction

In the last decade, the amount and diversity of sensory data has increased vastly. Sensors and systems acquire signals at higher rate and resolutions, and very often data from different sensors need to be combined. In this scenario, appropriate data representation, adaptation, and dimensionality reduction become important concepts to be considered. Among the most important tasks of machine learning are feature extraction and dimensionality reduction, mainly due to the fact that, in many fields, practitioners need to manage data with a large dimensionality; for example, in image analysis, medical imaging, spectroscopy, or remote sensing, and where many heterogeneous feature components are computed from data and grouped together for machine‐learning tasks.

Extraction of relevant features for the task at hand boils down to finding a proper representation domain of the problem. Different objectives will give different optimization problems. The field dates back to the early 20th century with the works of Hotelling, Wold, Pearson, and Fisher, just to name a few.1 They originated the field of multivariate analysis (MVA) in its own right. The methods developed for MVA address the problems of dimensionality reduction in a very principled way, and typically resort to linear algebra operations. Owing to their simplicity and uniqueness of the solution, MVA methods have been successfully used in several scientific areas (Wold, 1966a).

Roughly speaking, the goal of MVA methods is to exploit dependencies among the variables to find a reduced set of them that are relevant for the learning task. PCA, PLS, or CCA are amongst the best known MVA methods. PCA uses only the correlation between the input dimensions in order to maximize the variance of the data over a chosen subspace, disregarding the target data. PLS and CCA choose subspaces where the projections maximize the covariance and the correlation between the features and the targets, respectively. Therefore, they should in principle be preferred to PCA for regression or classification problems. In this chapter, we consider also a fourth MVA method known as OPLS that is also well suited to supervised problems, with certain optimality in LS multiregression.

In any case, all these MVA methods are constrained to model linear relationships between the input and output; thus, they will be suboptimal when these relationships are actually nonlinear. This issue has been addressed by constructing nonlinear versions of MVA methods. They can be classified into two fundamentally different approaches (Rosipal, 2011): (1) the modified methods in which the linear relations among the latent variables are substituted by nonlinear relations (Laparra et al. 2014, 2015; Qin and McAvoy 1992; Wold et al. 1989); and (2) variants in which the algorithms are reformulated to fit a kernel‐based approach (Boser et al. 1992; Schölkopf et al. 1998; Shawe‐Taylor and Cristianini 2004). An appealing property of the resulting kernel algorithms is that they obtain the flexibility of nonlinear expressions using straightforward methods from linear algebra.

The field has captured the attention of many researchers from other fields mainly due to interesting relations to KFD analysis algorithms (a plethora of variants has been introduced in the last decade in the fields of pattern recognition and computer vision), and the more appealing relations to particular instantiations of kernel methods for information theoretic learning (e.g., links between covariance operators in Hilbert spaces and information concepts such as mutual information or Rényi entropy). In Section 12.3 we will show some of the relations between KMVA and other feature extraction methods based on nonparametric kernel dependence estimates.

The fact that KMVA methods need the construction of a Gram matrix of kernel dot products between data precludes their direct use in applications involving large datasets, and the fact that in many applications only a small number of labeled samples is also strongly limiting. The first difficulty is usually minimized by the use of sparse or incremental versions of the algorithms, and the second has been overcome by the field of SSL. Approaches to tackle these problems include special types of regularization, guided either by selection of a reduced number of basis functions or by considering the information about the manifold conveyed by the unlabeled samples. This chapter reviews all these approaches (Section 12.4).

Domain adaptation, transfer learning, and manifold alignment are relevant topics nowadays. Note that classification or regression algorithms developed with data coming from one domain (system, source) cannot be directly used in another related domain, and hence adaptation of either the data representation or the classifier becomes strictly imperative (Quiñonero‐Candela et al., 2009; Sugiyama and Kawanabe, 2012). The fields of manifold alignment and domain adaptation have captured high interest nowadays in pattern analysis and machine learning. On many occasions, it is more convenient to adapt the data representations to facilitate the use of standard classifiers, and several kernel methods have been proposed trying to map different data sources to a common latent space where the different source manifolds are matched. Section 12.5 reviews some kernel methods to perform domain adaptation while still using linear algebra.

12.2 Multivariate Analysis in Reproducing Kernel Hilbert Spaces

This section fixes the notation used in the chapter, and reviews the framework of MVA both in the linear case and with kernel methods. An experimental evaluation on standard UCI datasets and real high‐dimensional image segmentation problems illustrate the performance of all the methods.

12.2.1 Problem Statement and Notation

Assume any supervised regression or classification problem, were X and Y are column‐wise centered input and target data matrices of sizes N × d and N × m respectively. Here, N is the number of training data points in the problem, and d and m are the dimensions of the input and output spaces respectively. The output response data (sometimes referred to as the target) Y are typically a set of variables in columns that need to be approximated in regression settings, or a matrix that encodes the class membership information in classification settings. The sample covariance matrices are given by Cx = (1/N)XTX and Cy = (1/N)YTY, whereas the input–output cross‐covariance is expressed as Cxy = (1/N)XTY.

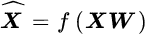

The objective of standard linear multiregression is to adjust a linear model for predicting the output variables from the input ones; that is, ![]() , where W contains the regression model coefficients. The standard LS solution is W = X†Y, where X† = (XTX)−1XT is the Moore–Penrose pseudoinverse2 of X. If some input variables are highly correlated, the corresponding covariance matrix will be rank deficient, and thus its inverse will not exist. The same situation will be encountered if the number N of data is less that the dimension d of the space. In order to turn these problems into well‐conditioned ones it is usual to apply a Tikhonov regularization term; for instance, by also minimizing the Frobenius norm of the weights matrix

, where W contains the regression model coefficients. The standard LS solution is W = X†Y, where X† = (XTX)−1XT is the Moore–Penrose pseudoinverse2 of X. If some input variables are highly correlated, the corresponding covariance matrix will be rank deficient, and thus its inverse will not exist. The same situation will be encountered if the number N of data is less that the dimension d of the space. In order to turn these problems into well‐conditioned ones it is usual to apply a Tikhonov regularization term; for instance, by also minimizing the Frobenius norm of the weights matrix ![]() one obtains the regularized LS solution W = (XTX + λI)−1XTY, where parameter λ controls the amount of regularization.

one obtains the regularized LS solution W = (XTX + λI)−1XTY, where parameter λ controls the amount of regularization.

MVA techniques solve the aforementioned problems by projecting the input data into a subspace that preserves the information useful for the current machine‐learning problem. MVA methods transform the data into a set of features through a transformation X′ = XU, where ![]() will be referred hereafter as the projection matrix, ui being the ith projection vector and nf the number of extracted features. Some MVA methods consider also a feature extraction in the output space, Y′ = YV, with

will be referred hereafter as the projection matrix, ui being the ith projection vector and nf the number of extracted features. Some MVA methods consider also a feature extraction in the output space, Y′ = YV, with ![]() .

.

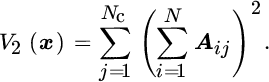

Generally speaking, MVA methods look for projections of the input data that are “maximally aligned” with the targets. Different methods are characterized by the specific objectives they maximize. Table 12.1 summarizes the MVA methods that are being presented in this section. It is important to be aware of that MVA methods are based on the first‐ and second‐order moments of the data, so these methods can be formulated as a generalized eigenvalue problem; hence, they can be solved using standard algebraic techniques.

Table 12.1 Summary of linear and KMVA methods. We state for all methods treated in this section the objective to maximize, constraints for the optimization, and maximum number of features np that can be extracted, and how the data are projected onto the extracted features.

| Method | PCA | SNR | PLS | CCA | OPLS |

| maxu | uTCxu | uTCxyv | uTCxyv | ||

| s.t. | UTU = I | UTCnU = I |  |

|

UTCxU = I |

| np | r(X) | r(X) | r(X) | r(Cxy) | r(Cxy) |

| P(X*) | X*U | X*U | X*U | X*U | X*U |

| KPCA | KSNR | KPLS | KCCA | KOPLS | |

| maxα | αTKxYv | αTKxYv | αTKxYYTKxα | ||

| s.t. | ATKxA = I | ATKxnKnxA = I |  |

|

|

| np | r(Kx) | r(Kx) | r(Kx) | r(KxY) | r(KxY) |

| P |

Kx(X*, X)A | Kx(X*, X)A | Kx(X*, X)A | Kx(X*, X)A | Kx(X*, X)A |

Vectors u and α are column vectors in matrices U and A respectively. r(⋅) denotes the rank of a matrix.

12.2.2 Linear Multivariate Analysis

The best known MVA method, and probably the oldest one, is PCA (e.g., Pearson, 1901), also known as the Hotelling transform or the Karhunen–Loève transform (Jolliffe, 1986). PCA selects the maximum variance projections of the input data, imposing an orthonormality constraint for the projection vectors (see Table 12.1). This method assumes that the projections that contain the information are the ones that have the highest variance. PCA is an unsupervised feature extraction method. Even if supervised methods, which include the target information, may be preferred, PCA and its kernel version, KPCA, are used as preprocessing stages in many supervised problems, possibly because of their simplicity and ability to discard irrelevant directions (Abrahamsen and Hansen 2011; Braun et al. 2008).

In signal processing applications, maximizing the variance of the signal may not be a good idea per se, and one typically looks for transformations of the observed signal such that the SNR is maximized, or alternatively for transformations that minimize the fraction of noise. This is the case of the MNF transform (Green et al., 1998), which extends PCA by maximizing the signal variance while minimizing the estimated noise variance. Let us assume that observations xi follow an additive noise model; that is, xi = si + ni, where ni may not necessarily be Gaussian. The observed data matrix is assumed to be the sum of a “signal” and a “noise” matrix, X = s + N, being typically N > d. Matrix ![]() indicates the centered version of X, and

indicates the centered version of X, and ![]() represents the empirical covariance matrix of the input data. Very often, signal and noise are assumed to be orthogonal, sTN = NTs = 0, which is very convenient for SNR maximization and blind‐source separation problems (Green et al., 1998; Hundley et al., 2002). Under this assumption, one can incorporate easily the information about the noise through the noise covariance matrix

represents the empirical covariance matrix of the input data. Very often, signal and noise are assumed to be orthogonal, sTN = NTs = 0, which is very convenient for SNR maximization and blind‐source separation problems (Green et al., 1998; Hundley et al., 2002). Under this assumption, one can incorporate easily the information about the noise through the noise covariance matrix ![]() .

.

The PLS algorithm (Wold, 1966b) is a supervised method based on latent variables that account for the information in the cross‐covariance matrix Cxy. The strategy here consists of constructing the projections that maximize the covariance between the projections of the input and the output, while keeping certain orthonormality constraints. The procedure is solved iteratively or in block through an eigenvalue problem. In iterative schemes, datasets X and Y are recursively transformed in a process which subtracts the information contained in the already estimated latent variables. This process is usually called deflation, and it can be done in several ways that define the many variants of PLS existing in the literature.3 The algorithm does not involve matrix inversion, and it is robust against highly correlated data. These properties made it usable in many fields, such as chemometrics and remote sensing, where signals typically are acquired in a range of highly correlated spectral wavelengths.

The main feature of CCA is that it maximizes the correlation between projected input and output data (Hotelling, 1936). This is why CCA can manage spatial dimensions in the input or output data that show high variance but low correlation between input and output, which would be emphasized by PLS. CCA is a standard method for aligning data sources, with interesting relations to information‐theoretic approaches. We will come back to this issue in Sections 12.3 and 12.5.

An interesting method we will pay attention to is OPLS, also known as multilinear regression (Borga et al., 1997) or semi‐penalized CCA (Barker and Rayens, 2003). OPLS is optimal for performing multilinear LS regression on the features extracted from the training data; that is:

with W = X′†Y being the matrix containing the optimal regression coefficients. It can be shown that this optimization problem is equivalent to the one stated in Table 12.1. This problem can be compared with maximizing a Rayleigh coefficient that takes into account the projections of input and output data,  . This is why the method is called semi‐penalized CCA. Indeed, it does not take into account the variance of the projected input data, but emphasizes those input dimensions that better predict large variance projections of the target data. This asymmetry makes sense in supervised subspace learning where matrix Y contains target values to be approximated from the extracted input features.

. This is why the method is called semi‐penalized CCA. Indeed, it does not take into account the variance of the projected input data, but emphasizes those input dimensions that better predict large variance projections of the target data. This asymmetry makes sense in supervised subspace learning where matrix Y contains target values to be approximated from the extracted input features.

Actually, OPLS (for classification problems) is equivalent to LDA provided an appropriate labeling scheme is used for Y (Barker and Rayens, 2003). However, in “two‐view learning” problems (also known as domain adaptation or manifold alignment), in which X and Y represent different views of the data (Shawe‐Taylor and Cristianini, 2004, Section 6.5), one would like to extract features that can predict both data representations simultaneously, and CCA could be preferred to OPLS. We will further discuss this matter in Section 12.5.

Now we can establish a simple common framework for PCA, SNR, PLS, CCA, and OPLS, following the formalization introduced in Borga et al. (1997), where it was shown that these methods can be reformulated as (generalized) eigenvalue problems, so that linear algebra packages4 can be used to solve them. Concretely:

Note that CCA and OPLS require the inversion of matrices Cx and Cy. Whenever these are rank deficient, it becomes necessary to first extract the dimensions with nonzero variance using PCA, and then solve the CCA or OPLS problems. Alternative approaches include adding extra regularization terms. A very common approach is to solve the aforementioned problems using a two‐step iterative procedure: first, the projection vectors corresponding to the largest (generalized) eigenvalue are chosen, for which there exist efficient methods such as the power method; and then in the second step known as deflation, one removes from the data (or the covariance matrices) the covariance that can be already obtained from the features extracted in the first step. Equivalent solutions can be found by formulating them as regularized LS problems. As an example, sparse versions of PCA, CCA, and OPLS were introduced by adding sparsity promotion terms, such as LASSO or ℓ1‐norm on the projection vectors, to the LS functional (Hardoon and Shawe‐Taylor 2011; Van Gerven et al. 2012; Zou et al. 2006).

12.2.3 Kernel Multivariate Analysis

The framework of KMVA algorithms is aimed at extracting nonlinear projections while actually working with linear algebra. Let us first consider a function ![]() that maps input data into a Hilbert feature space

that maps input data into a Hilbert feature space ![]() . The new mapped dataset is defined as Φ = [Φ(x1)|⋯|Φ(xl)]T, and the features extracted from the input data will now be given by Φ′ = ΦU, where matrix U is of size

. The new mapped dataset is defined as Φ = [Φ(x1)|⋯|Φ(xl)]T, and the features extracted from the input data will now be given by Φ′ = ΦU, where matrix U is of size ![]() . The direct application of this idea suffers from serious practical limitations when the

. The direct application of this idea suffers from serious practical limitations when the ![]() is very large. To implement practical KMVA algorithms we need to rewrite the equations in the first half of Table 12.1 in terms of inner products in

is very large. To implement practical KMVA algorithms we need to rewrite the equations in the first half of Table 12.1 in terms of inner products in ![]() only. For doing so, we rely on the availability of a kernel matrix Kx = ΦΦT of dimension N × N, and on the representer theorem (Kimeldorf and Wahba, 1971), which states that the projection vectors can be written as a linear combination of the training samples; that is, U = ΦTA, matrix

only. For doing so, we rely on the availability of a kernel matrix Kx = ΦΦT of dimension N × N, and on the representer theorem (Kimeldorf and Wahba, 1971), which states that the projection vectors can be written as a linear combination of the training samples; that is, U = ΦTA, matrix ![]() being the new argument for the optimization.5 Equipped with the kernel trick, one readily develops kernel versions of the previous linear MVA, as shown in Table 12.1.

being the new argument for the optimization.5 Equipped with the kernel trick, one readily develops kernel versions of the previous linear MVA, as shown in Table 12.1.

For PCA, it was Schölkopf et al. (1998) who introduced a kernel version denoted KPCA. Lai and Fyfe in 2000 first introduced the kernel version of CCA denoted KCCA (Lai and Fyfe, 2000a; Shawe‐Taylor and Cristianini, 2004). Later, Rosipal and Trejo (2001) presented a nonlinear kernel variant of PLS. In that paper, Kx and the Y matrix are deflated the same way, which is more in line with the PLS2 variant than with the traditional algorithm “PLS Mode A,” and therefore we will denote it as KPLS2. A kernel variant of OPLS was presented in (Arenas‐García et al., 2007) and is here referred to as KOPLS. Recently, the kernel MNF was introduced in (Nielsen, 2011) but required the estimation of the noise signal in input space. This kernel SNR method was extended in (Gómez‐Chova et al., 2017) to deal with signal and noise relations in Hilbert space explicitly. That is, in the explicit KSNR (KSNRe) the noise is estimated in Hilbert spaces rather than in the input space and then mapped with an appropriate reproducing kernel function (which we refer to as implicit KSNR, KSNRi).

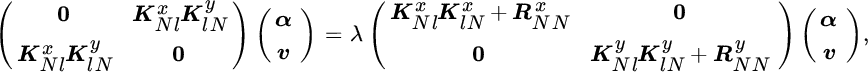

As for the linear case, KMVA methods can be implemented as (generalized) eigenvalue problems:

Note that the output data could also be mapped to some feature space ![]() , as was considered for KCCA for a multiview learning case (Lai and Fyfe, 2000a). Here, we consider instead that it is the actual labels in Y which need to be well represented by the extracted input features, so we will deal with the original representation of the output data.

, as was considered for KCCA for a multiview learning case (Lai and Fyfe, 2000a). Here, we consider instead that it is the actual labels in Y which need to be well represented by the extracted input features, so we will deal with the original representation of the output data.

12.2.4 Multivariate Analysis Experiments

In this section we illustrate through different application examples the use and capabilities of the kernel multivariate feature extraction framework. We start with a toy example where we can visualize the features, and therefore get intuition about what is doing each method. After that we compare the performance of linear and KMVA methods in a real classification problem of (high‐dimensional) satellite images (Arenas‐García and Camps‐Valls 2008; Arenas‐García et al. 2013).

Toy Example

In this first experiment we illustrate the methods presented using toy data. Figure 12.1 illustrates the features extracted by the methods for a toy classification problem with three classes. Figure 12.1 can be reproduced by downloading the SIMFEAT toolbox and executing the script Demo_Fig_14_1.m from the book’s repository. A simplified version of the code is shown in Listing 12.1. Note how all the methods are based on eigendecompositions of covariance matrices or kernels.

Figure 12.1 Toy example of MVA methods in a three‐class problem. Top: features extracted using the training set. Figure shows the variance (var), MSE when the projection is used to approximate y, and the largest covariance (cov) and correlation (corr) achievable using a linear projection of the target data. The first thing we see is that linear methods (top row) perform a rotation on the original data, while the features extracted by the nonlinear methods (second row) are based on more complicated transforms. There is a clear difference between the unsupervised methods (PCA and KPCA) and the supervised methods, which try to pull apart the data coming from different classes. Bottom: classification of test data using a linear classifier trained in the transformed domain, numerical performance is given by the overall accuracy (OA). Since in this case there is no dimensionality reduction, all the linear methods obtain the same accuracy. However, the nonlinear methods obtain very different results. In general, nonlinear methods obtain better results than the linear ones. A special case is KPCA, which obtains very poor results. KPCA is unsupervised and in this case looking for high‐variance dimensions is not useful in order to discern between the classes. KPLS obtains lower accuracy than KOPLS and KCCA, which in turn obtain a similar result since their formulation is almost equivalent (only an extra normalization step is performed by KCCA).

The data were generated from three noisy parabolas, so that a certain overlap exists between classes. For the first extracted feature we show its sample variance, the largest correlation and covariance that can be achieved with a linear transformation of the output, and the optimum MSE of the feature when used to approximate the target. All these are shown above the scatter plot. The first projection for each method maximizes the variance (PCA), covariance (PLS), and correlation (CCA), while OPLS finds the MMSE projection. However, since these methods can just perform linear transformations of the data, they are not able to capture any nonlinear relations between the input variables. For illustrative purposes, we have included in Figure 12.1 the projections obtained using KMVA methods with an RBF kernel. Input data were normalized to zero mean and unit variance, and the kernel width σ was selected as the median of all pairwise distances between samples (Blaschko et al., 2011). The same σ has been used for all methods, so that features are extracted from the same mapping of the input data. We can see that the nonlinear mapping improves class separability.

Satellite Image Classification

Nowadays, multi‐ and hyperspectral sensors mounted on satellite and airborne platforms may acquire the reflected energy by the Earth with high spatial detail and in several spectral wavelengths. Here, we pay attention to the performance of several KMVA methods for image segmentation of hyperspectral images (Arenas‐García and Petersen, 2009). The input data X is the spectral radiance obtained for each pixel (sample) of dimension d equal to the number of spectral channels considered, and the output target variable Y corresponds to the class label of each particular pixel.

The data used is the standard AVIRIS image obtained from NW Indiana’s Indian Pine test site in June 1992.6 The 20 noisy bands corresponding to the region of water absorption have been removed, and we used pixels of dimension d = 200 spectral bands. The high number of narrow spectral bands induces a high collinearity among features. Discriminating among the major crop classes in the area can be very difficult. The image is 145 × 145 pixels and contains 16 quite unbalanced classes (ranging from 20 to 2468 pixels each). Among the available N = 10 366 labeled pixels, 20% were used to train the feature extractors, where the remaining 80% were used to test the methods. The discriminative properties of the extracted features were assessed using a simple classifier constructed with a linear model adjusted with LS plus a “winner takes all” activation function.

The result of the classification accuracy during the test for different number nf of extracted features is shown in Figure 12.2. For linear models, OPLS performs better than all other methods for any number of extracted features. Even though CCA provides similar results for nf = 10, it involves a slightly more complex generalized eigenproblem. When the maximum number of projections is used, all methods result in the same error. Nevertheless, while PCA and PLS2 require nf = 200 features (i.e., the dimensionality of the input space), CCA and OPLS only need nf = 15 features to achieve virtually the same performance.

Figure 12.2 Average classification accuracy (percent) for linear and KMVA methods as a function of the number of extracted features, along some classification maps for the case of nf = 10 extracted features.

We also considered nonlinear KPCA, KPLS2, KOPLS, and KCCA, using an RBF kernel. The width parameter of the kernel was adjusted with a fivefold cross‐validation over the training set. The same conclusions obtained for the linear case apply also to MVA methods in kernel feature space. The features extracted by KOPLS allow us to achieve a slightly better overall accuracy than KCCA, and both methods perform significantly better than KPLS2 and KPCA. In the limit of nf, all methods achieve similar accuracy. The classification maps obtained for nf = 10 confirm these conclusions: higher accuracies lead to smoother maps and smaller error in large spatially homogeneous vegetation covers.

Finally, we transformed all the 200 original bands into a lower dimensional space of 18 features and assessed the quality of the extracted features by standard PCA, SNR/MNF, KPCA, and KSNR approaches. Figure 12.3 shows the extracted features in descending order of relevance. In this unsupervised setting it is evident that incorporating the signal‐to‐noise relations in the extraction with KSNR provides some clear advantages in the form of more “noise‐free” features than the other methods.

Figure 12.3 Extracted features from the original image by PCA, MNF/SNR, KPCA, standard (implicit) KSNR, and explicit KSNR in the kernel space for the first nf = 18 components, plotted in RGB composite of three top components ordered in descending importance (images converted to grey scale for the book).

12.3 Feature Extraction with Kernel Dependence Estimates

In this section we review the connections of feature extraction when using dependency estimation as optimization criterion. First, we present a popular method for dependency estimation based on kernels, the HSIC (Gretton et al., 2005c). We will analyze the connections between HSIC and classical feature extraction methods. After that, we will review two methods that employ HSIC as optimization criterion to find interesting features: the Hilbert–Schmidt component analysis (HSCA) (Daniušis and Vaitkus, 2009) and the kernel dimensionality reduction (KDR) (Fukumizu et al. 2003, 2004). A related problem found in DSP is the BSS problem; here, one aims to find a transformation that separates the input observed signal into a set of independent signal components. We will review different BSS methods based on kernels.

12.3.1 Feature Extraction Using Hilbert–Schmidt Independence Criterion

MVA methods optimize different criteria mostly based on second‐order relations, which is a limited approach in problems exhibiting higher order nonlinear feature relations. Here, we analyze different methods to deal with higher order statistics using kernels. The idea is to go beyond simple association statistics. We will build our discussion on the HSIC (Gretton et al., 2005c), which is a measure of dependence between random variables. The goal of this measure is to discern if sensed variables are related and to measure the strength of such relations. By using this measure, we can look for features that maximize directly the amount of information extracted from the data instead of using a proxy like correlation.

Hilbert–Schmidt Independence Criterion

The HSIC method measures cross‐covariances in an adequate RKHS by using the entire spectrum of the cross‐covariance operator. As we will see, the HSIC empirical estimator has low computational burden and nice theoretical and practical properties. To fix notation, let us consider two spaces ![]() and

and ![]() , where input and output observations (x, y) are sampled from distribution

, where input and output observations (x, y) are sampled from distribution ![]() xy. The covariance matrix is

xy. The covariance matrix is

where ![]() is the expectation with respect to

is the expectation with respect to ![]() xy, and

xy, and ![]() is the marginal expectation with respect to

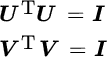

is the marginal expectation with respect to ![]() x (here and below, we assume that all these quantities exist). First‐order dependencies between variables are included into the covariance matrix, and the Hilbert–Schmidt norm is a statistic that effectively summarizes the content of this matrix. The squared sum of its eigenvalues γi is equal to the square of this norm:

x (here and below, we assume that all these quantities exist). First‐order dependencies between variables are included into the covariance matrix, and the Hilbert–Schmidt norm is a statistic that effectively summarizes the content of this matrix. The squared sum of its eigenvalues γi is equal to the square of this norm:

This quantity is zero only in the case where there is no first‐order dependence between x and y. Note that the Hilbert–Schmidt norm is limited to the detection of first‐order relations, and thus more complex (higher order effects) cannot be captured.

The previous notion of covariance operator in the field of kernel functions and measures was proposed in (Gretton et al., 2005c). Essentially, assume a (non‐linear) mapping ![]() in such a way that the dot product between features is given by a positive definite kernel function Kx(x, x′) = 〈ϕ(x), ϕ(x′)〉. The feature space

in such a way that the dot product between features is given by a positive definite kernel function Kx(x, x′) = 〈ϕ(x), ϕ(x′)〉. The feature space ![]() has the structure of an RKHS. Assume another mapping

has the structure of an RKHS. Assume another mapping ![]() with associated kernel Ky(y, y′) = 〈ψ(y), ψ(y′)〉. Under these conditions, a conditions, a cross‐covariance operator between these mappings can be defined, similar to the covariance matrix in Equation 12.4. The cross‐covariance is a linear operator

with associated kernel Ky(y, y′) = 〈ψ(y), ψ(y′)〉. Under these conditions, a conditions, a cross‐covariance operator between these mappings can be defined, similar to the covariance matrix in Equation 12.4. The cross‐covariance is a linear operator ![]() of the form

of the form

where ⊗ is the tensor product, ![]() , and

, and ![]() . See more details in Baker (1973) and Fukumizu et al. (2004). The HSIC is defined as the squared norm of the cross‐covariance operator,

. See more details in Baker (1973) and Fukumizu et al. (2004). The HSIC is defined as the squared norm of the cross‐covariance operator, ![]() , and it can be expressed in terms of kernels (Gretton et al., 2005c):

, and it can be expressed in terms of kernels (Gretton et al., 2005c):

where ![]() is the expectation over both

is the expectation over both ![]() and an additional pair of variables

and an additional pair of variables ![]() drawn independently according to the same law.

drawn independently according to the same law.

Now, given a sample dataset Z = {(x1, y1), …, (xN, yN)} of size N drawn from ![]() xy, an empirical estimator of HSIC is (Gretton et al., 2005c)

xy, an empirical estimator of HSIC is (Gretton et al., 2005c)

where Tr is the trace (the sum of the diagonal entries), Kx and Ky are the kernel matrices for the data x and the labels y respectively, and Hij = δij − (1/N) centers the data and the label features in ![]() and

and ![]() . Here, δ represents the Kronecker symbol, where δij = 1 if i = j, and zero otherwise.

. Here, δ represents the Kronecker symbol, where δij = 1 if i = j, and zero otherwise.

Summarizing, HSIC (Gretton et al., 2005c) is a simple yet very effective method to estimate statistical dependence between random variables. HSIC corresponds to estimating the norm of the cross‐covariance in ![]() , whose empirical (biased) estimator is HSIC := [1/(N − 1)2]Tr(KxKy), where Kx and Ky are kernels working on data from sets

, whose empirical (biased) estimator is HSIC := [1/(N − 1)2]Tr(KxKy), where Kx and Ky are kernels working on data from sets ![]() and

and ![]() . It can be shown that, if the RKHS kernel is universal, such as the RBF or Laplacian kernels, HSIC asymptotically tends to zero when the input and output data are independent, and HSIC = 0 if

. It can be shown that, if the RKHS kernel is universal, such as the RBF or Laplacian kernels, HSIC asymptotically tends to zero when the input and output data are independent, and HSIC = 0 if ![]() . Note that, in practice, the actual HSIC is the Hilbert–Schmidt norm of an operator mapping between potentially infinite‐dimensional spaces, and thus would give rise to an infinitely large matrix. However, due to the kernelization, the empirical HSIC only depends on computable matrices of size N × N. We will come back to the issue of computational cost later.

. Note that, in practice, the actual HSIC is the Hilbert–Schmidt norm of an operator mapping between potentially infinite‐dimensional spaces, and thus would give rise to an infinitely large matrix. However, due to the kernelization, the empirical HSIC only depends on computable matrices of size N × N. We will come back to the issue of computational cost later.

Relation between Hilbert–Schmidt Independence Criterion and Maximum Mean Discrepancy

In Chapter 11 we introduced the MMD for hypothesis testing. We can show that MMD is related to HSIC. Let us define the product space ![]() with a kernel 〈ϕ(x, y), ϕ(x′, y′)〉 = K((x, y), (x′, y′)) = K(x, y)L(x′, y′), and the mean elements are given by

with a kernel 〈ϕ(x, y), ϕ(x′, y′)〉 = K((x, y), (x′, y′)) = K(x, y)L(x′, y′), and the mean elements are given by

and

Therefore, the MMD between these two mean elements is

Relation between Hilbert–Schmidt Independence Criterion and Other Kernel Dependence Measures

HSIC was not the first kernel dependence estimate presented in the literature. Actually, Bach and Jordan (2002) derived a regularized correlation operator from the covariance and cross‐covariance operators, and its largest singular value (the KCCA) was used as a statistic to test independence. Later, Gretton et al. (2005a) proposed the largest singular value of the cross‐covariance operator as an efficient alternative that needs no regularization. This test is called the constrained covariance (COCO) (Gretton et al., 2005a). A variety of empirical kernel quantities derived from bounds on the mutual information that hold near independence were also proposed, namely the kernel generalized variance (KGV) and the kernel mutual information (kMI) (Gretton et al., 2005b). Later, HSIC (Gretton et al., 2005c) was introduced, hence extending COCO by using the entire spectrum of the cross‐covariance operator, not just the largest singular value. As we have seen, a related statistic is the MMD criterion, which tests for statistical differences between embeddings of probability distributions into RKHS. When MMD is applied to the problem of testing for independence, the test statistic reduces to HSIC. A simple comparison between all the methods treated in this section is given in Figure 12.4. Listing 12.2 gives a simple MATLAB implementation comparing Pearson’s correlation and HSIC in this particular example. The book’s repository provides MATLAB tools and links to relevant toolboxes on (kernel) dependence estimation.

Figure 12.4 Dependence estimates for three examples revealing (left) high correlation (and hence high dependence), (middle) high dependence but null correlation, and (right) zero correlation and dependence. The Pearson correlation coefficient R and linear HSIC only capture second‐order statistics (linear correlation), while the rest capture in general higher order dependences. Note that, for MMD, the higher the more divergent (independent), while KGV upper bounds kMI and mutual information.

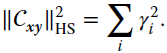

Relations between Hilbert–Schmidt Independence Criterion and the Kernel Multivariate Analysis Framework

HSIC constitutes an interesting framework to study KMVA methods for dimensionality reduction. We summarize the relations between the methods under the HSIC perspective in Table 12.2. For instance, HSIC reduces to PCA for the case of linear kernels. Recall that PCA reduces to find an orthogonal projection matrix V such that the projected data, XV, preserves the maximum variance; that is, ![]() subject to VTV = I, which may be written as follows:

subject to VTV = I, which may be written as follows:

Therefore, PCA can be interpreted as finding V such that its linear kernel has maximum dependence with the kernel obtained from the original data. Assuming that X is again centered, there is no need for including the centering matrix H in the HSIC estimate.

Table 12.2 Relation between dimensionality reduction methods and HSIC, HSIC := Tr(HKxHKy), where all techniques use the orthogonality constraint YTY = I.

| Method | Kx | Ky | Comments |

| PCA (Jolliffe, 1986) | XXT | YYT | — |

| KPCA (Schölkopf et al., 1998) | Kx | YYT | Typically RBF kernels used |

| Maximum variance unfolding (MVU) (Song et al., 2007) | Kx | LLT | Subject to positive definiteness Kx ≽ 0, preservation of local distance structure |

| Multidimensional scaling (MDS) (Cox and Cox, 1994) | YYT | D is the matrix of all pairwise Euclidean distances | |

| Isomap (Tenenbaum et al., 2000) | YYT | Dg is the matrix of all pairwise geodesic distances | |

| Locally linear embedding (LLE) (Roweis and Saul, 2000) | L†, or λmaxI − L | YYT | L = (I − V)(I − V)T, where V is the matrix of locally embedded weights |

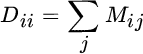

| Laplacian eigenmaps (Belkin and Niyogi, 2003) | L†, or λmaxI − L | YYT | L = D − W, where W is a positive symmetric affinity matrix and D is the corresponding degree matrix |

From HSIC, one can also reach KPCA (Schölkopf et al., 1998) by using a kernel matrix K for the data X and requiring the second random variable y to be orthogonal and unit norm; that is:

where Y is any real‐valued matrix of size N × d. Note that KPCA does not require any other constraint imposed on Y, but for other KMVA methods, as well as for clustering and metric learning techniques, one may require to design Y to include appropriate restrictions.

Maximizing HSIC has also been used in dimensionality reduction with (colored) MVU (Song et al., 2007). This manifold learning method maximizes HSIC between source and target data, and accounts for the manifold structure by imposing local distance constraints on the kernel. In the original version of the MVU, a kernel K is learned via maximizing Tr(K) subject to some constraints. Assuming a similarity/dissimilarity matrix B, one can modify the original MVU by maximizing Tr(KB) instead of Tr(K) subject to the same constraints. This way, one simultaneously maximizes the dependence between the learned kernel K and the kernel B storing our side information. Note that, in this formulation, we center matrix K via one of the constraints in the problem, and thus can exclude matrix H in the empirical estimation of HSIC.

The HSIC framework can also help us to analyze relations to standard dimensionality reduction methods like MDS (Cox and Cox, 1994), Isomap (Tenenbaum et al., 2000), LLE (Roweis and Saul, 2000) and Laplacian eigenmaps (Belkin and Niyogi, 2003) depending on the definition of the kernel K used in Equation 12.7. Ham et al. (2004) pointed out the relation between KPCA and these methods.

Hilbert–Schmidt Component Analysis

The HSIC empirical estimate can be specifically incorporated in feature extraction schemes. For example, the so‐called HSCA method in Daniušis and Vaitkus (2009) iteratively seeks for projections that maximize dependence with the target variables and simultaneously minimize the dependence with previously extracted features, both in HSIC terms. This can be seen as a Rayleigh coefficient that leads to the iterative resolution of the following generalized eigendecomposition problem:

where Kf is a kernel matrix of already extracted projections in the previous iteration. Note that if one is only interested in maximizing source–target dependence in HSIC terms, and uses a linear kernel for the targets Ky = YYT, the problem reduces to

which interestingly is the same problem solved by KOPLS (see Table 12.1). The equivalence means that features extracted with KOPLS are those that maximize statistical dependence (measured by HSIC) between the projected source data and the target data (Izquierdo‐Verdiguier et al., 2012).

Kernel Dimensionality Reduction

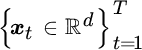

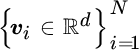

KDR is a supervised feature extraction method that seeks a linear transformation of the data such that it maximizes the conditional HSIC on the labels. Notationally, the input data matrix ![]() , the output (label) matrix is

, the output (label) matrix is ![]() , and

, and ![]() is a projection matrix from the d‐dimensional space to a r‐dimensional space, r ≤ d. Hence, the linear projection is Z = XW, which is constrained to be orthogonal; that is, WTW = I. The optimal W in HSIC terms is obtained by solving the constrained problem

is a projection matrix from the d‐dimensional space to a r‐dimensional space, r ≤ d. Hence, the linear projection is Z = XW, which is constrained to be orthogonal; that is, WTW = I. The optimal W in HSIC terms is obtained by solving the constrained problem

This approach has been followed for both supervised and unsupervised settings:

- Fukumizu et al. (2003, 2004) introduced KDR under the theory of covariance operators (Baker, 1973). KDR reduces to the KGV introduced by Gretton et al. (2005b) as a contrast function for ICA, in which the goal is to minimize mutual information. They showed that KGV is in fact an approximation of the mutual information among the recovered sources around the factorized distributions. The interest in KDR is different: the goal is to maximize the mutual information as a good proxy to the problem of dimensionality reduction. Interestingly, the computational problem involved in KDR is the same as in KICA (Bach and Jordan, 2002).

- The unsupervised KDR (Wang et al., 2010) reduces to seeking W such that the signal X and its (regression‐based) approximation

are mutually independent, given the projection; that is

are mutually independent, given the projection; that is  . The unsupervised KDR method then reduces to

. The unsupervised KDR method then reduces to

.

.The problem is typically solved by gradient‐descent techniques with line search, which constrains the projection matrix W to lie on the Grassmann–Stiefel manifold of WTW = I.

Let us illustrate the performance of the supervised KDR compared with other linear dimensionality reduction methods for the Wine dataset, which is a 13‐dimensional problem obtained from the UCI repository (http://archive.ics.uci.edu/ml/), where here it is used to illustrate projection onto a 2D subspace. Figure 12.5 shows the projection onto the 2D subspace estimated by each method. This example illustrates that optimizing HSIC to achieve linear separability is an alternative valid approach to maximize input–output covariance (PLS) or maximize correlation (CCA). Figure 12.5 can be reproduced by downloading the corresponding MATLAB toolboxes from the book’s repository and executing the script Demo_Fig_14_4.m in the supplementary material provided. A simplified version of the code is shown in Listing 12.3.

Figure 12.5 Projections obtained by different linear projection methods on the Wine data available at the UCI repository (http://archive.ics.uci.edu/ml/). Black and two different grey intensities represent three different classes.

12.3.2 Blind Source Separation Using Kernels

Extracting sources from observed mixed signals without supervision is the problem of BSS; see Chapter 2 for an introductory review to the field. Several approaches exist in the literature to solve the problem, but most of them have relied on ICA (Comon 1994; Gutmann et al. 2014; Hyvärinen et al. 2001). ICA seeks for a basis system where the dimensions of the signal are as independent as possible. Kernel dependence estimates based either on KCCA or HSIC have given rise to kernelized versions of ICA, leveraging better performance at the cost of higher computational cost. An alternative approach to ICA‐like techniques consists of exploiting second‐order BSS methods in an RKH,S like finding orthonormal basis of the submanifold formed by kernel‐mapped data (Harmeling et al., 2003).

Independent Component Analysis

The instantaneous noise‐free ICA model takes the form X = sA, where ![]() is a matrix containing N observations of s sources,

is a matrix containing N observations of s sources, ![]() is the mixing matrix (assumed to have full rank), and

is the mixing matrix (assumed to have full rank), and ![]() contains the observed mixtures. We denote as s and x single rows of matrices S and X respectively, and si is the ith source in s. ICA is based on the assumption that the components si of components si of s, for all i = 1, …, s are mutually statistically independent; that is, the observed vector x depends only on the source vector s at each instant and the source samples s are drawn independently and identically from the pdf

contains the observed mixtures. We denote as s and x single rows of matrices S and X respectively, and si is the ith source in s. ICA is based on the assumption that the components si of components si of s, for all i = 1, …, s are mutually statistically independent; that is, the observed vector x depends only on the source vector s at each instant and the source samples s are drawn independently and identically from the pdf ![]() s. As a conclusion, the mixture samples x are likewise drawn independently and identically from the pdf

s. As a conclusion, the mixture samples x are likewise drawn independently and identically from the pdf ![]() x. The task of ICA is to recover the independent sources via an estimate

x. The task of ICA is to recover the independent sources via an estimate ![]() of the inverse of the mixing matrix A such that the recovered signals

of the inverse of the mixing matrix A such that the recovered signals ![]() have mutually independent components. When the sources s are Gaussian, A can be identified up to an ordering and scaling of the recovered sources via a permutation matrix

have mutually independent components. When the sources s are Gaussian, A can be identified up to an ordering and scaling of the recovered sources via a permutation matrix ![]() and a scaling matrix D. Very often the mixtures X are pre‐whitened via PCA,

and a scaling matrix D. Very often the mixtures X are pre‐whitened via PCA, ![]() , so

, so ![]() , where W contains the whitened observations. Now, assuming that the sources si have zero mean and unit variance, AV is orthogonal and the unmixing model becomes Y = WQ, where Q is an orthogonal unmixing matrix (QQT = I) and

, where W contains the whitened observations. Now, assuming that the sources si have zero mean and unit variance, AV is orthogonal and the unmixing model becomes Y = WQ, where Q is an orthogonal unmixing matrix (QQT = I) and ![]() contains the estimates of the sources. The idea of ICA can also be employed for optimizing CCA‐like problems (for instance, see Gutmann et al. (2014)), where higher order relations are taken into account in order to find the basis to represent different datasets in a canonical space.

contains the estimates of the sources. The idea of ICA can also be employed for optimizing CCA‐like problems (for instance, see Gutmann et al. (2014)), where higher order relations are taken into account in order to find the basis to represent different datasets in a canonical space.

Kernel Independent Component Analysis Using Kernel Canonical Correlation Analysis

The original version of KICA was introduced by Bach and Jordan (2005b), and uses a KCCA‐based contrast function to obtain the unmixing matrix W. For “rich enough” kernels, such as the Gaussian kernel, it was shown that the components of the random vector x are independent if and only if their first canonical correlation in the corresponding RKHS is equal to zero. KICA utilizes a gradient‐descent approach to minimize a KCCA‐based contrast function, taking into account that whitening the features will have the effect of that the unmixing matrix W will have orthonormal vectors. KICA has better performance than the previous three algorithms and also it is more robust against outliers and near‐Gaussianity of the sources. However, these performance improvements come at a higher computational cost. Figure 12.6 shows an example of using KICA to unmix signals that have been mixed using a linear combination. The example can be reproduced using the KICA toolbox linked in the book’s repository and the code in Listing 12.4.

Figure 12.6 Demixing example using KICA. From left to right we show the original source signals, the original (linearly) mixed signals, and the results obtained by KICA and ICA. We indicate the kurtosis in every dimension.

Kernel Independent Component Analysis Using Hilbert–Schmidt Independence Criterion

KICA based on minimizing HSIC as a contrast function has been recently introduced (Shen et al. 2007, 2009). The motivation is clear: in the ICA model, the components si of the sources s are mutually statistically independent if and only if their pdf factorizes ![]() . The problem in this assumption is that pairwise independence does not imply mutual independence (while it holds vice versa). The solution here is that, in the ICA setting, unmixed components can be uniquely identified using only the pairwise independence between components of the recovered sources Y, since pairwise independence between components of Y in this case implies their mutual independence (and thus recovery of the sources S). Hence, by summing all unique pairwise HSIC measures, an HSIC‐based contrast function over the estimated signals Y is defined as

. The problem in this assumption is that pairwise independence does not imply mutual independence (while it holds vice versa). The solution here is that, in the ICA setting, unmixed components can be uniquely identified using only the pairwise independence between components of the recovered sources Y, since pairwise independence between components of Y in this case implies their mutual independence (and thus recovery of the sources S). Hence, by summing all unique pairwise HSIC measures, an HSIC‐based contrast function over the estimated signals Y is defined as

where ![]() is the difference between the kth and the lth samples of the whitened observations, and

is the difference between the kth and the lth samples of the whitened observations, and ![]() represents the empirical expectation over all k and l. The expression of H(Q) reduces to just summing over all possible HSIC estimates of Y. Intuitively, one can see that the goal in KICA is to find an unmixing matrix Q such that the dependence between the estimated unmixed sources QTW is minimal in terms of the HSIC between all pairs of signals. The algorithm was first introduced by Bach and Jordan (2002), and later extended to a fast implementation known as FastKICA that uses an approximate Newton method to perform this optimization (Shen et al. 2007, 2009). The

represents the empirical expectation over all k and l. The expression of H(Q) reduces to just summing over all possible HSIC estimates of Y. Intuitively, one can see that the goal in KICA is to find an unmixing matrix Q such that the dependence between the estimated unmixed sources QTW is minimal in terms of the HSIC between all pairs of signals. The algorithm was first introduced by Bach and Jordan (2002), and later extended to a fast implementation known as FastKICA that uses an approximate Newton method to perform this optimization (Shen et al. 2007, 2009). The fastKICA package is a convenient implementation of the method.

Kernel Blind Source Separation

The previous approaches are based on introducing kernel dependence estimates as contrast functions in ICA. Alternatively, one can follow the standard “kernelization” procedure: first the data are (implicitly) mapped to a high (possibly infinite)‐dimensional kernel feature space, and then a linear algorithm is defined and solved (implicitly) therein by means of the kernel trick. Let us review the two main approaches available in the literature, and their relations to KMVA.

Harmeling et al. (2003) followed the standard kernelization of BSS based on second‐order statistics. In particular, the method exploits temporal decorrelation in Hilbert spaces via the time‐delayed second‐order projection (TDSEP) technique, which relies on simultaneous diagonalization techniques to perform linear blind source separation on the projected data. Therefore, one obtains a number of linear directions of separated nonlinear components in input space. The algorithm was coined kernel TDSEP. After embedding, data form a smaller submanifold in feature space, which is typically smaller than the number of training data points. The approach then tries to adapt to this sort of effective dimension as a preprocessing step and to construct an orthonormal basis of this submanifold. This first step performs standard dimensionality reduction.

In particular, Harmeling et al. (2003) proposed to construct an orthonormal basis in feature spaces from a subset of selected data points therein. Given T observed signals  , and N vectors

, and N vectors  , we define the corresponding kernel matrices Kx = ΦxΦxT and Kx = ΦvΦvT. Now let us assume that the span of Φx is the same as the span of Φv and the rank of Φv is N. Since Φv constitutes a basis, Kv is full rank and has an inverse. The orthonormal basis can thus be defined as V = (ΦvΦvT)−1/2Φv, and hence projecting new data onto the basis reduces simply to Ψx[t] = (ΦvΦvT)−1/2ΦvΦxT[t] = Kv−1Kvx. The definition of the basis could be actually done extracting actually done extracting the most relevant directions in kernel feature spaces via the diagonalization of the full kernel matrix; that is, via KPCA. Nevertheless, the alternative approach to form the basis is very useful for making the subsequent application of BSS linear methods computationally and

, we define the corresponding kernel matrices Kx = ΦxΦxT and Kx = ΦvΦvT. Now let us assume that the span of Φx is the same as the span of Φv and the rank of Φv is N. Since Φv constitutes a basis, Kv is full rank and has an inverse. The orthonormal basis can thus be defined as V = (ΦvΦvT)−1/2Φv, and hence projecting new data onto the basis reduces simply to Ψx[t] = (ΦvΦvT)−1/2ΦvΦxT[t] = Kv−1Kvx. The definition of the basis could be actually done extracting actually done extracting the most relevant directions in kernel feature spaces via the diagonalization of the full kernel matrix; that is, via KPCA. Nevertheless, the alternative approach to form the basis is very useful for making the subsequent application of BSS linear methods computationally and

Kernel Entropy Component Analysis

Kernel entropy component analysis (KECA) was proposed by Jenssen (2010, 2013) to implement a feature extractor according to the entropy components. KECA, like KPCA, is a spectral method based on the kernel similarity matrix. Nevertheless, KECA does not necessarily use the top eigenvalues and eigenvectors of the kernel matrix. Unlike KPCA, which preserves maximally the second‐order statistics of the dataset, KECA is founded on information theory and tries to preserve the maximum Rényi entropy of the input space dataset. KECA has proven useful in, for example, remote sensing (Gómez‐Chova et al., 2012; Luo and Wu, 2012; Luo et al., 2014), face recognition (Shekar et al., 2011), and some other applications (Hu et al. 2013; Jiang et al. 2013; Xie and Guan 2012). Several extensions have been proposed for feature selection (Zhang and Hancock, 2012), class‐dependent feature extraction (Cheng et al., 2014), and SSL as well (Myhre and Jenssen, 2012).

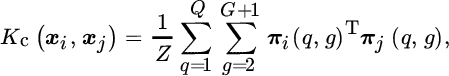

The KECA algorithm relies on the eigendecomposition of the (uncentered) kernel matrix, and sorts the eigenvectors according to the entropy values of the projections. Given a dataset ![]() of dimensionality d, the entropy may be estimated through kernel density estimation (Silverman, 1986) as

of dimensionality d, the entropy may be estimated through kernel density estimation (Silverman, 1986) as ![]() , where V2 is the so‐called information potential (Principe, 2010):

, where V2 is the so‐called information potential (Principe, 2010):

In this expression, Nc ≤ N is the number or retained components and the matrix A is obtained from the (N × N) kernel or Gram matrix K whose entries are the kernel function values K(xi, xj). Equation 12.12 is based on the kernel decomposition introduced in Jenssen (2010):

where E contains the eigenvectors in columns, E = [e1|⋯|eN], and D is a diagonal matrix containing the eigenvalues of K; that is, Dii = λi. An illustrative MATLAB code snippet for KECA is provided in Listing 12.5.

KECA uses the Rényi entropy to sort the basis extracted by PCA. A novel proposal (Izquierdo‐Verdiguier et al., 2016), the optimized KECA (OKECA) method, looks directly for the basis that maximizes the Rényi entropy, therefore maximizing the information using as few components as possible. OKECA is based on a similar idea to ICA, and optimizes an extra rotation matrix on top of PCA directions. The new kernel matrix decomposition is expressed as

where W is an orthonormal linear transformation; that is, QQT = I. In order to solve the OKECA decomposition, OKECA resorts to a gradient‐ascent approach. Note that OKECA is more computationally demanding than KECA because not only requires an eigendecomposition of a kernel matrix but also the gradient ascent procedure to refine the final features. An illustrative MATLAB code snippet for OKECA is provided in Listing 12.6.

In general, both KECA and OKECA are different from (but still intimately related to) KMVA methods. On the one hand, they maintain a probabilistic input space interpretation, seek to capture the entropy of the data in a reduced number of components, and constitute a convergence point between kernel methods and information theoretic learning (Jenssen, 2009). On the other hand, KPCA, KCCA, and KPLS maximize the variance, correlation, or alignment (covariance) with the output variables in kernel feature space respectively. The similarities between these methods arise from the use of a kernel function and from the exploitation of the spectral (eigenvalues and eigenvectors) properties of the corresponding kernel matrices. KECA and OKECA can be used for density estimation. As explained by Girolami (2002b), if the decomposition of the uncentered kernel matrix follows the form K = EDET, where E is orthonormal and D is a diagonal matrix, then the kernel‐based density estimation may be expressed as

where ![]() is the reduced version of E by keeping columns for nf < N, and as usual k* := [K(x*, x1), …, K(x*, xN)]T. Therefore, density estimation

is the reduced version of E by keeping columns for nf < N, and as usual k* := [K(x*, x1), …, K(x*, xN)]T. Therefore, density estimation ![]() can be readily done by fixing a number of extracted features nf. Figure 12.7 illustrates the ability of KECA and OKECA for density estimation in a ring distribution. Note that OKECA concentrates most of the entropy information in the first projection. This agrees with the fact that few components are needed to obtain a good pdf estimation. On the contrary, KECA cannot estimate correctly the pdf using only the first component and actually needs at least five components. We show results for two standard ways to estimate the σ length‐scale parameter: an ML strategy, σML following Duin (1976), and setting σ to 15% of the median distance between points following prescriptions in Jenssen (2010). In both cases OKECA outperforms KECA.

can be readily done by fixing a number of extracted features nf. Figure 12.7 illustrates the ability of KECA and OKECA for density estimation in a ring distribution. Note that OKECA concentrates most of the entropy information in the first projection. This agrees with the fact that few components are needed to obtain a good pdf estimation. On the contrary, KECA cannot estimate correctly the pdf using only the first component and actually needs at least five components. We show results for two standard ways to estimate the σ length‐scale parameter: an ML strategy, σML following Duin (1976), and setting σ to 15% of the median distance between points following prescriptions in Jenssen (2010). In both cases OKECA outperforms KECA.

Figure 12.7 Density estimation for the ring dataset by KECA and OKECA using different number of extracted features n and estimates of the kernel length‐scale parameter σ (ML or squared distance). Black color represents low pdf values and yellow color high pdf values.

and estimates of the kernel length‐scale parameter σ (ML or squared distance). Black color represents low pdf values and yellow color high pdf values.

12.4 Extensions for Large‐Scale and Semi‐supervised Problems

An important problem in kernel‐based feature extraction is related to the computational cost. Since Kx is of size N × N, method complexity scales quadratically with N in terms of memory, and cubically with respect to the computation time. The opposite situation often occurs as well: when N is small, the extracted features may be useless, especially for high‐dimensional ![]() (Abrahamsen and Hansen, 2011). These issues limit the applicability of kernel‐based feature extraction methods in real‐life scenarios with either very large or very small labeled datasets. We next summarize some extensions to deal with large‐scale problems and semi‐supervised situations in which few labeled data are available.

(Abrahamsen and Hansen, 2011). These issues limit the applicability of kernel‐based feature extraction methods in real‐life scenarios with either very large or very small labeled datasets. We next summarize some extensions to deal with large‐scale problems and semi‐supervised situations in which few labeled data are available.

12.4.1 Efficiency with the Incomplete Cholesky Decomposition

Very often, feature extraction methods on kernels rely on the calculation of traces on products of kernel matrices; for instance, see HSCA or KICA that try to maximize the HSIC measure between some random variables. This operation is simple yet computationally demanding when a high number of samples are available. In order to speed this operation up, one can rely on sparse approximations of the basis spanning the solution (as we will see in the next section), by intelligent dataset subsampling (e.g., via AL), or Nyström approximations of the kernel matrices. A convenient alternative is to exploit low‐rank approximations of the kernel matrices via Cholesky decomposition.

Notationally, given two kernel matrices K1 and K2, the cost associated with the operation Tr(K1K2) can be greatly reduced via incomplete Cholesky estimation. An incomplete Cholesky decomposition of a Gram matrix Ki yields a low‐rank approximation, ![]() that greedily minimizes

that greedily minimizes ![]() . The cost of computing the N × r matrix Gi is

. The cost of computing the N × r matrix Gi is ![]() , with r ≪ N. Greater values of r result in a more accurate reconstruction of Ki. Interestingly, it is well known that the spectrum of a Gram matrix based on the (RBF) Gaussian kernel generally decays rapidly, and a small r yields a very good approximation. Plugging the Cholesky eigendecomposition of the two kernels leads to

, with r ≪ N. Greater values of r result in a more accurate reconstruction of Ki. Interestingly, it is well known that the spectrum of a Gram matrix based on the (RBF) Gaussian kernel generally decays rapidly, and a small r yields a very good approximation. Plugging the Cholesky eigendecomposition of the two kernels leads to

which involves an equivalent trace of a much smaller matrix, so we can avoid computing the N × N matrices and just do r1 × r2 matrix calculations. This technique has been widely used in KMVA and also in kernel dependence estimation methods such as the HSIC (Gretton et al., 2005b).

12.4.2 Efficiency with Random Fourier Features

We have already seen the effectiveness of approximating shift‐invariant kernels, using projections on random Fourier features (Rahimi and Recht, 2007, 2009). This technique was illustrated in classification and regression settings; see Section 10.4.2. Let us show the application of the approximation in HSIC presented in Pérez‐Suay and Camps‐Valls (2017). Following previous notation, recall that ![]() can be approximated with the explicitly mapped data via

can be approximated with the explicitly mapped data via ![]() , and collectively grouped in the matrix

, and collectively grouped in the matrix ![]() , and will be denoted as

, and will be denoted as ![]() . The familiar SE Gaussian kernel

. The familiar SE Gaussian kernel ![]() can be approximated using D random features,

can be approximated using D random features, ![]() , 1 ≤ i ≤ D. The randomized HSIC (RHSIC) for fast dependence estimation in large‐scale problems is developed as follows: kernel matrices Kx and Ky are approximated using complex exponentials of projected data matrices,

, 1 ≤ i ≤ D. The randomized HSIC (RHSIC) for fast dependence estimation in large‐scale problems is developed as follows: kernel matrices Kx and Ky are approximated using complex exponentials of projected data matrices, ![]() and

and ![]() and

and ![]() , where

, where ![]() , both drawn from a Gaussian. Now, plugging the corresponding kernel approximations,

, both drawn from a Gaussian. Now, plugging the corresponding kernel approximations, ![]() and

and ![]() , into Equation 12.7, and after manipulating terms, we obtain

, into Equation 12.7, and after manipulating terms, we obtain

which corresponds to the Hilbert–Schmidt norm of the randomized cross‐covariance operator, ![]() , which can be computed explicitly and just once. The computational complexity of RHSIC reduces considerably over the original HSIC. A naive implementation of HSIC runs

, which can be computed explicitly and just once. The computational complexity of RHSIC reduces considerably over the original HSIC. A naive implementation of HSIC runs ![]() , while the RHSIC cost is

, while the RHSIC cost is ![]() , where D = Dx = Dy, since computing matrices Z only involves matrix multiplications and exponentials. We want to note that other KMVA methods have enjoyed use of this randomization technique to expedite performance, such as KCCA and KPCA (López‐Paz et al., 2014).

, where D = Dx = Dy, since computing matrices Z only involves matrix multiplications and exponentials. We want to note that other KMVA methods have enjoyed use of this randomization technique to expedite performance, such as KCCA and KPCA (López‐Paz et al., 2014).

12.4.3 Sparse Kernel Feature Extraction

To address the problems of large‐scale computation and dense solutions in KMVA, several solutions have been proposed. The family of KMVA sparse methods tries to obtain solutions that can be expressed as a combination of a reduced subset of the training data, and therefore require only r kernel evaluations per sample (being r ≪ N) for feature extraction. In contrast to the many linear MVA algorithms that induce sparsity with respect to the original variables, we will only review methods attaining sparse solutions in terms of the samples (i.e., sparsity in the αi vectors).

Roughly speaking, sparsification methods can be classified into low‐rank approximation methods that aim at working with reduced r × r matrices (r ≪ N), and reduced set methods that work with N × r matrices. Following the first approach, the Nyström low‐rank approximation of an N × N kernel matrix KNN is expressed as ![]() , where subscripts indicate row and column dimensions. This method was first used in Gaussian processes, and later by Hoegaerts et al. (2005) in order to approximate the feature mapping instead of the kernel, which leads to sparse versions of KPLS and KCCA.

, where subscripts indicate row and column dimensions. This method was first used in Gaussian processes, and later by Hoegaerts et al. (2005) in order to approximate the feature mapping instead of the kernel, which leads to sparse versions of KPLS and KCCA.

Among the reduced set methods, a sparse KPCA (sKPCA) was proposed by Tipping (2001), where the sparsity in the representation is obtained by assuming a generative model for the data in ![]() that follows a normal distribution and includes a noise term with variance vn. The ML estimation of the covariance matrix is shown to depend on just a subset of the training data, and so it does the resulting solution. A sparse KPLS (sKPLS) was introduced by Momma and Bennett (2003). The method computes the projections with a reduced set of the training samples. Each one of the projections is found using a loss that is similar to the ε insensitive loss used in SVR. The sparsification is induced via a multistep adaptation with high computational burden.

that follows a normal distribution and includes a noise term with variance vn. The ML estimation of the covariance matrix is shown to depend on just a subset of the training data, and so it does the resulting solution. A sparse KPLS (sKPLS) was introduced by Momma and Bennett (2003). The method computes the projections with a reduced set of the training samples. Each one of the projections is found using a loss that is similar to the ε insensitive loss used in SVR. The sparsification is induced via a multistep adaptation with high computational burden.

The algorithms in Tipping (2001) and Momma and Bennett (2003), however, still require the computation of the full kernel matrix during the training.

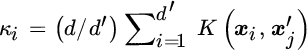

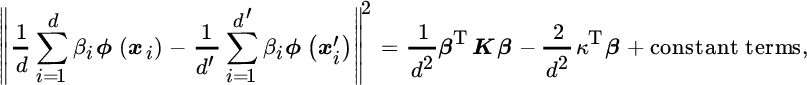

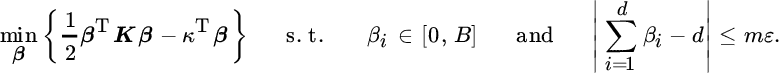

A reduced complexity KOPLS (rKOPLS) was proposed by Arenas‐García et al. (2007) by imposing sparsity in the projection vectors representation a priori, ![]() , where Φr is a subset of the training data containing r samples (r ≪ N) and β is the new argument for the maximization problem, which now becomes

, where Φr is a subset of the training data containing r samples (r ≪ N) and β is the new argument for the maximization problem, which now becomes

Since kernel matrix KrN = ΦrΦT involves the inner products in ![]() of all training points with the patterns in the reduced set, this method still takes into account all data during the training phase, and is therefore different from simple subsampling. This “sparsification” procedure avoids the computation of the full kernel matrix at any step of the algorithm. An additional advantage of this method is that matrices

of all training points with the patterns in the reduced set, this method still takes into account all data during the training phase, and is therefore different from simple subsampling. This “sparsification” procedure avoids the computation of the full kernel matrix at any step of the algorithm. An additional advantage of this method is that matrices ![]() and

and ![]() are both of size r × r, and they can be computed as sums over the training data. This fact makes the storage space grow quadratically with r. Also, there is an implicit regularization imposed by the sparsification, which decreases the overfitting risk of the method. Reduced complexity versions of KPCA and KKCA are shown in the experimental section that use the same sparsification method, and will be referred as rKPCA and rKCCA.

are both of size r × r, and they can be computed as sums over the training data. This fact makes the storage space grow quadratically with r. Also, there is an implicit regularization imposed by the sparsification, which decreases the overfitting risk of the method. Reduced complexity versions of KPCA and KKCA are shown in the experimental section that use the same sparsification method, and will be referred as rKPCA and rKCCA.

Interestingly, the extension to KPLS2 is not straightforward, since the deflation step would still require the full kernel matrix KNN. Alternatively, two sparse KPLS schemes were presented by Dhanjal et al. (2009) under the name of sparse maximal alignment (SMA) and sparse maximal covariance (SMC). Here, KPLS iteratively estimates projections that either maximize the kernel alignment or the covariance of the projected data and the true labels.

Table 12.3 summarizes some computational and implementation issues of the aforementioned sparse KMVA methods, and of standard nonsparse KMVA and linear methods. Some aspects that can be helpful to choose an algorithm for a particular application can be obtained from an analysis of the properties of each algorithm. An important step is to choose an adequate kernel and parameters. KMVA methods can be adjusted using cross‐validation to reduce overfitting at the expense of an increased computational burden, but regularization through sparsification can help to further reduce overfitting. Also, most methods can be implemented as either eigenvalue or generalized eigenvalue problems, whose complexity typically scales cubically with the size of the matrices analyzed. Therefore, both for memory and computational reasons, only linear MVA and the sparse approaches from Arenas‐García et al. (2007) and Dhanjal et al. (2009) are affordable when dealing with large datasets. A final advantage of sparse KMVA is the reduced number of kernel evaluations to extract features for new out‐of‐the‐sample data.

Table 12.3 Main properties of (K)MVA methods. Computational complexity and implementation issues are categorized for the considered dense and sparse methods in terms of the free parameters, number of kernel evaluations (KEs) during training, and storage requirements. Notation: N refers to the size of the labeled dataset, while d and m are the dimensions of the input and output spaces respectively.

| Method | Parameters | KE (training) | Storage requirements |

| PCA | None | None | |

| PLS | None | None | |

| CCA | None | None | |

| OPLS | None | None | |

| KPCA | Kernel | N2 | |

| KPLS | Kernel | N2 | |

| KCCA | Kernel | N2 | |

| KOPLS | Kernel | N2 | |