As we study the literature of digital signal processing, we'll encounter some creative techniques that professionals use to make their algorithms more efficient. These practical techniques are straightforward examples of the philosophy “don't work hard, work smart,” and studying them will give us a deeper understanding of the underlying mathematical subtleties of DSP. In this chapter, we present a collection of these tricks of the trade, in no particular order, and explore several of them in detail because doing so reinforces the lessons we've learned in previous chapters.

Frequency translation is often called for in digital signal processing algorithms. There are simple schemes for inducing frequency translation by 1/2 and 1/4 of the signal sequence sample rate. Let's take a look at these mixing schemes.

First we'll consider a technique for frequency translating an input sequence by fs/2 by merely multiplying the sequence by (–1)^n = 1,–1,1,–1, ..., where fs is the signal sample rate in Hz. This process may seem a bit mysterious at first, but it can be explained in a straightforward way if we review Figure 13-1(a). There we see that multiplying a time-domain signal sequence by the (–1)^n mixing sequence is equivalent to multiplying the signal sequence by a sampled cosinusoid, where the mixing sequence samples are shown as the dots in Figure 13-1(a). Because the mixing sequence's cosine repeats every two sample values, its frequency is fs/2. Figure 13-1(b) and (c) show the discrete Fourier transform (DFT) magnitude and phase of a 32-sample (–1)^n sequence. As such, the right half of those figures represents the negative frequency range.

Figure 13-1. Mixing sequence comprising (–1)^n = 1,–1,1,–1, etc.: (a) time-domain sequence; (b) frequency-domain magnitudes for 32 samples; (c) frequency-domain phase.

Let's demonstrate this (–1)^n mixing by an example. Consider a real x(n) signal sequence having 32 samples of the sum of three sinusoids whose |X(m)| frequency magnitude and φ(m) phase spectra are as shown in Figure 13-2(a) and (b). If we multiply that time signal sequence by (–1)^n, the resulting x_{1,–1}(n) time sequence will have the magnitude and phase spectra shown in Figure 13-2(c) and (d). Multiplying a time signal by our (–1)^n cosine shifts half its spectral energy up by fs/2 and half its spectral energy down by fs/2. Notice in these non-circular frequency depictions that as we count up, or down, in frequency we wrap around the end points.
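
The sign-flipping translation can be checked numerically. The following is a minimal sketch, not the book's example: it assumes a hypothetical single-sinusoid test signal centered on bin 3 and uses a naive DFT helper (`dft`, introduced here for illustration) in place of an FFT.

```python
import cmath
import math

def dft(x):
    """Naive N-point DFT, adequate for short illustrative sequences."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * math.pi * n * m / N) for n in range(N))
            for m in range(N)]

N = 32
# Hypothetical test signal: one real sinusoid centered exactly on bin 3.
x = [math.cos(2 * math.pi * 3 * n / N) for n in range(N)]

# "Multiply" by (-1)^n by negating every odd-indexed sample; no multiplier needed.
x_shifted = [-v if n % 2 else v for n, v in enumerate(x)]

X = dft(x)
X_shifted = dft(x_shifted)

# Every bin of the translated spectrum equals the original bin N/2 away (with wraparound).
max_diff = max(abs(X_shifted[m] - X[(m - N // 2) % N]) for m in range(N))
peaks = sorted(m for m in range(N) if abs(X_shifted[m]) > 1.0)
print(peaks)   # bins holding the translated spectral peaks
```

The original peaks at bins 3 and 29 move by N/2 = 16 bins, wrapping around the end of the spectrum, which is the behavior described for Figure 13-2.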

Figure 13-2. A signal and its frequency translation by fs/2: (a) original signal magnitude spectrum; (b) original phase; (c) the magnitude spectrum of the translated signal; (d) translated phase.

Here's a terrific opportunity for the DSP novice to convolve the (–1)^n spectrum in Figure 13-1 with the X(m) spectrum to obtain the frequency-translated X_{1,–1}(m) signal spectrum. Please do so; that exercise will help you comprehend the nature of discrete sequences and their time and frequency-domain relationships by way of the convolution theorem.

Remember now, we didn't really perform any explicit multiplications. The whole idea here is to avoid multiplications; we merely changed the sign of alternating x(n) samples to get x_{1,–1}(n). One way to look at the X_{1,–1}(m) magnitudes in Figure 13-2(c) is to see that multiplication by the (–1)^n mixing sequence flips the positive frequency band of X(m) [X(0) to X(16)] about the fs/4 Hz point, and flips the negative frequency band of X(m) [X(17) to X(31)] about the –fs/4 Hz sample. This process can be used to invert the spectra of real signals when bandpass sampling is used as described in Section 2.4. By the way, in the DSP literature be aware that some clever authors may represent the (–1)^n sequence with its equivalent expressions of

$$(-1)^n = \cos(\pi n) = e^{j\pi n}. \tag{13-1}$$

Two other simple mixing sequences form the real and imaginary parts of a complex –fs/4 oscillator used for frequency down-conversion to obtain a quadrature version (complex and centered at 0 Hz) of a real bandpass signal originally centered at fs/4. The real (in-phase) mixing sequence is cos(πn/2) = 1,0,–1,0, etc. shown in Figure 13-3(a). That mixing sequence's quadrature companion is –sin(πn/2) = 0,–1,0,1, etc. as shown in Figure 13-3(b). The spectral magnitudes of those two sequences are identical, as shown in Figure 13-3(c), but their phase spectra have a 90° shift relationship (what we call quadrature).

Figure 13-3. Quadrature mixing sequences for downconversion by fs/4: (a) in-phase mixing sequence; (b) quadrature phase mixing sequence; (c) the frequency magnitudes of both sequences for N = 32 samples; (d) the phase of the cosine sequence; (e) phase of the sine sequence.

If we multiply the x(n) sequence whose spectrum is that in Figure 13-2(a) and (b) by the in-phase (cosine) mixing sequence, the product will have the I(m) spectrum shown in Figures 13-4(a) and (b). Again, X(m)'s spectral energy is translated up and down in frequency, only this time the translation is by ±fs/4. Multiplying x(n) by the quadrature phase (sine) sequence yields the Q(m) spectrum in Figure 13-4(a) and (c).

Figure 13-4. Spectra after translation down by fs/4: (a) I(m) and Q(m) spectral magnitudes; (b) phase of I(m) ; (c) phase of Q(m).

Because their time sample values are merely 1, –1, and 0, the quadrature mixing sequences are useful because downconversion by fs/4 can be implemented without multiplication. That's why these mixing sequences are of so much interest: downconversion of an input time sequence is accomplished merely with data assignment, or signal routing.

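To downconvert a general x(n) = xreal(n) + jximag(n) sequence by fs/4, that is, to multiply it by the repeating sequence 1, –j, –1, j, the value assignments are:

$$\begin{aligned}
x_{new}(0) &= x_{real}(0) + jx_{imag}(0)\\
x_{new}(1) &= x_{imag}(1) - jx_{real}(1)\\
x_{new}(2) &= -x_{real}(2) - jx_{imag}(2)\\
x_{new}(3) &= -x_{imag}(3) + jx_{real}(3)\\
&\text{repeat for the following samples.}
\end{aligned} \tag{13-2}$$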

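If your implementation is hardwired gates, the above data assignments are performed by means of routing signals (and their negatives). Although we've focused on downconversion so far, it's worth mentioning that upconversion of a general x(n) sequence by fs/4, that is, multiplication by the repeating sequence 1, j, –1, –j, can be performed with the following data assignments:

$$\begin{aligned}
x_{new}(0) &= x_{real}(0) + jx_{imag}(0)\\
x_{new}(1) &= -x_{imag}(1) + jx_{real}(1)\\
x_{new}(2) &= -x_{real}(2) - jx_{imag}(2)\\
x_{new}(3) &= x_{imag}(3) - jx_{real}(3)\\
&\text{repeat for the following samples.}
\end{aligned} \tag{13-3}$$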
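
As a minimal sketch of fs/4 translation by data routing, the Python below swaps and negates real and imaginary parts instead of multiplying. The function names `downconvert_fs4` and `upconvert_fs4` are hypothetical, introduced only for illustration; the routing assumes the mixing sequences 1, –j, –1, j (down) and 1, j, –1, –j (up).

```python
import cmath
import math

def downconvert_fs4(x):
    """Translate a complex sequence down by fs/4 using only routing and negation."""
    out = []
    for n, v in enumerate(x):
        re, im = v.real, v.imag
        phase = n % 4
        if phase == 0:
            out.append(complex(re, im))     # times  1
        elif phase == 1:
            out.append(complex(im, -re))    # times -j
        elif phase == 2:
            out.append(complex(-re, -im))   # times -1
        else:
            out.append(complex(-im, re))    # times  j
    return out

def upconvert_fs4(x):
    """Translate a complex sequence up by fs/4 using only routing and negation."""
    out = []
    for n, v in enumerate(x):
        re, im = v.real, v.imag
        phase = n % 4
        if phase == 0:
            out.append(complex(re, im))     # times  1
        elif phase == 1:
            out.append(complex(-im, re))    # times  j
        elif phase == 2:
            out.append(complex(-re, -im))   # times -1
        else:
            out.append(complex(im, -re))    # times -j
    return out

# Hypothetical test data, compared against explicit complex mixing.
x = [complex(n + 1, 2 * n - 3) for n in range(8)]
reference = [v * cmath.exp(-1j * math.pi * n / 2) for n, v in enumerate(x)]
routed = downconvert_fs4(x)
```

Because only sign changes and swaps are involved, upconverting the downconverted sequence returns the original samples exactly.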

There's an efficient way to perform the complex down-conversion by fs/4, and subsequent filtering, of a real signal that we considered for the quadrature sampling scheme in Section 8.9. We can use a novel technique to greatly reduce the computational workload of the linear-phase lowpass filters[1–3]. In addition, decimation of the complex down-converted sequence by a factor of two is inherent, with no effort on our part, in this process.

Considering Figure 13-5(a), notice that if an original x(n) sequence was real-only, and its spectrum is centered at fs/4, multiplying x(n) by cos(πn/2) = 1,0,–1,0, ... for the in-phase path and –sin(πn/2) = 0,–1,0,1, ... for the quadrature phase path to down-convert x(n)'s spectrum to 0 Hz yields the new complex sequence xnew(n) = xi(n) + jxq(n), or

$$x_{new}(n) = x(0),\ -jx(1),\ -x(2),\ jx(3),\ x(4),\ -jx(5),\ \ldots \tag{13-4}$$

Figure 13-5. Down-conversion by fs/4 and filtering: (a) the process; (b) the data in the in-phase filter; (c) data within the quadrature phase filter.

Next, we want to lowpass filter (LPF) both the xi(n) and xq(n) sequences followed by decimation by a factor of two.

Here's the trick. Let's say we're using five-tap FIR filters. At the n = 4 time index, the data residing in the two lowpass filters would be that shown in Figure 13-5(b) and (c). Due to the alternating zero-valued samples in the xi(n) and xq(n) sequences, we see that only five non-zero multiplies are being performed at this time instant. Those computations, at time index n = 4, are shown in the n = 4 entry of the rightmost column in Table 13-1. Because we're decimating by two, we ignore the time index n = 5 computations. The necessary computations during the next time index (n = 6) are given in the n = 6 entry of Table 13-1, where again only five non-zero multiplies are computed.

A review of Table 13-1 tells us we can multiplex the real-valued x(n) sequence, multiply the multiplexed sequences by the repeating mixing sequence 1,–1, ..., etc., and apply the resulting xi(n) and xq(n) sequences to two filters, as shown in Figure 13-6(a). Those two filters have decimated coefficients in the sense that their coefficients are the alternating h(k) coefficients from the original lowpass filter in Figure 13-5. The two new filters are depicted in Figure 13-6(b), showing the necessary computations at time index n = 4. Using this new process, we've reduced our multiplication workload by a factor of two. The original data multiplexing in Figure 13-6(a) is what implemented our desired decimation by two.
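
The equivalence between the straightforward process and the multiplexed one can be sketched in a few lines. This is an illustration under stated assumptions: the 5-tap coefficients and input data are hypothetical, and the helper names `brute_force` and `efficient` are introduced here, not taken from the references.

```python
def brute_force(x, h):
    """Mix by the fs/4 sequences, FIR filter both paths, keep even-indexed outputs."""
    cos_seq = [1, 0, -1, 0]    # cos(pi*n/2)
    sin_seq = [0, -1, 0, 1]    # -sin(pi*n/2)
    xi = [v * cos_seq[n % 4] for n, v in enumerate(x)]
    xq = [v * sin_seq[n % 4] for n, v in enumerate(x)]

    def fir(seq, n):
        return sum(h[k] * seq[n - k] for k in range(len(h)) if n - k >= 0)

    even_n = range(0, len(x), 2)
    return [fir(xi, n) for n in even_n], [fir(xq, n) for n in even_n]

def efficient(x, h):
    """Demultiplex x(n), apply the 1,-1,... signs, filter with alternating taps."""
    even = [v if j % 2 == 0 else -v for j, v in enumerate(x[0::2])]  # x(0), -x(2), x(4), ...
    odd = [-v if j % 2 == 0 else v for j, v in enumerate(x[1::2])]   # -x(1), x(3), -x(5), ...
    h_even, h_odd = h[0::2], h[1::2]                                 # h0,h2,h4 and h1,h3
    i_out, q_out = [], []
    for m in range(len(even)):
        i_out.append(sum(c * even[m - k] for k, c in enumerate(h_even) if m - k >= 0))
        # Odd-indexed samples sit one input sample behind, hence the extra unit delay.
        q_out.append(sum(c * odd[m - 1 - k] for k, c in enumerate(h_odd)
                         if 0 <= m - 1 - k < len(odd)))
    return i_out, q_out

x = [3, -1, 4, 1, -5, 9, 2, -6, 5, 3]   # hypothetical real input samples
h = [0.1, 0.25, 0.3, 0.25, 0.1]         # hypothetical 5-tap lowpass coefficients
i_ref, q_ref = brute_force(x, h)
i_eff, q_eff = efficient(x, h)
```

The brute-force path performs ten multiplies per retained output pair, half of them by zero; the multiplexed path performs only the five non-zero ones and never computes the discarded odd-index outputs.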

Figure 13-6. Efficient down-conversion, filtering and decimation: (a) process block diagram; (b) the modified filters and data at time n = 4; (c) process when a half-band filter is used.

Table 13-1. Filter Data and Necessary Computations after Decimation by Two

| Time | Path | Data at h0 | Data at h1 | Data at h2 | Data at h3 | Data at h4 | Necessary computations |
|---|---|---|---|---|---|---|---|
| n = 0 | in-phase | x(0) | – | – | – | – | i(0) = x(0)h0 |
| | quadrature | 0 | – | – | – | – | q(0) = 0 |
| n = 2 | in-phase | –x(2) | 0 | x(0) | – | – | i(2) = x(0)h2 – x(2)h0 |
| | quadrature | 0 | –x(1) | 0 | – | – | q(2) = –x(1)h1 |
| n = 4 | in-phase | x(4) | 0 | –x(2) | 0 | x(0) | i(4) = x(0)h4 – x(2)h2 + x(4)h0 |
| | quadrature | 0 | x(3) | 0 | –x(1) | 0 | q(4) = –x(1)h3 + x(3)h1 |
| n = 6 | in-phase | –x(6) | 0 | x(4) | 0 | –x(2) | i(6) = –x(2)h4 + x(4)h2 – x(6)h0 |
| | quadrature | 0 | –x(5) | 0 | x(3) | 0 | q(6) = x(3)h3 – x(5)h1 |
| n = 8 | in-phase | x(8) | 0 | –x(6) | 0 | x(4) | i(8) = x(4)h4 – x(6)h2 + x(8)h0 |
| | quadrature | 0 | x(7) | 0 | –x(5) | 0 | q(8) = –x(5)h3 + x(7)h1 |

Here's another feature of this efficient down-conversion structure. If half-band filters are used in Figure 13-5(a), then only one of the coefficients in the modified quadrature lowpass filter is non-zero. This means we can implement the quadrature-path filtering as K unit delays, a single multiply by the original half-band filter's center coefficient, followed by another K delay as depicted in Figure 13-6(c). For an original N-tap half-band filter, K is the integer part of N/4. If the original half-band filter's h(N–1)/2 center coefficient is 0.5, as is often the case, we can implement its multiply by an arithmetic right shift of the delayed xq(n).

This down-conversion process is indeed slick. Here's another attribute. If the original lowpass filter in Figure 13-5(a) has an odd number of taps, the coefficients of the modified filters in Figure 13-6(b) will be symmetrical and we can use the folded FIR filter scheme (Section 13.7) to reduce the number of multipliers (at the expense of additional adders) by almost another factor of two!

Finally, if we need to invert the output xc(n') spectrum, there are two ways to do so. We can negate the 1,–1, sequence driving the mixer in the quadrature path, or we can swap the order of the single unit delay and the mixer in the quadrature path.

The quadrature processing techniques employed in spectrum analysis, computer graphics, and digital communications routinely require high speed determination of the magnitude of a complex number (vector V) given its real and imaginary parts, i.e., the in-phase part I and the quadrature-phase part Q. This magnitude calculation requires a square root operation because the magnitude of V is

$$|V| = \sqrt{I^2 + Q^2}. \tag{13-5}$$

Assuming that the sum I² + Q² is available, the problem is to perform the square root operation efficiently.

There are several ways to obtain square roots. The optimum technique depends on the capabilities of the available hardware and software. For example, when performing a square root using a high-level software language, we employ whatever software square root function is available. Although accurate, software routines can be very slow. By contrast, if a system must accomplish a square root operation in 50 nanoseconds, high-speed magnitude approximations are required[4,5]. Let's look at a neat magnitude approximation scheme that's particularly hardware efficient.

There is a technique called the αMax+βMin (read as “alpha max plus beta min”) algorithm for calculating the magnitude of a complex vector.[†] It's a linear approximation to the vector magnitude problem that requires the determination of which orthogonal vector, I or Q, has the greater absolute value. If the maximum absolute value of I or Q is designated by Max, and the minimum absolute value of either I or Q is Min, an approximation of |V| using the αMax+βMin algorithm is expressed as

$$|V| \approx \alpha\,\text{Max} + \beta\,\text{Min}. \tag{13-6}$$

There are several pairs for the α and β constants that provide varying degrees of vector magnitude approximation accuracy to within 0.1 dB[4,7]. The αMax+βMin algorithms in Reference [10] determine a vector magnitude at whatever speed it takes a system to perform a magnitude comparison, two multiplications, and one addition. But those algorithms require, as a minimum, a 16-bit multiplier to achieve reasonably accurate results. If, however, hardware multipliers are not available, all is not lost. By restricting the α and β constants to reciprocals of integer powers of two, Eq. (13-6) lends itself well to implementation in binary integer arithmetic. A prevailing application of the αMax+βMin algorithm uses α = 1.0 and β = 0.5[8–10]. The 0.5 multiplication operation is performed by shifting the minimum quadrature vector magnitude, Min, to the right by one bit. We can gauge the accuracy of any vector magnitude estimation algorithm by plotting its error as a function of vector phase angle. Let's do that. The αMax+βMin estimate for a complex vector of unity magnitude, using

$$|V| \approx \text{Max} + \frac{\text{Min}}{2} \tag{13-7}$$

over the vector angular range of 0 to 90 degrees, is shown as the solid curve in Figure 13-7. (The curves in Figure 13-7 repeat every 90°.)

An ideal estimation curve for a unity magnitude vector would have a value of one. We'll use this ideal estimation curve as a yardstick to measure the merit of various αMax+βMin algorithms. Let's make sure we know what the solid curve in Figure 13-7 is telling us. It indicates that a unity magnitude vector oriented at an angle of approximately 26° will be estimated by Eq. (13-7) to have a magnitude of 1.118 instead of the correct magnitude of one. The error then, at 26°, is 11.8%, or 0.97 dB. Analyzing the entire solid curve in Figure 13-7 results in an average error, over the 0 to 90° range, of 8.6% (0.71 dB). Figure 13-7 also presents the error curves for the α = 1 and β = 1/4, and the α = 1 and β = 3/8 values.
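
The error figures quoted above can be reproduced with a short floating-point sweep. This is a minimal sketch; the function names and the 0.01° step size are choices made here for illustration.

```python
import math

def alpha_max_beta_min(i, q, alpha=1.0, beta=0.5):
    """Approximate |I + jQ| as alpha*Max + beta*Min."""
    big = max(abs(i), abs(q))
    small = min(abs(i), abs(q))
    return alpha * big + beta * small

def error_stats(alpha, beta, steps=9000):
    """Largest and average percent error for a unit vector swept over 0 to 90 degrees."""
    errs = []
    for k in range(steps + 1):
        theta = math.radians(90.0 * k / steps)
        est = alpha_max_beta_min(math.cos(theta), math.sin(theta), alpha, beta)
        errs.append(100.0 * (est - 1.0))
    worst = max(errs, key=abs)
    return worst, sum(errs) / len(errs)

worst, average = error_stats(1.0, 0.5)
print(round(worst, 1), round(average, 1))
```

For α = 1 and β = 1/2 the worst-case error occurs near 26.6°, where the estimate is about 1.118 for a unit-magnitude vector.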

Although the values for α and β in Figure 13-7 yield rather accurate vector magnitude estimates, there are other values for α and β that deserve our attention because they result in smaller error standard deviations. Consider the α = 7/8 and β = 7/16 pair, whose error curve is the solid curve in Figure 13-8. A further improvement can be obtained using α = 15/16 and β = 15/32, whose error is shown by the dashed curve in Figure 13-8. The α = 15/16 and β = 15/32 pair gives rather good results when compared to the optimum floating point values of α = 0.96043387 and β = 0.397824735 reported in Reference [11], whose error is the dotted curve in Figure 13-8.

Figure 13-8. αMax+βMin estimates for α = 7/8, β = 7/16; α = 15/16, β = 15/32; and α = 0.96043387, β = 0.397824735.

Although using α = 15/16 and β = 15/32 appears to require two multiplications and one addition, its digital hardware implementation can be straightforward, as shown in Figure 13-9. The diagonal lines, labeled 1 for example, denote a hardwired shift of one bit to the right to implement a divide-by-two operation by truncation. Likewise, the 4 symbol indicates a right shift by four bits to realize a divide-by-16 operation. The |I|>|Q| control line is TRUE when the magnitude of I is greater than the magnitude of Q, so that Max = |I| and Min = |Q|. This condition enables the registers to apply the values |I| and |Q|/2 to the adder. When |I| > |Q| is FALSE, the registers apply the values |Q| and |I|/2 to the adder. Notice that the output of the adder, Max + Min/2, is the result of Eq. (13-7). The α = 15/16, β = 15/32 estimate is then obtained by subtracting (Max + Min/2)/16 from Max + Min/2.

In Figure 13-9, all implied multiplications are performed by hardwired bit shifting and the total execution time is limited only by the delay times associated with the hardware components.
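
A software analogue of this shift-and-add structure might look like the sketch below. It assumes integer I and Q inputs and models the hardwired shifts with Python's `>>` operator; the function name is hypothetical.

```python
def mag_estimate_15_16(i, q):
    """Integer |V| estimate of 15(Max + Min/2)/16 using truncating right shifts."""
    abs_i, abs_q = abs(i), abs(q)
    if abs_i > abs_q:                 # the |I| > |Q| control line
        big, small = abs_i, abs_q
    else:
        big, small = abs_q, abs_i
    total = big + (small >> 1)        # adder output: Max + Min/2
    return total - (total >> 4)       # subtract (Max + Min/2)/16

print(mag_estimate_15_16(-30, 40))    # true magnitude is 50
```

Each shift truncates, so the result can differ slightly from the floating-point value of 15(Max + Min/2)/16.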

One thing to keep in mind: the various |V| estimations can exceed the correct normalized vector magnitude value, i.e., some magnitude estimates are greater than one. This means that although the correct magnitude value may be within the system's full-scale word width, an algorithm result may exceed the word width of the system and cause overflow errors. With αMax+βMin algorithms the user must be certain that no true vector magnitude exceeds the value that will produce an estimated magnitude greater than the maximum allowable word width.

The penalty we pay for the convenience of having α and β as powers of two is the error induced by the division-by-truncation process, and we haven't taken that error into account thus far. The error curves in Figure 13-7 and Figure 13-8 were obtained using a software simulation with its floating point accuracy, and are useful in evaluating different α and β values. However, the true error introduced by the αMax+βMin algorithm will be somewhat different, due to division errors when truncation is used with finite word widths. For αMax+βMin schemes, the truncation errors are a function of the data's word width, the algorithm used, the values of both |I| and |Q|, and the vector's phase angle. (These errors due to truncation compound the errors already inherent in our αMax+βMin algorithms.) However, modeling has shown that for an 8-bit system (maximum vector magnitude = 255) the truncation error is less than 1%. As the system word width increases, the truncation errors approach 0%. This means that truncation errors add very little to the inherent αMax+βMin algorithm errors.

The relative performance of the various algorithms is summarized in Table 13-2.

The last column in Table 13-2 illustrates the maximum allowable true vector magnitude as a function of the system's full-scale (F.S.) word width to avoid overflow errors.

So, the αMax+βMin algorithm enables high speed vector magnitude computation without the need for math coprocessor or hardware multiplier chips. Of course, with the recent availability of high-speed floating point multiplier integrated circuits—with their ability to multiply in one or two clock cycles—α and β may not always need to be restricted to reciprocals of integer powers of two. It's also worth mentioning that this algorithm can be nicely implemented in a single hardware integrated circuit (for example, a field programmable gate array) affording high speed operation.

Table 13-2. αMax+βMin Algorithm Comparisons

| Algorithm (\|V\| ≈) | Largest error (%) | Largest error (dB) | Average error (%) | Average error (dB) | Max \|V\| (% F.S.) |
|---|---|---|---|---|---|
| Max + Min/2 | 11.8% | 0.97 dB | 8.6% | 0.71 dB | 89.4% |
| Max + Min/4 | –11.6% | –1.07 dB | –0.64% | –0.06 dB | 97.0% |
| Max + 3Min/8 | 6.8% | 0.57 dB | 3.97% | 0.34 dB | 93.6% |
| 7(Max + Min/2)/8 | –12.5% | –1.16 dB | –4.99% | –0.45 dB | 100% |
| 15(Max + Min/2)/16 | –6.25% | –0.56 dB | 1.79% | 0.15 dB | 95.4% |

There's an interesting technique for minimizing the calculations necessary to implement windowing of FFT input data to reduce spectral leakage. There are times when we need the FFT of unwindowed time-domain data, while at the same time we also want the FFT of that same time-domain data with a window function applied. In this situation, we don't have to perform two separate FFTs. We can perform the FFT of the unwindowed data and then perform frequency-domain windowing on those FFT results to reduce leakage. Let's see how.

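Recall from Section 3.9 that the expressions for the Hanning and the Hamming windows were w_Han(n) = 0.5 – 0.5cos(2πn/N) and w_Ham(n) = 0.54 – 0.46cos(2πn/N), respectively. They both have the general cosine function form of

$$w(n) = \alpha - \beta\cos(2\pi n/N) \tag{13-8}$$

for n = 0, 1, 2, ..., N–1. Looking at the frequency response of the general cosine window function, using the definition of the DFT, the transform of Eq. (13-8) is

$$W(m) = \sum_{n=0}^{N-1}\left[\alpha - \beta\cos(2\pi n/N)\right]e^{-j2\pi nm/N}. \tag{13-9}$$

Because $\cos(2\pi n/N) = \left(e^{j2\pi n/N} + e^{-j2\pi n/N}\right)/2$, Eq. (13-9) can be written as

$$W(m) = \alpha\sum_{n=0}^{N-1}e^{-j2\pi nm/N} - \frac{\beta}{2}\sum_{n=0}^{N-1}e^{-j2\pi n(m-1)/N} - \frac{\beta}{2}\sum_{n=0}^{N-1}e^{-j2\pi n(m+1)/N}. \tag{13-10}$$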

Equation (13-10) looks pretty complicated, but using the derivation from Section 3.13 for expressions like those summations we find that Eq. (13-10) merely results in the superposition of three sin(x)/x functions in the frequency domain. Their amplitudes are shown in Figure 13-10.

Notice that the two translated sin(x)/x functions have side lobes with opposite phase from that of the center sin(x)/x function. This means that α times the mth bin output, minus β/2 times the (m–1)th bin output, minus β/2 times the (m+1)th bin output will minimize the sidelobes of the mth bin. This frequency domain convolution process is equivalent to multiplying the input time data sequence by the N-valued window function w(n) in Eq. (13-8)[12–14].

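For example, let's say the output of the mth FFT bin is X(m) = a_m + jb_m, and the outputs of its two neighboring bins are X(m–1) = a_{–1} + jb_{–1} and X(m+1) = a_{+1} + jb_{+1}. Then frequency-domain windowing for the mth bin of the unwindowed X(m) is as follows:

$$\begin{aligned}
X_{three\text{-}term}(m) &= \alpha X(m) - \frac{\beta}{2}X(m-1) - \frac{\beta}{2}X(m+1)\\
&= \alpha a_m - \frac{\beta}{2}\left(a_{-1} + a_{+1}\right) + j\left[\alpha b_m - \frac{\beta}{2}\left(b_{-1} + b_{+1}\right)\right].
\end{aligned} \tag{13-11}$$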

To compute a windowed N-point FFT, Xthree-term(m), we can apply Eq. (13-11), requiring 4N additions and 3N multiplications, to the unwindowed N-point FFT result X(m) and avoid having to perform the N multiplications of time domain windowing and a second FFT with its Nlog2(N) additions and 2Nlog2(N) multiplications. (In this case, we called our windowed results Xthree-term(m) because we're performing a convolution of a three-term W(m) sequence with the X(m) sequence.)
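
The equivalence of time-domain and frequency-domain windowing is easy to confirm numerically. The sketch below assumes a hypothetical 16-sample test signal and a naive `dft` helper standing in for an FFT; bin indices wrap modulo N at the spectrum's end points.

```python
import cmath
import math

def dft(x):
    """Naive N-point DFT, adequate for short illustrative sequences."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * math.pi * n * m / N) for n in range(N))
            for m in range(N)]

def window_in_frequency(X, alpha, beta):
    """Three-term convolution: alpha*X(m) - (beta/2)*[X(m-1) + X(m+1)]."""
    N = len(X)
    return [alpha * X[m] - (beta / 2) * (X[(m - 1) % N] + X[(m + 1) % N])
            for m in range(N)]

N = 16
# Hypothetical test signal whose 2.3 cycles per record cause spectral leakage.
x = [math.sin(2 * math.pi * 2.3 * n / N) + 0.5 for n in range(N)]
alpha, beta = 0.5, 0.5   # Hanning window coefficients

X_freq = window_in_frequency(dft(x), alpha, beta)
X_time = dft([(alpha - beta * math.cos(2 * math.pi * n / N)) * v
              for n, v in enumerate(x)])
max_diff = max(abs(a - b) for a, b in zip(X_freq, X_time))
print(max_diff)   # difference is at floating-point rounding level
```

In practice the convolution would be applied only to the bins of interest rather than to all N outputs.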

The neat situation here is the frequency-domain coefficient values, α and β, for the Hanning window. They're both 0.5, and the multiplications in Eq. (13-11) can be performed in hardware with two binary right shifts by a single bit for α = 0.5 and two shifts for each of the two β/2 = 0.25 factors, for a total of six binary shifts. If a gain of four is acceptable, we can get away with only two left shifts (one for the real and one for the imaginary parts of X(m)) using

$$X_{\text{Hanning, gain}=4}(m) = 2X(m) - X(m-1) - X(m+1). \tag{13-12}$$

In application specific integrated circuit (ASIC) and field-programmable gate array (FPGA) hardware implementations, where multiplies are to be avoided, the binary shifts can be eliminated through hard-wired data routing. Thus only additions are necessary to implement frequency-domain Hanning windowing. The issues we need to consider are which window function is best for the application, and the efficiency of available hardware in performing the frequency domain multiplications. Frequency-domain Hamming windowing can be implemented but, unfortunately, not with simple binary shifts.

Along with the Hanning and Hamming windows, Reference [14] describes a family of windows known as Blackman windows that provide further FFT spectral leakage reduction when performing frequency-domain windowing. (Note: Reference [14] reportedly has two typographical errors in the 4-Term (–74 dB) window coefficients column on its page 65. Reference [15] specifies those coefficients to be 0.40217, 0.49703, 0.09892, and 0.00188.) Blackman windows have five non-zero frequency-domain coefficients, and their use requires the following five-term convolution:

$$X_{five\text{-}term}(m) = \alpha X(m) + \frac{\gamma}{2}X(m-2) - \frac{\beta}{2}X(m-1) - \frac{\beta}{2}X(m+1) + \frac{\gamma}{2}X(m+2), \tag{13-13}$$

where γ is the coefficient of the window's cos(4πn/N) term.

Table 13-3 provides the frequency-domain coefficients for several common window functions.

Let's end our discussion of the frequency-domain windowing trick by saying this scheme can be efficient because we don't have to window the entire set of FFT data; windowing need only be performed on those FFT bin outputs of interest to us. An application of frequency-domain windowing is presented in Section 13.18.

The multiplication of two complex numbers is one of the most common functions performed in digital signal processing. It's mandatory in all discrete and fast Fourier transformation algorithms, necessary for graphics transformations, and used in processing digital communications signals. Be it in hardware or software, it's always to our benefit to streamline the processing necessary to perform a complex multiply whenever we can. If the available hardware can perform three additions faster than a single multiplication, there's a way to speed up a complex multiply operation [16].

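The multiplication of two complex numbers, a + jb and c + jd, results in the complex product

$$R + jI = (a + jb)(c + jd) = (ac - bd) + j(bc + ad). \tag{13-14}$$

We can see that Eq. (13-14) requires four multiplications and two additions. (From a computational standpoint we'll assume a subtraction is equivalent to an addition.) Instead of using Eq. (13-14), we can calculate the following intermediate values

$$\begin{aligned}
k_1 &= a(c + d)\\
k_2 &= d(a + b)\\
k_3 &= c(b - a).
\end{aligned} \tag{13-15}$$

We then perform the following operations to get the final R and I

$$\begin{aligned}
R &= k_1 - k_2\\
I &= k_1 + k_3.
\end{aligned} \tag{13-16}$$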

The reader is invited to plug the k values from Eq. (13-15) into Eq. (13-16) to verify that the expressions in Eq. (13-16) are equivalent to Eq. (13-14). The intermediate values in Eq. (13-15) required three additions and three multiplications, while the results in Eq. (13-16) required two more additions. So we traded one of the multiplications required in Eq. (13-14) for three addition operations needed by Eq. (13-15) and Eq. (13-16). If our hardware uses fewer clock cycles to perform three additions than a single multiplication, we may well gain overall processing speed by using Eq. (13-15) and Eq. (13-16) instead of Eq. (13-14) for complex multiplication.
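
The verification suggested above can also be done in code. This sketch assumes one common choice of intermediate values, k1 = a(c + d), k2 = d(a + b), and k3 = c(b – a); the function name is hypothetical.

```python
def complex_multiply_3(a, b, c, d):
    """Return (R, I) for (a + jb)(c + jd) using three real multiplications."""
    k1 = a * (c + d)      # one addition, one multiplication
    k2 = d * (a + b)      # one addition, one multiplication
    k3 = c * (b - a)      # one addition, one multiplication
    return k1 - k2, k1 + k3   # two more additions

print(complex_multiply_3(1, 2, 3, 4))   # (1 + 2j)(3 + 4j) = -5 + 10j
```

Expanding the terms shows why it works: k1 – k2 = ac – bd and k1 + k3 = ad + bc.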

Upon recognizing its linearity property and understanding the odd and even symmetries of the transform's output, the early investigators of the fast Fourier transform (FFT) realized that two separate, real N-point input data sequences could be transformed using a single N-point complex FFT. They also developed a technique using a single N-point complex FFT to transform a 2N-point real input sequence. Let's see how these two techniques work.

The standard FFT algorithms were developed to accept complex inputs; that is, the FFT's normal input x(n) sequence is assumed to comprise real and imaginary parts, such as

$$x(n) = x_r(n) + jx_i(n). \tag{13-17}$$

In typical signal processing schemes, FFT input data sequences are usually real. The most common example of this is the FFT input samples coming from an A/D converter that provides real integer values of some continuous (analog) signal. In this case the FFT's imaginary xi(n)'s inputs are all zero. So initial FFT computations performed on the xi(n) inputs represent wasted operations. Early FFT pioneers recognized this inefficiency, studied the problem, and developed a technique where two independent N-point, real input data sequences could be transformed by a single N-point complex FFT. We call this scheme the Two N-Point Real FFTs algorithm. The derivation of this technique is straightforward and described in the literature[17–19]. If two N-point, real input sequences are a(n) and b(n), they'll have discrete Fourier transforms represented by Xa(m) and Xb(m). If we treat the a(n) sequence as the real part of an FFT input and the b(n) sequence as the imaginary part of the FFT input, then

$$x(n) = a(n) + jb(n). \tag{13-18}$$

Applying the x(n) values from Eq. (13-18) to the standard DFT,

$$X(m) = \sum_{n=0}^{N-1}x(n)e^{-j2\pi nm/N}, \tag{13-19}$$

we'll get a DFT output X(m) where m goes from 0 to N–1. (We're assuming, of course, that the DFT is implemented by way of an FFT algorithm.) Using the superscript * symbol to represent the complex conjugate, we can extract the two desired FFT outputs Xa(m) and Xb(m) from X(m) by using the following:

$$X_a(m) = \frac{X^*(N-m) + X(m)}{2} \tag{13-20}$$

and

$$X_b(m) = \frac{j\left[X^*(N-m) - X(m)\right]}{2}. \tag{13-21}$$

Let's break Eqs. (13-20) and (13-21) into their real and imaginary parts to get expressions for Xa(m) and Xb(m) that are easier to understand and implement. Using the notation showing X(m)'s real and imaginary parts, where X(m) = Xr(m) + jXi(m), we can rewrite Eq. (13-20) as

$$X_a(m) = \frac{X_r(m) + X_r(N-m)}{2} + j\,\frac{X_i(m) - X_i(N-m)}{2}, \tag{13-22}$$

where m = 1, 2, 3, . . ., N–1. What about the first Xa(m), when m = 0? Well, this is where we run into a bind if we actually try to implement Eq. (13-20) directly. Letting m = 0 in Eq. (13-20), we quickly realize that the first term in the numerator, X*(N–0) = X*(N), isn't available because the X(N) sample does not exist in the output of an N-point FFT! We resolve this problem by remembering that X(m) is periodic with a period N, so X(N) = X(0).[†] When m = 0, Eq. (13-20) becomes

$$X_a(0) = \frac{X_r(0) - jX_i(0) + X_r(0) + jX_i(0)}{2} = X_r(0). \tag{13-23}$$

Next, simplifying Eq. (13-21),

$$X_b(m) = \frac{X_i(N-m) + X_i(m)}{2} + j\,\frac{X_r(N-m) - X_r(m)}{2}, \tag{13-24}$$

where, again, m = 1, 2, 3, . . ., N–1. By the same argument used for Eq. (13-23), when m = 0, Xb(0) in Eq. (13-24) becomes

$$X_b(0) = \frac{X_i(0) + X_i(0)}{2} + j\,\frac{X_r(0) - X_r(0)}{2} = X_i(0). \tag{13-25}$$

This discussion brings up a good point for beginners to keep in mind. In the literature Eqs. (13-20) and (13-21) are often presented without any discussion of the m = 0 problem. So, whenever you're grinding through an algebraic derivation or have some equations tossed out at you, be a little skeptical. Try the equations out on an example—see if they're true. (After all, both authors and book typesetters are human and sometimes make mistakes. We had an old saying in Ohio for this situation: “Trust everybody, but cut the cards.”) Following this advice, let's prove that this Two N-Point Real FFTs algorithm really does work by applying the 8-point data sequences from Chapter 3's DFT Examples to Eqs. (13-22) through (13-25). Taking the 8-point input data sequence from Section 3.1's DFT Example 1 and denoting it a(n),

Taking the 8-point input data sequence from Section 3.6's DFT Example 2 and calling it b(n),

Combining the sequences in Eqs. (13-26) and (13-27) into a single complex sequence x(n),

Now, taking the 8-point FFT of the complex sequence in Eq. (13-28) we get

So from Eq. (13-23),

To get the rest of Xa(m), we have to plug the FFT output's X(m) and X(N–m) values into Eq. (13-22).[†] Doing so,

So Eq. (13-22) really does extract Xa(m) from the X(m) sequence in Eq. (13-29). We can see that we need not solve Eq. (13-22) when m is greater than 4 (or N/2) because Xa(m) will always be conjugate symmetric. Because Xa(7) = Xa*(1), Xa(6) = Xa*(2), etc., only the first N/2 + 1 elements of Xa(m), from m = 0 to m = N/2, are independent and need be calculated.

OK, let's keep going and use Eqs. (13-24) and (13-25) to extract Xb(m) from the FFT output. From Eq. (13-25),

Plugging the FFT's output values into Eq. (13-24) to get the next four Xb(m)s, we have

The question arises “With the additional processing required by Eqs. (13-22) and (13-24) after the initial FFT, how much computational saving (or loss) is to be had by this Two N-Point Real FFTs algorithm?” We can estimate the efficiency of this algorithm by considering the number of arithmetic operations required relative to two separate N-point radix-2 FFTs. First, we estimate the number of arithmetic operations in two separate N-point complex FFTs.

From Section 4.2, we know that a standard radix-2 N-point complex FFT comprises (N/2) log2N butterfly operations. If we use the optimized butterfly structure, each butterfly requires one complex multiplication and two complex additions. Now, one complex multiplication requires two real additions and four real multiplications, and one complex addition requires two real additions.[†] So a single FFT butterfly operation comprises four real multiplications and six real additions. This means that a single N-point complex FFT requires (4N/2)·log2N real multiplications, and (6N/2)·log2N real additions. Finally, we can say that two separate N-point complex radix-2 FFTs require

$$4N\cdot\log_2 N\ \text{real multiplications} \tag{13-30}$$

and

$$6N\cdot\log_2 N\ \text{real additions}. \tag{13-30'}$$

Next, we need to determine the computational workload of the Two N-Point Real FFTs algorithm. If we add up the number of real multiplications and real additions required by the algorithm's N-point complex FFT, plus those required by Eq. (13-22) to get Xa(m), and those required by Eq. (13-24) to get Xb(m), the Two N-Point Real FFTs algorithm requires

$$2N\cdot\log_2 N + N\ \text{real multiplications} \tag{13-31}$$

and

$$3N\cdot\log_2 N + 2N\ \text{real additions}. \tag{13-31'}$$

Equations (13-31) and (13-31') assume that we're calculating only the first N/2 independent elements of Xa(m) and Xb(m). The single N term in Eq. (13-31) accounts for the N/2 divide by 2 operations in Eq. (13-22) and the N/2 divide by 2 operations in Eq. (13-24).

OK, now we can find out how efficient the Two N-Point Real FFTs algorithm is compared to two separate complex N-point radix-2 FFTs. This comparison, however, depends on the hardware used for the calculations. If our arithmetic hardware takes many more clock cycles to perform a multiplication than an addition, then the difference between multiplications in Eqs. (13-30) and (13-31) is the most important comparison. In this case, the percentage gain in computational saving of the Two N-Point Real FFTs algorithm relative to two separate N-point complex FFTs is the difference in their necessary multiplications over the number of multiplications needed for two separate N-point complex FFTs, or

$$\frac{4N\cdot\log_2 N - (2N\cdot\log_2 N + N)}{4N\cdot\log_2 N}\cdot 100\% = \frac{2\log_2 N - 1}{4\log_2 N}\cdot 100\%. \tag{13-32}$$

The computational (multiplications only) saving from Eq. (13-32) is plotted as the top curve of Figure 13-11. In terms of multiplications, for N≥32, the Two N-Point Real FFTs algorithm saves us 45 percent or more in computational workload compared to two separate N-point complex FFTs.
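The multiplications-only comparison can be checked numerically. The sketch below assumes that the algorithm's multiplication count is the single N-point complex FFT's (4N/2)·log2N real multiplications plus the single N term for the divide by 2 operations, as described above; the function names are illustrative only.

```python
import math

def two_complex_ffts_mults(n):
    """Real multiplications in two separate N-point complex radix-2 FFTs."""
    return 2 * (4 * n / 2) * math.log2(n)

def two_real_ffts_algorithm_mults(n):
    """One N-point complex FFT plus N divide-by-2 operations (assumed count)."""
    return (4 * n / 2) * math.log2(n) + n

def multiplication_saving_percent(n):
    """Percentage saving relative to two separate N-point complex FFTs."""
    baseline = two_complex_ffts_mults(n)
    return 100.0 * (baseline - two_real_ffts_algorithm_mults(n)) / baseline

for n in (8, 32, 256, 1024):
    print(n, round(multiplication_saving_percent(n), 2))
```

Under these assumptions the saving is exactly 45 percent at N = 32 and creeps toward 50 percent as N grows.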

Figure 13-11. Computational saving of the Two N-Point Real FFTs algorithm over that of two separate N-point complex FFTs. The top curve indicates the saving when only multiplications are considered. The bottom curve is the saving when both additions and multiplications are used in the comparison.

For hardware using high-speed multiplier integrated circuits, multiplication and addition can take roughly equivalent clock cycles. This makes addition operations just as important and time consuming as multiplications. Thus the difference between those combined arithmetic operations in Eqs. (13-30) plus (13-30') and Eqs. (13-31) plus (13-31') is the appropriate comparison. In this case, the percentage gain in computational saving of our algorithm over two FFTs is their total arithmetic operational difference over the total arithmetic operations in two separate N-point complex FFTs, or

The full computational (multiplications and additions) saving from Eq. (13-33) is plotted as the bottom curve of Figure 13-11. This concludes our discussion and illustration of how a single N-point complex FFT can be used to transform two separate N-point real input data sequences.

Similar to the scheme above where two separate N-point real data sequences are transformed using a single N-point FFT, a technique exists where a 2N-point real sequence can be transformed with a single complex N-point FFT. This 2N-Point Real FFT algorithm, whose derivation is also described in the literature, requires that the 2N-sample real input sequence be separated into two parts[19,20]. Not broken in two, but unzipped: the even and odd sequence samples are separated. The N even-indexed input samples are loaded into the real part of a complex N-point input sequence x(n). Likewise, the input's N odd-indexed samples are loaded into x(n)'s imaginary parts. To illustrate this process, let's say we have a 2N-sample real input data sequence a(n) where 0 ≤ n ≤ 2N–1. We want a(n)'s 2N-point transform Xa(m). Loading a(n)'s even/odd sequence values appropriately into an N-point complex FFT's input sequence, x(n),

$$x(n) = a(2n) + j\,a(2n+1), \qquad n = 0, 1, \ldots, N-1. \tag{13-34}$$

Applying the N complex values in Eq. (13-34) to an N-point complex FFT, we'll get an FFT output X(m) = Xr(m) + jXi(m), where m goes from 0 to N–1. To extract the desired 2N-Point Real FFT algorithm output Xa(m) = Xa,real(m) + jXa,imag(m) from X(m), let's define the following relationships

The values resulting from Eqs. (13-35) through (13-38) are, then, used as factors in the following expressions to obtain the real and imaginary parts of our final Xa(m):

and

Remember now, the original a(n) input index n goes from 0 to 2N–1, and our N-point FFT output index m goes from 0 to N–1. We apply 2N real input time-domain samples to this algorithm and get back N complex frequency-domain samples representing the first half of the equivalent 2N-point complex FFT, Xa(0) through Xa(N–1). Because this algorithm's a(n) input is constrained to be real, Xa(N) through Xa(2N–1) are merely the complex conjugates of their Xa(0) through Xa(N–1) counterparts and need not be calculated. To help us keep all of this straight, Figure 13-12 depicts the computational steps of the 2N-Point Real FFT algorithm.
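The whole procedure can be sketched in a few lines. This is a minimal illustration, not the text's Eqs. (13-35) through (13-40): it uses a compact complex-arithmetic form that recovers the transforms of the even and odd samples from X(m) and X*(N–m) and then combines them with the twiddle factor e^(–jπm/N). The direct `dft` helper stands in for any forward complex FFT routine, and the 8-sample input is hypothetical test data.

```python
import cmath

def dft(x):
    """Direct N-point DFT (stand-in for any forward complex FFT routine)."""
    n_pts = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * n * m / n_pts)
                for n in range(n_pts)) for m in range(n_pts)]

def real_fft_2n(a):
    """First N outputs of the 2N-point DFT of real a, via one N-point complex DFT."""
    n_pts = len(a) // 2
    # Unzip: even-indexed samples -> real parts, odd-indexed -> imaginary parts.
    x = [complex(a[2 * n], a[2 * n + 1]) for n in range(n_pts)]
    big_x = dft(x)
    out = []
    for m in range(n_pts):
        mirror = big_x[(n_pts - m) % n_pts].conjugate()  # X*(N-m), with X(N) = X(0)
        even_part = (big_x[m] + mirror) / 2              # DFT of a(2n)
        odd_part = (big_x[m] - mirror) / 2j              # DFT of a(2n+1)
        out.append(even_part + cmath.exp(-1j * cmath.pi * m / n_pts) * odd_part)
    return out

a = [1.0, 2.0, 0.5, -1.0, 3.0, 0.0, -2.0, 1.5]  # hypothetical 2N = 8 real samples
fast = real_fft_2n(a)
reference = dft(a)[:len(a) // 2]                # first half of the direct 2N-point DFT
print(max(abs(f - r) for f, r in zip(fast, reference)))
```

The printed value is the largest difference between the two results and should be at floating-point rounding level.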

To demonstrate this process by way of example, let's apply the 8-point data sequence from Eq. (13-26) to the 2N-Point Real FFT algorithm. Partitioning those Eq. (13-26) samples as dictated by Eq. (13-34), we have our new FFT input sequence:

With N = 4 in this example, taking the 4-point FFT of the complex sequence in Eq. (13-41) we get

Using these values, we now get the intermediate factors from Eqs. (13-35) through (13-38). Calculating our first value, again we're reminded that X(m) is periodic with a period N, so X(4) = X(0). Continuing to use Eqs. (13-35) through (13-38),

Using the intermediate values from Eq. (13-43) in Eqs. (13-39) and (13-40),

Evaluating the sine and cosine terms in Eq. (13-44),

Combining the results of the terms in Eq. (13-45), we have our final correct answer of

After going through all the steps required by Eqs. (13-35) through (13-40), the reader might question the efficiency of this 2N-Point Real FFT algorithm. Using the same process as the above Two N-Point Real FFTs algorithm analysis, let's show that the 2N-Point Real FFT algorithm does provide some modest computational saving. First, we know that a single 2N-point radix-2 FFT has (2N/2) · log2(2N) = N · (log2N+1) butterflies and requires

and

If we add up the number of real multiplications and real additions required by the algorithm's N-point complex FFT, plus those required by Eqs. (13-35) through (13-38) and those required by Eqs. (13-39) and (13-40), the complete 2N-Point Real FFT algorithm requires

and

OK, using the same hardware considerations (multiplications only) we used to arrive at Eq. (13-32), the percentage gain in multiplication saving of the 2N-Point Real FFT algorithm relative to a 2N-point complex FFT is

The computational (multiplications only) saving from Eq. (13-49) is plotted as the bottom curve of Figure 13-13. In terms of multiplications, the 2N-Point Real FFT algorithm provides a saving of >30% when N ≥ 128 or whenever we transform input data sequences whose lengths are ≥256.

Figure 13-13. Computational saving of the 2N-Point Real FFT algorithm over that of a single 2N-point complex FFT. The top curve is the saving when both additions and multiplications are used in the comparison. The bottom curve indicates the saving when only multiplications are considered.

Again, for hardware using high-speed multipliers, we consider both multiplication and addition operations. The difference between those combined arithmetic operations in Eqs. (13-47) plus (13-47') and Eqs. (13-48) plus (13-48') is the appropriate comparison. In this case, the percentage gain in computational saving of our algorithm is

The full computational (multiplications and additions) saving from Eq. (13-50) is plotted as a function of N in the top curve of Figure 13-13.

There are many signal processing applications where the capability to perform the inverse FFT is necessary. This can be a problem if available hardware, or software routines, have only the capability to perform the forward FFT. Fortunately, there are two slick ways to perform the inverse FFT using the forward FFT algorithm.

The first inverse FFT calculation scheme is implemented following the processes shown in Figure 13-14.

To see how this works, consider the expressions for the forward and inverse DFTs. They are

$$\text{Forward DFT:}\quad X(m) = \sum_{n=0}^{N-1} x(n)\,e^{-j2\pi nm/N} \tag{13-51}$$

$$\text{Inverse DFT:}\quad x(n) = \frac{1}{N}\sum_{m=0}^{N-1} X(m)\,e^{j2\pi mn/N} \tag{13-52}$$

To reiterate our goal, we want to use the process in Eq. (13-51) to implement Eq. (13-52). The first step of our approach is to use complex conjugation. Remember, conjugation (represented by the superscript * symbol) is the reversal of the sign of a complex number's imaginary exponent: if x = e^(jø), then x* = e^(–jø). So, as a first step we take the complex conjugate of both sides of Eq. (13-52) to give us

$$x^*(n) = \left[\frac{1}{N}\sum_{m=0}^{N-1} X(m)\,e^{j2\pi mn/N}\right]^* \tag{13-53}$$

One of the properties of complex numbers, discussed in Appendix A, is that the conjugate of a product is equal to the product of the conjugates. That is, if c = ab, then c* = (ab)* = a*b*. Using this, we can show the conjugate of the right side of Eq. (13-53) to be

$$x^*(n) = \frac{1}{N}\sum_{m=0}^{N-1} X^*(m)\,e^{-j2\pi mn/N} \tag{13-54}$$

Hold on; we're almost there. Notice the similarity of Eq. (13-54) to our original forward DFT expression Eq. (13-51). If we perform a forward DFT on the conjugate of the X(m) in Eq. (13-54), and divide the results by N, we get the conjugate of our desired time samples x(n). Taking the conjugate of both sides of Eq. (13-54), we get a more straightforward expression for x(n):

$$x(n) = \frac{1}{N}\left[\sum_{m=0}^{N-1} X^*(m)\,e^{-j2\pi mn/N}\right]^* \tag{13-55}$$
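The conjugate, forward transform, conjugate, divide by N sequence can be sketched as follows. The direct `forward_dft` helper is only a stand-in for whatever forward FFT routine is available, and the test samples are hypothetical.

```python
import cmath

def forward_dft(x):
    """Direct forward DFT (stand-in for a forward-only FFT routine)."""
    n_pts = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * n * m / n_pts)
                for n in range(n_pts)) for m in range(n_pts)]

def inverse_via_conjugation(big_x):
    """Inverse DFT computed with the forward DFT and two conjugations."""
    n_pts = len(big_x)
    conj_in = [v.conjugate() for v in big_x]         # conjugate the input X(m)
    y = forward_dft(conj_in)                         # forward transform
    return [v.conjugate() / n_pts for v in y]        # conjugate again, divide by N

x = [1 + 2j, -0.5j, 3 + 0j, -1 + 1j]                 # hypothetical time samples
recovered = inverse_via_conjugation(forward_dft(x))
print(max(abs(r - v) for r, v in zip(recovered, x)))
```

The round trip should reproduce x(n) to within rounding error.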

The second inverse FFT calculation technique is implemented following the interesting data flow shown in Figure 13-15.

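In this clever inverse FFT scheme we don't bother with conjugation. Instead, we merely swap the real and imaginary parts of sequences of complex data[21]. To see why this process works, let's look at the inverse DFT equation again while separating the input X(m) term into its real and imaginary parts and remembering that e^(jø) = cos(ø) + jsin(ø).

$$x(n) = \frac{1}{N}\sum_{m=0}^{N-1}\left[X_{\text{real}}(m) + jX_{\text{imag}}(m)\right]\left[\cos(2\pi mn/N) + j\sin(2\pi mn/N)\right] \tag{13-56}$$

Multiplying the complex terms in Eq. (13-56) gives us

$$x(n) = \frac{1}{N}\sum_{m=0}^{N-1}\Big\{\left[X_{\text{real}}(m)\cos(2\pi mn/N) - X_{\text{imag}}(m)\sin(2\pi mn/N)\right] + j\left[X_{\text{real}}(m)\sin(2\pi mn/N) + X_{\text{imag}}(m)\cos(2\pi mn/N)\right]\Big\} \tag{13-57}$$

Equation (13-57) is the general expression for the inverse DFT and we'll now quickly show that the process in Figure 13-15 implements this equation. With X(m) = Xreal(m) + jXimag(m), then swapping these terms gives us

$$X_{\text{swap}}(m) = X_{\text{imag}}(m) + jX_{\text{real}}(m) \tag{13-58}$$

The forward DFT of our Xswap(m) is

$$\sum_{m=0}^{N-1}\left[X_{\text{imag}}(m) + jX_{\text{real}}(m)\right]\left[\cos(2\pi mn/N) - j\sin(2\pi mn/N)\right] \tag{13-59}$$

Multiplying the complex terms in Eq. (13-59) gives us

$$\sum_{m=0}^{N-1}\Big\{\left[X_{\text{imag}}(m)\cos(2\pi mn/N) + X_{\text{real}}(m)\sin(2\pi mn/N)\right] + j\left[X_{\text{real}}(m)\cos(2\pi mn/N) - X_{\text{imag}}(m)\sin(2\pi mn/N)\right]\Big\} \tag{13-60}$$

Swapping the real and imaginary parts of the results of this forward DFT gives us what we're after:

$$\sum_{m=0}^{N-1}\Big\{\left[X_{\text{real}}(m)\cos(2\pi mn/N) - X_{\text{imag}}(m)\sin(2\pi mn/N)\right] + j\left[X_{\text{imag}}(m)\cos(2\pi mn/N) + X_{\text{real}}(m)\sin(2\pi mn/N)\right]\Big\} \tag{13-61}$$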

If we divided Eq. (13-61) by N, it would be exactly equal to the inverse DFT expression in Eq. (13-57), and that's what we set out to show.
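A minimal sketch of the swap-based scheme follows: swap, forward transform, swap, divide by N. As before, the direct `forward_dft` helper merely stands in for a forward-only FFT routine, and the input samples are hypothetical.

```python
import cmath

def forward_dft(x):
    """Direct forward DFT (stand-in for a forward-only FFT routine)."""
    n_pts = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * n * m / n_pts)
                for n in range(n_pts)) for m in range(n_pts)]

def swap(seq):
    """Exchange the real and imaginary parts of every sample."""
    return [complex(v.imag, v.real) for v in seq]

def inverse_via_swapping(big_x):
    """Inverse DFT computed with the forward DFT and two real/imag swaps."""
    n_pts = len(big_x)
    y = forward_dft(swap(big_x))
    return [v / n_pts for v in swap(y)]

x = [0.5 - 1j, 2 + 0j, -1.5 + 0.25j, 1j]             # hypothetical time samples
recovered = inverse_via_swapping(forward_dft(x))
print(max(abs(r - v) for r, v in zip(recovered, x)))
```

No conjugation appears anywhere in the data path, which can be convenient when real and imaginary parts live in separate memory arrays.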

If we implement a linear phase FIR digital filter using the standard structure in Figure 13-16(a), there's a way to reduce the number of multipliers when the filter has an odd number of taps. Let's look at the top of Figure 13-16(a) where the five-tap FIR filter coefficients are h(0) through h(4) and the y(n) output is

$$y(n) = h(4)x(n-4) + h(3)x(n-3) + h(2)x(n-2) + h(1)x(n-1) + h(0)x(n). \tag{13-62}$$

Figure 13-16. Conventional and simplified structures of an FIR filter: (a) with an odd number of taps; (b) with an even number of taps.

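If the FIR filter's coefficients are symmetrical we can reduce the number of necessary multipliers. That is, if h(4) = h(0), and h(3) = h(1), we can implement Eq. (13-62) by

$$y(n) = h(4)\left[x(n-4) + x(n)\right] + h(3)\left[x(n-3) + x(n-1)\right] + h(2)x(n-2) \tag{13-63}$$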

where only three multiplications are necessary as shown at the bottom of Figure 13-16(a). In our five-tap filter case, we've eliminated two multipliers at the expense of implementing two additional adders. This minimum-multiplier structure is called a “folded” FIR filter.

In the general case of symmetrical-coefficient FIR filters with S taps, we can trade (S–1)/2 multipliers for (S–1)/2 adders when S is an odd number. So in the case of an odd number of taps, we need only perform (S–1)/2 + 1 multiplications for each filter output sample. For an even number of symmetrical taps as shown in Figure 13-16(b), the savings afforded by this technique reduces the necessary number of multiplications to S/2.
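The folded structure for both odd and even tap counts can be sketched as below. This is only an illustration under the assumption that samples before the start of x(n) are zero; the function names and test data are hypothetical.

```python
def fir_direct(h, x):
    """Standard FIR: y(n) = sum over k of h(k) x(n-k), with x(n) = 0 for n < 0."""
    y = []
    for n in range(len(x)):
        acc = 0.0
        for k in range(len(h)):
            if n - k >= 0:
                acc += h[k] * x[n - k]
        y.append(acc)
    return y

def fir_folded(h, x):
    """Folded FIR for symmetrical h: add paired samples first, then multiply once."""
    taps = len(h)
    half = taps // 2

    def sample(i):
        return x[i] if i >= 0 else 0.0

    y = []
    for n in range(len(x)):
        acc = 0.0
        for k in range(half):
            # The two samples that share the coefficient h(k) = h(S-1-k).
            acc += h[k] * (sample(n - k) + sample(n - (taps - 1 - k)))
        if taps % 2 == 1:
            acc += h[half] * sample(n - half)   # unpaired center tap
        y.append(acc)
    return y

def folded_multiplies(taps):
    """Multiplications per output sample in the folded structure."""
    return (taps - 1) // 2 + 1 if taps % 2 else taps // 2

x = [1.0, -2.0, 0.5, 3.0, 0.0, -1.0, 2.5, 1.0]       # hypothetical input
h_odd = [1.0, 2.0, 3.0, 2.0, 1.0]                    # five symmetrical taps
h_even = [0.5, 1.5, 1.5, 0.5]                        # four symmetrical taps
print(fir_folded(h_odd, x) == fir_direct(h_odd, x), folded_multiplies(5))
print(fir_folded(h_even, x) == fir_direct(h_even, x), folded_multiplies(4))
```

Note the single addition performed before each multiply, which is exactly the operation order the folded structure demands of the hardware.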

As of this writing, typical programmable-DSP chips cannot take advantage of the folded FIR filter structure because it requires a single addition before each multiply and accumulate operation.

In Section 12.3 we discussed the mathematical details, and ill effects, of quantization noise in analog-to-digital (A/D) converters. DSP practitioners commonly use two tricks to reduce converter quantization noise. Those schemes are called oversampling and dithering.

The process of oversampling to reduce A/D converter quantization noise is straightforward. We merely sample an analog signal at an fs sample rate higher than the minimum rate needed to satisfy the Nyquist criterion (twice the analog signal's bandwidth), and then lowpass filter. What could be simpler? The theory behind oversampling is based on the assumption that an A/D converter's total quantization noise power (variance) is the converter's least significant bit (lsb) value squared over 12, or

$$\text{total quantization noise power} = \sigma^2 = \frac{(\text{lsb value})^2}{12}. \tag{13-64}$$

We derived that expression in Section 12.3. The next assumption is: the quantization noise values are truly random, and in the frequency domain the quantization noise has a flat spectrum. (These assumptions are valid if the A/D converter is being driven by an analog signal that covers most of the converter's analog input voltage range, and is not highly periodic.) Next we consider the notion of quantization noise power spectral density (PSD), a frequency-domain characterization of quantization noise measured in noise power per hertz as shown in Figure 13-17. Thus we can consider the idea that quantization noise can be represented as a certain amount of power (watts, if we wish) per unit bandwidth.

In our world of discrete systems, the flat noise spectrum assumption results in the total quantization noise (a fixed value based on the converter's lsb voltage) being distributed equally in the frequency domain, from –fs/2 to +fs/2 as indicated in Figure 13-17. The amplitude of this quantization noise PSD is the rectangle area (total quantization noise power) divided by the rectangle width (fs), or

$$\text{PSD}_{\text{noise}} = \frac{(\text{lsb value})^2}{12} \cdot \frac{1}{f_s} = \frac{(\text{lsb value})^2}{12 f_s} \tag{13-65}$$

measured in watts/Hz.

The next question is: “How can we reduce the PSDnoise level defined by Eq. (13-65)?” We could reduce the lsb value (volts) in the numerator by using an A/D converter with additional bits. That would make the lsb value smaller and certainly reduce PSDnoise, but that's an expensive solution. Extra converter bits cost money. Better yet, let's increase the denominator of Eq. (13-65) by increasing the sample rate fs.

Consider a low-level discrete signal of interest whose spectrum is depicted in Figure 13-18(a). By increasing the fs,old sample rate to some larger value fs,new (oversampling), we spread the total noise power (a fixed value) over a wider frequency range as shown in Figure 13-18(b). The areas under the shaded curves in Figure 13-18(a) and 13-18(b) are equal. Next we lowpass filter the converter's output samples. At the output of the filter, the quantization noise level contaminating our signal will be reduced from that at the input of the filter.

Figure 13-18. Oversampling example: (a) noise PSD at an fs,old sample rate; (b) noise PSD at the higher fs,new sample rate; (c) processing steps.

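The improvement in signal to quantization noise ratio, measured in dB, achieved by oversampling is:

$$\text{SNR}_{\text{A/D-gain}} = 10\log_{10}\left(f_{s,\text{new}}/f_{s,\text{old}}\right). \tag{13-66}$$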

For example: if fs,old = 100 kHz, and fs,new = 400 kHz, the SNRA/D-gain = 10log10(4) = 6.02 dB. Thus oversampling by a factor of 4 (and filtering), we gain a single bit's worth of quantization noise reduction. Consequently we can achieve N+1-bit performance from an N-bit A/D converter, because we gain signal amplitude resolution at the expense of higher sampling speed. After digital filtering, we can decimate to the lower fs,old without degrading the improved SNR. Of course, the number of bits used for the lowpass filter's coefficients and registers must exceed the original number of A/D converter bits, or this oversampling scheme doesn't work.
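The arithmetic in this example is easy to script. The sketch below assumes the usual figure of 20·log10(2), roughly 6.02 dB, per converter bit; the function names are illustrative.

```python
import math

def oversampling_snr_gain_db(fs_old, fs_new):
    """SNR improvement in dB from oversampling followed by lowpass filtering."""
    return 10.0 * math.log10(fs_new / fs_old)

def equivalent_extra_bits(gain_db):
    """Convert an SNR gain in dB to equivalent converter bits (about 6.02 dB per bit)."""
    return gain_db / (20.0 * math.log10(2.0))

gain = oversampling_snr_gain_db(100e3, 400e3)
print(round(gain, 2), round(equivalent_extra_bits(gain), 2))
```

Each additional bit of resolution therefore costs another factor of 4 in sample rate under this model.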

With the use of a digital lowpass filter, depending on the interfering analog noise in x(t), it's possible to use a lower performance (simpler) analog anti-aliasing filter relative to the analog filter necessary at the lower sampling rate.

Dithering, another technique used to minimize the effects of A/D quantization noise, is the process of adding noise to our analog signal prior to A/D conversion. This scheme, which doesn't seem at all like a good idea, can indeed be useful and is easily illustrated with an example. Consider digitizing the low-level analog sinusoid shown in Figure 13-19(a), whose peak voltage just exceeds a single A/D converter least significant bit (lsb) voltage level, yielding the converter output x1(n) samples in Figure 13-19(b). The x1(n) output sequence is clipped. This generates all sorts of spectral harmonics. Another way to explain the spectral harmonics is to recognize the periodicity of the quantization noise in Figure 13-19(c).

Figure 13-19. Dithering: (a) a low-level analog signal; (b) the A/D converter output sequence; (c) the quantization error in the converter's output.

We show the spectrum of x1(n) in Figure 13-20(a) where the spurious quantization noise harmonics are apparent. It's worthwhile to note that averaging multiple spectra will not enable us to pull some spectral component of interest up above those spurious harmonics in Figure 13-20(a). Because the quantization noise is highly correlated with our input sinewave—the quantization noise has the same time period as the input sinewave—spectral averaging will also raise the noise harmonic levels. Dithering to the rescue.

Dithering is the technique where random analog noise is added to the analog input sinusoid before it is digitized. This technique results in a noisy analog signal that crosses additional converter lsb boundaries and yields a quantization noise that's much more random, with a reduced level of undesirable spectral harmonics as shown in Figure 13-20(b). Dithering raises the average spectral noise floor but increases our signal to noise ratio SNR2. Dithering forces the quantization noise to lose its coherence with the original input signal, and we could then perform signal averaging if desired.

Dithering is indeed useful when we're digitizing

low-amplitude analog signals,

highly periodic analog signals (like a sinewave with an even number of cycles in the sample time interval), and

slowly varying (very low frequency, including DC) analog signals.
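The DC case makes the benefit easy to see in simulation. The sketch below is a simplified model under stated assumptions: an ideal converter with a 1-lsb step that rounds to the nearest code, a hypothetical DC input of 0.3 lsb, and Gaussian dither with an rms level of 0.5 lsb (both values are chosen only for illustration).

```python
import math
import random

def quantize(v):
    """Ideal converter with a 1-lsb step: round to the nearest integer code."""
    return math.floor(v + 0.5)

def average_code(dc_level, num_samples, dither_rms, seed=0):
    """Average converter output for a DC input, with optional Gaussian dither."""
    rng = random.Random(seed)
    total = 0
    for _ in range(num_samples):
        noise = rng.gauss(0.0, dither_rms) if dither_rms > 0 else 0.0
        total += quantize(dc_level + noise)
    return total / num_samples

print(average_code(0.3, 20000, dither_rms=0.0))   # undithered: every sample is code 0
print(average_code(0.3, 20000, dither_rms=0.5))   # dithered: the average approaches 0.3
```

Without dither, no amount of averaging recovers the 0.3-lsb level because every output sample is identical. With dither, the quantization error is decorrelated from the input and averaging becomes effective.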

The standard implementation of dithering is shown in Figure 13-21(a). The typical amount of random wideband analog noise used in this process, provided by a noise diode or noise generator ICs, has an rms level equivalent to 1/3 to 1 lsb voltage level.

Figure 13-21. Dithering implementations: (a) standard dithering process; (b) advanced dithering with noise subtraction.

For high-performance audio applications, engineers have found that adding dither noise from two separate noise generators improves background audio low-level noise suppression. The probability density function (PDF) of the sum of two noise sources (having rectangular PDFs) is the convolution of their individual PDFs. Because the convolution of two rectangular functions is triangular, this dual-noise-source dithering scheme is called triangular dither. Typical triangular dither noise has rms levels equivalent to, roughly, 2 lsb voltage levels.

In the situation where our signal of interest occupies some well defined portion of the full frequency band, injecting narrowband dither noise having an rms level equivalent to 4 to 6 lsb voltage levels, whose spectral energy is outside that signal band, would be advantageous. (Remember though: the dither signal can't be too narrowband, like a sinewave. Quantization noise from a sinewave signal would generate more spurious harmonics!) That narrowband dither noise can then be removed by follow-on digital filtering.

One last note about dithering: to improve our ability to detect low-level signals, we could add the analog dither noise and then subtract that noise from the digitized data, as shown in Figure 13-21(b). This way, we randomize the quantization noise but reduce the total noise power injected in the analog signal. This scheme is used in commercial analog test equipment[22,23].

We can take advantage of digital signal processing techniques to facilitate the testing of A/D converters. In this section we present two schemes for measuring converter performance; first, a technique using the FFT to estimate overall converter noise, and second, a histogram analysis scheme to detect missing converter output codes.

The combination of A/D converter quantization noise, missing bits, harmonic distortion, and other nonlinearities can be characterized by analyzing the spectral content of the converter's output. Converter performance degradation caused by these nonlinearities is not difficult to recognize because they show up as spurious spectral components and increased background noise levels in the A/D converter's output samples. The traditional test method involves applying a sinusoidal analog voltage to an A/D converter's input and examining the spectrum of the converter's digitized time-domain output samples. We can use the FFT to compute the spectrum of an A/D converter's output samples, but we have to minimize FFT spectral leakage to improve the sensitivity of our spectral measurements. Traditional time-domain windowing, however, provides insufficient FFT leakage reduction for high-performance A/D converter testing.

The trick to circumventing this FFT leakage problem is to use a sinusoidal analog input voltage whose frequency is an integer fraction of the A/D converter's clock frequency as shown in Figure 13-22(a). That frequency is mfs/N, where m is an integer, fs is the clock frequency (sample rate), and N is the FFT size. Figure 13-22(a) shows the x(n) time domain output of an ideal A/D converter when its analog input is a sinewave having exactly eight cycles over N = 128 converter output samples. In this case, the input frequency normalized to the sample rate fs is 8fs/128 Hz. Recall from Chapter 3 that the expression mfs/N defined the analysis frequencies, or bin centers, of the DFT, and a DFT input sinusoid whose frequency is at a bin center causes no spectral leakage.

Figure 13-22. Ideal A/D converter output when the input is an analog 8fs/128 Hz sinusoid: (a) output time samples; (b) spectral magnitude in dB.

The first half of a 128-point FFT of x(n) is shown in the logarithmic plot in Figure 13-22(b) where the input tone lies exactly at the m = 8 bin center and FFT leakage has been sufficiently reduced. Specifically, if the sample rate were 1 MHz, then the A/D's input analog tone would have to be exactly 8(10^6/128) = 62.5 kHz. In order to implement this scheme we need to ensure that the analog test generator be synchronized, exactly, with the A/D converter's clock frequency of fs Hz. Achieving this synchronization is why this A/D converter testing procedure is referred to as coherent sampling[24–26]. That is, the analog signal generator and the A/D clock generator providing fs must not drift in frequency relative to each other; they must remain coherent. (We must take care here from a semantic viewpoint because the quadrature sampling schemes described in Chapter 8 are also sometimes called coherent sampling, and they are unrelated to this A/D converter testing procedure.)

As it turns out, some values of m are more advantageous than others. Notice in Figure 13-22(a), that when m = 8, only nine different amplitude values are output by the A/D converter. Those values are repeated over and over. As shown in Figure 13-23, when m = 7 we exercise many more than nine different A/D output values.

Because it's best to test as many A/D output binary words as possible, while keeping the quantization noise sufficiently random, users of this A/D testing scheme have discovered another trick. They found that making m an odd prime number (3, 5, 7, 11, etc.) minimizes the number of redundant A/D output word values.

Figure 13-24(a) illustrates an extreme example of nonlinear A/D converter operation, with several discrete output samples having dropped bits in the time domain x(n) with m = 8. The FFT of this distorted x(n) is provided in Figure 13-24(b) where we can see the increased background noise level due to the A/D converter's nonlinearities compared to Figure 13-22(b).

Figure 13-24. Non-ideal A/D converter output showing several dropped bits: (a) time samples; (b) spectral magnitude in dB.

The true A/D converter quantization noise levels will be higher than those measured in Figure 13-24(b). That's because the inherent processing gain of the FFT (discussed in Section 3.12.1) will pull the high-level m = 8 spectral component up out of the background quantization noise. Consequently, if we use this A/D converter test technique, we must account for the FFT's processing gain of 10log10(N/2) as indicated in Figure 13-24(b).

To fully characterize the dynamic performance of an A/D converter we'd need to perform this testing technique at many different input frequencies and amplitudes.[†] The key issue here is that when any input frequency is mfs/N, where m is less than N/2 to satisfy the Nyquist sampling criterion, we can take full advantage of the FFT's processing capability while minimizing spectral leakage.

In closing, applying the sum of two analog tones to an A/D converter's input is often done to quantify the intermodulation distortion performance of a converter, which in turn characterizes the converter's dynamic range. In doing so, both input tones must comply with the mfs/N restriction. Figure 13-25 shows the test configuration. It's prudent to use bandpass filters (BPF) to improve the spectral purity of the sinewave generators' outputs, and small-valued fixed attenuators (pads) are used to keep the generators from adversely interacting with each other. (I recommend 3-dB attenuators for this.) The power combiner is typically an analog power splitter driven backward, and the A/D clock generator output is a squarewave. The dashed lines in Figure 13-25 indicate that all three generators are locked to the same frequency reference source.

One problem that can plague A/D converters is missing codes. This defect occurs when a converter is incapable of outputting a specific binary word (a code). Think about driving an 8-bit converter with an analog sinusoid and the effect when its output should be the binary word 00100001 (decimal 33); its output is actually the word 00100000 (decimal 32) as shown in Figure 13-26. The binary word representing decimal 33 is a missing code. This subtle nonlinearity is very difficult to detect by examining time-domain samples or performing spectrum analysis. Fortunately there is a simple, reliable way to detect the missing 33 using histogram analysis.

Figure 13-26. Time-domain plot of an 8-bit converter exhibiting a missing code of binary value 00100001, decimal 33.

The histogram testing technique merely involves collecting many A/D converter output samples and plotting the number of occurrences of each sample value versus that sample value as shown in Figure 13-27. Any missing code (like our missing 33) would show up in the histogram as a zero value. That is, there were zero occurrences of the binary code representing a decimal 33.
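The histogram test is simple to prototype. The sketch below models a hypothetical 8-bit converter (offset-binary codes 0 through 255 for inputs between –1 and +1) that outputs 32 whenever 33 is called for, drives it with a full-scale sinewave, and reports any code inside the observed range that never occurs. The converter model and all names are assumptions made for illustration.

```python
import math
from collections import Counter

def ideal_8bit_code(v):
    """Map an input v in [-1, 1] to an 8-bit code 0..255."""
    return min(255, max(0, math.floor(127.5 + 127.5 * v + 0.5)))

def faulty_converter(v, missing_code=33):
    """Hypothetical defective converter: outputs the code below the missing one."""
    code = ideal_8bit_code(v)
    return code - 1 if code == missing_code else code

def find_missing_codes(samples):
    """Codes between the smallest and largest observed code that have zero occurrences."""
    hist = Counter(samples)
    return [c for c in range(min(samples), max(samples) + 1) if hist[c] == 0]

num = 4096
analog = [math.sin(2 * math.pi * 7 * n / num) for n in range(num)]
codes = [faulty_converter(v) for v in analog]
print(find_missing_codes(codes))            # [33]
```

The same defect also inflates the count for code 32, which is a second clue in a plotted histogram.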

While contemplating the convolution relationships in Eq. (5-31) and Figure 5-41, digital signal processing practitioners realized that convolution could sometimes be performed more efficiently using FFT algorithms than it could be using the direct convolution method. This FFT-based convolution scheme, called fast convolution, is diagrammed in Figure 13-28.

The standard convolution equation for an M-tap nonrecursive FIR filter, given in Eq. (5-6) and repeated here, is

$$y(n) = \sum_{k=0}^{M-1} h(k)\,x(n-k) = h(k) * x(n) \tag{13-67}$$

where h(k) is the impulse response sequence (coefficients) of the FIR filter and the * symbol indicates convolution. It has been shown that when the final y(n) output sequence has a length greater than 30, the process in Figure 13-28 requires fewer multiplications than implementing the convolution expression in Eq. (13-67) directly. Consequently, this fast convolution technique is a very powerful signal processing tool, particularly when used for digital filtering. Very efficient FIR filters can be designed using this technique because, if their impulse response h(k) is constant, then we don't have to bother recalculating H(m) each time a new x(n) sequence is filtered. In this case the H(m) sequence can be precalculated and stored in memory.

The necessary forward and inverse FFT sizes must of course be equal, and are dependent upon the length of the original h(k) and x(n) sequences. Recall from Eq. (5-29) that if h(k) is of length P and x(n) is of length Q, the length of the final y(n) sequence will be (P+Q–1). For this fast convolution technique to give valid results, the forward and inverse FFT sizes must be equal and no smaller than (P+Q–1). This means that h(k) and x(n) must both be padded with zero-valued samples, at the end of their respective sequences, to make their lengths identical and no smaller than (P+Q–1). This zero padding will not invalidate the fast convolution results. So to use fast convolution, we must choose an N-point FFT size such that N ≥ (P+Q–1) and zero pad h(k) and x(n) so they have new lengths equal to N.

An interesting aspect of fast convolution, from a hardware standpoint, is that the FFT indexing bit-reversal problem discussed in Sections 4.5 and 4.6 is not an issue here. If the identical FFT structures used in Figure 13-28 result in X(m) and H(m) having bit-reversed indices, the multiplication can still be performed directly on the scrambled H(m) and X(m) sequences. Then an appropriate inverse FFT structure can be used that expects bit-reversed input data. That inverse FFT then provides an output y(n) whose data index is in the correct order!
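The zero-padding rule and the transform, multiply, inverse-transform flow can be sketched as follows. This is a minimal illustration, not an optimized implementation: it uses a small recursive radix-2 FFT, rounds N up to a power of two (an assumption imposed by that radix-2 routine), and computes the inverse FFT with the conjugation trick described earlier.

```python
import cmath

def fft(x):
    """Recursive radix-2 decimation-in-time FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even, odd = fft(x[0::2]), fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

def ifft(big_x):
    """Inverse FFT via conjugate, forward FFT, conjugate, divide by N."""
    n = len(big_x)
    return [v.conjugate() / n for v in fft([u.conjugate() for u in big_x])]

def fast_convolve(h, x):
    """Linear convolution of real h and x using N-point FFTs, N >= P+Q-1."""
    out_len = len(h) + len(x) - 1
    n = 1
    while n < out_len:
        n *= 2
    h_pad = list(h) + [0.0] * (n - len(h))      # zero pad at the end
    x_pad = list(x) + [0.0] * (n - len(x))
    big_y = [a * b for a, b in zip(fft(h_pad), fft(x_pad))]
    return [v.real for v in ifft(big_y)[:out_len]]

def direct_convolve(h, x):
    """Direct evaluation of the convolution sum, for comparison."""
    y = [0.0] * (len(h) + len(x) - 1)
    for k, hk in enumerate(h):
        for n, xn in enumerate(x):
            y[k + n] += hk * xn
    return y

print([round(v, 6) for v in fast_convolve([1.0, 2.0, 3.0], [1.0, 1.0])])
print(direct_convolve([1.0, 2.0, 3.0], [1.0, 1.0]))
```

When h(k) is fixed, the `fft(h_pad)` result could be computed once and reused for every new x(n) block.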

Section D.4 in Appendix D discusses the normal distribution curve as it relates to random data. A problem we may encounter is how actually to generate random data samples whose distribution follows that normal (Gaussian) curve. There's a straightforward way to solve this problem using any software package that can generate uniformly distributed random data, as most of them do[27]. Figure 13-29 shows our situation pictorially where we require random data that's distributed normally with a mean (average) of μ' and a standard deviation of σ', as in Figure 13-29(a), and all we have available is a software routine that generates random data that's uniformly distributed between zero and one as in Figure 13-29(b).

Figure 13-29. Probability distribution functions: (a) Normal distribution with mean = μ', and standard deviation σ'; (b) Uniform distribution between zero and one.

As it turns out, there's a principle in advanced probability theory, known as the Central Limit Theorem, that says when random data from an arbitrary distribution is summed over M samples, the probability distribution of the sum begins to approach a normal distribution as M increases[28–30]. In other words, if we generate a set of N random samples that are uniformly distributed between zero and one, we can begin adding other sets of N samples to the first set. As we continue summing additional sets, the distribution of the N-element set of sums becomes more and more normal. We can sound impressive and state that “the sum becomes asymptotically normal.” Experience has shown that for practical purposes, if we sum M ≥ 30 times, the summed data distribution is essentially normal. With this rule in mind, we're half way to solving our problem.

After summing M sets of uniformly distributed samples, the summed set ysum will have a distribution as shown in Figure 13-30.

Figure 13-30. Probability distribution of the summed set of random data derived from uniformly distributed data.

Because we've summed M sets, the mean of ysum is μ = M/2. To determine ysum's standard deviation σ, we assume that the six sigma point is equal to M–μ. That is,

$$6\sigma = M - \mu. \tag{13-68}$$

That assumption is valid because we know that the probability of an element in ysum being greater than M is zero, and the probability of having a normal data sample at six sigma is one chance in six billion, or essentially zero. Because μ = M/2, from Eq. (13-68), ysum's standard deviation is set to

$$\sigma = \frac{M - \mu}{6} = \frac{M - M/2}{6} = \frac{M}{12}. \tag{13-69}$$

To convert the ysum data set to our desired data set having a mean of μ' and a standard deviation of σ', we:

subtract M/2 from each element of ysum to shift its mean to zero,

ensure that 6σ' is equal to M/2 by multiplying each element in the shifted data set by 12σ'/M, and

center the new data set about the desired μ' by adding μ' to each element of the new data.

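If we call our desired normally distributed random data set ydesired, then the nth element of that set is described mathematically as

$$y_{\text{desired}}(n) = \frac{12\sigma'}{M}\left[y_{\text{sum}}(n) - \frac{M}{2}\right] + \mu'. \tag{13-70}$$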
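The three steps above can be sketched as follows. By default the code applies the 12σ'/M scale factor exactly as described. One caveat worth checking numerically: a sum of M independent uniform samples has a variance of M/12, so with that scale factor the measured standard deviation comes out near σ'·sqrt(12/M) rather than σ' unless M = 12. The optional `exact` flag is my own addition, not part of the method described here, and rescales by σ'/sqrt(M/12) instead.

```python
import math
import random
import statistics

def normal_from_uniform(count, m_sets, mu, sigma, exact=False, seed=0):
    """Approximately normal samples built from sums of M uniform(0, 1) samples."""
    rng = random.Random(seed)
    if exact:
        scale = sigma / math.sqrt(m_sets / 12.0)   # assumed alternative scaling
    else:
        scale = 12.0 * sigma / m_sets              # six-sigma scaling from the text
    out = []
    for _ in range(count):
        y_sum = sum(rng.random() for _ in range(m_sets))
        out.append(scale * (y_sum - m_sets / 2.0) + mu)   # shift, scale, recenter
    return out

text_data = normal_from_uniform(5000, 30, mu=10.0, sigma=2.0)
exact_data = normal_from_uniform(5000, 30, mu=10.0, sigma=2.0, exact=True)
print(statistics.mean(text_data), statistics.pstdev(text_data))
print(statistics.mean(exact_data), statistics.pstdev(exact_data))
```

Both variants are centered on μ'; they differ only in how widely the samples spread, so it is worth measuring the output standard deviation before relying on it.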

Our discussion thus far has had a decidedly software algorithm flavor, but hardware designers also occasionally need to generate normally distributed random data at high speeds in their designs. For you hardware designers, Reference [30] presents an efficient hardware design technique to generate normally distributed random data using fixed-point arithmetic integrated circuits.

The above method for generating normally distributed random numbers works reasonably well, but its results are not perfect because the tails of the probability distribution curve in Figure 13-30 are not perfectly Gaussian.[†] An advanced, and more statistically correct (improved randomness), technique that you may want to explore is called the Ziggurat method[31–33].

You can cancel the nonlinear phase effects of an IIR filter by following the process shown in Figure 13-31(a). The y(n) output will be a filtered version of x(n) with no filter-induced phase distortion. The same IIR filter is used twice in this scheme, and the time reversal step is a straight left-right flipping of a time-domain sequence. Consider the following. If some spectral component in x(n) has an arbitrary phase of α degrees, and the first filter induces a phase shift of –β degrees, that spectral component's phase at node A will be α–β degrees. The first time reversal step will conjugate that phase and induce an additional phase shift of –θ degrees. (Appendix C explains this effect.) Consequently, the component's phase at node B will be –α+β–θ degrees. The second filter's phase shift of –β degrees yields a phase of –α–θ degrees at node C. The final time reversal step (often omitted in literary descriptions of this zero-phase filtering process) will conjugate that phase and again induce an additional phase shift of –θ degrees. Thankfully, the spectral component's phase in y(n) will be α+θ–θ = α degrees, the same phase as in x(n). This property yields an overall filter whose phase response is zero degrees over the entire frequency range.

An equivalent zero-phase filter is presented in Figure 13-31(b). Of course, these methods of zero-phase filtering cannot be performed in real time because we can't reverse the flow of time (at least not in our universe). This filtering is a block processing, or off-line process, such as filtering an audio sound file on a computer. We must have all the time samples available before we start processing. The initial time reversal in Figure 13-31(b) illustrates this restriction.

There will be filter transient effects at the beginning and end of the filtered sequences. If transient effects are bothersome in a given application, consider discarding L samples from the beginning and end of the final y(n) time sequence, where L is 4 (or 5) times the order of the IIR filter.

By the way, the final peak-to-peak passband ripple (in dB) of this zero-phase filtering process will be twice the peak-to-peak passband ripple of the single IIR filter. The final stopband attenuation (in dB) will also be double that of the single filter.
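The filter, reverse, filter, reverse sequence described above can be tried with nothing more than MATLAB's built-in filter and fliplr functions. The sketch below is a minimal illustration: the first-order IIR coefficients, the test tone, and the variable names are assumptions made for the demo. It measures the tone's phase before and after processing, using only the middle samples to stay clear of the end transients.

```matlab
% Sketch of zero-phase filtering using forward and time-reversed IIR passes.
% Filter coefficients and the test tone are illustrative assumptions.
b = 0.2;  a = [1 -0.8];          % first-order IIR lowpass (assumed)
f0 = 0.02;                       % tone frequency in cycles/sample
n = 0:499;
x = cos(2*pi*f0*n);

vA = filter(b, a, x);            % node A: first pass through the filter
vB = fliplr(vA);                 % node B: time reversal
vC = filter(b, a, vB);           % node C: second pass, same filter
y  = fliplr(vC);                 % final time reversal gives y(n)

y1 = vA;                         % single-pass output, kept for comparison

% Phase of each sequence at f0, measured over the middle samples only
mid = 101:400;                   % 300 samples = 6 full cycles of the tone
ph = @(s) angle(sum(s(mid) .* exp(-1j*2*pi*f0*n(mid))));

fprintf('phase shift, single pass: %.2f degrees\n', (ph(y1) - ph(x))*180/pi);
fprintf('phase shift, zero-phase : %.2f degrees\n', (ph(y)  - ph(x))*180/pi);
```

The single-pass output shows a clear phase lag at the tone frequency, while the two-pass output does not. The tone's amplitude is scaled by the squared magnitude response, consistent with the doubled ripple and attenuation noted above.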

Here's an interesting technique for improving the stopband attenuation of a digital filter under the condition that we're unable, for whatever reason, to modify that filter's coefficients. Actually, we can double a filter's stopband attenuation by cascading the filter with itself. This works, as shown in Figure 13-32(a), where the frequency magnitude response of a single filter is a dashed curve |H(m)| and the response of the filter cascaded with itself is represented by solid curve |H2(m)|. The problem with this simple cascade idea is that it also doubles the passband peak-to-peak ripple as shown in Figure 13-32(b). The frequency axis in Figure 13-32 is normalized such that a value of 0.5 represents half the signal sample rate.

Figure 13-32. Frequency magnitude responses of a single filter and that filter cascaded with itself: (a) full response; (b) passband detail.
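The doubling follows directly from the fact that cascading two filters multiplies their magnitude responses. As a short worked relation (the subscript "cascade" is a label introduced here for illustration):

$$|H_{cascade}(m)| = |H(m)|\cdot|H(m)| = |H(m)|^2 \quad\Rightarrow\quad 20\log_{10}|H_{cascade}(m)| = 2\cdot 20\log_{10}|H(m)|$$

For example, taking illustrative numbers, a stopband level of –40 dB becomes –80 dB, while a 0.5 dB peak-to-peak passband ripple becomes 1 dB.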

Well, there's a better scheme for improving the stopband attenuation performance of a filter and avoiding passband ripple degradation without actually changing the filter's coefficients. The technique is called filter sharpening[34], and is shown as Hs in Figure 13-33.

The delay element in Figure 13-33 is equal to (N–1)/2 samples where N is the number of h(k) coefficients, the unit-impulse response length, in the original H(m) FIR filter. Using the sharpening process results in the improved |Hs(m)| filter performance shown as the solid curve in Figure 13-34, where we see the increased stopband attenuation and reduced passband ripple beyond that afforded by the original H(m) filter. Because of the delayed time-alignment constraint, filter sharpening is not applicable to filters having non-constant group delay, such as minimum-phase FIR filters or IIR filters.

If more stopband attenuation is needed, the process shown in Figure 13-35 can be used, where again the delay element is equal to (N–1)/2 samples.

The filter sharpening procedure is straightforward and applicable to lowpass, bandpass, and highpass FIR filters having symmetrical coefficients and an odd number of taps. Filter sharpening can be used whenever a given filter response cannot be modified, such as an unchangeable software subroutine, and can even be applied to cascaded integrator-comb (CIC) filters to flatten their passband responses, as well as FIR fixed-point multiplierless filters where the coefficients are constrained to be powers of two[35,36].
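A small numerical experiment can make the sharpening idea concrete. The sketch below assumes the sharpening structure realizes the well-known polynomial Hs = 3H² – 2H³, with the 3H² branch delayed by (N–1)/2 samples so that both branches are time aligned. That polynomial is an assumption of this sketch, since the figure is not reproduced here. The 21-tap windowed-sinc lowpass prototype and the band edges used for measurement are also illustrative choices.

```matlab
% Sketch of FIR filter sharpening, ASSUMING the polynomial Hs = 3H^2 - 2H^3.
% The prototype lowpass filter is an illustrative design, not from the text.
N = 21;  D = (N-1)/2;                       % odd tap count; delay of (N-1)/2
k = -D:D;  fc = 0.2;                        % cutoff in cycles/sample (assumed)
h = 2*fc*ones(1, N);                        % center tap of the ideal lowpass
nz = (k ~= 0);
h(nz) = sin(2*pi*fc*k(nz)) ./ (pi*k(nz));   % remaining ideal lowpass taps
h = h .* (0.54 + 0.46*cos(pi*k/D));         % symmetric Hamming window
h = h / sum(h);                             % unity gain at DC

h2 = conv(h, h);                            % H^2, group delay 2D
h3 = conv(h2, h);                           % H^3, group delay 3D
% Delay the 3H^2 branch by D samples so both branches have delay 3D
hs = 3*[zeros(1, D), h2, zeros(1, D)] - 2*h3;

K = 1024;
f = (0:K-1)/K;                              % frequency in cycles/sample
Hmag  = abs(fft(h,  K));
Hsmag = abs(fft(hs, K));
stop = (f >= 0.35) & (f <= 0.5);            % measurement bands (assumed)
pass = (f <= 0.08);

fprintf('worst stopband gain: %.1f dB single, %.1f dB sharpened\n', ...
        20*log10(max(Hmag(stop))), 20*log10(max(Hsmag(stop))));
fprintf('max passband error : %.2e single, %.2e sharpened\n', ...
        max(abs(Hmag(pass) - 1)), max(abs(Hsmag(pass) - 1)));
```

Under these assumptions the sharpened response shows both a lower stopband level and a smaller passband deviation than the prototype, at the cost of three filtering passes and a longer overall delay.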

There are many digital communications applications where a real signal is centered at one fourth the sample rate, or fs/4. This condition makes quadrature downconversion particularly simple. (See Sections 8.9 and 13.1.) In the event that you'd like to generate an interpolated (increased sample rate) version of the bandpass signal but maintain its fs/4 center frequency, there's an efficient way to do so[37]. Suppose we want to interpolate by a factor of two so the output sample rate is twice the input sample rate, fs-out = 2fs-in. In this case the process is: quadrature downconversion by fs-in/4, interpolation factor of two, quadrature upconversion by fs-out/4, and then take only the real part of the complex upconverted sequence. The implementation of this scheme is shown at the top of Figure 13-36.

The sequences applied to the first multiplier in the top signal path are the real x(n) input and the repeating mixing sequence 1,0,–1,0. That mixing sequence is the real (or in-phase) part of the complex exponential

$$e^{-j2\pi n/4} = \cos(\pi n/2) - j\sin(\pi n/2)$$

needed for quadrature downconversion by fs/4. Likewise, the repeating mixing sequence 0,–1,0,1 applied to the first multiplier in the bottom path is the imaginary (or quadrature phase) part of that complex downconversion exponential. The ↑2 symbol means insert one zero-valued sample between each pair of adjacent samples at the A nodes. The final subtraction to obtain y(n) is how we extract the real part of the complex sequence at Node D. (That is, we're extracting the real part of the product of the complex signal at Node C times $e^{j2\pi n/4}$.) The spectra at various nodes of this process are shown at the bottom of Figure 13-36. The shaded spectra indicate true spectral components, while the white spectra represent spectral replications. Of course, the same lowpass filter must be used in both processing paths to maintain the proper time delay and orthogonal phase relationships.


There are several additional issues worth considering regarding this interpolation process[38]. If the amplitude loss, inherent in interpolation, of a factor of two is bothersome, we can make the final mixing sequences 2,0,–2,0, and 0,2,0,–2 to compensate for that loss. Because there are so many zeros in the sequences at Node B (three-fourths of the samples), we should consider efficient polyphase filters for the lowpass filtering. Finally, if it's sensible in your implementation, consider replacing the final adder with a multiplexer (because alternate samples of the sequences at Node D are zeros). In this case, the mixing sequence in the bottom path would be changed to 0,–1,0,1.
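The whole chain (downconvert, zero stuff, lowpass filter, upconvert, keep the real part) can be sketched as follows. The test tone, sequence lengths, and the 31-tap windowed-sinc lowpass filter with its cutoff at one eighth of the output sample rate are all assumptions made for illustration. A polyphase implementation would be more efficient but is omitted to keep the sketch short. Frequencies are expressed in cycles per sample.

```matlab
% Sketch: interpolate by two while keeping a real signal centered at fs/4.
% Tone frequency, lengths, and the lowpass design are illustrative assumptions.
Nin = 400;
n = 0:Nin-1;
x = cos(2*pi*0.26*n);                      % real input, 0.01 above fs_in/4

% Quadrature downconversion by fs_in/4 using the 4-sample mixing sequences
a_i = x .* repmat([1 0 -1 0], 1, Nin/4);   % top path, in-phase
a_q = x .* repmat([0 -1 0 1], 1, Nin/4);   % bottom path, quadrature

% Insert one zero-valued sample between adjacent samples
b_i = zeros(1, 2*Nin);  b_i(1:2:end) = a_i;
b_q = zeros(1, 2*Nin);  b_q(1:2:end) = a_q;

% Identical lowpass filter in both paths (Hamming-windowed sinc, assumed)
D = 15;  k = -D:D;  fc = 0.125;            % cutoff at fs_out/8
h = 2*fc*ones(size(k));
nz = (k ~= 0);
h(nz) = sin(2*pi*fc*k(nz)) ./ (pi*k(nz));
h = h .* (0.54 + 0.46*cos(pi*k/D));
h = h / sum(h);
c_i = filter(h, 1, b_i);
c_q = filter(h, 1, b_q);

% Upconversion by fs_out/4; the subtraction keeps only the real part
y = c_i .* repmat([1 0 -1 0], 1, 2*Nin/4) ...
  - c_q .* repmat([0 1 0 -1], 1, 2*Nin/4);

% Locate the output spectral peak
Y = abs(fft(y));
[~, idx] = max(Y(1:Nin));
f_peak = (idx - 1)/(2*Nin);
fprintf('output peak at %.4f cycles/sample\n', f_peak);
```

An input tone 0.01 cycles/sample above fs-in/4 should appear 0.005 cycles/sample above fs-out/4 at the output, because the same offset in Hz is half as large relative to the doubled sample rate.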

In the practical world of discrete spectrum analysis, we often want to estimate the frequency of a sinusoid (or the center frequency of a very narrowband signal of interest). Upon applying the radix-2 fast Fourier transform (FFT), our narrowband signals of interest rarely reside exactly on an FFT bin center whose frequency is exactly known. As such, due to the FFT's leakage properties, the discrete spectrum of a sinusoid having N time-domain samples may look like the magnitude samples shown in Figure 13-37(a). There we see the sinusoid's spectral peak residing between the FFT's m = 5 and m = 6 bin centers. (Variable m is an N-point FFT's frequency-domain index. The FFT bin spacing is fs/N where, as always, fs is the sample rate.) Close examination of Figure 13-37(a) allows us to say the sinusoid lies between m = 5 and m = 5.5, because we see that the maximum spectral sample is closer to the m = 5 bin center than the m = 6 bin center. The real-valued sinusoidal time signal has, in this example, a frequency of 5.25fs/N Hz. In this situation, our frequency estimation resolution is half the FFT bin spacing. We often need better frequency estimation resolution, and there are indeed several ways to improve that resolution.

We could collect, say, 4N time-domain signal samples and perform a 4N-point FFT yielding a reduced bin spacing of fs/4N. Or we could pad (append to the end of the original time samples) the original N time samples with 3N zero-valued samples and perform a 4N-point FFT on the lengthened time sequence. That would also provide an improved frequency resolution of fs/4N, as shown in Figure 13-37(b). With the spectral peak located at bin mpeak = 21, we estimate the signal's center frequency, in Hz, using

$$f_{center} = m_{peak}\frac{f_s}{4N} = \frac{21 f_s}{4N} = 5.25\frac{f_s}{N}$$

Both schemes, collecting more data and zero padding, are computationally expensive. Many other techniques for enhanced-precision frequency measurement have been described in the scientific literature, from the close-to-home field of geophysics to the lofty studies of astrophysics, but most of those schemes seek precision without regard to computational simplicity. Here we describe a computationally simple frequency estimation scheme[3].

Assume we have FFT spectral samples X(m), of a real-valued narrowband time signal, whose magnitudes are shown in Figure 13-38. The vertical magnitude axis is linear, not logarithmic.

The signal's index-based center frequency, mpeak, can be estimated using

$$m_{peak} = m_k - \text{real}(\delta)$$

where real(δ) means the real part of the δ correction factor defined as

$$\delta = \frac{X(m_{k+1}) - X(m_{k-1})}{2X(m_k) - X(m_{k-1}) - X(m_{k+1})}$$

where mk is the integer index of the largest magnitude sample |X(mk)|. Values X(mk–1) and X(mk+1) are the complex spectral samples on either side of the peak sample as shown in Figure 13-38. Based on the complex spectral values, we compute the signal's index-based frequency mpeak (which may not be an integer), and apply that value to

$$f_{peak} = m_{peak}\frac{f_s}{N}$$

to provide a frequency estimate in Hz. Equations (13-73) and (13-74) apply only when the majority of the signal's spectral energy lies within a single FFT bin width (fs/N).

This spectral peak location estimation algorithm is quite accurate for its simplicity. Its peak frequency estimation error is roughly 0.06, 0.04, and 0.03 bin widths for signal-to-noise ratios of 3, 6, and 9 dB respectively. Not bad at all! The nice features of the algorithm are that it does not require the original time samples to be windowed, as do some other spectral peak location algorithms, and it uses the raw FFT samples without the need for spectral magnitudes to be computed.
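To compare the zero-padding approach with the three-sample correction, here is a minimal noise-free sketch. It takes the correction factor to be δ = [X(mk+1) – X(mk–1)] / [2X(mk) – X(mk–1) – X(mk+1)] with mpeak = mk – real(δ). The FFT size, the tone's location at 40.25 bins, and the 1000 Hz sample rate are assumptions chosen for the demo.

```matlab
% Sketch: spectral peak location by zero padding and by a three-sample
% correction factor. N, f_bins, and fs are illustrative assumptions.
N = 256;
n = 0:N-1;
f_bins = 40.25;                        % true frequency in FFT bin units
fs = 1000;                             % hypothetical sample rate in Hz
x = cos(2*pi*f_bins*n/N);              % real-valued, unwindowed test tone

% Method 1: zero pad to 4N points and pick the largest magnitude sample
Xzp = fft(x, 4*N);                     % fft() appends 3N zeros
[~, izp] = max(abs(Xzp(1:2*N)));       % search positive frequencies only
m_zp = (izp - 1)/4;                    % convert back to N-point bin units

% Method 2: N-point FFT plus the complex three-sample correction
X = fft(x);
[~, idx] = max(abs(X(1:N/2)));         % idx is a one-based MATLAB index
mk = idx - 1;                          % zero-based bin index (needs mk >= 1)
Xm = X(idx - 1);  X0 = X(idx);  Xp = X(idx + 1);
delta = (Xp - Xm) / (2*X0 - Xm - Xp);
m_peak = mk - real(delta);
f_peak = m_peak*fs/N;                  % frequency estimate in Hz

fprintf('zero padding : %.4f bins\n', m_zp);
fprintf('three-sample : %.4f bins (%.3f Hz)\n', m_peak, f_peak);
```

The second method needs only the N-point FFT and a few complex arithmetic operations, yet it is not limited to the quarter-bin grid of the 4N-point zero-padded FFT.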

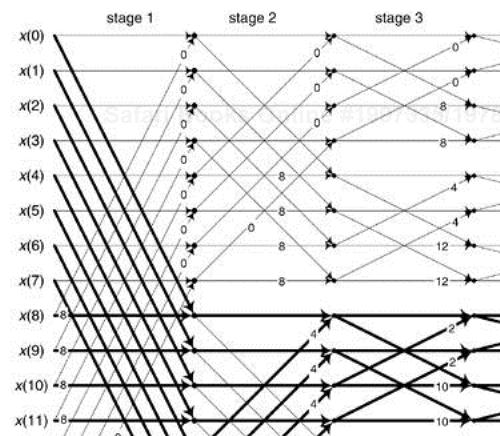

Typical applications using an N-point radix-2 FFT accept N input time samples, x(n), and compute N frequency-domain samples X(m). However, there are non-standard FFT applications (for example, specialized harmonic analysis, or perhaps using an FFT to implement a bank of filters) where only a subset of the X(m) results are required. Consider Figure 13-39 showing a 16-point radix-2 decimation in time FFT, and assume we only need to compute the X(1), X(5), X(9) and X(13) output samples. In this case, rather than compute the entire FFT we only need perform the computations indicated by the heavy lines. Reduced-computation FFTs are often called pruned FFTs[39–42]. (As we did in Chapter 4, for simplicity the butterflies in Figure 13-39 only show the twiddle phase angle factors and not the entire twiddle phase angles.) To implement pruned FFTs, we need to know the twiddle phase angles associated with each necessary butterfly computation as shown in Figure 13-40(a). Here we give an interesting algorithm for computing the 2πA1/N and 2πA2/N twiddle phase angles for an arbitrary-size FFT[43].

The algorithm draws upon the following characteristics of a radix-2 decimation-in-time FFT:

A general FFT butterfly is that shown in Figure 13-40(a).

The A1 and A2 angle factors are the integer values shown in Figure 13-39.

An N-point radix-2 FFT has M stages (shown at the top of Figure 13-39) where M = log2(N).

Each stage comprises N/2 butterflies.

The A1 phase angle factor of an arbitrary butterfly is

$$A_1 = B_{rev}\left[\left\lfloor \frac{2^S (B-1)}{N} \right\rfloor\right]$$

where S is the index of the M stages over the range 1 ≤ S ≤ M. Similarly, B serves as the index for the N/2 butterflies in each stage, where 1 ≤ B ≤ N/2. B = 1 means the top butterfly within a stage. The ⌊q⌋ operation is a function that returns the largest integer ≤ q. Brev[z] represents the three-step operation of: convert decimal integer z to a binary number represented by M–1 binary bits, perform bit reversal on the binary number as discussed in Section 4.5, and convert the bit reversed number back to a decimal integer yielding angle factor A1. Angle factor A2, in Figure 13-40(a), is then computed using

$$A_2 = A_1 + \frac{N}{2}$$

The algorithm can also be used to compute the single twiddle angle factor of the optimized butterfly shown in Figure 13-40(b). Below is a code listing, in MATLAB, implementing our twiddle angle factor computational algorithm.

clear

N = 16; %FFT size

M = log2(N); %Number of stages

Sstart = 1; %First stage to compute

Sstop = M; %Last stage to compute

Bstart = 1; %First butterfly to compute

Bstop = N/2; %Last butterfly to compute

Pointer = 0; %Init Results pointer

for S = Sstart:Sstop

for B = Bstart:Bstop

Z = floor((2^S*(B-1))/N); %Compute integer z

% Compute bit reversal of Z

Zbr = 0;

for I = M-2:-1:0