3

An Absence of Candor

"Don't be afraid of opposition. Remember, a kite rises against, not with the wind."

—Hamilton Wright Mabie

When Jack Welch became CEO of General Electric in April 1981, he found that the company had become incredibly bureaucratic and hierarchical. In some instances, 12 layers of management separated workers on the factory floor from the office of the CEO. Many managers, particularly those serving on the large corporate office staff, spent considerable amounts of time reviewing and approving plans, reports, and memos in a relatively passive manner.

The focal point of many strategy review sessions became GE's infamous, incredibly thick, planning books. Chock full of forecasts and calculations, these books passed through many layers of the hierarchy for review, but they rarely became the basis for an open and frank dialogue between the CEO and the person leading a particular business unit. Welch soon became frustrated that strategic planning sessions had become "dog and pony shows" rather than candid discussions about the future direction of each business. Everyone spoke politely, refrained from ruffling any feathers, and generally played it "close to the vest" rather than openly confronting controversial issues.

Welch described the atmosphere in these sessions as one of "superficial congeniality." By that, he meant that the climate seemed "pleasant on the surface, with distrust and savagery roiling beneath it." As he put it, "The phrase seems to sum up how bureaucrats typically behave, smiling in front of you but always looking for a 'gotcha' behind your back."1 Candor and constructive conflict simply did not characterize most communications within the General Electric organization.

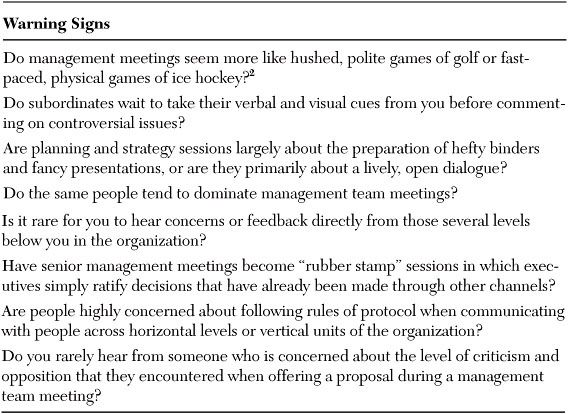

Think for a moment about your own organization. Do you have an atmosphere of candid communication? Are people engaging in "superficial congeniality" during meetings? Do people say "yes" when they really mean "no"? Are people comfortable speaking up when they have concerns or dissenting views? Do you find yourself taking silence to mean consent? Before reading on, review the list of warning signs, found in Table 3-1, that might suggest the existence of a serious communication problem within your organization—namely, a lack of cognitive, or task-oriented, conflict.

Table 3-1. Signals That Insufficient Candor Exists Within Your Organization2

After answering the questions in Table 3-1, you may conclude that your organization has a different problem than the one Welch discovered when he took over at General Electric—namely, the existence of too much cognitive conflict. In short, people may argue a great deal, but so much so that the organization finds it difficult to reach a final decision.3 The later chapters of this book examine this problem in more detail. Specifically, later chapters address how leaders can foster constructive conflict, while also reaching closure in a timely manner. For many of you, however, conflict and candor may be woefully inadequate in your organizations. The remainder of this chapter focuses on understanding that particular problem.

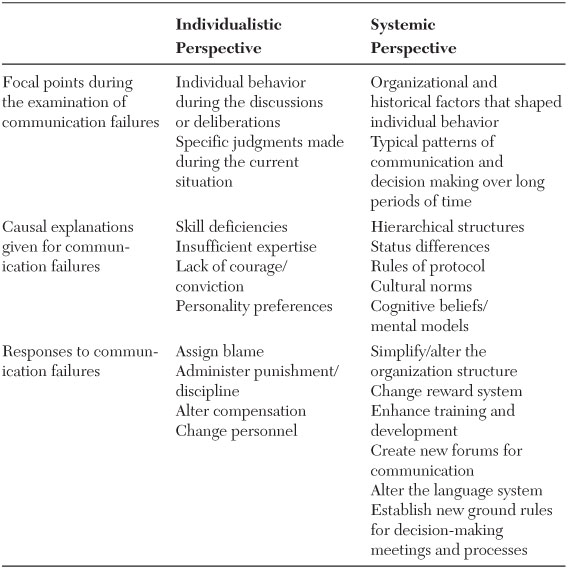

As you consider Welch's description of GE in 1981 (or the assessment that you just completed of your organization), you might conclude that the firms simply need a change in personnel. Remove some of those GE bureaucrats, and the nature of the dialogue within these planning sessions would change. Sounds reasonable, does it not? Perhaps many of the bureaucrats simply did not have the courage to express their opinions on thorny issues, or they had become too comfortable and complacent in their jobs, preferring not to question the status quo to which they had grown so accustomed. Maybe GE had hired a number of managers who did not have the personality to engage in constructive conflict and debate with highly talented peers and superiors. Alternatively, one might argue that these managers refrained from engaging in candid give-and-take during planning sessions because they did not have the capability and expertise to offer informed judgments. Better managers, with greater experience and a deeper understanding of the firm's businesses, might be more willing to engage in frank dialogue and lively debate.

Unfortunately, when it comes to encouraging more candor and constructive dissent within organizations, changing the players often does not change the outcome of the game. In most instances, the unwillingness to speak up, to express dissent, and to challenge prevailing opinions is not simply about the existence of personality flaws or skill deficiencies among key people within the organization. The problem typically runs much deeper; it has structural and cultural roots that have grown over time and become difficult to change. In short, the problem is systemic (see Table 3-2). New people, put in the same situations, might very well behave in a similar manner.4

Table 3-2. Two Perspectives on the Failure to Speak Up

To understand the systemic nature of this problem, let's take a closer look at how NASA managers and engineers behaved during the Columbia space shuttle's catastrophic final flight.

Columbia's Final Mission

When NASA engineers learned of the foam strike that occurred during the launch of Columbia on January 16, 2003, some of them became concerned because of the apparent size of the debris that had impacted the shuttle. Rodney Rocha, a NASA engineer with expertise in this area, recalls that he "gasped audibly" when he viewed photos of the foam strike on the day after the launch.5 Soon, an ad-hoc group formed to investigate the issue. The engineers called themselves the Debris Assessment Team, and they elected Rocha as the co-chair of the group (along with Pam Madera, an engineering manager from NASA's prime contractor on the shuttle program).6

On Flight Day 5, Linda Ham chaired a regular meeting of the Mission Management Team, the group responsible for overseeing the Columbia's mission and resolving outstanding problems that occurred during the flight.7 When the foam strike issue surfaced, she reminded everyone that debris strikes had occurred often on previous missions. Indeed, foam had impacted the shuttle on almost every mission stretching back to the first flight in 1981. Although the original design specifications indicated that no foam shedding should occur, engineers and managers gradually became accustomed to the debris hits, and they grew comfortable with the notion that these foam strikes could not endanger the shuttle. Instead, they simply represented a maintenance problem that would lengthen the turnaround time between missions. During this meeting, Ham also remarked that foam was "not really a factor during the flight because there is not much we can do about it."8 By that, she meant that, even if the foam strike constituted a "safety of flight" risk, she did not believe that NASA could engage in any action during the mission to ensure the shuttle's safe re-entry into the earth's atmosphere.

Meanwhile, the Debris Assessment Team concluded that it needed additional data to make an accurate assessment of the damage imposed by the foam strike. The team decided to petition superiors within the engineering chain of command for additional imagery of the shuttle, a request that required NASA to seek assistance from the Department of Defense, which could employ its spy satellites to take photos of the shuttle in space. Interestingly, they chose not to petition Ham directly, apparently because of concerns that such an action may have contradicted the usual rules of protocol.

In any event, shuttle management chose not to seek additional imagery from defense officials. Rocha became incensed, and he wrote a scathing e-mail detailing how he felt about management's failure to approve the imagery request. He shared the e-mail with his colleagues in his unit, but he chose not to send the e-mail to superiors or to senior shuttle program managers.9 Later, he explained, "Engineers were often told not to send messages much higher than their own rung in the ladder."10 Here, we have a clear instance in which Rocha felt reluctant to express his dissenting views and therefore did not communicate candidly with those above him.

Several days later, the foam strike issue resurfaced at a regular meeting of the Mission Management Team. Rocha attended along with a number of others, including Shuttle Program Manager Ron Dittemore. Ham received an update on the Debris Assessment Team's work from a manager who had obtained an update from Rocha and his group. That manager emphasized that the Debris Assessment Team had concluded that the foam strike was not a safety of flight issue based upon computer modeling, but he did not mention the desire for additional imagery or the fact that the computer models were not designed to analyze this type of debris strike. Ham quickly affirmed the conclusion that this was not a safety of flight issue, and she repeatedly emphasized this finding to her team. She asserted forcefully that the foam hit represented a turnaround issue. Despite his deeply held reservations and doubts, Rocha did not speak up during the meeting.

Later, when asked to comment on the fact that engineers did not speak up more forcefully to express their grave concerns, Flight Director Leroy Cain admonished the engineers, saying, "You are duty bound as a member of this team to voice your concerns, particularly as they relate to safety of flight. You wouldn't have to holler. You stand up and say, 'Here's my concern, and this is why I'm uncomfortable.'"11 Rocha disagreed, indicating that it was not nearly that easy to express a dissenting view. He remarked, "I couldn't do it (speak up more forcefully)...I'm too low down...and she's (Ham) way up here."12

When we look at this tragic situation, we can ask: Would things have transpired differently if other people occupied the positions held by Ham and Rocha? Perhaps, but a close look at the situation suggests that this may not necessarily be the case. As the Columbia Accident Investigation Board examined the incident, they found that the behavior of many of the managers and engineers during the Columbia tragedy reflected cultural norms and deeply ingrained patterns of behavior that had existed for years at NASA. The organization had operated according to hierarchical procedures and strict rules of protocol for as long as the shuttles had been flying. Communications often followed a strict chain of command, and engineers rarely interacted directly with senior managers who were several levels higher in the organization. Status differences had stifled dialogue for years. Deeply held assumptions about the lack of danger associated with foam strikes had minimized thoughtful debate and critical technical analysis of the issue for a long period of time. Because the shuttle kept returning safely despite debris hits, managers and engineers developed confidence that foam strikes did not represent a safety of flight risk. Ham's behavior did not simply represent an isolated case of bad judgment; it reflected a deeply held mental model that had developed gradually over two decades as well as a set of behavioral norms that had long governed how managers and engineers interacted at NASA.

In fact, the problems that plagued the communications leading up to the Columbia disaster stretched back to the Challenger catastrophe 17 years earlier. Commenting on the parallels to Challenger, former astronaut Sally Ride, a member of the commissions that investigated each shuttle catastrophe, remarked that, "I think I'm hearing an echo here."13 By that, she meant that, although the technical causes may have been different, the organizational causes seemed remarkably similar. NASA had not solved the systemic problems that had inhibited candid dialogue and debate about technical concerns 17 years earlier. Diane Vaughan, a sociologist who has studied both shuttle disasters, explains why NASA did not have a constructive internal debate about the dangers that ultimately led to each catastrophe:

At a meeting that I attended at NASA, somebody pointed out that both Rodney Rocha and Roger Boisjoly (the engineer who had concerns about the O-Rings prior to the Challenger disaster) were people who were defined as worriers in the organization. The boy who called wolf. So that they didn't have a lot of credibility. And the person's thinking was, 'Isn't it possible that we can just change personnel?' The thought was that this was a personality problem. This was no personality problem. This was a structural and a cultural problem. And if you just change the cast of characters, the next person who comes in is going to be met with the same structure, the same culture, and they're going to be impelled to act in the same way.14

Vaughan may take this argument a bit too far here. One might conclude from these comments (erroneously, in my view) that she believes that the problem lies solely in structure and culture, and that she does not acknowledge the leadership deficiencies of any shuttle program managers. Regardless of how we interpret her statement, an important point remains: To adhere to the systemic view does not necessarily mean that one must absolve individuals of all personal responsibility for discouraging open dialogue and debate.15

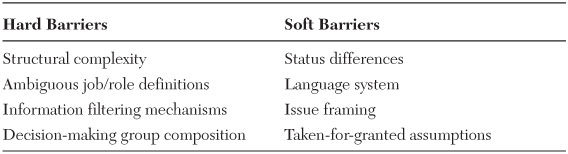

Hard Versus Soft Barriers

When people feel uncomfortable speaking up, we typically can trace the causes of the problem to a combination of "hard" and "soft" barriers to candid communication (see Table 3-3). The hard barriers are structural in nature, whereas the soft barriers constitute cultural inhibitors to frank dialogue and debate.16 Common hard barriers include the complexity of the organizational structure, the clarity of job/role definitions, the presence of information filtering mechanisms, and the composition of decision-making bodies. Typical soft barriers include perceptions of status, the language system used to talk about problems and mistakes, the mental models and cognitive frames that become deeply embedded in the culture over time and shape the way people think about particular issues, and the often "taken-for-granted" assumptions about how people ought to communicate with one another. As you can imagine, the structural factors tend to represent managerial levers that can be more easily modified, whereas the soft barriers often remain more difficult and time-consuming to dismantle or change.

Table 3-3. Hard and Soft Barriers

Hard Barriers

Structural Complexity

Take a moment and try to sketch out an organization chart for your firm. How difficult is this exercise? How many dotted-line relationships exist? Do you have a matrix organization? Are some reporting relationships unclear? What types of ad-hoc or informal groups exist within the organization, and how do they fit into the hierarchy? For most of you in large organizations, this exercise will prove rather frustrating. You will become confused at times as you try to draw the chart, or you will find yourself dismayed by the dizzying array of boxes, arrows, solid lines, and dotted lines on the page.

Structural complexity serves as a powerful inhibitor to candid communication and constructive debate. Simplified structures facilitate the efficient flow of information, enhance coordination across multiple units, and increase the likelihood that important messages will not be lost in a maze of dotted-line relationships, ad-hoc committees, and stodgy bureaucracies. Welch uses several evocative metaphors to describe how the many layers in the old hierarchy at GE inhibited constructive dialogue:

Sweaters are like [organizational] layers. They are insulators. When you go outside and you wear four sweaters, it's difficult to know how cold it is...Another effective analogy was comparing an organization to a house. Floors represent layers and the walls functional barriers. To get the best out of an organization, these floors and walls must be blown away, creating an open space where ideas flow freely, independent of rank or function.17

During his tenure at GE, Welch reduced the number of layers in the hierarchy, and he sought constantly to simplify the organization structure. In most cases, only 6 layers of management separated the CEO from the shop floor, as opposed to as many as 12 prior to Welch's tenure, and the typical manager had twice as many direct reports as in the 1970s. In making these changes, Welch sought to foster "simple, straightforward communication" on any topic by anyone throughout the organization.18

In the case of Columbia, NASA had a complex matrix organization structure. The shuttle program not only involved thousands of NASA employees, but also people who worked for private contractors that had longstanding relationships with the space agency. People working on the shuttle program also were not co-located; instead, they worked at field centers in Texas, Florida, Alabama, and elsewhere. Many interactions took place via conference calls and e-mails rather than through face-to-face communication. Finally, ad-hoc committees, such as the Debris Assessment Team, often formed to work on specific problems. However, it was not always clear how they fit within the formal hierarchy. Sheila Widnall, former secretary of the Air Force and a member of the Columbia Accident Investigation Board, remarked on the confusion regarding the Debris Assessment Team: "I thought their charter was very vague. It wasn't really clear to whom they reported. It wasn't even clear who the chairman was. And I think they probably were unsure as to how to make their requests to get additional data."19

Role Ambiguity

Speaking candidly and expressing dissenting views becomes extremely difficult if an individual does not have a clear understanding of his or her role and responsibilities within the organization. Take, for example, the case of the 1994 friendly fire incident in northern Iraq, in which two United States F-15 fighter jet pilots mistakenly shot down two U.S. Black Hawk helicopters traveling in the "no fly zone" that had been established to protect the Kurdish people from the Saddam Hussein regime.20 Captain Eric Wickson and Lieutenant Colonel Randy May flew the two F-15 jets involved in this incident. Colonel May served as Wickson's squadron commander. Therefore, in normal day-to-day interactions, May occupied the role of the superior, with Wickson as his subordinate. However, during this particular mission, the roles were temporarily reversed. Captain Wickson served as the flight lead, and Colonel May was his wingman. That arrangement put Wickson in charge while the two pilots were in the air.

How did role ambiguity inhibit candid dialogue and contribute to the tragedy that occurred on that day? Wickson made the initial identification of the aircraft, mistakenly concluding that they were Russian-made Iraqi Hind helicopters. According to protocol, May, as the wingman, should have confirmed that identification. In reality, he stopped short of doing so. When Wickson asked his wingman for confirmation, May responded by saying, "Tally two," meaning that he had indeed seen two helicopters. However, he did not say "Confirm Hinds," which would have indicated that he also believed that they were enemy aircraft. Confronted with an ambiguous response, Wickson took the absence of any clear objection to mean confirmation of his identification. The two men went on to shoot down the helicopters, with May never raising any questions or concerns. Why did May remain silent if he was unsure about the identification? Why did Wickson not request a clearer confirmation? The ambiguity of their reporting relationship appears to have suppressed candid dialogue at this critical moment. Scott Snook, who wrote a fascinating book about this incident, describes the dynamic:

In addition to subtly encouraging Tiger 01 [Wickson] to be more decisive than he otherwise might have been, the inversion [subordinate as flight lead] may also have encouraged him to be less risk averse, to take a great chance with his call, confident that if his call was indeed wrong, surely his more experienced flight commander would catch his mistake...Ironically, we find Tiger 02 [May] similarly seduced into a dangerous mindset...the expectations built into the situation by this unique dyadic relationship with his junior lead induced a surprisingly high degree of mindlessness and conformity...Apparently, there is such a strong norm in the Air Force that, without too much difficulty, we can imagine the wingman, even though he is senior in rank, easily slipping into the role of an obedient subordinate.21

If role ambiguity can take place in a hierarchical organization such as the military, with a clear chain of command, it can certainly happen in business organizations with less formal structures and reporting relationships. People may find themselves leading an ad-hoc team that includes people who have more senior positions in the formal organizational hierarchy. Alternatively, it may not even be clear who is in charge of certain informal committees or groups, or matrix organizational structures may cause some confusion regarding accountability and leadership responsibilities. Over the past year, I have taught the friendly fire case to many of the senior managers at a large global financial services firm. Each time I teach the case, I ask whether any of the managers have encountered the type of role ambiguity experienced by May and Wickson, and if so, whether it inhibited communication. Without hesitation, most of the managers confirm that they have found themselves in this predicament on more than one occasion.

Information-Filtering Mechanisms

In many organizations, structural mechanisms exist that constrain the flow of information. Perhaps most commonly, many business leaders choose to hire someone as a chief operating officer or "number two." That person often becomes the channel by which other managers convey information and ideas to the leader, as well as the mechanism by which the leader communicates many decisions to his subordinates.22

In some cases, leaders may not have a COO type, but they do assign someone, either formally or informally, as their "chief of staff." Much like the White House chief of staff, this person often keeps the leader's schedule, controls subordinates' access to the leader, facilitates meetings, and serves as an intermediary who gathers information from various executives and presents it to the leader in a concise manner.

In both cases, the structure may inhibit candid dialogue and the free expression of dissent. These intermediaries may constrict the flow of information to the leader, though they may not mean any harm. They strive simply to ensure the efficient use of the leader's time; however, their very presence often discourages subordinates from making the effort to express their concerns or dissenting views directly to the leader. They become a buffer between the leader and those doing the actual work, perhaps making the leader insensitive to or unaware of the concerns of those on the front lines.

In his study of the decision-making approaches employed by many twentieth-century American presidents, political scientist Alexander George found that the relatively formal use of a chief of staff as an information-filtering mechanism could make it difficult for some presidents to hear for themselves a wide-ranging set of opinions on key issues. George worried that a president could become "unaware or disinterested in the important preliminaries of information processing" as he increasingly used the chief of staff as a "buffer between himself and cabinet heads."23

Recognizing these risks, Secretary of Defense Donald Rumsfeld, in his infamous rules for serving in the White House, offers the following advice for someone playing this intermediary role:

A president needs multiple sources of information. Avoid excessively restricting the flow of paper, people, or ideas to the president, though you must watch his time. If you overcontrol, it will be your "regulator" that controls, not his. Only by opening the spigot fairly wide, risking that some of his time may be wasted, can his "regulator" take control.24

Unfortunately, it can be difficult to manage the tension between the need for efficiency and the need for a broad array of information sources. The very presence of a person or persons operating as a filtering mechanism often sends the wrong signals to the organization and discourages the type of open communication that a leader desires, particularly the upward communication of bad news.

Composition of Decision-Making Bodies

The structure and composition of decision-making groups certainly shape the level and nature of dissent and debate. As noted in the previous chapter, demographic homogeneity affects team dynamics, particularly the level of cognitive conflict. Bringing together people of similar gender and race, as well as similar functional and educational backgrounds, tends to reduce the cognitive diversity within a group, and therefore makes it less likely that divergent views will emerge. While demographic diversity brings challenges as well, it often helps to spark more divergent thinking and dissenting views.

However, similarity of group member tenure may have a slightly different, more complex effect on team dynamics. Consider, for instance, a team that has recently formed, consisting of people who have not interacted previously as a group. Presumably, in the absence of strong, effective leadership at the outset, they will exhibit low levels of interpersonal trust and psychological safety, much like the members of the Everest expeditions in 1996. Consequently, the new team may not engage in a high level of conflict and dissent, because people are not comfortable speaking up in front of relative strangers. As the team begins to feel more comfortable with one another, the level of candor and debate may rise considerably. Many observers have pointed out, for instance, that the Bay of Pigs fiasco occurred during the very early days of the Kennedy administration, when the president's foreign policy team had only recently been formed. By the time of the Cuban missile crisis, the same group of people had been working together for nearly two years, and the level of interpersonal trust had grown considerably. For that reason, it may have been easier for the group to engage in a candid and vigorous debate about how to handle the crisis.

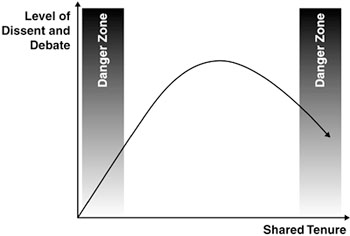

Long-term collective tenure, however, may become problematic. A group may find itself becoming too like-minded and perhaps a bit complacent. Members may begin to adopt such strongly held shared mental models that they do not engage in much divergent thinking. Assumptions about how the world works may become taken for granted and difficult to surface and challenge. Many observers have pointed out that the senior management teams at once-great companies that stumbled badly—such as IBM, Xerox, and Digital Equipment Corporation—included many executives who had worked together for a long time.25 Richard Foster, a senior partner at McKinsey, found that many of these firms that lost their once formidable competitive advantage actually experienced what he called "cultural lock-in," or an inability to adapt their mental models as the external environment changed dramatically.26 In sum, the relationship between the shared tenure of a group and the level of dissent and debate may be curvilinear (see Figure 3-1). Groups may encounter the most challenging communication hurdles either early in their tenure, or at the point where many members have been working together for a long period of time.27

Figure 3-1. The relationship between shared tenure and dissent in many firms

Soft Barriers

Status Differences

As we saw in the Everest case, status differences certainly can dampen people's willingness to share opinions and concerns freely. Status plays a major role in many types of organizations. For instance, in their studies of cardiac surgery teams, my colleagues Amy Edmondson, Richard Bohmer, and Gary Pisano found that the status difference between surgeons and nurses made it difficult for the latter group to voice its concerns or offer sound suggestions during complex medical procedures. Perhaps more importantly, they found that the more effective teams included surgeons who took great care to downplay the status differential and to welcome input from the nurses and other medical professionals on the cardiac surgery teams.28 In short, status differences do not doom organizations to experience serious problems of speaking up; effective leadership can dismantle this barrier to candid communication.

One must remember, however, that status need not correlate with position in the formal organizational hierarchy. A startling example of this phenomenon took place during the friendly fire incident in northern Iraq. On that tragic day, Air Force personnel working in an AWACS plane had responsibility for controlling the skies in the no fly zone. They were supposed to warn the fighter pilots about any enemy threats or friendly aircraft in that region. They did not do so. They remained silent as the fighter pilots shot down the two Black Hawk helicopters, not even uttering a word of caution or asking a quick question, despite the fact that they had been tracking the friendly aircraft in that region just a few minutes earlier.

When asked why they remained silent, Captain Jim Wang remarked that AWACS personnel were trained to "shut up and be quiet" during this type of mission.29 Of course, their training instructed them to do no such thing; it directed them to "warn and control" in these situations. Why then did Wang interpret his mandate in such a starkly different manner? Many observers have pointed to the status difference between AWACS personnel and fighter pilots. The latter commanded a great deal of respect and awe for their ability to take out the enemy while flying at remarkable speeds through perilous combat situations. Think Tom Cruise as "Maverick" in the classic movie Top Gun. In contrast, the AWACS officers spent their time watching radar screens and interpreting computer data. Think the administrative and support functions at a Wall Street investment bank, as opposed to the superstar traders, who rake in huge profits for the firm, wear the designer suits, and drive the super-luxury Italian sports cars.30

One of the senior officers on the AWACS plane described his reaction to the visual identification by the flight lead, Captain Wickson: "My initial reaction was—I said—Wow, this guy is good—he knows his aircraft."31 Amazingly, despite the fact that radar screens suggested the possibility of friendly aircraft in the area, the AWACS officers deferred to the fighter pilots who were trying to perform a very challenging visual identification while traveling at 500 miles per hour through a narrow valley with the helicopters between 500 and 1,000 feet away.

Interestingly, the senior AWACS officer involved in the incident held the same rank as the wingman, Lt. Colonel May, and a higher rank than the flight lead who was making the visual identification. In fact, the two other most senior AWACS officers also held ranks that were superior to that of the flight lead, Captain Wickson. Status, then, overwhelmed formal rank in the organizational hierarchy in this case. More senior officers remained silent, in part because they allowed status differences to shape their behavior.

Similar dynamics occur within many business organizations, often with dysfunctional results. For instance, at Enron in the late 1990s, three separate organizations (Wholesale Trading, Gas Pipeline, and International) competed with one another for resources and talent. The wholesale trading unit became the high-status organization within Enron, despite the fact that the old pipeline business continued to generate the strongest cash-flow margins. Nevertheless, trading represented the future, and with Jeffrey Skilling as its leader, it became the darling of senior management and Wall Street analysts. The wholesale trading business became the place to be, and the people who built that business became legends within the firm. One cannot help but ask whether the status differences that emerged within Enron inhibited candid dialogue about many of the business practices being employed in the late 1990s.32

Language System

Organizations develop their own language systems over time, complete with a whole host of unique terms and acronyms. Language systems, particularly as they relate to the characterization and discussion of problems and concerns, can become a powerful barrier to candid discussion and critical questioning of existing views and practices.

At NASA, for instance, a language system gradually emerged for labeling and categorizing problems associated with the space shuttle. Rather than sticking to the very formal system of declaring an unexpected issue an "anomaly," they began to distinguish between "in-family" and "out-of-family" events. In-family described those problems that they had seen before, and out-of-family characterized the incidents that did not fit within their experience base.

Unfortunately, as time passed, managers and engineers began to treat "out-of-family" events as if they were "in-family." Sheila Widnall found this slippery slide rather disturbing, and she offered a word of caution about the language system that had developed at NASA. She felt that the term family sounded "very comfy and cozy" and made it easier for NASA officials to begin believing that "everything will be okay" even though the issue was quite serious.33

The United States Forest Service (USFS) experienced a similar language problem during the tragic Storm King Mountain fire of 1994, in which 14 wildland firefighters perished in Colorado. In that incident, investigators concluded that the firefighters had not adhered to standard procedures. However, the language system employed by the USFS suggests room for a slippery slide similar to the one experienced at NASA. In 1957, the USFS developed a list of "Standard Orders" for wildland firefighters. Shortly thereafter, it constructed a list of "18 Situations That Shout Watch Out." Note the interesting difference in language. The latter term does not necessarily imply a hard-and-fast rule that must always be obeyed. Instead, it conveys the notion of a cautionary guideline rather than a strict procedure that must always be followed.34

Of course, we also wrote in an earlier chapter about the language system at Children's Hospital in Minnesota. In that case, the terminology often used at the hospital when discussing medical accidents conveyed a culture of "accusing, blaming, and criticizing" individuals. When Morath took charge at Children's, she created a new set of terms for discussing accidents, with the words chosen carefully so as to stress a systemic view of the causes of medical accidents as well as an emphasis on learning from mistakes. The language shift helped to raise people's willingness to discuss medical errors, and in fact, during Morath's first year at the hospital, official reports of medical accidents rose considerably. The evidence clearly indicated that people were not making more errors, but instead, they had become more comfortable talking about problems in an open, frank manner.35

Issue Framing

Chapter 2, "Deciding How to Decide," discussed how leaders frame issues when they take an initial position on a problem, and how these frames may constrain the range of alternative solutions discussed by the organization. However, issue framing occurs at a much broader level as well—not only at the decision level but at the level of a multiyear project or initiative. When broad corporate programs and initiatives begin, leaders often strive to provide ways for people to think about the events that will follow. In short, they provide a frame—a lens by which people can interpret upcoming actions. These broad frames can have much more wide-ranging and long-lasting effects than the frames that may be created by taking a position on a specific problem at a point in time.36

At NASA, when the shuttle program began, the agency justified the huge investment by arguing that the vehicle would eventually pay for itself by carrying commercial and defense payloads into space on a regular basis. When he announced the start-up of the program, President Nixon stated that the shuttle would "revolutionize transportation into near space, by routinizing it."37 Note here the particular use of language and how it helped to establish a very specific and powerful frame for the program. The space vehicle was a "shuttle" that would embark on "routine" travel beyond the earth's atmosphere.

With that, NASA had framed the shuttle as an operational program rather than as a research and development initiative.38 Diane Vaughan explains:

The program was framed within the concept of routine space flight. The shuttle was supposed to operate like a bus: transporting things, objects, people back and forth into space on a regular basis. And in that sense, that whole definition that this program was going to be an operational system was the beginning of the downfall, because they were really operating an experimental technology, but there was pressure to make it look routine to attract customers for payloads.39

As a result of this framing of the shuttle as a routine operational program, people began to behave differently when problems surfaced. Schedule pressures rose considerably, as did the burden of proof required for an engineer who had a concern or a dissenting view about a safety issue that might require a delay in the schedule. Roger Tetrault, the former CEO of McDermott International and a member of the Columbia Accident Investigation Board, remarked that people began to "underemphasize the risks in order to get funding...but nobody in the aircraft industry who builds a new plane who would say after 100 flights or even 50 flights that that plane was operational. Yet, the shuttle was declared an operational aircraft, if you will, after substantially less than 100 flights."40 In sum, the initial framing of the program established an atmosphere in which engineers found it increasingly difficult to speak up or express dissenting views when they had safety concerns.

The most effective leaders take great care, when they launch new initiatives, to anticipate the unintended consequences of a particular frame. For instance, in early 2002, when Craig Coy became CEO of Massport—the agency responsible for operating aviation facilities and shipping ports in the Boston area—he created three business units, and he directed three executives to manage these units as profit centers. In the past, these operating unit managers had some control over expense budgets, but they had no authority over the revenue-generation activities. Now, each business unit leader became accountable for the revenues, costs, and cash flows generated by his or her area.

This change represented a major shock to the culture at the agency. Of course, Coy recognized up front that this new way of looking at the business might encourage managers to compromise on security issues to bolster profits and cash flow. Such actions would be highly damaging for the institution in the wake of the September 11 attacks, particularly due to the role that Boston's Logan Airport played in that tragedy. Therefore, he took quick action to ensure that this would not take place, beginning with a very large commitment of capital to make Logan Airport the first commercial aviation facility in the nation to electronically screen all checked baggage. Coy made the commitment without resorting to the usual return-on-investment analysis that he had begun advocating for nonsecurity projects. This early move, although substantively important, also served as an important signal and symbol to the operating unit managers that they should not allow the "profit center" mentality to cloud their judgments about security priorities.41

Taken-for-Granted Assumptions

The final, commonly experienced soft barrier involves assumptions about how people should interact with others in the organization, particularly those at different vertical levels or in different horizontal units. Every organizational culture develops these presumptions over time; they become the consensus view of "the way that we work around here." Gradually, these assumptions become taken for granted by most members of the firm. As Edgar Schein notes, many of these cultural norms begin to take root when the founder establishes the organization, and they get propagated through the continuous retelling of stories and myths about the early days of the firm. As new members enter the organization, they gradually become indoctrinated into these informal, yet widespread and commonly understood "ways of working." Naturally, some of these cultural norms change over time, particularly as firms become larger and more bureaucratic.42

At NASA, the evidence from the investigation suggests that adherence to procedure and rules of protocol had become a strong cultural norm by the 1990s. People did not typically communicate with people that were more than one level up in the organization. Deference to seniority and experience also became accepted practice. As one former engineer explained to me, "Everything at NASA reverts back to the most senior person at the table...if they don't buy in, then your idea is just that—an idea."43 Each of these norms tended to stifle open communication at the space agency.

At a large specialty retailer that I studied, senior managers took for granted that contentious debates should be resolved during off-line conversations rather than large meetings of the entire leadership team. This had become routine practice at the firm, and as new members joined the executive team, they soon learned how to act within this set of cultural norms and boundaries. Each person that I interviewed offered a response similar to this one: "The meeting is not a forum where we engage in debate. If there is disagreement, then we quickly tend to agree to take it offline."44

Unfortunately, although many of the new members learned to "play the game," they did not find this practice to be productive. They became increasingly frustrated at the lack of open debate during staff meetings. One senior executive mentioned that he had grown accustomed to the norms at his prior employer, where "real calls were made in the room...there was healthy give-and-take." In this case, people often did not have an opportunity to rebut the ideas and proposals offered by colleagues, because those arguments were put forth in private, offline meetings with the president as opposed to wider group forums.

Of course, not all taken-for-granted assumptions about interpersonal behavior and collective decision-making reflect dysfunctional behavior, but they can develop into a problem because the behaviors do become so deeply embedded in the organizational culture over time. Moreover, because they are often taken for granted, people do not regularly question why they are behaving in this manner. They simply find themselves conforming to time-honored practice at the institution.

Leadership Matters

Systemic factors, both structural and cultural, clearly inhibit candid dialogue and debate within organizations. This chapter has offered a glimpse of some of the most common "hard" and "soft" barriers that arise within organizations. Many more surely exist. I have argued that these systemic factors often shape people's behavior within firms, both the actions of those who may appear to be suppressing dissent and the behavior of those who are failing to speak up. For instance, military culture and history shaped how the AWACS officers interacted with the F-15 pilots during the tragedy in northern Iraq in 1994. We cannot understand the AWACS officers' behavior by viewing it in isolation. We must examine the system in which these individuals worked and made decisions on a daily basis. Similarly, we cannot understand the past behavior of nurses at Children's Hospital in Minnesota, and particularly their reluctance to speak up about accidents or near-miss situations, without recognizing the cultural norms and status relationships prevalent not just within that hospital, but also within the broader medical profession at that time.

Having said that, one cannot discount the critical role that a particular leader's style and personality can play in encouraging or discouraging candid dialogue within an organization. Leadership does matter. Make no mistake about that. When Rob Hall tells his team that he will not tolerate dissent, he cannot send any more powerful message to his team. Systemic factors do not appear to be shaping his behavior; rather, it seems to reflect his own preferences for how to lead an expedition. Similarly, at the specialty retailer, the president chose to rely heavily on offline conversations largely due to his own nonconfrontational style and aversion to conflict in large group settings.

Even when systemic factors play a more substantial role, such as in the case of the Columbia disaster, one should not absolve the individuals of all personal accountability. Structural and cultural factors certainly shaped the way that shuttle program managers led the decision-making processes that took place during the mission. However, one can easily imagine how relatively minor changes in the way that leaders gathered information, asked questions, and conducted meetings could have made a significant difference in the level of open dialogue and debate about the foam strikes.

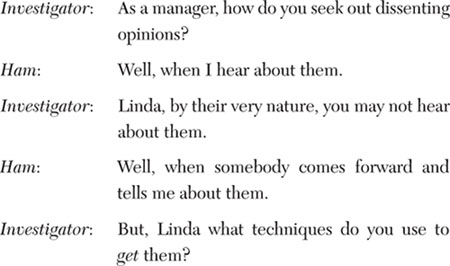

Perhaps most importantly, leaders cannot wait for dissent to come to them; they must actively seek it out in their organizations. If leaders offer personal invitations to others, requesting their opinions, ideas, and alternative viewpoints, they will find people becoming much more willing to speak freely and openly. The mere existence of passive leadership constitutes a substantial barrier to candid dialogue and debate within organizations. As the Columbia Accident Investigation Board concluded, "Managers' claims that they didn't hear engineers' concerns were due in part to their not asking or listening."45 Journalist William Langewiesche, in an article about the Columbia accident published in Atlantic Monthly, reported an exchange between one investigator and Linda Ham. The content of that conversation captures the very essence of what I mean by passive leadership as the ultimate barrier to candid dialogue and debate.

Apparently, Ham did not have an answer to this final question.46