2. Value-Up Processes

Program Manager

Project Manager

“One methodology cannot possibly be the ‘right’ one, but... there is an appropriate, different way of working for each project and project team.”1

—Alistair Cockburn

Figure 2.1 The rhythm of a crew rowing in unison is a perfect example of flow in both the human and management senses. Individuals experience the elation of performing optimally, and the coordinated teamwork enables the system as a whole (here, the boat) to achieve its optimum performance.

In the last chapter, I argued the importance of the value-up paradigm. In this chapter, I cover the next level of detail—the characteristics of such processes, the “situationally specific” contexts to consider, and the examples that you can apply.

Microsoft Solutions Framework

Dozens of documented software processes exist.2 Over the last thirty years, most of these have come from the work-down paradigm, and they have spawned extensive amounts of documentation to cover all possible situations and contingencies.3 Managers have wanted to play it safe without understanding the corresponding drain on productivity. Unfortunately, the idea has backfired. When teams don’t know what they can safely do without, they include everything in their planning and execution process. Barry Boehm and Richard Turner have described the problem well:

Build Your Method Up, Don’t Tailor It Down

Plan-driven methods have had a tradition of developing all-inclusive methods that can be tailored down to fit a particular situation. Experts can do this, but nonexperts tend to play it safe and use the whole thing, often at considerable unnecessary expense. Agilists offer a better approach of starting with relatively minimal sets of practices and only adding extras where they can be clearly justified by cost-benefit.4

Similarly, most processes and tools have not allowed adequately for appropriate diversity among projects and have forced a “one size fits all” approach on their teams. In contrast, VSTS is a collaboration and development environment that allows a process per project. VSTS also assumes that a team will “stretch the process to fit”—that is, to take a small core of values and practices and to add more as necessary. This approach has been much more successful, as the previous quote notes.

Inside Team System are two fully enacted process instances, both based on a common core called Microsoft Solutions Framework (MSF):

• MSF for Agile Software Development. A lightweight process that adheres to the principles of the Agile Alliance.5 Choose the MSF Agile process for projects with short lifecycles and results-oriented teams who can work without lots of intermediate documentation. The MSF Agile process is a flexible guidance framework that helps create an adaptive system for software development. It anticipates the need to adapt to change, emphasizes the delivery of working software, and promotes customer validation as key success measures.

• MSF for CMMI Process Improvement. A process designed to facilitate CMMI Level 3 as defined by the Software Engineering Institute.6 This extends MSF Agile with more formal planning, more documentation and work products, more sign-off gates, and more time tracking. MSF for CMMI clearly maps its practices to the Practice Areas and Goals in order to assist organizations that are using the CMMI as the basis for their process improvement or that are seeking CMMI appraisal. However, unlike previous attempts at CMMI processes, MSF uses the value-up paradigm to enable a low-overhead, agile instantiation of the CMMI framework.7

Both instances of MSF are value-up processes. In both cases, MSF harvests proven practices from Microsoft, its customers, and industry expertise. The primary differences between the two are the level of approval formality, the level of accounting for effort spent, and the depth of metrics used. For example, MSF for CMMI Process Improvement considers the appraiser or auditor as an explicit role and provides activities and reports that the auditor can use to assess process compliance. In its agile sibling, compliance is not a consideration.

In both the agile and CMMI instances, MSF’s smooth integration in Team System supports rapid iterative development with continuous learning and refinement. The common work item database and metrics warehouse answer questions on project health in near real time, and the coupling of process guidance to tools makes it possible to see the right guidance in the context of the tools and act on it as you need it.

Iteration

MSF is an iterative and incremental process. For more than twenty years, the software engineering community has understood the need for iterative development. This is generally defined as the “software development technique in which requirements definition, design, implementation and testing occur in an overlapping, iterative (rather than sequential) manner, resulting in incremental completion of the overall software product.”8

Iterative development arose as an antidote to sequential “waterfall” development. Fred Brooks, whose The Mythical Man-Month is still among the world’s most widely admired software engineering books, sums up the waterfall experience as follows:

The basic fallacy of the waterfall model is that it assumes a project goes through the process once, that the architecture is excellent and easy to use, the implementation design is sound, and the realization is fixable as testing proceeds. Another way of saying it is that the waterfall model assumes the mistakes will all be in the realization, and thus that their repair can be smoothly interspersed with component and system testing.9

Why Iterate?

There are many highly compelling arguments for iterative development:

1. Risk management. The desired result is unknowable in advance. To manage risks, you must prove or disprove your requirements and design assumptions by incrementally implementing target system elements, starting with the highest-risk elements.

2. Economics. In an uncertain business climate, it is important to review priorities frequently and treat investments as though they were financial options. The more flexibility gained through early payoff and frequent checkpoints, the more valuable the options become.10

3. Focus. People can only retain so much in their brains. By batching work into small iterations, all the team players focus more closely on the work at hand—business analysts can do a better job with requirements, architects with design, developers with code, and so on.

4. Motivation. The most energizing phenomenon on a software team is seeing early releases perform (or be demo’d) in action. No amount of spec review can substitute for the value of working bits.

5. Control theory. Small iterations enable you to reduce the margin of error in your estimates and provide fast feedback about the accuracy of your project plans.

6. Stakeholder involvement. Stakeholders (customers, users, management) see results quickly and become more engaged in the project, offering more time, insight, and funding.

7. Continuous learning. The entire team learns from each iteration, improving the accuracy, quality, and suitability of the finished product.

A simple summary of all this wisdom is that “Incrementalism is a good idea for all projects. . . and a must when risks are high.”11

Nonetheless, iterative development struggles to be adopted in many IT organizations. In practice, iterative development requires that the team and its project managers have a total view of the work to be done and the ability to monitor and prioritize frequently at short iteration boundaries. This frequent updating requires a readily visible backlog, preferably with automated data collection, such as the VSTS work item database provides.

In the value-up view of iterative development, there are many cycles in which activities overlap in parallel. The primary planning cycle is the “iteration.” An iteration is the fixed number of weeks, sometimes called a “time box,” used to schedule a task set. Use iterations as the interval in which to schedule intended scenarios, measure the flow of value, assess the actual process, examine bottlenecks, and make adjustments (see Figure 2.2). In the chapters on development and testing, I’ll cover the finer-grained cycles of the check-in and daily build, which are often called “increments” and which naturally drive the iteration.12

Figure 2.2 Software projects proceed on many interlocking cycles, ranging from the code-edit-test-debug-check in cycle, measured in minutes, to the iteration of a few weeks, to a program that might span years. If the cycles connect, the process as a whole can be understood.
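The idea of a fixed-length time box can be made concrete with a small sketch. The Python fragment below is illustrative only: the helper name iteration_boundaries, the start date, and the four-week length are assumptions chosen for the example and are not part of MSF or VSTS.

```python
from datetime import date, timedelta


def iteration_boundaries(start, weeks_per_iteration, count):
    """Return (first_day, last_day) pairs for consecutive fixed-length iterations.

    Hypothetical helper for illustration. Every iteration is a time box of
    exactly `weeks_per_iteration` weeks, and the last day is inclusive.
    """
    length = timedelta(weeks=weeks_per_iteration)
    boundaries = []
    for i in range(count):
        first_day = start + i * length
        last_day = first_day + length - timedelta(days=1)
        boundaries.append((first_day, last_day))
    return boundaries


if __name__ == "__main__":
    # Three four-week iterations starting on an assumed project start date.
    for first_day, last_day in iteration_boundaries(date(2006, 1, 2), 4, 3):
        print(first_day.isoformat(), "to", last_day.isoformat())
```

Because the length never varies, each boundary is known in advance, which is what makes the iteration usable as a regular interval for measuring flow and adjusting the process.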

Length

In practice, the length of iterations varies from project to project, usually two to six weeks. Again, the iteration determines the size of the batch that you use for measuring a chunk of deliverable customer value, such as scenarios and quality of service (QoS) requirements. You need to keep the batch size as small as possible while meeting this objective, as David Anderson explains in Agile Management for Software Engineering:

Small batch sizes are essential for flow! Small batch sizes are also essential for quality. In software development human nature creates a tendency for engineers to be less exacting and pay less attention to detail when considering larger batches. For example, when code reviews are performed on small batches, the code review takes only a short time to prepare and a short time to conduct. Because there is only a small amount of code to review, the reviewers tend to pay it greater attention and spot a greater number of errors than they would on a larger batch of code. It is therefore better to spend 4 hours per week doing code reviews than 2 days at the end of each month or, even worse, one week at the end of each quarter. A large batch makes code reviewing a chore. Small batches keep it light. The result is higher quality. Higher quality leads directly to more production. . . . Reducing batch sizes improves ROI!13
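A quick calculation helps put the three review cadences in the quotation side by side. This is a rough sketch under stated assumptions: an eight-hour day, a five-day week, and thirteen weeks per quarter, none of which come from Anderson’s text.

```python
HOURS_PER_DAY = 8        # assumption: eight-hour working day
DAYS_PER_WEEK = 5        # assumption: five-day working week
WEEKS_PER_QUARTER = 13   # assumption: thirteen weeks in a quarter
MONTHS_PER_QUARTER = 3


def review_hours_per_quarter():
    """Total code review hours per quarter for the three cadences in the quotation."""
    return {
        "4 hours per week": 4 * WEEKS_PER_QUARTER,
        "2 days per month": 2 * HOURS_PER_DAY * MONTHS_PER_QUARTER,
        "1 week per quarter": DAYS_PER_WEEK * HOURS_PER_DAY,
    }


if __name__ == "__main__":
    for cadence, hours in review_hours_per_quarter().items():
        print(f"{cadence}: {hours} hours")
```

Under these assumptions the three cadences cost a broadly similar number of hours per quarter (52, 48, and 40), which suggests that the case for small batches rests on reviewer attention and defect detection rather than on any saving in review time.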

Different Horizons, Different Granularity

One of the benefits of using iterations is that they provide clear levels of granularity in planning. You can plan tasks to one-day detail for the current iteration while maintaining a rough candidate list of scenarios and QoS requirements for future iterations. In other words, you only try to go into detail for the current iteration. (By the way, the person who should plan and estimate the detailed tasks is the one who is going to do them.) Not only does this keep the team focused on a manageable set of work items, but it also gives the maximum benefit from the experience of actual completion of delivered value when planning the next set.

Apply common sense to the depth of planning and prioritization. Obviously, you want to mitigate major risks early, so you will prioritize architectural decisions into early iterations, even if they are incomplete from the standpoint of visible customer value.

Prioritization

There are two complementary ways to plan work: by priority and by stack rank. VSTS supports both. Ranking by priority involves rating the candidate scenarios and QoS into broad buckets, such as Must have (1), Should have (2), Could have (3), and Won’t have (4). This scheme is mnemonically referred to as MoSCoW.14

MoSCoW and similar priority schemes work well for large numbers of small candidates, such as bugs, or scenarios under consideration for future iterations, where it is not worth spending the time to examine each item individually.

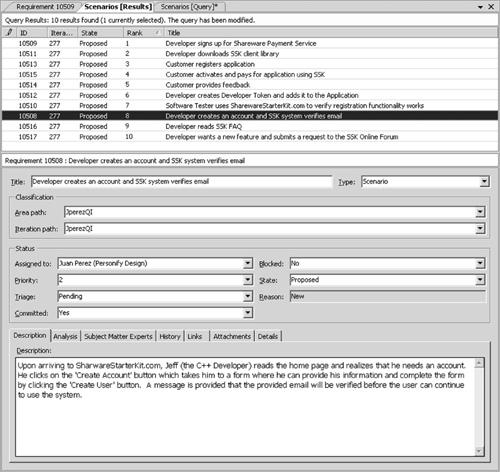

Stack ranking, on the other hand, is the practice of assigning ordinal ranks to a set of requirements to give them a unique order (see Figure 2.3). Stack ranking is well suited to planning scenarios and QoS for near-term iterations. Stack ranking mitigates two risks. One is that you simply end up with too many “Must haves.” The other is that your estimates of effort are wrong. If you tackle scenarios and QoS according to a linear rank order, then you know what’s next, regardless of whether your planned work fits your available time. In fact, it helps you improve your estimates. DeMarco and Lister describe this effect well:

Figure 2.3 In MSF, scenarios are stack ranked, as shown on this work item form. Here the scenarios are shown in the Team Explorer of VSTS.

Rank-ordering for all functions and features is the cure for two ugly project maladies: The first is the assumption that all parts of the product are equally important. This fiction is preserved on many projects because it assures that no one has to confront the stakeholders who have added their favorite bells and whistles as a price for their cooperation. The same fiction facilitates the second malady, piling on, in which features are added with the intention of overloading the project and making it fail, a favorite tactic of those who oppose the project in the first place but find it convenient to present themselves as enthusiastic project champions rather than as project adversaries.15
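The mechanics of planning from a stack-ranked list can be sketched in a few lines. The following Python fragment is a minimal illustration, not the VSTS work item schema: the Scenario class, its fields, the plan_iteration function, and the sample backlog are all hypothetical names and data invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    title: str
    rank: int             # unique stack rank; 1 is tackled first
    estimate_days: float  # rough effort estimate


def plan_iteration(scenarios, capacity_days):
    """Split a backlog into (planned, deferred) by walking it in rank order.

    Planning stops at the first scenario that does not fit the remaining
    capacity, so the rank order is never bypassed.
    """
    if len({s.rank for s in scenarios}) != len(scenarios):
        raise ValueError("stack ranks must be unique")
    ordered = sorted(scenarios, key=lambda s: s.rank)
    planned, used = [], 0.0
    for index, scenario in enumerate(ordered):
        if used + scenario.estimate_days > capacity_days:
            return planned, ordered[index:]
        planned.append(scenario)
        used += scenario.estimate_days
    return planned, []


if __name__ == "__main__":
    backlog = [
        Scenario("Checkout", rank=2, estimate_days=8),
        Scenario("Browse catalog", rank=1, estimate_days=5),
        Scenario("Wish list", rank=3, estimate_days=6),
    ]
    planned, deferred = plan_iteration(backlog, capacity_days=15)
    print("planned:", [s.title for s in planned])
    print("deferred:", [s.title for s in deferred])
```

Stopping at the first item that does not fit, rather than skipping ahead to a smaller one, is a deliberate choice in this sketch: it keeps “what’s next” unambiguous even when the estimates turn out to be wrong.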

Adapting Process

Iterations also give you a boundary at which to make process changes. You can tune based on experience, and you can adjust for context. For example, you might increase the check-in requirements for code review as your project approaches production and use VSTS check-in policies and check-in notes to enforce these requirements. Alistair Cockburn has been one of the clearest proponents of adapting process:

There is one more device to mention in the design of methodologies. That is to adjust the methodology on the fly. Once we understand that every project deserves its own methodology, then it becomes clear that whatever we suggest to start with is the optimal methodology for some other project. This is where incremental delivery plays a large role.

If we interview the project team between, and in the middle of, increments, we can take their most recent experiences into account. In the first increment, the mid-increment tune-up addresses the question, “Can we deliver?” Every other interview addresses, “Can we deliver more easily or better?”16

The process review at the end of an iteration is called a retrospective, a term with better implications than the alternative post-mortem.

Risk Management

The risks projects face differ considerably. Traditional risk management tends to be event-driven, in the form of “What if X were to happen?” MSF complements event-driven risk management with a constituency-based approach, in which there are seven points of view that need to be represented in a project to prevent blind spots.

In some cases, for example, technology is new and needs to be proven before being adopted. Sometimes extreme QoS issues must be addressed, such as huge scalability or throughput. At other times, there are significant issues due to the assembly of a new team with unknown skills and communication styles. VSTS, in turn, tracks risks directly in the work item database so that the project team can take them on directly.

Looking at risk from the standpoint of constituencies changes the approach to mitigation in a very healthy way. For example, all projects face a risk of poorly understood user requirements. Paying attention to user experience and QoS as central concerns encourages ongoing usability research, storyboarding, prototyping, and usability labs. The event-driven version of this approach might be construed as a risk that the customer will refuse acceptance and therefore that requirements need to be overly documented and signed off to enforce a contract. One approach embraces necessary change, whereas the other tries to prevent it. The constituency-based approach follows the value-up paradigm, whereas the event-driven one, when used in isolation, follows the work-down paradigm.

Fit the Process to the Project

The Agile Project Management Declaration of Interdependence speaks of “situationally specific strategies” as a recognition that the process needs to fit the project. VSTS uses the mechanism of process templates to implement this principle. But what contextual differences should you consider in determining what is right for your project?

Some of the considerations are external business factors; others have to do with issues internal to your organization. Even if only certain stakeholders will choose the process, every team member should understand the rationale of the choice and the value of any practice that the process prescribes. If the value can’t be identified, it is very unlikely that it can be realized. Sometimes the purpose may not be intuitive, as with certain legal requirements, but once it is understood, it can still be achieved.

The primary dimensions in which contexts differ are as follows:

• Adaptive Versus Plan-Driven

• Tacit Knowledge Versus Required Documentation

• Implicit Versus Explicit Sign-Off Gates and Governance Model

• Level of Auditability and Regulatory Concerns

• Prescribed Organization Versus Self-Organization

• One Project at a Time Versus Many Projects at Once

• Geographic Distribution

• Contractual Obligations

The next sections describe each of these considerations.

Adaptive Versus Plan-Driven

To what extent does your project need to pin down and design all of its functionality in advance, or conversely, to what extent can the functionality and design evolve over the course of the project? There are advantages and disadvantages to both approaches. The primary benefit of a plan-driven approach is stability. If you are assembling a system that has many parts that are being discretely delivered, obviously interfaces need to be very stable to allow integrations from the contributing teams. (Consider a car, a web portal hosting products from many sellers, or a payment clearing system.) Of course, the main drawback to a plan-driven approach is that you often have to make the most important decisions when you know the least.

On the other hand, if your project needs to be highly responsive to competitive business pressure, usability, or technical learning achieved during the project, you can’t pin the design down tightly in advance. (Consider consumer devices and commercial web sites.) The main risk with the adaptive approach is that you discover design issues that force significant rework or change of project scope.

Tacit Knowledge Versus Required Documentation

A huge difference in practice is the amount of documentation used in different processes. For this process discussion, I intend “writing documentation” to mean preparing any artifacts—specifications, visual models, meeting minutes, presentations, and so on—not directly related to the executable code, data, and tests of the software being built. I am not including the help files, manuals, training, and other materials that are clearly part of the product value being delivered to the end user or customer. Nor am I discounting the importance of the educational materials.

Any documentation that you write should have a clear purpose. Usually the purpose concerns contract, consensus, architecture, approval, audit, delegation, or work tracking. Sometimes the documentation is not the most effective way to achieve the intended goal. For example, when you have a small, cohesive, collocated team with strong domain knowledge, it may be easy to reach and maintain consensus. You may need little more than a vision statement, a scenario list, and frequent face-to-face communication with the customer and with each other. (This is part of the eXtreme Programming model.)

On the other hand, when part of your team is twelve time zones away, domain knowledge varies, and many stakeholders need to review designs, much more explicit documentation is needed. (And of geographic necessity, many discussions must be electronic rather than face-to-face.)

The key point here is to write the documentation for its audience and to its purpose and then to stop writing. After the documentation serves its purpose, more effort on it is waste. Instead, channel the time and resources into work products that bring value to the customer directly. If you later discover that your documentation is inadequate for its purpose, update it then. In other words, “fix it when it hurts.”17

Implicit Versus Explicit Sign-Off Gates and Governance Model

Appropriately, business stakeholders usually require points of sign-off. Typically, these gates release funding and are, at least implicitly, points at which a project can be canceled. When development is performed by outside companies or consultants, contractual formality usually prescribes these checkpoints.

Often, setting expectations and communicating with the stakeholders is a major part of the project manager’s responsibility. For correct communication, perhaps the most important issue is whether the sign-offs are based on time (how far did we get in the allotted time?) or functionality (how long did it take us to implement the functionality for this milestone?).

This alignment of IT with the business stakeholders is the domain of IT governance. In MSF, unlike other processes, the governance model is separated from the operational process. Governance concerns the alignment of the project with its customers, whereas the operational activities are managed in much finer-grained iterations (see Figure 2.4). Note that the alignment checkpoints do not preclude any work from being started earlier, but they do enable the delivery team and its customer to pass an agreement milestone without a need to revisit the earlier track.

Figure 2.4 The governance model in MSF for CMMI Process Improvement calls for explicit business alignment checkpoints at the end of each track, whereas MSF for Agile Software Development assumes a more continuous and less formal engagement.

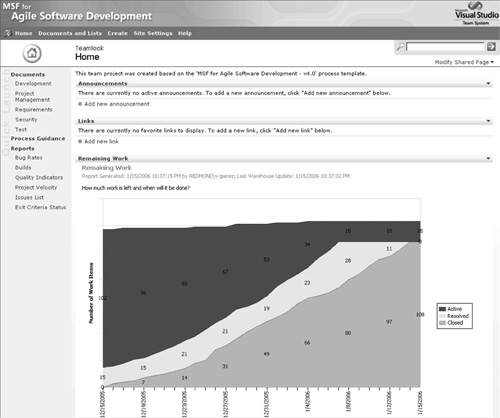

VSTS provides a web-hosted project portal, based on Windows SharePoint Services, to make the project more transparent to all the stakeholders outside the immediate project team (see Figure 2.5). Of course, access to the portal can be controlled by permission and policy.

Figure 2.5 The project portal provides a daily view of the project status so that checkpoints can act to confirm decisions rather than to compile newly available information.

Auditability and Regulatory Concerns

Related to sign-off gates is the need to satisfy actual or potential auditors. In many industries, the risk of litigation (over product safety, customer privacy, business practices, and so on) drives the creation of certain documentation to prove the suitability of the development process. At the top of the list for all publicly traded U.S. companies is Sarbanes-Oxley (SOX) compliance, which requires executive oversight of corporate finance and, accordingly, of all IT development projects that concern money or resources.

Sometimes these auditors are the well-known industry regulators, such as the FDA (pharmaceuticals and medical equipment), SEC (securities), FAA (avionics), or their counterparts in the European Union and other countries. Usually, these regulators have very specific rules of compliance.

Prescribed Organization Versus Self-Organization

High-performance teams tend to be self-directed teams of peers that take advantage of the diverse personal strengths of their members. Such teams strive to organize themselves with flexible roles and enable individuals to pick their own tasks. They follow the principle that “responsibility cannot be assigned; it can only be accepted.”18

In other organizations, this ideal is less practical. People have to assume roles that are assigned. Often a good coach may have a better idea than the players of their capabilities and developmental opportunities. Central assignment may also be necessary to take advantage of specialist skills or to balance resources. This constraint becomes another factor in determining the right process.

In either case, it is important to recognize the distinction between roles on a project and job descriptions. MSF makes a point of describing roles, which consist of related activities that require similar skills, and of emphasizing that one person can fill multiple roles. Most career ladders have conditioned people to think in terms of job descriptions. This is an unpleasant dichotomy. When staffing your project, think about the skills your team offers and then make sure that the roles can be covered, but don’t be slavish about partitioning roles according to job titles.

One Project at a Time Versus Many Projects at Once

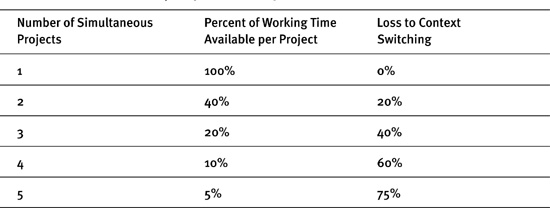

One of the most valuable planning actions is to ensure that your team members can focus on the project at hand without other commitments that drain their time and attention. Twenty years ago, Gerald Weinberg proposed a rule of thumb to compute the waste caused by project switching, shown in Table 2.1.19

Table 2.1. Waste Caused by Project Switching

In situations of context switching, you need to perform much more careful tracking, including effort planned and spent, so that you can correlate results by project.

A related issue is the extent to which team members need to track their hours on a project and use those numbers for estimation and actual work completion. If your team members are dedicated to your project, then you ought to be able to use calendar time as effectively as hours of effort to estimate and track task completion. However, if their time is split between multiple projects, then you need to account for multitasking.

This issue adds a subtle complexity to many metrics concerning estimation and completion of effort. Not only are they harder to track and report, but also the question of estimating and tracking overhead for task switching becomes an issue.
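To see how switching overhead might enter an estimate, consider the following sketch. The values of Table 2.1 are not reproduced in this text, so the loss figures below are an assumption: they are the percentages commonly quoted for Weinberg’s rule of thumb and should be checked against the cited source. The function name and the 40-hour week are likewise hypothetical.

```python
# Assumption: commonly quoted figures for Weinberg's rule of thumb; verify
# against the cited source before relying on them.
# Key: number of simultaneous projects.
# Value: fraction of working time lost to context switching.
SWITCHING_LOSS = {1: 0.00, 2: 0.20, 3: 0.40, 4: 0.60, 5: 0.75}


def effective_hours_per_project(projects, hours_per_week=40.0):
    """Hours per week that actually reach each project after switching loss."""
    if projects not in SWITCHING_LOSS:
        raise ValueError("rule of thumb covers 1 to 5 simultaneous projects")
    productive = hours_per_week * (1.0 - SWITCHING_LOSS[projects])
    return productive / projects


if __name__ == "__main__":
    for n in sorted(SWITCHING_LOSS):
        print(f"{n} project(s): {effective_hours_per_project(n):.1f} hours each")
```

If these figures hold, a person split across two projects contributes roughly 16 hours a week to each rather than 20, which is why calendar time stops being a reliable proxy for effort once time is divided.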

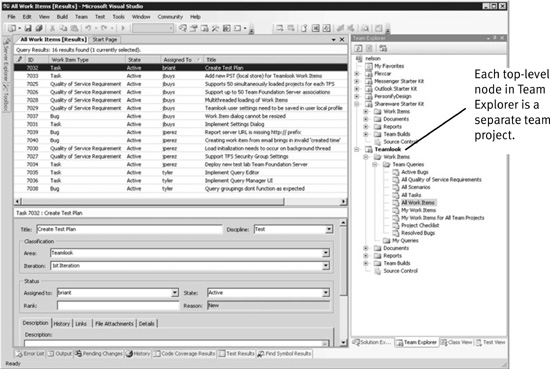

In many organizations, however, it is a fact of life that individuals have to work on multiple projects, and VSTS makes this possible. VSTS keeps all the relevant projects on a Team Foundation Server and displays the active ones you have chosen on the Team Explorer (see Figure 2.6).

Figure 2.6 VSTS makes it possible to switch context between multiple projects (such as new development and maintenance) directly in the development environment. The bookkeeping of code versions, tests, documents, and work items is handled automatically. Although this approach does not remove the cognitive load, it does eliminate many errors that are caused by the mechanical complexity of handling multiple projects.

Geographic and Organizational Boundaries

Teams are often distributed geographically, sometimes around the world. The VSTS team, for example, was distributed in Redmond, Raleigh, Copenhagen, Hyderabad, and Beijing. When you have to work across locations and time zones, you typically need to rely on explicit documentation over tacit knowledge and must be more of a prescribed organization than a self-organizing team. It is important to have clear technical boundaries and interfaces between the local teams and leave decisions within the boundaries of the teams.

A very large amount of IT work is outsourced, that is, contracted with a separate company. The outsourcer might be local or on the other side of the planet. These cases obviously require business contracts, which tend to specify deliverables in high detail. Clearly, these cases also require explicit documentation and a more plan-driven than adaptive approach.

Summary

Chapter 1, “A Value-Up Paradigm,” argued the importance of the value-up paradigm. This chapter addressed the issues of choosing and applying a suitable process in the value-up context.

In VSTS, the two processes delivered by Microsoft are MSF for Agile Software Development and MSF for CMMI Process Improvement. Both are value-up processes, relying on iterative development, iterative prioritization, continuous improvement, constituency-based risk management, and situationally specific adaptation of the process to the project.

MSF for CMMI Process Improvement stretches the practices of its more agile cousin to fit contexts that require a more explicit governance model, documentation, prescribed organization, and auditability. It supports appraisal to CMMI Level 3, but more importantly, it provides a low-overhead, agile model of CMMI.

Endnotes

1. Alistair Cockburn coined the phrase “Stretch to Fit” in his Crystal family of methodologies and largely pioneered this discussion of context with his paper “A Methodology Per Project,” available at http://alistair.cockburn.us/crystal/articles/mpp/methodologyperproject.html.

2. For a comparison of thirteen published processes, see Barry Boehm and Richard Turner, Balancing Agility with Discipline: A Guide for the Perplexed (Boston: Addison-Wesley, 2004), 168–194.

3. As Kent Beck pithily put it: “All methodologies are based on fear.” Kent Beck, Extreme Programming Explained: Embrace Change, First Edition (Boston: Addison-Wesley, 2000), 165.

4. Barry Boehm and Richard Turner, Balancing Agility with Discipline: A Guide for the Perplexed (Boston: Addison-Wesley, 2004), 152.

7. See David J. Anderson, “Stretching Agile to Fit CMMI Level 3,” presented at the Agile Conference, Denver 2005, available from http://www.agilemanagement.net/Articles/Papers/StretchingAgiletoFitCMMIL.html.

8. [IEEE Std 610.12-1990], 39.

9. Frederick P. Brooks, Jr., The Mythical Man-Month: Essays on Software Engineering, Twentieth Anniversary Edition (Reading, MA: Addison-Wesley, 1995), 266.

10. http://www.csc.ncsu.edu/faculty/xie/realoptionse.htm

11. Tom DeMarco and Timothy Lister, Waltzing with Bears: Managing Risk on Software Projects (New York: Dorset House, 2003), 137.

12. The convention of “iteration” for the planning cycle and “increment” for the coding/testing one is common. See, for example, Alistair Cockburn’s discussion at http://saloon.javaranch.com/cgi-bin/ubb/ultimatebb.cgi?ubb=get_topic&f=42&t=000161.

13. Anderson 2004, op. cit., 89.

14. MoSCoW scheduling is a technique of the DSDM methodology. For more on this, see http://www.dsdm.org.

15. DeMarco and Lister, op. cit., 130.

16. Cockburn, op. cit.

17. Scott W. Ambler and Ron Jeffries, Agile Modeling: Effective Practices for Extreme Programming and the Unified Process (New York: Wiley, 2002).

18. Kent Beck with Cynthia Andres, Extreme Programming Explained: Embrace Change, Second Edition (Boston: Addison-Wesley, 2005), 34.

19. Gerald M. Weinberg, Quality Software Management: Systems Thinking (New York: Dorset House, 1992), 284.