3. Requirements

Business Analyst

Product Manager

User Experience

“The single hardest part of building a software system is deciding precisely what to build.”1

—Frederick Brooks, The Mythical Man-Month

Figure 3.1 Edison patented the light bulb in 1880, but it is still being improved today.

United States Patent and Trademark Office

In the previous chapter, I discussed the choice of software process and the contextual issues that would favor one process over another. Regardless of your process choice, you need to start by envisioning your solution requirements. Usually, this responsibility is led by a business analyst or product manager, and in pure XP, it is done by an onsite customer. Obviously, you have to do much of the solution definition before you start your project. You won’t get funding, recruit team members, or identify the rest of the work until you know what you’re trying to do.

MSF defines requirements primarily as scenarios and qualities of service (QoS). VSTS uses specific work item types to capture and track these requirements so that they show up in the project backlog, ranked as an ordinary part of the work. You continue to refine your requirements throughout your project as you learn from each iteration. Because these requirements are work items, when you make changes to them, a full audit is captured automatically, and the changes flow directly into the metrics warehouse.
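To make the idea of an automatic audit trail concrete, here is a minimal Python sketch of a requirement stored as a work item whose every field change is recorded. The `WorkItem` class, its fields, and its `update` method are hypothetical illustrations, not the VSTS work item tracking API; in VSTS the history is captured automatically by the tool rather than by your own code.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class WorkItem:
    """Hypothetical stand-in for a requirement work item (not the VSTS API)."""
    item_id: int
    item_type: str      # e.g. "Scenario" or "Quality of Service Requirement"
    title: str
    rank: int           # position in the backlog; 1 = most important
    history: list = field(default_factory=list)

    def update(self, field_name: str, new_value, changed_by: str) -> None:
        """Change one field and append an audit record describing the change."""
        old_value = getattr(self, field_name)
        setattr(self, field_name, new_value)
        self.history.append({
            "field": field_name,
            "old": old_value,
            "new": new_value,
            "by": changed_by,
            "at": datetime.now(timezone.utc),
        })
```

For example, re-ranking a scenario with `item.update("rank", 1, "product manager")` leaves both the new rank and a record of who changed it, from what, and when, which is the kind of data a metrics warehouse can later aggregate.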

What’s Your Vision?

Every project should have a vision that every stakeholder can understand. Often the vision statement is the first step in getting a project funded. Even when it’s not, treating the vision as the motivation for funding is helpful in clarifying the core values of the project. Certainly it should capture why a customer or end user will want the solution that the project implements.

The shorter the vision statement is, the better it is. A vision statement can be one sentence long, or one paragraph, or longer only if needed. It should speak to the end user in the language of the user’s domain. A sign of a successful vision statement is that everyone on the project team can recite it from memory and connect their daily work to it.

Strategic Projects

Projects can be strategic or adaptive. Strategic projects represent significant investments, based on a plan to improve significantly over their predecessors. For example, when startup companies form around a new product idea, or when new business units are launched, the rationale is typically a bold strategic vision.

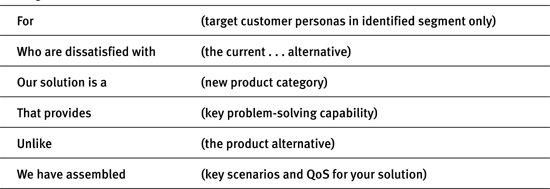

A useful format for thinking about the key values of such a strategic project, and hence the vision statement, is the “elevator pitch.”2 It’s called an elevator pitch because you can recite it to a prospective customer or investor in the brief duration of an elevator ride. It’s useful because it captures the vision crisply enough that the customer or investor can remember it from that short encounter. Geoffrey Moore invented a succinct form for the elevator pitch.
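One widely quoted version of Moore’s template reads: For (target customer) who (statement of need or opportunity), the (product name) is a (product category) that (key benefit). Unlike (primary competitive alternative), our product (statement of primary differentiation). The sketch below simply fills those slots so that each one must be stated explicitly; the class name, field names, and the sample product are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class ElevatorPitch:
    """Slots of one commonly quoted version of Geoffrey Moore's template."""
    target_customer: str
    need: str                     # statement of need or opportunity
    product_name: str
    product_category: str
    key_benefit: str              # compelling reason to buy
    competitive_alternative: str
    differentiation: str          # statement of primary differentiation

    def render(self) -> str:
        """Assemble the slots into the two-sentence pitch."""
        return (
            f"For {self.target_customer} who {self.need}, "
            f"the {self.product_name} is a {self.product_category} "
            f"that {self.key_benefit}. "
            f"Unlike {self.competitive_alternative}, "
            f"our product {self.differentiation}."
        )
```

An empty or vague slot is immediately visible when the pitch is rendered, which is much of the template’s value as a vision check.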

Adaptive Projects

On the other hand, most IT projects are more adaptive. They tackle incremental changes to existing systems. It is often easiest and most appropriate to describe the vision in terms of business process changes. In these cases, if a business process model or description is available, it is probably the best starting point. Use the as-is model as the current alternative and the proposed one as the solution.

When to Detail Requirements

“Analysis paralysis” is a common condemnation of stalled projects. This complaint reflects the experience that trying too hard to pin down project requirements early can prevent any forward movement. This can be a significant risk in envisioning. Two factors help mitigate this risk: the shelf life of requirements and their audience.

Requirements Are Perishable

One of the great insights of the value-up paradigm is that requirements have a limited shelf life, for four reasons:

• The business environment or problem space changes. Competitors, regulators, customers, users, technology, and management all have a way of altering the assumptions on which your requirements are based. If you let too much time lapse between the definition of requirements and their implementation, you risk discovering that you need to redefine the requirements to reflect a changed problem.

• The knowledge behind the requirements becomes stale. When an analyst or team is capturing requirements, knowledge of the requirements is at its peak. The process of communicating their meanings, exploring their implications, and understanding their gaps should happen then. Documentation might capture some of this information for intermediate purposes such as contractual signoff, but ultimately what matters is the implementation. The more closely you couple the implementation to the requirements definition, the less you allow that knowledge to decay.

• There is a psychological limit to the number of requirements that a team can meaningfully consider in detail at one time. The smaller you make the batch of requirements, the more attention you can give to quality design and implementation. Conversely, the larger you allow the requirements scope of an iteration to grow, the more you risk confusion and carelessness.

• The implementation of one iteration’s requirements influences the detailed design of the requirements of the next iteration. Design doesn’t happen in a vacuum. What you learn from one iteration enables you to complete the design for the next.

In the discussion about capturing requirements that follows, I risk encouraging you to do too much too early. I don’t mean to. Think coarse and understandable initially, sufficient to prioritize requirements into iterations. The detailed requirements and design can follow.

Who Cares About Requirements

For most projects, the requirements that matter are the ones that the team members and stakeholders understand. On a successful project, they all understand the same requirements; on an unsuccessful one, they have divergent expectations (see Figure 3.2).

Figure 3.2 The X-axis shows the degree of ambiguity (opposite of specificity) in the requirements documents; the Y-axis shows the resulting understandability.

Dean Leffingwell and Don Widrig, Managing Software Requirements (Boston, MA: Addison-Wesley, 2000), 273

This tension between specificity and understandability is an inherently hard issue. The most precise requirements are not necessarily the most intelligible, as illustrated in Figure 3.2. A mathematical formulation or an executable test might be much harder to understand than a more commonplace picture with a prose description.

It is important to think of requirements at multiple levels of granularity. For broad communication, prioritization, and costing to a rough order of magnitude, you need a coarse understanding that is easy to grasp. On the other hand, for implementation of a requirement within the current iteration, you need a much more detailed specification. Indeed, if you practice test-driven development (see Chapter 6, “Development”), the ultimate detailed requirement definition is the executable test.
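As a minimal sketch of a detailed requirement stated as executable tests, assume a hypothetical `place_order` function and a simple in-memory stock table. The two test functions, written in the plain-`assert` style that a runner such as pytest discovers, pin down what placing an order must do in the confirmed and rejected cases. None of these names comes from the book or from VSTS.

```python
def place_order(cart: dict[str, int], stock: dict[str, int]) -> str:
    """Hypothetical implementation under test: confirm the order only if stock covers it."""
    for sku, qty in cart.items():
        if qty <= 0 or stock.get(sku, 0) < qty:
            return "rejected"          # leave stock untouched on rejection
    for sku, qty in cart.items():
        stock[sku] -= qty              # reserve the ordered quantities
    return "confirmed"


def test_order_within_stock_is_confirmed_and_stock_decreases():
    stock = {"widget": 5}
    assert place_order({"widget": 2}, stock) == "confirmed"
    assert stock["widget"] == 3


def test_order_exceeding_stock_is_rejected_and_stock_unchanged():
    stock = {"widget": 1}
    assert place_order({"widget": 2}, stock) == "rejected"
    assert stock["widget"] == 1
```

The coarse statement “customers can place an order” is easy to discuss; the tests are harder to read but leave no room for divergent interpretations of what happens when stock runs out.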

Think about the audience for your requirements work. The audience will largely determine the breadth and precision you need to apply in specifying requirements. At one end of the scale, in eXtreme Programming, you may have a small, collocated team and an onsite customer. You note future requirements on a 3” × 5” card or as the title on a work item, and you move on until it is time to implement. When it is time to implement a requirement, you discuss it with the customer and write the test that validates it within the same session, just before implementation.3

At the other extreme, your requirements specification may be subject to regulatory approval, audit, or competitive bidding. At this end, you may want every detail spelled out so that every subsequent change can be individually costed and submitted for approval.4

MSF recommends that you plan and detail requirements for one iteration at a time. Effectively, the iteration becomes a batch of requirements that progress through realization. For most projects, this strikes a balance that maximizes communication, keeps requirements fresh, avoids wasted work, and keeps the focus on the delivery of customer value.
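Treating each iteration as a batch can be sketched as filling the iteration from the top of a ranked backlog. This assumes that each requirement carries a rank and a rough size estimate and that the team knows its capacity per iteration; the names and the unit (ideal days) are hypothetical, and in practice the selection is negotiated with stakeholders rather than computed.

```python
from typing import NamedTuple


class Requirement(NamedTuple):
    title: str
    rank: int         # 1 = most important
    estimate: float   # rough size, e.g. in ideal days (an assumption)


def plan_iteration(backlog: list[Requirement], capacity: float) -> list[Requirement]:
    """Choose requirements in rank order without exceeding the iteration's capacity."""
    chosen: list[Requirement] = []
    remaining = capacity
    for req in sorted(backlog, key=lambda r: r.rank):
        if req.estimate <= remaining:
            chosen.append(req)
            remaining -= req.estimate
        # an item that does not fit stays in the backlog for a later iteration
    return chosen
```

Skipping an item that does not fit, rather than stopping at it, lets smaller lower-ranked items use the leftover capacity; stopping at the first misfit is an equally reasonable choice when strict rank order matters more.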

Personas and Scenarios

In a value-up paradigm, quality is measured as value to the customer. When you don’t have a live customer on site, or your customers can’t be represented by a single individual, personas and scenarios are the tools you use to understand and communicate that value.

Start with Personas

To get clear goals for a project, you need to know the intended users. Start with recognizable, realistic, appealing personas. By now, personas have become commonplace in usability engineering literature, but before the term personas became popular there, the technique had been identified as a means of product definition in market research. Moore described the technique well:

The place most . . . marketing segmentation gets into trouble is at the beginning, when they focus on a target market or target segment instead of on a target customer . . . We need something that feels a lot more like real people . . . Then, once we have their images in mind, we can let them guide us to developing a truly responsive approach to their needs.5

In turn, personas are the intended users who accomplish the scenarios. For projects whose identified users are paying customers, you can name the personas for representative actual users. For a broad-market product, your personas will probably be composite fictional characters who don’t betray any personal information. (Note that there are other stakeholders, such as the users’ boss, who may be funding the project but are at least one step removed from the personal experience of the users.) Alan Cooper has described the characteristics of good personas:

Personas are user models that are represented as specific, individual humans. They are not actual people, but are synthesized directly from observations of real people. One of the key elements that allow personas to be successful as user models is that they are personifications (Constantine and Lockwood, 2000). They are represented as specific individuals. This is appropriate and effective because of the unique aspects of personas as user models: They engage the empathy of the development team towards the human target of the design. Empathy is critical for the designers, who will be making their decisions for design frameworks and details based on both the cognitive and emotional dimensions of the persona, as typified by the persona’s goals.6

Good personas are memorable, three-dimensional, and sufficiently well described to be decisive when they need to be. If you were making a movie, personas would be the profiles you would give to the casting agent. They describe the categories of users not just in terms of job titles and functions but also in terms of personality, work or usage environment, education, computer experience, and any other characteristics that make the imagined person come to life. Assign each persona a name and attach a picture so that it is memorable. Some teams in Microsoft put posters on the wall and hand out fridge magnets to reinforce the presence of the personas.7

Personas can also be useful for describing adversaries. For example, if security and privacy are a concern, then you probably want to profile the hacker who tries to break into your system. The vandal who tries indiscriminately to bring down your web site is different from the thief who tries to steal your identity without detection. With disfavored personas, everyone can be made conscious of anti-scenarios—things that must be prevented.

Scenarios

In MSF, scenarios are the primary form of functional requirements. By “scenario,” MSF means the single interaction path that a user (or group of collaborating users, represented by personas) performs to achieve a known goal. The goal is the key here, and it needs to be stated in the user’s language, such as Place an order. As your solution definition evolves, you will evolve the scenario into a specific sequence of steps with sample data, but the goal of the scenario should not change.

There are many reasons to use scenarios to define your solution:

• Scenarios communicate tangible customer value in the language of the customer in a form that can be validated by customers. Particularly useful are questions in the form of “What if you could [accomplish goal] like [this scenario]?” You can use these questions in focus groups, customer meetings, and usability labs. As the solution evolves, scenarios naturally turn into prototypes and working functionality.

• Scenarios enable the team to plan and measure the flow of customer value. This enables the creation of a progressive measurement of readiness and gives the team a natural unit of scoping.

• Scenarios unite the narrow and extended teams. All stakeholders understand scenarios in the same way so that differences in assumptions can be exposed, biases overcome, and requirements discussions made tangible.

A key is to state goals in the language and context of the user. The goal—such as Place an order or Return goods—defines the scenario, even though the steps to accomplish it may change. Even though you may be much more familiar with the language of possible solutions than with the customers’ problems, and even though you may know one particular subset of users better than the others, you need to stick to the users’ language. Alan Cooper describes this well:

Goals are not the same as tasks. A goal is an end condition, whereas a task is an intermediate step that helps to reach a goal. Goals motivate people to perform tasks . . . Goals are driven by human motivations, which change very slowly, if at all, over time.8

Often, the goals are chosen to address pain points, that is, issues that the user faces with current alternatives, which the new solution is intended to solve. When this is true, you should capture the pain points with the goals. At other times, the goals are intended to capture exciters—be sure to tag these as well so that you can test how much delight the scenario actually brings.

Research Techniques

We find the personas by observing target users, preferably in their own environment. Several techniques can be applied—two widely used ones are focus groups and contextual inquiries. For all these techniques, it is important to select a representative sample of your users. A good rule of thumb is not to be swayed heavily by users who are too novice or too expert and instead to look for intermediates who represent the bulk of your target population for most of your solution’s life.9

Focus groups are essentially directed discussions with a group of carefully chosen interview subjects with a skilled facilitator. When conducted professionally, focus groups usually are closed sessions with observers sequestered behind a one-way mirror (or on the end of a video feed). Open focus groups work too, where observers sit in the back of the room, provided that they sit quietly and let the discussion unfold without interjection.

Focus groups are undoubtedly useful, especially when you are gathering issues expansively. At the same time, you need to be very careful to distinguish between what people say and what they do. There are many foibles that make the distinction important: wishful thinking, overloaded terminology, desires to impress, and human difficulty at self-observation, to name a few.

People often describe their (or their colleagues’) behavior differently from how an outside observer would. Contextual inquiry is a technique that attempts to overcome this problem by directly observing users in their own environment when they are doing their everyday tasks.10

Contextual inquiry is a technique borrowed from anthropology. The observer watches, occasionally asks open-ended journalistic questions (Who, What, When, Where, Why, How), does not try to bias the user’s activities, and does not try to offer solutions. It is important to understand pain points and opportunities—where the user is annoyed, where things can be made better, what would make a big difference. If possible, gather relevant documents and other work products and take pictures of the user’s environment, especially reference material posted on the wall.

My favorite anecdote to illustrate the problem of self-reporting comes from a customer visit to a major bank in 2003 when Microsoft was trying to define Visual Studio Team System requirements. In a morning meeting, which was run as an open focus group, the Microsoft team asked about development practices, especially unit testing. They were told that unit testing was a universal practice among the bank’s developers and indeed was a requirement before checking in code.

One of the Microsoft usability engineers spent that afternoon with one of the developers in his cube. (That’s why it’s called a contextual inquiry.) The developer went through several coding tasks and checked code in several times. After a couple of hours, the usability engineer asked, “I’ve seen you do several check-ins, and I’m wondering, do you do unit testing, too?” “Of course,” came the reply, “I press F5 before checking in—that’s unit testing.” (In case you’re not familiar with Visual Studio, F5 builds the project and starts it in the debugger; it has nothing to do with testing.) The unit testing reported in the morning meeting simply was not happening. In designing VSTS, we interpreted this discrepancy as an opportunity to make unit testing easy and practical for developers in organizations like this. (See Chapter 6, “Development.”)

One user does not make a persona, and you must repeat the observation until you feel that you have clear patterns of behavior that you can distill into a persona description.

Get Specific Early

When you have defined personas, you can describe the scenarios for the specific personas. Scenarios, too, were first identified as a market research technique. Again, Geoffrey Moore:

[Characterizing scenarios] is not a formal segmentation survey—they take too long, and their output is too dry. Instead, it is a tapping into the fund of anecdotes that actually carries business knowledge in our culture. Like much that is anecdotal, these scenarios will incorporate fictions, falsehoods, prejudices, and the like. Nonetheless, they are by far the most useful and most accurate form of data to work with at this stage in the segmentation process.11

Start with the scenario goal. Then break down the goal into a list of steps, at whatever granularity is most understandable. Use action verbs to enumerate the steps. Keep the scenarios in your user’s language, not in the language of your implementation. In other words, scenarios should be described first from the problem space, not the solution space.

Don’t try to detail the alternate and exception paths initially—that usually hampers understandability and quickly becomes too complicated to manage—but record just their names as other scenarios and save them for future iterations.

When you are comfortable with the sequence, you can add the second part to the step definition, that is, how the intended solution responds. You now have a list in the form of Persona-Does-Step, Solution-Shows-Result. Tag the steps that will elicit a “Wow!” from your user. (These are the exciters, which are discussed later in this chapter.)
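A scenario at this stage can be sketched as a goal plus an ordered list of Persona-Does-Step, Solution-Shows-Result pairs, with exciter steps tagged and alternate paths recorded only by name. The classes, the persona “Alex,” and the sample steps below are hypothetical; they show one possible shape for the data, not an MSF or VSTS format.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    persona: str            # who acts, e.g. a named persona
    action: str             # "Persona does step", phrased with an action verb
    result: str = ""        # "Solution shows result"; can be added later
    exciter: bool = False   # tag steps expected to draw a "Wow!"


@dataclass
class Scenario:
    goal: str                                              # in the user's language
    steps: list[Step] = field(default_factory=list)
    alternates: list[str] = field(default_factory=list)   # names only, detailed later

    def script(self) -> list[str]:
        """Render the numbered steps, marking tagged exciters."""
        lines = []
        for i, s in enumerate(self.steps, start=1):
            line = f"{i}. {s.persona} {s.action}"
            if s.result:
                line += f" / Solution {s.result}"
            if s.exciter:
                line += "  [Wow!]"
            lines.append(line)
        return lines
```

Keeping `result` optional mirrors the order of work described above: first the user’s steps, then, once the sequence is stable, the solution’s responses.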

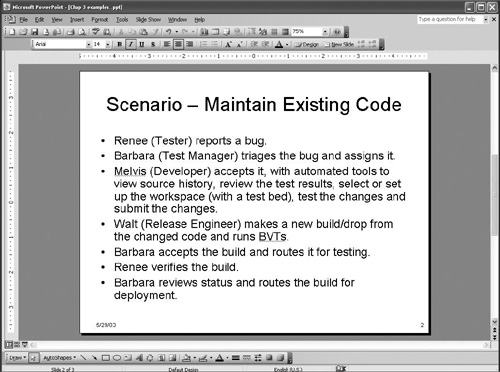

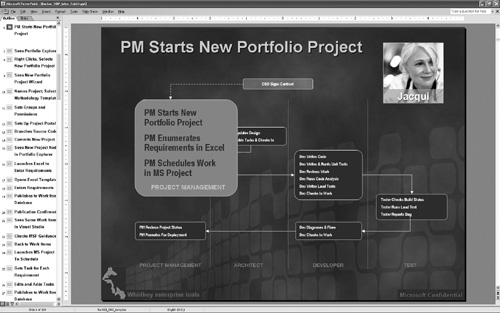

Figure 3.3 When we were developing VSTS, we used high-level scenarios to envision the product scope. This is a very early example. Over time, the lines of this slide became scenario work items in our work item database, and we used this as the primary list to drive execution and testing.

Storyboards

Although scenarios start as goals and then evolve into lists of steps, they don’t stop there. When you are comfortable that the steps and their sequence define the experience you want, elaborate them into wireframes, storyboards, prototypes, and working iterations. Although the goal of each scenario should remain constant, the details and steps may evolve and change with every iteration.

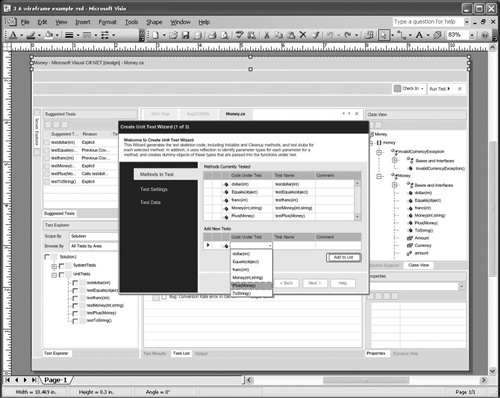

Wireframes and storyboards are often essential to communicate the flow of interaction effectively, especially when you have rich data and state in the solution being designed. Microsoft Visio provides a stencil for drawing wireframes of user interfaces. For each task, with a title in the form of “Persona does step,” draw a wireframe. Sequence the wireframes together, paying attention to the flow and data, and you have a storyboard. This can be a cut-and-paste of the Visio screens into PowerPoint. If you need to distribute the storyboard, you can easily make a narrated movie of it using Producer for PowerPoint or by recording a LiveMeeting.

Figure 3.4 This is a very early Visio wireframe from the initial scenarios for VSTS. Not all the windows shown were implemented, and not all the windows implemented were designed as envisioned in the wireframe. Three successive rounds of testing in usability labs and subsequent revisions were needed before users could achieve the intended goal effectively.

Breadth of Scenarios

It is useful to think of the end-to-end story that your solution needs to tell and to line up the scenarios accordingly. This story should start with the customer deploying your solution and run through the customer realizing the value. This is equally useful whether you are building an internal, bespoke solution or a commercial product.

If you take this approach, you must deal with both the issues of discoverability—how first-time users find the necessary features—and the longitudinal issues—how intermediate users, as they become more experienced, become productive.

How many scenarios do you need? Typically, you should have enough scenarios to define the major flows of the target system and few enough to be easy to remember.

Your scenarios should be sufficient to cover the end-to-end flows of the solution without gaps and without unnecessary duplication. This means that your scenarios probably need to decompose into intermediate activities, which in turn contain the steps.

If you are creating a multiperson scenario, then it is useful to draw a flow with swimlanes to show who does what before whom.

Figure 3.5 This PowerPoint slide is an illustration of the scenarios in an end-to-end story. This particular slide was used in the definition of scenarios for VSTS.

Customer Validation

A key benefit of scenarios and iterations is that you have many opportunities for customers to review your solution as it evolves and to validate or correct your course before you incur expensive rework. Depending on the business circumstances, you might need up to three kinds of signoff for your solution. Users (or their advocates) need to confirm that the solution lets them accomplish their goals productively. Economic buyers (sometimes the users’ boss, and sometimes called business decision makers) need to see that the project is realizing the intended financial value. Technical evaluators need to see that the implementation follows the architecture necessary to fit within the constraints of the customer organization. Sometimes these roles are collapsed, but often they are not. Scenarios are useful in communicating progress to all three groups.

Early in a project, this validation can be done in focus groups or interviews with scenarios as lists and then as wireframes. Storyboards and live functionality, as it becomes available, can also be tested in a usability lab.

Figure 3.6 This is a frame from the streaming video of a usability lab. The bulk of the image is the computer screen as the user sees it. The user is superimposed in the lower right so that observers can watch and listen to the user working through the lab assignments.

Usability labs are sessions in which a target user is given a set of goals to accomplish, without coaching, in a setting that is as realistic as possible. At Microsoft, we have rooms permanently outfitted with one-way mirrors and video recording, which let spectators watch from behind the glass or over streaming video. Although this setup is convenient, it is not necessary. All you need is a basic video camera on a tripod or a service such as Microsoft LiveMeeting with a webcam and desktop recording.

The three keys to making a usability lab effective are as follows:

• Create a trusting atmosphere in which the user knows that the software is being tested, not the user.

• Have the user think out loud continually so that you can hear the unfiltered internal monologue.

• Don’t “lead the witness”—that is, don’t interfere with the user’s exploration and discovery of the software under test.

Usability labs, like focus groups and contextual interviews, are ways to challenge your assumptions regularly. Remember that requirements are perishable and that you need to revisit user expectations and satisfaction with the path that your solution is taking.

Evolving Scenarios

How do you know when you’ve defined your scenarios well enough? When the team has a common picture of what to build and test in the next iteration. There are three reasonable ways to test whether the scenarios communicate well enough:

• Customers can review the scenarios and provide meaningful feedback on suitability for achieving the goal, (in)completeness of steps, and relevance of the data.

• Testers can understand the sequence sufficiently to define tests, both positive and negative. They can identify how to test the sequence, what data is necessary, and what variations need to be explored.

• The development or architecture team can design the services, components, or features necessary to deliver the flow by identifying the necessary interactions, interfaces, and dependencies.

On the other hand, if you discover that your scenarios don’t capture the intended functionality sufficiently for these stakeholders, you can add more detail or extend the scenarios further.

Demos of newly implemented scenarios during or at the end of an iteration are very motivating. They can energize the team, create valuable peer interaction, and if customers are available, generate great dialog and enthusiasm.

As iterations progress, you should add scenarios. In early iterations, you typically focus on satisfiers and exciters, whereas in later iterations, you should ensure the absence of dissatisfiers. This approach naturally leads you to scenarios that explore alternate flows through the solution. The next chapter shows examples of turning scenario completion into a scorecard for tracking velocity and progress.
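As a hedged illustration of the kind of tally involved, assume each scenario is recorded with the iteration in which it was completed, or `None` if it is still open. Counting completions per iteration gives a rough velocity, and the completed share gives overall progress. This representation and the function name are assumptions for the sketch, not necessarily the scorecard format used in the next chapter.

```python
from collections import Counter
from typing import Optional


def scenario_scorecard(completed_in: dict[str, Optional[int]]) -> tuple[dict[int, int], float]:
    """Return (scenarios completed per iteration, percent of all scenarios completed).

    completed_in maps each scenario title to the iteration number in which it
    was completed, or None if it is not done yet (a hypothetical representation).
    """
    per_iteration = Counter(it for it in completed_in.values() if it is not None)
    done = sum(per_iteration.values())
    percent = 100.0 * done / len(completed_in) if completed_in else 0.0
    return dict(sorted(per_iteration.items())), percent
```

Because scenarios are added as iterations progress, the percentage can drop even while velocity holds steady; reading both numbers together avoids mistaking growing scope for slowing delivery.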

Personas and Scenarios and Their Alternatives

Although MSF uses personas and scenarios to capture the vision of users and their requirements, there are alternative terms and techniques used by other processes.

Actors and Use Cases

If you are familiar with methodologies such as Rational Unified Process, you probably know the terms “actor” and “use case,”12 and you might be wondering whether persona and scenario mean the same thing. MSF intentionally distinguishes scenarios from use cases with different terms because MSF advocates capturing a level of specificity that is not ordinarily captured in use cases. Scenarios and use cases start similarly, but they evolve very differently.

One of the MSF mindsets is Get Specific Early. For example, give a persona a picture, a name, and a biography instead of using a stick figure with an anonymous role name. You might have several personas for different demographics, whereas in use case analysis, you would have just one actor. The personas described as movie characters will be much more memorable and much easier to discuss when you envision your intended system behavior.

As with personas, make scenarios specific. Include wireframes or sketches for screens, and show the specific flow of data from one screen to the next. In use case analysis, this specificity is usually deferred to the step of designing a “realization.” Don’t defer it; test the intent with the specifics of the example immediately.

Also, unlike with use cases, don’t get bogged down in alternative flows until you need them. Your goal is to deliver working software as quickly as possible and to learn from it. If you deliver a working flow, you will know much more about what the alternatives should be than if you try to define them all in the abstract. The single flow of a scenario is easier to schedule, monitor, and revise.

User Stories

If you are familiar with eXtreme Programming, then you know about user stories.13 In XP, user stories are equivalent to the goals of scenarios that I described earlier: one-sentence statements of intent written on 3” × 5” cards. XP does not elaborate the scenarios but requires that an onsite customer be available to describe the intended flow at the time of implementation. This is a good process if you have an onsite customer, a collocated team, no need to explore alternative designs, and no requirement that others sign off on designs.

Unfortunately, many teams need more explicit communication and signoff and cannot rely on a single onsite customer. In those cases, elaborating user stories into scenarios can be really helpful. Scenarios are also typically larger than user stories, especially when conceived around end-to-end value.

Exciters, Satisfiers, and Dissatisfiers

Scenarios tend to focus on requirements of a solution that make users enjoy the solution and achieve their goals. You can think of these as satisfiers. When the goals are important and the scenario compelling enough to elicit a “Wow!” from the customer, we can call the scenario an exciter.

At the same time, there are potential attributes of a solution (or absence of attributes) that can really annoy users or disrupt their experience. These are dissatisfiers.14 Both need to be considered in gathering a solution’s requirements. Dissatisfiers frequently occur because qualities of service haven’t been considered fully. “The system’s too slow,” “Spyware picked up my ID,” “I can no longer run my favorite app,” and “It doesn’t scale” are all examples of dissatisfiers that result from the corresponding qualities of service not being addressed.

Customers and users rarely describe the dissatisfiers—they are assumed to be absent. Consider the Monty Python sketch, The Pet Shoppe, in which a man goes to a pet store to return a parrot he bought. He did not specify that the parrot must be alive, so the pet dealer sells him a dead one. The sketch is hilarious because it reveals such a frequent misunderstanding. Statements such as “You didn’t tell me X,” “The customer didn’t specify X,” and “X didn’t show up in our research” are all symptoms of failing to consider the requirements necessary to eliminate dissatisfiers.

Figure 3.7 The Pet Shoppe

Customer: I wish to complain about this parrot what I purchased not half an hour ago from this very boutique.

Owner: Oh yes, the, uh, the Norwegian Blue . . . What’s, uh . . . What’s wrong with it?

Customer: I’ll tell you what’s wrong with it, my lad. ’E’s dead, that’s what’s wrong with it!15

Python (Monty) Pictures LTD

Qualities of Service

Scenarios are extremely valuable, but they are not the only type of requirement. Scenarios need to be understood in the context of qualities of service (QoS). (Once upon a time, QoS were called “non-functional requirements,” but because that term is non-descriptive, I won’t use it here. Sometimes they’re called ’ilities, which is a useful shorthand.)

Most dissatisfiers can be eliminated by appropriately defining qualities of service. QoS might define global attributes of your system, or they might define specific constraints on particular scenarios. For example, the security requirement that “unauthorized users should not be able to access the system or any of its data” is a global security QoS. The performance requirement that “for 95% of orders placed, confirmation must appear within three seconds at 1,000-user load” is a specific performance QoS about a scenario of placing an order.
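A scenario-specific QoS such as the order-confirmation example is precise enough to check mechanically. The sketch below assumes you already have confirmation times, in seconds, from a load test run at the stated 1,000-user load (generating that load is out of scope here), and checks that at least 95% of them fall within three seconds. The function name and its defaults are illustrative.

```python
def meets_response_qos(latencies_s: list[float],
                       threshold_s: float = 3.0,
                       required_fraction: float = 0.95) -> bool:
    """True if at least required_fraction of measured confirmations took <= threshold_s.

    latencies_s are confirmation times collected under the stated load
    (e.g. 1,000 simulated users); how they are gathered is outside this sketch.
    """
    if not latencies_s:
        raise ValueError("no measurements to evaluate")
    within = sum(1 for t in latencies_s if t <= threshold_s)
    return within / len(latencies_s) >= required_fraction
```

Counting the share of samples under the threshold avoids choosing among percentile-interpolation methods, and it matches the wording of the requirement (“for 95% of orders placed”) directly.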

Not all qualities of service apply to all systems, but you should know which ones apply to yours. QoS often imply significant architectural requirements or risks, so negotiate them with stakeholders early in a project.

There is no definitive list of all the qualities of service that you need to consider. There have been several standards,16 but they tend to become obsolete as technology evolves. For example, security and privacy issues are not covered in the major standards, even though they are the most important ones in many modern systems.

The following four sections list some of the most common QoS to consider on a project.

Security and Privacy

Unfortunately, the spread of the Internet has made security and privacy every computer user’s concern. These two QoS are important for both application development and operations, and customers are now sophisticated enough to demand to know what measures you are taking to protect them. Increasingly, they are becoming the subject of government regulation.

• Security: The ability of the software to prevent access and disruption by unauthorized users, viruses, worms, spyware, and other agents.

• Privacy: The ability of the software to prevent unauthorized access or viewing of Personally Identifiable Information.

Performance

Performance is most often noticed when it is poor. In designing, developing, and testing for performance, it is important to distinguish the separate QoS that together shape the end user’s experience of overall performance.

• Responsiveness: The absence of delay when the software responds to an action, call, or event.

• Concurrency: The capability of the software to perform well when operated concurrently with other software.

• Efficiency: The capability of the software to provide appropriate performance relative to the resources used under stated conditions.17

• Fault Tolerance: The capability of the software to maintain a specified level of performance in cases of software faults or of infringement of its specified interface.18

• Scalability: The ability of the software to handle simultaneous operational loads.

User Experience

While “easy to use” has become a cliché, a significant body of knowledge has grown around design for user experience.

• Accessibility: The extent to which individuals with disabilities have access to and use of information and data that is comparable to the access to and use by individuals without disabilities.19

• Attractiveness: The capability of the software to be attractive to the user.20

• Compatibility: The conformance of the software to conventions and expectations.

• Discoverability: The ability of the user to find and learn features of the software.

• Ease of Use: The cognitive efficiency with which a target user can perform desired tasks with the software.

• Localizability: The extent to which the software can be adapted to conform to the linguistic, cultural, and conventional needs and expectations of a specific group of users.

Manageability

Most modern solutions are multitier, distributed, service-oriented or client-server applications. The cost of operating these applications often exceeds the cost of developing them by a large factor, yet few development teams know how to design for operations. Appropriate QoS to consider are as follows:

• Availability: The degree to which a system or component is operational and accessible when required for use. Often expressed as a probability.21 This is frequently cited as “nines,” as in “three nines,” meaning 99.9% availability.

• Reliability: The capability . . . to maintain a specified level of performance when used under specified conditions.22 Frequently stated as Mean Time Between Failures (MTBF).

• Installability and Uninstallability: The capability . . . to be installed in a specific environment23 and uninstalled without altering the environment’s initial state.

• Maintainability: The ease with which a software system or component can be modified to correct faults, improve performance or other attributes, or adapt to a changed environment.24

• Monitorability: The extent to which health and operational data can be automatically collected from the software in operation.

• Operability: The extent to which the software can be controlled automatically in operation.

• Portability: The capability of the software to be transferred from one environment to another.25

• Recoverability: The capability of the software to re-establish a specified level of performance and recover the data directly affected in the case of a failure.26

• Testability: The degree to which a system or component facilitates the establishment of test criteria and the performance of tests to determine whether those criteria have been met.27

• Supportability: The extent to which operational problems can be corrected in the software.

• Conformance to Standards: The extent to which the software adheres to applicable rules.

• Interoperability: The capability of the software to interact with one or more specified systems.28
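The “nines” shorthand for availability converts directly into a downtime budget that operations staff can plan against. The following is a minimal Python sketch, assuming a 365-day year and steady-state operation; the function names are hypothetical. The last function relates availability to MTBF using the standard steady-state formula, availability = MTBF / (MTBF + MTTR), which assumes you also know the Mean Time To Repair (MTTR), a figure not among the QoS listed above.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years are ignored


def nines_to_availability(nines: int) -> float:
    """Availability as a fraction, e.g. 3 nines -> 0.999."""
    return 1 - 10 ** -nines


def max_downtime_hours_per_year(availability: float) -> float:
    """Largest yearly downtime consistent with the availability target."""
    return (1 - availability) * HOURS_PER_YEAR


def availability_from_mtbf(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability from mean time between failures and mean time to repair."""
    return mtbf_hours / (mtbf_hours + mttr_hours)
```

Three nines allows about 8.76 hours of downtime per year (0.001 × 8,760), and each additional nine cuts that budget by a factor of ten.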

What makes a good QoS requirement? As with scenarios, QoS requirements need to be explicitly understandable to their stakeholder audiences and defined early, and when planned for an iteration, they need to be testable. You may start with a general statement about performance, for example, but in an iteration you should set specific targets on specific transactions at a specific load. If you cannot state how to test whether the requirement is satisfied when it comes time to assess it, then you can’t measure its completion.
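One way to enforce this discipline is to record each performance QoS with the fields a test needs and to treat it as not yet ready for an iteration until all of them are filled in. The following is a hypothetical Python sketch; the class and field names are illustrative assumptions, not part of MSF or VSTS.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PerformanceQoS:
    """A performance requirement that starts general and is refined per iteration."""

    statement: str                           # the stakeholder-facing wording
    transaction: Optional[str] = None        # which scenario step is measured
    concurrent_users: Optional[int] = None   # the load under which it is measured
    percentile: Optional[float] = None       # share of transactions that must comply
    limit_seconds: Optional[float] = None    # the response-time target

    def is_testable(self) -> bool:
        """True only when every parameter a test needs has been specified."""
        return None not in (
            self.transaction,
            self.concurrent_users,
            self.percentile,
            self.limit_seconds,
        )
```

A statement such as “ordering should be fast” fails this check; refining it into a target on the place-order transaction at a stated load and percentile makes it pass.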

Kano Analysis

There is a useful technique, called “Kano Analysis” after its inventor, Noriaki Kano, that plots exciters, satisfiers, and dissatisfiers on the same axes.29 The X-axis shows the extent to which a scenario or QoS is implemented, and the Y-axis shows the resulting customer satisfaction.

In the case of Monty Python’s parrot, life is a must-have, or in other words, a necessary QoS. (Like many other QoS, the customer assumes it without specifying it and walks out of the store without having tested it. Just as a dysfunctional project manager might, the shopkeeper exploits the lack of specification.)

Of course, removing dissatisfiers only gets you a neutral customer. The quality of service that the parrot is alive and healthy gets you a customer who is willing to look further. Actually satisfying the customer requires adding the requirements that make a customer want to buy your solution or a user want to use it. For the parrot, this might be the scenario that the customer talks to the parrot and the parrot sings back cheerfully.

Health is a must-have requirement of the parrot—its absence leaves the customer disgusted; its presence makes the customer neutral. The singing scenario is a satisfier or “performance requirement”—customers will expect it, and will be increasingly satisfied to the extent it is present.

Exciters are a third group of scenarios that delight the customer disproportionately when present. Sometimes they are not described or imagined because they may require true innovation to achieve. On the other hand, sometimes they are simple conveniences that make a huge perceived difference. For a brief period, minivan manufacturers competed based on the number of cupholders in the back seats, then on the number of sliding doors, and then on backseat video equipment. All these pieces of product differentiation were small, evolutionary features, which initially came to the market as exciters and over time came to be recognized as must-haves.

Figure 3.8 The X-axis shows the extent to which different solution requirements are implemented; the Y-axis shows the resulting customer response.

Returning to the parrot example, a parrot that could clean its own cage would be an exciter.

You can generalize these three groups of requirements as must-haves (absence of dissatisfiers), satisfiers, and exciters, based on the extent to which their presence or absence satisfies or dissatisfies a customer. Must-haves constrain the intended solution, exciters optimize it, and satisfiers are expected but not differentiated. For completeness, there is a fourth set—indifferent features—that don’t make a difference either way (such as the color of the parrot’s cage).

Figure 3.9 The model provides an expressive tool to evaluate solution requirements. It splits them into four groups: must-haves, satisfiers, exciters, and indifferent.

Technology Adoption Lifecycle

One last framework is useful in understanding the categorization of requirements and the differences among separate user segments. This framework is the technology adoption lifecycle, shown in Figure 3.10. It is a common model of how a product or technology penetrates its market, from its first enthusiasts to widespread use.30

Figure 3.10 The S-curve is the standard representation of the Technology Adoption Lifecycle, broken into four segments for early adopters, early majority, late majority, and laggards.

The relative importance of dissatisfiers and exciters has a lot to do with where on the technology adoption lifecycle your users lie. If they are early adopters, who embrace a new solution out of enthusiasm for the technology, then they are likely to be highly tolerant of dissatisfiers and willing to accept involved workarounds to problems in order to realize the benefits of the exciters. On the other hand, the later in the adoption lifecycle your users lie, the less tolerant they will be of dissatisfiers, and the more they will expect exciters as commonplace. This is a natural market evolution in which users become progressively more sensitive to the entirety of the solution.

Gathering the Data

Although the Monty Python parrot is a comic example, Kano Analysis is a very useful tool for thinking about your requirements. The easiest way to gather data for Kano Analysis is to ask questions in pairs, one about the appropriateness of a feature and the other about the importance, such as the following:31

• To what extent is the feature (scenario) that we just showed you (described to you) on target?

• How important is it that you can do what we just showed (or described)?

Whether you are doing broad statistical research or informal focus groups, you can gain insight into the solution by separating the scenarios and QoS, or the features as implemented, into the four groups.
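The question pair above is one way to gather the data. A widely published variant of the Kano questionnaire instead asks a functional question (“How would you feel if the product had this feature?”) and a dysfunctional one (“How would you feel if it did not?”), then classifies each pair of answers with a fixed evaluation table. The following Python sketch implements that variant, not the appropriateness and importance pair described above; the answer labels and function names are assumptions, and the table’s categories are mapped onto this chapter’s terms. The table also yields two categories the chapter does not discuss: reverse (the respondent prefers the feature absent) and questionable (contradictory answers).

```python
from collections import Counter
from enum import Enum


class Answer(Enum):
    LIKE = 0
    EXPECT = 1      # "I expect it" (must be)
    NEUTRAL = 2
    LIVE_WITH = 3
    DISLIKE = 4


# Rows: answer to the functional question (feature present).
# Columns: answer to the dysfunctional question (feature absent).
# A = attractive, O = one-dimensional, M = must-be,
# I = indifferent, R = reverse, Q = questionable.
EVALUATION_TABLE = [
    # LIKE EXPECT NEUTRAL LIVE_WITH DISLIKE
    ["Q", "A", "A", "A", "O"],  # LIKE
    ["R", "I", "I", "I", "M"],  # EXPECT
    ["R", "I", "I", "I", "M"],  # NEUTRAL
    ["R", "I", "I", "I", "M"],  # LIVE_WITH
    ["R", "R", "R", "R", "Q"],  # DISLIKE
]

# Map the table's codes onto the groups used in this chapter.
CHAPTER_TERMS = {
    "A": "exciter", "O": "satisfier", "M": "must-have",
    "I": "indifferent", "R": "reverse", "Q": "questionable",
}


def classify(functional: Answer, dysfunctional: Answer) -> str:
    """Classify one respondent's answer pair with the evaluation table."""
    return EVALUATION_TABLE[functional.value][dysfunctional.value]


def classify_feature(responses) -> str:
    """Classify a feature by its most frequent category across respondents.

    Ties are broken by whichever category was encountered first; a real
    study would look more closely at features with split results.
    """
    tally = Counter(classify(f, d) for f, d in responses)
    category, _ = tally.most_common(1)[0]
    return CHAPTER_TERMS[category]
```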

Because it’s easy to think of requirements, most projects end up having far too many of them (and not always the right ones). The Kano classification gives you a ready view of the full set of requirements. This practice can shed light on different viewpoints and hidden assumptions about the relative importance of exciters, satisfiers, and dissatisfiers.

Kano Analysis provides a way of prioritizing scenarios and QoS. When planning an iteration, you can plot a set of requirements and evaluate the likely customer satisfaction. As you evolve a project across iterations, you will want to move up the Y-axis of customer satisfaction. This framework for differentiating the value of requirements gives you a powerful tool for assessing the impact of tradeoff decisions.
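To plot requirements for an iteration, some practitioners reduce each feature’s classification counts to two satisfaction coefficients: one for how much its presence raises satisfaction and one for how much its absence lowers it. This is a common extension of Kano Analysis rather than part of the technique as described here. The following minimal Python sketch assumes the counts use the A/O/M/I codes of the standard Kano evaluation table (attractive or exciter, one-dimensional or satisfier, must-be or must-have, indifferent); the function name is hypothetical.

```python
from typing import Mapping, Tuple


def satisfaction_coefficients(tally: Mapping[str, int]) -> Tuple[float, float]:
    """Return (better, worse) from counts of Kano categories A, O, M, and I.

    better: share of respondents whose satisfaction rises if the feature is present.
    worse:  negative share whose dissatisfaction rises if the feature is absent.
    Reverse (R) and questionable (Q) answers are left out of the denominator.
    """
    a, o, m, i = (tally.get(code, 0) for code in ("A", "O", "M", "I"))
    total = a + o + m + i
    if total == 0:
        raise ValueError("no classifiable responses")
    return (a + o) / total, -(o + m) / total
```

Read together, a worse value close to -1 marks a must-have that the iteration cannot skip, while a better value close to 1 with a worse value near 0 marks an exciter.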

Summary

This chapter covered the description of a project’s vision and requirements. As discussed in the previous chapter, MSF advocates iterative software development, in keeping with the value-up paradigm discussed in Chapter 1, “A Value-Up Paradigm.” This implies that requirements should be detailed one iteration at a time, because they are perishable.

In MSF, the primary forms of requirements are scenarios and qualities of service (QoS). Scenarios are the functional requirements. A scenario addresses the goals of a persona or group of personas. Stated in the user’s language, scenarios describe the sequence of steps and the interaction necessary for the persona to accomplish the intended goal. Scenarios can be elaborated in wireframes and storyboards.

QoS describe attributes of the intended solution in areas such as security, performance, user experience, and manageability. These are both global attributes and specific constraints on scenarios. QoS are often considered architectural requirements, both because they may have significant implications for architecture and because they often require specialist architectural knowledge to enumerate.

Not all requirements are created equal. It is important to understand the customer perception of these requirements as satisfiers, exciters, and must-haves (the removal of dissatisfiers). Scenarios alone rarely eliminate all dissatisfiers, which often lurk in poorly planned QoS. And scenarios by themselves do not design the architecture, the implementation details, or all the variant flows.

Now that the requirements are in hand, the next chapter looks at the process of managing a project.

Endnotes

1. Frederick P. Brooks, Jr., The Mythical Man-Month: Essays on Software Engineering, Anniversary Edition (Addison-Wesley, 1995), 199.

2. Adapted from Geoffrey A. Moore, Crossing the Chasm: Marketing and Selling High-Tech Products to Mainstream Customers (New York: HarperCollins, 2002), 154.

3. Beck 2000, op. cit., 60–61.

4. IEEE STD 830 is an example set of guidelines for such specifications.

5. Moore, Crossing the Chasm, 93–4.

6. Alan Cooper and Robert Reimann, About Face 2.0: The Essentials of Interaction Design (Indianapolis: Wiley, 2003), 59.

7. For a closer look at how Microsoft uses personas, see John Pruitt, Persona Lifecycle: Keeping People in Mind Throughout Product Design (Elsevier, 2005).

8. Cooper 2003, op. cit., 12.

9. Ibid., 35.

10. Hugh Beyer and Karen Holtzblatt, Contextual Design: Designing Customer-Centered Systems (San Francisco: Morgan Kaufmann, 1998) and Cooper, op. cit., 44 ff.

11. Moore, op. cit., 98.

12. Originally coined in Ivar Jacobson et al., Object-Oriented Software Engineering: A Use Case Driven Approach (Reading: Addison-Wesley/ACM Press, 1992), 159 ff., and elaborated by many including Alistair Cockburn, Writing Effective Use Cases (Pearson Education, 2000). The body of practice around use cases took a turn away from the intentions of these authors, and by using “scenarios,” MSF connects to the separate tradition of the User Experience community.

13. For example, Mike Cohn, User Stories Applied: For Agile Software Development (Boston: Addison-Wesley, 2004).

14. J.M. Juran, Juran on Planning for Quality (Simon & Schuster, 1988).

15. Monty Python’s Flying Circus, “The Dead Parrot Sketch,” available on The 16-Ton Monty Python DVD Megaset, Disc 3 (A&E Home Video, 2005).

16. For example, ISO/IEC 9126 and IEEE Std 610.12-1990.

17. [ISO/IEC 9126-1/2001]

18. [ISO/IEC 9126-1/2001]

19. Section 508 of the Rehabilitation Act, 29 U.S.C. § 794d, available from http://www.usdoj.gov/crt/508/508law.html.

20. [ISO/IEC 9126-1/2001]

21. [IEEE STD 610.12-1990], 11

22. [ISO/IEC 9126-1/2001]

23. [ISO/IEC 9126-1/2001]

24. [IEEE STD 610.12-1990], 46

25. [ISO/IEC 9126-1/2001]

26. [ISO/IEC 9126-1/2001]

27. [IEEE STD 610.12-1990], 76

28. [ISO/IEC 9126-1/2001]

29. Kano, N., Seraku, N., Takahashi, F. and Tsuji, S. (1996), “Attractive quality and must-be quality,” originally published in Hinshitsu (Quality), The Journal of the Japanese Society for Quality Control, XIV:2, 39–48, April 1984, translated in The Best On Quality, edited by John D. Hromi. Volume 7 of the Book Series of the International Academy for Quality (Milwaukee: ASQC Quality Press, 1996).

30. For example, Moore, op. cit.

31. For well-documented questionnaires on gathering the data, see, for example, Center for Quality Management Journal, II:4, Fall 1993.